1. Introduction

Machine-learning-assisted modelling has become a new paradigm of research in a variety of scientific and engineering disciplines (Brunton, Noack & Koumoutsakos Reference Brunton, Noack and Koumoutsakos2020; Weinan Reference Weinan2021). A very incomplete list includes Ling, Kurzawski & Templeton (Reference Ling, Kurzawski and Templeton2016), Koch-Janusz & Ringel (Reference Koch-Janusz and Ringel2018), Bergen et al. (Reference Bergen, Johnson, de Hoop and Beroza2019), Sengupta et al. (Reference Sengupta, Amos, Hosking, Rasmussen, Juniper and Young2020), Ma, Zhang & Yu (Reference Ma, Zhang and Yu2021), Guastoni et al. (Reference Guastoni, Güemes, Ianiro, Discetti, Schlatter, Azizpour and Vinuesa2021), Park & Choi (Reference Park and Choi2021), Karniadakis et al. (Reference Karniadakis, Kevrekidis, Lu, Perdikaris, Wang and Yang2021), Yu et al. (Reference Yu, Yuan, Qi, Wang, Li and Chen2022) and Juniper (Reference Juniper2023), the vast majority of which present improved performance, but a prominent critique is that the resulting models are ‘black boxes’. They cannot be explicitly expressed in mathematical forms. This not only sacrifices interpretability but also makes the resulting models difficult to disseminate between end users (Beetham & Capecelatro Reference Beetham and Capecelatro2020).

In contrast to the aforementioned studies, Bongard & Lipson (Reference Bongard and Lipson2007) and Schmidt & Lipson (Reference Schmidt and Lipson2009) proposed using stratified symbolic regression and genetic programming to discover governing equations from data in low-dimensional systems. While this approach is innovative, it is difficult to scale up to high-dimensional systems. Genetic programming encodes equations using the nonlinear parse trees with varying sizes and shapes, which can become bloated in high-dimensional problems, making evolutions computationally expensive (Vaddireddy et al. Reference Vaddireddy, Rasheed, Staples and San2020). Subsequently, Brunton, Proctor & Kutz (Reference Brunton, Proctor and Kutz2016) proposed a seminal work called sparse identification of nonlinear dynamics (SINDy), which employs sparse regression to identify the most informative subset from a large predetermined library of candidate functions and determines the corresponding coefficients. The SINDy is advantageous in deriving models that are explicit and concise, and has been widely used to discover equations in the form of first-order ordinary differential equations, alternatively with linear embedding (Lusch, Kutz & Brunton Reference Lusch, Kutz and Brunton2018; Champion et al. Reference Champion, Lusch, Kutz and Brunton2019a), for applications in fluid systems (Loiseau & Brunton Reference Loiseau and Brunton2018; Loiseau, Noack & Brunton Reference Loiseau, Noack and Brunton2018; Zhang & Ma Reference Zhang and Ma2020), predictive control of nonlinear dynamics (Kaiser, Kutz & Brunton Reference Kaiser, Kutz and Brunton2018) and multi-time-scale systems (Champion, Brunton & Kutz Reference Champion, Brunton and Kutz2019b). To date, there have been many extensions to SINDy, such as partial differential equation functional identification of nonlinear dynamic (Rudy et al. Reference Rudy, Brunton, Proctor and Kutz2017; Schaeffer Reference Schaeffer2017), implicit sparse regression (Mangan et al. Reference Mangan, Brunton, Proctor and Kutz2016; Kaheman, Kutz & Brunton Reference Kaheman, Kutz and Brunton2020), physics-constrained sparse regression (Loiseau & Brunton Reference Loiseau and Brunton2018) and sparse relaxed regularized regression (Zheng et al. Reference Zheng, Askham, Brunton, Kutz and Aravkin2018). Basically, the sparse regression-based methods are confronted with two issues: the difficulty in accurately computing the derivative of noisy data and the requirement that all variables in the equation are observable. Gurevich, Reinbold & Grigoriev (Reference Gurevich, Reinbold and Grigoriev2019), Reinbold, Gurevich & Grigoriev (Reference Reinbold, Gurevich and Grigoriev2020) and Alves & Fiuza (Reference Alves and Fiuza2022) employed the weak formulation of differential equations to decrease the noise sensitivity and eliminate the dependence on unobservable variables. Furthermore, Reinbold & Grigoriev (Reference Reinbold and Grigoriev2019), Reinbold et al. (Reference Reinbold, Kageorge, Schatz and Grigoriev2021) and Gurevich, Reinbold & Grigoriev (Reference Gurevich, Reinbold and Grigoriev2021) considered three appropriate physical constraints, including locality, smoothness and symmetries, to dramatically constrain the size of candidate library to be concise and effective.

More recently, in addition to sparse regression-based methods, two promising categories of data-driven methods have been proposed for discovering explicit models. The first category is the neural network-based method, such as PDE-Net (Long et al. Reference Long, Lu, Ma and Dong2018; Long, Lu & Dong Reference Long, Lu and Dong2019) and equation learner (EQL) (Sahoo, Lampert & Martius Reference Sahoo, Lampert and Martius2018). The PDE-Net approximates differential operators with convolutions and employs a symbolic multilayer neural network for model recovery, resulting in high expressivity and flexibility. The EQL uses a special neural network structure whose activation functions are symbolic operators, and it was demonstrated that the derived models can be generalized to the parameter spaces not covered by the training dataset. However, both neural network-based methods are criticized for the resulting equations being overly complex.

Another category is gene expression programming (GEP)-based methods (Ferreira Reference Ferreira2001; Vaddireddy et al. Reference Vaddireddy, Rasheed, Staples and San2020; Xing et al. Reference Xing, Zhang, Ma and Wen2022), which learn the forms of functions and their corresponding coefficients concurrently. The preselected elements for GEP include only mathematical operators, physical constants and physical variables. The resulting equations are constructed by randomly combining basic elements while satisfying the syntactic requirements of the mathematical expression, rather than by linearly combining the predetermined candidate functions. Unlike the genetic programming method used in Schmidt & Lipson (Reference Schmidt and Lipson2009), GEP encodes equations with fixed length linear strings that have unfixed open reading frames (ORFs). This feature of fixed length prevents bloating issues and excessive computational costs when dealing with complex problems. Additionally, variable ORFs ensure the variety of expression products, thus providing strong expressivity. Moreover, GEP performs a global exploration in the space of mathematical expressions, tending to obtain good results in a reasonable time. Therefore, in terms of data fitting, GEP has almost all the advantages of the aforementioned methods, featuring explicitness, concision, enhanced expressivity and flexibility without assuming the forms of candidate functions. Generally, GEP endeavours to discover the equations with less error; however, dimensional homogeneity cannot be guaranteed, particularly for problems with a variety of variables. Without any constraints or assumptions on the function forms, it is highly probable to obtain some overfitting and unphysical equations, which are sensitive to hyperparameters.

Considering the pros and cons of GEP, we propose a novel dimensional homogeneity constrained GEP (DHC-GEP) method for discovering governing equations. To the best of our knowledge, this is the first time that the constraint of dimensional homogeneity has been introduced to GEP. The constraint is implemented via a dimensional verification process before evaluating loss, without altering the fundamental features of the original GEP (Original-GEP) (Ferreira Reference Ferreira2001), including the structure of chromosomes, the rules of expression, selection and genetic operators. Therefore, DHC-GEP inherits all the advantages of Original-GEP. More importantly, through two benchmark studies, we demonstrate that DHC-GEP has three critical improvements over Original-GEP: (a) robust to the size and noise level of datasets; (b) less sensitive to hyperparameters; (c) lower computational costs.

Furthermore, we extend the application of DHC-GEP to discover the unknown constitutive relations for non-equilibrium flows, including one-dimensional shock wave and rarefied Poiseuille flow. The conventional governing equations for fluid flows are the Navier–Stokes–Fourier (NSF) equations, which are derived based on the conservation of mass, momentum and energy, as well as the empirical assumptions of linear constitutive relations for the viscous stress and heat flux. Note that in strong non-equilibrium flows, these linear constitutive relations breakdown, and thus NSF equations are no longer applicable. Although high-order constitutive relations can be derived based on kinetic theory (Chapman & Cowling Reference Chapman and Cowling1990), such as Burnett equations (Burnett Reference Burnett1936), their applicability is still very limited. Instead, our data-driven strategy is to derive the unknown constitutive relations from the data generated with molecular simulations. A general flowchart is shown in figure 1. Considering that constitutive relations describe the local transport mechanisms of momentum and energy, we regard local non-equilibrium parameters as key factors, and meticulously select the imported variables to satisfy the Galilean invariance (Han et al. Reference Han, Ma, Ma and E2019; Huang et al. Reference Huang, Ma, Zhou and Yong2021). Besides, the constraint of the second law of thermodynamics is embedded by adding an additional loss term, which is related to entropy production, to the loss function. The derived equations are more accurate than conventional Burnett equations over a wide range of Knudsen number and Mach number.

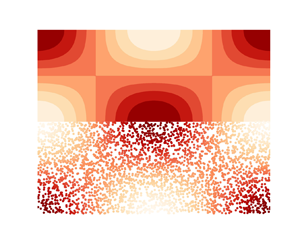

Figure 1. Flowchart of discovering unknown governing equations from data. First, macroscopic flow field data is generated via microscopic molecular simulation. Then, the basic elements preselected for DHC-GEP are constituted with state variables, mathematical operators and other variables of concern. Taking the one-dimensional shock wave case as an example, the terminal set involves fundamental physical parameters (viscosity (![]() $\mu$), heat conductivity (

$\mu$), heat conductivity (![]() $\kappa$)), state variables (velocity (

$\kappa$)), state variables (velocity (![]() $u$), density (

$u$), density (![]() $\rho$), temperature (

$\rho$), temperature (![]() $\theta$)), gradient terms (

$\theta$)), gradient terms (![]() $u_x$,

$u_x$, ![]() $\rho _x$,

$\rho _x$, ![]() $\theta _x$) and gradient-length local (GLL) Knudsen number (

$\theta _x$) and gradient-length local (GLL) Knudsen number (![]() ${Kn}_{\rho }$,

${Kn}_{\rho }$, ![]() ${Kn}_{\theta }$). The target variable is the heat flux in the

${Kn}_{\theta }$). The target variable is the heat flux in the ![]() $x$ direction (

$x$ direction (![]() $q_x$). The gradient terms are computed with auto differentiation (AD) based on neural networks, which is introduced in Appendix A. Finally, based on the terminal and function sets, DHC-GEP conducts a global search in the space of mathematical expressions until a satisfactory equation that fits well with the target variable is obtained.

$q_x$). The gradient terms are computed with auto differentiation (AD) based on neural networks, which is introduced in Appendix A. Finally, based on the terminal and function sets, DHC-GEP conducts a global search in the space of mathematical expressions until a satisfactory equation that fits well with the target variable is obtained.

The remainder of this paper is organized as follows. In § 2.1, we briefly introduce the molecular simulation method, i.e. the direct simulation Monte Carlo (DSMC) method. In § 2.2, the DHC-GEP method is introduced in detail. In § 3, we demonstrate the improved performance of DHC-GEP on two benchmark studies. Then, in § 4, we extend its applications to discovering unknown constitutive relations for two non-equilibrium flows. Conclusions and discussions are drawn in § 6.

2. Methodology

2.1. Direct simulation Monte Carlo

Generally, the Knudsen number (![]() $Kn$), which is defined as the ratio of the molecular mean free path to the characteristic length scale of system, is used to classify various flow regimes, with the continuum regime being in the range of

$Kn$), which is defined as the ratio of the molecular mean free path to the characteristic length scale of system, is used to classify various flow regimes, with the continuum regime being in the range of ![]() $Kn \le 0.01$, the slip regime being

$Kn \le 0.01$, the slip regime being ![]() $0.01 < Kn \le 0.1$, the transition regime being

$0.01 < Kn \le 0.1$, the transition regime being ![]() $0.1 < Kn \le 10$ and the free molecular flow being

$0.1 < Kn \le 10$ and the free molecular flow being ![]() $Kn > 10$. While the computational fluid dynamics (CFD) methods based on the NSF equations have been successfully applied to the continuum regime and can also be used for the slip regime with appropriate slip boundary conditions, they cannot accurately simulate transitional and free molecular gas flows. On the contrary, the Boltzmann equation can describe gas flows in all flow regimes, which can be written as

$Kn > 10$. While the computational fluid dynamics (CFD) methods based on the NSF equations have been successfully applied to the continuum regime and can also be used for the slip regime with appropriate slip boundary conditions, they cannot accurately simulate transitional and free molecular gas flows. On the contrary, the Boltzmann equation can describe gas flows in all flow regimes, which can be written as

Here, ![]() $f$ is the molecular velocity distribution function, which represents the density of molecules in phase-space (i.e. the space spanned by position and velocity). Here

$f$ is the molecular velocity distribution function, which represents the density of molecules in phase-space (i.e. the space spanned by position and velocity). Here ![]() $\boldsymbol {c}$ is molecular velocity,

$\boldsymbol {c}$ is molecular velocity, ![]() $\boldsymbol {G}$ is external force and

$\boldsymbol {G}$ is external force and ![]() $S$ is a complex integral term, which represents the change of distribution function due to the interaction between molecules. Owing to the presence of

$S$ is a complex integral term, which represents the change of distribution function due to the interaction between molecules. Owing to the presence of ![]() $S$, the Boltzmann equation is difficult to solve numerically.

$S$, the Boltzmann equation is difficult to solve numerically.

The DSMC is a stochastic molecule-based method that approximates the molecular velocity distribution function in the Boltzmann equation with simulation molecules (Oran, Oh & Cybyk Reference Oran, Oh and Cybyk1998). It has been demonstrated that DSMC converges to the solution of the Boltzmann equation in the limit of a large number of simulation molecules (Wagner Reference Wagner1992). In DSMC, each simulation molecule represents ![]() $F$ real molecules and

$F$ real molecules and ![]() $F$ is the so-called simulation ratio. The DSMC tracks the simulation molecules as they move, collide with other molecules and reflect from boundaries. The macroscopic physical quantities are obtained via sampling corresponding molecular information and making an average at the sampling cells. Specifically, density (

$F$ is the so-called simulation ratio. The DSMC tracks the simulation molecules as they move, collide with other molecules and reflect from boundaries. The macroscopic physical quantities are obtained via sampling corresponding molecular information and making an average at the sampling cells. Specifically, density (![]() $\rho$) and macroscopic velocity (

$\rho$) and macroscopic velocity (![]() $v_i$) are computed with

$v_i$) are computed with

where ![]() $m$ is molecular mass,

$m$ is molecular mass, ![]() $N_p$ is the number of simulation molecules in the sampling cell, and

$N_p$ is the number of simulation molecules in the sampling cell, and ![]() $V_{cell}$ is the volume of the sampling cell. The velocity of each molecule can be regarded as a sum of two parts, i.e. the macroscopic velocity of the cell to which it belongs and the molecular thermal velocity (which is defined as

$V_{cell}$ is the volume of the sampling cell. The velocity of each molecule can be regarded as a sum of two parts, i.e. the macroscopic velocity of the cell to which it belongs and the molecular thermal velocity (which is defined as ![]() $C_i=c_i-v_i$). Based on the molecular thermal velocity, temperature (

$C_i=c_i-v_i$). Based on the molecular thermal velocity, temperature (![]() $T$) is computed with

$T$) is computed with

where ![]() $k_B$ is the Boltzmann constant, and

$k_B$ is the Boltzmann constant, and ![]() $\boldsymbol{C}^2=C_1^2+C_2^2+C_3^2$. Pressure tensor (

$\boldsymbol{C}^2=C_1^2+C_2^2+C_3^2$. Pressure tensor (![]() $p_{ij}$) is computed with

$p_{ij}$) is computed with

\begin{equation} p_{ij}=\frac{mF}{V_{cell}}\left ( {\sum_{cell}c_ic_j}-N_pv_iv_j \right ), \end{equation}

\begin{equation} p_{ij}=\frac{mF}{V_{cell}}\left ( {\sum_{cell}c_ic_j}-N_pv_iv_j \right ), \end{equation}

and is generally split into its trace and trace-free parts, i.e. pressure (![]() $p$) and stress tensor (

$p$) and stress tensor (![]() $\tau _{ij}$),

$\tau _{ij}$), ![]() $p_{ij}=p\delta _{ij}+\tau _{ij}$. Here,

$p_{ij}=p\delta _{ij}+\tau _{ij}$. Here, ![]() $\delta _{ij}$ is Kronecker delta (Heinbockel Reference Heinbockel2001). Therefore, pressure (

$\delta _{ij}$ is Kronecker delta (Heinbockel Reference Heinbockel2001). Therefore, pressure (![]() $p$) and stress tensor (

$p$) and stress tensor (![]() $\tau _{ij}$) are computed with

$\tau _{ij}$) are computed with

The heat flux (![]() $q_i$) is computed with

$q_i$) is computed with

The local viscosity coefficient is computed with

where ![]() $\mu _0 = 2.117\times 10^{-5}\ \mathrm {kg}\ {\rm m}^{-1}\ {\rm s}^{-1}$ is the reference viscosity at the reference temperature

$\mu _0 = 2.117\times 10^{-5}\ \mathrm {kg}\ {\rm m}^{-1}\ {\rm s}^{-1}$ is the reference viscosity at the reference temperature ![]() $T_0 = 273\ \mathrm {K}$. Here

$T_0 = 273\ \mathrm {K}$. Here ![]() $\omega$ is the viscosity exponent and is determined by the molecular model employed. For the hard sphere (HS) model (Bird Reference Bird1994), the Maxwell molecule model (Bird Reference Bird1994) and the variable hard sphere (VHS) model (Bird Reference Bird1981),

$\omega$ is the viscosity exponent and is determined by the molecular model employed. For the hard sphere (HS) model (Bird Reference Bird1994), the Maxwell molecule model (Bird Reference Bird1994) and the variable hard sphere (VHS) model (Bird Reference Bird1981), ![]() $\omega$ is equal to 0.5, 1.0 and 0.81, respectively. The local heat conductivity coefficient is computed according to the local viscosity coefficient using

$\omega$ is equal to 0.5, 1.0 and 0.81, respectively. The local heat conductivity coefficient is computed according to the local viscosity coefficient using

where ![]() $Pr$ is the Prandtl number and is equal to

$Pr$ is the Prandtl number and is equal to ![]() $2/3$ for a monatomic gas.

$2/3$ for a monatomic gas.

For a specific application, DSMC first initializes the simulation molecules according to the initial distributions of density, temperature and macroscopic velocity. Then, the molecular motions and intermolecular collisions are sequentially conducted in each computational time step. The molecular motions are implemented in a deterministic way. Each molecule moves ballistically from its original position to a new position, and the displacement is equal to the product of its velocity times the time step. If the trajectory crosses any boundaries, an appropriate gas–wall interaction model would be employed to determine the reflected velocity. Common gas–wall interaction models include specular, diffuse and Maxwell reflection models. In our simulations, the diffuse model is employed. The intermolecular collisions are implemented in a probabilistic way. Among the several algorithms for modelling intermolecular collisions, the no-time-counter technique (Bird Reference Bird1994) is the most widely used. In the no-time-counter, any two molecules in the same computational cell are selected as a collision pair with a probability that is proportional to the relative speed between them. Then, the post-collision velocities of molecules are determined depending on the molecular model employed. In this work we employ the HS model for the first two benchmark cases (diffusion flow and Taylor–Green vortex), the Maxwell molecule model for the shock wave case and the VHS model for the Poiseuille flow case. It is noteworthy that because the molecular motions and intermolecular collisions are conducted in a decoupled manner, the time step in DSMC needs to be smaller than the molecular mean collision time, and the cell size for the selection of collision pairs needs to be smaller than the molecular mean free path.

The DSMC has been successfully applied to simulate gas flows in the whole flow regimes, and the results have been well validated (Stefanov, Roussinov & Cercignani Reference Stefanov, Roussinov and Cercignani2002; Sun & Boyd Reference Sun and Boyd2002; Zhang, Fan & Fei Reference Zhang, Fan and Fei2010; Gallis et al. Reference Gallis, Bitter, Koehler, Torczynski, Plimpton and Papadakis2017). The advantage of the DSMC method is that there is no need to assume any macroscopic governing equations a priori. The macroscopic quantities, such as density and velocity, are obtained by sampling molecular information and making an average at the sampling cells. In this work, the aim of using data generated by DSMC is to prove the ability of DHC-GEP to discover the governing equations that could be unknown.

2.2. Dimensional homogeneity constrained gene expression programming

2.2.1. General framework

The DHC-GEP is an enhanced GEP method, which compiles mathematical expressions into chromosomes and iteratively discovers the equation that is suitable for describing the training data by mimicking the natural law of evolution. The flowchart of DHC-GEP is shown in figure 2(a). It starts with creating ![]() $N_i$ random individuals of initial population. Each individual has two forms: genotype (chromosome (CS)) and phenotype (expression tree (ET)). Phenotype is the expression product of genotype. Each CS is composed of one or more genes. A specific schematic diagram of a gene is shown in figure 2(b). There are two parts in a gene, i.e. head and tail. The head consists of the symbols from the function set or terminal set, while the tail consists of only symbols from the terminal set. The function set and terminal set are both predefined according to the specific problem. For the problems in this work, the function set includes basic mathematical operators (

$N_i$ random individuals of initial population. Each individual has two forms: genotype (chromosome (CS)) and phenotype (expression tree (ET)). Phenotype is the expression product of genotype. Each CS is composed of one or more genes. A specific schematic diagram of a gene is shown in figure 2(b). There are two parts in a gene, i.e. head and tail. The head consists of the symbols from the function set or terminal set, while the tail consists of only symbols from the terminal set. The function set and terminal set are both predefined according to the specific problem. For the problems in this work, the function set includes basic mathematical operators (![]() $+,\ -,\ \times,\ \div$). If necessary for other problems, the function set can also include nonlinear functions such as sin, cos and even user-defined functions. The terminal set includes the symbols of variables and physical constants. Taking the first symbol of the gene as the root node, we can translate the gene into the ET (shown in figure 2b) through level-order traversal according to the argument requirement of each function. Note that the final four symbols are not expressed, and the region before them is called ORF. The length of ORF is variable, which is determined by the argument requirement of the function nodes in the head. On the contrary, the length of gene is fixed, preventing the individuals from bloating. Assuming the length of the head is

$+,\ -,\ \times,\ \div$). If necessary for other problems, the function set can also include nonlinear functions such as sin, cos and even user-defined functions. The terminal set includes the symbols of variables and physical constants. Taking the first symbol of the gene as the root node, we can translate the gene into the ET (shown in figure 2b) through level-order traversal according to the argument requirement of each function. Note that the final four symbols are not expressed, and the region before them is called ORF. The length of ORF is variable, which is determined by the argument requirement of the function nodes in the head. On the contrary, the length of gene is fixed, preventing the individuals from bloating. Assuming the length of the head is ![]() $n$, then the length of the tail must be

$n$, then the length of the tail must be ![]() $n \times ( {h - 1} ) + 1$, where

$n \times ( {h - 1} ) + 1$, where ![]() $h$ is the maximum number of arguments in the functions. Four genes with different ORFs are shown in figure 3. Although the four genes have the same length, the complexities of their expression products are different. Therefore, ORF is the decisive factor that ensures the diversity of expression products and the strong expressivity of DHC-GEP. This is also the reason that the length of the head determines only the upper limit of complexity, not the lower limit. When discovering unknown governing equations, a relatively long head would be a good choice. Because with ORF, a long gene can represent either a simple equation or a complex equation.

$h$ is the maximum number of arguments in the functions. Four genes with different ORFs are shown in figure 3. Although the four genes have the same length, the complexities of their expression products are different. Therefore, ORF is the decisive factor that ensures the diversity of expression products and the strong expressivity of DHC-GEP. This is also the reason that the length of the head determines only the upper limit of complexity, not the lower limit. When discovering unknown governing equations, a relatively long head would be a good choice. Because with ORF, a long gene can represent either a simple equation or a complex equation.

Figure 2. Main characteristics of DHC-GEP. (a) Flowchart of DHC-GEP. (b) Schematic diagram of a gene and its corresponding expression tree and mathematical expression. (c) Strategy of dimensional verification.

Figure 3. Four genes with different ORFs.

Then, via dimensional verification (which will be introduced in § 2.2.2), all the individuals are classified into valid individuals and invalid individuals according to whether they satisfy the dimensional homogeneity. The valid individuals would be translated into mathematical expressions (MEs) and evaluated for losses with input data according to the loss function, which is defined using the mean relative error (MRE) as

\begin{equation} {{L_{MRE}}} = \frac{1}{N}\sum_{i = 1}^N {\left| {\frac{{{{\hat Y}_i} - {Y_i}}}{{{Y_i}}}} \right|} . \end{equation}

\begin{equation} {{L_{MRE}}} = \frac{1}{N}\sum_{i = 1}^N {\left| {\frac{{{{\hat Y}_i} - {Y_i}}}{{{Y_i}}}} \right|} . \end{equation}

Here, the variable with a superscript ![]() $\wedge$ is the predicted variable, and

$\wedge$ is the predicted variable, and ![]() $N$ is the total number of data points. The invalid individuals would be directly assigned a significant loss and eliminated in the subsequent iterations. The best individual (with the lowest loss) is replicated to the next generation directly. Other individuals are selected as superior individuals with probabilities that are inversely proportional to their losses. Based on the superior individuals, the population of the next generation is reproduced via genetic operators. Common genetic operators include replication, mutation, transposition and recombination. The schematic diagrams of them are shown in figure 4; the details are introduced as follows.

$N$ is the total number of data points. The invalid individuals would be directly assigned a significant loss and eliminated in the subsequent iterations. The best individual (with the lowest loss) is replicated to the next generation directly. Other individuals are selected as superior individuals with probabilities that are inversely proportional to their losses. Based on the superior individuals, the population of the next generation is reproduced via genetic operators. Common genetic operators include replication, mutation, transposition and recombination. The schematic diagrams of them are shown in figure 4; the details are introduced as follows.

(i) Replication is responsible for copying the selected superior individuals to the next generation. After replication, the population maintains the same size as the initial population.

(ii) Mutation is a change in some symbols of the genes. To maintain the structural organization of the genes, the symbols in the head can be changed into either functions or terminals, while the symbols in the tail can only be changed into terminals. In addition, note that mutations in genes do not necessarily lead to changes in expression products, unless the mutation occurs in ORF.

(iii) Transposition is the replacement of a gene segment with another position. Specifically, it includes insertion sequence (IS) transposition, root insertion sequence (RIS) transposition and gene transposition. For IS transposition, a short segment with a function or terminal in the first position is transposed to the head of the genes. The length of the transposed segment and the insertion site are randomly selected. The sequence before the insertion site remains unchanged. The sequence after the insertion site is shifted backwards as a whole, and the last symbols (as many as the length of the transposed segment) of the head are deleted. The RIS transposition is similar to IS transposition, except that the first position of the transposed segment must be a function, and the insertion site must be the root. Gene transposition occurs in the CSs that consist of multiple genes. During gene transposition, one gene switches position with another gene.

(iv) Recombination is an exchange of gene segments between two selected parent CSs, including one-point recombination, two-point recombination and gene recombination. For one-point recombination, one bond is randomly selected as the crossover point. Then, both selected CSs are cut at this point, and exchange the segments after the crossover point. For two-point recombination, two crossover points are selected, and the exchanged segments are between these two points. For gene recombination, the exchanged segment is an entire gene.

Figure 4. Schematic diagrams of genetic operators.

Note that the genetic operators, except replication, are not necessarily invoked in every CS, but are invoked with certain probabilities. In this work, the probabilities of the genetic operators being invoked refer to Ferreira (Reference Ferreira2006), listed in table 1, which were concluded from various examples via the trial-and-error approach.

Table 1. Probabilities for genetic operators being invoked.

The above processes are iteratively conducted until a satisfactory individual is obtained. Specifically, in benchmark studies, we manually terminate the evolution when the target equation is discovered. In cases of discovering the unknown constitutive relations, the evolution is terminated automatically if the optimal equation remains unchanged for 1000 consecutive generations.

Other advanced knowledge is provided in the appendices, including the Dc domain (Appendix B) for generating numerical constants and the linear scaling (Appendix C) for discovering numerical coefficients.

2.2.2. Dimensional verification

The dimensional verification is the additional process to implement the constraint of dimensional homogeneity, and is introduced as follows (a simplified version is shown in figure 2c). The general form of a governing equation is assumed to be

Here, ![]() $Y$ is the target variable, and

$Y$ is the target variable, and ![]() $f(\cdot )$ is the mathematical expression coded by a CS. Here

$f(\cdot )$ is the mathematical expression coded by a CS. Here ![]() $X$ and

$X$ and ![]() $\vartheta$ represent variables and physical constants, respectively. Dimensional homogeneity means that

$\vartheta$ represent variables and physical constants, respectively. Dimensional homogeneity means that ![]() $f(\cdot )$ should have the same dimension as

$f(\cdot )$ should have the same dimension as ![]() $Y$, and every part in

$Y$, and every part in ![]() $f(\cdot )$ should satisfy the constraint that the parameters for operators

$f(\cdot )$ should satisfy the constraint that the parameters for operators ![]() $+$ or

$+$ or ![]() $-$ must have the same dimensions.

$-$ must have the same dimensions.

Generally, dimensional verification includes calculating the dimension of ![]() $f(\cdot )$ and comparing it with the dimension of

$f(\cdot )$ and comparing it with the dimension of ![]() $Y$. For calculating dimensions, there are five principles that should be noted.

$Y$. For calculating dimensions, there are five principles that should be noted.

(i) Computers cannot directly deal with symbolic operations, but only numerical operations.

(ii) In SI units, there are seven base dimensions: length (

$L$); mass (

$L$); mass ( $M$); time (

$M$); time ( $T$); electric current (

$T$); electric current ( $I$); thermodynamic temperature (

$I$); thermodynamic temperature ( $\varTheta$); amount of substance (

$\varTheta$); amount of substance ( $N$); luminous intensity (

$N$); luminous intensity ( $J$). The dimensions of any other physical quantities can be derived by powers, products or quotients of these base dimensions.

$J$). The dimensions of any other physical quantities can be derived by powers, products or quotients of these base dimensions.(iii) Base dimensions are independent of each other. Any dimension cannot be derived by other base dimensions.

(iv) Physical quantities with different dimensions cannot be added or subtracted. Adding or subtracting the physical quantities with the same dimension does not change the dimension.

(v) When physical quantities are multiplied or divided, the corresponding dimensions are multiplied or divided equally.

Considering the above principles, we propose to assign number tags to physical quantities and replace the dimensional calculations with numerical calculations. For the seven base dimensions, the tags are 2, 3, 5, 7, 11, 13 and 17, respectively. These numbers are prime numbers, ensuring that the base dimensions are independent of each other. The tags for other physical quantities are derived according to their dimensions. For example, as the dimension of velocity is length divided by time (![]() $L/T$), its tag is defined as

$L/T$), its tag is defined as ![]() $2/5$. It is noteworthy that the tags are always in the form of fractions rather than floats to avoid truncation errors. The tags for numerical constants and dimensionless quantities are set to

$2/5$. It is noteworthy that the tags are always in the form of fractions rather than floats to avoid truncation errors. The tags for numerical constants and dimensionless quantities are set to ![]() $1$. In addition, according to the last two principles, we modify the operation rules of mathematical operators. The pseudocodes for addition (

$1$. In addition, according to the last two principles, we modify the operation rules of mathematical operators. The pseudocodes for addition (![]() $+$) and multiplication (

$+$) and multiplication (![]() $\times$) are provided in Algorithm 1 and Algorithm 2, respectively. Subtraction (

$\times$) are provided in Algorithm 1 and Algorithm 2, respectively. Subtraction (![]() $-$) and division (

$-$) and division (![]() $\div$) are defined in similar ways. With the number tags and modified mathematical operators, we can compute the dimension of each node in the ET from the bottom up. If the parameters for one function node (

$\div$) are defined in similar ways. With the number tags and modified mathematical operators, we can compute the dimension of each node in the ET from the bottom up. If the parameters for one function node (![]() $+$ or

$+$ or ![]() $-$) do not have the same dimension, the individual is directly identified as invalid one. Otherwise, we can calculate the specific dimension of the individual, i.e. the number tag for the root node. Comparing it with the tag for the target variable, we can conclude whether the individual is dimensionally homogeneous.

$-$) do not have the same dimension, the individual is directly identified as invalid one. Otherwise, we can calculate the specific dimension of the individual, i.e. the number tag for the root node. Comparing it with the tag for the target variable, we can conclude whether the individual is dimensionally homogeneous.

Algorithm 1 Modified addition function

Algorithm 2 Modified multiplication function

Note that the datasets and source codes used in this work are available on GitHub at https://github.com/Wenjun-Ma/DHC-GEP.

3. Demonstration on benchmark studies

3.1. Diffusion equation

We employ the DSMC method to simulate a two-dimensional diffusion flow in a square domain, as shown in figure 5(a). The side length of the square is ![]() $L = 100\lambda$, and

$L = 100\lambda$, and ![]() $\lambda$ is the mean free path of argon gas molecules under standard conditions (pressure

$\lambda$ is the mean free path of argon gas molecules under standard conditions (pressure ![]() $p = 1.01 \times {10^5}\ {\rm {Pa}}$ and temperature

$p = 1.01 \times {10^5}\ {\rm {Pa}}$ and temperature ![]() $T = 273\ {\rm K}$), i.e.

$T = 273\ {\rm K}$), i.e. ![]() $\lambda =6.25\times 10^{-8}\ \mathrm {m}$. As a result, the Knudsen number (

$\lambda =6.25\times 10^{-8}\ \mathrm {m}$. As a result, the Knudsen number (![]() $Kn = \lambda / L$) is 0.01. All the four sides are set to periodic boundaries. The simulation includes only argon gas, which is divided into two species, denoted as species-A and species-B, respectively. At the initial instant, the density distributions of these two species are

$Kn = \lambda / L$) is 0.01. All the four sides are set to periodic boundaries. The simulation includes only argon gas, which is divided into two species, denoted as species-A and species-B, respectively. At the initial instant, the density distributions of these two species are ![]() ${\rho _A} = 0.5{\rho _0}( {1 - \cos ( {2{\rm \pi} y/L} )} )$ and

${\rho _A} = 0.5{\rho _0}( {1 - \cos ( {2{\rm \pi} y/L} )} )$ and ![]() ${\rho _B} = 0.5{\rho _0}( {1 + \cos ( {2{\rm \pi} y/L} )} )$, respectively. Here,

${\rho _B} = 0.5{\rho _0}( {1 + \cos ( {2{\rm \pi} y/L} )} )$, respectively. Here, ![]() ${\rho _0}=1.78\ {\rm kg}\,{{\rm m}^{-3}}$ is the density of argon gas under standard conditions, and

${\rho _0}=1.78\ {\rm kg}\,{{\rm m}^{-3}}$ is the density of argon gas under standard conditions, and ![]() $y$ is the spatial coordinate in the vertical direction. The initial macroscopic velocity is uniformly zero. During simulation, the macroscopic velocity and the total density of the two species remain uniform and unchanged, while the respective densities of these two species vary with space and time.

$y$ is the spatial coordinate in the vertical direction. The initial macroscopic velocity is uniformly zero. During simulation, the macroscopic velocity and the total density of the two species remain uniform and unchanged, while the respective densities of these two species vary with space and time.

Figure 5. (a) Schematic diagram of the computational domain for the diffusion flow. (b) Distributions of density at the instants of ![]() $t= 0,\ 200\tau,\ 400\tau,\ 600\tau, 800\tau,\ 1000\tau$.

$t= 0,\ 200\tau,\ 400\tau,\ 600\tau, 800\tau,\ 1000\tau$.

The computational domain is divided into ![]() $256 \times 256$ cells, which means that the cell size (

$256 \times 256$ cells, which means that the cell size (![]() ${\rm \Delta} x,{\rm \Delta} y$) is approximately

${\rm \Delta} x,{\rm \Delta} y$) is approximately ![]() $0.4\lambda$. In each cell, 4000 simulation molecules are randomly distributed at the initial instant, with one simulation molecule representing

$0.4\lambda$. In each cell, 4000 simulation molecules are randomly distributed at the initial instant, with one simulation molecule representing ![]() $4\times 10^{6}$ real molecules. The initial thermal velocity for each simulation molecule is sampled randomly from a Maxwell distribution at 273 K. The computational time step (

$4\times 10^{6}$ real molecules. The initial thermal velocity for each simulation molecule is sampled randomly from a Maxwell distribution at 273 K. The computational time step (![]() ${\rm \Delta} t$) is

${\rm \Delta} t$) is ![]() $0.1\tau$, where

$0.1\tau$, where ![]() $\tau$ is the mean collision time of argon gas molecules under standard conditions with the definition of

$\tau$ is the mean collision time of argon gas molecules under standard conditions with the definition of ![]() $\tau =\lambda /\bar {c}$. Here,

$\tau =\lambda /\bar {c}$. Here, ![]() $\bar {c}$ is the molecular mean velocity, i.e.

$\bar {c}$ is the molecular mean velocity, i.e. ![]() $\bar {c} =\sqrt {8k_{B}T/{\rm \pi} m}$, where

$\bar {c} =\sqrt {8k_{B}T/{\rm \pi} m}$, where ![]() $k_B$ and

$k_B$ and ![]() $m$ are the Boltzmann constant and molecular mass, respectively.

$m$ are the Boltzmann constant and molecular mass, respectively.

Note that the density varies only along the vertical direction. We average the data along the horizontal direction to reduce the statistical errors. During simulation, we sample the macroscopic densities of species-A at the instants of ![]() $t = 0, 10\tau, 20\tau,\ldots, 1000\tau$. The temporal evolution of density distributions is shown in figure 5(b). The final dataset is composed of 25 856 (

$t = 0, 10\tau, 20\tau,\ldots, 1000\tau$. The temporal evolution of density distributions is shown in figure 5(b). The final dataset is composed of 25 856 (![]() $256 \times 101$) data points that are distributed in 256 cells along the vertical direction at 101 instants.

$256 \times 101$) data points that are distributed in 256 cells along the vertical direction at 101 instants.

The theoretical governing equation of this flow is the diffusion equation, i.e.

Here, ![]() $D$ is the diffusion coefficient. According to the Chapman–Enskog theory (Chapman & Cowling Reference Chapman and Cowling1990), the diffusion coefficient for an HS gas at the first-order approximation is

$D$ is the diffusion coefficient. According to the Chapman–Enskog theory (Chapman & Cowling Reference Chapman and Cowling1990), the diffusion coefficient for an HS gas at the first-order approximation is

Therefore, for the argon gas in our simulation, ![]() $D$ equals

$D$ equals ![]() $1.40 \times {10^{-5}}\ {{\rm m}^2}\ {{\rm s}^{ - 1}}$.

$1.40 \times {10^{-5}}\ {{\rm m}^2}\ {{\rm s}^{ - 1}}$.

Before using the dataset generated by DSMC, we test DHC-GEP and Original-GEP on a clean dataset. The only difference between the DSMC dataset and the clean dataset lies in the target variable (![]() ${{\partial \rho }}/{{\partial t}}$). In the clean dataset,

${{\partial \rho }}/{{\partial t}}$). In the clean dataset, ![]() ${{\partial \rho }}/{{\partial t}}$ is directly computed based on

${{\partial \rho }}/{{\partial t}}$ is directly computed based on ![]() $D$ and

$D$ and ![]() ${{{\partial ^2}\rho }}/{{\partial {y^2}}}$, according to (3.1). We define the function set as

${{{\partial ^2}\rho }}/{{\partial {y^2}}}$, according to (3.1). We define the function set as ![]() $\{ { + , - , \times, \div } \}$, and the terminal set as

$\{ { + , - , \times, \div } \}$, and the terminal set as ![]() $\{ {\mu,D, {{\partial \rho }}/{{\partial y}}, {{{\partial ^2}\rho }}/{{\partial {y^2}}}, {{{\partial ^3}\rho }}/{{\partial {y^3}}}} \}$. Here,

$\{ {\mu,D, {{\partial \rho }}/{{\partial y}}, {{{\partial ^2}\rho }}/{{\partial {y^2}}}, {{{\partial ^3}\rho }}/{{\partial {y^3}}}} \}$. Here, ![]() $\mu$ is the viscosity coefficient, and is introduced as a distraction term. According to the Chapman–Enskog theory (Chapman & Cowling Reference Chapman and Cowling1990),

$\mu$ is the viscosity coefficient, and is introduced as a distraction term. According to the Chapman–Enskog theory (Chapman & Cowling Reference Chapman and Cowling1990), ![]() $\mu$ equals

$\mu$ equals ![]() $({5{\rm \pi} }/{32}) ({\lambda ^{2} }/{\tau })\rho = 2.08 \times {10^{ - 5}}\ {\rm kg}\ {{\rm m}^{-1}}\ {{\rm s}^{ - 1}}$. All the hyperparameters are set to be the same for both GEP methods across all cases (see Appendix E). The derived equations are shown in table 2. It can be observed that the derived equation of DHC-GEP is consistent with the theoretical equation, while the derived equation of Original-GEP is partially correct. Specifically, Original-GEP identifies the correct function form and coefficient (

$({5{\rm \pi} }/{32}) ({\lambda ^{2} }/{\tau })\rho = 2.08 \times {10^{ - 5}}\ {\rm kg}\ {{\rm m}^{-1}}\ {{\rm s}^{ - 1}}$. All the hyperparameters are set to be the same for both GEP methods across all cases (see Appendix E). The derived equations are shown in table 2. It can be observed that the derived equation of DHC-GEP is consistent with the theoretical equation, while the derived equation of Original-GEP is partially correct. Specifically, Original-GEP identifies the correct function form and coefficient (![]() $1.40 \times {10^{ - 5}}$). In the view of data fitting, this is a correct result. However, Original-GEP fails to recognize that the coefficient (

$1.40 \times {10^{ - 5}}$). In the view of data fitting, this is a correct result. However, Original-GEP fails to recognize that the coefficient (![]() $1.40 \times {10^{ - 5}}$) preceding the diffusion term represents the diffusion coefficient. Consequently, while the resulting equation may be numerically accurate, it lacks complete physical significance. For another diffusion flow with a different diffusion coefficient, the derived equation of Original-GEP is no longer valid, while the derived equation of DHC-GEP is generally applicable.

$1.40 \times {10^{ - 5}}$) preceding the diffusion term represents the diffusion coefficient. Consequently, while the resulting equation may be numerically accurate, it lacks complete physical significance. For another diffusion flow with a different diffusion coefficient, the derived equation of Original-GEP is no longer valid, while the derived equation of DHC-GEP is generally applicable.

Table 2. Derived equations of DHC-GEP.

Subsequently, we test DHC-GEP and Original-GEP on the DSMC dataset. All settings are consistent with those in the test on the clean dataset. The derived equations are shown in table 2. The coefficient of the equation discovered by DHC-GEP has a minor deviation from that of the theoretical equation, and the loss is not zero. This is because DSMC is a stochastic molecule-based method, the data of which are inherently noisy. It is impossible for DSMC to simulate a flow with the diffusion coefficient being exactly ![]() $1.40 \times {10^{ - 5}}\,{{\rm m}^2}\,{{\rm s}^{ - 1}}$. Small fluctuations around the theoretical value are acceptable. In addition, calculating derivatives also introduces errors. Therefore, we believe that DHC-GEP has discovered the correct equation, while the derived equation of Original-GEP is clearly wrong for not satisfying the fundamental dimensional homogeneity. Besides, we generate another dataset of diffusion flow with a diffusion coefficient being

$1.40 \times {10^{ - 5}}\,{{\rm m}^2}\,{{\rm s}^{ - 1}}$. Small fluctuations around the theoretical value are acceptable. In addition, calculating derivatives also introduces errors. Therefore, we believe that DHC-GEP has discovered the correct equation, while the derived equation of Original-GEP is clearly wrong for not satisfying the fundamental dimensional homogeneity. Besides, we generate another dataset of diffusion flow with a diffusion coefficient being ![]() $3.0 \times {10^{ - 5}}\,{{\rm m}^2}\,{{\rm s}^{ - 1}}$ and calculate the losses of the two derived equations based on the new dataset. The losses for the derived equations of Original-GEP and DHC-GEP are 0.55 and 0.06, respectively. Therefore, the equation obtained by Original-GEP is clearly overfitting, while the equation obtained by DHC-GEP is generally applicable in different diffusion flows.

$3.0 \times {10^{ - 5}}\,{{\rm m}^2}\,{{\rm s}^{ - 1}}$ and calculate the losses of the two derived equations based on the new dataset. The losses for the derived equations of Original-GEP and DHC-GEP are 0.55 and 0.06, respectively. Therefore, the equation obtained by Original-GEP is clearly overfitting, while the equation obtained by DHC-GEP is generally applicable in different diffusion flows.

To test the sensitivity of DHC-GEP to hyperparameters, we conduct a parametric study on the length of the head, the number of genes in a CS and the number of individuals in a population. Specifically, the parameter spaces for these three hyperparameters are set to ![]() $\{ 10, 11, 12, 13, 14, 15 \}$,

$\{ 10, 11, 12, 13, 14, 15 \}$, ![]() $\{1, 2 \}$ and

$\{1, 2 \}$ and ![]() $\{800, 1000, 1200, 1400, 1600, 1660 \}$, respectively, resulting in a total of 72 (

$\{800, 1000, 1200, 1400, 1600, 1660 \}$, respectively, resulting in a total of 72 (![]() $6\times 2\times 6$) distinct parameter combinations. Other hyperparameters are kept unchanged, including the probabilities of invoking the genetic operators and the maximum number of evolution generations. In all 72 sets of parameter combinations, DHC-GEP consistently discovers the correct equation. In contrast, Original-GEP discovers 71 completely different results, all of which are overfitting and fail to satisfy the dimensional homogeneity constraint despite their lower losses than the correct equations.

$6\times 2\times 6$) distinct parameter combinations. Other hyperparameters are kept unchanged, including the probabilities of invoking the genetic operators and the maximum number of evolution generations. In all 72 sets of parameter combinations, DHC-GEP consistently discovers the correct equation. In contrast, Original-GEP discovers 71 completely different results, all of which are overfitting and fail to satisfy the dimensional homogeneity constraint despite their lower losses than the correct equations.

Considering that DSMC is a simulation method at mesoscale, which coarse grains the molecular description to the hydrodynamic regime (Hadjiconstantinou Reference Hadjiconstantinou2000), the influence of the coarse graining on the derived equations of DHC-GEP is discussed. For the same computational domain, we employ seven sets of sampling cells with different resolutions (![]() $256\times 256$,

$256\times 256$, ![]() $128\times 128$,

$128\times 128$, ![]() $64\times 64$,

$64\times 64$, ![]() $32\times 32$,

$32\times 32$, ![]() $16\times 16$,

$16\times 16$, ![]() $8\times 8$,

$8\times 8$, ![]() $4\times 4$) to acquire macroscopic physical quantities. The derived equations based on these datasets are summarized in table 3. It is encouraging to note that DHC-GEP is still capable of discovering the correct equation when the resolution is

$4\times 4$) to acquire macroscopic physical quantities. The derived equations based on these datasets are summarized in table 3. It is encouraging to note that DHC-GEP is still capable of discovering the correct equation when the resolution is ![]() $16\times 16$ and the size of the sampling cells is up to

$16\times 16$ and the size of the sampling cells is up to ![]() $6.3\lambda$. From the results, it can be concluded that DHC-GEP is robust to coarse graining, as long as the sampled data can accurately capture the gradients of macroscopic quantities.

$6.3\lambda$. From the results, it can be concluded that DHC-GEP is robust to coarse graining, as long as the sampled data can accurately capture the gradients of macroscopic quantities.

Table 3. Derived equations of DHC-GEP based on the datasets with different resolutions.

In DHC-GEP, the overfitting results are automatically filtered out due to not satisfying the dimensional homogeneity. On the contrary, Original-GEP always favours the equations with smaller loss, so it tends to converge to overfitting results if data are noisy. Our numerical experiences show that Original-GEP may discover the correct equations only when tuning the hyperparameters repeatedly and terminating the evolution at a proper generation (when overfitting equations have not appeared).

We compare the computational cost needed per 1000 generations of evolution. Based on the 3.5 GHz Intel Xeon E5-1620 processor, the average CPU runtime of Original-GEP (443 s) is almost twice that of DHC-GEP (216 s). The main reason is that DHC-GEP can identify some individuals as invalid through dimensional verification and then skip the process of evaluating losses for these individuals. It can still save computational time despite the extra expense of dimensional verification. Additionally, as the complexity of the problem increases, the number of invalid individuals also increases, so the advantage of the computational efficiency of DHC-GEP becomes more significant.

Furthermore, we conduct a much more challenging test in which we do not include the transport coefficients in the terminal set. Specifically, the terminal set is ![]() $\{ {\tau,\lambda, {{\partial \rho }}/{{\partial y}}, {{{\partial ^2}\rho }}/{{\partial {y^2}}}, {{{\partial ^3}\rho }}/{{\partial {y^3}}}} \}$, which involves only the fundamental physical property parameters of gas (molecular mean collision time (

$\{ {\tau,\lambda, {{\partial \rho }}/{{\partial y}}, {{{\partial ^2}\rho }}/{{\partial {y^2}}}, {{{\partial ^3}\rho }}/{{\partial {y^3}}}} \}$, which involves only the fundamental physical property parameters of gas (molecular mean collision time (![]() $\tau$) and molecular mean free path (

$\tau$) and molecular mean free path (![]() $\lambda$)), but excludes the diffusion coefficient (

$\lambda$)), but excludes the diffusion coefficient (![]() $D$). Other hyperparameters are the same as those in the original test. The derived equation is

$D$). Other hyperparameters are the same as those in the original test. The derived equation is

According to (3.2), the coefficient (![]() $0.579({\lambda ^{2} }/{\tau })$) is essentially the diffusion coefficient. This result demonstrates that DHC-GEP has the capability to derive accurate equations with minor prior knowledge of the essential transport coefficients, and can deduce the essential transport coefficients from fundamental physical property parameters using dimensional homogeneity as a constraint.

$0.579({\lambda ^{2} }/{\tau })$) is essentially the diffusion coefficient. This result demonstrates that DHC-GEP has the capability to derive accurate equations with minor prior knowledge of the essential transport coefficients, and can deduce the essential transport coefficients from fundamental physical property parameters using dimensional homogeneity as a constraint.

3.2. Vorticity transport equation

We employ DSMC to simulate the temporal evolution of a Taylor–Green vortex, as shown in figure 6(a). The simulation model is approximately the same as that of diffusion flow, including simulation conditions, geometry and boundary conditions. The major difference is that the initial distribution of macroscopic velocity is a Taylor–Green vortex,

where ![]() $u$ and

$u$ and ![]() $v$ are the velocities in the horizontal (

$v$ are the velocities in the horizontal (![]() $x$) and vertical (

$x$) and vertical (![]() $y$) directions, respectively, and

$y$) directions, respectively, and ![]() ${v_0}$ is the initial amplitude of velocity and equals

${v_0}$ is the initial amplitude of velocity and equals ![]() $30\,{\rm m}\,{{\rm s}^{ - 1}}$ in our simulation.

$30\,{\rm m}\,{{\rm s}^{ - 1}}$ in our simulation.

Figure 6. Temporal evolution of vorticity. (a) Taylor–Green vortex at the instants of ![]() $t = 0,100\tau, 200\tau$. Here,

$t = 0,100\tau, 200\tau$. Here, ![]() $\tau$ and

$\tau$ and ![]() $\lambda$ are the mean collision time and mean free path of argon gas molecules at standard condition, respectively. (b) Viscous flow around a cylinder at the instants of

$\lambda$ are the mean collision time and mean free path of argon gas molecules at standard condition, respectively. (b) Viscous flow around a cylinder at the instants of ![]() $t = 0\,{\rm s},\ 3\,{\rm s},\ 6\,{\rm s}$.

$t = 0\,{\rm s},\ 3\,{\rm s},\ 6\,{\rm s}$.

The computational cell and time step are set the same as those in the diffusion flow. Additionally, we introduce sampling cells. In this case, each sampling cell consists of 16 computational cells, i.e. the whole computational domain has ![]() $64 \times 64$ sampling cells. The macroscopic physical quantities obtained with sampling cells are cleaner than those obtained with computational cells, due to more molecules being sampled in each cell. In addition, to further reduce the statistical errors, 10 independent simulations are conducted with different random number sequences to make an ensemble average. During simulation, we sample the macroscopic velocities (

$64 \times 64$ sampling cells. The macroscopic physical quantities obtained with sampling cells are cleaner than those obtained with computational cells, due to more molecules being sampled in each cell. In addition, to further reduce the statistical errors, 10 independent simulations are conducted with different random number sequences to make an ensemble average. During simulation, we sample the macroscopic velocities (![]() $u,v$) and vorticities (

$u,v$) and vorticities (![]() ${\omega _z}$) at the instants of

${\omega _z}$) at the instants of ![]() $t = 0, 10\tau, 20\tau,\ldots, 310\tau$. The final dataset is composed of 131 072 (

$t = 0, 10\tau, 20\tau,\ldots, 310\tau$. The final dataset is composed of 131 072 (![]() $64 \times 64 \times 32$) data points distributed in

$64 \times 64 \times 32$) data points distributed in ![]() $64 \times 64$ sampling cells at 32 instants.

$64 \times 64$ sampling cells at 32 instants.

The theoretical governing equation is the vorticity transport equation,

Here, ![]() ${\omega _z}$ is the vorticity in the

${\omega _z}$ is the vorticity in the ![]() $z$ direction.

$z$ direction. ![]() $\upsilon$ is the kinematic viscosity and approximately equals

$\upsilon$ is the kinematic viscosity and approximately equals ![]() $1.17 \times {10^{ - 5}}\,{{\rm m}^2}\,{{\rm s}^{ - 1}}$. Compared with the diffusion equation (3.1), this equation is more complex, involving multiple variables and nonlinear terms.

$1.17 \times {10^{ - 5}}\,{{\rm m}^2}\,{{\rm s}^{ - 1}}$. Compared with the diffusion equation (3.1), this equation is more complex, involving multiple variables and nonlinear terms.

In this case, we define the target variable as ![]() ${{\partial {\omega _z}}}/{{\partial t}}$, the function set as

${{\partial {\omega _z}}}/{{\partial t}}$, the function set as ![]() $\{ { + , - , \times, \div } \}$, and the terminal set as

$\{ { + , - , \times, \div } \}$, and the terminal set as ![]() $

\{ \upsilon,u,v,\omega _z, {{\partial u}}/{{\partial x}}, {{{\partial ^2}u}}/{{\partial {x^2}}},

{{\partial u}}/{{\partial y}}, {{{\partial ^2}u}}/{{\partial {y^2}}}, {{\partial v}}/{{\partial x}},

{{{\partial ^2}v}}/{{\partial {x^2}}}, {{\partial v}}/{{\partial y}}, {{{\partial ^2}v}}/{{\partial {y^2}}},

{{\partial \omega _z }}/{{\partial x}}, {{{\partial ^2}\omega _z }}/{{\partial {x^2}}}, {{\partial \omega _z }}/{{\partial y}},

{{{\partial ^2}\omega _z }}/{{\partial {y^2}}}\}$.

$

\{ \upsilon,u,v,\omega _z, {{\partial u}}/{{\partial x}}, {{{\partial ^2}u}}/{{\partial {x^2}}},

{{\partial u}}/{{\partial y}}, {{{\partial ^2}u}}/{{\partial {y^2}}}, {{\partial v}}/{{\partial x}},

{{{\partial ^2}v}}/{{\partial {x^2}}}, {{\partial v}}/{{\partial y}}, {{{\partial ^2}v}}/{{\partial {y^2}}},

{{\partial \omega _z }}/{{\partial x}}, {{{\partial ^2}\omega _z }}/{{\partial {x^2}}}, {{\partial \omega _z }}/{{\partial y}},

{{{\partial ^2}\omega _z }}/{{\partial {y^2}}}\}$.

We also test the performances of DHC-GEP on the clean dataset and the DSMC dataset sequentially. The results are provided in table 2. An interesting observation is that the derived equations of DHC-GEP miss two convective terms ![]() $( {u ({{\partial {\omega _z}}}/{{\partial x}}), v({{\partial {\omega _z}}}/{{\partial y}})})$. This is caused by the unique feature of the Taylor–Green vortex. The analytical solution of the Taylor–Green vortex is

$( {u ({{\partial {\omega _z}}}/{{\partial x}}), v({{\partial {\omega _z}}}/{{\partial y}})})$. This is caused by the unique feature of the Taylor–Green vortex. The analytical solution of the Taylor–Green vortex is

\begin{equation} \begin{cases} \begin{array}{*{35}{@{}l}} u=v_0\, \cos(x)\sin(y) {\exp ({ - 2\upsilon t})}, \\ v={-}v_0\, \sin(x)\cos(y) {\exp ({ - 2\upsilon t})}, \\ {{\omega _z}} ={-}2v_0\, \cos(x)\cos(y) {\exp ({ - 2\upsilon t})}. \end{array} \end{cases} \end{equation}

\begin{equation} \begin{cases} \begin{array}{*{35}{@{}l}} u=v_0\, \cos(x)\sin(y) {\exp ({ - 2\upsilon t})}, \\ v={-}v_0\, \sin(x)\cos(y) {\exp ({ - 2\upsilon t})}, \\ {{\omega _z}} ={-}2v_0\, \cos(x)\cos(y) {\exp ({ - 2\upsilon t})}. \end{array} \end{cases} \end{equation}

Substituting (3.6) into the convective terms ![]() $( {u ({{\partial {\omega _z}}}/{{\partial x}}), v({{\partial {\omega _z}}}/{{\partial y}})})$, it is clear that the sum of the convective terms is automatically zero. Therefore, the equations without convective terms are also correct for the Taylor–Green vortex. It can be considered as a specialized variant of the complete vorticity transport equation in the Taylor–Green vortex.

$( {u ({{\partial {\omega _z}}}/{{\partial x}}), v({{\partial {\omega _z}}}/{{\partial y}})})$, it is clear that the sum of the convective terms is automatically zero. Therefore, the equations without convective terms are also correct for the Taylor–Green vortex. It can be considered as a specialized variant of the complete vorticity transport equation in the Taylor–Green vortex.

To discover the complete vorticity transport equation and validate the performance of DHC-GEP on discovering nonlinear terms, we further consider a viscous flow around a cylinder at Reynolds number being 100, as shown in figure 6(b). The dataset is the open access dataset provided by Rudy et al. (Reference Rudy, Brunton, Proctor and Kutz2017), and the theoretical governing equation is

For the sake of simplicity, we regard the data as dimensional data with kinematic viscosity ![]() $\upsilon = 0.01\,{{\rm m}^2}\,{{\rm s}^{ - 1}}$. We randomly sampled 200 spatiotemporal data points from the Taylor–Green vortex dataset and another 200 spatiotemporal data points from the flow around cylinder dataset, forming a hybrid dataset consisting of 400 spatiotemporal data points. The hyperparameters of DHC-GEP are consistent with those in the case of the Taylor–Green vortex. The results are provided in table 2. The DHC-GEP discovers the correct and complete vorticity transport equation. This demonstrates that in some special cases, where the overfitting equations may satisfy the dimensional homogeneity and fit well with the training data, employing multiple datasets can help mitigate overfitting. Overfitting equations are generally dataset specific. While an overfitting equation may adequately describe a particular dataset, it cannot be expected to generalize well to multiple datasets. Besides, note that the training dataset consisting of 400 spatiotemporal data points is relatively small. Generally, if the size of dataset is small, the information carried by data is sparse, leading to the derived equations overfitting to specific phenomena. However, in DHC-GEP, the overfitting equations are automatically filtered out by the constraint of dimensional homogeneity.

$\upsilon = 0.01\,{{\rm m}^2}\,{{\rm s}^{ - 1}}$. We randomly sampled 200 spatiotemporal data points from the Taylor–Green vortex dataset and another 200 spatiotemporal data points from the flow around cylinder dataset, forming a hybrid dataset consisting of 400 spatiotemporal data points. The hyperparameters of DHC-GEP are consistent with those in the case of the Taylor–Green vortex. The results are provided in table 2. The DHC-GEP discovers the correct and complete vorticity transport equation. This demonstrates that in some special cases, where the overfitting equations may satisfy the dimensional homogeneity and fit well with the training data, employing multiple datasets can help mitigate overfitting. Overfitting equations are generally dataset specific. While an overfitting equation may adequately describe a particular dataset, it cannot be expected to generalize well to multiple datasets. Besides, note that the training dataset consisting of 400 spatiotemporal data points is relatively small. Generally, if the size of dataset is small, the information carried by data is sparse, leading to the derived equations overfitting to specific phenomena. However, in DHC-GEP, the overfitting equations are automatically filtered out by the constraint of dimensional homogeneity.

4. Application on discovering unknown constitutive relations

The general governing equations for fluid flows are the conservation equations of mass, momentum and energy, as follows:

\begin{equation} \begin{cases}

\begin{array}{*{35}{l}} \dfrac{{{\rm D}\rho }}{{{\rm D} t}}

+ \rho \dfrac{{\partial {v_k}}}{{\partial {x_k}}} = 0, \\

\rho \dfrac{{{\rm D}{v_i}}}{{{\rm D} t}} + \dfrac{{\partial

p}}{{\partial {x_i}}} + \dfrac{{\partial {\tau

_{ik}}}}{{\partial {x_k}}} = \rho {F_i}, \\

\dfrac{3}{2}\dfrac{{{\rm D}\theta }}{{{\rm D} t}} +

\dfrac{{\partial {q_k}}}{{\partial {x_k}}} ={-} ({p{\delta

_{ij}} + {\tau _{ij}}})\dfrac{{\partial {v_i}}}{{\partial

{x_j}}}. \end{array} \end{cases}

\end{equation}

\begin{equation} \begin{cases}

\begin{array}{*{35}{l}} \dfrac{{{\rm D}\rho }}{{{\rm D} t}}

+ \rho \dfrac{{\partial {v_k}}}{{\partial {x_k}}} = 0, \\

\rho \dfrac{{{\rm D}{v_i}}}{{{\rm D} t}} + \dfrac{{\partial

p}}{{\partial {x_i}}} + \dfrac{{\partial {\tau

_{ik}}}}{{\partial {x_k}}} = \rho {F_i}, \\

\dfrac{3}{2}\dfrac{{{\rm D}\theta }}{{{\rm D} t}} +

\dfrac{{\partial {q_k}}}{{\partial {x_k}}} ={-} ({p{\delta

_{ij}} + {\tau _{ij}}})\dfrac{{\partial {v_i}}}{{\partial

{x_j}}}. \end{array} \end{cases}

\end{equation}

Here, ![]() ${{\rm D}}/{{{\rm D} t}}={\partial }/{{\partial t}}+{v_i}({\partial }/{{\partial {x_i}}})$ is the substantial derivative, and

${{\rm D}}/{{{\rm D} t}}={\partial }/{{\partial t}}+{v_i}({\partial }/{{\partial {x_i}}})$ is the substantial derivative, and ![]() $\theta = {k}_{B}T/m$ is the temperature in energy/mass units. Besides the five basic physical variables

$\theta = {k}_{B}T/m$ is the temperature in energy/mass units. Besides the five basic physical variables ![]() $\rho$,

$\rho$, ![]() ${v_i}$ and

${v_i}$ and ![]() $\theta$, there are eight additional variables, i.e. the viscous stress

$\theta$, there are eight additional variables, i.e. the viscous stress ![]() ${\tau _{ij}}$ and heat flux

${\tau _{ij}}$ and heat flux ![]() ${q_i}$. To numerically solve (4.1), the additional constitutive relations that close the viscous stress and heat flux are needed. In the continuum regime, the NSF equations are widely employed, which assumes that the viscous stress/heat flux is linearly proportional to the local strain rate/temperature gradient. However, for strong non-equilibrium flows, the NSF equations are not valid (Boyd, Chen & Candler Reference Boyd, Chen and Candler1995).

${q_i}$. To numerically solve (4.1), the additional constitutive relations that close the viscous stress and heat flux are needed. In the continuum regime, the NSF equations are widely employed, which assumes that the viscous stress/heat flux is linearly proportional to the local strain rate/temperature gradient. However, for strong non-equilibrium flows, the NSF equations are not valid (Boyd, Chen & Candler Reference Boyd, Chen and Candler1995).

Alternatively, high-order constitutive relations have been derived from the Boltzmann equation using the Chapman–Enskog method (Chapman & Cowling Reference Chapman and Cowling1990), including Burnett equations (Burnett Reference Burnett1936), super-Burnett (Su-Burnett) equations (Shavaliyev Reference Shavaliyev1993) and augmented-Burnett (Au-Burnett) equations (Zhong, MacCormack & Chapman Reference Zhong, MacCormack and Chapman1993), to account for the non-equilibrium effects. However, despite being proven to be superior to NSF equations, these equations are still unsatisfactory in strong non-equilibrium flows. In this work, we employ DHC-GEP to discover the unknown constitutive relations in two representative non-equilibrium flows, as the examples to illustrate how to apply DHC-GEP to discover unknown governing equations.

To avoid any potential misleading, it is important to emphasize that we do not employ DHC-GEP to discover the complex governing equations like (4.1). In this work, we are focused on discovering the constitutive relations for the two unclosed variables within (4.1), i.e. the viscous stress and heat flux.

4.1. One-dimensional shock wave

In the research community of non-equilibrium flows, the one-dimensional shock wave is a benchmark to validate solvers and formulations in numerical computations. We employ DSMC to simulate the one-dimensional shock wave of argon gas, the general structure of which is shown in figure 7.

Figure 7. General structure of one-dimensional shock. Each variable is normalized with its maximum value in the computational domain.

The one-dimensional shock essentially connects two equilibrium states. In this work, for upstream (i.e. the free stream), the temperature (![]() ${T_1}$) is

${T_1}$) is ![]() $300\,{\rm K}$, the density (

$300\,{\rm K}$, the density (![]() ${\rho _1}$) is

${\rho _1}$) is ![]() $1.067 \times {10^{ - 4}}\,{\rm kg}\,{{\rm m}^{ - 3}}$ and the mean free path (

$1.067 \times {10^{ - 4}}\,{\rm kg}\,{{\rm m}^{ - 3}}$ and the mean free path (![]() ${\lambda _1}$) is

${\lambda _1}$) is ![]() $1.114 \times {10^{ - 3}}\,{\rm m}$. For downstream, the state variables are computed with Rankine–Hugoniot relations

$1.114 \times {10^{ - 3}}\,{\rm m}$. For downstream, the state variables are computed with Rankine–Hugoniot relations

\begin{equation} \begin{cases}

\begin{array}{*{35}{l}} {T_2} = \dfrac{{({5Ma_\infty ^2 -

1})({Ma_\infty ^2 + 3})}}{{16Ma_\infty ^2}}{T_1},

\\ {\rho _2} = \dfrac{{4Ma_\infty ^2}}{{Ma_\infty ^2 +

3}}{\rho _1}, \\ {v_2} = \dfrac{{Ma_\infty ^2 +

3}}{{4Ma_\infty ^2}}{v_1}. \end{array}

\end{cases}

\end{equation}

\begin{equation} \begin{cases}

\begin{array}{*{35}{l}} {T_2} = \dfrac{{({5Ma_\infty ^2 -

1})({Ma_\infty ^2 + 3})}}{{16Ma_\infty ^2}}{T_1},

\\ {\rho _2} = \dfrac{{4Ma_\infty ^2}}{{Ma_\infty ^2 +

3}}{\rho _1}, \\ {v_2} = \dfrac{{Ma_\infty ^2 +

3}}{{4Ma_\infty ^2}}{v_1}. \end{array}

\end{cases}

\end{equation}

Here, ![]() $M{a_\infty }$ and

$M{a_\infty }$ and ![]() ${v_1}$ are the Mach number and velocity of the free stream, respectively. Note that for this one-dimensional problem, the velocity direction of each position in the flow field is along the

${v_1}$ are the Mach number and velocity of the free stream, respectively. Note that for this one-dimensional problem, the velocity direction of each position in the flow field is along the ![]() $x$ direction, and all the flow variables vary along only the

$x$ direction, and all the flow variables vary along only the ![]() $x$ direction. Therefore, we set one cell along the

$x$ direction. Therefore, we set one cell along the ![]() $y$ and

$y$ and ![]() $z$ directions, but 120 cells along the

$z$ directions, but 120 cells along the ![]() $x$ direction. A schematic diagram is shown in figure 8. The lengths of the computational domain and computational cell are

$x$ direction. A schematic diagram is shown in figure 8. The lengths of the computational domain and computational cell are ![]() $30{\lambda _1}$ and

$30{\lambda _1}$ and ![]() $0.25{\lambda _1}$, respectively. The time step is

$0.25{\lambda _1}$, respectively. The time step is ![]() $0.1\tau$.

$0.1\tau$.

Figure 8. Schematic diagram of the computational domain for one-dimensional shock.

During simulation, we sample the macroscopic velocities (![]() $u$), densities (

$u$), densities (![]() $\rho$), temperatures (

$\rho$), temperatures (![]() $T$) and heat flux in the

$T$) and heat flux in the ![]() $x$ direction (

$x$ direction (![]() ${q_x}$) at the instants of

${q_x}$) at the instants of ![]() $t = 10\,000\tau, 10\,010\tau,\ 10\,020\tau,\ldots,50\,000\tau$. Then, we obtain the final data by averaging all the sample data.

$t = 10\,000\tau, 10\,010\tau,\ 10\,020\tau,\ldots,50\,000\tau$. Then, we obtain the final data by averaging all the sample data.

In this case, the target variable is defined as the heat flux in the ![]() $x$ direction (

$x$ direction (![]() ${q_x}$). The function set is defined as

${q_x}$). The function set is defined as ![]() $\{ { + , - , \times, \div } \}$ as usual, while the terminal set is meticulously constructed to embed physics knowledge as follows.

$\{ { + , - , \times, \div } \}$ as usual, while the terminal set is meticulously constructed to embed physics knowledge as follows.

(i) Constitutive relations describe the local transport mechanisms of momentum and energy, and hence we regard local non-equilibrium parameters as key factors. Specifically, the GLL Knudsen number defined as

$K{n_Q} = ({{{\lambda _l}}}/{Q})({\partial Q}/{\partial x})$ is selected. The local non-equilibrium characteristics intensify as the increase of the absolute value of

$K{n_Q} = ({{{\lambda _l}}}/{Q})({\partial Q}/{\partial x})$ is selected. The local non-equilibrium characteristics intensify as the increase of the absolute value of  $K{n_Q}$. Here,

$K{n_Q}$. Here,  ${\lambda _l}$ is the local mean free path, and

${\lambda _l}$ is the local mean free path, and  $Q$ represents state variables, including temperature (

$Q$ represents state variables, including temperature ( $\theta$) and density (

$\theta$) and density ( $\rho$).

$\rho$).(ii) The transports of momentum and energy are driven by the gradients of state variables. As a result, the gradient terms are selected, including

${{\partial u}}/{{\partial x}}, {{\partial \rho }}/{{\partial x}}, {{\partial \theta }}/{{\partial x}}, {{{\partial ^2}u}}/{{\partial {x^2}}}, {{{\partial ^2}\rho }}/{{\partial {x^2}}}, {{{\partial ^2}\theta }}/{{\partial {x^2}}}, {{{\partial ^3}u}}/{{\partial {x^3}}}, {{{\partial ^3}\rho }}{{\partial {x^3}}}$ and

${{\partial u}}/{{\partial x}}, {{\partial \rho }}/{{\partial x}}, {{\partial \theta }}/{{\partial x}}, {{{\partial ^2}u}}/{{\partial {x^2}}}, {{{\partial ^2}\rho }}/{{\partial {x^2}}}, {{{\partial ^2}\theta }}/{{\partial {x^2}}}, {{{\partial ^3}u}}/{{\partial {x^3}}}, {{{\partial ^3}\rho }}{{\partial {x^3}}}$ and  ${{{\partial ^3}\theta }}/{{\partial {x^3}}}$.

${{{\partial ^3}\theta }}/{{\partial {x^3}}}$.(iii) The state variables themselves are important factors. Besides, it is noteworthy that the constitutive relations should satisfy Galilean invariance (Han et al. Reference Han, Ma, Ma and E2019; Li, Dong & Wang Reference Li, Dong and Wang2021), which means that the constitutive relations cannot contain velocity (

$u$) explicitly outside the partial differential operators. The proof is provided in Appendix F. Therefore, the state variables (

$u$) explicitly outside the partial differential operators. The proof is provided in Appendix F. Therefore, the state variables ( $\theta$ and

$\theta$ and  $\rho$) excluding velocity are selected. This is also the reason that we do not select the GLL Knudsen number of velocity.

$\rho$) excluding velocity are selected. This is also the reason that we do not select the GLL Knudsen number of velocity.(iv) The parameters representing the physical properties of gas are selected, including local viscosity (

$\mu$), local heat conductivity (

$\mu$), local heat conductivity ( $\kappa$), heat capacity ratio (

$\kappa$), heat capacity ratio ( $\gamma = 5/3$ for argon gas) and viscosity exponent (

$\gamma = 5/3$ for argon gas) and viscosity exponent ( $\omega = 1$ for Maxwell molecules).

$\omega = 1$ for Maxwell molecules).

Finally, the terminal set is ![]() $\{K{n_\theta },K{n_\rho }, {{\partial u}}/{{\partial x}}, {{\partial \rho }}/{{\partial x}}, {{\partial \theta }}/{{\partial x}}, {{{\partial ^2}u}}/{{\partial {x^2}}}, {{{\partial ^2}\rho }}/{{\partial {x^2}}}, {{{\partial ^2}\theta }}/{{\partial {x^2}}}, {{{\partial ^3}u}}/{{\partial {x^3}}}, {{{\partial ^3}\rho }}/{{\partial {x^3}}}, {{{\partial ^3}\theta }}/{{\partial {x^3}}},\rho,\theta,\mu,\kappa, \gamma,\omega \}$.