Published online by Cambridge University Press: 23 March 2022

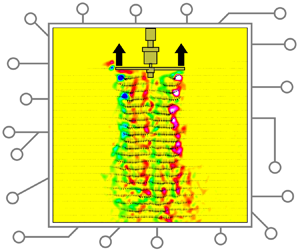

In particle image velocimetry (PIV), cross-correlation and optical flow methods have been the main approaches for obtaining the velocity field from particle images. In this study, a novel artificial intelligence (AI) architecture is proposed to predict an accurate flow field and drone rotor thrust from high-resolution particle images. As the ground truth, the flow fields past a high-speed drone rotor obtained from a fast Fourier transform-based cross-correlation algorithm were used, along with the thrusts measured by a load cell. Two deep-learning models were developed. For instantaneous flow-field prediction, a generative adversarial network (GAN) was employed for the first time. It is a spectral-norm-based residual conditional GAN translator that provides stable adversarial training and high-quality flow generation. Its prediction accuracy, measured by the coefficient of determination (R²), is 97.21 %. Subsequently, a deep convolutional neural network was trained to predict the instantaneous rotor thrust from the flow field; this model is the first AI architecture to predict the thrust. With the generated flow field as input, the network achieved an R² of 94.57 %. To understand the prediction pathways, the internals of the model were investigated using a class activation map. The results showed that the model recognized the area receiving kinetic energy from the rotor and successfully made a prediction. The proposed architecture is accurate and nearly 600 times faster than the cross-correlation PIV method for real-world complex turbulent flows. In this study, the rotor thrust was calculated directly from the flow field using deep learning for the first time.
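Both accuracy figures above are reported as a coefficient of determination. As a minimal sketch of how such a score can be computed, the following uses the standard definition R² = 1 − SS_res / SS_tot. The function name `r2_score` and the sample thrust values are hypothetical and chosen only for illustration; the exact evaluation procedure used in the study (for example, how flow-field pixels or time samples are pooled) is not stated here and is not assumed.

```python
from typing import Sequence


def r2_score(truth: Sequence[float], pred: Sequence[float]) -> float:
    """Coefficient of determination, R^2 = 1 - SS_res / SS_tot.

    Standard textbook definition; whether the study pools samples in
    exactly this way is an assumption of this sketch.
    """
    if len(truth) != len(pred) or len(truth) == 0:
        raise ValueError("truth and pred must be non-empty and equal in length")

    mean_truth = sum(truth) / len(truth)
    # Residual sum of squares: error of the prediction.
    ss_res = sum((t - p) ** 2 for t, p in zip(truth, pred))
    # Total sum of squares: spread of the reference data about its mean.
    ss_tot = sum((t - mean_truth) ** 2 for t in truth)
    if ss_tot == 0.0:
        raise ValueError("R^2 is undefined when the reference data are constant")
    return 1.0 - ss_res / ss_tot


# Hypothetical values standing in for measured and predicted thrust.
measured = [1.0, 2.0, 3.0, 4.0]
predicted = [1.1, 1.9, 3.2, 3.8]
score = r2_score(measured, predicted)
print(f"R^2 = {score:.4f}")
```

Under this definition, an R² of 97.21 % means the residual sum of squares is about 2.79 % of the total variance of the reference data. A perfect prediction gives exactly 1, and a model no better than predicting the mean gives 0.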