1. Introduction

Spherical robots represent a special, unconventional class of mobile robots with remarkable dynamic characteristics. These robots consist of an external spherical shell, with their drive units and electronic components sealed inside it. This inherently protects the key components of the robot and makes it suitable for hazardous and aquatic environments. Also, the outer spherical shell contacts the ground at a single point, which makes their motion highly efficient. Moreover, these robots cannot overturn, which makes them suitable for applications where frequent falls are inevitable. Furthermore, spherical robots can be designed as holonomic or non-holonomic robots, depending on the application. Therefore, despite some disadvantages, such as their inability to climb stairs, the structural characteristics of spherical robots make them robust and suitable for a multitude of applications, including child development [Reference Mizumura, Ishibashi, Yamada, Takanishi and Ishii1], underwater research and surveillance [Reference Gu, Guo and Zheng2, Reference Yue, Guo and Shi3], security [Reference Lu and Chu4], and terrestrial and extraterrestrial exploration [Reference Hogan, Forbes and Barfoot5].

The last decade saw much work in the field of spherical robots. Several designs were presented, especially for the actuation mechanism of the robot [Reference Chase and Pandya6]. One proposed strategy places a ball, wheels, or a car inside the spherical robot [Reference Ba, Hoang, Lee, Nguyen, Duong and Tham7, Reference Dong, Lee, Woo and Leb8]. The ball or car is then controlled, and the friction between the wheel or ball and the inner surface of the spherical shell transmits the force to the robot. Several researchers also used rotating flywheels or control moment gyroscopes as the actuation mechanism for spherical robots [Reference Kim and Jung9]–[Reference Lee and Jung11]. When rotated at high speed, these flywheels generate angular momentum, which is balanced by the movement of the spherical robot. Another strategy is to displace the center of mass of the robot, which disturbs the robot's equilibrium and drives it. Sliding masses were proposed to realize spherical robot motion based on this principle [Reference Burkhardt12]. More popular are pendulum-driven spherical robots, which use one or two pendulums to displace their center of mass and generate higher torque than other types of spherical robots [Reference Othman13]–[Reference Li, Zhu, Zheng, Mao, Arif, Song and Zhang16].

To fully utilize the advantages of spherical robots and deploy them in real-world field applications, visual sensing and perception abilities are necessary. Despite much work on these robots, especially in the last two decades, little attention has been paid to their sensing and perception. This is because these robots are still at an early stage of development, and much work is still needed on drive-unit design, dynamic modeling, and motion control. In addition, the unconventional structure of these robots makes it difficult to mount sensors on them. Only a few studies in the literature address the visual system of spherical robots. A portable amphibious robot was presented in ref. [Reference Satria17] for live-streaming purposes. Quan et al. designed a spherical robot with a binocular stereo camera for inspecting plant stem diameter [Reference Quan, Chen, Li, Qiao, Xi, Zhang and Sun18]. Similarly, a docking system based on a binocular camera was developed for an amphibious spherical robot [Reference Guo, Liu, Shi, Guo, Xing, Hou, Chen, Su and Liu19]. A monocular camera was used on the BYQ-5 spherical robot for vision-based path identification [Reference Zhang, Jia and Sun20].

Intricate operations in applications such as surveillance and planetary exploration, as well as autonomy, require wide visual information about the robot's surroundings. However, existing work on visual sensing and perception for spherical robots addresses very specific cases with a small field of view, so the robot must turn to collect further information. This is impractical for security and surveillance applications, where the environment is dynamic and the latest information is needed without delay. For this purpose, in this paper, we present a panoramic visual system for spherical robots that can acquire visual information about its entire surroundings at any given time.

To realize a panoramic view, image stitching is used. We considered only classical feature extraction and matching algorithms, since these methods are suitable for resource-constrained applications such as mobile robotics. SIFT (scale-invariant feature transform) is a renowned classical method for feature extraction and matching because it extracts distinctive features that are invariant to scale and rotation changes [Reference Lowe21]. However, SIFT descriptors are high-dimensional, which leads to large memory requirements. Moreover, SIFT requires careful parameter tuning, which can be sensitive to the image data and application. ORB (oriented FAST and rotated BRIEF) is another classical algorithm often used in robotic applications, especially because of its high computational speed, since its descriptors are binary [Reference Xu, Tian, Feng and Zhou22]. However, binary descriptors also limit its ability to capture fine-grained details and to discriminate between similar-looking features, which is crucial in image stitching and many other robotic visual applications. Furthermore, the camera mounting angles on the spherical robot can lead to different viewpoints, especially for close-range objects. ORB's orientation assignment may not fully compensate for large viewpoint changes, which might impair the stitching of the fields of view. A-KAZE is another algorithm known for its high efficiency and distinctive, robust features [Reference Alcantarilla, Nuevo and Bartoli23]; it represents a compromise between the speed of ORB and the accuracy of SIFT. In this paper, we use the SURF algorithm [Reference Bay, Ess, Tuytelaars and Van Gool24], which offers higher robustness to viewpoint changes and higher feature-matching accuracy than ORB owing to its more expressive feature descriptors. Moreover, compared to SIFT and A-KAZE, SURF has fewer parameters to tune and low-dimensional descriptors, leading to simplicity and lower memory usage, which is beneficial for robotic applications. On the downside, however, SURF is patented and has a higher computational cost than ORB and A-KAZE.

In this paper, we present the design of a panoramic visual system for spherical robots using classical algorithms, whose lower computational cost suits real-time robotic applications. We consider the case of a surveillance spherical robot. To mount four cameras, our spherical robot has side lobes with translucent domes. For the installation parameters of the onboard cameras, we derived a geometrical model that considers the robot's geometry as well as the overlapping ratio of the image streams. For feature extraction, classical SURF is used, and its computational cost is reduced by threshold optimization and by restricting extraction to a region of interest. Stitching seams are reduced by modifying the weight functions of the fade-in and fade-out algorithm. Cache-based dynamic image fusion is used to increase the frame rate of the output panoramic view. Finally, the YOLO algorithm is used for object detection in the wide field of view (FOV) as a case scenario of a surveillance spherical robot. Although algorithmic improvements and analyses are included, the primary goal of the paper is the design of the panoramic visual system for spherical robots rather than algorithmic novelty.

The main contributions of this paper are:

1. The first spherical robot with a 360$^{\circ}$-wide panoramic FOV is presented. A mathematical model is established for the camera installation parameters for long-range and short-range visual acquisition, which can be used for any spherical robot with cameras mounted on its sides.

2. Application-based algorithmic improvements are made specifically for the spherical robot panoramic visual system. First, the SURF algorithm is used for feature extraction and matching only in the region of interest (ROI). The ROI and the thresholds are selected experimentally for optimal SURF feature extraction and matching accuracy and efficiency. Improved weight functions are used in the fade-in and fade-out algorithm, resulting in negligible ghosting. For dynamic stitching, KeyFrames are cached, which further reduces the stitching time and improves the frame rate of the output panoramic video.

3. Finally, the visual system is tested on a spherical robot prototype for real-time performance. The output panoramic video is then used in an object detection application scenario, which pushes this class of robots toward real-world practical applications.

2. Spherical robot visual acquisition system model

The spherical robot is equipped with four cameras, each with a 120$^{\circ}$-wide FOV. Two of the cameras capture the front 180$^{\circ}$-wide FOV, whereas the other two capture the rear 180$^{\circ}$. The symmetry of the robot allows the same algorithms to be applied twice to acquire the front and rear panoramic views. Therefore, for clarity, we discuss only the two front cameras; all procedures are repeated for the rear two.

2.1. Short-range visual acquisition model

Short-range acquisition applies when the target object plane is less than 20 m from the robot. The schematic model is shown in Fig. 1, where β is the FOV of a single camera, α is the camera axis angle, L is the distance between the robot and the target object plane, d is the distance between the two cameras (the baseline width), D′E′ is the cumulative FOV of the two cameras, and DE is the width of the overlapped region. From the quadrilateral ACBF (Fig. 2),

\begin{equation*} \gamma =\beta -\alpha \end{equation*}

where γ is the angle of the overlapping region of the FOVs of the two cameras. The area CDE represents the overlapping region of the FOVs of the two cameras. The distance H of this region from the baseline AB is

\begin{equation*} H=\frac{d}{2\tan \left(\frac{\gamma }{2}\right)} \end{equation*}

Using similar triangles, we get the width DE of the overlapped region as

\begin{equation*} DE=\left(\frac{L}{H}-1\right)AB=\left(\frac{2L\tan \left(\frac{\gamma }{2}\right)}{d}-1\right)d=2L\tan \left(\frac{\gamma }{2}\right)-d \end{equation*}

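The short-range relations can be checked numerically. The sketch below assumes γ = β − α (the substitution used in Eq. (2b)) and H = d/(2tan(γ/2)) (the form implied by the expression for DE); the function name and the 5 m target distance are illustrative only, while the 120$^{\circ}$ FOV and 0.4 m baseline come from the text.

```python
import math


def short_range_overlap(L, d, beta_deg, alpha_deg):
    """Overlap geometry of two side cameras for a target plane at distance L.

    Assumes gamma = beta - alpha and H = d / (2 tan(gamma / 2)).
    All lengths must share one unit; angles are given in degrees.
    """
    gamma = math.radians(beta_deg - alpha_deg)  # angle of the overlapping FOV region
    if gamma <= 0:
        raise ValueError("no overlap: axis angle must be smaller than the camera FOV")
    half_tan = math.tan(gamma / 2.0)
    H = d / (2.0 * half_tan)     # distance of the overlap apex C from baseline AB
    DE = 2.0 * L * half_tan - d  # overlap width on the target plane (negative if L < H)
    return H, DE


# 0.4 m baseline and 120 deg FOV from the text; 35 deg axis angle and 5 m are hypothetical.
H, DE = short_range_overlap(L=5.0, d=0.4, beta_deg=120.0, alpha_deg=35.0)
print(f"H = {H:.3f} m, DE = {DE:.3f} m")
```

Because DE = (L/H − 1)d, the overlap width vanishes when the target plane sits exactly at the apex distance H and grows linearly with L beyond it.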

Figure 1. Short-range visual acquisition schematic model.

Figure 2. Camera axis angle for short-range visual acquisition.

The overlapping ratio, denoted by $\eta$, is critical for image stitching, with its desired value ranging between 0.3 and 0.5. The expression of the overlapping ratio for the spherical robot is derived as

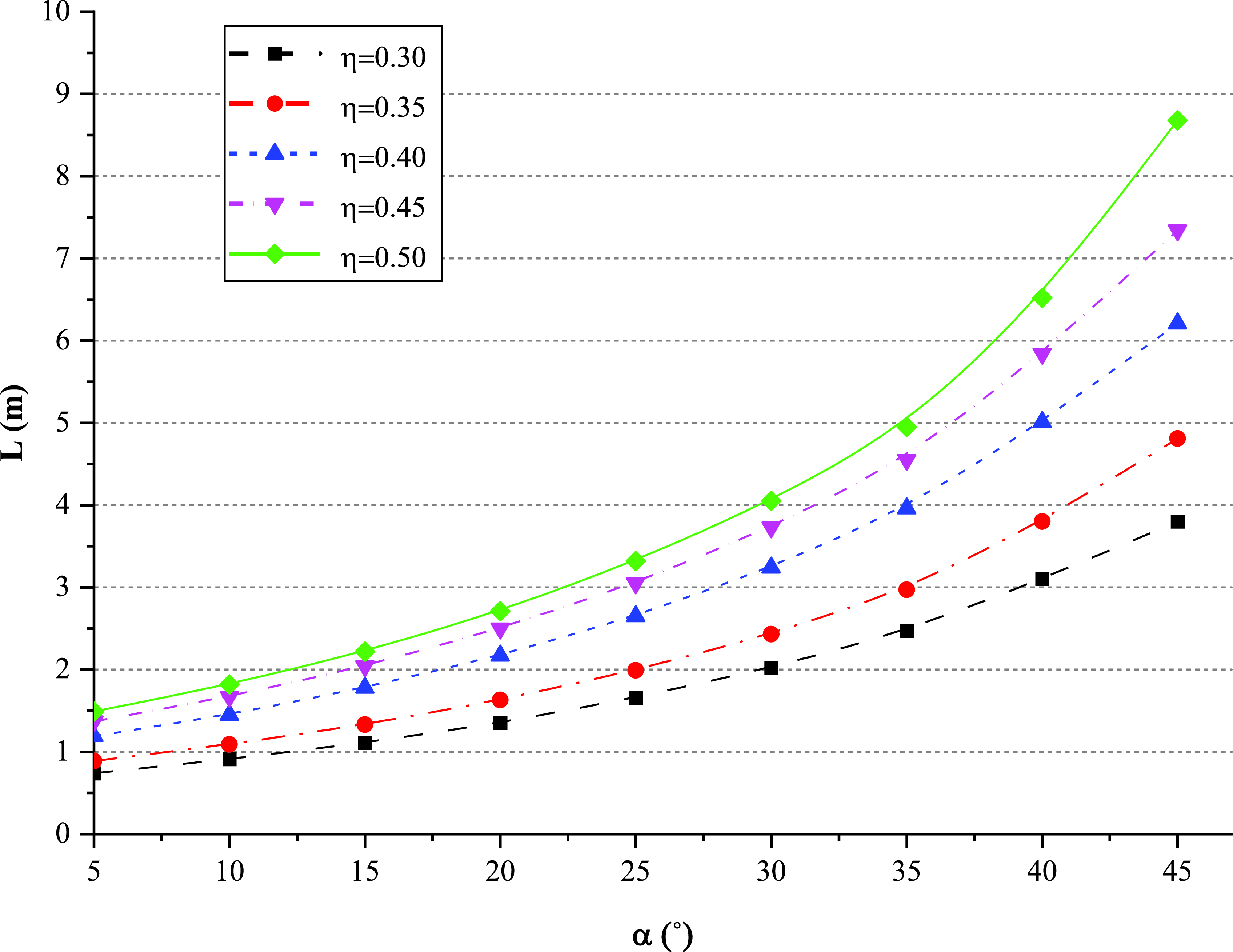

The distance L of the target object plane is then expressed by Eq. (2a):

Figure 3. Long-range visual acquisition schematic model.

Fig. 2 plots this relationship for the physical parameters of our spherical robot. It shows that as the distance of the target object increases, the axis angle of the two cameras should also increase. This also helps to minimize parallax problems.

2.2. Long-range visual acquisition model

The long-range model applies when the target object plane is more than 20 m from the robot. The schematic model is shown in Fig. 3. The overlapping ratio $\eta$ for long-range visual acquisition is given by

\begin{equation*} \eta =\frac{\gamma \sin \beta }{\beta }\left(1-\frac{d}{2L\tan \left(\frac{\gamma }{2}\right)}\right) \end{equation*}

Rearranging the above equation gives the relationship between the target object distance and the camera axis angle as

\begin{equation} L=\frac{d}{2\tan \left(\frac{\gamma }{2}\right)\left(1-\frac{\beta \eta }{\gamma \sin \beta }\right)}=\frac{d}{2\tan \left(\frac{\beta -\alpha }{2}\right)\left(1-\frac{\beta \eta }{\left(\beta -\alpha \right)\sin \beta }\right)} \tag{2b} \end{equation}

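Eq. (2b) can be evaluated directly. The sketch below implements it together with its algebraic inverse (the overlapping ratio as a function of L). It assumes angles are supplied in degrees and converted to radians; the ratio β/γ is unit-free, but sin β is not. The 120$^{\circ}$ FOV and 0.4 m baseline are from the text, whereas the axis angles in the loop are hypothetical.

```python
import math


def long_range_distance(eta, d, beta_deg, alpha_deg):
    """Target-plane distance L from Eq. (2b) for a desired overlapping ratio eta."""
    beta = math.radians(beta_deg)
    gamma = math.radians(beta_deg - alpha_deg)  # gamma = beta - alpha
    if gamma <= 0:
        raise ValueError("camera axis angle must be smaller than the camera FOV")
    factor = 1.0 - beta * eta / (gamma * math.sin(beta))
    if factor <= 0:
        raise ValueError("Eq. (2b) gives no positive distance for these parameters")
    return d / (2.0 * math.tan(gamma / 2.0) * factor)


def long_range_overlap(L, d, beta_deg, alpha_deg):
    """Algebraic inverse of Eq. (2b): overlapping ratio eta at distance L."""
    beta = math.radians(beta_deg)
    gamma = math.radians(beta_deg - alpha_deg)
    return gamma * math.sin(beta) / beta * (1.0 - d / (2.0 * L * math.tan(gamma / 2.0)))


# 120 deg FOV and 0.4 m baseline from the text; the axis angles are hypothetical.
for alpha in (40.0, 50.0, 60.0, 64.0):
    print(alpha, round(long_range_distance(0.4, 0.4, 120.0, alpha), 2))
```

For these inputs the computed distance grows with α, in line with the trend described for Fig. 4; the function raises an error once the factor 1 − βη/(γ sin β) is no longer positive.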

Fig. 4 plots the relationship expressed in Eq. (2b). As in short-range visual acquisition, angle α in long-range visual acquisition also increases with distance L. However, the effect of the overlapping ratio $\eta$ decreases at larger distances.

Figure 4. Camera axis angle for long-range visual acquisition.

The overlapping ratio for this case is expressed as

2.3. Spherical robot geometrical model

The shape and size of the spherical robot influence the camera axis angle as well as the overlapping ratio of the acquired video streams. Among the robot's dimensions, its width is the most critical in our case, because the cameras are attached to its sides. The schematic diagram of the robot with a camera is shown in Fig. 5.

Figure 5. Geometrical model of the spherical robot visual acquisition system.

In Fig. 5, the width of the spherical robot shell is denoted by $d_{0}$, and the radius of its side face is r. By trigonometry, we get:

From (2c) and (2d), we get the overlapping ratio:

\begin{equation*} \eta =\frac{\beta -\alpha }{\beta }=1-\frac{\alpha }{\arctan \left(-\frac{2r}{d-d_{0}}\right)} \end{equation*}


The relationship between the overlapping ratio $\eta$ and the baseline width is plotted in Fig. 6.

Figure 6. Overlapping ratio as a function of baseline width.

For a given camera axis angle α, the overlapping ratio decreases as the baseline width d increases. To keep the overlapping ratio within the desired range of 0.3 to 0.5, we selected a baseline width of 400 mm for our spherical robot.

3. Image stitching

This paper uses static and dynamic image stitching of the visual streams from the two cameras to generate the real-time panoramic FOV.

3.1. Threshold for feature extraction

The Hessian matrix, composed of second-order partial derivatives, describes the local curvature of a function. The Hessian matrix threshold determines the accuracy and efficiency of the feature-matching algorithm: the larger the threshold, the higher the accuracy and the lower the efficiency. We performed matching tests on several images and plotted the relationships, as shown in Fig. 7.

Figure 7. Influence of Hessian threshold on feature extraction and matching.

The goodmatch count represents the points that pass the threshold screening, whereas efficiency is the ratio of goodmatch points to the time spent. Setting the Hessian matrix threshold between 1500 and 2000 yields higher matching efficiency.
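The role of the Hessian threshold can be illustrated with a minimal single-scale sketch. SURF itself approximates the second derivatives with box filters on an integral image over several scales; this sketch instead uses central finite differences at one scale, so its response values are not on the same scale as the 1500 to 2000 thresholds used in our system. Function names and the synthetic blob are hypothetical.

```python
import math


def hessian_response(img, x, y):
    """det(H) at interior pixel (x, y) of img (a list of rows, img[y][x]),
    using central finite differences at a single scale."""
    dxx = img[y][x + 1] - 2 * img[y][x] + img[y][x - 1]
    dyy = img[y + 1][x] - 2 * img[y][x] + img[y - 1][x]
    dxy = (img[y + 1][x + 1] - img[y - 1][x + 1]
           - img[y + 1][x - 1] + img[y - 1][x - 1]) / 4.0
    return dxx * dyy - dxy * dxy  # large and positive at bright or dark blobs


def detect_blobs(img, threshold):
    """Interior pixels whose response exceeds threshold and is a strict 3x3 local maximum."""
    h, w = len(img), len(img[0])
    resp = {(x, y): hessian_response(img, x, y)
            for y in range(1, h - 1) for x in range(1, w - 1)}
    keypoints = []
    for (x, y), r in resp.items():
        if r <= threshold:
            continue  # weak response: a larger threshold rejects more candidates
        neighbours = [resp.get((x + i, y + j), float("-inf"))
                      for i in (-1, 0, 1) for j in (-1, 0, 1) if (i, j) != (0, 0)]
        if r > max(neighbours):
            keypoints.append((x, y))
    return keypoints


# Demo: a synthetic Gaussian blob centred at (4, 4) on a 9x9 image.
blob = [[1000.0 * math.exp(-((x - 4) ** 2 + (y - 4) ** 2) / 4.0) for x in range(9)]
        for y in range(9)]
print(detect_blobs(blob, threshold=1000.0))
```

Raising the threshold prunes candidates before any descriptor is computed, which is why it trades the number of matches against speed.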

The Lowe threshold bounds the ratio of the distance from a feature point to its nearest neighbor to the distance to its second-nearest neighbor. Using results from sample data, we related the Hessian matrix threshold to efficiency under different Lowe thresholds, as shown in Fig. 8(a).

Figure 8. Influence of Lowe threshold on feature extraction and matching.

As the Lowe threshold increases, the efficiency of feature matching also increases, but the feature-matching accuracy decreases. This is because the screening criterion is relaxed as the Lowe threshold increases. Moreover, the largest number of correct matching pairs was found for a Lowe threshold between 0.3 and 0.6. Therefore, for our spherical robot panoramic system, we set the Hessian threshold between 1500 and 2000 and the Lowe threshold between 0.3 and 0.6.
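The Lowe ratio test can be sketched as brute-force nearest-neighbor matching in which a match is kept only if its nearest descriptor is clearly closer than the second-nearest. This is a minimal illustration on toy 2-D descriptors (real SURF descriptors have 64 dimensions); the function name is hypothetical, and 0.6 is the upper end of the range selected above.

```python
import math


def ratio_test_matches(desc_a, desc_b, lowe_ratio=0.6):
    """Brute-force nearest-neighbour matching with Lowe's ratio test.

    Returns (index_in_a, index_in_b) pairs whose nearest-neighbour distance is
    below lowe_ratio times the second-nearest distance.
    """
    if len(desc_b) < 2:
        raise ValueError("the ratio test needs at least two candidate descriptors")
    matches = []
    for i, da in enumerate(desc_a):
        dists = sorted((math.dist(da, db), j) for j, db in enumerate(desc_b))
        (d1, j1), (d2, _) = dists[0], dists[1]
        if d1 < lowe_ratio * d2:  # a smaller ratio means a stricter, more selective test
            matches.append((i, j1))
    return matches


a = [(0.0, 0.0), (5.0, 5.0), (10.0, 0.0)]
b = [(0.1, 0.0), (10.0, 0.2), (5.0, 4.9), (5.0, 5.1)]
print(ratio_test_matches(a, b))  # the ambiguous descriptor (5, 5) is rejected
```

Lowering `lowe_ratio` discards ambiguous matches such as the second query above, which mirrors the accuracy and efficiency trade-off in Fig. 8. With OpenCV, the same test is commonly applied to the two neighbors returned by `BFMatcher.knnMatch(..., k=2)`.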

3.2. Feature extraction

Our spherical robot prototype uses an onboard Raspberry Pi, which offers limited computational power. Moreover, we chose SURF for feature extraction and matching primarily for its expressive descriptor, which ensures better feature matching but is computationally expensive.

To reduce the computation load for ensuring high output frame rates of the panoramic video stream, we extracted the features only from the region of interest (ROI), which is the overlapped region as shown in Fig. 9. Since this region only accounts for 30-40% of the full image, the computation cost is significantly reduced.
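Restricting extraction to the overlap can be expressed as a column range per image. The minimal sketch below assumes that the ROI is a vertical strip of relative width ε at the inner edge of each frame (the right edge of the left-camera image and the left edge of the right-camera image) and uses the 1280-pixel frame width from Section 5; the function names are hypothetical. With OpenCV, an equivalent restriction can be obtained by passing a mask to `detectAndCompute` instead of cropping.

```python
def overlap_roi(width, epsilon, side):
    """Column range [x0, x1) of the feature-extraction ROI for one camera image.

    Assumes the ROI is the inner vertical strip of relative width epsilon.
    """
    if not 0.0 < epsilon <= 1.0:
        raise ValueError("epsilon must be in (0, 1]")
    roi_w = round(width * epsilon)
    if side == "left":       # left camera: overlap lies at its right edge
        return width - roi_w, width
    if side == "right":      # right camera: overlap lies at its left edge
        return 0, roi_w
    raise ValueError("side must be 'left' or 'right'")


def to_full_image(keypoints, x0):
    """Shift keypoints detected inside a cropped ROI back to full-image coordinates."""
    return [(x + x0, y) for x, y in keypoints]


x0, x1 = overlap_roi(1280, 0.4, "left")
print(x0, x1, to_full_image([(10, 20)], x0))
```

Only ε·W of the W columns are searched, so the detection cost drops roughly in proportion to ε; the keypoints must then be shifted back before homography estimation.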

Figure 9. SURF feature extraction (a) extraction from the complete view, (b) extraction from ROI.

To explore the best-suited feature extraction region $\varepsilon$, we conducted image stitching experiments for different overlapping ratios $\eta$; the resulting relationship is shown in Fig. 10.

Figure 10. Feature extraction region analysis (a) based on stitching time, (b) based on matching accuracy.

In the figure, the area to the left of the red line ($\varepsilon$ = 0.35) is the region where the number of matching points is too low, leading to failed stitching. An increase in $\varepsilon$ increases the stitching time and decreases the accuracy, a consequence of the growing number of false matches as the feature extraction region enlarges. The inflection points for each value of $\eta$, after which the slopes of the respective curves decrease, are highlighted in the shaded region. From experiments, we observed that $\varepsilon = \eta + 1$ gives the best image stitching performance in terms of both speed and accuracy. Based on all the analyses discussed so far, we selected an overlapping ratio of $\eta$ = 0.4 for our spherical robot visual system. The extracted features were further cleansed through RANSAC, and the two images were then subjected to cylindrical spherical projection.
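The RANSAC cleansing step can be illustrated with a deliberately simplified model. The paper's pipeline estimates a homography (which needs four matches per sample and a DLT solve, as in OpenCV's `cv2.findHomography(src, dst, cv2.RANSAC, ransacReprojThreshold)`); the sketch below instead fits a pure 2-D translation, so one match per sample suffices and the code stays self-contained. The function name, threshold, and iteration count are assumptions.

```python
import random


def ransac_translation(pairs, threshold=2.0, iterations=200, seed=0):
    """RANSAC for a pure 2-D translation between matched points.

    pairs: list of ((x, y) in left image, (x, y) in right image).
    Returns (tx, ty, inlier_indices), refitted on the best consensus set.
    """
    if not pairs:
        raise ValueError("need at least one match")
    rng = random.Random(seed)
    best = []
    for _ in range(iterations):
        p, q = rng.choice(pairs)  # minimal sample for a translation: one match
        tx, ty = q[0] - p[0], q[1] - p[1]
        inliers = [i for i, (a, b) in enumerate(pairs)
                   if (b[0] - a[0] - tx) ** 2 + (b[1] - a[1] - ty) ** 2 <= threshold ** 2]
        if len(inliers) > len(best):
            best = inliers
    # Least-squares refit of a translation on the inliers is the mean displacement.
    tx = sum(pairs[i][1][0] - pairs[i][0][0] for i in best) / len(best)
    ty = sum(pairs[i][1][1] - pairs[i][0][1] for i in best) / len(best)
    return tx, ty, best


good = [((10.0 * i, 3.0 * i), (10.0 * i + 100.0, 3.0 * i - 5.0)) for i in range(8)]
bad = [((0.0, 0.0), (7.0, 50.0)), ((30.0, 30.0), (-20.0, 4.0))]
print(ransac_translation(good + bad))
```

The two false matches never gather a large consensus set, so they are discarded before the final fit; the same logic carries over to a homography model with a larger minimal sample.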

3.3. Static image fusion

For the seamless fusion of the images acquired by the two cameras on the spherical robot, we used the fade-in and fade-out algorithm and changed its weight functions, given by

In the above equation, g1 and g2 represent the gray values of the left and right camera images, respectively. Differences in the illumination of the pixels acquired by the two cameras lead to differences in the gray values of the two images. This difference is mitigated by multiplying the new weights by the ratio of the gray values. The quality of image fusion is evaluated in terms of standard deviation (SD) and information entropy (H), as given in Table I. The proposed weights yielded a higher SD, that is, greater dispersion of grayscale values, and a higher H, that is, a larger quantity of image information. This improves the fusion quality and mitigates stitching seams and ghosting, as seen in Fig. 11. A slight difference in the gray values of the two fused images is still observed after using our improved fade-in and fade-out algorithm. This difference arises because only the overlapping region of the two images is used. However, it has no drastic effect on real-time object detection performance, unlike ghosting, which degrades it. These results are presented in Section 5.4.
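For reference, the sketch below shows the standard linear fade-in and fade-out weights that the improved algorithm modifies (the improved weights themselves are not reproduced here), together with the two quality measures used in Table I: standard deviation and information entropy over the gray-level histogram. It works on a single overlap row of gray values; the function names are hypothetical.

```python
import math
from collections import Counter


def fade_blend_row(left_row, right_row):
    """Standard linear fade-in/fade-out across one overlap row (not the improved weights).

    left_row and right_row hold gray values of the same overlap pixels seen by the
    left and right cameras. The left weight falls linearly from 1 to 0 across the row.
    """
    n = len(left_row)
    if n != len(right_row):
        raise ValueError("rows must cover the same overlap")
    out = []
    for x, (g1, g2) in enumerate(zip(left_row, right_row)):
        w1 = 1.0 - x / (n - 1) if n > 1 else 0.5
        out.append(w1 * g1 + (1.0 - w1) * g2)
    return out


def std_dev(pixels):
    """Population standard deviation of the gray values (dispersion)."""
    mean = sum(pixels) / len(pixels)
    return math.sqrt(sum((p - mean) ** 2 for p in pixels) / len(pixels))


def entropy(pixels):
    """Information entropy H = -sum p_k log2 p_k over integer gray levels."""
    counts = Counter(int(round(p)) for p in pixels)
    total = len(pixels)
    return -sum(c / total * math.log2(c / total) for c in counts.values())


fused = fade_blend_row([100, 100, 100], [200, 200, 200])
print(fused, std_dev(fused), entropy(fused))
```

The linear weights hide the seam by construction, but any gray-level offset between the cameras is simply interpolated across the overlap, which is the residual difference the improved weights target.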

Table I. Evaluation of fusion algorithms.

Figure 11. Image fusion: (a) average method, (b) maximum method, (c) fade-in and fade-out, (d) improved fade-in and fade-out.

3.4. Dynamic image fusion

For the dynamic image fusion, we used the sequential image fusion method with caching of KeyFrames. The sequential method performs feature extraction and matching, feature cleansing, projection transformation, and image fusion for each image frame, which results in a panoramic video with a low frame rate.

Instead, we cached KeyFrames: one of the acquired image pairs is set as a KeyFrame, and its homography is calculated and saved in a cache. Since the spherical robot does not move very fast, the same homography can be reused for subsequent frames over a certain time range. This avoids feature matching and cleansing for each frame, which are the two most time-consuming steps in image stitching. The algorithm is shown in Fig. 12. In practice, we set a KeyFrame after every four frames. The time consumed by each step of the caching-based sequential dynamic image stitching is given in Table II.
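The KeyFrame cache can be sketched as a small wrapper that runs the expensive homography estimation only on KeyFrames and reuses the cached result otherwise. The class and both callables are hypothetical placeholders (for SURF matching plus RANSAC, and for projection plus fusion), and the sketch assumes that every fourth frame is a KeyFrame; `keyframe_interval` can be changed to match a different schedule, such as the 25-frame interval used in Section 5.2.

```python
class KeyFrameStitcher:
    """Reuse a cached homography between KeyFrames (sketch).

    estimate_homography(left, right) -> H   : expensive path, run on KeyFrames only
    warp_and_blend(left, right, H) -> frame : cheap path, run on every frame
    """

    def __init__(self, estimate_homography, warp_and_blend, keyframe_interval=4):
        if keyframe_interval < 1:
            raise ValueError("keyframe_interval must be at least 1")
        self.estimate_homography = estimate_homography
        self.warp_and_blend = warp_and_blend
        self.keyframe_interval = keyframe_interval
        self.cached_h = None
        self.frame_index = 0

    def process(self, left, right):
        is_keyframe = self.cached_h is None or self.frame_index % self.keyframe_interval == 0
        if is_keyframe:
            self.cached_h = self.estimate_homography(left, right)  # refresh the cache
        self.frame_index += 1
        return self.warp_and_blend(left, right, self.cached_h)


# Hypothetical stand-ins that just count how often the expensive step runs.
estimates = []
stitcher = KeyFrameStitcher(
    estimate_homography=lambda l, r: estimates.append((l, r)) or len(estimates),
    warp_and_blend=lambda l, r, h: (l, r, h),
    keyframe_interval=4,
)
for i in range(10):
    stitcher.process(f"L{i}", f"R{i}")
print(len(estimates))  # 3 (KeyFrames at frames 0, 4 and 8)
```

The design assumes the scene changes slowly between KeyFrames; if the robot turns quickly, a shorter interval trades frame rate for alignment accuracy.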

Figure 12. Sequential dynamic image stitching based on caching of KeyFrames.

Table II. Time for each step in the caching-based sequential dynamic image stitching.

The resulting panoramic video frame rate is plotted in Fig. 13. It can be seen that the frame rate fluctuates about an average of 22.16 fps, with a sudden drop to about 10 fps at each KeyFrame.
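The periodic drop follows directly from the caching scheme. If a KeyFrame takes t_K seconds and each reused frame takes t_r seconds, a cycle of N frames (one KeyFrame plus N − 1 reused frames) yields an average of N/(t_K + (N − 1)t_r) frames per second. The timings below are hypothetical and are not the values in Table II.

```python
def average_fps(t_keyframe, t_reuse, frames_per_cycle):
    """Frames per second over one cycle: 1 KeyFrame + (N - 1) frames reusing the cache.

    t_keyframe: seconds for a KeyFrame (matching, cleansing, projection, fusion).
    t_reuse:    seconds for a frame that reuses the cached homography.
    """
    if frames_per_cycle < 1:
        raise ValueError("a cycle contains at least the KeyFrame itself")
    total_time = t_keyframe + (frames_per_cycle - 1) * t_reuse
    return frames_per_cycle / total_time


# Hypothetical timings: 0.1 s per KeyFrame (10 fps instantaneous), 0.04 s otherwise.
for n in (1, 4, 25):
    print(n, round(average_fps(0.1, 0.04, n), 2))
```

This is frames divided by elapsed time; a plain mean of per-frame instantaneous rates, as a frame-rate plot may suggest, is never lower (arithmetic versus harmonic mean), so the two summaries should not be mixed when comparing configurations.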

Figure 13. Output video frame rate from cache-based sequential dynamic image stitching.

4. Real-time object detection

The 180

![]() $^{\circ}$

-wide panoramic view acquired is then used for realizing real-time object detection. The objective of the spherical robot is to carry out mobile surveillance task, which requires quick and correct identification of the objects in its 360

$^{\circ}$

-wide panoramic view acquired is then used for realizing real-time object detection. The objective of the spherical robot is to carry out mobile surveillance task, which requires quick and correct identification of the objects in its 360

![]() $^{\circ}$

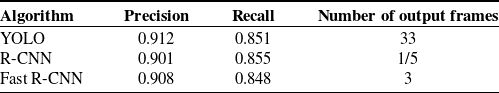

FOV. Deep learning guarantees both high accuracy and high detection speed. We conducted experiments to test YOLO, R-CNN, and Fast R-CNN algorithms to compare their speed, precision, and recall. The experimental results are presented in Table III. The results show YOLO outperforms both R-CNN and Fast R-CNN, especially in the number of output frames. Therefore, we used YOLO. Since Raspberry Pi could not handle this computation, we used the ground station for object detection.

$^{\circ}$

FOV. Deep learning guarantees both high accuracy and high detection speed. We conducted experiments to test YOLO, R-CNN, and Fast R-CNN algorithms to compare their speed, precision, and recall. The experimental results are presented in Table III. The results show YOLO outperforms both R-CNN and Fast R-CNN, especially in the number of output frames. Therefore, we used YOLO. Since Raspberry Pi could not handle this computation, we used the ground station for object detection.
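YOLO-style detectors output many overlapping candidate boxes, which are typically pruned with non-maximum suppression (NMS) based on intersection over union (IoU) before detections are reported. The sketch below is a generic greedy NMS under that assumption; it is not the exact post-processing of the YOLO version used here, which the text does not specify, and the function names and the 0.5 IoU threshold are illustrative.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the highest-scoring box, drop boxes that overlap a kept box too much."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(non_max_suppression(boxes, scores))  # the near-duplicate second box is suppressed
```

The first two boxes overlap with an IoU of about 0.68, so only the higher-scoring one survives, while the distant third box is kept.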

Table III. Object detection test results.

5. Experimental results

Real-time experiments were performed using the spherical robot prototype to comprehensively evaluate our designed panoramic visual system for the spherical robot. The experimental platform is shown in Fig. 14.

Figure 14. Spherical robot experimental platform.

Our spherical robot uses two pendulums for driving and two flywheels for inertial stabilization. The four cameras are installed on the two sides of the robot inside translucent lobes. A bearing prevents motion from being transmitted between the pendulum and the shaft to which the camera bracket is attached. For computation, the robot carries a Raspberry Pi. The video resolution should be neither too high (which increases computational cost) nor too low (which degrades stitching quality); therefore, we selected cameras with a maximum resolution of 1280×720. The overall system is shown in Fig. 15.

Figure 15. Spherical robot panoramic visual system.

5.1. Camera axis angle

Although SURF is robust to viewpoint changes, the feature matching speed and accuracy can be improved by adjusting the camera axis angles. To test this, we considered two cases: (1) short-range indoor experiments and (2) long-range outdoor experiments. Performance was judged based on good match points (feature points that meet the threshold after feature matching), inliers (feature points that lie within the threshold after applying RANSAC), matching accuracy, and matching time. The results for the short-range indoor experiments are shown in Fig. 16. The numbers of good match points, inliers, and correct matches decrease as the camera axis angle increases, whereas the matching time increases.

Figure 16. Influence of camera axis angle on feature matching for short-range image acquisition.

Similarly, Fig. 17 shows that the numbers of good matches, inliers, and correct matches decrease as the camera axis angle increases. However, for long-range visual acquisition, feature matching is not noticeably affected by the change in axis angle. For both short-range and long-range scenarios, the matching accuracy is best for a camera axis angle of 30-40$^{\circ}$. Also considering the feature matching speed, this camera axis angle range is the optimal choice and is used for our robot.

Figure 17. Influence of camera axis angle on feature matching for long-range image acquisition.

5.2. Real-time dynamic image-stitching

We tested the performance of our proposed algorithms during real-time spherical robot experiments and compared it against the original algorithms. During the experiments, we set a KeyFrame after every 25 frames. As discussed before, feature extraction and projection transformation were performed only on the KeyFrames. Fig. 18 compares the feature-matching accuracy and image stitching time of the original SURF algorithm (features extracted from the whole image) with those of the proposed SURF algorithm (features extracted only from the ROI). The matching accuracy of both algorithms stayed almost the same, since most of the inliers that can be correctly matched lie inside the ROI. However, the KeyFrame stitching time was shortened by a factor of 2.37.

Figure 18. Real-time KeyFrame stitching speed and accuracy.

5.3. Real-time panoramic field of view

Using the algorithms and parameters from the previous sections, the real-time 360$^{\circ}$ panoramic view of the spherical robot was formed. The image streams from the front two cameras were used to create the front 180$^{\circ}$-wide panoramic view of the scene, whereas the rear two cameras were used to generate the rear 180$^{\circ}$-wide panoramic view. The two views were not combined, because doing so would add computational cost that the onboard Raspberry Pi cannot handle. The resulting views are shown in Fig. 19.

Figure 19. Real-time image stitching results of the spherical robot panoramic visual system.

5.4. Real-time object detection

The experimental evaluation of the spherical robot visual system also covers the individual algorithms for feature extraction and matching, image projection transformation, feature cleansing, static image fusion, and dynamic image fusion, in addition to object detection. Frames from the real-time spherical robot object detection experiments are shown in Fig. 20.

Figure 20. Real-time object detection in 360$^{\circ}$-wide panoramic field of view.

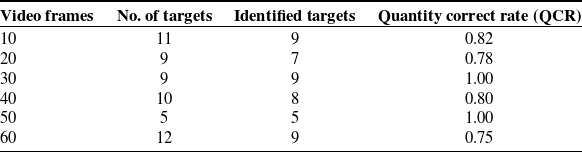

The quantified results of object detection in the panoramic view acquired by the spherical robot are given in Table IV. Object detection accuracy ranged from a minimum of 75% to a maximum of 100%. Since this paper focuses on developing the panoramic visual system for the spherical robot rather than on developing and benchmarking new algorithms, our initial tests used only four object classes. In the future, we aim to test our robot in environments with more object classes and to further explore algorithmic possibilities.

Table IV. Object detection results in panoramic field of view of spherical robot.

The final frame rate of the panoramic visual system for the spherical robot was 15.39 fps, as shown in Fig. 21(a). The figure also depicts the influence of constraining the SURF feature extraction to ROI in the KeyFrames. It is also interesting to note that if caching of the KeyFrames is used for sequential dynamic image stitching, the effect of constraining the feature extraction to only ROI depends upon the frequency of selecting KeyFrames. If the scene changes frequently, this strategy will not result in a significant improvement in the output frame rate.

Figure 21. Real-time spherical robot visual perception performance (a) target detection speed (b) target detection at seams for the improved image fusion algorithm.

Furthermore, image seams are the critical regions of the panoramic FOVs. To test the fade-in and fade-out algorithm with our proposed weights, we plotted the confidence of target recognition on the image seams, as shown in Fig. 21(b). Our proposed weights smooth pixel intensity changes across the seams, which not only improves visual quality but also improves target recognition.

5.5. Comparison with panoramic visual systems in literature

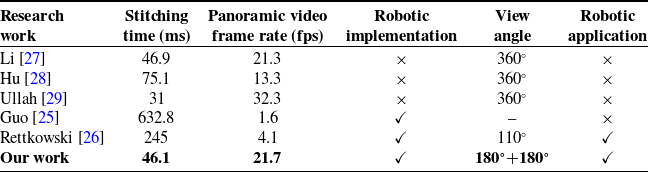

In the literature, we found several studies on algorithms for generating panoramic video. However, their physical implementations were mostly limited to panorama-generating drones and hand-held devices. The requirements of panorama generation for robotic platforms differ from those of such devices; therefore, our results cannot be compared directly with them. Only a few robotic platforms in the literature used multiple cameras to generate a wide FOV [Reference Guo, Sun and Guo25, Reference Rettkowski, Gburek and Göhringer26]. For comparison, we selected studies that either presented robotic visual systems or focused on panoramic video generation algorithms for real-time applications, as shown in Table V. In the table, robotic implementation refers to the case in which the panorama was generated by a mobile vehicle or robot, whereas robotic application indicates that the panoramic vision was used in a robotic application, such as object detection or navigation.

Table V. Panoramic visual systems in literature.

Table V shows that only Ullah et al. [Reference Ullah, Zia, Kim, Han and Lee29] reported a higher panoramic video frame rate than ours. They used six equally spaced cameras on a drone to realize a 360°-wide FOV. However, the drone was kept stationary throughout the process. Since the scenery did not change, it was unnecessary to continuously carry out feature extraction, image registration, and blending. Li et al. [Reference Li, Zhang and Zang27] reported a frame rate of 21.3 fps, which is close to the panorama frame rate of our spherical robot visual system. However, their algorithm was not tested on a robotic platform. The only study that considered both robotic implementation and application was presented by Rettkowski et al. [Reference Rettkowski, Gburek and Göhringer26]. They used two cameras on a wheeled robot to generate a panorama. However, the stitching speed was very low, and the panorama was only 110° wide.

6. Conclusion and future outlook

This paper provides a firm foundation for the development of intelligent spherical robots with 360°-wide visual perception. The designed panoramic visual system considers the geometrical constraints of the spherical robot and ensures a high frame rate of the output panoramic video. We used four onboard cameras, each acquiring a 120°-wide FOV. We used the SURF algorithm for its high robustness to viewpoint changes and its more expressive descriptors for feature matching, and we reduced its computational cost by constraining feature extraction to the overlapping region of two adjacent views. The image fusion performance of the fade-in and fade-out algorithm was enhanced by improved weight functions that allow a gradual dispersion of pixel intensities. Since image registration and homography calculation account for a major share of the computational time, KeyFrame caching was used in sequential dynamic image stitching, which increased the overall frame rate of the output video. Finally, object detection was realized on the real-time 360° panoramic field of view using the YOLO algorithm. The comprehensive experimental evaluation demonstrated a spherical robot prototype capable of providing a 360°-wide FOV at 21.69 fps and performing real-time mobile object detection at an average frame rate of 15.39 fps.

However, only four object classes were used in our tests, which is not enough for actual monitoring and mobile surveillance tasks. Furthermore, as the primary aim of the paper was application rather than algorithmic innovation, more advanced algorithms should be explored. Moreover, on unpaved ground, video stabilization algorithms will be necessary to compensate for the unstable motion of spherical robots; this is the next step toward a complete spherical robot visual system.

Author contributions

Muhammad Affan Arif contributed to the study conception, results plotting, and analyses and wrote the article. Zhu Aibin supervised and guided the system design and experimentation. Han Mao contributed to data gathering and article writing. Yao Tu contributed to the technical work.

Financial support

This work was supported in part by the National Natural Science Foundation of China under Grants 52175061 and U1813212, in part by the Shanxi Provincial Key Research Project under Grant 2020XXX001, in part by the Xinjiang Autonomous Region Major Science and Technology Special Project under Grant 2021A02002, in part by the Shaanxi Provincial Key R&D Program under Grants 2020GXLH-Y-007, 2021GY-333, 2021GY-286, and 2020GY-207, and in part by the Fundamental Research Funds for the Central Universities under Grant xzd012022019.

Competing interests

The authors declare no competing interests.

Ethical approval

Not applicable.