In September 2005, Eugene Garfield, creator of the Journal Impact Factor (JIF), addressed the Fifth International Congress on Peer Review in Biomedical Publication, in a presentation he called “The Agony and the Ecstasy: The History and the Meaning of the Journal Impact Factor.” He remarked, “Like nuclear energy, the impact factor is a mixed blessing. I expected it to be used constructively, while recognizing that in the wrong hands it might be abused” (Garfield 2005, 1).

In this “Notes from the Editors,” we discuss both the “mixed blessing” that is the journal impact factor and also other ways beyond JIF to measure the impact of a scholarly journal such as the APSR. JIF can serve as a useful tool in helping authors, editors, and publishers compare, across journals, the ratio of citations to citable publications during a given time period. However, it should not be mistaken for a direct and unproblematic measure of a journal’s influence, let alone used as a simple proxy for the quality or importance of the research that a journal publishes.

We begin by discussing JIF and the APSR’s recent performance according to this metric. We then consider complementary approaches to measuring the journal’s impact.

IMPACT FACTOR

Many scholars and most journal publishers and editors await eagerly, and sometimes anxiously, the annual release of the Journal Citation Reports (JCR), which has been published since 1975 and is now produced by Clarivate. The JCR reports journals’ impact factors for the previous year. Many people in the higher education and academic publishing industries lean on this metric as an approximation of journal quality and/or prestige.

What exactly is JIF? It is a ratio of the total number of citations received (numerator) to the total number of “citable items” published (denominator) in a given journal during a given time period. For example, the APSR’s 2022 JIF of 6.8 means that, on average, articles and letters published by the journal in 2020 and 2021 received a bit less than seven citations in 2022. In 2022, the APSR ranked fifth in JIF out of 187 established journals in the discipline, up from tenth out of 169 in 2017. In recent years, the APSR’s impact factor has also improved relative to that of other discipline-wide political science journals (see Figure 1).
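The ratio described above can be sketched in a few lines of Python. This is a minimal illustration, not Clarivate's actual procedure: the function name and the citation and item counts are hypothetical, chosen only so that the result matches the 6.8 figure quoted in the text. They are not the APSR's real counts.

```python
def journal_impact_factor(citations_by_pub_year, citable_items_by_pub_year):
    """Return citations divided by citable items over a two-year window.

    Both arguments map a publication year to a count. For a JIF of year Y,
    the keys are Y-2 and Y-1: citations are those received in year Y by
    items published in each of those years.
    """
    total_citations = sum(citations_by_pub_year.values())
    total_items = sum(citable_items_by_pub_year.values())
    if total_items == 0:
        raise ValueError("no citable items in the window")
    return total_citations / total_items


# Hypothetical counts (assumptions for illustration only).
citations_received_in_2022 = {2020: 370, 2021: 310}  # numerator: 680
citable_items_published = {2020: 52, 2021: 48}       # denominator: 100

jif_2022 = journal_impact_factor(citations_received_in_2022, citable_items_published)
print(round(jif_2022, 1))  # 6.8
```

Because the measure is a simple ratio, anything that changes what counts as a citation or as a “citable item” changes the result, which is why the choice of denominator matters so much.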

Figure 1. APSR Impact Factor, 2016–2022

Note: Beginning in 2020, JIF is calculated using online, rather than print, publication dates. As a result, JIF measures before and after 2020 are not directly comparable. AJPS = American Journal of Political Science; APSR = American Political Science Review; BJPS = British Journal of Political Science; JOP = Journal of Politics; POP = Perspectives on Politics.

Source: https://jcr.clarivate.com/jcr/home (Journal Citation Reports, 2017-2023).

Although a JIF of 6.8 is excellent for a political science journal, it would be terrible for a journal in the field of medicine, where the highest JIF in 2022 was more than 37 times that of the APSR. This comparison illustrates some well-known limitations of JIF as a measure of impact, limitations that Bankovsky (2019), Giles and Garand (2007), and others have shown apply within the discipline of political science (see also Bohannon 2016; Seglen 1997; Williams 2007).

JIF varies with disciplinary norms and practices, including some that are only tangentially related, if at all, to research influence. In medicine, for example, there is a strong emphasis on systematic reviews, which increases the numerators in medical journals’ impact factors (citations) relative to their denominators (“citable items”). In addition, JIF underestimates the impact of research in fields that emphasize books over articles, since it only counts citations in indexed journals. The measure is also sensitive to individual articles that receive unusually high numbers of citations, whether because they are genuinely impactful (in the sense that subsequent scholarship draws on their insights) or because they are widely criticized. What is more, research impact is not necessarily immediate; the 2-year window of the measure means that JIF fails to capture the impact of articles that receive relatively little uptake initially but grow influential with time.

BEYOND IMPACT FACTOR

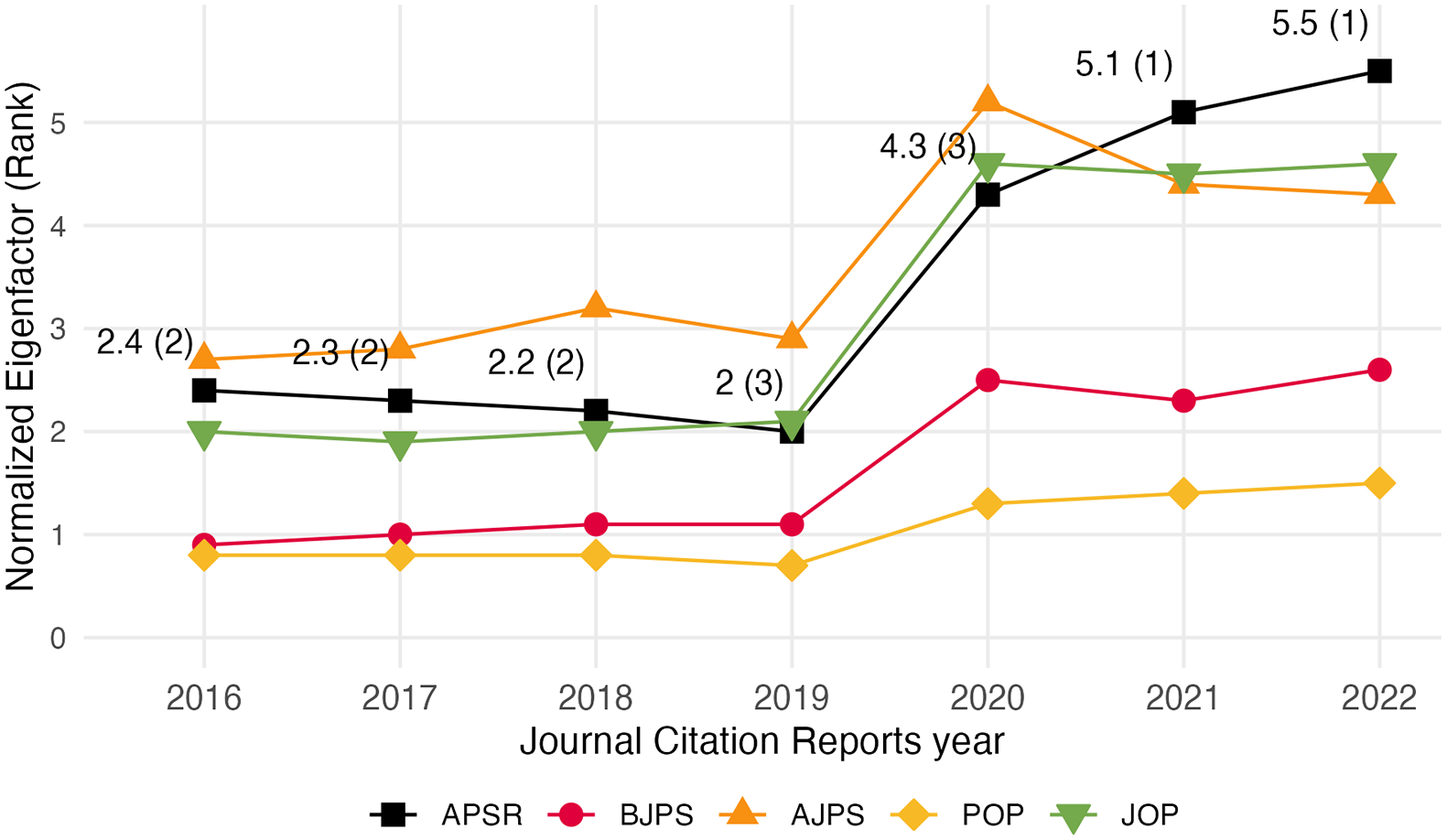

For these and related reasons, authors, editors, and publishers typically consider multiple measures when gauging the impact of a scholarly journal. One example of an alternative measure is the Normalized Eigenfactor, which tallies citations over 5-year, rather than 2-year, periods; weights more heavily citations from highly ranked journals compared with those from lower-ranked journals; eliminates from its calculus journal self-citations; and is normalized for the number of journals indexed in the JCR. With a 2022 Normalized Eigenfactor Score of 5.5, the APSR ranks first out of 187 political science journals (see Figure 2).

Figure 2. APSR Eigenfactor, 2016–2022

Source: Journal Citation Reports, Clarivate, 2017–2023.

As editors, we also track the APSR’s impact in the form of online engagement, using the Altmetric (short for alternative metrics) Attention Score: proprietary data on downloads, shares, and online citations and comments on social media, online news media, blogs, and the like. We view Altmetric Attention Scores as a useful complement to JIF and other citation-based measures, because they count both public and scholarly engagement and yet are positively and significantly correlated with scholarly citation patterns (Dion, Vargas, and Mitchell 2023).

Altmetric Attention Scores are calculated only at the level of the individual article, and an article’s score can increase or decrease over time. Here, we gathered the Altmetric Attention Scores for all research outputs published in recent volumes of the same five journals and calculated the median score by journal and year. As is the case with the journal’s impact factor, this snapshot of its Altmetric Attention Score has improved in recent years, both in absolute terms and relative to that of other discipline-wide political science journals (see Figure 3).
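The aggregation step described above, taking article-level scores and reporting a median per journal and year, might look like the following sketch. The record layout, the journal names, and every score are hypothetical placeholders; the actual data come from Altmetric.com and are not reproduced here.

```python
from collections import defaultdict
from statistics import median


def median_score_by_journal_year(records):
    """Group (journal, year, score) records and return the median score per group."""
    grouped = defaultdict(list)
    for journal, year, score in records:
        grouped[(journal, year)].append(score)
    return {key: median(scores) for key, scores in grouped.items()}


# Hypothetical article-level scores (assumptions for illustration only).
records = [
    ("Journal A", 2021, 4), ("Journal A", 2021, 10), ("Journal A", 2021, 250),
    ("Journal A", 2022, 6), ("Journal A", 2022, 12),
    ("Journal B", 2022, 3), ("Journal B", 2022, 5), ("Journal B", 2022, 8),
]

medians = median_score_by_journal_year(records)
for (journal, year), value in sorted(medians.items()):
    print(journal, year, value)
```

In this made-up example, the single article scoring 250 does not move the 2021 median for "Journal A", which stays at 10. That robustness to a few unusually prominent articles is one plausible reason to summarize by the median rather than the mean, although the text does not state the rationale.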

Figure 3. APSR Altmetrics, 2016–2022

Source: Altmetric.com, as of June 30, 2023.

It is worth underscoring that impact as captured by any citation- or engagement-based measure should not be conflated with research significance or quality. Presumably, such conflation is among the types of “abuse” that worried Eugene Garfield.

Measures like JIF, Normalized Eigenfactor, and Altmetric Attention Score reflect patterns of scholarly and reader engagement, which can vary with factors wholly unrelated to the importance of the research that a journal publishes. Engagement might vary with, for example, whether a press is relatively fast or slow to adopt innovations that increase readership, like digital publishing and Open Access; whether a journal has an active social media presence; and whether a journal’s website is easy to access and navigate. What is more, research suggests that engagement with individual articles varies with research-irrelevant author characteristics, including race, age, gender, and institutional affiliation (Dion, Sumner, and Mitchell 2018; Tahamtan, Afshar, and Ahamdzadeh 2016). And bias readily creeps into engagement, as illustrated by the phenomenon of “[w]ell-known and highly-cited authors achiev[ing] citations simply due to their prominence and prestige in their field of study” (Tahamtan, Afshar, and Ahamdzadeh 2016, 1208).

In other words, not just JIFs, but also Normalized Eigenfactor, Altmetric Attention Scores, and other citation- and engagement-based measures of research impact are “mixed blessings.” As Garfield (2005) stressed, they can be informative when they are “used constructively,” and in ways mindful of their limitations. However, they should not be mistaken for, and they should not be relied on as proxies for, objective, unbiased measures of research importance.
