Introduction

The Clinical and Translational Science Award (CTSA) Program seeks to transform how biomedical research is conducted, speed the translation of discoveries into treatments for patients, engage communities in clinical research efforts, and train new generations of clinical and translational researchers. The CTSA Program was established in 2006 under the National Center for Research Resources (NCRR) with funding for 12 academic health centers. Currently, the CTSA Program is managed by the National Center for Advancing Translational Sciences (NCATS), which was created as a result of the reorganization of the National Institutes of Health (NIH) and the abolishment of NCRR. NCATS invests more than half a billion dollars annually to fund a national consortium of more than 50 academic medical research institutions (referred to as CTSA hubs) across 30 states and the District of Columbia [1]. The CTSA Program is considered a large, complex initiative, with CTSA hubs varying in size (financially and operationally), program goals, services offered, priority audiences, and affiliated institutions connected to the funded academic health centers.

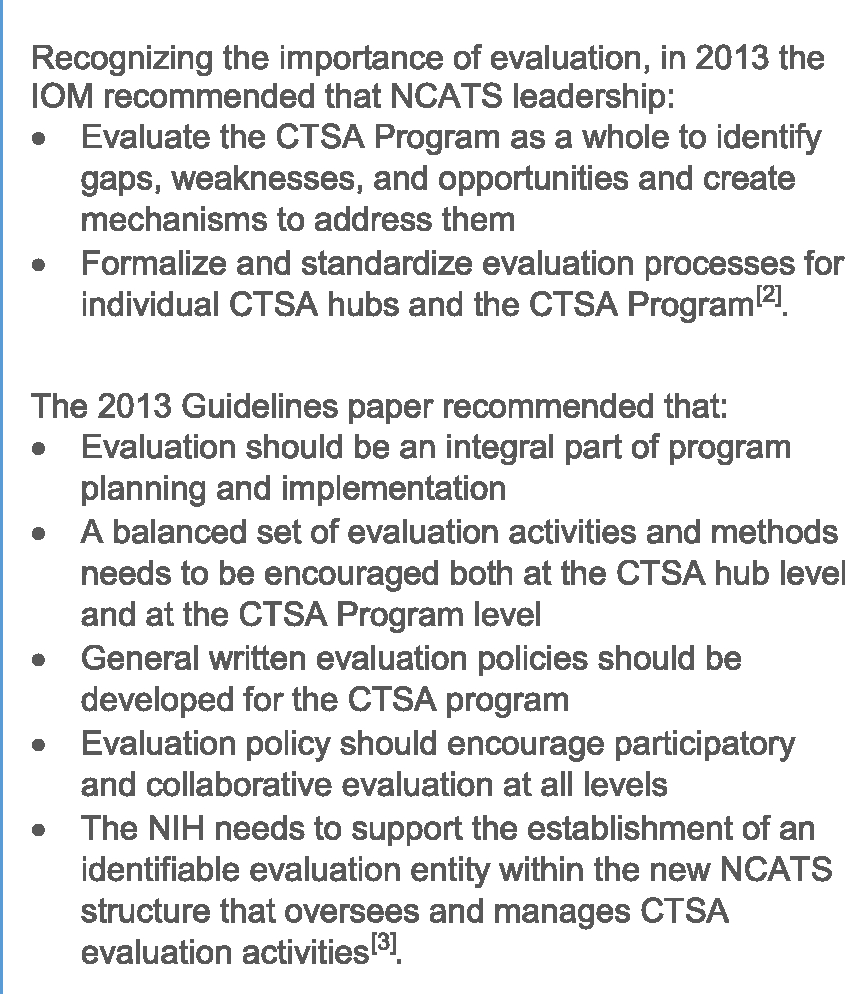

At the inception of NCATS, NIH commissioned an Institute of Medicine (IOM) study in July 2012 to review the CTSA Program. In June 2013, the IOM released its report, in which it provided recommendations to strengthen the CTSA Program to support transforming translational science for the benefit of human health [2]. Since then, NCATS and CTSA Program leadership have viewed this report as a strategic guiding document that sets the vision moving forward. In the 2013 report, the IOM recommended that formal CTSA Program-wide evaluation processes be undertaken to measure progress toward NCATS’ vision for the CTSA Program [2]. Around the same time, a group of CTSA evaluators also released a special publication of CTSA-specific evaluation guidelines based on the American Evaluation Association’s Guiding Principles as the cornerstone of good evaluation practice [3, 4]. The 2013 Guidelines publication noted that “an endeavor as ambitious and complex as the CTSA Program requires high-quality evaluation…to show that it is well implemented, efficiently managed, adequately resourced and demonstrably effective” [3].

The purpose of this paper is to examine the progress made to evaluate the CTSA Program from the perspectives of CTSA evaluators. We use a content analysis of evaluation requirements in the CTSA Funding Opportunity Announcements (FOAs) released since 2005 to illustrate the changing definition, influence, and role for evaluation. Additionally, based on input from CTSA evaluators, gathered via a survey in 2018, we describe opportunities to further strengthen CTSA evaluation and provide needed data for documenting impact to its diverse stakeholders.

Importance of Evaluation

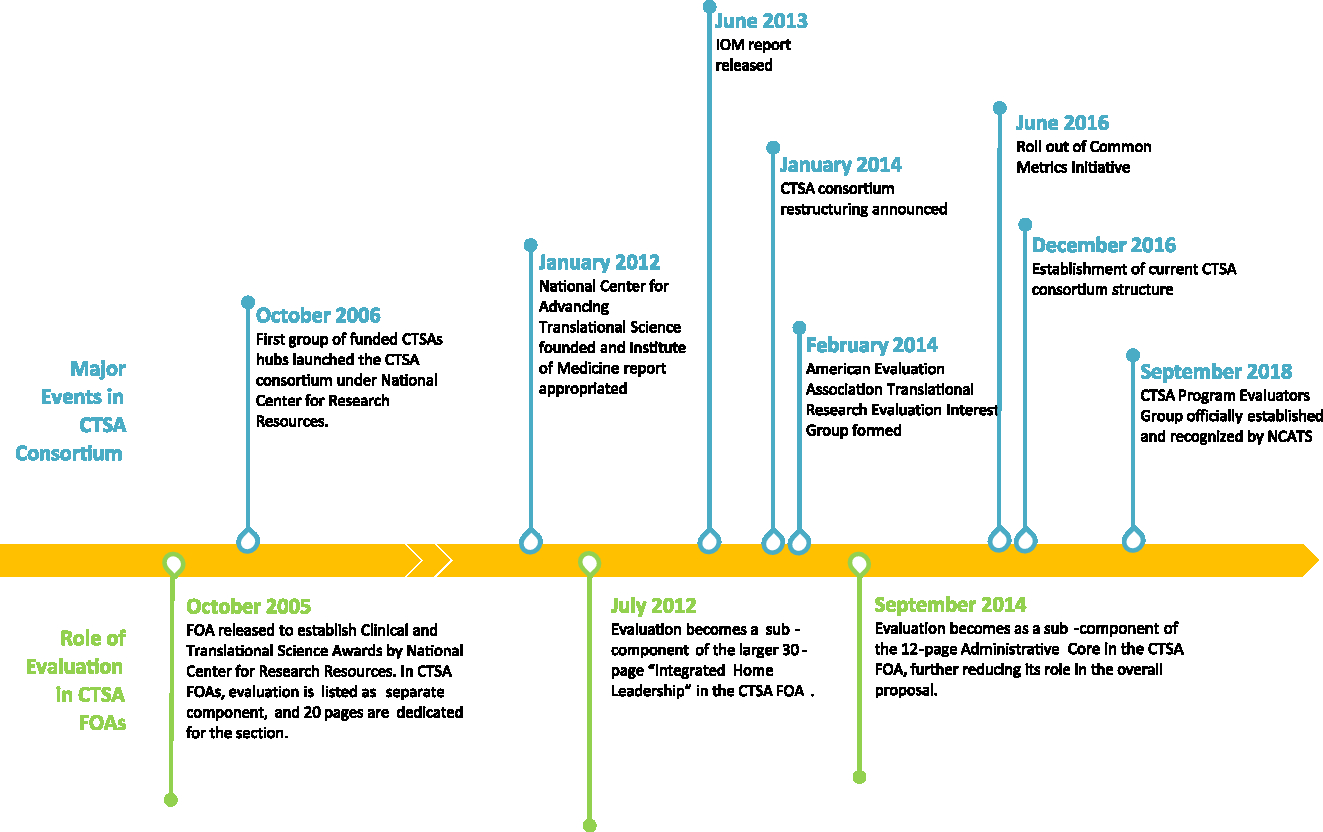

Evaluation, as used in the CTSA context and in the IOM report, is consistent with the widely used definition put forward by Patton. Patton defines evaluation as the “systematic collection of information about the activities, characteristics, and results of programs to make judgements about the program, improve or further develop program effectiveness, inform decisions about future programming, and/or increase understanding” [5]. The IOM report indicates that “in a multifaceted and complex effort such as the CTSA Program, evaluation is a formidable undertaking, but one that is vital to ensuring accountability and planning for future directions” [2]. In the report, which reviewed the CTSA Program from 2006 to 2012, the IOM commended NIH for having recognized the importance of evaluation by putting in place requirements for CTSA hubs to have and implement evaluation plans, initiating external evaluations of the CTSA Program as a whole, and supporting the Evaluation Key Function Committee (KFC). However, as illustrated in Fig. 1 and described below, starting in 2012, the role of evaluation, the ways evaluators collaborate across hubs, evaluation priorities at CTSA hubs, and guidance from NIH have been inconsistent [6–18].

Fig. 1. CTSA Evaluation Timeline 2005–2018. This figure illustrates the changing priorities, roles, and definitions of evaluation in CTSA FOAs. It also highlights major events in the CTSA Consortium related to evaluation, such as the release of the IOM report, the formation of evaluators’ collaborative groups, the implementation of the CMI, and the establishment of the current CTSA consortium structure.

Role of Evaluation at the CTSA Program Level

FOAs communicate the funding agencies’ priorities and goals. Therefore, FOAs released since 2005 were reviewed to better understand the importance given to evaluation by NCATS [6–18]. This review suggested that at the time of the IOM report, evaluation was considered a “key function” of the CTSA Program, requiring evaluation to be addressed, substantively and in detail, as a separate component in the grant proposal. Hubs were asked to describe how evaluation would be used to assess the implementation of program goals, track progress toward indicators, conduct studies to justify value, and support national evaluation of the CTSA Program. At that time, CTSA hub evaluators also had a formal CTSA-sponsored forum, called the KFC, to share resources and expertise and to engage in substantial cross-hub discussions and projects.

However, beginning in 2012 (under NCATS), the FOAs no longer listed evaluation as its own separate core. Evaluation was, instead, folded into the Administrative (or formally called Integrated Home Leadership) section, with limited space to describe evaluation goals and methods. The most significant changes occurred in 2014, when NCATS announced the restructuring and dissolution of evaluation as a “key function.” During this shift, the FOAs instituted stricter page limits for CTSA proposals, leaving evaluation with minimal space to describe the methods by which the hub’s goals and impact would be assessed.

In 2014, NCATS FOAs also introduced the Common Metrics Initiative (CMI) in response to the IOM recommendation to have a set of common measures to gather data on key research processes and describe the impact of the CTSA Program [2,15]. The CMI centers on the continuous improvement aspect of evaluation with the current focus addressing two major strategic goals of the CTSA Program:

1. Clinical and translational science research workforce development; and

2. Excellence in clinical and translational science research [19].

CMI is briefly described in Fig. 2.

Fig. 2. Background on CMI. This figure provides a brief overview of the CMI.

As a result, the FOAs have largely reframed evaluation as continuous improvement and participation in the CMI. Although evaluation has remained a significant section within the related workforce development proposals (TL1 and KL2 grants), the UL1 grant proposal currently requires minimal accounting of evaluation goals and methods beyond the CMI [15–18].

Cross-Hub Evaluation Collaborations

Throughout the reorganization of the CTSA Program and shifts in the priorities and level of support provided by NCATS, CTSA evaluators have worked across hubs and CTSA domains, particularly workforce development and community engagement, to move the field of CTSA evaluation forward. This has resulted in a body of evaluation-relevant publications that supports the work of evaluators and the CTSA Program overall (see Supplementary Material). Furthermore, when the Evaluation KFC was dissolved in 2014, several CTSA evaluators, with support from their local CTSA hub leadership, formed the Translational Research Evaluation Topical Interest Group (AEA TRE-TIG) as part of the American Evaluation Association and the Evaluation Special Interest Group in the Association for Clinical and Translational Science (ACTS) to share innovative work being conducted to assess impact in their hubs. These groups have an opportunity to meet, network, and share evaluation practices and findings during their annual conferences.

Most recently, with the implementation of the CMI, Tufts University, the coordinating center for the CMI at the time, formed a national CTSA Evaluators Group as part of its coordinated effort to utilize the expertise of evaluators, train hubs in the Results-Based Accountability framework, operationalize guidelines for the initial set of common metrics, and initiate collaborative learning calls [20, 21]. These collaborative calls have evolved over time to serve as a forum to discuss evaluation-related topics not limited to the CMI and are now supported by the University of Rochester’s Center for Leading Innovation and Collaboration (CLIC) in its capacity as the Coordinating Center for the CTSA Program. Participation in the CTSA Evaluators Group from all CTSA hubs is encouraged and supported by CTSA hub leadership. In the past 2 years, the CTSA Program Evaluators Group has formed multiple working and research groups, such as the CTSA Evaluation Guidelines Working Group, which came together to assess the progress made in CTSA evaluation. Products of the other workgroups include consortium-wide bibliometrics analyses, case studies of successful translation, and an environmental scan of evaluation programs.

Evaluation Within CTSA Hubs

Building on the surveys described in ref. [22], in 2018 a workgroup established under the CTSA Program Evaluators Group administered a survey to 64 CTSA hub evaluation leaders to learn more about how CTSA hubs perceive and engage in both local and national evaluations [22]. Sixty-one CTSA hubs (95%) responded to the survey.

As illustrated here, even with the changing priorities, goals, and support for evaluation, CTSA hub evaluators have continued to uphold the value of evaluation. Their work illustrates that, as an integral part of any program, evaluation can support ongoing management and continuous learning by conceptualizing the program design, clarifying the purpose to users, shedding light on intended outcomes, and serving as an incentive or catalyst for positive change and improved outcomes. However, consistent with the recent legislation passed by Congress to reform federal program evaluation policies (Footnote 1), it is evident from the FOAs that evaluation needs to be strengthened at the national level to meet the recommendations of the IOM report and the 2013 Guidelines paper (Fig. 3).

Fig. 3. Recommendations for evaluation from 2013. For reference, this figure highlights key recommendations related to CTSA evaluation made in the IOM report and the 2013 Guidelines.

Opportunities to Further Strengthen CTSA Evaluation

CTSA evaluators have been sharing ideas for strengthening CTSA evaluation over the years through the various cross-hub evaluation collaboration opportunities, meetings during AEA and ACTS conferences, and ad hoc conversations. To formally capture this feedback, the 2018 survey asked evaluators the following: If you were given the opportunity to meet face-to-face with the NCATS leadership group and candidly discuss your observations and experiences with CTSA evaluation, what would you advise them to consider doing to strengthen CTSA evaluation so that it appropriately informs research policy and justifies resource allocations with Congress and the public?

The authors of this paper conducted a content analysis on responses provided to this question. Initially, the responses were reviewed individually to develop a set of themes and messages that emerged. The group then reviewed the themes and messages together and identified four consistent themes. For validity, these four themes were summarized and shared with the CTSA Evaluation Guidelines Workgroup members. Based on this analysis, the following four strong opportunities to further strengthen CTSA evaluation emerged.

Continue to Build the Collaborative Evaluation Infrastructure at Local and National Levels

Despite inconsistent guidance from NCATS, CTSA evaluators have engaged in cross-hub collaborations to continue to move local and national evaluation of the CTSA Program forward. Only recently has NCATS started to increase its support for CTSA evaluation, as discussed earlier. However, it is still up to CTSA hub leadership to design their local evaluation structure and support. This limits the resources and capacity of CTSA evaluators to work on local and national evaluation initiatives.

NCATS has an opportunity to develop and communicate a strategic evaluation plan for the CTSA Program. Such a plan would give CTSA hubs a structured way to substantially address how evaluation will be used to promote continuous improvement, assess impact across programs at the hub level, and identify ways evaluators will be utilized to support national initiatives. To support implementation and alignment of this plan, future FOAs could return evaluation to a separate but cross-cutting component that speaks to the importance of evaluation at both local and national levels. Furthermore, NCATS could invite evaluators to participate in discussions focused on strategic planning of new or existing national initiatives. Evaluators can serve as team players who bring strong strategic planning and facilitation skills, as well as systems-level insight on successes and barriers from their engagement across programs and the consortium. In addition, NCATS could support dedicated face-to-face meetings for CTSA evaluators beyond the annual conferences, which only a few evaluators are able to attend. These meetings should provide an opportunity to share findings from both local and national evaluation studies. This level of support would be invaluable for both NCATS and evaluators.

Make Better Use of Existing Data

Using the strategic and structured evaluation plan developed for the CTSA Program, NCATS should leverage existing data that are reported by all CTSA hubs to assess the progress and impact of the CTSA Program. While this formative assessment will not replace the need for comprehensive evaluation of the CTSA Program, it can be used to better understand what is working (or not) and to identify gaps and opportunities. One feasible example is to utilize the annual progress reports submitted by all CTSA hubs. Even though the submission dates vary by funding cycle, in their annual progress reports CTSA hubs provide data to NCATS on the utility and reach of core services, proxy return-on-investment measures, products yielded, and the academic productivity of TL1 trainees and KL2 scholars. Therefore, these progress reports can serve, if structured appropriately, as an important, relevant, and accessible data source to speak to the effectiveness, impact, and value of the CTSA Program. Consequently, over the years and in the recent survey, evaluators have recommended that these data be de-identified, summarized, and shared annually with hubs or be made available more broadly to stakeholders within the consortium. Access to these data would allow for further national-level or cross-site analyses, catalyzing evaluation studies that test promising and best practices, improve understanding of outcomes, and describe progress being made across the consortium, as well as more effective strategic management and program improvement.

NCATS has started working on this opportunity by launching an exploratory subcommittee, which includes a couple of evaluators, to consider the feasibility of using data from progress reports for national analysis. The subcommittee aims to develop a charge that can guide a more extensive committee of evaluators and administrators to go through the progress reports and recommend which data elements can be used as is or with slight modifications in reporting. These are the first steps toward making better use of the substantial investment of time and resources spent by hubs on annual reporting and toward repurposing existing data for evaluation and evidence-based decision-making. NCATS should continue to identify and support similar opportunities to leverage existing data, particularly those located within the NIH infrastructure, such as financial information for cost analysis, to conduct comprehensive evaluation studies that illustrate the value, merit, and impact of the CTSA Program.

Strengthen and Augment the Common Metrics Initiative

As noted above, significant progress has been made toward developing a set of common metrics, as recommended by both the IOM and the 2013 Guidelines. The substantial efforts that have gone into the development of the initial set of common metrics have led to the identification of several key process metrics for translational research, have integrated them into a comprehensive performance management framework, and have led to greater internal dialog about a more coherent commitment to evaluation in the CTSAs. However, it is unclear whether the currently implemented common metrics can be utilized to illustrate the impact of the CTSA Program as recommended by the IOM. Additionally, as suggested by CTSA evaluators in the 2018 survey, common metrics, while necessary, are not by themselves sufficient for high-quality program evaluation at a local or national level. Reflecting on the progress made so far and the uncertainty about their utility, the CMI leadership should revisit the common metrics to ensure that they align with the overall strategic plan and can indeed assess the impact of the CTSA Program. Engaging CTSA evaluators in this strategic alignment exercise would be important, as evaluators often carry the major burden of implementing the CMI at their hubs and have the expertise to design and assess impact.

Recently, CLIC has been engaging CTSA evaluators at various conferences to collect their feedback on design, implementation, and evaluation as it revisits the CMI. This has been a welcome engagement that will hopefully lead to the identification of meaningful program indicators and the use of various evaluation methods and sources of evaluative data, both quantitative and qualitative, to more thoroughly assess the impact of the CTSA Program.

Pursue Internal and External Opportunities to Evaluate the CTSA Program at the National Level

As noted above, NCATS has taken steps to implement the IOM’s recommendation to formalize evaluation processes. However, it has not yet acted on another recommendation of the IOM, namely that the CTSA Program as a whole be evaluated. In the 2013 Guidelines, evaluators recommended that the CTSA Program be proactive and strategic regarding the integration of evaluation at local and national levels. Comprehensive consortium-wide evidence is necessary to demonstrate that the innovations supported by the CTSA Program are moving research along the translational spectrum and are truly leading to improvements in human health.

The last external evaluation of the CTSA Program, completed by Westat, covered the period before the IOM report (2009–2012) [23]. A next-generation, comprehensive CTSA Program evaluation would be a valuable step in determining what progress the CTSA Program has made toward realizing its objectives. In the 2018 survey, evaluators noted that the CMI is an initial step in conducting internal evaluation of the CTSA Program, but it is insufficient for conducting the comprehensive evaluation studies needed to accurately determine the value, merit, quality, and significance of the CTSA Program. Evaluators suggested going beyond the CMI and undertaking a retrospective national evaluation of the program to address specific consortium-wide evaluation topics including, but not limited to, assessing the impact of the CTSA Program’s current focus on informatics, regulatory knowledge and support, community and collaboration, biostatistics, and network capacity on the translational research enterprise, and evaluating how career development and training opportunities provided through the KL2 and TL1 programs are enhancing scholars’ and trainees’ skills in the core translational research competencies and team science. These types of assessments could be conducted internally or externally. Findings from such evaluation studies will provide accountability and concrete outcomes of the now decade-long funding of the CTSAs to all stakeholders of the program, including the public, which ultimately funds the CTSA Program.

Conclusion

We applaud the CTSA evaluators for continuing to collaborate with each other even as the definition and role of evaluation have shifted at the national level. We also appreciate the increased recognition and support from NCATS for evaluation in recent years. However, much more is needed to strengthen CTSA evaluation to fully assess the value added by the CTSAs at both local and national levels. In light of the formal shifts and the strong collaborative culture among evaluators, we recognize the need for a comprehensive evaluation plan and policy that can be used as a guiding document for the entire consortium. As recommended in the 2013 Guidelines, having an evaluation entity within the NCATS structure to help guide and manage CTSA activities would be vital to developing and effectively implementing an evaluation plan and policy that directly reflects the mission and vision of the CTSA Program. CTSA evaluators look forward to working with NCATS in establishing and engaging this national entity to design and implement evaluations that are aligned with the overall mission and vision of the CTSA Program, while supporting individual hub priorities.

The opportunities discussed here represent some of the immediate steps that, from the perspective of CTSA evaluators, could be taken to move that process forward. There may be additional opportunities identified by other stakeholders that are not reflected here. We, therefore, put forth an open invitation to other key stakeholders, particularly NCATS and the community of CTSA PIs, to join us in the dialog needed to move CTSA evaluation to the next level.

Author ORCIDs

Tanha Patel, https://orcid.org/0000-0002-4432-1469

Acknowledgments

We want to thank and acknowledge all of the members of the CTSA Evaluation Guidelines Working Group, in addition to the authors: Emily Connors, Medical College of Wisconsin; Ashley Dunn, Stanford University; John Farrar, University of Pennsylvania; Deborah M. Fournier, PhD, Boston University; Keith Herzog, Northwestern University; Nicole Kaefer, University of Pittsburgh; Nikki Llewellyn, Emory University; Chelsea Proulx, University of Pittsburgh; Doris Rubio, University of Pittsburgh; L. Aubree Shay, UTHealth School of Public Health in San Antonio; and Boris Volkov, University of Minnesota for their support and dedication to review the progress made with CTSA evaluation. We would also like to specially thank co-chairs of the CTSA Survey Workgroup, Joe Hunt from Indiana University and Cathleen Kane from Weill Cornell Clinical Translational Science Center, for allowing us to include a set of questions in the survey, sharing the findings, and supporting this workgroup. Furthermore, we would like to thank Jenny Wagner, MPH, University of California, Davis for her help with the graphics and Melissa Sullivan, University of California, Davis for her help in hosting and coordinating with workgroup members. The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH.

Financial support

This project has been supported by Clinical and Translational Science Awards awarded to our institutions by the National Center for Advancing Translational Sciences. The grant numbers for the authors’ institutions are UL1TR001420 (TP), UL1TR001860 (JR), UL1TR002384 (WT), UL1TR002319 (JE), UL1TR002373 (LS), and UL1TR002489 (GD).

Disclosures

The authors have no conflicts of interest to disclose.

Supplementary material

To view supplementary material for this article, please visit https://doi.org/10.1017/cts.2019.387.