1. Introduction

Obtaining accurate numerical solutions to turbulent fluid flows remains a challenging task, and is subject to active research efforts in fluid dynamics (Argyropoulos & Markatos Reference Argyropoulos and Markatos2015) and adjacent fields including climate research (Aizinger et al. Reference Aizinger, Korn, Giorgetta and Reich2015) and the medical sciences (Bozzi et al. Reference Bozzi, Dominissini, Redaelli and Passoni2021). Direct numerical simulation (DNS), which attempts to fully resolve the vast scale of turbulent motion, is prohibitively expensive in many flow scenarios and is thus often adverted by using turbulence models. For instance, Reynolds-averaged Navier–Stokes (RANS) modelling has successfully been deployed to complex flow problems such as aircraft shape design and optimisation of turbo-machinery (Argyropoulos & Markatos Reference Argyropoulos and Markatos2015). However, the temporally averaged solutions from RANS simulations lack concrete information about instantaneous vortex movements in the flow. Thus, large eddy simulation (LES) constitutes another common choice for turbulence modelling, providing a time-sensitive perspective to the turbulent flows (Pope Reference Pope2004). The computational expense of LES is nevertheless still substantial, and their applicability remains restricted (Choi & Moin Reference Choi and Moin2012; Slotnick et al. Reference Slotnick, Khodadoust, Alonso, Darmofal, Gropp, Lurie and Mavriplis2014; Yang Reference Yang2015).

The persistent challenges of traditional approaches motivate the use of machine learning, in particular deep learning, for turbulence modelling (Duraisamy, Iaccarino & Xiao Reference Duraisamy, Iaccarino and Xiao2019). The reduced complexity of steady-state RANS made these set-ups a promising target for early efforts of machine learning-based turbulence. As a result, substantial progress has been made towards data-driven prediction of RANS flow fields, vastly outperforming pure numerical solvers in the process (Ling, Kurzawski & Templeton Reference Ling, Kurzawski and Templeton2016; Bhatnagar et al. Reference Bhatnagar, Afshar, Pan, Duraisamy and Kaushik2019; Thuerey et al. Reference Thuerey, Weißenow, Prantl and Hu2020).

Contrasting data-driven RANS modelling, further studies were motivated by the additional challenges of predicting transient turbulence. Some of these target performance gains over numerical models by moving the temporal advancement to a reduced-order embedding, where Koopman-based approaches have been an effective choice for constructing these latent spaces (Lusch, Kutz & Brunton Reference Lusch, Kutz and Brunton2018; Eivazi et al. Reference Eivazi, Guastoni, Schlatter, Azizpour and Vinuesa2021). In the domain of deep learning-based fluid mechanics, these studies are also among the first to explore the effects of recurrent application of neural networks on training. A related approach by Li et al. (Reference Li, Kovachki, Azizzadenesheli, Liu, Bhattacharya, Stuart and Anandkumar2020) moved the learned temporal integrator to Fourier space, with successful applications to a range of problems, including Navier–Stokes flow. An extensive comparison of different turbulence prediction architectures is provided by Stachenfeld et al. (Reference Stachenfeld, Fielding, Kochkov, Cranmer, Pfaff, Godwin, Cui, Ho, Battaglia and Sanchez-Gonzalez2021), and includes applications to multiple flow scenarios.

While turbulence prediction aims to remove the numerical solver at inference time, other concepts in machine learning turbulence try to integrate a learned model in the solver. In the following, we will refer to approaches characterised by this integration of neural networks into numerical solvers as hybrid methods. Some of these efforts target the data-driven development of LES models. An early work showcased the capability of neural networks to reproduce the turbulent viscosity coefficient (Sarghini, De Felice & Santini Reference Sarghini, De Felice and Santini2003). Furthermore, Maulik et al. (Reference Maulik, San, Rasheed and Vedula2019) proposed a supervised machine learning method to infer the subgrid scale (SGS) stress tensor from the flow field, and achieved promising results on the two-dimensional decaying turbulence test cases. Herein, the a priori evaluations served as a learning target and could be accurately reproduced, however, a posteriori evaluations were not always in direct agreement. Beck, Flad & Munz (Reference Beck, Flad and Munz2019) trained a data-driven closure model based on a convolutional neural network (CNN) and demonstrated good accuracy at predicting the closure on a three-dimensional homogeneous turbulence case, albeit stating that using their trained model in LES is not yet possible. Related prediction capabilities with trade-offs in terms of model stability of a similar supervised approach were reported by Cheng et al. (Reference Cheng, Giometto, Kauffmann, Lin, Cao, Zupnick, Li, Li, Abernathey and Gentine2019). Xie et al. (Reference Xie, Li, Ma and Wang2019) utilised a similar approach on compressible flows, later expanding their method to multi-scale filtering (Xie et al. Reference Xie, Wang, Li, Wan and Chen2020). Park & Choi (Reference Park and Choi2021) studied possible formulations for the input to the neural network and evaluated their results on a turbulent channel flow.

Beyond the supervised learning methods covered so far, Novati, de Laroussilhe & Koumoutsakos (Reference Novati, de Laroussilhe and Koumoutsakos2021) proposed a multi-agent reinforcement learning approach, where the LES viscosity coefficient was inferred by local agents distributed in the numerical domain. Their hybrid solver achieved good results when applied to a forward simulation. These previous studies on machine learning-based turbulence models lead to two fundamental observations. Firstly, sufficiently large networks parameterise a wide range of highly nonlinear functions. Their parameters, i.e. network weights, can be trained to identify and differentiate turbulent structures and draw modelling conclusions from these structures, which yields high accuracy towards a priori statistics. Secondly, the feedback from supervised training formulations cannot express the long-term effects of these modelling decisions, and thus cannot provide information about the temporal stability of a model. While reinforcement learning provides long temporal evolutions, its explorative nature makes this method computationally expensive. To exploit the benefits of data-driven training such as supervised models, and simultaneously provide training feedback over long time horizons, a deeper integration of neural network models in numerical solvers is necessary.

Further research achieved this deep integration by training networks through differentiable solvers and adjoint optimisation for partial differential equations. Such works initially focused on learning-based control tasks (de Avila Belbute-Peres et al. Reference de Avila Belbute-Peres, Smith, Allen, Tenenbaum and Kolter2018; Holl, Thuerey & Koltun Reference Holl, Thuerey and Koltun2020). By combining differentiable solvers with neural network models, optimisation gradients can propagate through solver steps and network evaluations (Thuerey et al. Reference Thuerey, Holl, Mueller, Schnell, Trost and Um2021). This allows for targeting of loss formulations that require a temporal evolution of the underlying partial differential equation. These techniques were shown to overcome the stability issues of supervised methods, and thus provided a basis for hybrid methods in unsteady simulations. By integrating CNNs into the numerical solver, Um et al. (Reference Um, Brand, Fei, Holl and Thuerey2020) found models to improve with increased time horizons seen during training, which resulted in a stable learned correction function that was capable of efficiently improving numerical solutions to various partial differential equations. Similarly, Kochkov et al. (Reference Kochkov, Smith, Alieva, Wang, Brenner and Hoyer2021) found differentiable solver architectures to be beneficial for training turbulence models. While this work estimates substantial performance gains over traditional techniques for first-order time integration schemes, we will evaluate a different solver that is second order in time, putting more emphasis on an evaluation with appropriate metrics from fluid mechanics.

In another related approach, Sirignano, MacArt & Freund (Reference Sirignano, MacArt and Freund2020) proposed a learned correction motivated by turbulence predictions in LES of isotropic turbulence, and later expanded on this by studying similar models in planar jets (MacArt, Sirignano & Freund Reference MacArt, Sirignano and Freund2021). Here, a posteriori statistics served as a training target, and the authors also compared the performance of models trained on temporally averaged and instantaneous data. However, the study did not investigate the temporal effects of hybrid solvers and their training methodologies in more detail.

In this paper, we seek to develop further understanding of turbulence modelling with hybrid approaches. In an effort to bridge the gap between the previously mentioned papers, we want to address a series of open questions. Firstly, no previous adjoint-based learning approach has been evaluated on a range of turbulent flow scenarios. While this has been done for other, purely predictive learning tasks (Li et al. Reference Li, Kovachki, Azizzadenesheli, Liu, Bhattacharya, Stuart and Anandkumar2020; Stachenfeld et al. Reference Stachenfeld, Fielding, Kochkov, Cranmer, Pfaff, Godwin, Cui, Ho, Battaglia and Sanchez-Gonzalez2021), we will demonstrate the applicability of adjoint-based training of hybrid methods in multiple different scenarios. Secondly, there is little information on the choice of loss functions for turbulence models in specific flow scenarios. Previous studies have focused on matching ground truth data. Their optimisation procedures did not emphasise specific fluid dynamical features that might be particularly important in the light of long-term model accuracy and stability. Thirdly, previous works on adjoint optimisation have not studied in detail how the number of unrolled steps seen during training affects the neural network models’ a posteriori behaviour. While previous work on flow prediction reported good results when using multiple prediction steps during training (Lusch et al. Reference Lusch, Kutz and Brunton2018; Eivazi et al. Reference Eivazi, Guastoni, Schlatter, Azizpour and Vinuesa2021), we want to explore how this approach behaves with learned turbulence models in hybrid solvers. In order to provide insights into these questions, we utilise a CNN to train a corrective forcing term through a differentiable solver, which allows an end-to-end training that is flexible towards the number of unrolled steps, loss formulations and training targets. We then show that the same network architecture can achieve good accuracy with respect to a posteriori metrics of three different flow scenarios. In our method, we relax the timestep requirements usually found in unsteady turbulence modelling, such as LES, by downscaling our simulations such that a constant Courant–Friedrichs–Lewy (CFL) ratio is maintained. By implication, a learned model is trained to (i) take the classical sub-grid-scale closure into account, (ii) approximate temporal effects and (iii) correct for discretisation errors. It is worth noting that a network trained for these three targets combines their treatment into one output, with the result that these treatments cannot be separated at a network-output level. Instead, our a posteriori evaluations show that neural network models can learn to account for all three of these elements.

The turbulence models are trained and evaluated on three different, two-dimensional flow cases: the isotropic decaying turbulence, a temporally developing mixing layer as well as the spatially developing mixing layer. We show that, in all cases, training a turbulence model through an increasing number of unrolled solver steps enhances the model accuracy and thus demonstrate the benefits of a differentiable solver. Unless stated otherwise, all of the evaluations in the coming sections were performed on out-of-sample data and show the improved generalising capabilities of models trained with the proposed unrollment strategy.

Our unrollment study extends to 60 simulation steps during training. The long solver unrollments involve recurrent network applications, which can lead to training instabilities caused by exploding and diminishing gradients. We introduce a custom gradient stopping technique that splits the gradient calculations into non-overlapping subranges, for which the gradients are evaluated individually. This techniques keeps the long-term information from all unrolled steps, but stops the propagation of gradients through a large number of steps and thus avoids the training instabilities.

Furthermore, our results indicate that accurate models with respect to a posteriori turbulence statistics are achieved without directly using them as training targets. Nonetheless, a newly designed loss formulation inspired by a posteriori evaluations and flow physics is shown to yield further improvements. Finally, we provide a performance analysis of our models that measures speed ups of up to ![]() $14$ with respect to comparably accurate solutions from traditional solvers.

$14$ with respect to comparably accurate solutions from traditional solvers.

The remainder of this paper is organised as follows. In § 2, we give an overview of our methodology and the solver–network interaction. A description and evaluation of experiments with the isotropic decaying turbulence case is found in § 3, which is followed by similar studies regarding the temporally developing mixing layer and the spatially developing mixing layer in §§ 4 and 5, respectively. Section 6 studies the effect our method of splitting back-propagated gradients into subranges. A comparison of computational costs at inference time can be found in § 7, while § 8 contains concluding thoughts.

2. Learning turbulence models

In this paper, we study neural networks for turbulence modelling in incompressible fluids. These flows are governed by the Navier–Stokes equations

\begin{equation} \left.\begin{array}{c@{}}

\dfrac{\partial \boldsymbol{u}}{\partial t} +

\boldsymbol{u}\boldsymbol{\cdot}\boldsymbol{\nabla}\boldsymbol{u}

={-}\boldsymbol{\nabla} p +

\dfrac{1}{{Re}}\nabla^{2}\boldsymbol{u} + \boldsymbol{f}

,\\ \boldsymbol{\nabla} \boldsymbol{\cdot} \boldsymbol{u} =

0, \end{array}\right\}

\end{equation}

\begin{equation} \left.\begin{array}{c@{}}

\dfrac{\partial \boldsymbol{u}}{\partial t} +

\boldsymbol{u}\boldsymbol{\cdot}\boldsymbol{\nabla}\boldsymbol{u}

={-}\boldsymbol{\nabla} p +

\dfrac{1}{{Re}}\nabla^{2}\boldsymbol{u} + \boldsymbol{f}

,\\ \boldsymbol{\nabla} \boldsymbol{\cdot} \boldsymbol{u} =

0, \end{array}\right\}

\end{equation}

where ![]() $\boldsymbol {u}= [u \ v]^\textrm {T}$,

$\boldsymbol {u}= [u \ v]^\textrm {T}$, ![]() $p$ and

$p$ and ![]() $Re$ are the velocity field, pressure field and Reynolds number respectively. The term

$Re$ are the velocity field, pressure field and Reynolds number respectively. The term ![]() $\boldsymbol {f} = [f_x \ f_y]^\textrm {T}$ represents an external force on the fluid. In the context of turbulent flows, an accurate solution to these equations entails either resolving and numerically simulating all turbulent scales, or modelling the turbulence physics through an approximative model.

$\boldsymbol {f} = [f_x \ f_y]^\textrm {T}$ represents an external force on the fluid. In the context of turbulent flows, an accurate solution to these equations entails either resolving and numerically simulating all turbulent scales, or modelling the turbulence physics through an approximative model.

Our aim is to develop a method that enhances fluid simulations by means of a machine learning model. In particular, we aim to improve the handling of fine temporal and spatial turbulence scales that are potentially under-resolved, such that the influence of these scales on the larger resolved motions needs to be modelled. The function that approximates these effects is solely based on low-resolution data and is herein parameterised by a CNN. The network is then trained to correct a low-resolution numerical solution during the simulation, such that the results coincide with a downsampled high-resolution dataset. Within this hybrid approach, the turbulence model directly interacts with the numerical solver at training and at inference time. To achieve this objective, we utilise differentiable solvers, i.e. solvers which provide derivatives with respect to their output state. Such solvers can be seen as part of the differentiable programming methodology in deep learning, which is equivalent to employing the adjoint method from classical optimisation (Giles et al. Reference Giles, Duta, Muller and Pierce2003) in the context of neural networks. The differentiability of the solver enables the propagation of optimisation gradients through multiple solver steps and neural network evaluations.

2.1. Differentiable PISO solver

Our differentiable solver is based on the semi-implicit pressure-implicit with splitting of operators (PISO) scheme introduced by Issa (Reference Issa1986), which has been used for a wide range of flow scenarios (Kim & Benson Reference Kim and Benson1992; Barton Reference Barton1998). Each second-order time integration step is split into an implicit predictor step solving the discretised momentum equation, followed by two corrector steps that ensure the incompressibility of the numerical solution to the velocity field. The Navier–Stokes equations are discretised using the finite-volume method, while all cell fluxes are computed to second-order accuracy.

The solver is implemented on the basis of TensorFlow (TF) (Abadi Reference Abadi2016), which facilitates parallel execution of linear algebra operations on the graphics processing unit (GPU), as well as the differentiability of said operations. Additional functions exceeding the scope of TF are written as custom operations and implemented using compute unified device architecture (CUDA) programming. This approach allows us to seamlessly integrate initially unsupported features such as sparse matrix operations in the TF graph. More details about the solver can be found in Appendix A, where the solver equations are listed in Appendix A.1, implementation details in Appendix A.2 and a verification is conducted in Appendix A.3. Figure 1 gives a brief overview of the solver procedure.

Figure 1. Solver procedure of the PISO scheme and its interaction with the convolutional neural network; data at time ![]() $t_n$ are taken from the DNS dataset and processed by the downsampling operation q that yields downsampled representations of the input fields, before entering the differentiable solver; the solver unrollment performs

$t_n$ are taken from the DNS dataset and processed by the downsampling operation q that yields downsampled representations of the input fields, before entering the differentiable solver; the solver unrollment performs ![]() $m$ steps, each of which is corrected by the CNN, and is equivalent to

$m$ steps, each of which is corrected by the CNN, and is equivalent to ![]() $\tau$ high-resolution steps; the optimisation loss takes all resulting (intermediate) timesteps.

$\tau$ high-resolution steps; the optimisation loss takes all resulting (intermediate) timesteps.

In the following, we will denote a PISO solver step ![]() $\mathcal {S}$ as

$\mathcal {S}$ as

where ![]() $\boldsymbol {u}_{n}$,

$\boldsymbol {u}_{n}$, ![]() $p_{n}$ and

$p_{n}$ and ![]() $\boldsymbol {f}_n$ represent discretised velocity, pressure and forcing fields at time

$\boldsymbol {f}_n$ represent discretised velocity, pressure and forcing fields at time ![]() $t_n$.

$t_n$.

2.2. Neural network architecture

Turbulence physics strongly depends on the local neighbourhood. Thus, the network has to infer the influence of unresolved scales for each discrete location based on the surrounding flow fields. This physical relation can be represented by discrete convolutions, where each output value is computed based solely on the surrounding computational cells as well as a convolutional weighting kernel. This formulation introduces a restricted receptive field for the convolution and ensures the local dependence of its output (Luo et al. Reference Luo, Li, Urtasun and Zemel2016). Chaining multiple of these operations results in a deep CNN, which has been successfully used in many applications ranging from computer vision and image recognition (Albawi, Mohammed & Al-Zawi Reference Albawi, Mohammed and Al-Zawi2017) to fluid mechanics and turbulence research (Beck et al. Reference Beck, Flad and Munz2019; Lapeyre et al. Reference Lapeyre, Misdariis, Cazard, Veynante and Poinsot2019; Guastoni et al. Reference Guastoni, Güemes, Ianiro, Discetti, Schlatter, Azizpour and Vinuesa2021).

We use a fully convolutional network with 7 convolutional layers and leaky rectified linear unit (ReLU) activations, containing ![]() $\sim 82\times 10^{3}$ trainable parameters. As illustrated in figure 1, our CNN takes the discretised velocity and pressure gradient fields as input. This formulation contains full information of the field variable states, and enables the modelling of both temporal and spatial effects of turbulence, as well as correction of numerical inaccuracies. However, any principles of the modelled physics, such as Galilean invariance in the case of SGS closure, must be learnt by the network itself. The choice of network inputs is by no means trivial, but shall not be further studied in this paper. Refer to Choi & Moin (Reference Choi and Moin2012); Xie et al. (Reference Xie, Li, Ma and Wang2019, Reference Xie, Wang, Li, Wan and Chen2020) and MacArt et al. (Reference MacArt, Sirignano and Freund2021) for in-depth analyses. The output of our networks is conditioned on its weights

$\sim 82\times 10^{3}$ trainable parameters. As illustrated in figure 1, our CNN takes the discretised velocity and pressure gradient fields as input. This formulation contains full information of the field variable states, and enables the modelling of both temporal and spatial effects of turbulence, as well as correction of numerical inaccuracies. However, any principles of the modelled physics, such as Galilean invariance in the case of SGS closure, must be learnt by the network itself. The choice of network inputs is by no means trivial, but shall not be further studied in this paper. Refer to Choi & Moin (Reference Choi and Moin2012); Xie et al. (Reference Xie, Li, Ma and Wang2019, Reference Xie, Wang, Li, Wan and Chen2020) and MacArt et al. (Reference MacArt, Sirignano and Freund2021) for in-depth analyses. The output of our networks is conditioned on its weights ![]() $\theta$, and can be interpreted as a corrective force

$\theta$, and can be interpreted as a corrective force ![]() $\boldsymbol {f}_{{CNN}}(\tilde {\boldsymbol {u}}_{n}, \boldsymbol {\nabla } \tilde {p}_{n}|\theta ):\mathbb {R}^{\tilde N_x\times \tilde N_y \times 4}\xrightarrow {}\mathbb {R}^{\tilde N_x \times \tilde N_y\times 2}$ to the under-resolved simulation of the Navier–Stokes equations (2.1) with domain size

$\boldsymbol {f}_{{CNN}}(\tilde {\boldsymbol {u}}_{n}, \boldsymbol {\nabla } \tilde {p}_{n}|\theta ):\mathbb {R}^{\tilde N_x\times \tilde N_y \times 4}\xrightarrow {}\mathbb {R}^{\tilde N_x \times \tilde N_y\times 2}$ to the under-resolved simulation of the Navier–Stokes equations (2.1) with domain size ![]() $\tilde N_x\times \tilde N_y$. This force directly enters the computational chain at PISO's implicit predictor step. As a consequence, the continuity equation is still satisfied at the end of a solver step, even if the simulation is manipulated by the network forcing. For a detailed description of the network structure, including CNN kernel sizes, initialisations and padding, refer to Appendix B.

$\tilde N_x\times \tilde N_y$. This force directly enters the computational chain at PISO's implicit predictor step. As a consequence, the continuity equation is still satisfied at the end of a solver step, even if the simulation is manipulated by the network forcing. For a detailed description of the network structure, including CNN kernel sizes, initialisations and padding, refer to Appendix B.

2.3. Unrolling timesteps for training

Our method combines the numerical solver introduced in § 2.1 with the modelling capabilities of CNNs as outlined in § 2.2. As also illustrated in figure 1, the resulting data-driven training algorithm works based on a dataset ![]() $(\boldsymbol {u}(t_n), p(t_n))$ consisting of high-resolution (

$(\boldsymbol {u}(t_n), p(t_n))$ consisting of high-resolution (![]() $N_x\times N_y$) velocity fields

$N_x\times N_y$) velocity fields ![]() $\boldsymbol {u}(t_n)\in \mathbb {R}^{N_x\times N_y \times 2}$ and corresponding pressure fields

$\boldsymbol {u}(t_n)\in \mathbb {R}^{N_x\times N_y \times 2}$ and corresponding pressure fields ![]() $p(t_n)\in \mathbb {R}^{N_x\times N_y}$ for the discrete time

$p(t_n)\in \mathbb {R}^{N_x\times N_y}$ for the discrete time ![]() $t_n$. In order to use these DNS data for training under-resolved simulations on different grid resolutions, we define a downsampling procedure

$t_n$. In order to use these DNS data for training under-resolved simulations on different grid resolutions, we define a downsampling procedure ![]() $q(\boldsymbol {u}, p):\mathbb {R}^{ N_x\times N_y \times 3}\xrightarrow {}\mathbb {R}^{\tilde N_x \times \tilde N_y\times 3}$ , that takes samples from the dataset and outputs the data

$q(\boldsymbol {u}, p):\mathbb {R}^{ N_x\times N_y \times 3}\xrightarrow {}\mathbb {R}^{\tilde N_x \times \tilde N_y\times 3}$ , that takes samples from the dataset and outputs the data ![]() $(\tilde {\boldsymbol {u}}_n,\tilde {p}_n)$ at a lower target resolution (

$(\tilde {\boldsymbol {u}}_n,\tilde {p}_n)$ at a lower target resolution (![]() $\tilde N_x\times \tilde N_y$) via bilinear interpolation. This interpolation provides a simple method of acquiring data at the shifted cell locations of different discretisations. It can be seen as a repeated linear interpolation to take care of two spatial dimensions. The resampling of DNS data is used to generate input and target frames of an optimisation step. For the sake of simplicity, we will denote a downsampled member of the dataset consisting of velocities and pressure as

$\tilde N_x\times \tilde N_y$) via bilinear interpolation. This interpolation provides a simple method of acquiring data at the shifted cell locations of different discretisations. It can be seen as a repeated linear interpolation to take care of two spatial dimensions. The resampling of DNS data is used to generate input and target frames of an optimisation step. For the sake of simplicity, we will denote a downsampled member of the dataset consisting of velocities and pressure as ![]() $\tilde {q}_n=q(\boldsymbol {u}(t_n), p(t_n))$. Similarly, we will write

$\tilde {q}_n=q(\boldsymbol {u}(t_n), p(t_n))$. Similarly, we will write ![]() $\tilde {\boldsymbol {f}}_n= \boldsymbol {f}_{CNN}(\tilde {\boldsymbol {u}}_{n},\boldsymbol {\nabla } \tilde {p}_{n}|\theta )$. Note that the network operates solely on low-resolution data and introduces a corrective forcing to the low-resolution simulation, with the goal of reproducing the behaviour of a DNS. We formulate the training objective as

$\tilde {\boldsymbol {f}}_n= \boldsymbol {f}_{CNN}(\tilde {\boldsymbol {u}}_{n},\boldsymbol {\nabla } \tilde {p}_{n}|\theta )$. Note that the network operates solely on low-resolution data and introduces a corrective forcing to the low-resolution simulation, with the goal of reproducing the behaviour of a DNS. We formulate the training objective as

for a loss function ![]() $\mathcal {L}$ that satisfies

$\mathcal {L}$ that satisfies ![]() $\mathcal {L}(x,y)\xrightarrow {}0$ for

$\mathcal {L}(x,y)\xrightarrow {}0$ for ![]() $x\approx y$. By this formulation, the network takes a downsampled DNS snapshot and should output a forcing which makes the flow fields after a low-resolution solver step closely resemble the next downsampled frame. The temporal increment

$x\approx y$. By this formulation, the network takes a downsampled DNS snapshot and should output a forcing which makes the flow fields after a low-resolution solver step closely resemble the next downsampled frame. The temporal increment ![]() $\tau$ between these subsequent frames is set to match the timesteps in the low-resolution solver

$\tau$ between these subsequent frames is set to match the timesteps in the low-resolution solver ![]() $\mathcal {S}$, which in turn are tuned to maintain Courant numbers identical to the DNS.

$\mathcal {S}$, which in turn are tuned to maintain Courant numbers identical to the DNS.

Um et al. (Reference Um, Brand, Fei, Holl and Thuerey2020) showed that similar training tasks benefit from unrolling multiple temporal integration steps in the optimisation loop. The optimisation can then account for longer-term effects of the network output on the temporal evolution of the solution, increasing accuracy and stability in the process. We utilise the same technique and find it to be critical for the long-term stability of turbulence models. Our notation from equations (2.2) and (2.3) is extended to generalise the formulation towards multiple subsequent snapshots. When training a model through ![]() $m$ unrolled steps, the optimisation objective becomes

$m$ unrolled steps, the optimisation objective becomes

\begin{equation} \min_\theta\left(\sum_{s=0}^{m}\mathcal{L}(\tilde{q}_{n+s\tau} ,\mathcal{S}_\tau^{s}(\tilde{q}_n,\tilde{\boldsymbol{f}}_n))\right), \end{equation}

\begin{equation} \min_\theta\left(\sum_{s=0}^{m}\mathcal{L}(\tilde{q}_{n+s\tau} ,\mathcal{S}_\tau^{s}(\tilde{q}_n,\tilde{\boldsymbol{f}}_n))\right), \end{equation}

where ![]() $\mathcal {S}^{s}$ denotes the successive execution of

$\mathcal {S}^{s}$ denotes the successive execution of ![]() $s$ solver steps including network updates, starting with the initial fields

$s$ solver steps including network updates, starting with the initial fields ![]() $q_i$. By this formulation the optimisation works towards matching not only the final, but also all intermediate frames. Refer to Appendix A.1 for a detailed explanation of this approach, including equations for optimisation and loss differentiation.

$q_i$. By this formulation the optimisation works towards matching not only the final, but also all intermediate frames. Refer to Appendix A.1 for a detailed explanation of this approach, including equations for optimisation and loss differentiation.

2.4. Loss functions

As introduced in (2.3), the training of deep CNNs is an optimisation of its parameters. The loss function ![]() $\mathcal {L}$ serves as the optimisation objective and thus has to assess the quality of the network output. Since our approach targets the reproduction of DNS-like behaviour on a coarse gird, the chosen loss function should consequently aim to minimise the distance between the state of a modelled coarse simulation and the DNS. In this context, a natural choice is the

$\mathcal {L}$ serves as the optimisation objective and thus has to assess the quality of the network output. Since our approach targets the reproduction of DNS-like behaviour on a coarse gird, the chosen loss function should consequently aim to minimise the distance between the state of a modelled coarse simulation and the DNS. In this context, a natural choice is the ![]() $\mathcal {L}_2$ loss on the

$\mathcal {L}_2$ loss on the ![]() $s$th unrolled solver step

$s$th unrolled solver step

since this formulation drives the optimisation towards resembling a desired outcome. Therefore, the ![]() $\mathcal {L}_2$ loss trains the network to directly reproduce the downsampled high-resolution fields, and the perfect reproduction from an ideal model gives

$\mathcal {L}_2$ loss trains the network to directly reproduce the downsampled high-resolution fields, and the perfect reproduction from an ideal model gives ![]() $\mathcal {L}_2=0$. Since the differentiable solver allows us to unroll multiple simulation frames, we apply this loss formulation across a medium-term time horizon and thus also optimise towards multi-step effects. By repeatedly taking frames from a large DNS dataset in a stochastic sampling process, a range of downsampled instances are fed to the training procedure. While the DNS dataset captures all turbulence statistics, they are typically lost in an individual training iteration. This is due to the fact that training mini-batches do not generally include sufficient samples to represent converged statistics, and no specific method is used to satisfy this criterion. This means that data in one training iteration solely carry instantaneous information. Only the repeated stochastic sampling from the dataset lets the network recover awareness of the underlying turbulence statistics. The repeated matching of instantaneous DNS behaviour thus encodes the turbulence statistics in the training procedure. While the

$\mathcal {L}_2=0$. Since the differentiable solver allows us to unroll multiple simulation frames, we apply this loss formulation across a medium-term time horizon and thus also optimise towards multi-step effects. By repeatedly taking frames from a large DNS dataset in a stochastic sampling process, a range of downsampled instances are fed to the training procedure. While the DNS dataset captures all turbulence statistics, they are typically lost in an individual training iteration. This is due to the fact that training mini-batches do not generally include sufficient samples to represent converged statistics, and no specific method is used to satisfy this criterion. This means that data in one training iteration solely carry instantaneous information. Only the repeated stochastic sampling from the dataset lets the network recover awareness of the underlying turbulence statistics. The repeated matching of instantaneous DNS behaviour thus encodes the turbulence statistics in the training procedure. While the ![]() $\mathcal {L}_2$ loss described in (2.5) has its global minimum when the DNS behaviour is perfectly reproduced, in practice, we find that it can neglect the time evolution of certain fine scale, low amplitude features of the solutions. This property of the

$\mathcal {L}_2$ loss described in (2.5) has its global minimum when the DNS behaviour is perfectly reproduced, in practice, we find that it can neglect the time evolution of certain fine scale, low amplitude features of the solutions. This property of the ![]() $\mathcal {L}_2$ loss is not unique to turbulence modelling and has previously been observed in machine learning in other scientific fields such as computer vision (Yu et al. Reference Yu, Fernando, Hartley and Porikli2018). To alleviate these shortcomings, we include additional loss formulations, which alter the loss landscape to avoid these local minima.

$\mathcal {L}_2$ loss is not unique to turbulence modelling and has previously been observed in machine learning in other scientific fields such as computer vision (Yu et al. Reference Yu, Fernando, Hartley and Porikli2018). To alleviate these shortcomings, we include additional loss formulations, which alter the loss landscape to avoid these local minima.

We define a spectral energy loss ![]() $\mathcal {L}_{E}$, designed to improve the accuracy of the learned model on fine spatial scales. It is formulated as the log-spectral distance of the spectral kinetic energies at the

$\mathcal {L}_{E}$, designed to improve the accuracy of the learned model on fine spatial scales. It is formulated as the log-spectral distance of the spectral kinetic energies at the ![]() $s$th step

$s$th step

\begin{equation} \mathcal{L}_E = \sqrt{\int_k \log\left(\frac{\tilde{E}_{s}(k)}{E_{s \tau}^{q}(k)}\right)^{2} \, {{\rm d}}k}, \end{equation}

\begin{equation} \mathcal{L}_E = \sqrt{\int_k \log\left(\frac{\tilde{E}_{s}(k)}{E_{s \tau}^{q}(k)}\right)^{2} \, {{\rm d}}k}, \end{equation}

where ![]() $\tilde {E}_s(k)$ denotes the spectral kinetic energy of the low-resolution velocity field at wavenumber

$\tilde {E}_s(k)$ denotes the spectral kinetic energy of the low-resolution velocity field at wavenumber ![]() $k$, and

$k$, and ![]() $E^{q}_{s\tau }$ represents the same quantity for the downsampled DNS data. In practice, this loss formulation seeks to equalise the kinetic energy in the velocity field for each discrete wavenumber. The

$E^{q}_{s\tau }$ represents the same quantity for the downsampled DNS data. In practice, this loss formulation seeks to equalise the kinetic energy in the velocity field for each discrete wavenumber. The ![]() $\log$ rescaling of the two respective spectra regularises the relative influence of different spatial scales. This energy loss elevates the relative importance of fine scale features.

$\log$ rescaling of the two respective spectra regularises the relative influence of different spatial scales. This energy loss elevates the relative importance of fine scale features.

Our final aim is to train a model that can be applied to a standalone forward simulation. The result of a neural network-modelled low-resolution simulation step should therefore transfer all essential turbulence information, such that the same model can once again be applied in the subsequent step. The premises of modelling the unresolved behaviour are found in the conservation equation for the implicitly filtered low-resolution kinetic energy in tensor notation

\begin{equation} \frac{\partial \widetilde{E_f}}{\partial t} + \tilde{u}_i \frac{\partial \widetilde{E_f}}{\partial x_i}+\frac{\partial}{\partial x_j} \tilde{u}_i \left[ \delta_{ij}\tilde{p}+ \tau_{ij}^{r} - \frac{2}{{Re}}\tilde{{\mathsf{S}}}_{ij} \right] ={-}\epsilon_f-\mathcal{P}^{r}, \end{equation}

\begin{equation} \frac{\partial \widetilde{E_f}}{\partial t} + \tilde{u}_i \frac{\partial \widetilde{E_f}}{\partial x_i}+\frac{\partial}{\partial x_j} \tilde{u}_i \left[ \delta_{ij}\tilde{p}+ \tau_{ij}^{r} - \frac{2}{{Re}}\tilde{{\mathsf{S}}}_{ij} \right] ={-}\epsilon_f-\mathcal{P}^{r}, \end{equation}

where ![]() $\tilde {E}_f$ denotes the kinetic energy of the filtered velocity field,

$\tilde {E}_f$ denotes the kinetic energy of the filtered velocity field, ![]() $\tau _{ij}^{r}$ represents the SGS stress tensor,

$\tau _{ij}^{r}$ represents the SGS stress tensor, ![]() ${\tilde {{\mathsf{S}}}}_{ij}= \frac {1}{2}({\partial \tilde {u}_i}/{\partial x_j} + {\partial \tilde {u}_j}/{\partial x_i})$ is the resolved rate of strain and

${\tilde {{\mathsf{S}}}}_{ij}= \frac {1}{2}({\partial \tilde {u}_i}/{\partial x_j} + {\partial \tilde {u}_j}/{\partial x_i})$ is the resolved rate of strain and ![]() $\epsilon _f$ and

$\epsilon _f$ and ![]() $\mathcal {P}^{r}$ are sink and source terms for the filtered kinetic energy. These terms are defined as

$\mathcal {P}^{r}$ are sink and source terms for the filtered kinetic energy. These terms are defined as ![]() $\epsilon _f = ({2}/{Re}){\tilde {{\mathsf{S}}}}_{ij}{\tilde {{\mathsf{S}}}}_{ij}$ and

$\epsilon _f = ({2}/{Re}){\tilde {{\mathsf{S}}}}_{ij}{\tilde {{\mathsf{S}}}}_{ij}$ and ![]() $\mathcal {P}^{r}=-\tau _{ij}^{r}{\tilde {{\mathsf{S}}}}_{ij}$. The viscous sink

$\mathcal {P}^{r}=-\tau _{ij}^{r}{\tilde {{\mathsf{S}}}}_{ij}$. The viscous sink ![]() $\epsilon _f$ represents the dissipation of kinetic energy due to molecular viscous stresses at grid-resolved scales. In hybrid methods, this viscous dissipation at grid level

$\epsilon _f$ represents the dissipation of kinetic energy due to molecular viscous stresses at grid-resolved scales. In hybrid methods, this viscous dissipation at grid level ![]() $\epsilon _f$ is fully captured by the numerical solver. On the contrary, the source term

$\epsilon _f$ is fully captured by the numerical solver. On the contrary, the source term ![]() $\mathcal {P}^{r}$ representing the energy transfer from resolved scales to residual motions cannot be computed, because the SGS stresses

$\mathcal {P}^{r}$ representing the energy transfer from resolved scales to residual motions cannot be computed, because the SGS stresses ![]() $\tau _{ij}^{r}$ are unknown. One part of the modelling objective is to estimate these unresolved stresses and the interaction of filtered and SGS motions. Since the energy transfer between these scales

$\tau _{ij}^{r}$ are unknown. One part of the modelling objective is to estimate these unresolved stresses and the interaction of filtered and SGS motions. Since the energy transfer between these scales ![]() $\mathcal {P}^{r}$ depends on the filtered rate of strain

$\mathcal {P}^{r}$ depends on the filtered rate of strain ![]() ${\tilde{{\mathsf{S}}}}_{ij}$, special emphasis is required to accurately reproduce the filtered rate of strain tensor. This motivates the following rate of strain loss at the

${\tilde{{\mathsf{S}}}}_{ij}$, special emphasis is required to accurately reproduce the filtered rate of strain tensor. This motivates the following rate of strain loss at the ![]() $s$th unrolled solver step

$s$th unrolled solver step

where ![]() ${{\mathsf{S}}}^{q}_{ij, s}$ denotes the rate of strain of the downsampled high-resolution velocity field. This loss term ensures that the output of a hybrid solver step carries the information necessary to infer an accurate forcing in the subsequent step.

${{\mathsf{S}}}^{q}_{ij, s}$ denotes the rate of strain of the downsampled high-resolution velocity field. This loss term ensures that the output of a hybrid solver step carries the information necessary to infer an accurate forcing in the subsequent step.

While our models primarily focus on influences of small-scale motions on the large-scale resolved quantities, we now draw attention to the largest scale, the mean flow. To account for the mean flow at training time, an additional loss contribution is constructed to match the multi-step statistics and written as

where ![]() $\langle \rangle _{s=0}^{m}$ denotes an averaging over the

$\langle \rangle _{s=0}^{m}$ denotes an averaging over the ![]() $m$ unrolled training steps with iterator

$m$ unrolled training steps with iterator ![]() $s$. This notation resembles Reynolds averaging, albeit being focused on the shorter time horizon unrolled during training. Matching the averaged quantities is essential to achieving long-term accuracy of the modelled simulations for statistically steady simulations, but lacks physical meaning in transient cases. Therefore, this loss contribution is solely applied to statistically steady simulations. In this case, the rolling average

$s$. This notation resembles Reynolds averaging, albeit being focused on the shorter time horizon unrolled during training. Matching the averaged quantities is essential to achieving long-term accuracy of the modelled simulations for statistically steady simulations, but lacks physical meaning in transient cases. Therefore, this loss contribution is solely applied to statistically steady simulations. In this case, the rolling average ![]() $\langle \rangle _{s=0}^{m}$ approaches the steady mean flow for increasing values of

$\langle \rangle _{s=0}^{m}$ approaches the steady mean flow for increasing values of ![]() $m$.

$m$.

Our combined turbulence loss formulation as used in the network optimisations additively combines the aforementioned terms as

where ![]() $\lambda$ denotes the respective loss factor. Their exact values are mentioned in the flow scenario specific chapters. Note that these loss terms, similar to the temporal unrolling, do not influence the architecture or computational performance of the trained neural network at inference time. They only exist at training time to guide the network to an improved state with respect to its trainable parameters. In the following main sections of this paper, we use three different turbulence scenarios to study effects of the number of unrolled steps and the whole turbulence loss

$\lambda$ denotes the respective loss factor. Their exact values are mentioned in the flow scenario specific chapters. Note that these loss terms, similar to the temporal unrolling, do not influence the architecture or computational performance of the trained neural network at inference time. They only exist at training time to guide the network to an improved state with respect to its trainable parameters. In the following main sections of this paper, we use three different turbulence scenarios to study effects of the number of unrolled steps and the whole turbulence loss ![]() $\mathcal {L}_{T}$. An ablation on the individual components of

$\mathcal {L}_{T}$. An ablation on the individual components of ![]() $\mathcal {L}_{T}$ is provided in Appendix F. We start with employing the training strategy on isotropic decaying turbulence.

$\mathcal {L}_{T}$ is provided in Appendix F. We start with employing the training strategy on isotropic decaying turbulence.

3. Two-dimensional isotropic decaying turbulence

Isotropic decaying turbulence in two dimensions provides an idealised flow scenario (Lilly Reference Lilly1971), and is frequently used for evaluating model performance (San Reference San2014; Maulik et al. Reference Maulik, San, Rasheed and Vedula2019; Kochkov et al. Reference Kochkov, Smith, Alieva, Wang, Brenner and Hoyer2021). It is characterised by a large number of vortices that merge at the large spatial scales whilst the small scales decay in intensity over time. We use this flow configuration to explore and evaluate the relevant parameters, most notably the number of unrolled simulation steps as well as the effects of loss formulations.

In order to generate training data, we ran a simulation on a square domain with periodic boundaries in both spatial directions. The initial velocity and pressure fields were generated using the initialisation procedure by San & Staples (Reference San and Staples2012). The Reynolds numbers are calculated as ![]() $Re = (\hat {e} \hat {l})/\nu$, with the kinetic energy

$Re = (\hat {e} \hat {l})/\nu$, with the kinetic energy ![]() $\hat {e}=(\langle u_iu_i\rangle )^{1/2}$ and the integral length scale

$\hat {e}=(\langle u_iu_i\rangle )^{1/2}$ and the integral length scale ![]() $\hat {l} = \hat {u}/\hat {\omega }$ and

$\hat {l} = \hat {u}/\hat {\omega }$ and ![]() $\hat {\omega }=(\langle \omega _i\omega _i\rangle )^{1/2}$. The Reynolds number of this initialisation was

$\hat {\omega }=(\langle \omega _i\omega _i\rangle )^{1/2}$. The Reynolds number of this initialisation was ![]() $Re=126$. The simulation was run for a duration of

$Re=126$. The simulation was run for a duration of ![]() $T=10^{4}\Delta t_{{DNS}}= 100\hat {t}$, where the integral time scale is calculated as

$T=10^{4}\Delta t_{{DNS}}= 100\hat {t}$, where the integral time scale is calculated as ![]() $\hat {t}=1/\hat {\omega }$ at the initial state. During the simulation, the backscatter effect transfers turbulence energy to the larger scales, which increases the Reynolds number (Kraichnan Reference Kraichnan1967; Chasnov Reference Chasnov1997). In our dataset, the final Reynolds number was

$\hat {t}=1/\hat {\omega }$ at the initial state. During the simulation, the backscatter effect transfers turbulence energy to the larger scales, which increases the Reynolds number (Kraichnan Reference Kraichnan1967; Chasnov Reference Chasnov1997). In our dataset, the final Reynolds number was ![]() $Re=296$. Note that despite this change in Reynolds number, the turbulence kinetic energy is still decreasing and the flow velocities will decay to zero.

$Re=296$. Note that despite this change in Reynolds number, the turbulence kinetic energy is still decreasing and the flow velocities will decay to zero.

Our aim is to estimate the effects of small-scale turbulent features on a coarser grid based on fully resolved simulation data. Consequently, the dataset should consist of a fully resolved DNS and satisfy the resolution requirements. In this case the square domain ![]() $(L_x,L_y)=(2{\rm \pi},2{\rm \pi} )$ was discretised by

$(L_x,L_y)=(2{\rm \pi},2{\rm \pi} )$ was discretised by ![]() $(N_x,N_y) = (1024,1024)$ grid cells and the simulation evolved with a timestep satisfying CFL

$(N_x,N_y) = (1024,1024)$ grid cells and the simulation evolved with a timestep satisfying CFL ![]() $=0.3$.

$=0.3$.

We trained a series of models on downsampled data, where spatial and temporal resolutions were decreased by a factor of ![]() $8$, resulting in an effective simulation size reduction of

$8$, resulting in an effective simulation size reduction of ![]() $8^{3}=512$. Our best performing model was trained through

$8^{3}=512$. Our best performing model was trained through ![]() $30$ unrolled simulation steps. This is equivalent to

$30$ unrolled simulation steps. This is equivalent to ![]() $1.96\hat t$ for the initial simulation state. Due to the decaying nature of this test case, the integral time scale increases over the course of the simulation, while the number of unrolled timesteps is kept constant. As a consequence, the longest unrollments of 30 steps cover a temporal horizon similar to the integral time scale. All our simulation set-ups will study unrollment horizons ranging up to the relevant integral time scale, and best results are achieved when the unrollment approaches the integral time scale. For training the present set-up, the loss factors from equation (2.10) were chosen as

$1.96\hat t$ for the initial simulation state. Due to the decaying nature of this test case, the integral time scale increases over the course of the simulation, while the number of unrolled timesteps is kept constant. As a consequence, the longest unrollments of 30 steps cover a temporal horizon similar to the integral time scale. All our simulation set-ups will study unrollment horizons ranging up to the relevant integral time scale, and best results are achieved when the unrollment approaches the integral time scale. For training the present set-up, the loss factors from equation (2.10) were chosen as ![]() $(\lambda _2 , \lambda _E, \lambda _{S}, \lambda _{MS})= (10, 5\times 10^{-2}, 1\times 10^{-5}, 0)$.

$(\lambda _2 , \lambda _E, \lambda _{S}, \lambda _{MS})= (10, 5\times 10^{-2}, 1\times 10^{-5}, 0)$.

To evaluate the influence of the choice of loss function and the number of unrolled steps, several alternative models were evaluated. Additionally, we trained a model with a traditional supervised approach. In this setting, the differentiable solver is not used, and the training is performed purely on the basis of the training dataset. In this case, the corrective forcing is added after a solver step is computed. The optimisation becomes

The equations for the supervised training approach are detailed in Appendix A.1. Furthermore, a LES with the standard Smagorinsky model was included in the comparison. A parameter study targeting the Smagorinsky coefficient revealed that a value of ![]() $C_s=0.008$ handles the physical behaviour of our set-up best. See Appendix D for details. An overview of all models and their parameters is given in table 1.

$C_s=0.008$ handles the physical behaviour of our set-up best. See Appendix D for details. An overview of all models and their parameters is given in table 1.

Table 1. Training details for models trained on the isotropic turbulence case, wtih NN representing various versions of our neural network models; mean squared error (MSE) evaluated at ![]() $t_1 = 64\Delta t\approx 5\hat {t}$ and

$t_1 = 64\Delta t\approx 5\hat {t}$ and ![]() $t_2 = 512\Delta t\approx 40\hat {t}$.

$t_2 = 512\Delta t\approx 40\hat {t}$.

After training, a forward simulation was run for comparison with a test dataset. For the test data, an entirely different, randomly generated initialisation was used, resulting in a velocity field different from the simulation used for training. The test simulations were advanced for ![]() $1000\Delta t = 80\hat t$.

$1000\Delta t = 80\hat t$.

Note that the temporal advancement of the forward simulations greatly surpasses the unrolled training horizon, which leads to instabilities with the supervised and 1-step model, and ultimately to the divergence of their simulations. Consequently, we conclude that more unrolled steps are critical for the applicability of the learned models and do not include the 1-step model in further evaluations. While an unrollment of multiple steps also improves the accuracy of supervised models, these models nevertheless fall short of their differentiable counterparts, as seen in a deeper study in Appendix E.

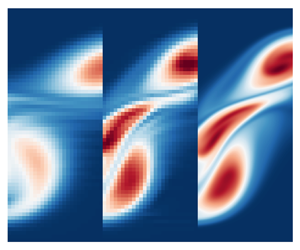

We provide visual comparisons of vorticity snapshots in figure 2, where our method's improvements become apparent. The network-modelled simulations produce a highly accurate evolution of vorticity centres, and comparable performance cannot be achieved without a model for the same resolution. We also investigate the resolved turbulence kinetic energy spectra in figure 3. Whilst the no-model simulation overshoots the DNS energy at its smallest resolved scales, the learned model simulations perform better and match the desired target spectrum. Figure 4 shows temporal evolutions of the domain-wide-resolved turbulence energy and the domain-wide-resolved turbulence dissipation rate. The turbulence energy is evaluated according to ![]() $E(t)= \int u_i'(t) u_i'(t) \, {\textrm {d}} \varOmega$, where

$E(t)= \int u_i'(t) u_i'(t) \, {\textrm {d}} \varOmega$, where ![]() $u_i'$ is the turbulent fluctuation. We calculate the turbulence dissipation as

$u_i'$ is the turbulent fluctuation. We calculate the turbulence dissipation as ![]() $\epsilon (t)=\int \langle \mu ({\partial u'_i\partial u_i'}/{\partial x_j \partial x_j}) \rangle \, {\textrm {d}} \varOmega$. Simulations with our CNN models strongly agree with the downsampled DNS.

$\epsilon (t)=\int \langle \mu ({\partial u'_i\partial u_i'}/{\partial x_j \partial x_j}) \rangle \, {\textrm {d}} \varOmega$. Simulations with our CNN models strongly agree with the downsampled DNS.

Figure 2. Vorticity visualisations of DNS, no-model, LES, and learned model simulations at ![]() $t = (350,700) \Delta t$ on the test dataset, zoomed-in version below.

$t = (350,700) \Delta t$ on the test dataset, zoomed-in version below.

Figure 3. Resolved turbulence kinetic energy spectra of the downsampled DNS, no-model, LES and learned model simulations; the learned 30-step model matches the energy distribution of downsampled DNS data; the vertical line represents the Nyquist wavenumber of the low-resolution grid.

Figure 4. Comparison of DNS, no-model, LES and learned model simulations with respect to resolved turbulence kinetic energy over time (a); and turbulence dissipation rate over time (b).

All remaining learned models stay in close proximity to the desired high-resolution evolutions, whereas the LES-modelled and no-model simulations significantly deviate from the target. Overall, the neural network models trained with more unrolled steps outperformed others, while the turbulence loss formulation ![]() $\mathcal {L}_{T}$ also had a positive effect.

$\mathcal {L}_{T}$ also had a positive effect.

In particular, the backscatter effect is crucial for simulations of decaying turbulence (Kraichnan Reference Kraichnan1967; Smith, Chasnov & Waleffe Reference Smith, Chasnov and Waleffe1996). The CNN adequately dampens the finest scales, as seen in the high wavenumber section of the energy spectrum (figure 3), it also successfully boosts larger scale motions. In contrast, the no-model simulation lacks dampening at the finest scales and cannot reproduce the backscatter effect on the larger ones. On the other hand, the dissipative nature of the Smagorinsky model used in the LES leads to under-sized spectral energies across all scales. Especially the spectral energies of no-model and LES around wavenumber ![]() $k=10$ show large deviations form the ground truth, while the CNN model accurately reproduces its behaviour. These large turbulent scales are the most relevant to the resolved turbulence energy and dissipation statistics, which is reflected in figure 4. Herein, the neural network models maintain the best approximations, and high numbers of unrolled steps show the best performance at long simulation horizons. The higher total energy of the neural network modelled simulations can be attributed to the work done by the network forcing, which is visualised together with the SGS stress tensor work from the LES simulation as well as its SGS energy in figure 5. This analysis reveals that the neural networks do more work on the system as the LES model does, which explains the higher and more accurate turbulence energy in figure 4 and the spectral energy behaviour at large scales in figure 3.

$k=10$ show large deviations form the ground truth, while the CNN model accurately reproduces its behaviour. These large turbulent scales are the most relevant to the resolved turbulence energy and dissipation statistics, which is reflected in figure 4. Herein, the neural network models maintain the best approximations, and high numbers of unrolled steps show the best performance at long simulation horizons. The higher total energy of the neural network modelled simulations can be attributed to the work done by the network forcing, which is visualised together with the SGS stress tensor work from the LES simulation as well as its SGS energy in figure 5. This analysis reveals that the neural networks do more work on the system as the LES model does, which explains the higher and more accurate turbulence energy in figure 4 and the spectral energy behaviour at large scales in figure 3.

Figure 5. NN-model work on the flow field, work by the LES model and the estimated SGS energies from LES.

4. Temporally developing planar mixing layers

Next, we apply our method to the simulation of two-dimensional planar mixing layers. Due to their relevance to applications such as chemical mixing and combustion, mixing layers have been the focus of theoretical and numerical studies in the fluid-mechanics community. These studies have brought forth a large set and good understanding of a posteriori evaluations, like the Reynolds-averaged turbulent statistics or the vorticity and momentum thickness. Herein, we use these evaluations to assess the accuracy of our learned models with respect to metrics that are not directly part of the learning targets.

Temporally evolving planar mixing layers are the simplest numerical representation of a process driven by the Kelvin–Helmholtz instability in the shear layer. They are sufficiently defined by the Reynolds number, domain sizes, boundary conditions and an initial condition. Aside from the shear layer represented by a ![]() $\tanh$-profile, the initial flow fields feature an oscillatory disturbance that triggers the instability, leading to the roll up of the shear layer. This has been investigated by theoretical studies involving linear stability analysis (Michalke Reference Michalke1964) and numerical simulation (Rogers & Moser Reference Rogers and Moser1994). Our set-up is based on the work by Michalke (Reference Michalke1964), who studied the stability of the shear layer and proposed initialisations that lead to shear layer roll up. As initial condition, we add randomised modes to the mean profile, resulting in the streamfunction

$\tanh$-profile, the initial flow fields feature an oscillatory disturbance that triggers the instability, leading to the roll up of the shear layer. This has been investigated by theoretical studies involving linear stability analysis (Michalke Reference Michalke1964) and numerical simulation (Rogers & Moser Reference Rogers and Moser1994). Our set-up is based on the work by Michalke (Reference Michalke1964), who studied the stability of the shear layer and proposed initialisations that lead to shear layer roll up. As initial condition, we add randomised modes to the mean profile, resulting in the streamfunction

where ![]() $a$ is the amplitude of the perturbation,

$a$ is the amplitude of the perturbation, ![]() $\alpha$ parameterises the decay of the perturbation in

$\alpha$ parameterises the decay of the perturbation in ![]() $y$-direction and

$y$-direction and ![]() $\omega _\varPsi$ represents the perturbation frequency. The initial flow field can then be calculated by

$\omega _\varPsi$ represents the perturbation frequency. The initial flow field can then be calculated by

At the initial state this results in a velocity step ![]() $\Delta U= {U_2}-{U_1}=1$ and a vorticity thickness of

$\Delta U= {U_2}-{U_1}=1$ and a vorticity thickness of ![]() $\delta _\omega ={\Delta U}/({\partial U }/{\partial y}|_{{max}})= 1$, where velocities marked as

$\delta _\omega ={\Delta U}/({\partial U }/{\partial y}|_{{max}})= 1$, where velocities marked as ![]() $U$ represent mean-stream quantities. Thus,

$U$ represent mean-stream quantities. Thus, ![]() $U_2$ and

$U_2$ and ![]() $U_1$ are the fast and slow mean velocities of the shear layer. The computational domain of size

$U_1$ are the fast and slow mean velocities of the shear layer. The computational domain of size ![]() $(L_x,L_y)=(40{\rm \pi},20{\rm \pi} )$ is discretised by

$(L_x,L_y)=(40{\rm \pi},20{\rm \pi} )$ is discretised by ![]() $(N_x,N_y)=(1024,512)$ grid cells for the high-resolution dataset generation. The streamwise boundaries are periodic, while the spanwise boundaries in the

$(N_x,N_y)=(1024,512)$ grid cells for the high-resolution dataset generation. The streamwise boundaries are periodic, while the spanwise boundaries in the ![]() $y$-direction are set to a free-slip boundary, where

$y$-direction are set to a free-slip boundary, where ![]() ${\partial u}/{\partial y} |_{\varOmega _y}=0$,

${\partial u}/{\partial y} |_{\varOmega _y}=0$, ![]() $v|_{\varOmega _y}=0$ and

$v|_{\varOmega _y}=0$ and ![]() $p|_{\varOmega _y}=0$. The Reynolds number based on the unperturbed mean profile and the vorticity thickness is calculated to be

$p|_{\varOmega _y}=0$. The Reynolds number based on the unperturbed mean profile and the vorticity thickness is calculated to be ![]() $Re={\Delta U \delta _\omega }/{\nu }=250$ for all randomised initialisations. The simulations are run for

$Re={\Delta U \delta _\omega }/{\nu }=250$ for all randomised initialisations. The simulations are run for ![]() $T=420=12\ 000 \Delta t_{DNS}$. Our dataset consists of three simulations based on different initialisations. Their perturbation details are found in table 2. Two of these simulations were used as training datasets, while all of our evaluation is performed on the remaining one as the extrapolation test dataset.

$T=420=12\ 000 \Delta t_{DNS}$. Our dataset consists of three simulations based on different initialisations. Their perturbation details are found in table 2. Two of these simulations were used as training datasets, while all of our evaluation is performed on the remaining one as the extrapolation test dataset.

Table 2. Perturbation details for initial conditions of temporal mixing layer training and test datasets.

Following the approach in § 3, the model training uses a ![]() $8\times$ downscaling in space and time. The loss composition was set to

$8\times$ downscaling in space and time. The loss composition was set to ![]() $(\lambda _2 , \ \lambda _E, \ \lambda _{S}, \ \lambda _{MS})= (100, \ 2, \ 5\times 10^{-2}, \ 0)$. We used the same CNN architecture as introduced earlier, although due to the difference in boundary conditions a different padding procedure was chosen (see Appendix B). To illustrate the impact of the turbulence loss

$(\lambda _2 , \ \lambda _E, \ \lambda _{S}, \ \lambda _{MS})= (100, \ 2, \ 5\times 10^{-2}, \ 0)$. We used the same CNN architecture as introduced earlier, although due to the difference in boundary conditions a different padding procedure was chosen (see Appendix B). To illustrate the impact of the turbulence loss ![]() $\mathcal {L}_{T}$ and an unrolling of

$\mathcal {L}_{T}$ and an unrolling of ![]() $60$ numerical steps, we compare with several variants with reduced loss formulations and fewer unrolling steps. The maximum number of

$60$ numerical steps, we compare with several variants with reduced loss formulations and fewer unrolling steps. The maximum number of ![]() $60$ unrolled steps corresponds to

$60$ unrolled steps corresponds to ![]() $16 t_{\delta _\theta }$ integral time scales computed on the momentum thickness as

$16 t_{\delta _\theta }$ integral time scales computed on the momentum thickness as ![]() $t_{\delta _\theta } = \delta _\theta /\Delta U$. With the shear layer growing, the momentum thickness increases

$t_{\delta _\theta } = \delta _\theta /\Delta U$. With the shear layer growing, the momentum thickness increases ![]() $7$-fold, which decreases the number of integral time scales to

$7$-fold, which decreases the number of integral time scales to ![]() $2$ for 60 steps of unrollment. Table 3 shows details of the model parameterisations. To avoid instabilities in the gradient calculation that could ultimately lead to unstable training, we split the back-propagation into subranges for the

$2$ for 60 steps of unrollment. Table 3 shows details of the model parameterisations. To avoid instabilities in the gradient calculation that could ultimately lead to unstable training, we split the back-propagation into subranges for the ![]() $60$-step model. This method stabilises an otherwise unstable training of the

$60$-step model. This method stabilises an otherwise unstable training of the ![]() $60$-step model, and a split into

$60$-step model, and a split into ![]() $30$-step long back-propagation subranges performs best. Such a model is added to the present evaluations as

$30$-step long back-propagation subranges performs best. Such a model is added to the present evaluations as ![]() $\textrm {NN}_{60,\mathcal {L}_{T}}$. Detailed results regarding the back-propagation subranges are discussed in § 6.

$\textrm {NN}_{60,\mathcal {L}_{T}}$. Detailed results regarding the back-propagation subranges are discussed in § 6.

Table 3. Model details for unrollment study; MSE with respect to DNS from test data at ![]() $t_e = 512\Delta t$.

$t_e = 512\Delta t$.

The trained models were compared with a downsampled DNS and a no-model simulation, all sharing the same starting frame from the test dataset. This test dataset changes the initial condition, where different perturbation frequencies and amplitudes result in a variation in vortex roll-up and vortex merging behaviour of the mixing layer. The resulting numerical solutions were compared at three different evolution times ![]() $t=[256 \ 640 \ 1024]\Delta t$. Figure 6 shows the vorticity heat map of the solutions. Qualitatively, the simulations corrected by the CNN exhibit close visual proximity to the DNS by boosting peaks in vorticity where applicable, and additionally achieve a dampening of spurious oscillations.

$t=[256 \ 640 \ 1024]\Delta t$. Figure 6 shows the vorticity heat map of the solutions. Qualitatively, the simulations corrected by the CNN exhibit close visual proximity to the DNS by boosting peaks in vorticity where applicable, and additionally achieve a dampening of spurious oscillations.

Figure 6. Vorticity visualisations of DNS, no-model and learned model simulations at ![]() $t=(256,640,1024)\Delta t$ on the test dataset.

$t=(256,640,1024)\Delta t$ on the test dataset.

These observations are matched by corresponding statistical evaluations. The statistics are obtained by averaging the simulation snapshots along their streamwise axis and the resulting turbulence fluctuations were processed for each evaluation time. Figure 7 shows that all ![]() $\mathcal {L}_{T}$-models closely approximate the DNS reference with respect to their distribution of resolved turbulence kinetic energy and Reynolds stresses along the cross-section, while the no-model simulation clearly deviates. Note that the mixing process causes a transfer of momentum from fast to slow moving sections through the effects of turbulent fluctuations. The shear layer growth is thus dominated by turbulent diffusion. Consequently, accurate estimates of the turbulent fluctuations are necessary for the correct evolution of the mixing layer. These fluctuations are most visible in the Reynolds stresses

$\mathcal {L}_{T}$-models closely approximate the DNS reference with respect to their distribution of resolved turbulence kinetic energy and Reynolds stresses along the cross-section, while the no-model simulation clearly deviates. Note that the mixing process causes a transfer of momentum from fast to slow moving sections through the effects of turbulent fluctuations. The shear layer growth is thus dominated by turbulent diffusion. Consequently, accurate estimates of the turbulent fluctuations are necessary for the correct evolution of the mixing layer. These fluctuations are most visible in the Reynolds stresses ![]() $u'v'$, and an accurate estimation is an indicator for well-modelled turbulent momentum diffusion. The evaluations also reveal that unrolling more timesteps during training gains additional performance improvements. These effects are most visible when comparing the 10-step and 60-step model in a long temporal evolution, as seen in the Reynolds stresses in figure 7. The evaluation of resolved turbulence kinetic energies shows that the models correct for the numerical dissipation of turbulent fluctuations, while, in contrast, there is an underestimation of kinetic energy in the no-model simulation. While longer unrollments generally yield better accuracy, it is also clear that

$u'v'$, and an accurate estimation is an indicator for well-modelled turbulent momentum diffusion. The evaluations also reveal that unrolling more timesteps during training gains additional performance improvements. These effects are most visible when comparing the 10-step and 60-step model in a long temporal evolution, as seen in the Reynolds stresses in figure 7. The evaluation of resolved turbulence kinetic energies shows that the models correct for the numerical dissipation of turbulent fluctuations, while, in contrast, there is an underestimation of kinetic energy in the no-model simulation. While longer unrollments generally yield better accuracy, it is also clear that ![]() $30$ steps come close to saturating the model performance in this particular flow scenario. With the integral time scales mentioned earlier, it becomes clear that 30 simulation steps capture one integral time scale of the final simulation phase, i.e. the phase of the decaying simulation that exhibits the longest time scales. One can conclude that an unrollment of one time scale is largely sufficient, and further improvements of unrolling 2 time scales with

$30$ steps come close to saturating the model performance in this particular flow scenario. With the integral time scales mentioned earlier, it becomes clear that 30 simulation steps capture one integral time scale of the final simulation phase, i.e. the phase of the decaying simulation that exhibits the longest time scales. One can conclude that an unrollment of one time scale is largely sufficient, and further improvements of unrolling 2 time scales with ![]() $60$ steps are only minor.

$60$ steps are only minor.

Figure 7. Comparison of DNS, no-model and learned model simulations with respect to resolved turbulence kinetic energy (a) and Reynolds stresses (b).

The resolved turbulence kinetic energy spectra are evaluated to assess the spatial scales at which the corrective models are most active. The spectral analysis at the centreline is visualised in figure 8, whilst the kinetic energy obtained from fluctuations across the cross-section with respect to streamwise averages is shown in figure 9. These plots allow two main observations: firstly, the deviation of kinetic energy mostly originates from medium-sized spatial scales, which are dissipated by the no-model simulation but are accurately reconstructed by the neural network trained with ![]() $\mathcal {L}_T$. This effect is connected to the dampening of vorticity peaks in the snapshots in figure 6. Secondly, the fine-scale spectral energy of the no-model simulation has an amplitude similar to the DNS over long temporal horizons (figure 9). This can be attributed to numerical oscillations rather than physical behaviour. These numerical oscillations, as also seen in the snapshots in figure 6, exist for the no-model simulation but are missing in the

$\mathcal {L}_T$. This effect is connected to the dampening of vorticity peaks in the snapshots in figure 6. Secondly, the fine-scale spectral energy of the no-model simulation has an amplitude similar to the DNS over long temporal horizons (figure 9). This can be attributed to numerical oscillations rather than physical behaviour. These numerical oscillations, as also seen in the snapshots in figure 6, exist for the no-model simulation but are missing in the ![]() $\mathcal {L}_T$-modelled simulations. Training a model without the additional loss terms in

$\mathcal {L}_T$-modelled simulations. Training a model without the additional loss terms in ![]() $\mathcal {L}_T$ from (2.10), i.e. only with the

$\mathcal {L}_T$ from (2.10), i.e. only with the ![]() $\mathcal {L}_2$ from (2.5), yields a model that is inaccurate and results in unphysical oscillations. It does not reproduce the vorticity centres, and is also unstable over long temporal horizons. Herein, non-physical oscillations are introduced, which also show up in the cross-sectional spectral energies and vorticity visualisations. We thus conclude that best performance can be achieved with a network trained with

$\mathcal {L}_2$ from (2.5), yields a model that is inaccurate and results in unphysical oscillations. It does not reproduce the vorticity centres, and is also unstable over long temporal horizons. Herein, non-physical oscillations are introduced, which also show up in the cross-sectional spectral energies and vorticity visualisations. We thus conclude that best performance can be achieved with a network trained with ![]() $\mathcal {L}_T$, which learns to dampen numerical oscillations and reproduces physical fluctuations across all spatial scales.

$\mathcal {L}_T$, which learns to dampen numerical oscillations and reproduces physical fluctuations across all spatial scales.

Figure 8. Centreline kinetic energy spectra for the downsampled DNS, no-model and learned model simulations.

Figure 9. Cross-sectional kinetic energy spectra of the downsampled DNS, no-model and learned model simulations.

It is worth noting that our method is capable of enhancing an under-resolved simulation across a wide range of turbulent motions. The vortex size in the validation simulation ranges from ![]() $7\delta _{\omega _0}$ at the starting frame to

$7\delta _{\omega _0}$ at the starting frame to ![]() $60\delta _{\omega _0}$ after evolving for

$60\delta _{\omega _0}$ after evolving for ![]() $1200 \Delta t$. This timespan encompasses two vortex merging events, both of which cannot be accurately reproduced with a no-model, or a

$1200 \Delta t$. This timespan encompasses two vortex merging events, both of which cannot be accurately reproduced with a no-model, or a ![]() $\mathcal {L}_2$-model simulation, but are captured by the

$\mathcal {L}_2$-model simulation, but are captured by the ![]() $\mathcal {L}_T$-trained network models. This is shown in the comparison of the momentum thicknesses over time in figure 10. The reproduction of turbulence statistics (figure 7) yields, in the long term, an accurate turbulent diffusion of momentum and mixing layer growth for the models trained with

$\mathcal {L}_T$-trained network models. This is shown in the comparison of the momentum thicknesses over time in figure 10. The reproduction of turbulence statistics (figure 7) yields, in the long term, an accurate turbulent diffusion of momentum and mixing layer growth for the models trained with ![]() $\mathcal {L}_T$. On the contrary, the

$\mathcal {L}_T$. On the contrary, the ![]() $\mathcal {L}_2$ model fails to reproduce the vortex cores and deviates with respect to the momentum thickness for long temporal horizons.

$\mathcal {L}_2$ model fails to reproduce the vortex cores and deviates with respect to the momentum thickness for long temporal horizons.

Figure 10. Momentum thickness of DNS, no-model and learned model simulations, evaluated based on the streamwise averages.

5. Spatially developing planar mixing layers

In contrast to the temporally developing mixing layers investigated in § 4, the spatially developing counterpart features a fixed view on a statistically steady flow field, which introduces a new set of challenges to the learning task. While the main difficulty in previous transient simulations was the modelling of an evolving range of turbulent scales, the statistically steady nature of the spatially developing mixing layer requires a reproduction of the turbulent statistics in its own statistically steady state. This in turn necessitates long-term accuracy and stability.

Spatially mixing layers develop from an instability in the shear layer. This instability is driven by a disturbance at the inlet, whose characteristics have great effect on the mixing layer growth (Ho & Huang Reference Ho and Huang1982). In a simulation environment, these disturbances are realised by a Dirichlet inlet boundary condition, where temporally varying perturbations are added to a steady mean flow profile. As proposed by Ko, Lucor & Sagaut (Reference Ko, Lucor and Sagaut2008), a suitable inlet condition including perturbations can be written as

\begin{equation} u_{{in}}(y,t) = 1 + \frac{\Delta U}{2}\tanh(2y) + \sum_{d=1}^{N_d}\epsilon_d(1-\tanh^{2}(y/2))\cos(K_d y)\sin(\varOmega_dt), \end{equation}

\begin{equation} u_{{in}}(y,t) = 1 + \frac{\Delta U}{2}\tanh(2y) + \sum_{d=1}^{N_d}\epsilon_d(1-\tanh^{2}(y/2))\cos(K_d y)\sin(\varOmega_dt), \end{equation}

where the number of perturbation modes ![]() $N_d=2$ holds for our simulations. Furthermore, we used inviscid wall conditions for the two