1. Introduction

Natural language generation (NLG) (Gatt and Krahmer Reference Gatt and Krahmer2018) is a subfield of natural language processing aiming to enable computers the ability to write correct, coherent, and appealing texts. NLG includes popular tasks like machine translation (Bahdanau, Cho, and Bengio Reference Bahdanau, Cho and Bengio2015), summarization (Rush, Chopra, and Weston Reference Rush, Chopra and Weston2015), and dialogue response generation (Yarats and Lewis Reference Yarats and Lewis2018).

An increasingly prominent task in NLG is text style transfer (TST). TST aims at transferring a sentence from one style to another without appreciably changing the content (Hu et al. Reference Hu, Lee, Aggarwal and Zhang2022; Jin et al. Reference Jin, Jin, Hu, Vechtomova and Mihalcea2022). TST encompasses several sub-tasks, including sentiment transfer, news rewriting, storytelling, text simplification, and writing assistants, among others. For example, author imitation (Xu et al. Reference Xu, Ritter, Dolan, Grishman and Cherry2012) is the task of paraphrasing a sentence to fit another author’s style. Automatic poetry generation (Ghazvininejad et al. Reference Ghazvininejad, Shi, Choi and Knight2016) applies style transfer to create poetry in different fashions.

TST inherits the challenges of NLG, namely the lack of parallel training corpora and reliable evaluation metrics (Reiter and Belz Reference Reiter and Belz2009; Gatt and Krahmer Reference Gatt and Krahmer2018). Since parallel examples from each domain style are usually unavailable, most style transfer works focus on the unsupervised configuration (Han, Wu, and Niu Reference Han, Wu and Niu2017; He et al. Reference He, Wang, Neubig and Berg-Kirkpatrick2020; Malmi, Severyn, and Rothe Reference Malmi, Severyn and Rothe2020a). Our work also follows the unsupervised style transfer setting, learning from non-parallel data only.

Most previous models that address the style transfer problem adopt the sequence-to-sequence encoder-decoder framework (Shen et al. Reference Shen, Lei, Barzilay and Jaakkola2017; Hu et al. Reference Hu, Yang, Liang, Salakhutdinov and Xing2017; Fu et al. Reference Fu, Tan, Peng, Zhao and Yan2018). The encoder aims at extracting a style-independent latent representation while the decoder generates the text conditioned on the disentangled latent representation plus a style attribute. This family of methods learns how to disentangle the content and style in the latent space. Disengaging the content from the style means that it is impossible to recover the style from the content. Nevertheless, Lample et al. (Reference Lample, Subramanian, Smith, Denoyer, Ranzato and Boureau2019) show that disentanglement is hard to do, difficult to judge the quality, and particularly unnecessary. Thus, following previous work (Lample et al. Reference Lample, Subramanian, Smith, Denoyer, Ranzato and Boureau2019; Dai et al. Reference Dai, Liang, Qiu and Huang2019), we make no assumptions about the disentangled latent representations of the input sentences.

We rely on transformer (Vaswani et al. Reference Vaswani, Shazeer, Parmar, Uszkoreit, Jones, Gomez, Kaiser and Polosukhin2017) as our base neural sequence-to-sequence (Seq2Seq) architecture. The transformer is a deep neural network that has succeeded in many NLP tasks, particularly when it allies with pretrained masked language models (MLM), such as BERT (Devlin et al. Reference Devlin, Chang, Lee and Toutanova2019). However, using MLM in text generation tasks is less prevalent. This is because models like BERT focus on encoding bidirectional representations through the masked language modeling task, while text generation better fits an auto-regressive decoding process.

We explore training the Seq2Seq model with two procedures. One approach trains it from scratch with the dataset itself, and the other pretrains the model using available paraphrased data before presenting it to the dataset examples. We show that pretraining a Seq2Seq model on a massive paraphrase data benefits TST tasks, mainly the ones that can be considered rewriting tasks. Furthermore, we investigate if we can leverage masked language models to benefit the style transfer task, even though their use is less widespread than auto-regressive models for language generation-based tasks. Notably, we want to investigate if TST with a masked language model can output texts with the desired style while still preserving the input text main topic or theme. The investigation considers the following research questions:

RQ1: Does extracting knowledge from a Masked Language Model improve the performance of Seq2Seq models in the style transfer task and consequently generate high-quality texts?

RQ2: What is the impact of pretraining the Seq2Seq model on paraphrase data to the style transfer task?

To answer the research questions, we build our model upon the neural architecture block proposed in Dai et al. (Reference Dai, Liang, Qiu and Huang2019). We leverage their transformer neural network and training strategies. Our adopted transformer network is similar to the original one (Vaswani et al. Reference Vaswani, Shazeer, Parmar, Uszkoreit, Jones, Gomez, Kaiser and Polosukhin2017), except for an additional style embedding inserted into the encoder as the first embedding component. Regarding the training techniques, we inherited the adversarial training and the back-translation techniques of Dai et al. (Reference Dai, Liang, Qiu and Huang2019). Nevertheless, we formulate the main cost function to extract knowledge from a MLM.

To show we can take advantage of an MLM to improve the performance of the style transfer task, we try to distill the knowledge of a pretrained MLM to leverage its learned bidirectional representations. We modify the training objective by transferring the knowledge it contains to the Seq2Seq model. We hypothesize that the predictive power of an MLM improves the performance of TST. As our model uses both Transformers and a MLM for training, we call it MATTES (MAsked Transformer for TExt Style transfer).Footnote a Furthermore, to evaluate if pretraining the Seq2Seq model benefits the TST task, we select a large-scale collection of diverse paraphrase data, namely, the PARABANK 2 dataset (Hu et al. Reference Hu, Singh, Holzenberger, Post and Van Durme2019). For comparison, we experimented training from scratch with the dataset examples and starting from the pretrained model.

For evaluating the proposed model and learning strategies, we select the author imitation (Xu et al. Reference Xu, Ritter, Dolan, Grishman and Cherry2012), formality transfer (Rao and Tetreault Reference Rao and Tetreault2018), and the polarity swap (Li et al. Reference Li, Jia, He and Liang2018) tasks, all in English, given the availability of benchmark datasets. The first task aims at paraphrasing a sentence in an author’s style. The second task consists of rewriting a formal sentence into its informal counterpart and vice-versa. The remaining task, also known as sentiment transfer, aims at reversing the sentiment of a text from positive to negative and vice-versa, preserving the overall theme. These tasks are usually gathered in the literature under the general style transfer label and addressed with the same methods. However, they have critical differences: author imitation and formality transfer imply rephrasing a sentence to match the desired target style without changing its meaning. On the other hand, polarity swap aims to change a text with positive polarity into a text with negative polarity (or vice-versa). In this case, while the general topic must be preserved, the meaning is not maintained (e.g., turning “I had a bad experience” into “I had a great experience”). In this sense, author imitation and formality transfer can be seen much more as rewriting than polarity swap. This way, it is possible to consider them similar to the broader objective of paraphrasing. In this manuscript, we also address these tasks similarly, but this is to investigate how their different nature affects the models, task modeling, and evaluation.

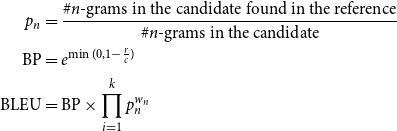

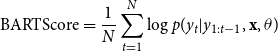

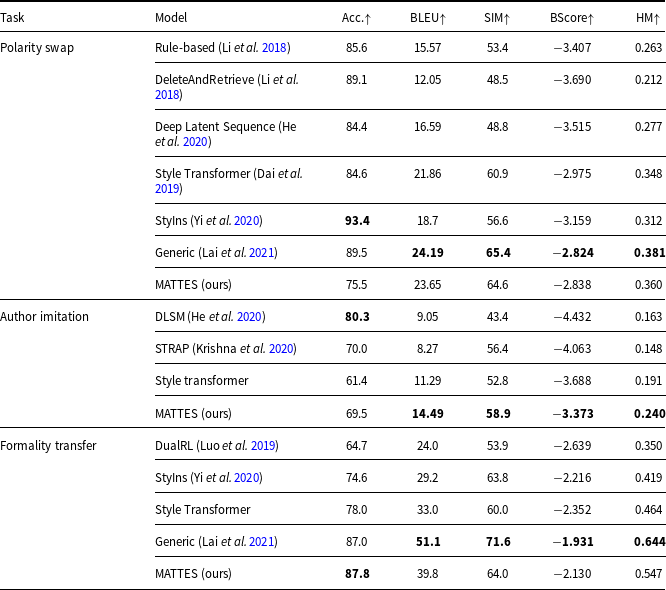

Regarding task evaluation, the literature commonly assesses the performance of TST on style strength and content preservation. To verify that the generated text agrees with the desired style, we train a classifier using texts of both styles, with style as the class, and measure its accuracy. To measure content preservation, the most important feature a style transfer model should possess, three metrics were used: BLEU (Papineni et al. Reference Papineni, Roukos, Ward and Zhu2002), semantic similarity (SIM) (Wieting et al. Reference Wieting, Berg-Kirkpatrick, Gimpel and Neubig2019), and BARTScore (Yuan, Neubig, and Liu Reference Yuan, Neubig and Liu2021). The results pointed out that using a pretrained Seq2Seq as starting point and using a pretrained MLM throughout the main training of the model improves the quality of the generated texts. We show that the former is extremely helpful for rewriting tasks while the latter is more task agnostic oriented but slightly impacts the performance.

The contributions of this paper are:

-

1. A novel unsupervised training method that distills knowledge from a pretrained MLM. To our knowledge, this is the first study that uses an MLM within the training objective on the style transfer task. We show that extracting the rich bidirectional representations of an MLM benefits the TST task.

-

2. An evaluation of two training strategies of the Seq2Seq model. We show that rewriting tasks, such as author imitation and formality transfer, benefit from a Seq2seq model pretrained with paraphrase data.

-

3. In the experiments with author imitation and formality transfer, we achieve state-of-the-art results when pretraining the Seq2Seq model with paraphrase data and using our distillation training technique.

The rest of the manuscript is organized as follows. Section 2 briefly describes basic concepts related to our proposal. Section 3 reviews related work to highlight how MATTES is placed within the literature on the theme. Next, we state the problem tackled here and present our approach in Section 4. Section 5 devises the experiments, and Section 6 concludes the manuscript and points out future directions.

2. Key concepts

This section reviews essential concepts that form the architectural backbone of our proposal. We describe the general paradigm widely used to handle NLP tasks that demand transforming a sequence of tokens into another sequence. Next, we review the Transformer architecture, which relies on the attention mechanism to enhance the sequence generation. Finally, we discuss the autoregressive and MLMs, as we rely on the latter to build MATTES.

2.1 Sequence-to-sequence models with the transformer architecture

Sequence-to-Sequence (Seq2Seq) models are frameworks based on deep learning to address tasks that require obtaining a sequence of values as output from a sequence of input values (Sutskever, Vinyals, and Le Reference Sutskever, Vinyals and Le2014). At a high level, a Seq2Seq model comprises two neural networks, one encoding the input and the other decoding the encoded representation to produce the output.

Early models included recurrent neural networks as the encoder and decoder components. In this case, the encoder’s role is to read the input sequence

![]() $X = < x_1, \ldots, x_n>$

, where

$X = < x_1, \ldots, x_n>$

, where

![]() $x_i$

is a token, and generate a fixed-dimension vector

$x_i$

is a token, and generate a fixed-dimension vector

![]() $C$

representing the context. Then, the decoder generates the output sequence

$C$

representing the context. Then, the decoder generates the output sequence

![]() $Y = < y_1, \ldots, y_m>$

, starting from the context vector

$Y = < y_1, \ldots, y_m>$

, starting from the context vector

![]() $C$

. However, compressing a variable-length sequence into a single context vector is challenging, especially when the input sequence is long. Thus, Seq2Seq models with vanilla recurrent networks fail to capture long textual dependencies due to the information bottleneck when relying on a single context vector.

$C$

. However, compressing a variable-length sequence into a single context vector is challenging, especially when the input sequence is long. Thus, Seq2Seq models with vanilla recurrent networks fail to capture long textual dependencies due to the information bottleneck when relying on a single context vector.

The attention mechanism emerged to address the information bottleneck problem of seq2seq recurrent neural networks (Bahdanau et al. Reference Bahdanau, Cho and Bengio2015). Instead of using only one context vector at the end of the encoding process, the attention vector provides the decoding network with a full view of the input sequence at every step of the decoding process. Thus, in the output generation process, the decoder can decide which tokens are important at any given time.

Using only attention mechanisms and dismissing recurrent and convolutional components, Vaswani et al. (Reference Vaswani, Shazeer, Parmar, Uszkoreit, Jones, Gomez, Kaiser and Polosukhin2017) created an architecture named Transformer, which was successful in numerous NLP tasks (Radford et al. Reference Radford, Narasimhan, Salimans and Sutskever2018; Devlin et al. Reference Devlin, Chang, Lee and Toutanova2019). The Transformer architecture follows the Seq2Seq paradigm comprising an encoder–decoder architecture. The encoder consists of six identical encoding layers. Each comprises a multihead self-attention mechanism, residual connections, an add-norm mechanism, and a feed-forward network. The decoder also consists of six identical layers similar to the encoding layers. However, the decoder gets two inputs and applies the attention twice, where, in one of them, the input is masked. This prevents the token in a certain position from having access to tokens after it during the generation process. Also, the final decoder layer has a size equal to the number of words in the vocabulary.

2.2 Autorregressive and masked language models

Language models compute the probability of the occurrence of a token given a context. Autoregressive language models compute the probability of occurrence of a token, given the previous tokens in the sequence. Thus, given a sequence

![]() $\textbf{x} = (x_1, x_2, \ldots, x_m)$

, an autoregressive language model calculates the probability

$\textbf{x} = (x_1, x_2, \ldots, x_m)$

, an autoregressive language model calculates the probability

![]() $p(x_t| x_{< t})$

. The probability of a sequence of

$p(x_t| x_{< t})$

. The probability of a sequence of

![]() $m$

tokens

$m$

tokens

![]() $x_1, x_2, \ldots, x_m$

is given by

$x_1, x_2, \ldots, x_m$

is given by

![]() $P(x_1, x_2, \ldots, x_m)$

. Since it is computationally expensive to enumerate all possible combinations of tokens that come before a token,

$P(x_1, x_2, \ldots, x_m)$

. Since it is computationally expensive to enumerate all possible combinations of tokens that come before a token,

![]() $P(x_1, x_2, \ldots, x_m)$

is usually conditioned to a window of

$P(x_1, x_2, \ldots, x_m)$

is usually conditioned to a window of

![]() $n$

previous tokens instead of all the previous ones. In these cases,

$n$

previous tokens instead of all the previous ones. In these cases,

Pretraining neural language models using self-supervision from a large volume of texts has been highly effective in improving the performance of various NLP tasks (Peters et al. Reference Peters, Neumann, Iyyer, Gardner, Clark, Lee and Zettlemoyer2018; Radford et al. Reference Radford, Narasimhan, Salimans and Sutskever2018; Howard and Ruder Reference Howard and Ruder2018; Devlin et al. Reference Devlin, Chang, Lee and Toutanova2019). The pretrained language models can be later adjusted with fine-tuning to handle specific downstream tasks or domains.

Different self-supervised goals were explored in the literature to pretrain a language model, including autoregressive and MLM strategies (Yang et al. Reference Yang, Dai, Yang, Carbonell, Salakhutdinov and Le2019, Devlin et al. Reference Devlin, Chang, Lee and Toutanova2019; Yang et al. Reference Yang, Dai, Yang, Carbonell, Salakhutdinov and Le2019; Lan et al. Reference Lan, Chen, Goodman, Gimpel, Sharma and Soricut2020; Lan et al. Reference Lan, Chen, Goodman, Gimpel, Sharma and Soricut2020). An autoregressive language model, given a sequence

![]() $\textbf{x} = (x_1, x_2, \ldots, x_m)$

, factors the probability into a left-to-right product

$\textbf{x} = (x_1, x_2, \ldots, x_m)$

, factors the probability into a left-to-right product

![]() $p(\textbf{x}) = \prod _{t=1}^{T}p(x_t| x_{< t})$

or from right-to-left

$p(\textbf{x}) = \prod _{t=1}^{T}p(x_t| x_{< t})$

or from right-to-left

![]() $p(\textbf{x}) = \prod _{t=T}^{1}p(x_t| x_{> t})$

. The problem with these models is unidirectionality since, during training, a token can only be aware of the tokens to its left or right. Many works point out that it is fundamental for several tasks to obtain bidirectional representations incorporating the context of both the left and the right (Devlin et al. Reference Devlin, Chang, Lee and Toutanova2019).

$p(\textbf{x}) = \prod _{t=T}^{1}p(x_t| x_{> t})$

. The problem with these models is unidirectionality since, during training, a token can only be aware of the tokens to its left or right. Many works point out that it is fundamental for several tasks to obtain bidirectional representations incorporating the context of both the left and the right (Devlin et al. Reference Devlin, Chang, Lee and Toutanova2019).

Devlin et al. (Reference Devlin, Chang, Lee and Toutanova2019) introduced the task of masked language modeling (MLM) with BERT, a transformer-encoder. MLM consists of replacing a percentage (in the original work,

![]() $15\%$

) of the sequence tokens with a token [MASK] and then predicting those masked tokens. In BERT (Devlin et al. Reference Devlin, Chang, Lee and Toutanova2019), the probability distribution of the masked tokens regards both the left and right contexts of the sentence.

$15\%$

) of the sequence tokens with a token [MASK] and then predicting those masked tokens. In BERT (Devlin et al. Reference Devlin, Chang, Lee and Toutanova2019), the probability distribution of the masked tokens regards both the left and right contexts of the sentence.

2.2.1 ALBERT: A lite version of BERT

Overall, increasing the size of a pretrained neural language model positively impacts subsequent tasks. However, growing the model becomes unfeasible at some point due to GPU/TPU memory limitations and huge training time. ALBERT (Lan et al. Reference Lan, Chen, Goodman, Gimpel, Sharma and Soricut2020) addressed these problems with two techniques for reducing parameters. This manuscript relies on an ALBERT model to extract knowledge from its rich contextualized representations. Although any other MLM model could have been selected, we adopt ALBERT because it uses fewer computational resources when compared to a BERT of the same size.

The architectural skeleton of ALBERT is similar to BERT, which means that it uses a transformer encoder with GELU activation function (Hendrycks and Gimpel Reference Hendrycks and Gimpel2016). ALBERT makes three main contributions to BERT design choices, as follows. Following BERT notation,

![]() $E$

is the size of the vocabulary vector representations,

$E$

is the size of the vocabulary vector representations,

![]() $L$

is the number of encoder layers, and

$L$

is the number of encoder layers, and

![]() $H$

is the hidden layers’ representation size.

$H$

is the hidden layers’ representation size.

Parameter factorization

BERT ties

![]() $E$

to the size of the hidden states representation

$E$

to the size of the hidden states representation

![]() $H$

, that is,

$H$

, that is,

![]() $E = H$

, which is not efficient, both for modeling and practical reasons. From a modeling point of view, the learned representations for vocabulary tokens are context-independent, while the hidden layers of learned representations are context-dependent. As the representational power of BERT lies in the possibility of obtaining rich contextualized representations from non-contextualized representations, untying

$E = H$

, which is not efficient, both for modeling and practical reasons. From a modeling point of view, the learned representations for vocabulary tokens are context-independent, while the hidden layers of learned representations are context-dependent. As the representational power of BERT lies in the possibility of obtaining rich contextualized representations from non-contextualized representations, untying

![]() $E$

from

$E$

from

![]() $H$

is a more efficient use of model parameters. From a practical point of view, as in most NLP tasks, the vocabulary size

$H$

is a more efficient use of model parameters. From a practical point of view, as in most NLP tasks, the vocabulary size

![]() $V$

is usually large; if

$V$

is usually large; if

![]() $E = H$

, increasing

$E = H$

, increasing

![]() $H$

increases the representation matrix of vocabulary tokens, which is of length

$H$

increases the representation matrix of vocabulary tokens, which is of length

![]() $V \times E$

. This can result in a model with billions of parameters, many of which are sparsely updated during training. Thus, ALBERT decomposes the matrix of vocabulary representations into two smaller matrices, reducing the parameters from

$V \times E$

. This can result in a model with billions of parameters, many of which are sparsely updated during training. Thus, ALBERT decomposes the matrix of vocabulary representations into two smaller matrices, reducing the parameters from

![]() $O(V \times H)$

to

$O(V \times H)$

to

![]() $O(V \times E + E \times H)$

. This is a significant reduction when

$O(V \times E + E \times H)$

. This is a significant reduction when

![]() $H \gg E$

.

$H \gg E$

.

Shared parameters between layers

ALBERT has a scheme for sharing parameters between layers to improve handling parameters efficiently. Although it is possible to share the parameters between the layers partially, the default configuration shares all the parameters, both neural network and attention parameters. With this, it is possible to increase the depth without increasing the number of parameters.

Coherence component between sentences in the loss function

BERT has an extra component in the loss function besides predicting the masked tokens, called next-sentence prediction (NSP). During training, NSP learns whether two sentences appear consecutively in the original text. Arguing that this component is not practical as a task when compared to the MLM task, Lan et al. (Reference Lan, Chen, Goodman, Gimpel, Sharma and Soricut2020) proposed a component called sentence-order prediction (SOP). This component uses as a positive example two consecutive text segments from the same document (such as BERT). As a negative example, the same two segments, but with the order changed. The results indicated that ALBERT improved performance in subsequent tasks that involved coding more than one sentence.

Although masked pretrained models, such as BERT and ALBERT, benefit several NLP tasks, they fit less text generation tasks since they are transformer encoders, and decoders are more suitable to generate texts. However, it is common when writing a text that words that appeared before can be changed after writing a later sequence, giving an intuitive idea of context and bidirectionality. In order to make use of rich bidirectional representations from a MLM, MATTES distills knowledge from ALBERT to benefit the TST task.

3. Literature review and related work

TST aims to automatically change stylistic features, such as formality, sentiment, author style, humor, and complexity, while trying to preserve the content. This manuscript focuses on unsupervised TST, given that creating a parallel corpus of texts with different styles is challenging and requires much human effort. In this scenario, the approaches differ in investigating how to disentangle style and content or not disentangling them at all (Hu et al. Reference Hu, Lee, Aggarwal and Zhang2022). Lample et al. (Reference Lample, Subramanian, Smith, Denoyer, Ranzato and Boureau2019) argued that it is difficult to judge whether content and style representations obtained are disentangled and that disentanglement is not necessary for the TST task either. Recent studies, including this manuscript, explore the TST task without disentangling content and style. Next, we elicit works that explicitly decouple the content from the style, try to detach them implicitly using latent variables, or do not rely on disentanglement strategies to position our work within the current TST literature.

3.1 Explicit disentanglement

Models following this strategy generate texts through direct replacement of keywords associated with the style (Li et al. Reference Li, Jia, He and Liang2018; Xu et al. Reference Xu, Sun, Zeng, Zhang, Ren, Wang and Li2018; Zhang et al. Reference Zhang, Xu, Yang and Sun2018a; Sudhakar, Upadhyay, and Maheswaran Reference Sudhakar, Upadhyay and Maheswaran2019; Wu et al. Reference Wu, Ren, Luo and Sun2019a, Reference Wu, Zhang, Zang, Han and Hu2019b; Malmi, Severyn, and Rothe Reference Malmi, Severyn and Rothe2020b).

The method Delete, Retrieve, Generate (Li et al. Reference Li, Jia, He and Liang2018) explicitly replaces keywords in a text with words of the target style. First, it removes the words that best represent the original style. Then, it fetches the text most similar to the input from the target corpus. Next, it extracts the words most closely associated with the target style from the returned text and combines them with the sentence acquired in the first step to generate the output text using a sequence-to-sequence neural network model. Sudhakar et al. (Reference Sudhakar, Upadhyay and Maheswaran2019) extended the model Delete, Retrieve, Generate to improve the Delete step using a transformer (Vaswani et al. Reference Vaswani, Shazeer, Parmar, Uszkoreit, Jones, Gomez, Kaiser and Polosukhin2017). The method POINT-THEN-OPERATE (Wu et al. Reference Wu, Ren, Luo and Sun2019) also changes the input sentence but relies on hierarchical reinforcement learning. One RL agent points out the positions that should be edited in the sentence, and another RL agent changes it.

Zhang et al. (Reference Zhang, Xu, Yang and Sun2018a) adopted a keyword substitution technique similar to Delete, Retrieve, Generate to transfer sentiment in texts. Also focusing on sentiment transfer, Xu et al. (Reference Xu, Sun, Zeng, Zhang, Ren, Wang and Li2018) developed a model with a neutralization and an emotionalization components. The former extracts semantic information without emotional content, while the latter adds sentiment content to the neutralized positions.

The Mask and Infill method (Wu et al. Reference Wu, Zhang, Zang, Han and Hu2019b) works in two stages. First, it masks words associated with the style using frequency rates. Next, it fills the masked positions with the target style using a pretrained MLM. Malmi et al. (Reference Malmi, Severyn and Rothe2020b) also used a pretrained MLM to remove snippets and generate the replacement snippets. Although these works rely on MLMs, they differ from our proposal as they use language models only to predict tokens to replace previously removed ones.

3.2 Implicit disentanglement

Models focusing on implicit disentanglement detach content and style from the original sentence but do not explicitly alter the original sentence. They learn latent content and style representations for a given text to separate content from style. Then, the content representation is combined with the target style representation to generate the text in the target style.

Most recent solutions leverage adversarial learning (Hu et al. Reference Hu, Yang, Liang, Salakhutdinov and Xing2017; Shen et al. Reference Shen, Lei, Barzilay and Jaakkola2017; Fu et al. Reference Fu, Tan, Peng, Zhao and Yan2018; Zhao et al. Reference Zhao, Kim, Zhang, Rush and LeCun2018b; Chen et al. Reference Chen, Dai, Tao, Shen, Gan, Zhang, Zhang, Zhang, Wang and Carin2018; Logeswaran, Lee, and Bengio Reference Logeswaran, Lee and Bengio2018; Lai et al. Reference Lai, Hong, Chen, Lu and Lin2019; John et al. Reference John, Mou, Bahuleyan and Vechtomova2019; Yin et al. Reference Yin, Huang, Dai and Chen2019) to obtain style-agnostic representations of the sentence content. After learning the latent content representation, the decoder receives as input that representation along with the label of the desired style to generate a variation of the input text with the desired style. Yang et al. (Reference Yang, Hu, Dyer, Xing and Berg-Kirkpatrick2018) followed that strategy and used two language models, one for each stylistic domain. The model minimizes the perplexity of sentences generated according to these pretrained language models.

Other techniques separate content from the style by artificially generating parallel data via back-translation, then converting them back to the original domain, forcing them to be the same as the input. Besides addressing the lack of parallel data, such a strategy can normalize the input sentence by stripping away information that is predictive of its original style. With back-translation, one-direction outputs and inputs can be used as pairs to train the model of the opposite transfer direction. Prabhumoye et al. (Reference Prabhumoye, Tsvetkov, Salakhutdinov and Black2018) used an English-French neural translation model to rephrase the sentence and remove the stylistic properties of the text. The English sentence is initially translated into French. The French text is then translated back to English using a French-English neural model. Finally, this style-independent latent representation learned is used to generate texts in a different style using a multiple-decoder approach. Zhang et al. (Reference Zhang, Ren, Liu, Wang, Chen, Li, Zhou and Chen2018b) followed back-translation first to create pseudo-parallel data. Then, those data initialize an iterative back-translation pipeline to train two style transfer systems based on neural translation models. Krishna et al. (Reference Krishna, Wieting and Iyyer2020) used a paraphrasing model to create pseudo-parallel data and then trained style-specific inverse paraphrase models that convert these paraphrased sentences back into the original stylized sentence. Despite adopting back-translation, our proposal does not expect that the model output is deprived of style information. On the contrary, during training, we try to control the style of the generated output.

Some disentanglement strategies explore learning a style attribute to control the generation of texts in different styles. The method presented in (Hu et al. Reference Hu, Yang, Liang, Salakhutdinov and Xing2017) induces a model that uses a variational autoencoder (VAE) to learn latent representations of sentences. These representations are composed of unstructured variables (content)

![]() $z$

and structured variables (style)

$z$

and structured variables (style)

![]() $c$

that aim to represent salient and independent features of the sentence semantics. Finally,

$c$

that aim to represent salient and independent features of the sentence semantics. Finally,

![]() $z$

and

$z$

and

![]() $c$

are inserted into a decoder to generate text in the desired style. Tian et al. (Reference Tian, Hu and Yu2018) extended this approach, adding constraints to preserve style-independent content, using Part-of-speech categories and a content-conditioned language model. Zhao, Kim, Zhang, Rush and LeCun (2018) proposed a regularized adversarial autoencoder that expands the use of adversarial autoencoders to discrete sequences. Park et al. (Reference Park, Hwang, Chen, Choo, Ha, Kim and Yim2019) relied on adversarial training and VAE to expand previous methods to generate paraphrases guided by a target style.

$c$

are inserted into a decoder to generate text in the desired style. Tian et al. (Reference Tian, Hu and Yu2018) extended this approach, adding constraints to preserve style-independent content, using Part-of-speech categories and a content-conditioned language model. Zhao, Kim, Zhang, Rush and LeCun (2018) proposed a regularized adversarial autoencoder that expands the use of adversarial autoencoders to discrete sequences. Park et al. (Reference Park, Hwang, Chen, Choo, Ha, Kim and Yim2019) relied on adversarial training and VAE to expand previous methods to generate paraphrases guided by a target style.

3.3 Non-disentanglement approaches

Although the models included in this category do not assume the need to disentangle content and style from input sentences, they also rely on controlled generation, adversarial learning, reinforcement learning, back-translation, and probabilistic models, similar to some models in the previous categories.

Jain et al. (Reference Jain, Mishra, Azad and Sankaranarayanan2019) proposed a framework for controlled natural language transformation that consists of an encoder–decoder neural network reinforced by transformations carried out through auxiliary modules. Zhang, Ding, and Soricut (Reference Zhang, Ding and Soricut2018) devised the SHAPED method with an architecture that has shared parameters updated from all training examples and not-shared parameters updated with only examples from their respective distributions. Zhou et al. (Reference Zhou, Chen, Liu, Xiao, Su, Guo and Wu2020) proposed a Seq2Seq model that dynamically evaluates the relevance of each output word for the target style. Lample et al. (Reference Lample, Subramanian, Smith, Denoyer, Ranzato and Boureau2019) also used the strategy of learning attribute representations to control text generation. They demonstrated that it is difficult to prove that the style is separated from the disentangled content representation and that performing this disentanglement is unnecessary for the TST task to succeed. Dai et al. (Reference Dai, Liang, Qiu and Huang2019) proposed a transformer-based architecture with trainable style vectors. Our proposal follows this architecture, but it differs in the training strategy since it alters the loss function of the generating network to extract knowledge from a MLM.

The methods presented in Mueller, Gifford, and Jaakkola (Reference Mueller, Gifford and Jaakkola2017); Wang, Hua, and Wan (Reference Wang, Hua and Wan2019); Liu et al. (Reference Liu, Fu, Zhang, Pal and Lv2020); Xu, Cheung, and Cao (Reference Xu, Cheung and Cao2020) have in common the fact that they manipulate the hidden representations obtained from the input sentence to generate texts in the desired style. The method proposed in Mueller et al. (Reference Mueller, Gifford and Jaakkola2017) comprises a recurrent VAE and an output predictor neural network. By imposing boundary conditions during optimization and using the VAE decoder to generate the revised sentences, the method ensures that the transformation is similar to the original sentence, is associated with better outputs, and looks natural. The method devised in Liu et al. (Reference Liu, Fu, Zhang, Pal and Lv2020) has three components: (1) a VAE, which has an encoder that maps the sentence to a smooth continuous vector space and a decoder that maps back the continuous representation to a sentence; (2) attribute predictors, which use the continuous representation obtained by the VAE as input and predict the attributes of the output sentence; and (3) content predictors of a Bag-of-word (BoW) variable for the output sentence. The method proposed in Wang et al. (Reference Wang, Hua and Wan2019) comprises an autoencoder based on Transformers to learn a hidden input representation. Next, the task becomes an optimization problem that edits the obtained hidden representation according to the target attribute. VAE is also the core of the method proposed in Xu et al. (Reference Xu, Cheung and Cao2020) to control text generation. The method proposed in He et al. (Reference He, Wang, Neubig and Berg-Kirkpatrick2020) addresses the TST task with unsupervised learning, formulating it as a probabilistic deep generative model, where the optimization objective arises naturally, without the need to create artificial custom objectives.

Gong et al. (Reference Gong, Bhat, Wu, Xiong and Hwu2019) leveraged reinforcement learning with a generator and an evaluator network. The evaluator is an adversarially trained style discriminator with semantic and syntactic constraints punctuating the sentence generated by style, content preservation, and fluency. The method proposed in Luo et al. (Reference Luo, Li, Zhou, Yang, Chang, Sun and Sui2019) also uses reinforcement learning and considers the problem of transferring style from one domain to another as a dual task. To this end, two rewards are modeled based on this framework to reflect style control and content preservation.

Lai et al. (Reference Lai, Toral and Nissim2021) created a three-step procedure on top of the large pretrained seq2seq model BART (Lewis et al. Reference Lewis, Liu, Goyal, Ghazvininejad, Mohamed, Levy, Stoyanov and Zettlemoyer2020). First, they further pretrain the BART model on an existing collection of generic paraphrases and synthetic pairs created using a general-purpose lexical resource. Second, they use iterative back-translation with several reward strategies to train two models simultaneously, each in a transfer direction. Third, using their best systems from the previous step, they create a static resource of parallel data. These pairs are used to fine-tune the original BART with all reward strategies in a supervised way. As this model, ours also has a preliminary pretraining phase on the same paraphrase data. Nevertheless, our main training procedure differs, relying mostly on adversarial and distillation techniques for style control.

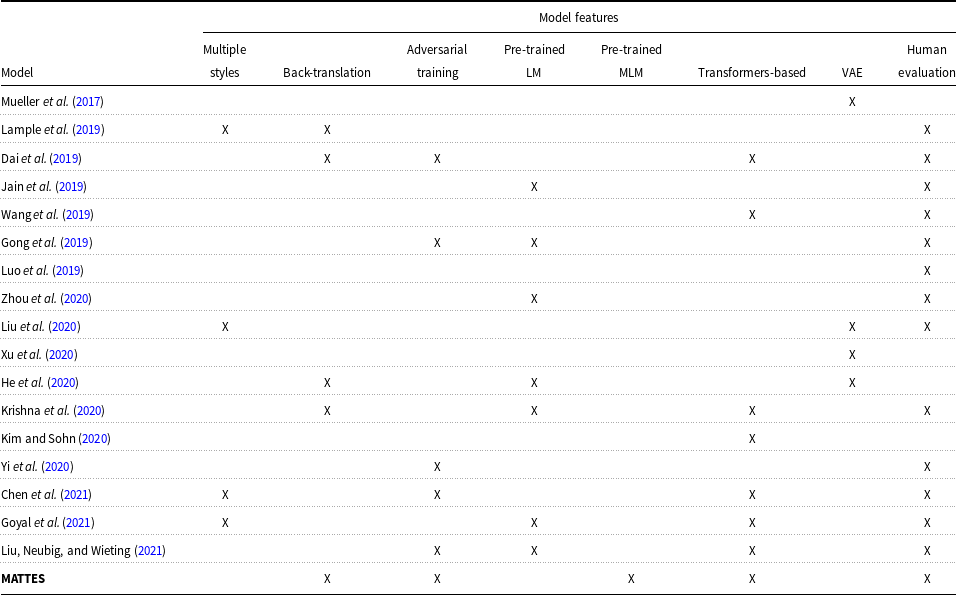

Table 1 exhibits different features of some works from the last 5 years that, like MATTES, do not follow any disentanglement strategy to decouple content from style in the unsupervised TST task.

Table 1. Non-disentaglemet publications according to model characteristics

4. MATTES: Masked transformer for text style transfer

This Section presents MATTES, our proposed approach that distills knowledge from a MLM aiming at improving the quality of the text generated by a Seq2Seq model for the TST task. The MLM adopted here is ALBERT (Lan et al. Reference Lan, Chen, Goodman, Gimpel, Sharma and Soricut2020), a lighter version of the popular BERT that still gets better or competitive results but relies on parameter reduction techniques to improve training efficiency and reduce memory costs.

MATTES follows the architecture established in Dai et al. (Reference Dai, Liang, Qiu and Huang2019), which in turn uses an adversarial training framework (Radford, Metz, and Chintala Reference Radford, Metz and Chintala2016). Training adopts two neural networks. One is a discriminator network operating only during training. The discriminator is a style classifier aiming at making the model learn to differentiate the original and the reconstructed sentences from the translated sentences. The other neural network is the text generator. It comprises an encoder and a decoder, based on a transformer architecture. The generator network receives as input a sentence

![]() $X$

and a target output style

$X$

and a target output style

![]() $s^{\text{tgt}}$

and produces a sentence

$s^{\text{tgt}}$

and produces a sentence

![]() $Y$

in the target style, making the proposed model a mapping function

$Y$

in the target style, making the proposed model a mapping function

![]() $Y = f_\theta (X,s^{\text{tgt}})$

. The final model learned by MATTES and used for inference is the generator network, while the discriminator network is used only during training.

$Y = f_\theta (X,s^{\text{tgt}})$

. The final model learned by MATTES and used for inference is the generator network, while the discriminator network is used only during training.

Before going into further details about the specific components of MATTES, Section 4.1 formalizes how we investigate the textual style transfer task in this manuscript. Afterward, the main paradigms that constitute the proposed method are presented: Section 4.2 presents the Seq2Seq learning component, and Section 4.3 devises the MLM and the knowledge distillation method that extracts knowledge from the MLM. Finally, Section 4.4 describes the adversarial learning algorithm proposed here and gives additional details about the model architecture and design choices.

4.1 Problem formulation

This manuscript assumes the styles as elements of a set

![]() $S$

. For example, S = {positive, negative} for the polarity swap task, where the text style can be positive or negative. For training the model, there is a set of sentences

$S$

. For example, S = {positive, negative} for the polarity swap task, where the text style can be positive or negative. For training the model, there is a set of sentences

![]() $D = \{(X_1,s_1),\ldots,(X_k,s_k)\}$

labeled with their style, that is,

$D = \{(X_1,s_1),\ldots,(X_k,s_k)\}$

labeled with their style, that is,

![]() $X_i$

is a sentence and

$X_i$

is a sentence and

![]() $s_i \in S$

is the style attribute of the sentence. From

$s_i \in S$

is the style attribute of the sentence. From

![]() $D$

, we extract a set of style sentences

$D$

, we extract a set of style sentences

![]() $D_s = \{ X : (X,s) \in D\}$

, which represent all the sentences of

$D_s = \{ X : (X,s) \in D\}$

, which represent all the sentences of

![]() $D$

with attribute

$D$

with attribute

![]() $s$

. In the polarity swap task, it would be all sentences with attribute positive, for example. All sequences in the same dataset

$s$

. In the polarity swap task, it would be all sentences with attribute positive, for example. All sequences in the same dataset

![]() $D_i$

share specific characteristics related to the style of the sequences.

$D_i$

share specific characteristics related to the style of the sequences.

The goal of the textual style transfer learning task tackled in this manuscript is to build a model that receives as input a sentence

![]() $X$

and a target style

$X$

and a target style

![]() $s^{\text{tgt}}$

, where

$s^{\text{tgt}}$

, where

![]() $X$

is a sentence with style

$X$

is a sentence with style

![]() $s^{\text{src}} \neq s^{\text{tgt}}$

, and produces a sentence

$s^{\text{src}} \neq s^{\text{tgt}}$

, and produces a sentence

![]() $Y$

that preserves as much as possible the content of

$Y$

that preserves as much as possible the content of

![]() $X$

while incorporating the style

$X$

while incorporating the style

![]() $s^{\text{tgt}}$

. MATTES addresses the problem of style transfer with unsupervised machine learning. Thus, the only data available for training are the sequences

$s^{\text{tgt}}$

. MATTES addresses the problem of style transfer with unsupervised machine learning. Thus, the only data available for training are the sequences

![]() $X$

and their style source

$X$

and their style source

![]() $s^{\text{src}}$

. MATTES do not have access to a template sentence

$s^{\text{src}}$

. MATTES do not have access to a template sentence

![]() $X^{*}$

, which would be the conversion of

$X^{*}$

, which would be the conversion of

![]() $X$

to the target style

$X$

to the target style

![]() $s^{\text{tgt}}$

.

$s^{\text{tgt}}$

.

When we adopt the strategy of pretraining the Seq2Seq model, there is another style element in the set

![]() $S$

that we call the paraphrase style. For the polarity swap task,

$S$

that we call the paraphrase style. For the polarity swap task,

![]() $S$

would be S = {positive, negative, paraphrase}, for example. This new style is not part of the domain dataset, and it only exists to make it possible to start from the pretrained model in the overall training.

$S$

would be S = {positive, negative, paraphrase}, for example. This new style is not part of the domain dataset, and it only exists to make it possible to start from the pretrained model in the overall training.

4.2 Sequence-to-sequence model

This section describes the sequence-to-sequence architecture and how it is pretrained on a large amount of generic data to improve the model.

4.2.1 Sequence-to-sequence learning architecture

As usual, we adopt the Seq2Seq encoder–decoder paradigm to solve the TST task. Formally, during learning, the model trains to generate an output sequence

![]() $Y = (y_1, \ldots, y_N )$

of length

$Y = (y_1, \ldots, y_N )$

of length

![]() $N$

, conditioned on the input sequence

$N$

, conditioned on the input sequence

![]() $X = (x_1, \ldots, x_M)$

of length

$X = (x_1, \ldots, x_M)$

of length

![]() $M$

, where

$M$

, where

![]() $x_i \in X$

and

$x_i \in X$

and

![]() $y_i \in Y$

are tokens. The encoder–decoder neural network achieves the goal of generating the output sequence by learning a conditional probability distribution

$y_i \in Y$

are tokens. The encoder–decoder neural network achieves the goal of generating the output sequence by learning a conditional probability distribution

![]() $P_{\theta }(Y|X)$

, by minimizing the cross entropy loss function

$P_{\theta }(Y|X)$

, by minimizing the cross entropy loss function

![]() $\mathcal{L}(\theta )$

$\mathcal{L}(\theta )$

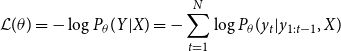

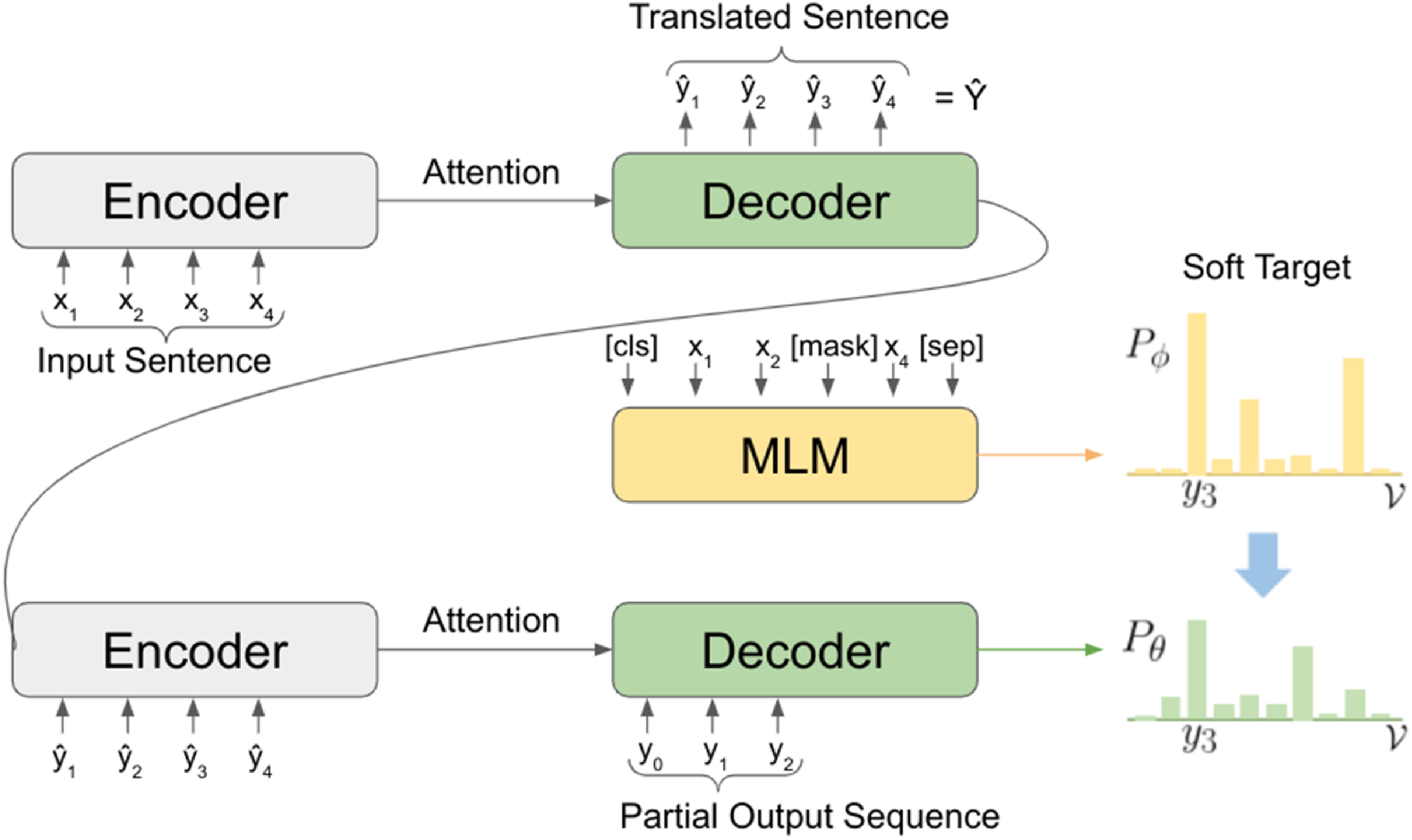

\begin{equation} \begin{split} \mathcal{L}(\theta ) = -\log P_{\theta }(Y|X) = - \sum _{t=1}^{N}\log P_{\theta }(y_t|y_{1:t-1},X) \end{split} \end{equation}

\begin{equation} \begin{split} \mathcal{L}(\theta ) = -\log P_{\theta }(Y|X) = - \sum _{t=1}^{N}\log P_{\theta }(y_t|y_{1:t-1},X) \end{split} \end{equation}

where

![]() $\theta$

are the model parameters.

$\theta$

are the model parameters.

Following (Dai et al. Reference Dai, Liang, Qiu and Huang2019), in this manuscript, the Transformer (Vaswani et al. Reference Vaswani, Shazeer, Parmar, Uszkoreit, Jones, Gomez, Kaiser and Polosukhin2017) architecture is adopted as the Seq2Seq model. During the training and inference process, in addition to the embeddings of the input sentence

![]() $X$

, the model also receives as input an embedding of the target style. Hence, the input embeddings passed to the encoder are the target style embedding followed by the embeddings of the input sentence, which is the sum of the token embeddings and the positional embeddings, following the Transformer architecture. Thus, the method aims to learn a model that represents a probability distribution conditioned not only on

$X$

, the model also receives as input an embedding of the target style. Hence, the input embeddings passed to the encoder are the target style embedding followed by the embeddings of the input sentence, which is the sum of the token embeddings and the positional embeddings, following the Transformer architecture. Thus, the method aims to learn a model that represents a probability distribution conditioned not only on

![]() $X$

but also on the desired target style

$X$

but also on the desired target style

![]() $s^{\text{tgt}}$

. Thus, Equation (2) is modified to meet this characteristic, giving rise to the loss function

$s^{\text{tgt}}$

. Thus, Equation (2) is modified to meet this characteristic, giving rise to the loss function

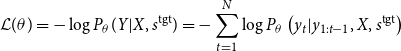

\begin{equation} \begin{split} \mathcal{L}(\theta ) = -\log P_{\theta }(Y|X,s^{\text{tgt}}) = - \sum _{t=1}^{N}\log P_{\theta }\left(y_t|y_{1:t-1},X,s^{\text{tgt}}\right) \end{split} \end{equation}

\begin{equation} \begin{split} \mathcal{L}(\theta ) = -\log P_{\theta }(Y|X,s^{\text{tgt}}) = - \sum _{t=1}^{N}\log P_{\theta }\left(y_t|y_{1:t-1},X,s^{\text{tgt}}\right) \end{split} \end{equation}

4.2.2 Sequence-to-sequence pre-training

Pretraining and fine-tuning are usually adopted when target tasks have few examples available (Radford et al. Reference Radford, Narasimhan, Salimans and Sutskever2018; Devlin et al. Reference Devlin, Chang, Lee and Toutanova2019). Several pretraining approaches adopt MLM, a kind of denoising auto-encoder trained to reconstruct the text where some random words have been masked. This kind of pretraining has mainly improved the performance of natural language understanding tasks, which can be justified by the fact that MLMs are composed only of a bidirectional encoder, while generation tasks adopt a left-to-right decoder. In this sense, the model BART (Lewis et al. Reference Lewis, Liu, Goyal, Ghazvininejad, Mohamed, Levy, Stoyanov and Zettlemoyer2020) combined bidirectional and auto-regressive transformers to show that sequence-to-sequence pretraining can benefit downstream language generation tasks.

Following this rationale, we pretrain our Seq2Seq model in a large collection of paraphrase pairs (Hu et al. Reference Hu, Singh, Holzenberger, Post and Van Durme2019). With that, we expect the model to learn the primary task of rewriting, allowing the generation of pseudo-parallel data and, consequently, handling the style transfer in a supervised fashion. We added another style embedding inside our style embedding layer to adapt this technique to our training framework. This way, besides the actual styles of the sentences, there is an additional one that we refer to as

![]() $s^{\text{para}}$

. It is the only style inserted into the model during the pretraining phase. During this phase, we minimize the following loss function

$s^{\text{para}}$

. It is the only style inserted into the model during the pretraining phase. During this phase, we minimize the following loss function

\begin{equation} \begin{split} \mathcal{L}(\theta ) = -\log P_{\theta }(Y|X,s^{\text{para}}) = - \sum _{t=1}^{N}\log P_{\theta }\left(y_t|y_{1:t-1},X,s^{\text{para}}\right) \end{split} \end{equation}

\begin{equation} \begin{split} \mathcal{L}(\theta ) = -\log P_{\theta }(Y|X,s^{\text{para}}) = - \sum _{t=1}^{N}\log P_{\theta }\left(y_t|y_{1:t-1},X,s^{\text{para}}\right) \end{split} \end{equation}

4.3 Knowledge distillation

This section starts by describing the MLM adopted in this manuscript. Next, it devised the knowledge distillation strategy proposed here to leverage the MLM.

4.3.1 Masked language model

One of the main contributions of this manuscript is to introduce the ability to transfer knowledge contained in the rich bidirectional contextualized representations provided by a MLM to a Seq2Seq model. MATTES adopts ALBERT (Lan et al. Reference Lan, Chen, Goodman, Gimpel, Sharma and Soricut2020) as the MLM, whose architecture is similar to the popular BERT model (Devlin et al. Reference Devlin, Chang, Lee and Toutanova2019) but has fewer parameters.

From the language model learned by ALBERT, one can obtain the probability distribution of the masked tokens according to

where

![]() $x_*^m \in X^m$

are the masked tokens,

$x_*^m \in X^m$

are the masked tokens,

![]() $l$

is the number of masked tokens in

$l$

is the number of masked tokens in

![]() $X$

,

$X$

,

![]() $X^u$

are the unmasked tokens, and

$X^u$

are the unmasked tokens, and

![]() $\phi$

are ALBERT parameters.

$\phi$

are ALBERT parameters.

Before training the main model, we fine-tuned ALBERT with the available training dataset. Although ALBERT is prepared to handle a couple of sentences as inputs, given the problem definition, only one sentence is required. This is true either when fine-tuning or when training the main Seq2Seq model. Because of that, MATTES does not adopt ALBERT Sentence-order prediction loss component during the fine-tuning. When training the main TST model, ALBERT parameters do not change. As the next section explains, MATTES uses the probability distribution provided by ALBERT for each token of the input sentence as a label for training the model in one of the components of the loss function. Thus, instead of forcing the model to generate a probability distribution with the entire probability mass in a single token, the model is forced to have a smoother probability distribution, injecting probability mass into several tokens.

4.3.2 Knowledge distillation from the masked language model

This section details how we introduce the knowledge distillation strategy into the model loss function. To preserve the content of the original message, several previous TST methods adopt a back-translation (BT) strategy (Lample et al. Reference Lample, Subramanian, Smith, Denoyer, Ranzato and Boureau2019; Dai et al. Reference Dai, Liang, Qiu and Huang2019; He et al. Reference He, Wang, Neubig and Berg-Kirkpatrick2020; Lai et al. Reference Lai, Toral and Nissim2021), proposed in Sennrich, Haddow, and Birch (Reference Sennrich, Haddow and Birch2016) for the machine translation task. BT generates pseudo-parallel sequences for training when no parallel sentences are available in the examples, thus generating latent parallel data. Thus, in the context of the TST task, pairs of sentences are created to train the model by automatically converting sentences from the training set to another style.

During training, the BT component takes a sentence

![]() $X$

and its style

$X$

and its style

![]() $s$

as input and converts it to a target style target

$s$

as input and converts it to a target style target

![]() $\hat{s}\neq s$

, generating the sentence

$\hat{s}\neq s$

, generating the sentence

![]() $\hat{Y} = f_\theta (X, \hat{s})$

. After that, the generated sentence

$\hat{Y} = f_\theta (X, \hat{s})$

. After that, the generated sentence

![]() $\hat{Y}$

is passed as input to the model along with the original style

$\hat{Y}$

is passed as input to the model along with the original style

![]() $s$

, and the network is trained to learn to predict the original input sentence

$s$

, and the network is trained to learn to predict the original input sentence

![]() $X$

. The input sentence is first converted to the target style and then converted back to its original style.

$X$

. The input sentence is first converted to the target style and then converted back to its original style.

The method proposed in Dai et al. (Reference Dai, Liang, Qiu and Huang2019) adopts the back-translation strategy to learn a TST model, minimizing the negative value of the logarithm of the probability that the generated sentence is equal to the original sentence with

where

![]() $f_\theta (X, \hat{s})$

indicates the converted sentence and

$f_\theta (X, \hat{s})$

indicates the converted sentence and

![]() $s$

is the original input style.

$s$

is the original input style.

Sequence-to-sequence models are normally trained from left to right. Thus, when generating each token to compose the sentence, the vocabulary probability distribution is conditioned only to the previous tokens in the sentence. This is to avoid each token seeing itself and the others after it. However, such an approach has the disadvantage of estimating the probability distribution only with the left context.

MATTES uses a configuration called masked transformer during training to overcome this limitation and generate a distribution that includes bidirectional information. The adoption of this architecture in the style transfer task comes from the observation that the probability distribution of a masked token

![]() $x_t^{m}$

given by an MLM contains both past and future context information. Thus, as no sentence pairs are available to perform supervised training, the central idea is to force MATTES to generate a distribution provided by ALBERT for each token. By doing that, MATTES smooths the probability curve and spreads probability mass over more tokens. Such additional information can improve the quality of the generated texts and, in particular, the style control of the model. Thus, during the optimization of the loss function component related to the back-translation (Equation 6), the target is no longer the distribution in which the entire probability mass is in a single token. Instead, it becomes the probability distribution given by the MLM

$x_t^{m}$

given by an MLM contains both past and future context information. Thus, as no sentence pairs are available to perform supervised training, the central idea is to force MATTES to generate a distribution provided by ALBERT for each token. By doing that, MATTES smooths the probability curve and spreads probability mass over more tokens. Such additional information can improve the quality of the generated texts and, in particular, the style control of the model. Thus, during the optimization of the loss function component related to the back-translation (Equation 6), the target is no longer the distribution in which the entire probability mass is in a single token. Instead, it becomes the probability distribution given by the MLM

![]() $P_{\phi }(x_t^m|X^u)$

(Equation 5), for each token

$P_{\phi }(x_t^m|X^u)$

(Equation 5), for each token

![]() $x_t$

of the sentence input. The distribution provided by the MLM becomes a softer target for text generation during training, moving the model away from learning a more abrupt and unreal distribution, where the entire probability mass is in a single token.

$x_t$

of the sentence input. The distribution provided by the MLM becomes a softer target for text generation during training, moving the model away from learning a more abrupt and unreal distribution, where the entire probability mass is in a single token.

Another point that MATTES leverages with MLM is the use of a knowledge distillation scheme (Hinton, Vinyals, and Dean Reference Hinton, Vinyals and Dean2015). Distilling consists of extracting the knowledge contained in one model through a specific training technique. This technique is commonly used to transfer information from a large, already trained model, a teacher, to a smaller model, a student, better suited for production. In such a scheme, the student uses the teacher’s output values as the goal, instead of relying on the training set labels. The distillation process controls better the optimization and regularization of the training process (Phuong and Lampert Reference Phuong and Lampert2019).

Several previous distillation methods train both the teacher and the student in the same task, aiming at model compression (Hinton et al. Reference Hinton, Vinyals and Dean2015; Sun et al. Reference Sun, Cheng, Gan and Liu2019). Here, we have a different goal, as we use distillation to take advantage of pretrained bidirectional representations generated through a MLM. Thus, ALBERT provides smoother labels to be used as targets during training in the knowledge distillation component of the Seq2Seq loss function, inspired by the method proposed in Chen et al. (Reference Chen, Gan, Cheng, Liu and Liu2020), to improve the quality of the generated text.

MATTES benefits from the distillation scheme by making the MLM assume the teacher’s role, while the unsupervised Seq2Seq model behaves like the student. Equation (7) shows how we adapt the back-translation component to be a bidirectional knowledge distillation component:

\begin{equation} \begin{aligned} \mathcal{L}_{\text{bidi}}(\theta ) ={} & -\sum _{t=1}^{N} \sum _{w \in V}P_{\phi }(x_t = w |X^u) \cdot \\ &\cdot \log P_{\theta }(y_t = w |y_{1:t-1},f_\theta (X,\hat{s},s)) \end{aligned} \end{equation}

\begin{equation} \begin{aligned} \mathcal{L}_{\text{bidi}}(\theta ) ={} & -\sum _{t=1}^{N} \sum _{w \in V}P_{\phi }(x_t = w |X^u) \cdot \\ &\cdot \log P_{\theta }(y_t = w |y_{1:t-1},f_\theta (X,\hat{s},s)) \end{aligned} \end{equation}

where

![]() $P_{\phi }(x_t)$

is the soft target provided by the MLM with learned parameters

$P_{\phi }(x_t)$

is the soft target provided by the MLM with learned parameters

![]() $\phi$

,

$\phi$

,

![]() $N$

is the sentence length, and

$N$

is the sentence length, and

![]() $V$

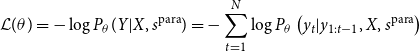

denotes the vocabulary. Note that the parameters of the MLM are fixed during the training process. Figure 1 illustrates the learning process, where the objective is to make the probability distribution of the word

$V$

denotes the vocabulary. Note that the parameters of the MLM are fixed during the training process. Figure 1 illustrates the learning process, where the objective is to make the probability distribution of the word

![]() $P_\theta (y_t)$

, provided by the student, be closer to the distribution provided by the teacher,

$P_\theta (y_t)$

, provided by the student, be closer to the distribution provided by the teacher,

![]() $ P_\phi (x_t)$

.

$ P_\phi (x_t)$

.

Figure 1. Training illustration when the model is predicting the token

![]() $y_3$

using an MLM.

$y_3$

using an MLM.

![]() $P_\theta$

is the student distribution, while

$P_\theta$

is the student distribution, while

![]() $P_\phi$

is the teacher soft distribution provided by the MLM.

$P_\phi$

is the teacher soft distribution provided by the MLM.

MATTES is trained with an adapted back-translation method that implements a knowledge distillation procedure:

where

![]() $\alpha$

is a hyperparameter to adjust the relative importance of the soft targets provided by the MLM and the original targets.

$\alpha$

is a hyperparameter to adjust the relative importance of the soft targets provided by the MLM and the original targets.

With the introduction of the new loss function term, the distribution is forced to become smoother during training. When the traditional one-hot representations are used as a target during training, the model is forced to generate the correct token. All other tokens do not matter to the model, treating equally tokens that would be more likely to occur and tokens with almost zero chance of occurring. In this way, by relying on a smoother distribution, the model increases its potential to generate more fluent sentences as the probability spreads over more tokens. Also, a smoother distribution avoids token generation entirely out of context and better controls the desired style.

4.4 Learning algorithm

Training the model proposed here follows the algorithm defined in Dai et al. (Reference Dai, Liang, Qiu and Huang2019). Both the discriminator and the generator networks are trained adversarially. First, we describe how to train the discriminator network and then the generator, where the masked transformer proposed in this manuscript is inserted.

4.4.1 Learning the discriminator network

The discriminator neural network is a multiclass classifier with

![]() $K+1$

classes.

$K+1$

classes.

![]() $K$

classes are associated with

$K$

classes are associated with

![]() $K$

different styles, and the remaining class refers to the converted sentences generated by the masked Transformer. The discriminator network is trained to distinguish the original and the reconstructed sentences from the translated sentences. It means the classifier is trained to classify a sentence

$K$

different styles, and the remaining class refers to the converted sentences generated by the masked Transformer. The discriminator network is trained to distinguish the original and the reconstructed sentences from the translated sentences. It means the classifier is trained to classify a sentence

![]() $X$

with style

$X$

with style

![]() $s$

and its reconstructed sentence

$s$

and its reconstructed sentence

![]() $Y$

, as belonging to the class

$Y$

, as belonging to the class

![]() $s$

, and the translated

$s$

, and the translated

![]() $\hat{Y}$

as belonging to the class of translated sentences. Accordingly, its loss function is defined as

$\hat{Y}$

as belonging to the class of translated sentences. Accordingly, its loss function is defined as

where

![]() $\rho$

are the parameters of the discriminator network,

$\rho$

are the parameters of the discriminator network,

![]() $c$

is the stylistic domain of the sample that can assume

$c$

is the stylistic domain of the sample that can assume

![]() $K+1$

categories. The parameters

$K+1$

categories. The parameters

![]() $\theta$

of the generator network are not updated when training the discriminator.

$\theta$

of the generator network are not updated when training the discriminator.

4.4.2 Learning the generator network

A reconstruction component, a knowledge distillation component, and an adversarial component compose the final loss function of the masked transformer, as follows.

Input sentence reconstruction component

When the model receives as input a sentence

![]() $X$

along with its style

$X$

along with its style

![]() $s$

, the model must be able to reconstruct the original sentence. The following reconstruction component is added to the loss function to make the model achieve this ability:

$s$

, the model must be able to reconstruct the original sentence. The following reconstruction component is added to the loss function to make the model achieve this ability:

During training,

![]() $\mathcal{L}_{\text{self}}$

is optimized in the traditional way, that is, from left to right, masking the future context, according to Equation (3). Despite being possible, this component does not adopt the technique of knowledge distillation as we would like to isolate the distillation to a single component and verify its benefit.

$\mathcal{L}_{\text{self}}$

is optimized in the traditional way, that is, from left to right, masking the future context, according to Equation (3). Despite being possible, this component does not adopt the technique of knowledge distillation as we would like to isolate the distillation to a single component and verify its benefit.

Knowledge distillation component

To extract knowledge from a pretrained MLM to improve the transductive process of converting a sentence from one style to another, we introduce the following knowledge distillation component

where

![]() $\mathcal{L}_{\text{BT}}$

is as Equation (6) and

$\mathcal{L}_{\text{BT}}$

is as Equation (6) and

![]() $\mathcal{L}_{\text{bidi}}$

is defined as Equation (7). With this, we expect to smooth the probability distribution of the style converter model, producing more fluent sentences resembling the target domain texts.

$\mathcal{L}_{\text{bidi}}$

is defined as Equation (7). With this, we expect to smooth the probability distribution of the style converter model, producing more fluent sentences resembling the target domain texts.

During training, to generate the knowledge distillation component (Equation 11) of our cost function, the translated sentence style

![]() $\hat{s}$

inserted into the generator network to obtain

$\hat{s}$

inserted into the generator network to obtain

![]() $\hat{Y}$

depends on whether we are training from scratch or using the generator network already pretrained on paraphrase data. In the former case, as we do not have the style

$\hat{Y}$

depends on whether we are training from scratch or using the generator network already pretrained on paraphrase data. In the former case, as we do not have the style

![]() $s^{\text{para}}$

, we convert it to the other existing style. On the latter,

$s^{\text{para}}$

, we convert it to the other existing style. On the latter,

![]() $\hat{Y}$

is created according to the paraphrase style

$\hat{Y}$

is created according to the paraphrase style

![]() $s^{\text{para}}$

. In both approaches,

$s^{\text{para}}$

. In both approaches,

![]() $\hat{Y}$

is inserted back into the network along with the original style

$\hat{Y}$

is inserted back into the network along with the original style

![]() $s$

to generate our final probability distribution

$s$

to generate our final probability distribution

![]() $P_\theta$

. This architectural modification unlocks our model to handle multiple styles at once. This way, regardless of the number of style domains, during training, we translate to

$P_\theta$

. This architectural modification unlocks our model to handle multiple styles at once. This way, regardless of the number of style domains, during training, we translate to

![]() $s^{\text{para}}$

and then back to

$s^{\text{para}}$

and then back to

![]() $s^{\text{src}}$

. In inference time, we first translate to

$s^{\text{src}}$

. In inference time, we first translate to

![]() $s^{\text{para}}$

and then to

$s^{\text{para}}$

and then to

![]() $s^{\text{tgt}}$

. Translating to the paraphrase style can be thought of as normalizing the input sentence, striping out stylistic information regarding the source style.

$s^{\text{tgt}}$

. Translating to the paraphrase style can be thought of as normalizing the input sentence, striping out stylistic information regarding the source style.

Adversarial component

When adopting the training from scratch approach, if the model is only trained with the sentence reconstruction and knowledge distillation components, it could quickly converge to learn to copy the input sentence, that is, stick to learning the identity function. Thus, to avoid this problematic and unwanted behavior, an adversarial component is added to the cost function to encourage texts converted to style

![]() $\hat{s}$

, different from the input sentence style

$\hat{s}$

, different from the input sentence style

![]() $s$

, to get closer to texts from the style

$s$

, to get closer to texts from the style

![]() $\hat{s}$

.

$\hat{s}$

.

In the scenario with the presence of paraphrase style, the adversarial component tries to modify the generator such that the generated paraphrase sentence

![]() $\hat{Y}$

is pushed to become similar to other existing styles of the training set, as long as they are different from

$\hat{Y}$

is pushed to become similar to other existing styles of the training set, as long as they are different from

![]() $s^{\text{src}}$

.

$s^{\text{src}}$

.

Generalizing for both approaches, the converted sentence

![]() $\hat{Y}$

is inserted into the discriminator neural network and, during training, the probability of the generated sentence being of the style

$\hat{Y}$

is inserted into the discriminator neural network and, during training, the probability of the generated sentence being of the style

![]() $s^{\text{tgt}}$

, such that

$s^{\text{tgt}}$

, such that

![]() $s^{\text{tgt}}$

$s^{\text{tgt}}$

![]() $\neq$

$\neq$

![]() $s^{\text{src}}$

and

$s^{\text{src}}$

and

![]() $s^{\text{tgt}}$

$s^{\text{tgt}}$

![]() $\neq$

$\neq$

![]() $s^{\text{para}}$

, is maximized through optimization of the loss function defined in Equation 12. The

$s^{\text{para}}$

, is maximized through optimization of the loss function defined in Equation 12. The

![]() $\rho$

parameters of the discriminator network are not updated when training the generator network.

$\rho$

parameters of the discriminator network are not updated when training the generator network.

These three loss functions are merged, and the overall objective for the generator network becomes:

where

![]() $a_1$

and

$a_1$

and

![]() $a_2$

are hyperparameters to adjust each component’s importance in the loss function of the generator network.

$a_2$

are hyperparameters to adjust each component’s importance in the loss function of the generator network.

4.4.3 Adversarial training

Generative adversarial networks (GANs) (Goodfellow et al. Reference Goodfellow, Pouget-Abadie, Mirza, Xu, Warde-Farley, Ozair, Courville and Bengio2014) are differentiable generator neural networks based on a game theory scenario where a generator network must compete against an opponent. The generator network produces samples

![]() $x=g(z;\theta ^{(g)})$

. The discriminator network’s adversary aims to distinguish between samples from the training set and the generator network. Thus, during training, the discriminator learns to classify samples as real or artificially generated by the generator network. Simultaneously, the generator network tries to trick the discriminator and produces samples that look like they are coming from the probability distribution of the dataset. At convergence, the generator samples will be indistinguishable from the actual training set samples, and the discriminator network can be dropped.

$x=g(z;\theta ^{(g)})$

. The discriminator network’s adversary aims to distinguish between samples from the training set and the generator network. Thus, during training, the discriminator learns to classify samples as real or artificially generated by the generator network. Simultaneously, the generator network tries to trick the discriminator and produces samples that look like they are coming from the probability distribution of the dataset. At convergence, the generator samples will be indistinguishable from the actual training set samples, and the discriminator network can be dropped.

In the context of this manuscript, the adversarial strategy can be better understood according to the approach adopted. When training from scratch, it aims at converting texts to the desired style without incurring the failure to copy the input text. When training from a pretrained paraphrase model, the adversarial technique tries to push the generated paraphrases toward different styles

![]() $s^{\text{tgt}}$

, such that

$s^{\text{tgt}}$

, such that

![]() $s^{\text{tgt}}$

$s^{\text{tgt}}$

![]() $\neq$

$\neq$

![]() $s^{\text{src}}$

and

$s^{\text{src}}$

and

![]() $s^{\text{tgt}}$

$s^{\text{tgt}}$

![]() $\neq$

$\neq$

![]() $s^{\text{para}}$

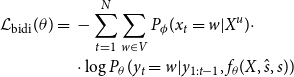

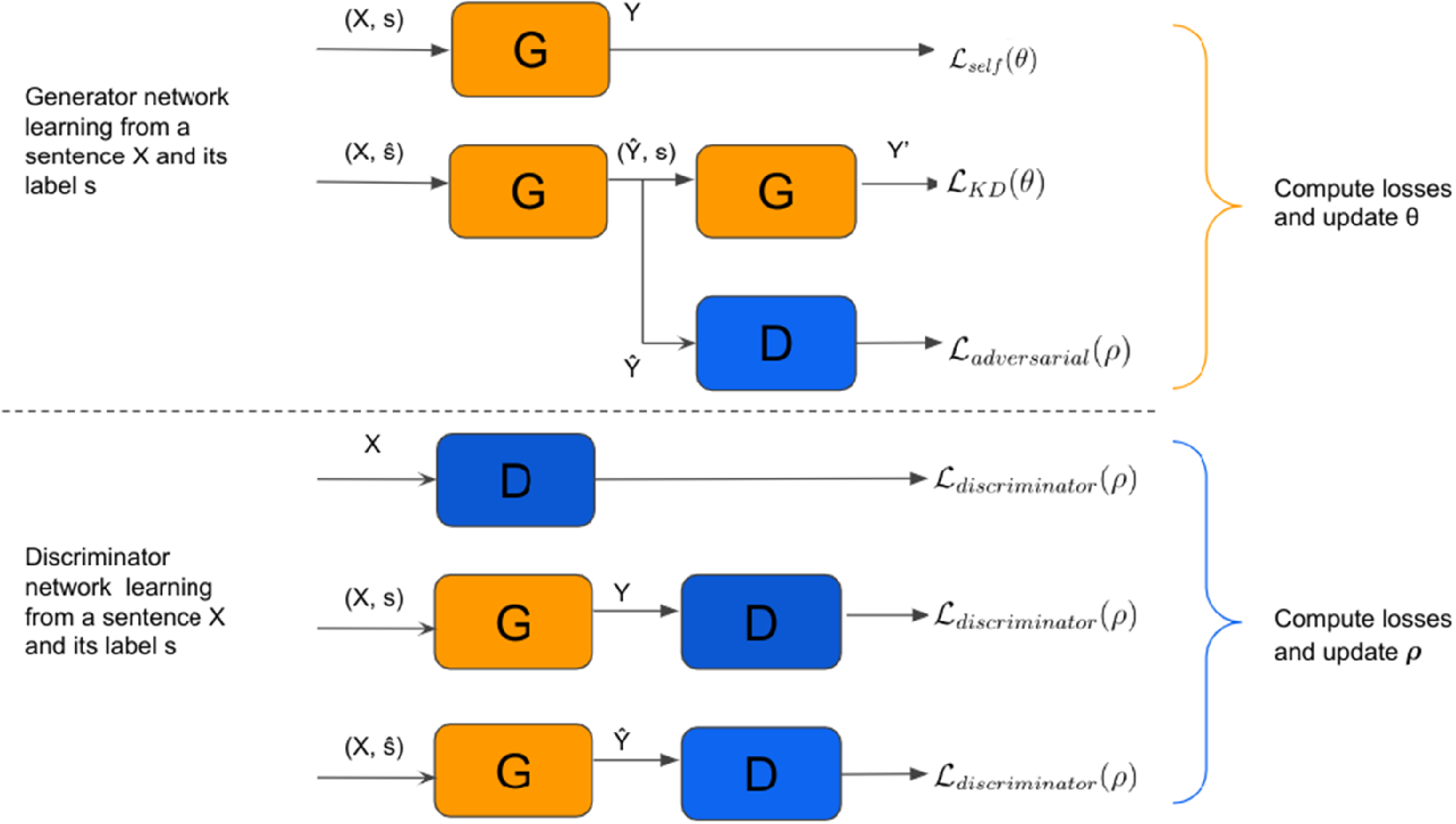

. The general training procedure consists of, repeatedly, performing

$s^{\text{para}}$

. The general training procedure consists of, repeatedly, performing

![]() $n_d$

discriminator training steps, minimizing

$n_d$

discriminator training steps, minimizing

![]() $\mathcal{L}_{\text{discriminator}}(\rho )$

, followed by

$\mathcal{L}_{\text{discriminator}}(\rho )$

, followed by

![]() $n_f$

generator network training steps, minimizing

$n_f$

generator network training steps, minimizing

![]() $a_1\mathcal{L}_{\text{self}}(\theta )+a_2\mathcal{L}_{\text{KD}}(\theta )+\mathcal{L}_{\text{adversarial}}(\rho )$

, until convergence. During the discriminator training steps, only the

$a_1\mathcal{L}_{\text{self}}(\theta )+a_2\mathcal{L}_{\text{KD}}(\theta )+\mathcal{L}_{\text{adversarial}}(\rho )$

, until convergence. During the discriminator training steps, only the

![]() $\rho$

parameters of the discriminator network are updated. Analogously, during the generator network training steps, only the parameters

$\rho$

parameters of the discriminator network are updated. Analogously, during the generator network training steps, only the parameters

![]() $\theta$

of the masked transformer are updated. Figure 2 illustrates the adversarial training adopted by MATTES.

$\theta$

of the masked transformer are updated. Figure 2 illustrates the adversarial training adopted by MATTES.

Figure 2. Adversarial training illustration.

![]() $G$

denotes the generator network, while

$G$

denotes the generator network, while

![]() $D$

denotes the discriminator network.

$D$

denotes the discriminator network.

When back-translating during training (Figure 1), MATTES do not generate the transformed sentence

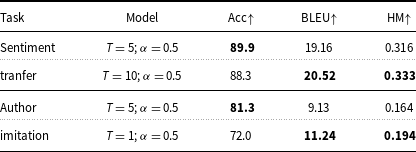

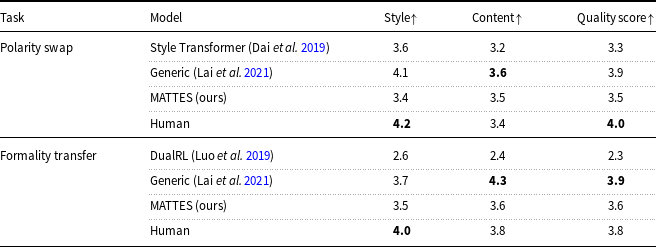

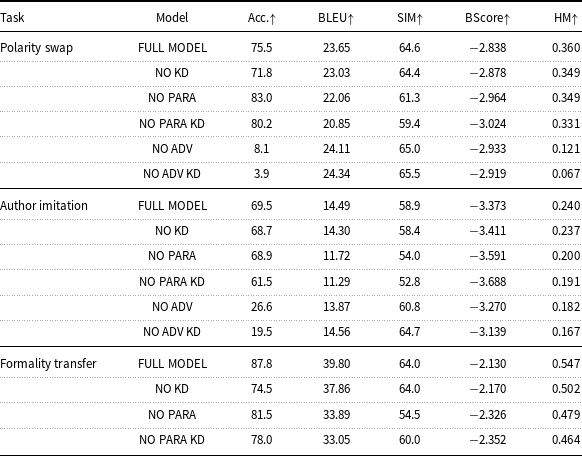

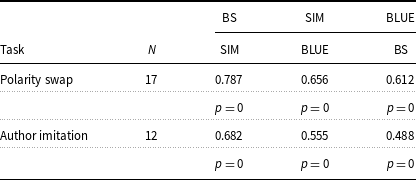

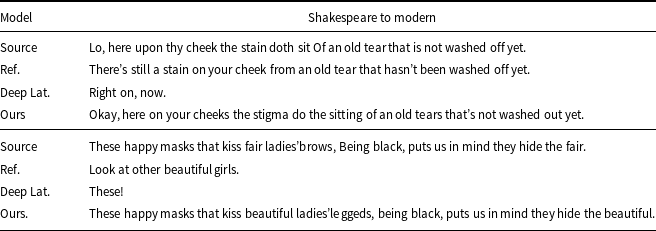

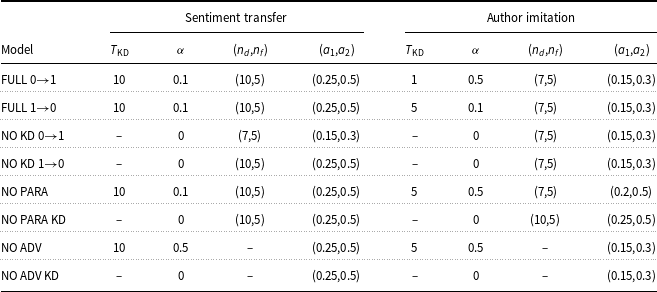

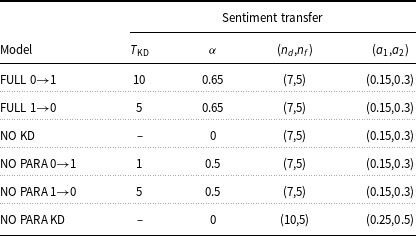

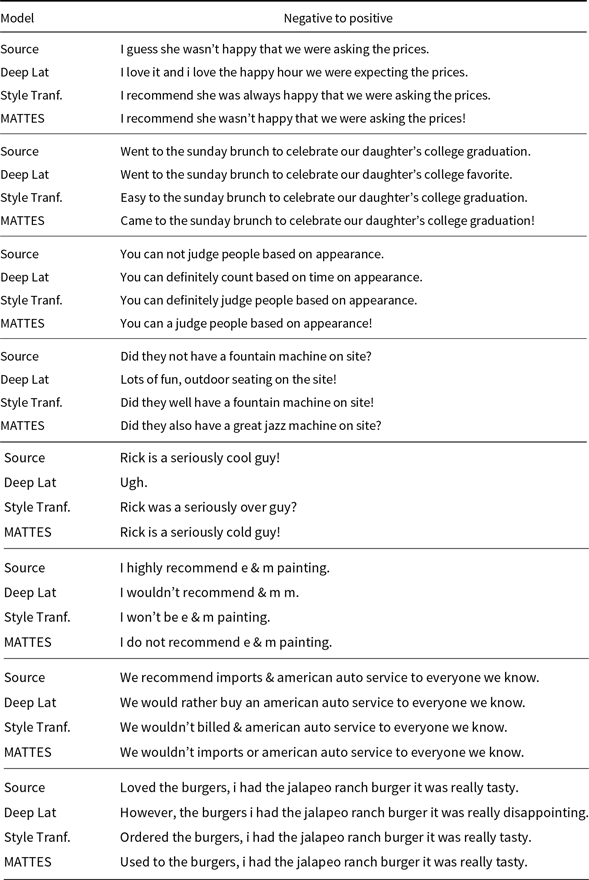

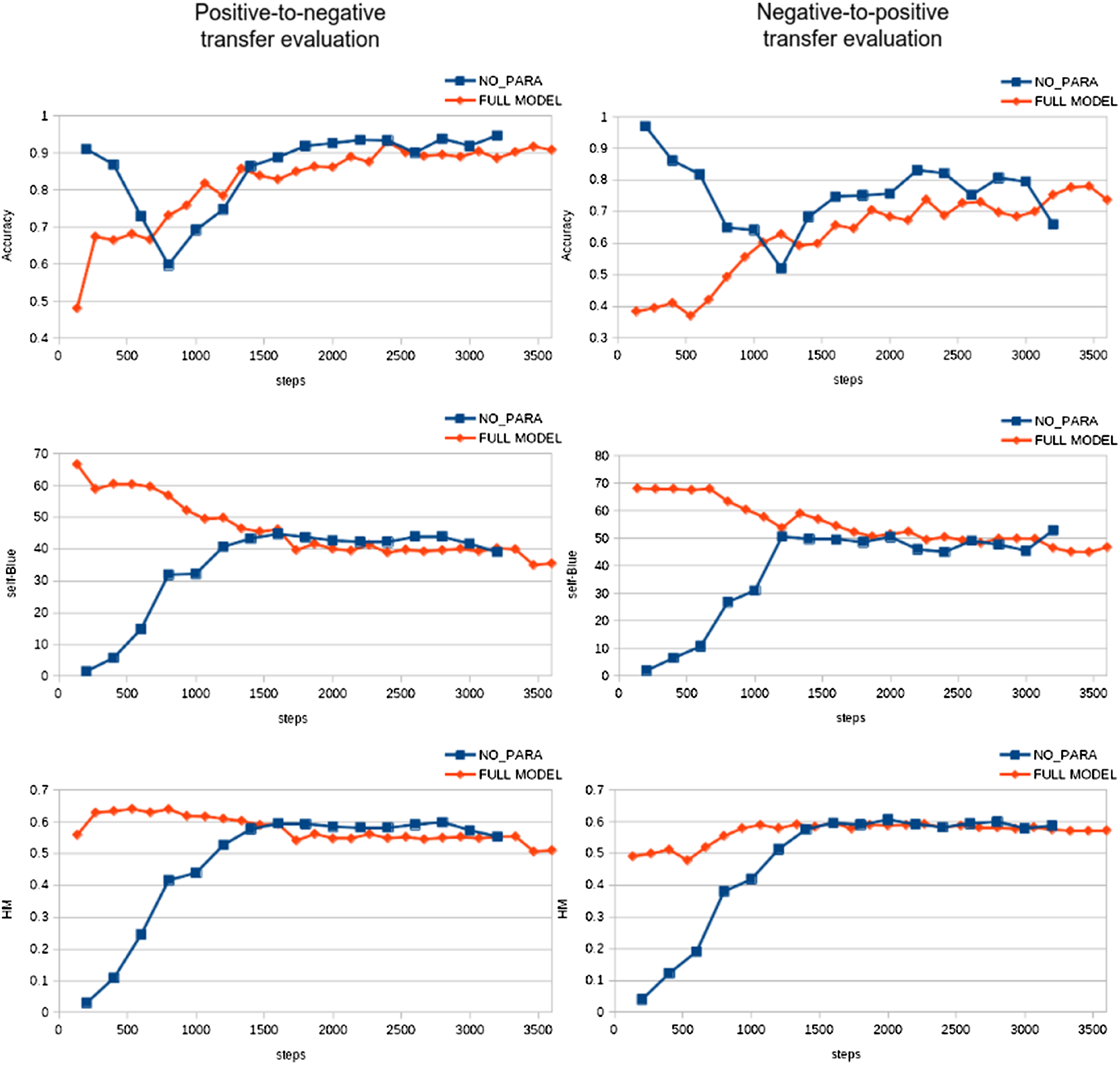

![]() $\hat{Y}$