1 Introduction

With over 607 thousand COVID-19 deaths as of November 2021, Brazil has suffered the pandemic’s second-largest death toll (288 per 100 thousand inhabitants). The country currently ranks third in the world in number of cases, which surpass 21.8 million (over 10,334 per 100 thousand) (Petterson et al., 2021). This massive public health crisis has come hand in hand with the so-called “infodemic”, defined by the World Health Organization (WHO) as the “overabundance of information and the rapid spread of misleading or fabricated news, images, and videos, [which], like the virus, is highly contagious, grows exponentially (…), and complicates COVID-19 pandemic response efforts” (World Health Organization, 2020).

According to the Regional Center for Studies on the Development of the Information Society (Centro Regional de Estudos para o Desenvolvimento da Sociedade da Informação, 2020), Brazil had an estimated 134 million self-reported internet users in 2019 (out of a total population of 211 million). Internet access has grown considerably in recent years: the share of users rose from 39% of the population aged 10 and over in 2009 to 74% in 2019. While geographical disparities remain, 2019 was the first year in which over half of the rural population (53%) had access to the internet (versus 77% in urban areas). The share of connected households reached 71% in 2019 (over 50 million households, versus 46.5 million in 2018).

Wider access to internet connections and smartphones has considerably changed communication in the country, especially through increased reliance on messaging applications. According to ICT Households 2019, these applications (notably WhatsApp, Skype, and Facebook Messenger) are the most common reason for going online (92%), far surpassing social media (76%), e-mail (58%), and e-commerce (39%) (Valente, 2020). The cross-platform centralized messaging and voice-over-IP service WhatsApp is particularly strong in Brazil, with over 120 million monthly active users (Haynes & Boadle, 2018). Owned by Meta Platforms, Inc. (formerly Facebook, Inc.), the application runs on mobile devices but is also accessible from desktop computers. It enables users to send text and voice messages, make voice and video calls, and share a variety of content, including images and videos. The application’s prevalence in Brazil can be explained by the high cost of SMS, which in 2015 was as much as 55 times higher than in the United States (Activate, 2015). Although it requires a mobile number, WhatsApp is typically included in popular zero-rating plans, which exempt particular apps from data consumption.

WhatsApp’s widespread adoption in Brazil has been accompanied by an expansion of uses beyond simple text messaging. In the words of Saboia (2016, para. 2), “in Brazil WhatsApp has become something much bigger than a chat app: a one-stop solution for everyone, from small businesses to government agencies, to manage everything, from transactions to relationships”. One such innovative use is community building. WhatsApp group chats can hold up to 256 participants and can be joined through an invitation, a link, or a QR code. These chats are increasingly common tools for gathering people around shared interests or ideologies. Their collaborative environment enables input from everyone and facilitates the proliferation of messages at unprecedented speeds.

A recent survey of 75,000 people in 38 markets showed that respondents from Brazil were highly likely to participate in large WhatsApp groups with people they do not know. According to the authors, this trend “reflects how messaging applications can be used to easily share information at scale, potentially encouraging the spread of misinformation” (Newman et al., 2019, p. 10). The same study further points out that WhatsApp has become a primary platform for discussing and sharing news not only in Brazil but also in Malaysia and South Africa.

While enabling the many benefits of fast and easy communication, messaging applications also constitute hotspots for the spread of misinformation. With over 55 billion messages sent by its users every day (WhatsApp, 2017), WhatsApp has been a central channel for the proliferation of coronavirus-related fake news around the world (Delcker et al., 2020; Romm, 2020). In Brazil, an analysis of nearly 60 thousand messages from publicly accessible, politically-oriented groups revealed that messages containing misinformation were shared more frequently, by a larger number of users, and in a greater number of groups than other textual messages (Resende et al., 2019). As demonstrated by Galhardi et al. (2020), WhatsApp has played a central role in the dissemination of misinformation about the COVID-19 pandemic, accounting for over 70% of the coronavirus-related fake news found by the authors.

Despite its central role in the proliferation of misinformation in emerging countries, WhatsApp remains largely unexplored by experimental research. Many questions about the influence of WhatsApp use on truth discernment remain unanswered, including whether stronger identification with a group chat’s ideology leads to greater belief in messages posted within that group. The present study aims to fill this gap by exploring the effect of political orientation, group identification, and need for belonging on belief in COVID-related misinformation. We were particularly curious about how identification with WhatsApp groups affects truth discernment when participants judge the accuracy of information that originates from those groups. Moreover, we wondered whether need for belonging would play a role in this effect. In other words, we asked whether individuals with a greater need for belonging are more likely to believe messages that come from the groups with which they identify most.

We build on Baumeister and Leary’s (1995, p. 497) belongingness hypothesis, according to which “human beings have a pervasive drive to form and maintain (…) lasting, positive, and significant interpersonal relationships”. In light of recent studies on the influence of political orientation on belief in fake news (e.g., Van Bavel & Pereira, 2018), we argue that the need for belonging and identity-protection goals affect cognitive processes and undermine one’s ability to discern misinformation. They do so by triggering motivated reasoning, or “the tendency of people to conform assessments of information to some goal or end extrinsic to accuracy” (Kahan, 2013, p. 408). We argue that WhatsApp groups are a privileged environment for building and sharing identity goals, especially for people with a high need for belonging. They therefore constitute a prime context for studying how misinformation propagates and should no longer be neglected.

We chose to work with politically-oriented WhatsApp messages in particular because of the politicization of the pandemic in Brazil. Overall, the lack of consensus on how to respond to the pandemic has intensified ideological divides, accelerating polarization between supporters of the current right-wing President, Jair Bolsonaro, and his opponents. A former army captain, Bolsonaro served for almost three decades as a conservative member of the Federal Chamber of Deputies (the equivalent of the U.S. House of Representatives). His rise to the presidency in 2018 was a major political shift in the country, where the left-wing Workers’ Party (Partido dos Trabalhadores, or PT, in Portuguese) had won the previous four presidential elections. The 2018 race was marked by controversy over the ineligibility of former President Luiz Inácio Lula da Silva (known as Lula), the most prominent figure in the Workers’ Party. Lula had been jailed for corruption and money laundering, and his conviction was annulled by the Supreme Court in 2021.

It is worth noting that, since the end of the military dictatorship (1964–1985), Brazil has consolidated a multiparty presidential system. The diversity of political organizations and ever-changing coalitions have traditionally made it difficult to understand Brazilian politics through the dichotomy of left versus right, or liberals versus conservatives. However, the rise of Bolsonaro has been accompanied by growing polarization and increasing resonance with the political debates found in the United States (Layton et al., 2021). Throughout the coronavirus pandemic, the right wing in Brazil has been closely associated with neoliberalism, anti-corruption and anti-communist discourse, pro-gun rights, support for Pentecostal churches, and, within extreme circles, scientific denialism. The left wing, on the other hand, has been associated with diverse social movements and causes, such as social justice, feminism, LGBT+ rights, land reform, and workers’ rights, among others.

Over a year into his term, Bolsonaro joined various other world leaders who garnered attention for their unorthodox responses to COVID-19. In the early days of the pandemic, the President largely dismissed the virus’s impact, publicly comparing it to a “measly cold”. As the crisis evolved, he advocated the use of hydroxychloroquine (Ricard & Medeiros, 2020), among other demonstrations of scientific denialism and reliance on false information. A growing body of evidence shows that political leaders and personalities can promote belief in conspiracy theories and misinformation among like-minded individuals (Swire et al., 2017; Uscinski et al., 2020). We therefore expected right-leaning participants to be particularly susceptible to coronavirus-related falsehoods. However, as stated above, we predicted that this effect would be moderated not only by identification with the WhatsApp group in which the information was presented but also by the individual’s need for belonging.

In addition to exploring the still uncharted dynamics of WhatsApp group messaging and its interplay with political identity, we aimed to contribute to ongoing debates in the misinformation literature by testing whether certain findings replicate in the Brazilian context. For instance, we asked whether the illusory truth effect would lead to greater perceived accuracy of familiar messages than of unfamiliar ones. Experimental studies that manipulate familiarity have shown that repetition increases belief (e.g., Pennycook et al., 2018; De keersmaecker et al., 2020; Hassan & Barber, 2021). However, it remains unclear whether this effect holds in observational investigations, given the lack of control over the context of previous exposure to information (which could include, for instance, fact-checking sources).

We were also curious whether analytical and open-minded thinking would predict greater truth discernment, as found by Pennycook and Rand (2018) and Bronstein et al. (2018). The Cognitive Reflection Test (CRT; Frederick, 2005) is designed to cue intuitive but incorrect responses and aims to measure an individual’s disposition to engage in reflective, analytical thinking. Research has linked higher CRT scores with decreased reliance on biases and heuristics (Toplak et al., 2011), greater skepticism about religious, paranormal, and conspiratorial concepts (Pennycook et al., 2015), and an enhanced ability to discern true from fake news (Pennycook & Rand, 2018).

In a complementary vein, the Actively Open-Minded Thinking about Evidence Scale (AOT-E; Pennycook et al., 2020a) assesses whether people are open to changing their beliefs in light of evidence. As proposed by Baron (1985, 1993), actively open-minded thinking (AOT) favors the recognition of false claims, as it encourages the consideration of evidence and opinions that contradict one’s initial conclusion or preferred belief. Research has shown negative correlations between AOT and paranormal beliefs (Svedholm & Lindeman, 2013), belief in conspiracy theories (Swami et al., 2014), and superstitious beliefs (Svedholm & Lindeman, 2017, using the Fact Resistance sub-scale of the original scale). Given our focus on the infodemic, AOT’s function as a standard for evaluating the trustworthiness of various sources of information is particularly relevant (Baron, 2019).

Although we expected both analytical and open-minded thinking to improve truth discernment, we also asked how this effect would interact with each message’s (in)consistency with participants’ political identity. Given the politicization and polarization of the pandemic in Brazil, we expected right-leaning participants to be more likely to believe falsehoods because of their consistency with the discourse adopted by the country’s conservative leadership.

With the context of WhatsApp groups in mind, we were curious about the interplay between two potential phenomena. On the one hand, we expected decreased discernment among right-leaning participants. On the other hand, we expected increased belief in information posted in groups with which participants identify more strongly, particularly among individuals with a higher need for belonging.

2 Method

Sample size determination, data manipulation, exclusions, and all measures in the study are reported below. All data and materials are available at the Open Science Framework [https://osf.io/cjs2m/].

2.1 Participants

This study was run between June 15 and July 7, 2020. We recruited volunteer participants through paid advertising on Facebook, which is a useful tool in countries where services such as MTurk or Lucid are not widely available (Samuels & Zucco, 2013). In total, 1007 participants from all regions of Brazil completed the study. Of these, 148 were excluded for failing the attention check item. Our final sample consisted of 859 participants (52.74% female, 46.10% male, and 1.16% other), with a mean age of 44 years (SD = 14; age range = 18–78).

In terms of education, 22.70% had completed or were pursuing graduate studies, 45.05% had completed or were pursuing undergraduate studies, and 32.25% had completed high school or less. Politically, 21.89% identified as left or extremely left, 60.30% as center, and 17.81% as right or extremely right.

Our sample size determination followed the same criteria as Pennycook et al. (2020b): (a) it is a satisfactory sample size for this experimental design, (b) it was within our financial means given our recruiting method, and (c) it is comparable to what has been used in past research.

2.2 Materials and Measures

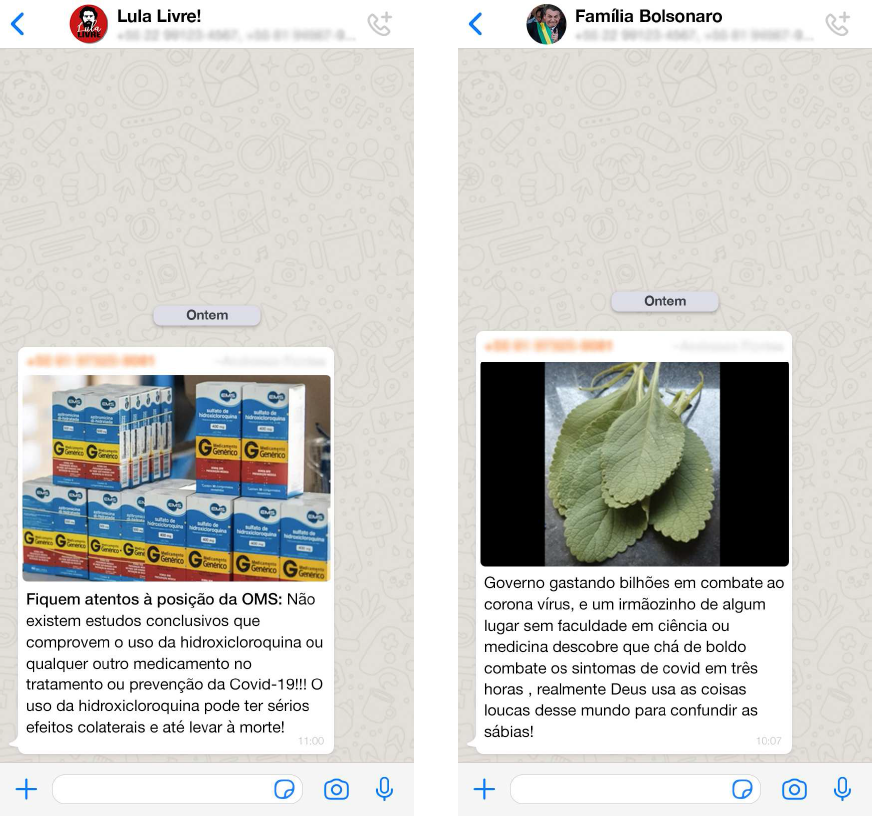

In a within-subject design, participants reported the perceived accuracy of 20 messages with COVID-19-related information (10 true and 10 false), presented in WhatsApp message format (see Figure 1). After each message, participants answered the question “How true is the above message?” using a slider scale ranging from 0 (completely false) to 100 (completely true). The content of each message was sourced online, either from fact-checking websites or from trustworthy sources such as the U.S. Centers for Disease Control and Prevention.

Figure 1: Examples of experimental stimuli: true (left) and false (right) WhatsApp messages.

True information pertained to the following topics: masks; the second wave of infections; coronavirus lifespan on different surfaces; immunity after contamination; coronavirus in dogs; asymptomatic transmission; hydroxychloroquine; loss of smell and taste; COVID-19 in children; and hydration. False information encompassed the following topics: virus vs. bacteria; boldo tea; contaminated masks from China; coronavirus as man-made; social isolation; coronavirus pH levels; chloroquine; false COVID-19 reports; Brazilian military; and 5G networks. All the experimental stimuli and their translation to English may be found in our supplemental material [https://osf.io/cjs2m/].

In addition to rating perceived accuracy, participants reported their familiarity with each message’s content by answering the question “Did you have previous knowledge of this message’s content?”. We deliberately asked about the content rather than the message itself, because information is often shared through WhatsApp and social media in varying formats but with similar content. Possible answers were “yes” (coded as 1), “no”, and “not sure” (both coded as 0).

We created visual stimuli (screenshots) that mimicked the format of WhatsApp’s mobile application. Each image contained the title and image of the group chat at the top, the message area with WhatsApp’s default wallpaper in the center, and the text, image, and voice input bar at the bottom. Each message was randomly assigned to appear within the visual context of one of five fictitious WhatsApp group chats. By the end of the task, each participant had seen 2 true and 2 false messages in each group.

Each group’s name and image indicated its political orientation (or lack thereof). For the titles, we selected political slogans and popular sayings that expressed either discontent with the Workers’ Party and support for Bolsonaro (right-wing groups) or discontent with Bolsonaro and support for Lula (left-wing groups). The detailed descriptions of the groups were:

- One neutral group: “Amigos” (in English, “Friends”);

- Two right-wing groups: “Família Bolsonaro” and “PT Nunca Mais” (in English, “Bolsonaro Family” and “Workers’ Party Never Again”);

- Two left-wing groups: “Lula Livre” and “Não é meu presidente” (in English, “Free Lula” and “Not my President”).
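The random assignment of messages to group chats described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the Qualtrics configuration the study actually used. The message IDs (`T1`…`T10`, `F1`…`F10`) and the function `assign_messages` are hypothetical. The sketch assumes only the constraint reported in the text: 2 true and 2 false messages per group, 20 trials in total, with presentation order shuffled.

```python
import random

# Group titles used in the study; the list order here is arbitrary.
GROUPS = ["Amigos", "Família Bolsonaro", "PT Nunca Mais",
          "Lula Livre", "Não é meu presidente"]


def assign_messages(true_ids, false_ids, groups=GROUPS, rng=None):
    """Hypothetical sketch: pair each message with a group chat so that every
    group receives the same number of true and false messages, then shuffle
    the presentation order. Returns (message_id, is_true, group) tuples."""
    rng = rng or random.Random()
    if len(true_ids) % len(groups) or len(false_ids) % len(groups):
        raise ValueError("messages must split evenly across groups")
    k_true = len(true_ids) // len(groups)    # 10 // 5 = 2 in this design
    k_false = len(false_ids) // len(groups)
    true_pool = rng.sample(true_ids, len(true_ids))     # shuffled copies
    false_pool = rng.sample(false_ids, len(false_ids))
    trials = []
    for i, group in enumerate(groups):
        trials += [(m, True, group) for m in true_pool[i * k_true:(i + 1) * k_true]]
        trials += [(m, False, group) for m in false_pool[i * k_false:(i + 1) * k_false]]
    rng.shuffle(trials)  # randomize the order in which the screenshots appear
    return trials


trials = assign_messages([f"T{i}" for i in range(1, 11)],
                         [f"F{i}" for i in range(1, 11)],
                         rng=random.Random(2020))
```

The fixed 2 + 2 split per group comes from balancing the assignment within each participant. Drawing a group independently for every message would not give that guarantee.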

Participants indicated whether they would be willing to participate in each WhatsApp group (yes or no). They also reported their identification with each chat by answering the question “how much do you identify with each of the following groups?” on a slider scale from 0 (no identification) to 100 (complete identification). Given the political nature of each group’s title and image, we assumed that identification reflects support for the particular orientation and ideology portrayed.

Prior to the experimental task, participants reported the frequency with which they consumed COVID-19 news (multiple-choice alternatives ranged from less than once a week to multiple times a day). They also specified which sources of information they used (the list included governmental websites, international organizations’ websites, TV, radio, YouTube, digital and print news outlets, social media, messaging applications, and “other”). Participants were also asked, “How much do you trust the following sources as providers of useful and accurate information about COVID-19?”. Participants’ answers varied from 0 (not at all) to 100 (completely) on a slider scale and pertained to 1) governmental authorities; 2) newspaper, magazines, TV, and radio (from now on referred to as “traditional media”); 3) doctors and other medical professionals; 4) social media (Facebook, WhatsApp groups, etc.); 5) WHO; 6) family and friends.

Participants reported their daily WhatsApp use (answers ranged from “don’t use it every day” to “more than 2 hours a day”) and participation in group chats related to 1) family and friends; 2) politics; 3) work and study; 4) news; and 5) personal interests (sports, religion, etc.) (they were instructed to select all that applied).

Participants also answered three questions regarding their political views. Firstly, they indicated their position on the political spectrum (scale of 1, extremely to the left, to 7, extremely to the right). Secondly, they answered who they voted for in the 2018 runoff election (options included the current President, Bolsonaro; left-wing candidate, Haddad; blank or null; and rather not say). Finally, participants reported their level of satisfaction with the current administration using a scale of 1 (extremely dissatisfied) to 5 (extremely satisfied). Participants also reported their age, education level, gender, and state of residence.

They further completed a version of the Cognitive Reflection Test (CRT-2), the Actively Open-Minded Thinking about Evidence Scale (AOT-E), and the Need to Belong Scale (NTBS), as described below.

CRT-2

Participants answered a 4-item variation of the Cognitive Reflection Test (CRT-2; Thomson & Oppenheimer, 2016). Like the original test, the CRT-2 is designed to prompt intuitive but incorrect responses. However, it is less prone to numeracy confounds and less widely known among respondents in Brazil. It includes questions such as “If you are running a race and you pass the person in second place, what place are you in?”. The test had low reliability (α = .48), in line with the original study (α = .51).

Actively Open-Minded Thinking About Evidence Scale (AOT-E)

The AOT-E is a variation of the Actively Open-Minded Thinking Scale (Stanovich & West, 2007). It contains 8 items (e.g., “A person should always consider new possibilities”) and measures whether people are open to changing their beliefs in light of evidence (Pennycook et al., 2020a). The scale showed strong reliability in the original study (α = .87), in which responses ranged from 1 (strongly disagree) to 6 (strongly agree). For the present study, we translated the items into Brazilian Portuguese and used a 5-point scale, which allowed participants to take a neutral stance (3, “neither agree nor disagree”). Our version showed acceptable reliability (α = .72).

Need to Belong Scale (NTBS)

Developed by Leary et al. (2013), this scale measures the dispositional dimension of the need to belong, such as seeking social connections and valuing acceptance by others. We used a Brazilian Portuguese translation adapted and validated by Gastal and Pilati (2016). It contained the 10 original items and showed good reliability (original study: α = .78; present study: α = .77). Participants answered on a scale ranging from 1 (strongly disagree) to 5 (strongly agree).
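The α values reported for the CRT-2, AOT-E, and NTBS are presumably Cronbach’s α. The sketch below computes that statistic from a participants × items matrix using the standard formula α = k/(k − 1) · (1 − Σσ²ᵢ / σ²ₜ), where σ²ᵢ are the item variances and σ²ₜ is the variance of the total score. It assumes complete responses with no missing items and is not the authors’ R code. The function name is illustrative.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of rows, one row of k item scores per
    participant (complete data assumed; hypothetical helper)."""
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    items = list(zip(*responses))                      # one tuple per item
    item_variance_sum = sum(variance(item) for item in items)
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


# Two perfectly parallel items give alpha = 1; two uncorrelated items give alpha = 0.
parallel = cronbach_alpha([[1, 1], [2, 2], [3, 3]])
uncorrelated = cronbach_alpha([[1, 1], [2, 1], [1, 2], [2, 2]])
```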

We included a screener question in the AOT-E block. The item instructed participants to select “Totally Disagree” to show that they were paying attention to the survey. Responses were coded as a dichotomous variable, “attention” (1 = passed, 0 = failed).

2.3 Procedures

Participants answered an online survey developed in Qualtrics [https://www.qualtrics.com/]. The study had nine components, presented in the following order: 1) research presentation and consent; 2) CRT-2; 3) sources of information usage and trust; 4) WhatsApp usage and willingness to participate in group chats; 5) experimental task; 6) NTBS; 7) AOT-E; 8) political orientation, satisfaction with the current President, and 2018 vote; and 9) socio-demographic questions. Within each component, the order of items was randomized.

As previously described, the experimental portion of the study had a within-subject, repeated measures design. Participants used 0–100 slider scales to report the perceived accuracy of 20 messages (10 true and 10 false) that were randomly presented within the visual context of five WhatsApp group chats (in the format of screenshots). Our manipulation thus included the accuracy of messages (true vs. false) and the context in which they were presented (five group chats of varying political orientation). Participants’ responses to the visual stimuli served as the basis for the calculation of our dependent variables.

We followed the steps of Pennycook and Rand (2018) and Pennycook et al. (2020b) to compute discernment. This variable is the difference between the total perceived accuracy score for true messages and the total perceived accuracy score for false messages. The difference was rescaled to range from –1 to 1, with higher scores indicating that true messages were judged as true and false messages as false. This variable showed adequate reliability (α = .79).
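A minimal sketch of this score follows. It assumes the total-score difference is rescaled by dividing by its maximum possible magnitude (100 points × 10 messages). The exact rescaling step is not reported, so this normalization is our assumption. Under it, the score equals the mean accuracy rating for true messages minus that for false messages, both on a 0–1 scale. The function name is hypothetical.

```python
def discernment(true_ratings, false_ratings, scale_max=100):
    """Truth discernment in [-1, 1] from 0-100 slider ratings (hypothetical
    helper). Assumes equal numbers of true and false messages."""
    n = len(true_ratings)
    if n == 0 or n != len(false_ratings):
        raise ValueError("need the same non-zero number of true and false ratings")
    max_gap = scale_max * n   # e.g. 100 * 10 = 1000 points
    return (sum(true_ratings) - sum(false_ratings)) / max_gap


perfect = discernment([100] * 10, [0] * 10)    # 1.0: every message judged as it was
inverted = discernment([0] * 10, [100] * 10)   # -1.0: every judgment inverted
typical = discernment([69] * 10, [20] * 10)    # 0.49
```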

We further created two more specific discernment variables, based on each message’s (in)consistency with the WhatsApp group in which it was presented. Given the previously discussed politicization of the pandemic, we considered false information to be consistent with right-wing WhatsApp groups and inconsistent with left-wing ones. Likewise, we considered true information to be inconsistent with right-wing groups and consistent with left-wing ones. Information presented within the neutral group, Amigos (i.e., Friends), was not considered. These variables were also rescaled to range from –1 to 1, with higher scores indicating that true messages were judged as true and false messages as false.
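The consistency-based split can be sketched in the same way. Each trial here is a hypothetical `(group, is_true, rating)` tuple. We assume both scores use the same rescaling as the overall discernment score, which is not stated in the text. In this design, each score pairs 4 true messages with 4 false ones.

```python
LEFT_GROUPS = {"Lula Livre", "Não é meu presidente"}
RIGHT_GROUPS = {"Família Bolsonaro", "PT Nunca Mais"}


def _rescaled_gap(true_ratings, false_ratings, scale_max=100):
    """(sum of true ratings - sum of false ratings) / maximum possible gap."""
    if not true_ratings or len(true_ratings) != len(false_ratings):
        raise ValueError("need matched, non-empty sets of true and false ratings")
    return (sum(true_ratings) - sum(false_ratings)) / (scale_max * len(true_ratings))


def consistency_discernment(trials):
    """Return (consistent, inconsistent) discernment for one participant.
    Consistent: true messages in left-wing groups vs. false messages in
    right-wing groups. Inconsistent: true in right-wing vs. false in left-wing.
    Trials from the neutral 'Amigos' group are ignored."""
    def ratings(is_true, groups):
        return [r for g, t, r in trials if t == is_true and g in groups]

    consistent = _rescaled_gap(ratings(True, LEFT_GROUPS), ratings(False, RIGHT_GROUPS))
    inconsistent = _rescaled_gap(ratings(True, RIGHT_GROUPS), ratings(False, LEFT_GROUPS))
    return consistent, inconsistent


example = (
    [(g, True, 80) for g in LEFT_GROUPS for _ in range(2)]
    + [(g, False, 40) for g in LEFT_GROUPS for _ in range(2)]
    + [(g, True, 60) for g in RIGHT_GROUPS for _ in range(2)]
    + [(g, False, 10) for g in RIGHT_GROUPS for _ in range(2)]
    + [("Amigos", True, 100)] * 2 + [("Amigos", False, 0)] * 2
)
consistent, inconsistent = consistency_discernment(example)  # 0.7 and 0.2
```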

Our last dependent variable was perceived accuracy, computed separately for true and false content. It is the mean of each participant’s responses on the 0–100 accuracy scale, rescaled to range from 0 to 1.

For our independent variables, we applied additional transformations to make the results easier to interpret. The political scales (orientation and satisfaction with the current President) were centered on 0 and rescaled to range from –1 to 1. A value of –1 represents “extremely to the left” or “extremely dissatisfied”, while 1 represents “extremely to the right” or “extremely satisfied”.
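This rescaling amounts to a linear map that sends each scale’s midpoint to 0 and its endpoints to –1 and 1. The minimal sketch below assumes the 1–7 orientation scale and the 1–5 satisfaction scale described in the Materials and Measures section. The function name is illustrative.

```python
def to_unit_range(score, low, high):
    """Map a score on [low, high] linearly onto [-1, 1], with the scale
    midpoint mapped to 0 (hypothetical helper)."""
    midpoint = (low + high) / 2
    half_width = (high - low) / 2
    return (score - midpoint) / half_width


# 1 = extremely to the left ... 7 = extremely to the right
orientation = [to_unit_range(x, 1, 7) for x in range(1, 8)]
# 1 = extremely dissatisfied ... 5 = extremely satisfied
satisfaction = [to_unit_range(x, 1, 5) for x in range(1, 6)]
# satisfaction == [-1.0, -0.5, 0.0, 0.5, 1.0]
```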

Finally, we created three familiarity variables: a general one, one for true content, and one for false content. Since all participants were exposed to the same messages, we counted how many times each participant reported previous knowledge of the information. For the true and false scores, the number of familiar (true or false) messages was divided by 10. For the general score, the number of familiar messages (true and false combined) was divided by 20. All familiarity variables therefore range from 0 to 1.
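A minimal sketch of these three scores for one participant follows. It assumes each answer is stored alongside the veracity of its message; the data layout and function name are hypothetical. Dividing by the number of true, false, and all messages reproduces the divisors of 10, 10, and 20 used in this design.

```python
def familiarity_scores(answers):
    """answers: (is_true, answer) pairs, one per message, where answer is
    'yes', 'no', or 'not sure'. Only 'yes' counts as familiar (coded 1);
    the data layout is a hypothetical assumption."""
    familiar = [(is_true, answer == "yes") for is_true, answer in answers]
    n_true = sum(1 for is_true, _ in familiar if is_true)
    n_false = len(familiar) - n_true
    fam_true = sum(1 for is_true, known in familiar if is_true and known)
    fam_false = sum(1 for is_true, known in familiar if not is_true and known)
    return {
        "general": (fam_true + fam_false) / len(familiar),  # divided by 20 here
        "true": fam_true / n_true,                          # divided by 10
        "false": fam_false / n_false,                       # divided by 10
    }


example = ([(True, "yes")] * 3 + [(True, "no")] * 5 + [(True, "not sure")] * 2
           + [(False, "yes")] * 1 + [(False, "not sure")] * 9)
scores = familiarity_scores(example)  # general 0.2, true 0.3, false 0.1
```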

Our analyses were conducted in R, version 4.0.5, and consisted of robust paired t-tests and correlations. Assumption tests were conducted for each procedure and can be found in our supplemental material.

3 Results

We began by investigating variables that were central to our goal of understanding the dynamics of misinformation in WhatsApp groups (i.e., daily WhatsApp use, participation in group chats, willingness to participate in, and identification with the fictitious WhatsApp group chats).

Participants reported remarkably high daily use of WhatsApp, reflecting the nationwide trend discussed in the introduction. Of the sample, 97.2% used the application at least 15 minutes a day, and 36.1% did so for more than 2 hours a day. Participation in group chats was also high, reaching 89% of our sample. While 80% reported being part of groups related to family and friends, only 10% participated in chats related to politics.

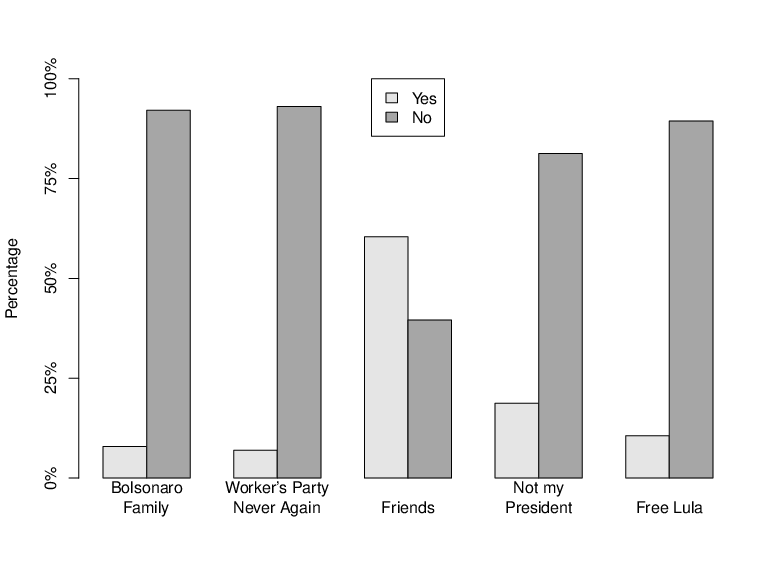

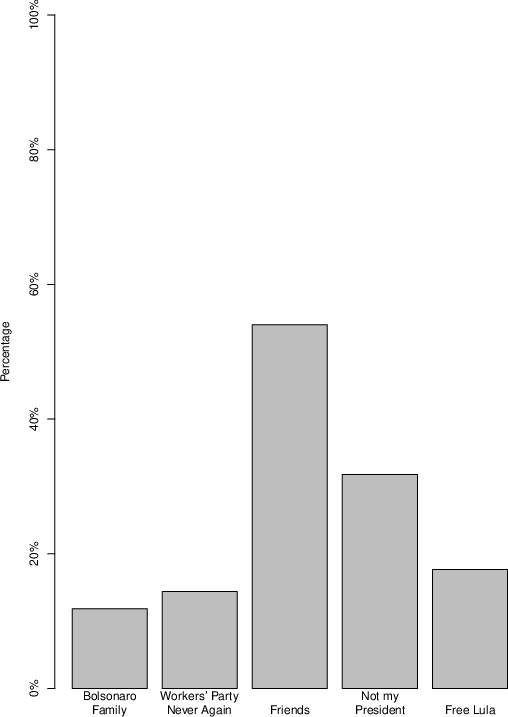

Figure 2 shows that most participants said they would not participate in the politically-oriented groups, regardless of the groups’ ideological orientation. Conversely, the majority (60.4%) reported that they would participate in the neutral group, “Friends”. Figure 3 presents the mean reported identification with each group, with standard deviations. Reported identification with the political groups was consistently low, and their error bars include zero. The neutral group was the exception (mean = 54, SD = 39.86). Consequently, we concluded that the participation and identification data were not suitable for further analyses.

Figure 2: Willingness to participate in WhatsApp groups.

Figure 3: Identification with WhatsApp groups (mean).

Despite participants’ lack of identification with our fictitious WhatsApp groups, their political stance could still have influenced discernment levels. Specifically, we asked whether the consistency of each group’s political orientation with real-life discourse in the country would affect discernment. As previously mentioned, true information was deemed consistent with left-wing groups and inconsistent with right-wing groups, and the reverse held for false information. Our analyses revealed no difference between these conditions. Mean discernment (.20) was the same for messages presented in consistent (SD = .13) and inconsistent groups (SD = .12), and the paired t-test confirmed the absence of a difference (t(516) = .096, p = .92, d = .002).

We then examined the perceived accuracy of true and false messages. True information was perceived as more accurate (mean = .69, SD = .18) than false content (mean = .20, SD = .20). This difference (.55, 95% CI [.53, .57]) was significant according to the paired t-test (t(516) = 51.96, p < .001, d = .94). Given the large variability in this measure, we used a robust, winsorized t-test (Mair & Wilcox, 2020). Moreover, since this variable ranges from 0 to 1, false content was rejected more decisively than true content was accepted. The mean for false messages (.20) lies .20 from 0, whereas the mean for true messages (.69) lies .31 from 1.
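The sketch below illustrates what a robust paired comparison of this kind involves. It implements Yuen’s paired-samples t-test on trimmed means with winsorized variances, following the formulas behind `yuend()` in the WRS2 package described by Mair and Wilcox (2020). The text does not report which WRS2 function or trimming level was used. We assume `yuend()`’s default of 20% trimming. Under that assumption, n = 859 pairs gives g = ⌊0.2 × 859⌋ = 171 observations trimmed per tail, h = 859 − 2 × 171 = 517, and df = h − 1 = 516, which is consistent with the degrees of freedom reported above. The sketch uses only the standard library and returns t, df, and the trimmed-mean difference. A p-value follows from a t distribution with df degrees of freedom (for example, via `scipy.stats.t.sf`).

```python
import math


def _winsorize(values, g):
    """Pull the g smallest values up to the (g+1)-th smallest and the g largest
    values down to the (g+1)-th largest."""
    ordered = sorted(values)
    lower, upper = ordered[g], ordered[len(ordered) - g - 1]
    return [min(max(v, lower), upper) for v in values]


def _trimmed_mean(values, g):
    """Mean after dropping the g smallest and g largest values."""
    kept = sorted(values)[g:len(values) - g]
    return sum(kept) / len(kept)


def yuen_paired(x, y, trim=0.2):
    """Yuen's paired-samples t-test (sketch). Returns (t, df, trimmed-mean difference)."""
    n = len(x)
    if n != len(y):
        raise ValueError("x and y must be paired (same length)")
    g = math.floor(trim * n)        # observations trimmed from each tail
    h = n - 2 * g                   # effective sample size after trimming
    wx, wy = _winsorize(x, g), _winsorize(y, g)
    mean_wx, mean_wy = sum(wx) / n, sum(wy) / n
    # Sums of squares and cross-products of the winsorized scores.
    ss_x = sum((a - mean_wx) ** 2 for a in wx)
    ss_y = sum((b - mean_wy) ** 2 for b in wy)
    sp_xy = sum((a - mean_wx) * (b - mean_wy) for a, b in zip(wx, wy))
    se = math.sqrt((ss_x + ss_y - 2 * sp_xy) / (h * (h - 1)))
    diff = _trimmed_mean(x, g) - _trimmed_mean(y, g)
    return diff / se, h - 1, diff
```

With `trim=0` nothing is trimmed or winsorized, and the statistic reduces to the ordinary paired t-test on the differences.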

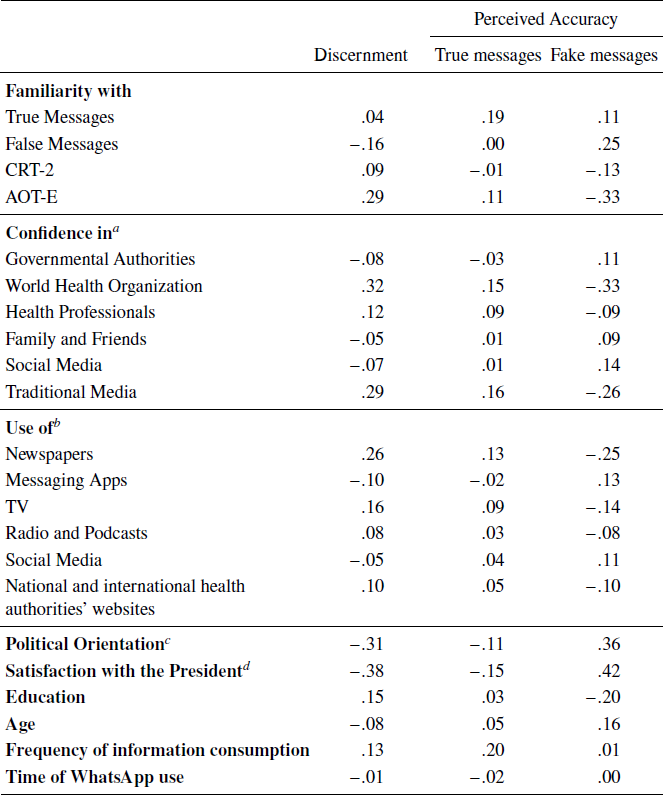

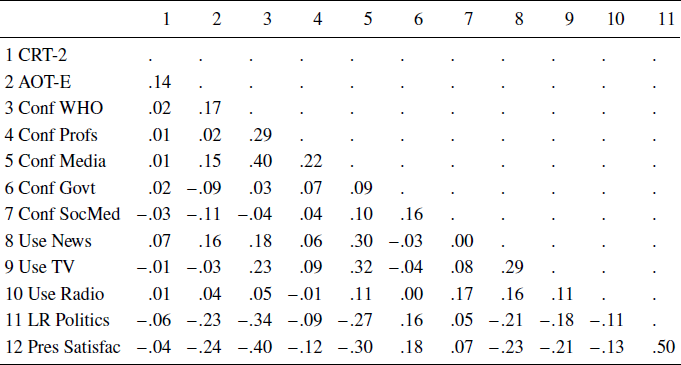

Further exploration of our dependent variables revealed that they were not normally distributed, which led us to examine correlations using Kendall’s τ coefficient. Specifically, we examined correlations between discernment and various measures. These included demographic and political characteristics, individual differences in analytical and open-minded thinking, need for belonging, information-seeking behavior, confidence in different sources of COVID-19 information, WhatsApp use, and familiarity with true and false information.

As reported in Table 1, the following were positively correlated with discernment: CRT-2 scores (albeit with a small coefficient), AOT-E scores, confidence in the WHO and in healthcare professionals, confidence in and use of traditional media, education, and frequency of COVID-19 news consumption. The following were negatively correlated with discernment, though some coefficients were small: familiarity with false messages, confidence in governmental authorities and social media, use of messaging applications, political orientation, satisfaction with the current President, and age.
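Kendall’s τ compares the ordering of every pair of observations rather than their raw values. This makes it suitable for non-normal, ordinal data such as Likert and slider scores. The text does not state which variant was computed. The sketch below assumes τ_b, which corrects for the ties that are common with such responses and is the default variant of SciPy’s `scipy.stats.kendalltau`. It is an O(n²) illustration, not a replacement for library routines, and it omits the p-value.

```python
import math
from itertools import combinations


def kendall_tau_b(x, y):
    """Kendall's tau-b between two equal-length sequences (O(n^2) sketch)."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    concordant = discordant = 0
    untied_x = untied_y = 0  # pairs that are not tied on x (and on y)
    for (x1, y1), (x2, y2) in combinations(zip(x, y), 2):
        dx, dy = x1 - x2, y1 - y2
        untied_x += dx != 0
        untied_y += dy != 0
        if dx * dy > 0:
            concordant += 1
        elif dx * dy < 0:
            discordant += 1
    denominator = math.sqrt(untied_x * untied_y)
    if denominator == 0:
        return float("nan")  # undefined when either variable is constant
    return (concordant - discordant) / denominator


same_order = kendall_tau_b([1, 2, 3, 4], [10, 20, 30, 40])   # 1.0
opposite = kendall_tau_b([1, 2, 3, 4], [40, 30, 20, 10])     # -1.0
with_tie = kendall_tau_b([1, 2, 2, 3], [1, 2, 3, 4])         # 5 / sqrt(30), about 0.913
```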

Table 1: Correlations (Kendall’s τ) for main study variables

Note. a As reliable sources of COVID-19 information. b As a source of COVID-19 information. c –1 = extremely to the left; 1 = extremely to the right. d –1 = extremely dissatisfied; 1 = extremely satisfied. Correlations above .09 (absolute value) are p < .01; those above .06 are p < .05.

Table 1 also presents detailed correlations involving the perceived accuracy of true and false messages. Familiarity with false and true messages was positively correlated with accuracy scores for false and true content, respectively. Political orientation and satisfaction with the current President were positively correlated with the perceived accuracy of false messages and negatively correlated with that of true information. Notably, the correlation between AOT-E scores and the perceived accuracy of false messages is three times as large as the one for true information, although the two run in opposite directions. Similarly, CRT-2 scores are correlated only, and negatively, with the perceived accuracy of false messages.

The correlations presented in Table 2 further allow us to identify potential patterns in information consumption, especially those linking political orientation and satisfaction with the current President to confidence in different sources of COVID-19 information. Both right-wing orientation and greater satisfaction were negatively correlated with trust in the WHO, doctors and medical professionals, and traditional media as reliable sources of COVID-19 information (they were also negatively correlated with the use of traditional media, particularly TV, newspapers, and radio). Conversely, both political orientation and satisfaction with the current President were positively correlated with confidence in governmental authorities, and satisfaction with the current President had a very small but positive correlation with trust in social media as a reliable source of information.

Table 2: Correlations (Kendall’s τ) for secondary study variables

Note. 1. CRT-2. 2. AOT-E. Confidence in 3. WHO. 4. Health Professionals. 5. Traditional Media. 6. Governmental Authorities. 7. Social Media as reliable sources of COVID-19 information. Use of 8. Newspapers. 9. TV. 10. Radio and Podcasts as a source of COVID-19 information. 11. Political Orientation (–1=extremely to the left; 1=extremely to the right). 12. Satisfaction with the President (–1=extremely dissatisfied; 1=extremely satisfied). Correlations above .09 (absolute value) are p<.01; those above .06 are p<.05.
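The significance thresholds in the table notes follow from the sampling distribution of τ. Under the null hypothesis of no association, and ignoring ties, τ is approximately normal with variance 2(2n + 5) / (9n(n − 1)). The minimal sketch below uses that large-sample approximation to turn a coefficient into a two-sided p-value and to find the smallest |τ| that reaches a given critical z. The function names are hypothetical, and the study’s sample size is not stated in this section, so no values are computed for it here. Tie corrections (as in SciPy’s asymptotic method) would shift the thresholds slightly.

```python
from math import erfc, sqrt


def _tau_se(n):
    """Standard error of Kendall's tau under H0 (normal approximation, no ties)."""
    if n < 3:
        raise ValueError("need at least 3 observations")
    return sqrt(2 * (2 * n + 5) / (9 * n * (n - 1)))


def kendall_tau_p_value(tau, n):
    """Two-sided p-value for an observed tau with n paired observations."""
    z = tau / _tau_se(n)
    return erfc(abs(z) / sqrt(2))  # equals 2 * (1 - Phi(|z|))


def critical_tau(n, z_crit):
    """Smallest |tau| that reaches the two-sided critical value z_crit."""
    return z_crit * _tau_se(n)
```

Because the standard error shrinks roughly as 1/√n, even small coefficients such as those reported above can reach p<.05 in large samples. This is why the notes pair each threshold with a modest absolute value.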

4 Discussion

This study aimed to address some of the many open questions about the ongoing COVID-19 infodemic, particularly the spread of misinformation through messaging applications and its potential interplay with group identity and need for belonging. Like Galhardi et al. (2020), our results shed light on the role of WhatsApp, along with social media platforms, in intensifying this phenomenon in Brazil. We found small negative correlations between the ability to discern true from false content and both confidence in social media as a reliable provider of COVID-19 information and the use of messaging applications as a source of such information. This result is consistent with recent international literature, including a study of 2,254 UK residents showing that people who believe in COVID-19 conspiracy theories, such as the claim that symptoms are linked to 5G radiation, are more likely to get their information about COVID-19 from social media (Duffy & Allington, 2020).

It is worth noting that the time spent daily on WhatsApp was not associated with participants’ performance, which suggests that the main issue is not general use of the application but its role as a source of information and the confidence some people place in group chats as providers of “uncensored” and “unbiased” news. The rise of WhatsApp as an alternative to “official” news, and as a vehicle for fake news, has been documented in other countries, such as Spain (Elías & Catalan-Matamoros, 2020) and India (Reis et al., 2020). Such evidence should inspire innovative strategies that leverage social media and messaging applications to counter misinformation and promote the spread of reliable content. Through a randomized experiment in Zimbabwe, Bowles et al. (2020) found that disseminating accurate information through WhatsApp increased knowledge about COVID-19 and reduced potentially harmful behavior.

While confidence in social media was negatively correlated with discernment, reliance on newspapers, TV, and radio was positively associated with it. Despite the undeniable shortcomings of many agents within the “traditional media” category, which is by no means immune to biased discourse, long-standing fact-checking and research practices seem to have favored the distribution of evidence-based information in line with WHO guidance. Various agents within the professional media recognize that the pandemic has created an opportunity to “prove the value of trustworthy news” (Carson et al., 2020, paragraph 2).

These results resonate with van Dijck and Alinejad (2020, paragraph 1), who point out that the linear model of science communication “has gradually converted into a networked model where social media propel information flows circulating between all actors involved”. In this context of transformation, our results underline the urgency of a multifaceted approach. Targeted measures that educate users about the dynamics of (mis)information within messaging applications and social media should be combined with efforts to rebuild confidence in professional media. The former could be achieved through new technological solutions that expand fact-checking capabilities, particularly those that preserve users’ privacy through end-to-end encryption, as proposed by Reis et al. (2020). The latter could benefit from stronger policies and standards along with greater transparency about how information is gathered, evaluated, and presented to the public (The Trust Project, 2021).

As for our contribution to existing debates within the misinformation literature, specifically on the illusory truth effect, our results corroborate previous findings that repetition increases the perceived truthfulness of information. Despite methodological differences (i.e. our study did not manipulate familiarity), familiarity with true and false messages was positively correlated with accuracy scores for true and false information, respectively.

These results underscore the importance of recent studies on strategies to counter the harmful effects of prior exposure to falsehoods. In a series of four experiments, Brashier et al. (2020) found that an initial accuracy focus (i.e. priming fact-checking behavior) prevented the illusory truth effect when participants held relevant knowledge. Similarly, Jackson (2019) observed a reduction in the illusory truth effect when corrections were presented multiple times. Given the great difficulty of stopping the spread of misinformation, especially in encrypted messaging applications, it is encouraging that awareness campaigns could improve discernment.

Unfortunately, our research was limited in its ability to identify the context in which participants had previously encountered each message. It is likely that at least some of the false information presented in our experiment had previously been seen on fact-checking websites. Further research is needed to elucidate the dynamics between the illusory truth effect and fact-checking initiatives.

In line with our original expectation, our results showed that both analytical and open-minded thinking were positively associated with truth discernment. Both AOT-E and CRT-2 scores were positively correlated with discernment, in accordance with Pennycook and Rand (2018) and Bronstein et al. (2018); however, the coefficient for CRT-2 scores was extremely small. These results underscore the importance of AOT as a standard for judging the trustworthiness of information amid the infodemic. Baron (2019) reminds us that trustworthy sources show signs of AOT, such as being explicit about the nature of their evidence and its limitations. Participants with higher AOT-E scores seemed more inclined to identify these signs and calibrate their trust accordingly.

In fact, they were particularly good at identifying false messages. The correlations of CRT-2 and AOT-E scores with the perceived accuracy of true and false information suggest that the misclassification of false messages is largely responsible for the low discernment of participants with lower levels of analytical and open-minded thinking. These results underline the importance of developing effective strategies to debunk fake news, especially in the context of messaging applications.
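The argument above depends on how discernment splits into ratings of true and false items. This section does not define the score, so the sketch below assumes the definition common in this literature: mean perceived accuracy of true items minus mean perceived accuracy of false items. The function name, the 1–4 rating scale, and the two participants are hypothetical and serve only to show the arithmetic.

```python
from statistics import mean


def discernment(true_ratings, false_ratings):
    """Hypothetical discernment score: mean accuracy rating of true items
    minus mean accuracy rating of false items (assumed definition)."""
    return mean(true_ratings) - mean(false_ratings)


# Two hypothetical participants who rate the true items identically
# (mean 3.5 on an assumed 1-4 scale) but differ on the false items.
participant_a = discernment([3, 4, 3, 4], [1, 2, 1, 2])  # 3.5 - 1.5
participant_b = discernment([3, 4, 3, 4], [3, 3, 4, 4])  # 3.5 - 3.5
```

Here participant A scores 2.0 and participant B scores 0.0, and the entire gap comes from how the false items were rated. This is the pattern that would produce larger correlations with the perceived accuracy of false messages than with that of true ones.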

As for the limitations of our study, it is important to point out that we were unable to test our primary prediction: that individuals with a greater need for belonging would show less discernment of information presented in the context of the WhatsApp groups with which they most identified. Participants’ lack of identification with, and unwillingness to participate in, our fictitious group chats made it impossible to address this research question. Among other plausible explanations, this could have been due to the groups’ names, which may have been perceived as too extreme and/or polarized, or as insufficiently related to recent political hot topics.

The specificities of the Brazilian context may also help explain why we found no effect of group identification and need for belonging. Our choice to randomly present information within different WhatsApp groups diverged significantly from what is actually found in real chats (for instance, it is highly unlikely that real left-wing groups would distribute messages portraying the coronavirus as a Chinese communist threat). However, the absence of any difference in mean discernment between information that was consistent versus inconsistent with the groups’ political orientation suggests that our manipulation was simply ineffective. In any case, future research should investigate the impact of identification with WhatsApp groups on the perceived accuracy of information about less politicized topics.

Other relevant limitations of our study pertain to our sample composition and the nature of our conclusions. Although we recruited participants from all geographic regions of Brazil, our Facebook recruitment efforts neither yielded the desired socio-demographic representativeness nor reached a sufficient number of individuals who used WhatsApp as their main source of information. Future efforts should consider alternative recruitment methods. Moreover, although our analyses reveal some interesting associations, their correlational nature limits the conclusions that can be drawn. It is imperative to devise more effective experimental designs that allow for a better understanding of the dynamics of misinformation on messaging applications.

Despite these shortcomings, our results still shed light on the importance of political orientation in the COVID-19 infodemic. As previously stated, political leaders can play an important role in shaping how their partisans perceive information (Swire et al., 2017; Uscinski et al., 2020). During the pandemic, various world leaders (both left- and right-wing) have adopted positions of incredulity, denied scientific evidence, and promoted unproven methods of prevention and cure. Given the specificities of Brazil’s political scenario, particularly the President’s discourse, the politicization of the pandemic is reflected in the fact that right-leaning participants (and especially those more satisfied with the current President) consistently showed lower levels of discernment. These results echo the findings of Calvillo et al. (2020), according to which conservatism predicted less accurate discernment between real and fake COVID-19 headlines in the U.S. context.

The interplay of politicization and infodemic is particularly worrisome as it undermines confidence in science, a trend that can have lasting effects that go far beyond COVID-19. As pointed out by Barry et al. (2020), distrust in science creates fertile ground for misinformation. Our results underline the importance of a two-pronged approach: promoting knowledge of what constitutes scientific evidence and cultivating the ability to continuously reevaluate beliefs according to new evidence. Future research should focus on developing strategies to effectively achieve both goals.