1. Introduction

In the past few decades, large amounts of data have been generated from experiments and numerical simulations of turbulent flows (e.g. Duraisamy, Iaccarino & Xiao 2019). To analyse high-dimensional data, low-order representations are typically sought (e.g. Taira et al. 2017). Reduced-order modelling consists of predicting the time evolution of high-dimensional systems with a low-order representation of the system. By predicting the system based on a (relatively) small number of degrees of freedom, reduced-order modelling significantly reduces the computational cost and provides insights into the physics of the system. In this paper, we construct a reduced-order model by: (i) inferring a lower-dimensional manifold, the latent space, where the turbulent dynamics live; and (ii) predicting the turbulent dynamics in the latent space. To generate the latent space, techniques such as proper orthogonal decomposition (POD) (Lumley 1970) and dynamic mode decomposition (DMD) (Schmid 2010) are commonly used. They have been applied successfully in multiple settings, such as extracting spatiotemporal features and controlling flowfields (e.g. Rowley, Colonius & Murray 2004; Brunton & Noack 2015; Rowley & Dawson 2017). The downside of these methodologies is that they are linear approximators, which require a large number of modes to describe turbulent flowfields (e.g. Alfonsi & Primavera 2007; Muralidhar et al. 2019). To reduce the number of modes required to describe flowfields accurately, nonlinear mappings have shown promising results in recent years (e.g. Brunton, Noack & Koumoutsakos 2020; Fernex, Noack & Semaan 2021).
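
As a minimal illustration of the linear decomposition performed by POD, the sketch below computes POD modes of a snapshot matrix with the thin singular value decomposition (the snapshot form of POD is assumed; the function name and the synthetic field are hypothetical). A field built from two separable structures is captured entirely by two modes, whereas a turbulent field spreads its energy over many modes.

```python
import numpy as np


def pod(snapshots):
    """POD of a snapshot matrix of shape (n_time, n_space) via the thin SVD.

    Returns the temporal mean, the spatial modes (one per row) and the
    fraction of fluctuation energy captured by each mode.
    """
    mean = snapshots.mean(axis=0)
    fluct = snapshots - mean                      # remove the temporal mean
    _, s, modes = np.linalg.svd(fluct, full_matrices=False)
    energy = s**2 / np.sum(s**2)                  # normalised modal energy
    return mean, modes, energy


# Hypothetical rank-2 field: two separable space-time structures
x = np.linspace(0.0, 2.0 * np.pi, 64, endpoint=False)
t = np.linspace(0.0, 10.0, 200)
data = (np.sin(t)[:, None] * np.sin(x)[None, :]
        + 0.5 * np.cos(3.0 * t)[:, None] * np.cos(2.0 * x)[None, :])

mean, modes, energy = pod(data)
print(np.cumsum(energy)[:3])   # the first two modes capture all the energy
```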

A robust data-driven method that computes a low-dimensional representation of the data is the autoencoder (Kramer 1991; Goodfellow, Bengio & Courville 2016). Autoencoders typically consist of a series of neural networks that map the original field to (and back from) the latent space. In fluids, Milano & Koumoutsakos (2002) developed a feed-forward neural network autoencoder to investigate a turbulent channel flow. Since then, the advent of data-driven techniques tailored for the analysis of spatially varying data, such as convolutional neural networks (CNNs) (Lecun et al. 1998), has greatly extended the applicability of autoencoders (Hinton & Salakhutdinov 2006; Agostini 2020). For example, Fukami, Fukagata & Taira (2019) employed CNNs to improve the resolution of sparse measurements of a turbulent flowfield, Murata, Fukami & Fukagata (2020) analysed the autoencoder modes in the laminar wake past a cylinder, and Kelshaw, Rigas & Magri (2022) proposed a physics-informed autoencoder for super-resolution of turbulence, to name only a few.

Once the latent space is generated, we wish to predict the temporal dynamics within the latent space. To do so, one option is to project the governing equations onto the low-order space (Antoulas 2005). This is a common method when the governing equations are known, but it becomes difficult to implement when the equations are not exactly known (Yu, Yan & Guo 2019). An alternative option is the inference of differential equations within the latent space, with genetic programming (e.g. Schmidt & Lipson 2009) or symbolic regression (e.g. Loiseau, Noack & Brunton 2018). In this paper, we focus on developing a reduced-order modelling approach that does not require differential equations.

To forecast temporal dynamics based on a sequence of inputs, recurrent neural networks (RNNs) (Rumelhart, Hinton & Williams 1986) are the state-of-the-art data-driven architectures (e.g. Goodfellow et al. 2016; Chattopadhyay, Hassanzadeh & Subramanian 2020). RNNs are designed to infer the correlation within data sequentially ordered in time via an internal state, which is updated at each time step. Through this mechanism, RNNs retain the information of multiple previous time steps when predicting the future evolution of the system. This is useful in reduced-order modelling, in which access to a limited number of variables (a subset of the full state of the system) causes the evolution of the latent space dynamics (i.e. partially observed dynamics) to be non-Markovian, as explained in Vlachas et al. (2020). In fluids, RNNs have been deployed for predicting flows past bluff bodies of different shapes (Hasegawa et al. 2020), replicating the statistics of turbulence (Srinivasan et al. 2019; Nakamura et al. 2021) and controlling gliding (Novati, Mahadevan & Koumoutsakos 2019), to name a few. Among RNNs, reservoir computers in the form of echo state networks (ESNs) (Jaeger & Haas 2004; Maass, Natschläger & Markram 2002) are versatile architectures for the prediction of chaotic dynamics.
ESNs are universal approximators under non-stringent assumptions of ergodicity (Grigoryeva & Ortega 2018; Hart, Hook & Dawes 2021), which perform (at least) as well as other architectures such as long short-term memory (LSTM) networks (Vlachas et al. 2020), but they are simpler to train. This is because training the ESNs is a quadratic optimisation problem, whose global minimum is a solution of a linear system (Lukoševičius 2012). In contrast, the training of LSTMs requires an iterative gradient descent, which can converge to a local minimum of a multimodal loss function (Goodfellow et al. 2016). ESNs are designed to be straightforward and computationally cheaper to train than other networks, but their performance is sensitive to the selection of hyperparameters, which need to be tuned through ad hoc algorithms (Racca & Magri 2021). In fluid dynamics, ESNs have been employed to (i) optimise ergodic averages in thermoacoustic oscillations (Huhn & Magri 2022), (ii) predict extreme events in chaotic flows (Doan, Polifke & Magri 2021; Racca & Magri 2022a,b) and control their occurrence (Racca & Magri 2022a, 2023), (iii) infer the model error (i.e. bias) in data assimilation of thermoacoustic systems (Nóvoa, Racca & Magri 2023) and (iv) investigate the stability and covariant Lyapunov vectors of chaotic attractors (Margazoglou & Magri 2023). Racca & Magri (2022a) showed that ESNs perform similarly to the LSTMs of Srinivasan et al. (2019) in the forecasting of chaotic flows, whilst requiring ${\approx }0.1\,\%$ of the data.
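
To make concrete the statement that ESN training reduces to a linear system, the sketch below trains the output weights of a generic ESN by ridge regression. It assumes the textbook reservoir update $\boldsymbol{r}_{i+1}=\tanh(\boldsymbol{W}_{in}\boldsymbol{u}_i+\boldsymbol{W}\boldsymbol{r}_i)$; the reservoir size, spectral radius, density, Tikhonov parameter and sinusoidal training signal are illustrative assumptions, not the hyperparameters or the exact architecture used in this paper.

```python
import numpy as np


def make_reservoir(n_in, n_res, spectral_radius=0.9, input_scaling=1.0,
                   density=0.1, seed=0):
    """Random input and sparse recurrent matrices of a generic ESN."""
    rng = np.random.default_rng(seed)
    W_in = input_scaling * rng.uniform(-1.0, 1.0, (n_res, n_in))
    mask = rng.random((n_res, n_res)) < density
    W = rng.uniform(-1.0, 1.0, (n_res, n_res)) * mask
    W *= spectral_radius / np.max(np.abs(np.linalg.eigvals(W)))
    return W_in, W


def reservoir_states(U, W_in, W):
    """Open-loop reservoir states r_{i+1} = tanh(W_in u_i + W r_i)."""
    r = np.zeros(W.shape[0])
    states = []
    for u in U:
        r = np.tanh(W_in @ u + W @ r)
        states.append(r)
    return np.array(states)


def train_readout(R, Y, beta):
    """Ridge regression: the quadratic problem is solved by one linear system,
    (R^T R + beta I) W_out = R^T Y."""
    A = R.T @ R + beta * np.eye(R.shape[1])
    return np.linalg.solve(A, R.T @ Y)


# Hypothetical task: predict u_{i+1} from the reservoir state reached after u_i
u = np.sin(0.1 * np.arange(2000))[:, None]
W_in, W = make_reservoir(n_in=1, n_res=100)
R = reservoir_states(u[:-1], W_in, W)
washout, beta = 100, 1e-6
R_train, Y_train = R[washout:], u[1:][washout:]   # discard the initial transient
W_out = train_readout(R_train, Y_train, beta)
mse = np.mean((R_train @ W_out - Y_train) ** 2)
```

No iterative optimiser is involved: the readout is the unique minimiser of a convex quadratic, which is the property that makes ESNs cheap to train.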

The objective of this work is threefold. First, we develop the CAE-ESN by combining convolutional autoencoders (CAEs) with ESNs to predict turbulent flowfields. Second, we time-accurately and statistically predict a turbulent flow at different Reynolds numbers. Third, we carry out a correlation analysis between the reconstruction error and the temporal prediction of the CAE-ESN.

This paper is organised as follows. Section 2 presents the two-dimensional turbulent flow and introduces the tools for nonlinear analysis. Section 3 describes the CAE-ESN. Section 4 analyses the reconstruction of the flowfield. Section 5 analyses the time-accurate prediction of the flow and discusses the correlation between reconstruction error and temporal prediction of the system. Section 6 analyses the prediction of the statistics of the flow and the correlation between time-accurate and statistical performance. Section 7 extends the CAE-ESN to the prediction of three-dimensional turbulence. Finally, § 8 summarises the results.

2. Kolmogorov flow

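We consider the two-dimensional non-dimensionalised incompressible Navier–Stokes equations

\begin{equation} \boldsymbol{\nabla}\boldsymbol{\cdot}\boldsymbol{u} = 0, \end{equation}

\begin{equation} \frac{\partial \boldsymbol{u}}{\partial t} + \boldsymbol{u}\boldsymbol{\cdot}\boldsymbol{\nabla}\boldsymbol{u} = -\boldsymbol{\nabla}p + \frac{1}{Re}\Delta\boldsymbol{u} + \boldsymbol{f}, \end{equation}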

where $p$ is the pressure, $\boldsymbol {u}=(u_1,u_2)$ is the velocity and $\boldsymbol {x}=(x_1,x_2)$ are the spatial coordinates. The time-independent divergence-free forcing is $\boldsymbol {f}=\sin (n_fx_2)\boldsymbol {e}_1$, where $\boldsymbol {e}_1= (1,0)^{\rm T}$ and $n_f$ is the wavenumber of the forcing. The Reynolds number is $Re=\sqrt {\chi }/\nu$, where $\chi$ is the amplitude of the forcing and $\nu$ is the kinematic viscosity (Chandler & Kerswell 2013). We solve the flow in the doubly periodic domain $\boldsymbol {x} \in \mathbb {T}^2=[0,2{\rm \pi} ]\times [0,2{\rm \pi} ]$. For this choice of forcing and boundary conditions, the flow is typically referred to as the Kolmogorov flow (e.g. Platt, Sirovich & Fitzmaurice 1991). We integrate the equations using KolSol (https://github.com/MagriLab/KolSol), which is a publicly available pseudospectral solver based on the Fourier–Galerkin approach described by Canuto et al. (1988). The equations are solved in the Fourier domain with a fourth-order explicit Runge–Kutta integration scheme with a timestep ${\rm d}t=0.01$. The results are stored every $\delta t=0.1$. As suggested in Farazmand (2016), we select the number of Fourier modes from convergence tests on the kinetic energy spectra (see supplementary material available at https://doi.org/10.1017/jfm.2023.716). The solution in the Fourier domain is projected onto a $48\times 48$ grid in the spatial domain with the inverse Fourier transform. The resulting 4608-dimensional velocity flowfield, $\boldsymbol {q}(t) \in \mathbb {R}^{48\times 48\times 2}$, is the flow state vector.
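
The sketch below builds the $48\times 48$ grid, evaluates the forcing $\boldsymbol{f}=\sin(n_fx_2)\boldsymbol{e}_1$ with $n_f=4$, and checks with spectral derivatives that it is divergence-free; it also shows where the 4608 entries of $\boldsymbol{q}$ come from. The array layout ($x_1$ along the first axis, velocity components along the last) is an assumption for illustration and need not match KolSol's internal ordering.

```python
import numpy as np

N = 48                                      # grid points per direction
N_F = 4                                     # forcing wavenumber n_f
x = 2.0 * np.pi * np.arange(N) / N          # periodic grid on [0, 2*pi)
X1, X2 = np.meshgrid(x, x, indexing="ij")   # X1 varies along axis 0

# Forcing f = sin(n_f x2) e_1, stored as an (N, N, 2) array like the state q
f = np.stack([np.sin(N_F * X2), np.zeros_like(X2)], axis=-1)

# Integer angular wavenumbers for a 2*pi-periodic domain
k = np.fft.fftfreq(N, d=1.0 / N)
K1, K2 = np.meshgrid(k, k, indexing="ij")


def divergence(v):
    """Spectral divergence of a two-component field of shape (N, N, 2)."""
    div_hat = 1j * K1 * np.fft.fft2(v[..., 0]) + 1j * K2 * np.fft.fft2(v[..., 1])
    return np.real(np.fft.ifft2(div_hat))


print(f.size)                               # 48 * 48 * 2 = 4608 entries
print(np.max(np.abs(divergence(f))))        # zero up to round-off
```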

The Kolmogorov flow shows a variety of regimes that depend on the forcing wavenumber, $n_f$, and Reynolds number, $Re$ (Platt et al. 1991). In this work, we analyse $n_f=4$ and $Re=\{30,34\}$, for which we observe quasiperiodic and turbulent solutions, respectively. To globally characterise the flow, we compute the average dissipation rate, $D$, per unit volume

where $d(x_1,x_2,t)$ is the local dissipation rate and $||{\cdot }||$ is the $L_2$ norm. The dissipation rate has been employed in the literature to analyse the Kolmogorov flow (Chandler & Kerswell 2013; Farazmand 2016). We characterise the solutions in figure 1 and Appendix B through the average dissipation rate, $D$, and the local dissipation rate at the centre of the domain, $d_{{\rm \pi},{\rm \pi} }$. The phase plots show the trajectories obtained with the optimal time delay given by the first minimum of the average mutual information, $\tau$ (Kantz & Schreiber 2004). In the first regime ($Re=30$), the average dissipation rate evolves on a limit cycle (figure 1a), whilst the local dissipation rate has a toroidal structure (figure 1b), which indicates quasiperiodic variations in the flow state. The average dissipation rate is periodic, despite the flow state being quasiperiodic, because some temporal frequencies are filtered out when averaging in space (more details are given in Appendix B). In the second regime ($Re=34$), the solution is turbulent for both global and local quantities (figure 1c,d).
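
The sketch below computes $d$ and $D$ on the periodic grid under an assumed normalisation, $d=Re^{-1}\|\boldsymbol{\nabla}\boldsymbol{u}\|^2$ (sum of the squared velocity-gradient components) with $D$ its spatial average over $\mathbb{T}^2$; this convention is an assumption and may differ from the one used in this paper. Gradients are computed spectrally, and $d_{{\rm \pi},{\rm \pi}}$ is read at the grid point $x_1=x_2={\rm \pi}$. The test field $\boldsymbol{u}=(\sin 4x_2,0)$ is chosen because its dissipation is known analytically.

```python
import numpy as np

N = 48
x = 2.0 * np.pi * np.arange(N) / N
X1, X2 = np.meshgrid(x, x, indexing="ij")   # x1 along axis 0, x2 along axis 1
k = np.fft.fftfreq(N, d=1.0 / N)            # integer wavenumbers on [0, 2*pi)
k[N // 2] = 0.0                             # drop the Nyquist mode for odd derivatives
K1, K2 = np.meshgrid(k, k, indexing="ij")


def gradient(field):
    """Spectral derivatives (d/dx1, d/dx2) of a periodic scalar field."""
    field_hat = np.fft.fft2(field)
    return (np.real(np.fft.ifft2(1j * K1 * field_hat)),
            np.real(np.fft.ifft2(1j * K2 * field_hat)))


def dissipation(u1, u2, re):
    """Assumed convention: d = |grad u|^2 / Re and D = spatial mean of d."""
    grads = gradient(u1) + gradient(u2)      # tuple of the four gradient components
    d = sum(g**2 for g in grads) / re
    return d, d.mean()


# Example: u = (sin(4 x2), 0) at Re = 34, for which |grad u|^2 = 16 cos^2(4 x2)
u1, u2 = np.sin(4.0 * X2), np.zeros_like(X2)
d, D = dissipation(u1, u2, re=34.0)
d_centre = d[N // 2, N // 2]                 # grid point x1 = x2 = pi
print(D, 8.0 / 34.0)                         # numerical and analytical averages
```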

Figure 1. Phase plots of the global, $D$, and local, $d_{{\rm \pi},{\rm \pi} }$, dissipation rates for (a,b) quasiperiodic regime of the flow state ($Re=30$) and (c,d) turbulent regime ($Re=34$). Here $\tau = [5.0,13.0,4.8,12.0]$, respectively.

2.1. Lyapunov exponents and attractor dimension

As explained in § 4, in order to create a reduced-order model, we need to select the number of degrees of freedom of the latent space. In a statistically stationary regime, the latent space should have at least as many degrees of freedom as the turbulent attractor. Therefore, we propose using the dimension of the turbulent attractor as a lower bound for the dimension of the latent space. In chaotic (turbulent) systems, the dynamics are predictable only for finite times because infinitesimal errors increase in time with an average exponential rate given by the (positive) largest Lyapunov exponent, $\varLambda _1$ (e.g. Boffetta et al. 2002). The inverse of the largest Lyapunov exponent provides a timescale for assessing the predictability of chaotic systems, which is referred to as the Lyapunov time (LT), $1\mathrm {LT}=\varLambda _1^{-1}$. To quantitatively assess time accuracy (§ 5), we normalise the time by the LT. In addition to being unpredictable, the chaotic systems considered here are dissipative, which means that the solution converges to a limited region of the phase space, i.e. the attractor. The attractor typically has a significantly smaller number of degrees of freedom than the original system (Eckmann & Ruelle 1985). An upper bound on the number of degrees of freedom of the attractor, i.e. its dimension, can be estimated via the Kaplan–Yorke dimension (Kaplan & Yorke 1979)

\begin{equation} N_{KY} = j + \frac{\displaystyle\sum\limits_{i=1}^j\varLambda_i}{|\varLambda_{j+1}|}, \end{equation}

where $\varLambda _i$ are the Lyapunov exponents sorted in decreasing order and $j$ is the largest integer for which $\sum _{i=1}^j\varLambda _i \geq 0$. Physically, the sum of the $m$ largest Lyapunov exponents is the average exponential expansion/contraction rate of an $m$-dimensional infinitesimal volume of the phase space moving along the attractor (e.g. Boffetta et al. 2002). To obtain the $m$ largest Lyapunov exponents, we compute the evolution of $m$ random perturbations around a trajectory that spans the attractor. The space spanned by the $m$ perturbations approximates an $m$-dimensional subspace of the tangent space. Because the perturbations grow exponentially in time, their direct computation numerically overflows; moreover, all perturbations tend to align with the most unstable direction, so that they no longer span an $m$-dimensional subspace. To overcome these issues, we periodically orthonormalise the perturbations, following the algorithm of Benettin et al. (1980) (see the supplementary material). In so doing, we find the quasiperiodic attractor to be 3-dimensional and the chaotic attractor to be 9.5-dimensional. Thus, both attractors have approximately three orders of magnitude fewer degrees of freedom than the flow state (which has 4608 degrees of freedom, see § 2). We take advantage of these estimates in §§ 4–5, in which we show that we need more than 100 POD modes to accurately describe an attractor that has fewer than 10 degrees of freedom. The leading Lyapunov exponent in the chaotic case is $\varLambda _1=0.065$; therefore, the LT is $1\mathrm {LT}=0.065^{-1}\approx 15.4$.
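
The Benettin et al. (1980) procedure and the Kaplan–Yorke estimate can be sketched on a small system. Because the Kolmogorov-flow solver and its tangent equations are not reproduced here, the example below uses the Lorenz system as a stand-in (an assumption made only for illustration): the state and $m=3$ random tangent perturbations are integrated together with a fourth-order Runge–Kutta scheme and re-orthonormalised by a QR decomposition every few steps. For the Lorenz system, the sum of all exponents must equal the constant trace of the Jacobian, $-(\sigma+1+\beta)$, which provides a built-in check.

```python
import numpy as np

SIGMA, RHO, BETA = 10.0, 28.0, 8.0 / 3.0    # classic Lorenz parameters


def rhs(x, Q):
    """Lorenz vector field and its tangent-linear action on the perturbations Q."""
    f = np.array([SIGMA * (x[1] - x[0]),
                  x[0] * (RHO - x[2]) - x[1],
                  x[0] * x[1] - BETA * x[2]])
    jac = np.array([[-SIGMA, SIGMA, 0.0],
                    [RHO - x[2], -1.0, -x[0]],
                    [x[1], x[0], -BETA]])
    return f, jac @ Q


def rk4_step(x, Q, dt):
    """One RK4 step of the state and the tangent perturbations together."""
    k1x, k1q = rhs(x, Q)
    k2x, k2q = rhs(x + 0.5 * dt * k1x, Q + 0.5 * dt * k1q)
    k3x, k3q = rhs(x + 0.5 * dt * k2x, Q + 0.5 * dt * k2q)
    k4x, k4q = rhs(x + dt * k3x, Q + dt * k3q)
    return (x + dt / 6.0 * (k1x + 2 * k2x + 2 * k3x + k4x),
            Q + dt / 6.0 * (k1q + 2 * k2q + 2 * k3q + k4q))


def lyapunov_spectrum(x0, m, dt=0.01, n_steps=20000, n_ortho=10, seed=0):
    """Benettin-style estimate of the m largest Lyapunov exponents."""
    rng = np.random.default_rng(seed)
    Q, _ = np.linalg.qr(rng.standard_normal((x0.size, m)))  # random orthonormal start
    x, log_growth = x0.copy(), np.zeros(m)
    n_blocks = n_steps // n_ortho
    for _block in range(n_blocks):
        for _step in range(n_ortho):
            x, Q = rk4_step(x, Q, dt)
        Q, R = np.linalg.qr(Q)                     # re-orthonormalise
        log_growth += np.log(np.abs(np.diag(R)))   # stretching along each direction
    return log_growth / (n_blocks * n_ortho * dt)


def kaplan_yorke(exponents):
    """Kaplan-Yorke dimension from a set of Lyapunov exponents."""
    lam = np.sort(np.asarray(exponents, dtype=float))[::-1]
    partial = np.cumsum(lam)
    nonneg = np.flatnonzero(partial >= 0.0)
    if nonneg.size == 0:
        return 0.0
    j = nonneg[-1] + 1                  # largest j with a non-negative partial sum
    if j == lam.size:
        return float(j)                 # no contracting direction left
    return j + partial[j - 1] / abs(lam[j])


# Spin up onto the attractor, then estimate the full spectrum (m = 3)
x = np.array([1.0, 1.0, 1.0])
for _ in range(2000):
    x = rk4_step(x, np.eye(3), 0.01)[0]
exponents = lyapunov_spectrum(x, m=3)
print(exponents, exponents.sum(), -(SIGMA + 1.0 + BETA))
print(kaplan_yorke(exponents))          # slightly above 2 for the Lorenz attractor
```

Applying the same idea to the Kolmogorov flow requires the tangent equations of the solver and many more perturbations, but the orthonormalisation and the Kaplan–Yorke formula are unchanged.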

3. Convolutional autoencoder echo state network (CAE-ESN)

In order to decompose high-dimensional turbulent flows into a lower-order representation, a latent space of the physical dynamics is computed. For time prediction, the dynamics are mapped onto the latent space on which their evolution can be predicted at a lower computational cost than the original problem. In this work, we generate the low-dimensional space using a CAE (Hinton & Salakhutdinov 2006), which offers a nonlinear reduced-order representation of the flow. By projecting the physical dynamics onto the latent space, we obtain a low-dimensional time series, whose dynamics are predicted by an ESN (Jaeger & Haas 2004).
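
The resulting closed-loop forecast can be summarised as: encode the initial flowfield, advance the latent state repeatedly, and decode each latent prediction. The sketch below is a hypothetical skeleton: `encode`, `latent_step` and `decode` are placeholders for the trained encoder, ESN and decoder, and `memory` stands for the internal state carried by the latent model (for an ESN, its reservoir state), which is needed because the latent dynamics are not Markovian.

```python
import numpy as np


def forecast(q0, encode, latent_step, decode, memory0, n_steps):
    """Closed-loop CAE-ESN-style forecast (hypothetical interface).

    q0          : initial flowfield, e.g. of shape (48, 48, 2)
    encode      : flowfield -> latent vector z
    latent_step : (z, memory) -> (next z, next memory)
    decode      : latent vector z -> flowfield
    """
    z, memory = encode(q0), memory0
    predictions = []
    for _ in range(n_steps):
        z, memory = latent_step(z, memory)   # the prediction is fed back as input
        predictions.append(decode(z))
    return np.stack(predictions)


# Toy stand-ins, only to show the data flow (not a trained model)
encode = lambda q: q.mean(axis=(0, 1))                       # (48, 48, 2) -> (2,)
decode = lambda z: np.broadcast_to(z, (48, 48, 2)).copy()    # (2,) -> (48, 48, 2)
latent_step = lambda z, memory: (0.5 * z, memory + 1)        # damped toy dynamics
out = forecast(np.ones((48, 48, 2)), encode, latent_step, decode, 0, n_steps=3)
```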

3.1. Convolutional autoencoder

The autoencoder consists of an encoder and a decoder (figure 2a). The encoder, $\boldsymbol {g}({\cdot })$, maps the high-dimensional physical state, $\boldsymbol {q}(t) \in \mathbb {R}^{N_{{phys}}}$, into the low-dimensional latent state, $\boldsymbol {z} \in \mathbb {R}^{N_{{lat}}}$ with $N_{{lat}} \ll N_{{phys}}$, whereas the decoder, $\boldsymbol {f}({\cdot })$, maps the latent state back into the physical space with the following goal

\begin{equation} \boldsymbol{z}(t) = \boldsymbol{g}(\boldsymbol{q}(t)), \quad \hat{\boldsymbol{q}}(t) = \boldsymbol{f}(\boldsymbol{z}(t)) \approx \boldsymbol{q}(t), \end{equation}

where $\hat {\boldsymbol {q}}(t) \in \mathbb {R}^{N_{{phys}}}$ is the reconstructed state. We use CNNs (Lecun et al. 1998) as the building blocks of the autoencoder. In CNNs, a filter of size $k_f\times k_f\times d_f$ (with $d_f=N_{1z}$) slides through the input, $\boldsymbol {y}_1 \in \mathbb {R}^{N_{1x}\times N_{1y}\times N_{1z}}$, with stride, $s$, so that the output, $\boldsymbol {y}_2 \in \mathbb {R}^{N_{2x}\times N_{2y}\times N_{2z}}$, of the CNN layer is

\begin{equation} y_{2_{ijm}} = \mathrm{func} \left(\sum_{l_1=1}^{k_f} \sum_{l_2=1}^{k_f} \sum_{k=1}^{N_{1z}} y_{1_{s(i-1)+l_1,s(j-1)+l_2,k}}W_{l_1l_2km} + b_{m} \right), \end{equation}

where $\mathrm {func}$ is the nonlinear activation function, and $W_{l_1l_2km}$ and $b_{m}$ are the weights and bias of the filter, which are computed by training the network. We choose $\mathrm {func}=\tanh$ following Murata et al. (2020). A visual representation of the convolution operation is shown in figure 2(b). The size of the output is a function of the input size, the kernel size, the stride and the artificially added entries around the input (i.e. the padding). By selecting periodic padding, we enforce the boundary conditions of the flow (figure 2c). The convolution operation filters the input by analysing patches of size $k_f \times k_f$. In doing so, CNNs take into account the spatial structure of the input and learn localised structures in the flow. Moreover, the filter has the same weights as it slides through the input (parameter sharing), which allows us to create models with significantly fewer weights than fully connected layers. Because the filter provides the mathematical relationship between nearby points in the flowfield, parameter sharing is physically consistent with the governing equations of the flow, which are invariant to translation.
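
The convolution formula above translates almost literally into array code. The NumPy sketch below implements it with explicit loops over output positions (indices are 0-based, so $s(i-1)+l_1$ becomes `s*i + l1`), together with periodic padding via `np.pad(..., mode="wrap")`. It is meant to expose the arithmetic, not to replace the optimised TensorFlow layers used in this work; the filter count and sizes in the example are illustrative.

```python
import numpy as np


def periodic_pad(y, p):
    """Pad the two spatial axes of y (shape Nx x Ny x Nz) periodically by p entries."""
    return np.pad(y, ((p, p), (p, p), (0, 0)), mode="wrap")


def conv_layer(y1, W, b, stride=1, func=np.tanh):
    """Direct implementation of the convolution formula.

    y1 : input of shape (N1x, N1y, N1z)
    W  : weights of shape (kf, kf, N1z, N2z)
    b  : biases of shape (N2z,)
    """
    kf = W.shape[0]
    n2x = (y1.shape[0] - kf) // stride + 1
    n2y = (y1.shape[1] - kf) // stride + 1
    y2 = np.empty((n2x, n2y, W.shape[3]))
    for i in range(n2x):
        for j in range(n2y):
            patch = y1[stride * i: stride * i + kf, stride * j: stride * j + kf, :]
            # sum over l1, l2 and the input depth k, for every output channel m
            y2[i, j, :] = np.tensordot(patch, W, axes=([0, 1, 2], [0, 1, 2])) + b
    return func(y2)


rng = np.random.default_rng(0)
q = rng.standard_normal((48, 48, 2))            # a snapshot-sized input
W = 0.1 * rng.standard_normal((3, 3, 2, 6))     # hypothetical 3x3 filters, 6 channels
b = np.zeros(6)
y_half = conv_layer(periodic_pad(q, 1), W, b, stride=2)   # stride 2: 48 -> 24
y_same = conv_layer(periodic_pad(q, 1), W, b, stride=1)   # stride 1: 48 -> 48
```

With periodic padding, the entries added at a border are copies of the opposite border, as in figure 2(c).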

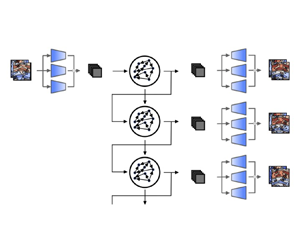

Figure 2. (a) Schematic representation of the multiscale autoencoder, (b) convolution operation for filter size $(2\times 2\times 1)$, $\text {stride}=1$ and $\text {padding}=1$ and (c) periodic padding. In (b,c), blue and white squares indicate the input and the padding, respectively. The numbers in (c) indicate pictorially the values of the flowfield, which can be interpreted as pixel values.

The encoder consists of a series of padding and convolutional layers. At each stage, we (i) apply periodic padding, (ii) perform a convolution with stride equal to two to halve the spatial dimensions (Springenberg et al. 2014) and increase the depth of the output and (iii) perform a convolution with stride equal to one to keep the same dimensions and increase the representation capability of the network. The final convolutional layer has a varying depth, which depends on the size of the latent space. The decoder consists of a series of padding, transpose convolutional and centre crop layers. An initial periodic padding enlarges the latent space input through the periodic boundary conditions. The size of the padding is chosen so that the spatial size of the output after the last transpose convolutional layer is larger than the physical space. In the next layers, we (i) increase the spatial dimensions of the input through the transpose convolution with stride equal to two and (ii) perform a convolution with stride equal to one to keep the same dimensions and increase the representation capability of the network. The transpose convolution reverses the shape transformation of the convolution shown in figure 2(b), in the sense that the roles of the input and the output are swapped (Zeiler et al. 2010). The centre crop layer eliminates the outer borders of the picture after the transpose convolution, in a process opposite to padding, which is needed to match the spatial size of the output of the decoder with the spatial size of the input of the encoder. A final convolutional layer with a linear activation function sets the depth of the output of the decoder equal to the depth of the input of the encoder. We use the linear activation so that the output of the decoder can match the amplitude of the inputs to the encoder, which need not lie within the range $(-1,1)$ of the $\tanh$ activation. The encoder and decoder have a similar number of trainable parameters (more details are given in Appendix C).
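
The shape bookkeeping of the encoder and decoder can be checked with a few lines of arithmetic. The sketch below assumes 'valid' convolutions preceded by periodic padding of $(k_f-1)/2$ entries in the encoder, and transpose convolutions whose output size is the input size times the stride in the decoder (as for Keras `Conv2DTranspose` with `padding="same"`). The number of stages (three) and the decoder padding (one entry per side) are hypothetical choices, not the architecture of Appendix C.

```python
import numpy as np


def encoder_size(n, kf, stride):
    """Spatial size after periodic padding of (kf - 1) // 2 and a 'valid' convolution."""
    p = (kf - 1) // 2
    return (n + 2 * p - kf) // stride + 1


def centre_crop(y, n):
    """Keep the central n x n block of the two spatial axes of y."""
    o1 = (y.shape[0] - n) // 2
    o2 = (y.shape[1] - n) // 2
    return y[o1:o1 + n, o2:o2 + n, ...]


# Encoder: three hypothetical stride-2 stages, for each of the three filter sizes
for kf in (3, 5, 7):
    sizes = [48]
    for _ in range(3):
        sizes.append(encoder_size(sizes[-1], kf, stride=2))
    print(kf, sizes)                            # 48 -> 24 -> 12 -> 6

# Decoder: periodic padding of 1 (6 -> 8), then three stride-2 transpose convolutions
n_dec = (6 + 2 * 1) * 2**3                      # 8 -> 16 -> 32 -> 64, larger than 48
decoded = np.random.default_rng(0).standard_normal((n_dec, n_dec, 2))
reconstructed = centre_crop(decoded, 48)        # removes 8 entries per border
```

Because the padding is periodic, all three filter sizes halve the grid identically, so the three parallel branches of the multiscale autoencoder produce latent fields of the same spatial size.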

In this study, we use a multiscale autoencoder (Du et al. 2018), which has been successfully employed to generate latent spaces of turbulent flowfields (Nakamura et al. 2021). The multiscale autoencoder employs three parallel encoders and decoders, which have different spatial sizes of the filter (figure 2a). The sizes of the three filters are $3\times 3$, $5\times 5$ and $7\times 7$. By employing different filters, the multiscale architecture learns spatial structures of different sizes, which are characteristic features of turbulent flows. We train the autoencoder by minimising the mean squared error (MSE) between the outputs and the inputs

\begin{equation} \mathcal{L} = \sum^{N_t}_{i=1} \frac{1}{ N_tN_{{phys}}} \left\vert\left\vert\hat{\boldsymbol{q}}(t_i) - \boldsymbol{q}(t_i)\right\vert\right\vert^2, \end{equation}

where $N_t$ is the number of training snapshots. To train the autoencoder, we use a dataset of 30 000 time units (generated by integrating (2.1)–(2.2)), which we sample with time step $\delta t_{{CNN}} = 1$. Specifically, we use 25 000 time units for training and 5000 for validation. We divide the training data into minibatches of 50 snapshots each, where every snapshot is $500\delta t_{{CNN}}$ from the previous input of the minibatch. The weights are initialised following Glorot & Bengio (2010), and the minimisation is performed by stochastic gradient descent with the AMSgrad variant of the Adam algorithm (Kingma & Ba 2017; Reddi, Kale & Kumar 2018) with adaptive learning rate. The autoencoder is implemented in TensorFlow (Abadi et al. 2015).
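The loss above is the mean of the squared error over all snapshots and degrees of freedom, and the minibatch rule can be read as a strided split of the training indices. The NumPy sketch below makes both explicit. It assumes that 'every snapshot is $500\delta t_{CNN}$ from the previous input of the minibatch' means a stride of 500 snapshots, so that the 25 000 training snapshots split into 500 minibatches of 50; the function names are hypothetical, and the actual training was performed in TensorFlow.

```python
import numpy as np


def autoencoder_loss(q_hat: np.ndarray, q: np.ndarray) -> float:
    """Sum over snapshots of ||q_hat(t_i) - q(t_i)||^2 / (N_t N_phys).

    Both arrays have shape (N_t, ...), with the first axis indexing snapshots.
    """
    n_t = q.shape[0]
    n_phys = q[0].size  # degrees of freedom of one snapshot
    sq_norms = np.sum((q_hat - q).reshape(n_t, -1) ** 2, axis=1)
    return float(np.sum(sq_norms) / (n_t * n_phys))


def strided_minibatches(n_train: int, batch_size: int) -> np.ndarray:
    """Row j is the minibatch [j, j + s, j + 2s, ...] with s = n_train // batch_size."""
    stride = n_train // batch_size
    idx = np.arange(stride * batch_size).reshape(batch_size, stride)
    return idx.T  # shape (stride, batch_size): one minibatch per row


# 25 000 training snapshots sampled with delta t_CNN = 1
batches = strided_minibatches(25_000, 50)
# batches.shape == (500, 50); batches[0, :3] is [0, 500, 1000]
```

The loss is identical to `np.mean((q_hat - q) ** 2)`, so it does not depend on how the spatial and velocity axes of a snapshot are ordered.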

3.2. Echo state networks

Once the encoder has mapped the flowfield at the current time step onto the latent space, $\boldsymbol {z}(t_i) = \boldsymbol {g}(\boldsymbol {q}(t_i))$, we wish to compute the latent state at the next time step, $\boldsymbol {z}(t_{i+1})$. Because the latent space does not contain full information about the system, the next latent state, $\boldsymbol {z}(t_{i+1})$, cannot be computed straightforwardly as a function of the current latent state only, $\boldsymbol {z}(t_i)$ (for more details refer to Kantz & Schreiber 2004; Vlachas et al. 2020). To compute the latent vector at the next time step, we incorporate information from multiple previous time steps to retain a recursive memory of the past. (This can alternatively be motivated by dynamical systems’ reconstruction via delayed embedding (Takens 1981), i.e. $\boldsymbol {z}(t_{i+1}) = \boldsymbol {F}(\boldsymbol {z}(t_{i}), \boldsymbol {z}(t_{i-1}), \ldots, \boldsymbol {z}(t_{i-d_{{emb}}}))$, where $d_{{emb}}$ is the embedding dimension.) This can be achieved with RNNs, which compute the next time step as a function of previous time steps by updating an internal state that keeps memory of the system. Because of the long-lasting time dependencies of the internal state, however, training RNNs with backpropagation through time is notoriously difficult (Werbos 1990). ESNs overcome this issue by nonlinearly expanding the inputs into a higher-dimensional system, the reservoir, which acts as the memory of the system (Lukoševičius 2012). The output of the network is a linear combination of the reservoir's dynamics, whose weights are the only trainable parameters of the system. Thanks to this architecture, training ESNs consists of a straightforward linear regression problem, which avoids backpropagation through time.
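The delay-embedding map can be made concrete by building its arguments explicitly. In the NumPy sketch below, `delay_embed` is a hypothetical helper: each input row stacks $\boldsymbol{z}(t_i),\ldots,\boldsymbol{z}(t_{i-d_{emb}})$ and the matching target is $\boldsymbol{z}(t_{i+1})$. A regression model for $\boldsymbol{F}$ could be fitted on these pairs, whereas the ESN avoids choosing $d_{emb}$ explicitly by storing the memory in its reservoir.

```python
import numpy as np


def delay_embed(z: np.ndarray, d_emb: int):
    """Delay-embedded inputs and next-step targets from a latent time series.

    z has shape (N_t, N_lat). Input row i stacks z(t_i), z(t_{i-1}), ...,
    z(t_{i-d_emb}); the matching target is z(t_{i+1}).
    """
    n_t = z.shape[0]
    inputs = np.stack([
        np.concatenate([z[i - k] for k in range(d_emb + 1)])
        for i in range(d_emb, n_t - 1)
    ])                        # (N_t - d_emb - 1, (d_emb + 1) N_lat)
    targets = z[d_emb + 1:]   # (N_t - d_emb - 1, N_lat)
    return inputs, targets


z = np.arange(10.0).reshape(5, 2)   # five snapshots of a 2-D latent state
x, y = delay_embed(z, d_emb=1)
# x[0] == [2., 3., 0., 1.] (z(t_1) then z(t_0)); y[0] == [4., 5.] (z(t_2))
```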

As shown in figure 3, in an ESN, at any time $t_i$: (i) the latent state input, $\boldsymbol {z}(t_i)$, is mapped into the reservoir state by the input matrix, $\boldsymbol{\mathsf{W}}_{{in}} \in \mathbb {R}^{N_r\times (N_{{lat}}+1)}$, where $N_r > N_{{lat}}$; (ii) the reservoir state, $\boldsymbol {r} \in \mathbb {R}^{N_r}$, is updated at each time iteration as a function of the current input and its previous value; and (iii) the updated reservoir is used to compute the output, which is the predicted latent state at the next time step, $\hat {\boldsymbol {z}}(t_{i+1})$. This process yields the discrete dynamical equations that govern the ESN's evolution (Lukoševičius 2012)

\begin{equation} \left.\begin{gathered} \boldsymbol{r}(t_{i+1}) = \tanh\Big(\boldsymbol{\mathsf{W}}_{{in}}\Big[\tilde{\boldsymbol{z}}(t_i);b_{in}\Big]+ \boldsymbol{\mathsf{W}}\boldsymbol{r}(t_i)\Big), \\ \hat{\boldsymbol{z}}(t_{i+1}) = \Big[\boldsymbol{r}(t_{i+1});1\Big]^{\rm T}\boldsymbol{\mathsf{W}}_{{out}}, \end{gathered}\right\} \end{equation}

where $\tilde {({\cdot })}$ indicates that each component is normalised by its range, $\boldsymbol{\mathsf{W}} \in \mathbb {R}^{N_r\times N_r}$ is the state matrix, $b_{{in}}$ is the input bias and $\boldsymbol{\mathsf{W}}_{{out}} \in \mathbb {R}^{(N_{r}+1)\times N_{{lat}}}$ is the output matrix. The matrices $\boldsymbol{\mathsf{W}}_{{in}}$ and $\boldsymbol{\mathsf{W}}$ are (pseudo)randomly generated and fixed, whilst the weights of the output matrix, $\boldsymbol{\mathsf{W}}_{{out}}$, are computed by training the network. The input matrix, $\boldsymbol{\mathsf{W}}_{{in}}$, has only one non-zero element per row, which is sampled from a uniform distribution in $[-\sigma _{{in}},\sigma _{{in}}]$, where $\sigma _{{in}}$ is the input scaling. The state matrix, $\boldsymbol{\mathsf{W}}$, is an Erdős–Rényi matrix with average connectivity $d=3$, in which each neuron (each row of $\boldsymbol{\mathsf{W}}$) has on average only $d$ connections (non-zero elements), which are obtained by sampling from a uniform distribution in $[-1,1]$; the entire matrix is then scaled by a multiplication factor to set the spectral radius, $\rho$. The spectral radius weighs the contribution of past inputs in the computation of the next time step; therefore, it determines the embedding dimension, $d_{{emb}}$: the larger the spectral radius, the more memory of previous inputs the machine has (Lukoševičius 2012). The connectivity is kept small to speed up the computation of $\boldsymbol{\mathsf{W}}\boldsymbol {r}(t_i)$, which, thanks to the sparseness of $\boldsymbol{\mathsf{W}}$, requires on average only $N_rd$ operations. The biases in the input and output layers are added to break the inherent symmetry of the basic ESN architecture (Lu et al. 2017). The input bias, $b_{{in}}=0.1$, is selected to have the same order of magnitude as the normalised inputs, $\tilde {\boldsymbol {z}}$, whilst the output bias is determined by training $\boldsymbol{\mathsf{W}}_{{out}}$.
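A minimal NumPy sketch of the random matrices and of one step of the ESN equations is given below. It is an illustration under stated assumptions, not the authors' code: dense arrays stand in for the sparse state matrix (a sparse format such as `scipy.sparse` would give the $N_rd$ cost mentioned above), the single non-zero entry of a row of $\boldsymbol{\mathsf{W}}_{in}$ is allowed to fall on the bias column, the ranges used for normalisation are made up, and the function names are hypothetical.

```python
import numpy as np


def build_esn(n_lat: int, n_r: int, sigma_in: float, rho: float, d: float = 3.0, seed: int = 0):
    """Return the fixed input matrix (n_r, n_lat + 1) and state matrix (n_r, n_r)."""
    rng = np.random.default_rng(seed)
    # Input matrix: one non-zero per row, uniform in [-sigma_in, sigma_in];
    # the last column multiplies the input bias b_in
    w_in = np.zeros((n_r, n_lat + 1))
    cols = rng.integers(0, n_lat + 1, size=n_r)
    w_in[np.arange(n_r), cols] = rng.uniform(-sigma_in, sigma_in, size=n_r)
    # State matrix: Erdős–Rényi, each entry non-zero with probability d / n_r,
    # so that every row has d non-zeros on average, values uniform in [-1, 1]
    mask = rng.random((n_r, n_r)) < d / n_r
    w = np.where(mask, rng.uniform(-1.0, 1.0, size=(n_r, n_r)), 0.0)
    # Rescale so that the largest eigenvalue magnitude equals rho
    w *= rho / np.max(np.abs(np.linalg.eigvals(w)))
    return w_in, w


def normalise_by_range(z: np.ndarray, z_range: np.ndarray) -> np.ndarray:
    """Component-wise normalisation z~ = z / range(z)."""
    return z / z_range


def esn_step(r, z_tilde, w_in, w, b_in=0.1):
    """r(t_{i+1}) = tanh(W_in [z~(t_i); b_in] + W r(t_i))."""
    return np.tanh(w_in @ np.append(z_tilde, b_in) + w @ r)


def esn_output(r, w_out):
    """z^(t_{i+1}) = [r(t_{i+1}); 1]^T W_out."""
    return np.append(r, 1.0) @ w_out


w_in, w = build_esn(n_lat=4, n_r=100, sigma_in=0.5, rho=0.9)
z_range = np.array([2.0, 2.0, 1.0, 1.0])          # hypothetical ranges
r_next = esn_step(np.zeros(100), normalise_by_range(np.ones(4), z_range), w_in, w)
z_next = esn_output(r_next, np.zeros((101, 4)))   # untrained W_out, shape check only
```

Rescaling by the largest eigenvalue magnitude is what fixes $\rho$ exactly, whatever the random draw of the sparse entries.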

Figure 3. Schematic representation of the closed-loop evolution of the ESN in the latent space, which is decompressed by the decoder.

The ESN can be run in either the open-loop or the closed-loop configuration. In the open-loop configuration, we feed the data as the input at each time step to compute the reservoir dynamics, $\boldsymbol {r}(t_i)$. We use the open-loop configuration for washout and training. Washout is the initial transient of the network, during which we do not compute the output, $\hat {\boldsymbol {z}}(t_{i+1})$. The purpose of washout is for the reservoir state to become (i) up-to-date with respect to the current state of the system and (ii) independent of the arbitrarily chosen initial condition, $\boldsymbol {r}(t_0) = {0}$ (echo state property). After washout, we train the output matrix, $\boldsymbol{\mathsf{W}}_{{out}}$, by minimising the MSE between the outputs and the data over the training set. Training the network on $N_{{tr}} + 1$ snapshots consists of solving the linear system (ridge regression)

\begin{equation} \left(\boldsymbol{\mathsf{R}}\boldsymbol{\mathsf{R}}^{\rm T} + \beta\boldsymbol{\mathsf{I}}\right)\boldsymbol{\mathsf{W}}_{{out}} = \boldsymbol{\mathsf{R}}\boldsymbol{\mathsf{Z}}_{{d}}^{\rm T}, \end{equation}

where $\boldsymbol{\mathsf{R}}\in \mathbb {R}^{(N_r+1)\times N_{{tr}}}$ and $\boldsymbol{\mathsf{Z}}_{{d}}\in \mathbb {R}^{N_{{lat}}\times N_{{tr}}}$ are the horizontal concatenations of the reservoir states with bias, $[\boldsymbol {r};1]$, and of the output data, respectively; $\boldsymbol{\mathsf{I}}$ is the identity matrix and $\beta$ is the Tikhonov regularisation parameter (Tikhonov et al. 2013). In the closed-loop configuration (figure 3), starting from an initial data point as an input and an initial reservoir state obtained through washout, the output, $\hat {\boldsymbol {z}}$, is fed back to the network as the input for the next time step prediction. In doing so, the network evolves autonomously in time. After training, the closed-loop configuration is deployed for validation and testing on unseen dynamics.
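The open-loop washout and training, the ridge regression and the closed-loop forecast can be strung together in a short NumPy sketch. It assumes that the range normalisation is applied to the inputs only, so that $\boldsymbol{\mathsf{W}}_{out}$ maps to the un-normalised latent variables; it uses a sinusoidal signal as a hypothetical stand-in for the latent dynamics and illustrative, untuned values of $N_r$, $\sigma_{in}$, $\rho$ and $\beta$. The matrix construction follows the description above with dense arrays, and all names are hypothetical.

```python
import numpy as np


def make_reservoir(n_in, n_r, sigma_in, rho, d=3, seed=0):
    """Fixed random input and state matrices, built as described in the text."""
    rng = np.random.default_rng(seed)
    w_in = np.zeros((n_r, n_in + 1))
    w_in[np.arange(n_r), rng.integers(0, n_in + 1, n_r)] = rng.uniform(-sigma_in, sigma_in, n_r)
    w = np.where(rng.random((n_r, n_r)) < d / n_r, rng.uniform(-1.0, 1.0, (n_r, n_r)), 0.0)
    w *= rho / np.max(np.abs(np.linalg.eigvals(w)))
    return w_in, w


def open_loop(z_tilde, w_in, w, r0=None, b_in=0.1):
    """Teacher forcing: row i of the result is the state after feeding z_tilde[i]."""
    r = np.zeros(w.shape[0]) if r0 is None else r0   # r(t_0) = 0 by default
    states = np.empty((len(z_tilde), w.shape[0]))
    for i, z_i in enumerate(z_tilde):
        r = np.tanh(w_in @ np.append(z_i, b_in) + w @ r)
        states[i] = r
    return states


def train_w_out(states, z_next, beta):
    """Solve (R R^T + beta I) W_out = R Z_d^T for the output matrix."""
    R = np.vstack([states.T, np.ones(len(states))])  # (N_r + 1, N_tr), bias row of ones
    Z_d = z_next.T                                   # (N_lat, N_tr)
    return np.linalg.solve(R @ R.T + beta * np.eye(R.shape[0]), R @ Z_d.T)


def closed_loop(r, z_start, n_steps, w_in, w, w_out, z_range, b_in=0.1):
    """Autonomous forecast: each prediction is normalised and fed back as input."""
    z_in, preds = z_start, []
    for _ in range(n_steps):
        r = np.tanh(w_in @ np.append(z_in / z_range, b_in) + w @ r)
        z_in = np.append(r, 1.0) @ w_out             # z_hat(t_{i+1})
        preds.append(z_in)
    return np.array(preds)


# Toy latent signal (hypothetical stand-in for the CAE latent variables)
t = 0.1 * np.arange(2000)
z = np.stack([np.sin(t), np.cos(t)], axis=1)
z_range = z.max(axis=0) - z.min(axis=0)
n_wash, n_train = 100, 1500

w_in, w = make_reservoir(n_in=2, n_r=200, sigma_in=0.5, rho=0.9)
# Washout from r(t_0) = 0: these states are discarded
r_wash = open_loop(z[:n_wash] / z_range, w_in, w)[-1]
# Training: the state after feeding z(t_i) is paired with the target z(t_{i+1})
states = open_loop(z[n_wash:n_train] / z_range, w_in, w, r0=r_wash)
targets = z[n_wash + 1:n_train + 1]
w_out = train_w_out(states, targets, beta=1e-6)
fit = np.hstack([states, np.ones((len(states), 1))]) @ w_out
train_mse = np.mean((fit - targets) ** 2)

# Closed loop: last training state plus the next data point as initial input
forecast = closed_loop(states[-1], z[n_train], 50, w_in, w, w_out, z_range)
```

Solving the $(N_r+1)\times(N_r+1)$ system with `np.linalg.solve` instead of forming an explicit inverse is a standard choice for numerical robustness, and discarding the washout states keeps the arbitrary $\boldsymbol{r}(t_0)=\boldsymbol{0}$ out of the regression.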

3.2.1. Validation

During validation, we use part of the data to select the hyperparameters of the network by minimising the error of the prediction with respect to the data. In this work, we optimise the input scaling, $\sigma _{{in}}$, the spectral radius, $\rho$, and the Tikhonov parameter, $\beta$, which are the key hyperparameters for the performance of the network (Lukoševičius 2012). We use Bayesian optimisation to select $\sigma _{{in}}$ and $\rho$ and, for each candidate pair $[\sigma _{{in}},\rho ]$, we perform a grid search to select $\beta$ (Racca & Magri 2021). The ranges of the hyperparameters vary as a function of the testcase (see the supplementary material). In addition, we add to the training inputs, $\boldsymbol {z}_{tr}$, Gaussian noise, $\mathcal {N}$, with zero mean and a standard deviation proportional to that of the data, such that $z_{tr_i} = z_i + \mathcal {N}(0,k_z\sigma (z_i))$, where $\sigma ({\cdot })$ is the standard deviation and $k_z$ is a tunable parameter (Appendix D). Adding noise to the data improves the ESN forecasting of chaotic dynamics because the network explores more regions around the attractor, thereby becoming more robust to errors (Lukoševičius 2012; Vlachas et al. 2020; Racca & Magri 2022a).
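The noise augmentation amounts to a component-wise perturbation scaled by the standard deviation of each latent variable. In the NumPy sketch below, `add_training_noise` and its `seed` argument are hypothetical, the standard deviation is computed over whatever training array is passed in, and the synthetic data are for illustration only.

```python
import numpy as np


def add_training_noise(z_train: np.ndarray, k_z: float, seed: int = 0) -> np.ndarray:
    """Return z_tr = z + N(0, k_z * sigma(z)), applied component by component.

    z_train has shape (N_tr, N_lat); sigma is the standard deviation of each
    latent variable over the given training set.
    """
    rng = np.random.default_rng(seed)
    sigma = z_train.std(axis=0)                   # one value per latent variable
    noise = rng.standard_normal(z_train.shape) * (k_z * sigma)
    return z_train + noise


# Synthetic latent variables with different amplitudes
z = np.random.default_rng(1).standard_normal((100_000, 3)) * np.array([1.0, 2.0, 5.0])
z_tr = add_training_noise(z, k_z=0.01)
```

Scaling the noise by $\sigma(z_i)$ keeps the relative perturbation equal for all latent variables, regardless of their amplitude.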

To select the hyperparameters, we employ the recycle validation (RV) (Racca & Magri 2021). The RV is a validation strategy tailored to the prediction of dynamical systems with RNNs, which has been shown to outperform other validation strategies, such as the single shot validation (SSV), in the prediction of chaotic flows (more details are given in Appendix D). In the RV, the network is trained only once on the entire dataset, and validation is performed on multiple intervals already used for training. This is possible because RNNs operate in two configurations (open-loop and closed-loop), which means that the networks can be validated in closed-loop on data used for training in open-loop.
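The logic of the RV can be sketched as a loop over several starting points inside the training set, all evaluated with a network trained once. In the NumPy sketch below, `forecast_fn` is a hypothetical callable (for example, open-loop washout followed by closed-loop prediction), the starting points are evenly spaced and the mean squared error is the validation metric; these choices are illustrative assumptions, and the exact RV settings are those of Racca & Magri (2021). A hyperparameter search over $\sigma_{in}$, $\rho$ and $\beta$ would minimise this score.

```python
import numpy as np


def recycle_validation_error(forecast_fn, z, n_intervals, n_wash, horizon):
    """Average closed-loop MSE over intervals taken from the training data.

    forecast_fn(z_past, n_steps) is a hypothetical callable: it washes out a
    network already trained on all of z with z_past (open loop) and returns an
    (n_steps, N_lat) closed-loop forecast of the following snapshots.
    """
    starts = np.linspace(n_wash, len(z) - horizon, n_intervals).astype(int)
    errors = [
        np.mean((forecast_fn(z[s - n_wash:s], horizon) - z[s:s + horizon]) ** 2)
        for s in starts
    ]
    return float(np.mean(errors))


def persistence(z_past, n_steps):
    """Trivial stand-in forecaster: repeat the last observed state."""
    return np.repeat(z_past[-1:], n_steps, axis=0)
```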

4. Spatial reconstruction

We analyse the ability of the autoencoder to create a reduced-order representation of the flowfield, i.e. the latent space. The focus is on the spatial reconstruction of the flow. Prediction in time is discussed in §§ 5–6.

4.1. Reconstruction error

The autoencoder maps the flowfield onto the latent space and then reconstructs the flowfield based on the information contained in the latent space variables. The reconstructed flowfield (the output) is compared with the original flowfield (the input). The difference between the two flowfields is the reconstruction error, which we quantify with the normalised root-mean-squared error (NRMSE)

\begin{equation} \mathrm{NRMSE}(\boldsymbol{q}) = \sqrt{\dfrac{\displaystyle\sum\limits_{i=1}^{N_{{phys}}} \dfrac{1}{N_{{phys}}} (\hat{q}_i-q_{i})^2 }{\displaystyle\sum\limits_{i=1}^{N_{{phys}}} \dfrac{1}{N_{{phys}}}\sigma(q_i)^2}}, \end{equation}

where $i$ indicates the $i$th component of the physical flowfield, $\boldsymbol {q}$, and of the reconstructed flowfield, $\hat {\boldsymbol {q}}$, and $\sigma ({\cdot })$ is the standard deviation. We compare the results for different sizes of the latent space with the reconstruction obtained by POD (Lumley 1970), also known as principal component analysis (Pearson 1901). In POD, the $N_{{lat}}$-dimensional orthogonal basis that spans the reduced-order space is given by the eigenvectors $\boldsymbol {\varPhi }^{({phys})}_i$ associated with the largest $N_{{lat}}$ eigenvalues of the covariance matrix, $\boldsymbol{\mathsf{C}}=({1}/({N_t-1}))\boldsymbol{\mathsf{Q}}_{\boldsymbol{\mathsf{d}}}^T\boldsymbol{\mathsf{Q}}_{\boldsymbol{\mathsf{d}}}$, where $\boldsymbol{\mathsf{Q}}_{\boldsymbol{\mathsf{d}}}$ is the vertical concatenation of the $N_t$ flow snapshots available during training, from which the mean has been subtracted. Both POD and the autoencoder minimise the same loss function (3.3). However, POD finds the optimal linear subspace, i.e. the subspace onto which linear projections of the data preserve the most energy, whereas the autoencoder learns a nonlinear mapping of the data, which is optimised to preserve its energy. (In the limit of linear activation functions, autoencoders perform similarly to POD (Baldi & Hornik 1989; Milano & Koumoutsakos 2002; Murata et al. 2020).) The size of the latent space of the autoencoder is selected to be larger than the Kaplan–Yorke dimension, which is $N_{KY} = \{3, 9.5\}$ for the quasiperiodic and turbulent testcases, respectively (§ 2.1). We do so to account for the (nonlinear) approximation introduced by the CAE-ESN and for numerical errors in the optimisation of the architecture.
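The NRMSE and the POD reconstruction used for comparison can be sketched in NumPy as follows. The sketch forms the $N_{phys}\times N_{phys}$ covariance matrix explicitly, which is only practical for small toy data; for flowfields, the method of snapshots or a singular value decomposition of $\boldsymbol{\mathsf{Q}}_{\boldsymbol{\mathsf{d}}}$ yields the same modes at a lower cost. The argument `sigma` is assumed to hold the standard deviation of each component over time, and the function names are hypothetical.

```python
import numpy as np


def nrmse(q_hat: np.ndarray, q: np.ndarray, sigma: np.ndarray) -> float:
    """NRMSE of one snapshot: sqrt(mean_i (q_hat_i - q_i)^2 / mean_i sigma_i^2)."""
    return float(np.sqrt(np.mean((q_hat - q) ** 2) / np.mean(sigma ** 2)))


def pod_basis(q_train: np.ndarray, n_lat: int):
    """Mean and leading n_lat POD modes from C = Q_d^T Q_d / (N_t - 1)."""
    mean = q_train.mean(axis=0)
    Q_d = q_train - mean                       # (N_t, N_phys), mean subtracted
    C = Q_d.T @ Q_d / (len(q_train) - 1)       # (N_phys, N_phys)
    eigvals, eigvecs = np.linalg.eigh(C)       # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1][:n_lat]  # largest n_lat eigenvalues
    return mean, eigvecs[:, order]             # modes stored as columns


def pod_reconstruct(q: np.ndarray, mean: np.ndarray, phi: np.ndarray) -> np.ndarray:
    """Project snapshots onto the POD subspace and map back to physical space."""
    a = (q - mean) @ phi                       # POD coefficients
    return mean + a @ phi.T
```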

Figure 4 shows the reconstruction error over 600 000 snapshots (for a total of $2\times 10^6$ snapshots between the two regimes) in the test set. We plot the results for POD and the autoencoder through the energy (variance) captured by the modes

\begin{equation} \mathrm{Energy} = 1-\overline{\mathrm{NRMSE}}^2, \end{equation}

where $\overline {\mathrm {NRMSE}}$ is the time-averaged NRMSE. The rich spatial complexity of the turbulent flow (figure 4b), with respect to the quasiperiodic solution (figure 4a), is apparent from the magnitude and slope of the reconstruction error as a function of the latent space dimension. In the quasiperiodic case, the error is at least one order of magnitude smaller than in the turbulent case for the same number of modes, showing that fewer modes are needed to characterise the flowfield. In both cases, the autoencoder accurately reconstructs the flowfield. For example, in the quasiperiodic and turbulent cases the nine-dimensional autoencoder latent space captures $99.997\,\%$ and $99.78\,\%$ of the energy, respectively. In both cases, the nine-dimensional autoencoder latent space provides a better reconstruction than 100 POD modes. Overall, the autoencoder provides an error at least two orders of magnitude smaller than POD for the same size of the latent space. These results show that the nonlinear compression of the autoencoder outperforms the optimal linear compression of POD.

Figure 4. Reconstruction error in the test set as a function of the latent space size in (a) the quasiperiodic case and (b) the chaotic case for POD and the CAE.

4.2. Autoencoder principal directions and POD modes

We compare the POD modes of the autoencoder reconstruction, $\boldsymbol {\varPhi }^{({phys})}_{{Dec}}$, with the POD modes of the data, $\boldsymbol {\varPhi }^{({phys})}_{{True}}$, to interpret the reconstruction of the autoencoder in terms of POD. For brevity, we limit our analysis to the latent space of 18 variables in the turbulent case. We decompose the autoencoder latent dynamics into proper orthogonal modes, $\boldsymbol {\varPhi }^{({lat})}_i$, which we name ‘autoencoder principal directions’ to distinguish them from the POD modes of the data. Figure 5(a) shows that four principal directions are dominant, as indicated by the change in slope of the energy, and that they contain roughly $97\,\%$ of the energy of the latent space signal. We therefore focus our analysis on the four principal directions, from which we obtain the decoded field

\begin{equation} \boldsymbol{\hat{q}}_{{Dec}4}(t) = \boldsymbol{f}\left(\sum_{i=1}^4 a_i(t) \boldsymbol{\varPhi}^{({lat})}_i\right), \end{equation}

where $a_i(t) = \boldsymbol {\varPhi }^{({lat})T}_i\boldsymbol {z}(t)$ and $\boldsymbol {f}({\cdot })$ is the decoder (§ 3.1). The energy content of the reconstructed flowfield is shown in figure 5(b). On the one hand, the full latent space, which consists of 18 modes, accurately reconstructs the energy content of the first 50 POD modes. On the other hand, $\boldsymbol {\hat {q}}_{{Dec}4}$ closely matches the energy content of the first few POD modes of the true flow field, but the error increases for higher-order POD modes. This happens because $\boldsymbol {\hat {q}}_{{Dec}4}$ contains less information than the true flow field, so that fewer POD modes are necessary to describe it. The results are further corroborated by the scalar product with the physical POD modes of the data (figure 6). The decoded field obtained from all the principal directions accurately describes the majority of the first 50 physical POD modes, and $\boldsymbol {\hat {q}}_{{Dec}4}$ accurately describes the first POD modes. These results indicate that a few nonlinear modes contain information about several POD modes.
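The autoencoder principal directions and the truncated latent state that enters the decoder can be computed as below (NumPy). The covariance is computed from the mean-subtracted latent signal, while the coefficients $a_i(t)$ follow the definition above and project $\boldsymbol{z}(t)$ directly; the decoder $\boldsymbol{f}$ is not reproduced, and the helper names are hypothetical. The last function returns the magnitude of the scalar products between two sets of orthonormal modes, the kind of quantity compared in figure 6, taken here in absolute value because the sign of a POD mode is arbitrary.

```python
import numpy as np


def principal_directions(z: np.ndarray):
    """Eigen-decomposition of the latent covariance, sorted by decreasing energy.

    z has shape (N_t, N_lat). Returns the directions Phi^(lat)_i as columns
    and the fraction of latent energy captured by each direction.
    """
    z_c = z - z.mean(axis=0)
    eigvals, eigvecs = np.linalg.eigh(z_c.T @ z_c / (len(z) - 1))
    order = np.argsort(eigvals)[::-1]
    return eigvecs[:, order], eigvals[order] / eigvals.sum()


def truncated_latent(z: np.ndarray, phi: np.ndarray, k: int) -> np.ndarray:
    """sum_{i<=k} a_i(t) Phi_i with a_i(t) = Phi_i^T z(t); input to the decoder f."""
    a = z @ phi[:, :k]            # (N_t, k) coefficients
    return a @ phi[:, :k].T       # (N_t, N_lat)


def mode_overlap(phi_true: np.ndarray, phi_dec: np.ndarray) -> np.ndarray:
    """|scalar products| between two sets of orthonormal modes (columns)."""
    return np.abs(phi_true.T @ phi_dec)
```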

Figure 5. (a) Energy as a function of the latent space principal directions and (b) energy as a function of the POD modes in the physical space for the field reconstructed using 4 and 18 (all) latent space principal directions and for the data (True).

Figure 6. Scalar product of the POD modes of the data with (a) the POD modes of the decoded flowfield obtained from all the principal directions and (b) the POD modes of the decoded flowfield obtained from four principal directions.

A visual comparison of the most energetic POD modes of the true flow field and of $\boldsymbol {\hat {q}}_{{Dec}4}$ is shown in figure 7. The first eight POD modes are recovered accurately. The decoded latent space principal directions, $\boldsymbol {f}(\boldsymbol {\varPhi }^{({lat})}_i)$, are plotted in figure 8. The decoded principal directions come in pairs, as the linear POD modes do, but they differ significantly from any of the POD modes of figure 7. This is because each mode contains information about multiple POD modes, since the decoder infers nonlinear interactions among the POD modes. Further analysis of the modes and the latent space requires tools from Riemannian geometry (Magri & Doan 2022), which is beyond the scope of this study.
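The truncated field $\boldsymbol {\hat {q}}_{{Dec}4}$ and the decoded directions $\boldsymbol {f}(\boldsymbol {\varPhi }^{({lat})}_i)$ both rely on a principal component analysis of the latent vectors. The sketch below shows one way to do this with NumPy, assuming the latent vectors are stored row-wise; the `decoder` function is a hypothetical placeholder (a random `tanh` map) standing in for the trained convolutional decoder, and the random latent vectors are stand-in data.

```python
import numpy as np


def latent_pca(z):
    """Principal directions of latent vectors z with shape (n_time, n_latent)."""
    mean = z.mean(axis=0)
    _, s, vt = np.linalg.svd(z - mean, full_matrices=False)
    # Columns of vt.T are the latent principal directions Phi_i^(lat)
    return mean, vt.T, s**2 / (z.shape[0] - 1)


def truncate_latent(z, mean, directions, m):
    """Keep only the first m principal components of z, in latent coordinates."""
    coeffs = (z - mean) @ directions[:, :m]
    return mean + coeffs @ directions[:, :m].T


# Stand-ins: random 18-dimensional latent vectors and a hypothetical decoder
rng = np.random.default_rng(1)
z = rng.standard_normal((500, 18))
w_dec = rng.standard_normal((18, 64))


def decoder(latent):
    # Hypothetical placeholder for the trained convolutional decoder f
    return np.tanh(latent @ w_dec)


mean, phi_lat, variance = latent_pca(z)
q_dec4 = decoder(truncate_latent(z, mean, phi_lat, 4))  # analogue of q_hat_Dec4
f_phi = decoder(phi_lat[:, :4].T)  # decoded principal directions f(Phi_i^(lat))
```

Keeping all 18 components returns the original latent vectors, which is the analogue of the decoded field obtained from all the principal directions.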

Figure 7. POD modes, one per row, for (a) the reconstructed flow field based on four principal components in latent space and (b) the data. Even modes are shown in the supplementary material. The reconstructed flow contains the first eight modes.

Figure 8. Decoded four principal directions in latent space.

5. Time-accurate prediction

Once the autoencoder is trained to provide the latent space, we train an ensemble of 10 ESNs to predict the latent space dynamics. We use an ensemble of networks to take into account the random initialisation of the input and state matrices (Racca & Magri 2022a). We predict the low-dimensional dynamics to reduce the computational cost, which becomes prohibitive when time-accurately predicting high-dimensional systems with RNNs (Pathak et al. 2018a; Vlachas et al. 2020). Figure 9 shows the computational time required to forecast the evolution of the system by solving the governing equations and by using the CAE-ESN. The governing equations are solved on a single Nvidia Quadro RTX 4000 GPU, whereas the CAE-ESN uses a single Intel i7-10700K CPU (ESN) and a single Nvidia Quadro RTX 4000 GPU (decoder) in sequence. The CAE-ESN is two orders of magnitude faster than solving the governing equations because the ESNs use sparse matrix multiplications and can advance in time with a $\delta t$ larger than that of the numerical solver (see chapter 3.4 of Racca 2023).
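To make the latent-space forecasting step concrete, the sketch below implements a generic echo state network: a fixed random sparse reservoir, a readout trained by ridge regression in open loop, and a closed-loop (autonomous) forecast in which each prediction is fed back as the next input. It is a minimal illustration under assumptions, not the authors' implementation: the class name, the hyperparameters (spectral radius `rho`, input scaling `sigma_in`, connectivity `density`, Tikhonov parameter `beta`) and the absence of bias terms are choices made here for brevity. The reservoir is stored as a dense array with zeros, whereas the speed-up discussed above relies on genuinely sparse multiplications. An ensemble is obtained by repeating the construction with different random seeds, which changes only the input and state matrices.

```python
import numpy as np


class EchoStateNetwork:
    """Minimal ESN sketch (hypothetical class; no bias terms, tanh activation)."""

    def __init__(self, n_in, n_res, rho=0.9, sigma_in=0.5, density=0.05,
                 beta=1e-6, seed=0):
        rng = np.random.default_rng(seed)
        # Sparse input matrix: each neuron is driven by one randomly chosen input
        self.w_in = np.zeros((n_res, n_in))
        cols = rng.integers(0, n_in, n_res)
        self.w_in[np.arange(n_res), cols] = sigma_in * rng.uniform(-1, 1, n_res)
        # Sparse state matrix, rescaled to spectral radius rho
        mask = rng.random((n_res, n_res)) < density
        w = rng.uniform(-1, 1, (n_res, n_res)) * mask
        self.w = w * (rho / np.max(np.abs(np.linalg.eigvals(w))))
        self.beta = beta
        self.w_out = None
        self.r_last = None

    def _step(self, r, u):
        # Reservoir update r(t+1) = tanh(W_in u(t) + W r(t))
        return np.tanh(self.w_in @ u + self.w @ r)

    def fit(self, u, washout=100):
        """Open-loop (teacher-forced) training on u of shape (n_time, n_in)."""
        r = np.zeros(self.w.shape[0])
        states = []
        for t in range(u.shape[0] - 1):
            r = self._step(r, u[t])
            states.append(r)
        R = np.array(states)[washout:]  # state after reading u[t] ...
        Y = u[washout + 1:]             # ... is trained to output u[t+1]
        # Ridge regression for the readout: (R^T R + beta I) W_out = R^T Y
        A = R.T @ R + self.beta * np.eye(R.shape[1])
        self.w_out = np.linalg.solve(A, R.T @ Y)
        # Reservoir state after reading the last sample, used to start forecasting
        self.r_last = self._step(r, u[-1])
        return self

    def predict_closed_loop(self, n_steps):
        """Autonomous forecast: each output is fed back as the next input."""
        r = self.r_last
        y = r @ self.w_out
        preds = []
        for _ in range(n_steps):
            preds.append(y)
            r = self._step(r, y)
            y = r @ self.w_out
        return np.array(preds)


# Usage: an ensemble of 10 ESNs on a toy two-component quasiperiodic signal
t = np.arange(0.0, 200.0, 0.1)
u = np.stack([np.sin(t), np.cos(np.sqrt(2.0) * t)], axis=1)
ensemble = [EchoStateNetwork(2, 100, seed=s).fit(u) for s in range(10)]
forecasts = np.array([esn.predict_closed_loop(50) for esn in ensemble])
```

In the CAE-ESN the input `u` would be the time series of latent vectors, and each closed-loop prediction would be passed through the decoder to recover the flow field.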

Figure 9. Computational time required to forecast the evolution of the system for one time unit. Times for the CAE-ESN are for the largest size of the reservoir, which takes the longest time.

5.1. Quasiperiodic case

We train the ensemble of ESNs using 15 000 snapshots equispaced by $\delta t = 1$. The networks are validated and tested in closed loop on intervals lasting 500 time units. One closed-loop prediction of the average and local dissipation rates (2.3a,b) in the test set is plotted in figure 10. The network accurately predicts the two quantities for several oscillations (figure 10a,b). The CAE-ESN accurately predicts the entire 4608-dimensional state (see figure 3) over the entire interval, as shown by the NRMSE of the state in figure 10(c).
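The NRMSE of the state is defined in (4.1), which is outside this section. The sketch below assumes the common form in which the squared error of each degree of freedom is normalised by the variance of that degree of freedom in the true data, averaged over the degrees of freedom, and square-rooted; this form, the function name and the random stand-in data are assumptions for illustration only.

```python
import numpy as np


def nrmse(q_true, q_pred, sigma):
    """NRMSE at each time step (assumed form of (4.1)).

    q_true, q_pred: arrays of shape (n_time, n_dof)
    sigma: standard deviation of each degree of freedom of the true data
    """
    return np.sqrt(np.mean((q_pred - q_true) ** 2 / sigma**2, axis=1))


# Usage with stand-in data for a 4608-dimensional state
rng = np.random.default_rng(2)
q_true = rng.standard_normal((500, 4608))
sigma = q_true.std(axis=0)
q_pred = q_true + 0.1 * sigma * rng.standard_normal(q_true.shape)
err_t = nrmse(q_true, q_pred, sigma)  # NRMSE(t) along the closed-loop interval
err_avg = err_t.mean()                # time-averaged NRMSE over the interval
```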

Figure 10. Prediction of (a) the local and (b) the average dissipation rate in the quasiperiodic case in the test set, i.e. unseen dynamics, for a CAE-ESN with a 2000-neuron reservoir and a 9-dimensional latent space.

Figure 11 shows the quantitative results for different latent spaces and reservoir sizes. We plot the percentiles of the network ensemble for the mean over 50 intervals in the test set, $\langle \cdot \rangle$, of the time-averaged NRMSE. The CAE-ESN time-accurately predicts the system in all cases analysed, except for small reservoirs in large latent spaces (figure 11c,d). This is because larger reservoirs are needed to accurately learn the dynamics of larger latent spaces. For all latent spaces, the accuracy of the prediction increases with the size of the reservoir. For reservoirs of 5000 neurons, the NRMSE of the prediction in time is of the same order of magnitude as the NRMSE of the reconstruction (figure 4). This means that the CAE-ESN learns the quasiperiodic dynamics and accurately predicts the future evolution of the system for several characteristic timescales of the system.
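The statistic in figure 11 combines three operations: a time average of the NRMSE within each test interval, the mean $\langle \cdot \rangle$ over the test intervals, and percentiles across the ensemble of networks. The sketch below assumes the NRMSE time series have already been computed and stored in an array of shape (networks, intervals, time steps); the function name and stand-in data are hypothetical.

```python
import numpy as np


def ensemble_percentiles(nrmse_t, q=(25, 50, 75)):
    """Percentiles over the ensemble of the interval-averaged, time-averaged NRMSE.

    nrmse_t: NRMSE time series with shape (n_networks, n_intervals, n_time).
    """
    time_avg = nrmse_t.mean(axis=2)       # time-averaged NRMSE per interval
    per_network = time_avg.mean(axis=1)   # <.>: mean over the test intervals
    return np.percentile(per_network, q)  # spread across the ensemble


# Stand-in: 10 networks, 50 test intervals, 500 time steps each
rng = np.random.default_rng(4)
stand_in = np.abs(rng.normal(0.05, 0.01, size=(10, 50, 500)))
p25, p50, p75 = ensemble_percentiles(stand_in)
```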

Figure 11. The 25th, 50th and 75th percentiles of the average NRMSE in the test set as a function of the latent space size and reservoir size in the quasiperiodic case.

5.2. Turbulent case

We validate and test the networks for the time-accurate prediction of the turbulent dynamics using the prediction horizon (PH), which, in this paper, is defined as the time interval during which the NRMSE (4.1) of the prediction of the network with respect to the true data is smaller than a user-defined threshold, $k$

\begin{equation} \mathrm{PH} = \operatorname*{argmax}_{t_p} \left( t_p \mid \mathrm{NRMSE}(t_p) < k \right), \end{equation}

where $t_p$ is the time from the start of the closed-loop prediction and $k=0.3$. The PH is a commonly used metric, which is tailored for the data-driven prediction of diverging trajectories in chaotic (turbulent) dynamics (Pathak et al. 2018b; Vlachas et al. 2020). The PH is evaluated in LTs (§ 2). (The PH defined here is specific to the data-driven prediction of chaotic dynamics, and differs from the definition of Kaiser et al. (2014).)
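Given the NRMSE along a closed-loop prediction, the PH reduces to finding when the error first reaches the threshold. The sketch below takes the first crossing of $k$, which matches "the time interval during which the NRMSE is smaller than $k$" and coincides with the largest $t_p$ below threshold whenever the error does not dip back under $k$ after crossing it. The sampling convention (the first error sample at $t_p = \delta t$), the function name and the Lyapunov time argument are assumptions for illustration.

```python
import numpy as np


def prediction_horizon(err, dt, lyapunov_time, k=0.3):
    """PH in Lyapunov times from an NRMSE time series.

    err[j] is the NRMSE at t_p = (j + 1) * dt after the start of the closed loop.
    The PH is taken as the time until the error first reaches k; if it never
    does, the whole interval length is returned (a lower bound on the PH).
    """
    crossings = np.nonzero(np.asarray(err) >= k)[0]
    n_below = crossings[0] if crossings.size else len(err)
    return n_below * dt / lyapunov_time
```

The average PH over the test intervals is then the mean of the per-interval values returned by this function.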

Figure 12(a) shows the prediction of the dissipation rate in an interval of the test set, i.e. on data not seen by the network during training. The predicted trajectory closely matches the true data for about 3 LTs. Because the tangent space of chaotic attractors is exponentially unstable, the prediction error ultimately increases with time (figure 12b). To quantitatively assess the performance of the CAE-ESN, we create 20 latent spaces for each of the latent space sizes $[18,36,54]$. Because we train 10 ESNs for each latent space, we analyse results from 600 different CAE-ESNs. Each latent space is obtained by training autoencoders with different random initialisations and optimisations of the weights of the convolutional layers (§ 3.1). The size of the latent space is selected to be larger than the Kaplan–Yorke dimension of the system, $N_{KY} = 9.5$ (§ 2.1), in order to contain the attractor's dynamics. In each latent space, we train the ensemble of 10 ESNs using 60 000 snapshots equispaced by $\delta t =0.5$. Figure 13 shows the average PH over 100 intervals in the test set for the best-performing latent space of each size. The CAE-ESN successfully predicts the system for up to more than 1.5 LTs for all sizes of the latent space. Increasing the reservoir size slightly improves the performance for a fixed latent space size, whilst latent spaces of different sizes perform similarly. This means that small reservoirs in small latent spaces are sufficient to time-accurately predict the system.
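The latent space sizes are chosen above the Kaplan–Yorke dimension, which follows from the Lyapunov spectrum as $N_{KY} = j + \sum_{i=1}^{j} \lambda_i / |\lambda_{j+1}|$, where the exponents are sorted in decreasing order and $j$ is the largest index for which the partial sum is non-negative. The sketch below implements this standard formula; the Lyapunov exponents of the flow (§ 2.1) are not reproduced here, so the usage line relies on approximate textbook values for the Lorenz system purely as an illustration.

```python
import numpy as np


def kaplan_yorke_dimension(exponents):
    """Kaplan-Yorke dimension from a set of Lyapunov exponents."""
    lam = np.sort(np.asarray(exponents, dtype=float))[::-1]  # decreasing order
    csum = np.cumsum(lam)
    nonneg = np.nonzero(csum >= 0)[0]
    if nonneg.size == 0:
        return 0.0  # the leading exponent is already negative
    j = nonneg[-1] + 1  # number of exponents with a non-negative partial sum
    if j == lam.size:
        return float(lam.size)  # the partial sum never becomes negative
    return j + csum[j - 1] / abs(lam[j])


# Illustration with approximate Lorenz-system exponents
d_lorenz = kaplan_yorke_dimension([0.906, 0.0, -14.572])  # approximately 2.06
```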

Figure 12. Prediction of the dissipation rate in the turbulent case in the test set, i.e. unseen dynamics, for a CAE-ESN with a 10 000-neuron reservoir and a 36-dimensional latent space. The vertical line in panel (a) indicates the PH.

Figure 13. The 25th, 50th and 75th percentiles of the average PH in the test set as a function of the latent space and reservoir size in the turbulent case.

A detailed analysis of the performance of the ensemble of latent spaces of the same size is shown in figure 14. Figure 14(a) shows the reconstruction error. The small amplitude of the errorbars indicates that different autoencoders with the same latent space size reconstruct the flowfield with similar energy. Figure 14(b) shows the performance of the CAE-ESN with reservoirs of 10 000 neurons for the time-accurate prediction as a function of the reconstruction error. In contrast to the error in figure 14(a), the PH varies significantly, ranging from 0.75 to more than 1.5 LTs for all latent space sizes. There is no discernible correlation between the PH and the reconstruction error. This means that the time-accurate performance depends more on the training of the autoencoder than on the latent space size. To quantitatively assess the correlation between two quantities, $[\boldsymbol {a_1},\boldsymbol {a_2}]$, we use the Pearson correlation coefficient (Galton 1886; Pearson 1895)

\begin{equation} r(\boldsymbol{a_1},\boldsymbol{a_2}) = \frac{\displaystyle \sum (a_{1_i}-\langle a_1 \rangle)(a_{2_i}-\langle a_2 \rangle)} {\displaystyle \sqrt{\sum (a_{1_i}-\langle a_1 \rangle)^2}\sqrt{\sum (a_{2_i}- \langle a_2 \rangle)^2}}. \end{equation}

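The Pearson coefficient above can be evaluated directly from its definition; the sketch below does so with NumPy and can be cross-checked against `numpy.corrcoef`. In the analysis of figure 14(b), `a1` and `a2` would be the medians of the reconstruction error and of the PH for each latent space; those values are not reproduced here, so the usage lines only check the limiting cases.

```python
import numpy as np


def pearson_r(a1, a2):
    """Pearson correlation coefficient between two equally long samples."""
    d1 = np.asarray(a1, dtype=float) - np.mean(a1)
    d2 = np.asarray(a2, dtype=float) - np.mean(a2)
    return np.sum(d1 * d2) / (np.sqrt(np.sum(d1**2)) * np.sqrt(np.sum(d2**2)))


# Perfectly correlated and anticorrelated samples give r = 1 and r = -1
r_pos = pearson_r([1, 2, 3], [2, 4, 6])
r_neg = pearson_r([1, 2, 3], [3, 2, 1])
```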

The values $r=\{-1,0,1\}$ indicate perfect anticorrelation, no correlation and perfect correlation, respectively. The Pearson coefficient between the reconstruction error and the PH is $r=-0.10$ for the medians of the 60 cases analysed in figure 14(b), which means that the PH is weakly correlated with the reconstruction error. This indicates that latent spaces that similarly capture the energy of the system may differ in capturing the dynamical content of the system; i.e. some modes that are critical for the dynamical evolution of the system do not necessarily affect the reconstruction error, and therefore may not be captured by the autoencoder. This phenomenon also affects other reduced-order modelling methods, specifically POD (Rowley & Dawson 2017; Agostini 2020). In figure 14(c), we plot the PH of the medians of the errorbars of figure 14(b) for different latent spaces. Although the performance varies within the same size of the latent space, we observe that the PH improves and the range of the errorbars decreases with increasing size of the latent space. This is because autoencoders that better approximate the system in a