Introduction

As trusted local actors, community-based organizations (CBOs) are well-positioned to incorporate research evidence, local expertise, and contextual factors to improve health [Reference Kerner, Rimer and Emmons1–Reference Wilson, Lavis, Travers and Rourke4]. These organizations often fill important gaps in reaching populations served ineffectively by traditional healthcare channels and offer a unique opportunity to promote health equity [Reference Wilson, Lavis, Travers and Rourke4,Reference Griffith, Allen and DeLoney5]. The scale of their potential impact is substantial – CBOs delivered about $200 billion in services in the US in 2017 [Reference Wyman6]. The term CBOs refers to mission-driven organizations that address community needs and reflect community values, are typically nonprofit and led by a board of members, and deliver services in coordination with community stakeholders [Reference Wilson, Lavis and Guta7]. While CBOs can be core implementation channels for evidence-based interventions (EBIs), they face several challenges in this regard. Barriers include insufficient training and skills to use EBIs, competing priorities, balancing capacity-building and service delivery, insufficient organizational supports for the use of EBIs, and a lack of clarity around how to sustain successful EBIs [Reference Stephens and Rimal8–Reference Hailemariam, Bustos, Montgomery, Barajas, Evans and Drahota12]. These challenges are particularly relevant for CBOs working with communities that have been and/or are currently being marginalized and excluded from opportunities for health and wellbeing, where resource constraints are often heightened [Reference Griffith, Allen and DeLoney5,Reference Bach-Mortensen, Lange and Montgomery9]. Building capacity for EBI use is a critical element of designing for dissemination and implementation, for example, as highlighted by Interactive Systems Framework and the push-pull-capacity model [Reference Orleans13,Reference Wandersman, Duffy and Flaspohler14]. Capacity to use EBIs is a driver of implementation outcomes and, ultimately, health impact and is thus a critical area of focus [Reference Brownson, Fielding and Green10]. Capacity-building to implement EBIs has attracted a fair amount of attention, with successes in increasing the adoption and implementation of EBIs, for example, among the staff of local health departments, policymakers, and some community-based settings [Reference McCracken, Friedman and Brandt15,Reference Fagan, Hanson, Briney and David Hawkins16,Reference Brownson, Fielding and Green10].

It is difficult to capitalize on the capacity-building literature given a lack of consensus regarding the definition of capacity as a concept. The World Health Organization describes capacity as the “knowledge, skills, commitment, structures, systems, and leadership to enable effective health promotion” [Reference Smith, Tang and Nutbeam17]. This is echoed by an influential synthesis of the literature on capacity-building for EBI use, which describes capacity as having sufficient structures, personnel, and resources to utilize EBIs [Reference Brownson, Fielding and Green10]. Further expanding potential conceptualizations, frameworks such as the Interactive Systems Framework attend to capacity in the systems integral to putting EBIs into practice, emphasizing general capacity and EBI-specific capacity [Reference Wandersman, Duffy and Flaspohler14].

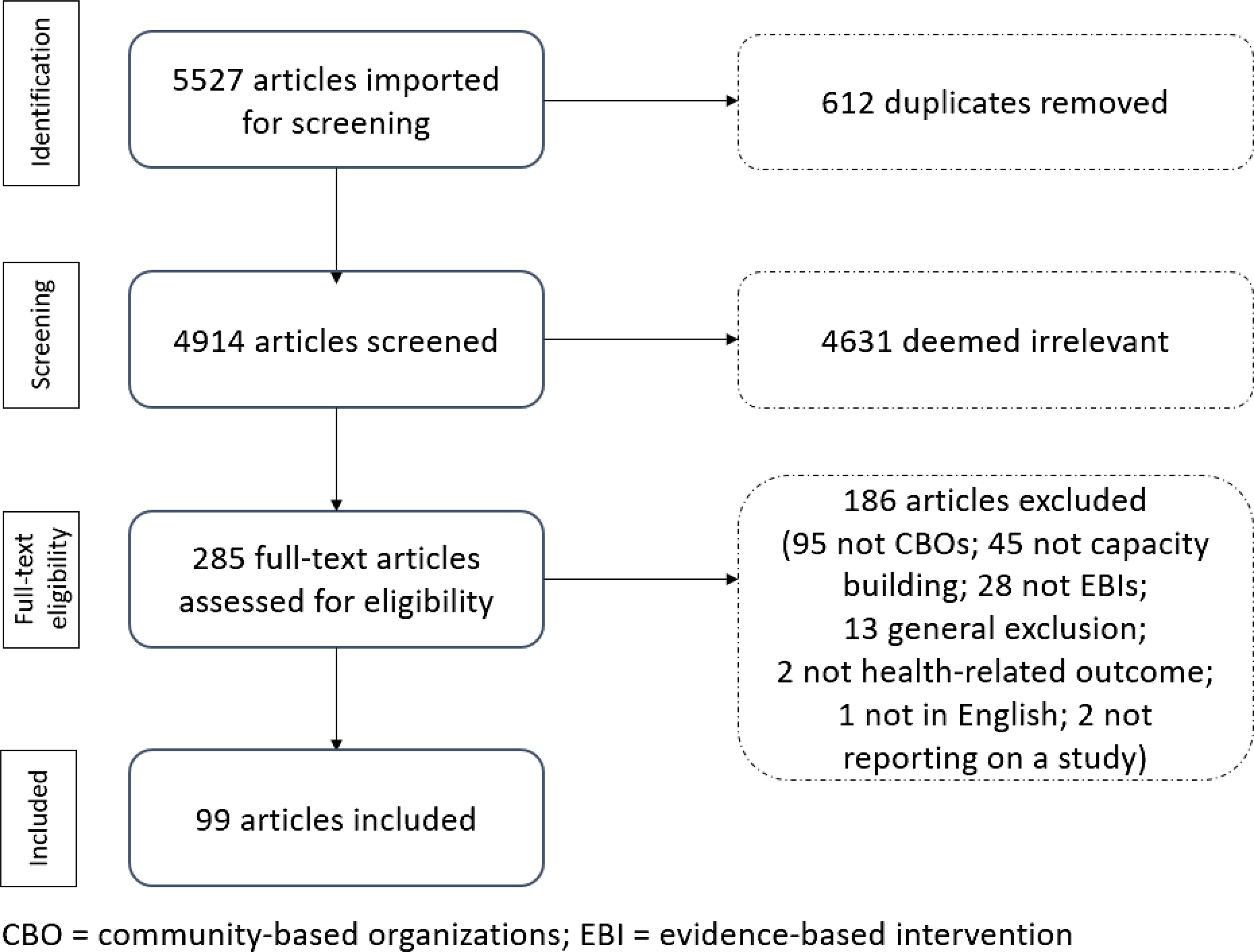

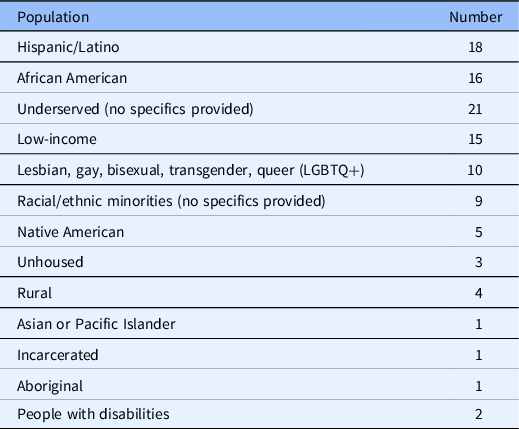

Another limitation in the field is a shortage of validated measures of capacity generally [Reference Crisp, Swerissen and Duckett18,Reference DeCorby-Watson, Mensah, Bergeron, Abdi, Rempel and Manson19] and for use in CBOs [Reference Brownson, Fielding and Green10]. While the use of reliable and valid measures is integral to advancing knowledge regarding the capacity-building implementation strategies that warrant further attention, most measures have been inadequately assessed for psychometric properties [Reference Brownson, Fielding and Green10,Reference Chaudoir, Dugan and Barr20]. Where validated measures exist, they were often developed for non-CBO practitioners, such as health department staff, and include items that would be irrelevant in CBOs, for example, items that ask about consultations with staff epidemiologists [Reference Stamatakis, Ferreira Hino and Allen21]. The measurement gaps matter, as limited data describe the link between capacity-building strategies, capacity, and implementation outcomes [Reference Collins, Phields and Duncan22]. Burgeoning efforts to bridge this measurement gap have yielded essential assessment tools to improve the implementation of EBIs in local settings [Reference Stamatakis, Ferreira Hino and Allen21,Reference Reis, Duggan, Allen, Stamatakis, Erwin and Brownson23]. A final potential gap in the literature relates to the need to tailor capacity-building interventions to adjust for the context in which an EBI will be implemented. On one hand, CBOs serving marginalized populations are recognized as prime partners for delivering EBIs to advance health equity [Reference Wilson, Lavis, Travers and Rourke4,Reference Griffith, Allen and DeLoney5]. On the other, our previous work highlights a disconnect that practitioners working with marginalized populations perceive between capacity-building interventions and their needs and expertise [Reference Ramanadhan, Galbraith-Gyan and Revette24]. We were unable to find an assessment of the extent to which these organizations are present in the capacity-building literature, prompting further attention. Given the importance of increasing CBO capacity to utilize EBIs in the service of improved population health and health equity, we conducted a scoping review to examine the available literature and identify important research gaps. Our study focused on researchers addressing capacity-building for EBI use in CBOs and asked 1) how is capacity defined and conceptualized, 2) to what extent are validated measures available and used, and 3) to what extent is equity a focus in this work? The inquiry is grounded in a systematic review of capacity-building for EBI use in community settings by Leeman and colleagues, which defines capacity as the general and program-specific awareness, knowledge, skills, self-efficacy, and motivation to use an EBI. The review also identified several capacity-building strategies shown to increase adoption and implementation, such as providing technical assistance in addition to training and tools [Reference Leeman, Calancie and Hartman25]. We have adapted this work to serve as the conceptual framework for this review, as summarized in Fig. 1.

Fig. 1. Conceptual framework for the review, adapted from Leeman and colleagues [Reference Leeman, Calancie and Hartman25].

Materials and Methods

Design

A team of researchers conducted this review. Two of the authors (SR and HMB) have been studying the use of EBIs in community settings for more than 15 years. Three members of the team were students (of public health, medicine, and psychology) (MW, ML, SK), one member manages implementation science projects (SLM), and one member (CM) is a research librarian at Harvard Medical School’s Countway Library. The team had the necessary complementary expertise to conduct the review. We did not register the scoping review given its exploratory nature. The researchers adapted the process described by Katz and Wandersman [Reference Katz and Wandersman26]. We utilized the PRISMA checklist for scoping reviews to support reporting [Reference Tricco, Lillie and Zarin27] and have provided details as Supplemental File 1.

Step 1: Identify the research questions. 1) How are researchers defining and conceptualizing “capacity” and related outcomes to support the use of EBIs in CBOs? 2) To what extent are validated measures available and used? 3) To what extent are capacity-building studies attending to health equity?

Step 2: Conduct the search. Relevant studies were identified by searching the following databases: PubMed/Medline (National Library of Medicine), Web of Science Core Collection (Clarivate), and Global Health (C.A.B. International, EBSCO), on August 13, 2021. Controlled vocabulary terms (i.e., MeSH or Global Health thesaurus terms) were included when available and appropriate. The search strategies were designed and executed by a research librarian (CM). No language limits or year restrictions were applied, and bibliographies of relevant articles were reviewed to identify additional studies. We sought articles at the intersection of three core areas: 1) CBOs, 2) evidence-based practice, and 3) capacity-building. The search strategy used in PubMed included the combination of MeSH terms and keywords searched within the title and abstract was as follows:

(“Community Health Workers”[Mesh] OR “Community Health Services”[Mesh:NoExp] OR “Health Promotion”[Mesh:NoExp] OR “Organizations, Nonprofit”[Mesh:NoExp] OR “Health Education”[Mesh:NoExp] OR “Patient Education as Topic”[Mesh] OR “Consumer Health Information”[Mesh] OR community-based[tiab] OR community health[tiab] OR consumer health[tiab] OR health education[tiab] OR health promotion[tiab] OR Lady health worker*[tiab] OR Lay health worker*[tiab] OR Village health worker*[tiab] OR local organization*[tiab] OR non-clinical[tiab] OR non profit*[tiab] OR nonprofit*[tiab] OR prevention support[tiab] OR community organization*[tiab] OR “Public Health Practice”[Mesh:noexp] OR public health practic*[tiab]) AND (“Evidence-Based Practice”[Mesh:noexp] OR “Implementation Science”[Mesh] OR evidence based[tiab] OR evidence informed[tiab] OR effective intervention*[tiab] OR knowledge translation[tiab] OR implementation science[tiab] OR practice-based evidence [tiab]) AND (“Capacity Building”[Mesh] OR “Professional Competence”[Mesh:NoExp] OR “Staff Development”[Mesh] OR capacity[tiab] OR competencies[tiab] OR skills[tiab] OR work force[tiab] OR workforce[tiab] OR professional development[tiab] OR staff[tiab] OR practitioners[tiab] OR knowledge broker*[tiab]).

The search strategies for the other databases appear in Supplemental File 2. As noted elsewhere, terminology in this area has not been standardized [Reference Leeman, Calancie and Hartman25]. The researchers worked with the librarian to identify a broad list of search terms to be sufficiently inclusive.

Step 3. Select articles based on the following inclusion/exclusion criteria. We imported search results into Covidence software. For each article, pairs of study team members reviewed the title and abstract. Inclusion criteria were as follows: 1) addressed CBOs AND health-focused EBIs AND capacity; 2) addressed practitioner capacity-building; 3) articles were retrievable as full-text in English. Exclusion criteria were as follows: 1) did not address the capacity of the workforce (e.g., related only to community capacity); 2) referred to capacity-building, but not in a substantive way; 3) capacity-building was explored, but not concerning EBIs; 4) article did not report on a study or conceptual model (e.g., letter to the editor). The research team reviewed and resolved conflicts at this stage as a team, with mediation by the lead author. The same process was utilized for the review of full-text articles. We included review articles to examine conceptualizations of capacity-building and to identify additional studies for inclusion.

Step 4. Extract and code data from the articles. Using Excel, pairs of researchers double-coded data for each article, and the first author resolved conflicts in the final stage. We drew on previous reviews of capacity-building to identify the fields to extract [Reference Leeman, Calancie and Hartman25]. Basic study information included location (country plus state for US), conceptual vs. empirical piece, setting (e.g., CBO), types of practitioners targeted (e.g., CBO staff), health focus (e.g., obesity prevention), and extent to which the study focused on health equity. We also coded the use of qualitative and/or quantitative data. For capacity-building, we coded the level of focus and whether a definition of capacity was offered (directly, indirectly, or not at all). We coded for whether or not capacity was measured. For articles in which capacity was measured quantitatively, we assessed whether or not psychometric data were provided. Finally, we extracted the identified outcomes of capacity-building highlighted by the article.

A few categories deserve further explanation. To describe the health equity focus, the team coded presence or absence of an emphasis on at least one of the following: 1) For US studies, NIH-designated US health disparity populations as defined by NIMCHD [28], including Blacks/African Americans, Hispanics/Latinos, American Indians/Alaska Natives, Asian Americans, Native Hawaiians, and other Pacific Islanders, socioeconomically disadvantaged populations, underserved rural populations, and sexual and gender minorities; 2) other underserved populations from high-income countries (e.g., medically underserved communities, incarcerated populations, disabled populations); and 3) populations from low- and middle-income countries. For articles with a focus on these populations, we also coded whether the study included these populations (e.g., including racial and ethnic minorities as part of a general recruitment effort) or focused on them (e.g., a study that delivered a capacity-building intervention to organizations serving low-income communities).

Step 5. Analyze and summarize the data. Once the dataset was finalized, the data were summarized using descriptive statistics. All analyses were conducted using Microsoft Excel.

Results

Search Results

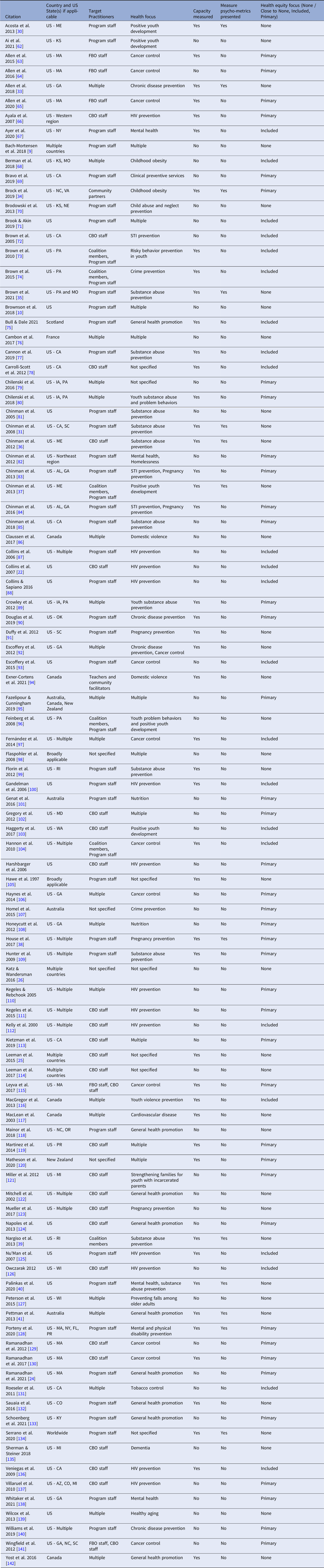

As seen in Fig. 2, the initial search yielded 5527 articles, 285 full-text articles were screened, and a pool of 99 articles was retained for the review. This process is visualized according to the PRISMA reporting standards [Reference Moher, Liberati, Tetzlaff, Altman and Group29].

Fig. 2. PRISMA flow chart.

Core attributes of the 99 included articles are presented in Table 1.

Table 1. Description of included publications (n = 99)

CBO = community-based organization; FBO = faith-based organization.

As seen in Table 1, the included studies were published between 1997 and 2021. About half (47%) were published between 1997 and 2014 and the remainder from 2015 to August 2021. A total of 80 were based in the US, 9 were from other high-income countries, 2 explicitly referenced findings in low- and middle-income countries, and 8 did not specify.

Question 1: How Did Researchers Define Capacity in the Context of CBO Practitioners using EBIs?

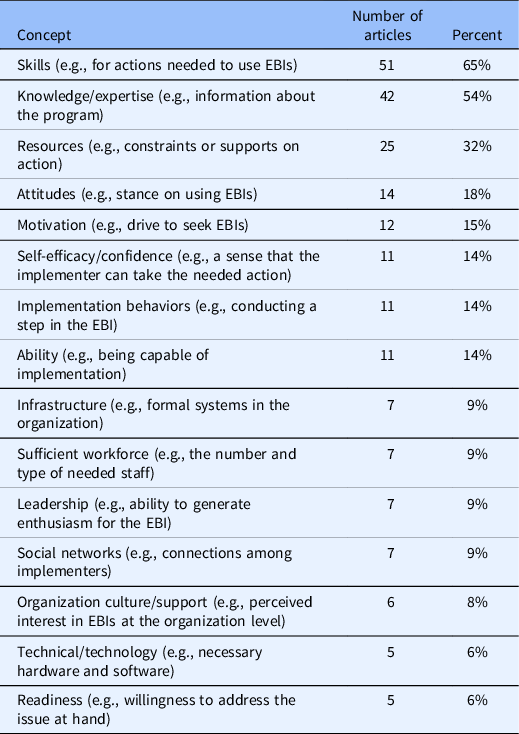

Of the 99 articles, 47 defined capacity explicitly (47%), another 31 defined it indirectly (31%), and 21 did not define it at all (21%). Of those that offered direct or indirect definitions, 34 concepts were described, with an average of 3.3 per article. Common concepts included practitioner-level attributes, for example, knowledge and skills, organization-level attributes, for example, leadership and fiscal resources, and system-level attributes, for example, partnerships and informal systems. Among the concepts that were infrequently mentioned, a few related to the broader functioning of groups, communities, or the larger political environment. Overall, 162 concepts (64% of total) were at the practitioner level, 80 (30%) were at the organization level, and 14 (5%) were at the system-level attributes. Concepts mentioned five or more times are presented in Table 2.

Table 2. Concepts that appeared in five or more articles, among the 78 studies that offered explicit or indirect definitions of capacity, ordered by decreasing frequency

EBI = evidence-based intervention.

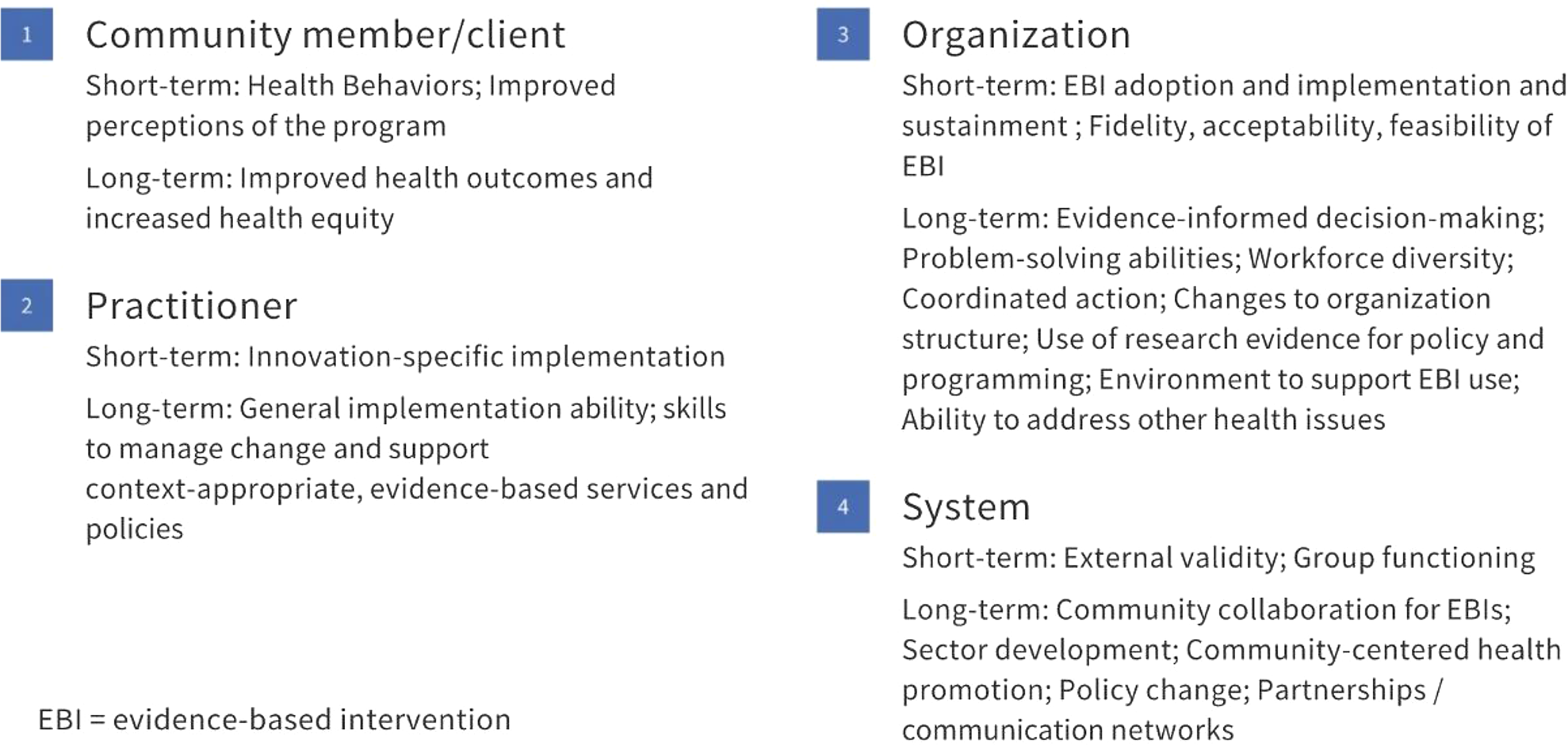

We also examined how researchers linked practitioner capacity and capacity-building efforts to key outcomes at multiple levels and across short- and long-term timeframes (Fig. 3).

Fig. 3. Range of outcomes linked to capacity-building activities (n = 99 articles).

Question 2: To What Extent Did Quantitative Studies Measure Capacity, and to What Extent were Psychometric Data Provided?

A total of 57 articles (57%) included quantitative analytic components, and of those, 48 (82%) measured capacity and 11 (23%) offered psychometric data for the capacity measures. The foci and types of psychometric data presented are summarized below.

-

1. Acosta and colleagues [Reference Acosta, Chinman and Ebener30] used a combination of a Getting to Outcomes approach and the Consolidated Framework for Implementation Research [Reference Chinman, Hunter and Ebener31,Reference Damschroder, Aron, Keith, Kirsh, Alexander and Lowery32] in this study of positive youth development. They defined practitioner prevention capacity in terms of perceived efficacy (ability to complete necessary tasks on one’s own) and behaviors (conducting the necessary implementation tasks, both related to the approach broadly and the intervention specifically. They offered reliability data for each core capacity scale and drew on previously utilized scales.

-

2. Allen and colleagues [Reference Allen, O’Connor, Best, Lakshman, Jacob and Brownson33] conducted a survey that emphasized the importance of skills, availability of skilled staff, organizational supports, and use of research evidence before and after receiving training on evidence-based decision-making. The team scored the perceived importance of each of the ten key skills and the availability of staff members with that skill. Additionally, measures included frequency of using research evidence and work unit and agency expectations and supports for evidence-based decision-making. Finally, a list of steps taken to enhance capacity for evidence-based decision-making was utilized. The measures were validated through five rounds of review by an expert panel, cognitive testing with former state chronic disease directors, and test-retest reliability assessment with state health department staff.

-

3. Brock and colleagues [Reference Brock, Estabrooks and Hill34] examined the capacity for a community advisory board (including CBO representatives) to implement an evidence-based obesity program using participatory processes. They used a 63-item survey to capture 13 domains, including capacity efforts (decision-making, conflict resolution, communication, problem assessment, group roles, and resources); capacity outcomes (trust, leadership, participation and influence, collective efficacy); and sustainability outcomes (sustainability, accomplishments, and community power). They reported reliability data for the survey items.

-

4. Brown and colleagues [Reference Brown, Chilenski and Wells35] described measures as part of a protocol for a hybrid, Type 3 cluster-randomized trial examining coalition and prevention program support through technical assistance. Their measure of coalition capacity included cohesion (e.g., sense of unity and trust) and efficiency (e.g., focus and work ethic) for internal team processes.

-

5. Chinman and colleagues [Reference Chinman, Hunter and Ebener31] conducted a study based on the Getting to Outcomes framework. For the capacity assessment, they use 23 items to measure self-efficacy (in terms of how much help would be needed) for Getting to Outcomes activities (e.g., conducting a needs assessment). They conducted a factor analysis and assessed the internal consistency reliability of this scale. A separate set of 16 items examined attitudes towards steps of the program process, for example, conducting a formal evaluation. They conducted a factor analysis and calculated internal consistency reliability.

-

6. Chinman and colleagues [Reference Chinman, Acosta and Ebener36] conducted a study with the Getting to Outcomes framework and examined prevention capacity as knowledge and skills. The Knowledge Score averaged seven items and examined how much help the respondent would need to carry out a given prevention activity, for example, supporting program sustainability. Internal consistency reliability data were presented. The Skills Score averaged six items and assessed respondents’ frequency of engaging in the prevention activities; internal consistency reliability data were presented.

-

7. Chinman and colleagues [Reference Chinman, Acosta and Ebener37] conducted a trial drawing on the Getting to Outcomes framework and key capacity measures focused on efficacy. A five-item efficacy scale focused on respondents’ comfort with engaging in program activities related to asset development. The second efficacy scale focused on comfort implementing the 10-step Getting to Outcomes process. Internal consistency reliability was reported for both scales.

-

8. House and colleagues [Reference House, Tevendale and Martinez-Garcia38] drew on the Getting to Outcomes framework and assessed change in capacity for program partners to use EBIs. Relevant items focused on knowledge and confidence in using the Getting to Outcomes process for EBI implementation. Scale reliability data were presented.

-

9. Nargiso and colleagues [Reference Nargiso, Friend and Egan39] examined general capacity of a prevention-focused coalition grounded in the Systems Prevention Framework. Coalitions rated themselves on a 5-point scale for ten items across five domains of capacity: mobilization, structure, task leadership, cohesion, and planning/implementation. They also had an overall coalition capacity score which was a standardized average across the scores. Experts also rated the coalitions regarding leadership, turnover, meetings, visibility, and technological capacity. Inter-rater reliability between participants and experts was calculated. Additionally, the team measured innovation-specific capacity. Experts rated the understanding, partnerships, knowledge of local decision-making related to policy, membership support, and quality of strategic plan. Once more, inter-rater reliability between participants and experts was calculated.

-

10. Palinkas and colleagues [Reference Palinkas, Chou, Spear, Mendon, Villamar and Brown40] created a measurement for program sustainment that includes a section on “infrastructure and capacity to support sustainment.” Seven items address relevant concepts and data for inter-item reliability, convergent validity, and discriminant validity were presented.

-

11. Pettman and colleagues [Reference Pettman, Armstrong, Jones, Waters and Doyle41] measured capacity in terms of implementation behaviors, knowledge, confidence, and attitudes. Although they did not provide psychometric data in the report, they reported using adapted versions of previously validated items.

Question 3: To What Extent were Studies Focused on Health Equity?

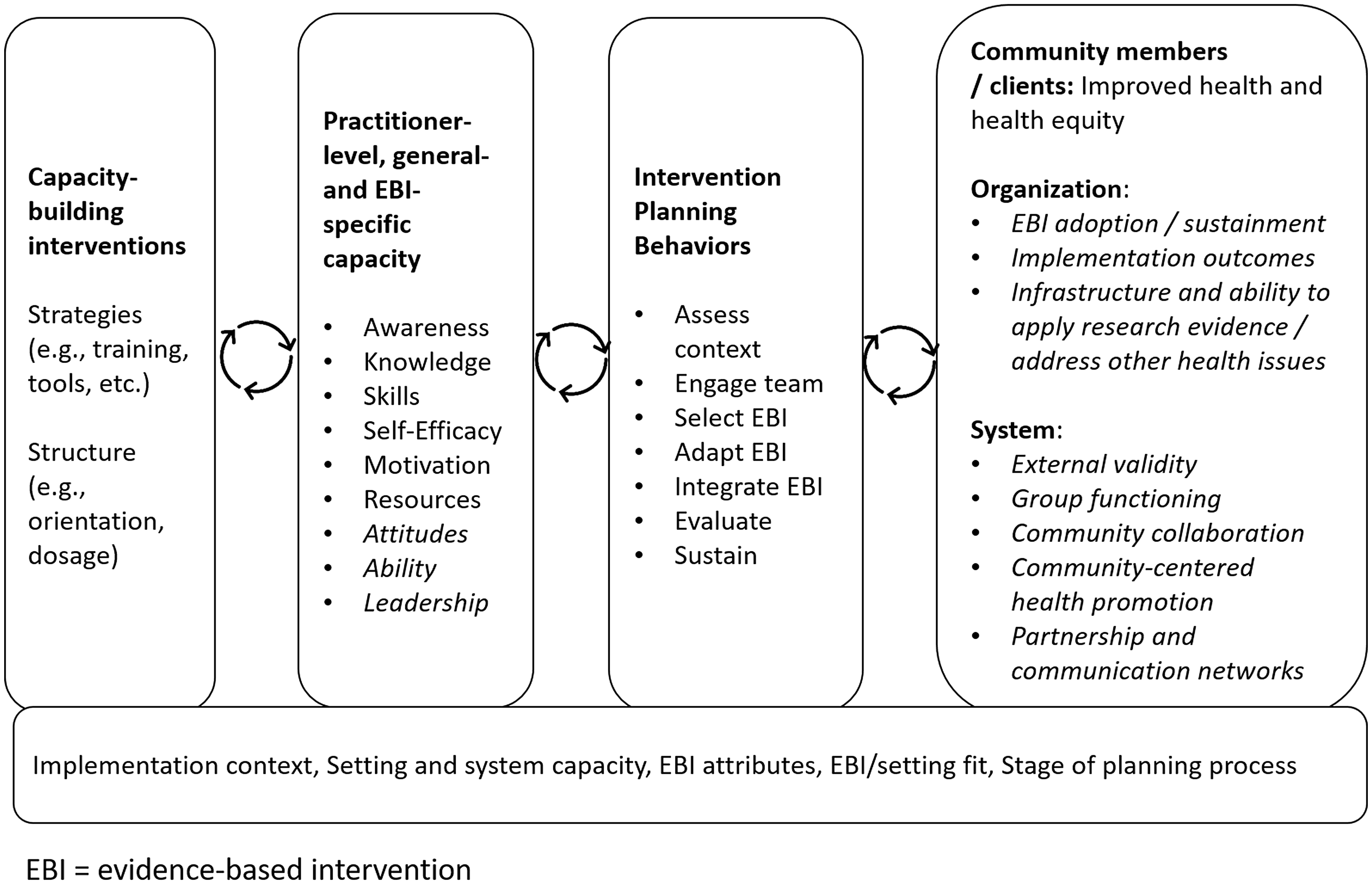

Of the 99 studies, 40 focused exclusively on populations experiencing inequities (40%), 22 included those populations (22%), and 37 did not focus on populations experiencing inequities (37%). As shown in Table 3, the most commonly studied populations included Hispanics/Latinos, African Americans, populations described in the article as “underserved” or low-income, and LGBTQ + populations. We note that the reference to underserved populations did not always include a description of how that was operationalized. Several other priority populations were only represented by one or a small number of studies, for example, people living in rural areas or with disabilities.

Table 3. Populations of focus as described in reviewed studies, with some studies addressing the needs of multiple populations (n = 99 articles)

Discussion

This scoping review used a comprehensive search strategy to examine how the capacity for EBI use in CBOs is defined and measured. Broadly, our work highlights the need for those addressing capacity-building for EBI use in CBOs to 1) be explicit about models and definitions of capacity-building as implementation strategies, 2) specify expected impacts and outcomes across multiple levels, 3) develop and use validated measures for quantitative work, and 4) integrate equity considerations into the conceptualization and measurement of capacity-building efforts.

First, our results emphasize the need for researchers to be more explicit about their definitions of capacity as a target and capacity-building as a means to support implementation. We found that fewer than half of the articles reviewed offered an explicit definition of capacity. Core concepts covered in definitions centered on practitioner-level attributes, including skills, knowledge, and self-efficacy, though these were not always defined either. At the same time, discussions of practitioner capacity also included organization- and system-level attributes. The variation illustrates the lack of consensus in the field regarding the core dimensions of practitioner capacity [Reference Brownson, Fielding and Green10,Reference Simmons, Reynolds and Swinburn42]. Understanding capacity-building efforts as implementation strategies may help prompt reporting that includes details about the involved actors, actions, targets of action, temporality/ordering, dose, expected outcomes, and justification for selection [Reference Proctor, Powell and McMillen43].

In terms of expected impact, the overall takeaway was that capacity-building is a long-term, dynamic, system-oriented process that transforms resources into short- and long-term change at multiple levels. Expected impacts ranged from community member/client and practitioner outcomes to organization- and system-level change, echoing other recent reviews of capacity-building [Reference DeCorby-Watson, Mensah, Bergeron, Abdi, Rempel and Manson19]. In the context of an outcomes model, such as the Proctor model [Reference Proctor, Silmere and Raghavan44], we might think of short-term impacts of capacity-building as driving implementation outcomes and longer-term outcomes that include a system’s increased ability to utilize research evidence and address new challenges [Reference Ramanadhan, Viswanath, Fisher, Cameron and Christensen45]. Viewing capacity-building in the context of professional development prompts the addition of evaluation not only of practitioner skills, knowledge, etc., but also attitudes towards EBIs, job satisfaction and tenure, and other essential supports for EBI delivery in community settings [Reference Allen, Palermo, Armstrong and Hay46]. As summarized in Fig. 4, the review offers a number of extensions to both the dimensions of capacity that warrant further attention as well as to the organization- and system-level outcomes that may result.

Fig. 4. Model of practitioner-level capacity-building, with extensions from review in italics.

The results also highlight a need to improve the use and reporting of validated measures for quantitative assessments. While most quantitative studies measured capacity (48 of 57), only 11 (or 23%) offered psychometric data for these measures. This relates to a broader gap in implementation science highlighted by Lewis and Dorsey, that too few measures have psychometric data, most measures are not applied in different contexts or for different populations, and there are no minimal reporting standards for measures [Reference Lewis, Dorsey, Albers, Shlonsky and Mildon47]. By increasing the testing of capacity measures for reliability and predictive validity, researchers can address gaps identified through this and previous reviews [Reference Chaudoir, Dugan and Barr20,Reference Emmons, Weiner, Fernandez and Tu48]. Other useful potential additions to the literature include identifying “gold standard” measures, determining how and when to measure capacity, gathering data from multiple levels and dynamic systems, and capturing change over time [Reference Ebbesen, Heath, Naylor and Anderson49]. There is a particular opportunity for implementation scientists to ensure that reporting offers a detailed description of context related to the multiple levels involved in capacity-building, going beyond the required elements to expand on information central to advancing health equity [Reference Bragge, Grimshaw and Lokker50–Reference Pinnock, Slack, Pagliari, Price and Sheikh52].

Last, we saw that several studies addressed health inequities, with 62 of the 99 studies focusing or including populations experiencing health inequities. Our work and the broader literature emphasize supporting CBOs in EBI delivery to address health inequities [Reference Ramanadhan, Galbraith-Gyan and Revette24,Reference Ramanadhan, Aronstein, Martinez-Dominguez, Xuan and Viswanath53,Reference Chambers and Kerner54]. At the same time, almost all of the studies that specified a location were grounded in high-income countries. Given that capacity-building is intended to be quite context-specific, this suggests an important gap in the peer-reviewed literature. Stakeholders and researchers in low- and middle-income countries have highlighted gaps in the availability, depth and breadth, support, and local customization based on in-country expertise of capacity-building interventions for EBI use [Reference Betancourt and Chambers55,Reference Turner, Bogdewic and Agha56]. As these gaps are addressed, it may be useful to draw on recent advances in implementation science frameworks that provide guidance on how to operationalize the incorporation of equity goals into implementation planning [Reference Chinman, Woodward, Curran and Hausmann57–Reference Eslava-Schmalbach, Garzón-Orjuela, Elias, Reveiz, Tran and Langlois60].

As with any study, we must ground our findings in the context of a set of limitations. First, we coded data from peer-reviewed articles, many of which had strict word limits. Thus, an activity may have taken place (e.g., validation of a measure) separately from article content. Second, the review focused exclusively on peer-reviewed literature. We are aware of many capacity-building initiatives undertaken by national and international organizations that would not have been included based on our search parameters. Third, we did not examine the details of qualitative assessments of capacity in this analysis but will do so in future work. Finally, although we attempted to build a comprehensive search strategy, we may not have found all of the relevant articles in the field. We tried to reduce this risk by relying on the expertise of a professional librarian. At the same time, several strengths outweigh these weaknesses. First, to our knowledge, this is the first comprehensive review of capacity-building measures for CBOs. Given the importance of CBOs for EBI delivery in support of health equity, this is a significant contribution. Second, we used duplicated screening and coding processes throughout to maintain rigor. Finally, the experience of the team with implementation science, health equity, and CBOs allowed for thoughtful consideration of the research questions and also the interpretation of results.

As measures for capacity among CBOs are strengthened, it will be critical to ensure that the definitions and models resonate with implementers and supporting systems. This may prompt the addition or broadening of some conceptualizations. As noted by Trickett, capacity-building has typically focused on building support for a given research-based resource, but if the goal is sustained use of research evidence, evaluations should also question how this work builds towards other goals in practice and community settings [Reference Trickett61]. Through clear specification of capacity-building implementation strategies, use of validated measures for multi-level outcomes, and an intentional equity frame, we can develop high-impact supports for CBO practitioners, a set of critical institutions for addressing health inequities.

Supplementary material

To view supplementary material for this article, please visit https://doi.org/10.1017/cts.2022.426

Acknowledgments

Research reported in this publication was supported by the National Cancer Institute of the National Institutes of Health under Award Numbers U54CA156732 (SR) and P30CA021765 (HB). The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. The work was also supported by a Dean’s Activation Award from the Harvard T.H. Chan School of Public Health (SR) and the American Lebanese and Syrian Associated Charities of St. Jude Children’s Research Hospital (HB). The funding bodies did not influence the design of the study and collection, analysis, or interpretation of data or the writing of the manuscript.

Disclosures

The authors have no conflicts of interest to declare.