1. Introduction

Motivated by measuring risks under model uncertainty, [Reference Peng31–Reference Peng33, Reference Peng36] introduced the notion of a sublinear expectation space, called a G-expectation space. The G-expectation theory has been widely used to evaluate random outcomes, not using a single probability measure, but using the supremum over a family of possibly mutually singular probability measures. One of the fundamental results in this theory is the robust central limit theorem introduced in [Reference Peng34, Reference Peng36]. The corresponding convergence rate was an open problem until recently. The first convergence rate was established in [Reference Fang, Peng, Shao and Song14, Reference Song37] using Stein’s method and later in [Reference Krylov28] using a stochastic control method under different model assumptions. More recently, [Reference Huang and Liang20] studied the convergence rate of a more general central limit theorem via a monotone approximation scheme for the G-equation.

On the other hand, nonlinear Lévy processes have been studied in [Reference Hu and Peng19, Reference Neufeld and Nutz29]. For $\alpha\in(1,2)$, they considered a nonlinear $\alpha$-stable Lévy process $(X_{t})_{t\geq0}$ defined on a sublinear expectation space $(\Omega,\mathcal{H},\hat{\mathbb{E}})$, whose local characteristics are described by a set of Lévy triplets $\Theta=\{(0,0,F_{k_{\pm}})\colon k_{\pm}\in K_{\pm}\}$, where $K_{\pm}\subset(\lambda_{1},\lambda_{2})$ for some $\lambda_{1},\lambda_{2}\geq0$, and $F_{k_{\pm}}(\textrm{d} z)$ is the $\alpha$-stable Lévy measure

Such a nonlinear

![]() $\alpha$

-stable Lévy process can be characterized via a fully nonlinear partial integro-differential equation (PIDE). For any

$\alpha$

-stable Lévy process can be characterized via a fully nonlinear partial integro-differential equation (PIDE). For any

![]() $\phi \in C_{\textrm{b,Lip}}(\mathbb{R})$

, [Reference Neufeld and Nutz29] proved the representation result

$\phi \in C_{\textrm{b,Lip}}(\mathbb{R})$

, [Reference Neufeld and Nutz29] proved the representation result

![]() $u(t,x)\;:\!=\;\hat{\mathbb{E}}[\phi(x+X_{t})]$

,

$u(t,x)\;:\!=\;\hat{\mathbb{E}}[\phi(x+X_{t})]$

,

![]() $(t,x)\in \lbrack 0,T]\times \mathbb{R}$

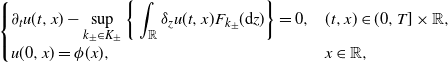

, where u is the unique viscosity solution of the fully nonlinear PIDE

$(t,x)\in \lbrack 0,T]\times \mathbb{R}$

, where u is the unique viscosity solution of the fully nonlinear PIDE

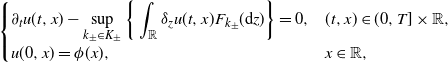

\begin{equation} \left \{ \begin{array}[c]{l@{\quad}l} \displaystyle \partial_{t}u(t,x) - \sup\limits_{k_{\pm}\in K_{\pm}} \bigg\{\int_{\mathbb{R}}\delta_{z}u(t,x)F_{k_{\pm}}(\textrm{d} z)\bigg\} = 0, & (t,x)\in(0,T]\times\mathbb{R}, \\[5pt] \displaystyle u(0,x)=\phi(x), & x\in \mathbb{R}, \end{array} \right. \end{equation}

\begin{equation} \left \{ \begin{array}[c]{l@{\quad}l} \displaystyle \partial_{t}u(t,x) - \sup\limits_{k_{\pm}\in K_{\pm}} \bigg\{\int_{\mathbb{R}}\delta_{z}u(t,x)F_{k_{\pm}}(\textrm{d} z)\bigg\} = 0, & (t,x)\in(0,T]\times\mathbb{R}, \\[5pt] \displaystyle u(0,x)=\phi(x), & x\in \mathbb{R}, \end{array} \right. \end{equation}

with $\delta_{z}u(t,x)\;:\!=\;u(t,x+z)-u(t,x)-D_{x}u(t,x)z$. In contrast to the fully nonlinear PIDEs studied in the partial differential equation (PDE) literature, (1) is driven by a family of $\alpha$-stable Lévy measures rather than a single Lévy measure. Moreover, since $F_{k_{\pm}}(\textrm{d} z)$ possesses a singularity at the origin, the integral term degenerates and (1) is a degenerate equation.

The corresponding generalized central limit theorem for

![]() $\alpha$

-stable random variables under sublinear expectation was established in [Reference Bayraktar and Munk6]. For this, let

$\alpha$

-stable random variables under sublinear expectation was established in [Reference Bayraktar and Munk6]. For this, let

![]() $(\xi_{i})_{i=1}^{\infty}$

be a sequence of independent and identically distributed (i.i.d.)

$(\xi_{i})_{i=1}^{\infty}$

be a sequence of independent and identically distributed (i.i.d.)

![]() $\mathbb{R}$

-valued random variables on a sublinear expectation space

$\mathbb{R}$

-valued random variables on a sublinear expectation space

![]() $(\Omega,\mathcal{H},\tilde{\mathbb{E})}$

. After proper normalization, [Reference Bayraktar and Munk6] showed that

$(\Omega,\mathcal{H},\tilde{\mathbb{E})}$

. After proper normalization, [Reference Bayraktar and Munk6] showed that

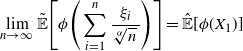

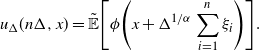

\[ \lim_{n\rightarrow\infty}\tilde{\mathbb{E}}\Bigg[\phi\Bigg(\sum_{i=1}^{n} \frac{\xi_{i}}{\sqrt[\alpha]{n}\,}\Bigg)\Bigg] = {\hat{\mathbb{E}}}[\phi(X_{1})]\]

\[ \lim_{n\rightarrow\infty}\tilde{\mathbb{E}}\Bigg[\phi\Bigg(\sum_{i=1}^{n} \frac{\xi_{i}}{\sqrt[\alpha]{n}\,}\Bigg)\Bigg] = {\hat{\mathbb{E}}}[\phi(X_{1})]\]

for any

![]() $\phi\in C_{\textrm{b,Lip}}(\mathbb{R})$

. We refer to the above convergence result as the

$\phi\in C_{\textrm{b,Lip}}(\mathbb{R})$

. We refer to the above convergence result as the

![]() $\alpha$

-stable central limit theorem under sublinear expectation.

$\alpha$

-stable central limit theorem under sublinear expectation.

Noting that

![]() $\hat{\mathbb{E}}[\phi(X_{1})]=u(1,0)$

, where u is the viscosity solution of (1), in this work we study the rate of convergence for the

$\hat{\mathbb{E}}[\phi(X_{1})]=u(1,0)$

, where u is the viscosity solution of (1), in this work we study the rate of convergence for the

![]() $\alpha$

-stable central limit theorem under sublinear expectation via the numerical analysis method for the nonlinear PIDE (1). To do this, we first construct a sublinear expectation space

$\alpha$

-stable central limit theorem under sublinear expectation via the numerical analysis method for the nonlinear PIDE (1). To do this, we first construct a sublinear expectation space

![]() $(\mathbb{R},C_\textrm{Lip}(\mathbb{R}),\tilde{\mathbb{E}})$

and introduce a random variable

$(\mathbb{R},C_\textrm{Lip}(\mathbb{R}),\tilde{\mathbb{E}})$

and introduce a random variable

![]() $\xi$

on this space. For given

$\xi$

on this space. For given

![]() $T>0$

and

$T>0$

and

![]() $\Delta \in(0,1)$

, using the random variable

$\Delta \in(0,1)$

, using the random variable

![]() $\xi$

under

$\xi$

under

![]() $\tilde{\mathbb{E}}$

as input, we define a discrete scheme

$\tilde{\mathbb{E}}$

as input, we define a discrete scheme

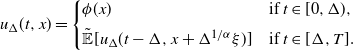

![]() $u_{\Delta}\colon[0,T]\times \mathbb{R\rightarrow R}$

to approximate u by

$u_{\Delta}\colon[0,T]\times \mathbb{R\rightarrow R}$

to approximate u by

\begin{equation} u_{\Delta}(t,x) = \left\{ \begin{array}[c]{l@{\quad}l} \phi(x) & \text{if } t\in\lbrack0,\Delta), \\[5pt] \tilde{\mathbb{E}}[u_{\Delta}(t-\Delta,x+\Delta^{1/\alpha}\xi)] & \text{if } t\in \lbrack \Delta,T]. \end{array} \right. \end{equation}

\begin{equation} u_{\Delta}(t,x) = \left\{ \begin{array}[c]{l@{\quad}l} \phi(x) & \text{if } t\in\lbrack0,\Delta), \\[5pt] \tilde{\mathbb{E}}[u_{\Delta}(t-\Delta,x+\Delta^{1/\alpha}\xi)] & \text{if } t\in \lbrack \Delta,T]. \end{array} \right. \end{equation}

Taking

![]() $T=1$

and

$T=1$

and

![]() $\Delta={1}/{n}$

, we can recursively apply the above scheme to obtain

$\Delta={1}/{n}$

, we can recursively apply the above scheme to obtain

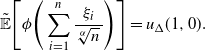

\[ \tilde{\mathbb{E}}\Bigg[\phi\Bigg(\sum_{i=1}^{n}\frac{\xi_{i}}{\sqrt[\alpha]{n}\,}\Bigg)\Bigg]=u_{\Delta}(1,0).\]

\[ \tilde{\mathbb{E}}\Bigg[\phi\Bigg(\sum_{i=1}^{n}\frac{\xi_{i}}{\sqrt[\alpha]{n}\,}\Bigg)\Bigg]=u_{\Delta}(1,0).\]

In this way, the convergence rate of the $\alpha$-stable central limit theorem is transformed into the convergence rate of the discrete scheme (2) for approximating the nonlinear PIDE (1).
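To make the mechanics of the recursion in (2) concrete, the following is a minimal Python sketch under a strong simplifying assumption that is not made in the paper: the sublinear expectation is taken as the maximum over a finite family of discrete laws on a common finite support. The names `sublinear_expectation` and `scheme_value` and the toy inputs are hypothetical. Because the toy $\xi$ has bounded support it is not heavy-tailed, so the sketch illustrates only how the scheme unrolls into a nested expectation of the normalized sum, not the $\alpha$-stable limit itself.

```python
import math

def sublinear_expectation(f, support, families):
    """Return the max over laws P in `families` of E_P[f(xi)] (a finite 'sup')."""
    return max(
        sum(p * f(z) for p, z in zip(probs, support))
        for probs in families
    )

def scheme_value(phi, t, x, delta, alpha, support, families):
    """Evaluate u_Delta(t, x) by unrolling the recursion of scheme (2)."""
    steps = math.floor(t / delta + 1e-12)   # how many times the recursion fires
    scale = delta ** (1.0 / alpha)          # Delta^{1/alpha}

    def u(k, y):
        if k == 0:                          # t in [0, Delta): initial condition
            return phi(y)
        # One step: sublinear expectation of the previous level at the shifted point.
        return sublinear_expectation(
            lambda z: u(k - 1, y + scale * z), support, families
        )

    return u(steps, x)

# Toy inputs (hypothetical): two mean-zero laws on the support {-1, 0, 1}.
support = [-1.0, 0.0, 1.0]
families = [[0.25, 0.5, 0.25], [0.5, 0.0, 0.5]]
phi = lambda y: min(abs(y), 1.0)            # a bounded Lipschitz test function
n, alpha = 3, 1.5
value = scheme_value(phi, 1.0, 0.0, 1.0 / n, alpha, support, families)
```

With $T=1$ and $\Delta=1/n$ the recursion fires exactly $n$ times, each time shifting the argument by $n^{-1/\alpha}\xi$, which is how $u_{\Delta}(1,0)$ reproduces the expectation of the normalized sum. The cost of this naive unrolling grows exponentially in $n$, so it is suitable only for very small examples.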

The basic framework for convergence of numerical schemes to viscosity solutions of Hamilton–Jacobi–Bellman equations was established in [Reference Barles and Souganidis5], which showed that any monotone, stable, and consistent approximation scheme converges to the correct solution, provided that there exists a comparison principle for the limiting equation. The corresponding convergence rate was first obtained with the introduction of the shaking coefficients technique to construct a sequence of smooth subsolutions/supersolutions in [Reference Krylov25–Reference Krylov27]. This technique was further developed to general monotone approximation schemes (see [Reference Barles and Jakobsen2–Reference Barles and Jakobsen4] and references therein).

The design and analysis of numerical schemes for nonlinear PIDEs is a relatively new area of research. For nonlinear degenerate PIDEs driven by a family of $\alpha$-stable Lévy measures, there are no general results giving error bounds for numerical schemes. Most of the existing results in the PDE literature only deal with a single Lévy measure and its finite difference method, e.g. [Reference Biswas, Chowdhury and Jakobsen7–Reference Biswas, Jakobsen and Karlsen9, Reference Jakobsen, Karlsen and La Chioma22]. One exception is [Reference Coclite, Reichmann and Risebro12], which considers a nonlinear PIDE driven by a set of tempered $\alpha$-stable Lévy measures for $\alpha \in(0,1)$ by using the finite difference method.

To derive the error bounds for the discrete scheme (2), the key step is to interchange the roles of the discrete scheme and the original equation when the approximate solution has enough regularity. The classical regularity estimates of the approximate solution depend on the finite variance of random variables. Since $\xi$ has infinite variance, the method developed in [Reference Krylov28] cannot be applied to $u_{\Delta}$. To overcome this difficulty, by introducing a truncated discrete scheme $u_{\Delta,N}$ related to a truncated random variable $\xi^{N}$ with finite variance, we construct a new type of regularity estimate of $u_{\Delta,N}$ that plays a pivotal role in establishing the space and time regularity properties for $u_{\Delta}$. With the help of a precise estimate of the truncation $\tilde{\mathbb{E}}[|\xi-\xi^{N}|]$, a novel estimate for $|u_{\Delta}-u_{\Delta,N}|$ is obtained. By choosing a proper N, we then establish the regularity estimates for $u_{\Delta}$. Together with the concavity of (1) and (2), and the regularity estimates of their solutions, we are able to interchange their roles and thus derive the error bounds for the discrete scheme. To the best of our knowledge, these are the first error bounds for numerical schemes for fully nonlinear PIDEs associated with a family of $\alpha$-stable Lévy measures, which in turn provide a nontrivial convergence rate result for the $\alpha$-stable central limit theorem under sublinear expectation.

On the other hand, the classical probability literature mainly deals with $\Theta$ as a singleton, so $(X_{t})_{t\geq0}$ becomes a classical Lévy process with triplet $\Theta$, and $X_{1}$ is an $\alpha$-stable random variable. The corresponding convergence rate of the classical $\alpha$-stable central limit theorem (with $\Theta$ as a singleton) has been studied using the Kolmogorov distance (see, e.g., [Reference Davydov and Nagaev13, Reference Hall15–Reference Häusler and Luschgy17, Reference Ibragimov and Linnik21, Reference Juozulynas and Paulauskas24]) and the Wasserstein-1 distance or the smooth Wasserstein distance (see, e.g., [Reference Arras, Mijoule, Poly and Swan1, Reference Chen, Goldstein and Shao10, Reference Chen and Xu11, Reference Jin, Li and Lu23, Reference Nourdin and Peccati30, Reference Xu38]). Results of the first type are proved using characteristic functions, which do not exist in the sublinear framework, while results of the second type rely on Stein’s method, which also fails in the sublinear setting.

The rest of the paper is organized as follows. In Section 2, we review some necessary results about sublinear expectation and $\alpha$-stable Lévy processes. In Section 3, we list the assumptions and our main results, the convergence rate of $\alpha$-stable random variables under sublinear expectation. We present two examples to illustrate our results in Section 4. Finally, by using the monotone scheme method, the proof of our main result is given in Section 5.

2. Preliminaries

In this section, we recall some basic results of sublinear expectation and $\alpha$-stable Lévy processes that are needed in the sequel. For more details, we refer the reader to [Reference Bayraktar and Munk6, Reference Neufeld and Nutz29, Reference Peng32, Reference Peng36] and the references therein.

We start with some notation. Let $C_\textrm{Lip}(\mathbb{R}^{n})$ be the space of Lipschitz functions on $\mathbb{R}^{n}$, and $C_\textrm{b,Lip}(\mathbb{R}^{n})$ be the space of bounded Lipschitz functions on $\mathbb{R}^{n}$. For any subset $Q\subset \lbrack0,T]\times \mathbb{R}$ and for any bounded function $\omega$ on Q, we define the norm $|\omega|_{0}\;:\!=\;\sup_{(t,x)\in Q}|\omega(t,x)|$. We also use the following spaces: $C_\textrm{b}(Q)$ and $C_\textrm{b}^{\infty}(Q)$, denoting, respectively, the space of bounded continuous functions on Q and the space of bounded continuous functions on Q with bounded derivatives of any order. For the rest of this paper we take a nonnegative function $\zeta \in C^{\infty} (\mathbb{R}^{2})$ with unit integral and support in $\{(t,x)\colon-1<t<0,|x|<1\}$ and, for $\varepsilon \in(0,1)$, let $\zeta_{\varepsilon}(t,x)=\varepsilon^{-3}\zeta(t/\varepsilon^{2},x/\varepsilon)$.

2.1. Sublinear expectation

Let $\mathcal{H}$ be a linear space of real-valued functions defined on a set $\Omega$ such that if $X_{1},\ldots,X_{n}\in\mathcal{H}$, then $\varphi(X_{1},\ldots,X_{n})\in \mathcal{H}$ for each $\varphi \in C_{\textrm{Lip}}(\mathbb{R}^{n})$.

Definition 1. A functional $\hat{\mathbb{E}}\colon\mathcal{H}\rightarrow\mathbb{R}$ is called a sublinear expectation if, for all $X,Y \in \mathcal{H}$, it satisfies the following properties:

- (i) Monotonicity: If $X\geq Y$ then $\hat{\mathbb{E}}[X] \geq \hat{\mathbb{E}}[Y]$.
- (ii) Constant preservation: $\hat{\mathbb{E}}[c]=c$ for any $c\in \mathbb{R}$.
- (iii) Subadditivity: $\hat{\mathbb{E}}[X+Y] \leq \hat{\mathbb{E}}[X] + \hat{\mathbb{E}}[Y]$.
- (iv) Positive homogeneity: $\hat{\mathbb{E}}[\lambda X] = \lambda\hat{\mathbb{E}}[X]$ for each $\lambda>0$.

The triplet $(\Omega,\mathcal{H},\hat{\mathbb{E}})$ is called a sublinear expectation space. From the definition of the sublinear expectation $\hat{\mathbb{E}}$, the following results can be easily obtained.

Proposition 1. For $X,Y \in \mathcal{H}$,

- (i) if $\hat{\mathbb{E}}[X] = -\hat{\mathbb{E}}[-X]$, then $\hat{\mathbb{E}}[X+Y] = \hat{\mathbb{E}}[X] + \hat{\mathbb{E}}[Y]$;
- (ii) $|\hat{\mathbb{E}}[X] - \hat{\mathbb{E}}[Y]| \leq \hat{\mathbb{E}}[\vert X-Y\vert]$;
- (iii) $\hat{\mathbb{E}}[\vert XY\vert] \leq (\hat{\mathbb{E}}[\vert X\vert^{p}])^{1/p} \cdot (\hat{\mathbb{E}}[\vert Y\vert^{q}])^{1/q}$ for $1\leq p,q<\infty$ with ${1}/{p}+{1}/{q}=1$.
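As an illustration of Definition 1 and Proposition 1(ii), the following Python sketch checks the properties numerically for one simple sublinear expectation: the maximum of ordinary expectations over a finite family of probability vectors on a three-point sample space. This finite setup, the name `upper_expectation`, and the numerical values are assumptions chosen only for illustration.

```python
import math

def upper_expectation(values, families):
    """Max over laws P in `families` of E_P[X], with X given by its values on a finite Omega."""
    return max(sum(p * v for p, v in zip(probs, values)) for probs in families)

# Hypothetical family of two laws on a three-point Omega.
families = [[0.25, 0.25, 0.5], [0.5, 0.25, 0.25]]
E = lambda V: upper_expectation(V, families)

X = [1.0, -2.0, 0.5]
Y = [0.0, -3.0, 0.5]                      # X >= Y pointwise
X_plus_Y = [a + b for a, b in zip(X, Y)]
abs_diff = [abs(a - b) for a, b in zip(X, Y)]

monotone = E(X) >= E(Y)                                   # (i)
constant_preserving = math.isclose(E([4.0] * 3), 4.0)     # (ii)
subadditive = E(X_plus_Y) <= E(X) + E(Y)                  # (iii)
homogeneous = math.isclose(E([3.0 * a for a in X]), 3.0 * E(X))  # (iv)
prop_1_ii = abs(E(X) - E(Y)) <= E(abs_diff)               # Proposition 1(ii)
```

In this example the subadditivity inequality is strict, because the law that maximizes the expectation of X differs from the one that maximizes the expectation of Y. That is the sense in which such a functional is sublinear rather than linear.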

Definition 2. Let $X_{1}$ and $X_{2}$ be two n-dimensional random vectors defined respectively in sublinear expectation spaces $(\Omega_{1},\mathcal{H}_{1},\hat{\mathbb{E}}_{1})$ and $(\Omega_{2},\mathcal{H}_{2},\hat{\mathbb{E}}_{2})$. They are called identically distributed, denoted by $X_{1}\overset{\textrm{d}}{=}X_{2}$, if $\hat{\mathbb{E}}_{1}[\varphi(X_{1})] = \hat{\mathbb{E}}_{2}[\varphi(X_{2})]$ for all $\varphi \in C_\textrm{Lip}(\mathbb{R}^{n})$.

Definition 3. In a sublinear expectation space $(\Omega,\mathcal{H},\hat{\mathbb{E}})$, a random vector $Y=(Y_{1},\ldots,Y_{n}) \in \mathcal{H}^{n}$ is said to be independent of another random vector $X=(X_{1},\ldots,X_{m})\in\mathcal{H}^{m}$ under $\hat{\mathbb{E}}[\!\cdot\!]$, denoted by $Y\perp X$, if, for every test function $\varphi \in C_\textrm{Lip}(\mathbb{R}^{m}\times \mathbb{R}^{n})$, $\hat{\mathbb{E}}[\varphi(X,Y)] = \hat{\mathbb{E}}[\hat{\mathbb{E}}[\varphi(x,Y)]_{x=X}]$. $\bar{X}=(\bar{X}_{1},\ldots,\bar{X}_{m})\in \mathcal{H}^{m}$ is said to be an independent copy of X if $\bar{X}\overset{\textrm{d}}{=}X$ and $\bar{X}\perp X$.

More details concerning general sublinear expectation spaces can be found in [Reference Peng33, Reference Peng36] and references therein.

2.2. $\alpha$-stable Lévy process

Definition 4. Let $\alpha\in(0,2]$. A random variable X on a sublinear expectation space $(\Omega,\mathcal{H},\hat{\mathbb{E}})$ is said to be (strictly) $\alpha$-stable if, for all $a,b\geq0$, $aX+bY\overset{\textrm{d}}{=}(a^{\alpha}+b^{\alpha})^{1/\alpha}X$, where Y is an independent copy of X.
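As a sanity check on Definition 4 in the most familiar setting, consider (as an illustrative assumption, outside the nonlinear framework of this paper) a classical probability space with its linear expectation, $\alpha=2$, and $X\sim N(0,\sigma^{2})$ with an independent copy $Y$. Then, for $a,b\geq0$,

\[ aX+bY\sim N\big(0,(a^{2}+b^{2})\sigma^{2}\big), \qquad (a^{2}+b^{2})^{1/2}X\sim N\big(0,(a^{2}+b^{2})\sigma^{2}\big), \]

so $aX+bY\overset{\textrm{d}}{=}(a^{2}+b^{2})^{1/2}X$, which is the defining relation with $\alpha=2$.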

Remark 1. For $\alpha=1$, X is the maximal random variable discussed in [Reference Hu and Li18, Reference Peng34, Reference Peng36]. When $\alpha=2$, X becomes the G-normal random variable introduced in [Reference Peng35, Reference Peng36]. In this paper, we shall focus on the case of $\alpha\in(1,2)$ and consider X for a nonlinear $\alpha$-stable Lévy process $(X_{t})_{t\geq0}$ in the framework of [Reference Neufeld and Nutz29].

Let $\alpha \in(1,2)$, $K_{\pm}$ be a bounded measurable subset of $\mathbb{R}_{+}$, and $F_{k_{\pm}}$ be the $\alpha$-stable Lévy measure

for all $k_{-},k_{+}\in K_{\pm}$, and denote by $\Theta\;:\!=\;\{(0,0,F_{k_{\pm}})\colon k_{\pm}\in K_{\pm}\}$ the set of Lévy triplets. From [Reference Neufeld and Nutz29, Theorem 2.1], we can define a nonlinear $\alpha$-stable Lévy process $(X_{t})_{t\geq0}$ with respect to a sublinear expectation $\hat{\mathbb{E}}[\!\cdot\!] = \sup_{P\in\mathfrak{B}_{\Theta}}E^{P}[\!\cdot\!]$, where $E^{P}$ is the usual expectation under the probability measure P, and $\mathfrak{B}_{\Theta}$ is the set of all semimartingales with $\Theta$-valued differential characteristics. This implies the following:

- $(X_{t})_{t\geq0}$ is a real-valued càdlàg process and $X_{0}=0$.
- $(X_{t})_{t\geq0}$ has stationary increments, i.e. $X_{t}-X_{s}$ and $X_{t-s}$ are identically distributed for all $0\leq s\leq t$.
- $(X_{t})_{t\geq0}$ has independent increments, i.e. $X_{t}-X_{s}$ is independent of $(X_{s_{1}},\ldots,X_{s_{n}})$ for each $n\in\mathbb{N}$ and $0\leq s_{1}\leq \cdots \leq s_{n}\leq s$.

We now present some basic lemmas for the $\alpha$-stable Lévy process $(X_{t})_{t\geq0}$. We refer to [Reference Bayraktar and Munk6, Lemmas 2.6–2.9] and [Reference Neufeld and Nutz29, Lemmas 5.1–5.3] for the details of the proofs.

Lemma 1. $\hat{\mathbb{E}}[|X_{1}|]<\infty$.

Lemma 2. For all $\lambda>0$ and $t\geq0$, $X_{\lambda t}$ and $\lambda^{1/\alpha}X_{t}$ are identically distributed.
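The scaling in Lemma 2, combined with the increment properties listed above, indicates why $n^{1/\alpha}$ is the natural normalization in the $\alpha$-stable central limit theorem. The following short derivation is a sketch that uses only those properties:

\[ X_{1}=\sum_{i=1}^{n}\big(X_{i/n}-X_{(i-1)/n}\big), \qquad X_{i/n}-X_{(i-1)/n}\overset{\textrm{d}}{=}X_{1/n}\overset{\textrm{d}}{=}n^{-1/\alpha}X_{1}. \]

The first identity is a telescoping sum with $X_{0}=0$, the second relation is the stationarity of the increments, and the third is Lemma 2 with $\lambda=1/n$ and $t=1$. Hence $X_{1}$ is a sum of n increments, each distributed as $n^{-1/\alpha}X_{1}$ and each independent of the earlier values of the process.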

Lemma 3. Suppose that $\phi\in C_\textrm{b,Lip}(\mathbb{R})$. Then, for any $(t,x)\in[0,T]\times\mathbb{R}$, $u(t,x)=\hat{\mathbb{E}}[\phi(x+X_{t})]$ is the unique viscosity solution of the fully nonlinear PIDE

\begin{equation} \left \{ \begin{array}[c]{l@{\quad}l} \displaystyle \partial_{t}u(t,x) - \sup\limits_{k_{\pm}\in K_{\pm}} \bigg\{\int_{\mathbb{R}}\delta_{z}u(t,x)F_{k_{\pm}}(\textrm{d} z)\bigg\} = 0, & (t,x) \in (0,T]\times\mathbb{R}, \\[5pt] \displaystyle u(0,x) = \phi(x), & x \in \mathbb{R}, \end{array} \right. \end{equation}

with $\delta_{z}u(t,x)\;:\!=\;u(t,x+z)-u(t,x)-D_{x}u(t,x)z$. Moreover, for any $0\leq s\leq t\leq T$, $u(t,x)=\hat{\mathbb{E}}[u(t-s,x+X_{s})]$.

Lemma 4. Suppose that $\phi \in C_\textrm{b,Lip}(\mathbb{R})$. Then the function u is uniformly bounded by $|\phi|_{0}$ and jointly continuous. More precisely, for any $t,s\in[0,T]$ and $x,y\in\mathbb{R}$,

where $C_{\phi,\mathcal{K}}$ is a constant depending only on the Lipschitz constant of $\phi$ and

3. Main results

First, we construct a sublinear expectation space and introduce random variables on it. For each

![]() $k_{\pm}\in K_{\pm}\subset(\lambda_{1},\lambda_{2})$

for some

$k_{\pm}\in K_{\pm}\subset(\lambda_{1},\lambda_{2})$

for some

![]() $\lambda_{1},\lambda_{2}\geq0$

, let

$\lambda_{1},\lambda_{2}\geq0$

, let

![]() $W_{k_{\pm}}$

be a classical mean-zero random variable with a cumulative distribution function (CDF)

$W_{k_{\pm}}$

be a classical mean-zero random variable with a cumulative distribution function (CDF)

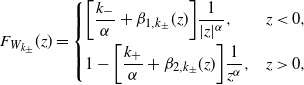

\begin{equation} F_{W_{k_{\pm}}}(z) = \left \{ \begin{array}[c]{l@{\quad}l} \displaystyle \bigg[\frac{k_{-}}{\alpha} + \beta_{1,k_{\pm}}(z)\bigg]\frac{1}{|z|^{\alpha}}, & z<0, \\[3mm] \displaystyle 1 - \bigg[\frac{k_{+}}{\alpha} + \beta_{2,k_{\pm}}(z)\bigg]\frac{1}{z^{\alpha}}, & z>0, \end{array} \right. \end{equation}

for some functions $\beta_{1,k_{\pm}}\colon(\!-\infty,0] \rightarrow \mathbb{R}$ and $\beta_{2,k_{\pm}}\colon[0,\infty)\rightarrow \mathbb{R}$ such that $\lim_{z\rightarrow-\infty}\beta_{1,k_{\pm}}(z)=\lim_{z\rightarrow \infty}\beta_{2,k_{\pm}}(z)=0$. Define a sublinear expectation $\tilde{\mathbb{E}}$ on $C_\textrm{Lip}(\mathbb{R})$ by

\[ \tilde{\mathbb{E}}[\varphi] = \sup_{k_{\pm}\in K_{\pm}}\int_{\mathbb{R}}\varphi(z)\,\textrm{d} F_{W_{k_{\pm}}}(z) \]

for all $\varphi \in C_\textrm{Lip}(\mathbb{R})$. Clearly, $(\mathbb{R},C_\textrm{Lip}(\mathbb{R}),\tilde{\mathbb{E}})$ is a sublinear expectation space. Let $\xi$ be a random variable on this space given by $\xi(z)=z$ for all $z\in \mathbb{R}$. Since each $W_{k_{\pm}}$ has mean zero, this yields $\tilde{\mathbb{E}}[\xi]=\tilde{\mathbb{E}}[-\xi]=0$.

We need the following assumptions, which are motivated by [Reference Bayraktar and Munk6, Example 4.2].

Assumption 1. For each $k_{\pm}\in K_{\pm}$, $\beta_{1,k_{\pm}}$ and $\beta_{2,k_{\pm}}$ are continuously differentiable functions in (4) satisfying $\int_{\mathbb{R}}z\,\textrm{d} F_{W_{k_{\pm}}}(z)=0$.

Assumption 2. There exists a constant $M>0$ such that, for any $k_{\pm}\in K_{\pm}$, the following quantities are less than M:

Assumption 3. There exists a constant

![]() $q>0$

such that, for any

$q>0$

such that, for any

![]() $k_{\pm}\in K_{\pm}$

and

$k_{\pm}\in K_{\pm}$

and

![]() $\Delta \in(0,1)$

, the following quantities are less than

$\Delta \in(0,1)$

, the following quantities are less than

![]() $C\Delta^{q}$

:

$C\Delta^{q}$

:

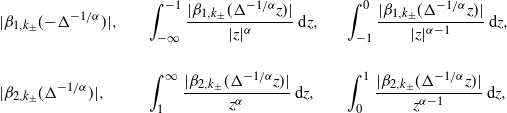

$$\begin{array}[c]{l@{\quad}l@{\quad}l} \displaystyle|\beta_{1,k_{\pm}}(\!-\!\Delta^{-1/\alpha})|,& \displaystyle\int_{-\infty}^{-1}\frac{|\beta_{1,k_{\pm}}(\Delta^{-1/\alpha}z)|}{|z|^{\alpha}}\,\textrm{d} z, & \displaystyle\int_{-1}^{0}\frac{|\beta_{1,k_{\pm}}(\Delta^{-1/\alpha}z)|}{|z|^{\alpha-1}}\,\textrm{d} z, \\[5pt] & & \\[5pt] \displaystyle|\beta_{2,k_{\pm}}(\Delta^{-1/\alpha})|, & \displaystyle\int_{1}^{\infty}\frac{|\beta_{2,k_{\pm}}(\Delta^{-1/\alpha}z)|}{z^{\alpha}}\,\textrm{d} z, & \displaystyle\int_{0}^{1}\frac{|\beta_{2,k_{\pm}}(\Delta^{-1/\alpha}z)|}{z^{\alpha-1}}\,\textrm{d} z, \end{array} $$

where $C>0$ is a constant.

Remark 2. Note that by Assumption 1 alone, the terms in Assumption 2 are finite and the terms in Assumption 3 approach zero as $\Delta\rightarrow0$. In other words, the content of Assumptions 2 and 3 is the uniform bounds and the existence of minimum convergence rates.

Remark 3. By (4), we can write

![]() $\beta_{1,k_{\pm}}$

and

$\beta_{1,k_{\pm}}$

and

![]() $\beta_{2,k_{\pm}}$

as

$\beta_{2,k_{\pm}}$

as

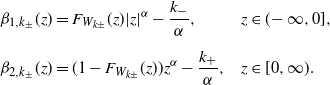

\begin{alignat*}{2} \beta_{1,k_{\pm}}(z) & = F_{W_{k\pm}}(z)|z|^{\alpha} - \frac{k_{-}}{\alpha}, & z & \in (\!-\infty,0], \\[5pt] \beta_{2,k_{\pm}}(z) & = (1-F_{W_{k\pm}}(z))z^{\alpha} - \frac{k_{+}}{\alpha}, \quad & z & \in \lbrack0,\infty). \end{alignat*}

Under Assumption 1, it can be checked that for any $k_{\pm}\in K_{\pm}$ the following quantities are uniformly bounded (we also assume the uniform bound is M):

\[ \begin{array}[c]{l@{\quad}l@{\quad}l} \displaystyle|\beta_{1,k_{\pm}}(\!-\!1)|, & & \displaystyle\int_{-1}^{0}\frac{|-\beta_{1,k_{\pm}}^{\prime}(z)z+\alpha\beta_{1,k_{\pm}}(z)|} {|z|^{\alpha-1}}\,\textrm{d} z, \\[5pt] & & \\[5pt] \displaystyle|\beta_{2,k_{\pm}}(1)|, & & \displaystyle\int_{0}^{1}\frac{|-\beta_{2,k_{\pm}}^{\prime}(z)z+\alpha\beta_{2,k_{\pm}}(z)|}{z^{\alpha-1}}\,\textrm{d} z. \end{array} \]
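The two formulas of Remark 3 can be read as a recipe for recovering the correction terms from a given CDF. The following is a minimal sketch of that recipe; the function names, the parameter values, and the toy right tail (chosen to mimic the power-type correction used later in Example 2) are illustrative assumptions, not objects defined in the paper.

```python
def beta1_from_cdf(cdf, z, k_minus, alpha):
    """beta_1(z) = F(z)|z|^alpha - k_-/alpha for z < 0, as in Remark 3."""
    return cdf(z) * abs(z) ** alpha - k_minus / alpha


def beta2_from_cdf(cdf, z, k_plus, alpha):
    """beta_2(z) = (1 - F(z)) z^alpha - k_+/alpha for z > 0, as in Remark 3."""
    return (1.0 - cdf(z)) * z ** alpha - k_plus / alpha


# Hypothetical parameters (assumptions for illustration only).
ALPHA, BETA, K_PLUS, A2 = 1.5, 1.8, 0.3, 0.1


def toy_cdf_right_tail(z):
    """Toy CDF on z >= 1 with 1 - F(z) = (k_+/alpha) z^-alpha + a_2 z^-beta."""
    return 1.0 - (K_PLUS / ALPHA) * z ** (-ALPHA) - A2 * z ** (-BETA)


# For this toy tail the recovered correction is a_2 z^(alpha - beta), which tends to 0.
for z in (1.0, 2.0, 10.0):
    print(z, beta2_from_cdf(toy_cdf_right_tail, z, K_PLUS, ALPHA))
```

Only the right tail on $z\geq1$ is specified here; a full CDF would also need the left tail and the behaviour on $(-1,1)$, subject to the mean-zero condition in Assumption 1.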

\[ \begin{array}[c]{l@{\quad}l@{\quad}l} \displaystyle|\beta_{1,k_{\pm}}(\!-\!1)|, & & \displaystyle\int_{-1}^{0}\frac{|-\beta_{1,k_{\pm}}^{\prime}(z)z+\alpha\beta_{1,k_{\pm}}(z)|} {|z|^{\alpha-1}}\,\textrm{d} z, \\[5pt] & & \\[5pt] \displaystyle|\beta_{2,k_{\pm}}(1)|, & & \displaystyle\int_{0}^{1}\frac{|-\beta_{2,k_{\pm}}^{\prime}(z)z+\alpha\beta_{2,k_{\pm}}(z)|}{z^{\alpha-1}}\,\textrm{d} z. \end{array} \]

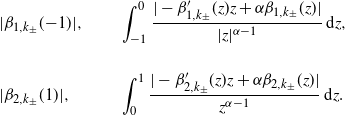

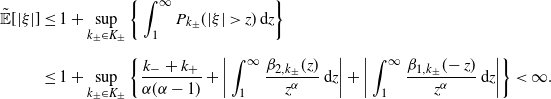

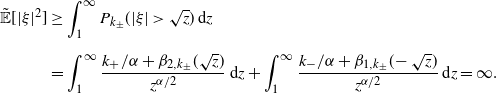

Remark 4. Under Assumptions 1 and 2, it is easy to check that

where $\{P_{k_{\pm}}, k_{\pm}\in K_{\pm}\}$ is the set of probability measures associated with the uncertainty distributions $\{F_{W_{k_{\pm}}},k_{\pm}\in K_{\pm}\}$. It follows that

\begin{align*} \tilde{\mathbb{E}}[|\xi|] & \leq 1 + \sup_{k_{\pm}\in K_{\pm}}\bigg\{\int_{1}^{\infty}P_{k_{\pm}}(|\xi|>z)\,\textrm{d} z\bigg\} \\[5pt] & \leq 1 + \sup_{k_{\pm}\in K_{\pm}}\bigg\{\frac{k_{-}+k_{+}}{\alpha(\alpha-1)} + \bigg\vert\int_{1}^{\infty}\frac{\beta_{2,k_{\pm}}(z)}{z^{\alpha}}\,\textrm{d} z\bigg\vert + \bigg\vert\int_{1}^{\infty}\frac{\beta_{1,k_{\pm}}(\!-z)}{z^{\alpha}}\,\textrm{d} z\bigg\vert\bigg\} < \infty. \end{align*}

Similarly,

\begin{align*} \tilde{\mathbb{E}}[|\xi|^{2}] & \geq \int_{1}^{\infty}P_{k_{\pm}}(|\xi|>\sqrt{z})\,\textrm{d} z \\[5pt] & = \int_{1}^{\infty}\frac{k_{+}/\alpha+\beta_{2,k_{\pm}}(\sqrt{z})}{z^{\alpha/2}}\,\textrm{d} z + \int_{1}^{\infty}\frac{k_{-}/\alpha+\beta_{1,k_{\pm}}(\!-\sqrt{z})}{z^{\alpha/2}}\,\textrm{d} z = \infty. \end{align*}
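As a sanity check on the two displays, consider the simplest hypothetical case in which the correction terms vanish on the tails, $\beta_{1,k_{\pm}}(z)=0$ for $z\leq-1$ and $\beta_{2,k_{\pm}}(z)=0$ for $z\geq1$. The positive-tail contributions then reduce to elementary integrals (the cutoff $R$ is introduced only for this illustration):

\[ \int_{1}^{\infty}\frac{k_{+}/\alpha}{z^{\alpha}}\,\textrm{d} z = \frac{k_{+}}{\alpha(\alpha-1)} < \infty, \qquad \int_{1}^{\infty}\frac{k_{+}/\alpha}{z^{\alpha/2}}\,\textrm{d} z = \frac{k_{+}}{\alpha}\lim_{R\rightarrow\infty}\frac{R^{1-\alpha/2}-1}{1-\alpha/2} = \infty. \]

The first integral converges because $\alpha>1$ and, together with the analogous negative-tail term, gives the contribution $(k_{-}+k_{+})/(\alpha(\alpha-1))$ in the first-moment bound. The second diverges because $\alpha/2<1$ (provided $k_{+}>0$), which is why $\xi$ has a finite first moment but an infinite second moment.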

Let $(\xi_{i})_{i=1}^{\infty}$ be a sequence of i.i.d. $\mathbb{R}$-valued random variables defined on $(\mathbb{R},C_\textrm{Lip}(\mathbb{R}),\tilde{\mathbb{E}})$ in the sense that $\xi_{1}=\xi$, $\xi_{i+1}\overset{\textrm{d}}{=}\xi_{i}$, and $\xi_{i+1}\perp(\xi_{1},\xi_{2},\ldots,\xi_{i})$ for each $i\in \mathbb{N}$; we write

\begin{equation} \bar{S}_{n}\;:\!=\;n^{-{1}/{\alpha}}\sum_{i=1}^{n}\xi_{i}. \end{equation}

Now we state our first main result.

Theorem 1. Suppose that Assumptions 1–3 hold. Let $(\bar{S}_{n})_{n=1}^{\infty}$ be a sequence as defined in (5), and $(X_{t})_{t\geq0}$ be a nonlinear $\alpha$-stable Lévy process with the characteristic set $\Theta$. Then, for any $\phi \in C_\textrm{b,Lip}(\mathbb{R})$, $\big|\tilde{\mathbb{E}}[\phi(\bar{S}_{n})] - \hat{\mathbb{E}}[\phi(X_{1})]\big| \leq C_{0}n^{-\Gamma(\alpha,q)}$, where

with $q>0$ given in Assumption 3, and $C_{0}$ is a constant depending on the Lipschitz constant of $\phi$, which is given in Theorem 2.

Remark 5. The classical $\alpha$-stable central limit theorem (see, e.g., [Reference Ibragimov and Linnik21, Theorem 2.6.7]) states that for a classical mean-zero random variable $\xi_{1}$, the sequence $\bar{S}_{n}$ converges in law to $X_{1}$ as $n\rightarrow \infty$ if and only if the CDF of $\xi_{1}$ has the form given in (4), where $(X_{t})_{t\geq0}$ is a classical Lévy process with triplet $(0,0,F_{k_{\pm}})$. In the framework of sublinear expectation, sufficient conditions for the $\alpha$-stable central limit theorem are given in [Reference Bayraktar and Munk6], which showed that, for a mean-zero random variable $\xi_{1}$ under the sublinear expectation $\tilde{\mathbb{E}}$ defined above, $\bar{S}_{n}$ converges in law to $X_{1}$ as $n\rightarrow\infty$, where $(X_{t})_{t\geq0}$ is a nonlinear Lévy process with triplet set $\Theta$. Here, Theorem 1 further provides an explicit convergence rate for the limit theorem in [Reference Bayraktar and Munk6], which can be seen as a special $\alpha$-stable central limit theorem under the sublinear expectation.

Remark 6. Assumptions 1–3 are sufficient conditions for [Reference Bayraktar and Munk6, Theorem 3.1]. Indeed, by [Reference Bayraktar and Munk6, Proposition 2.10], we know that for any

![]() $0<h<1$

,

$0<h<1$

,

![]() $u\in C_\textrm{b}^{1,2}([h,1+h]\times\mathbb{R})$

. Under Assumptions 1–3, by using II from (19) we get, for any

$u\in C_\textrm{b}^{1,2}([h,1+h]\times\mathbb{R})$

. Under Assumptions 1–3, by using II from (19) we get, for any

![]() $\phi \in C_\textrm{b,Lip}(\mathbb{R})$

and

$\phi \in C_\textrm{b,Lip}(\mathbb{R})$

and

![]() $0<h<1$

,

$0<h<1$

,

uniformly on

![]() $[0,1]\times \mathbb{R}$

as

$[0,1]\times \mathbb{R}$

as

![]() $n\rightarrow \infty$

, where v is the unique viscosity solution of

$n\rightarrow \infty$

, where v is the unique viscosity solution of

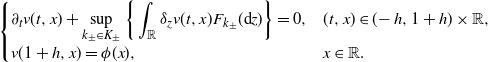

\[ \left\{ \begin{array}[c]{l@{\quad}l} \displaystyle \partial_{t}v(t,x) + \sup\limits_{k_{\pm}\in K_{\pm}}\bigg\{ \int_{\mathbb{R}}\delta_{z}v(t,x)F_{k_{\pm}}(\textrm{d} z)\bigg\} = 0, & (t,x) \in (\!-h,1+h)\times\mathbb{R}, \\[5pt] \displaystyle v(1+h,x) = \phi(x), & x\in\mathbb{R}. \end{array} \right. \]

In addition, the necessary conditions for the $\alpha$-stable central limit theorem under sublinear expectation are still unknown.

4. Two examples

In this section we give two examples to illustrate our results.

Example 1. Let $(\xi_{i})_{i=1}^{\infty}$ be a sequence of i.i.d. $\mathbb{R}$-valued random variables defined on $(\mathbb{R},C_\textrm{Lip}(\mathbb{R}),\tilde{\mathbb{E}})$ with CDF (4) satisfying $\beta_{1,k_{\pm}}(z)=0$ for $z\leq-1$ and $\beta_{2,k_{\pm}}(z)=0$ for $z\geq1$ with $\lambda_{2}<{\alpha}/{2}$. The exact expressions for $\beta_{1,k_{\pm}}(z)$ and $\beta_{2,k_{\pm}}(z)$ for $0<|z|<1$ are not specified here, but we require $\beta_{1,k_{\pm}}(z)$ and $\beta_{2,k_{\pm}}(z)$ to satisfy Assumption 1. It is clear that Assumption 2 holds. In addition, for each $k_{\pm}\in K_{\pm}$ and $\Delta\in(0,1)$,

where $c\;:\!=\;\sup_{z\in(0,1)}|\beta_{2,k_{\pm}}(z)|<\infty$, and similarly for the negative half-line. This indicates that Assumption 3 holds with $q=({2-\alpha})/{\alpha}$. According to Theorem 1, we get the convergence rate $\big|\tilde{\mathbb{E}}[\phi(\bar{S}_{n})]-\hat{\mathbb{E}}[\phi(X_{1})]\big|\leq C_{0}n^{-\Gamma(\alpha)}$, where

Example 2. Let $(\xi_{i})_{i=1}^{\infty}$ be a sequence of i.i.d. $\mathbb{R}$-valued random variables defined on $(\mathbb{R},C_\textrm{Lip}(\mathbb{R}),\tilde{\mathbb{E}})$ with CDF (4) satisfying $\beta_{1,k_{\pm}}(z)=a_{1}|z|^{\alpha-\beta}$ for $z\leq-1$ and $\beta_{2,k_{\pm}}(z)= a_{2}z^{\alpha-\beta}$ for $z\geq1$, with $\beta>\alpha$ and two proper constants $a_{1}, a_{2}$. The exact expressions for $\beta_{1,k_{\pm}}(z)$ and $\beta_{2,k_{\pm}}(z)$ for $0<|z|<1$ are not specified here, but we require that $\beta_{1,k_{\pm}}(z)$ and $\beta_{2,k_{\pm}}(z)$ satisfy Assumption 1. For simplicity, we will only check the integral along the positive half-line; the negative half-line case is similar. Observe that

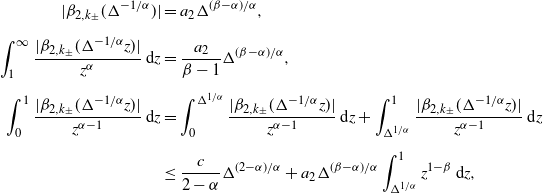

which shows that Assumption 2 holds. Also, it can be verified that, for each $k_{\pm}\in K_{\pm}$ and $\Delta \in(0,1)$,

\begin{align*} |\beta_{2,k_{\pm}}(\Delta^{-1/\alpha})| & = a_{2}\Delta^{({\beta-\alpha})/{\alpha}}, \\[5pt] \int_{1}^{\infty}\frac{|\beta_{2,k_{\pm}}(\Delta^{-1/\alpha}z)|}{z^{\alpha}}\,\textrm{d} z & = \frac{a_{2}}{\beta-1}\Delta^{({\beta-\alpha})/{\alpha}}, \\[5pt] \int_{0}^{1}\frac{|\beta_{2,k_{\pm}}(\Delta^{-1/\alpha}z)|}{z^{\alpha-1}}\,\textrm{d} z & = \int_{0}^{\Delta^{1/\alpha}}\frac{|\beta_{2,k_{\pm}}(\Delta^{-1/\alpha}z)|}{z^{\alpha-1}}\,\textrm{d} z + \int_{\Delta^{1/\alpha}}^{1}\frac{|\beta_{2,k_{\pm}}(\Delta^{-1/\alpha}z)|}{z^{\alpha-1}}\,\textrm{d} z \\[5pt] & \leq \frac{c}{2-\alpha}\Delta^{({2-\alpha})/{\alpha}} + a_{2}\Delta^{({\beta-\alpha})/{\alpha}}\int_{\Delta^{1/\alpha}}^{1}z^{1-\beta}\,\textrm{d} z, \end{align*}

where $c=\sup_{z\in(0,1)}|\beta_{2,k_{\pm}}(z)|<\infty$. We further distinguish three cases based on the value of $\beta$.

If $\beta=2$,

where $C=({c}/({2-\alpha}))+a_{2}$, for any small $\varepsilon>0$.

If $\alpha<\beta<2$,

where

If $\beta>2$, it follows that

where

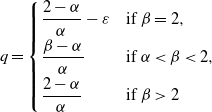

Then, Assumption 3 holds with

\[ q=\left \{ \begin{array}[c]{l@{\quad}l} \dfrac{2-\alpha}{\alpha}-\varepsilon & \text{if }\beta=2, \\[2mm] \dfrac{\beta-\alpha}{\alpha} & \text{if }\alpha<\beta<2, \\[2mm] \dfrac{2-\alpha}{\alpha} & \text{if }\beta>2 \end{array} \right. \]

\[ q=\left \{ \begin{array}[c]{l@{\quad}l} \dfrac{2-\alpha}{\alpha}-\varepsilon & \text{if }\beta=2, \\[2mm] \dfrac{\beta-\alpha}{\alpha} & \text{if }\alpha<\beta<2, \\[2mm] \dfrac{2-\alpha}{\alpha} & \text{if }\beta>2 \end{array} \right. \]

for any small

![]() $\varepsilon>0$

. From Theorem 1, we immediately obtain

$\varepsilon>0$

. From Theorem 1, we immediately obtain

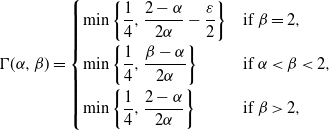

![]() $\big|\tilde{\mathbb{E}}[\phi(\bar{S}_{n})]-\hat{\mathbb{E}}[\phi(X_{1})]\big|\leq C_{0}n^{-\Gamma(\alpha,\beta)}$

, where

$\big|\tilde{\mathbb{E}}[\phi(\bar{S}_{n})]-\hat{\mathbb{E}}[\phi(X_{1})]\big|\leq C_{0}n^{-\Gamma(\alpha,\beta)}$

, where

\[ \Gamma(\alpha,\beta) = \left \{ \begin{array}[c]{l@{\quad}l} \min\bigg\{\dfrac{1}{4},\dfrac{2-\alpha}{2\alpha}-\dfrac{\varepsilon}{2}\bigg\} & \text{if }\beta=2, \\[3mm] \min\bigg\{\dfrac{1}{4},\dfrac{\beta-\alpha}{2\alpha}\bigg\} & \text{if }\alpha<\beta<2, \\[3mm] \min\bigg\{\dfrac{1}{4},\dfrac{2-\alpha}{2\alpha}\bigg\} & \text{if }\beta>2, \end{array} \right. \]

with $\varepsilon>0$.
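To make the case distinction concrete, the sketch below evaluates the exponent $q$ and the rate $\Gamma(\alpha,\beta)$ exactly as displayed in Example 2. In each of the three displayed cases the second entry of the minimum equals $q/2$, which is what the code uses. The function names and the default value of `eps` are illustrative assumptions.

```python
def q_example2(alpha, beta, eps=1e-3):
    """Exponent q of Assumption 3 in Example 2, following the displayed case split."""
    if not (1.0 < alpha < 2.0) or beta <= alpha:
        raise ValueError("need 1 < alpha < 2 and beta > alpha")
    if beta == 2.0:
        return (2.0 - alpha) / alpha - eps  # small loss eps in the borderline case
    if beta < 2.0:
        return (beta - alpha) / alpha
    return (2.0 - alpha) / alpha


def gamma_example2(alpha, beta, eps=1e-3):
    """Rate Gamma(alpha, beta) of Example 2: min{1/4, q/2} in each displayed case."""
    return min(0.25, q_example2(alpha, beta, eps) / 2.0)


# The rate saturates at 1/4 for alpha close to 1 and degrades as beta approaches alpha.
for alpha, beta in [(1.2, 3.0), (1.5, 3.0), (1.5, 1.8)]:
    print(alpha, beta, gamma_example2(alpha, beta))
```

For instance, with $\alpha=1.5$ and $\beta=1.8$ the tail correction decays slowly and the rate is $(\beta-\alpha)/(2\alpha)=0.1$, whereas for $\beta>2$ the rate no longer depends on $\beta$.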

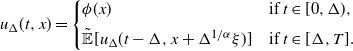

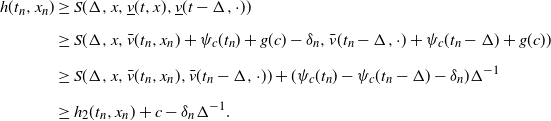

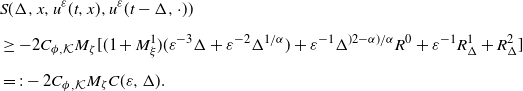

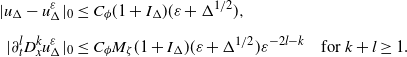

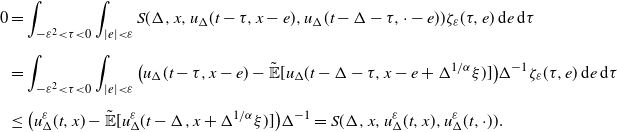

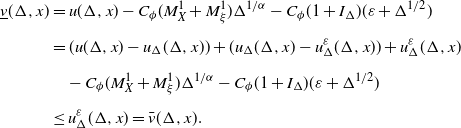

5. Proof of Theorem 1: Monotone scheme method

In this section we introduce the numerical analysis tools of nonlinear partial differential equations to prove Theorem 1. Noting that

![]() $\hat{\mathbb{E}}[\phi(X_{1})]=u(1,0)$

, where u is the viscosity solution of (3), we propose a discrete scheme to approximate u by merely using the random variable

$\hat{\mathbb{E}}[\phi(X_{1})]=u(1,0)$

, where u is the viscosity solution of (3), we propose a discrete scheme to approximate u by merely using the random variable

![]() $\xi$

under

$\xi$

under

![]() $\tilde{\mathbb{E}}$

as input. For given

$\tilde{\mathbb{E}}$

as input. For given

![]() $T>0$

and

$T>0$

and

![]() $\Delta \in(0,1)$

, define

$\Delta \in(0,1)$

, define

![]() $u_{\Delta}\colon[0,T]\times \mathbb{R\rightarrow R}$

recursively by

$u_{\Delta}\colon[0,T]\times \mathbb{R\rightarrow R}$

recursively by

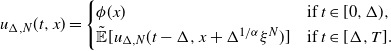

\begin{equation} u_{\Delta}(t,x) = \left\{ \begin{array}[c]{l@{\quad}l} \phi(x) & \text{if } t\in \lbrack0,\Delta), \\[5pt] \tilde{\mathbb{E}}[u_{\Delta}(t-\Delta,x+\Delta^{{1}/{\alpha}}\xi)] & \text{if } t\in\lbrack\Delta,T]. \end{array} \right.\end{equation}

From the above recursive process, we can see that, for each $x\in \mathbb{R}$ and $n\in \mathbb{N}$ such that $n\Delta \leq T$, $u_{\Delta}(\cdot,x)$ is constant on the interval $[n\Delta,(n+1)\Delta \wedge T)$, that is, $u_{\Delta}(t,x)=u_{\Delta}(n\Delta,x)$ for all $t\in \lbrack n\Delta,(n+1)\Delta \wedge T)$.

By induction (see [Reference Huang and Liang20, Theorem 2.1]), we can derive that, for all $n\in \mathbb{N}$ such that $n\Delta \leq T$ and $x\in \mathbb{R}$,

\[ u_{\Delta}(n\Delta,x) = \tilde{\mathbb{E}}\Bigg[\phi\Bigg(x+\Delta^{{1}/{\alpha}}\sum_{i=1}^{n}\xi_{i}\Bigg)\Bigg].\]
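The recursion (6) can be illustrated on a toy model. The sketch below replaces the heavy-tailed family $\{F_{W_{k_{\pm}}}\}$ by a hypothetical finite family of mean-zero discrete laws, so that each sublinear expectation is a maximum of finite sums; the family, the value of `ALPHA`, and all function names are assumptions made for illustration and do not satisfy the tail form (4). The point is only the structure of the scheme: one sublinear expectation per time step, and piecewise constancy in $t$.

```python
# Hypothetical toy family of mean-zero discrete laws, each a list of (atom, probability).
# These are NOT the heavy-tailed laws of the paper; they only make the recursion finite.
TOY_FAMILY = [
    [(-1.0, 0.5), (1.0, 0.5)],
    [(-2.0, 0.2), (0.5, 0.8)],
]
ALPHA = 1.5  # hypothetical stability index in (1, 2)


def sublinear_expectation(f):
    """Supremum over the toy family of the classical expectation of f."""
    return max(sum(p * f(z) for z, p in law) for law in TOY_FAMILY)


def u_delta_at_step(n, x, delta, phi):
    """u_Delta(n * delta, x): phi at step 0, then one sublinear expectation per step."""
    if n == 0:
        return phi(x)
    scale = delta ** (1.0 / ALPHA)
    return sublinear_expectation(
        lambda z: u_delta_at_step(n - 1, x + scale * z, delta, phi)
    )


def u_delta(t, x, delta, phi):
    """u_Delta(t, x), constant in t on each interval [n * delta, (n + 1) * delta)."""
    n = int(t / delta + 1e-9)  # number of completed time steps (guarded against rounding)
    return u_delta_at_step(n, x, delta, phi)


print(u_delta(0.3, 0.0, 0.1, abs))
```

The cost of this naive recursion grows exponentially in the number of steps, so it is meant only for a handful of steps; it is not the method used in the proofs.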

\[ u_{\Delta}(n\Delta,x) = \tilde{\mathbb{E}}\Bigg[\phi\Bigg(x+\Delta^{{1}/{\alpha}}\sum_{i=1}^{n}\xi_{i}\Bigg)\Bigg].\]

In particular, taking

![]() $T=1$

and

$T=1$

and

![]() $\Delta={1}/{n}$

, we have

$\Delta={1}/{n}$

, we have

![]() $u_{\Delta}(1,0)=\tilde{\mathbb{E}[}\phi(\bar{S}_{n})]$

, and Theorem 1 follows from the following result.

$u_{\Delta}(1,0)=\tilde{\mathbb{E}[}\phi(\bar{S}_{n})]$

, and Theorem 1 follows from the following result.

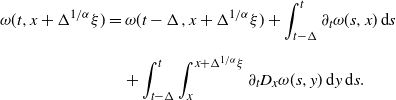

Theorem 2. Suppose that Assumptions 1–3 hold, and $\phi\in C_\textrm{b,Lip}(\mathbb{R})$. Then, for any $(t,x) \in \lbrack0,T]\times\mathbb{R}$, $\vert u(t,x) - u_{\Delta}(t,x)\vert \leq C_{0}\Delta^{\Gamma(\alpha,q)}$, where the Berry–Esseen constant is $C_{0}=L_{0}\vee U_{0}$, with $L_{0}$ and $U_{0}$ given explicitly in Lemmas 11 and 12, respectively, and

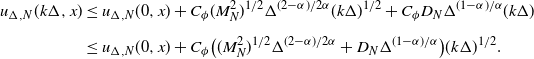

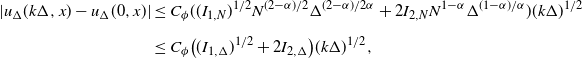

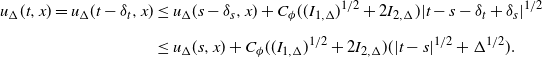

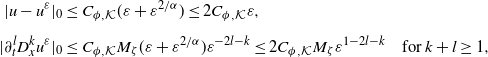

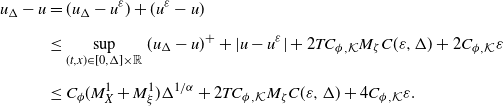

5.1. Regularity estimates

To prove Theorem 2, we first need to establish the space and time regularity properties of

![]() $u_{\Delta}$

, which are crucial for proving the convergence of

$u_{\Delta}$

, which are crucial for proving the convergence of

![]() $u_{\Delta}$

to u and determining its convergence rate. Before showing our regularity estimates of

$u_{\Delta}$

to u and determining its convergence rate. Before showing our regularity estimates of

![]() $u_{\Delta}$

, we set

$u_{\Delta}$

, we set

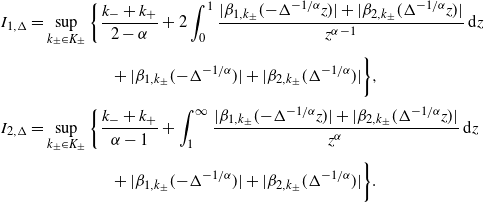

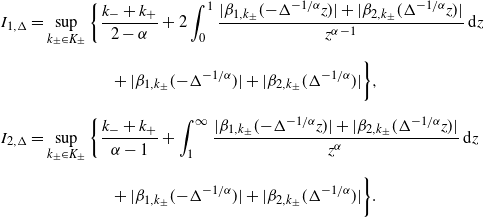

\begin{align*} I_{1,\Delta} & = \sup\limits_{k_{\pm}\in K_{\pm}}\bigg\{\frac{k_{-}+k_{+}}{2-\alpha} + 2\int_{0}^{1}\frac{|\beta_{1,k_{\pm}}(\!-\!\Delta^{-{1}/{\alpha}}z)|+|\beta_{2,k_{\pm}}(\Delta^{-{1}/{\alpha}}z)|} {z^{\alpha-1}}\,\textrm{d} z \\[2pt] & \qquad\qquad\quad + |\beta_{1,k_{\pm}}(\!-\!\Delta^{-{1}/{\alpha}})| + |\beta_{2,k_{\pm}}(\Delta^{-{1}/{\alpha}})|\bigg\}, \\[2pt] I_{2,\Delta} & = \sup\limits_{k_{\pm}\in K_{\pm}}\bigg\{\frac{k_{-}+k_{+}}{\alpha-1} + \int_{1}^{\infty}\frac{|\beta_{1,k_{\pm}}(\!-\!\Delta^{-{1}/{\alpha}}z)|+|\beta_{2,k_{\pm}}(\Delta^{-{1}/{\alpha}}z)|} {z^{\alpha}}\,\textrm{d} z \\[2pt] & \qquad\qquad\quad + |\beta_{1,k_{\pm}}(\!-\!\Delta^{-{1}/{\alpha}})| + |\beta_{2,k_{\pm}}(\Delta^{-{1}/{\alpha}})|\bigg\}.\end{align*}

\begin{align*} I_{1,\Delta} & = \sup\limits_{k_{\pm}\in K_{\pm}}\bigg\{\frac{k_{-}+k_{+}}{2-\alpha} + 2\int_{0}^{1}\frac{|\beta_{1,k_{\pm}}(\!-\!\Delta^{-{1}/{\alpha}}z)|+|\beta_{2,k_{\pm}}(\Delta^{-{1}/{\alpha}}z)|} {z^{\alpha-1}}\,\textrm{d} z \\[2pt] & \qquad\qquad\quad + |\beta_{1,k_{\pm}}(\!-\!\Delta^{-{1}/{\alpha}})| + |\beta_{2,k_{\pm}}(\Delta^{-{1}/{\alpha}})|\bigg\}, \\[2pt] I_{2,\Delta} & = \sup\limits_{k_{\pm}\in K_{\pm}}\bigg\{\frac{k_{-}+k_{+}}{\alpha-1} + \int_{1}^{\infty}\frac{|\beta_{1,k_{\pm}}(\!-\!\Delta^{-{1}/{\alpha}}z)|+|\beta_{2,k_{\pm}}(\Delta^{-{1}/{\alpha}}z)|} {z^{\alpha}}\,\textrm{d} z \\[2pt] & \qquad\qquad\quad + |\beta_{1,k_{\pm}}(\!-\!\Delta^{-{1}/{\alpha}})| + |\beta_{2,k_{\pm}}(\Delta^{-{1}/{\alpha}})|\bigg\}.\end{align*}

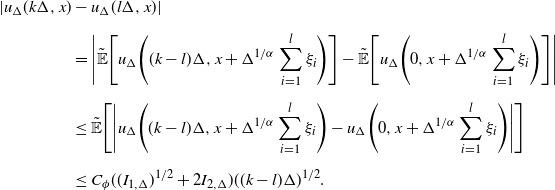

Theorem 3. Suppose that Assumptions 1 and 3 hold, and

![]() $\phi\in C_\textrm{b,Lip}(\mathbb{R})$

. Then:

$\phi\in C_\textrm{b,Lip}(\mathbb{R})$

. Then:

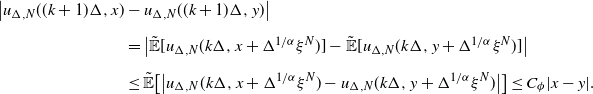

-

(i) for any

$t\in\lbrack0,T]$

and

$t\in\lbrack0,T]$

and

$x,y\in \mathbb{R}$

,

$x,y\in \mathbb{R}$

,

$\vert u_{\Delta}(t,x) - u_{\Delta}(t,y)\vert \leq C_{\phi}|x-y|$

;

$\vert u_{\Delta}(t,x) - u_{\Delta}(t,y)\vert \leq C_{\phi}|x-y|$

; -

(ii) for any

$t,s\in\lbrack0,T]$

and

$t,s\in\lbrack0,T]$

and

$x\in \mathbb{R}$

,

$x\in \mathbb{R}$

,

$\vert u_{\Delta}(t,x) - u_{\Delta}(s,x)\vert \leq C_{\phi}I_{\Delta}(|t-s|^{1/2}+\Delta^{1/2})$

;

$\vert u_{\Delta}(t,x) - u_{\Delta}(s,x)\vert \leq C_{\phi}I_{\Delta}(|t-s|^{1/2}+\Delta^{1/2})$

;

where

![]() $C_{\phi}$

is the Lipschitz constant of

$C_{\phi}$

is the Lipschitz constant of

![]() $\phi$

and,

$\phi$

and,

![]() $I_{\Delta}=\sqrt{I_{1,\Delta}}+2I_{2,\Delta}$

with

$I_{\Delta}=\sqrt{I_{1,\Delta}}+2I_{2,\Delta}$

with

![]() $I_{\Delta}<\infty$

.

$I_{\Delta}<\infty$

.

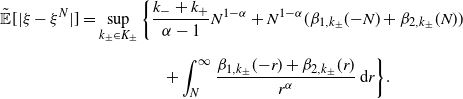

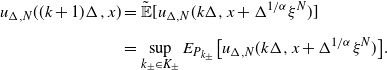

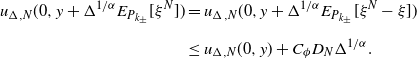

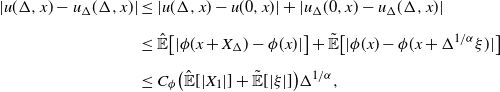

Notice that $\tilde{\mathbb{E}}[\xi^{2}]=\infty$, so the classical method developed in [Reference Krylov28] fails. To prove Theorem 3, for fixed $N>0$, we define $\xi^{N}\;:\!=\;\xi\mathbf{1}_{\{|\xi|\leq N\}}$ and introduce the truncated scheme $u_{\Delta,N}\colon[0,T]\times \mathbb{R}\rightarrow \mathbb{R}$ recursively by

\begin{equation} u_{\Delta,N}(t,x) = \left\{ \begin{array}[c]{l@{\quad}l} \phi(x) & \text{if } t\in \lbrack0,\Delta), \\[2pt] \tilde{\mathbb{E}}[u_{\Delta,N}(t-\Delta,x+\Delta^{{1}/{\alpha}}\xi^{N})] & \text{if } t\in\lbrack \Delta,T]. \end{array} \right.\end{equation}

We get the following estimates.

Lemma 5. For each fixed $N>0$, $\tilde{\mathbb{E}}[|\xi^{N}|^{2}]=N^{2-\alpha}I_{1,N}$, where

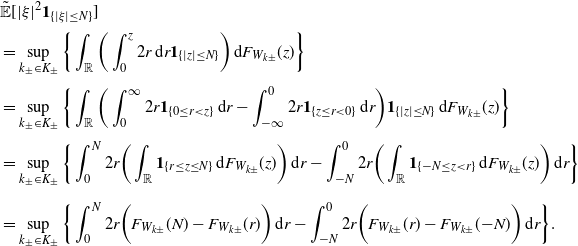

Proof. Using Fubini’s theorem,

\begin{align*} & \tilde{\mathbb{E}}[|\xi|^{2}\mathbf{1}_{\{|\xi|\leq N\}}] \\[2pt] & = \sup_{k_{\pm}\in K_{\pm}}\bigg\{ \int_{\mathbb{R}}\bigg(\int_{0}^{z}2r\,\textrm{d} r\mathbf{1}_{\{|z|\leq N\}}\bigg)\,\textrm{d} F_{W_{k\pm}}(z)\bigg\} \\[2pt] & = \sup_{k_{\pm}\in K_{\pm}}\bigg\{ \int_{\mathbb{R}}\bigg(\int_{0}^{\infty}2r\mathbf{1}_{\{0\leq r<z\}}\,\textrm{d} r - \int_{-\infty}^{0}2r\mathbf{1}_{\{z\leq r<0\}}\,\textrm{d} r\bigg)\mathbf{1}_{\{|z|\leq N\}}\,\textrm{d} F_{W_{k\pm}}(z)\bigg\} \\[2pt] & = \sup_{k_{\pm}\in K_{\pm}}\bigg\{ \int_{0}^{N}2r\bigg(\int_{\mathbb{R}}\mathbf{1}_{\{r\leq z\leq N\}}\,\textrm{d} F_{W_{k\pm}}(z)\bigg)\,\textrm{d} r - \int_{-N}^{0}2r\bigg(\int_{\mathbb{R}}\mathbf{1}_{\{-N\leq z<r\}}\,\textrm{d} F_{W_{k\pm}}(z)\bigg)\,\textrm{d} r\bigg\} \\[5pt] & = \sup_{k_{\pm}\in K_{\pm}}\bigg\{ \int_{0}^{N}2r\bigg(F_{W_{k\pm}}(N)-F_{W_{k\pm}}(r)\bigg)\,\textrm{d} r - \int_{-N}^{0}2r\bigg(F_{W_{k\pm}}(r)-F_{W_{k\pm}}(\!-\!N)\bigg)\,\textrm{d} r\bigg\}. \end{align*}

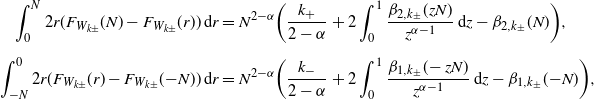

By changing variables, it is straightforward to check that

\begin{align*} \int_{0}^{N}2r(F_{W_{k\pm}}(N)-F_{W_{k\pm}}(r))\,\textrm{d} r & = N^{2-\alpha}\bigg(\frac{k_{+}}{2-\alpha} + 2\int_{0}^{1}\frac{\beta_{2,k_{\pm}}(zN)}{z^{\alpha-1}}\,\textrm{d} z - \beta_{2,k_{\pm}}(N)\bigg), \\[5pt] \int_{-N}^{0}2r(F_{W_{k\pm}}(r)-F_{W_{k\pm}}(\!-\!N))\,\textrm{d} r & = N^{2-\alpha}\bigg(\frac{k_{-}}{2-\alpha} + 2\int_{0}^{1}\frac{\beta_{1,k_{\pm}}(\!-zN)}{z^{\alpha-1}}\,\textrm{d} z - \beta_{1,k_{\pm}}(\!-\!N)\bigg), \end{align*}

which immediately implies the result.

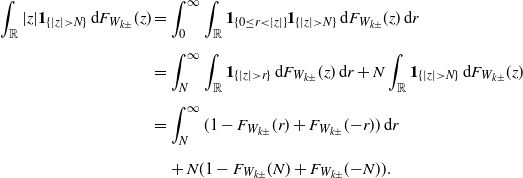

Lemma 6. For each fixed $N>0$, $\tilde{\mathbb{E}}[|\xi-\xi^{N}|]=N^{1-\alpha}I_{2,N}$, where

Proof. Notice that

Observe by Fubini’s theorem that

\begin{align} \int_{\mathbb{R}}|z|\mathbf{1}_{\{|z|>N\}}\,\textrm{d} F_{W_{k\pm}}(z) & = \int_{0}^{\infty}\int_{\mathbb{R}}\mathbf{1}_{\{0\leq r<|z|\}}\mathbf{1}_{\{|z|>N\}}\,\textrm{d} F_{W_{k\pm}}(z)\,\textrm{d} r \nonumber \\[5pt] & = \int_{N}^{\infty}\int_{\mathbb{R}}\mathbf{1}_{\{|z|>r\}}\,\textrm{d} F_{W_{k\pm}}(z)\,\textrm{d} r + N\int_{\mathbb{R}}\mathbf{1}_{\{|z|>N\}}\,\textrm{d} F_{W_{k\pm}}(z) \nonumber \\[5pt] & = \int_{N}^{\infty}(1-F_{W_{k\pm}}(r)+F_{W_{k\pm}}(\!-\!r))\,\textrm{d} r \nonumber \\[5pt] & \quad + N(1-F_{W_{k\pm}}(N)+F_{W_{k\pm}}(\!-\!N)). \end{align}

\begin{align*} \tilde{\mathbb{E}}[|\xi-\xi^{N}|] & = \sup_{k_{\pm}\in K_{\pm}}\bigg\{\frac{k_{-}+k_{+}}{\alpha-1}N^{1-\alpha} + N^{1-\alpha}(\beta_{1,k_{\pm}}(\!-\!N) + \beta_{2,k_{\pm}}(N)) \\[5pt] & \qquad\qquad\quad + \int_{N}^{\infty}\frac{\beta_{1,k_{\pm}}(\!-\!r)+\beta_{2,k_{\pm}}(r)}{r^{\alpha}}\,\textrm{d} r\bigg\}. \end{align*}

By changing variables, we immediately conclude the proof.
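As a sanity check on the $N^{1-\alpha}$ scaling, the Fubini identity used in the proof can be evaluated in closed form for a pure power tail. The tail below, with a constant $c>0$, is a hypothetical simplification that drops the correction terms $\beta_{1,k_{\pm}}$ and $\beta_{2,k_{\pm}}$; it is not the distribution $F_{W_{k\pm}}$ itself. Suppose that $1-F(r)+F(-r)=c\,r^{-\alpha}$ for all $r\geq N$, with $\alpha\in(1,2)$. Then

\begin{align*} \int_{N}^{\infty}(1-F(r)+F(\!-\!r))\,\textrm{d} r & = c\int_{N}^{\infty}r^{-\alpha}\,\textrm{d} r = \frac{c}{\alpha-1}N^{1-\alpha}, \\[5pt] N(1-F(N)+F(\!-\!N)) & = cN^{1-\alpha}, \end{align*}

so the right-hand side of the identity equals $({c\alpha}/({\alpha-1}))N^{1-\alpha}$. Computing the left-hand side directly, the law of $|z|$ has density $c\alpha r^{-\alpha-1}$ on $(N,\infty)$, which gives

\begin{align*} \int_{\mathbb{R}}|z|\mathbf{1}_{\{|z|>N\}}\,\textrm{d} F(z) = \int_{N}^{\infty}c\alpha r^{-\alpha}\,\textrm{d} r = \frac{c\alpha}{\alpha-1}N^{1-\alpha}. \end{align*}

The two sides agree, and both integrals converge only because $\alpha>1$.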

Lemma 7. Suppose that $\phi \in C_{\textrm{b,Lip}}(\mathbb{R})$. Then:

- (i) for any $k\in\mathbb{N}$ such that $k\Delta \leq T$ and $x,y\in \mathbb{R}$, $\vert u_{\Delta,N}(k\Delta,x)-u_{\Delta,N}(k\Delta,y)\vert \leq C_{\phi}|x-y|$;

- (ii) for any $k\in \mathbb{N}$ such that $k\Delta \leq T$ and $x\in \mathbb{R}$,

\begin{align*} \vert u_{\Delta,N}(k\Delta,x)-u_{\Delta,N}(0,x)\vert & \leq C_{\phi}\big((I_{1,N})^{{1}/{2}}N^{({2-\alpha})/{2}}\Delta^{({2-\alpha})/{2\alpha}} \\[5pt] & \qquad\quad + I_{2,N}N^{1-\alpha}\Delta^{({1-\alpha})/{\alpha}}\big)(k\Delta)^{{1}/{2}}, \end{align*}

where $C_{\phi}$ is the Lipschitz constant of $\phi$, and $I_{1,N}$, $I_{2,N}$ are given in Lemmas 5 and 6, respectively.

Proof. Assertion (i) is proved by induction using (7). Clearly, the estimate holds for $k=0$. In general, we assume the assertion holds for some $k\in\mathbb{N}$ with $k\Delta\leq T$. Then, using Proposition 1, we have

\begin{align*} \big\vert u_{\Delta,N}((k+1)\Delta,x) & - u_{\Delta,N}((k+1)\Delta,y)\big\vert \\[5pt] & = \big\vert\tilde{\mathbb{E}}[u_{\Delta,N}(k\Delta,x+\Delta^{{1}/{\alpha}}\xi^{N})] - \tilde{\mathbb{E}}[u_{\Delta,N}(k\Delta,y+\Delta^{{1}/{\alpha}}\xi^{N})]\big\vert \\[5pt] & \leq \tilde{\mathbb{E}}\big[\big\vert u_{\Delta,N}(k\Delta,x+\Delta^{{1}/{\alpha}}\xi^{N}) - u_{\Delta,N}(k\Delta,y+\Delta^{{1}/{\alpha}}\xi^{N})\big\vert\big] \leq C_{\phi}|x-y|. \end{align*}

By the principle of induction, the assertion is true for all $k\in \mathbb{N}$ with $k\Delta \leq T$.
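The inequality in the induction step rests on a standard property of sublinear expectations, which can be derived in one line. The derivation assumes that Proposition 1 supplies monotonicity, constant preservation, and sub-additivity of $\tilde{\mathbb{E}}$; its statement lies outside this section, so this is an assumption. Here $X$ and $Y$ stand for generic random variables. By sub-additivity and then monotonicity,

\begin{align*} \tilde{\mathbb{E}}[X] = \tilde{\mathbb{E}}[Y+(X-Y)] \leq \tilde{\mathbb{E}}[Y] + \tilde{\mathbb{E}}[X-Y] \leq \tilde{\mathbb{E}}[Y] + \tilde{\mathbb{E}}[|X-Y|]. \end{align*}

Exchanging the roles of $X$ and $Y$ gives the same bound for $\tilde{\mathbb{E}}[Y]-\tilde{\mathbb{E}}[X]$, hence $\vert\tilde{\mathbb{E}}[X]-\tilde{\mathbb{E}}[Y]\vert\leq\tilde{\mathbb{E}}[|X-Y|]$. Taking $X=u_{\Delta,N}(k\Delta,x+\Delta^{{1}/{\alpha}}\xi^{N})$ and $Y=u_{\Delta,N}(k\Delta,y+\Delta^{{1}/{\alpha}}\xi^{N})$, the induction hypothesis bounds $|X-Y|$ pointwise by the constant $C_{\phi}|x-y|$, so monotonicity and constant preservation yield $\tilde{\mathbb{E}}[|X-Y|]\leq C_{\phi}|x-y|$.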

Now we establish the time regularity for $u_{\Delta,N}$ in (ii). Note that Young’s inequality implies that, for any $a,b>0$, $ab\leq \frac{1}{2}(a^{2}+b^{2})$. For any $\varepsilon>0$, let $a=|x-y|$ and $b={1}/{\varepsilon}$, so that $|x-y|\leq({\varepsilon}/{2})|x-y|^{2}+{1}/{2\varepsilon}$; then it follows from (i) that

\begin{equation*} \vert u_{\Delta,N}(k\Delta,x)-u_{\Delta,N}(k\Delta,y)\vert \leq C_{\phi}|x-y| \leq A|x-y|^{2}+B, \end{equation*}

where $A=({\varepsilon}/{2})C_{\phi}$ and $B=({1}/{2\varepsilon})C_{\phi}$.

We claim that, for any $k\in \mathbb{N}$ such that $k\Delta \leq T$ and $x,y\in \mathbb{R}$,

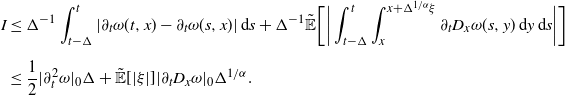

where