CLINICIAN'S CAPSULE

What is known about the topic?

Best practices are published to guide the applicant selection process to Canadian emergency medicine residency programs, but uptake is variable.

What did this study ask?

Can novel approaches and best practice guidelines be feasibly used to guide the resident selection process?

What did this study find?

We outline a defensible and transparent process using best practices and novel techniques that can be replicated by others.

Why does this study matter to clinicians?

The residency selection process is under scrutiny in Canada, so best practices should be implemented in a transparent way.

INTRODUCTION

Medical students, residents, and the media have scrutinized the Canadian Resident Matching Service (CaRMS) selection process because of the rising rates of unmatched Canadian medical students.Reference Ryan1–5 Specifically, critics have flagged the process as being opaque and plagued by bias and subjectivity.Reference Ryan1,Reference Persad6,Reference Persad7 Furthermore, the CaRMS selection processes are not standardized among programs, which has led to uncertainty and frustration among applicants.Reference Bandiera, Wycliffe-Jones and Busing8,Reference McInnes9 While Best Practices in Applications and Selection have been published,Reference Bandiera, Abrahams and Cipolla10 implementation rates are variable.Reference Bandiera, Wycliffe-Jones and Busing8 Despite recommendations for the scholarly dissemination of innovations in the selection process,Reference Bandiera, Abrahams and Cipolla10 we are unaware of any resident selection processes that have been described comprehensively in traditional or grey literature.

In response to these concerns and calls for greater transparency,Reference Ryan1,Reference Bandiera, Wycliffe-Jones and Busing8,Reference Bandiera, Abrahams and Ruetalo11 we describe the selection process used by our Canadian emergency medicine (EM) residency program, its innovations, and its outcomes. We believe that our standardized approach is rigorous and defensible and hope that its dissemination will encourage other programs to share and enhance their own processes while complying with best practices and spreading innovations.

METHODS

The University of Saskatchewan Behavioral Ethics Board deemed the data presented within this manuscript exempt from ethical review. Figure 1 outlines our overall CaRMS process in a flow diagram of a de-identified sample year. Appendix 1 contains a sample spreadsheet demonstrating how application and interview data are recorded and analyzed.

Figure 1. Flowchart outlining the application review and interview process in a de-identified year.

Application requirements

Our application requirements and the personal characteristics assessed as part of the selection process are outlined within our program description available at www.carms.ca. Our requirements included a personal letter, three letters of reference, curriculum vitae (CV), Medical School Performance Record (MSPR), Computer-Based Assessment for Sampling Personal Characteristics (CASPer) score, medical school transcript, proof of Canadian citizenship, English language proficiency (if applicable), and a personal photo (used as a memory aid following interviews). Appendix 2 outlines our instructions for the personal letter and letters of reference and describes the characteristics we assessed throughout the selection process. We chose these characteristics as we felt that they best mapped to our program's goals and mission statement. Prior to the publication of this manuscript, applicants were not aware of our specific application review process or scoring distributions. Applicants submitted all required application materials to www.carms.ca.

Application review

Our program reviewed only complete applications. Two staff physicians and two to four residents reviewed each application. The number of file reviewers changed annually based on the total number of trainees in our program at the time and their relative availability. Reviewer training occurred by distribution of standardized instructions (Appendix 3).Reference Bandiera, Abrahams and Cipolla10 We encouraged reviewers to review no more than 10 files in a single day to mitigate respondent fatigue.Reference Ben-Nun and Lavrakas12 We intentionally selected staff reviewers to ensure a diverse range of years of experience, areas of interest, gender, and certification route (e.g., Royal College of Physicians and Surgeons of Canada [Royal College] or Certification in the College of Family Physicians [CCFP-EM]). We selected resident reviewers to ensure diversity in years of training. We assigned reviewers such that each applicant's file was reviewed by both male and female reviewers. Reviewers self-disclosed conflicts of interest and were only assigned to review the files of applicants with whom they did not have a conflict. No reviewer was assigned more than 30 files, and no two reviewers reviewed the same 30 applicants. Reviews were completed within a three-week period. We suggested that reviewers spend 15–20 minutes on each file (total of 7.5–10 hours).

We created and pre-populated a cloud-based spreadsheet (Google Sheets, California, USA) with applicant names and identification codes, reviewer assignments, data entry cells, and formulas. Each reviewer was given a spreadsheet tab to rate their assigned applicants. File reviewers used a standardized, criteria-based scoring rubric for each section of the application (Appendix 4). Free-text cells on the spreadsheet allowed comments to be recorded to serve as memory aids. To help stratify which applicants near the interview cut-off threshold should be granted an interview, we asked reviewers, “Should we interview this applicant - yes or no?” The CASPer score was visible to file reviewers on our data entry spreadsheet but was not assessed by file reviewers.

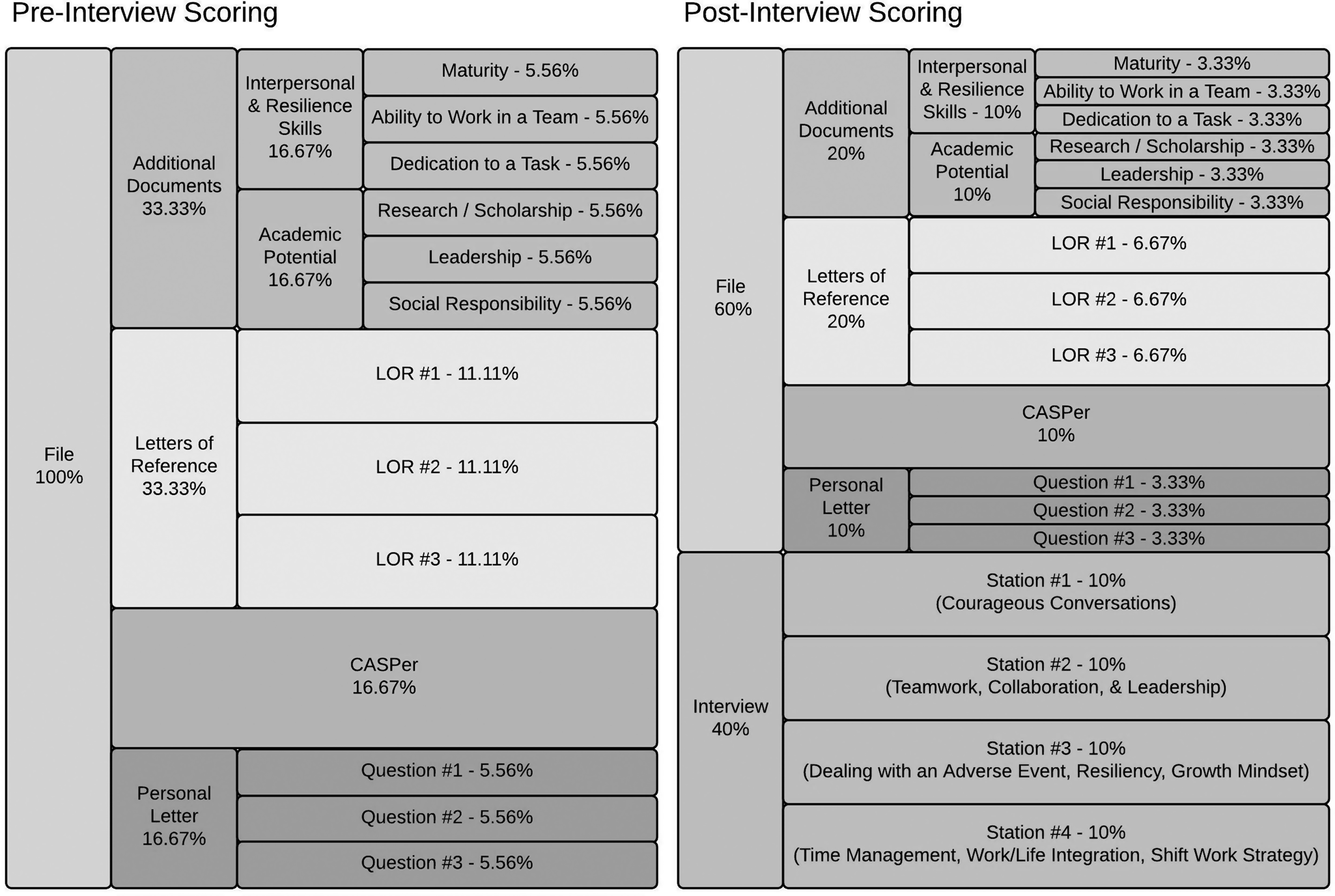

This cloud-based format allowed the simultaneous collection of data from multiple file reviewers while also performing real-time ranking calculations. The pre-populated formulas converted each reviewer's scores into a Z-score for each applicant using the formulas outlined in Appendix 5. The Z-scores were then amalgamated into a pre-interview Z-score using a weighted average incorporating resident file review Z-scores (5/12ths), staff file review Z-scores (5/12ths) and the CASPer Canadian medical graduate (CMG) Z-score (2/12ths) (Figure 2; Appendix 5). Applicants were ranked by their amalgamated pre-interview Z-score.
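The calculation described above can be sketched in a few lines of Python. This is a minimal illustration and not the Appendix 5 formulas themselves. It assumes the conventional Z-score (a score minus that reviewer's mean, divided by that reviewer's standard deviation), assumes the sample standard deviation, and assumes that Z-scores are averaged within the resident and staff reviewer groups before the weights are applied. The function names and example scores are hypothetical.

```python
from statistics import mean, stdev

# Pre-interview weights stated in the text (they sum to 1).
W_RESIDENT, W_STAFF, W_CASPER = 5 / 12, 5 / 12, 2 / 12


def z_scores(raw_scores):
    """Convert one reviewer's raw scores {applicant: score} into Z-scores.

    Assumption: sample standard deviation; Appendix 5 may use another form.
    """
    mu = mean(raw_scores.values())
    sd = stdev(raw_scores.values())
    return {applicant: (score - mu) / sd
            for applicant, score in raw_scores.items()}


def pre_interview_z(resident_zs, staff_zs, casper_z):
    """Weighted average of mean resident Z, mean staff Z, and CASPer Z."""
    return (W_RESIDENT * mean(resident_zs)
            + W_STAFF * mean(staff_zs)
            + W_CASPER * casper_z)


# Hypothetical data: a stringent and a generous reviewer rate the same files.
stringent = z_scores({"A": 50, "B": 60, "C": 70})
generous = z_scores({"A": 70, "B": 80, "C": 90})
print(stringent["A"], generous["A"])  # same Z-score despite different raw scores
```

In this sketch, an applicant's pre-interview Z-score would be obtained with a call such as `pre_interview_z([0.4, 0.9], [0.2, 0.6], 1.1)`, where the lists hold the resident and staff Z-scores for that applicant.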

Figure 2. Pre- and post-interview scoring distributions.

File review group discussion

File reviewers and administrative staff met to finalize the interview and wait list after all files were reviewed. Discussion was facilitated using a spreadsheet detailing applicants’ scores, ranking, and reviewers’ free-text comments. We heat-mapped the ratings (red lowest score/yellow median score/green highest score colour gradient) to visually highlight discrepancies among CASPer CMG Z-scores, the file review Z-scores of each reviewer, and the overall file review Z-score of each applicant.
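The colour gradient described above corresponds to a three-point colour scale of the kind offered by spreadsheet conditional formatting. As a rough sketch of the underlying idea, the hypothetical function below maps a score to an RGB colour by linear interpolation: red at the minimum, yellow at the median, and green at the maximum. The exact colours and interpolation used in our spreadsheet are not specified here, so these are assumptions, as are the example Z-scores.

```python
from statistics import median

# Assumed anchor colours (RGB); the spreadsheet's exact shades may differ.
RED, YELLOW, GREEN = (255, 0, 0), (255, 255, 0), (0, 255, 0)


def blend(c1, c2, t):
    """Linearly interpolate between two RGB colours for t in [0, 1]."""
    return tuple(round(a + (b - a) * t) for a, b in zip(c1, c2))


def heat_colour(value, values):
    """Colour for `value`, which is assumed to lie within the range of `values`."""
    lo, mid, hi = min(values), median(values), max(values)
    if value <= mid:
        # Lower half of the scale: red (minimum) to yellow (median).
        t = 1.0 if mid == lo else (value - lo) / (mid - lo)
        return blend(RED, YELLOW, t)
    # Upper half of the scale: yellow (median) to green (maximum).
    return blend(YELLOW, GREEN, (value - mid) / (hi - mid))


# Hypothetical Z-scores for one applicant across five raters.
zs = [-1.2, -0.3, 0.0, 0.4, 1.5]
for z in zs:
    print(z, heat_colour(z, zs))
```

A row whose cells range from red to green in this scheme is one in which raters disagreed markedly, which is the kind of discrepancy the heat map was meant to surface for discussion.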

The files of each applicant ranked in a position that would result in an interview (top 32), as well as at least eight positions below this threshold, were discussed. This discussion followed a loose structure that included professionalism concerns and the review of discordant Z-scores. Changes to the interview list were generally slight, but substantial changes occurred if egregious professionalism concerns existed. Changes were generally made by consensus. If consensus could not be achieved, the program director made a final determination. For each application cycle, we sent 32 interview invitations and three wait list notifications by email within four weeks of the file review opening. Applicants had 10 days to accept or decline their interview offer.

Social events

Our program holds social events each year to welcome the applicants to the city, encourage socializing in an informal setting, and provide additional program information. Specifically, a faculty member hosted an informal gathering for the applicants and the members of the program at their home, residents led tours of the city and the main hospital, and the program hosted a lunch that incorporated a presentation describing our program. While the social events were not formally evaluated, professionalism was expected. Professionalism concerns raised by faculty, residents, and administrative staff were considered in the final rank list discussion.

Interviews

Once again, we created and pre-populated a cloud-based spreadsheet (Google Sheets, California, USA) with applicant names and identification codes, interview room assignments, data entry cells, and formulas. Interviewers rated each applicant on a station-specific tab of the spreadsheet. Each applicant completed four 12-minute multiple mini-interview (MMI) stations with three-minute breaks between stations (one hour total). Four applicants completed the circuit each hour, with all 32 interviews being conducted on a single interview date. Each station consisted of an informal ice-breaker question, followed by a behavioural-style interview question (Appendix 6). We designed the main interview questions to assess the desired qualities of applicants listed as part of our CaRMS description. If time permitted, predetermined standardized follow-up questions or the applicants’ own questions could be asked.
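The timing of the interview day follows directly from these figures, as the short sketch below shows. The station count, durations, and applicant numbers come from the text. The cyclic rotation order is a hypothetical illustration of how four applicants could each visit every station once, and the total excludes any meal or turnover time between circuits.

```python
STATIONS = 4            # MMI stations per circuit (from the text)
STATION_MINUTES = 12    # interview time per station (from the text)
BREAK_MINUTES = 3       # break following each station (from the text)
APPLICANTS = 32         # applicants interviewed on the day (from the text)

circuit_minutes = STATIONS * (STATION_MINUTES + BREAK_MINUTES)
circuits = APPLICANTS // STATIONS      # four applicants per circuit
total_hours = circuits * circuit_minutes / 60


def rotation(applicants):
    """Hypothetical cyclic rotation: entry [slot][station] is the applicant seen."""
    n = len(applicants)
    return [[applicants[(station - slot) % n] for station in range(n)]
            for slot in range(n)]


for slot, row in enumerate(rotation(["A", "B", "C", "D"])):
    print(f"slot {slot + 1}:",
          ", ".join(f"station {s + 1}={a}" for s, a in enumerate(row)))
print(circuit_minutes, "minutes per circuit;", circuits, "circuits;",
      total_hours, "hours of interviewing")
```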

We provided interviewers with standardized instructions prior to the interview date (Appendix 6). Each station had two or three interviewers, including at least one male and one female interviewer. One station solely comprised current residents while the remaining three stations comprised CCFP-EM and Royal College EM staff, along with an occasional resident. In 2019, an EM social worker also participated as an interviewer. Interviewers determined each applicant's score individually within each room. Each station was scored on a 10-point scale based on a predefined description of the ideal answer (Appendix 6). The pre-populated formulas calculated the average interview ratings for each applicant and converted them into station-specific Z-scores using the formulas outlined in Appendix 5.

Final statistical analysis

Following the interviews, we calculated each applicant's final score using predetermined weightings: residents’ file review (1/4th), staff's file review (1/4th), CASPer score (1/10th), and each interview station (1/10th per station) (Figure 2; Appendix 5). The weighting of each element was predetermined by the program director through consultation with faculty and residents. The goal of the weighting was to map the desired characteristics of applicants to the available tools in the applicants’ CaRMS files.
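The weightings above can be checked with exact fractions. The sketch below confirms that the final weights sum to 1 and that the pre-interview weights reported earlier (5/12, 5/12, and 2/12) are the same file review and CASPer weights rescaled to exclude the interview stations. The dictionary keys and the `final_score` helper are hypothetical names for illustration; Appendix 5 remains the authoritative description of the formulas.

```python
from fractions import Fraction as F

# Final weights as stated in the text.
final_weights = {
    "resident_file_review": F(1, 4),
    "staff_file_review": F(1, 4),
    "casper": F(1, 10),
    "station_1": F(1, 10),
    "station_2": F(1, 10),
    "station_3": F(1, 10),
    "station_4": F(1, 10),
}
assert sum(final_weights.values()) == 1

# Rescale the non-interview components so that they sum to 1 on their own.
pre_keys = ["resident_file_review", "staff_file_review", "casper"]
pre_total = sum(final_weights[k] for k in pre_keys)          # 3/5
pre_weights = {k: final_weights[k] / pre_total for k in pre_keys}
print(pre_weights)  # 5/12, 5/12, and 1/6 (that is, 2/12)


def final_score(z):
    """Weighted sum of component Z-scores; `z` uses the keys of final_weights."""
    return float(sum(final_weights[k] * z[k] for k in final_weights))
```

Seen this way, the interview stations contribute 40% of the final score, and the relative balance among resident file review, staff file review, and CASPer is unchanged between the pre-interview and final rankings.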

Final group discussion and rank list entry

We invited all residents, staff physicians, interviewers, and administrative staff to participate in a two-hour group discussion immediately following the interviews. We used a heat-mapped spreadsheet outlining the final statistical analysis to highlight discordant Z-scores and displayed the 32 applicants’ photos and names on a whiteboard in order of their final scores.

The final group discussion followed a loose structure organized by the preliminary rank list and key topics. Applicants were discussed from highest to lowest rank. Key topics that we discussed for each applicant included professionalism concerns and discordant Z-scores. In some cases, we identified professionalism concerns during social events or the interview that warranted removal from or modifications to the rank list. Our discussion of discordant Z-scores clarified the reason for these discrepancies and whether they warranted minor rank list adjustments.

For applicants with nearly equivalent final scores, we further considered the diversity of our resident group and the applicants’ fit within our program culture. All adjustments to the rank order list were made by consensus, with the program director making a final determination when consensus could not be achieved.

Administrative staff submitted the final rank list of up to 32 applicants to CaRMS for a program admission quota of three residents per year. Upon completion of the interviews, we securely stored all file review data, with access restricted to the program director and spreadsheet creator. We re-evaluate our overall process annually.

RESULTS

We received between 75 and 90 CaRMS applications during each application cycle between 2017 and 2019. Our selection process required approximately 12 hours per file reviewer, 10 hours per interviewer, and 4 hours for spreadsheet generation and calculations by an experienced spreadsheet user. The cumulative time required for the entire process was approximately 320 person-hours annually, not including attendance at the social event or administrative assistant duties.

Figure 3 demonstrates the ranking of individual applicants who applied to our program in a de-identified sample year. Some applicants who ranked within the top 32 by application score were not offered an interview following the post-file review discussion. Conversely, some applicants who were ranked lower than the interview invitation threshold were moved up the rank list and offered an interview. For example, applicant 22 declined an interview, and applicants 33 and 34 declined wait list offers, which resulted in applicant 35 being interviewed. The interview scores altered the rank list based on individual applicant performance. Post-interview discussion resulted in minor rank list alterations. In one case, an applicant was interviewed but not ranked because of professionalism concerns.

Figure 3. Visual display of applicant rankings through the stages of the CaRMS application and interview ranking process in a sample year.

Figure 4 demonstrates the tight spread of scores surrounding the interview invitation threshold (i.e., 32nd position) after file review. The range of Z-scores among the applicants rated 27th to 37th was small in 2017 (0.12–0.42), 2018 (0.12–0.42), and 2019 (0.18–0.36).

Figure 4. Pre-interview Z-score versus application ranking after file review.

DISCUSSION

While there are many robust and viable approaches to resident selection, we believe medical students and residency programs would benefit from openly published examples of best practices. We have comprehensively described our resident selection methods to address well-founded concerns with the CaRMS process. We hope that this description will allow applicants to understand how they are assessed and give programs a blueprint on which to build. Although this manuscript is largely descriptive, it also presents several innovations that have not previously been discussed in the literature.

Comparison with previous work

Research on the emergency medicine match is prominent in the United States. However, it generally focuses on evaluating specific questions.Reference DeSantis and Marco13–Reference Oyama, Kwon and Fernandez20 One previous Canadian study provided a detailed description and analysis of their interview process.Reference Dore, Kreuger and Ladhani21 To our knowledge, this is the first comprehensive description of an emergency medicine program's entire resident selection process in the literature.

Our process was developed in keeping with the Best Practices in Applications and Selection recommendationsReference Bandiera, Abrahams and Cipolla10 and the practices of other residency programs about which we learned through personal communications. We incorporated strong assessment practices such as criterion-referenced rating scalesReference Boursicot, Etheridge and Setna22 and multiple assessments from diverse raters.Reference Gormley23 Furthermore, we assessed characteristics that are associated with success in residency (reference letters and publication numbersReference Bhat, Takenaka and Levine17) and have demonstrated reliability (MMIsReference Pau, Jeevaratnam and Chen24 consisting of structured interviews with predefined assessment toolsReference Bandiera and Regehr25). Informal communications with other programs suggest that simultaneous cloud-based rating, Z-scores, and heat maps are innovations that are not commonly used.

Moving forward, our approach to resident selection would differ dramatically from traditional processes because of its transparency. Applicants are historically unaware of the weighting of each component of the file and interview for different programs.Reference McInnes9, Reference Eneh, Jagan and Baxter26–Reference McCann, Nomura, Terzian, Breyer and Davis29 Within this report, we have outlined this clearly (Figure 2) and remain committed to sharing this information openly with our applicants as our selection process evolves. As applicants’ knowledge of the selection process improves, we anticipate that they will have a greater understanding of how, why, and what is being assessed throughout the selection process.

Strengths and limitations

Some aspects of our process may be controversial. For example, the use of discussion to adjust the final interview and rank lists could be considered subjective and, therefore, inappropriate.Reference Bandiera, Abrahams and Cipolla10 However, previous publications have highlighted how having close colleagues and high social relatedness are important to resident wellness and success.Reference Cohen and Patten30,Reference Raj31 Further, our program finds value in these discussions. We believe that, as with qualitative research, when they are conducted in a rigorous and focused fashion,Reference Ryan1 they can provide important insights. Though some have postulated that using vaguely defined constructs such as “fit” is likely to disadvantage non-traditional applicants,Reference Persad7 we use them as an opportunity to discuss inequities and underrepresented populations.Reference Bandiera, Abrahams and Ruetalo11 The minute differences in scores among applicants near the interview invitation threshold (Figure 4) suggest that the differences between the applications of those who received and those who did not receive an interview invitation were minimal.

The major limitation of our description is that we have not performed a robust evaluation of the validity or reliability of our selection process. The lack of access to the outcomes of applicants who were not interviewed or matched to our program also makes an evaluation of validity challenging. Our historic data allow us to track some measures of reliability. Our preliminary analyses of these data suggest that the reliability of our file review varies from year to year. We have considered rater training to improve reliability,Reference Feldman, Lazzara, Vanderbilt and DiazGranados32,Reference Woehr and Huffcutt33 but given the extensive volunteer commitment that we already require of reviewers, we anticipate that there would be little interest. We have also considered having raters evaluate a single component of all applications (e.g., the personal letter) but believe that this reductionist approach could miss insights that would be gleaned from a more holistic application review.Reference Kreiter34,Reference Witzburg and Sondheimer35 Instead, we utilized Z-scores to normalize variations in scoring. While not ideal, this technique eliminates the advantage or disadvantage received by applicants reviewed by a team of generous or stringent reviewers.
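The effect of this normalization can be shown in one line. The derivation below assumes the conventional Z-score definition (Appendix 5 is not reproduced here) and models a generous or stringent reviewer, hypothetically, as one whose raw scores $x_i'$ are shifted by a constant $c$ and stretched by a factor $a > 0$ relative to a reference reviewer's scores $x_i$, with mean $\bar{x}$ and standard deviation $s_x$.

```latex
z_i = \frac{x_i - \bar{x}}{s_x},
\qquad
x_i' = a\,x_i + c
\;\Longrightarrow\;
\bar{x}' = a\,\bar{x} + c,
\quad
s_{x'} = a\,s_x,
\qquad
z_i' = \frac{(a\,x_i + c) - (a\,\bar{x} + c)}{a\,s_x}
     = \frac{x_i - \bar{x}}{s_x}
     = z_i .
```

Under this model, uniform leniency or severity cancels out. Rater effects that differ from applicant to applicant do not cancel, and because reviewers rated different sets of files, the comparison also assumes that each reviewer's set was of broadly similar overall quality.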

One threat to the validity of our process is that we were not able to confirm its fidelity. For example, we advised file reviewers to limit their reviewing to 10 files per day but did not take steps to ensure this was adhered to. Further, we did not ensure that interviewers rated applicants independently, so they could have influenced each other's ratings. As interviewers also occasionally served as file reviewers, it is also possible that the file review positively or negatively influenced interview ratings. While we had a basic framework to guide our interview and rank list discussions, it was not always strictly enforced. These variations represent opportunities to improve in future years.

Lastly, we recognize that our process has been developed over the nine years since our program's founding and is rooted in the local culture of our site and specialty. It will need to be altered prior to adoption by other programs to account for site- and specialty-specific contexts. In particular, the recruitment of sufficient file reviewers and interviewers is unlikely to be possible in programs that have fewer faculty and residents, receive substantially more applications, or have a different culture.

CONCLUSION

We have described a reproducible, defensible, and transparent resident selection process that incorporates several innovative elements. This description will allow its adoption and modification to the context of other Canadian residency training programs. We encourage all Canadian residency programs to develop and disseminate their selection processes to decrease the opacity of the process while allowing best practices and innovations to be disseminated.

Acknowledgments

Leah Chomyshen and Cathy Fulcher, administrative support staff, University of Saskatchewan.

Competing interests

We have no conflicts of interest to disclose. This paper received no specific grant from any funding agency, commercial, or not-for-profit sectors.

Supplementary material

The supplementary material for this article can be found at https://doi.org/10.1017/cem.2019.460.