1. Introduction

Real-world economic scenarios provide stochastic forecasts of economic variables like interest rates, equity returns, and inflation and are widely used in the insurance sector for a variety of applications including asset and liability management (ALM) studies, strategic asset allocation, computing the solvency capital requirement (SCR) within an internal model or pricing assets or liabilities including a risk premium. Unlike risk-neutral economic scenarios that are used for market consistent pricing, real-world economic scenarios aim at being realistic in view of the historical data and/or expert expectations about future outcomes. In the literature, many real-world models have been studied for applications in insurance. Those applications relate to (i) valuation of insurance products, (ii) hedging strategies for annuity portfolios and (iii) risk calculation for economic capital assessment. On item (i), we can mention the work of Boudreault and Panneton (Reference Boudreault and Panneton2009) who study the impact on conditional tail expectation provision of GARCH and regime-switching models calibrated on historical data, and the work of Graf et al. (Reference Graf, Haertel, Kling and Ruß2014) who perform simulations under the real-world probability measure to estimate the risk-return profile of various old-age provision products. On item (ii), Zhu et al. (Reference Zhu, Hardy and Saunders2018) measure the hedging error of several dynamic hedging strategies along real-world scenarios for cash balance pension plans, while Lin and Yang (Reference Lin and Yang2020) calculate the value of a large variable annuity portfolio and its hedge using nested simulations (real-world scenarios for the outer simulations and risk-neutral scenarios for the inner simulations). Finally, on item (iii), Hardy et al. (Reference Hardy, Freeland and Till2006) compare several real-world models for the equity return process in terms of fitting quality and resulting capital requirements and discuss the problem of the validation of real-world scenarios. Similarly, Otero et al. (Reference Otero, Durán, Fernández and Vivel2012) measure the impact on the Solvency II capital requirements (SCR) when using a regime-switching model in comparison to lognormal, GARCH and E-GARCH models. Floryszczak et al. (Reference Floryszczak, Lévy Véhel and Majri2019) introduce a simple model for equity returns allowing to avoid over-assessment of the SCR specifically after market disruptions. On the other hand, Asadi and Al Janabi (Reference Asadi and Al Janabi2020) propose a more complex model for stocks based on ARMA and GARCH processes that results in a higher SCR than in the Solvency II standard model. This literature shows the importance of real-world economic scenarios in various applications in insurance. We observe that the question of the consistency of the generated real-world scenarios is barely discussed or only from a specific angle such as the model likelihood or the ability of the model to reproduce the 1 in 200 worst shock observed on the market.

In the insurance industry, the assessment of the realism of real-world economic scenarios is often referred to as scenario validation. It allows to verify a posteriori the consistency of a given set of real-world economic scenarios with historical data and/or expert views. As such, it also guides which models can better be used to generate real-world economic scenarios. In the risk-neutral framework, the validation step consists for example in verifying the martingale property of the discounted values along each scenario. In the real-world framework, the most widespread practice is to perform a so-called point-in-time validation. It consists in analyzing the distribution of some variables derived from the generated scenarios (for example, annual log-returns for equity stocks or relative variation for an inflation index) at some specific horizons like one year which is the horizon considered in the Solvency II directive. Generally, this analysis only focuses on the first moments of the one-year distribution as real-world models are often calibrated by a moment-matching approach. The main drawback of this approach is that it only allows to capture properties of the simulated scenarios at some point in time. In particular, the consistency of the paths between

![]() $t=0$

and

$t=0$

and

![]() $t=1$

year is not studied so that properties like clustering, smoothness and high-order autocorrelation are not captured. Capturing these properties has its importance as their presence or absence in the economic scenarios can have an impact in real practice, such as for example on a strategic asset allocation having a monthly rebalancing frequency or on the SCR calculation when a daily hedging strategy is involved, since the yearly loss distribution will be path-dependent. In this paper, we propose to address this drawback by comparing the distribution of the stochastic process underlying the simulated paths to the distribution of the historical paths. This can be done using a distance between probability measures, called the maximum mean distance (MMD), and a mathematical object, called the signature, allowing to encode a continuous path in an efficient and parsimonious way. Based on these tools, Chevyrev and Oberhauser (Reference Chevyrev and Oberhauser2022) designed a statistical test allowing to accept or reject the hypothesis that the distributions of two samples of paths are equal. This test has already been used by Buehler et al. (Reference Buehler, Horvath, Lyons, Perez Arribas and Wood2020) to test whether financial paths generated by a conditional variational auto encoder (CVAE) are close to the historical paths being used to train the CVAE. An alternative way to compare the distributions of two sample of paths is to flatten each sequence of observations into a long vector of length

$t=1$

year is not studied so that properties like clustering, smoothness and high-order autocorrelation are not captured. Capturing these properties has its importance as their presence or absence in the economic scenarios can have an impact in real practice, such as for example on a strategic asset allocation having a monthly rebalancing frequency or on the SCR calculation when a daily hedging strategy is involved, since the yearly loss distribution will be path-dependent. In this paper, we propose to address this drawback by comparing the distribution of the stochastic process underlying the simulated paths to the distribution of the historical paths. This can be done using a distance between probability measures, called the maximum mean distance (MMD), and a mathematical object, called the signature, allowing to encode a continuous path in an efficient and parsimonious way. Based on these tools, Chevyrev and Oberhauser (Reference Chevyrev and Oberhauser2022) designed a statistical test allowing to accept or reject the hypothesis that the distributions of two samples of paths are equal. This test has already been used by Buehler et al. (Reference Buehler, Horvath, Lyons, Perez Arribas and Wood2020) to test whether financial paths generated by a conditional variational auto encoder (CVAE) are close to the historical paths being used to train the CVAE. An alternative way to compare the distributions of two sample of paths is to flatten each sequence of observations into a long vector of length

![]() $d\times L$

, where L is the length of the sequence of observations and d is the dimension of each observation, and to apply a multi-variate statistical test. However, Chevyrev and Oberhauser have shown that their signature-based test performs overall better (both in terms of statistical power and in terms of computational cost) than standard multi-variate tests on a collection of multidimensional time series data sets. Moreover, this alternative approach requires that each sequence of observations is of the same length which is not a prerequisite in the case of the signature-based test.

$d\times L$

, where L is the length of the sequence of observations and d is the dimension of each observation, and to apply a multi-variate statistical test. However, Chevyrev and Oberhauser have shown that their signature-based test performs overall better (both in terms of statistical power and in terms of computational cost) than standard multi-variate tests on a collection of multidimensional time series data sets. Moreover, this alternative approach requires that each sequence of observations is of the same length which is not a prerequisite in the case of the signature-based test.

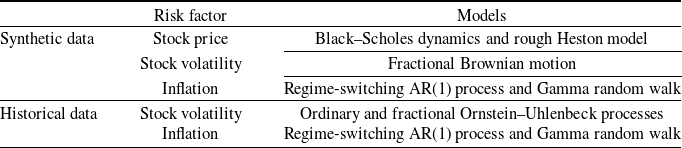

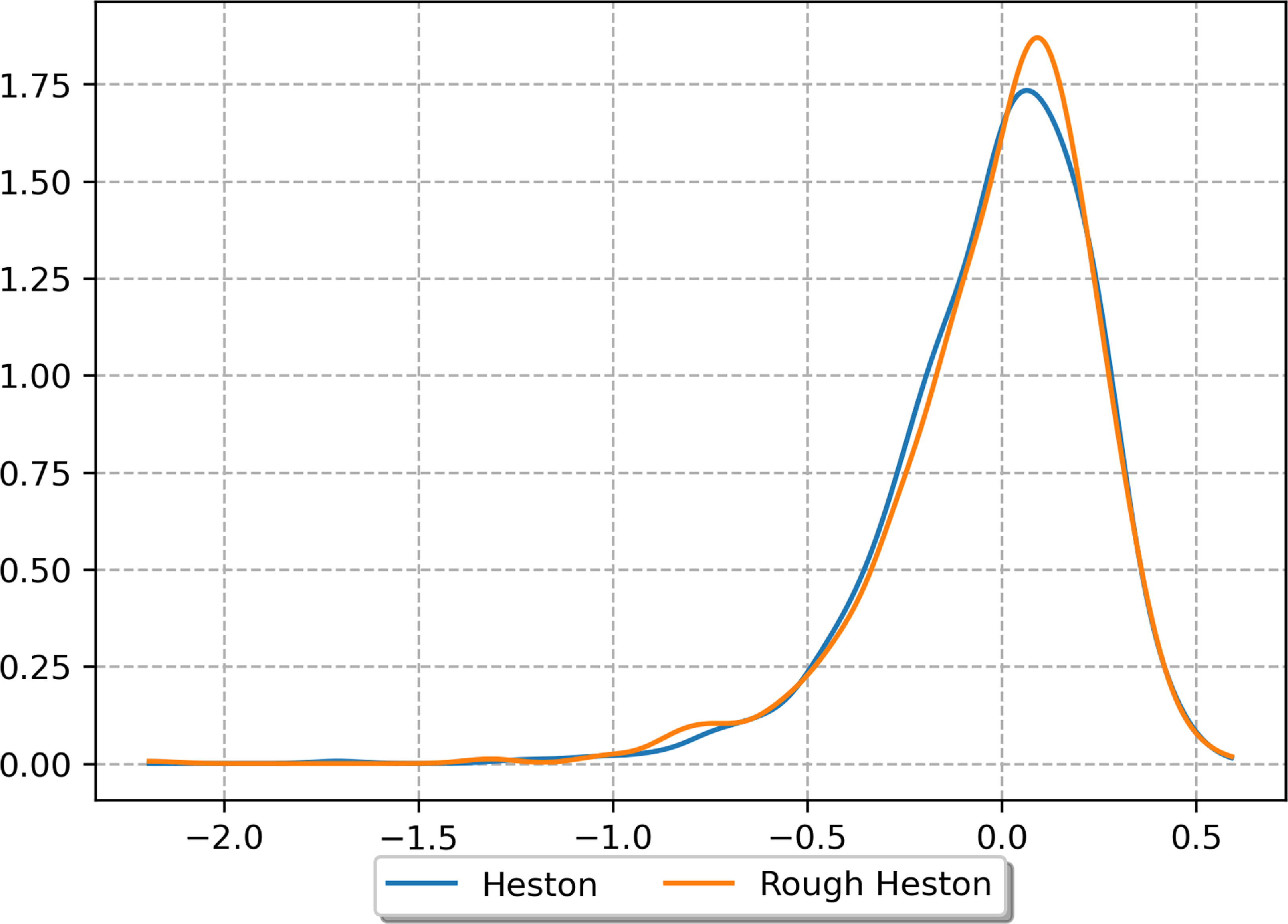

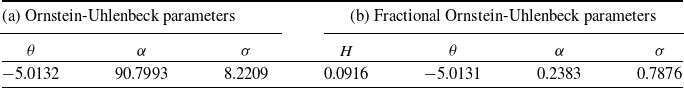

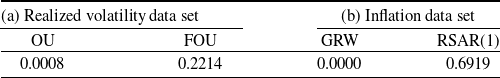

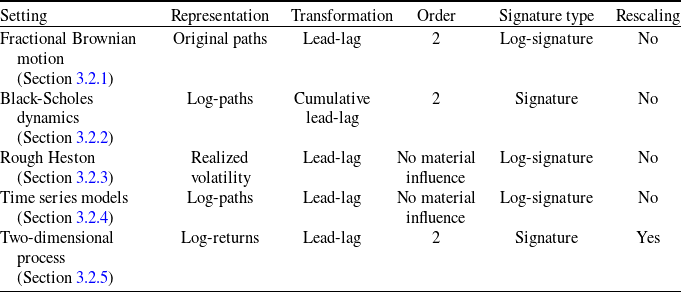

Our contribution is to study more deeply this statistical test from a numerical point of view on a variety of stochastic models and to show its practical interest for the validation of real-world stochastic scenarios when this validation is specified as an hypothesis testing problem. First, we present a numerical analysis with synthetic data in order to measure the statistical power of the test, and second, we work with historical data to study the ability of the test to discriminate between several models in practice. Moreover, two constraints are considered in the numerical experiments. The first one is to impose that the distributions of the annual increments are the same in the two compared samples, which is natural since, without this constraint, point-in-time validation methods could distinguish the two samples. Second, in order to mimic the operational process of real-world scenarios validation in insurance, we consider samples of different sizes: the first sample consisting of synthetic or real historical paths is of small size (typically below 50) while the second sample consisting of the simulated scenarios is of greater size (typically around 1000). Our aim is to demonstrate the high statistical power of the test under these constraints. Numerical results are presented for three financial risk drivers, namely, of a stock price, stock volatility as well as inflation. As for the stock price, we first generate two samples of paths under the widespread Black–Scholes dynamics, each sample corresponding to a specific choice of the (deterministic) volatility structure. We also simulate two samples of paths under both the classic and rough Heston models. As for the stock volatility, the two samples are generated using fractional Brownian motions with different Hurst parameters. Note that the exponential of a fractional Brownian motion, as model for volatility, has been proposed by Gatheral et al. (Reference Gatheral, Jaisson and Rosenbaum2018) who showed that such a model is consistent with historical data. For the inflation, one sample is generated using a regime-switching AR(1) process and the other sample is generated using a random walk with i.i.d. Gamma noises. Finally, we compare two samples of two-dimensional processes with either independent or correlated coordinates when the first coordinate evolves according to the price in the rough Heston model and the second coordinate evolves according to an AR(1) regime-switching process. Besides these numerical results on simulated paths, we also provide numerical results on real historical data. More specifically, we test historical paths of S&P 500 realized volatility (used as a proxy of spot volatility) against sample paths from a standard Ornstein–Uhlenbeck model on the one hand and against sample paths from a fractional Ornstein–Uhlenbeck model on the other hand. We show that the test allows to reject the former model while the latter is not rejected. Similarly, it allows to reject a random walk model with i.i.d. Gamma noises when applied to US inflation data, while a regime-switching AR(1) process is not rejected. A summary of the studied risk factors and associated models is provided in Table 1.

Table 1. Summary of the studied risk factors and associated models in each framework (synthetic data and real historical data).

Remark 1.1. In the literature, the closest work to ours is the one of Bilokon et al. (Reference Bilokon, Jacquier and McIndoe2021). Given a set of paths, they propose to compute the signature of these paths in order to apply the clustering algorithm of Azran and Ghahramani (Reference Azran and Ghahramani2006) and to ultimately identify market regimes. The similarity function underlying the clustering algorithm relies in particular on the MMD Using Black–Scholes sample paths corresponding to four different configurations of the drift and volatility parameters, they show the ability of their methodology to correctly cluster the paths and to identify the number of different configurations (i.e. four). Let us point out that our contributions are different from theirs in several ways. First, their objective is to cluster a set of paths in several groups, while our objective is to statistically test whether two sets of paths come from the same probability distribution. Second, our numerical results go beyond the Black–Scholes model and explore more sophisticated models. Moreover, we also provide numerical results on historical data. Finally, our numerical experiments are conducted in a setting closer to our practical applications where the marginal one-year distributions of the two samples are the same or very close, while the frequency of observation of the paths is lower in our case (we consider monthly observations over one year while they consider 100 observations).

The objective of the present article is also to provide a concise introduction to the signature theory that does not require any prerequisite for insurance practitioners. Introduced for the first time by Chen (Reference Chen1957) in the late 50s and then rediscovered in the 90s in the context of rough path theory (Lyons, Reference Lyons1998), the signature is a mapping that allows to characterize deterministic paths up to some equivalence relation (see Theorem B.2. in the Supplementary Material). Chevyrev and Oberhauser (Reference Chevyrev and Oberhauser2022) have extended this result to stochastic processes as they have shown that the expected signature of a stochastic process characterizes its law. The idea to use the signature to address problems in finance is not new although it is quite recent. To our knowledge, Gyurkó and Lyons (Reference Gyurkó and Lyons2010) are the first in this area. They present a general framework for deriving high order, stable and tractable pathwise approximations of stochastic differential equations relying on the signature and apply their results to the simulation of the Cox–Ingersoll–Ross process. Then, Gyurkó et al. (Reference Gyurkó, Lyons, Kontkowski and Field2013) introduced the signature as a way to obtain a faithful transform of financial data streams that is used as a feature of a classification method. Levin et al. (Reference Levin, Lyons and Ni2013) use the signature to study the problem of regression where the input and the output variables are paths and illustrate their results by considering the prediction task for AR and ARCH time series models. In the same vein, Cohen et al. (Reference Cohen, Lui, Malpass, Mantoan, Nesheim, de Paula, Reeves, Scott, Small and Yang2023) address the nowcasting problem – the problem of computing early estimates of economic indicators that are published with a delay such as the GDP – by applying a regression on the signature of frequently published indicators. Ni et al. (Reference Ni, Szpruch, Sabate-Vidales, Xiao, Wiese and Liao2021) develop a generative adversarial network (GAN) based on the signature allowing to generate time series that capture the temporal dependence in the training and validation data set both for synthetic and real data. In his PhD thesis, Perez Arribas (Reference Perez Arribas2020) shows several applications of the signature in finance including the pricing and hedging of exotic derivatives (see Lyons et al., Reference Lyons, Nejad and Perez Arribas2020) or optimal execution problems (see Kalsi et al., Reference Kalsi, Lyons and Arribas2020 and Cartea et al., Reference Cartea, Pérez Arribas and Sánchez-Betancourt2022). Cuchiero et al. (2023b) extend the work of Perez Arribas and develop a new class of asset price models based on the signature of semimartingales allowing to approximate arbitrarily well classical models such as the SABR and the Heston models. Using this new modelling framework, Cuchiero et al. (2023a) propose a method to solve the joint S&P 500/VIX calibration problem without adding jumps or rough volatility. Akyildirim et al. (Reference Akyildirim, Gambara, Teichmann and Zhou2022) introduce a signature-based machine learning algorithm to detect rare or unexpected items in a given data set of time series type. Cuchiero and Möller (Reference Cuchiero and Möller2023) employ the signature in the context of stochastic portfolio theory to introduce a novel class of portfolios making in particular several optimization tasks highly tractable. Finally, Bayer et al. (Reference Bayer, Hager, Riedel and Schoenmakers2023) propose a new method relying on the signature for solving optimal stopping problems.

The present article is organized as follows: as a preliminary, we introduce in Section 2 the MMD and the signature before describing the statistical test proposed by Chevyrev and Oberhauser (Reference Chevyrev and Oberhauser2022). This test is based on these two notions and allows to assess whether two stochastic processes have the same law using finite numbers of their sample paths. Then in Section 3, we study this test from a numerical point of view. We start by studying its power using synthetic data in settings that are realistic in view of insurance applications and then, we apply it to real historical data. We also discuss several challenges related to the numerical implementation of this approach and highlight its domain of validity in terms of the distance between models and the volume of data at hand.

2. From the MMD and the signature to a two-sample test for stochastic processes

In this section, we start by introducing the MMD, which allows to measure how similar two probability measures are. Second, reproducing Kernel Hilbert spaces (RKHS) are presented as they are key to obtain a simple formula for the MMD. Then, we briefly introduce the signature and we show how it allows to construct an RKHS that we can use to make the MMD a metric able to discriminate two probability measures defined on the bounded variation paths quotiented by some equivalence relation. Finally, the statistical test underlying the signature-based validation is introduced. In what follows,

![]() $\mathcal{X}$

is a metric space.

$\mathcal{X}$

is a metric space.

2.1. The maximum mean distance

Definition 2.1 (Maximum Mean Distance). Let

![]() $\mathcal{G}$

be a class of functions

$\mathcal{G}$

be a class of functions

![]() $f\,:\,\mathcal{X}\rightarrow \mathbb{R}$

and

$f\,:\,\mathcal{X}\rightarrow \mathbb{R}$

and

![]() $\mu$

,

$\mu$

,

![]() $\nu$

two Borel probability measures defined on

$\nu$

two Borel probability measures defined on

![]() $\mathcal{X}$

. The MMD is defined as

$\mathcal{X}$

. The MMD is defined as

Depending on

![]() $\mathcal{G}$

, the MMD is not necessarily a metric (actually, it is a pseudo-metric, that is a metric without the property that two points with zero distance are identical), that is we could have

$\mathcal{G}$

, the MMD is not necessarily a metric (actually, it is a pseudo-metric, that is a metric without the property that two points with zero distance are identical), that is we could have

![]() $MMD_{\mathcal{G}}(\mu,\nu) = 0$

for some

$MMD_{\mathcal{G}}(\mu,\nu) = 0$

for some

![]() $\mu \ne \nu$

if the class of functions

$\mu \ne \nu$

if the class of functions

![]() $\mathcal{G}$

is not rich enough. A sufficiently rich class of functions that makes

$\mathcal{G}$

is not rich enough. A sufficiently rich class of functions that makes

![]() $MMD_{\mathcal{G}}$

a metric is for example the space of bounded continuous functions on

$MMD_{\mathcal{G}}$

a metric is for example the space of bounded continuous functions on

![]() $\mathcal{X}$

equipped with a metric d (Lemma 9.3.2 of Dudley, Reference Dudley2002). A sufficient condition on

$\mathcal{X}$

equipped with a metric d (Lemma 9.3.2 of Dudley, Reference Dudley2002). A sufficient condition on

![]() $\mathcal{G}$

for

$\mathcal{G}$

for

![]() $MMD_\mathcal{G}$

to be a metric is given in Theorem 5 of Gretton et al. (Reference Gretton, Borgwardt, Rasch, Schölkopf and Smola2012).

$MMD_\mathcal{G}$

to be a metric is given in Theorem 5 of Gretton et al. (Reference Gretton, Borgwardt, Rasch, Schölkopf and Smola2012).

As presented in Definition 2.1, the MMD appears more as a theoretical tool than a practical one since computing this distance seems impossible in practice due to the supremum over a class of functions. However, if this class of function is the unit ball in a reproducing kernel Hilbert space (RKHS), the MMD is much simpler to estimate. Before setting out this result precisely, let us make a quick reminder about Mercer kernels and RKHSs.

Definition 2.2 (Mercer kernel). A mapping

![]() $K\,:\,\mathcal{X}\times \mathcal{X}\rightarrow \mathbb{R}$

is called a Mercer kernel if it is continuous, symmetric and positive semi-definite that is for all finite sets

$K\,:\,\mathcal{X}\times \mathcal{X}\rightarrow \mathbb{R}$

is called a Mercer kernel if it is continuous, symmetric and positive semi-definite that is for all finite sets

![]() $\{x_1, \dots, x_k\} \subset \mathcal{X}$

and for all

$\{x_1, \dots, x_k\} \subset \mathcal{X}$

and for all

![]() $(\alpha_1,\dots,\alpha_k)\in\mathbb{R}^k$

, the kernel K satisfies:

$(\alpha_1,\dots,\alpha_k)\in\mathbb{R}^k$

, the kernel K satisfies:

\begin{equation}\sum_{i=1}^k\sum_{j=1}^k \alpha_i\alpha_j K(x_i,x_j) \ge 0.\end{equation}

\begin{equation}\sum_{i=1}^k\sum_{j=1}^k \alpha_i\alpha_j K(x_i,x_j) \ge 0.\end{equation}

Remark 2.1. In the kernel learning literature, it is common to use the terminology “positive definite” instead of “semi-positive definite” but we prefer the latter one in order to be consistent with the linear algebra standard terminology.

We set:

With these notations, we have the following theorem due to Moore-Aronszajn (see Theorem 2 of Chapter III in Cucker and Smale, Reference Cucker and Smale2002):

Theorem 2.1 (Moore-Aronszajn). Let K be a Mercer kernel. Then, there exists a unique Hilbert space

![]() $\mathcal{H}\subset \mathbb{R}^{\mathcal{X}}$

with scalar product

$\mathcal{H}\subset \mathbb{R}^{\mathcal{X}}$

with scalar product

![]() $\langle \cdot, \cdot \rangle_{\mathcal{H}}$

satisfying the following conditions:

$\langle \cdot, \cdot \rangle_{\mathcal{H}}$

satisfying the following conditions:

(i)

![]() $\mathcal{H}_0$

is dense in

$\mathcal{H}_0$

is dense in

![]() $\mathcal{H}$

$\mathcal{H}$

(ii) For all

![]() $f\in \mathcal{H}$

,

$f\in \mathcal{H}$

,

![]() $f(x) = \langle K_x, f \rangle_{\mathcal{H}}$

(reproducing property).

$f(x) = \langle K_x, f \rangle_{\mathcal{H}}$

(reproducing property).

Remark 2.2. The obtained Hilbert space

![]() $\mathcal{H}$

is said to be a reproducing kernel Hilbert space (RKHS) whose reproducing kernel is K.

$\mathcal{H}$

is said to be a reproducing kernel Hilbert space (RKHS) whose reproducing kernel is K.

We may now state the main theorem about the MMD.

Theorem 2.2. Let

![]() $(\mathcal{H},K)$

be a reproducing kernel Hilbert space and let

$(\mathcal{H},K)$

be a reproducing kernel Hilbert space and let

![]() $\mathcal{G}\,:\!=\,\{f\in \mathcal{H} \mid \|f\|_{\mathcal{H}} \le 1\}$

. If

$\mathcal{G}\,:\!=\,\{f\in \mathcal{H} \mid \|f\|_{\mathcal{H}} \le 1\}$

. If

![]() $\int_{\mathcal{X}}\sqrt{K(x,x)}\mu(dx) < \infty$

and

$\int_{\mathcal{X}}\sqrt{K(x,x)}\mu(dx) < \infty$

and

![]() $\int_{\mathcal{X}}\sqrt{K(x,x)}\nu(dx) < \infty$

, then for X, X’ independent random variables distributed according to

$\int_{\mathcal{X}}\sqrt{K(x,x)}\nu(dx) < \infty$

, then for X, X’ independent random variables distributed according to

![]() $\mu$

and Y, Y’ independent random variables distributed according to

$\mu$

and Y, Y’ independent random variables distributed according to

![]() $\nu$

and such that X and Y are independent, K(X, X’), K(Y, Y’) and K(X, Y) are integrable and:

$\nu$

and such that X and Y are independent, K(X, X’), K(Y, Y’) and K(X, Y) are integrable and:

The proof of this theorem can be found in Lemma 6 of Gretton et al. (Reference Gretton, Borgwardt, Rasch, Schölkopf and Smola2012). A natural question at this stage is that of the choice of the Mercer kernel in order to obtain a metric on the space of probability measures defined on the space of continuous mappings from [0, T] to a finite dimensional vector space or at least on a non-trivial subspace of this space. Chevyrev and Oberhauser (Reference Chevyrev and Oberhauser2022) constructed such a Mercer kernel using the signature, which we will now define.

2.2. The signature

This subsection aims at providing a short overview of the signature, more details are given in the Supplementary Material. We call “path” any continuous mapping from some time interval [0, T] to a finite dimensional vector space E which we equip with a norm

![]() $\|\cdot \|_E$

. We denote by

$\|\cdot \|_E$

. We denote by

![]() $\otimes$

the tensor product defined on

$\otimes$

the tensor product defined on

![]() $E\times E$

and by

$E\times E$

and by

![]() $E^{\otimes n}$

the tensor space obtained by taking the tensor product of E with itself n times:

$E^{\otimes n}$

the tensor space obtained by taking the tensor product of E with itself n times:

The space in which the signature takes its values is called the space of formal series of tensors. It can be defined as

with the convention

![]() $E^{\otimes 0} = \mathbb{R}$

.

$E^{\otimes 0} = \mathbb{R}$

.

In a nutshell, the signature of a path X is the collection of all iterated integrals of X against itself. In order to be able to define these iterated integrals of X, one needs to make some assumptions about the regularity of X. The simplest framework is to assume that X is of bounded variation.

Definition 2.3 (Bounded variation path). We say that a path

![]() $X\,:\,[0, T]\rightarrow E$

is of bounded variation on

$X\,:\,[0, T]\rightarrow E$

is of bounded variation on

![]() $[0, T]$

if its total variation

$[0, T]$

if its total variation

is finite with

![]() $\mathcal{D}=\{(t_0,\dots,t_r ) \mid r\in \mathbb{N}^*, t_0 = 0 < t_1 < \dots < t_r = T \}$

the set of all subdivisions of

$\mathcal{D}=\{(t_0,\dots,t_r ) \mid r\in \mathbb{N}^*, t_0 = 0 < t_1 < \dots < t_r = T \}$

the set of all subdivisions of

![]() $[0, T]$

.

$[0, T]$

.

Notation 2.1. We denote by

![]() $\mathcal{C}^1([0, T],E)$

the set of bounded variation paths from

$\mathcal{C}^1([0, T],E)$

the set of bounded variation paths from

![]() $[0, T]$

to E.

$[0, T]$

to E.

Remark 2.3. Intuitively, a bounded variation path on [0, T] is a path whose graph vertical arc length is finite. In fact, if X is a real-valued continuously differentiable path on [0, T], then

![]() $\|X\|_{1,[0, T]} = \int_0^T |X^{\prime}_t| dt $

.

$\|X\|_{1,[0, T]} = \int_0^T |X^{\prime}_t| dt $

.

We can now define the signature of a bounded variation path.

Definition 2.4 (Signature). Let

![]() $X\,:\,[0, T]\rightarrow E$

be a bounded variation path. The signature of X on

$X\,:\,[0, T]\rightarrow E$

be a bounded variation path. The signature of X on

![]() $[0, T]$

is defined as

$[0, T]$

is defined as

![]() $S_{[0, T]}(X) = (\textbf{X}^n)_{n\ge 0}$

where by convention

$S_{[0, T]}(X) = (\textbf{X}^n)_{n\ge 0}$

where by convention

![]() $\textbf{X}^0=1$

and

$\textbf{X}^0=1$

and

where the integrals must be understood in the sense of Riemann–Stieljes. We call

![]() $\textbf{X}^n$

the term of order n of the signature and

$\textbf{X}^n$

the term of order n of the signature and

![]() $S^N_{[0, T]}(X) = (\textbf{X}^n)_{0\le n \le N}$

the truncated signature at order N. Note that when the time interval is clear from the context, we will omit the subscript of S.

$S^N_{[0, T]}(X) = (\textbf{X}^n)_{0\le n \le N}$

the truncated signature at order N. Note that when the time interval is clear from the context, we will omit the subscript of S.

Example 2.1. If X is a one-dimensional bounded variation path, then its signature over

![]() $[0, T]$

is very simple as it reduces to the powers of the increment

$[0, T]$

is very simple as it reduces to the powers of the increment

![]() $X_T-X_0$

, that is for any

$X_T-X_0$

, that is for any

![]() $n\ge 0$

:

$n\ge 0$

:

The above definition could be extended to less regular paths, namely to paths of finite p-variation with

![]() $p<2$

. In this case, the integrals can be defined in the sense of Young (Reference Young1936). However, if

$p<2$

. In this case, the integrals can be defined in the sense of Young (Reference Young1936). However, if

![]() $p\ge 2$

, it is no longer possible to define the iterated integrals. Still, it is possible to give a sense to the signature but the definition is much more involved and relies on the rough path theory so we refer the interested reader to Lyons et al. (Reference Lyons, Caruana and Lévy2007).

$p\ge 2$

, it is no longer possible to define the iterated integrals. Still, it is possible to give a sense to the signature but the definition is much more involved and relies on the rough path theory so we refer the interested reader to Lyons et al. (Reference Lyons, Caruana and Lévy2007).

In this work, we will focus on bounded variation paths where the signature takes its values in the space of finite formal series (as a consequence of Proposition 2.2 of Lyons et al., Reference Lyons, Caruana and Lévy2007):

\begin{equation}T^*(E) \,:\!=\, \left\{\textbf{t}\in T(E) \mid \|\textbf{t}\| \,:\!=\, \sqrt{\sum_{n\ge 0} \|\textbf{t}^n\|_{E^{\otimes n}}^2} <\infty \right\}\end{equation}

\begin{equation}T^*(E) \,:\!=\, \left\{\textbf{t}\in T(E) \mid \|\textbf{t}\| \,:\!=\, \sqrt{\sum_{n\ge 0} \|\textbf{t}^n\|_{E^{\otimes n}}^2} <\infty \right\}\end{equation}

where

![]() $\|\cdot\|_{E^{\otimes n}}$

is the norm induced by the scalar product

$\|\cdot\|_{E^{\otimes n}}$

is the norm induced by the scalar product

![]() $\langle \cdot, \cdot \rangle_{E^{\otimes n}}$

defined on

$\langle \cdot, \cdot \rangle_{E^{\otimes n}}$

defined on

![]() $E^{\otimes n}$

by:

$E^{\otimes n}$

by:

\begin{equation}\langle x, y \rangle_{E^{\otimes n}} = \sqrt{\sum_{I=(i_1,\dots,i_n)\in \{1,\dots,d\}^n} x_Iy_I} \quad \text{for } x,y\in E^{\otimes n}\end{equation}

\begin{equation}\langle x, y \rangle_{E^{\otimes n}} = \sqrt{\sum_{I=(i_1,\dots,i_n)\in \{1,\dots,d\}^n} x_Iy_I} \quad \text{for } x,y\in E^{\otimes n}\end{equation}

with d the dimension of E and

![]() $x_I$

(resp.

$x_I$

(resp.

![]() $y_I$

) the coefficient at position I of x (resp. y).

$y_I$

) the coefficient at position I of x (resp. y).

The signature is a powerful tool allowing to encode a path in a hierarchical and efficient manner. In fact, two bounded variation paths having the same signature are equal up to an equivalence relation (the so-called tree-like equivalence, denoted by

![]() $\sim_t$

, and defined in Appendix B of the Supplementary Material). In other words, the signature is one-to-one on the space

$\sim_t$

, and defined in Appendix B of the Supplementary Material). In other words, the signature is one-to-one on the space

![]() $\mathcal{P}^1([0, T],E)\,:\!=\,\mathcal{C}^1([0, T],E)/\sim_t$

of bounded variation paths quotiented by the tree-like equivalence relation. This is presented in a more comprehensive manner in Appendix B of the Supplementary Material. Now, we would like to characterize the law of stochastic processes with bounded variation sample paths using the expected signature, that is the expectation of the signature taken component-wise. In a way, the expected signature is to stochastic processes what the sequence of moments is to random vectors. Thus, in the same way that the sequence of moments characterizes the law of random vectors only if the moments do not grow too fast, we need that the terms of the expected signature do not grow too fast in order to be able to characterize the law of stochastic processes. In order to avoid to have to restrict the study to laws with compact support (as assumed by Fawcett, Reference Fawcett2002), Chevyrev and Oberhauser (Reference Chevyrev and Oberhauser2022) propose to apply a normalization mapping to the signature ensuring that the norm of the normalized signature is bounded. This property allows them to prove the characterization of the law of a stochastic process by its expected normalized signature (Theorem C.1. in the Supplementary Material). One of the consequences of this result is the following theorem, which makes the connection between the MMD and the signature and represents the main theoretical result underlying the signature-based validation. This result is a particular case of Theorem 30 from Chevyrev and Oberhauser (Reference Chevyrev and Oberhauser2022).

$\mathcal{P}^1([0, T],E)\,:\!=\,\mathcal{C}^1([0, T],E)/\sim_t$

of bounded variation paths quotiented by the tree-like equivalence relation. This is presented in a more comprehensive manner in Appendix B of the Supplementary Material. Now, we would like to characterize the law of stochastic processes with bounded variation sample paths using the expected signature, that is the expectation of the signature taken component-wise. In a way, the expected signature is to stochastic processes what the sequence of moments is to random vectors. Thus, in the same way that the sequence of moments characterizes the law of random vectors only if the moments do not grow too fast, we need that the terms of the expected signature do not grow too fast in order to be able to characterize the law of stochastic processes. In order to avoid to have to restrict the study to laws with compact support (as assumed by Fawcett, Reference Fawcett2002), Chevyrev and Oberhauser (Reference Chevyrev and Oberhauser2022) propose to apply a normalization mapping to the signature ensuring that the norm of the normalized signature is bounded. This property allows them to prove the characterization of the law of a stochastic process by its expected normalized signature (Theorem C.1. in the Supplementary Material). One of the consequences of this result is the following theorem, which makes the connection between the MMD and the signature and represents the main theoretical result underlying the signature-based validation. This result is a particular case of Theorem 30 from Chevyrev and Oberhauser (Reference Chevyrev and Oberhauser2022).

Theorem 2.3. Let E be a Hilbert space and

![]() $\langle\cdot, \cdot\rangle$

the scalar product on

$\langle\cdot, \cdot\rangle$

the scalar product on

![]() $T^*(E)$

defined by

$T^*(E)$

defined by

![]() $\langle \textbf{x}, \textbf{y} \rangle= \sum_{n\ge 0} \langle \textbf{x}^n, \textbf{y}^n \rangle_{E^{\otimes n}}$

for all

$\langle \textbf{x}, \textbf{y} \rangle= \sum_{n\ge 0} \langle \textbf{x}^n, \textbf{y}^n \rangle_{E^{\otimes n}}$

for all

![]() $\textbf{x}$

and

$\textbf{x}$

and

![]() $\textbf{y}$

in

$\textbf{y}$

in

![]() $T^*(E)$

. Then the signature kernel defined on

$T^*(E)$

. Then the signature kernel defined on

![]() $\mathcal{P}^1([0, T],E)$

by

$\mathcal{P}^1([0, T],E)$

by

![]() $K^{sig}(x,y) = \left\langle\Phi(x),\Phi(y)\right\rangle$

where

$K^{sig}(x,y) = \left\langle\Phi(x),\Phi(y)\right\rangle$

where

![]() $\Phi$

is the normalized signature (see Theorem C.1. in the Supplementary Material), is a Mercer kernel and we denote by

$\Phi$

is the normalized signature (see Theorem C.1. in the Supplementary Material), is a Mercer kernel and we denote by

![]() $\mathcal{H}^{sig}$

the associated RKHS. Moreover,

$\mathcal{H}^{sig}$

the associated RKHS. Moreover,

![]() $MMD_{\mathcal{G}}$

where

$MMD_{\mathcal{G}}$

where

![]() $\mathcal{G}$

is the unit ball of

$\mathcal{G}$

is the unit ball of

![]() $\mathcal{H}^{sig}$

is a metric on the space

$\mathcal{H}^{sig}$

is a metric on the space

![]() $\mathcal{M}$

defined as:

$\mathcal{M}$

defined as:

and we have:

where X, X’ are independent random variables distributed according to

![]() $\mu$

and Y, Y’ are independent random variables distributed according to

$\mu$

and Y, Y’ are independent random variables distributed according to

![]() $\nu$

such that Y is independent from X.

$\nu$

such that Y is independent from X.

Based on this theorem, Chevyrev and Oberhauser propose a two-sample statistical test that allows to test whether two samples of paths come from the same distribution and that we now introduce.

2.3. A two-sample test for stochastic processes

Assume that we are given a sample

![]() $(X_1,\dots,X_m)$

consisting of m independent realizations of a stochastic process of unknown law

$(X_1,\dots,X_m)$

consisting of m independent realizations of a stochastic process of unknown law

![]() $\mathbb{P}_X$

and an independent sample

$\mathbb{P}_X$

and an independent sample

![]() $(Y_1,\dots,Y_n)$

consisting of n independent realizations of a stochastic process of unknown law

$(Y_1,\dots,Y_n)$

consisting of n independent realizations of a stochastic process of unknown law

![]() $\mathbb{P}_Y$

. We assume that both processes are in

$\mathbb{P}_Y$

. We assume that both processes are in

![]() $\mathcal{X} = \mathcal{P}^1([0, T],E)$

almost surely. A natural question is whether

$\mathcal{X} = \mathcal{P}^1([0, T],E)$

almost surely. A natural question is whether

![]() $\mathbb{P}_X = \mathbb{P}_Y$

. Let us consider the following null and alternative hypotheses:

$\mathbb{P}_X = \mathbb{P}_Y$

. Let us consider the following null and alternative hypotheses:

According to Theorem 2.3, we have

![]() $MMD_{\mathcal{G}}(\mathbb{P}_X,\mathbb{P}_Y) \neq 0$

under

$MMD_{\mathcal{G}}(\mathbb{P}_X,\mathbb{P}_Y) \neq 0$

under

![]() $H_1$

while

$H_1$

while

![]() $MMD_{\mathcal{G}}(\mathbb{P}_X,\mathbb{P}_Y) = 0$

under

$MMD_{\mathcal{G}}(\mathbb{P}_X,\mathbb{P}_Y) = 0$

under

![]() $H_0$

when

$H_0$

when

![]() $\mathcal{G}$

is the unit ball of the reproducing Hilbert space associated to the signature kernel (note that we use the notation K instead of

$\mathcal{G}$

is the unit ball of the reproducing Hilbert space associated to the signature kernel (note that we use the notation K instead of

![]() $K^{sig}$

for the signature kernel in this section as there is no ambiguity). Moreover,

$K^{sig}$

for the signature kernel in this section as there is no ambiguity). Moreover,

where X, X

′ are two random variables of law

![]() $\mathbb{P}_X$

and Y, Y

′ are two random variables of law

$\mathbb{P}_X$

and Y, Y

′ are two random variables of law

![]() $\mathbb{P}_Y$

with X, Y independent. This suggests to consider the following test statistic:

$\mathbb{P}_Y$

with X, Y independent. This suggests to consider the following test statistic:

\begin{equation}\begin{aligned}MMD^2_{m,n}(X_1,\dots,X_m,Y_1,\dots,Y_n)\,:\!=\, &\frac{1}{m(m-1)}\sum_{1\le i\ne j \le m} K(X_i,X_j)+\frac{1}{n(n-1)}\sum_{1\le i\ne j \le n} K(Y_i,Y_j)\\&-\frac{2}{mn}\sum_{\substack{1\le i \le m \\ 1\le j \le n}} K(X_i,Y_j)\end{aligned}\end{equation}

\begin{equation}\begin{aligned}MMD^2_{m,n}(X_1,\dots,X_m,Y_1,\dots,Y_n)\,:\!=\, &\frac{1}{m(m-1)}\sum_{1\le i\ne j \le m} K(X_i,X_j)+\frac{1}{n(n-1)}\sum_{1\le i\ne j \le n} K(Y_i,Y_j)\\&-\frac{2}{mn}\sum_{\substack{1\le i \le m \\ 1\le j \le n}} K(X_i,Y_j)\end{aligned}\end{equation}

as it is an unbiased estimator of

![]() $MMD^2_{\mathcal{G}}(\mathbb{P}_X,\mathbb{P}_Y)$

. In the sequel, we omit the dependency on

$MMD^2_{\mathcal{G}}(\mathbb{P}_X,\mathbb{P}_Y)$

. In the sequel, we omit the dependency on

![]() $X_1,\dots,X_m$

and

$X_1,\dots,X_m$

and

![]() $Y_1,\dots,Y_n$

for notational simplicity. This estimator is even strongly consistent as stated by the following theorem which is an application of the strong law of large numbers for two-sample U-statistics (Sen, Reference Sen1977).

$Y_1,\dots,Y_n$

for notational simplicity. This estimator is even strongly consistent as stated by the following theorem which is an application of the strong law of large numbers for two-sample U-statistics (Sen, Reference Sen1977).

Theorem 2.4 Assuming that

-

(i)

$\mathbb{E}\left[ \sqrt{K(X, X)}\right] < \infty$

with X distributed according to

$\mathbb{E}\left[ \sqrt{K(X, X)}\right] < \infty$

with X distributed according to

$\mathbb{P}_X$

and

$\mathbb{P}_X$

and

$\mathbb{E}\left[ \sqrt{K(Y, Y)}\right] < \infty$

with Y distributed according to

$\mathbb{E}\left[ \sqrt{K(Y, Y)}\right] < \infty$

with Y distributed according to

$\mathbb{P}_Y$

$\mathbb{P}_Y$

-

(ii)

$\mathbb{E}[|h|\log^+|h|] < \infty$

where

$\mathbb{E}[|h|\log^+|h|] < \infty$

where

$\log^+$

is the positive part of the logarithm and (2.17)with X, X’ distributed according to

$\log^+$

is the positive part of the logarithm and (2.17)with X, X’ distributed according to \begin{equation}h = K(X, X^{\prime})+K(Y, Y^{\prime})-\frac{1}{2}\left( K(X, Y)+K(X, Y^{\prime})+K(X^{\prime},Y)+K(X^{\prime},Y^{\prime})\right)\end{equation}

\begin{equation}h = K(X, X^{\prime})+K(Y, Y^{\prime})-\frac{1}{2}\left( K(X, Y)+K(X, Y^{\prime})+K(X^{\prime},Y)+K(X^{\prime},Y^{\prime})\right)\end{equation}

$\mathbb{P}_X$

, Y, Y’ distributed according to

$\mathbb{P}_X$

, Y, Y’ distributed according to

$\mathbb{P}_Y$

and X, X’,Y, Y’ independent

$\mathbb{P}_Y$

and X, X’,Y, Y’ independent

then:

Under

![]() $H_1$

,

$H_1$

,

![]() $MMD^2_{\mathcal{G}}(\mathbb{P}_X,\mathbb{P}_Y)>0$

so that

$MMD^2_{\mathcal{G}}(\mathbb{P}_X,\mathbb{P}_Y)>0$

so that

![]() $N\times MMD^2_{m,n} \overset{a.s.}{\underset{m,n \to +\infty}{\rightarrow}} +\infty$

for

$N\times MMD^2_{m,n} \overset{a.s.}{\underset{m,n \to +\infty}{\rightarrow}} +\infty$

for

![]() $N=m+n$

. Thus, we reject the null hypothesis at level

$N=m+n$

. Thus, we reject the null hypothesis at level

![]() $\alpha$

if

$\alpha$

if

![]() $MMD^2_{m,n}$

is greater than some threshold

$MMD^2_{m,n}$

is greater than some threshold

![]() $c_{\alpha}$

. In order to determine this threshold, we rely on the asymptotic distribution of

$c_{\alpha}$

. In order to determine this threshold, we rely on the asymptotic distribution of

![]() $MMD^2_{m,n}$

under

$MMD^2_{m,n}$

under

![]() $H_0$

which is due to Gretton et al. (Reference Gretton, Borgwardt, Rasch, Schölkopf and Smola2012, Theorem 12).

$H_0$

which is due to Gretton et al. (Reference Gretton, Borgwardt, Rasch, Schölkopf and Smola2012, Theorem 12).

Theorem 2.5. Let us define the kernel

![]() $\tilde{K}$

by:

$\tilde{K}$

by:

where X and X’ are i.i.d. samples drawn from

![]() $\mathbb{P}_X$

. Assume that:

$\mathbb{P}_X$

. Assume that:

-

(i)

$\mathbb{E}[\tilde{K}(X, X^{\prime})^2] <+\infty$

$\mathbb{E}[\tilde{K}(X, X^{\prime})^2] <+\infty$

-

(ii)

$m/N \rightarrow \rho \in (0,1)$

as

$m/N \rightarrow \rho \in (0,1)$

as

$N=m+n \rightarrow +\infty$

.

$N=m+n \rightarrow +\infty$

.

Under these assumptions, we have:

-

1. under

$H_0$

: (2.20)where

$H_0$

: (2.20)where \begin{equation}N\times MMD^2_{m,n} \underset{N \to +\infty}{\overset{\mathcal{L}}{\rightarrow}}\frac{1}{\rho(1-\rho)}\sum_{\ell=1}^{+\infty}\lambda_{\ell} \left(G_{\ell}^2 -1 \right)\end{equation}

\begin{equation}N\times MMD^2_{m,n} \underset{N \to +\infty}{\overset{\mathcal{L}}{\rightarrow}}\frac{1}{\rho(1-\rho)}\sum_{\ell=1}^{+\infty}\lambda_{\ell} \left(G_{\ell}^2 -1 \right)\end{equation}

$(G_{\ell})_{\ell \ge 1}$

is an infinite sequence of independent standard normal random variables and the

$(G_{\ell})_{\ell \ge 1}$

is an infinite sequence of independent standard normal random variables and the

$\lambda_{\ell}$

’s are the eigenvalues of the operator

$\lambda_{\ell}$

’s are the eigenvalues of the operator

$S_{\tilde{K}}$

defined as (2.21)with

$S_{\tilde{K}}$

defined as (2.21)with \begin{equation}\begin{array}{rrcl}S_{\tilde{K}}\,:\,& L_2(\mathbb{P}_X) &\rightarrow &L_2(\mathbb{P}_X) \\&g &\mapsto &\mathbb{E}[\tilde{K}(\cdot,X)g(X)]\end{array}\end{equation}

\begin{equation}\begin{array}{rrcl}S_{\tilde{K}}\,:\,& L_2(\mathbb{P}_X) &\rightarrow &L_2(\mathbb{P}_X) \\&g &\mapsto &\mathbb{E}[\tilde{K}(\cdot,X)g(X)]\end{array}\end{equation}

$L_2(\mathbb{P}_X)\,:\!=\,\{g\,:\, \mathcal{X} \rightarrow \mathbb{R} \mid \mathbb{E}[g(X)^2] <\infty \}$

.

$L_2(\mathbb{P}_X)\,:\!=\,\{g\,:\, \mathcal{X} \rightarrow \mathbb{R} \mid \mathbb{E}[g(X)^2] <\infty \}$

.

-

2. under

$H_1$

: (2.22)

$H_1$

: (2.22) \begin{equation}N\times MMD^2_{m,n} \underset{N\to +\infty}{\rightarrow} +\infty\end{equation}

\begin{equation}N\times MMD^2_{m,n} \underset{N\to +\infty}{\rightarrow} +\infty\end{equation}

This theorem indicates that if one wants to have a test with level

![]() $\alpha$

, one should take the

$\alpha$

, one should take the

![]() $1-\alpha$

quantile of the above asymptotic distribution as rejection threshold. In order to approximate this quantile, we use an approach proposed by Gretton et al. (Reference Gretton, Fukumizu, Harchaoui and Sriperumbudur2009) that aims at estimating the eigenvalues in Theorem 2.5 using the empirical Gram matrix spectrum. This approach relies on the following theorem (Theorem 1 of Gretton et al., Reference Gretton, Fukumizu, Harchaoui and Sriperumbudur2009).

$1-\alpha$

quantile of the above asymptotic distribution as rejection threshold. In order to approximate this quantile, we use an approach proposed by Gretton et al. (Reference Gretton, Fukumizu, Harchaoui and Sriperumbudur2009) that aims at estimating the eigenvalues in Theorem 2.5 using the empirical Gram matrix spectrum. This approach relies on the following theorem (Theorem 1 of Gretton et al., Reference Gretton, Fukumizu, Harchaoui and Sriperumbudur2009).

Theorem 2.6. Let

![]() $(\lambda_{\ell})_{\ell\ge 1}$

be the eigenvalues defined in Theorem 2.5 and

$(\lambda_{\ell})_{\ell\ge 1}$

be the eigenvalues defined in Theorem 2.5 and

![]() $(G_{\ell})_{\ell\ge 1}$

be a sequence of i.i.d. standard normal variables. For

$(G_{\ell})_{\ell\ge 1}$

be a sequence of i.i.d. standard normal variables. For

![]() $N=m+n$

, we define the centred Gram matrix

$N=m+n$

, we define the centred Gram matrix

![]() $\hat{A}= HAH $

where

$\hat{A}= HAH $

where

![]() $A=(K(Z_i,Z_j))_{1\le i,j \le N}$

(

$A=(K(Z_i,Z_j))_{1\le i,j \le N}$

(

![]() $Z_i = X_i$

if

$Z_i = X_i$

if

![]() $i\le m$

and

$i\le m$

and

![]() $Z_i = Y_{i-m}$

if

$Z_i = Y_{i-m}$

if

![]() $i > m$

) and

$i > m$

) and

![]() $H = I_{N} -\frac{1}{N}\textbf{1}\textbf{1}^T$

. If

$H = I_{N} -\frac{1}{N}\textbf{1}\textbf{1}^T$

. If

![]() $\sum_{l=1}^{+\infty}\sqrt{\lambda_l} < \infty$

and

$\sum_{l=1}^{+\infty}\sqrt{\lambda_l} < \infty$

and

![]() $m/N \rightarrow \rho \in (0,1)$

as

$m/N \rightarrow \rho \in (0,1)$

as

![]() $N \rightarrow +\infty$

, then under

$N \rightarrow +\infty$

, then under

![]() $H_0$

:

$H_0$

:

where

![]() $\hat{\rho}=m/N$

and the

$\hat{\rho}=m/N$

and the

![]() $\nu_{\ell}$

’s are the eigenvalues of

$\nu_{\ell}$

’s are the eigenvalues of

![]() $\hat{A}$

.

$\hat{A}$

.

Therefore, we can approximate the asymptotic distribution in Theorem 2.5 by

with R is the truncation order and

![]() $\nu_1 > \nu_2 > \dots > \nu_R$

are the R first eigenvalues of

$\nu_1 > \nu_2 > \dots > \nu_R$

are the R first eigenvalues of

![]() $\hat{A}$

in decreasing order. A rejection threshold is then obtained by simulating several realizations of the above random variable and then computing their empirical quantile at level

$\hat{A}$

in decreasing order. A rejection threshold is then obtained by simulating several realizations of the above random variable and then computing their empirical quantile at level

![]() $1-\alpha$

.

$1-\alpha$

.

3. Implementation and numerical results

The objective of this section is to show the practical interest of the two-sample test described in the previous section for the validation of real-world economic scenarios. In the sequel, we refer to the two-sample test as the signature-based validation test. As a preliminary, we discuss the challenges implied by the practical implementation of the signature-based validation test.

3.1. Practical implementation of the signature-based validation test

3.1.1. Signature of a finite number of observations

In practice, the historical paths of the risk drivers under interest are not observed continuously, and the data are only available on a discrete time grid with steps that are generally not very small (one day for the realized volatility data and one month for the inflation considered in Section 3.3). One has to embed these observations into a continuous path in order to be able to compute the signature and a fortiori the MMD with the model that we want to test. The two most popular embeddings in the literature are the linear and the rectilinear embeddings. The former one consists in a plain linear interpolation of the observations, while the latter consists in an interpolation using only parallel shifts with respect to the x and y-axis. In the sequel, we will only use the linear embedding as this choice does not seem to have a material impact on the information contained in the obtained signature as shown by the comparative study led by Fermanian (section 4.2 of Fermanian, Reference Fermanian2021). Even if several of the models considered below have paths with unbounded variation, we discretize them with a rather crude step (consistent with insurance practice) and then we compute the signatures of the continuous paths with bounded variation deduced by the linear interpolation embedding.

3.1.2. Extracting information of a one-dimensional process

Remember that if X is a one-dimensional bounded variation path, then its signature over [0, T] is equal to the powers of the increment

![]() $X_T-X_0$

. As a consequence, finer information than the global increment about the evolution of X on [0, T] is lost. In practice, X is often of dimension 1. Indeed, the validation of real-world economic scenarios is generally performed separately for each risk driver and not all together as a first step and only then the validation is performed on a more global level (e.g. through the analysis of the copula among risk drivers). For example, when validating risk drivers such as equity or inflation, X represents an equity or inflation index which is one-dimensional. Moreover, even for multidimensional risk drivers such as interest rates, practitioners tend to work on the marginal distributions (e.g. they focus separately on each maturity for interest rates) so that the validation is again one-dimensional. In order to nonetheless be able to capture finer information about the evolution of X on [0, T], one can apply a transformation to X to recover a multi-dimensional path. The two most widely used transformations are the time transformation and the lead-lag transformation. The time transformation consists in considering the two-dimensional path

$X_T-X_0$

. As a consequence, finer information than the global increment about the evolution of X on [0, T] is lost. In practice, X is often of dimension 1. Indeed, the validation of real-world economic scenarios is generally performed separately for each risk driver and not all together as a first step and only then the validation is performed on a more global level (e.g. through the analysis of the copula among risk drivers). For example, when validating risk drivers such as equity or inflation, X represents an equity or inflation index which is one-dimensional. Moreover, even for multidimensional risk drivers such as interest rates, practitioners tend to work on the marginal distributions (e.g. they focus separately on each maturity for interest rates) so that the validation is again one-dimensional. In order to nonetheless be able to capture finer information about the evolution of X on [0, T], one can apply a transformation to X to recover a multi-dimensional path. The two most widely used transformations are the time transformation and the lead-lag transformation. The time transformation consists in considering the two-dimensional path

![]() $\hat{X}_t\,:\,t\mapsto (t,X_t)$

instead of

$\hat{X}_t\,:\,t\mapsto (t,X_t)$

instead of

![]() $t\mapsto X_t$

. The lead-lag transformation has been introduced by Gyurkó et al. (Reference Gyurkó, Lyons, Kontkowski and Field2013) in order to capture the quadratic variation of a path in the signature. Let X be a real-valued stochastic process and

$t\mapsto X_t$

. The lead-lag transformation has been introduced by Gyurkó et al. (Reference Gyurkó, Lyons, Kontkowski and Field2013) in order to capture the quadratic variation of a path in the signature. Let X be a real-valued stochastic process and

![]() $0=t_0< t_{1/2} < t_1 < \dots < t_{N-1/2} < t_N = T$

be a partition of [0, T]. The lead-lag transformation of X on the partition

$0=t_0< t_{1/2} < t_1 < \dots < t_{N-1/2} < t_N = T$

be a partition of [0, T]. The lead-lag transformation of X on the partition

![]() $(t_{i/2})_{i=0,\dots,2N}$

is the two-dimensional path

$(t_{i/2})_{i=0,\dots,2N}$

is the two-dimensional path

![]() $t\mapsto (X_t^{lead},X_t^{lag})$

defined on [0, T] where:

$t\mapsto (X_t^{lead},X_t^{lag})$

defined on [0, T] where:

-

1. the lead process

$t\mapsto X_t^{lead}$

is the linear interpolation of the points

$t\mapsto X_t^{lead}$

is the linear interpolation of the points

$(X^{lead}_{t_{i/2}})_{i=0,\dots,2N}$

with: (3.1)

$(X^{lead}_{t_{i/2}})_{i=0,\dots,2N}$

with: (3.1) \begin{equation}X^{lead}_{t_{i/2}} =\left\{\begin{array}{l@{\quad}l}X_{t_j} & \text{if } i = 2j \\[4pt]X_{t_{j+1}} & \text{if } i = 2j+1\end{array}\right.\end{equation}

\begin{equation}X^{lead}_{t_{i/2}} =\left\{\begin{array}{l@{\quad}l}X_{t_j} & \text{if } i = 2j \\[4pt]X_{t_{j+1}} & \text{if } i = 2j+1\end{array}\right.\end{equation}

-

2. the lag process

$t\mapsto X_t^{lag}$

is the linear interpolation of the points

$t\mapsto X_t^{lag}$

is the linear interpolation of the points

$(X^{lag}_{t_{i/2}})_{i=0,\dots,2N}$

with: (3.2)

$(X^{lag}_{t_{i/2}})_{i=0,\dots,2N}$

with: (3.2) \begin{equation}X^{lag}_{t_{i/2}} =\left\{\begin{array}{l@{\quad}l}X_{t_j} & \text{if } i=2j \\[4pt]X_{t_j} & \text{if } i=2j+1\end{array}\right.\end{equation}

\begin{equation}X^{lag}_{t_{i/2}} =\left\{\begin{array}{l@{\quad}l}X_{t_j} & \text{if } i=2j \\[4pt]X_{t_j} & \text{if } i=2j+1\end{array}\right.\end{equation}

Illustrations of the lead and lag paths as well as of the lead-lag transformation are provided in Figure 1. Note that by summing the area of each rectangle formed by the lead-lag transformation between two orange dots in Figure 1(b), one recovers the sum of the squares of the increments of X which is exactly the quadratic variation of X over the partition

![]() $(t_i)_{i=0,\dots,N}$

. The link between the signature and the quadratic variation is more formally stated in Proposition A.1. of the Supplementary Material.

$(t_i)_{i=0,\dots,N}$

. The link between the signature and the quadratic variation is more formally stated in Proposition A.1. of the Supplementary Material.

Figure 1. Lead-lag illustrations.

Remark 3.1. The choice of the dates

![]() $(t_{i+1/2})_{i=0,\dots,N-1}$

such that

$(t_{i+1/2})_{i=0,\dots,N-1}$

such that

![]() $t_i < t_{i+1/2} < t_{i+1}$

can be arbitrary since the signature is invariant by time reparametrization (see Proposition B.1. in the Supplementary Material).

$t_i < t_{i+1/2} < t_{i+1}$

can be arbitrary since the signature is invariant by time reparametrization (see Proposition B.1. in the Supplementary Material).

A third transformation can be constructed from the time and the lead-lag transformations. Indeed, given a finite set of observations

![]() $(X_{t_i})_{i=0,\dots,N}$

, one can consider the three-dimensional path

$(X_{t_i})_{i=0,\dots,N}$

, one can consider the three-dimensional path

![]() $t\mapsto (t,X_t^{lead},X_t^{lag})$

. We call this transformation the time lead-lag transformation. Finally, the cumulative lead-lag transformation is the two-dimensional path

$t\mapsto (t,X_t^{lead},X_t^{lag})$

. We call this transformation the time lead-lag transformation. Finally, the cumulative lead-lag transformation is the two-dimensional path

![]() $t\mapsto (\tilde{X}_t^{lead},\tilde{X}_t^{lag})$

where

$t\mapsto (\tilde{X}_t^{lead},\tilde{X}_t^{lag})$

where

![]() $\tilde{X}_t^{lead}$

(resp.

$\tilde{X}_t^{lead}$

(resp.

![]() $\tilde{X}_t^{lag}$

) is the lead (resp. lag) transformation of the points

$\tilde{X}_t^{lag}$

) is the lead (resp. lag) transformation of the points

![]() $(\tilde{X}_{t_{i}})_{i=0,\dots,N+1}$

with:

$(\tilde{X}_{t_{i}})_{i=0,\dots,N+1}$

with:

\begin{equation}\tilde{X}_{t_{i}} = \left\{\begin{array}{l@{\quad}l}0 & \text{for $i=0$}\\[4pt]\sum_{k=0}^{i-1} X_{t_{k}} & \text{for $i=1,\dots,N+1$}.\end{array}\right.\end{equation}

\begin{equation}\tilde{X}_{t_{i}} = \left\{\begin{array}{l@{\quad}l}0 & \text{for $i=0$}\\[4pt]\sum_{k=0}^{i-1} X_{t_{k}} & \text{for $i=1,\dots,N+1$}.\end{array}\right.\end{equation}

This transformation has been introduced by Chevyrev and Kormilitzin (Reference Chevyrev and Kormilitzin2016) because its signature is related to the statistical moments of the initial path X. More details on this point are provided in Remark A.1. in the Supplementary Material.

3.1.3. Numerical computation of the signature and the MMD

The numerical computation of the signature is performed using the iisignature Python package (version 0.24) of Reizenstein and Graham (Reference Reizenstein and Graham2020). The signatory Python package of Kidger and Lyons (Reference Kidger and Lyons2020) could also be used for faster computations. Because the signature is an infinite object, we compute in practice only the truncated signature up to some specified order R. The influence of the truncation order on the statistical power of the test will be discussed in Section 3.2. Note that we will focus on truncation orders below 8 as there is not much information beyond this order given that we work with a limited number of observations of each path which implies that the approximation of high-order iterated integrals will rely on very few points.

3.2. Analysis of the statistical power on synthetic data

In this subsection, we apply the signature-based validation on simulated data, that is the two samples of stochastic processes are numerically simulated. Keeping in mind insurance applications, the two-sample test is structured as follows:

-

Each path is obtained by a linear interpolation from a set of 13 equally spaced observations of the stochastic process under study. The first observation (i.e. the initial value of each path) is the same across all paths. These 13 observations represent monthly observations over a period of one year. In insurance practice, computational time constraints around the asset and liability models generally limit the simulation frequency to a monthly time step. The period of one year is justified by the fact that one needs to split the historical path under study into several shorter paths to get a test sample of size greater than 1. Because the number of historical data points is limited (about 30 years of data for the major equity indices), a split frequency of one year appears reasonable given the monthly observation frequency.

-

The two samples are assumed to be of different sizes (i.e.

$m\neq n$

with the notations of Section 2.3). Several sizes m of the first sample will be tested while the size n of the second sample is always set to 1000. The first sample representing historical paths, we will mainly consider small values of m as m will in practice be equal to the number of years of available data (considering a split of the historical path in one-year length paths as discussed above). For the second sample which consists in simulated paths (for example by an Economic Scenario Generator), we take 1000 simulated paths as it corresponds to a lower bound of the number of scenarios typically used by insurers. Numerical tests (not presented here) have also been performed with a sample size of 5000 instead of 1000, but the results were essentially the same.

$m\neq n$

with the notations of Section 2.3). Several sizes m of the first sample will be tested while the size n of the second sample is always set to 1000. The first sample representing historical paths, we will mainly consider small values of m as m will in practice be equal to the number of years of available data (considering a split of the historical path in one-year length paths as discussed above). For the second sample which consists in simulated paths (for example by an Economic Scenario Generator), we take 1000 simulated paths as it corresponds to a lower bound of the number of scenarios typically used by insurers. Numerical tests (not presented here) have also been performed with a sample size of 5000 instead of 1000, but the results were essentially the same. -

As we aim to explore the capability of the two-sample test to capture properties of the paths that cannot be captured by looking at their marginal distribution at some dates, we impose that the distributions of the increment over [0, T] of the two compared stochastic processes are the same. In other words, we only compare stochastic processes

$(X_t)_{0\le t \le T}$

and

$(X_t)_{0\le t \le T}$

and

$(Y_t)_{0\le t\le T}$

satisfying

$(Y_t)_{0\le t\le T}$

satisfying

$X_T-X_0\overset{\mathcal{L}}{=}Y_T-Y_0$

with

$X_T-X_0\overset{\mathcal{L}}{=}Y_T-Y_0$

with

$T=1$

year. This constraint is motivated by the fact that two models that do not have the same marginal one-year distribution are already discriminated by the current point-in-time validation methods. Moreover, it is a common practice in the insurance industry to calibrate the real-world models by minimizing the distance between model and historical moments so that the model marginal distribution is often close to the historical marginal distribution. Because of this constraint, we will remove the first-order term of the signature in our estimation of the MMD because it is equal to the global increment

$T=1$

year. This constraint is motivated by the fact that two models that do not have the same marginal one-year distribution are already discriminated by the current point-in-time validation methods. Moreover, it is a common practice in the insurance industry to calibrate the real-world models by minimizing the distance between model and historical moments so that the model marginal distribution is often close to the historical marginal distribution. Because of this constraint, we will remove the first-order term of the signature in our estimation of the MMD because it is equal to the global increment

$X_T-X_0$

and it does not provide useful statistical information.

$X_T-X_0$

and it does not provide useful statistical information.

In order to measure the ability of the signature-based validation to distinguish two different samples of paths, we compute the statistical power of the underlying test, which is the probability to correctly reject the null hypothesis under

![]() $H_1$

, by simulating 1000 times two samples of sizes m and n respectively and counting the number of times that the null hypothesis (the stochastic processes underlying the two samples are the same) is rejected. The rejection threshold is obtained using the empirical Gram matrix spectrum as described in Section 2.3. First, we generate a sample of size m and a sample of size n under

$H_1$

, by simulating 1000 times two samples of sizes m and n respectively and counting the number of times that the null hypothesis (the stochastic processes underlying the two samples are the same) is rejected. The rejection threshold is obtained using the empirical Gram matrix spectrum as described in Section 2.3. First, we generate a sample of size m and a sample of size n under

![]() $H_0$

to compute the eigenvalues of the matrix

$H_0$

to compute the eigenvalues of the matrix

![]() $\hat{A}$

in Theorem 2.6. Then, we keep the 20 first eigenvalues in decreasing order and we perform 10,000 simulations of the random variable in Equation (2.24) whose distribution approximates the MMD asymptotic distribution under

$\hat{A}$

in Theorem 2.6. Then, we keep the 20 first eigenvalues in decreasing order and we perform 10,000 simulations of the random variable in Equation (2.24) whose distribution approximates the MMD asymptotic distribution under

![]() $H_0$

. The rejection threshold is obtained as the empirical quantile of level 99% of these samples. For each experiment presented in the sequel, we also simulate 1000 times two samples of sizes m and n under

$H_0$

. The rejection threshold is obtained as the empirical quantile of level 99% of these samples. For each experiment presented in the sequel, we also simulate 1000 times two samples of sizes m and n under

![]() $H_0$

and we count the number of times that the null hypothesis is rejected with this rejection threshold, which gives us the type I error. This step allows us to verify that the computed rejection threshold provides indeed a test of level 99% in all experiments. As we obtain a type I error around 1% in all numerical experiments, we conclude to the accuracy of the computed rejection threshold.

$H_0$

and we count the number of times that the null hypothesis is rejected with this rejection threshold, which gives us the type I error. This step allows us to verify that the computed rejection threshold provides indeed a test of level 99% in all experiments. As we obtain a type I error around 1% in all numerical experiments, we conclude to the accuracy of the computed rejection threshold.

We will now present numerical results for three stochastic processes: the fractional Brownian motion, the Black–Scholes dynamics and the rough Heston model. Two time-series models will also be considered: a regime-switching AR(1) process and a random walk with i.i.d. Gamma noises. Finally, we study a two-dimensional process where one component evolves according to the rough Heston dynamics and the other one evolves according to a regime-switching AR(1) process. The choice of this catalogue of models is both motivated by

-

1. the fact that the models are either widely used or realistic for the simulation of specific risk drivers (equity volatility, equity prices and inflation) and

-

2. the fact that the models allow to illustrate different pathwise properties such as Hölder regularity, volatility, autocorrelation or regime switches.

3.2.1. The fractional Brownian motion

The fractional Brownian motion (fBm) is a generalization of the standard Brownian motion that, outside this standard case, is neither a semimartingale nor a Markov process and whose sample paths can be more or less regular than those of the standard Brownian motion. More precisely, it is the unique centred Gaussian process

![]() $(B_t^H)_{t\ge 0}$

whose covariance function is given by:

$(B_t^H)_{t\ge 0}$

whose covariance function is given by:

where

![]() $H\in (0,1)$

is called the Hurst parameter. Taking

$H\in (0,1)$

is called the Hurst parameter. Taking

![]() $H=1/2$

, we recover the standard Brownian motion. The fBm exhibits two interesting pathwise properties.

$H=1/2$

, we recover the standard Brownian motion. The fBm exhibits two interesting pathwise properties.

-

1. The fBm sample paths are

$H-\epsilon$

Hölder for all

$H-\epsilon$

Hölder for all

$\epsilon >0$

. Thus, when

$\epsilon >0$

. Thus, when

$H<1/2$

, the fractional Brownian motion sample paths are rougher than those of the standard Brownian motion and when

$H<1/2$

, the fractional Brownian motion sample paths are rougher than those of the standard Brownian motion and when

$H>1/2$

, they are smoother.

$H>1/2$

, they are smoother. -

2. The increments are correlated: if

$s<t<u<v$

, then

$s<t<u<v$

, then

$\mathbb{E}\left[\left(B_t^H-B_s^H\right)\left(B_v^H-B_u^H\right)\right]$

is positive if

$\mathbb{E}\left[\left(B_t^H-B_s^H\right)\left(B_v^H-B_u^H\right)\right]$

is positive if

$H>1/2$

and negative if

$H>1/2$

and negative if

$H<1/2$

since

$H<1/2$

since

$x\mapsto x^{2H}$

is convex if

$x\mapsto x^{2H}$

is convex if

$H>1/2$

and concave otherwise.

$H>1/2$

and concave otherwise.

One of the main motivations for studying this process is the work of Gatheral et al. (Reference Gatheral, Jaisson and Rosenbaum2018) which shows that the historical volatility of many financial indices essentially behaves as a fBm with a Hurst parameter around 10%.

Figure 2. Statistical power (as a function of the truncation order and the size of the first sample) of the signature-based validation test when comparing fBm paths with

![]() $H=0.1$

against fBm paths with

$H=0.1$

against fBm paths with

![]() $H^{\prime}=0.2$

. Note that the lead-lag transformation is applied.

$H^{\prime}=0.2$

. Note that the lead-lag transformation is applied.

In the following numerical experiments, we will compare samples from a fBm with Hurst parameter H and samples from a fBm with a different Hurst parameter H

′. One can easily check that

![]() $B_1^H$

has the same distribution than

$B_1^H$

has the same distribution than

![]() $B_1^{H^{\prime}}$

since

$B_1^{H^{\prime}}$

since

![]() $B_1^H$

and

$B_1^H$

and

![]() $B_1^{H^{\prime}}$

are both standard normal variables. Thus, the constraint that both samples have the same one-year marginal distribution (see the introduction of Section 3.2) is satisfied. Note that a variance rescaling should be performed if one considers a horizon that is different from 1 year. We start with a comparison of fBm paths having a Hurst parameter

$B_1^{H^{\prime}}$

are both standard normal variables. Thus, the constraint that both samples have the same one-year marginal distribution (see the introduction of Section 3.2) is satisfied. Note that a variance rescaling should be performed if one considers a horizon that is different from 1 year. We start with a comparison of fBm paths having a Hurst parameter

![]() $H=0.1$

with fBm paths having a Hurst parameter

$H=0.1$

with fBm paths having a Hurst parameter

![]() $H=0.2$

using the lead-lag transformation. In Figure 2, we plot the statistical power as a function of the truncation order R for different values of the first sample size m (we recall that the size of the second sample is fixed to 1000). We observe that even with small sample sizes, we already obtain a power close to 1 at order 2. Note that the power does not increase with the order but decreases at odd orders when the sample size is smaller than 50. This can be explained by the fact that the odd-order terms of the signature of the lead-lagged fBm are linear combinations of monomials in

$H=0.2$

using the lead-lag transformation. In Figure 2, we plot the statistical power as a function of the truncation order R for different values of the first sample size m (we recall that the size of the second sample is fixed to 1000). We observe that even with small sample sizes, we already obtain a power close to 1 at order 2. Note that the power does not increase with the order but decreases at odd orders when the sample size is smaller than 50. This can be explained by the fact that the odd-order terms of the signature of the lead-lagged fBm are linear combinations of monomials in

![]() $B_{t_1}^H,\dots,B^H_{t_{N}}$

that are of odd degree. Since

$B_{t_1}^H,\dots,B^H_{t_{N}}$

that are of odd degree. Since

![]() $(B_t^H)$

is a centred Gaussian process, the expectation of these terms are zero no matter the value of H. As a consequence, the contribution of odd-order terms of the signature to the MMD is the same under

$(B_t^H)$

is a centred Gaussian process, the expectation of these terms are zero no matter the value of H. As a consequence, the contribution of odd-order terms of the signature to the MMD is the same under

![]() $H_0$

and under

$H_0$

and under

![]() $H_1$

. If we conduct the same experiment for

$H_1$

. If we conduct the same experiment for

![]() $H=0.1$

versus

$H=0.1$

versus

![]() $H^{\prime}=0.5$

(corresponding to the standard Brownian motion), we obtain cumulated powers greater than 99% for all tested orders and sample sizes (even

$H^{\prime}=0.5$

(corresponding to the standard Brownian motion), we obtain cumulated powers greater than 99% for all tested orders and sample sizes (even

![]() $m=10$

) even if the power of the odd orders is small (below 45%). This is very promising as it shows that the signature-based validation allows distinguishing very accurately rough fBm paths (with a Hurst parameter in the range of those estimated by Gatheral et al., Reference Gatheral, Jaisson and Rosenbaum2018) from standard Brownian motion paths even with small sample sizes.

$m=10$

) even if the power of the odd orders is small (below 45%). This is very promising as it shows that the signature-based validation allows distinguishing very accurately rough fBm paths (with a Hurst parameter in the range of those estimated by Gatheral et al., Reference Gatheral, Jaisson and Rosenbaum2018) from standard Brownian motion paths even with small sample sizes.

Note that in these numerical experiments, we have not used any tensor normalization while it is a key ingredient in Theorem 2.3. This is motivated by the fact that the power is much worse when we use Chevyrev and Oberhauser’s normalization (see Example 4 in Chevyrev and Oberhauser, Reference Chevyrev and Oberhauser2022) as one can see on Figure 3(a). These lower powers can be understood as a consequence of the fact that the normalization is specific to each path. So the normalization can bring the distribution of

![]() $S(\hat{B}^{H^{\prime}})$

closer to the one of

$S(\hat{B}^{H^{\prime}})$

closer to the one of

![]() $S(\hat{B}^H)$

than without normalization so that it is harder to distinguish them at fixed sample size. Moreover, if the normalization constant

$S(\hat{B}^H)$

than without normalization so that it is harder to distinguish them at fixed sample size. Moreover, if the normalization constant

![]() $\lambda$

is smaller than 1 (which we observe numerically), the high-order terms of the signature become close to zero and their contribution to the MMD is not material. For

$\lambda$

is smaller than 1 (which we observe numerically), the high-order terms of the signature become close to zero and their contribution to the MMD is not material. For

![]() $H=0.1$

versus

$H=0.1$

versus

![]() $H^{\prime}=0.5$

, we observed that the powers remain very close to 100%.

$H^{\prime}=0.5$

, we observed that the powers remain very close to 100%.