“Theories permit consciousness to ‘jump over its own shadow’, to leave behind the given, to represent the transcendent, yet, as is self-evident, only in symbols.”

Introduction

After presenting in Chapter 8 a sketch of the coevolution of human cognition, socioenvironmental interaction, and organizational evolution, we need to look more closely and critically at the concepts and ideas that underpin this view. That raises three fundamental questions – “What do I consider information?,” “What is information processing?,” and “How is information transmitted in societies?” Those questions are the topic of this chapter, which, in order to ground the book solidly, is a little more technical than earlier chapters.

It is the main thesis of this book that societies can profitably be seen as an example of self-organizing human communications structures, whether we are talking about urban societies or other forms of human social organization, such as small band societies or hierarchical tribes. The differences are merely organizational ones, owing to the need to deal with larger information loads and energy flows as human problem-solving generates more knowledge, and the concomitant increase in the population requires more food and other resources.

Although the book’s fundamental theses are (1) that the structure of social systems is due to the particularities of human information-processing, and (2) that the best way to look at social systems is from a dissipative flow structure paradigm, it differs in its use of the two core concepts “information” and “flow structure” from earlier studies.

The difference with respect to the information approach presented by Webber (1977), for example, is that I view societal systems as open systems, so that neither the statistical–mechanical concept of entropy nor Shannon’s concept of relative entropy can be used, as they only apply to closed systems in which entropy does not dissipate. As Chapman rightly argues (1970), the existence of towns is proof that human systems go against the entropy law, which is in essence only usable as a measure of the decay of structure.1 That approach therefore seems of little use.

The difference with earlier applications of the “flow structure” approach, such as P. M. Allen’s (Allen & Sanglier 1979; Allen & Engelen 1985) or Haag and Weidlich’s (1984, 1986), is that I wish to formulate a theory of the origins of societies that forces us to forego a model of social dynamics formulated in terms of a social theory (Allen) or even migration (Haag & Weidlich), as these make assumptions that we cannot validate for the genesis of societal systems. Just as Day and Walter (1989), in their attempt to model long-term economic trends (in the production of energy and matter), must revert to population, we have to revert to information and organization if we wish to model long-term trends in patterning (Lane et al. 2009).

Social Systems as Dissipative Structures

I therefore view human institutions very abstractly as self-organizing webs of channels through which matter, energy, and information flow, and model the dynamics of cultural systems as if they are similar to those of dissipative flow structures. As this conception is fundamental to the argument of this book, I will present it here in a more elaborate form.

A simple model of a dissipative structure is that of an autocatalytic chemical reaction in an open system that produces, say, two colored reagents in a liquid that is initially the color of the four substances combined.2 At equilibrium, there is no spatial or temporal structure. When the reaction is pushed away from equilibrium, a spatiotemporal configuration of contrasting colors is generated in the liquid. As it is difficult to represent this in a single picture, I refer the reader to a short YouTube video that explains both the history and the dynamics of this so-called Belousov-Zhabotinsky reaction: www.youtube.com/watch?v=nEncoHs6ads.

Structuring continues over relatively long time-spans, which implies that during that period the system is capable of overcoming, at least locally, its tendency toward remixing the colors (in technical terms, it dissipates entropy). The structure, as well as the reaction rate and the dissipation rate, depends on the precise history of instabilities that have occurred.
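The contrast between the structureless state near equilibrium and sustained structuring away from it can be sketched numerically. The sketch below uses the Brusselator, a standard textbook model of an autocatalytic reaction in an open system; it is a stand-in chosen for simplicity, not a model of the Belousov-Zhabotinsky chemistry itself. It covers temporal structure only, in a well-stirred liquid, and all function names and parameter values are assumptions made for illustration.

```python
# Illustrative sketch only: the Brusselator is a textbook stand-in for an
# autocatalytic reaction in an open system, not the Belousov-Zhabotinsky
# chemistry. All names and parameter values here are assumptions.

def brusselator_rates(x, y, a, b):
    """Rates of change of the two intermediate concentrations x and y."""
    dx = a + x * x * y - (b + 1.0) * x  # inflow + autocatalytic step - removal
    dy = b * x - x * x * y              # conversion - autocatalytic step
    return dx, dy


def simulate(a, b, t_end=100.0, dt=0.01):
    """Integrate with classical fourth-order Runge-Kutta; return the x series."""
    x, y = a + 0.1, b / a  # start slightly off the steady state (a, b/a)
    xs = []
    for _ in range(round(t_end / dt)):
        k1x, k1y = brusselator_rates(x, y, a, b)
        k2x, k2y = brusselator_rates(x + 0.5 * dt * k1x, y + 0.5 * dt * k1y, a, b)
        k3x, k3y = brusselator_rates(x + 0.5 * dt * k2x, y + 0.5 * dt * k2y, a, b)
        k4x, k4y = brusselator_rates(x + dt * k3x, y + dt * k3y, a, b)
        x += dt * (k1x + 2.0 * k2x + 2.0 * k3x + k4x) / 6.0
        y += dt * (k1y + 2.0 * k2y + 2.0 * k3y + k4y) / 6.0
        xs.append(x)
    return xs


def late_swing(xs):
    """Peak-to-trough swing of x over the second half of the run."""
    tail = xs[len(xs) // 2:]
    return max(tail) - min(tail)


# With a = 1 the steady state loses stability once b exceeds 1 + a**2 = 2.
near_equilibrium = late_swing(simulate(a=1.0, b=1.5))      # disturbance dies out
far_from_equilibrium = late_swing(simulate(a=1.0, b=3.0))  # oscillation persists

print("swing below threshold:", near_equilibrium)
print("swing above threshold:", far_from_equilibrium)
```

Below the threshold the small initial disturbance decays and the concentrations settle; above it the concentrations keep oscillating for as long as the inflow is maintained, which is the temporal counterpart of the color patterns described above.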

The applicability of the dissipative structure idea to human institutions, then, hinges on our ability to answer each of the following two questions positively:

Is there at least one equivalent to the autocatalytic reaction just presented that can be held responsible for coherent structuring in human systems?

Is the system an open system, i.e., is it in free exchange of matter, energy, and information with its environment, as is the case with other living systems?

It seems to me that human learning has many properties that permit us to view it as an autocatalytic reaction between observation and knowledge creation. The observation that social systems came into existence and continue to expand, rather than to decay, seems to point to an affirmative answer to the second question.

This chapter is devoted to exploring these questions further. First, I will deal with the individual human being, and consider the learning process as a dynamic interaction between knowledge, information, and observations. The second part deals with the dynamic interaction between the individual and the group, and considers shared knowledge and communication. Finally, I will consider system boundaries and dissipation.

Perception, Cognition, and Learning

Uninterrupted feedback between perception, cognition, and learning is a fundamental characteristic of any human activity. That interaction serves to reduce the apparent chaos of an uncharted environment to manageable proportions. One might visualize the world around us as containing an infinite number of phenomena that each have a potentially infinite number of dimensions along which they can be perceived. In order to give meaning to this chaos (χάος (Greek): the infinity that feeds creation), human beings seem to select certain dimensions of perception (the signal) by suppressing perception in many of the other potentially infinite dimensions of variability, relegating these to the status of “noise.”

On the basis of experimental psychology, Tversky and his associates (Tversky 1977; Tversky & Gati 1978; Kahneman & Tversky 1982) studied pattern recognition and category formation in the human mind. They concluded that:

Similarity and dissimilarity should not be taken as absolutes.

Categorization (judging in which class a phenomenon belongs) occurs by comparing the subject with a referent. Generally, the subject receives more attention than the referent.

Judgment is directly constrained by a context (the other subjects or other referents surrounding the one under consideration).

Judgments of similarity or of dissimilarity are also constrained by the aims of the comparison. For example, similar odds may be judged favorably or unfavorably depending on whether one is told that one may gain or lose in making the bet.

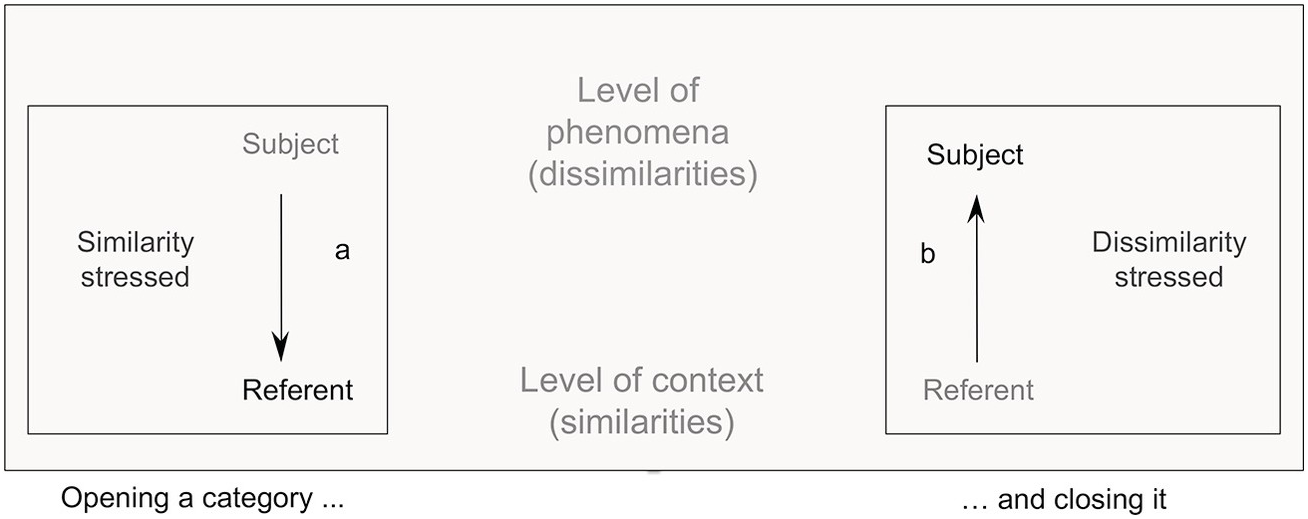

From these observations, one may derive the following model of perception:

1. Perception is based on comparison of patterns perceived. A first comparison always takes place outside any applicable context (the dimensions in which the phenomena occur are unknown), so that there is no referent and no specific aim. Thus, there is no specific bias toward similarity or dissimilarity. If there is any bias at all, it is either due to intuition or to what people have learned on past occasions, which cannot necessarily be mapped onto the case at hand.

2. Once an initial comparison has led to the establishment of a referent (a relevant context or patterning of similarity and dissimilarity), this context is tested against other phenomena to establish its validity. In such testing, the established pattern is the subject and the phenomena are the referents. There is therefore (following Tversky’s second statement) a distinct bias in favor of similarity.

3. Once the context is firmly established and no longer scrutinized, new phenomena are subjects in further comparisons, and the context is the referent. Thus, the comparisons are biased toward the individuality of the phenomena and toward dissimilarity.

4. Once a large number of phenomena have been judged in this way, the initial bias is neutralized, the context is no longer considered relevant at all, and the cycle starts again, so that further comparisons lead to establishing another context.

5. Ultimately, this process leads to the grouping of a large set of phenomena in a number of categories at the same level, which are generally mutually exclusive (establishing dimensions and categories along them). At a certain point, the number of categories is so large that the same comparative process begins again, at a higher level, which treats the groups as phenomena and results in higher level generalizations.

Thus, perception and cognition may be seen as a feedback cycle between the concepts (categorizations) thus generated, their material manifestations, and the (transformed) concepts that derive from and/or are constrained by these material manifestations. This cycle is illustrated in Figure 9.1.

Figure 9.1 The dynamics of category formation as described by Tversky and Gati (1978). For an explanation, see the text.

This learning process is as endless as it is continuous, and could also be seen as an interaction between knowledge, the formalized set of substantive and relational categorizations that make up the cognitive system of an individual, and information, the messages that derive their raison d’être and their meaning from the fact that they trigger responses from these categorizations, yet never fit any of them exactly. In that sense, information can be seen as potential meaning.

Because the chances that messages exactly fit any preexisting categories are infinitesimally small, they continuously challenge and reshape knowledge. In this sense, then, information is the variation that creates the (flow) structure of knowledge. Paraphrasing Rosen, one might say that information is anything that makes a difference (or answers a question).3 But any information also poses new questions.

Communication: The Spread of Knowledge

Because humans are social beings they share, and therefore necessarily exchange, various commodities. This is as fundamental an aspect of human life as perception, cognition, and learning.

Some of these commodities are at first sight entirely material: food, raw materials, artifacts, statuettes, etc. Other exchanges seem predominantly a question of energy: collaboration in the hunt, in tilling the soil, or in building a house, but also slavery, wage labor, etc. Yet a third category primarily seems to concern information: gossip, opinions, and various other oral exchanges, but also their written counterpart: clay tablets, letters, and what have you, including electronic messages.

But in actual fact, the exchange of all commodities involves aspects of matter, energy, and information. Thus, there is the knowledge of where to find raw materials or foodstuffs and the human energy expended in extracting or producing them; the knowledge and energy needed to produce artifacts or statuettes, which are reflected in the final product; the knowledge of the debt incurred in asking someone’s help, which is exchanged against that help, only to be drawn upon or reimbursed later; the matter transformed with that help; the energy with which the words are spoken; the matter to which symbols are entrusted in order to be transported. The examples are literally infinite.

Knowledge determines the exact nature and form of all commodities that are selected and/or produced by human beings, whether exchanged or not. It literally in-forms substance. Or as Roy Rappaport used to say, “Creation is the information of substance and the substantiation of form.”4 That is easy to see for the knowledge that generates specific sequences of actions with specific goals, such as in the manufacture of artifacts. But it also applies to the simple selection of materials, whether foodstuffs or raw materials of any other kind: transformation and selection by human beings are knowledge-based and consequently impart information. Hence all exchanges between human beings have material, energetic, and information aspects. But as we saw in Chapter 8, matter, energy, and information are not exchanged in the same way, nor do they affect the structure of the system in the same way.5

At the level we are talking about, matter can be passed directly from one individual to the next, a transaction in which one individual loses what the other gains. Human energy cannot thus be handed over, as the capacity to expend it is inalienable from the living being that does the expending. Clearly, fuels, animals, and slaves might be thought of as energy that is handed over, but whenever this occurs they are handed over as matter. In an exchange, energy can only be harnessed, so it is expended in favor of someone. Knowledge cannot be handed over either: an individual can only accumulate it by processing information. But knowledge can be used to generate information that may, more or less effectively, be communicated and be used by another individual to accumulate highly similar knowledge. As a result of that process, individuals may share knowledge. In this context, clearly, knowledge is a stock that is inherent in the information-processing system, while information is a flow through that information-processing system.

It is therefore not the energy and matter aspects of flows through a society that are responsible for that society’s coherence, but the knowledge that controls the exchange of information, energy, and matter. The individual participants in a society or other human institution are (and remain) part of it because they know how that institution operates, and can use that knowledge to meet their needs and desires. I emphasize this point because often, in archaeology and in geography as well as ecology and economics, the flow of energy or matter is what is deemed to integrate a society.

If I use this argument to assert that the flow of information is responsible for the structural form of human societies, this is not to deny that the availability and location of matter and energy play a part in the survival of human systems. Rather, I would like to suggest that material and energetic constraints are in principle of a temporary nature and that, given enough tension between the organizational dynamics of a human institution and its resource base, people will in due course resolve this tension by creating novel means to exploit the resource base differently (through invention of new techniques, choice of other resources, or of other locations, for example).

It would seem therefore that while on shorter timescales the interaction between the different ways in which matter, energy, and information spread through a system counts, the long-term dynamics of human institutions are relatively independent of energy and matter, and are ruled by the dynamics of learning, innovation, and communication. These dynamics seem to be responsible for social interaction and societal patterning, and allow people to realize those material forms for which there is a coincidence between two windows of opportunity, in the ideal and the material/energetic realms respectively.

As I am mainly concerned with the very long term, my primary aim in this chapter is to consider the transmission of information in human societies, that is, the syntactic aspect of communication. Scholars in the information sciences have expended considerable effort in presenting a quantifiable syntactic theory of information.6 Although my immediate aim does not extend to quantification, some of the conceptualizations behind these approaches might serve to focus the mind.

The core idea in information theory is that information can be seen as a reduction of uncertainty or elimination of possibilities:

When our ignorance or uncertainty about some state of affairs is reduced by an act (such as an observation, reading or receiving a message), the act may be viewed as a source of information pertaining to the state of affairs under consideration. […] A reduction of uncertainty by an act is accomplished only when some options considered possible prior to the act are eliminated by it. […] The amount of information obtained by the act may then be measured by the difference in uncertainty before and after the act.

There is, however, a clear limitation to the applicability of information theoretical approaches. Their success in quantifying and generalizing the concepts of uncertainty and information has been achieved by limiting their applicability in one important sense: these approaches view information strictly in terms of ignorance or uncertainty reduction within a given syntactic and semantic framework, which is assumed to be fixed in each particular application (Klir & Folger 1988, 189). In essence, formal Information Theory applies to closed systems in which all probabilities are known. That is why information as a quantitative concept can be said to equal the opposite of uncertainty, and increase in entropy to imply loss of information and vice versa.
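Within such a fixed framework, the measure is straightforward to compute. The following is a minimal sketch, assuming a closed set of options with known probabilities and taking Shannon entropy in bits as the measure of uncertainty; the function names and the eight-option example are illustrative assumptions, not taken from the works cited.

```python
import math


def entropy_bits(probabilities):
    """Shannon entropy, in bits, of a distribution over a closed set of options."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


def information_gained(before, after):
    """Information obtained by an act: uncertainty before minus uncertainty after."""
    return entropy_bits(before) - entropy_bits(after)


# Hypothetical case: eight equally likely options before the act;
# the act eliminates six of them, leaving two equally likely options.
before = [1 / 8] * 8
after = [1 / 2] * 2

gained = information_gained(before, after)
print(gained)  # 2.0 (uncertainty falls from 3 bits to 1 bit)
```

The calculation presupposes that the full list of options and their probabilities is known in advance, which is precisely the closed-system condition noted above.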

In archaeology and history, we deal with open (societal) systems, and we have incomplete knowledge of the systems we study. It seems therefore that one could never successfully apply this kind of quantifiable information concept to archaeology or history, except when studying a defined channel of communication that functions within a defined syntactic and semantic framework, i.e., in a situation where symbols and meanings are known and do not change.

Nevertheless, at least one important conclusion of information theory seems to be relevant: the idea that (within a given unchanging syntactic and semantic framework) communication channels have a limited transmission capacity per unit time, and that as long as the rate at which information is inserted into the channel does not exceed its capacity, it is possible to code the information in such a way that it will reach the receiver with arbitrarily high fidelity.8 By implication, if the amount of information that needs to be transmitted through channels increases, there comes a point where a system has to improve channel capacity, introduce other channels, or alter the semantic relationship between knowledge and information.9
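The notion of a capacity limit can be made concrete with the simplest textbook case. The sketch below assumes a binary symmetric channel, in which each transmitted bit is flipped with a fixed probability; its capacity is one minus the binary entropy of that probability, in bits per channel use. The choice of channel, the function names, and the numerical values are assumptions made for illustration only.

```python
import math


def binary_entropy(p):
    """Entropy in bits of a two-outcome event with probabilities p and 1 - p."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1.0 - p) * math.log2(1.0 - p)


def bsc_capacity(error_probability):
    """Capacity, in bits per channel use, of a binary symmetric channel."""
    return 1.0 - binary_entropy(error_probability)


def reliable_coding_possible(rate, error_probability):
    """True if the information rate lies below the channel's capacity.

    Shannon's coding theorem guarantees arbitrarily high fidelity only for
    rates below capacity, so the comparison here is strict.
    """
    return rate < bsc_capacity(error_probability)


# Hypothetical channel that flips about one bit in nine (capacity near 0.5).
noise = 0.11
print("capacity:", bsc_capacity(noise))
print("rate 0.4 sustainable:", reliable_coding_possible(0.4, noise))
print("rate 0.6 sustainable:", reliable_coding_possible(0.6, noise))
```

Once the required rate rises above the capacity, no coding scheme restores fidelity on that channel; the remaining options are the ones just named: a better channel, additional channels, or a different encoding of knowledge into information.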

Social Systems as Open Systems

Next, we must answer the second of the two questions asked earlier in this chapter: “Are societal systems in free exchange of matter, energy and information with their environment?” For matter and energy, the answer is evidently positive: humanity can only survive because it takes food, fuel, and other forms of matter and energy from its nonhuman environment, and it transfers much of these commodities back into the external environment as waste, heat, etc.

But the exchange of information with the system’s environment may need some further elaboration. Information, as we have used it here, is a relational concept that links certain observations in the “real” realm of matter and energy with a pattern in the realm of ideas in the brain. I have argued above that humans generate knowledge through perceptual observation and cognitive choice, in essence therefore within the human brain, and at the group level within the societal system. Knowledge does not transcend system boundaries directly. Yet perception and cognition distill knowledge from the observation of phenomena outside the human/societal system. Those phenomena are thus, as it were, potential information to the system. We must conclude that knowledge inside the system is increased by transferring such potential information into the system from the outside. Among transfers in the opposite direction, there is first the direct loss of knowledge through loss of individual or collective memory or the death of individuals. But information is also taken out of the human system when words are blown away, writings destroyed, and artifacts trampled so that they return to dust. And even when the information stored in artifacts is not destroyed, it ceases to function as such as soon as it is taken out of its particular knowledge context, for example because the latter changes as a result of further information processing.

Transitions in Social Systems as Dissipative Structures

An increase in the information that is communicated among the members of a group would seem to have two consequences. At the level of the individual, it would decrease uncertainty by changing the relationship between the syntactic and semantic aspects of human information processing, increasing the level of abstraction (Dretske 1981). At the societal level it would increase participation and coherence, so that it may be said that the degree of organization increases and entropy is dissipated.

In an archaeological context, the latter is the more visible, for example when we look at the way in which a cultural system manages to harness an ever-increasing space, or the same space ever more intensively, by destroying or appropriating its natural resources in a process of (possibly slow) social incorporation (see Ingold 1987).

A simple example is that of “slash-and-burn” agriculture. Bakels (1978), for example, has shown in detail how the early Neolithic inhabitants of Central and Northwestern Europe (5000 BCE), who are known as the Danubians, exhausted an ever-widening area of their surroundings in procuring for themselves the necessary foodstuffs and raw materials. The fact that this happened rather rapidly is certainly one of the factors responsible for the rapid spread of these peoples (see Ammerman & Cavalli-Sforza 1973).

I have argued (1987, 1990) how in the Bronze and Iron Ages (1200 BCE–CE 250), the local population of the wetlands near the Dutch coast repeatedly transformed an untouched, extremely varied, and rich environment by selective use of the resources in it, resulting in a more homogeneous and poorer environment. As soon as a certain threshold of structuring was reached, the inhabitants had to leave an area and move to an adjacent one.

In both these cases, the information (about nature) that was contained in an area, that is, those features of it that triggered a response in the knowledge structures of the population, was used for its exploitation up to the moment that the “known environment” could no longer sustain the population. In the process, the symbiosis between the population and its natural environment changed both, so that eventually the symbiosis was no longer possible, at least with the same knowledge. One example that shows the importance of the relationship between available knowledge and survival in the environment emerges when one compares the knowledge available to the Vikings in Greenland and the Inuit in the same area: whereas the stock of knowledge available to the Vikings allowed them to survive the cooling of the climate after c. 1100 only marginally, the knowledge available to the Inuit enabled them to survive more easily up to the present. This dynamic is further detailed in Chapter 13.

Similar things occur in the relationship between different societal groups. A city such as Uruk (c. 4000 BCE) seems to have slowly “emptied out” the landscape in a wide perimeter around it, probably by absorbing the population of the surrounding villages (Johnson 1975). When it could not do so any more, probably for logistical reasons, various groups went off to found faraway colonies that fulfilled the same function locally and that remained linked to the heartland by flows of commercial and other contacts, often along the rivers.10 The same was customary among the Greeks in the classical period (sixth to fifth century BCE). As soon as there was a conflict in a community (due to errors in communication or differences in interpretation, whether deliberate or not), groups of (usually young) dissidents were sent off to other parts of the Aegean to colonize new lands. These lands were then to some extent integrated into the Greek cultural sphere. That process is no different from the one that allowed the European nations in the sixteenth to nineteenth centuries to establish colonies in large parts of the world.

As we have seen in the last chapter, the Roman Empire slowly spread over much of the Mediterranean basin, introducing specific forms of knowledge and organization (“Roman Culture”), aligning minds. In so doing it was able to avail itself of more and more foodstuffs, raw materials, and raw energy, among other things in the form of treasure and slaves. As the rate of expansion increased, the process of acculturation outside its frontiers – which was initially, during the Republic, more rapid than the expansion – was eventually (in the first centuries CE) “overtaken” by that expansion. That brought expansion to a standstill, and led to a loss of integration in the Empire (and eventually its demise).

In each of these cases, structuring was maintained as long as expansion was possible in one way or another. Expansion keeps trouble away, just as the chemical reaction that I presented as an example of a dissipative structure could only maintain its structuring by exporting the inherent tendency of the liquid to mix the colors. It is this aspect of societal systems that seems to me to indicate that they can profitably be considered dissipative information-flow structures.

One consequence is that the very existence of any cultural entity depends on its ability to innovate and keep innovating at such a rate that, continuously, new structuring is created somewhere within it and spreads to other parts (and beyond) so as to keep entropy at bay (see Allen 1985; van der Leeuw 1987, 1989, 1990; McGlade & McGlade 1989). From the very moment that innovation no longer keeps pace with expansion, the entity involved is doomed. As we have seen in the case of the Bronze Age settlement of the western Netherlands, that moment is an inherent part of the cognitive dynamics responsible for the existence of the entity concerned. For the Roman Empire, a similar case can easily be made based on the exponential increase in its size, just as for the other examples given. It might be concluded that, seen from this perspective, the existence of all cultural phenomena is due to a combination of positive feedback, negative feedback, noise, and time lags between innovation and dissipation.

Conclusion

The last few pages have tried to argue the case for considering social phenomena as dissipative flow structures, and have outlined some critical elements of such a conceptualization. To begin with, I have tried to find my way through the confusion underlying the concept of information, and to outline my use of the word. Notably, I have pointed to the cognitive feedback between information and knowledge as the autocatalytic reaction underlying the development of the patterning that individual humans impose on their social and natural environment. I have also outlined why, in my opinion, all conceivable kinds of exchange between people have an information-exchange aspect, and why it is the exchange of information that seems to be responsible for the cohesion of social institutions at all levels. To introduce the concept of channel capacity within a given, fixed semantic and syntactic framework, I have drawn upon Shannonian information theory, making it very clear that as this theory applies to closed systems it is not otherwise compatible with the general approach I have chosen.

Shifting my focus somewhat, I have then argued the case for modeling human institutions as open systems and have considered whether such systems do indeed freely transfer information in both directions, inward and outward. Finally, I have briefly presented a few of the many available historical and archaeological cases that point to the fact that social institutions dissipate entropy. I have, however, refrained from trying to present a particular theory of entropy dissipation in human systems.