Introduction

Now let me start outlining my argument in earnest, beginning with a 30,000 ft historical perspective that illuminates some of the intellectual reasons for the current dilemma and places them in the context of wider societal and intellectual changes over the last few centuries, and particularly the last century or so. This historical perspective may seem at first sight to be a diversion, and not necessarily an easy one to read for anyone other than historians of science, but it is fundamental to understanding the origins of many aspects of the current western perspective on sustainability that is the main topic of the book.

Beginning with the transition from the early medieval “vitalist” to the dual Renaissance perspective, I will here show how over the last six centuries a perspective linked to what was originally a human cultural category, “nature,” has come to dominate our scientific world view to the point that we are now investigating human functioning (for example of the brain) as a “natural” phenomenon, and have to an important extent lost sight of human behavior as something intrinsically human. That process has also permeated much of our western thinking beyond the realms of science, scholarship, and academia, and anchors our perspective on climate and environmental change.

In doing so, I have focused on the traditional, academic sciences as that is the domain in which I work and to which I hope this book may contribute. As already mentioned in Chapter 2, over and beyond these sciences, there is a wide range of applied sciences where much of what I am arguing here is already current practice, in the sense that their role is to relate “pure” science to the practicalities of everyday life, and that they combine the input of many disciplines.

The last sixty to a hundred years have seen very important and rapid advances in many scientific disciplines. In the natural sciences, we have seen increases in our knowledge about subatomic particles by means of larger and larger accelerators, but also the development of nuclear energy. Astronomy and planetary science have rapidly advanced thanks to the construction of large numbers of (radio-) telescopes and satellites, in the process giving us the Global Positioning System. The discovery of the double helix and the subsequent mapping of genetic structures have transformed biology, medicine, and our ideas about biological evolution. In materials science, the discovery of unprecedented properties of silicon, and more recently graphene and the nanomaterials, has opened up huge new areas of research. All these discoveries, and many more, have together completely changed our lives, changing what we eat (agro-industry; packaged and frozen foods; the hamburger), how we move around and how far we can go (the jet airplane), what we do in our spare time (the television, computer games), who we consider our friends (Facebook, Twitter), and so forth. But no scientific discoveries have transformed society as much as those that have led to the computer, informatics, the Internet, and – in general – the information sciences.

In the process, science itself has changed. What began in the 1700s as a voluntary, unregulated, and individual inquiry into natural phenomena practiced by the upper middle classes and nobility, funded by their own resources, has developed over the last two and a half centuries into a worldwide community of millions of scientists who are subject to stringent rules (peer review; university administrative structures; promotion and tenure proceedings), and are paid by governments and industries on the premise that their activities will lead to inventions and discoveries that improve our lives, satisfy our curiosities, and keep our economies humming. In particular, after the discovery of many novel tools during World War II (e.g., radar, nuclear energy, jet engines), for some thirty years (1950–1980) the general population’s respect for scientists was at its zenith. Scientists (natural scientists in particular) were counted upon to perform miracles, guide governments, provide industry with the tools to perform ever better, and invent more and more ways to make life more comfortable and less wearing. But somewhere in the 1980s and 1990s that trust in science began to wane, and an increasing proportion of the population in western countries became more critical of science.

That shift in the perception of the role of science is of direct relevance to us, and to the topic of this book, because the sustainability challenges facing us now will require an all-out scientific effort to find and to apply solutions, and for that effort to succeed scientists need to regain the trust of society at large. Hence, I want to use this chapter to delve a little deeper into the history of the sciences, laying bare some of the dynamics that have shaped the successes, the directions, and the challenges of contemporary scientific research. In doing so, I will of course not introduce novel ideas, but juxtapose ideas from historians of science in a way that suits my main purpose: to put into perspective the ways in which our scientific approaches have been shaped by, and have come to shape, our world, and to point to some of the reasons why a fundamentally different approach is needed.

The Great Wall of Dualism

Let us first consider the word “nature.” Natura is the Latin equivalent of the classical Greek word φύσις which we encounter in the words physics, physiology, physician, and many other words in the European languages. Lewis (1964) argues that already in classical Greek the word conveys an ambiguity, as it can mean “that which is real” (as opposed to fictional) and thus “the way things should be” (in accordance with nature), as well as “nonhuman,” relating to the world of nonhuman beings. The ambiguity clearly expresses the difficulties in locating human beings on the Greek mental map of earthly phenomena. Human beings must under certain conditions be considered part of nature, while in other circumstances it is preferable to exclude them from nature. The duality is also an essential step in the objectification of nature as it allows one to think of nature as subject to its own dynamics, its own laws, its own behavior, distinct from those that govern the dealings of people. Such objectification is a conditio sine qua non for any attempt to reduce perceived natural risks, indeed for the description of any presumed interaction between people and that which surrounds them.

In two very interesting books, which I summarize here much as I did in my ARCHAEOMEDES publication (1998b), Evernden (1992) describes some of the transformations this conception underwent, beginning in the early Middle Ages. At that time, a single “vitalist” worldview pertained to all aspects of the world, whether mineral, vegetal, animal, or human. All these realms were seen as inhabited by living beings of different kinds which had close links between them and with the realm of the divine and supernatural. In effect, all that happened in these realms was seen as an expression of a divine configuration and, in this respect, there was no difference between human beings and any other aspect of nature.

The Renaissance, following on the heels of the major plague epidemics of the fourteenth century (which in some urban locations reduced population numbers by 50% or more), is the next major step. Historians and art historians have long linked the Black Death and the Renaissance in their interpretations (e.g., Gombrich 1961, 1971; Hay 1966), focusing for example on the contrast between the danse macabre and the subsequent explosion in the arts, but also on the introduction of the concept of the individual (as manifest in the first full-face portrait painting, of King Richard II of England), the emergence of the signature as a means of identification in commerce (see Cassirer 1972), and the first attempts to measure time with mechanical clocks. Evernden cites the groundbreaking work of Jonas (1982) in according fundamental importance to this period in which a shift occurs from a cyclical perspective in which life and death are both part of a never-ending cycle, to a linear one in which death is the rule, life the anomaly. This opened the door to the notion of an inanimate universe, nature as lifeless “behaving matter,” a notion that has grown ever since in a movement that is closely related to the emergence of mechanistic physics (the so-called Newtonian paradigm) and the emerging separation between science and religion.

It is Evernden’s contention that this growth was made possible by what he calls “the great wall of dualism” (1992, 90), which protected our conception of humanity from the lifelessness of the inanimate world by maintaining that (nonhuman) nature was subject to fundamentally different laws than were human beings. One could thus concern oneself with the study of the former without attacking the human sense of identity, and so reposition human beings with respect to their nonhuman surroundings.

Thus, in the centuries following the Renaissance, Copernicus could introduce the idea that humans are not living on the central body of the universe, but on one among a series of more or less identical planets turning around the sun. Human life thus became an epiphenomenon, a mere anomaly on one planet out of (eventually, centuries later) millions assumed to exist in the universe.

Of direct importance for us here is the push for objectivity in the study of nature, linked to the idea that because human beings are outside the natural realm, their observations and actions on nature would essentially distort its dynamics and our perception of them. As expressed by Shapin and Schaffer: “the solidity and permanence of matters of fact reside in the absence of human agency in their coming to be” (1985, 17–18). Evidently, this had consequences for the period’s conception of knowledge, which shifted from one in which knowing is achieved through identification with the object of study to one in which knowledge is in the mind, independent of the object, and achieved through the critical observation and study of that object.

Evernden illustrates, by means of examples from Italian and Dutch painting, how the first stage of this slow change occurred differently in different parts of Europe (1992, 78–79). The stereotyping of Italian landscape painting seems to indicate that, here, nature is assumed to be a coherent system, whereas in Dutch landscape painting the attention to detail and realism seems to indicate that nature is made up of details which project themselves on the retina. It is as if in the Italian case the depiction of nature derives top down, from a particular overall conception, whereas in the northern European examples, nature is depicted bottom up, as an ensemble of observed details. In a similar line of argument, Alpers suggests (1983, xxv) that Dutch society was oriented toward the visual and material, Italian society toward the verbal and conceptual. However that may be, it is clear that from this period onwards there emerges a contrast between developments in northwestern and in southern Europe. Its most eminent manifestation is the growth of empiricism (ultimately followed by the Industrial Revolution) in Britain and Holland, in opposition to the Cartesian rationalist position that dominated in France and Italy.

It is of importance to our further discussions to emphasize that from this moment on we also observe a growing separation between the natural sciences and the humanities that is the inevitable corollary of the separation between humanity and nature. Humanity is a sphere in which values, thought, spirituality and novelty dominate the scene – contrasting with the mechanics which are thought to dominate in the natural sphere. Until recently, most educational institutions in continental Europe and the Anglo-Saxon world have seen it as their task to educate students in both spheres, but it is my impression that that goal is now in many institutions suffering under the increased pressure on students to reduce study time, and focus on their future employment.

Rationalism and Empiricism

The next stage in the development of our western intellectual tradition that shaped our present scientific capabilities and challenges is the transition to the eighteenth century, and in particular the emergence of the intellectual movement usually referred to as the Enlightenment, in which the above differences between Rationalism and Empiricism solidified. It is crucial because it shaped the scientific articulation between theory and observation. That articulation between the realm of ideas and that of observations led to two very different approaches to science that persist, mutatis mutandis, to this day. The difference is best summarized by contrasting the approach of Descartes in France with that of Bacon in Britain.

Descartes’ famous dictum “Cogito ergo sum” (“I think therefore I am”) reflects a movement in which the importance of thought and reason is emphasized over that of experience. Cogitation leads one to adopt a conception of one’s surroundings, a construct into which experiences can be fitted. If at first sight these experiences do not fit, one has to look at them in different ways until they may confirm, and maybe nuance, the conception one has adopted. Cassirer gives the example of another rationalist, Leonardo da Vinci, for whom “a dualism between the abstract and the concrete, between ‘reason’ and ‘experience’ can no longer exist” (Cassirer 1972, 154). Both these cases lead to an approach that makes experiences fit a conception. At the cognitive nexus between humans and the world “out there,” what humans perceive is determined by their worldview rather than by the phenomena they observe. This worldview is primarily the result of reflection and cogitation rather than observation.

In Britain and Holland, on the other hand, there seems to be an aversion to attempts to generalize, to build a reasoned worldview. Such a system is deemed to remain hidden from the senses, reasoned and therefore interfering with the direct observation of nature. Hence, Bacon’s view predominates, that to resolve nature into abstractions is less relevant than to dissect it into parts. In arguing that reason has to conform to experience, and that experience deals with the manifest details of nature, the empiricists set about building another worldview by deliberately crumbling the existing one into oblivion. We will come back to that theme when discussing the emergence of our intellectual and scientific disciplines.

It is essential to underline that this empiricist disaggregation prepared the way for a slow shift, as northern Europe flourished economically and scientifically over the next couple of centuries, in which “century by century, item after item is transferred from the object’s side of the account to the subject’s” (Lewis 1964, 214–215). It is as if in the development of the natural sciences an inevitable initial phase of separation between subject (ourselves, people, societies) and object (nature) is followed by an increasing “objectification” of the study of people and societies, so that in the end, we ourselves as humans have become part of the natural sphere of inquiry. It is in this context that the social sciences emerge in the nineteenth and twentieth centuries, and that at present cognition and thought have become subjects of scientific study and explanation in terms of synapses, chemical communication in the human brain, etc. The result is that “now […] the subject himself is discounted as merely subjective; we only think that we think” (Lewis 1964, 214–215). Blanckaert (1998) calls this “the naturalization of Man.” Via the “detour” of dualism, we thus see a slow return to a monistic worldview, exchanging the monistic vitalist philosophy of the European early Middle Ages for a materialistic monism in which, nowadays, atoms, molecules, hormones, and genes prevail.

This has created a fundamental paradox in our worldview. In the words of Evernden: “We have in effect been consumed by our own creation [i.e., nature], absorbed into our contrasting category. We created an abstraction so powerful that it could even contain – or deny – ourselves. At first, nature was ours, our domesticated category of regulated otherness. Now we are nature’s, one kind of object among all the others, awaiting final explanation” (1992, 92–93).

The Royal Society and the Academies

In 1660 the Royal Society was founded in London. Its creation was followed by other academies, such as the French Académie Royale des Sciences founded in 1666, the Swedish Royal Academy of Sciences founded in 1739, and the Hollandsche Maatschappij der Wetenschappen founded in 1752 in the Netherlands. These institutions were created by and for scientists, sometimes with funding from private sources, and they selected their members by co-optation based on (informal) peer review. A number of these scientists, not all of course, were socially part of the classes of society (the modernity-oriented aristocracy and the bourgeoisie) that became deeply involved in developing the economy through the applied sciences.

As time progressed, in so far as they were “science” academies – there also emerged, later, academies of art and letters, for example – these contributed substantially to a stricter definition of what was considered (empiricist) science, and in particular to the idea that every step in an argument should be proven or demonstrated to be considered scientific. What this means in different fields of science, and between different intellectual tendencies, is highly variable. But one thing is certain: one cannot “prove” things by invoking the future. Hence, to this day science places a very heavy emphasis on explaining by invoking dynamics that lead to observed phenomena, in effect relating the past and the present without referring to the future. But the sciences and the humanities do this in very different ways.

Newtonian physics (the dominant paradigm until the beginning of the last century) built from empirical observation a worldview in which phenomena could be isolated from one another, and in which processes occurring at the most fundamental scales were considered reversible (e.g., state changes such as between vapor, water, and ice), cyclical (e.g., celestial mechanics), or repeatable (most chemical reactions, if they were not reversible). It is a worldview that is essentially aimed at “dead,” ahistorical phenomena – those whose nature does not fundamentally and irreversibly change during their existence, and which therefore do not have any (long-term) history.

In the humanities, on the other hand, invoking history seems to have been the dominant form of explanatory reasoning, at least since the Renaissance (Girard 1990). In historical interpretation, irreversible time was a dominant strand. As a formal discipline (i.e., as a domain isolated from everyday life) History emerged when invoking irreversible time as explanation was challenged by the emergence of the natural sciences in the eighteenth and nineteenth centuries. On the one hand, it is firmly anchored in empiricist thought (cf. the famous words: “interpretations may change, but the facts remain” attributed to the historian von Ranke). But on the other hand, it developed, notably under the impact of Dilthey (1833–1911), into an approach that differed from British empiricism in its epistemological and ontological assumptions.

Dilthey (1883) acknowledged that the kind of positivist universalism that was current in the natural sciences could not be applied to the humanities. According to his school, the central goal of history (and later of the humanities more generally) is understanding rather than the knowledge that is the central goal of the natural sciences. To gain such understanding, Dilthey proposed the “hermeneutic circle,” the recurring movement between the implicit and the explicit, the particular and the whole, the core and the context, the manifestations of human thinking and the thinking itself. Adopting this position enabled the hermeneuticists to (re-) position people in their historical, geographical, cultural, and social context, and by doing so relate individual, often short-term, actions to longer-term trends. In emphasizing, finally, that gaining understanding has to proceed from the study of the manifestations of human actions to the understanding of their significance, this approach introduces a particular kind of empiricism that is adapted to the study of people and societies.

The Emergence of the Life Sciences and Ecology

The life sciences emerged in the nineteenth and twentieth centuries as a novel area of scientific endeavor, and one that emphasized long-term irreversibility. They were part of a cluster of disciplines that sprang up between the humanities and the natural sciences at a time when the latter two could no longer easily communicate with each other, once the cohabitation of dualism had been replaced by the battle that accompanied the separation of the two spheres. The disciplines concerned cover a continuum between geology, which is essentially mechanistic in its basic attitude to long-term time (similar causes have similar effects, causality does not irreversibly change) via paleontology, evolutionary biology, and archaeology (in all three, long-term irreversible change is acknowledged, but short-term irreversible change is deemed invisible, incremental or irrelevant) to ethology and anthropology (short-term non-recurrence is accepted; the longer term not really considered).

The “new” disciplines delimited a deliberately ambiguous middle ground, a fuzzy no man’s land, either because they dealt with phenomena which do fundamentally and irreversibly change qualitatively during the period of observation (geology, paleontology, botany, zoology), or because they concerned another apparent contradiction, that between the behavior of natural beings (ethology) and the nature of (human) behavior (anthropology). Such phenomena did not fit the mechanistic approach of the “core” natural sciences because these excluded the study of qualitative change, but neither did they fit the traditional historical approach, which focused almost exclusively on the human (non-recurrent) aspects of behavior.

How did this come about, and what were its effects? Jonas argues that as soon as the natural sciences are, in seventeenth-century northwestern Europe, sufficiently mature “to emerge from the shelter of deism” (1982, 39), the explanation of the observed functioning of physical systems in terms of general principles gives way to the reconstruction of the possible generation of such systems’ antecedent states, and ultimately from some assumed primordial state of matter. And

the point in modern physics is that the answer to both these questions (i.e., functioning and genesis of the system) must employ the same principles. […] The only qualitative difference admitted between origins in general and their late consequences (if the former are to be more self-explaining than the latter and thus suitable as a relative starting-point for explanation) is that the origins must, in the absence of an intelligent design at the beginning of things, represent a simpler state of matter such as can plausibly be assumed on random conditions.

When the mechanistic Newtonian approach, which was dominant at the time, was extended to living beings, the sheer perfection of the construction and functioning of most living beings made it difficult to envisage their simpler and cruder precursors. The odds against a mere chance production of such perfect beings “would seem no less overwhelming than those against the famous monkeys’ randomly hammering out world literature” (Jonas 1982, 42). And moreover, these near-perfect beings continually died and were recreated! It would thus have been easier to explain them as the result of some (divine) design, but such a theory was incompatible with empiricist thought. The two centuries of delay between Kant and Laplace’s explanation of the origins of the solar system and Darwin’s idea of the origins of living species are indicative of the extent to which the study of living beings was caught between the two prongs of a dualistic worldview. “The very concept of dévelopement [sic] was opposed to that of mechanics and still implied some version or other of classical ontology” (Jonas 1982, 42).

The struggle to free the practitioners of the life sciences from traditional ideas is evident when one looks at the emergence of what was then called Natural History as a process in which two emerging disciplines, [societal or human] History and Natural History, offset themselves against each other in the eighteenth and nineteenth centuries (for more detail see van der Leeuw 1998a). They both had to grapple with similar issues, such as the relationship between universal principles and individual manifestations, the challenge of dealing with the long term from the same perspective as was used for shorter-term dynamics, the relationship between subject and object, etc.

The contrast between the Lamarckian and the Darwinian models of the origins of life allows us a glimpse of what was necessary to resolve the problem. Lamarck’s explanation of the living world remained thoroughly natural in the sense that he saw reproduction as the identical re-creation of individual generations of complex beings according to a grand design. But at the same time, he introduced a historical element in his point of view by arguing that, though the design remained the same, it had sufficient flexibility to allow changes whenever ‘the environment’ imposed different conditions. There lingered doubt about whether such changes could be passed on to later generations. Historical explanation over the timespan of a generation was admissible, but not (yet) beyond. First representatives were still called for, and remained unexplained.

The post-Darwinian model, on the other hand, avoids the difficulties around the improbability of chance origins by arguing that the first representatives could have been much simpler than the present ones. Distinguishing ontogenetic from phylogenetic evolution allows biologists to explain the past and the present of living species in different ways. The essential role of a central, mechanistic, theory unifying the explanation of past and present is henceforth played by the mechanism accounting for evolution (i.e., variation and natural selection), introduced at the meta-level of the long-term existence of species, rather than at that of the individual and/or the single generation. And last but not least from our perspective, the theory of evolution introduced the idea that heredity is linked to change, rather than to immutability (Jonas 1982, 44). This broke the iron grip of reversibility and/or replicability of explanation, and heralded the reintroduction of historical (rather than evolutionary) explanation in the realm of nature. In this, it was inextricably tied to both geology and prehistoric archaeology – other children of the nineteenth century, which helped push back the age of the world and everything in and on it (e.g., Schnapp 1993).

In the context of this book, it is also important to look at the early concept of environment which is invoked by Lamarck, and which Darwin reconfigured as the conditions of natural selection. Haeckel developed what he called the new science of ecology which he described as “the science of the relationships of the organism with its environment, including all conditions of existence in the widest sense” (1866, 286). Whereas Darwin included mankind in his “web of life,” Haeckel did not. He defined environment in much the same way as nature was defined a millennium or two earlier – as “nonorganism” (ibid., 286). Such negative formulations, of course, do not define anything but they are nevertheless revealing. In this case, there is a change in perspective on time (the opposition past-present) on the one hand, and on the opposition inside-outside on the other. The distant past and the environment become objectifiable and separable around the same time, giving rise to history and ecology as rigorous, “scientific” disciplines.

The next episode begins in about 1910, when the concept of human ecology is introduced to denote the study of the relationship between humankind and its environment. It accelerates with the rise of General Systems Theory (e.g., von Bertalanffy 1968) and the concept of ecosystem in particular. After re-imposing a distinction in the late nineteenth century between humanity and its environment, the two are brought together again in two concepts which, each in their own way, make humanness a little bit more natural. Following a phase of reductionism that was made possible (but not initiated) by Darwin, we see the pendulum swing back toward more complex relationships between different parts of nature, including human beings. Humanity becomes Just another unique species (Foley 1987), part of the complex web of inter-species relationships that is the fabric of life.

The Founding of the Modern Universities and the Emergence of Disciplines

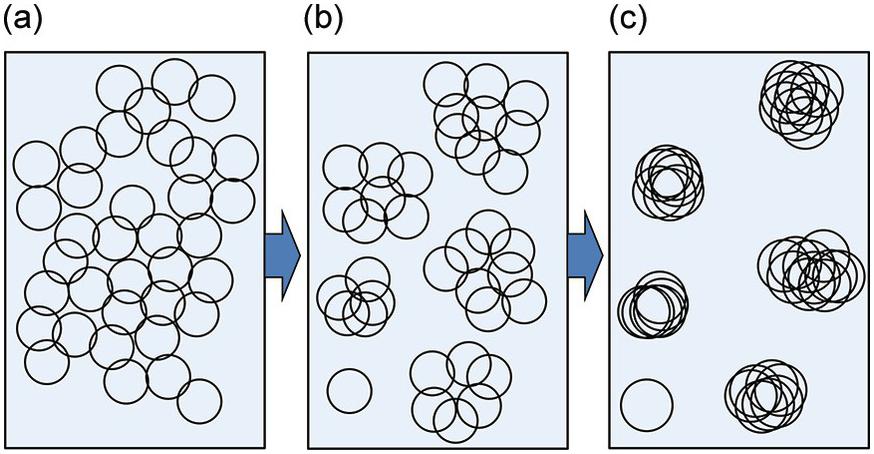

Throughout the Middle Ages and the early modern period, universities were relatively unorganized, bottom-up organizations of individuals who saw it as their mission to share their knowledge and experience with others. As communities of scholars and scientists grew, interacting more and more intensively through travel and correspondence, a process was set in motion that led to a degree of convergence of understanding of the phenomena studied. Some perspectives were agreed upon, others rejected. This trend is schematically illustrated in Figure 3.1.

Figure 3.1 Convergence of groups of practitioners and their questions and ideas leads to cohesion around certain topics, and the abandonment of others. From left to right: (a) individual researchers all investigate different domains and issues; (b) through interaction they come to focus on certain kinds of information, certain methods and techniques, and certain questions to the detriment of others; (c) ultimately, they form coherent communities focused on more and more narrow domains.

A shared language emerged that linked these elements of understanding, and other signals were rejected as noise. This focused groups of scientists and scholars on the knowledge they shared, and what was signal in one group or dimension became noise in others. The overall process is one of aligning some signals by excluding others.

By the middle of the nineteenth century this reached a new stage, when universities were more formally organized, first in Germany under the impact of Wilhelm von Humboldt, and a little later in other countries, including the Americas (the “Harvard model”). This involved the creation of organized disciplines – consisting of groups of professors teaching related topics – and faculties – groups of related disciplines based on the convergence that had been growing for many years. The principal raisons d’être of these nineteenth-century university innovations were the creation of order and education – which gained recognition by the bourgeoisie and authorities as a way to promote innovation in industry and business – and thus to contribute to society at the time of the Industrial Revolution – but also as a way toward personal fulfillment and prestige. The departmental and faculty organization led to discussions among the members of disciplines and faculties about what it was that they all agreed should be jointly taught to their students. As a result, in most disciplines, two important categories of knowledge emerged as fundamental parts of the curricula: knowledge and methods.

Once these had been taught for a while, a major unintended consequence in the conception and practice of science emerged. Up to that time curiosity had driven research. Individuals tackled any problems and questions they thought were interesting, and methods and techniques were a spinoff and a tool (albeit an important one). But once students specialized in certain domains and were taught the “appropriate” questions to ask and the “correct” methods and techniques to tackle them, research became increasingly driven by these questions, methods and techniques rather than by the curiosity that had incited research until then. The result is illustrated in Figure 3.2.

Figure 3.2 The emergence of disciplines inverts the logic of science. Whereas initially the links between the realm of phenomena and that of concepts were epistemological, once methods and techniques formed the basis of disciplines, these links became ontological: from that time on, gradually, the methods and techniques learned began to dominate the choice of questions and challenges to investigate. This stimulated increasingly narrow specialization, and led to difficulties of communication between disciplinary communities.

In particular, this shift from a science driven by shared curiosity and the will to better know or understand the natural and social phenomena that we live amongst, to a science driven by an acquired set of questions, premisses, assumptions, hypotheses, methods and techniques, had as a major consequence that the incomplete but holistic views that had characterized much of seventeenth- and eighteenth-century investigation were replaced by numerous, in themselves more coherent, but fragmentary perspectives on our world. And in particular, it solidified the differences between the natural sciences on the one hand and the humanities on the other.

In summary, the past hundred years appear to have witnessed the culmination of the impact of materialistic monism as an explanation and, through the industrial and technological revolutions, as a way of life. One of its crowning achievements thus far is the research on DNA and on the human brain. Between the pincer movements of, on the one hand, deriving Mind from Matter (Delbrück 1986) and, on the other, having the essence of human individuality evolve from nonliving substances which govern the uniformity and diversity of all living beings, humanness seems inexorably trapped. Is it?

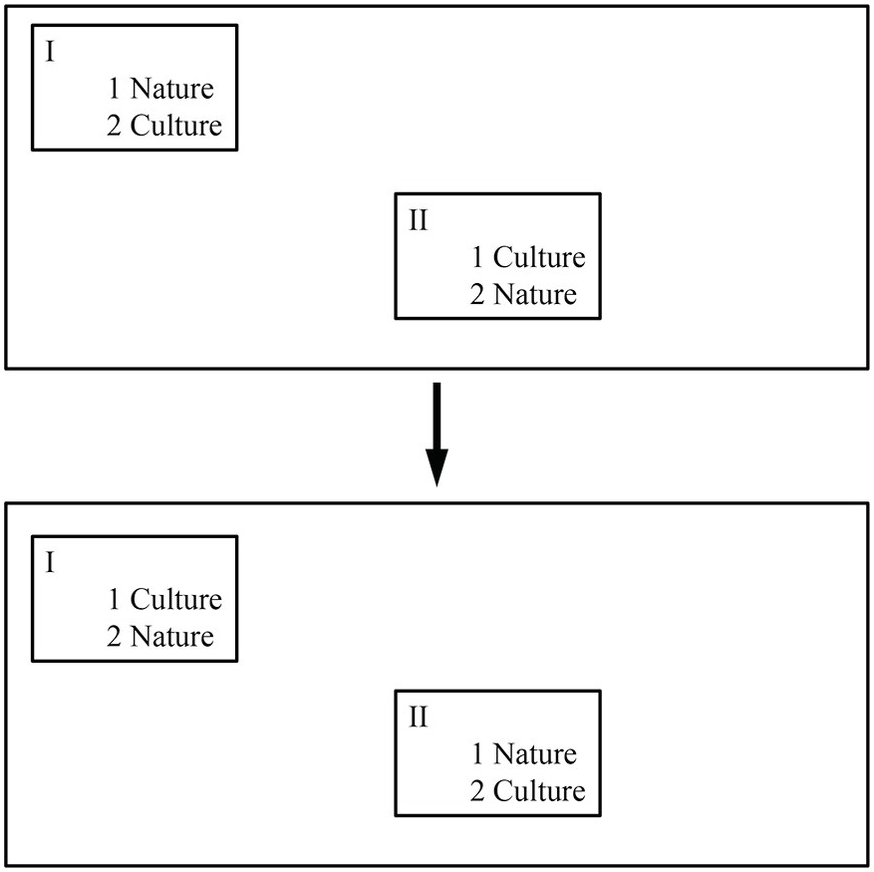

The trap that we are talking about is essentially a tangled hierarchy (see Figure 3.3), a situation of oscillation between two terms which, through the complex set of ties which link them, keep each other in a dynamic, approximately stable, equilibrium – not unlike two rivals, each alternately gaining the upper hand for a short time without ever completely defeating the other (Dupuy 1990, 112–113). That which is superior at the superior level becomes inferior at the inferior level – inverting the hierarchical opposition within itself, according to the scheme presented by Dupuy. But, of course, such an inversion is not really a way out of the dilemma because all it does is maintain the same hierarchy and the same barrier, but from the other side.

Figure 3.3 Two versions of the tangled hierarchy between nature and culture. Inverting the hierarchy (from the top to the bottom version) does nothing to solve the problem of the opposition of the two concepts.

The only way out is, of course, to negate the opposition and construct a kind of science that does not fall into this trap. In Chapter 4, I will propose that this requires a rethink of our analytical approaches and methodologies from a uniform, holistic perspective.

The Instrumentalization of Science

But before we discuss a possible way out of this dilemma, we must first have a look at how the societal context of science has changed, in particular over the last eighty years. Some of this is due to the evolution of the sciences themselves, while other developments are of societal origin. The interaction between the two has had profound effects on both.

These developments have to be seen against the backdrop of two long-term trends. The first of these is the acceleration of innovation since the industrial revolution, and the second the increasing dominance of money as a societal value.

The industrial revolution, and in particular the increasing availability and use of fossil energy, has hugely reduced the cost of innovation, which consists to a much greater extent of the cost of integrating inventions in society than of the cost of producing the inventions themselves. This is an important point that has not usually been taken sufficiently into account in modern innovation studies. In archaeology, it is evident for example in the delay of seven centuries between the invention of ironworking in Asia Minor (c. 1400 BCE) and the transition from the Bronze to the Iron Age in Central and Western Europe (c. 700 BCE). Bronze manufacture is constrained by the availability of the necessary metals (copper and tin). Bronze objects were exchanged all over Europe from a few locations where these materials were found. Iron manufacture is not constrained materially, as iron is found everywhere in streams and marshes. But for some 700 years it was socially constrained because society in Europe was based on power structures related to bronze production. To lift that constraint society had to undergo far-reaching societal changes that broke down the existing power structure, which happened from around 600 BCE. In Scandinavia this proved much more difficult, and the Iron Age did not begin there until the Viking period (c. 700 CE).

An example in the modern period that makes this point with great clarity is the work of Lane and Maxfield (2009) on the effort the Echelon Corporation had to expend to get some markets to open up to their major innovation, LonWorks, a distributed information processing package. This involved the creation and maintenance of what Lane and Maxfield (2009) call “scaffolding structures” to maintain the innovative dynamic against very major conservative forces supported by the likes of Honeywell et al. In the United States, they did not succeed and Echelon initially lost the battle for the innovation, but in Italy they did succeed. LonWorks is still current in Italy, and that base allowed the corporation to survive and subsequently build out its presence in the United States with a focus on the Internet of Things.

A second dynamic that has contributed to the acceleration of innovation is the increase in population that has been enabled by developments in sanitation and health as well as education, particularly in cities. It appears that there is a clear positive nonlinearity between population size and the rate of innovation (e.g., Weinberger et al. 2017), and in particular in cities (Bettencourt et al. 2007; Bettencourt 2013) when one applies an allometric scaling approach to this relationship. Although there is a debate about the nature of the relationship and the precise shape of the curves that it generates, in my opinion this relationship expresses the fact that the more people are together, the more ideas are generated. I think one can justifiably generalize this argument to apply to human interaction levels in general, as shown in Chapter 11. If that is so, one can argue that the limited interaction in the form of exchange and commerce since the Middle Ages has contributed to the absence of acceleration in innovation until the Industrial Revolution.

As part of that dynamic, I would argue that over the past several centuries we have also seen an accelerating shift from innovation that principally responded to explicit, conscious, and widely experienced needs, to innovation in which inventions meet demands that have not (yet) been widely articulated, or that future users are unaware of, as in the case of many uses of the smartphone or the vast numbers of newly assembled chemicals.

At the same time, in particular during the last eighty years, the increasing emphasis on productivity and more generally on wealth as the major indicator of wellbeing of people, communities, and nations, which has been one of the results of the take-over of many institutions by economists, has seriously reduced the value space by which we judge our wellbeing. This has led to a more and more short-term and financial valuation of many aspects of our societies.

As a – more or less arbitrary – starting point for sketching the changes in science and its role in society we’ll go back to the middle of the nineteenth century. Since the 1850s, major scientific discoveries have enabled new, major industries to emerge (e.g., aniline dyes in the 1850s; Bessemer process for the production of cheap steel in 1856; synthesis of aspirin in 1897; Haber-Bosch process for synthesis of ammonia for munitions and fertilizer in 1915), and this set in motion a trend in which the natural sciences and various industries developed a partnership that was highly profitable to both. In the years since, this has led to the ever-increasing imbrication of the sciences in many, many aspects of the wider economy that is part of our current societies, especially after the wave of innovations that was triggered by World War II: radar, airplanes, television and telecoms, medicine, and so forth.

Owing among other things to the Manhattan Project (the construction of the first A-bomb) and the victory over Japan that was closely associated with it, belief in the potential of the sciences was at its zenith in the 1950s to 1970s. Then, while the trust in science itself seems to have remained more or less stable (Funk & Kennedy 2017), slowly but surely, a more critical attitude developed toward the contribution of science to wider society, possibly as a consequence of decreased understanding of current science (Royal Society 1985) or as part of a more general decrease of trust in society’s institutions (Turchin 2010, 2017; Jones & Saad 2016; Rosenberg 2016) due to increasing instability of our socio-political systems.

In the political arena, the Mertonian scientific ethic (Merton 1973) emphasized that scientists should always give an impartial opinion based on research in order to keep the trust of society. That trust had led to an increasing use of science as an argument in political debates, and ultimately to a close relationship between scientists and many social and political institutions that paid scientists in order to obtain scientific results that could convince the wider public of the advantages of certain proposed measures. But that close bond over time turned into a source of mistrust of the sciences because they were increasingly seen as representatives of the established bureaucratic, top-down, order and thus as a threat to the bottom-up social order that many communities have established for themselves (e.g., Wynne 1993).

Since the 1990s, as the wealth of the developed nations is less and less able to meet the cost of their social and material infrastructure (including education, social security, armies, and bureaucracies), the above developments have had consequences for the funding of science. Such funding has changed character in these countries, shifting from government-funded fundamental research to more and more industry-funded applied research, and from strategic, long-term innovation based on new scientific discoveries to tactical innovation based on recombining existing technologies. This is for example visible in the patents that are accorded by the US Patent Office, which increasingly concern the combination and elaboration of existing technologies rather than inventions that can lead to completely new technologies (Brynjolfsson and McAfee 2011; Strumsky and Lobo 2015). This trend set in motion a feedback loop that caused governments to fund less and less research in response to the fact that scientists are seen as not sufficiently responsive to the needs of society, so that funding is increasingly undertaken by industries for their own sake.

Regaining Trust

Given the need for scientific leadership to find ways to respond to the accumulated challenges that humanity is facing in the twenty-first century, how might scientists regain the trust of society? One important, almost self-evident but often ignored element of such a way forward would be the realization that scientific results and opinions, just like all statements, are not evaluated in isolation, on their merits alone, but in the contexts in which they are shaped and received. There is no such thing as scientific objectivity or neutrality. Even if the ways in which answers are obtained to scientific questions may be objective, the questions themselves are subjective, as they are impacted by societal and cultural as well as individual institutions, norms, and values. Similarly, scientific opinions are evaluated against the backdrop of the situation in which they are expressed, but also against the institutional and personal credibility of the person expressing them.

Luhmann (1989, 99) has expressed this with respect to environmental understanding by asserting that “a society cannot communicate with its environment, it can only communicate self-referentially about its environment within itself.” He views society as a self-organizing (social) system of communications, based on complementarity of expectations among individuals. These expectations are guided by values and meanings, which in turn relate exclusively to other values and meanings, and their constitution prepares the way for further communicative alternatives. Communication is therefore not seen as a transfer of information but as the common actualization of meaning. In the process, the complexity inherent in social interaction is reduced by harmonizing or aligning the perspectives of the actors. Everything that functions as an element in the communications system of a group is itself a product of that system. I will return to this fundamental insight; at this point it suffices to point out that it implies that there are no absolute truths or realities.

It follows from this evident statement that we should, as scientists, accord much more importance to our relationships with the contexts in which our ideas function in society. An evident case in point is the idea – inherent in our current tactical thinking – that we have to find solutions for the challenges we are facing. As I have argued elsewhere (van der Leeuw 2012; see also Chapter 10), most, if not all, solutions create their own (unintended and unforeseen) challenges. As what we consider to be such solutions are dictated by the values of our society, we are indirectly also responsible for those challenges.

But this reconsideration will necessarily also involve the institutional contexts in which we do research, the ways in which we express our results, and whether or not we take positions on certain issues. If we have solid scientific evidence for a major future train wreck such as climate change, and we have ideas about how to avoid it, do we limit ourselves as scientists to presenting the dilemma to the general public, or do we argue for certain solutions, as opposed to others?

It is not the goal of this chapter or this book to delve into ways to improve the credibility of science. That is better left to colleagues in the Philosophy of Science and Science and Technology Studies. But it will be indispensable to work toward reflexively recognizing that science is conditional, in the hope that this will lead to a critical examination of our fundamental, pre-analytic assumptions that shape the character and content of our visions and scientific knowledge and understanding.

One of the fundamental aspects of any such examination is the fact that our knowledge of the natural phenomena that many of us consider to be independent of human behavior and impact, such as gravitational fields, the speed of light and similar phenomena, is in effect dependent on our observations, and thus on our cognitive capability. This is a relatively novel but highly important realization that is beginning to permeate the natural sciences through the writings of eminent scientists such as Hawking (see his Brief History of Time, 1998) and Wheeler (see his introduction of the Participatory Anthropic Principle, 1990), where recent research into the origin of the laws of nature indicates that conscious observation may play a role. By implication, even physicists might have to pay more attention to the cognitive and social sciences to understand what they are seeing.

In that examination, we must also more closely connect the different scientific and nonscientific communities in order to better take into account the social and societal context of our scientific constructs. Scientific reasoning and understanding are indeed impossible to control scientifically. But, as such a program is contrary to the thrust of modern science, which is directed at imposing a degree of control over the reasoning and the identity of science, we cannot expect that such reflexivity will be easily adopted by the scientific community, nor that the majority of humanity will greatly increase its “scientific knowledge and understanding.” But we must try.