Book contents

- Frontmatter

- Contents

- Preface

- Notation

- Part I Bandits, Probability and Concentration

- Part II Stochastic Bandits with Finitely Many Arms

- 6 The Explore-Then-Commit Algorithm

- 7 The Upper Confidence Bound Algorithm

- 8 The Upper Confidence Bound Algorithm: Asymptotic Optimality

- 9 The Upper Confidence Bound Algorithm: Minimax Optimality

- 10 The Upper Confidence Bound Algorithm: Bernoulli Noise

- Part III Adversarial Bandits with Finitely Many Arms

- Part IV Lower Bounds for Bandits with Finitely Many Arms

- Part V Contextual and Linear Bandits

- Part VI Adversarial Linear Bandits

- Part VII Other Topics

- Part VIII Beyond Bandits

- Bibliography

- Index

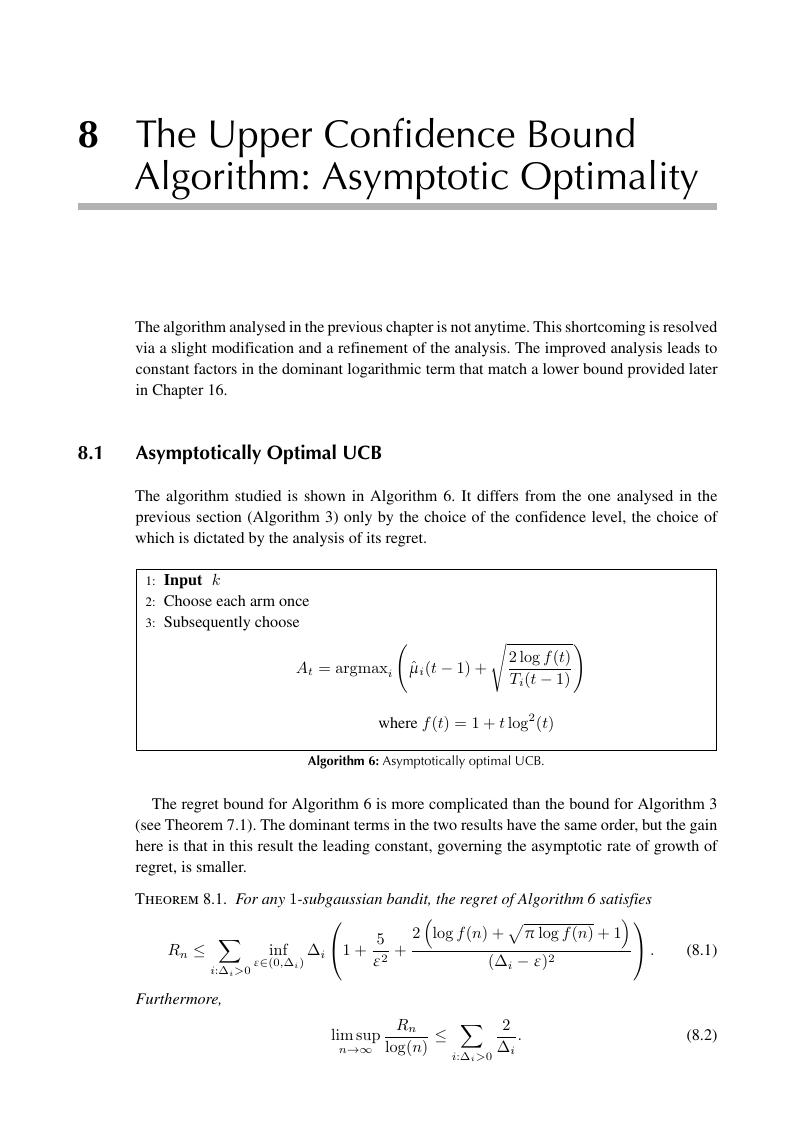

8 - The Upper Confidence Bound Algorithm: Asymptotic Optimality

from Part II - Stochastic Bandits with Finitely Many Arms

Published online by Cambridge University Press: 04 July 2020

- Type: Chapter

- Information: Bandit Algorithms, pp. 97-102. Publisher: Cambridge University Press. Print publication year: 2020.