Introduction

Over the past 50 years, scholars have examined the foundations of successful post-pubertal second language (L2) speech learning, focusing on a wide range of populations within diverse learning contexts (naturalistic immersion vs. classrooms), stages (rate of learning vs. ultimate attainment), and dimensions (segmentals vs. suprasegmentals; accuracy vs. fluency; perception vs. production). There is evidence that learning outcomes are associated with a range of biographical variables, such as the quantity and quality of L2 input (Derwing & Munro, 2013), types of interlocutors (Flege & Liu, 2001), starting age of immersion (Abrahamsson & Hyltenstam, 2009), starting age of foreign language education (Muñoz, 2014), and learning contexts (Mora & Valls-Ferrer, 2012; for a comprehensive overview, see Saito, 2018). However, there is growing evidence that even if two learners spend the same amount of time practicing a target language in the same way within the same environment, their final outcomes will most likely be different (Doughty, 2019).

In the current study, we build on an emerging paradigm holding that domain-general auditory processing, which has been proposed to be a predictor of first language (L1) acquisition, plays a critical role in explaining some variance in post-pubertal L2 speech acquisition (Mueller, Friederici & Männel, 2012). Auditory processing is defined as one's capacity to precisely represent, discriminate, and categorize acoustic information. While the relationship between auditory processing and L1 acquisition remains open to discussion, growing evidence suggests that the effects of auditory processing could be relatively strong in the context of L2 acquisition in adulthood, especially in immersion contexts (e.g., Kachlicka, Saito & Tierney, 2019; Saito & Tierney, forthcoming; Sun, Saito & Tierney, 2021).

We hypothesize that the link between individual differences in auditory processing and language learning is not solely driven by a shared reliance on modality-general cognitive skills or a confounding influence of language experience but is at least partly driven by modality-specific factors. To test this hypothesis, we examined data collected from 70 Japanese learners of English with different levels of L2 experience and proficiency, evaluating the prediction that performance on auditory discrimination tests may help explain individual differences in L2 speech learning, even once language experience and memory capacity were accounted for. According to the major paradigms in L2 speech acquisition (e.g., the Speech Learning Model; Flege & Bohn, 2021), the capacity to hear the acoustic properties of new sounds anchors the development of perception and production abilities alike, suggesting that speech categories are perception-based. In the current investigation, therefore, L2 speech proficiency was operationalized via an identification task (perceptually identifying contrasting phonemes in a minimal pair context).

Background

Domain-general auditory processing

In the field of cognitive psychology, much scholarly attention has been given to the perceptual and cognitive abilities underlying first language acquisition. Although the extent to which such networks are specific to language learning has remained open to debate (Campbell & Tyler, 2018), an influential view states that language-related processing involves the same neural systems responsible for general-purpose learning (see Hamrick, Lum & Ullman, 2018 for an overview). One such set of domain-general processes is auditory processing, which collectively refers to a set of abilities related to the use of acoustic information, such as encoding, remembering, and integrating time and frequency characteristics of sounds. These abilities have been proposed to be a cornerstone of various learning behaviours related to speech, language, music, and emotion (Kraus & Banai, 2007).

In the audition-based account of language learning, auditory processing ability serves as “the gateway to spoken language” (Mueller et al., 2012, p. 15953) such that the detection and interpretation of acoustic information underlies every stage of phonetic, phonological, lexical, and morphosyntactic learning. For instance, toddlers analyse incoming acoustic input to detect segmental and suprasegmental patterns in a target language (Kuhl, 2004). Successful detection of these patterns can help children recognize word and sentence boundaries (Cutler & Butterfield, 1992), access and select contextually appropriate target words (Norris & McQueen, 2008), track syntactic structures (Marslen-Wilson, Tyler, Warren, Grenier & Lee, 1992), and fill in morphological details (Joanisse & Seidenberg, 1998). While there are other sources of lexical and morphosyntactic learning (e.g., visual input for reading), it is possible that auditory processing serves as a factor driving multiple stages of language acquisition (Tierney & Kraus, 2014).

Prior evidence suggests that individual differences in auditory processing precision may influence the rate and ultimate attainment of first language (L1) acquisition – i.e., the auditory-processing-deficit hypothesis (Goswami, 2015; Tallal, 2004; Wright, Bowen & Zecker, 2000). Auditory impairments may slow down the speed of phonological, lexical, and morphosyntactic learning, eventually leading to a range of language problems. Supporting this hypothesis, individual differences in auditory processing abilities have been found to correlate with a range of L1 skills in children and adults, such as segmental and suprasegmental speech perception (Boets, Wouters, van Wieringen, De Smedt & Ghesquière, 2008; Cumming, Wilson & Goswami, 2015; Won, Tremblay, Clinard, Wright, Sagi & Svirsky, 2016), reading ability (Boets, Vandermosten, Poelmans, Luts, Wouters & Ghesquière, 2011; Casini, Pech-Georgel & Ziegler, 2018; Gibson, Hogben & Fletcher, 2006; Goswami, Wang, Cruz, Fosker, Mead & Huss, 2010; White-Schwoch, Carr, Thompson, Anderson, Nicol, Bradlow, Zecker & Kraus, 2015), phonological awareness (Moritz, Yampolsky, Papadelis, Thomson & Wolf, 2013; Peynircioglu, Durgunoglu & Oney-Kusefoglu, 2002), phonological memory (Tierney, White-Schwoch, MacLean & Kraus, 2017), syntax processing (Gordon, Shivers, Wieland, Kotz, Yoder & McAuley, 2015) and the incidence of language impairment (Corriveau & Goswami, 2009; Haake, Kob, Willmes & Domahs, 2013; McArthur & Bishop, 2004, 2005). Auditory processing measures have been suggested to be a diagnostic tool for dyslexia (Hornickel & Kraus, 2013) and other language-related disorders (Russo, Skoe, Trommer, Nicol, Zecker, Bradlow & Kraus, 2008; but see Rosen, 2003 for further discussion on the causality of auditory processing and language acquisition).

Roles of auditory perception in L2 speech acquisition

Extending this line of thought, scholars have argued that the acquisitional role of auditory processing might be germane to L2 learning as well; in fact, it might be even more integral than it is for L1 acquisition, precisely because of the quantitative and qualitative differences between L1 and L2 learning experience. Although children with developmental language delays (such as dyslexia) are more likely to initially have auditory deficits, auditory-based difficulties with speech and language perception may be mitigated in the long run through the extensive practice and experience with spoken language available in the context of L1 acquisition. By comparison, adult L2 learners’ access to target language input is limited in quantity. Many learners start studying a target language at a relatively late age through foreign language education (several hours of form-focused instruction per week) (Muñoz, 2014), and have far fewer opportunities to interact with different types of native interlocutors in various social settings even under immersion conditions (Derwing & Munro, 2013). This lack of interactive, variable, and sufficient input opportunities jeopardizes the development of compensatory strategies, thereby making any auditory deficit potentially even more problematic over the course of L2 learning (Saito, Sun & Tierney, 2020b).

Moreover, one's degree of auditory processing precision may be a more consequential skill in L2 speech acquisition (compared to L1 speech acquisition), due to the relatively demanding nature of acoustic analysis, integration, and adaptation in this context. Unlike L1 acquisition, wherein learning takes place in an auditory system relatively unaffected by language experience, adult L2 learners are required to encode individual acoustic dimensions of new sounds through the lens of the already-established, automatized acoustic representations developed through exposure to their L1 (Flege & Bohn, 2021). From a theoretical standpoint, auditory analyses during L2 speech learning are subject to the complex interaction between new and previous language experience (e.g., McAllister, Flege & Piske, 2002 for the feature hypothesis). Not only do learners restructure their L1-specific weights of acoustic cues (e.g., Chinese speakers learning to attend to both pitch and duration for perceiving L2 English prosody; Jasmin, Sun & Tierney, 2021), but they also detect new spectro-temporal patterns that they would otherwise not use during L1 processing (e.g., Japanese speakers attending to variations in third formants for perceiving L2 English [r] and [l]; Iverson, Kuhl, Akahane-Yamada, Diesch, Tohkura, Kettermann & Siebert, 2003). When L2 learners poorly perceive certain auditory dimensions, they may show difficulty in learning new sounds, as they continue to filter out L2 input based on their L1 acoustic representations (Perrachione, Lee, Ha & Wong, 2011).

Previous research has shown that adult L2 learners with greater auditory sensitivity can better perceive novel phonetic and phonological contrasts in a language that they have never heard (Jost, Eberhard-Moscicka, Pleisch, Heusser, Brandeis, Zevin & Maurer, 2015; Kempe, Bublitz & Brooks, 2015); and that their degree of auditory processing precision predicts the extent to which adult L2 learners can improve their perception of novel and foreign language sounds when they receive intensive training (Lengeris & Hazan, 2010; Wong & Perrachione, 2007). Building on these laboratory studies, Omote, Jasmin, and Tierney (2017) more recently examined the relationship between auditory processing and L2 English perception proficiency among 25 adult Japanese speakers with varying amounts of naturalistic immersion experience in the UK. The results showed that auditory processing (i.e., neural encoding of sound, as assessed using the frequency-following-response) was strongly predictive of L2 perception attainment, even when all other biographical variables were statistically controlled, including length of immersion and age of acquisition.

The emerging findings in support of the relatively strong connection between auditory processing and post-pubertal L2 speech acquisition have been replicated with other groups of L2 learners with diverse L1 backgrounds (e.g., Chinese, Polish, Spanish; Saito, Sun, Kachlicka, Robert, Nakata & Tierney, 2021b) across different dimensions of L2 learning (e.g., Kachlicka et al., 2019 and Saito, Macmillan, Kroeger, Magne, Takizawa, Kachlicka & Tierney, 2022 for lexicogrammar learning; Saito, Sun & Tierney, 2019; Saito, Kachlicka, Sun & Tierney, 2020a; Saito, Suzukida, Tran & Tierney, 2021a for speech production) from both cross-sectional and longitudinal perspectives (Saito et al., 2020b; Sun et al., 2021). On the whole, it seems that the outcomes of L2 speech learning could be equally affected by factors related to auditory processing and biographical backgrounds. Whereas all L2 learners demonstrate significant improvement when they engage in naturalistic immersion, certain L2 learners with greater auditory sensitivity are likely to make more of every input opportunity, converting more input into intake, and leading to more advanced L2 speech proficiency in the long run (for a comprehensive review, see Saito & Tierney, forthcoming).

Motivation for the current study

Though promising, these initial findings raise a number of issues that need to be further investigated in order to provide a more comprehensive picture of the mechanisms underlying the relationship between auditory processing and adult L2 speech learning. In particular, the current study is designed to explore the complex relationship between perceptual, cognitive, and biographical individual differences, and their composite impact on L2 speech perception and production behaviours.

Although it is clear that there is a link between precise auditory perception and successful L2 speech acquisition, it remains unclear whether this link is driven by a confounding relationship with other cognitive capacities. Specifically, certain scholars have proposed that the link between auditory processing and language learning reflects a shared reliance of both tasks on short- and long-term memory abilities (Ahissar, Lubin, Putter-Katz & Banai, 2006). According to this position, L1 learners with dyslexia are more likely to have auditory processing problems, because they have poorer implicit memory capacities as manifest in less prolonged neural adaptation (i.e., shorter windows of temporal integration), suggesting that memory problems may drive both the reading difficulties and the auditory processing difficulties (Jaffe-Dax, Frenkel & Ahissar, 2017).

Behavioural tasks for measuring auditory perception (e.g., formant, pitch, and duration discrimination; for details, see below) may tap into a set of modality-general executive skills as well. Sound discrimination tasks are highly repetitive and abstract, making it difficult for participants to maintain auditory information in short-term memory (Bidelman, Gandour & Krishnan, 2009). During multiple exposures to the same audio stimuli, those with greater memory capacities could maintain an accurate representation for a longer period of time, making more sensory information available for acoustic analyses (Zhang, Moore, Guiraud, Molloy, Yan & Amitay, 2016). In addition, sound discrimination tests may trigger implicit statistical learning of the distribution of stimuli across trials, such that the prior stimulus distribution is combined with the representation of each incoming stimulus (Raviv, Ahissar & Loewenstein, 2012). Thus, greater implicit and statistical learning ability may help learners to extract the prototypical percept from multiple exposures more easily and reliably, which may in turn help enhance accurate auditory perception, especially when the current sensory stimulus includes noise.

Therefore, it remains to be seen whether, to what degree, and how auditory processing is uniquely linked to the degree of success in L2 speech learning, even once individual differences in explicit and implicit memory are accounted for. If auditory processing is a somewhat independent construct (with only partial overlap with other learner-external and -internal factors), the link between auditory perception and proficiency should remain significant, even when the effects of executive function and biographical factors are controlled for. Addressing this question will shed light on whether the evidence for the link between auditory processing and L2 speech acquisition supports the theoretical claim that perceptual acuity is a bottleneck of language learning throughout one's lifespan (Cumming et al., 2015).

To date, many studies have demonstrated a significant role for phonological short-term memory in language learning, when learners are exposed to new sounds and words that they have never heard before (Baddeley, 1993; Gathercole, Frankish, Pickering & Peaker, 1999; Papagno, Valentine & Baddeley, 1991; Reiterer, Hu, Erb, Rota, Nardo, Grodd, Winkler & Ackermann, 2011); however, such short-term memory abilities may become irrelevant as learners engage in more extensive and intensive practice in order to acquire the target language (Hu, Ackermann, Martin, Erb, Winkler & Reiterer, 2013). A small number of scholars have begun to investigate the mediating roles of cognitive abilities (i.e., short- and long-term memory capacities) in L2 phonological acquisition. For example, Darcy, Park and Yang's (2015) study explored the relationship between a total of 30 experienced and inexperienced Korean learners’ L2 English vowel proficiency and working memory abilities (see also Silbert, Smith, Jackson, Campbell, Hughes & Tare, 2015 for declarative memory).

Our proposed study was the first attempt to test the hypothesis that perceptual factors predict independent variance in L2 speech acquisition, even once cognitive factors are accounted for. If we assume that auditory processing could be a somewhat independent construct (with only partial overlap with other learner-external and -internal factors), the link between auditory perception and proficiency should remain significant, even when the effects of the executive and biographical factors are controlled for. The findings here were expected to shed light on whether the evidence for the link between auditory processing and L2 (and L1) speech acquisition supports the theoretical claim that perceptual acuity is a bottleneck of language learning throughout one's lifespan (Cumming et al., 2015).

Current study

In the current investigation, a total of 70 young adult Japanese learners of English with a wide range of L2 experience and proficiency levels were recruited at a university in Japan. As summarized in Table 1, participants completed a battery of tasks designed to tap into auditory processing (formant, pitch, and duration discrimination), short-term memory (phonological memory, working memory), and long-term memory (declarative and procedural memory). These memory abilities could also be categorized as explicit versus implicit: the first three tasks (phonological short-term memory, working memory, declarative memory) inevitably require participants’ awareness (Li, 2016), but the last task (procedural memory) is hypothesized to be free of such awareness (Linck, Hughes, Campbell, Silbert, Tare, Jackson, Smith, Bunting & Doughty, 2013).

Table 1. Summary of Auditory Processing and Cognitive Measures

Afterwards, the participants took part in an individual interview to report their current and past experience in L2 and music learning. Finally, their perceptual, cognitive, and biographical profiles were linked to their L2 English speech perception and production proficiency, elicited from forced-choice identification and spontaneous picture description tasks. The following research questions and predictions were formulated:

R1: To what degree are participants’ individual differences in auditory perception and memory functions inter-related?


In accordance with the literature on L1 acquisition, some overlaps were thought to exist between individual differences in auditory processing and short- and long-term memory functions. This is arguably because auditory processing draws on and/or co-functions with short-term memory skills (Sharma, Purdy & Kelly, 2009) and/or because auditory processing tasks per se (i.e., sound discrimination) may require participants to access statistical learning of the association, distribution, and probability of the anchor vs. target stimuli (Raviv et al., 2012).

R2: Does auditory processing explain independent variance in L2 speech perception and production proficiency, even once memory capacities and language experience are accounted for?


It has been shown that those with more advanced L2 speech proficiency are likely to have not only more extensive and intensive L2 training experience (Muñoz, 2014), but also more precise auditory perception (Kachlicka et al., 2019). In light of Plonsky and Oswald's (2014) field-specific guidelines, the size of the audition and acquisition link has been generally medium-to-large (r = .4–.6). Whereas research on the role of working memory in L2 phonology is still limited (cf. Darcy et al., 2015), Linck, Osthus, Koeth and Bunting's (2014) meta-analysis showed that the relationship between working memory and other areas of L2 acquisition (e.g., grammar) appears to be relatively small (r = .25). Some overlap may exist between individual differences in auditory processing and short- and long-term memory capacity (Sharma et al., 2009). Nevertheless, auditory processing was hypothesized to primarily reflect the degree of internal noise in the encoding of acoustic information in the early auditory system. Our prediction was that the relationship between auditory processing and L2 speech perception would remain significant, even when memory span and language experience were accounted for.
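The notion of auditory processing explaining "independent variance" once memory and experience are accounted for can be made concrete with a small sketch. The code below is an illustration only and does not necessarily correspond to the analysis reported in this study: it assumes an ordinary least-squares framework and computes the change in R² when an auditory predictor is added to a baseline model containing only control variables. The function names (`r_squared`, `incremental_r_squared`) and argument names are hypothetical.

```python
import numpy as np


def r_squared(predictors, outcome):
    """R^2 of an ordinary least-squares fit with an intercept term."""
    outcome = np.asarray(outcome, dtype=float)
    # Prepend a column of ones so the model includes an intercept.
    design = np.column_stack([np.ones(len(outcome)), predictors])
    coefficients, *_ = np.linalg.lstsq(design, outcome, rcond=None)
    residuals = outcome - design @ coefficients
    ss_residual = float(residuals @ residuals)
    ss_total = float(((outcome - outcome.mean()) ** 2).sum())
    return 1.0 - ss_residual / ss_total


def incremental_r_squared(controls, auditory, outcome):
    """Variance explained by `auditory` beyond what `controls` explain.

    controls: (n, k) array of control variables (e.g., memory, experience).
    auditory: (n,) array of auditory processing scores.
    outcome:  (n,) array of L2 proficiency scores.
    """
    baseline = r_squared(controls, outcome)
    full = r_squared(np.column_stack([controls, auditory]), outcome)
    return full - baseline
```

Under this framing, a change in R² that is reliably greater than zero would be consistent with the prediction stated above; whether that change is statistically significant would require an additional test (e.g., an F-test on the nested models), which is not shown here.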

Method

Participants

To examine the role of auditory processing in various phases of L2 speech acquisition among post-pubertal L2 learners with widely different biographical backgrounds, a total of 70 college-level Japanese learners of English were recruited at a relatively large public university in Japan. Given that auditory processing is somewhat related to chronological age (Skoe, Krizman, Anderson & Kraus, 2015), we recruited a group of young adults within a restricted age range (18–26 years). Given that the presence/absence of naturalistic immersion experience plays a key role in determining the process and product of L2 speech acquisition (Derwing & Munro, 2013; Muñoz, 2014), our participants comprised (a) 51 inexperienced participants who had studied L2 English only through foreign language education without any experience abroad; and (b) 19 experienced participants who had study-abroad experience (i.e., more than one month of stay in English-speaking countries). All of them were enrolled in undergraduate or graduate-level courses at the time of the project.

Design

The Japanese participants completed a series of tasks assessing their language experience and backgrounds (5 minutes), auditory processing abilities (10 minutes), working and long-term memory capacities (40 minutes), and L2 English speech proficiency (10 minutes). An electronic flyer was distributed across the university to recruit participants. Interested participants contacted an investigator and then joined an individual meeting. To avoid any unwanted confusion among the participants, all the tasks were delivered in Japanese.

Experience factors

Focusing on a similar L2 population (university-level English-as-a-Foreign-Language students), precursor research has examined what kinds of experience variables could be linked to the rate of L2 speech acquisition in classroom settings. The results showed that the outcomes of advanced L2 speech learning could be linked to not only the length of EFL instruction, but also to participants’ unique L2 learning experience profiles, such as study abroad (e.g., Muñoz, 2014) and pronunciation training (e.g., Saito & Hanzawa, 2016). Thus, the three variables (length of instruction, study abroad, pronunciation instruction) were taken into consideration in the current study. Using a modified version of the EFL experience questionnaire (Saito & Hanzawa, 2016), we interviewed each participant to collect and code the following information (see Footnote 1):

Length of instruction

There is extensive prior evidence that adult L2 learners’ speech proficiency is strongly associated with the length of foreign language education (Muñoz, 2014). The participating college students likely have different onsets of foreign language education (Grades 1–6), resulting in different amounts of instructed L2 speech learning experience. The length of foreign language education was coded as a continuous variable (M = 11.1 years; SD = 3.48; Range = 6–19).

Specific experience

To further delve into the quality of L2 learning experience, the two unique experience profiles of participants were coded in terms of the presence of English pronunciation training (n = 36 for yes, 34 for no) and study-abroad experience (n = 19 for yes, 51 for no; Muñoz, 2014). As in Saito and Hanzawa (2016), pronunciation training was defined as provision of explicit instruction on the perceptual and/or articulatory characteristics of L2 English phonemes which are known to be difficult for L1 Japanese speakers (e.g., English [r] and [l]). As reported in many educational reports (e.g., Saito, 2014), few teachers receive training in pronunciation teaching, and pronunciation instruction is often neglected in Japanese EFL classrooms. Japanese EFL syllabi typically emphasize the rote memorization of vocabulary items and grammar rules, followed by reading and writing exercises, without much focus on listening and speaking (Nishino & Watanabe, 2008).

Auditory processing measures

For the purpose of comparison with the existing literature on L1 and L2 acquisition (e.g., Kachlicka et al., 2019), participants’ acuity to three different dimensions of sounds (formant, pitch, and duration) was measured via three different auditory discrimination tasks. For each task, the participant listened to three sounds, two of which were identical, and the third of which was different; their task was to identify which sound was the different one. Either the first or third sound was always different. For each test, a series of 100 synthesized stimuli were prepared (500 ms in length) along a target acoustic dimension via custom MATLAB scripts. In the precursor project, the test-retest reliability of the test format was examined and confirmed among a range of L1 and L2 speakers (r = .701, p < .001; Saito, Sun & Tierney, 2020c).

Stimulus

In the formant discrimination test, the fundamental frequency was set at 100 Hz, and 30 harmonics were created up to 3000 Hz. A parallel formant filter bank was used to impose three formants at 500 Hz, 1500 Hz, and 2500 Hz (Smith, 2007). For the comparison stimulus, the target dimension (i.e., F2) varied between 1502 Hz and 1700 Hz with a step of 2 Hz. In the pitch and duration discrimination tests, the stimuli were constructed by modifying a baseline four-harmonic complex tone with a fundamental frequency of 330 Hz, a duration of 500 ms, and a 15 ms linear amplitude ramp at the beginning and end. For the pitch discrimination test, the fundamental frequency of the baseline stimulus was set at 330 Hz, while that of the comparison stimulus ranged from 330.3 to 360 Hz with a step size of 0.3 Hz. For the duration discrimination test, the duration of the baseline stimulus was set at 250 ms, while that of the comparison stimulus ranged from 252.5 to 500 ms with a step size of 2.5 ms. All the audio files are currently available for reviewers to access for the purpose of thorough peer review (see Supporting Information A, Supplementary Materials).
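As an illustrative sketch only (the original stimuli were generated with custom MATLAB scripts that are not reproduced here), the three 100-step continua and the baseline complex tone described above could be approximated in Python as follows. The sampling rate, the equal harmonic amplitudes, and all function and variable names are assumptions introduced for illustration; the parallel formant filter bank used for the formant stimuli is not implemented.

```python
import numpy as np

SAMPLE_RATE = 44100  # Hz; assumed, not reported in the text

# Comparison values for levels 1..100 on each continuum (from the text).
LEVELS = np.arange(1, 101)
F2_HZ = 1500.0 + 2.0 * LEVELS          # 1502 ... 1700 Hz
PITCH_F0_HZ = 330.0 + 0.3 * LEVELS     # 330.3 ... 360 Hz
DURATION_MS = 250.0 + 2.5 * LEVELS     # 252.5 ... 500 ms


def complex_tone(f0_hz, duration_ms, n_harmonics=4, ramp_ms=15.0,
                 sample_rate=SAMPLE_RATE):
    """Harmonic complex tone with linear onset and offset ramps.

    Harmonics are summed with equal amplitude (an assumption) and the
    result is scaled so that its absolute value cannot exceed 1.
    """
    n_samples = int(round(sample_rate * duration_ms / 1000.0))
    t = np.arange(n_samples) / sample_rate
    tone = np.zeros(n_samples)
    for harmonic in range(1, n_harmonics + 1):
        tone += np.sin(2.0 * np.pi * f0_hz * harmonic * t)
    tone /= n_harmonics

    # Linear amplitude ramp at the beginning and end of the tone.
    n_ramp = int(round(sample_rate * ramp_ms / 1000.0))
    envelope = np.ones(n_samples)
    ramp = np.linspace(0.0, 1.0, n_ramp)
    envelope[:n_ramp] = ramp
    envelope[-n_ramp:] = ramp[::-1]
    return tone * envelope


# Baseline and one comparison tone for the pitch test (level 50).
pitch_baseline = complex_tone(330.0, 500.0)
pitch_comparison = complex_tone(PITCH_F0_HZ[49], 500.0)
```

Each array holds one comparison value per stimulus level, so a level chosen by the adaptive procedure can be used directly as an index (level minus one) into the relevant continuum.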

Procedure

Three tones were presented with an inter-stimulus interval of 0.5 s. Upon hearing them, the participants chose which of the three tones differed from the other two by pressing the number “1” or “3.” Using Levitt's (1971) adaptive threshold procedure, the level of difficulty changed from trial to trial based on participants’ performance. Each test started with the standard stimulus (Level 50). When three correct responses were made in a row, the difference became smaller by a degree of 10 steps (more difficult). When their response was incorrect, the difference became wider by a degree of 10 steps (easier).

The step size changed when the direction of difficulty between trials reversed – i.e., when an increase in acoustic difference (easier) was followed by a decrease (more difficult), or vice versa. After the first reversal, the step size decreased (more difficult) from 10 to 5, and then after the second reversal the step size decreased further from 5 to 1. The tests stopped either after 70 trials or eight reversals. To calculate participants’ auditory processing scores, the stimulus levels of the reversals from the third reversal until the end of the block were averaged. Performance across all three tests (pitch, duration, and formant discrimination) was standardized and averaged (after transforming scores on each test to z-scores) to form a composite measure of auditory processing.
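The adaptive procedure and scoring described above can be sketched as follows. This is a minimal illustration under stated assumptions rather than the software used in the study: levels are assumed to be clipped to the range 1 to 100, a reversal is assumed to be recorded at the level presented on the trial that triggered the change of direction, and z-scores are assumed to use the sample standard deviation. The names `run_staircase`, `composite_z`, and `respond` are hypothetical.

```python
from statistics import mean, stdev


def run_staircase(respond, start_level=50, min_level=1, max_level=100,
                  max_trials=70, max_reversals=8):
    """Three-down, one-up staircase with step sizes 10 -> 5 -> 1.

    `respond(level)` returns True for a correct response at that level.
    Returns (threshold, reversal_levels); threshold is the mean level of
    the reversals from the third reversal onward, or None if fewer than
    three reversals occurred.
    """
    level = start_level
    step = 10
    correct_streak = 0
    last_direction = 0  # -1 = harder, +1 = easier, 0 = no change yet
    reversal_levels = []

    for _ in range(max_trials):
        if respond(level):
            correct_streak += 1
            if correct_streak < 3:
                continue  # level changes only after three correct in a row
            correct_streak = 0
            direction = -1  # smaller acoustic difference (more difficult)
        else:
            correct_streak = 0
            direction = +1  # larger acoustic difference (easier)

        if last_direction != 0 and direction != last_direction:
            reversal_levels.append(level)
            if len(reversal_levels) == 1:
                step = 5
            elif len(reversal_levels) == 2:
                step = 1
            if len(reversal_levels) >= max_reversals:
                break
        last_direction = direction
        level = min(max_level, max(min_level, level + direction * step))

    scored = reversal_levels[2:]
    threshold = mean(scored) if scored else None
    return threshold, reversal_levels


def composite_z(scores_by_test):
    """Average z-scores across tests, yielding one value per participant.

    `scores_by_test` is a list of equal-length lists, one list per test,
    ordered identically by participant.
    """
    z_by_test = []
    for scores in scores_by_test:
        m, sd = mean(scores), stdev(scores)
        z_by_test.append([(score - m) / sd for score in scores])
    return [mean(values) for values in zip(*z_by_test)]
```

In use, `respond` would wrap the presentation of one three-tone trial and return whether the participant picked the odd tone; for simulation, it can be replaced by any function of the level. Because lower thresholds indicate finer discrimination, lower composite scores correspond to more precise auditory processing under this scoring.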

Cognitive ability measures

Cognitive abilities are defined as the capacity to transform, remember, use, and elaborate sensory information. In the current study, we assessed participants’ cognitive abilities with a focus on short- and long-term memory under single and/or dual task conditions. We did so in accordance with two major theoretical accounts of cognitive individual differences in L2 acquisition – i.e., working memory (Linck et al., Reference Linck, Osthus, Koeth and Bunting2014) and declarative-procedural memory (Ullman & Lovelett, Reference Ullman and Lovelett2018). Working memory refers to a set of cognitive functions responsible for the control, regulation, and storage of sensory information (e.g., Conway, Jarrold, Kane, Miyake & Towse, Reference Conway, Jarrold, Kane, Miyake and Towse2007). In Baddeley's (Reference Baddeley2003) influential model, the construct of working memory is divided into two subsystems: (a) a storage-based system which maintains short-term memory; and (b) an executive control system which manages information between short- and long-term memory stores.

In terms of long-term memory, one influential paradigm in cognitive psychology is Ullman's declarative and procedural memory model (Ullman, Reference Ullman2004). Declarative memory is thought to be primarily responsible for the explicit learning of episodic and semantic knowledge and associations (Tulving, Reference Tulving1993), and is directly relevant to the early phase of lexical learning (memorizing the sound/meaning of words, irregular morphological forms, and idioms; Ullman & Lovelett, Reference Ullman and Lovelett2018). Procedural memory is a key component of implicit and statistical learning and the control of sensorimotor and cognitive skills, such as sequences, rules, and categorization (Knowlton, Mangels & Squire, Reference Knowlton, Mangels and Squire1996). In the context of L2 learning, it has been suggested that procedural memory is instrumental to the later stages of grammar learning (acquiring rule-based morphology, syntax and phonology; Ullman & Lovelett, Reference Ullman and Lovelett2018).

As operationalized in Linck et al. (Reference Linck, Hughes, Campbell, Silbert, Tare, Jackson, Smith, Bunting and Doughty2013), four measures were used to tap into participants’ cognitive abilities: non-word repetition to assess phonological short-term memory (e.g., Révész, Reference Révész2012); operation span to test working memory (Turner & Engle, Reference Turner and Engle1989); paired associates to measure declarative memory (Morgan-Short, Faretta-Stutenberg, Brill-Schuetz, Carpenter & Wong, Reference Morgan-Short, Faretta-Stutenberg, Brill-Schuetz, Carpenter and Wong2014); and serial reaction time for procedural memory (Kaufman, DeYoung, Gray, Jiménez, Brown & Mackintosh, Reference Kaufman, DeYoung, Gray, Jiménez, Brown and Mackintosh2010).

Phonological short-term memory

Participants completed a Japanese version of the nonword repetition task (Yamaguchi & Shimizu, Reference Yamaguchi and Shimizu2011), wherein they were asked to remember and repeat random sequences of nonwords that follow Japanese phonotactic rules. The test consisted of 20 trials; there were five trials for each set size of one, two, three, or four nonwords. For instance, in a four-nonword trial, participants heard and repeated four nonwords (e.g., tesaya, nimika, kekayu, yuteka). Credit was given for each correctly repeated nonword, and the total number of successful repetitions (with 50 as the maximum) was used as a PSTM score, indicating how well participants store phonological information and articulate the words. Cronbach's alpha was .77.

Complex working memory

Participants completed an automated operation span test, wherein they were asked to remember a sequence of alphabetical letters while solving math problems under dual task conditions (Unsworth, Heitz, Schrock & Engle, Reference Unsworth, Heitz, Schrock and Engle2005). For each trial, participants first answered whether the solution of an equation was correct (e.g., (1*2) + 1 = 3), and then remembered a letter of the alphabet. After each set was completed, participants selected the letters in the order in which they were presented. There were 15 sets, with set sizes ranging from 3 to 7 letters, and the total number of letters was 75 (3, 3, 3, 4, 4, 4, 5, 5, 5, 6, 6, 6, 7, 7, 7). The possible maximum score was 75. Cronbach's alpha was .721.

Declarative memory

Participants completed the LLAMA-B test (Meara, Reference Meara2005), which has been used as a behavioural measure of declarative memory (Morgan-Short et al., Reference Morgan-Short, Faretta-Stutenberg, Brill-Schuetz, Carpenter and Wong2014). In this paired associates task, participants were asked to remember the combinations of names and shapes of 20 objects. The participants first learned as many words as possible within two minutes by clicking on images to display the names of various objects. In the following testing phase, they were asked to link the names of randomly chosen objects with the correct pictures. The possible maximum score was 20. Cronbach's alpha was .75.

Procedural memory

Participants completed a statistical serial reaction time task, which has been used as a behavioural measure of procedural memory (Kaufman et al., Reference Kaufman, DeYoung, Gray, Jiménez, Brown and Mackintosh2010). In this task, participants saw a dot appearing at one of four locations on the computer screen and responded to it as quickly and accurately as possible by pressing the corresponding key. Unbeknownst to participants, the sequence of stimuli was generated by a probabilistic rule: 85% of the sequences followed the rule (probable sequence), whereas the other 15% of the sequences were generated by another rule (improbable sequence). There were eight blocks, and each block consisted of 120 trials, with 960 trials in total. The task was scored by subtracting the mean RTs to the probable sequences from those to the improbable sequences, which reflects the amount of learning. Split-half reliability was .538.
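The scoring rule above amounts to a difference of two means. The sketch below is illustrative only: the trial representation and function name are hypothetical, the reaction times are made-up values, and any trimming of errors or outlying RTs (not described here) is left out.

```python
from statistics import mean


def srt_learning_score(trials):
    """Mean RT on improbable trials minus mean RT on probable trials.

    `trials` is an iterable of (is_probable, rt_ms) pairs; larger positive
    scores indicate more learning of the probabilistic rule.
    """
    trials = list(trials)
    probable = [rt for is_probable, rt in trials if is_probable]
    improbable = [rt for is_probable, rt in trials if not is_probable]
    return mean(improbable) - mean(probable)


# Made-up reaction times in milliseconds.
example = [(True, 400), (True, 420), (False, 470), (False, 450)]
learning = srt_learning_score(example)  # 460 - 410 = 50
```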

L2 speech perception measures

To measure participants’ L2 speech perception proficiency, we administered a two-alternative forced-choice identification test developed by Slevc and Miyake (Reference Slevc and Miyake2006). The test comprised a total of 51 minimal pairs (e.g., late vs. rate; filling vs. feeling), wherein participants were asked to distinguish a range of consonants (e.g., [r] vs. [l]) and vowels (e.g., [ɪ] vs. [i]) that are particularly difficult for Japanese learners of English.

There are sizeable phonological differences between Japanese and General American English vowels (English: 15; Japanese: 5) and consonants (English: 24; Japanese: 14). Thus, Japanese speakers have difficulty noticing, learning, and processing the English phonological contrasts absent in the L1 phonetic systems. Given these differences between Japanese and English phonology, Saito (Reference Saito2014) provided a comprehensive overview on why these English contrasts were problematic for Japanese listeners:

• Vowels (Monophthongs): Japanese listeners perceive low front vowel [æ], central mid vowel [ʌ], and low back vowel [ɑ] as a single vowel category because Japanese has only one counterpart, low mid vowel [a] (e.g., Nishi & Kewley-Port, Reference Nishi and Kewley-Port2007).

• Vowels (Diphthongs): Although English diphthongs are pronounced within a single syllable, Japanese listeners tend to substitute English diphthongs with long vowels ([boot] for [bout]) or mispronounce them with two distinguished syllables ([ka] + [u] for [kaʊ]) (Ohata, Reference Ohata2004).

• Liquids: Japanese listeners tend to perceive the English [l]-[r] contrast as the Japanese counterpart, alveolar tap [ɾ] (Iverson et al., Reference Iverson, Kuhl, Akahane-Yamada, Diesch, Tohkura, Kettermann and Siebert2003).

• Fricatives: Japanese has only three fricative sounds [s, z, h], resulting in difficulties for English-specific fricatives [f, v, θ, ð, s, z, ʃ, ʒ].

• Affricates: Japanese listeners have difficulties in perceiving [si] and [ti] because of the allophonic variations in the Japanese systems, /s/ → [ʃ] / i, /t/ → [tʃ] / i.

As described in Slevc and Miyake (Reference Slevc and Miyake2006), the test materials used in the current study featured most of the problematic English-specific phonological contrasts for Japanese listeners (for the test stimuli, see Supporting Information B, Supplementary Materials). The materials were read by a male native speaker of General American English and recorded at the sampling rate of 44.1 kHz with 16-bit quantization. Using headphones, participants listened to all the samples in a fixed order via MATLAB. For each trial, two alternatives were orthographically displayed on a computer screen, and participants were asked to choose which word they had heard.

Although the materials covered a list of the problematic English contrasts (summarized above), the number of presentations of each contrast was not equally distributed. The stimuli were played in a fixed order because we followed the procedure in Slevc and Miyake (Reference Slevc and Miyake2006). This could have resulted in an order effect. According to the results of corpus-based analyses, the target words comprised both frequent and infrequent words (1k to 15k frequency bands; Cobb, Reference Cobb2020). We did not conduct any further post-hoc analyses of the extent to which participants were familiar with each lexical item. Note that the word frequency and familiarity of stimuli may have affected listeners’ performance (Flege, Takagi, & Mann, Reference Flege, Takagi and Mann1996). Finally, the target stimuli were embedded in two different contexts (n = 26 in isolated words vs. n = 25 in sentences). To control for the phonological, lexical, and task status of each test item, a mixed-effects binomial logistic regression analysis was conducted. Participants’ performance per item (n = 51 items per participant) was used as the dependent variable, with item ID (1-51) and participant ID (1-70) as random effects (see the Results section).

Results

Relationships between auditory processing and memory profiles

As summarized in Supporting Information C (Supplementary Materials), participants’ auditory processing and memory abilities ranged widely. A normality test (Kolmogorov-Smirnov) found the composite auditory processing scores to show positive skewness (D = .101, p = .074), and so, following the procedure in the previous literature (e.g., Kachlicka et al., Reference Kachlicka, Saito and Tierney2019), the raw scores were submitted to a square root transformation. The resulting scores did not significantly deviate from the normal distribution (D = .097, p = .170).

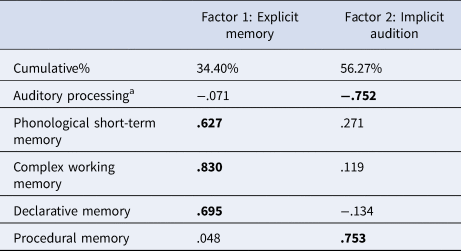

To examine the presence of any latent factors underlying the five perceptual-cognitive abilities (auditory processing, phonological short-term memory, complex working memory, declarative memory, and procedural memory), exploratory factor analysis was conducted with Varimax rotation. We confirmed the factorability of the entire dataset via two tests: Bartlett's test of sphericity (χ2 = 26.068, p = .004) and the Kaiser-Meyer-Olkin measure of sampling adequacy (.608). Only two factors had eigenvalues above 1.0. The model accounted for 56.27% of the total variance in the participants’ abilities. In line with Hair, Anderson, Tatham, and Black's (Reference Hair, Anderson, Tatham and Black1998) recommendations, the cut-off value for practically significant factor loadings was set to 0.4.
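For readers less familiar with the factorability check, Bartlett's statistic can be computed from the determinant of the correlation matrix. The sketch below uses the standard textbook formula; the function name and arguments are hypothetical, and the determinant values are illustrative rather than taken from the study's data.

```python
import math


def bartlett_sphericity(det_r, n_obs, n_vars):
    """Bartlett's chi-square and degrees of freedom.

    det_r  : determinant of the correlation matrix (1.0 means no correlations)
    n_obs  : number of participants
    n_vars : number of measured variables
    """
    chi_square = -((n_obs - 1) - (2 * n_vars + 5) / 6) * math.log(det_r)
    dof = n_vars * (n_vars - 1) // 2
    return chi_square, dof


# Five measures and 70 participants; the determinant 0.5 is a made-up value.
chi_square, dof = bartlett_sphericity(0.5, n_obs=70, n_vars=5)
```

With five measures the test has 10 degrees of freedom. A determinant closer to zero (stronger intercorrelations) yields a larger chi-square value.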

As shown in Table 2, Factor 1 could be considered to index participants’ explicit memory abilities, as the tasks (nonword repetition, operation span, paired associates) required them to remember, associate, and elaborate sensory information with awareness. Factor 2 could be interpreted as participants’ relatively implicit perception, as the tasks (auditory discrimination, serial reaction time) led them to detect and learn statistical distributions of numeral strings without awareness. Taken together, the findings suggest that whereas auditory discrimination performance can be considered an independent construct relative to explicit memory abilities, it may overlap with implicit statistical learning abilities.

Table 2. Summary of a Two-Factor Solution Based on a Factor Analysis of Auditory Processing and Memory Scores

Note. All loadings > .4 are highlighted in bold. ᵃ Lower values indicate more precise auditory processing.

Auditory processing, memory, experience, and L2 speech proficiency

The next objective of the statistical analyses was to examine whether, to what degree, and how auditory processing alone can explain the variance in participants’ L2 speech perception proficiency when their cognitive and experience profiles were statistically controlled for. According to the descriptive results of participants’ identification scores, their L2 speech proficiency varied widely (M = 71.0%, SD = 7.6; Range = 49.0–88.2). To control for the random effects of participants’ performance per item, a mixed-effects binomial logistic regression analysis was performed using the lmer functions from the lme4 package (Version 1.1-21; Bates, Maechler, Bolker, & Walker, Reference Bates, Maechler, Bolker, Walker, Christensen, Singmann and Grothendieck2011) in the R statistical environment.

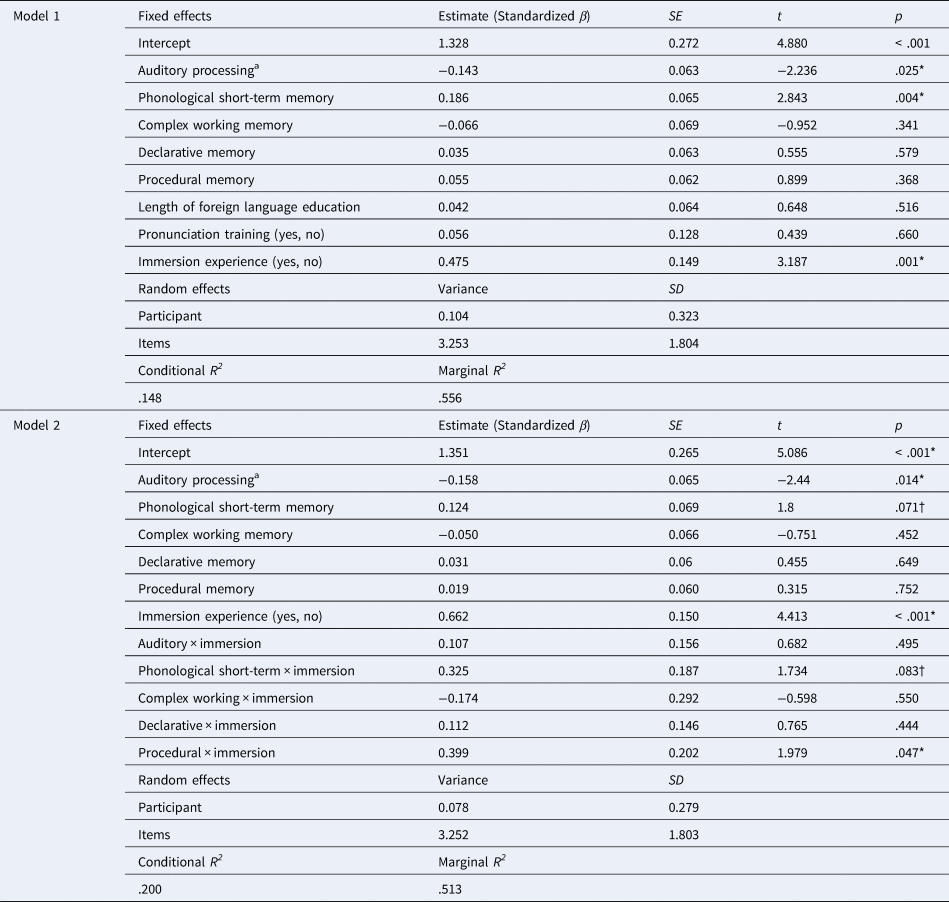

Participants’ binary accuracy scores for each stimulus (0 for incorrect, 1 for correct) were used as the dependent variable. Fixed effects included one auditory processing factor (composite auditory processing scores), four memory factors (phonological short-term memory, complex working memory, declarative memory, procedural memory), and three experience factors (length of foreign language education, pronunciation training, immersion experience). The two binary predictors (the presence/absence of pronunciation training and immersion experience) were treatment-coded. Variance Inflation Factor values among the eight predictors did not indicate multicollinearity problems (1.180-1.416). Participant ID (1-70) and stimulus ID (1-51) were entered as random effects.
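One way to write out the model just described is given below. This is a sketch under stated assumptions: it assumes random intercepts only (no random slopes), and the predictor abbreviations are introduced here for illustration (AP = auditory processing, PSTM = phonological short-term memory, WM = complex working memory, DM = declarative memory, PM = procedural memory, FLE = length of foreign language education, PT = pronunciation training, IMM = immersion experience). Here $y_{ij}$ is the accuracy of participant $i$ (1 to 70) on stimulus $j$ (1 to 51).

```latex
\begin{aligned}
\operatorname{logit} \Pr(y_{ij} = 1) ={}& \beta_0 + \beta_1\,\mathrm{AP}_i + \beta_2\,\mathrm{PSTM}_i
  + \beta_3\,\mathrm{WM}_i + \beta_4\,\mathrm{DM}_i + \beta_5\,\mathrm{PM}_i \\
& + \beta_6\,\mathrm{FLE}_i + \beta_7\,\mathrm{PT}_i + \beta_8\,\mathrm{IMM}_i + u_i + w_j, \\
u_i \sim{}& \mathcal{N}(0, \sigma_u^2), \qquad w_j \sim \mathcal{N}(0, \sigma_w^2)
\end{aligned}
```

The terms $u_i$ and $w_j$ are the participant and stimulus intercepts, which absorb overall differences in listener ability and item difficulty.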

The adequacy of the sample size was examined for the analysis involving cross-random factors of participants (N = 70) and stimuli (N = 51), resulting in a total of 3570 observations. In light of Westfall, Kenny and Judd's (Reference Westfall, Kenny and Judd2014) recommended procedure for calculating statistical power for a crossed design model, to reach a medium effect size of d = 0.5, the model resulted in relatively strong power, .990. The figure here substantially exceeds Larson-Hall's (2015) field-specific benchmark, .700.

As summarized in Table 3, Model 1 explained 14.8% of the variance in participants’ L2 speech proficiency. Auditory processing, phonological short-term memory, and the immersion experience factor were identified as significant predictors. According to the standardized β values, the results indicated that participants’ L2 speech proficiency was primarily determined by immersion experience (β = .475), followed by phonological short-term memory (β = .186) and auditory processing (β = −.143).

Table 3. Summary of the Mixed-Effects Model Explaining the Perceptual, Cognitive, and Biographical Correlates of L2 Speech Perception Proficiency

Note. * indicates p < .05; † indicates p < .10. ᵃ Lower values indicate more precise auditory processing.

Given that the immersion experience factor (yes or no) was found to be the strongest predictor of L2 speech learning, it was possible that the perceptual and cognitive correlates of L2 speech learning differed between those with and without such experience. To further explore this, Model 2 was constructed to include both main and interaction terms. According to the results (see Table 3), whereas auditory processing remained significant (β = −.158, p = .014), the main and interaction effects of phonological short-term memory were marginally significant (β = .124, .187, p = .071, .083). Interestingly, the interaction effect of procedural memory became significant (β = .202, p = .047). The results of post-hoc Spearman correlation analyses showed that procedural memory was significantly associated with experienced participants’ L2 speech proficiency (r = .584, p = .009), whereas the relationship was not significant among inexperienced participants (r = .106, p = .459).

In sum, the findings suggest (a) that auditory processing could make a unique contribution to the outcomes of L2 speech learning even after learners’ individual differences in immersion experience and memory abilities were controlled for; and (b) that the predictive power of cognitive abilities (phonological short-term memory, procedural memory) tends to be clear especially when the analyses focus on students with immersion experience. The relationship between participants’ auditory processing and proficiency scores (with and without the experience-related and cognitive factors) is visually summarized in Figure 1.

Fig. 1. Scatterplots displaying the simple correlation between L2 speech proficiency and auditory processing (Left) and the partial correlation between L2 speech proficiency and auditory processing when the variables related to experience (immersion) and cognitive abilities (phonological short-term memory, complex working memory, declarative memory, and procedural memory) are controlled for (Right). Lower auditory processing scores indicate more precise auditory acuity.

Discussion

An emerging paradigm suggests that individual differences in auditory processing play a key role in determining the rate and ultimate attainment of post-pubertal L2 speech learning (Kachlicka et al., Reference Kachlicka, Saito and Tierney2019; Saito & Tierney, Reference Saito and Tierneyforthcoming; Sun et al., Reference Sun, Saito and Tierney2021). Examining 70 college-level Japanese speakers of English with varied experience and proficiency levels, the current investigation explored whether auditory processing overlaps with or differs from other cognitive abilities (working, declarative, and procedural memory), and the extent to which auditory processing can uniquely explain the outcomes of L2 speech learning when participants’ memory and experience profiles are factored out.

Constructs of auditory processing

The results of the factor analyses demonstrated that participants’ auditory processing scores clustered with implicit statistical learning abilities (measured via serial reaction time) but were distinguishable from explicit memory abilities (measured via nonword repetition, operation span, and paired associates). The results here lend empirical support to the view that auditory processing could be a multifaceted phenomenon, as the task design used to assess this ability (adaptive A × B discrimination with a fixed baseline) may tap into other cognitive abilities (Snowling, Gooch, McArthur & Hulme, Reference Snowling, Gooch, McArthur and Hulme2018). Specifically, our results suggest that the auditory discrimination task may represent one's broader abilities not only to perceive subtle acoustic differences (i.e., auditory acuity) but also to detect and integrate the statistical distributions of target vs. anchor stimuli (i.e., implicit cognition; Ahissar et al., Reference Ahissar, Lubin, Putter-Katz and Banai2006).

In the discrimination task, one baseline stimulus was used as an anchor and played on every trial. As participants had more opportunities to compare the acoustic differences and similarities between the anchor and target stimuli, they were induced to engage in statistical learning of the acoustic properties of the prototype so that they could discriminate it from the target stimuli more accurately and promptly. In essence, our findings are in line with Raviv et al.'s hypothesis that auditory processing and implicit cross-trial statistical learning are interwoven to some degree (Raviv et al., Reference Raviv, Ahissar and Loewenstein2012; Jaffe-Dax et al., Reference Jaffe-Dax, Frenkel and Ahissar2017).

Another crucial point is that what was measured as auditory processing via the discrimination task (auditory acuity, implicit cognition) was independent of participants’ abilities to remember, analyze, and elaborate on sensory information with awareness (explicit memory). This is arguably because the nature of the task (A × B discrimination) placed only limited demands on short-term memory capacity, given the relatively short length of each sample (500 ms). Thus, it is reasonable to say that the auditory perception ability measured via the discrimination task could be theoretically distinct from explicit working and declarative memory (but somewhat overlapping with implicit procedural memory).

Auditory processing, memory, experience & L2 speech acquisition

Not surprisingly, the results of mixed-effects modeling analyses showed that Japanese university students’ L2 outcomes were mainly determined by the presence of study-abroad experience, as reported in the existing literature (e.g., Muñoz, Reference Muñoz2014). However, the remaining variance was associated with participants’ perceptual-cognitive aptitude profiles, including auditory processing (Kachlicka et al., Reference Kachlicka, Saito and Tierney2019), phonological short-term memory (Darcy et al., Reference Darcy, Park and Yang2015), and procedural memory (Linck et al., Reference Linck, Hughes, Campbell, Silbert, Tare, Jackson, Smith, Bunting and Doughty2013). Crucially, auditory processing remained a significant predictor even after the experience and memory factors were partialled out. The findings here support the emerging paradigm that auditory processing uniquely relates to acquisition regardless of participants’ experience and cognitive states (Kachlicka et al., Reference Kachlicka, Saito and Tierney2019). Given that domain-general auditory processing has been ignored in the existing language aptitude frameworks (e.g., Hi-Lab for Linck et al., Reference Linck, Hughes, Campbell, Silbert, Tare, Jackson, Smith, Bunting and Doughty2013), future researchers are strongly encouraged to include both auditory processing and cognitive abilities in test batteries, in order to provide a more comprehensive picture of the aptitude effects in post-pubertal L2 speech learning (cf. Zheng, Saito & Tierney, Reference Zheng, Saito and Tierney2021 for music aptitude vs. auditory processing).

It is noteworthy that the interaction effects of immersion experience were found to be significant for procedural memory and marginally significant for phonological short-term memory (but not for auditory processing). The results suggest that these cognitive abilities matter for L2 speech acquisition especially when learners engage with more conversational and communicatively authentic input in immersion settings, where they are encouraged not only to detect but also to access/use auditory information with interlocutors on a regular basis (Faretta-Stutenberg & Morgan-Short, Reference Faretta-Stutenberg and Morgan-Short2018). At the same time, however, these findings should be interpreted somewhat cautiously. There is a growing amount of evidence showing that auditory processing is a primary determinant of post-pubertal L2 speech learning in an interactive, meaningful and immersive setting, but not necessarily in a form-oriented, foreign language classroom context (Saito et al., Reference Saito, Sun and Tierney2020b; Saito et al., Reference Saito, Suzukida, Tran and Tierney2021a, Reference Saito, Sun, Kachlicka, Robert, Nakata and Tierney2021b). The findings on this topic (i.e., the perceptual and cognitive correlates of L2 speech acquisition) need to be replicated, especially among more advanced L2 learners with ample immersion experience (cf. Saito et al., Reference Saito, Kachlicka, Sun and Tierney2020a).

Future directions

The current study derived two tentative conclusions: (a) that auditory perception skills were a relatively independent construct compared to other cognitive abilities (e.g., working, declarative, and procedural memory); and (b) that both perception and cognition skills serve as two separate constructs of aptitude relevant to post-pubertal L2 speech proficiency. Given the exploratory nature of the current project, there is a range of promising directions that future studies can pursue to disentangle the complex relationship between auditory processing, cognitive abilities, experience, and L2 speech acquisition.

First, although auditory processing was measured only behaviourally in the current study, some scholars have proposed the use of electrophysiological measures to assess participants’ neural encoding of synthesized sounds at preconscious levels, finding preliminary links to a range of L2 proficiency outcomes (e.g., Kachlicka et al., Reference Kachlicka, Saito and Tierney2019; Saito et al., Reference Saito, Sun and Tierney2019, Reference Saito, Kachlicka, Sun and Tierney2020a; Sun et al., Reference Sun, Saito and Tierney2021). It would be intriguing to investigate to what degree the neural and behavioural assessments of auditory processing differentially relate to a range of executive functions and L2 skills.

Second, following the theoretical and methodological paradigms in the existing literature (e.g., Flege & Bohn, Reference Flege and Bohn2021), a forced-choice identification task was adopted to index participants’ L2 speech proficiency. However, although segmental perception is thought to serve as a cornerstone of acquisition, L2 speech proficiency can be characterized as a multilayered phenomenon that needs to be examined from multiple angles (e.g., perception vs. production; segmentals vs. suprasegmentals; controlled vs. spontaneous; phoneme vs. word levels; see Saito & Plonsky, Reference Saito and Plonsky2019 for a conceptual summary). Thus, future studies can further delve into how learners with diverse auditory and cognitive aptitude not only perceive but also produce L2 sounds: at various processing levels (controlled vs. spontaneous); at various time scales (segmental vs. suprasegmental); and in various contexts (frequent vs. infrequent words).

One limitation resulting from our use of a forced-choice perception task is that this method cannot assess the extent to which listeners perceive differences between speech sounds in a gradient versus categorical manner. Electrophysiology research suggests that, across listeners, continuous information about acoustic characteristics of speech sounds – such as voice onset time – is retained both at perceptual and post-perceptual stages (Toscano, McMurray, Dennhardt & Luck, Reference Toscano, McMurray, Dennhardt and Luck2010). However, there are individual differences between listeners in the degree to which they retain continuous information about speech, with some perceiving speech sound contrasts in a more categorical manner and others in a more gradient manner. These individual differences in gradient perception can be revealed with a Visual Analogue Scaling (VAS) task, in which participants are asked to rate the extent to which a sound resembles one of two speech sounds on a continuous scale: participants who only use the ends of the scale perceive speech more categorically, while participants who use the entire range more or less equally are more gradient perceivers (Kapnoula, Winn, Kong, Edwards & McMurray, Reference Kapnoula, Winn, Kong, Edwards and McMurray2017; Kapnoula & McMurray, Reference Kapnoula and McMurray2021). More gradient perceivers make more flexible use of acoustic information when perceiving speech: they are more likely to integrate across multiple cues and are better able to recover from misleading information (Kapnoula, Edwards & McMurray, Reference Kapnoula, Edwards and McMurray2021). More gradient listeners, therefore, may be better able to formulate new perceptual strategies appropriate for their L2, despite having already developed alternate perceptual strategies tuned to their L1. This hypothesis could be tested in future research using the VAS task.

Third, the current study focused on the three experience-related variables – i.e., length of instruction, study-abroad, and pronunciation instruction. However, future studies can further survey precisely how often EFL learners are exposed to the target language on a daily basis per modality (listening, speaking, reading, and writing) and setting (social, home, and school). In order to design such multilayered research, scholars need to approach this topic from both quantitative and qualitative paradigms by using not only retrospective questionnaires but also daily learning log instruments (cf. Ranta & Meckelborg, Reference Ranta and Meckelborg2013).

Fourth, most of the perceptual-cognitive abilities in this study could be considered domain-general (with the exception of phonological short-term memory). With a view towards a full-fledged understanding of the mechanisms underlying advanced L2 speech acquisition, future research can examine how domain-general aptitude is related to domain-specific aptitude (e.g., phonemic coding; Saito et al., Reference Saito, Sun and Tierney2019), and how both domain-general and domain-specific measures can predict the incidence of high-level L2 proficiency (Campbell & Tyler, Reference Campbell and Tyler2018). Fifth, it needs to be acknowledged that most of the literature (including the current investigation) is cross-sectional in nature. To establish a causal relationship between auditory processing, cognitive abilities, experience, and L2 speech learning, a longitudinal study is strongly called for. One promising direction concerns aptitude-treatment interaction: future studies can investigate how those with different auditory and cognitive aptitude can differentially enhance both perception and production skills when they are immersed in an L2 speaking environment for a prolonged period of time (e.g., Sun et al., Reference Sun, Saito and Tierney2021), and/or when they receive different types of instruction (e.g., Perrachione et al., Reference Perrachione, Lee, Ha and Wong2011 for perception-based training [high variability phonetic training]; Shao, Saito & Tierney, Reference Shao, Saito and Tierney2022 for production-based training [shadowing]).

Supplementary Material

For supplementary material accompanying this paper, visit https://doi.org/10.1017/S1366728922000153

Acknowledgments

We gratefully acknowledge insightful comments from anonymous Bilingualism: Language and Cognition reviewers on earlier versions of the manuscript. This research was supported by two external grants from ESRC-AHRC UK-Japan SSH Connections Grant (ES/S013024/1; awarded to Saito, Tierney, Révész, Suzuki, Jeong, & Sugiura) and Leverhulme Research Grant (RPG-2019-039; awarded to Saito & Tierney), and two internal grants from the Graduate School of International Cultural Studies (awarded to Jeong), and the Institute of Development, Aging, and Cancer, Tohoku University (awarded to Saito, Sugiura, and Jeong).