1 Introduction

We consider nonlinear dispersive equations

$$ \begin{align} \begin{split} & i \partial_t u(t,x) + \mathcal{L}\left(\nabla, \tfrac{1}{\varepsilon}\right) u(t,x) =\vert \nabla\vert^{\alpha} p\left(u(t,x), \overline{u}(t,x)\right)\\ & u(0,x) = v(x), \end{split} \end{align} $$

where we assume a polynomial nonlinearity p and that the structure of (1) implies at least local well-posedness of the problem on a finite time interval $]0,T]$, $T<\infty$, in an appropriate functional space. Here, u is the complex-valued solution that we want to approximate. Concrete examples are discussed in Section 5, including the cubic nonlinear Schrödinger (NLS) equation

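$$ \begin{align} i \partial_t u +\mathcal{L}\left(\nabla\right) u = \vert u\vert^2 u, \quad \mathcal{L}\left(\nabla\right) = \Delta, \end{align} $$

the Korteweg–de Vries (KdV) equation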

$$ \begin{align} \partial_t u +\mathcal{L}\left(\nabla\right) u = \frac12 \partial_x u^2, \quad \mathcal{L}\left(\nabla\right) = i\partial_x^3, \end{align} $$

as well as highly oscillatory Klein–Gordon type systems

$$ \begin{align} i \partial_t u = -\mathcal{L}\left(\nabla, \tfrac{1}{\varepsilon}\right) u + \frac{1}{\varepsilon^2}\mathcal{L}\left(\nabla, \tfrac{1}{\varepsilon}\right)^{-1} \textstyle p(u,\overline{u}), \quad \mathcal{L}\left(\nabla, \tfrac{1}{\varepsilon}\right) = \frac{1}{\varepsilon}\sqrt{\frac{1}{\varepsilon^2}-\Delta}. \end{align} $$

In the last decades, Strichartz and Bourgain space estimates allowed establishing well-posedness results for dispersive equations in low regularity spaces [Reference Burq, Gérard and Tzvetkov15, Reference Bourgain9, Reference Keel and Tao58, Reference Strichartz77, Reference Tao78]. Numerical theory for dispersive partial differential equations (PDEs), on the other hand, is in general still restricted to smooth solutions. This is due to the fact that most classical approximation techniques were originally developed for linear problems and thus, in general, neglect nonlinear frequency interactions in a system. In the dispersive setting (1) the interaction of the differential operator

![]() $\mathcal {L}$

with the nonlinearity p, however, triggers oscillations both in space and in time and, unlike for parabolic problems, no smoothing can be expected. At low regularity and high oscillations, these nonlinear frequency interactions play an essential role: Note that while the influence of

$\mathcal {L}$

with the nonlinearity p, however, triggers oscillations both in space and in time and, unlike for parabolic problems, no smoothing can be expected. At low regularity and high oscillations, these nonlinear frequency interactions play an essential role: Note that while the influence of

![]() $i\mathcal {L}$

can be small, the influence of the interaction of

$i\mathcal {L}$

can be small, the influence of the interaction of

![]() $+i\mathcal {L}$

and

$+i\mathcal {L}$

and

![]() $-i\mathcal {L}$

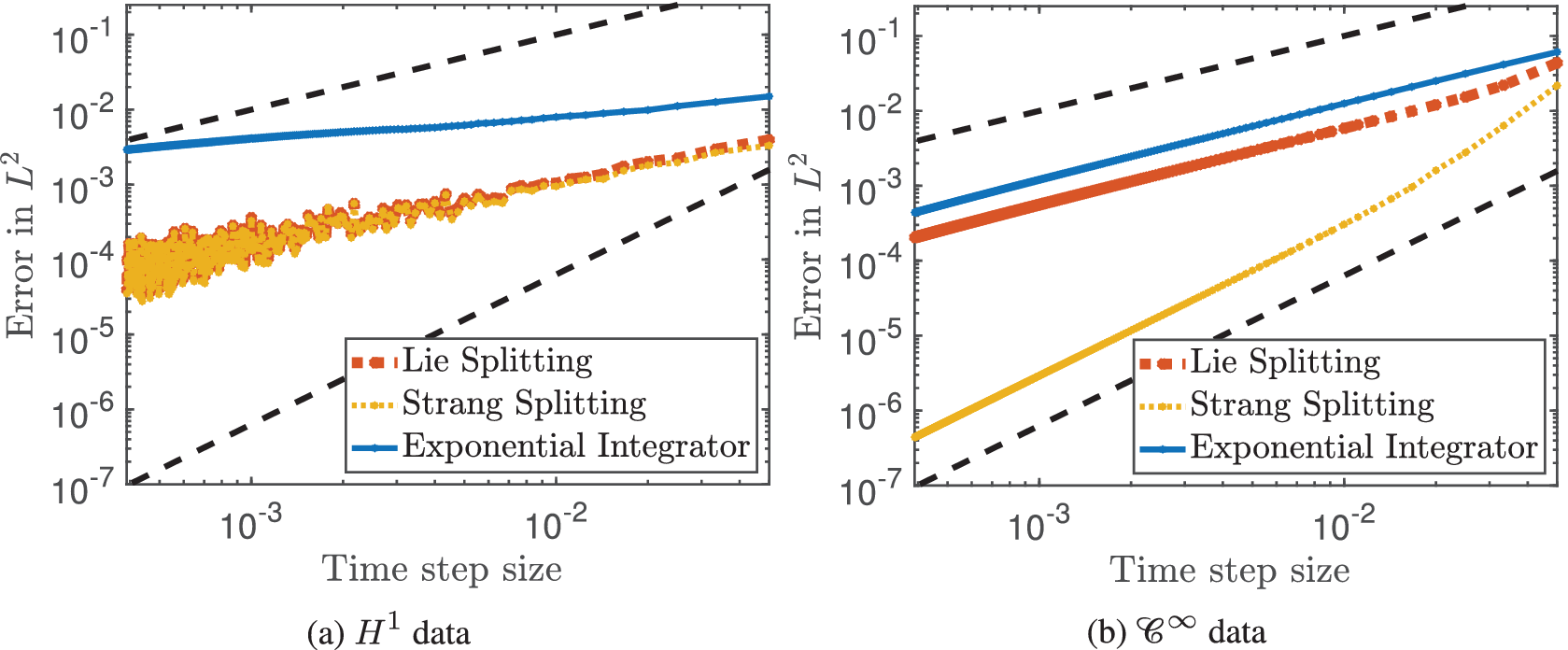

can be huge and vice versa. Classical linearised frequency approximations, used, for example, in splitting methods or exponential integrators (see Table 1) are therefore restricted to smooth solutions. The latter is not only a technical formality: The severe order reduction in case of nonsmooth solutions is also observed numerically (see, e.g., [Reference Jahnke and Lubich57, Reference Ostermann and Schratz72] and Figure 2), and only very little is known on how to overcome this issue. For an extensive overview on numerical methods for Hamiltonian systems, geometric numerical analysis, structure preserving algorithms and highly oscillatory problems we refer to the books Butcher [Reference Butcher17], Engquist et al. [Reference Engquist, Fokas, Hairer and Iserles36], Faou [Reference Faou37], E. Hairer et al. [Reference Hairer, Nørsett and Wanner46, Reference Hairer, Lubich and Wanner45], Holden et al. [Reference Holden, Karlsen, Lie and Risebro51], Leimkuhler and Reich [Reference Leimkuhler and Reich61], McLachlan and Quispel [Reference McLachlan and Quispel67], Sanz-Serna and Calvo [Reference Sanz-Serna and Calvo75] and the references therein.

$-i\mathcal {L}$

can be huge and vice versa. Classical linearised frequency approximations, used, for example, in splitting methods or exponential integrators (see Table 1) are therefore restricted to smooth solutions. The latter is not only a technical formality: The severe order reduction in case of nonsmooth solutions is also observed numerically (see, e.g., [Reference Jahnke and Lubich57, Reference Ostermann and Schratz72] and Figure 2), and only very little is known on how to overcome this issue. For an extensive overview on numerical methods for Hamiltonian systems, geometric numerical analysis, structure preserving algorithms and highly oscillatory problems we refer to the books Butcher [Reference Butcher17], Engquist et al. [Reference Engquist, Fokas, Hairer and Iserles36], Faou [Reference Faou37], E. Hairer et al. [Reference Hairer, Nørsett and Wanner46, Reference Hairer, Lubich and Wanner45], Holden et al. [Reference Holden, Karlsen, Lie and Risebro51], Leimkuhler and Reich [Reference Leimkuhler and Reich61], McLachlan and Quispel [Reference McLachlan and Quispel67], Sanz-Serna and Calvo [Reference Sanz-Serna and Calvo75] and the references therein.

Table 1 Classical frequency approximations of the principal oscillations (7).

In this work, we establish a new framework of resonance-based approximations for dispersive equations. It allows us to approximate a large class of equations with high-order accuracy under (much) lower regularity assumptions than classical techniques require. The key to constructing the new methods lies in analysing the underlying oscillatory structure of the system (1). We look at the corresponding mild solution given by Duhamel's formula

$$ \begin{align} u(t) = e^{ it \mathcal{L}\left(\nabla, \frac{1}{\varepsilon}\right)} v - ie^{ it \mathcal{L}\left(\nabla, \frac{1}{\varepsilon}\right)}\vert \nabla\vert^\alpha \int_0^t e^{ -i\xi \mathcal{L}\left(\nabla, \frac{1}{\varepsilon}\right)} p\left(u(\xi), \overline{u}(\xi)\right) d\xi \end{align} $$

and its iterations

$$ \begin{align} u(t) = e^{ it \mathcal{L}\left(\nabla, \frac{1}{\varepsilon}\right)} v - ie^{ it \mathcal{L}\left(\nabla, \frac{1}{\varepsilon}\right)} \vert \nabla\vert^\alpha\mathcal{I}_1( t, \mathcal{L},v,p) +\vert \nabla\vert^{2\alpha} \int_0^t \int_0^\xi \ldots d\xi_1 d \xi. \end{align} $$
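
As a small consistency check of Duhamel's formula (5), consider the cubic NLS in the form $i\partial_t u + \Delta u = \vert u\vert^2 u$ on $\mathbf{T}$. This is (1) with $\alpha = 0$, $\mathcal{L} = \Delta$ (Fourier symbol $-m^2$) and $p(u,\overline{u}) = \vert u\vert^2 u$; this form is an assumption of the sketch. For a plane wave $v = a e^{imx}$, the exact solution is $u(t) = a e^{i(mx-(m^2+\vert a\vert^2)t)}$. The right-hand side of (5) can therefore be evaluated on the single mode $m$ by Gauss–Legendre quadrature and compared with $u(t)$. The function name `duhamel_rhs_plane_wave` is illustrative.

```python
import numpy as np

def duhamel_rhs_plane_wave(a, m, t, nodes=40):
    """Right-hand side of Duhamel's formula (5) on the Fourier mode m.

    Assumes i u_t + Delta u = |u|^2 u on the torus (alpha = 0, symbol of
    Delta is -m**2) and the plane-wave solution
    u(xi) = a * exp(i*(m*x - (m**2 + |a|**2)*xi)).
    """
    lam = -m**2                      # Fourier symbol of L = Delta at mode m
    omega = m**2 + abs(a)**2         # temporal frequency of the exact solution

    def u_hat(xi):                   # Fourier coefficient of u(xi) at mode m
        return a * np.exp(-1j * omega * xi)

    # Gauss-Legendre nodes/weights on [-1, 1], mapped to [0, t]
    x, w = np.polynomial.legendre.leggauss(nodes)
    xi = 0.5 * t * (x + 1.0)
    integrand = np.exp(-1j * xi * lam) * np.abs(u_hat(xi))**2 * u_hat(xi)
    integral = 0.5 * t * np.sum(w * integrand)

    return np.exp(1j * t * lam) * a - 1j * np.exp(1j * t * lam) * integral

a, m, t = 0.7 + 0.2j, 3, 0.5
exact = a * np.exp(-1j * (m**2 + abs(a)**2) * t)
print(abs(duhamel_rhs_plane_wave(a, m, t) - exact))  # close to machine precision
```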

The principal oscillatory integral $\mathcal{I}_1( t, \mathcal{L},v,p)$ thereby takes the form

$$ \begin{align*} \mathcal{I}_1( t, \mathcal{L},v,p) = \int_0^t \mathcal{O}\mathcal{s}\mathcal{c}(\xi, \mathcal{L}, v,p) d\xi \end{align*} $$

with the central oscillations

$$ \begin{align} \mathcal{O}\mathcal{s}\mathcal{c}(\xi, \mathcal{L}, v,p) = e^{ -i\xi \mathcal{L}\left(\nabla, \frac{1}{\varepsilon}\right)} p\left(e^{ i \xi \mathcal{L}\left(\nabla, \frac{1}{\varepsilon}\right)} v , e^{ - i \xi \mathcal{L}\left(\nabla, \frac{1}{\varepsilon}\right)} \overline{v} \right) \end{align} $$

driven by the nonlinear frequency interactions between the differential operator $\mathcal{L}$ and the nonlinearity p. To obtain a suitable approximation at low regularity, it is essential to resolve numerically these oscillations, which are characterised by the underlying structure of resonances. Classical linearised frequency approximations, however, neglect the nonlinear interactions in (7). This linearisation is illustrated in Table 1 for splitting and exponential integrator methods [Reference Hairer, Lubich and Wanner45, Reference Hochbruck and Ostermann49].
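
For comparison with the linearised approximations just mentioned, here is a minimal sketch of one classical method: a Lie splitting step for the one-dimensional cubic NLS. It assumes the form $i\partial_t u + \Delta u = \vert u\vert^2u$ on $[0,2\pi)$ and a Fourier pseudo-spectral discretisation with NumPy. It is an illustration only and is not claimed to coincide with the entries of Table 1. The nonlinear subflow $i\partial_t u = \vert u\vert^2 u$ is solved exactly, because it leaves $\vert u\vert$ unchanged pointwise. The linear subflow is applied as the Fourier multiplier $e^{-i\tau k^2}$. The function name `lie_splitting_step` is hypothetical.

```python
import numpy as np

def lie_splitting_step(u, tau, k):
    """One Lie splitting step u -> exp(i tau Delta) exp(-i tau |u|^2) u.

    u   : complex grid values on N equidistant points of [0, 2*pi)
    tau : time step
    k   : integer wavenumbers in FFT ordering
    """
    u = np.exp(-1j * tau * np.abs(u) ** 2) * u                      # exact nonlinear flow
    return np.fft.ifft(np.exp(-1j * tau * k**2) * np.fft.fft(u))    # exact linear flow

N = 32
x = 2 * np.pi * np.arange(N) / N
k = np.fft.fftfreq(N, d=1.0 / N)        # 0, 1, ..., N/2-1, -N/2, ..., -1
a, m, T, n_steps = 0.5, 2, 1.0, 100
u = a * np.exp(1j * m * x)
for _ in range(n_steps):
    u = lie_splitting_step(u, T / n_steps, k)
exact = a * np.exp(1j * (m * x - (m**2 + abs(a)**2) * T))
print(np.max(np.abs(u - exact)))
```

For a single plane wave the two subflows commute, so the splitting reproduces the exact solution up to rounding. The order reduction shown in Figure 2 concerns rough data with many interacting frequencies, which this toy test does not probe.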

The aim of this article is to introduce a framework which allows us to embed the underlying nonlinear oscillations (7) and their higher-order counterparts into the numerical discretisation. The main idea for tackling this problem is to introduce a decorated tree formalism that optimises the structure of the local error by mapping it to the particular regularity of the solution.

First-order resonance-based discretisations have been presented for particular equations, such as the nonlinear Schrödinger (NLS), Korteweg–de Vries (KdV), Boussinesq, Dirac and Klein–Gordon equations (see [Reference Hofmanová and Schratz50, Reference Baumstark, Faou and Schratz4, Reference Baumstark and Schratz5, Reference Ostermann and Schratz72, Reference Ostermann and Su73, Reference Schratz, Wang and Zhao76]). However, no general framework has been established so far. Each equation had to be treated individually on the basis of a sophisticated resonance analysis. This is because the structure of the underlying oscillations (7) strongly depends on the form of the leading operator $\mathcal{L}$, the nonlinearity p and, in particular, their nonlinear interactions.

In addition to the lack of a general framework, very little is known about higher-order resonance-based discretisations. Some attempts have been made for second-order schemes (see, e.g., [Reference Hofmanová and Schratz50] for KdV and [Reference Knöller, Ostermann and Schratz60] for NLS), but they are not optimal. This is because the leading differential operator $\mathcal{L}$ triggers a full spectrum of frequencies $k_j \in {\mathbf{Z}}^{d}$. Until now, it has been an unresolved issue how to control their nonlinear interactions up to higher order, in particular in higher spatial dimensions, where stability poses a key problem. Even for a simple NLS equation, it is an open question whether stable low regularity approximations of order higher than one can be achieved in spatial dimensions $d\geq 2$. In fact, previous works suggest a severe order reduction [Reference Knöller, Ostermann and Schratz60].

To overcome this, we introduce a new tailored decorated tree formalism. The decorated trees encode the Fourier coefficients in the iterations of Duhamel's formula, and the node decorations encode the frequencies; this is close in spirit to [Reference Christ27, Reference Guo, Kwon and Oh44, Reference Gubinelli43]. The main difficulty then lies in controlling the nonlinear frequency interactions within these iterated integrals up to the desired order, under the constraint of a given a priori regularity of the solution. This is achieved by embedding the underlying oscillations, and their higher-order iterations, into our formalism via well-chosen Taylor series expansions. The dominant interactions are embedded exactly, whereas only the lower-order parts are approximated within the discretisation.
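
The role of the frequency decorations can be illustrated with a toy encoding, which is not the formalism of Section 2. It uses a tree whose leaves carry frequencies $k_j$ and a conjugation flag, and in which each inner node carries the signed sum of its children's frequencies. Take $\mathcal{L}=\Delta$ (symbol $-k^2$). Multiplying the linear flows of the children and pulling back by $e^{-i\xi\mathcal{L}}$ creates a local phase $k^2 - \sum_c s_c k_c^2$ at the node, with $s_c = -1$ for conjugated children and $s_c = +1$ otherwise. For the first Duhamel iterate of the cubic NLS, this recovers the frequency $-k_1+k_2+k_3$ and the resonance function (11). The class `Node` and its methods are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class Node:
    """Toy frequency-decorated tree (hypothetical, for illustration only).

    A leaf carries a frequency k; an inner node carries the signed sum of the
    frequencies of its children. conj=True marks a complex-conjugated factor.
    """
    k: int = 0
    conj: bool = False
    children: List["Node"] = field(default_factory=list)

    def sign(self) -> int:
        return -1 if self.conj else 1

    def frequency(self) -> int:
        if not self.children:
            return self.k
        return sum(c.sign() * c.frequency() for c in self.children)

    def local_phase(self) -> int:
        """k^2 - sum_c s_c k_c^2 for the linear flow exp(i xi Delta) (symbol -k^2)."""
        k = self.frequency()
        return k**2 - sum(c.sign() * c.frequency()**2 for c in self.children)

def resonance_F(k1, k2, k3):
    """Resonance structure (11) of the cubic NLS."""
    return 2 * k1**2 - 2 * k1 * (k2 + k3) + 2 * k2 * k3

# first Duhamel iterate: conj(v)_{k1} * v_{k2} * v_{k3}
tree = Node(children=[Node(k=4, conj=True), Node(k=-1), Node(k=2)])
print(tree.frequency(), tree.local_phase(), resonance_F(4, -1, 2))  # -3 20 20
```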

We base our algebraic structures on those developed for stochastic partial differential equations (SPDEs) with regularity structures [Reference Hairer47], which generalise rough paths [Reference Lyons63, Reference Lyons64, Reference Gubinelli41, Reference Gubinelli42]. Part of the formalism is inspired by [Reference Bruned, Hairer and Zambotti13] and the recentring map used to give a local description of the solution of singular SPDEs. We adapt it to the context of dispersive PDEs by using a new class of decorated trees encoding the underlying dominant frequencies.

The framework of decorated trees and the underlying Hopf algebras has allowed the resolution of a large class of singular SPDEs [Reference Hairer47, Reference Bruned, Hairer and Zambotti13, Reference Chandra and Hairer22, Reference Bruned, Chandra, Chevyrev and Hairer11]. These include a natural random dynamic on the space of loops in a Riemannian manifold [Reference Bruned, Gabriel, Hairer and Zambotti12]; see [Reference Bruned, Hairer and Zambotti14] for a brief survey of these developments. With this general framework, one can study properties of solutions of singular SPDEs in the full subcritical regime [Reference Chandra, Hairer and Shen23, Reference Berglund and Bruned6, Reference Hairer and Schönbauer48, Reference Chandra, Moinat and Weber24]. The formalism of decorated trees, together with the description of the renormalised equation in this context (see [Reference Bruned, Chandra, Chevyrev and Hairer11]), was directly inspired by the numerical analysis of ordinary differential equations (ODEs), more precisely, by the characterisation of Runge–Kutta methods via B-series. Indeed, B-series represent numerical (multi-)step methods for ODEs by tree expansions; see, for example, [Reference Butcher16, Reference Berland, Owren and Skaflestad7, Reference Chartier, Hairer and Vilmart26, Reference Hairer, Lubich and Wanner45, Reference Iserles, Quispel and Tse56, Reference Calaque, Ebrahimi-Fard and Manchon19]. We also refer to [Reference Munthe-Kaas and Føllesdal68] for a review of B-series on Lie groups and homogeneous manifolds, and to [Reference Murua and Sanz-Serna69], which provides an alternative structure via word series. The field of singular SPDEs took advantage of the B-series formalism and extended its structures by adjoining decorations and Taylor expansions. With this work, numerical analysis in turn takes advantage of these extended structures and enlarges their scope.
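
The tree characterisation of Runge–Kutta methods mentioned above can be made concrete through Butcher's order conditions. A Runge–Kutta method with coefficients $(A,b)$ has order $p$ if and only if its elementary weight $\Phi(t)$ equals $1/\gamma(t)$ for every rooted tree $t$ with at most $p$ nodes, where $\gamma$ denotes the tree factorial. The plain-Python sketch below checks these conditions for the classical fourth-order method, using exact rational arithmetic. It encodes a rooted tree as the tuple of its subtrees, an encoding chosen here for convenience.

```python
from fractions import Fraction

# Classical fourth-order Runge-Kutta method (Butcher tableau), exact rationals
A = [[Fraction(0), Fraction(0), Fraction(0), Fraction(0)],
     [Fraction(1, 2), Fraction(0), Fraction(0), Fraction(0)],
     [Fraction(0), Fraction(1, 2), Fraction(0), Fraction(0)],
     [Fraction(0), Fraction(0), Fraction(1), Fraction(0)]]
b = [Fraction(1, 6), Fraction(1, 3), Fraction(1, 3), Fraction(1, 6)]
s = len(b)

# A rooted tree is the tuple of its subtrees; () is the single node.
TREES = [(), ((),), ((), ()), (((),),),                        # orders 1 to 3
         ((), (), ()), ((), ((),)), (((), ()),), ((((),),),)]  # order 4

def size(t):
    return 1 + sum(size(c) for c in t)

def gamma(t):
    """Tree factorial gamma(t) = |t| * product of gamma over the subtrees."""
    g = size(t)
    for c in t:
        g *= gamma(c)
    return g

def stage_weights(t):
    """Phi_i(t) = prod over subtrees c of sum_j a_ij Phi_j(c), for each stage i."""
    w = [Fraction(1)] * s
    for c in t:
        wc = stage_weights(c)
        w = [w[i] * sum(A[i][j] * wc[j] for j in range(s)) for i in range(s)]
    return w

def elementary_weight(t):
    return sum(b[i] * wi for i, wi in enumerate(stage_weights(t)))

for t in TREES:
    print(size(t), elementary_weight(t), Fraction(1, gamma(t)))
```

The bushy tree with five nodes already violates the condition ($\sum_i b_i c_i^4 = 5/24 \neq 1/5$), consistent with the method having order exactly four.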

This work proposes a new application of the Butcher–Connes–Kreimer Hopf algebra [Reference Butcher16, Reference Connes and Kreimer31] to dispersive PDEs. It sheds new light on structures that have been used in various fields, such as numerical analysis, renormalisation in quantum field theory, singular SPDEs and dynamical systems, where singularities are classified via the resurgent functions introduced by Jean Ecalle (see [Reference Ecalle34, Reference Fauvet and Menous38]). This is further testimony to the universality of this structure and adds a new object to this landscape. Our construction is motivated by two main features. The first is the Taylor expansions at the foundation of the numerical scheme, added at the level of the algebra as for singular SPDEs. The second is the frequency interactions, encoded in a tree structure for dispersive PDEs. Combining the two with the Butcher–Connes–Kreimer Hopf algebra allows us to design a novel class of schemes at low regularity. We observe a Birkhoff type factorisation similar to those in SPDEs and perturbative quantum field theory. This factorisation allows us to single out oscillations and to perform the local error analysis.
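
For readers unfamiliar with the Butcher–Connes–Kreimer Hopf algebra, the following sketch computes its coproduct on undecorated rooted trees. It uses the standard recursion $\Delta(t) = t\otimes \mathbf{1} + (\mathrm{id}\otimes B_+)\Delta(B_-(t))$, where $B_-$ removes the root, $B_+$ grafts a forest onto a new root, and $\Delta$ is extended multiplicatively to forests. The left factor collects the pruned subtrees and the right factor the trunk containing the root. This is one common convention, and the decorated, Taylor-extended variants used in this work are not modelled. Trees are canonical nested tuples, forests are sorted tuples of trees, and the empty forest stands for the unit $\mathbf 1$. All names are illustrative.

```python
from collections import Counter

# A rooted tree is the sorted tuple of its subtrees; () is the single node.
# A forest is a sorted tuple of trees; the empty forest () stands for the unit 1.

def canon(trees):
    """Sort a tuple of trees (canonical forest, or children of a canonical tree)."""
    return tuple(sorted(trees))

def coproduct_forest(forest):
    """Delta extended multiplicatively to forests: Delta(t1 ... tn) = prod Delta(ti)."""
    result = Counter({((), ()): 1})                 # Delta(1) = 1 (x) 1
    for tree in forest:
        new = Counter()
        for (l1, r1), c1 in result.items():
            for (l2, r2), c2 in coproduct_tree(tree).items():
                new[(canon(l1 + l2), canon(r1 + r2))] += c1 * c2
        result = new
    return result

def coproduct_tree(tree):
    """Delta(t) = t (x) 1 + (id (x) B_+) Delta(B_-(t)); keys are (pruned forest, trunk forest)."""
    result = Counter({((tree,), ()): 1})            # t (x) 1
    for (left, right), c in coproduct_forest(tree).items():   # B_-(t) = children of t
        result[(left, (canon(right),))] += c        # B_+ grafts the right forest on a root
    return result

dot = ()
edge = (dot,)
cherry = (dot, dot)
for (left, right), c in sorted(coproduct_tree(cherry).items()):
    print(c, left, right)
```

For the cherry this yields $t\otimes\mathbf 1 + \mathbf 1\otimes t + 2\,\bullet\otimes[\bullet] + \bullet\bullet\otimes\bullet$, one term per admissible cut.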

Our main result is the new general resonance-based scheme presented in Definition 4.4, with its error structure given in Theorem 4.8. Our general framework is illustrated on concrete examples in Section 5, and simulations show the efficacy of the scheme. The algebraic structure in Section 2 is of independent interest; its main objective is to understand the frequency interactions. The Birkhoff factorisation given in Subsection 3.2 is designed for this purpose and is helpful in proving Theorem 4.8. This factorisation appears to be new in the literature.

Assumptions. We impose periodic boundary conditions, $x \in {\mathbf{T}}^d$. However, our theory can be extended to the full space ${\mathbf{R}}^d$. We assume that the differential operator $\mathcal{L}$ is real. We consider two types of structures of the system (1), which allow us to handle both dispersive equations at low regularity (such as NLS and KdV) and highly oscillatory Klein–Gordon type systems; see also (2)–(4).

- The differential operators $\mathcal{L}\left(\nabla, \frac{1}{\varepsilon}\right) = \mathcal{L}\left(\nabla\right)$ and $\vert \nabla \vert^\alpha$ take the following form in Fourier space:

  $$ \begin{align} \mathcal{L}\left(\nabla \right)(k) = k^\sigma + \sum_{\gamma : |\gamma| < \sigma} a_{\gamma} \prod_{j} k_j^{\gamma_j} ,\qquad \vert \nabla\vert^\alpha(k) = \prod_{ \gamma : |\gamma| {\leq \alpha}} k_j^{\gamma_j} \tag{8} \end{align} $$

  for some $\alpha \in {\mathbf{R}}$, $\gamma \in {\mathbf{Z}}^d$ and $|\gamma| = \sum_i \gamma_i$, where for $k = (k_1,\ldots,k_d)\in {\mathbf{Z}}^d$ and $m = (m_1, \ldots, m_d)\in {\mathbf{Z}}^d$ we set

  $$ \begin{align*} k^\sigma = k_1^\sigma + \ldots + k_d^\sigma, \qquad k \cdot m = k_1 m_1 + \ldots + k_d m_d. \end{align*} $$

- We also consider the setting of a given high frequency $\frac{1}{\vert \varepsilon \vert} \gg 1$. In this case, we assume that the operators $\mathcal{L}\left(\nabla, \frac{1}{\varepsilon}\right)$ and $\vert \nabla \vert^\alpha$ take the form

  $$ \begin{align} \mathcal{L}\left(\nabla, \frac{1}{\varepsilon}\right) = \frac{1}{\varepsilon^{\sigma}} + \mathcal{B}\left(\nabla, \frac{1}{\varepsilon}\right), \qquad \vert \nabla\vert^\alpha = \mathcal{C}\left(\nabla, \frac{1}{\varepsilon}\right) \tag{9} \end{align} $$

  for some differential operators $\mathcal{B}\left(\nabla, \frac{1}{\varepsilon}\right)$ and $\mathcal{C}\left(\nabla, \frac{1}{\varepsilon}\right)$. These operators can be bounded uniformly in $\vert \varepsilon \vert$ and are relatively bounded by differential operators of degree $\sigma$ and $\alpha < \sigma$, respectively. This allows us to include, for instance, highly oscillatory Klein–Gordon type equations (4) (see also Subsection 5.3).
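
As a worked instance of (9), here is a short computation of our own, not taken from Subsection 5.3. On the mode $k$, the Klein–Gordon operator in (4) has the symbol $\mathcal{L}(k,\frac1\varepsilon) = \frac1\varepsilon\sqrt{\frac1{\varepsilon^2}+|k|^2}$. Assuming $\varepsilon>0$ and $\sigma = 2$, this gives

$$ \mathcal{B}\left(k,\tfrac1\varepsilon\right) = \frac{1}{\varepsilon^2}\Big(\sqrt{1+\varepsilon^2|k|^2}-1\Big) = \frac{|k|^2}{\sqrt{1+\varepsilon^2|k|^2}+1} \le \frac{|k|^2}{2}. $$

Hence $\mathcal{B}$ is bounded uniformly in $\varepsilon$ by the symbol of $-\Delta/2$, a differential operator of degree $\sigma=2$. The NumPy sketch below checks both the identity and the bound numerically; the function names are illustrative.

```python
import numpy as np

def kg_symbol(k, eps):
    """Fourier symbol of L(nabla, 1/eps) = (1/eps) sqrt(1/eps^2 - Delta) at mode k."""
    return np.sqrt(1.0 / eps**2 + k**2) / eps

def kg_B(k, eps):
    """B(k, 1/eps) = L - 1/eps^2 for sigma = 2, in a cancellation-free form."""
    return k**2 / (np.sqrt(1.0 + eps**2 * k**2) + 1.0)

k = np.arange(-50, 51, dtype=float)
for eps in [1.0, 1e-1, 1e-2, 1e-3]:
    direct = kg_symbol(k, eps) - 1.0 / eps**2       # loses digits for small eps
    rel_gap = np.max(np.abs(direct - kg_B(k, eps)) / (1.0 + k**2))
    print(eps, rel_gap, bool(np.all(kg_B(k, eps) <= k**2 / 2)))
```

The rewritten form of $\mathcal{B}$ avoids subtracting two numbers of size $\varepsilon^{-2}$, which is why it is used for the bound rather than the direct difference.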

Figure 1 Initial values for Figure 2: $u_0 \in H^1$ (left) and $u_0 \in \mathcal{C}^\infty$ (right).

Figure 2 Order reduction of classical schemes based on linearised frequency approximations (cf. Table 1) for low regularity data (error versus step size for the cubic Schrödinger equation). For smooth solutions, classical methods reach their full order of convergence (right). In contrast, for less smooth solutions they suffer from severe order reduction (left). The initial values in $H^1$ and $\mathcal{C}^{\infty}$ are plotted in Figure 1. The slopes of the reference solutions (dashed lines) are one and two, respectively.

In the next section, we introduce the resonance-based techniques for solving the dispersive PDE (1) and illustrate our approach on the example of the cubic nonlinear Schrödinger equation (2); see Example 1.

1.1 Resonances as a computational tool

Instead of employing classical linearised frequency approximations (cf. Table 1), we want to embed the underlying nonlinear oscillations

$$ \begin{align} \mathcal{O}\mathcal{s}\mathcal{c}(\xi, \mathcal{L}, v,p) = e^{ -i\xi \mathcal{L}\left(\nabla, \frac{1}{\varepsilon}\right)} p\left(e^{ i \xi \mathcal{L}\left(\nabla, \frac{1}{\varepsilon}\right)} v , e^{ - i \xi \mathcal{L}\left(\nabla, \frac{1}{\varepsilon}\right)} \overline{v} \right) \end{align} $$

(and their higher-order counterparts) into the numerical discretisation. In the case of the one-dimensional cubic Schrödinger equation (2), for instance, the central oscillations (10) take the following form in Fourier space (see Example 1 for details):

$$ \begin{align*}\mathcal{O}\mathcal{s}\mathcal{c}(\xi, \Delta, v,\text{cub}) =\sum_{\substack{k_1,k_2,k_3 \in {\mathbf{Z}}\\-k_1+k_2+k_3 = k} } e^{i k x } \overline{\hat{v}}_{k_1} \hat{v}_{k_2} \hat{v}_{k_3} e^{i \xi \mathscr{F}(k) } \end{align*} $$

with the underlying resonance structure

$$ \begin{align} \mathscr{F}(k) = 2 k_1^2 - 2 k_1 (k_2+k_3) + 2 k_2 k_3. \end{align} $$
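
The resonance function (11) can be checked directly. With $\mathcal{L} = \Delta$ (symbol $-k^2$), the outer factor $e^{-i\xi\mathcal{L}}$ contributes $e^{i\xi k^2}$ with $k = -k_1+k_2+k_3$. The conjugated factor contributes $e^{i\xi k_1^2}$, and the two other factors contribute $e^{-i\xi k_2^2}$ and $e^{-i\xi k_3^2}$. The total phase is therefore

$$ (-k_1+k_2+k_3)^2 + k_1^2 - k_2^2 - k_3^2 = 2k_1^2 - 2k_1(k_2+k_3) + 2k_2k_3 = \mathscr{F}(k). $$

A brute-force confirmation of this identity over a box of frequencies, in plain Python with illustrative function names:

```python
def phase_from_flows(k1, k2, k3):
    """k^2 + k1^2 - k2^2 - k3^2 with k = -k1 + k2 + k3 (L = Delta, symbol -k^2)."""
    k = -k1 + k2 + k3
    return k**2 + k1**2 - k2**2 - k3**2

def resonance_F(k1, k2, k3):
    """Resonance structure (11) of the cubic NLS."""
    return 2 * k1**2 - 2 * k1 * (k2 + k3) + 2 * k2 * k3

box = range(-20, 21)
mismatches = [(a, b, c) for a in box for b in box for c in box
              if phase_from_flows(a, b, c) != resonance_F(a, b, c)]
print(len(mismatches))  # 0
```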

Ideally, we would like to resolve all nonlinear frequency interactions (11) exactly in our scheme. However, they result in a generalised convolution (of Coifman–Meyer type [Reference Coifman and Meyer30]), which cannot be converted into a product in physical space. The iteration would thus have to be carried out entirely in Fourier space, which does not yield a scheme that can be implemented in practice in higher spatial dimensions; see also Remark 1.3. In general, this also holds in the abstract setting (10).
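
To see why resolving (11) exactly is costly, the sketch below evaluates the Fourier coefficients of $\mathcal{I}_1(\tau,\Delta,v,\text{cub}) = \int_0^\tau \mathcal{O}\mathcal{s}\mathcal{c}(\xi,\Delta,v,\text{cub})\,d\xi$ in two ways. The first is the direct sum over all $(k_1,k_2,k_3)$ with $-k_1+k_2+k_3 = k$, using $\int_0^\tau e^{i\xi\mathscr{F}}\,d\xi = \tau\varphi_1(i\tau\mathscr{F})$. The second is Gauss–Legendre quadrature of the oscillations computed by FFTs in physical space. The direct sum visits all $(2K+1)^3$ frequency triples in one dimension, and $(2K+1)^{3d}$ in $d$ dimensions, whereas each physical-space product costs $\mathcal{O}(N\log N)$. The NumPy code assumes $v$ is band-limited to $|k|\le K$ and that the grid is fine enough ($N > 6K$) to avoid aliasing. All names are illustrative.

```python
import numpy as np

def phi1(z):
    """phi_1(z) = (exp(z) - 1)/z with phi_1(0) = 1, evaluated elementwise."""
    z = np.asarray(z, dtype=complex)
    small = np.abs(z) < 1e-4
    safe = np.where(small, 1.0, z)                  # avoids 0/0 in the discarded branch
    return np.where(small, 1.0 + z / 2 + z**2 / 6, (np.exp(safe) - 1.0) / safe)

def I1_direct(vhat, K, tau):
    """Fourier coefficients of I_1 via the triple sum over (k1, k2, k3)."""
    out = {}
    modes = range(-K, K + 1)
    for k1 in modes:
        for k2 in modes:
            for k3 in modes:
                k = -k1 + k2 + k3
                F = 2 * k1**2 - 2 * k1 * (k2 + k3) + 2 * k2 * k3   # resonance (11)
                term = np.conj(vhat[k1]) * vhat[k2] * vhat[k3] * tau * phi1(1j * tau * F)
                out[k] = out.get(k, 0) + term
    return out

def I1_quadrature(v, k, tau, nodes=60):
    """Gauss-Legendre quadrature of Osc(xi), evaluated with FFTs in physical space."""
    x, w = np.polynomial.legendre.leggauss(nodes)
    acc = np.zeros_like(v, dtype=complex)
    for xi, wi in zip(0.5 * tau * (x + 1.0), 0.5 * tau * w):
        lin = np.fft.ifft(np.exp(-1j * xi * k**2) * np.fft.fft(v))            # e^{i xi Delta} v
        nonlin = np.abs(lin)**2 * lin                                         # |.|^2 (.)
        acc += wi * np.fft.ifft(np.exp(1j * xi * k**2) * np.fft.fft(nonlin))  # e^{-i xi Delta}
    return np.fft.fft(acc) / len(v)                 # coefficients of e^{ikx}

K, N, tau = 3, 32, 0.3                              # N > 6K avoids aliasing of the cubic term
rng = np.random.default_rng(0)
vhat = {m: complex(*rng.standard_normal(2)) for m in range(-K, K + 1)}
x = 2 * np.pi * np.arange(N) / N
v = sum(c * np.exp(1j * m * x) for m, c in vhat.items())
k = np.fft.fftfreq(N, d=1.0 / N)                    # integer wavenumbers

direct = I1_direct(vhat, K, tau)
quad = I1_quadrature(v, k, tau)
err = max(abs(direct[m] - quad[m % N]) for m in direct)
print(err)
```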

In order to obtain an efficient and practical resonance-based discretisation, we extract the dominant and lower-order parts from the resonance structure (10). More precisely, we filter out the dominant parts $\mathcal{L}_{\text{dom}}$ and treat them exactly, while only approximating the lower-order terms in the spirit of

$$ \begin{align} \mathcal{O}\mathcal{s}\mathcal{c}(\xi, \mathcal{L}, v,p) = \left[e^{i \xi \mathcal{L}_{\text{dom}} \left(\nabla, \frac{1}{\varepsilon}\right)} p_{\text{dom}}\left(v,\overline{v}\right) \right] p_{\text{low}}(v,\overline{v}) + \mathcal{O}\Big(\xi\mathcal{L}_{\text{low}}\left(\nabla\right)v\Big). \end{align} $$

Here, $\mathcal{L}_{\text{dom}}$ denotes a suitable dominant part of the high frequency interactions and $\mathcal{L}_{\text{low}}$ the corresponding nonoscillatory parts (details will be given in Definition 2.6). The crucial issue is to determine $\mathcal{L}_{\text{dom}}$, $p_{\text{dom}}$ and $\mathcal{L}_{\text{low}}, p_{\text{low}}$ in (12). This requires balancing the preservation of the underlying structure of the PDE against a practical implementation at reasonable cost. We refer to Example 1 for the concrete characterisation in the case of cubic NLS, where $\mathcal{L}_{\text{low}} = \nabla$ and $\mathcal{L}_{\text{dom}} = \Delta$.
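
For the cubic NLS, one way to read $\mathcal{L}_{\text{dom}} = \Delta$, $\mathcal{L}_{\text{low}} = \nabla$ at the level of the resonance function (11) is the splitting

$$ \mathscr{F}(k) = \underbrace{2k_1^2}_{\mathscr{F}_{\text{dom}}} + \underbrace{2k_2k_3 - 2k_1(k_2+k_3)}_{\mathscr{F}_{\text{low}}}. $$

This splitting is our illustration; the concrete choice is given in Example 1. The dominant part is quadratic in the single frequency $k_1$, so it corresponds to a second-order operator acting on $\overline{v}$ alone and can be integrated exactly. The lower-order part only involves products $k_ik_j$ with $i\neq j$, that is, first-order derivatives falling on two different factors. Since $|e^{ia}-e^{ib}|\le|a-b|$ for real $a,b$, replacing $e^{i\xi\mathscr{F}}$ by $e^{i\xi\mathscr{F}_{\text{dom}}}$ costs at most $\xi|\mathscr{F}_{\text{low}}(k)|$. This bound corresponds to the $\mathcal{O}(\xi\mathcal{L}_{\text{low}}v)$ remainder in (12). A quick numerical check with NumPy:

```python
import numpy as np

def resonance_F(k1, k2, k3):
    """Resonance structure (11) of the cubic NLS."""
    return 2 * k1**2 - 2 * k1 * (k2 + k3) + 2 * k2 * k3

def F_dom(k1, k2, k3):
    """Dominant part: symbol of -2*Delta acting on the conjugated factor only."""
    return 2 * k1**2

def F_low(k1, k2, k3):
    """Lower-order part: only products k_i k_j with i != j."""
    return 2 * k2 * k3 - 2 * k1 * (k2 + k3)

grid = np.arange(-15, 16)
k1, k2, k3 = np.meshgrid(grid, grid, grid, indexing="ij")
xi = np.linspace(0.0, 0.1, 11)[:, None, None, None]   # broadcast over the frequency box

assert np.array_equal(F_dom(k1, k2, k3) + F_low(k1, k2, k3), resonance_F(k1, k2, k3))
gap = np.abs(np.exp(1j * xi * resonance_F(k1, k2, k3)) - np.exp(1j * xi * F_dom(k1, k2, k3)))
bound = xi * np.abs(F_low(k1, k2, k3))
print(np.max(gap - bound))   # non-positive up to rounding
```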

Thanks to the resonance-based ansatz (12), the principal oscillatory integral

$$ \begin{align*} \mathcal{I}_1( t, \mathcal{L},v,p) = \int_0^t \mathcal{O}\mathcal{s}\mathcal{c}(\xi, \mathcal{L}, v,p) d\xi \end{align*} $$

in the expansion of the exact solution (6)

$$ \begin{align} u(t) = e^{ it \mathcal{L}\left(\nabla, \frac{1}{\varepsilon}\right)} v - ie^{ it \mathcal{L}\left(\nabla, \frac{1}{\varepsilon}\right)} \vert \nabla\vert^\alpha \mathcal{I}_1( t, \mathcal{L},v,p) + \mathcal{O}\left( t^2\vert \nabla\vert^{2 \alpha}{ q_1( v)} \right) \end{align} $$

(for some polynomial $q_1$) then takes the form

$$ \begin{align} \begin{split} \mathcal{I}_1( t, \mathcal{L},v,p) & = \int_0^t \left[e^{i \xi \mathcal{L}_{\text{dom}}} p_{\text{dom}}\left(v,\overline{v}\right) \right] p_{\text{low}}(v,\overline{v}) + \mathcal{O}\Big(\xi\mathcal{L}_{\text{low}}\left(\nabla\right){ q_2(v)}\Big)d \xi\\ & = t p_{\text{low}}(v,\overline{v}) \varphi_1\left(i t \mathcal{L}_{\text{dom}} \right) p_{\text{dom}}\left(v,\overline{v}\right) + \mathcal{O}\Big(t^2\mathcal{L}_{\text{low}}\left(\nabla\right){ q_2(v)}\Big) \end{split} \end{align} $$

(for some polynomial $q_2$), where for brevity we write $\mathcal{L} = \mathcal{L}\left(\nabla, \frac{1}{\varepsilon}\right)$ and define $\varphi_1(\gamma) = \gamma^{-1}\left(e^\gamma - 1\right)$ for $\gamma \in \mathbf{C}$, extended continuously by $\varphi_1(0) = 1$. Plugging (15) into (14) yields, for a small time step $\tau$, that

$$ \begin{align} u(\tau) = e^{ i\tau \mathcal{L}} v - \tau ie^{ i\tau \mathcal{L}} \vert \nabla\vert^\alpha &\Big[p_{\text{low}}(v,\overline{v}) \varphi_1\left(i \tau \mathcal{L}_{\text{dom}} \left(\nabla, \frac{1}{\varepsilon}\right)\right) p_{\text{dom}}\left(v,\overline{v}\right) \Big] \notag \\ &\qquad\qquad \qquad + \mathcal{O}\left( \tau^2\vert \nabla\vert^{2 \alpha} q_ 1(v) \right) + \mathcal{O}\Big(\tau^2\vert \nabla\vert^{ \alpha}\mathcal{L}_{\text{low}} \left(\nabla\right)q_2({ v})\Big) \end{align} $$

for some polynomials $q_1, q_2$. The expansion (16) of the exact solution forms the foundation of the first-order resonance-based discretisation

$$ \begin{align} u^{n+1} = e^{ i\tau \mathcal{L}} u^n - \tau ie^{ i\tau \mathcal{L}} { \vert \nabla\vert^\alpha} \Big[p_{\text{low}}(u^n,\overline{u}^n) \varphi_1\left(i \tau\mathcal{L}_{\text{dom}} \left(\nabla, \frac{1}{\varepsilon}\right) \right) p_{\text{dom}}\left(u^n,\overline{u}^n\right) \Big]. \end{align} $$
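
The filter $\varphi_1$ in (17) comes from the identity $\int_0^\tau e^{i\xi\lambda}\,d\xi = \tau\varphi_1(i\tau\lambda)$. It has a removable singularity at $\gamma = 0$, which is reached whenever the symbol of $\mathcal{L}_{\text{dom}}$ vanishes, for example on the zero Fourier mode of a differential operator. Below is a minimal NumPy sketch of an entrywise evaluation. The name `phi1`, the switching threshold and the truncated Taylor series near zero are our own illustrative choices and are not taken from the original text.

```python
import numpy as np

def phi1(z):
    """Evaluate phi_1(z) = (exp(z) - 1) / z entrywise for complex z.

    Near z = 0 the formula has a removable singularity (phi_1(0) = 1), so a
    truncated Taylor series 1 + z/2 + z^2/6 + z^3/24 + z^4/120 is used there.
    Elsewhere the closed form is evaluated directly.
    """
    z = np.asarray(z, dtype=complex)
    small = np.abs(z) < 1e-3
    z_safe = np.where(small, 1.0, z)          # avoid 0/0 in the unused branch
    taylor = 1 + z / 2 + z**2 / 6 + z**3 / 24 + z**4 / 120
    direct = (np.exp(z_safe) - 1) / z_safe
    return np.where(small, taylor, direct)

# tau * phi_1(i tau lam) reproduces the time integral of e^{i xi lam} over [0, tau]
tau, lam = 0.7, 3.0
lhs = tau * phi1(1j * tau * lam)
rhs = (np.exp(1j * tau * lam) - 1) / (1j * lam)
```

When applied to the Fourier symbol of an operator, `phi1` gives the action of $\varphi_1(i\tau\mathcal{L}_{\text{dom}})$ mode by mode.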

Compared to classical linear frequency approximations (cf. Table 1), the main gain of the more involved resonance-based approach (17) is the following: All dominant parts $\mathcal{L}_{\text{dom}}$ are captured exactly in the discretisation, while only the lower-order, nonoscillatory parts $\mathcal{L}_{\text{low}}$ are approximated. Hence, within the resonance-based approach (17) the local error depends only on the lower-order, nonoscillatory operator $\mathcal{L}_{\text{low}}$. The local error of classical methods, by contrast, involves the full operator $\mathcal{L}$ and, in particular, its dominant part $\mathcal{L}_{\text{dom}}$. Thus, the resonance-based approach (17) allows us to approximate a more general class of solutions

$$ \begin{align} &u \in \underbrace{ \mathcal{D}\left(\vert \nabla\vert^\alpha \mathcal{L}_{\text{low}}\left(\nabla, \frac{1}{\varepsilon}\right)\right) }_{\text{resonance domain}} \cap \mathcal{D}\left(\vert \nabla\vert^{2\alpha}\right) \notag \\ &\qquad \qquad \supset { \mathcal{D}\left(\vert \nabla\vert^\alpha \mathcal{L}\left(\nabla, \frac{1}{\varepsilon}\right)\right) }\cap \mathcal{D}\left(\vert \nabla\vert^{2\alpha}\right) =\underbrace{ \mathcal{D}\left(\vert \nabla\vert^\alpha \mathcal{L}_{\text{dom}}\left(\nabla, \frac{1}{\varepsilon}\right)\right) }_{\text{classical domain}}\cap \mathcal{D}\left(\vert \nabla\vert^{2\alpha}\right). \end{align} $$

Higher-order resonance-based methods. Classical approximation techniques, such as splitting or exponential integrator methods, can easily be extended to higher order; see, for example, Hairer, Lubich and Wanner [45], Hochbruck and Ostermann [49] and Thalhammer [80]. The step from a first-order to a higher-order approximation consists in employing a higher-order Taylor series expansion of the exact solution

$$ \begin{align*} u(t) = u(0) + t\partial_t u(0) + \ldots + \frac{t^{r}}{r!} \partial_t^{r} u(0) + \mathcal{O} \left( t^{r+1} \partial_{t}^{r+1} u\right). \end{align*} $$

In this expansion, however, the higher-order iterations of the oscillations (7) in the exact solution are not resolved but successively linearised. Therefore, classical high-order methods are restricted to smooth solutions, as their local approximation error in general involves high-order derivatives

$$ \begin{align} \mathcal{O} \left( t^{r+1} \partial_{t}^{r+1} u\right)=\mathcal{O} \left( t^{r+1} \mathcal{L}^{r+1}\left(\nabla, \tfrac{1}{\varepsilon}\right) u\right). \end{align} $$

This phenomenon is also illustrated in Figure 2, where we numerically observe, in the case of rough solutions, the order reduction of the Strang splitting method (of classical order 2) down to the order of the Lie splitting method (of classical order 1). In particular, we observe that classical high-order methods do not pay off at low regularity, as their error behaviour reduces to that of lower-order methods.
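
To make the comparison concrete, below is a minimal sketch of the two classical splitting methods for the cubic periodic NLS $i\partial_t u + \partial_x^2 u = \vert u\vert^2 u$ on $[0, 2\pi)$, with a Fourier pseudospectral discretisation in space. The grid size, initial datum and function names are illustrative assumptions and do not reproduce the setup behind Figure 2. For the smooth datum used here, Strang splitting should be the more accurate of the two. Rough data, for instance Fourier coefficients that decay slowly in $k$, can be substituted to probe the order reduction described above.

```python
import numpy as np

def linear_flow(u, t, k):
    """Exact flow of i u_t + u_xx = 0: Fourier mode k is multiplied by exp(-i t k^2)."""
    return np.fft.ifft(np.exp(-1j * t * k**2) * np.fft.fft(u))

def nonlinear_flow(u, t):
    """Exact flow of i u_t = |u|^2 u: |u| is conserved, so u(t) = exp(-i t |u(0)|^2) u(0)."""
    return np.exp(-1j * t * np.abs(u) ** 2) * u

def lie_step(u, tau, k):
    """Lie splitting (classical order 1): full linear step, then full nonlinear step."""
    return nonlinear_flow(linear_flow(u, tau, k), tau)

def strang_step(u, tau, k):
    """Strang splitting (classical order 2): half linear, full nonlinear, half linear."""
    u = linear_flow(u, tau / 2, k)
    u = nonlinear_flow(u, tau)
    return linear_flow(u, tau / 2, k)

def integrate(step, v, T, n_steps):
    """Apply n_steps steps of size T / n_steps; v is sampled on a uniform grid of [0, 2*pi)."""
    n = v.size
    k = np.fft.fftfreq(n, d=1.0 / n)          # integer wave numbers in FFT order
    u, tau = v.astype(complex), T / n_steps
    for _ in range(n_steps):
        u = step(u, tau, k)
    return u

N = 64
x = 2 * np.pi * np.arange(N) / N
v = np.cos(x) + 0.5j * np.sin(2 * x)          # smooth, illustrative initial datum
reference = integrate(strang_step, v, 0.5, 4000)
err_lie = np.max(np.abs(integrate(lie_step, v, 0.5, 50) - reference))
err_strang = np.max(np.abs(integrate(strang_step, v, 0.5, 50) - reference))
```

Both sub-flows are solved exactly and preserve the discrete $L^2$ norm, so the two methods differ only in how the flows are composed.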

At first glance, our resonance-based approach can also be straightforwardly extended to higher order. Instead of considering only the first-order iteration (6), the natural idea is to iterate Duhamel’s formula (5) up to the desired order r; that is, for the initial value $u(0)=v$,

$$ \begin{align} \begin{split} u(t) &= e^{i t \mathcal{L}} v -i e^{i t \mathcal{L}}\vert\nabla\vert^{\alpha} \int_0^te^{ -i \xi_1 \mathcal{L}} p\left( e^{ i \xi_1 \mathcal{L}} v,e^{- i \xi_1 \mathcal{L}} \overline{v}\right) d\xi_1 \\ &\quad{}-e^{i t \mathcal{L}}\vert\nabla\vert^{\alpha} \int_0^te^{ -i \xi_1 \mathcal{L}} \Big[D_1 p \left( e^{ i \xi_1 \mathcal{L}} v,e^{- i \xi_1 \mathcal{L}} \overline{v}\right) \\ &\quad \cdot e^{ i \xi_1\mathcal{L}}\vert\nabla\vert^{\alpha} \int_0^{\xi_1} e^{ -i \xi_2 \mathcal{L}} p\left( e^{ i \xi_2 \mathcal{L}} v,e^{- i \xi_2 \mathcal{L}} \overline{v}\right) d\xi_2 \Big]d\xi_1 \\ &\quad{}+e^{i t \mathcal{L}}\vert\nabla\vert^{\alpha} \int_0^te^{ -i \xi_1 \mathcal{L}} \Big[D_2p \left( e^{ i \xi_1 \mathcal{L}} v,e^{- i \xi_1 \mathcal{L}} \overline{v}\right) \\ & \quad{} \cdot e^{ -i \xi_1\mathcal{L}}\vert\nabla\vert^{\alpha} \int_0^{\xi_1} e^{ i \xi_2 \mathcal{L}} \overline{p\left( e^{ i \xi_2 \mathcal{L}} v,e^{- i \xi_2 \mathcal{L}} \overline{v}\right) }d\xi_2 \Big]d\xi_1 \\ & \quad{}+ \ldots+\vert\nabla\vert^{\alpha} \int_0^t\vert\nabla\vert^{\alpha} \int_0^\xi \ldots \vert\nabla\vert^{\alpha}\int_0^{\xi_r} d\xi_{r} \ldots d\xi_1 d \xi \end{split} \end{align} $$

where $D_1$ (respectively $D_2$) denotes the derivative with respect to the first (respectively second) argument of $p$. The key idea will then be the following: Instead of linearising the frequency interactions by a simple Taylor series expansion of the oscillatory terms $e^{\pm i \xi_\ell \mathcal{L}}$ (as classical methods would do), we want to embed the dominant frequency interactions of (20) exactly into our numerical discretisation. By neglecting the last term, which involves an iterated integral with $r+1$ time integrations, we then introduce the desired local error $\mathcal{O}\Big(\vert\nabla\vert^{(r+1)\alpha} t^{r+1}q(u)\Big)$ for some polynomial q.

Compared to the first-order approximation (12), this is much more involved, as high-order iterations of the nonlinear frequency interactions need to be controlled. The control of these iterated oscillations is a delicate problem not only on the discrete (numerical) level, concerning accuracy, stability, etc., but already on the continuous level: We have to encode the structure (which strongly depends on the underlying structure of the PDE, that is, on the form of the operator $\mathcal{L}$ and the shape of the nonlinearity p) and at the same time keep track of the regularity assumptions. In order to achieve this in the general setting (1), we will introduce the decorated tree formalism in Subsection 1.2. First, let us illustrate the main ideas on the example of the cubic periodic Schrödinger equation.

Example 1 (cubic periodic Schrödinger equation). We consider the 1-dimensional cubic Schrödinger equation

$$ \begin{align} i \partial_t u + \partial_x^2 u = \vert u\vert^2 u \end{align} $$

equipped with periodic boundary conditions; that is, $x \in {\mathbf{T}}$. The latter can be cast into the general form (1) with

$$ \begin{align} \mathcal{L}\left(\nabla, \tfrac{1}{\varepsilon}\right) = \partial_x^2, \quad \alpha = 0 \quad \text{and}\quad p(u,\overline{u}) =u^2 \overline{u}. \end{align} $$

In the case of cubic NLS, the central oscillatory integral (at first order) takes the form (cf. (7))

$$ \begin{align} \mathcal{I}_1(\tau, \partial_x^2,v) = \int_0^\tau e^{-i s \partial_x^2}\left[ \left( e^{- i s \partial_x^2} \overline{v} \right) \left ( e^{ i s \partial_x^2} v \right)^2\right] d s. \end{align} $$

Assuming that $v \in L^2$, the Fourier series $v(x) = \sum_{k \in {\mathbf{Z}}}\hat{v}_k e^{i k x}$ allows us to express the action of the free Schrödinger group as a Fourier multiplier; that is,

$$ \begin{align*} e^{\pm i t \partial_x^2}v(x) = \sum_{k \in {\mathbf{Z}}} e^{\mp i t k^2} \hat{v}_k e^{i k x}. \end{align*} $$
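
On a uniform grid, the free Schrödinger group is applied in exactly this way via the FFT. Below is a minimal NumPy sketch on $[0, 2\pi)$. The function name and its `sign` argument are illustrative. The sketch also makes the sign convention $e^{\pm i t\partial_x^2} \leftrightarrow e^{\mp i t k^2}$ explicit.

```python
import numpy as np

def free_schroedinger(v, t, sign=1):
    """Apply exp(sign * i * t * d_x^2) to grid values v sampled on [0, 2*pi).

    d_x^2 acts on e^{ikx} as -k^2, so the Fourier multiplier is exp(-sign * i * t * k^2).
    """
    n = v.size
    k = np.fft.fftfreq(n, d=1.0 / n)  # integer wave numbers in FFT order
    return np.fft.ifft(np.exp(-sign * 1j * t * k**2) * np.fft.fft(v))

# A single mode is only rotated in phase: e^{i t d_x^2} e^{3ix} = e^{-9it} e^{3ix}.
x = 2 * np.pi * np.arange(32) / 32
mode = np.exp(3j * x)
rotated = free_schroedinger(mode, 0.7)
```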

With this at hand, we can express the oscillatory integral (23) as follows:

$$ \begin{align} \mathcal{I}_1(\tau, \partial_x^2,v) = \sum_{\substack{k_1,k_2,k_3 \in {\mathbf{Z}} \\ -k_1+k_2+k_3 = k} } e^{i k x } \overline{\hat{v}}_{k_1} \hat{v}_{k_2} \hat{v}_{k_3} \int_0^\tau e^{i s \mathscr{F}(k) } ds \end{align} $$

with the underlying resonance structure

$$ \begin{align}{ \mathscr{F}(k) = 2 k_1^2 - 2 k_1 (k_2+k_3) + 2 k_2 k_3}. \end{align} $$
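
The resonance structure (25) is obtained by collecting the exponents of all free Schrödinger factors in (23). The outer $e^{-is\partial_x^2}$ acting on frequency $k$ contributes $k^2$, the factor containing $\overline{v}$ contributes $k_1^2$, and each factor containing $v$ contributes $-k_j^2$. The following brute-force Python check confirms this bookkeeping over a box of integer frequencies; the function names are ours.

```python
import itertools

def resonance(k1, k2, k3):
    """Resonance structure (25)."""
    return 2 * k1**2 - 2 * k1 * (k2 + k3) + 2 * k2 * k3

def collected_phase(k1, k2, k3):
    """Sum of the phases of the free Schroedinger factors in (23), with k = -k1 + k2 + k3."""
    k = -k1 + k2 + k3
    return k**2 + k1**2 - k2**2 - k3**2

box = range(-8, 9)
mismatches = [ks for ks in itertools.product(box, repeat=3)
              if resonance(*ks) != collected_phase(*ks)]
print(len(mismatches))  # 0
```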

In the spirit of (12), we need to extract the dominant and lower-order parts from the resonance structure (25). The choice is based on the following observation. Note that $2k_1^2$ corresponds to a second-order derivative; that is, with the inverse Fourier transform $\mathcal{F}^{\,-1}$, we have

$$ \begin{align*} \mathcal{F}^{-1}\left(2k_1^2 \overline{\hat v}_{k_1} \hat v_{k_2} \hat v_{k_3}\right) = \left(- 2\partial_x^2 \overline{v}\right) v^2 \end{align*} $$

while the products $k_\ell \cdot k_m$ with $\ell \neq m$ correspond only to first-order derivatives; that is,

$$ \begin{align*} \mathcal{F}^{-1}\left(k_1 \overline{\hat v}_{k_1} k_2 \hat v_{k_2} \hat v_{k_3}\right) = \vert\partial_x v\vert^2 v, \quad \mathcal{F}^{-1}\left( \overline{\hat v}_{k_1} k_2 \hat v_{k_2} k_3 \hat v_{k_3}\right) = - (\partial_x v)^2\overline{v}. \end{align*} $$
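
These correspondences follow from $\partial_x \leftrightarrow ik$ on $v$ and $\partial_x \leftrightarrow -ik_1$ on $\overline{v}$. For instance, $(-ik_1)(ik_2) = k_1k_2$, while $(ik_2)(ik_3) = -k_2k_3$. The following Python sketch checks them numerically for a random trigonometric polynomial by comparing the triple Fourier sum with the corresponding products in physical space. The number of modes, the grid and the random coefficients are arbitrary illustrative choices.

```python
import itertools
import numpy as np

rng = np.random.default_rng(0)
M, N = 4, 64                                   # modes |k| <= M, N grid points
modes = np.arange(-M, M + 1)
vhat = rng.standard_normal(modes.size) + 1j * rng.standard_normal(modes.size)
x = 2 * np.pi * np.arange(N) / N

def synth(coeffs):
    """Evaluate sum_k coeffs[k] e^{ikx} exactly on the grid."""
    return np.exp(1j * np.outer(x, modes)) @ coeffs

v = synth(vhat)
vx = synth(1j * modes * vhat)                  # d_x v
vxx = synth(-(modes**2) * vhat)                # d_x^2 v

def triple_sum(weight):
    """Sum of weight(k1,k2,k3) conj(vhat_k1) vhat_k2 vhat_k3 e^{i(-k1+k2+k3)x}."""
    total = np.zeros(N, dtype=complex)
    for (i1, k1), (i2, k2), (i3, k3) in itertools.product(enumerate(modes), repeat=3):
        c = weight(k1, k2, k3) * np.conj(vhat[i1]) * vhat[i2] * vhat[i3]
        total += c * np.exp(1j * (-k1 + k2 + k3) * x)
    return total

checks = {
    "2 k1^2 -> -2 (d_x^2 conj v) v^2": np.allclose(
        triple_sum(lambda k1, k2, k3: 2 * k1**2), -2 * np.conj(vxx) * v**2),
    "k1 k2 -> |d_x v|^2 v": np.allclose(
        triple_sum(lambda k1, k2, k3: k1 * k2), np.abs(vx) ** 2 * v),
    "k2 k3 -> -(d_x v)^2 conj v": np.allclose(
        triple_sum(lambda k1, k2, k3: k2 * k3), -(vx**2) * np.conj(v)),
}
print(checks)  # all three entries are True
```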

This motivates the choice $\mathscr{F}(k) = \mathcal{L}_{\text{dom}}(k_1) + \mathcal{L}_{\text{low}}(k_1,k_2,k_3)$ with

$$ \begin{align} {\mathcal{L}_{\text{dom}}(k_1) = 2k_1^2 \quad \text{and}\quad \mathcal{L}_{\text{low}}(k_1,k_2,k_3) = - 2 k_1 (k_2+k_3) + 2 k_2 k_3}. \end{align} $$

In terms of (17) we thus have

$$ \begin{align} \mathcal{L}_{\text{dom}} = - 2\partial_x^2, \quad \quad p_{\text{dom}}(v,\overline{v}) = \overline{v} \quad \text{and}\quad p_{\text{low}}(v,\overline{v}) = v^2 \end{align} $$

and the first-order NLS resonance-based discretisation (17) takes the form

$$ \begin{align} u^{n+1} = e^{ i\tau \partial_x^2} u^n - \tau ie^{ i\tau \partial_x^2} \Big[(u^n)^2 \varphi_1\left(-2 i \tau \partial_x^2 \right) \overline{u}^n \Big]. \end{align} $$
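
For periodic data, every operator in (28) is a Fourier multiplier: $e^{i\tau\partial_x^2}$ acts as $e^{-i\tau k^2}$, and $\varphi_1(-2i\tau\partial_x^2)$ acts as $\varphi_1(2i\tau k^2)$ on the Fourier coefficients of $\overline{u}^n$. Below is a minimal NumPy sketch of one step of (28) on a uniform grid of $[0, 2\pi)$. The function names, the pseudospectral evaluation of the product with $(u^n)^2$ on the grid (without dealiasing) and the small-argument Taylor switch in $\varphi_1$ are our assumptions, not details from the original text.

```python
import numpy as np

def phi1(z):
    """phi_1(z) = (e^z - 1) / z entrywise, using a Taylor series near the removable singularity."""
    z = np.asarray(z, dtype=complex)
    small = np.abs(z) < 1e-3
    z_safe = np.where(small, 1.0, z)
    taylor = 1 + z / 2 + z**2 / 6 + z**3 / 24 + z**4 / 120
    return np.where(small, taylor, (np.exp(z_safe) - 1) / z_safe)

def resonance_step(u, tau):
    """One step of the first-order resonance-based scheme (28) for i u_t + u_xx = |u|^2 u."""
    n = u.size
    k = np.fft.fftfreq(n, d=1.0 / n)                       # integer wave numbers
    # phi_1(-2 i tau d_x^2) acting on conj(u): symbol phi_1(2 i tau k^2)
    filtered_conj = np.fft.ifft(phi1(2j * tau * k**2) * np.fft.fft(np.conj(u)))
    bracket = u**2 * filtered_conj                         # (u^n)^2 phi_1(...) conj(u^n)
    # e^{i tau d_x^2} applied to (u^n - i tau [...]): symbol exp(-i tau k^2)
    return np.fft.ifft(np.exp(-1j * tau * k**2) * np.fft.fft(u - 1j * tau * bracket))

def solve(v, T, n_steps):
    """Apply n_steps steps of (28) with step size T / n_steps."""
    u, tau = v.astype(complex), T / n_steps
    for _ in range(n_steps):
        u = resonance_step(u, tau)
    return u
```

As a sanity check, take the spatially constant datum $v = 1$. The exact solution is $e^{-it}$, and the scheme reduces to the explicit Euler method for $i\partial_t u = \vert u\vert^2 u$, so the error at $t = 1$ should decrease linearly in $\tau$.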

By (16) and (26), we readily see that the NLS scheme (28) introduces the local approximation error

$$ \begin{align} \mathcal{O}\left(\tau^2 \mathcal{L}_{\text{low}}q(u)\right)= \mathcal{O}\left(\tau^2 \partial_xq(u)\right) \end{align} $$

for some polynomial q in u. Compared to the error structure of classical discretisation techniques, which involve the full and thus dominant operator

![]() $\mathcal {L}_{\text {dom}} = \partial _x^2$

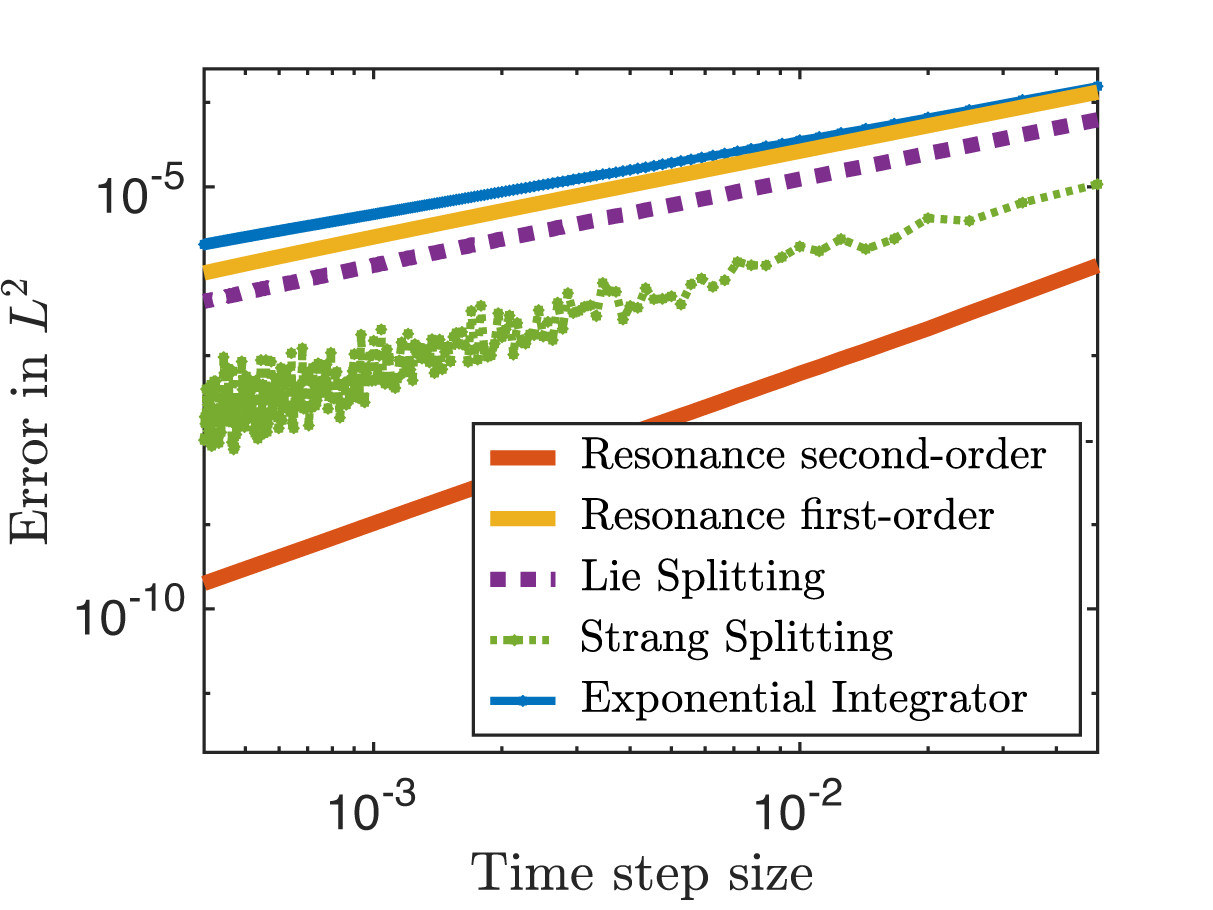

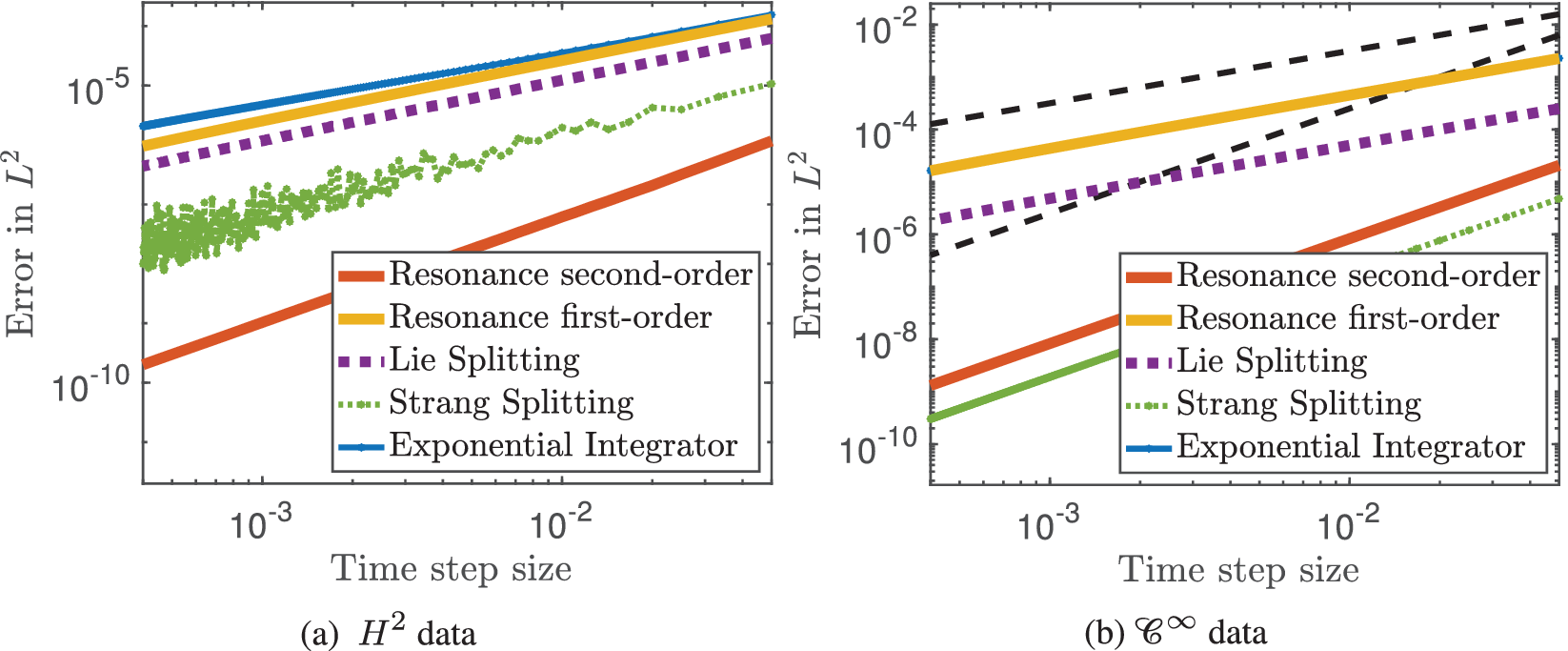

, we thus gain one derivative with the resonance-based scheme (28). This favourable error at low regularity is underlined in Figure 3.

$\mathcal {L}_{\text {dom}} = \partial _x^2$

, we thus gain one derivative with the resonance-based scheme (28). This favourable error at low regularity is underlined in Figure 3.

Figure 3 Error versus step size (double logarithmic plot). Comparison of classical and resonance-based schemes for the cubic Schrödinger equation (21) with $H^2$ initial data.

In Example 1 we illustrated the idea of the resonance-based discretisation on the cubic periodic Schrödinger equation in one spatial dimension. In order to control frequency interactions in the general setting (1), in arbitrary dimensions $d \geq 1$ and up to arbitrarily high order, we next introduce our decorated tree formalism.

1.2 Main idea of decorated trees for high-order resonance-based schemes

The iteration of Duhamel’s formulation (20) can be expressed using decorated trees. Since we are interested in computing the iterated frequency interactions in (20), we express the latter in Fourier space. Let $r$ be the order of the scheme and assume that we truncate (20) at this order. The kth Fourier coefficient of the expansion truncated at order $r$ is given by

$$ \begin{align} U_{k}^{r}(\tau, v) = \sum_{T\kern-1pt\in\kern0.5pt {\cal V}^r_k} \frac{\Upsilon^{p}(T)(v)}{S(T)} \left( \Pi T \right)(\tau), \end{align} $$

where ${\cal V}^r_k$ is a set of decorated trees that incorporate the frequency k, $S(T)$ is the symmetry factor associated with the tree $T$, $\Upsilon^{p}(T)$ is the coefficient appearing in the iteration of Duhamel’s formulation and $(\Pi T)(t)$ represents an iterated integral in Fourier space. The exponent r in ${\cal V}^r_k$ means that we only consider trees of size at most $r+1$, that is, trees producing iterated integrals with at most $r+1$ integrals. The decorations that need to be put on the trees are illustrated in Example 2.

The main difficulty then lies in developing, for every $T \in {\cal V}^r_k$, a suitable approximation to the iterated integral $(\Pi T)(t)$, with the aim of minimising the regularity required by the local error. To achieve this, the key idea is to embed the underlying resonance structure of the iterated integrals $(\Pi T)(t)$ into the discretisation, in the spirit of (12).

Example 2 (cubic periodic Schrödinger equation with decorated trees). When $r=2$, decorated trees for cubic NLS are given by

where the frequencies are encoded on the nodes so that they add up according to the edge decorations. The root has no decoration. For example, in $T_1$ the two extremities of the blue edge carry the same decoration $-k_1 + k_2 + k_3$, where the minus sign comes from the dashed edge. Since the leaf frequencies are constrained only through their sum, ${\cal V}^r_k$ contains infinitely many trees (finitely many shapes but infinitely many ways of splitting up the frequency $k$ among the branches). An edge of type ![]() encodes a multiplication by $e^{-i \tau k^2}$, where k is the frequency on the nodes adjacent to this edge. An edge of type ![]() encodes an integration in time of the form

$$ \begin{align*} \int_0^{\tau} e^{i s k^2} \cdots d s. \end{align*} $$

In fact, the truncation parameter $r+1$ corresponds to the maximum number of integrations in time, that is, the maximum number of edges of type ![](). The dashed dots on the edges correspond to a complex conjugation and a multiplication by $(-1)$ of the frequency at the top of the edge. If we then apply the map $\Pi$ (which encodes the oscillatory integrals in Fourier space; see Subsection 3.1) to these trees, we obtain

$$ \begin{align} \begin{split} (\Pi T_0)(\tau) & = e^{-i \tau k^2}, \\ (\Pi T_1)(\tau) & = - i e^{-i \tau k^2} \int_0^\tau e^{i s k^2}\left[ \left( e^{ i s k_1^2} \right) \left ( e^{ -i s k_2^2} \right) \left ( e^{ -i s k_3^2} \right) \right] d s \\ & = - i e^{-i \tau k^2} \int_0^{\tau} e^{is \mathscr{F}(k)} ds \\ (\Pi T_2)(\tau) & = -i e^{-i \tau k^2} \int_0^\tau e^{i s k^2}\left[ \left( e^{ i s k_4^2} \right) \Big( (\Pi T_1)(s) \Big) \left ( e^{ - i s k_5^2} \right) \right] d s \\ (\Pi T_3)(\tau) & = -i e^{-i \tau k^2} \int_0^\tau e^{i s k^2}\left[ \Big( \overline{(\Pi T_1)(s)} \Big)\left ( e^{ -i s k_4^2} \right) \left ( e^{ -i s k_5^2} \right) \right] d s \end{split} \end{align} $$
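
Since $\int_0^\tau e^{is\mathscr{F}(k)}\,ds = \tau\varphi_1(i\tau\mathscr{F}(k))$, the tree $T_1$ can be evaluated in closed form, including the resonant case $\mathscr{F}(k) = 0$. The following Python sketch compares this closed form with Gauss-Legendre quadrature of the defining integral of $(\Pi T_1)(\tau)$, written factor by factor as above. The function names and the quadrature degree are illustrative choices.

```python
import numpy as np

def resonance(k1, k2, k3):
    """Resonance structure (25)."""
    return 2 * k1**2 - 2 * k1 * (k2 + k3) + 2 * k2 * k3

def pi_T1(tau, k1, k2, k3):
    """Closed form of (Pi T_1)(tau) = -i e^{-i tau k^2} int_0^tau e^{i s F(k)} ds, k = -k1 + k2 + k3."""
    k = -k1 + k2 + k3
    F = resonance(k1, k2, k3)
    integral = tau if F == 0 else (np.exp(1j * tau * F) - 1) / (1j * F)
    return -1j * np.exp(-1j * tau * k**2) * integral

def pi_T1_quadrature(tau, k1, k2, k3, deg=60):
    """Same quantity from the factor-by-factor integrand, by Gauss-Legendre quadrature."""
    k = -k1 + k2 + k3
    nodes, weights = np.polynomial.legendre.leggauss(deg)
    s = 0.5 * tau * (nodes + 1)                     # map [-1, 1] to [0, tau]
    integrand = (np.exp(1j * s * k**2) * np.exp(1j * s * k1**2)
                 * np.exp(-1j * s * k2**2) * np.exp(-1j * s * k3**2))
    return -1j * np.exp(-1j * tau * k**2) * 0.5 * tau * np.sum(weights * integrand)
```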

where the resonance structure $\mathscr{F}(k)$ is given in (25). One has the constraints $k = -k_1 + k_2 + k_3$ for $T_1$, $k = -k_1 + k_2 + k_3 - k_4 + k_5$ for $T_2$ and $k = k_1 - k_2 - k_3 + k_4 + k_5$ for $T_3$. Using the definitions in Section 4, one can compute the following coefficients:

$$ \begin{align*} \begin{split} \frac{\Upsilon^p(T_0)(v)}{S(T_0)} & = \hat v_k, \quad \frac{\Upsilon^p(T_1)(v)}{S(T_1)} = \bar{\hat{v}}_{k_1} \hat{v}_{k_2} \hat{v}_{k_3} \\ \frac{\Upsilon^p(T_2)(v)}{S(T_2)} & = 2 \overline{\hat{v}}_{k_1} \hat v_{k_2} \hat v_{k_3} \overline{\hat{v}}_{k_4} \hat v_{k_5}, \quad \frac{\Upsilon^p(T_3)(v)}{S(T_3)} = \hat v_{k_1} \overline{\hat{v}}_{k_2} \overline{\hat{v}}_{k_3} \hat v_{k_4} \hat v_{k_5} \end{split} \end{align*} $$

which, together with the character $\Pi$, fully encode the identity (30).

Our general scheme is based on approximating $(\Pi T)(t)$ for every tree in ${\cal V}_k^r$. This approximation is given by a new map on decorated trees, denoted by $\Pi^{n,r}$, where r is the order of the scheme and n corresponds to the a priori assumed regularity of the initial value v. This new character $\Pi^{n,r}$ embeds the dominant frequency interactions and neglects the lower-order terms, in the spirit of (12). Our general scheme thus takes the form

$$ \begin{align} U_{k}^{n,r}(\tau, v) = \sum_{T\kern-1pt\in\kern0.5pt {\cal V}^r_k} \frac{\Upsilon^{p}(T)(v)}{S(T)} \left( \Pi^{n,r} T \right)(\tau) \end{align} $$

where $\Pi^{n,r} T$ is a low-regularity approximation of order $r$ of $\Pi T$ in the sense that

$$ \begin{align} \left(\Pi T - \Pi^{n,r} T \right)(\tau) = \mathcal{O}\left( \tau^{r+2} \mathcal{L}^{r}_{\tiny{\text{low}}}(T,n) \right). \end{align} $$

Here $\mathcal{L}^{r}_{\tiny{\text{low}}}(T,n)$ involves all lower-order frequency interactions that we neglect in our resonance-based discretisation. At first order, this approximation is illustrated in (15). The scheme (33) and the local error estimates (34) are the main results of this work (see Theorem 4.8). Let us outline the main ideas of how to obtain them.

The approximation $\Pi^{n,r}$ is constructed from a character $\Pi^n$ defined on the vector space ${\mathcal{H}}$ spanned by decorated forests. This character takes values in a space ${{\cal C}}$ which depends on the frequencies of the decorated trees (see, e.g., (31) in the case of NLS). In addition, we add at the root the decoration r, which stresses that this tree will be an approximation of order r. For this purpose we introduce the symbol ${\cal D}^r$ (see, e.g., (35) for $T_1$ of NLS). Indeed, we disregard trees which have more integrals in time than the order of the scheme. In particular, we note that $\Pi^n {\cal D}^r(T) = \Pi^{n,r} T$.

The map $\Pi^n$ is defined recursively from an operator ${{\cal K}}$, which computes a suitable approximation (matching the regularity of the solution) of the integrals introduced by the iteration of Duhamel’s formula. This map ${{\cal K}}$ is the high-order counterpart of the approach described in Subsection 1.1: It embeds the idea of singling out the dominant parts and integrating them exactly, while only approximating the lower-order terms. This yields an improved local error structure compared to classical approaches. The character $\Pi^n$ is the main map for computing the numerical scheme in Fourier space.

Example 3 (cubic periodic Schrödinger equation: computation of $\Pi^n$). We consider the decorated trees ${\cal D}^r(\bar T_1)$ and $\bar T_1$ given by

One can observe that $(\Pi T_1)(t) = e^{-i k^2 t} (\Pi \bar T_1)(t)$. We will recursively define two maps $\mathscr{F}_{\tiny{\text{dom}}}$ and $\mathscr{F}_{\tiny{\text{low}}}$ (see Definition 2.6) on decorated trees that compute the dominant and the lower-order parts of the nonlinear frequency interactions within the oscillatory integral $(\Pi \bar T_1)(t)$. In this example, one gets back the values already computed in (26); that is,

$$ \begin{align*} \mathscr{F}_{\tiny{\text{dom}}}(\bar T_1) = 2k_1^2, \quad \mathscr{F}_{\tiny{\text{low}}}(\bar T_1) = - 2 k_1 (k_2+k_3) + 2 k_2 k_3. \end{align*} $$

Moreover, the dominant part of $T_1$ is due to the observation that $(\Pi T_1)(t) = e^{-i k^2 t} (\Pi \bar T_1)(t)$ given by

because the tree $T_1$ does not start with an integral in time. Then, one can write

$$ \begin{align*} (\Pi \bar T_1)(\tau) = -i \int_0^\tau e^{i s\mathscr{F}_{\tiny{\text{dom}}}(\bar T_1) } e^{i s\mathscr{F}_{\tiny{\text{low}}}(\bar T_1) } ds \end{align*} $$

and Taylor expand the lower-order term around $0$; that is, the factor containing $ \mathscr {F}_{\tiny {\text {low}}}(\bar T_1)$. The term $\Pi ^{n,1} \bar T_1 = \Pi ^n {\cal D}^1(\bar T_1)$ is then given by

$$ \begin{align} ( \Pi^{n,1} \bar T_1)(\tau) = - i \int_0^\tau e^{i s\mathscr{F}_{\tiny{\text{dom}}}(\bar T_1) } ds + \mathscr{F}_{\tiny{\text{low}}}(\bar T_1) \int_0^\tau s e^{i s \mathscr{F}_{\tiny{\text{dom}}}(\bar T_1) } ds. \end{align} $$
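
Carrying out the two elementary integrals in (36) explicitly makes the structure of the resulting scheme visible. The computation below is our own; it uses the shorthand $F = \mathscr{F}_{\tiny{\text{dom}}}(\bar T_1)$ and $L = \mathscr{F}_{\tiny{\text{low}}}(\bar T_1)$, introduced only for this sketch, and assumes $F \neq 0$ (for $F = 0$ both integrals are simply polynomials in $\tau$):

$$ \begin{align*} -i \int_0^\tau e^{i s F} ds = \frac{1 - e^{i\tau F}}{F}, \qquad \int_0^\tau s\, e^{i s F} ds = \frac{e^{i\tau F} - 1}{F^2} - \frac{i\tau\, e^{i\tau F}}{F}, \end{align*} $$

so that the right-hand side of (36) equals

$$ \begin{align*} \frac{1 - e^{i\tau F}}{F} + L \left( \frac{e^{i\tau F} - 1}{F^2} - \frac{i\tau\, e^{i\tau F}}{F} \right). \end{align*} $$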

One observes that we obtain terms of the form $ \frac {P}{Q} e^{i R} $, where $P,Q, R$ are polynomials in the frequencies $ k_1, k_2, k_3 $. Linear combinations of such terms are precisely the elements of the space $ {{\cal C}} $. For the local error, one gets

Here the term $ \mathscr {F}_{\tiny {\text {low}}} (\bar T_1)^2$ corresponds to the regularity that one has to impose on the solution. One can check by hand that the expression of $ \Pi ^{n,1} \bar T_1$ can be mapped back to physical space. Such a statement holds in general for the character $ \Pi ^n $; see Proposition 3.18. This will be important for the practical implementation of the new schemes; see also Remark 1.3. We have not yet used $n$ in the description of the scheme. In fact, it plays a role in the expression of $ \Pi ^{n,1} \bar T_1 $: one has to compare $ n $ not only with the regularity required by the local error (37), which is introduced by the polynomial $ \mathscr {F}_{\tiny {\text {low}}} (\bar T_1)^2 $, but also with the term $\mathscr {F}_{\tiny {\text {dom}}}(\bar T_1)^2 $. Indeed, if the initial value is regular enough, we may want to Taylor expand all of the frequencies (that is, even the dominant parts) in order to get a simpler scheme; see also Remark 1.1.
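
The trade-off between Taylor expanding only $\mathscr {F}_{\tiny {\text {low}}}(\bar T_1)$ and Taylor expanding the full phase can be checked on the scalar model integral $-i\int_0^\tau e^{is(F+L)}\,ds$, where $F$ and $L$ stand for $\mathscr {F}_{\tiny {\text {dom}}}(\bar T_1)$ and $\mathscr {F}_{\tiny {\text {low}}}(\bar T_1)$ at fixed frequencies. The sketch below is our own illustration, not the implementation behind the paper; the function names and numerical values are hypothetical. It compares the first-order approximation (36) with a classical one that Taylor expands $e^{is(F+L)}$ as a whole. By the elementary bound $|e^{ix}-1-ix|\le x^2/2$ for real $x$, the first error is at most $\tau^3L^2/6$, whereas the second is only controlled by $\tau^3(F+L)^2/6$.

```python
import cmath


def exact_integral(tau: float, phase: float) -> complex:
    """-i * int_0^tau exp(i*s*phase) ds, evaluated in closed form."""
    if phase == 0.0:
        return -1j * tau
    return (1.0 - cmath.exp(1j * tau * phase)) / phase


def resonance_first_order(tau: float, f_dom: float, f_low: float) -> complex:
    """Sketch of (36): integrate exp(i*s*f_dom) exactly and replace
    exp(i*s*f_low) by its first-order Taylor polynomial 1 + i*s*f_low."""
    if f_dom == 0.0:
        # No dominant oscillation: -i * int_0^tau (1 + i*s*f_low) ds.
        return -1j * tau + f_low * tau**2 / 2
    e = cmath.exp(1j * tau * f_dom)
    first = (1.0 - e) / f_dom                             # -i * int_0^tau e^{isF} ds
    second = (e - 1.0) / f_dom**2 - 1j * tau * e / f_dom  # int_0^tau s e^{isF} ds
    return first + f_low * second


def classical_first_order(tau: float, f_dom: float, f_low: float) -> complex:
    """Classical sketch: Taylor expand the whole phase f_dom + f_low."""
    return -1j * tau + (f_dom + f_low) * tau**2 / 2


# Hypothetical values: a large dominant frequency and a small lower-order part.
tau, f_dom, f_low = 0.1, 1000.0, 1.0
exact = exact_integral(tau, f_dom + f_low)
err_res = abs(exact - resonance_first_order(tau, f_dom, f_low))
err_cls = abs(exact - classical_first_order(tau, f_dom, f_low))
print(f"resonance-based error: {err_res:.2e} (bound {tau**3 * f_low**2 / 6:.2e})")
print(f"classical error:       {err_cls:.2e}")
```

With a large dominant frequency, the resonance-based error stays below $\tau^3L^2/6$, while the classical error is driven by the term $(F+L)\tau^2/2$; Taylor expanding the dominant part as well only pays off when $F$ is small, that is, for sufficiently regular data.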

In order to better understand the error introduced by the character $ \Pi ^n $, one needs to isolate each interaction. Therefore, we will introduce two characters $ \hat \Pi ^n : {\mathcal {H}} \rightarrow {{\cal C}} $ and $ A^n : {\mathcal {H}}_+ \rightarrow \mathbf {C} $ such that

$$ \begin{align} \Pi^n = \left( \hat \Pi^n \otimes A^n \right) \Delta \end{align} $$


where $ \Delta : {\mathcal {H}} \rightarrow {\mathcal {H}} \otimes {\mathcal {H}}_+ $ is a coaction and $ ({\mathcal {H}},\Delta ) $ is a right comodule for a Hopf algebra $ {\mathcal {H}}_+ $ equipped with a coproduct ${\Delta ^{\!+}} $ and an antipode $ {\mathcal {A}} $. In fact, one can show that

where $ \Pi ^n $ is extended to a character on $ {\mathcal {H}}_+ $ and $ {\mathcal {Q}} $ is a projection defined on $ {{\cal C}} $ which keeps only the terms with no oscillations. The identity (39) can be understood as a Birkhoff type factorisation of $ \hat \Pi ^n $ using the character $ \Pi ^n $. This identity is also reminiscent of the main results obtained for singular SPDEs by Bruned, Hairer and Zambotti [13], where two twisted antipodes play a fundamental role, providing a variant of the algebraic Birkhoff factorisation.
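
To make the notion of coproduct more concrete, the sketch below computes the classical Connes–Kreimer coproduct on undecorated rooted trees via the recursion $\Delta B_+ = B_+ \otimes \mathbf{1} + (\mathrm{id} \otimes B_+)\Delta$, where $B_+$ grafts a forest onto a new root. This is a simplified stand-in chosen for illustration only: the coaction $\Delta$ and the coproduct ${\Delta ^{\!+}}$ used here act on decorated trees, whose decorations the sketch ignores, and they follow their own conventions for the order of the tensor factors. Below, the cut-off forest is written on the left and the trunk on the right; the tuple encoding of trees and all function names are hypothetical.

```python
from collections import Counter

# Encoding (hypothetical, for this sketch only): a rooted tree is the sorted
# tuple of its children's subtrees, so the single vertex is (). A forest is a
# sorted tuple of trees; the empty forest () plays the role of the unit 1.
LEAF = ()


def graft(*children):
    """B_+: attach the given trees to a new root, in canonical order."""
    return tuple(sorted(children))


def forest(*trees):
    """Canonical form of the commutative product of the given trees."""
    return tuple(sorted(trees))


def coproduct_forest(f):
    """Delta is multiplicative: Delta(T_1 ... T_n) = Delta(T_1) ... Delta(T_n).

    Returns a Counter mapping (cut-off forest, trunk forest) to its coefficient.
    """
    result = Counter({((), ()): 1})  # Delta(1) = 1 (x) 1
    for tree in f:
        delta_tree = coproduct_tree(tree)
        product = Counter()
        for (left1, right1), c1 in result.items():
            for (left2, right2), c2 in delta_tree.items():
                product[(forest(*left1, *left2), forest(*right1, *right2))] += c1 * c2
        result = product
    return result


def coproduct_tree(t):
    """Delta(B_+(F)) = B_+(F) (x) 1 + (id (x) B_+) Delta(F), F = children of the root."""
    result = Counter({((t,), ()): 1})  # the whole tree is cut off: T (x) 1
    # A tree is stored as the forest of its children, so it can be passed directly.
    for (left, right), c in coproduct_forest(t).items():
        # Graft the remaining trunk forest back onto the root.
        result[(left, (graft(*right),))] += c
    return result


ladder2 = graft(LEAF)          # two vertices joined by one edge
cherry = graft(LEAF, LEAF)     # a root with two leaves
delta = coproduct_tree(cherry)
print(delta[((LEAF,), (ladder2,))])  # coefficient of (leaf) (x) ladder2; prints 2
```

For the cherry this reproduces the classical expansion $\Delta(\text{cherry}) = \text{cherry} \otimes \mathbf{1} + \bullet\bullet \otimes \bullet + 2\, \bullet \otimes \text{ladder2} + \mathbf{1} \otimes \text{cherry}$, in which each term corresponds to one admissible cut.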

Example 4 (cubic periodic Schrödinger equation: Birkhoff factorisation). Integrating the first term in (36) exactly yields two contributions:

$$ \begin{align*} \int_0^\tau e^{i s\mathscr{F}_{\tiny{\text{dom}}}(\bar T_1) } ds = \frac{e^{i\tau\mathscr{F}_{\tiny{\text{dom}}}(\bar T_1) }}{i\mathscr{F}_{\tiny{\text{dom}}}(\bar T_1) } - \frac{1}{i\mathscr{F}_{\tiny{\text{dom}}}(\bar T_1) }. \end{align*} $$


Plugging these two terms into $(\Pi T_2)(\tau )$ defined in (32), we see that we have to control the following two terms:

$$ \begin{align} \begin{split} - e^{-i \tau k^2} & \int_0^\tau e^{i s k^2}\left[ \left( e^{ i s k_4^2} \right) \Big( \frac{e^{i s\mathscr{F}_{\tiny{\text{dom}}}(\bar T_1) - is \bar k^2 } }{i\mathscr{F}_{\tiny{\text{dom}}}(\bar T_1) } \Big) \left ( e^{- i s k_5^2} \right) \right] d s \\ - e^{-i \tau k^2} & \int_0^\tau e^{i s k^2}\left[ \left( e^{ i s k_4^2} \right) \Big( - \frac{e^{-i s \bar k^2 }}{i\mathscr{F}_{\tiny{\text{dom}}}(\bar T_1) } \Big) \left ( e^{ -i s k_5^2} \right) \right] d s \end{split} \end{align} $$

where $ \bar k = -k_1 + k_2 + k_3$. The frequency analysis is needed again to approximate the time integral and to define an approximation of $(\Pi T_2)(\tau ) $. One can see that the dominant parts of these two terms may differ. Hence, the approximations of these two terms can have two different local errors; the final local error is the maximum of the two. At this point, we need an efficient algebraic structure for dealing with all of these frequency interactions in the iterated integrals. We first consider a character $ \hat \Pi ^n $ that keeps only the main contribution, that is, the second term of (40). For any decorated tree $ T $, one expects $ \hat \Pi ^n $ to be of the form

where $ B^n({\cal D}^r(T))(t) $ is a polynomial in $ t $ depending on the decorated tree $ T $. The character $ \hat \Pi ^n $ singles out oscillations by keeping at each iteration only the nonzero one. This separation between the various oscillations can be encoded via the Butcher–Connes–Kreimer coaction $ \Delta : {\mathcal {H}} \rightarrow {\mathcal {H}} \otimes {\mathcal {H}}_+ $. An example of this computation is given below:

where $ \ell = - k_1 + k_2 + k_3$. The space ${\mathcal {H}}_+$