1. Introduction

Numerous neurodevelopmental syndromes, such as Cri du Chat, Coffin-Siris, Rett, Angelman, Landau-Kleffner, Fragile X, or Phelan-McDermid syndromes, involve fundamental changes not only in how cognition develops but also in language. Any visit to a large, mixed special-education school is therefore likely to reveal a bewildering variety of linguistic phenotypes, both between and within such disorders. What opens up is a multi-dimensional spectrum of language capacities, a landscape that linguistics has barely begun to chart in either theoretical or empirical terms.

At a descriptive level, at the lower end of this spectrum, functional language is absent altogether, in both production and comprehension and in any sensory modality, as is often the case in several of the above syndromes and in no fewer than 25–30% of school-age children with autism spectrum disorder (ASD) (Tager-Flusberg & Kasari 2013; Norrelgen et al. 2015; Hinzen et al. 2019; Slušná et al. 2021). Other children may have primary physical motor speech disabilities that leave them incapable of any vocal production, while comprehension and writing, and hence language capacities proper, are relatively less affected (as can be the case in children with perisylvian polymicrogyria or Worster-Drought syndrome; see Kuzniecky, Andermann & Guerrini 1993; Christen et al. 2000). Many other linguistic phenotypes appear between and beyond these two extremes.

This diversity is a stark reminder that language capacities are neither universal nor uniform in humans. We lack even a basic map of this territory, let alone a typology, and, beyond a typology, a theory of why these types should exist. What is clear is that the variation in question differs profoundly from the more familiar clinical linguistic profiles seen in children who typically attend mainstream schools: children with dyslexia, whose difficulties centre on reading; children with specific language impairment (SLI)/developmental language disorder (DLD), whose most obvious problems may be the omission of grammatical morphemes in production; or children with Williams syndrome (WS), in whom speech can be fluent but its content affected. In these cases, much of the human language capacity is often clearly preserved.

Where this is not the case, issues of considerable theoretical linguistic interest arise. The neural basis of the absence of functional language by school age, in particular, could be a profound source of insight into the organisation of language in the neurotypical brain. In addition, non-linguistic cognitive impairments co-occur in all or most of the cases of interest, leading to various degrees of intellectual disability (ID) when standardised IQ tests are applied; this includes impaired non-linguistic cognition in most children with ASD who do not develop language (Slušná et al. 2021). Each case thus represents a natural model of how language and cognition relate, and of how they break down together.

Our primary aim here is to address a theoretical challenge that this linguistic variation poses.² Today, SLI/DLD and acquired aphasia following stroke still largely define our models of what language impairment is, and these models in turn inform current models of language in the brain (Fridriksson et al. 2018). But SLI/DLD may be an inappropriate model of language dysfunction in non-specific language disorders, since these disorders, unlike SLI/DLD as traditionally viewed, do not leave cognition intact. This dilemma reflects a language-cognition divide that has long been a cornerstone of both generative linguistics and developmental psychology, but that is a questionable starting point where fundamental cognitive changes define a clinical condition from the outset.

In fact, the same divide has since become problematic for ‘specific’ language disorders themselves. Cognitive impairment across multiple domains in acquired aphasia has now been documented in most group studies (Fonseca, Ferreira & Martins 2016; Gonzalez, Rojas & Ardila 2020; Yao et al. 2020), including studies directly comparing left-hemisphere stroke patients with and without aphasia (Baldo et al. 2005, 2010). General cognitive impairment has been argued to explain aphasia, with the explicit implication that language at the level of competence in fact remains intact, in direct reversal of the traditional conception of aphasia as a primary language impairment.³ In SLI/DLD, in turn, evidence has accumulated that the language impairments in question are not specific to language and could reflect, at least in part, general cognitive deficits in auditory processing, working memory, procedural learning, or processing speed, or motor developmental delays (Miller et al. 2001; Kohnert & Windsor 2004; Leonard et al. 2007; Bishop 2010; Tsimpli, Kambanaros & Grohmann 2017; Schaeffer 2018). The status of WS as a classic case of a cognitive disorder leaving language intact has also long been questioned (Karmiloff-Smith et al. 1997; Brock 2007).

Together, these findings raise the question of whether there is any theoretically or clinically useful sense in which specific language disorders exist, and whether a language-cognition divide is empirically meaningful at all. They also reinforce the need for integrated theoretical models of language and cognition. That need extends further still: any visit to a large hospital’s adult psychiatric or neurological ward will equally reveal a wide range of language phenotypes, from the fluent but formally disorganised and often unintelligible speech of patients with the symptom of formal thought disorder (McKenna & Oh 2005), to the hallucinated speech of patients with auditory verbal hallucinations (Tovar, Fuentes-Claramonte, et al. 2019), the ‘empty’ speech of patients with Alzheimer’s disease (Nicholas et al. 1985), and the syntactically disorganised utterances of patients with Huntington’s disease (Hinzen et al. 2018).

Linguistically oriented work on these phenotypes remains scarce. Instead, most work addressing this variation has proceeded opportunistically, harnessing natural language processing tools with the practical aim of finding discriminative linguistic markers of a pathological process (Ahmed et al. 2013; Clarke, Foltz & Garrard 2020; Hitczenko, Mittal & Goldrick 2021). Typical variables have included the number of utterances produced, speech rate, number or length of pauses, utterance length in words, type-token ratio, formal-grammatical errors, dependency depth, idea density, lexical diversity, word connectedness as measured by speech graphs, and the proportions of different parts of speech. Few if any of these variables connect to current models of linguistic theory or to specific hypotheses about how the structures of language relate to the structure of human cognition and thought. Some studies have taken a more linguistic route and profiled deviances along such ‘levels’ as the ‘lexicon’, ‘syntax’, ‘semantics’, ‘phonology’ and ‘pragmatics’ (Covington et al. 2005). Yet these levels, too, are theoretically and practically problematic in models of pathological language, as most linguistic phenomena of interest cut across these putative domains, and both the theoretical⁴ and the neurobiological⁵ validity of these constructs is open to question.

Here we aim to help address these problems by developing a new general model of language and cognition that can inform empirical investigations: the Bridge model, according to which the human language faculty bridges two pre-linguistic-cognitive abilities, namely perceptual categorisation and social interaction. It is this bridge that defines human-specific thought. Thinking and language are therefore inherently integrated, and there cannot be atypical language without atypical thought, or vice versa.

We begin in Section 2 by motivating this model and pointing to independent evidence that grammar is linked to reference and hence provides a basis for thought and its content, and that grammar thereby contributes to meaning (crucially including interactive meaning), rather than merely being a system for combining meaningful words. In Section 3, we introduce the Bridge model in detail, and in Section 4, we illustrate how it can shed light on linguistic and cognitive diversity within ASD. Section 5 concludes.

2. Linking language and cognition

Language in its normal use has to be coherently integrated with effectively all other cognitive functions: we talk about what we see, hear, or touch; we need to remember words and their meanings; we plan what we say; and what we say depends on representing what others know or believe. Beyond integrating specific cognitive domains such as vision, executive functioning, or theory of mind (ToM), however, language in its normal functioning inherently expresses a particular mode of thought. Just as language never seems to occur without conveying thought, the kind of thought conveyed in language never seems to occur without being expressed in language, in some sensory modality (Hinzen 2017). In particular, it cannot be adequately expressed in representational media suited to other forms of thought, such as pictures or music. Since major mental disorders are disorders of the specific thought process expressed in language (not in music, say), it is the relation between language and thought that we ultimately need to address here. Many specific cognitive domains, such as memory, executive functions, or ToM, are typically affected in neuropsychiatric disorders such as ASD, bipolar disorder, or schizophrenia (Boucher & Anns 2018; Sheffield, Karcher & Barch 2018; Thibaudeau et al. 2020). But none of these disorders is likely to reduce to a deficit in any one such domain. Neurotypical thought necessarily integrates all of these domains, and the role of language in this integration across cognitive domains must be our target here. This is so even though the same question also arises for each specific cognitive domain separately (e.g. the role of language in ToM; see Schroeder et al. 2021).

2.1 Expressivism and beyond

One view of the relation between language and thought holds that the role of language is restricted to the expression or ‘externalisation’ of an otherwise independently existing, functional thought system. This view, which we may call ‘expressivist’, is associated with traditional Cartesian-rationalist theories of language (Arnauld & Lancelot [1676] 1966), whose proponents saw language as a ‘mirror’ of the rational structure of thought, viewed as governed by logic and as independently given. It is also associated with the contemporary heirs of Cartesian rationalism (Chomsky 1966), insofar as they view language as encoding independently constituted thoughts, perhaps generated in a ‘language of thought’ (LoT) with its own compositional semantics (Pinker & Jackendoff 2005; Fodor 2008), or insofar as they depict language development as ‘latching onto’ or communicating a world of ‘concepts’ and ‘thoughts’ that we already possess (Pinker 1994). An expressivist view in this sense can equally be attributed to the contemporary cognitive-functionalist tradition (Tomasello 2003; Levinson 2019), insofar as it regards language as a social construction for conventionally representing ‘communicative intentions’, whose contents, and hence thought as such, are again assumed to be independently given.

Expressivist views are challenged by the pervasive co-morbidity of language impairment across the neurodevelopmental and neuropsychiatric disorders noted above: if thought is independently constituted, such co-morbidity is not predicted. They are also challenged by evidence, reviewed below, that language is a critical factor in cognitive development from the very beginning of human life, mediating species-specific forms of categorisation, learning, attention, memory, and social cognition (Arunachalam & Waxman 2010; Vouloumanos & Waxman 2014; Dehaene-Lambertz, Flo & Pena 2020). In the remainder of this section, we challenge expressivist views on the basis of a particular interpretation of the traditional insight that, in language, the relation between form (sound) and meaning is mediated by grammar.

We can imagine several ways in which meaning might not be so mediated. Monkey call systems that identify predators through warning calls (Seyfarth & Cheney 2017) have meaning, but they may resemble innate human vocalisations such as crying, grunting, sobbing, or laughter more than speech (Deacon 2006). In our own species, meanings would not be mediated by grammar if they were modelled ontologically as propositions, viewed as abstract objects (Frege 1956; Katz & Postal 1991), so that natural languages were no more relevant to the existence of the meanings they express than the language of arithmetic is to the existence of the numbers referred to in it. For example, the sentence ‘Snow is white’ would express the proposition that snow is white, but this proposition would exist whether or not there were sentences, or people communicating with language. On another possible view, meaning in human language might effectively be causally controlled by word-object links, with language merely an arbitrary way of referring to the objects in question or of conventionalising the link between them and our non-linguistic ‘concepts’ (Fodor 1998). In both of these latter cases, one would not need to look at the structure of the system in which meaning is grammatically configured and conveyed in order to understand the principles on which meaning is based.

Even if it were true that grammar mediated the relation between form and meaning, it would not follow that the meaning in question depended on grammar for its existence. Grammar could be analysed theoretically as a function generating binary sets that embed other binary sets (‘Merge’; Chomsky 2008). In that case, all semantic content would either be lexical or be located on the non-linguistic side of a semantic interface, with grammar merely providing a means for such non-grammatically based meaning (in the form of features) to be recursively combined, or for its combination to be restricted. One problem for this view is that there appears to be no restrictive theory of features at present, and that all forms of meaning arising at a grammatical level would effectively have to be pre-coded lexically or else simply assumed to exist pre-linguistically. It would be a substantial assumption that the entire range of meanings that can constitute the contents of possible human thoughts is available independently of language, with language simply representing the fortunate accident of a conventional way of expressing such meanings in humans.
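To make the Merge-based view concrete, the following Python sketch implements Merge in its common set-theoretic formulation, Merge(X, Y) = {X, Y}, with lexical items represented simply as strings. The functions `merge` and `lexical_items` are hypothetical illustrations, not taken from any of the works cited. The sketch shows the sense in which grammar, so construed, contributes hierarchical structure but no content of its own: every item in a complex object can be traced back to its lexical inputs.

```python
def merge(x, y):
    """Set-theoretic Merge: form the unordered binary set {x, y}.

    Lexical items are plain strings; complex syntactic objects are frozensets,
    so they are hashable and can themselves be merged again (recursion).
    """
    if x == y:
        # Guard added for this sketch only, so that every output is a two-membered set.
        raise ValueError("merge() in this sketch expects two distinct objects")
    return frozenset({x, y})


def lexical_items(obj):
    """Return the set of lexical items (strings) contained in a syntactic object."""
    if isinstance(obj, str):
        return {obj}
    items = set()
    for part in obj:
        items |= lexical_items(part)
    return items


# Build {saw, {the, dog}} bottom-up.
dp = merge("the", "dog")
vp = merge("saw", dp)

print(vp == merge(merge("dog", "the"), "saw"))  # True: Merge imposes no linear order
print(sorted(lexical_items(vp)))                # ['dog', 'saw', 'the']
```

Nothing in the output of `merge` goes beyond the sets it forms over its inputs, which is why, on this view, meaning would have to be either lexically pre-coded or supplied on the non-linguistic side of the interface.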

A different possibility is that, without the kinds of grammatical organisation seen in every human language, the kinds of meaning conveyed in language would not exist. This would immediately make sense of the empirical fact that, whatever cognitive processes in nonverbal species we dignify with the term ‘thinking’, cognitive phenotypes also differ across species (Penn, Holyoak & Povinelli 2008; Tomasello 2008). It would also explain why we never seem to find the same kinds of meaning when language is absent. (Declarative) referential meaning, for example, is absent in apes (Tomasello & Call 2018), absent in humans when language is absent (Maljaars et al. 2011; Slušná et al. 2018), and highly correlated with language in neurotypical development (Iverson & Goldin-Meadow 2005; Colonnesi et al. 2010).

Pursuing this possibility, Hinzen & Sheehan (2015) develop an ‘un-Cartesian’ programme, according to which the meaning specifically mediated by grammar is referential meaning: it arises through embedding lexical concepts in the context of an act of speech, as mediated by grammar. Though abstract poetry clearly takes this idea to its limits, in ordinary language use there is no way of using words other than referentially. Language could not be used like music, say, or as a calculation device. Moreover, and crucially, mere lexical concepts in the sense of isolated content words (house, bark, dog, etc.) are not referential, since they capture only general classes of objects or events and cannot as such pick out a particular dog or house, as the dog I saw in that house can, or a specific event, as his barking that I witnessed yesterday can.

On this view, then, the human-specific form of meaning, qua referential meaning, is an aspect of grammatical organisation: it is configurational. This distinguishes the view from a LoT-type view (Fodor 2008), since it hypothesises that grammar is the mechanism that makes thought referential. But it is equally important on this view that, unlike in a monkey call system, reference is internally mediated by a store of lexicalised concepts or content words (i.e. lexically codified semantic memory). These lexical concepts can be activated at any moment of our mental lives for purposes of thought and reference, crucially irrespective of perceptual stimuli occurring in the here and now. This makes such concepts fundamentally different from what we may call percepts: the unimodal (e.g. visual) representations causally evoked by a perceptual stimulus (Hinzen & Sheehan 2015: ch. 2).

Meaning in humans, then, is a two-tiered system: rooted in internally available concepts, it depends on reference, which is mediated by grammar, a cognitive system distinct from the system of conceptualisation. On this ‘un-Cartesian hypothesis’, grammar is the cognitive function that converts a lexicalised semantic memory store into expressions that function referentially. By giving specific content to the claim that form mediates meaning, the un-Cartesian model connects language to thought directly: referentiality is as intrinsic to normal language use as it is to the thoughts expressed in it.

Linguistic evidence for this model comes from the fact that reference in its declarative forms (Tomasello & Call 2018) does not appear to be available non-grammatically, as noted, and that it systematically exhibits different forms, all of which are mediated by specific grammatical configurations (Sheehan & Hinzen 2011; Arsenijević & Hinzen 2012; Martin & Hinzen 2014, 2021). Moreover, language is never used non-referentially.⁶ Once reference in all of its forms is integrated into grammar and conceptualised as configurational, the widely noted developmental link between reference and grammar (Colonnesi et al. 2010) is predicted: reference in the shape of pointing is not ‘pre-linguistic’ cognition, but itself a signal of linguistic cognition unfolding, a form of cognition inherently integrated with reference as an essential aspect of the meaning it carries. We also predict, arguably correctly, that language (and grammatically mediated aspects of reference specifically) will be affected in all major mental disorders, including Alzheimer’s disease, schizophrenia, and ASD (Hinzen 2017; Hinzen et al. 2019; Tovar et al. 2019; Chapin et al. 2022).

2.2 The universal spine hypothesis

In developing the un-Cartesian framework, Hinzen & Sheehan (2015) only touch on how patterns of cross-linguistic variation bear on the un-Cartesian hypothesis. Research on linguistic typology, however, has since moved in its own directions, at least one of which is consistent with a broadly un-Cartesian point of view. Specifically, Wiltschko (2014) addresses the basic problem that identifying syntactic categories in a given language requires language-specific diagnostics. How, then, are universal categories identified? How do we know whether there is indeed universal substance to structure, which would serve in the grammatical configuration of reference and propositional meaning, and, if there is such substance, what would it be?

In the context of this long-standing challenge, Wiltschko (2014) uses multi-functionality as a universal diagnostic for categorial status. Multi-functionality is the phenomenon whereby a given unit of language can be interpreted in multiple ways depending on its grammatical distribution. For example, in its use as a main verb (Virginia has a room of her own), have denotes the relation of possession; in its use as an auxiliary (Virginia has written her essay), it has a bleached meaning that expresses grammatical content (aspect) only. Hence a given word (or unit of language) may have one meaning in one grammatical context and a different (albeit often related) meaning in another. Patterns of multi-functionality are ubiquitous in natural languages, calling for a model that predicts their existence. Assuming that structure comes with substance mediating different kinds of meaning does just that: for any given unit of language, the interpretation will differ depending on its structural position, because the substance associated with structure affects its interpretation. In this way, syntax mediates the relation between sound and meaning not only for complex expressions but also for simplex ones. The substance of structure adds meaning to words. We refer to this as the substantivist view of grammar.

Based on cross-linguistic variation, Wiltschko (2014) argues for a substantivist view in which grammatical categories are constructed on a language-specific basis. Specifically, units of language associate with a universal spine (Wiltschko’s term for a hierarchically layered set of structures that define the substance of grammar). Since units of language are necessarily language-specific, involving as they do the conventional bundling of sound and meaning that makes up the lexicon of a language, the categories constructed in this way are also language-specific. But the spine restricts the types of categories that languages construct and the hierarchical order that they display.

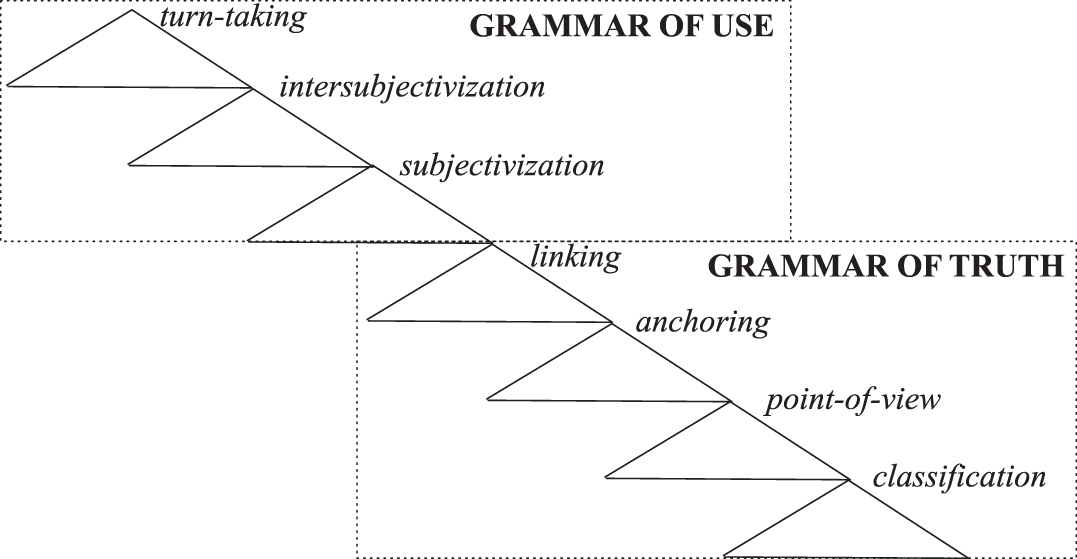

In Wiltschko’s (Reference Wiltschko2014) system as depicted in Figure 1, each layer turns out to be linked to a function, each of which is essential for the configuration of reference and propositional meaning. They are hierarchically organised in the sense that all ‘higher’ ones presuppose the foregoing as necessary parts, reflecting an inherent increase in grammatical complexity: (i) classification serves to classify events and individuals into subcategories (e.g. telic vs. atelic events; mass vs. count nouns, etc.); (ii) the introduction of a point of view serves to map the classified event or individual to a particular perspective on it (e.g. viewing it as perfective or imperfective); (iii) anchoring serves to map the perspectivised event or individual to the utterance context; and (iv) linking serves to map the anchored event or individual to the ongoing discourse.

Figure 1 The universal spine.
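The presupposition relation among the four layers can be stated as a simple well-formedness condition. The Python sketch below is only an illustration, assuming that a structure can be represented as the set of spinal layers it projects; the names `Layer` and `is_well_formed` are hypothetical and are not part of Wiltschko’s formalism. A structure counts as well formed only if each layer it contains is built on every lower layer.

```python
from enum import IntEnum


class Layer(IntEnum):
    """Layers of the propositional spine, numbered bottom-up as described above."""
    CLASSIFICATION = 1  # classify events and individuals (e.g. telic vs. atelic)
    POINT_OF_VIEW = 2   # map the classified event/individual to a perspective
    ANCHORING = 3       # map the perspectivised event/individual to the utterance context
    LINKING = 4         # map the anchored event/individual to the ongoing discourse


def is_well_formed(layers):
    """True if the layers form an unbroken sequence starting from CLASSIFICATION,
    i.e. every 'higher' layer present presupposes all the layers below it."""
    present = sorted(set(layers))
    return bool(present) and present == list(Layer)[: len(present)]


print(is_well_formed([Layer.CLASSIFICATION, Layer.POINT_OF_VIEW, Layer.ANCHORING]))  # True
print(is_well_formed([Layer.CLASSIFICATION, Layer.ANCHORING]))  # False: point of view is missing
```

Representing the layers as ordered integers reduces the ‘higher presupposes lower’ relation to a contiguity check; the sketch deliberately says nothing about the language-specific units that associate with each layer.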

This system lends itself to an un-Cartesian construal, insofar as configuring these layers (first classifying an event, then perspectivising it, then anchoring it in context, then linking it to the discourse) corresponds to building the formal ontology of a normal thought itself. The functions of each hierarchical layer are as much ‘cognitive’ as they are ‘linguistic’, thus characterising a distinctively linguistic-cognitive phenotype. Uniform cognitive functions of grammar interact with language-specific resources to create the same effect across languages: a form in which meaning is organised in a particular way, along several layers, spanning the space of thought.⁷

2.3 Beyond reference and truth

Language is as much a vehicle for thought as it is a vehicle for communication. Its communicative functions are reflected in the utterances that humans produce, through discourse markers, expressives, interjections, and the like. These do not contribute to the truth-conditional meaning of an utterance, but instead serve to regulate the interaction itself. Interactional aspects of language are constrained by grammar and thus arguably part of the spine (the ‘interactional spine’ of Wiltschko 2021). In other words, the spine constrains the way in which language is used in social interaction, beyond merely mediating referential meaning at a truth-conditional level. This implies that grammar structures the space of possible thought more deeply than truth conditions alone would suggest, and potentially exhausts it: it configures both truth-conditional and use-conditional meaning.

Among the evidence that the language function implicates this interactional dimension is the fact that neurotypical speakers have clear judgements about the appropriate use of the linguistic forms dedicated to encoding it (Wiltschko 2021). Note that even core grammatical categories such as Tense and Person have meanings that relate directly to the speech act situation: the relational meaning of Tense connects an event and/or reference time to the utterance time, and Person connects event participants to utterance participants (Sigurðsson 2004). But the scope of grammar goes beyond this: it also structures the mental attitudes that speakers convey and the social interaction conducted through language. This includes the encoding of speech act types (Ross 1970; Rizzi 1997), epistemic attitudes (Speas & Tenny 2003; Bhadra 2017), tag questions (Wiltschko & Heim 2016), discourse particles (Haegeman 2014; Thoma 2016), politeness markers (Miyagawa 2012), addressee agreement (Miyagawa 2017), vocatives (Hill 2007), and response markers (Farkas & Bruce 2009; Wiltschko 2017). There is significant convergence in this body of work, suggesting that the syntactic spine includes a layer of structure dedicated to hosting the language of interaction as an inherent aspect of the substance it carries. Specifically, the evidence suggests that there are several layers with distinct spinal functions, which encode (i) subjective meaning (the speaker’s attitude towards the content expressed), (ii) intersubjective meaning (the speaker’s evaluation of how the addressee relates to what is being said), and (iii) meta-communicative content, such as turn-taking management (Wiltschko & Heim 2016; Corr 2021; Wiltschko 2021). This matters in the present context, since linguistic diversity in cognitive disorders critically affects the social-interactive and communicative dimensions of language. Models of grammar are therefore needed that include interactional aspects of language and can exhaust the typological space of co-variation in language and cognition.

Interactional dimensions of grammar differ from the meaning configured in lower layers of the spine in a number of respects. (i) They constitute the non-propositional dimensions of meaning: they do not contribute to the configuration of truth-evaluable structures, since they are configured after a truth value is assigned and do not affect this truth value; instead, they regulate what people do with propositions in a context of use. (ii) Interactive language allows only limited recursion: there is recursion to the extent that several (potentially articulated) layers of non-propositional language are attested. (iii) Interactive language is typically (though not always) found in sentence-peripheral positions. For this reason, interactive language is sometimes considered to lie outside the clause (Kaltenböck, Keizer & Lohmann 2016) and thus outside grammar proper. However, interactive language displays all of the characteristics we would expect if it were associated with the spine (Wiltschko 2021): its meaning is mediated by grammatical form; it shows patterns of multi-functionality (many discourse markers, e.g. right, serve double duty in that they can be used both within the grammar of truth and within the grammar of use); it is structure-dependent; it exhibits patterns of contrast and may be paradigmatic; and, finally, it displays ordering restrictions. Hence, the interactive dimension, too, must be part of the universal spine, dominating propositional structure or the grammar of truth, as schematised in Figure 2.

Figure 2 Extending the universal spine hypothesis.

In this way, Wiltschko’s model of cross-linguistic variation makes grammar and the type of thought expressed in it inseparable and seemingly co-extensive, in line with an un-Cartesian perspective. Yet the empirical question remains of where human cognition as structured by the spine begins: there is mental and cognitive life both before and outside language. In the next section, we introduce the Bridge model, which seeks to answer this question by capitalising on two specific interfaces that we identify in the architecture of grammar.

3. The Bridge model

The Bridge model is based on the fact that there are two pre-linguistic pillars on which language rests, with which it forms interfaces, and which, we will argue, it connects. First, infant perception groups stimuli into perceptual classes corresponding to object categories long before infants can produce and, in part, even comprehend words (see below). Second, infants are agents in a space of social interaction from the very beginning. A model that seeks to integrate language and thought must, at a minimum, address how grammatical cognition is grafted onto these two critical capacities (categorisation and interaction), resulting in a thought system that is as species-specific as it is linguistic. Specifically, we propose that the two ends of the spine (i.e. its bottom and its top) are special in that they have both a linguistic and a pre-linguistic life. This distinguishes them from other cognitive systems, which have no dedicated position along the spine and hence no dedicated place in grammar. For example, no aspect of grammar indicates how a referent or a proposition is remembered or how it affects our feelings. We can talk about these aspects of cognition (memory, emotions), but there are no grammatical categories dedicated to them. Conversely, the two ends of the spine differ from the other layers (anchoring, classifying, etc.) in that the latter do not appear to have a pre-linguistic cognitive life, in the sense of forming direct interfaces with partially preverbal cognitive systems.

In what follows we review evidence that the two pillars in question (categorisation and social interaction) do indeed have a special status in relation to grammar and the language faculty more generally.

3.1 At the bottom end of the spine: Categorisation

As perceptual creatures, we form object and event categories, which capture commonalities among perceptually presented objects. Categorisation in this general sense is neither dependent on language in humans nor human-specific (Santos et al. Reference Santos, Sulkowski, Spaepen and Hauser2002; Hespos & Spelke Reference Hespos and Spelke2004). Humans, however, are special in that perceptual categories become lexicalised as words, and these play a critical role in how, when, and which object categories are formed; how objects are individuated; which objects are attended to; and how they are memorised (Dehaene-Lambertz et al. Reference Dehaene-Lambertz, Flo, Pena and Decety2020). Thus, from as early as 3 months of age (Ferry, Hespos & Waxman Reference Ferry, Hespos and Waxman2010), infants group perceptually different objects into categories when these are named, but not when they are presented together with tones. This suggests an incipient understanding that words refer to things but tones do not. In line with this, 4-month-olds appreciate that speech, apart from being a social signal, has content: it communicates, with words picking out objects in the world about which a thought is entertained (Vouloumanos & Waxman Reference Vouloumanos and Waxman2014; Marno et al. Reference Marno, Farroni, Santos, Ekramnia, Nespor and Mehler2015). By 6 months, words are further analysed in terms of the semantic features that make up their meanings: babies at this age are sensitive to the fact that cars are more similar to strollers than to bananas (Bergelson & Aslin Reference Bergelson and Aslin2017). In the course of the second year, parts-of-speech distinctions such as noun, adjective, and verb are used to map percepts onto categories of different formal-ontological types: objects, properties, and events, respectively (Arunachalam & Waxman Reference Arunachalam and Waxman2010). 
As infants grow into this linguistic space of shared meanings, words exert a top-down influence on visual object perception (Gliga, Volein & Csibra Reference Gliga, Volein and Csibra2010).

In these ways, while object perception as such is at least partially language-independent, it is also partially linguistic in humans, taking off on a different course early on in our species, through the way it is connected to speech, and, via speech, to grammar and thought: early thinking about the perceptual world is inextricably linked to language.

3.2 At the top end of the spine: Social interaction

The perception of speech structures human social interaction from birth as well. Speech is one of the earliest stimuli processed prenatally (as early as 24–28 weeks of gestation: Eggermont & Moore Reference Eggermont, Moore, Werner, Fay and Popper2012) and is preferentially attended to relative to non-speech sounds from birth (Vouloumanos & Curtin Reference Vouloumanos and Curtin2014). At the brain level, it activates perisylvian language regions similar to those activated in language tasks in adults (Dehaene-Lambertz Reference Dehaene-Lambertz2017). As speech is a social stimulus and a crucial social bond, a preferential bias for speech processing makes the space of social interaction inherently linguistic from the start. In this space, the infant is not merely a recipient of linguistic utterances directed at it, but an active partner from the very beginning. Thus, newborns are more likely to vocalise while the mother is speaking (Rosenthal Reference Rosenthal1982). In addition, their early vocalisations, described in the literature as coos and murmurs (Oller Reference Oller2000), occur as part of rapid vocal exchanges with adult partners. These exchanges resemble conversations and feature alternating vocalisations separated by clearly defined pauses (Bateson Reference Bateson1975; Gratier et al. Reference Gratier, Devouche, Guellai, Infanti, Yilmaz and Parlato-Oliveira2015). By around 2 months, maternal and infant vocalisations are separated by pauses ranging from 500 ms to 1 s (Jaffe et al. Reference Jaffe, Beebe, Feldstein, Crown, Jasnow, Rochat and Stern2001). Coos and murmurs produced within a turn-taking format are described as more ‘speech-like’ than vocalisations produced outside it (Bloom, Russell & Wassenberg Reference Bloom, Russell and Wassenberg1987). 
They also elicit emotional and motivated responses from social partners, with vocalisations in general eliciting responses from adult partners more frequently than gaze and smiling (Van Egeren, Barratt & Roach Reference Van Egeren, Barratt and Roach2001), thereby further forging a speech-related social bond.

These findings illustrate that there is a linguistic signature to the long-noted precocious social capacities of young infants. A key aspect of the synchronisation of early social interaction is rhythm, to which infants are sensitive: they synchronise their crying, movement, sucking, heart rate, and breathing (Provasi, Anderson & Barbu-Roth Reference Provasi, Anderson and Barbu-Roth2014), with the first evidence of sensitivity to rhythm found in fetuses at 35 weeks of gestation (Minai et al. Reference Minai, Gustafson, Fiorentino, Jongman and Sereno2017). Such rhythmic synchronisation is a prerequisite for establishing neurotypical social bonds and is restricted to species that display vocal learning (Schachner et al. Reference Schachner, Brady, Pepperberg and Hauser2009). Rhythm is a crucial property of speech and is essential for the acquisition of words: basic rhythmic patterns (stress-, syllable-, or mora-timed) are relevant for word segmentation. Rhythm is also critical for the acquisition of word order, which can be bootstrapped via prosodic phonological phrasing (Nespor & Vogel Reference Nespor and Vogel1986; Langus, Mehler & Nespor Reference Langus, Mehler and Nespor2017). Similarly, rhythm facilitates early turn-taking behaviour, of which neonates aged 2–4 days already show evidence: they display intricate temporal organisation of their vocalisations in face-to-face communication with their mothers. Dominguez et al. (Reference Dominguez, Devouche, Apter and Gratier2016) found that 68.9% of these vocalisations occurred within a 1-second window following the mother’s turn, with 26.9% ‘latched’ onto it, i.e. occurring within the first 50 ms, suggesting a surprising predictive grasp of when her turn would end. At the same time, 30% of vocalisations showed a pattern of overlap. This differs from fully developed turn-taking behaviour, which is characterised by a ‘no gap/no overlap’ requirement (Sacks, Schegloff & Jefferson Reference Sacks, Schegloff and Jefferson1974) and is acquired only later.

The full development of these adult-like no gap/no overlap turn-taking patterns follows an interesting trajectory, hinting at how language acquires its role in providing the turns in question with linguistic content. As early as 5 months (Hilbrink, Gattis & Levinson Reference Hilbrink, Gattis and Levinson2015), and possibly already at 2 months (Gratier et al. Reference Gratier, Devouche, Guellai, Infanti, Yilmaz and Parlato-Oliveira2015), the amount of overlap in the interaction between infants and mothers decreases, yielding a more turn-taking-like structure, i.e. one moving towards a no gap/no overlap pattern. However, at some point infants slow down in their responses (Gratier et al. Reference Gratier, Devouche, Guellai, Infanti, Yilmaz and Parlato-Oliveira2015; Leonardi et al. Reference Leonardi, Nomikou, Rohlfing and Rączaszek-Leonardi2017), apparently violating the no gap requirement of adult-like turn-taking behaviour. This may reflect the time it takes infants to process previous turns and (later on) to plan an appropriate response (Clark & Lindsey Reference Clark and Lindsey2015; Hilbrink et al. Reference Hilbrink, Gattis and Levinson2015; Casillas, Bobb & Clark Reference Casillas, Bobb and Clark2016) once such turns start to involve linguistic content that has to be processed. The slowdown at 9 months of age reported in Hilbrink et al. (Reference Hilbrink, Gattis and Levinson2015) may specifically correlate with the emergence, around this time, of skills relevant for communication, such as joint attention and pointing. As argued in Butterworth (Reference Butterworth and Kita2003), pointing is ‘the royal road to language’. Early pointing correlates richly with linguistic measures, both lexical and grammatical, as noted in Section 2.1 (Iverson & Goldin-Meadow Reference Iverson and Goldin-Meadow2005; Colonnesi et al. Reference Colonnesi, Stams, Koster and Noom2010). Once pointing emerges, grammar does too, and the slowdown in turn-taking behaviour could arise as grammar takes on its first lexical and proto-grammatical forms.

Turn-taking, then, like perceptual categorisation, is neither dependent on language in humans nor human-specific, as shown by rudimentary turn-taking abilities in other pro-social species such as marmosets (Chow, Mitchell & Miller Reference Chow, Mitchell and Miller2015) or songbirds (Henry et al. Reference Henry, Craig, Lemasson and Hausberger2015). Yet again, as with perceptual categorisation, when language is grafted onto these precursor structures, they too are transformed: categories become words; words become parts of thoughts as configured in clauses; clauses define the contents of conversational turns; and turns are managed with linguistic means. As grammar falls into place, these contents come to exhibit the full referential format of thought as structured by the spine layer by layer, up to and including the discourse and response markers as well as the intonational tunes that are the outposts of grammar at its interactional end.

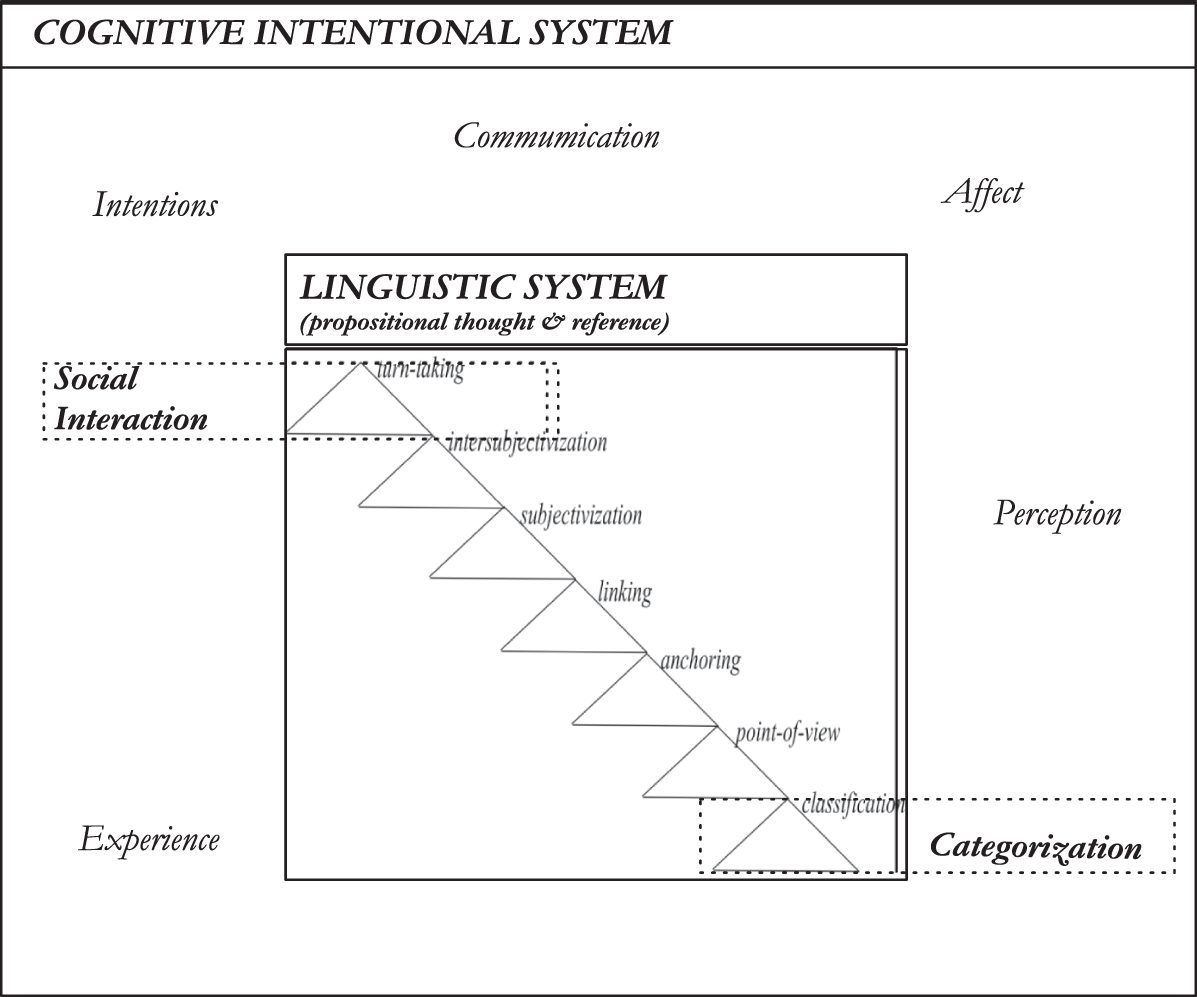

Grammar, in short, acts cognitively as a bridge that connects perception-based categorisation to social interaction in its full scope involving thought, spanning the space in-between, where such thought is hierarchically built. We refer to this as the Bridge model (Figure 3). As depicted there, the extended universal spine is at the core of our language faculty. Its substance determines the grammatical organisation of human thoughts and the ways these thoughts are communicated. It is grafted on evolutionarily older cognitive domains, namely perceptual categorisation and social interaction, which we have suggested are the pillars that are connected via grammar-based thought. The former defines an entry point after which lexical items appear, the latter an exit point, as thought-sized units are packed into turns of social speech. The spine is the bridge in-between.

Figure 3 Language as a bridge between categorisation and social interaction.

As a crucial test case of, and support for, this way of approaching clinical linguistic diversity, we finally turn to the challenge of modelling a spectrum of language abilities in ASD.

4. Linguistic diversity in ASD

Language dysfunction was criterial for ASD during the first decades of the autism concept, to the point that Frith (Reference Frith1989: 20) could still note that ‘More has been written on the language of autistic children … than any other of their psychological disabilities’. This focus on language largely disappeared with the field’s shift in the 1990s towards so-called ‘high-functioning autism’, perceived as being untainted by intellectual disability or language disorder. In line with this shift, current theoretical accounts of the neurocognitive basis of ASD focus on such mechanisms as ToM, weak central coherence, or executive dysfunction, but not on language (Happé & Frith Reference Happé and Frith2020). This shift is not unproblematic, however. The practical and clinical significance of language dysfunction in ASD as a whole is uncontested and demands a theoretical explanation. Language remains one of the most important early reasons for referral (Lord, Risi & Pickles Reference Lord, Risi, Pickles, Rice and Warren2004). Some of the earliest warning signs for autism are language-related, such as deviance in babbling (Patten et al. Reference Patten, Belardi, Baranek, Watson, Labban and Oller2014) or in reactions to speech sounds (Arunachalam & Luyster Reference Arunachalam and Luyster2016), including the absence of a preference for speech over non-speech and for infant-directed over adult-directed speech (Droucker, Curtin & Vouloumanos Reference Droucker, Curtin and Vouloumanos2013; Vouloumanos & Curtin Reference Vouloumanos and Curtin2014). Early language levels are a crucial predictor of outcomes (Howlin et al. Reference Howlin, Savage, Moss, Tempier and Rutter2014), as are language markers at the brain level (Eyler, Pierce & Courchesne Reference Eyler, Pierce and Courchesne2012; Kuhl et al. Reference Kuhl, Coffey-Corina, Padden, Munson, Estes and Dawson2013; Lombardo et al. Reference Lombardo, Pierce, Eyler, Barnes, Ahrens-Barbeau, Solso, Campbell and Courchesne2015).

Even in the half of the autism spectrum in which IQ scores are in the normal range, language impairment at structural levels is common, and impairment in aspects of language classed as ‘pragmatic’ is universal (Noterdaeme, Wriedt & Höhne Reference Noterdaeme, Wriedt and Höhne2010; Boucher Reference Boucher2012). ‘Pragmatic’ language capacities in ASD, however, are not separate from structural language abilities but correlate with them (Reindal et al. Reference Reindal, Nærland, Weidle, Lydersen, Andreassen and Sund2021). In addition, where language is not compromised on standardised tests in high-functioning ASD, linguistic differences often show up in non-standardised tasks (e.g. narrative discourse: Fine et al. Reference Fine, Bartolucci, Szatmari and Ginsberg1994; Norbury & Bishop Reference Norbury and Bishop2003; Bartlett, Armstrong & Roberts Reference Bartlett, Armstrong and Roberts2005; Rumpf et al. Reference Rumpf, Kamp-Becker, Becker and Kauschke2012; Suh et al. Reference Suh, Eigsti, Naigles, Barton, Kelley and Fein2014; Eigsti et al. Reference Eigsti, Stevens, Schultz, Barton, Kelley, Naigles, Orinstein, Troyb and Fein2016), in brain activations or structural pathways for language (Mizuno et al. Reference Mizuno, Liu, Williams, Keller, Minshew and Just2011; Stigler et al. Reference Stigler, McDonald, Anand, Saykin and McDougle2011; Radulescu et al. Reference Radulescu, Minati, Ganeshan, Harrison, Gray, Beacher, Chatwin, Young and Critchley2013; Mills et al. Reference Mills, Lai, Brown, Erhart, Halgren, Reilly, Dale, Appelbaum and Moses2015; Moseley et al. Reference Moseley, Correia, Baron-Cohen, Shtyrov, Pulvermüller and Mohr2016; Olivé et al. Reference Olivé, Slušná, Vaquero, Muchart-López, Rodríguez-Fornells and Hinzen2022), or in speech processing anomalies (Klin Reference Klin1991; Alcántara et al. Reference Alcántara, Weisblatt, Moore and Bolton2004; Kujala, Lepistö & Näätänen Reference Kujala, Lepistö and Näätänen2013; Vouloumanos & Curtin Reference Vouloumanos and Curtin2014; Foss-Feig et al. Reference Foss-Feig, Schauder, Key, Wallace and Stone2017).

The marked neglect of ‘low-functioning’ autism (Jack & Pelphrey Reference Jack and Pelphrey2017) since the 1990s does not make it go away, either. ASD with ID is estimated to make up nearly half of the spectrum in the United States (Centers for Disease Control and Prevention 2014). This half harbours the most significant nonverbal or minimally verbal population in our species, a substantial 25–30% of individuals on the spectrum (Tager-Flusberg & Kasari Reference Tager-Flusberg and Kasari2013; Norrelgen et al. Reference Norrelgen, Fernell, Eriksson, Hedvall, Persson, Sjölin, Gillberg and Kjellmer2015), with earlier estimates of up to 50% under other diagnostic criteria (Rutter Reference Rutter, Rutter and Schopler1978; Bryson, Clark & Smith Reference Bryson, Clark and Smith1988). ‘Non- or minimally verbal’ autism (nvASD) in this sense is different from mutism, the selective and affectively grounded absence of speech in the presence of a language capacity. Crucially, comprehension levels in nvASD do not tend to exceed production levels (Hinzen et al. Reference Hinzen, Slušná, Schroeder, Sevilla and Borrellas2019; Slušná et al. Reference Slušná, Rodriguez, Salvado, Vicente and Hinzen2021). ASD with ID is unique among ID groups in the severity of the language disorders involved and in the degree to which comprehension is as affected as, or more affected than, production (Maljaars et al. Reference Maljaars, Noens, Jansen, Scholte and van Berckelaer-Onnes2011, Reference Maljaars, Noens, Scholte and van Berckelaer-Onnes2012; Garrido et al. Reference Garrido, Carballo, Franco and García-Retamero2015; Slušná et al. Reference Slušná, Rodriguez, Salvado, Vicente and Hinzen2021). In Down syndrome, the most common genetic cause of ID, no substantial subpopulation with no or minimal language is reported (Martin et al. Reference Martin, Klusek, Estigarribia and Roberts2009). Given that a substantial minority of children with nvASD have nonverbal IQ scores in the normal range (Hus Bal et al. Reference Hus Bal, Katz, Bishop and Krasileva2016), and that many children with ASD and total IQ scores in the normal range show language impairment (Kjelgaard & Tager-Flusberg Reference Kjelgaard and Tager-Flusberg2001), IQ as such does not appear to provide a good model of language dysfunction in ASD.

At this juncture, merely recording language impairment in ASD as a pervasive co-morbidity is unsatisfactory as well. To the extent that this co-morbidity exhibits SLI-typical features, one could seek to analyse the language impairment as an admixture of SLI, but this would not explain the apparent connection between SLI and ASD. It would also face two further problems: linguistic profiles in SLI and ASD diverge (Boucher Reference Boucher2012; Taylor, Maybery & Whitehouse Reference Taylor, Maybery and Whitehouse2012), and an SLI-type impairment cannot account for language impairment of the nature and scale seen in low-functioning or nonverbal ASD. This strategy would account for language impairment in ASD on the basis of a model of ASD as a ‘cognitive’ disorder and of SLI as a ‘language’ one, recapitulating a traditional dichotomy. This dichotomy cannot be maintained by restricting how language is measured. For example, measuring the construct of ‘language’ through non-word and sentence repetition tasks only, leaving out its sentence-level semantic and social-communicative dimensions (Silleresi et al. Reference Silleresi, Prévost, Zebib, Bonnet-Brilhault, Conte and Tuller2020), would be circular. Moreover, none of the basic mechanisms posited in cognitive models of ASD – ToM, weak central coherence, or executive function deficits – seems to be a promising analytical tool for specifically understanding language dysfunction in ASD.Footnote 8

The picture of language impairment in ASD, however, is entirely unsurprising on the Bridge model. ASD is defined by deficits in social interaction and communication, yet in humans these two take linguistic forms. Specifically, the criterial diagnostic features in use today are deficits in ‘communication’, ‘reciprocal social interaction’, and ‘nonverbal gestures’, together with ‘restrictive and repetitive behaviours’. Yet these clinical descriptive categories raise the conceptual problem of how a dysfunction in any of these domains could possibly fail to relate to language (dys-)function. The problems of ‘communication’ and ‘reciprocal social interaction’ in question are evidently not intended to be problems in forms of communication and social interaction as seen in non-humans. On the contrary, symptoms of ASD are located precisely in human-specific forms of communication and social interaction, which inherently involve language function. Individuals with ASD, even at the lowest-functioning end of the spectrum, do socially interact and communicate (Preissler Reference Preissler2008; Maljaars et al. Reference Maljaars, Noens, Jansen, Scholte and van Berckelaer-Onnes2011; Cantiani et al. Reference Cantiani, Choudhury, Yu, Shafer, Schwartz and Benasich2016; DiStefano et al. Reference DiStefano, Shih, Kaiser, Landa and Kasari2016), just in their own ways rather than in the species-typical ways linked to language in its normal use. Human communication and social cognition are linguistic from birth, as reviewed in the previous section, and one of the most paradigmatic human ‘reciprocal interactions’, starting from birth, is language. So-called ‘nonverbal’ behaviours that are paradigmatically affected in autism, such as pointing and shared attention, are closely related to language in development, as reviewed above; the same holds for social smiles (Hsu, Fogel & Messinger Reference Hsu, Fogel and Messinger2001). Diagnostically significant behaviours such as echolalia or stereotyped and idiosyncratic phrases are, descriptively, anomalies of language function, which by nature is creative and interactive in its normal use and universally exhibits dedicated grammatical devices to perform such functions.

These facts motivate a model that integrates language, thought, and communication, treating them as an indissociable triad in their human-specific forms. In terms of the Bridge model, it is evident that the bridge is affected in ASD, not merely the pillars (communication/interaction and perceptual categorisation in a non-linguistic sense). In line with this, grammar-based dimensions of language have often been found to be proportionally more affected in ASD than vocabulary (Boucher Reference Boucher2012; Arunachalam & Luyster Reference Arunachalam and Luyster2016). However, vocabulary and lexical meaning, too, are not fully neurotypical, even in those children with ASD who develop words (Tek et al. Reference Tek, Jaffery, Fein and Naigles2008; Arunachalam & Luyster Reference Arunachalam and Luyster2016). This means that the lexicon cannot be separated from grammatical functioning in ASD, which makes sense in terms of the Bridge model: what truly predates grammar is not the lexicon but perceptual categories, whose lexicalisation depends on grammar. Further in line with this, in nvASD, where children do not develop phrase speech and hence show no grammar in production, no substantial lexicon (beyond a few, atypically used words) develops either.Footnote 9

Forms of meaning based on this integrated system depend on layers of a syntactic hierarchy, along which meaning differentiates as specified in this model. It is in the nature of a hierarchy that higher layers entail lower ones, and an immediate prediction is therefore that, as the bridge disintegrates, any intact layer n should entail the intactness of every lower layer (< n), while layers above it (> n) could be deviant. This yields predictions about possible and impossible clinical linguistic change, and about the associated cognitive dysfunction to which each of the layers is connected. In particular, a child who can put single phrases together, such as simple verb-noun combinations, might not show evidence of sensitivity to aspect or episodicity as relating to tense or ‘anchoring’ (see Figure 3); but the reverse (anchoring without basic classifications of events and their participants) should not be observable. It is noteworthy in this regard that problems with time-deixis have been highlighted in ASD from early on (Bartolucci, Pierce & Streiner Reference Bartolucci, Pierce and Streiner1980; Fine et al. Reference Fine, Bartolucci, Szatmari and Ginsberg1994).
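The implicational prediction can be made concrete with a small sketch. It assumes, purely for illustration, a five-layer bottom-to-top ordering built from the layers named in this section (classifying, point of view, anchoring, linking, and an interactional layer on top), and it codes each layer simply as intact or not; both the ordering and the binary coding are simplifying assumptions, and the function names are hypothetical. On these assumptions a profile counts as possible only if its intact layers form an unbroken run from the bottom of the spine, so that of the 32 logically possible profiles over five layers only 6 are predicted to be observable.

```python
from typing import Iterable, List, Set

# Hypothetical bottom-to-top ordering of spine layers (illustrative assumption,
# not a claim about the exact inventory of layers in the model).
SPINE_LAYERS = ("classifying", "point_of_view", "anchoring", "linking", "interactional")


def is_possible_profile(intact: Iterable[str]) -> bool:
    """Check the implicational prediction: if layer n is intact,
    every layer below n must be intact as well."""
    intact_set = set(intact)
    unknown = intact_set - set(SPINE_LAYERS)
    if unknown:
        raise ValueError(f"unknown layers: {sorted(unknown)}")
    for n, layer in enumerate(SPINE_LAYERS):
        if layer in intact_set:
            # All lower layers (< n) must also be present in the profile.
            if not all(lower in intact_set for lower in SPINE_LAYERS[:n]):
                return False
    return True


def possible_profiles() -> List[Set[str]]:
    """Enumerate the profiles the hierarchy allows: the empty profile plus
    each unbroken run of layers starting from the bottom of the spine."""
    return [set(SPINE_LAYERS[:k]) for k in range(len(SPINE_LAYERS) + 1)]


# Basic classification without anchoring: predicted to be possible.
print(is_possible_profile({"classifying"}))  # True
# Anchoring without classification: predicted not to be observable.
print(is_possible_profile({"anchoring"}))    # False
print(len(possible_profiles()))              # 6
```

A fuller model would need graded rather than binary layer states, and would have to settle how the interactional layers are ordered internally; the sketch leaves both questions open.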

It could also be that some children will seek to break into language via the pillars of the bridge: a child with nvASD may seek to identify its own strategies of social interaction for achieving its goals, and learn some words for this purpose, based on the pre-linguistic perceptual categories it has. But the prediction is that, as long as this child does not develop grammar, its use of the words in question will not be neurotypical, i.e. it will not involve declarative reference. This is borne out by current research: to the extent that words are acquired in nvASD, they are not processed normally (Preissler Reference Preissler2008; Cantiani et al. Reference Cantiani, Choudhury, Yu, Shafer, Schwartz and Benasich2016), and declarative reference is uniformly absent (Slušná et al. Reference Slušná, Hinzen, Ximenes, Salvado and Rodríguez2018). Analogously, a child with WS might break into language via the interactive end of the bridge, showing great skills as a conversationalist, yet showing semantic deficits at the grammatical and lexical levels, relating to how the spine grows from one pillar to the other.

Where classification, point of view, and anchoring are in place, further questions arise, such as whether a child can link events placed in context to other events referred to in discourse. If linking is affected, this predicts problems with the more grammaticalised forms of reference (pronouns, definite NPs, and deictic devices), which relate directly to discourse-based linking. Such problems have been documented even in ASD without ID (Bartolucci et al. Reference Bartolucci, Pierce and Streiner1980; Norbury & Bishop Reference Norbury and Bishop2003; Modyanova Reference Modyanova2009; Rumpf et al. Reference Rumpf, Kamp-Becker, Becker and Kauschke2012; Banney, Harper-Hill & Arnott Reference Banney, Harper-Hill and Arnott2015). If linking is possible, in turn, the intricacies of social-interactive language, including turn-taking and the interpretation of utterances in relation to epistemic states, may or may not be neurotypical. If they are not, this may specifically result in the misuse or under-use of personal pronouns, which has been documented in ASD (Bartolucci et al. Reference Bartolucci, Pierce and Streiner1980; Mizuno et al. Reference Mizuno, Liu, Williams, Keller, Minshew and Just2011; Shield, Meier & Tager-Flusberg Reference Shield, Meier and Tager-Flusberg2015). Areas of particular interest for further investigation are discourse markers, which serve to indicate the epistemic states of the interlocutors (e.g. oh, huh); terms of address and other forms specifically geared towards conversational management (e.g. vocatives, intonational tunes); and response markers and backchannels, which serve to indicate mutual understanding and (dis)agreement (yes, no, mhm, right).

Given that to be linguistic is to master this hierarchy along the bridge, and that the autism spectrum is a language spectrum, our model thus invites a systematic mapping of the language function seen in ASD onto the different hierarchical layers of the spine, creating implicational predictions at each layer as well as for associated cognitive dysfunction as currently measured by non-linguistic variables such as ‘perspective-taking’, ‘reciprocity’, or ToM. Language can now be seen not merely as another behavioural and neurocognitive domain in which a given syndrome shows impairments, but as an integral mechanism in how and why a social-cognitive phenotype unfolds along a different path. Where this mechanism fails, alterations of this phenotype are predicted and can be rationalised on a cognitive basis that integrates language, such that linguistic typologies of cognitive disorders can be developed.

5. Conclusions

Two types of linguistic diversity mark the human linguistic phenotype: one staying within the confines of a neurotypical thought process, another co-varying with its disintegration. Our perspective suggests systematically linking such change to changes in our language capacity as modelled here. In the tradition of generative grammar, this capacity has long been conceptualised as the incarnation of a genetically driven universal capacity (termed universal grammar). But as noted at the beginning of this paper, our linguistic phenotype is actually no more universal in humans than our cognitive phenotype: both are subject to systematic variation in our species. While this is uncontroversial in the cognitive case, as the existence of countless cognitive disorders with a genetic basis attests, it is rarely recognised in the linguistic case. We have proposed here to conceptualise such variation as affecting cognitive functions rooted in grammar, where grammar, in turn, is a mechanism for turning percepts linked to lexical addresses into forms of thought that are endowed with reference and truth and that enter into social interactions. While the genetic endowment for this capacity is still labelled ‘universal grammar’ (e.g. Hinzen & Sheehan Reference Hinzen and Sheehan2015), the breakdown of such a universal grammar, on our model, is also the breakdown of species-typical cognition more broadly. If so, ‘universal grammar’ would be a misnomer: when intact, this capacity is as much a thought and social communication system as it is a grammar system. This single system may then hold a critical new key to making sense of cognitive diversity in mental disorders.