Introduction

The Defining Issues Test (DIT) is widely used in moral psychology and education to evaluate the development of moral reasoning. It measures one’s ability to apply postconventional moral reasoning when faced with moral dilemmas (Thoma, 2006). The DIT generates a P-score, a postconventional reasoning score, which quantifies one’s level of postconventional reasoning development. The score reflects the likelihood that a person uses postconventional reasoning, that is, the ability to re-evaluate existing social norms and laws based on moral principles, rather than personal interests or social norms, across different situations (Rest et al., 1999).

Despite the DIT’s wide use, concerns have been raised regarding its potential bias across gender, political, and religious groups. For instance, Gilligan (1982) argued that the model based on postconventional reasoning development might favor men over women because, from the DIT’s perspective, women are more likely to be assessed as focusing on personal interests (in fact, social relations) when solving moral dilemmas. In addition, some argue that the postconventional reasoning presented in the measure is liberal-biased, so conservative populations, including both politically and religiously conservative ones who value traditions and conventions, are likely to be unfairly penalized for their political and religious views rather than scored on their actual developmental level (Crowson & DeBacker, 2008).

Several moral psychologists have suggested that people affiliated with different political and religious groups are likely to endorse different moral foundations and, thus, to render different moral decisions based on different moral philosophical rationales (Graham et al., 2009). For instance, research on moral foundations theory has demonstrated that liberals tend to endorse individualizing foundations, whereas conservatives tend to focus on binding foundations (Graham et al., 2009). Hence, without examining whether a test of moral reasoning such as the DIT can assess moral reasoning in an unbiased manner across people with different moral views, it is impossible to ensure that the test generates reliable and valid outcomes across such people (Han et al., 2022b).

Previous studies have addressed these concerns by demonstrating that mean P-scores did not differ significantly across the different groups (Thoma, 1986; Thoma et al., 1999), or that P-scores significantly predict socio-moral judgment even after controlling for political and religious affiliations and views (Crowson & DeBacker, 2008). However, such score-based comparisons cannot address concerns regarding whether the test per se or its items are biased. To address this concern, it is necessary to conduct: first, a measurement invariance (MI) test, which examines whether a test measures a construct of interest consistently across different groups (Putnick & Bornstein, 2016); and second, a differential item functioning (DIF) test based on item response theory, which examines whether a specific item favors a specific group when the latent scores are the same (Zumbo, 1999).

Once MI is supported and no item demonstrates significant DIF across different groups, it is possible to conclude that the items in the test do not measure one’s latent ability unequally. A few previous studies have employed such methods to evaluate the cross-group validity of the DIT (Choi et al., 2019; Richards & Davison, 1992; Winder, 2009). However, their sample sizes were small, or they focused solely on specific groups (e.g., Mormons). Furthermore, the traditional DIT presented a technical challenge for conducting MI or DIF tests due to its scoring method, which uses rank-ordered responses instead of individual item ratings.

In the present study, to address the abovementioned limitations in the previous studies that examined the validity of the DIT across different groups, I tested the MI and DIF of the behavioral DIT (bDIT) with a large dataset collected from more than 1,400 participants. The bDIT, which is a simplified version of the traditional DIT, uses individual item responses to calculate one’s P-score instead of rank-ordered responses (Han et al., 2020). Consequently, conducting MI and DIF tests with the bDIT is more straightforward. In contrast to previous studies, the present study analyzed a more extensive dataset collected from participants with diverse political and religious affiliations.

Methods

Participants and data collection

Data were acquired from college students (mean age: 21.93 years; SD: 5.95 years) attending a public university in the Southern United States of America. All data collection procedures and the informed consent form were reviewed and approved by the University of Alabama Institutional Review Board (protocol number: 18-12-1842). Participants were recruited via the educational and psychological research subject pools. They signed up for the study, received a link to a Qualtrics survey form, and were given course credit as compensation.

Table 1 summarizes the demographics of the participants in terms of their gender, political, and religious affiliations, which were the main interests of this study. Due to the convergence issue associated with confirmatory factor analysis (CFA), only groups with n ≥ 100 were used for the MI and DIF tests (Han et al., 2022a). As a result, for political affiliations, Republicans, Democrats, Independents, and Others were analyzed, and, for religious affiliations, Catholics, Evangelical and Non-Evangelical Protestants, Spiritual but not religious, and Others were analyzed.
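The group-size rule above can be illustrated with a minimal Python sketch. It is not the author's code (the study's analyses were run in R); the record layout and the `party` key are hypothetical, and the sketch simply drops groups with fewer than 100 members, whereas the text does not say whether small groups were dropped or merged into "Others".

```python
from collections import Counter

MIN_GROUP_SIZE = 100  # threshold stated in the text (n >= 100)


def groups_to_analyze(affiliations, min_size=MIN_GROUP_SIZE):
    """Return the set of group labels with at least `min_size` members."""
    counts = Counter(affiliations)
    return {label for label, n in counts.items() if n >= min_size}


def filter_participants(records, key, min_size=MIN_GROUP_SIZE):
    """Keep only the records whose group (record[key]) is large enough.

    `records` is a list of dicts; `key` names the grouping field.
    Both are illustrative assumptions, not the study's data format.
    """
    keep = groups_to_analyze((r[key] for r in records), min_size)
    return [r for r in records if r[key] in keep]
```

Applying `filter_participants` separately for each grouping variable (gender, political affiliation, religious affiliation) would give one analysis sample per comparison.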

Table 1. Demographics information of participants

Measures

The bDIT and demographics survey form used in the present study (survey.docx) and the codebook (varlist.xlsx) are available in the Open Science Framework repository at https://osf.io/ybmp6/ for readers’ information.

Behavioral Defining Issues Test

The bDIT consists of three dilemmas: Heinz Dilemma, Newspaper, and Escaped Prisoner (see survey.docx in the repository for the sample test form and items). For each dilemma, participants were asked to examine whether a presented behavioral option to address the dilemma is morally appropriate or inappropriate. Then, they were presented with eight items per dilemma asking about the moral philosophical rationale supporting their decision. For each item, three rationale options were presented. Each of the three options corresponds to one of the three schemas of moral reasoning proposed in the Neo-Kohlbergian model of moral development: the personal interests, maintaining norms, and postconventional schemas. The participants were requested to choose the most important rationale.

Once the participants completed the bDIT, I examined how many postconventional options were selected out of 24 items (eight per dilemma × three dilemmas). Then, one’s P-score, which ranges from 0 to 100%, was calculated as follows:

$$P = \frac{\text{number of postconventional options selected}}{24} \times 100$$

For instance, if one selected the postconventional option as the most important rationale for 12 items, then the P-score is 50. This means that the likelihood of this person using the postconventional schema while solving moral dilemmas is 50%.
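The scoring rule described above can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than the study's scoring script: the schema labels (`"postconventional"`, `"maintaining_norms"`, `"personal_interests"`) are hypothetical names, and each participant's responses are assumed to be a list of 24 such labels.

```python
POSTCONVENTIONAL = "postconventional"  # hypothetical label for the schema
N_ITEMS = 24  # eight items per dilemma x three dilemmas


def dichotomize(choices):
    """Code each response as 1 (postconventional) or 0 (any other schema)."""
    return [1 if c == POSTCONVENTIONAL else 0 for c in choices]


def p_score(choices):
    """Percentage of the 24 items answered with the postconventional option."""
    if len(choices) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} responses, got {len(choices)}")
    return 100.0 * sum(dichotomize(choices)) / N_ITEMS
```

For example, `p_score(["postconventional"] * 12 + ["maintaining_norms"] * 12)` returns `50.0`, matching the worked example in the text.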

Demographics survey form

At the end of the survey, I presented a demographics survey form to collect participants’ demographics for MI and DIF tests across different groups. The collected demographics include gender, political, and religious affiliations (see survey.docx in the repository for the demographics survey form).

Statistical analysis

First, I evaluated the MI of the bDIT via multigroup confirmatory factor analysis (MG-CFA) implemented in an R package, lavaan (Rosseel, 2012). Whether MI was supported was examined by the extent to which the model fit indicators, that is, RMSEA, SRMR, and CFI, changed when additional constraints were added to the measurement model (Putnick & Bornstein, 2016). I tested four different levels of invariance: configural, metric, scalar, and residual invariance (refer to the Supplementary Material for additional methodological details). Because scalar invariance is minimally required for cross-group comparisons, I focused on whether this level of invariance was achieved (Savalei et al., 2015). In this process, I treated the response to each item as a dichotomous variable, that is, postconventional versus non-postconventional, because that is consistent with how the actual P-score is calculated as described above. Then, I assumed a higher-order model with three latent factors, one latent factor per dilemma (see Figure 1 for the CFA model).
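The decision logic described above, comparing fit indicators between a less constrained and a more constrained model, might be sketched as follows. This Python fragment does not fit any model; it assumes the RMSEA, SRMR, and CFI values have already been obtained (for instance, from lavaan). The cutoff arguments are deliberately left to the caller because the cutoffs used in the study are given in the Supplementary Material, not here; all names are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FitIndices:
    rmsea: float  # lower is better
    srmr: float   # lower is better
    cfi: float    # higher is better


def fit_change(less_constrained, more_constrained):
    """Change in each index when moving to the more constrained model."""
    return FitIndices(
        rmsea=more_constrained.rmsea - less_constrained.rmsea,
        srmr=more_constrained.srmr - less_constrained.srmr,
        cfi=more_constrained.cfi - less_constrained.cfi,
    )


def invariance_supported(delta, max_rmsea_increase, max_srmr_increase,
                         max_cfi_decrease):
    """True if fit did not worsen beyond the caller-supplied cutoffs.

    All three cutoffs are positive numbers chosen by the analyst.
    """
    return (
        delta.rmsea < max_rmsea_increase
        and delta.srmr < max_srmr_increase
        and delta.cfi > -max_cfi_decrease
    )
```

Running the check once per step (configural to metric, metric to scalar, scalar to residual) mirrors the sequence of nested models named in the text.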

Figure 1. Measurement model of the behavioral Defining Issues Test for confirmatory factor analysis.

Second, I performed the DIF test to investigate whether any item of the test demonstrated a statistically significant preference for a particular group compared to others, even when the latent ability, moral reasoning, was the same. To implement the DIF test, I employed the logistic ordinal regression DIF test with an R package, lordif (Choi et al., 2011), together with R code for multiprocessing that distributes the tasks across multiple processors, as previously applied in Han et al. (2022a, 2022c). Once lordif was performed, I tested whether there was any significant uniform or nonuniform DIF for each item to examine whether the item significantly favored one group over the others (see the Supplementary Material for methodological details).

All data and source code files are available in the Open Science Framework repository at https://osf.io/ybmp6/.

Results

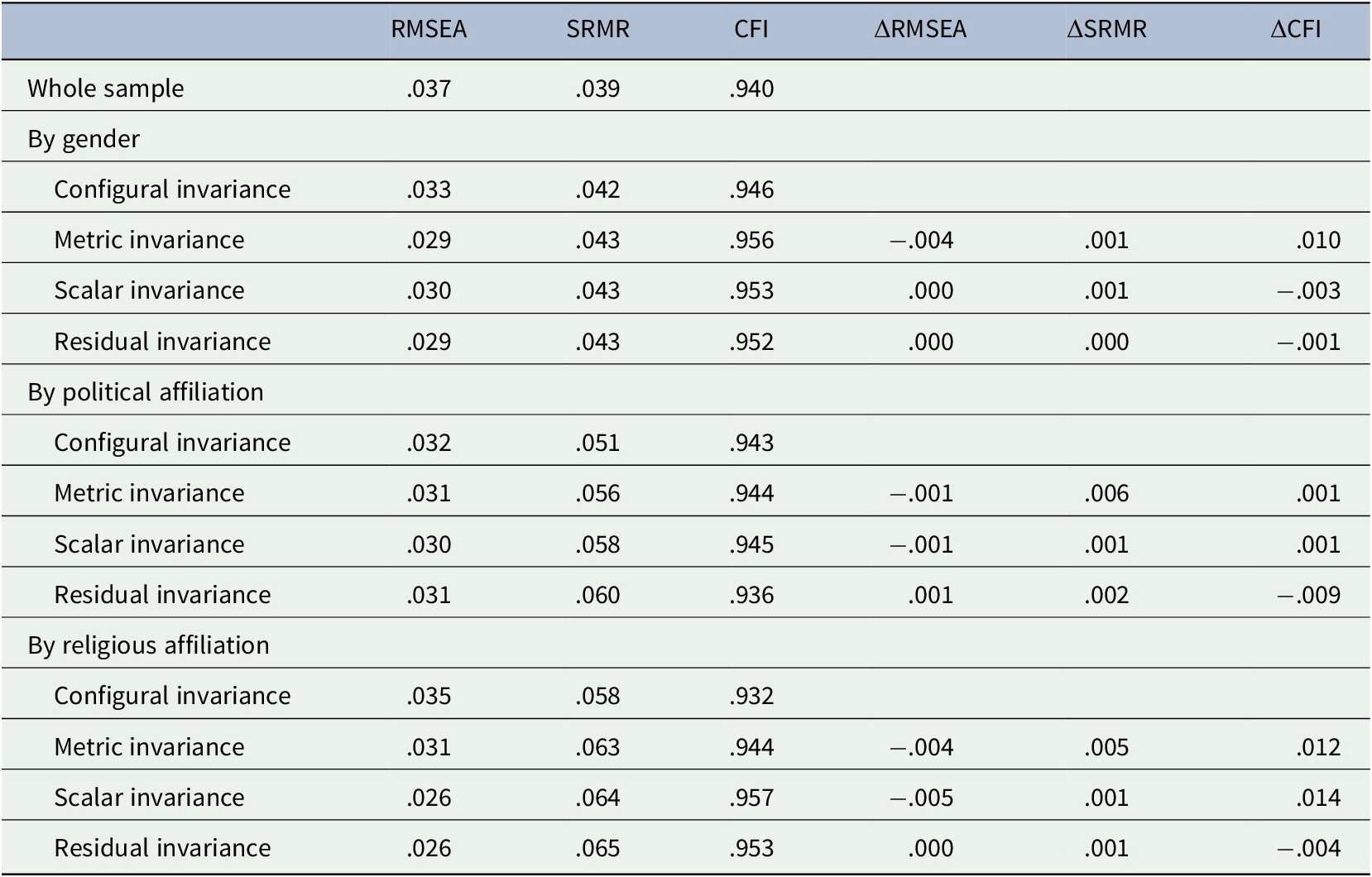

The results from the MI tests demonstrate that residual invariance, the most restrictive invariance, was supported across genders and political and religious affiliations (see Table 2). In all cases, the changes in the fit indicators did not exceed the cutoff values. The results suggest that the equal measurement model, factor loading, intercept, and residual assumptions were satisfied, so the postconventional reasoning can be measured by the bDIT consistently across the groups.

Table 2. Results from measurement invariance tests

Furthermore, the results from the DIF tests also support the point that no item in the bDIT significantly favored a specific group. Several χ² tests demonstrated significant outcomes, p < .01 (see the top panels of Figures S1–S9 in the Supplementary Material). However, in all cases, both the R² and Δβ₁ values were below the thresholds, .02 and .10, respectively (see the middle and bottom panels of Figures S1–S9 in the Supplementary Material for the R² and Δβ₁ values, respectively). The results suggest that there was no item demonstrating a practically meaningful DIF.
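The distinction drawn above between statistical significance and practical meaningfulness can be made concrete with a short Python sketch. It uses the values reported in the text (p < .01, .02 for R², .10 for Δβ₁) as defaults; the record layout, the category names, and the rule that exceeding either effect-size threshold is enough to flag an item are assumptions made for illustration, not a description of how lordif or the author's scripts classify items.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DifResult:
    item: str
    chi2_p: float       # p-value of the chi-square test for the item
    r2_change: float    # R-squared effect size reported for the item
    beta_change: float  # change in beta_1 reported for the item


def classify(result, alpha=0.01, r2_cut=0.02, beta_cut=0.10):
    """Label an item by combining the significance test with effect sizes."""
    significant = result.chi2_p < alpha
    # Assumption: either effect size reaching its threshold counts as meaningful.
    meaningful = result.r2_change >= r2_cut or result.beta_change >= beta_cut
    if significant and meaningful:
        return "practically meaningful DIF"
    if significant:
        return "statistically significant only"
    return "no DIF"
```

Under this rule, an item with a significant χ² test but R² and Δβ₁ below both thresholds is labeled "statistically significant only", which is the pattern the text reports for the flagged bDIT items.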

Discussion

In the present study, I examined whether the bDIT can measure the development of postconventional moral reasoning across different gender, political, and religious groups consistently without bias. The MI test indicated that the bDIT assessed postconventional moral reasoning consistently across heterogeneous groups at the test level. At the item level, the DIF test reported that no item significantly favored a specific group. These results suggest that the bDIT was not biased across different groups.

Given that the bDIT did not show any significant non-invariance or DIF across different gender, political, and religious groups, it would be possible to conclude that the test can consistently examine moral reasoning. The results may address the concerns related to potential gender and liberal bias in measuring postconventional moral reasoning. In the United States, in terms of political affiliations, Democrats are generally considered more liberal and more likely to endorse individualizing moral foundations than Republicans (Han et al., 2022b). In the case of religious affiliations, Evangelical Protestants are generally considered more conservative and more likely to support binding foundations than other religious groups (Sutton et al., 2020). In the present study, I examined the validity evidence of the bDIT among these groups with diverse political and religious views, which are inseparable from moral standpoints. Hence, moral psychologists and educators may employ the bDIT to test participants’ developmental levels of moral reasoning.

However, several limitations of the present study should be acknowledged. First, the study was conducted solely within the United States and, thus, further data should be gathered from a more diverse range of cultural contexts while also taking into consideration the different political and religious factors that exist within different countries. Second, the study relied on self-reported political and religious affiliations, which may not fully represent participants’ actual political and religious views. Such variables are categorical and may not accurately capture the complexities of an individual’s beliefs. Finally, while the present study tested the cross-group validity of the bDIT and the bDIT is a reliable and valid proxy for the original DIT (e.g., Choi et al., 2019; Han et al., 2020), it is necessary to examine whether the same level of validity can be supported for the original DIT, that is, the DIT-1 and DIT-2. This can be achieved by administering the original DIT with large, diverse samples (e.g., Choi et al., 2020) and gathering additional demographic information.

Open peer review

To view the open peer review materials for this article, please visit http://doi.org/10.1017/exp.2023.6.

Supplementary materials

To view supplementary material for this article, please visit http://doi.org/10.1017/exp.2023.6.

Data availability statement

All data and source code files are available in the Open Science Framework repository at https://osf.io/ybmp6/.

Authorship contributions

H.H. conceived and designed the study, performed statistical analyses, and wrote the article.

Funding statement

This research received no specific grant from any funding agency, commercial, or not-for-profit sectors.

Conflict of interest

The author declares none.

Ethical standards

The author asserts that all procedures contributing to this work comply with the ethical standards of the relevant national and institutional committees on human experimentation and with the Helsinki Declaration of 1975, as revised in 2008. All data collection procedures and the informed consent form were reviewed and approved by the University of Alabama Institutional Review Board (protocol number: 18-12-1842).

Comments

Comments to the Author: GENERAL COMMENTARY:

This work addresses a long-standing critique of the DIT: that it is biased in favor of certain groups. It addresses this critique well, using a large and heterogeneous sample. I do wonder if readers would be interested in the extent to which the present results can be extended to the DIT-1 and DIT-2. And if not, might the author suggest that future research should conduct similar studies with these iterations of the instrument (using large & heterogeneous samples)?

VERY MINOR EDITS:

Wording of the first paragraph of introduction is a bit difficult to follow.