INTRODUCTION

Before conclusions are reached about a patient’s cognitive abilities and psychological symptoms, it is vital that the assessment validity of the neuropsychological evaluation is established. Assessment validity is defined here as “the accuracy or truthfulness of an examinee’s behavioral presentation, self-reported symptoms, or performance on neuropsychological measures” (Bush et al., 2005, p. 420; Larrabee, 2015). Phrased otherwise, assessment validity pertains to the credibility of the clinical presentation. To determine assessment validity, specific tools have been developed: (1) symptom validity tests (SVTs) that measure whether a person’s complaints reflect his or her true experience of symptoms and (2) performance validity tests (PVTs) that measure whether a person’s test performance reflects his or her actual cognitive ability (Larrabee, 2015; Merten et al., 2013). In the validation of these validity tests, the cut-scores to classify non-credible presentation are traditionally set at a specificity of ≥.90 to safeguard against a false-positive classification of a credible presentation of symptoms and cognitive abilities as non-credible (Boone, 2013, Chapter 2). Also, a “two-test failure rule” (i.e., any pairwise failure on validity tests) has been recommended as a criterion to identify non-credible presentations, particularly in samples with low base rates of non-credible symptom reports and cognitive test performance (Larrabee, 2008; Lippa, 2018; Victor, Boone, Serpa, Buehler, & Ziegler, 2009). The post-test probability that a validity test failure is due to a non-credible presentation depends on the base rate (Rosenfeld et al., 2000). For example, when one validity test with a specificity of .90 and a sensitivity of .80 is failed in a sample with a base rate of .10, the post-test probability that the failure is due to non-credible responding is only .47. Therefore, if the base rate is not taken into account, one runs the risk of misinterpreting a validity test failure in low base rate samples.
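The arithmetic behind the .47 figure can be reproduced with Bayes' theorem. The following is a minimal sketch, not part of the study's analysis; the function name `post_test_probability` is chosen here for illustration, and the input values are the ones given in the example above.

```python
def post_test_probability(sensitivity: float, specificity: float, base_rate: float) -> float:
    """Probability that a single validity test failure reflects non-credible responding."""
    # Proportion of the sample that is non-credible and fails the test.
    true_positives = sensitivity * base_rate
    # Proportion of the sample that is credible but fails the test anyway.
    false_positives = (1.0 - specificity) * (1.0 - base_rate)
    return true_positives / (true_positives + false_positives)


# Values from the example in the text: specificity .90, sensitivity .80, base rate .10.
p = post_test_probability(sensitivity=0.80, specificity=0.90, base_rate=0.10)
print(round(p, 2))  # 0.47
```

With these inputs, 8% of the sample are true positives and 9% are false positives, so fewer than half of all failures stem from non-credible responding.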

The usefulness of PVTs and SVTs has been demonstrated in numerous studies, in particular in patients in whom external incentives are present (Bianchini, Curtis, & Greve, 2006; Boone, 2013, Chapter 2), but also in psychological assessments of clinically referred patients (Dandachi-FitzGerald, van Twillert, van de Sande, van Os, & Ponds, 2016; Locke, Smigielski, Powell, & Stevens, 2008). Because of these findings, assessment validity has now become an integral part of a neuropsychological evaluation (Hirst et al., 2017).

Motivational deficiency due to cerebral pathology is one of several potential limiting, but insufficiently researched, factors. In particular, concerns have been raised about the potential influence of apathy on PVTs. An apathetic patient may not be able to invest sufficient effort into testing, and consequently may be wrongfully classified by the PVT as non-credible (Bigler, 2015).

Cognitive impairments are another potential limiting factor. PVTs, for example, still require a minimum of cognitive abilities to be performed normally. Likewise, the SVTs require a minimum level of reading and verbal comprehension to grasp the items. Although the role of cognitive impairment has been studied more than the role of motivational deficiencies, the scientific body of knowledge on this topic is still limited.

The current study aims to extend previous studies on the critical limits of validity test performance. To the best of our knowledge, no study has yet examined the accuracy of both types of tests, PVTs and SVTs, in a relatively homogeneous and large sample of patients with cognitive impairment and apathy. We hypothesize that in this sample the false-positive rate of the individual validity tests will be unacceptably high (i.e., >10%), and related to both cognitive impairment and apathy. We further hypothesize that the PVTs require relatively more motivational effort and cognitive abilities to perform than the SVTs, and are therefore more susceptible to apathy and cognitive impairment. In addition, we examine the accuracy of the “two-test failure rule” to identify non-credible presentations of symptoms and cognitive abilities. We hypothesize that the false-positive rate of this classification rule will be within acceptable limits (i.e., <10%), and that the rule thereby constitutes a superior approach to determining assessment validity within patient samples with raised levels of cognitive impairment and apathy.

METHOD

Sample

The study followed a cross-sectional, between-groups design. All patients were clinically evaluated at the Maastricht University Medical Centre. Patients with Parkinson’s disease (PD) were referred by the neurologist or psychiatrist for neuropsychological assessment. The referral reason was either to generally evaluate their cognitive functioning or as part of a preoperative screening to determine their eligibility for deep brain stimulation (DBS). No patients with already-implanted DBS systems were included. Patients with mild cognitive impairment (MCI) or dementia were seen at the memory clinic of the hospital for diagnostic evaluation. The final clinical diagnosis was made in a multidisciplinary team and based on multiple sources of information such as third-party information (e.g., a spouse or child was interviewed on the presence of symptoms and impairment in daily functioning), neuropsychological assessment, psychiatric and neurological evaluation, and brain imaging (e.g., MRI scan). The patients evaluated at the memory clinic were participating in a larger research project following the course of cognitive decline (Aalten et al., 2014).

Inclusion criteria were: (1) a clinical diagnosis of MCI or dementia based on the National Institute of Neurological and Communicative Disorders and Stroke – Alzheimer's Disease and Related Disorders Association (NINCDS–ADRDA) criteria (Albert et al., 2011; Dubois et al., 2007) or a diagnosis of PD according to the Queen Square Brain Bank criteria (Lees, Hardy, & Revesz, 2009); (2) mental competency to give informed consent; (3) native Dutch speaker; (4) a minimum of 8 years of formal schooling, and no history of mental retardation. Exclusion criteria were: (1) comorbid major depressive disorder as defined by the Diagnostic and Statistical Manual of Mental Disorders-IV (DSM-IV) criteria (American Psychiatric Association, 2000); (2) other neurological diseases (e.g., epilepsy and multiple sclerosis); (3) a history of acquired brain injury (e.g., cerebrovascular accident and cerebral contusion); (4) involvement in juridical procedures (e.g., litigation).

Measures

Performance validity tests

Test of Memory Malingering (TOMM)

The TOMM is a 50-item forced-choice picture recognition task (Tombaugh, 2006). We used the cut-score of 45 on the second recognition trial. In the validation studies, this score was associated with a specificity of 1.00, and a sensitivity of .90 in a sample of healthy controls either instructed to malinger brain injury or to perform honestly (Tombaugh, 2006). Further, the validation studies showed a specificity of .92 in a sample of patients with dementia (N = 37), and of .97 in a clinical sample of patients with cognitive impairment, aphasia, and traumatic brain injury (N = 108) (Tombaugh, 2006).

Dot Counting Test (DCT)

The DCT requires the participant to count grouped and ungrouped dots as quickly as possible. An effort index (i.e., E-score) is calculated from the response time and number of errors. We used the standard cut-score of 17, which in validation studies was associated with a specificity of .90 and a sensitivity of .79 (Boone et al., 2002). We also applied the recommended cut-score for mild dementia (i.e., E-score ≥ 22). In a sample of 16 patients with mild dementia this cut-score was associated with a specificity of .94 and a sensitivity of .62.

Symptom validity test

Structured Inventory of Malingered Symptomatology (SIMS)

The SIMS is a 75-item self-report questionnaire addressing bizarre and/or rare symptoms that are rated on a dichotomous (yes-no) scale. A study of the Dutch research version of the SIMS with a group of 298 participants revealed a specificity of .98 and sensitivity of .93 with a cut-score of 16 (Merckelbach & Smith, 2003). A recent meta-analysis recommended raising the cut-score to 19 in clinical samples (van Impelen, Merckelbach, Jelicic, & Merten, 2014). Therefore, we provide information on both the standard cut-score of >16 and the recommended cut-score of >19.

Clinical measures

Apathy Evaluation Scale (AES)

The AES consists of 18 items phrased as questions that are answered on a four-point Likert scale (Marin, Biedrzycki, & Firinciogullari, 1991). The AES is a reliable and valid measure to screen for and to assess the severity of apathy in PD and dementia (Clarke et al., 2007; Leentjens et al., 2008). We used the clinician-rated version of the AES, and a cut-score of 38/39 to identify clinical cases of apathy (Pluck & Brown, 2002).

Mini Mental State Examination (MMSE)

As a global index for cognition, we used the MMSE (Folstein, Folstein, & McHugh, 1975), with the standard cut-score of <24 to identify clinical cases of cognitive impairment.

Procedure

The study was approved by the Medical Ethical Committee of Maastricht University Medical Centre (MEC 10-3-81). All patients received an information letter and informed consent form. Patients with PD were informed about the study by the nurse practitioner or the referring psychiatrist or neurologist. Patients with MCI or dementia were participating in a larger research project for which a generic information brochure for participants was constructed in order to prevent confusion and information overload (Aalten et al., 2014). For all patients there was a minimum of 1 week of reflection time before entering the study. When patients provided informed consent, the validity tests were included in the neuropsychological test battery. The AES and the MMSE were already part of the test battery.

Data Analysis

Data were checked for errors, missing data, outliers, and score distributions. Missing data were excluded pairwise per analysis. Outliers were not removed from the dataset. First, descriptive statistics were calculated for the total sample and for each diagnostic category. Differences between the three diagnostic groups on the dependent variables were examined with one-way ANOVA and post-hoc Bonferroni-corrected pairwise comparisons (age), chi-square analysis (gender, education), and Kruskal-Wallis tests with post-hoc Dunn-Bonferroni pairwise comparisons (AES and MMSE). Second, the percentage of participants failing the TOMM, DCT, and SIMS was calculated. Before determining the accuracy of the “two-test failure rule,” the nonparametric bivariate correlation between the validity tests was calculated to make sure that the individual tests were not highly correlated, in order to avoid inflation of type I error (Larrabee, 2008). We calculated the number of validity tests failed. All calculations were conducted twice, with the standard cut-scores on the SIMS (>16) and DCT (≥17), and with the adjusted cut-scores on the SIMS (>19) and DCT (≥22). Third, with Fisher’s exact tests we compared the frequency of TOMM, DCT, and SIMS failures in the group of patients with (i.e., AES ≥ 39) and without apathy (i.e., AES < 39), and in the group of patients with (i.e., MMSE < 24) and without a significant degree of cognitive impairment (i.e., MMSE ≥ 24). Analyses were performed with SPSS version 23.
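The classification step described above can be sketched in a few lines. This is an illustration only, not the SPSS procedure used in the study; the function names, the dictionary layout, and the patient scores are hypothetical, whereas the cut-scores are the ones stated in the text.

```python
# Cut-scores as stated in the Measures and Data Analysis sections.
TOMM_CUT = 45                             # fail if TOMM trial 2 < 45
STANDARD_CUTS = {"dct": 17, "sims": 16}   # fail if DCT E-score >= 17, SIMS > 16
ADJUSTED_CUTS = {"dct": 22, "sims": 19}   # fail if DCT E-score >= 22, SIMS > 19


def failed_tests(tomm2, dct_e, sims, cuts):
    """Return the names of the validity tests failed under the given cut-scores."""
    failures = []
    if tomm2 < TOMM_CUT:
        failures.append("TOMM")
    if dct_e >= cuts["dct"]:
        failures.append("DCT")
    if sims > cuts["sims"]:
        failures.append("SIMS")
    return failures


def flagged_by_two_test_rule(tomm2, dct_e, sims, cuts):
    """Two-test failure rule: non-credible only if at least two tests are failed."""
    return len(failed_tests(tomm2, dct_e, sims, cuts)) >= 2


# Hypothetical patient scores, invented for illustration (not study data).
patient = {"tomm2": 44, "dct_e": 18, "sims": 10}
print(failed_tests(**patient, cuts=STANDARD_CUTS))              # ['TOMM', 'DCT']
print(flagged_by_two_test_rule(**patient, cuts=STANDARD_CUTS))  # True
print(flagged_by_two_test_rule(**patient, cuts=ADJUSTED_CUTS))  # False
```

The sketch assumes all three scores are available, which mirrors the restriction of the two-test analysis to patients who completed all three validity tests.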

RESULTS

Demographics and Psychometrics

In total, 145 patients were assessed, of whom 7 were excluded because of too much missing data. In the final sample (N = 138) there were 8 (5.8%) missing records for the AES, 14 (10.1%) for the TOMM, 16 (11.6%) for the DCT, and 18 (13.0%) for the SIMS. Missing item scores on the SIMS were imputed with the mean item score of that person when no more than 15 item scores in total and no more than 3 item scores per subscale were missing. There were three extreme outliers on the TOMM, and no extreme outliers on the SIMS extrapolated total score or on the DCT. Most variables, that is, the TOMM, DCT, SIMS, MMSE, and the AES, were not normally distributed.

As can be seen in Table 1, the sample consisted of 138 participants (56.5% male) with a mean age of 71.9 years (SD = 9.1, range: 48–89). In total, 56 patients were diagnosed with dementia, 41 patients with MCI, and 41 patients with PD. Of the 41 PD patients, 9 (22%) were referred for a neuropsychological evaluation as part of the preoperative screening to determine the eligibility for DBS. Gender [χ2(2) = .62, p = .74] and education [χ2(4) = 5.19, p = .27] did not differ significantly between the three diagnostic groups (i.e., dementia, MCI, and PD). There was a group difference in age [F(2, 135) = 35.10, p < .001]. The group of patients with PD was younger than the two other diagnostic groups. The mean rank MMSE score [H(2) = 59.10, p < .01] and the mean rank AES score [H(2) = 24.51, p < .01] differed between the three diagnostic groups. As expected, the mean rank MMSE score was lowest in the patients with dementia, followed by the group of patients with MCI, and then the group of patients with PD (all ps < .01). Patients with dementia had a higher mean rank AES score than the group of patients with MCI (p < .05) and the group of patients with PD (p < .01), who did not differ from each other (p = .12). Regarding the validity tests, the mean rank TOMM score was the lowest in the group of patients with dementia, followed by the group of patients with MCI, and then the group of patients with PD (all ps < .05). The mean rank DCT E-score was higher in the group of patients with dementia than in the group of patients with PD (p < .01). The mean rank SIMS score did not significantly differ between the three groups.

Table 1. Demographics and psychometric data

Notes: MCI = mild cognitive impairment; PD = Parkinson’s disease; CDR = clinical dementia rating; AES = Apathy Evaluation Scale; MMSE = Mini Mental State Exam; TOMM 2 = Test of Memory Malingering second recognition trial; DCT E-score = Dot Counting Test Effort-score; SIMS = Structured Inventory of Malingered Symptomatology; n/a = not applicable; a = one-way ANOVA; b = χ2; c = Kruskal-Wallis test; * Low = at most primary education; medium = junior vocational training; high = senior vocational or academic training (Van der Elst et al., 2005).

False-Positive Rate of the Individual Validity Tests

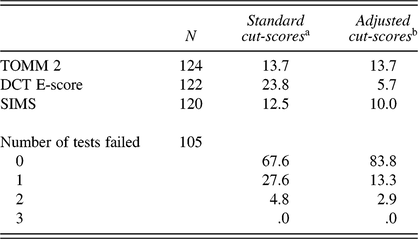

Table 2 shows that 17 of the 124 participants who completed the TOMM scored below the cut-score of 45 on the second recognition trial. In total, 120 participants completed the SIMS, of whom 12 and 15 patients obtained a deviant score using a cut-score of >19 or >16, respectively. As for the DCT, 29 of the 122 participants who completed the test scored at or above the standard cut-score of 17, whereas only 7 participants failed when using the adjusted cut-score of 22.

Table 2. Failure rate (%) of the validity tests

Notes: TOMM 2 = Test of Memory Malingering recognition trial 2; DCT E-score = Dot Counting Test Effort-score; SIMS = Structured Inventory of Malingered Symptomatology; a = TOMM recognition trial 2 < 45, SIMS total score > 16, DCT E-score ≥ 17; b = TOMM recognition trial 2 < 45, SIMS total score > 19, DCT E-score ≥ 22.

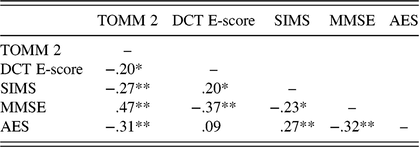

False-Positive Rate of the “Two-Test Failure Rule”

Table 3 shows that the TOMM, DCT, and SIMS were at most weakly correlated. Of the 105 patients who completed all three validity tests, most passed all three (83.8% with the adjusted cut-scores), and none failed all three tests (Table 2). A substantial number of participants failed one validity test: 27.6% with the standard cut-scores, and 13.3% with the adjusted cut-scores on the SIMS and DCT. By contrast, only 3 (adjusted cut-scores) to 5 (standard cut-scores) of the 105 patients failed two validity tests. Thus, the “two-test failure rule” correctly classified 95–97% as credible.
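The 95–97% range follows directly from the counts reported above. A minimal sketch of that arithmetic is given below; the function name `correct_classification_rate` is chosen here for illustration, and the calculation assumes, as the study does, that all 105 presentations were credible.

```python
def correct_classification_rate(n_flagged: int, n_total: int) -> float:
    """Share of presumed-credible patients not flagged by the two-test failure rule."""
    return 1.0 - n_flagged / n_total


N_COMPLETE = 105  # patients who completed all three validity tests

# Number of patients failing two tests, as reported in the text.
two_test_failures = {"adjusted cut-scores": 3, "standard cut-scores": 5}

for label, n_flagged in two_test_failures.items():
    rate = correct_classification_rate(n_flagged, N_COMPLETE)
    print(f"{label}: {rate:.1%}")
# adjusted cut-scores: 97.1%
# standard cut-scores: 95.2%
```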

Table 3. Spearman’s rho correlation between the validity tests and clinical measures

Notes: SVTs = symptom validity tests; TOMM 2 = Test of Memory Malingering recognition trial 2; DCT E-score = Dot Counting Test Effort score; SIMS = Structured Inventory of Malingered Symptomatology; MMSE = Mini Mental State Examination; AES = Apathy Evaluation Scale; *p < .05; **p < .01.

Relationship between Validity Test Failure, Apathy, and Cognitive Impairment

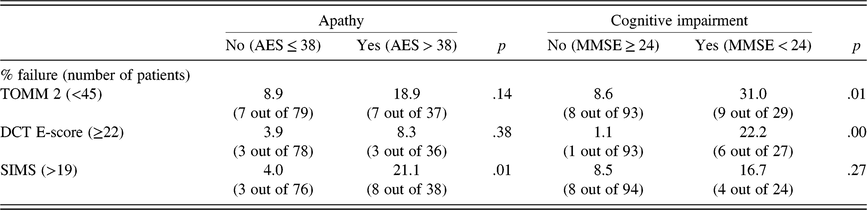

Fisher’s Exact Test (FET) revealed no significant difference between the group of patients with and without apathy in failure rate on the TOMM (19% vs. 9%; p = .14) and on the DCT (8% vs. 4%; p = .38) (see Table 4). On the SIMS, however, the failure rate of patients with apathy was much higher than that of patients without apathy (21% vs. 4%; p = .01) (see Table 4). Regarding cognitive impairment, the opposite pattern occurred. Patients with cognitive impairment on the MMSE failed more frequently than those without cognitive impairment on the TOMM (31% vs. 9%; p = .01) and on the DCT (22% vs. 1%; p < .01). Patients with and without cognitive impairment did not differ in the frequency of failure on the SIMS (17% vs. 9%; p = .26). Using the standard cut-scores for the DCT (≥17) and SIMS (>16) did not change the pattern of significant and non-significant statistical test results.

Table 4. Relation validity test failure with apathy and cognitive impairment

Notes: AES = Apathy Evaluation Scale; MMSE = Mini Mental State Exam; TOMM 2 = Test of Memory Malingering recognition trial 2; DCT E-score = Dot Counting Test Effort-score; SIMS = Structured Inventory of Malingered Symptomatology.

DISCUSSION

Our study shows that the failure rate on the individual validity tests ranged between 12.6% and 23.8%. Raising the cut-scores for the SIMS and the DCT yielded lower false-positive rates. In particular, the DCT retained a satisfactory accuracy with adjustment of the cut-score, namely .94 correct classification of valid assessment results. With the recommended cut-score of >19, the SIMS attained an accuracy of .90.

We found a differential sensitivity of the PVTs and SVTs for cognitive impairment and apathy. Failure on the PVTs, the TOMM and the DCT, was related to cognitive impairment: the accuracy remained above .90 in patients who scored 24 or higher on the MMSE, whereas it dropped to .69 for the TOMM, and to .78 for the DCT in patients with an MMSE score lower than 24. This finding is in line with other studies that found a lower MMSE score to be related to PVT failure in genuine patient samples (McGuire, Crawford, & Evans, 2019; Merten, Bossink, & Schmand, 2007). Comparable to our findings is a recent study that examined failure on a PVT, the Word Memory Test, in a sample of 30 patients with PD and essential tremor who were neuropsychologically evaluated as part of the screening for the indication for DBS (Rossetti, Collins, & York, 2018). These patients had a motivation to perform well, since clinically relevant cognitive impairment might jeopardize their candidacy for the surgical intervention of DBS. The accuracy of the PVT in this sample was .90. Similarly, in patient samples with other neurological disorders such as sickle cell disease (Dorociak, Schulze, Piper, Molokie, & Janecek, 2018) and Huntington disease (Sieck, Smith, Duff, Paulsen, & Beglinger, 2013), the accuracy of stand-alone PVTs was ≥.90. So, the evidence points toward a critical limit of moderate to severe cognitive impairment for PVTs. Note that the mere presence of a neurological condition does not automatically mean that the PVTs are no longer valid. Also, the PVTs have been shown to differ in their sensitivity to cognitive impairment. In our study the DCT had a higher failure rate than the TOMM, which might be explained by the difference in working memory load between the two tests (Merten et al., 2007).

Failure on the PVTs was not related to apathy. The false-positive rate of the TOMM and the DCT did not significantly differ between patients with and without clinical levels of apathy. Thus our findings are not supportive of the notion that apathy might lead to an increased risk of false-positive classification of PVTs.

Intriguingly, failure on the SVT, the SIMS, showed the opposite pattern from the PVTs: failure was related to apathy, but not to cognitive impairment. To the best of our knowledge, this is the first study to examine the limits of an SVT in a sample of patients with cognitive impairment and apathy. These first, and therefore preliminary, results are reassuring that cognitive impairment in patients with dementia, MCI, or PD does not directly lead to an inability to understand and answer the questions of the SIMS. This suggests that in neuropsychological assessments the SIMS is an instrument that can be used to measure the validity of self-reported symptoms, even in neurological populations. Although in need of replication, apathy might be a critical limit for the SIMS.

The second hypothesis is supported by the data. The decision rule that at least two validity tests should be failed for the determination of non-credibility ensured a high rate of correct classification of the assessment validity (.95). Note that this holds true when using the standard cut-scores on the SIMS and DCT. Moreover, the “two-test failure rule” with the standard cut-scores on the validity tests yielded fewer false positives than the individual tests with the adjusted cut-scores. Thus, our findings suggest that the “two-test failure rule” is superior to adjustment of cut-scores when it comes to maintaining adequate accuracy in patient samples with genuine psychopathology. This is an important finding, because customizing cut-scores for different patient groups poses specific problems. In general, a higher specificity of an individual validity test comes at the price of a lower sensitivity. For example, the sensitivity of the DCT to correctly classify a person who is feigning cognitive impairment is substantially lower with a cut-score of 22 (i.e., .62) than with the standard cut-score of 17 (i.e., .79) (Boone et al., 2002). Further, it is often not evident beforehand whether cognitive impairment or psychopathology is present. The reason why psychological assessment is requested is actually to objectify the presence of cognitive impairment and psychopathology. To avoid hindsight bias, the clinician will have to select the appropriate cut-score before psychological testing. It is questionable whether this approach is feasible in clinical practice. Relatedly, selection of the cut-score for the validity test in an individual psychological assessment leaves the door wide open to discussion about the appropriateness of the chosen cut-score, and consequently of the classification of the assessment validity. This complicates the diagnostic assessment and clinical decision making.

Our findings on the accuracy of the “two-test failure rule” to classify non-credible test performance compare favorably with those of Davis (2018), who studied a very large sample of older patients diagnosed with normal cognition, cognitive impairment, MCI, and dementia. In that study 13.2% of the patients with MCI and 52.8% of the patients with dementia failed two validity tests, and their performance was, therefore, wrongfully classified by the “two-test failure rule” as non-credible. A plausible explanation for these divergent findings relates to the type of validity measures used. In the study of Davis, five embedded indicators of performance validity were used, with a higher average intercorrelation between measures (i.e., .41) than the freestanding validity tests in our study (i.e., .22). The “two-test failure rule” is based on the chaining of likelihood ratios (Larrabee, 2008) and can be applied only when the different validity measures are independent. Also, the embedded indicators are generally more susceptible to cognitive impairment than the freestanding validity tests (Merten et al., 2007; Loring et al., 2016).
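Chaining of likelihood ratios can be illustrated with a short calculation: the pre-test odds are multiplied by the positive likelihood ratio of each failed test, which is only legitimate if the tests are independent. The sketch below is a hedged illustration, not the procedure of Larrabee (2008) as published; the function names are hypothetical, and the input values (sensitivity .80, specificity .90, base rate .10) are the illustrative ones from the Introduction rather than properties of the TOMM, DCT, or SIMS.

```python
def positive_likelihood_ratio(sensitivity: float, specificity: float) -> float:
    """How much more likely a failure is in non-credible than in credible examinees."""
    return sensitivity / (1.0 - specificity)


def chained_post_test_probability(base_rate: float, likelihood_ratios: list[float]) -> float:
    """Post-test probability after chaining the likelihood ratios of independent failures."""
    odds = base_rate / (1.0 - base_rate)  # pre-test odds
    for lr in likelihood_ratios:
        odds *= lr                        # valid only if the tests are independent
    return odds / (1.0 + odds)


lr = positive_likelihood_ratio(sensitivity=0.80, specificity=0.90)  # approximately 8

one_failure = chained_post_test_probability(0.10, [lr])
two_failures = chained_post_test_probability(0.10, [lr, lr])
print(round(one_failure, 2))   # 0.47
print(round(two_failures, 2))  # 0.88
```

Under these assumed values, a second independent failure raises the post-test probability from .47 to .88. When measures are substantially intercorrelated, as in the embedded indicators discussed above, the second failure carries less independent information and this multiplication overstates the evidence.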

Our study is not without limitations. Importantly, there is no gold standard available for the determination of assessment validity. Therefore, strictly speaking, we cannot exclude the possibility that a failure on a validity test was actually a true positive score. This is a limitation not only of our study but of the field of assessment validity research in general. However, we excluded patients who held external incentives, and the clinical diagnosis was based on multiple sources of information besides neuropsychological assessment (e.g., third-party information on daily functioning and brain imaging). Therefore, we are confident that the assessment validity of the neuropsychological evaluation can be assumed in the vast majority of the patients. Furthermore, our findings only hold for the constellation of validity tests we used and cannot be automatically extrapolated to other validity tests or combinations of validity tests. Therefore, replication as well as research on different validity tests is called for.

Of note, the two-test failure rule based on chaining of likelihood ratios seems appropriate in samples with low base rates of non-credible responding (Larrabee, 2008; Lippa, 2018). In specific samples, for example, in patients with no or only mild neurological impairment seen in a forensic context, a heightened base rate of non-credible clinical presentations might be expected, and here even a single validity test failure should raise concern about the validity of the obtained test data (Proto et al., 2014; Rosenfeld et al., 2000).

In conclusion, the results show that in a sample of older patients diagnosed with MCI, dementia, or PD, failing one validity test is not uncommon, and that the validity tests are differentially sensitive to cognitive impairment and apathy. However, also in this sample, it remains rare to score abnormally on two independent validity tests. Therefore, using the “two-test failure rule” is probably the better way to identify non-credibility. More generally, the implication is that in clinical assessments, validity tests can be used without running an unacceptably high risk of incorrectly classifying a genuine presentation of symptoms and cognitive test scores as non-credible. The study findings can serve as contextual background information for a psychological assessment, in which failure on two independent validity tests in an examinee who does not have moderate to severe cognitive impairment or clinically relevant apathy due to a neurological condition can most likely be classified as an invalid assessment.

ACKNOWLEDGEMENT

We thank Fleur Smeets-Prompers for her contribution to the collection of data.

FINANCIAL SUPPORT

This research received no specific grant from any funding agency, commercial or not-for-profit sectors.

CONFLICT OF INTEREST

The authors have nothing to disclose.

PROVENANCE AND PEER REVIEW

Not commissioned; externally peer reviewed.

CONTRIBUTORSHIP STATEMENT

BDF contributed to the study design, data collection, and data analysis, and wrote the first draft of the article; RP commented on earlier drafts and contributed to the design, data collection, and analysis; AD, AL, and FV were involved in the data collection and commented on earlier drafts.

DATA SHARING STATEMENT

The data underlying this study have been uploaded to the Maastricht University Dataverse and are accessible using the following link: https://hdl.handle.net/10411/8LKJF9