1. Introduction

Communication comes in a style. Be it in language, visual arts or music, the things that people express have a content—what is to be conveyed, and a style—how that is done. These two concepts are evident in the Shakespearean verses “By the pricking of my thumbs, Something wicked this way comes” (Macbeth, Act 4, Scene 1.), where the content (i.e., the foreseeing of an evil future) is encoded in the slant rhyme with peculiar rhythm and unusual vocabulary choices. Style is thus the form given to a core piece of information, which collocates it into some distinctive communicative categories. For instance, we perceive that the above example is a poem, and specifically, one written in an old variety of English.

The binomial of content and style is interesting from a computational perspective because content can be styled in a controlled manner. By considering these two variables, many studies have dealt with the automatic generation of texts (Gatt and Krahmer Reference Gatt and Krahmer2018), images (Wu, Xu, and Hall Reference Wu, Xu and Hall2017), and music (Briot, Hadjeres, and Pachet Reference Briot, Hadjeres and Pachet2020) that display a number of desired features. Works as such create content from scratch and combine it with style, while a kin line of research transforms styles starting from an already existing piece of content. The rationale is: if style and content are two and separate, one can be modified and the other kept unaltered. This practice is pervasive among humans as well. It can be observed, for instance, any time they give an inventive twist to their utterances and creations (e.g., when conveying a literal gist through a metaphor, or when painting by imitating Van Gogh’s singular brush strokes). The field of vision has achieved remarkable success in changing the styles of images (Gatys, Ecker, and Bethge Reference Gatys, Ecker and Bethge2016), and following its footsteps, natural language processing (NLP) has risen to the challenge of style transfer in text.

1.1 Style transfer in text: task definition

The goal of textual style transfer is to modify the style of texts while maintaining their initial content (i.e., their main meaning). More precisely, style transfer requires the learning of

![]() $p(t'\mid s,t)$

: a text

$p(t'\mid s,t)$

: a text

![]() $t'$

has to be produced given the input

$t'$

has to be produced given the input

![]() $t$

and a desired stylistic attribute

$t$

and a desired stylistic attribute

![]() $s$

, where

$s$

, where

![]() $s$

indicates either the presence or the absence of such an attributeFootnote

a

with respect to

$s$

indicates either the presence or the absence of such an attributeFootnote

a

with respect to

![]() $t$

. For example, if

$t$

. For example, if

![]() $t$

is written in a formal language, like the sentence “Please, let us know of your needs”, then

$t$

is written in a formal language, like the sentence “Please, let us know of your needs”, then

![]() $s$

may represent the opposite (i.e., informality), thus requiring

$s$

may represent the opposite (i.e., informality), thus requiring

![]() $t'$

to shift towards a more casual tone, such as “What do you want?”. Therefore, style transfer represents an effort towards conditioned language generation and yet differs from this broader task fundamentally. While the latter creates text and imposes constraints over its stylistic characteristics alone, the style transfer constraints relate to both style, which has to be different between input and output, and content, which has to be similar between the two—for some definition of “similar”. In short, a successful style transfer output checks three criteria. It should exhibit a different stylistic attribute than the source text

$t'$

to shift towards a more casual tone, such as “What do you want?”. Therefore, style transfer represents an effort towards conditioned language generation and yet differs from this broader task fundamentally. While the latter creates text and imposes constraints over its stylistic characteristics alone, the style transfer constraints relate to both style, which has to be different between input and output, and content, which has to be similar between the two—for some definition of “similar”. In short, a successful style transfer output checks three criteria. It should exhibit a different stylistic attribute than the source text

![]() $t$

, it needs to preserve its content, and it has to read as a human production (Mir et al. Reference Mir, Felbo, Obradovich and Rahwan2019).

$t$

, it needs to preserve its content, and it has to read as a human production (Mir et al. Reference Mir, Felbo, Obradovich and Rahwan2019).

1.2 Applications and challenges

Style transfer lends itself well for several applications. For one thing, it supports automatic linguistic creativity, which has a practical entertainment value. Moreover, since it simulates humans’ ability to switch between different communicative styles, it can enable dialogue agents to customize their textual responses for the users and to pick the one that is appropriate in the given situation (Gao et al. Reference Gao, Zhang, Lee, Galley, Brockett, Gao and Dolan2019). Systems capable of style transfer could also improve the readability of texts by paraphrasing them in simpler terms (Cao et al. Reference Cao, Shui, Pan, Kan, Liu and Chua2020) and help in this way non-native speakers (Wang et al. Reference Wang, Wu, Mou, Li and Chao2019b).

The transfer in text has been tackled with multiple styles (e.g., formality and sentiment) and different attributes thereof (e.g., formal vs. informal, sentiment gradations). Nevertheless, advances in these directions are currently hampered by a lack of appropriate data. Learning the task on human-written linguistic variations would be ideal, but writers hardly produce parallel texts with similar content and diverse attributes. If available, resources of this sort might be unusable due to the mismatch between the vocabularies of the source and target sides (Pang, Reference Pang2019b), and constructing them requires expensive annotation efforts (Gong et al. Reference Gong, Bhat, Wu, Xiong and Hwu2019).

The goal of style transfer seems particularly arduous to achieve per se. Most of the time, meaning preservation comes at the cost of only minimal changes in style (Wu et al. Reference Wu, Ren, Luo and Sun2019a), and bold stylistic shifts tend to sacrifice the readability of the output (Helbig, Troiano, and Klinger Reference Helbig, Troiano and Klinger2020). This problem is exacerbated by a lack of standardized evaluation protocols, which makes the adopted methods difficult to compare. In addition, automatic metrics to assess content preservation (i.e., if the input semantics is preserved), transfer accuracy/strength (i.e., if the intended attribute is achieved through the transfer), and fluency or naturalness (i.e., if the generated text appears natural) (Pang and Gimpel Reference Pang and Gimpel2019; Mir et al., Reference Mir, Felbo, Obradovich and Rahwan2019) often misrepresent the actual quality of the output. As a consequence, expensive human-assisted evaluations turn out inevitable (Briakou et al. Reference Briakou, Agrawal, Tetreault and Carpuat2021a, Reference Briakou, Agrawal, Zhang, Tetreault and Carpuat2021b).

1.3 Purpose and scope of this survey

With the spurt of deep learning, style transfer has become a collective enterprise in NLP (Hu et al. Reference Hu, Lee, Aggarwal and Zhang2022; Jin et al. Reference Jin, Jin, Hu, Vechtomova and Mihalcea2022). Much work has explored techniques that separate style from content and has investigated the efficacy of different systems that share some basic workflow components. Typically, a style transfer pipeline comprises an encoder-decoder architecture inducing the target attribute on a latent representation of the input, either directly (Dai et al. Reference Dai, Liang, Qiu and Huang2019) or after the initial attribute has been stripped away (Cheng et al. Reference Cheng, Min, Shen, Malon, Zhang, Li and Carin2020a). Different frameworks have been formulated on top of this architecture, ranging from lexical substitutions (Li et al. Reference Li, Jia, He and Liang2018; Wu et al. Reference Wu, Zhang, Zang, Han and Hu2019b) to machine translation (Jin et al. Reference Jin, Jin, Mueller, Matthews and Santus2019; Mishra, Tater, and Sankaranarayanan Reference Mishra, Tater and Sankaranarayanan2019) and adversarial techniques (Pang and Gimpel Reference Pang and Gimpel2019; Lai et al. Reference Lai, Hong, Chen, Lu and Lin2019). Therefore, the time seems ripe for a survey of the task, and with this paper, we contribute to organizing the existing body of knowledge around it.

The recurring approaches to style transfer make it reasonable to review its methods, but there already exist three surveys that do so (Toshevska and Gievska Reference Toshevska and Gievska2021; Hu et al., Reference Hu, Lee, Aggarwal and Zhang2022; Jin et al., Reference Jin, Jin, Hu, Vechtomova and Mihalcea2022). They take a technical perspective and focus on the methods used to transfer styles. Automatic metrics and evaluation practices have been discussed as well in previous publications (Briakou et al. Reference Briakou, Agrawal, Tetreault and Carpuat2021a, Reference Briakou, Agrawal, Zhang, Tetreault and Carpuat2021b). We move to a different and complementary angle which puts focus on the styles to be transferred. Our leading motive is a question that is rooted in the field but is rarely faced: Can all textual styles be changed or transferred?

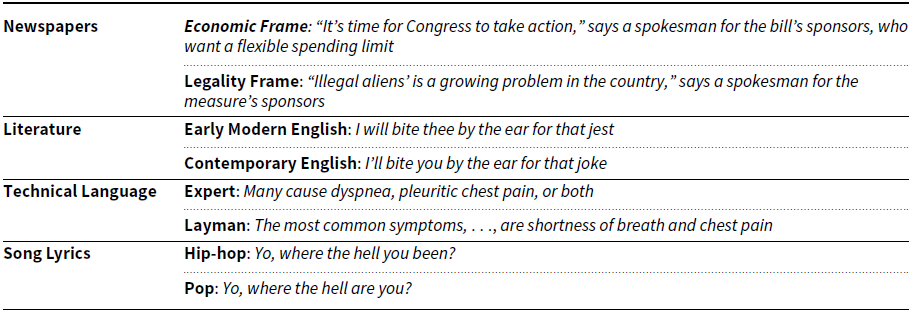

Current publications in the field see style transfer by and large from an engineering angle, aiming at acceptable scores for the three style transfer criteria, and comparing their numerical results in a limited fashion: they neglect the peculiarities of the styles that they are transferring. In our view, each style requires robust understanding in itself, as a pre-requisite for the applied transfer models’ choice and success. We thus provide a detailed look into both well-established styles, and those that remain under-explored in the literature. Instead of asking Is that method advantageous for style transfer?, we are interested in questions like How well does it perform when dealing with a particular style? and Is finding a balance between naturalness, transfer, and content preservation equally difficult for all styles? In this vein, we propose a hierarchy of styles that showcases how they relate to each other. We not only characterize them separately and by tapping on some insights coming from humanity-related disciplines,Footnote b but we also illustrate how they have been handled in the context of style transfer, covering the challenges that they pose (e.g., lack of data), their potential applications, and the methods that have been employed for each of them. Further, we observe if such models have been evaluated in different ways (some of which could fit a style more than others), and lastly, we consider how well styles have been transferred with respect to the three style transfer criteria. Our hierarchy incorporates a selection of papers published from 2008 to September 2021 that we found relevant because of their use or development of datasets for the task at hand, for their proposal of methods that later became well-established in the field, or alternatively, for their proposed evaluation measures. A few of these studies tackle Chinese (Su et al. Reference Su, Huang, Chang and Lin2017; Shang et al. Reference Shang, Li, Fu, Bing, Zhao, Shi and Yan2019), a handful of them deal with multilingual style transfer (Niu, Rao, and Carpuat, Reference Niu, Rao and Carpuat2018; Briakou et al. Reference Briakou, Lu, Zhang and Tetreault2021c), but most works address style transfer for English.

The paper is structured as follows. Section 2 summarizes the technical approaches to this task, covering also some recurring evaluation techniques. Our main contribution, organizing styles in a hierarchy, is outlined in Section 3 (with details in Sections 4 and 5). These discussions include descriptions of data, methods, as well as the evaluations employed for their transfer performance. Section 6 concludes this work and indicates possible directions for future research.

1.4 Intended audience

This survey is addressed to the reader seeking an overview of the state of affairs for different styles that undergo transfer. Specifically, we aim for the following.

Readers needing a sharp focus on a specific style. We revise what has been done within the scope of each style, which could hardly be found in works with a more methodological flavor.

Readers preparing for upcoming style transfer studies, interested in the research gaps within the style transfer landscape. On the one hand, this review can help researchers categorize future work among the massive amount produced in this field, indicating similar works to which they can compare their own. This can eventually guide researchers to decide on the appropriate models for their specific case. On the other hand, we suggest possible “new” styles that were not treated yet but which have an affinity to the existing ones.

Readers questioning the relationship between content and style. NLP has fallen short in asking what textual features can be taken as a style and has directly focused on applying transfer procedures—often generating not too satisfying output texts. Without embarking on the ambitious goal of defining the concept of “style”, we systematize those present in NLP along some theoretically motivated coordinates.

2. Style transfer methods and evaluation

Our survey focuses on styles and relations among them. To connect the theoretical discussion with the methodological approaches to transfer, we now briefly describe the field from a technical perspective. We point the readers to Jin et al. (Reference Jin, Jin, Hu, Vechtomova and Mihalcea2022), Hu et al. (Reference Hu, Lee, Aggarwal and Zhang2022) and Toshevska and Gievska (Reference Toshevska and Gievska2021) for a comprehensive clustering and review of the existing methods, and to Prabhumoye, Black, and Salakhutdinov (Reference Prabhumoye, Black and Salakhutdinov2020) for a high-level overview of the techniques employed in controlled text generation, style transfer included.

Methodological choices typically depend on what data is available. In the ideal scenario, the transfer system can directly observe the linguistic realization of different stylistic attributes on parallel data. However, parallel data cannot be easily found or created for all styles. On the other hand, mono-style corpora that are representative of the attributes of concern might be accessible (e.g., datasets of texts written for children and datasets of scholarly papers), but they might have little content overlap—thus making the learning of content preservation particularly challenging (Romanov et al. Reference Romanov, Rumshisky, Rogers and Donahue2019). Therefore, we group style transfer methods according to these types of corpora, that is, parallel resources [either ready to use (Xu et al. Reference Xu, Ritter, Dolan, Grishman and Cherry2012; Rao and Tetreault Reference Rao and Tetreault2018, i.a.) or created via data augmentation strategies (Zhang, Ge, and Sun Reference Zhang, Ge and Sun2020b, i.a.), and mono-style datasets (Shen et al. Reference Shen, Lei, Barzilay and Jaakkola2017; Li et al., Reference Li, Jia, He and Liang2018; John et al. Reference John, Mou, Bahuleyan and Vechtomova2019, i.a.)]. As illustrated in Figure 1, which adapts the taxonomy of methods presented in Hu et al. (Reference Hu, Lee, Aggarwal and Zhang2022), the two groups are further divided into subcategories with respect to the training techniques adopted to learn the task.

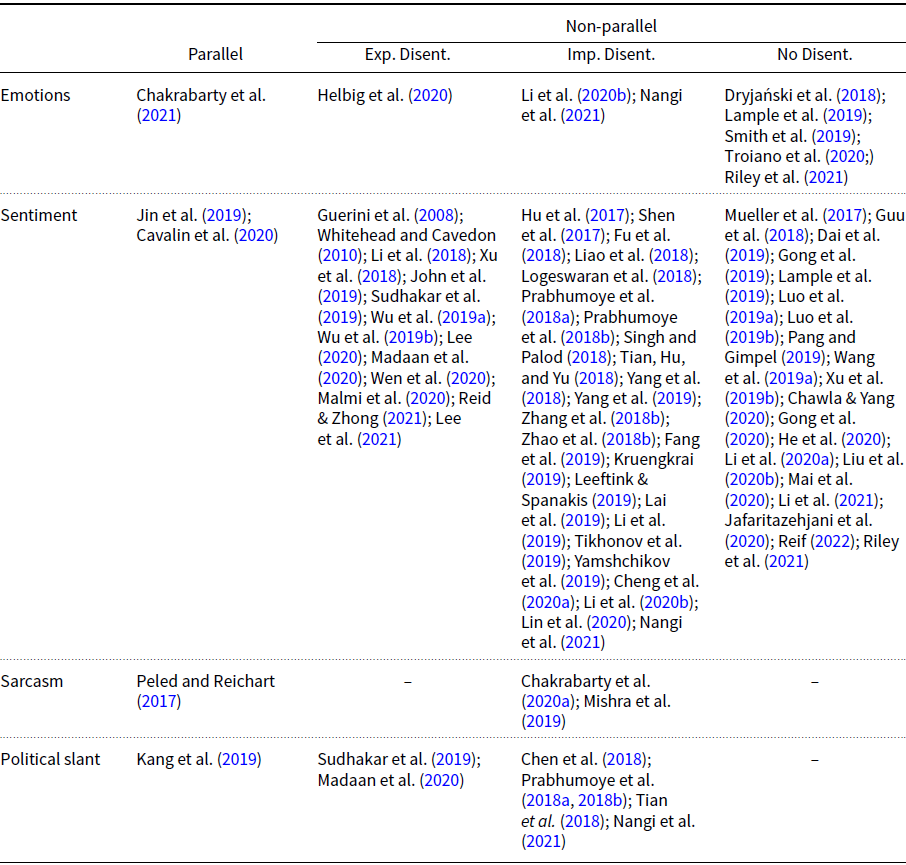

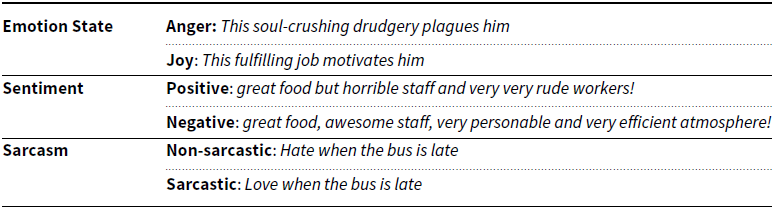

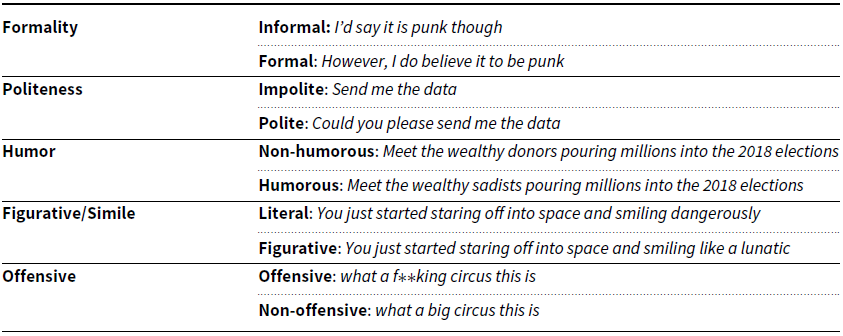

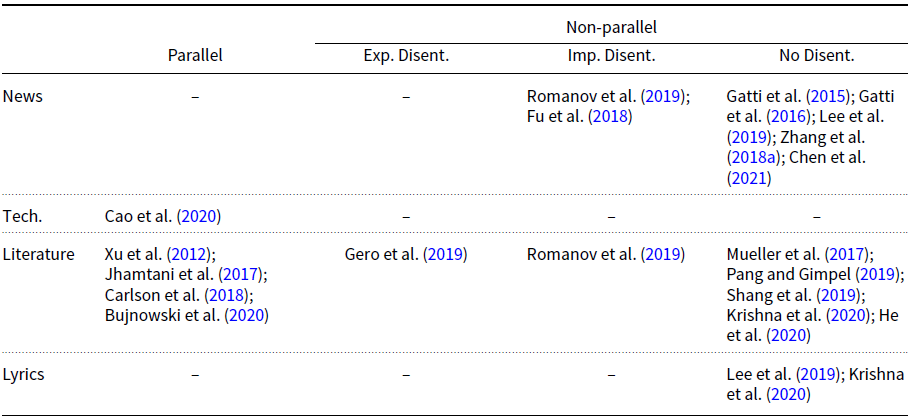

Throughout the paper, such methods are reported to organize the literature in Tables 1, 3, 5, 7 and 9, which inform the reader about the approach that each study has taken for a given style, the approaches that have not yet been leveraged for it (i.e., no author is reported in a cell of a table), and those that have been indiscriminately applied for multiple styles (e.g., the same authors appear more than once in a table, or appear in many of them).

Figure 1. Methods discussed in previous style transfer surveys, adapted from Hu et al. (Reference Hu, Lee, Aggarwal and Zhang2022). In contrast, our contribution is the inspection of styles depicted in Figure 2.

2.1 Parallel data

A parallel corpus for transfer would contain texts with a particular stylistic attribute on one side (e.g., formal texts) and paraphrases with a different attribute on the other (e.g., informal texts). When such datasets exist, style transfer can be approached as a translation problem that maps one attribute into the other. Using a corpus of Shakespearean texts and their modern English equivalents, Xu et al. (Reference Xu, Ritter, Dolan, Grishman and Cherry2012) demonstrated the feasibility of style-conditioned paraphrasing with phrase-based machine translation. Later, neural models started to be trained to capture fine stylistic differences between the source and the target sentences, one instance at a time. Jhamtani et al. (Reference Jhamtani, Gangal, Hovy and Nyberg2017), for example, improved the transfer performance on the Shakespearean dataset by training a sequence-to-sequence architecture with a pointer network that copies some words from the input. Rao and Tetreault (Reference Rao and Tetreault2018) corroborated that machine translation techniques are a strong baseline for style transfer on the Grammarly’s Yahoo Answers Formality Corpus, a parallel corpus for formality transfer which turned out to drive the majority of the style transfer research on parallel data (leveraged by Niu et al. Reference Niu, Rao and Carpuat2018; Wang et al. Reference Wang, Wu, Mou, Li and Chao2019b; Xu, Ge, and Wei Reference Xu, Ge and Wei2019b, among others).

Sequence-to-sequence models achieved remarkable results in conjunction with different style controlling strategies, like multi-task learning (Niu et al. Reference Niu, Rao and Carpuat2018; Xu et al. Reference Xu, Ge and Wei2019b), rule harnessing (Wang et al. Reference Wang, Wu, Mou, Li and Chao2019b), post-editing with grammatical error correction (Ge et al. Reference Ge, Zhang, Wei and Zhou2019), and latent space sharing with matching losses (Wang et al. Reference Wang, Wu, Mou, Li and Chao2020). Parallel resources, however, are scarce or limited in size. This has triggered a number of attempts to synthesize parallel examples. Zhang et al. (Reference Zhang, Ge and Sun2020b) and Jin et al. (Reference Jin, Jin, Mueller, Matthews and Santus2019) exemplify this effort. While the former augmented data with translation techniques (i.e., backtranslation and backtranslation with a style discriminator) and a multi-task transfer framework, Jin et al. (Reference Jin, Jin, Mueller, Matthews and Santus2019) derived a pseudo-parallel corpus from mono-style corpora in an iterative procedure, by aligning sentences which are semantically similar, training a translation model to learn the transfer, and using such translations to refine the alignments in return.

2.2 Non-parallel data

The paucity of parallel resources also encouraged transfer strategies to develop on mono-style corpora (i.e., non-parallel corpora of texts that display one or more attributes of a specific style). This research line mainly approached the task intending to disentangle style and content, either by focusing the paraphrasing edits on the style-bearing portions of the input texts, or by reducing the presence of stylistic information into the texts’ latent representations. On the other hand, a few studies claimed that such disentanglement can be avoided. Therefore, methods working with non-parallel data can be divided into those which do style transfer with an explicit or implicit style-to-content separation and those which operate with no separation.

2.2.1 Explicit style-content disentanglement

Some styles have specific markers in text: expressions like “could you please” or “kindly” are more typical of a formal text than an informal one. This observation motivated a spurt of studies to alter texts at the level of explicit markers—which are replaced in the generated sentences by the markers of a different attribute. The first step of many such studies is to find a comprehensive inventory of style-bearing words. Strategies devised with this goal include frequency statistics-based methods (Li et al., Reference Li, Jia, He and Liang2018; Madaan et al. Reference Madaan, Setlur, Parekh, Poczos, Neubig, Yang, Salakhutdinov, Black and Prabhumoye2020), lexica (Wen et al. Reference Wen, Cao, Yang and Wang2020), attention scores of a style classifier (Xu et al. Reference Xu, Sun, Zeng, Zhang, Ren, Wang and Li2018; Sudhakar, Upadhyay, and Maheswaran Reference Sudhakar, Upadhyay and Maheswaran2019; Helbig et al. Reference Helbig, Troiano and Klinger2020; Reid and Zhong Reference Reid and Zhong2021), or combinations of them (Wu et al. Reference Wu, Zhang, Zang, Han and Hu2019b; Lee Reference Lee2020). As an alternative, Malmi et al. (Reference Malmi, Severyn and Rothe2020) identified spans of text on which masked language models (Devlin et al. Reference Devlin, Chang, Lee and Toutanova2019), trained on source and target domains, disagree in terms of likelihood: these would be the portions of a sentence responsible for its style, and their removal would produce a style-agnostic representation for the input.

Candidate expressions are then retrieved to replace the source markers with expressions of the target attribute. Distance metrics used to this end are (weighted) word overlap (Li et al., Reference Li, Jia, He and Liang2018), Euclidean distance (Li et al., Reference Li, Jia, He and Liang2018), and cosine similarity between sentence representations like content embeddings (Li et al., Reference Li, Jia, He and Liang2018), weighted TF-IDF vectors, and averaged GloVe vectors over all tokens (Sudhakar et al. Reference Sudhakar, Upadhyay and Maheswaran2019). Some studies resorted instead to WordNet-based retrievals (Helbig et al. Reference Helbig, Troiano and Klinger2020).

In the last step, (mostly) neural models combine the retrieved tokens with the style-devoid representation of the input, thus obtaining an output with the intended attribute. There are also approaches that skip this step and directly train a generator to produce sentences in the target attribute based on a template (Lee Reference Lee2020, i.a.). Similar techniques for explicit keyword replacements are relatively easy to train and are more explainable than many other methods, like adversarial ones (Madaan et al., Reference Madaan, Setlur, Parekh, Poczos, Neubig, Yang, Salakhutdinov, Black and Prabhumoye2020).

2.2.2 Implicit style-content disentanglement

Approaches for explicit disentanglement cannot be extended to all styles because many of them are too complex and nuanced to be reduced to keyword-level markers. Methods for implicit disentanglement overcome this issue. Their idea is to strip the input style away by operating on the latent representations (rather than at the text level). This usually involves an encoder–decoder architecture. The encoder produces the latent representation of the input and the decoder, which generates text, is guided by training losses controlling for the style and content of the output.

Adversarial learning

Implicit disentanglement has been instantiated by adversarial learning in several ways. To ensure that the representation found by the encoder is devoid of any style-related information, Fu et al. (Reference Fu, Tan, Peng, Zhao and Yan2018) trained a style classifier adversarially, making it unable to recognize the input attribute, while Lin et al. (Reference Lin, Liu, Sun and Kautz2020) applied adversarial techniques to decompose the latent representation into a style code and a content code, demonstrating the feasibility of a one-to-many framework (i.e., one input, many variants). John et al. (Reference John, Mou, Bahuleyan and Vechtomova2019) inferred embeddings for both content and style from the data, with the help of adversarial loss terms that deterred the content space and the style space from containing information about one another, and with a generator that reconstructed input sentences after the words carrying style were manually removed. Note that, since John et al. (Reference John, Mou, Bahuleyan and Vechtomova2019) approximated content with words that do not bear sentiment information, they could also fit under the group of Explicit Style-Content Disentanglement. We include them here because the authors themselves noted that ignoring sentiment words can boost the transfer, but is not essential.

Backtranslation

A whole wave of research banked on the observation that backtranslation washes out some stylistic traits of texts (Rabinovich et al. Reference Rabinovich, Patel, Mirkin, Specia and Wintner2017) and followed the work of Prabhumoye et al. (Reference Prabhumoye, Tsvetkov, Salakhutdinov and Black2018b). There, input sentences were translated into a pivot language and back as a way to manipulate their attributes: the target values were imposed in the backward direction, namely, when decoding the latent representation of the (pivot language) text, thus generating styled paraphrases of the input (in the source language).

Attribute controlled generation

Attribute control proved to be handy to produce style-less representations of the content while learning a code for the stylistic attribute. This emerges, for instance, in Hu et al. (Reference Hu, Yang, Liang, Salakhutdinov and Xing2017), who leveraged a variational auto-encoder and some style discriminators to isolate the latent representation and the style codes, which were then fed into a decoder. While the discriminators elicited the disentanglement, the constraint that the representation of source and target sentence should remain close to each other favored content preservation.

Other methods

An alternative path to disentanglement stems from information theory. Cheng et al. (Reference Cheng, Min, Shen, Malon, Zhang, Li and Carin2020a) defined an objective based on the concepts of mutual information and variation of information as ways to measure the dependency between two random variables (i.e., style and content). On the one hand, the authors minimized the mutual information upper bound between content and style to reduce their interdependency; on the other, they maximized the mutual information between latent embeddings and input sentences, ensuring that sufficient textual information was preserved.

2.2.3 Without disentanglement

By abandoning the disentanglement venture, some studies argued that separating the style of a text from its content is not only difficult to achieve—given the fuzzy boundary between the two, but also superfluous (Lample et al. Reference Lample, Subramanian, Smith, Denoyer, Ranzato and Boureau2019). This observation became the core of a wave of research that can be categorized as follows.

Entangled latent representation editing

Some works edited the latent representations of the input texts learned by an auto-encoder. A common practice in this direction is to jointly train a style classifier and iteratively update the auto-encoder latent representation by maximizing the confidence on the classification of the target attribute (Mueller, Gifford, and Jaakkola Reference Mueller, Gifford and Jaakkola2017; Liu et al. Reference Liu, Fu, Zhang, Pal and Lv2020a). Another approach trained a multi-task learning model on a summarization and an auto-encoding task, and it employed layer normalization and a style-guided encoder attention using the transformer architecture (Wang, Hua, and Wan Reference Wang, Hua and Wan2019a).

Attribute controlled generation

Proven successful by disentanglement-based studies, methods for learning attribute codes were also applied without the content-vs.-style separation. Lample et al. (Reference Lample, Subramanian, Smith, Denoyer, Ranzato and Boureau2019), for instance, employed a denoising auto-encoder together with backtranslation and an averaged attribute embedding vector, which controlled for the presence of the target attribute during generation. Instead of averaging the one-hot encoding for individual attribute values, Smith et al. (Reference Smith, Gonzalez-Rico, Dinan and Boureau2019) used supervised distributed embeddings to leverage similarities between different attributes and perform zero-shot transfer.

Reinforcement learning

Multiple training loss terms have been defined in style transfer to endow the output texts with the three desiderata of content preservation, transfer accuracy, and text naturalness—often referred to as “fluency”. The dependency on differentiable objectives can be bypassed with reinforcement learning, which uses carefully designed training rewards (Luo et al. Reference Luo, Li, Yang, Zhou, Tan, Chang, Sui and Sun2019a, i.a.). Generally, rewards that cope with the presence of the target attribute are based on some style classifiers or discriminators, those pertaining to naturalness rely on language models; and those related to content preservation use Bleu or similar metrics that compare an output text against some reference.

Gong et al. (Reference Gong, Bhat, Wu, Xiong and Hwu2019) worked in a generator-evaluator setup. There, the generator’s output was probed by an evaluator module, whose feedback helped improve the output attribute, semantics, and fluency. Two building blocks can also be found in Luo et al. (Reference Luo, Li, Zhou, Yang, Chang, Sui and Sun2019b). They approached style transfer as a dual task (i.e., source-to-target and target-to-source mappings) in which, to warm-up the reinforcement learning training, a model was initially trained on a pseudo-parallel corpus. Wu et al. (Reference Wu, Ren, Luo and Sun2019a), instead, explored a sequence operation method called Point-Then-Operate, with a high-level agent dictating the text position where the operations should be done and a low-level agent performing them. Their policy-based training algorithm employed extrinsic and intrinsic rewards, as well as a self-supervised loss to model the three transfer desiderata. The model turned out relatively interpretable thanks to these explicitly defined operation steps. Tuning their number, in addition, allowed to control the trade-off between the presence of the initial content and of the target attribute.

An exception among reinforcement learning studies is the cycled reinforcement learning of Xu et al. (Reference Xu, Sun, Zeng, Zhang, Ren, Wang and Li2018), which falls within the disentangling picture.

Probabilistic modelling

Despite being a common practice in unsupervised learning, the definition of task-specific losses can lead to training instability. These objectives are empirically determined among a vast number of possible alternatives. To overcome the issue, He et al. (Reference He, Wang, Neubig and Berg-Kirkpatrick2020) formulated a probabilistic generative strategy that follows objectives defined by some principles of probabilistic inference, and which makes clear assumptions about the data. This approach allowed them to reason transparently about their system design and to outperform many works choosing ad-hoc training objectives.

2.3 Evaluation

The methods presented above are usually assessed with metrics that quantify content preservation, transfer accuracy/intensity, and generation of natural-sounding paraphrases. A detailed discussion of the evaluation methods can be found in Mir et al. (Reference Mir, Felbo, Obradovich and Rahwan2019), Pang (Reference Pang2019a), Briakou et al. (Reference Briakou, Agrawal, Tetreault and Carpuat2021a) and Briakou et al. (Reference Briakou, Agrawal, Zhang, Tetreault and Carpuat2021b), with the latter focusing on human evaluation settings. As they appear in most style transfer publications, we briefly introduce them here and will refer back to them throughout the paper.

Content preservation, i.e., the degree to which an output retains the content of the input, is usually gauged with measures that originated in machine translation. They compute the overlap between the words of the generation system and some reference texts, under the assumption that the two should share much lexical material. Among them are Bleu (Papineni et al. Reference Papineni, Roukos, Ward and Zhu2002) and Meteor (Banerjee and Lavie Reference Banerjee and Lavie2005), often complemented with Rouge (Lin Reference Lin2004), initially a measure for automatic summaries. Transfer accuracy, i.e., the efficacy of the models in varying stylistic attributes, is usually scored by classifiers: trained on a dataset characterized by the style in question, a classifier can tell if an output text has the target attribute or not. Applied on a large scale, this second criterion can be quantified as the percentage of texts that exhibit the desired attribute. Last comes the naturalness or fluency of the variants that have been changed in style. This is typically estimated with the perplexity of language models, indicating the degree to which a sequence of words in a paraphrase is predictable—hence grammatical.

Focusing on automatic content preservation, Tikhonov et al. (Reference Tikhonov, Shibaev, Nagaev, Nugmanova and Yamshchikov2019) advocated that Bleu should be used with some caution in style transfer. They argued that the entanglement between semantics and style in natural language is reflected in the entanglement between the Bleu score measured between input and output and the transfer accuracy. Indeed, they provided evidence that such measures can be easily manipulated: the outputs that a classifier in the generative architecture indicates as having the incorrect attribute could be replaced with sentences which are most similar to the input in their surface form—thus boosting both the reported accuracy and Bleu. Human-written reformulations are necessary in their view for upcoming experiments, as current style transfer architectures become more sophisticated, and therefore, accuracy and Bleu might be too naive metrics to estimate their performance. Going in a similar direction, the extensive meta-analysis of Briakou et al. (Reference Briakou, Agrawal, Tetreault and Carpuat2021a) discusses the pitfalls of automatic methods and the need for standardized evaluation practices (including human evaluation) to boost advance in this field.

3. Style hierarchy

Style transfer relies on a conceptual distinction between meaning and form (e.g., De Saussure Reference De Saussure1959), but what is this form? It is a dimension of sociolinguistic variation that manifests in syntactic and lexical patterns, that can be correlated with independent variables and that, according to Bell (Reference Bell1984), we shift in order to fit an audience. Bell’s characterization emphasizes the intentionality of language variation, accounting only for the styles ingrained in texts out of purpose. Yet, many others emerge as a fingerprint of the authors’ identities, for instance from specific markers of people’s personality and internal states (Brennan, Afroz, and Greenstadt Reference Brennan, Afroz and Greenstadt2012). This already suggests that different styles have diverse characteristics. However, their peculiar challenges have received little attention in the literature. As a remedy for the lacuna, we bring style transfer closer to the linguistic and sociological theories on the phenomenon it targets. We propose a hierarchy of styles in which we place the relevant body of NLP research.

A recent study by Kang and Hovy (Reference Kang and Hovy2021) actually groups styles into a handful of categories (personal, interpersonal, figurative and affective) based on some social goals achieved through communication. Their work did not investigate specific styles. It rather intended to fertilize research towards a cross-style direction, by combining existing corpora into an overarching collection of 15 styles.Footnote c By contrast, our hierarchy concentrates on the peculiarities of styles separately, while indicating the methods that have been used and those that have been dismissed for each of them.

To unify the above-mentioned theoretical insights, we make a first, coarse separation between accidental and voluntary styles, structuring them into the unintended and intended families.Footnote d The former group copes with the self. It corresponds to the personal characteristics of the authors, which we split into factors that define between-persons and within-person language variations. Namely, there are stable traits defining systematic differences between writers and short-term internal changes within an individual subject which, in response to situations, do not persist over time (Beckmann and Wood Reference Beckmann and Wood2017). We call them persona and dynamic states respectively. The other category of styles is intended, as it covers deliberate linguistic choices with which authors adapt to their communicative purpose or environment. Style transfer publications that fall within this group echo what is known as “palimpsest” in literary theories, i.e., the subversion of a text into a pastiche or a parody to imitate an author, degrade a text, or amplify its content (Genette Reference Genette1997). Among these are styles used to express how one feels about the topic of discussion: a speaker/writer can have a positive sentiment on a certain matter, be angry or sad at it, be sarcastic about it, etc. Of this type are styles targeted towards a topic, while others, the non-targeted subset, are more independent of it. Some (circumstantial registers) are rather dependent on the context in which they are deployed, and they convey a general attitude of the writers, a tone in which they talk or a social posture—an example being formality, that speakers increase if they perceive their interlocutor as socially superior (Vanecek and Dressler Reference Vanecek and Dressler1975). Other styles are socially coded. They can be thought of as conventional writing styles tailored to the ideal addressee of the message rather than an actual one, and are typically employed in mass communication, such as scientific, literary, and technical productions.

These categories subsume a number of individual styles. For instance, persona branches out into personality traits, gender and age, and background, which in turn encompasses country and ethnicity, education, and culture. Note that the leaves in our hierarchy are the major styles that have been addressed so far by automatic systems, but many others can be identified and explored in future work. We include some in our discussions. Furthermore, we acknowledge that a few styles pertain to both the unintended and intended branches. Our motivation to insert them under one rather than the other is due to the type of data on which the transfer was made (e.g., emotion state) or to how the problem was phrased by the corresponding studies (e.g., literature).

The remainder of this paper follows the structure of our hierarchy. We provide a top-down discussion of the nodes, starting from the high-level ones, which are presented from a theoretical perspective, and proceeding towards the leaves of the branches, which is where the concrete style transfer works are examined in relation to the data, the methods and the evaluation procedures that they used.

4. Unintended styles

Writers leave traces of their personal data. Information like one’s mental disposition, biological, and social status are revealed by stylometric cues present in a text. These cues might be produced unknowingly, and because of that, they could help to combat plagiarism, foster forensics, and support humanities. On the other hand, accessing knowledge about writers could breach people’s privacy and exacerbate demographic discrimination. Hence, while classification-based studies leveraged such latent information to profile people’s age and gender (Rosenthal and McKeown Reference Rosenthal and McKeown2011; Nguyen et al. Reference Nguyen, Gravel, Trieschnigg and Meder2013; Sarawgi, Gajulapalli, and Choi Reference Sarawgi, Gajulapalli and Choi2011; Fink, Kopecky, and Morawski Reference Fink, Kopecky and Morawski2012), geolocation, and personality (Eisenstein et al. Reference Eisenstein, O’Connor, Smith and Xing2010; Verhoeven, Daelemans, and Plank Reference Verhoeven, Daelemans and Plank2016; Plank and Hovy Reference Plank and Hovy2015), the attempt to defeat authorship recognition moved research towards the transfer of such unintended styles—i.e., age, gender, etc.

Arguably the first work to address this problem is that of Brennan et al. (Reference Brennan, Afroz and Greenstadt2012), who tried to confound stylometric analyses by backtranslating existing texts with available translation services, such as Google Translate and Bing Translator. Their preliminary results did not prove successful, as the writer’s identity remained recognizable through the translation passages from source to targets and back, but follow-up research provided evidence that automatic profilers can be effectively fooled (Kacmarcik and Gamon Reference Kacmarcik and Gamon2006; Emmery, Manjavacas Arevalo, and Chrupała Reference Emmery, Manjavacas Arevalo and Chrupała2018; Shetty, Schiele, and Fritz Reference Shetty, Schiele and Fritz2018; Bo et al. Reference Bo, Ding, Fung and Iqbal2021, i.a.).

Successive style transfer studies narrowed down the considered authors’ traits. They tackled stable features that are a proxy for the writers’ biography, which we subsume under the category of persona, or more dynamic states that characterize writers at a specific place and time. It should be noticed that such works rely on a tacit assumption about writers’ authenticity: writers express themselves spontaneously and do not attempt to mask their own traits (Brennan et al. Reference Brennan, Afroz and Greenstadt2012).

We illustrate the methods used to transfer unintended styles in Table 1.

Table 1. Style transfer methods and the unintended styles of persona

4.1 Persona

Persona includes biographic attributes coping with personality and people’s social identity. Individuals construct themselves “as girls or boys, women or men—but also as, e.g., Asian American” (Eckert and McConnell-Ginet Reference Eckert and McConnell-Ginet1999), that is, they often form an idea of the self as belonging to a group with a shared enterprise or interest (Tajfel Reference Tajfel1974). The interaction within such a group also affects their linguistic habits (Lave and Wenger Reference Lave and Wenger1991) as they develop a similar way of talking. In this sense, linguistic style is a key component of one’s identity (Giles and Johnson Reference Giles and Johnson1987). It manifests some traits unique to a specific person or community (Mendoza-Denton and Iwai (Reference Mendoza-Denton and Iwai1993) provide insights on the topic with respect to the Asian-American English speech).

At least to a degree, persona styles are implicit in the way people express themselves. As opposed to the intended branch of our hierarchy, they are not communicative strategies consciously set in place by the writers, but they are spontaneous indicators of other variables. For instance, it has been shown that women tend to use paralinguistic signals more often than men (Carli Reference Carli1990), that speakers’ vocabulary becomes more positively connotated and less self-referenced in older ages (Pennebaker and Stone Reference Pennebaker and Stone2003), and that subcultures express themselves with a specific slang (Bucholtz Reference Bucholtz2006).

The transfer of persona aims to go from one attribute to the other (e.g., young to old for the style of age), and its main challenge is that different styles are closely intertwined. Age and gender, for instance, can imply each other because “the appropriate age for cultural events often differs for males and females” (Eckert Reference Eckert1997), and therefore, one may not be changed without altering the other. Moreover, there is still a number of styles dealing with people’s communicative behaviors and skills which are left unexplored. Future studies could focus on those, like the pairs playful vs. aggressive, talkative vs. minimally responsive, cooperative vs. antagonist, dominant vs. subject, attentive vs. careless, charismatic vs. uninteresting, native vs. L2 speaker, curious vs. uninterested, avoidant vs. involved.

Table 2. Examples of style transfer on a subset of persona styles. Personality traits sentences come from Shuster et al. (Reference Shuster, Humeau, Hu, Bordes and Weston2019), gender-related ones from Sudhakar et al. (Reference Sudhakar, Upadhyay and Maheswaran2019), the age-related examples from Preoţiuc-Pietro et al. (Reference Preoţiuc-Pietro, Xu and Ungar2016a), and background-related examples from Krishna et al. (Reference Krishna, Wieting and Iyyer2020). For each pair, the input is above

4.1.1 Gender and age

Style transfer treats gender and age as biological facts. The transfer usually includes a mapping between discrete labels: from male to female or vice versa, and from young to old or the other way around (see some examples in Table 2). It should be noticed that such labels disregard the fluidity of one’s gender experience and performance, which would be better described along a spectrum (Eckert and McConnell-Ginet Reference Eckert and McConnell-Ginet2003), and they represent age as a chronological variable rather than a social one depending on peoples’ personal experiences (Eckert Reference Eckert1997). This simplification is not made by style transfer specifically, but it is common to many studies focused on authors’ traits, due to how the available datasets were constructed—e.g., in gender-centric resources, labels are inferred from the name of the texts’ authors (Mislove et al. Reference Mislove, Lehmann, Ahn, Onnela and Rosenquist2011).

The Rt-Gender corpus created by Voigt et al. (Reference Voigt, Jurgens, Prabhakaran, Jurafsky and Tsvetkov2018) stands out among such resources. It was built to research how responses towards a specific gender differ from responses directed to another, in opposition to related corpora that collect linguistic differences between genders. This labeled dataset potentially sets the ground for the next steps in style transfer.

Data

Works on gender style transfer typically follow the choice of data by Reddy and Knight (Reference Reddy and Knight2016), who used tweets posted in the US in 2013 and some reviews from the YelpFootnote e dataset, and inferred gender information from the users’ names.

For this style, there also exists Pastel,Footnote

f

a corpus annotated with attributes of both unintended and intended styles. That is the result of the crowdsourcing effort conducted by Kang, Gangal, and Hovy (Reference Kang, Gangal and Hovy2019), in which

![]() $\approx$

41K parallel sentences were collected in a multimodal setting, and which were annotated with the gender, age, country, political view, education, ethnicity, and time of writing of their authors.

$\approx$

41K parallel sentences were collected in a multimodal setting, and which were annotated with the gender, age, country, political view, education, ethnicity, and time of writing of their authors.

The need to collect attribute-specific rewrites further motivated Xu, Xu, and Qu (Reference Xu, Xu and Qu2019a) to create Alter. As a publicly available toolFootnote g , Alter was developed to overcome one major pitfall of crowdsourcing when it comes to generating gold standards: human annotators might fail to associate textual patterns to a gender label, at least when dealing with short pieces of text. Alter facilitates their rewriting tasks (specifically, to generate texts which are not associated with a particular gender) by providing them with immediate feedback.

Methods

Though not focused on transfer, Preoţiuc-Pietro, Xu, and Ungar (Reference Preoţiuc-Pietro, Xu and Ungar2016a) were the first to show that automatic paraphrases can exhibit the style of writers of different ages and genders, by manipulating the lexical choices made by a text generator. A phrase-based translation model learned that certain sequences of words are more typically used by certain age/gender groups and, together with a language model of the target demographics, it used such information to translate tweets from one group to the other. Their translations turned out to perform lexical substitution, a strategy that was more directly addressed by others. Reddy and Knight (Reference Reddy and Knight2016), for instance, performed substitution in order to defeat a gender classifier. They did so with the guidance of three metrics: one measured the association between words and the target gender label, thus indicating the words to replace to fool the classifier as well as possible substitutes; another quantified the semantic and syntactic similarity between the words to be changed and such substitutes; and the last measured the suitability of the latter in context.

A pitfall of such heuristics, noticed by the authors themselves, is that style and content-bearing words are equal candidates for the edit. Some neural methods bypassed the issue with a similar three-step procedure. That is the case of Sudhakar et al. (Reference Sudhakar, Upadhyay and Maheswaran2019), who proposed a variation of the pipeline in Li et al. (Reference Li, Jia, He and Liang2018). There, (1) only style-bearing words are deleted upon the decision of a Bert-based transformer, where an attention head encodes the stylistic importance of each token in a sentence. Next, (2) candidate substitutes are retrieved: sentences from a target-style corpus are extracted to minimize the distance between the content words of the input and theirs. Lastly, (3) the final output is generated with a decoder-only transformer based on Gpt, having learned a representation of both the content source words and the retrieved attribute words. It should be noted that this method was not designed to transfer genre-related attributes specifically (it achieves different results when dealing with other styles). Also, Madaan et al. (Reference Madaan, Setlur, Parekh, Poczos, Neubig, Yang, Salakhutdinov, Black and Prabhumoye2020) addressed gender as an ancillary task. They used a similar methodology (further discussed in Section 5.3 under Politeness) that first identifies style at the word level, and then changes such words in the output.

Prabhumoye et al. (Reference Prabhumoye, Tsvetkov, Salakhutdinov and Black2018b), instead, separated content and style at the level of the latent input representation, by employing backtranslation as both a paraphrasing and an implicit disentangling technique. Since machine translation systems are optimized for adequacy and fluency, using them in a backtranslation framework can produce paraphrases that are likely to satisfy at least two style transfer desiderata (content preservation and naturalness). To change the input attribute and comply with the third criterion, the authors hinged on the assumption that machine translation reduces the stylistic properties of the input sentence and produces an output in which they are less distinguishable. With this rationale, a sentence in the source language was translated into a pivot language; encoding the latter in the backtranslation step then served to produce a style-devoid representation, and the final decoding step conditioned towards a specific gender attribute returned a stylized paraphrase.

Modelling content and style-related personal attributes separately are in direct conflict with the finding by Kang et al. (Reference Kang, Gangal and Hovy2019), who pointed out that features used for classifying styles are of both types. As opposed to the studies mentioned above, this work transferred multiple persona styles in conjunction (e.g., education and gender) and did so with a sequence-to-sequence model trained on a parallel dataset. Similarly, the proposal of Liu et al. (Reference Liu, Fu, Zhang, Pal and Lv2020b) did not involve any content-to-style separation. With the aim of making style transfer controllable and interpretable, they devised a method based on a variational auto-encoder that performs the task in different steps. It revises the input texts in a continuous space using both gradient information and style predictors, finding an output with the target attribute in such a space.

Evaluation

While Reddy and Knight (Reference Reddy and Knight2016) carried out a small preliminary analysis, others assessed the quality of the outputs with (at least some of) the three criteria, both with automatic and human-based studies. For instance, Sudhakar et al. (Reference Sudhakar, Upadhyay and Maheswaran2019) evaluated the success of the transfer with a classifier and quantified fluency in terms of perplexity. For meaning preservation, other than Bleu (Kang et al. Reference Kang, Gangal and Hovy2019; Sudhakar et al. Reference Sudhakar, Upadhyay and Maheswaran2019), gender transfer was evaluated automatically with metrics based on n-gram overlaps (e.g., Meteor) and embedding-based similarities between output and reference sentences [e.g., Embedding Average similarity and Vector Extrema of Liu et al. (Reference Liu, Lowe, Serban, Noseworthy, Charlin and Pineau2016), as found in Kang et al. (Reference Kang, Gangal and Hovy2019)].

Sudhakar et al. (Reference Sudhakar, Upadhyay and Maheswaran2019) also explored Gleu as a metric that better correlates with human judgments. Initially a measure for error correction, Gleu fits the task of style transfer because it is capable of penalizing portions of texts changed inappropriately while rewarding those successfully changed or maintained. As for human evaluation, the authors asked their raters to judge the final output only with respect to fluency and meaning preservation, considering the transfer of gender a too challenging dimension to rate. Their judges also evaluated texts devoid of style-related attributes.

4.1.2 Personality traits

The category of personality traits contains variables describing characteristics of people that are stable over time, sometimes based on biological facts (Cattell Reference Cattell1946). Studied at first in the field of psychology, personality traits have also been approached in NLP (Plank and Hovy Reference Plank and Hovy2015; Rangel et al. Reference Rangel, Rosso, Potthast, Stein and Daelemans2015, i.a.), as they seem to correlate with specific linguistic features—e.g., depressed writers are more prone to using first-person pronouns and words with negative valence (Rude, Gortner, and Pennebaker Reference Rude, Gortner and Pennebaker2004). This has motivated research to both recognize the authors’ traits from their texts (Celli et al. Reference Celli, Lepri, Biel, Gatica-Perez, Riccardi and Pianesi2014) and to infuse them within newly generated text (Mairesse and Walker Reference Mairesse and Walker2011).

Computational works typically leverage well-established schemas, like the (highly debated) Myers-Briggs Type Indicators (Myers and Myers Reference Myers and Myers2010) and the established Big Five traits (John, Naumann, and Soto Reference John, Naumann and Soto2008). These turn out particularly useful because they qualify people in terms of a handful of dimensions, either binary (introvert-extrovert, intuitive-sensing, thinking-feeling, judging-perceiving) or not (openness to experience, conscientiousness, extraversion, agreeableness, and neuroticism).

Accordingly, a style transfer framework would change the attribute value along such dimensions. Some human-produced examples are the switch from the sweet to dramatic type of personality and the transfer money-minded to optimistic in Table 2 (note that not all attributes addressed in style transfer are equally accepted in psychology). More precisely, each dimension represents a different personality-related style, and this makes traits particularly difficult to transfer: the same author can be defined by a certain amount of all traits, while many other styles only have one dimension (e.g., the dimension of polarity for sentiment), with the two extreme attributes being mutually exclusive (i.e., a sentence is either positively polarized or has a negative valence).

The ability to transfer personality traits brings clear advantages. For instance, the idea that different profiles associate to different consumer behaviors (Foxall and Goldsmith Reference Foxall and Goldsmith1988; Gohary and Hanzaee Reference Gohary and Hanzaee2014) may be exploited to automatically tailor products on the needs of buyers; personification algorithms could also improve health care services, such that chatbots communicate sensitive information in a more human-like manner, with a defined personality, fitting that of the patients; further, they can be leveraged in the creation of virtual characters.

Data

So far, this task explored the collection of image captions crowdsourced by Shuster et al. (Reference Shuster, Humeau, Hu, Bordes and Weston2019), who asked annotators to produce a comment for a given image which would evoke a given personality trait. Their dataset Personality-CaptionsFootnote h contains 241,858 instances and spans across 215 personality types (e.g., sweet, arrogant, sentimental, argumentative, charming). Note that these variables do not exactly correspond to personality traits established in psychology. As an alternative, one could exploit the corpus made available by Oraby et al. (Reference Oraby, Reed, Tandon, S., Lukin and Walker2018), synthesized with a statistical generator. It spans 88k meaning representations of utterances in the restaurant domain and matched reference outputs which display the Big Five personality traits of extraversion, agreeableness, disagreeableness, conscientiousness, and unconsciousness.Footnote i

Methods

Cheng et al. (Reference Cheng, Min, Shen, Malon, Zhang, Li and Carin2020a) provided evidence that the disentanglement between the content of a text and the authors’ personality (where personalities are categorical variables) can take place. Observing that such a disentanglement is in fact arduous to obtain, they proposed a framework based on information theory. Specifically, they quantified the style-content dependence via mutual information, i.e., a metric indicating how dependent two random variables are, in this case measuring the degree to which the learned representations are entangled. Hence, they defined the objective of minimizing the mutual information upper bound (to represent style and content into two independent spaces) while maximizing their mutual information with respect to the input (to make the two types of embeddings maximally representative of the original text).

Without complying with any psychological models, Bujnowski et al. (Reference Bujnowski, Ryzhova, Choi, Witkowska, Piersa, Krumholc and Beksa2020) addressed a task that could belong to this node in our hierarchy. Neutral sentences were transferred into “cute” ones, i.e., excited, positive, and slangy. For that, they trained a multilingual transformer on two parallel datasets, one containing paired mono-style paraphrases and the other containing stylized rewritings, for it to simultaneously learn to paraphrase and apply the transfer.

Evaluation

Other than typical measures for style (i.e., style classifiers’ accuracy) and content (Bleu), Cheng et al. (Reference Cheng, Min, Shen, Malon, Zhang, Li and Carin2020a) considered generation quality, i.e., corpus-level Bleu between the generated sentence and the testing data, as well as the geometric mean of these three for an overall evaluation of their system.

4.1.3 Background

Our last unintended style of persona is the background of writers. Vocabulary choices, grammatical and spelling mistakes, and eventual mixtures of dialect and standard language expose how literate the language user is (Bloomfield Reference Bloomfield1927); dialect itself, or vernacular varieties, marked by traits like copula presence/absence, verb (un)inflection, use of tense (Green Reference Green1998; Martin and Wolfram Reference Martin and Wolfram1998) can give away the geographical or ethnic provenance of the users (Pennacchiotti and Popescu Reference Pennacchiotti and Popescu2011). Further, because these grammatical markers are prone to changing along with word meanings, language carries evidence about the historical time at which it is uttered (Aitchison Reference Aitchison1981).

In this research, streamline are style transfer works leveraging the idea that there is a “style of the time” (Hughes et al. Reference Hughes, Foti, Krakauer and Rockmore2012): they performed diachronic linguistic variations, thus taking timespans as a transfer dimension (e.g., Krishna, Wieting, and Iyyer (Reference Krishna, Wieting and Iyyer2020) transferred among the 1810–1830, 1890–1910, 1990–2010 attributes). Others applied changes between English varieties, for instance switching from British to American English (Lee et al. Reference Lee, Xie, Wang, Drach, Jurafsky and Ng2019), as well as varieties linked to ethnicity, like English Tweets to African American English Tweets and vice versa (Krishna et al. Reference Krishna, Wieting and Iyyer2020), or did the transfer between education levels (Kang et al. Reference Kang, Gangal and Hovy2019).

The following are example outputs of these tasks, from Krishna et al. (Reference Krishna, Wieting and Iyyer2020): “He was being terrorized into making a statement by the same means as the other so-called “witnesses”.” (1990)

![]() $\rightarrow$

“Terror had been employed in the same manner with the other witnesses, to compel him to make a declaration. ” (1810); “As the BMA’s own study of alternative therapy showed, life is not as simple as that.” (British)

$\rightarrow$

“Terror had been employed in the same manner with the other witnesses, to compel him to make a declaration. ” (1810); “As the BMA’s own study of alternative therapy showed, life is not as simple as that.” (British)

![]() $\rightarrow$

“As the F.D.A.’s own study of alternative therapy showed, life is not as simple as that.” (American).

$\rightarrow$

“As the F.D.A.’s own study of alternative therapy showed, life is not as simple as that.” (American).

Such variations could be applied in real-world scenarios in order to adjust the level of literacy of texts, making them accessible for all readers or better resonating with the culture of a specific audience. Future research could proceed into more diverse background-related styles, such as those which are not shared by all writers at a given time or in a specific culture, but which pertain to the private life of subsets of them. For instance, considering hobbies as a regular activity that shapes how people talk, at least for some types of content, one could rephrase the same message in different ways to better fit the communication with, say, an enthusiast of plants, or rather with an addressee who is into book collecting.

Data

Sources that have been used for English varieties are the New York Times and the British National Corpus for English (Lee et al., Reference Lee, Xie, Wang, Drach, Jurafsky and Ng2019). Krishna et al. (Reference Krishna, Wieting and Iyyer2020) employed the corpus of Blodgett, Green, and O’Connor (Reference Blodgett, Green and O’Connor2016) containing African American Tweets, and included this dialectal information in their own datasetFootnote j ; as for the diachronic variations that they considered, texts came from the Corpus of Historical American English (Davies Reference Davies2012). Also the Pastel corpus compiled by Kang et al. (Reference Kang, Gangal and Hovy2019) contains ethnic information, which covers some fine-grained labels, like Hispanic/Latino, Middle Eastern, Caucasian, and Pacific Islander. Their resource includes data about the education of the annotators involved in the data creation process, from unschooled individuals to PhD holders.

Methods

Logeswaran, Lee, and Bengio (Reference Logeswaran, Lee and Bengio2018) followed the line of thought that addresses content preservation and attribute transfer with separate losses. They employed an adversarial term to discourage style preservation, and an auto-reconstruction and a backtranslation term to produce content-compatible outputs. Noticing that the auto-reconstruction and backtranslation losses supported the models in copying much of the input, they overcame the issue by interpolating the latent representations of the input and of the generated sentences.

Other methods used for this style are not based on disentanglement techniques (e.g., Kang et al. Reference Kang, Gangal and Hovy2019). Among those is the proposal of Lee et al. (Reference Lee, Xie, Wang, Drach, Jurafsky and Ng2019), who worked under the assumption that the source attribute is a noisy version of the target one, and in that sense, style transfer is a backtranslation task: their models translated from a “clean” input text to their noisy counterpart, and then denoised it towards the target. Krishna et al. (Reference Krishna, Wieting and Iyyer2020) fine-tuned pretrained language models on automatically generated paraphrases. They created a pseudo-parallel corpus of stylized-to-neutral pairs and trained different paraphrasing models in an “inverse” way, that is, each of them learns to recover a stylistic attribute by reconstructing the input from the artificially-created and style-devoid paraphrases. Hence, at testing time, different paraphrasers transferred different attributes (given a target attribute, the model trained to reconstruct it was applied).

Evaluation

Krishna et al. (Reference Krishna, Wieting and Iyyer2020) proposed some variations on the typical measures for evaluation, hinging on an extensive survey of evaluation practices. As for content preservation, they moved away from n-gram overlap measures like Bleu which both disfavors diversity in the output and does not highlight style-relevant words over the others. Instead, they automatically assessed content with the subword embedding-based model by Wieting and Gimpel (Reference Wieting and Gimpel2018). With respect to fluency, they noticed that perplexity might misrepresent the quality of texts because it can turn out low for sentences simply containing common words. To bypass this problem, they exploited the accuracy of a RoBerta classifier trained on a corpus that contains sentences judged for their grammatical acceptability. Moreover, they jointly optimized automatic metrics by combining accuracy, fluency and similarity at the sentence level, before averaging them at the corpus level.

4.2 Dynamic states

In the group of dynamic styles, we arrange a few states in which writers find themselves in particular contexts. Rather than proxies for stable behaviors or past experiences, they are short-lived qualities, which sometimes arise just in response to a cue. Many facts influencing language slip into this category and represent an opportunity for future exploration. Some of them are: the activity performed while communicating (e.g., moving vs. standing); motivational factors that contribute to how people say the things they say (e.g., hunger, satisfaction); positive and negative moods, as they, respectively, induce more abstract, high-level expressions littered with adjectives, and a more analytic style, focused on detailed information that abounds with concrete verbs (Beukeboom and Semin Reference Beukeboom and Semin2006); the type of communication medium, known to translate into how language is used—for instance, virtual exchanges are fragmentary, have specialized typography, and lack linearity (Ferris Reference Ferris2002).

Another ignored but promising avenue is the transfer of authenticity. Authenticity is a dynamic state transversing all the styles we discussed so far, and at the same time defining a style on its own. In the broader sense, it is related to an idea of truth (Newman Reference Newman2019), as it regards those qualities of texts which allow to identify their author correctly: this is the type of authenticity underlying the other unintended leaves, i.e., the assumption that writers are spontaneous and do not mask nor alter their personal styles. Besides, a puzzling direction could be that of “values” or “expressive authenticity” (Newman Reference Newman2019). Writers may be more or less genuinely committed to the content they convey. Authenticity in the sense of sincerity would be the correspondence between people’s internal states and their external expressions, with a lack of authenticity resulting in a lie. The binomial authentic-deceptive fits style transfer: all content things being equal, what gives a lie away is its linguistic style (Newman et al. Reference Newman, Pennebaker, Berry and Richards2003). Therefore, an authenticity-aware style transfer tool could help understand deceptive communication or directly unveil it. Yet, the transfer between authenticity attributes appears puzzling because successful liars are those who shape their content in a style that seems convincing and trustworthy (Friedman and Tucker Reference Friedman and Tucker1990).

Below are the dynamic states that, to the best of our knowledge, are the only ones present in the style transfer literature (they are visualized in Table 3, with some corresponding examples in Table 4).

Table 3. Style transfer methods distributed across unintended dynamic styles of our hierarchy

4.2.1 Writing time

An instance of dynamic states-related styles in the literature is the time at which writers produce an utterance. Information revolving around the writing time of texts was collected by Kang et al. (Reference Kang, Gangal and Hovy2019) and is contained in their Pastel corpus. The authors considered daily time spans such as Night and Afternoon, that represent the stylistic attributes to transfer in text. These attributes were tackled with the methods discussed above, under persona and background (the success of their transfer was evaluated with the same techniques).

4.2.2 Subjective bias

Talking of subjectivity in language evokes the idea that words do not mirror an external reality, but reflect it as is seen by the speakers (Wierzbicka Reference Wierzbicka1988). In this sense, language has the power to expose personal bias. NLP has risen to a collective endeavor to mitigate the prejudices expressed by humans and reflected in the computational representations of their texts (Bolukbasi et al. Reference Bolukbasi, Chang, Zou, Saligrama and Kalai2016; Zhao et al. Reference Zhao, Zhou, Li, Wang and Chang2018a). For its part, style transfer has surged to the challenge of debiasing language by directly operating on the texts themselves.

Although bias comes in many forms (e.g., stereotypes harmful to specific people or groups of people), only one clear-cut definition has been assumed for conditional text rewriting: bias as a form of inappropriate subjectivity, emerging when personal assessment should be obfuscated as much as possible. That is the case with encyclopedias and textbooks whose authors are required to suppress their own worldviews. An author’s personal framing, however, is not always communicated openly. This is exemplified by the sentence “John McCain exposed as an unprincipled politician”, reported in the only style transfer work on this topic (Pryzant et al. Reference Pryzant, Martinez, Dass, Kurohashi, Jurafsky and Yang2020). Here, the bias would emerge from the word “exposed”, a factive verb presupposing the truth of its object. The goal of style transfer is to move the text towards a more neutral rendering, like one containing the verb “described”.

Bias (and the choice of terms that reinforce it) can operate beyond the conscious level (Chopik and Giasson Reference Chopik and Giasson2017). Further, circumventing one’s skewed viewpoints seems to take an expert effort—as suggested by the analysis of Pryzant et al. (Reference Pryzant, Martinez, Dass, Kurohashi, Jurafsky and Yang2020) on their own corpus, senior Wikipedia revisors are more likely to neutralize texts than less experienced peers. Therefore, we collocate the style subjective bias under the unintended group, and specifically, as a division of dynamic states because prior judgments are open to reconsideration.

Data

Pryzant et al. (Reference Pryzant, Martinez, Dass, Kurohashi, Jurafsky and Yang2020) released a corpusFootnote k of aligned sentences, where each pair consists of a biased version and its neutralized equivalent. The texts are Wikipedia revisions justified by a neutral point of view tag, comprising 180k pre and post revision pairs.

Table 4. Examples of dynamic states, namely writing time [from Kang et al. (Reference Kang, Gangal and Hovy2019)] and subjective bias [taken from Pryzant et al. (Reference Pryzant, Martinez, Dass, Kurohashi, Jurafsky and Yang2020)]. Note that the former is transferred in combination with other styles (i.e., background)

Methods

With the goal of generating a text that is neutralized, but otherwise similar in meaning to an input, Pryzant et al. (Reference Pryzant, Martinez, Dass, Kurohashi, Jurafsky and Yang2020) introduced two algorithms. One, more open to being interpreted, has two components: a neural sequence tagger that estimates the probability that a word in a sentence is subjectively biased, and a machine translation-based step dedicated to editing while being informed by probabilities about subjectivity. The alternative approach directly performs the edit, with Bert as an encoder and with an attentional Lstm as a decoder leveraging a copy and coverage mechanisms.

Evaluation

The models’ accuracy was equated to the proportion of texts that reproduced the changes of editors. In the human-based evaluation, the success of models was measured with the help of English-speaking crowdworkers who passed preliminary tests proving their ability to identify subjective bias.

5. Intended styles

The second branch of the hierarchy stems from the observation that some linguistic variations are intentional. By intended we refer to styles that people modify contextually to the audience they address, their relationship, their social status, and the purpose of their communication. Due to a complex interaction between individuals, society, and contingent situations (Brown and Fraser Reference Brown and Fraser1979), it is not uncommon for speakers to change their language as they change their role in everyday life, alternating between non-occupational roles (stranger, friend), professional positions (doctor, teacher), and kinship-related parts (mother, sibling). Such variations occur as much in speech conversations, as they do in texts (Biber Reference Biber2012).

We split this group of styles into the targeted and non-targeted subcategories. The non-targeted ones, which are the non-evaluative (or non-aspect-based) styles, further develop into the circumstantial and conventional nodes. While all non-targeted leaves can be associated with an idea of linguistic variation, many of them are specifically closer to what theoretical work calls “registers” and “genres”. Understanding the characteristics of these two concepts would shed light on the linguistic level at which the transfer of non-targeted features of text should operate; yet, there is no agreement on the difference between genres and registers, and a precise indication of what differentiates them from style is missing as well (Biber Reference Biber1995). In our discussion, we follow Lee (Reference Lee2001): by genre we mean novels, poems, technical manuals, and all such categories that group texts based on criteria like intended audience or purpose of production; whereas registers are linguistic varieties solicited by an interpersonal context, each of which is functional to immediate use. Therefore, we place the culturally recognized categories to which we can assign texts among the conventional genres, and we collocate linguistic patterns that arise in specific situations among the circumstantial registers. Note that these two classes of styles are not mutually exclusive: a formal register can be instantiated in an academic prose as well as in a sonnet.

5.1 Targeted

The presence of writers in language becomes particularly evident when they assess a topic of discourse. They applaud, disapprove, and convey values. Communications of this type, which pervade social media, have provided fertile ground for the growth and success of opinion mining in NLP. Opinion mining is concerned with the computational processing of stances and emotions targeted towards entities, events, and their properties (Hu and Liu Reference Hu and Liu2006). The same sort of information is the bulk of study for the targeted group in our hierarchy. It is “targeted” because it reflects the relational nature of language, often directed towards an object (Brentano Reference Brentano1874): people state their stances or feelings about things or with respect to properties. Hence, under this group are styles that pertain to the language of evaluations, like sarcasm and emotions.

The tasks of mining opinions and transferring them are kin in that they use similar texts and observe similar phenomena. Yet, they differ in a crucial respect. Each of them looks for information at different levels of granularity. The former task not only recognizes sentiment and opinions, but also extracts more structured information such as the holder of the sentiment, the target, and the aspects of the target of an opinion (Liu and Zhang Reference Liu and Zhang2012). Instead, style transfer only changes the subjective attitudes of writers.