1. Introduction

High utility itemset mining (HUIM) is an important sub-area of frequent itemset mining (FIM), which is a fundamental field of data mining. An itemset is frequent if its frequency is greater than the user-specified minimum support threshold. FIM uses the downward closure property (DCP) (Agrawal & Srikant, 1994) to reduce the search-space. FIM algorithms suffer from two major drawbacks. First, the purchase quantities of items are not taken into account. For example, whether a customer has bought two pens, five pens, or ten pens, it is viewed as the same. Second, all items are assumed to have the same importance (e.g., unit profit, weight, or price). For example, whether a customer has bought a very expensive diamond or just an ordinary pen, it is viewed as equally important. These assumptions often do not hold in real-life applications. In real life, retailers and corporate managers are more interested in finding the itemsets that yield high profit than the itemsets that are bought together frequently. Therefore, FIM does not fulfil the requirements of the user in real life. To address these issues, the HUIM field (Liu et al., 2005; Liu & Qu, 2012; Fournier-Viger et al., 2014; Singh et al., 2018, n.d.) came into the limelight.

HUIM is a sub-field of data mining that takes the price and quantity of items into account. Hence, it is an important real-life-oriented research problem in data mining. An itemset is called a high utility itemset (HUI) if its utility value is no less than the user-specified minimum utility threshold ($min\_util$). HUIs have many applications, such as mobile commerce (Shie et al., 2011), cross-marketing analysis (Yen & Lee, 2007a), and web usage mining (Pei et al., 2000). The utility measure in HUIM does not satisfy the DCP that holds for the support count. Hence, the FIM strategies and methods cannot be directly applied to the HUIM field. To address this issue, Liu et al. proposed the Transaction Weighted Utility (TWU)-based overestimation method (Liu et al., 2005). Several HUIM algorithms in the literature follow the TWU-based pruning property (Tseng et al., 2010, 2013; Ahmed et al., 2009).

A major limitation of HUIM is setting the appropriate $min\_util$ threshold. The user does not know which value for the $min\_util$ threshold is good for their requirements. Specifying the $min\_util$ threshold is crucial because it directly affects the number of HUIs. If the $min\_util$ threshold is set too high, few or no HUIs are found, and we lose lots of interesting HUIs. If the $min\_util$ threshold is set too low, a large number of HUIs are found. To find the appropriate $min\_util$ threshold, a user may thus need to run a HUIM algorithm several times. There are several top-k-based mining algorithms in the literature to solve this problem (Wu et al., 2012; Tseng et al., 2016; Ryang & Yun, 2015; Duong et al., 2016). In the top-k HUIM field, the value of k needs to be set instead of the $min\_util$ threshold. The value of k denotes the number of itemsets the user wants to obtain. A top-k HUIM algorithm then returns the k itemsets having the highest utility values. Thus, setting the value of k is much easier than setting $min\_util$. Top-k HUIM is useful in many domains. For example, top-k HUIM is used to find the k sets of products that are the most profitable when sold together.

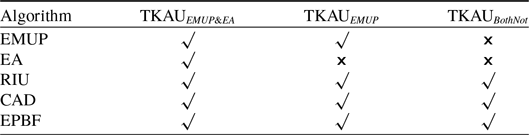

Top-k HUIM is a very active research area. In the literature, we find several articles based on this area, including various extensions of high utility itemset mining like closed, on-shelf, and sequential for specific needs. This paper presents a survey of top-k HUIM approaches that can serve both as an introduction and as a guide to recent advances and opportunities that will be useful for new researchers in this field.

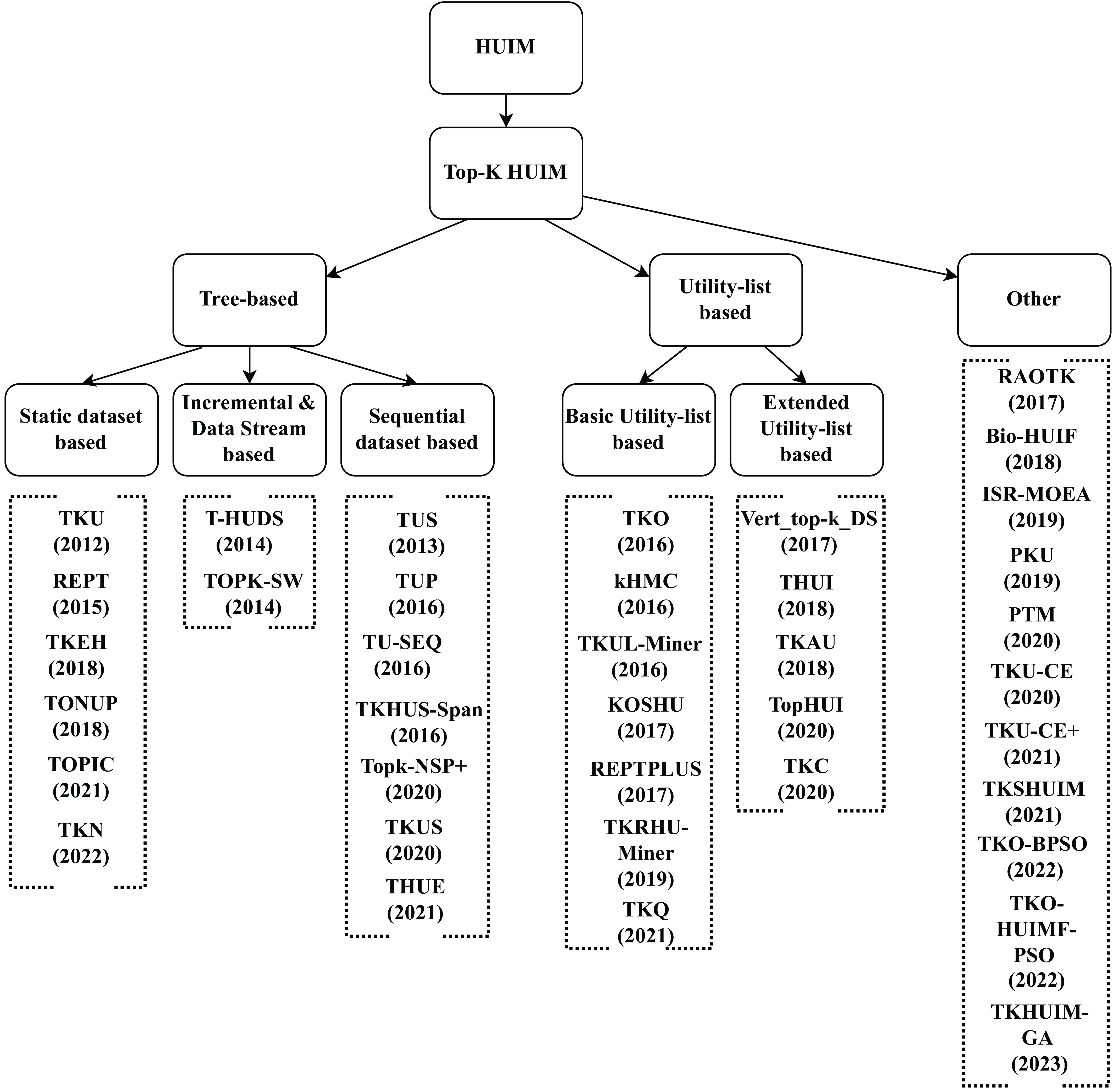

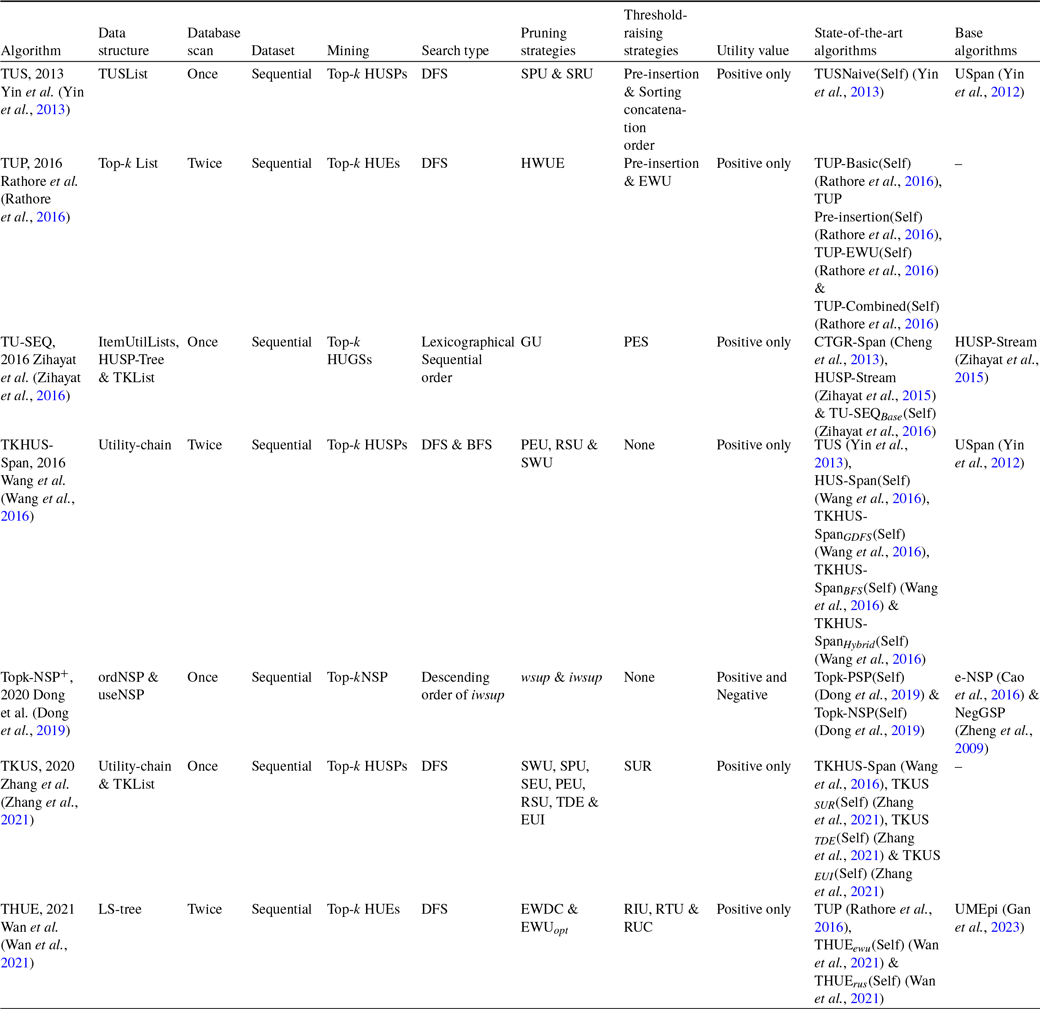

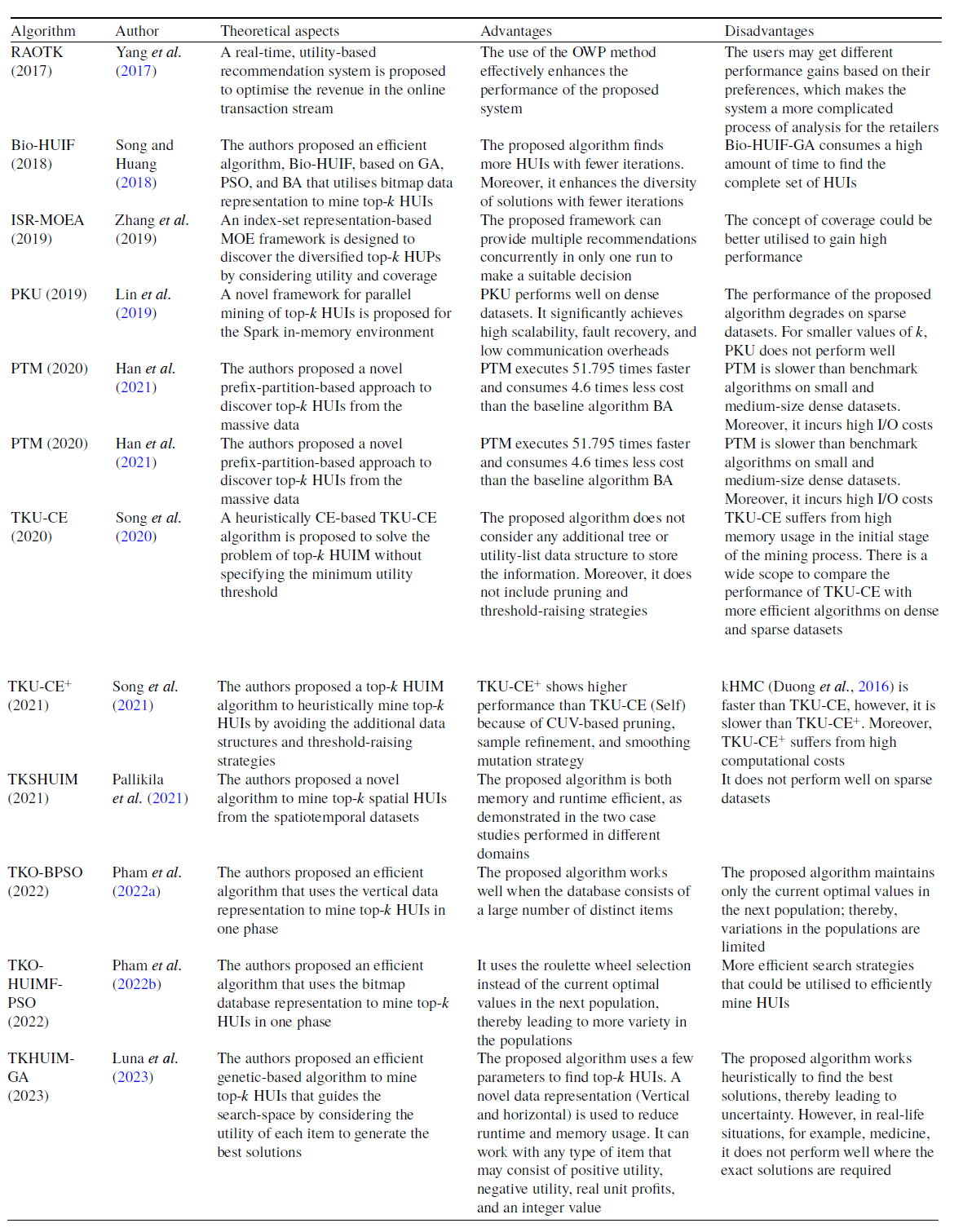

Figure 1. Taxonomy of the state-of-the-art top-k HUIM algorithms

Contribution: The present paper provides an extensive review of top-k HUIM algorithms. A taxonomy of the state-of-the-art top-k HUIM algorithms is presented in Figure 1. The major contributions of this survey are as follows:

• A brief description of traditional HUIM and top-k HUIM approaches with examples is presented.

• A taxonomy of top-k HUIM approaches, including tree-based, utility-based, and others, is presented.

• A detailed comparative analysis and summary of the currently available state-of-the-art approaches are presented.

• The comparative advantages and disadvantages of the current state-of-the-art approaches are also demonstrated.

• A brief discussion and summary of the top-k HUIM approaches are presented. Furthermore, future research directions are also discussed.

Differences from existing works

In the literature, several surveys (Zhang et al., 2018; Rahmati & Sohrabi, 2019; Fournier-Viger et al., 2019; Zhang et al., 2020; Gan et al., 2021; Kumar & Singh, 2023) have covered the discovery of traditional HUIs. In 2018, Zhang et al. presented an empirical evaluation survey of HUIM algorithms. This work uses real and synthetic datasets to compare the memory usage and runtime efficiency of selected algorithms. It included only ten state-of-the-art HUIM algorithms. In 2019, Rahmati and Sohrabi presented a comprehensive systematic survey of HUIM approaches. This survey reviews the state-of-the-art HUIM approaches on transactional and uncertain datasets and compares the important properties, advantages, and drawbacks of existing approaches. It did not discuss any future directions for the HUIM field. Later, Fournier-Viger et al. (2019) presented a survey that compared HUIM approaches. The authors classified the existing state-of-the-art approaches into two categories: one-phase and two-phase algorithms. This work only briefly covered extensions of HUIM such as closed HUIs, top-k HUIs, HUIM with negative utility, HUIM with discount strategies, on-shelf HUIM, HUIs in dynamic datasets, and some other extensions. In 2020, Zhang et al. presented a survey of the key technologies for HUIM. This survey categorised the current state-of-the-art approaches into Apriori-based, tree-based, projection-based, list-based, data-based, and index-based approaches. This work also discussed data-stream-based HUIM algorithms. In 2021, Gan et al. presented a structured and comprehensive survey of HUIM algorithms. The authors classified the state-of-the-art algorithms into Apriori-based, tree-based, projection-based, vertical/horizontal data-format-based, and other hybrid approaches. The survey compares the existing state-of-the-art approaches and shows their pros and cons. All the above-discussed works survey only traditional HUIM approaches. They are not focused on top-k HUIM approaches.

In the literature, we found only one study that compares transactional dataset-based top-k HUIM approaches. In 2019, Krishnamoorthy (2019a) presented a comparative study that categorised the existing top-k HUIM algorithms into two groups: two-phase and one-phase algorithms. This study compares only two two-phase algorithms (TKU and REPT) and two one-phase algorithms (TKO and kHMC). It presents an experimental comparison among these four transactional dataset-based algorithms, that is, TKU, REPT, TKO, and kHMC. The author utilized eight datasets and categorised them as sparse or dense. The experimental results include comparisons of runtime, number of generated candidates, and memory consumption. Furthermore, the author provides little discussion of approaches for other kinds of datasets, that is, data-stream and on-shelf-based mining approaches.

Our work is distinct from the comparative study presented by Krishnamoorthy (2019a) in the following aspects:

• Our work presents a detailed survey of more than 35 state-of-the-art papers, whereas Krishnamoorthy’s work included only 4 papers. Our analysis included almost all the available state-of-the-art papers in the top-k HUIM field.

• Krishnamoorthy’s work is an experimental comparative study, and our work is a theoretical comparative analysis. Our analysis is more comprehensive and detailed.

• Krishnamoorthy compared only 12 internal threshold-raising strategies, whereas our analysis included more than 50 threshold-raising strategies. Our analysis discussed more than 55 pruning strategies too.

• Our analysis elaborated on the comparative advantages and disadvantages of the top-k HUIM approaches, whereas Krishnamoorthy’s study does not.

• Our taxonomy included transactional dataset-based, incremental and data-stream-based, sequential dataset-based, basic and extended utility-based, and other hybrid approaches. On the other hand, Krishnamoorthy’s study only included transactional dataset-based approaches.

• Furthermore, we discussed applications and also included a detailed summary and discussion of the top-k HUIM approaches. We also elaborated on dynamic dataset-based approaches and on approaches that handle both positive and negative utility values.

Applications

User behavior mining: User behavior mining is an emerging topic in the domain of data mining. It analyses the behavior of users and has various applications, for example, website design (Pei et al., 2000) and cross-marketing analysis (Yen & Lee, 2007a). Periodic behavior can be defined as repeated activities at certain locations with regular time intervals (Li et al., 2010). It provides insightful and concrete information about a long movement history. Examining interesting or particular periods requires experts with specific background knowledge of the domain. Utility pattern mining could be used to analyse user behavior during different time periods. For example, physicians need to monitor older people to examine their daily and weekly behaviors.

Bio-informatics: Microarrays are an effective technique for evaluating the expression of a massive number of genes. They are used to identify the relationships between gene regulatory events and to determine the biological effects of stimuli in the environment. However, it is quite challenging to analyse the biological effects on large-scale datasets. Utility pattern mining is quite useful for identifying the different genes that frequently occur under the biological conditions in a microarray dataset (Liu et al., 2013). A phylogeny, or phylogenetic tree, is used to identify the relationships among a set of species. Utility pattern mining techniques are used to identify common patterns, especially utility trees, in a collection of phylogenetic trees.

Market-basket analysis: HUIM algorithms (Liu et al., 2005) are extensively used in market-basket analysis, which examines the sets of items purchased by customers in order to generate significant profits for retail businesses, for example, Yahoo. HUIM algorithms help retailers make correct decisions by identifying highly profitable itemsets and reducing the inventory cost of itemsets that are frequent but less profitable (Liu et al., 2005).

Smart home: Due to the advancement of sensor technology, the electrical usage data of home appliances can be gathered very easily. However, the relevant information in the appliance usage data may exist but remain hidden. The Correlation Pattern Miner (CoPMiner) algorithm (Chen et al., 2014) is designed to identify usage patterns and correlations among appliances probabilistically. HUIM algorithms could be a promising solution for finding appliance usage patterns that help users identify unnecessary appliance usage and take corrective action for better usability of appliances. Moreover, manufacturers can better design intelligent control systems for smart appliances.

Interval-based events in temporal pattern mining: Temporal pattern mining is an interesting and important sub-field of data mining. In medical applications, temporal pattern mining identifies frequent temporal patterns, for example, that a patient has a fever when they have a cough, and that these symptoms occur when the patient has flu (Wu & Chen, 2007). Sequential patterns identify the complex interval-based temporal relationships among events. For example, in many diabetic patients, the presence of hyperglycemia overlaps with the absence of glycosuria, which led to the development of effective diabetic testing kits. Such analysis requires identifying the complex temporal relationships among event durations. Utility pattern mining could be used to analyse interval-based events in temporal pattern mining.

Web access pattern mining: Web access patterns are used to predict and understand the browsing behaviour of users, which is quite useful for enhancing the user experience and website configuration. They help to analyse user motivation and to give better recommendations and personalised services to users. Web access patterns, also known as web navigation patterns or click-streams, represent the extracted path via one or more web pages on a website through the web server. The process of discovering patterns from web access logs is known as web usage mining (or web log mining) (Pei et al., 2000). HUIM algorithms can be used to mine the recorded information regarding the access paths of website visitors. Web access sequence (WAS) mining algorithms access the sequences of web pages visited by users through web servers (Pei et al., 2000). This can enhance the design of a website by providing effective accessibility among highly correlated web pages, user recommendations, customer classification, and advertisement policies. HUIM algorithms are quite useful for both static and incremental mining of high-utility web access sequences.

The rest of the paper is organised as follows: Section 2 describes the key definitions and notations used in this paper. Section 3 presents a detailed discussion of top-k HUIM approaches. Section 4 presents the discussion and summary of all the available top-k HUIM approaches. Section 5 highlights the future directions for the top-k HUIM problem. Section 6 gives concluding remarks.

2. Preliminaries and definitions

Let $I = \{x_{1}, x_{2}, \dots, x_{m}\}$ be a finite set of distinct items. A transactional dataset $D = \{T_{1}, T_{2}, \dots, T_{n}\}$ is a set of transactions, where each transaction $T_j \in D$ is a subset of $I$ and n is the total number of transactions in D. Each item in a transaction has a positive integer value called the internal utility (purchase quantity) of the item. Each item $x_i \in I$ is associated with an integer value called an external utility (price or profit). If the value of the external utility is not positive, then the item $x_i$ is called a negative item.

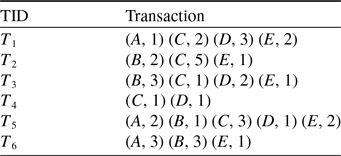

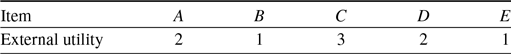

For example, Table 1 shows an example dataset containing six transactions. Each row represents a transaction, where alphabetical letters ($A, B, C, \dots$) denote the items. Each item has its own internal utility value. Table 2 shows the external utility values for each item.

Table 1. A transactional dataset

Table 2. External utility values

Definition 1

(Internal utility). Each item $x \in I$ is assigned an internal utility (purchase quantity) in a transaction $T_j$, referred to as $IU(x, T_j)$.

For example, the internal utility of item A in the transaction $T_1$ is 1 as shown in Table 1.

Definition 2

(External utility). Each item $x \in I$ is assigned an external utility (e.g., unit profit) referred to as $EU(x)$.

For example, the external utility of item A is 2 as shown in Table 2.

Definition 3

(Utility of an item). The utility of an item

![]() $ x \in T_j$

is denoted by

$ x \in T_j$

is denoted by

![]() $ U(x, T_j) $

, where

$ U(x, T_j) $

, where

![]() $ U(x, T_j) $

=

$ U(x, T_j) $

=

![]() $ IU(x, T_j) $

$ IU(x, T_j) $

![]() $ \times EU(x) $

.

$ \times EU(x) $

.

For example, the utility of item A in

![]() $ T_1 $

is computed as:

$ T_1 $

is computed as:

![]() $ U(A, T_1) $

=

$ U(A, T_1) $

=

![]() $ IU (A, T_1) $

$ IU (A, T_1) $

![]() $ \times EU(A) = 1 \times 2 = 2 $

. The

$ \times EU(A) = 1 \times 2 = 2 $

. The

![]() $4^{th}$

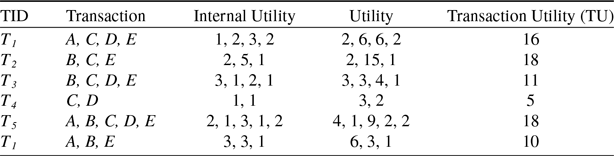

column of Table 3 shows the utility value of each item for all the transactions.

$4^{th}$

column of Table 3 shows the utility value of each item for all the transactions.

Table 3. Transaction utility of the running example.
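As a minimal sketch of Definitions 1 to 3, the snippet below computes the utility of an item in a transaction. The dataset `D`, the profit table `EU`, and the function name `item_utility` are hypothetical and chosen only for illustration; the values are not those of Tables 1 to 3.

```python
# Hypothetical toy data (NOT the paper's Table 1 or Table 2).
EU = {"A": 2, "B": 3, "C": 1}  # external utility (unit profit) of each item
D = {
    "T1": {"A": 1, "C": 2},          # item -> internal utility (quantity)
    "T2": {"B": 2, "C": 1},
    "T3": {"A": 2, "B": 1, "C": 3},
}


def item_utility(x, tid):
    """U(x, T_j) = IU(x, T_j) * EU(x) for an item x that occurs in T_j."""
    return D[tid][x] * EU[x]


if __name__ == "__main__":
    print(item_utility("A", "T1"))  # 1 * 2 = 2
    print(item_utility("B", "T2"))  # 2 * 3 = 6
```

Storing each transaction as an item-to-quantity mapping keeps the internal utility lookup a single dictionary access; this is a design choice of the sketch, not something prescribed by the definitions.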

Definition 4

(Utility of an itemset in a transaction). The utility of an itemset X in a transaction $T_{j}$ ($X \subseteq T_{j}$) is denoted by $U(X,T_{j})$, which is defined as $U(X,T_{j}) = \sum_{x \in X \wedge X \subseteq T_j} U(x,T_{j})$.

For example, $U(\{A,C\}, T_1) = 2 + 6 = 8$.

Definition 5

(Utility of an itemset in dataset). The utility of an itemset X in dataset D is denoted by $U(X)$, which is defined as $U(X) = \sum_{X \subseteq T_j \in D} U(X, T_j)$.

For example, $U(\{A,C\}) = U(\{A,C\}, T_1) + U(\{A,C\}, T_5) = 8 + 13 = 21$.
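The two itemset-utility definitions can be sketched as follows. This is only an illustration under assumed data: `D`, `EU`, and the function names are hypothetical, and the numbers do not come from the running example of the paper.

```python
# Hypothetical toy data (NOT the paper's Table 1 or Table 2).
EU = {"A": 2, "B": 3, "C": 1}  # external utility (unit profit) of each item
D = {
    "T1": {"A": 1, "C": 2},          # item -> internal utility (quantity)
    "T2": {"B": 2, "C": 1},
    "T3": {"A": 2, "B": 1, "C": 3},
}


def utility_in_transaction(X, items):
    """U(X, T_j): sum of the item utilities of X if X is contained in T_j, else 0."""
    if not set(X) <= set(items):
        return 0
    return sum(items[x] * EU[x] for x in X)


def utility(X):
    """U(X): sum of U(X, T_j) over all transactions that contain X."""
    return sum(utility_in_transaction(X, items) for items in D.values())


if __name__ == "__main__":
    print(utility({"A"}))       # 2 + 4 = 6
    print(utility({"A", "C"}))  # (2 + 2) + (4 + 3) = 11
```

On this toy data the superset {A, C} has a higher utility than its subset {A}, which illustrates why utility is not anti-monotone.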

Definition 6

(Transaction utility). The transaction utility of a transaction $T_{j}$, denoted by $TU(T_{j})$, is computed as $TU(T_{j}) = \sum_{i=1}^{m} U(x_i, T_j)$, where m is the number of items in transaction $T_{j}$.

For example, $TU(T_1) = U(A, T_1) + U(C, T_1) + U(D, T_1) + U(E, T_1) = 2 + 6 + 6 + 2 = 16$. The TU of all the transactions is shown in the last column of Table 3.

Definition 7

(Total utility). The total utility of a dataset D is denoted as $TU_{D}$ and is defined as $TU_{D} = \sum_{T_j \in D} TU(T_j)$.

For example, the total utility of the dataset D is $TU_{D} = TU(T_1) + TU(T_2) + TU(T_3) + TU(T_4) + TU(T_5) + TU(T_6) = 16 + 18 + 11 + 5 + 18 + 10 = 78$.

FIM discovers frequent patterns by using the DCP; however, the support-based DCP cannot be applied to the utility value in HUIM. A low-utility itemset may become an HUI when an item with a large utility value is added to it. To restore the DCP, HUIM algorithms use the TWU closure property (Liu et al., 2005). Many HUIM algorithms follow the TWU technique to mine HUIs (Liu et al., 2005; Chu et al., 2009; Wu et al., 2012; Ryang & Yun, 2015; Yin et al., 2013; Zihayat & An, 2014).

Definition 8

(Transaction-weighted utility). The transaction weighted utility of an itemset X denoted by TWU(X) can be defined as

![]() $TWU(X) =$

$TWU(X) =$

![]() $\sum_{X \subseteq T_{j} \in D} TU(T_{j})$

.

$\sum_{X \subseteq T_{j} \in D} TU(T_{j})$

.

For example,

![]() $ TWU(A) =$

$ TWU(A) =$

![]() $ TU(T_1) + TU(T_5) + TU(T_6) =$

$ TU(T_1) + TU(T_5) + TU(T_6) =$

![]() $ 16 + 18 + 10 = 44 $

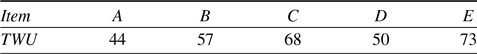

. Table 4 shows the TWU values of all the items in the running example.

$ 16 + 18 + 10 = 44 $

. Table 4 shows the TWU values of all the items in the running example.

Table 4. TWU values of the running example
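The transaction utility and TWU computations can be sketched in a few lines. The data and function names below are assumptions made for illustration only and do not reproduce Tables 1 to 4.

```python
# Hypothetical toy data (NOT the paper's Table 1 or Table 2).
EU = {"A": 2, "B": 3, "C": 1}  # external utility (unit profit) of each item
D = {
    "T1": {"A": 1, "C": 2},          # item -> internal utility (quantity)
    "T2": {"B": 2, "C": 1},
    "T3": {"A": 2, "B": 1, "C": 3},
}


def transaction_utility(items):
    """TU(T_j): sum of the utilities of all items in the transaction."""
    return sum(qty * EU[x] for x, qty in items.items())


def twu(X):
    """TWU(X): sum of TU(T_j) over all transactions that contain X."""
    return sum(
        transaction_utility(items)
        for items in D.values()
        if set(X) <= set(items)
    )


if __name__ == "__main__":
    print([transaction_utility(t) for t in D.values()])  # [4, 7, 10]
    print(twu({"A"}))  # TU(T1) + TU(T3) = 4 + 10 = 14
    print(twu({"C"}))  # 4 + 7 + 10 = 21
```

Adding an item to X can only shrink the set of transactions that contain X, so `twu` never increases when X grows; this is the property that TWU-based pruning relies on.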

Property 1

(TWU based overestimation). For any itemset X, $TWU(X) \ge U(X)$, because $U(X, T_j) \le TU(T_j)$ for every transaction $T_j$ that contains X. When $TWU(X) \gt U(X)$, the utility of the itemset is said to be overestimated (Liu et al., 2005).

Property 2

(TWU based search-space pruning). If the TWU value of the itemset X is less than the user-defined threshold ($min\_util$), that is, $TWU(X) \lt min\_util$, then neither X nor any of its supersets can be an HUI, and the itemset is excluded from further processing (Liu et al., 2005).
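A hedged sketch of TWU-based pruning at the level of single items is given below. The data, the threshold value, and the helper names (`promising_items`, `max_utility_of_supersets`) are hypothetical; the brute-force check is included only to confirm on toy data that no superset of a pruned item reaches the threshold.

```python
from itertools import combinations

# Hypothetical toy data (NOT the paper's Table 1 or Table 2).
EU = {"A": 2, "B": 3, "C": 1}
D = {
    "T1": {"A": 1, "C": 2},
    "T2": {"B": 2, "C": 1},
    "T3": {"A": 2, "B": 1, "C": 3},
}


def utility(X):
    """U(X): utility of X summed over the transactions that contain X."""
    return sum(
        sum(items[x] * EU[x] for x in X)
        for items in D.values()
        if set(X) <= set(items)
    )


def twu(X):
    """TWU(X): sum of transaction utilities of the transactions containing X."""
    return sum(
        sum(q * EU[x] for x, q in items.items())
        for items in D.values()
        if set(X) <= set(items)
    )


def promising_items(min_util):
    """Items whose TWU reaches min_util; all other items can be discarded."""
    return sorted(x for x in EU if twu({x}) >= min_util)


def max_utility_of_supersets(x):
    """Brute-force check: highest utility among all itemsets that contain x."""
    others = [y for y in EU if y != x]
    return max(
        utility((x,) + rest)
        for size in range(len(others) + 1)
        for rest in combinations(others, size)
    )


if __name__ == "__main__":
    print(promising_items(15))            # ['B', 'C'], since TWU(A) = 14 < 15
    print(max_utility_of_supersets("A"))  # 11, below the threshold of 15
```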

Definition 9

(High utility itemset). An itemset X is called a high utility itemset if the utility of itemset X is greater than or equal to the user-specified $min\_util$ threshold, that is, $U(X) \ge min\_util$. Otherwise, the itemset is of low utility.

The HUIs for the running example with $min\_util = 30$ are as follows: $\{A,C,D,E\}:33$, $\{C,D\}:35$, $\{C,D,E\}:35$, $\{B,C\}:33$, $\{B,C,E\}:37$, $\{C\}:36$, and $\{C,E\}:39$, where the number beside each itemset indicates its utility value.
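To make the definition concrete, the following sketch enumerates every itemset of a tiny dataset and keeps those whose utility reaches the threshold. It is a brute-force illustration, not one of the surveyed algorithms, and the data, threshold, and function names are hypothetical rather than taken from the running example.

```python
from itertools import combinations

# Hypothetical toy data (NOT the paper's Table 1 or Table 2).
EU = {"A": 2, "B": 3, "C": 1}
D = {
    "T1": {"A": 1, "C": 2},
    "T2": {"B": 2, "C": 1},
    "T3": {"A": 2, "B": 1, "C": 3},
}


def utility(X):
    """U(X): utility of X summed over the transactions that contain X."""
    return sum(
        sum(items[x] * EU[x] for x in X)
        for items in D.values()
        if set(X) <= set(items)
    )


def mine_huis(min_util):
    """Return {itemset: utility} for every itemset with U(X) >= min_util."""
    items = sorted(EU)
    result = {}
    for size in range(1, len(items) + 1):
        for X in combinations(items, size):
            u = utility(X)
            if u >= min_util:
                result[X] = u
    return result


if __name__ == "__main__":
    print(mine_huis(10))
    # {('A', 'C'): 11, ('B', 'C'): 13, ('A', 'B', 'C'): 10}
```

No single item is an HUI at this threshold, yet three of their supersets are, so a support-style "prune every superset of a low itemset" rule would miss all of them. Exhaustive enumeration is exponential in the number of items, which is why practical algorithms rely on upper bounds such as TWU.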

Definition 10 (Closed itemset). An itemset X is a closed itemset if there is no super-set of itemset X with the same support count. Otherwise, the itemset X is a non-closed itemset.

Two-phase algorithms suffer from multiple dataset scans and the generation of a large number of candidates. To overcome these limitations, several one-phase algorithms have been proposed. One-phase algorithms are generally more efficient than two-phase algorithms in terms of execution time and memory space. Most one-phase algorithms use a utility-list-based data structure to store information about items, together with the remaining utility-based pruning strategy to prune the search-space (Liu & Qu, 2012).

Definition 11

(Remaining utility of an itemset in a transaction). The remaining utility of itemset X in transaction $T_j$, denoted by $RU(X, T_j)$, is the sum of the utilities of all the items in $T_j/X$, where $T_j/X$ denotes the set of items that follow X in $T_j$ under the adopted item ordering. That is, $RU(X, T_j) = \sum_{i \in (T_j/X)} U(i, T_j)$ (Liu & Qu, 2012).

Definition 12

(Utility-list structure). Each entry of the utility-list of an itemset X contains three fields, $T_{id}$, iutil, and rutil. The $T_{id}$ identifies a transaction $T_j$ containing itemset X, iutil is the utility of X in that transaction, $U(X, T_j)$, and rutil is the remaining utility of X in that transaction, $RU(X,T_j)$ (Liu & Qu, 2012).

Property 3

(Pruning search-space using remaining utility). For an itemset X, let $U(X)$ and $RU(X)$ denote the sums of $U(X, T_j)$ and $RU(X, T_j)$ over all transactions $T_j$ containing X. If $U(X)+RU(X)$ is less than $min\_util$, then itemset X and all its extensions (supersets formed by appending items that follow X in the item ordering) are low utility itemsets. Otherwise, X and its extensions remain candidates for HUIs. The details and proof of the remaining utility (REU)-based upper bound are given in Liu and Qu (2012).
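The utility-list of a single item and the remaining-utility check can be sketched as follows. This is a simplified illustration for 1-itemsets only, assuming a fixed processing order `ORDER` (ascending TWU on the toy data); the data, the order, and the function names are hypothetical and not taken from Liu and Qu (2012).

```python
# Hypothetical toy data (NOT the paper's Table 1 or Table 2).
EU = {"A": 2, "B": 3, "C": 1}
D = {
    "T1": {"A": 1, "C": 2},
    "T2": {"B": 2, "C": 1},
    "T3": {"A": 2, "B": 1, "C": 3},
}
ORDER = ["A", "B", "C"]  # assumed total order on items (ascending TWU here)


def utility_list(x):
    """Utility-list of item x: one (tid, iutil, rutil) entry per transaction with x."""
    entries = []
    for tid, items in D.items():
        if x not in items:
            continue
        iutil = items[x] * EU[x]
        # rutil: utilities of the items that come after x in the processing order
        rutil = sum(
            q * EU[y] for y, q in items.items()
            if ORDER.index(y) > ORDER.index(x)
        )
        entries.append((tid, iutil, rutil))
    return entries


def may_have_high_utility_extension(x, min_util):
    """Remaining-utility check: keep x only if sum(iutil + rutil) >= min_util."""
    return sum(iutil + rutil for _, iutil, rutil in utility_list(x)) >= min_util


if __name__ == "__main__":
    print(utility_list("A"))  # [('T1', 2, 2), ('T3', 4, 6)]
    print(utility_list("B"))  # [('T2', 6, 1), ('T3', 3, 3)]
    print([x for x in ORDER if may_have_high_utility_extension(x, 12)])  # ['A', 'B']
```

With a threshold of 12, item C is pruned because the sum of its iutil and rutil values is only 6, so neither C nor any extension of C in this order needs to be explored.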

Definition 13 (top-k high utility itemset). An itemset X is a top-k HUI if there exist no more than $k-1$ itemsets whose utility is higher than that of X.

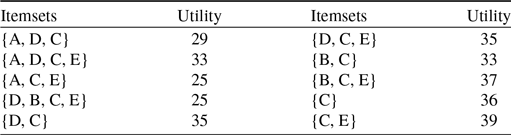

In the running example, Table 5 shows the top-k HUIs for $k = 10$.

Table 5. High utility itemsets where $k=10$
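The idea of replacing $min\_util$ by k can be illustrated with a small sketch that keeps the k best itemsets in a min-heap and raises an internal threshold from 0 as better itemsets are found. It enumerates all itemsets by brute force and is not any of the surveyed algorithms; the data and names are hypothetical, and ties with the current k-th utility are simply not inserted.

```python
import heapq
from itertools import combinations

# Hypothetical toy data (NOT the paper's Table 1 or Table 2).
EU = {"A": 2, "B": 3, "C": 1}
D = {
    "T1": {"A": 1, "C": 2},
    "T2": {"B": 2, "C": 1},
    "T3": {"A": 2, "B": 1, "C": 3},
}


def utility(X):
    """U(X): utility of X summed over the transactions that contain X."""
    return sum(
        sum(items[x] * EU[x] for x in X)
        for items in D.values()
        if set(X) <= set(items)
    )


def top_k_huis(k):
    """Return (k best (utility, itemset) pairs, final internal threshold)."""
    heap = []        # min-heap; heap[0] is the weakest of the current top-k
    threshold = 0    # internal threshold, raised once k itemsets are held
    items = sorted(EU)
    for size in range(1, len(items) + 1):
        for X in combinations(items, size):
            u = utility(X)
            if len(heap) < k:
                heapq.heappush(heap, (u, X))
            elif u > heap[0][0]:
                heapq.heapreplace(heap, (u, X))
            if len(heap) == k:
                threshold = heap[0][0]
    return sorted(heap, reverse=True), threshold


if __name__ == "__main__":
    print(top_k_huis(2))
    # ([(13, ('B', 'C')), (11, ('A', 'C'))], 11)
```

In this sketch the threshold is only reported; an actual top-k algorithm would combine the rising threshold with an upper bound such as TWU or the remaining utility to skip parts of the search-space.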

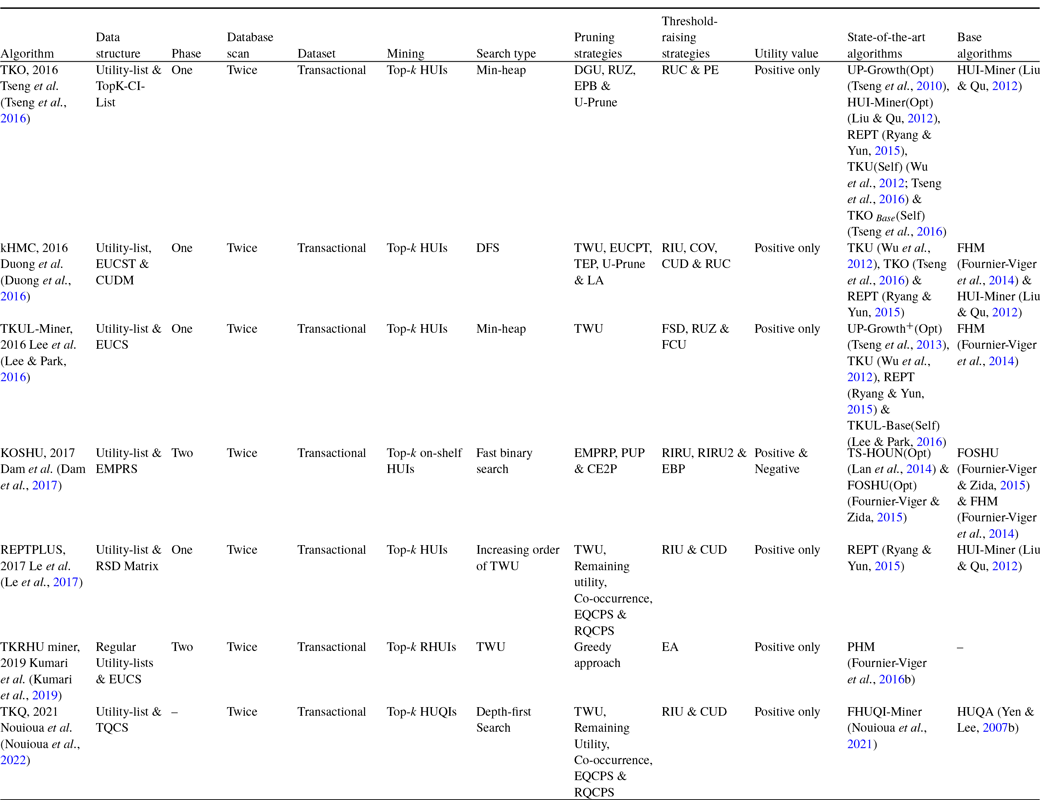

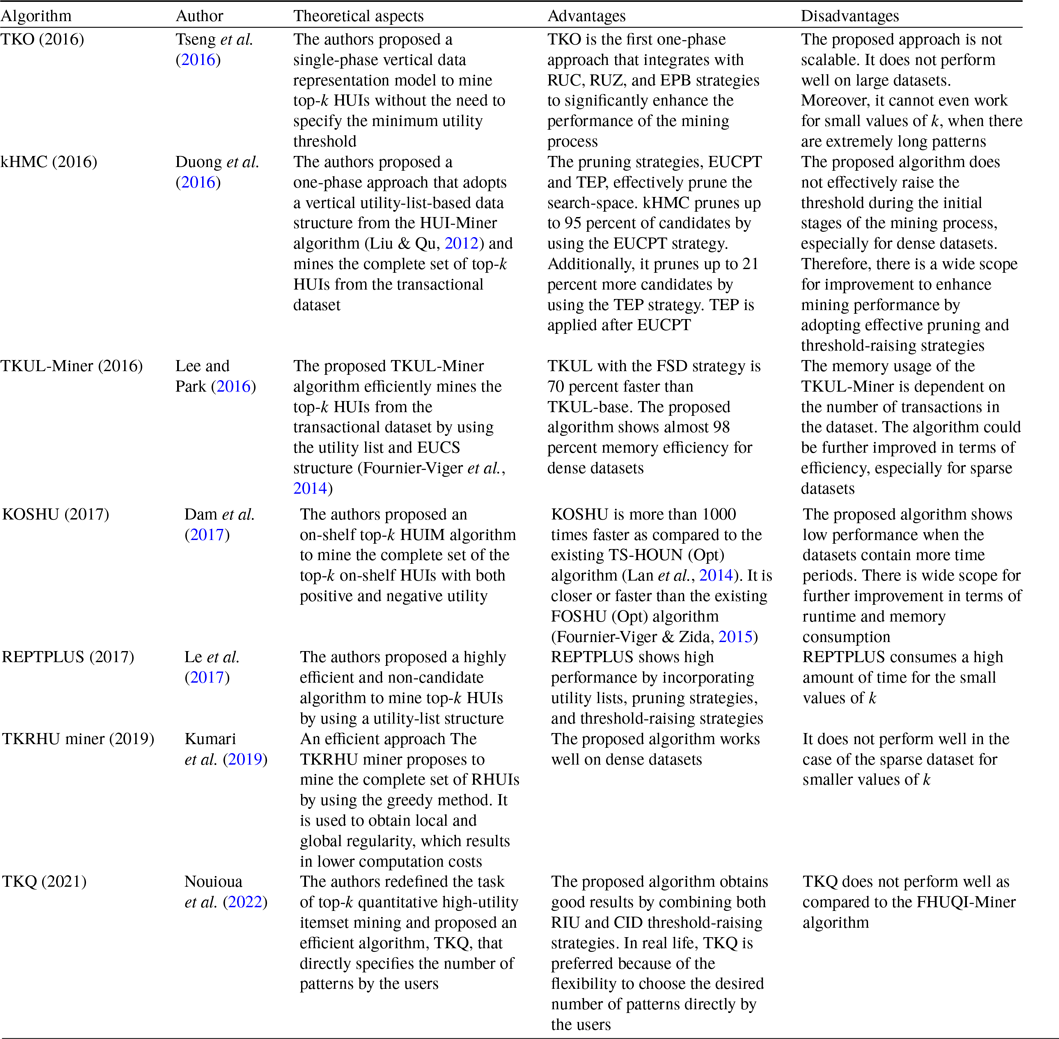

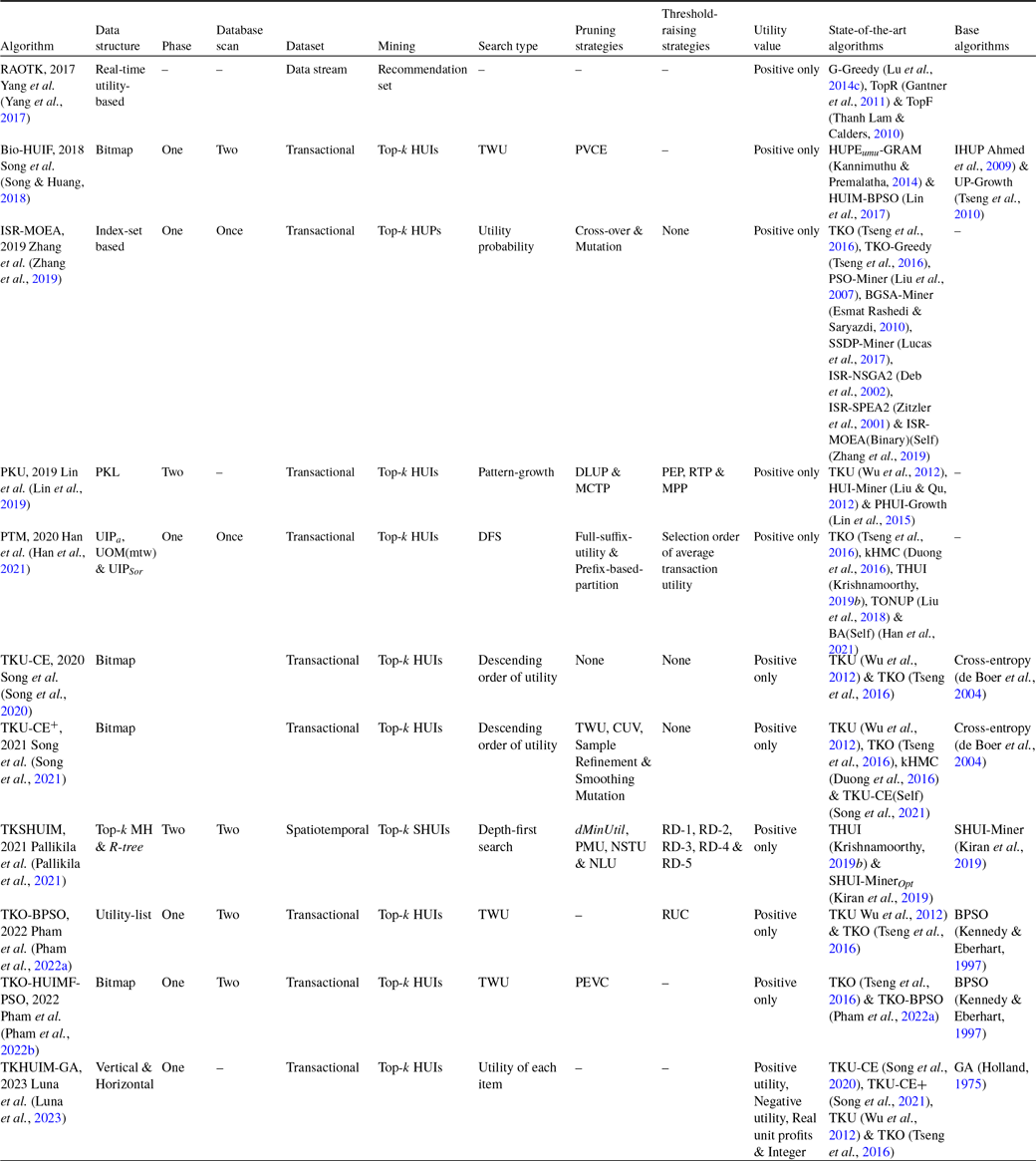

3. Top-k high utility itemset mining algorithms

HUIM algorithms (Tseng et al., 2010; Liu & Qu, 2012; Tseng et al., 2013) consider both users’ preferences and items’ importance (for example, weight, cost, profit, and quantity). However, utility does not follow the anti-monotonic property (Liu et al., 2005; Agrawal & Srikant, 1994). To deal with this issue, most HUIM approaches (Liu et al., 2005; Ahmed et al., 2009) adopt the TWU model (Liu et al., 2005), which follows the TWDCP (Transaction-Weighted Downward Closure Property). However, traditional HUIM approaches suffer from the following drawbacks: (1) they generate numerous unpromising candidate itemsets, (2) they scan the dataset multiple times, and (3) it is difficult to set an appropriate threshold in advance. To address these issues, we discuss in depth the top-k HUIM algorithms, which solve the above problems to a large extent. We divide the top-k HUIM algorithms into three categories, namely tree-based, utility-list-based, and others.

3.1 Tree-based top-k HUIM algorithms

Tree-based top-k HUIM algorithms are categorised into the following three broad areas of datasets: (1) static, (2) incremental and data stream, and (3) sequential.

3.1.1 Static dataset-based algorithms

Top-k HUIM algorithms (Wu et al., 2012; Ryang & Yun, 2015) are designed to mine top-k HUIs, where the user specifies the value of k to obtain the intended itemsets. Setting the value of k is easier than setting the $min\_util$ threshold. However, it incurs the following challenges: (1) The utility value of the itemsets is neither monotone nor anti-monotone. (2) A suitable data structure is needed to store the items. (3) It is difficult to raise the intermediate threshold from 0 to prune the search-space. In this sub-section, we provide an up-to-date discussion of the static dataset-based top-k HUIM algorithms.

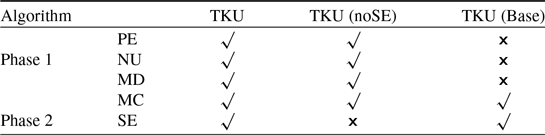

Wu et al. (2012) developed an effective approach, named TKU (Top-K Utility itemset mining), to discover the top-k HUIs from a transactional dataset without setting a minimum-utility threshold. First, the authors present a baseline algorithm, named TKU$_{Base}$, which extends UP-Growth (Tseng et al., 2010) and adopts the UP-Tree (Tseng et al., 2010) to keep the details of transactions and top-k HUIs. TKU$_{Base}$ consists of the following three parts: (1) it constructs the UP-Tree as in Tseng et al. (2010), which requires two dataset scans, (2) it generates the Potential top-k HUIs (PKHUIs) from the UP-Tree, and (3) it identifies the actual top-k HUIs from the set of PKHUIs. Four threshold-raising strategies are designed, namely raising the threshold by Minimum Itemset Utility (MIU) of Candidates (MC), Pre-Evaluation (PE), raising the threshold by Node Utilities (NU), and raising the threshold by MIU of Descendants (MD). The MC strategy checks that the estimated utility of a candidate is no less than the border threshold. The PE strategy raises the border threshold after the first scan by using the Pre-evaluation Matrix (PEM) structure, which keeps lower bounds on the utility of particular 2-itemsets. During the second dataset scan, the NU strategy is performed while constructing the UP-Tree. The MD strategy is performed after UP-Tree construction and before PKHUI generation. These strategies effectively reduce the search-space and the number of unpromising candidates in the first phase. However, when the number of PKHUIs to be checked is high, the second phase spends a lot of time scanning the dataset. To mitigate this effect, a fifth threshold-raising strategy, named Sorting candidates and raising threshold by the Exact utility of candidates (SE), is designed to enhance the mining performance by minimising the number of checked candidates. Three versions of TKU, namely TKU, TKU$_{noSE}$, and TKU$_{Base}$, are compared to measure the effectiveness of the developed strategies, as shown in Table 6.
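
The idea behind the SE strategy can be sketched as follows. This is a simplified illustration under stated assumptions, not the TKU implementation: the candidate list, its overestimated utilities, and the `EXACT` lookup table (which stands in for a dataset scan) are hypothetical. Candidates are visited in descending order of their estimates, the border is raised by exact utilities, and verification stops as soon as an estimate drops below the border.

```python
import heapq

# Hypothetical phase-one output: (itemset, overestimated utility) pairs.
CANDIDATES = [
    (("a",), 22), (("b",), 32), (("c",), 23),
    (("a", "b"), 22), (("a", "c"), 13), (("b", "c"), 23), (("a", "b", "c"), 13),
]
# Hypothetical exact utilities that a dataset scan would return.
EXACT = {
    ("a",): 15, ("b",): 12, ("c",): 5,
    ("a", "b"): 21, ("a", "c"): 11, ("b", "c"): 13, ("a", "b", "c"): 13,
}


def verify_candidates(candidates, exact_utility, k):
    """Phase-two check: sort by estimate, raise the border with exact utilities, stop early."""
    heap = []     # min-heap of the k best (exact utility, itemset) pairs
    border = 0
    checked = 0   # number of candidates whose exact utility was computed
    for itemset, estimate in sorted(candidates, key=lambda c: c[1], reverse=True):
        # Estimates only decrease from here on, so no later candidate can qualify.
        if len(heap) == k and estimate < border:
            break
        u = exact_utility(itemset)
        checked += 1
        if len(heap) < k:
            heapq.heappush(heap, (u, itemset))
        elif u > heap[0][0]:
            heapq.heapreplace(heap, (u, itemset))
        if len(heap) == k:
            border = heap[0][0]
    return sorted(heap, reverse=True), checked


if __name__ == "__main__":
    print(verify_candidates(CANDIDATES, EXACT.get, k=2))
```

In this toy run only five of the seven candidates are verified, because the two candidates with an estimate of 13 can no longer exceed the border of 15.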

Table 6. Comparison of three designed versions of the TKU by using different threshold-raising strategies (Wu et al., 2012)

The experimental results show that TKU performs better than TKU$_{Base}$ and is close to the optimal case of UP-Growth (Tseng et al., 2010) in execution time on the foodmart, mushroom and chain-store datasets. TKU is up to 100 times faster than TKU$_{Base}$, and its execution time stays within about a factor of two of the optimal case of UP-Growth. However, TKU relies on a two-phase model, which results in a large number of candidates and multiple dataset scans.

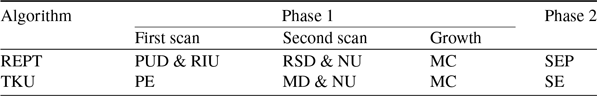

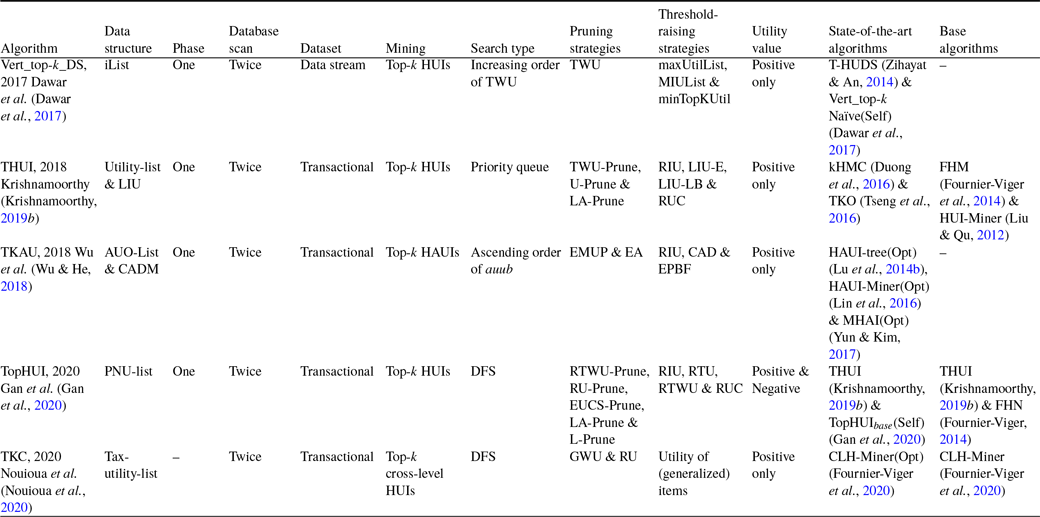

TKU (Wu et al., 2012) fails to process all the patterns having utilities that are less than the specified threshold. To resolve this issue, Ryang and Yun (2015) developed an effective approach, named REPT (Raising threshold with Exact and Pre-calculated utilities for Top-k high utility pattern mining), to find the top-k HUIs with highly reduced candidates from non-binary datasets. Three threshold-raising strategies, namely raising the threshold based on Pre-evaluation with Utility Descending order (PUD), raising the threshold by Real Item Utilities (RIU), and Raising the threshold with items in Support Descending order (RSD), are designed for the first phase; they use the exact and pre-evaluated utilities of itemsets of length 1 or 2. During the second phase, a fourth threshold-raising strategy, named Sorting candidates and raising the threshold by Exact and Pre-calculated utilities of candidates (SEP), uses exact and pre-calculated utilities to identify the actual top-k HUIs. The primary differences between REPT and TKU are as follows: (1) During the first dataset scan, TKU employs the PE strategy, based only on pre-evaluated itemsets, while REPT employs two strategies, PUD and RIU, based on both exact and pre-evaluated itemsets. (2) During the second dataset scan, TKU constructs the tree using the MD and NU strategies, while REPT raises the threshold again by using the RSD and NU strategies. (3) TKU uses the SE strategy, which employs only upper-bound utilities, while REPT uses the SEP strategy, which employs both exact and pre-calculated utilities of itemsets. Therefore, REPT performs significantly better than TKU. The differences between REPT and TKU in their threshold-raising strategies are shown in Table 7.

Table 7. Threshold-raising strategies used by REPT and TKU (Ryang & Yun, 2015)

It is observed that REPT performs better than the state-of-the-art TKU (Wu et al., 2012) and the optimal cases of UP-Growth (Tseng et al., 2010) and UP-Growth$^+$ (Tseng et al., 2013) in terms of the number of generated candidates, runtime, memory utilisation, and scalability on dense and sparse datasets. Moreover, REPT raises the threshold more effectively than the TKU algorithm (Wu et al., 2012) for different values of k and N (where N denotes the number of items). However, REPT incurs the same drawbacks as the TKU approach. Furthermore, it is tedious for users to specify a suitable value of N, which is used by the RSD strategy.
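
The RIU strategy used by REPT lends itself to a short sketch. The code below is a minimal illustration, assuming transactions are given as item-to-quantity maps and that all unit profits are positive; the function name `riu_threshold` and the toy data are hypothetical. It accumulates the exact utility of every single item in one pass and raises the threshold to the k-th largest of these values.

```python
import heapq
from collections import defaultdict

# Hypothetical toy data: unit profits and purchase quantities.
PROFIT = {"a": 5, "b": 2, "c": 1}
DB = [
    {"a": 1, "b": 2},
    {"b": 3, "c": 4},
    {"a": 2, "b": 1, "c": 1},
]


def riu_threshold(db, profit, k):
    """Return (raised threshold, real item utilities) after a single dataset scan."""
    riu = defaultdict(int)
    for tx in db:
        for item, qty in tx.items():
            riu[item] += profit[item] * qty
    if len(riu) < k:
        # Fewer than k items: the single items alone cannot justify a raise.
        return 0, dict(riu)
    # k single items have at least this utility, so the final border cannot be lower.
    return heapq.nlargest(k, riu.values())[-1], dict(riu)


if __name__ == "__main__":
    print(riu_threshold(DB, PROFIT, k=2))
```

The raise is safe because the k single items themselves already form k itemsets whose utility is at least the returned value.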

REPT and TKU follow the two-phase model; therefore, these algorithms generate excessive candidates and perform multiple dataset scans to find the exact utilities of itemsets and extract the desired top-k HUIs. To address these issues, Singh et al. (2019b) developed an effective method, named TKEH (Efficient algorithm for mining top-k high utility itemsets), to find the top-k HUIs from transactional datasets. TKEH adopts the Estimated Utility Co-occurrence Pruning Strategy with Threshold (EUCST), which extends the co-occurrence pruning of FHM (Fournier-Viger et al., 2014) and was later improved by kHMC (Duong et al., 2016). EUCST efficiently raises the minimum utility threshold to prune the search-space. The EUCS structure is implemented using a hash map instead of the triangular matrix used in Fournier-Viger et al. (2014). TKEH scans the dataset twice. In the first scan, it computes the TWU of each single item and sorts the items in increasing order of TWU; during the second scan, it builds the EUCS structure. However, scanning the dataset is very costly. To mitigate this cost, TKEH adopts the database projection and transaction merging techniques from EFIM (Zida et al., 2015). Both techniques effectively reduce the dataset size and thus the cost of the dataset scans. Transaction merging, however, has to find the identical transactions; to implement these techniques efficiently, TKEH adopts the method used in EFIM (Zida et al., 2015).
TKEH adopts the following three threshold-raising strategies: (1) The first strategy, Real Item Utilities (RIU), is adopted from REPT (Ryang & Yun, 2015) and raises the minimum utility to the $k{\rm th}$ largest value stored in the RIU list. (2) The second strategy, CUD, is adopted from kHMC (Duong et al., 2016) and uses the EUCS structure to keep the utilities of 2-itemsets. CUD is applied after the RIU strategy. (3) The third strategy, Coverage (COV), stores pairs of items in the Coverage List (COVL) structure. Two pruning strategies, namely Pruning using sub-tree (SUP) and Pruning using EUC (EUCP), are incorporated to efficiently reduce the search-space. SUP uses the notion of sub-tree utility as in Zida et al. (2015), while EUCP uses the EUCS structure to obtain the TWU value of each itemset.
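
A hash-map-based EUCS and the corresponding EUCP check can be sketched as below. This is a minimal illustration rather than the TKEH code: it assumes the EUCS maps each co-occurring item pair to the TWU of that pair, and the names `build_eucs` and `eucp_can_prune` are hypothetical. Only pairs that actually co-occur receive an entry, which is the practical advantage of a hash map over a full triangular matrix.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical toy data: unit profits and purchase quantities.
PROFIT = {"a": 5, "b": 2, "c": 1}
DB = [
    {"a": 1, "b": 2},
    {"b": 3, "c": 4},
    {"a": 2, "b": 1, "c": 1},
]


def build_eucs(db, profit):
    """Map each co-occurring item pair (x, y), x < y, to the TWU of {x, y}."""
    eucs = defaultdict(int)
    for tx in db:
        tu = sum(profit[i] * q for i, q in tx.items())  # transaction utility
        for x, y in combinations(sorted(tx), 2):
            eucs[(x, y)] += tu
    return dict(eucs)


def eucp_can_prune(eucs, x, y, min_util):
    """An extension containing both x and y is hopeless if TWU({x, y}) < min_util."""
    key = (x, y) if x < y else (y, x)
    return eucs.get(key, 0) < min_util  # a missing pair never co-occurs


if __name__ == "__main__":
    eucs = build_eucs(DB, PROFIT)
    print(eucs)
    print(eucp_can_prune(eucs, "c", "a", min_util=15))
```

Because TWU is anti-monotone, a pair whose TWU is below the threshold cannot appear in any qualifying itemset, so the whole extension can be skipped without building its utility information.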

To measure the effectiveness of the strategies used, five versions of TKEH are designed, namely TKEH, TKEH(CUD), TKEH(RIU), TKEH(sup), and TKEH(tm). Experiments show that TKEH, in its five versions, performs better than the state-of-the-art TKU (Wu et al., 2012), TKO (Tseng et al., 2016), and kHMC (Duong et al., 2016) in runtime, memory utilisation, and scalability on dense and sparse datasets. For k = 1000, TKEH is 2.66 times and 73.98 times faster than kHMC and TKO, respectively, whereas TKU does not work for k = 1000. However, TKEH shows poor performance on highly sparse datasets, for example, the retail dataset, because it cannot exploit the transaction merging and database projection techniques well on such datasets.

Liu et al. (2018) proposed a novel one-phase, efficient and scalable algorithm, named TONUP (TOp-N high Utility Pattern mining), to mine long top-n HUPs without generating candidates. TONUP extends d$^2$HUP (Liu et al., 2016) and is built upon the opportunistic pattern-growth approach. It grows top-n HUPs by enumerating patterns as prefix extensions using depth-first search, computes the utility of each enumerated pattern by using an improved memory-resident structure, shortlists the patterns with the n largest utilities among the enumerated patterns, and employs the $n{\rm th}$ largest utility as a border threshold to prune the search-space. The memory-resident data structure, improved Chain of Accurate Utility Lists (iCAUL), an extended version of CAUL (Liu et al., 2016), is proposed to store the utilities of enumerated patterns and enhance the mining performance. Materialized projection efficiently eliminates the unpromising items when enumerating the prefix extensions, but it incurs additional overhead to allocate memory and copy transactions. Therefore, whether to materialize the transaction set of a pattern is decided based on the pattern length and the percentage of promising items. On the other hand, pseudo-projection is independent of data characteristics, removing the burden on users. Materialized projection works well on dense datasets, while pseudo-projection works well on sparse datasets. The Automatic Materialization in projecting TS (AutoMaterial) strategy balances pseudo- and materialized projection of the transaction sets maintained in iCAUL by using a self-adjusting method. The Dynamically resorting items in DESCENDing order (DynaDescend) strategy initialises and dynamically adjusts the border threshold and dynamically re-sorts the items in decreasing order of local utility upper-bound. However, dynamically re-sorting the items incurs additional cost. An opportunistic strategy is proposed that maintains the shortlisted patterns, efficiently computes the utilities, quickly raises the border threshold, handles very long patterns, and captures every opportunity to enhance the efficiency and scalability of the full-strength TONUP. The full-strength TONUP uses a suffix tree to keep and shortlist the enumerated patterns. The threshold-raising strategy, named Exact utilities to raise a Border threshold (ExactBorder), rapidly raises the border threshold by the exact utility of each enumerated pattern using a min-heap.
The strategy named Suffix Tree to maintain patterns (SuffixTree) efficiently keeps the shortlisted patterns by using a suffix tree. The Opportunistic Shift to a two-round approach (OppoShift) strategy opportunistically shifts to a two-round method when the enumerated patterns are too long. The two-round TONUP is up to three times faster than the one-round TONUP.
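
The trade-off between materialized projection and pseudo-projection can be illustrated with a small sketch. It deliberately ignores utilities and does not model iCAUL; transactions are plain item lists already sorted in processing order, and all function names are hypothetical. Materialized projection copies the suffix of each supporting transaction, whereas pseudo-projection only records where each suffix starts.

```python
# Hypothetical toy dataset: each transaction is a list of items in processing order.
DB = [["a", "b", "c"], ["b", "c"], ["a", "c"]]


def materialized_projection(db, item):
    """Copy the part of each supporting transaction that follows `item`."""
    return [tx[tx.index(item) + 1:] for tx in db if item in tx]


def pseudo_projection(db, item):
    """Store (transaction id, offset) pointers instead of copying any items."""
    return [(tid, tx.index(item) + 1) for tid, tx in enumerate(db) if item in tx]


def read_pseudo(db, pointers):
    """Resolve the pointers against the original dataset when the suffixes are needed."""
    return [db[tid][offset:] for tid, offset in pointers]


if __name__ == "__main__":
    print(materialized_projection(DB, "a"))
    print(pseudo_projection(DB, "a"))
```

Both forms describe the same projected transaction set; the materialized copy costs memory and copying time but can drop unpromising items, while the pointer form is cheap to build but keeps every access tied to the original transactions.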

TONUP is 1 to 3 orders of magnitude more efficient than the benchmark algorithms, TKU (Wu et al., 2012) and TKO (Tseng et al., 2016), and up to 2 orders of magnitude faster than the optimal case of the state-of-the-art methods, UP-Growth$^+$ (Tseng et al., 2013), HUI-Miner (Liu & Qu, 2012) and EFIM (Zida et al., 2015). However, TONUP has several disadvantages. It works only for static datasets; an incremental algorithm would be needed to discover top-n HUPs from dynamic datasets. It also considers only small and medium-sized datasets that can be kept entirely in memory. For massive data that cannot fit in memory, it fails to discover the top-n HUPs directly. One possible solution is to first load portions of the dataset that respect the memory constraints, then compute the local top-n HUPs of each portion, and finally combine the local results. However, this process is cumbersome, generates lots of unpromising candidates, and degrades mining performance.

TopHUI (Gan et al., 2020) is the first work that can mine top-k HUIs with or without negative utility; however, it incurs high memory costs and a long runtime. To resolve these problems, Chen et al. (2021) proposed an approach named TOPIC (TOP-k high utility Itemset disCovering) to mine top-k HUIs with positive and negative utility from large datasets. An array-based utility-counting method is adopted to efficiently compute the utility bounds and minimise the generation of unpromising candidates. Two novel upper bounds, namely Redefined Local Utility (RLU) and Redefined Sub-tree Utility (RSU), are computed with the utility-array (UA) to quickly reduce the search-space. TOPIC is an extension of EFIM (Zida et al., 2015) and traverses the search-space depth-first. When there are only positive utility values, the items are arranged in increasing order of TWU. When negative utility values are present, the items are arranged in ascending order of RTWU, and the negative items always follow the positive items in the sorted order. TOPIC incorporates two efficient dataset scanning techniques, namely transaction merging and dataset projection, to minimise the dataset scan cost and memory usage. Two pruning strategies, namely the TWU-based pruning strategy (Liu et al., 2005) and the RTWU-based pruning strategy (Fournier-Viger, 2014), are adopted to effectively reduce the search-space and discover the exact top-k HUIs. A priority queue and the threshold-raising strategy RIU (Ryang & Yun, 2015) are employed to store the itemsets and to automatically raise the minimum-utility threshold, respectively.
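
The item ordering described above can be sketched in a few lines. The sketch rests on an assumption that should be checked against the original papers: RTWU is taken to be the redefined TWU that sums only the positive item utilities of each supporting transaction, as is common in the negative-utility literature. The function name `rtwu_order` and the toy profits are hypothetical.

```python
# Hypothetical toy data: item "b" has a negative unit profit.
PROFIT = {"a": 5, "b": -2, "c": 1}
DB = [
    {"a": 1, "b": 2},
    {"b": 3, "c": 4},
    {"a": 2, "b": 1, "c": 1},
]


def rtwu_order(db, profit):
    """Return (RTWU per item, processing order) with negative items placed last."""
    rtwu = {}
    for tx in db:
        # Assumed redefinition: only positive utilities count towards the bound.
        rtu = sum(profit[i] * q for i, q in tx.items() if profit[i] > 0)
        for item in tx:
            rtwu[item] = rtwu.get(item, 0) + rtu
    # Positive items first, each group in ascending RTWU; the name breaks ties.
    order = sorted(rtwu, key=lambda i: (profit[i] < 0, rtwu[i], i))
    return rtwu, order


if __name__ == "__main__":
    print(rtwu_order(DB, PROFIT))
```

Ignoring negative utilities keeps the bound an overestimate of the true utility, and placing negative items last means that extending a pattern with them can only lower its utility.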

Four versions of the proposed algorithm, namely TOPIC$_{merge}$, TOPIC$_{sub-tree}$, TOPIC, and TOPIC$_{none}$, are designed to evaluate the effectiveness of the adopted strategies. Experiments show that TOPIC outperforms the other versions because it does not keep unnecessary details in memory. TOPIC performs better than the state-of-the-art TopHUI (Gan et al., 2020) in runtime, memory cost, and scalability on the benchmark datasets, and it performs particularly well on dense and moderately dense datasets. TOPIC adopts an efficient data structure that avoids storing a large amount of information in memory, which results in high performance. Moreover, the variants TOPIC$_{merge}$, TOPIC$_{sub-tree}$, and TOPIC$_{none}$ are also significantly better than TopHUI because they adopt the tight upper bounds RSU and RLU. TOPIC is up to 3, 20, 4, and 2 times faster than TopHUI (Gan et al., 2020) on the Retail, Chess, Mushroom, and T10I4D100K datasets, respectively. Furthermore, TOPIC consumes 8 times less memory than TopHUI to complete the mining process. However, more efficient threshold auto-raising strategies and compressed data structures could still be designed to improve mining performance further.

Some top-k HUIM algorithms in the literature (Gan et al., 2020; Chen et al., 2021) deal with negative itemsets. However, they suffer from long runtime, high memory consumption, weak pattern pruning, and poor scalability. To deal with these issues, Ashraf et al. (2022) proposed an efficient, generalised and adaptive algorithm, named TKN (Efficiently mining Top-K HUIs with positive or Negative profits), to mine top-k HUIs from datasets with positive and negative profits. It uses the pattern-growth method with a depth-first search to eliminate the unpromising candidates. It uses a horizontal dataset representation, as in EFIM (Zida et al., 2015), and merges the duplicate transactions in the projected datasets, which significantly improves the mining performance. These transaction projection and merging techniques reduce the cost of visiting the dataset, and thereby the execution time and memory usage. A data structure, named LIU, is adopted from THUI (Wan et al., 2021); it keeps the utilities of itemsets in an ordered and compact form and is used to efficiently raise the minimum utility threshold while holding both positive and negative utilities. An upper bound, PTWU, is introduced to reduce the number of possible HUIs. Another upper bound, Remaining Utility (REU), is adopted from HUI-Miner (Liu & Qu, 2012) to narrow the search-space of the mining process. Two efficient pruning properties, namely Positive sub-tree utility (PSU) and Positive local utility (PLU), are used to prune the search-space during the depth-first search.
PSU and PLU are the generalised versions of the sub-tree utility and local utility proposed in EFIM (Zida et al., 2015). Two novel pruning strategies, namely Early pruning (EP) and Early abandoning (EA), are designed. The EP strategy reduces the computational cost of constructing the projected datasets of the prefix itemsets. The EA strategy reduces the number of evaluations needed to estimate the upper bounds PSU and PLU of the candidates by avoiding unnecessary dataset scans, thereby increasing mining speed. An array-based approach is utilised to compute the utility and upper bounds in linear time, which makes the utility estimation of itemsets efficient. Three threshold-raising strategies, namely Positive real item utility (PRIU), Positive LIU-Exact (PLIU_E), and Positive LIU-Lower Bound (PLIU_LB), effectively raise the minimum utility threshold. The PRIU strategy is the generalised version of the RIU strategy, adopted from the REPT algorithm (Ryang & Yun, 2015), and raises the minimum utility threshold from zero during the first scan. The PLIU_E strategy is based on the LIU-E strategy and raises the minimum utility threshold by using the utilities stored in the LIU structure and the priority queue PIQU_LIU of size k. The PLIU_LB strategy is based on LIU-LB (Krishnamoorthy, 2019b) and computes the lower-bound utility for each sequence stored in the LIU structure.
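
The combination of dataset projection and transaction merging can be sketched as follows. This is a simplified illustration in the spirit of EFIM-style merging, not the TKN implementation: transactions are assumed to map items directly to their utilities, the utility of the prefix itself is not tracked, and the function name `project_and_merge` is hypothetical.

```python
from collections import defaultdict

# Hypothetical toy dataset: each transaction maps an item to its utility.
DB = [
    {"a": 5, "b": 4, "c": 1},
    {"a": 10, "b": 2, "c": 3},
    {"a": 5, "c": 2},
    {"b": 6, "c": 4},
]


def project_and_merge(db, item, order):
    """Project the dataset on `item`, then merge projected transactions with equal items."""
    rank = {it: r for r, it in enumerate(order)}
    merged = defaultdict(lambda: defaultdict(int))
    for tx in db:
        if item not in tx:
            continue
        # Keep only the items that follow `item` in the processing order.
        suffix = {i: u for i, u in tx.items() if rank[i] > rank[item]}
        if not suffix:
            continue
        key = tuple(sorted(suffix, key=rank.get))
        for i, u in suffix.items():
            merged[key][i] += u  # identical item sets collapse into one transaction
    return {key: dict(utils) for key, utils in merged.items()}


if __name__ == "__main__":
    print(project_and_merge(DB, "a", order=["a", "b", "c"]))
```

In this toy run the three transactions that contain "a" shrink to two merged transactions, which is why the technique pays off on dense datasets where many projected transactions coincide.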

Three variants of the proposed algorithm, namely TKN(PRIU), TKN(PSU), and TKN(TM), are developed to measure the effectiveness of TKN. TKN(PRIU), TKN(PSU), and TKN(TM) use all the techniques except the LIUS-based strategies, the PSU pruning strategy, and the transaction merging technique, respectively. It is observed that TKN is faster than TKN(PRIU), TKN(PSU), and TKN(TM). The performance of TKN is compared against the negative top-k HUIM algorithm THN (Sun et al., 2021), the top-k HUIM algorithms THUI (Wan et al., 2021) and TKEH (Singh et al., 2019b), and the optimal cases of the negative-utility-based HUIM algorithms FHN (Fournier-Viger, 2014) and GHUM (Krishnamoorthy, 2018), in terms of execution time and memory consumption on dense and sparse datasets. The experimental results show that TKN is one order of magnitude more efficient than THN and about four orders of magnitude more efficient than THUI and TKEH on highly dense and large datasets. TKN obtains performance very close to the optimal cases of FHN and GHUM. It is observed that, for different values of k, TKN prunes 82 percent at the early stages, which saves a significant amount of time. TKN reduces the number of evaluations by 28 percent by computing the upper bound using the EA strategy.

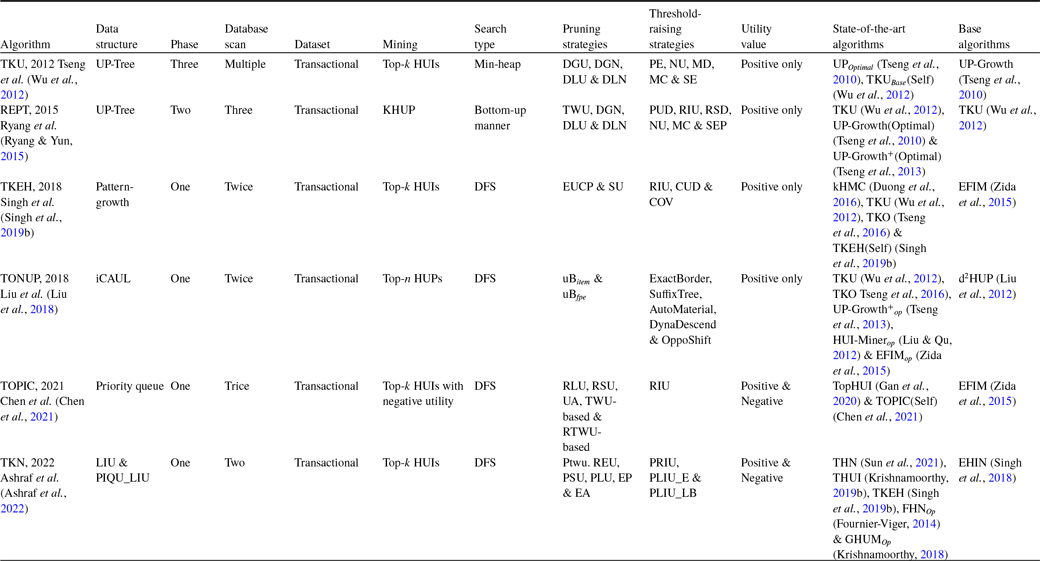

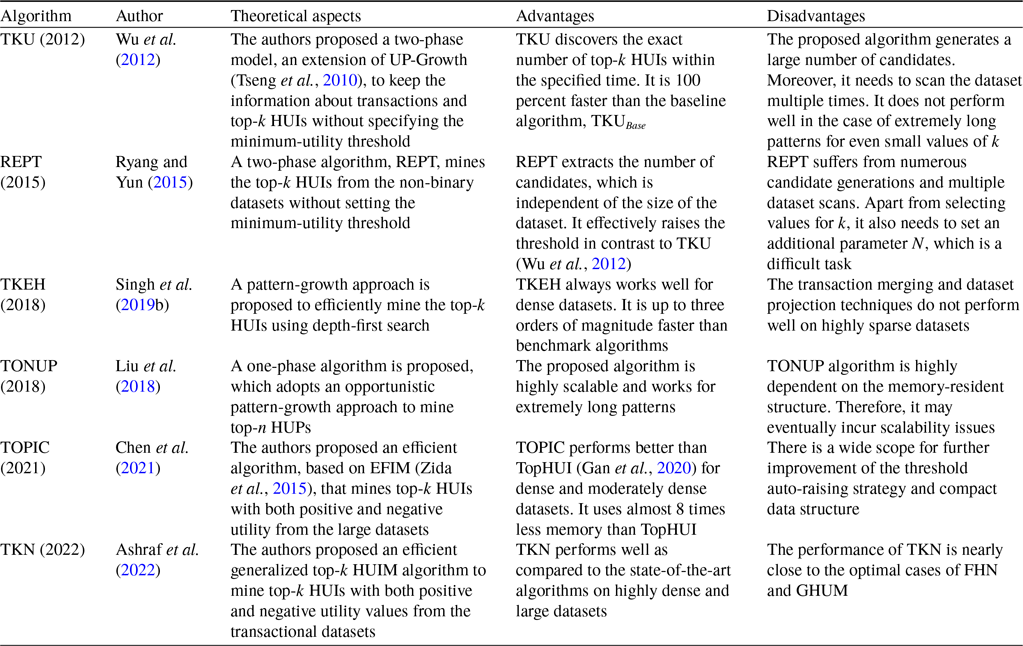

Table 8 describes the overview of the tree-based top-k HUIM algorithms for static datasets. Table 9 highlights the pros and cons of all the available state-of-the-art tree-based top-k HUIM algorithms for static datasets.

Table 8. An overview of the tree-based top-k HUIM algorithms for the static datasets

Table 9. Advantages and disadvantages of the tree-based top-k HUIM algorithms for the static datasets

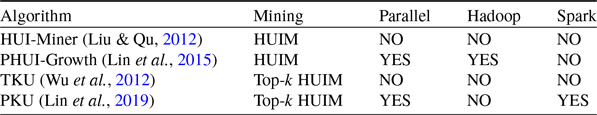

3.1.2 Incremental and data stream based algorithms

Several HUIM algorithms have been proposed for data stream datasets (Ahmed et al., 2012). Static dataset-based algorithms cannot be applied to data streams for the following reasons: (1) data arrive rapidly, (2) the data size is unknown or unbounded, and (3) previous transactions are no longer accessible. To address these challenges, the HUIM method for data streams (Ahmed et al., 2012) was proposed to efficiently discover the HUIs. However, it takes a lot of time to process the data from the data stream, and the user is required to set a minimum utility threshold. The top-k HUIM algorithm (Wu et al., 2012) mines top-k HUIs without specifying the minimum-utility threshold, but it works only for static data and requires high runtime and memory utilisation. In this sub-section, we provide an up-to-date discussion of the incremental and data stream dataset-based top-k HUIM algorithms.

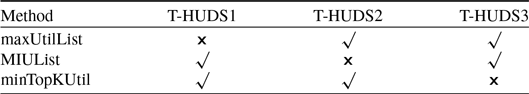

The conventional top-k HUIM algorithms (Wu et al., Reference Wu, Shie, Tseng and Yu2012; Ryang & Yun, Reference Ryang and Yun2015; Duong et al., Reference Duong, Liao, Fournier-Viger and Dam2016; Tseng et al., Reference Tseng, Wu, Fournier-Viger and Yu2016) are not suitable for data streams because the number of items grows exponentially, so they fail to identify the top-k HUIs. To resolve this problem, Zihayat and An (Reference Zihayat and An2014) proposed a pattern-growth approach, named T-HUDS (Top-k High Utility itemset mining over Data Stream), the first work of its kind, to mine the top-k high utility patterns over a sliding window of a data stream. The authors proposed four strategies to automatically initialise and dynamically adjust the minimum-utility threshold. The first three strategies are applied during the first phase, while the fourth is applied during the second phase of the mining process. The first strategy uses a novel utility estimation model, named prefix utility, which gives a closer estimate of the true utility than TWU. The second strategy initialises the threshold using the Maximum Utility List (maxUtilList), which is computed during the construction and updating of the HUDS-tree. The third strategy dynamically adjusts the threshold using the Minimum Itemset Utility List (MIUList), which stores the top-k Minimum Itemset Utility (MIU) values of the current promising HUIs. The fourth strategy adjusts the threshold with the minimum top-k utility (minTopKUtil) of the previous window. T-HUDS uses a compressed tree structure, named High Utility Data Stream Tree (HUDS-tree), similar to the FP-tree (Han et al., Reference Han, Pei and Yin2000), to keep the details of the transactions in the sliding window. The HUDS-tree is built in a single dataset scan.
In phase one, HUDS-tree is mined to generate the Potential top-k HUIs (PTKHUIs), while in phase two, the exact utility of PTKHUIs is calculated to discover the top-k HUIs. To measure the effectiveness of the proposed strategies, two versions of T-HUDS are developed, namely T-HUDS$_I$ and T-HUDS. The former utilises only the first three strategies during the first phase. On the other hand, T-HUDS uses all the strategies during the first and second phases of the mining process. To measure the effectiveness of threshold-raising strategies in phase one, three versions of the proposed algorithms, namely T-HUDS$_1$, T-HUDS$_2$, and T-HUDS$_3$, are evaluated. It is observed that T-HUDS$_3$ performs better than both T-HUDS$_1$ and T-HUDS$_2$, while T-HUDS$_2$ performs better than T-HUDS$_1$. The comparison of these versions, T-HUDS$_1$, T-HUDS$_2$, and T-HUDS$_3$, is shown in Table 10.

Table 10. Comparison of three designed versions of the T-HUDS algorithm using different threshold-raising strategies (Zihayat & An, Reference Zihayat and An2014)

T-HUDS outperforms the state-of-the-art algorithm, HUPMS$_T$ (Ahmed et al., Reference Ahmed, Tanbeer, Jeong and Choi2012) (Basic version of TKU Wu et al., Reference Wu, Shie, Tseng and Yu2012), concerning the number of obtained candidates, threshold, first and second phase time, total runtime, memory usage, window size, and scalability on dense and sparse datasets. However, the sliding window of the proposed algorithm cannot be entirely kept in memory, which may lead to more database scans. Moreover, the HUDS-tree is a lossy compression of the transactions in the sliding window, which may make it difficult to obtain the exact utility of the PTKHUIs.
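The idea shared by the threshold-raising strategies above can be illustrated with a minimal sketch: keep the k largest utilities observed so far and use the smallest of them as the current minimum-utility threshold. This is not the T-HUDS implementation. The class name `TopKThreshold` and its methods are hypothetical, and the sketch assumes that every offered value is the exact utility (or a lower bound on it) of a distinct itemset, since the raised threshold is safe only under that assumption.

```python
import heapq


class TopKThreshold:
    """Hypothetical helper: tracks the k largest utilities seen so far."""

    def __init__(self, k):
        self.k = k
        self._heap = []  # min-heap holding at most k utilities

    def offer(self, utility):
        """Record the utility (or a lower bound) of one distinct itemset."""
        if len(self._heap) < self.k:
            heapq.heappush(self._heap, utility)
        elif utility > self._heap[0]:
            # The new value displaces the current k-th largest one.
            heapq.heapreplace(self._heap, utility)

    @property
    def threshold(self):
        # Until k utilities are known, no candidate can be pruned safely,
        # so the threshold stays at 0.
        return self._heap[0] if len(self._heap) == self.k else 0


tracker = TopKThreshold(k=3)
for u in [5, 12, 7, 3, 20]:
    tracker.offer(u)
print(tracker.threshold)  # 7: the third-largest utility among those offered
```

Any candidate whose upper bound falls below `tracker.threshold` can be discarded, because at least k itemsets with a higher utility are already known.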

The main issues of top-k HUIM over data streams, based on the sliding window concept, are as follows: (1) How can the window be maintained and the utility information of itemsets be kept when old data must be deleted as new data arrives? (2) How can the top-k HUIs be discovered for the sliding window? To solve these problems, Lu et al. (Reference Lu, Liu and Wang2014a) developed an effective sliding window-based method, named TOPK-SW (Top-k high utility itemset mining based on Sliding-Window), to mine the top-k HUIs from a data stream without candidate generation. It performs two main tasks: (1) it maintains the data in the current window, and (2) it mines the top-k high utility itemsets in the window. A novel tree structure, named High Utility Itemsets Tree (HUI-Tree), is proposed to maintain the items' utility information in lexicographical order and to store each batch of data in the current window. It does not require additional dataset scans. Experiments show that TOPK-SW outperforms the optimal case of the state-of-the-art UP-Growth (Tseng et al., Reference Tseng, Wu, Shie and Yu2010), denoted UP(Optimal), in runtime and number of generated patterns on dense and sparse datasets. TOPK-SW also significantly outperforms UP(Optimal) in terms of time and space efficiency on dense and long transactional datasets, where its time efficiency improves by up to one order of magnitude. Moreover, the proposed algorithm remains stable as the value of k varies.
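The two tasks named above, maintaining the current window and mining the top-k itemsets within it, can be sketched as follows. This is a brute-force illustration under simplifying assumptions and not the HUI-Tree of TOPK-SW: the names `SlidingWindow` and `top_k_itemsets` are hypothetical, each transaction is assumed to be a mapping from item to its utility in that transaction, and itemsets are enumerated only up to a small length.

```python
import heapq
from collections import Counter, deque
from itertools import combinations


class SlidingWindow:
    """Hypothetical window that keeps only the most recent batches."""

    def __init__(self, max_batches):
        # A deque with maxlen drops the oldest batch when a new one arrives.
        self.batches = deque(maxlen=max_batches)

    def add_batch(self, transactions):
        self.batches.append(list(transactions))

    def transactions(self):
        for batch in self.batches:
            yield from batch


def top_k_itemsets(window, k, max_len=2):
    """Brute-force top-k itemsets by total utility in the current window."""
    utilities = Counter()
    for tx in window.transactions():
        for size in range(1, max_len + 1):
            for itemset in combinations(sorted(tx), size):
                utilities[itemset] += sum(tx[item] for item in itemset)
    return heapq.nlargest(k, utilities.items(), key=lambda kv: kv[1])


window = SlidingWindow(max_batches=2)
window.add_batch([{"a": 5, "b": 2}])
window.add_batch([{"a": 1, "c": 4}])
window.add_batch([{"b": 3, "c": 6}])  # the first batch is evicted here
print(top_k_itemsets(window, k=2))
```

After the third batch arrives, only the last two batches contribute, so the result reflects the current window rather than the whole stream.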

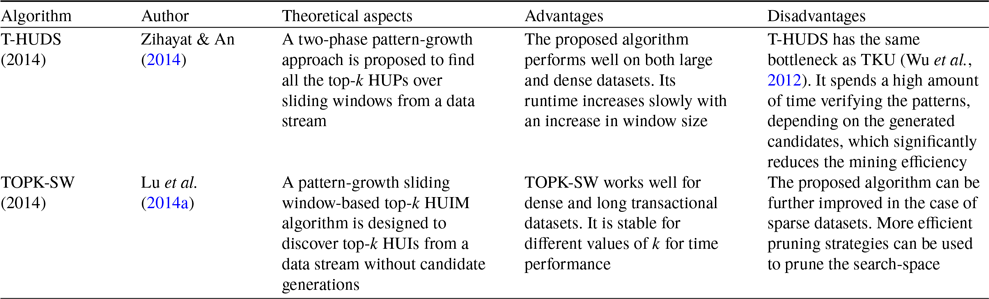

Table 11 describes the comparative overview of the tree-based top-k HUIM algorithms for increment and data stream datasets. Table 12 highlights the pros and cons of all the currently available tree-based top-k HUIM algorithms for increment and data stream datasets.

Table 11. An overview of the tree-based top-k HUIM algorithms for increment and data stream datasets

Table 12. Advantages and disadvantages of the tree-based top-k HUIM algorithms for increment and data stream datasets

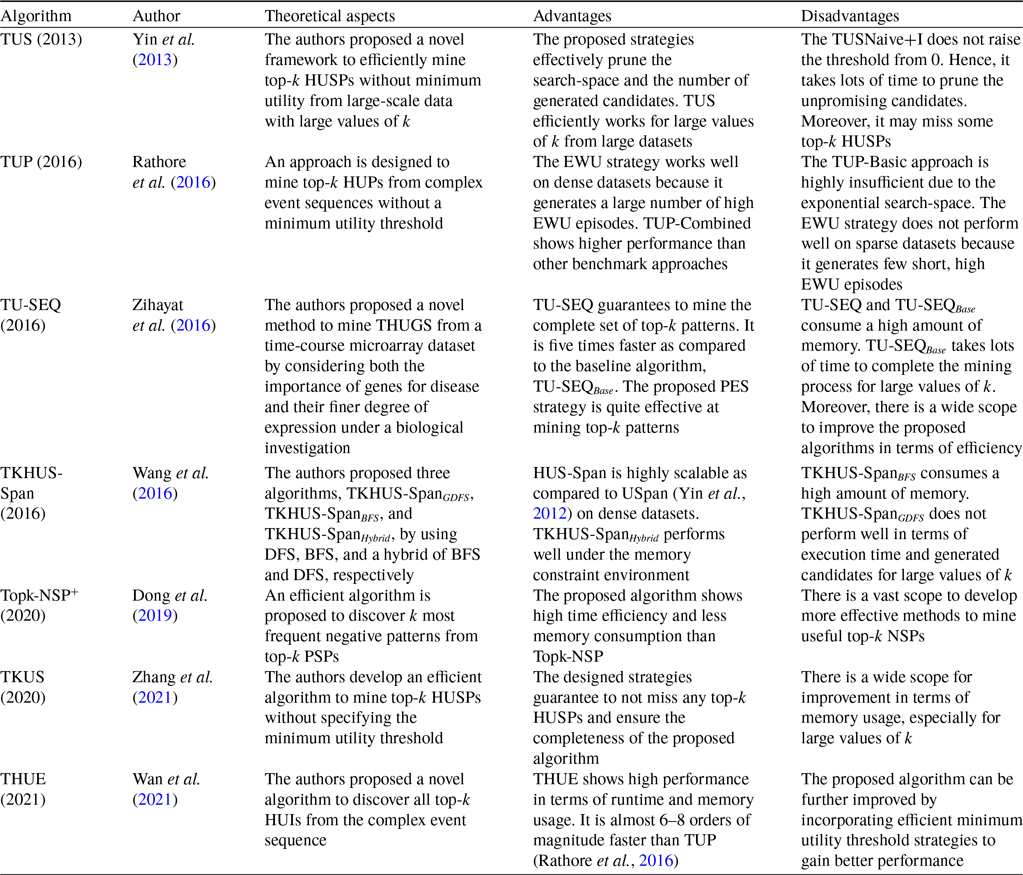

3.1.3 Sequential dataset-based algorithms

Traditional HUIM algorithms (Liu et al., Reference Liu and Liao2005; Liu & Qu, Reference Liu and Qu2012) cannot be applied to sequential datasets, which consist of itemsets with sequence-related information. To address this issue, the high-utility sequential pattern mining (HUSPM) algorithm (Yin et al., Reference Yin, Zheng and Cao2012) is proposed to find the high-utility sequential patterns (HUSPs) in sequential datasets. However, it incurs the following challenges: (1) It is hard to set an appropriate threshold because users are unaware of the characteristics of the datasets. (2) It takes a long time to extract the exact number of intended patterns. To address these issues, top-k HUSPM algorithms are proposed, inspired by top-k SPM (Tzvetkov et al., Reference Tzvetkov, Yan and Han2003) and top-k HUIM (Wu et al., Reference Wu, Shie, Tseng and Yu2012), to mine top-k HUSPs. However, the following challenges remain: (1) pruning the search-space is computationally infeasible; (2) the search-space is subject to combinatorial explosion; and (3) all top-k HUSPs must be identified. In this sub-section, we provide an up-to-date discussion of the sequential dataset-based top-k HUIM algorithms.

The mining of top-k high utility sequential itemsets is a more arduous task than that of top-k HUIs for several reasons: (1) The DCP does not hold for top-k high-utility sequences; hence, top-k frequent sequence pattern mining (Tzvetkov et al., Reference Tzvetkov, Yan and Han2003) cannot be straightforwardly applied to high-utility sequences. (2) Computational complexity and combinatorial explosion are very high because of the sequencing among itemsets. (3) Raising the minimum threshold is difficult without any loss of the top-k high utility sequential itemsets. To address these issues, Yin et al. (Reference Yin, Zheng, Cao, Song and Wei2013) proposed a method, named TUS (Top-k high Utility Sequence mining), to discover the top-k utility sequences from sequential datasets without a minimum-utility threshold. The authors developed a baseline method, named TUSNaive, to mine the top-k sequential itemsets with high utilities. It adopts the TUSList structure to keep the top-k HUSPs on-the-fly. However, TUSNaive traverses an excessive number of invalid sequences because the minimum threshold starts at 0, which reduces the mining performance. To address this issue, three effective strategies are designed. Firstly, the pre-insertion strategy raises the minimum threshold to stop unwanted candidate generation. Secondly, the sorting concatenation order strategy effectively identifies the promising high-utility sequences. Lastly, a sequence-reduced utilisation (SRU) strategy maintains a tighter sequence boundary by refreshing the blacklist until all the items in the whitelist satisfy the minimum-utility threshold.

Three versions of the baseline algorithm, TUSNaive, are designed, namely TUSNaive+ (TUSNaive with SRU), TUSNaive+I (TUSNaive with SRU and pre-insertion), and TUSNaive+S (TUSNaive with SRU and sorting). It is observed that TUS, TUSNaive+I, and TUSNaive+S perform better than TUSNaive+. TUSNaive+I performs better than TUSNaive+S for small values of k, while TUSNaive+S performs better than TUSNaive+I when k exceeds a certain number. Hence, the proposed optimisation measures (SRU, sorting, and pre-insertion) significantly improve the extraction of the top-k patterns. The experiments, performed on two synthetic datasets and two real datasets, show that TUS runs 10 to 1000 times faster than the baseline algorithm, TUSNaive.
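To make the effect of sequencing on utility concrete, the sketch below computes the utility of a sequential pattern in a single utility sequence. It rests on an assumption that the text above does not restate: following the common convention in the HUSPM literature, a pattern may occur several times in one sequence, and its utility is taken as the maximum over all occurrences. The function name `sequence_utility` and the data layout (each element of the sequence maps an item to its utility) are hypothetical, and utilities are assumed to be non-negative.

```python
def sequence_utility(pattern, qsequence):
    """Maximum utility of `pattern` over all its occurrences in `qsequence`.

    pattern:   list of item tuples, e.g. [("a",), ("c",)]
    qsequence: list of dicts mapping item -> utility in that element
    Returns None when the pattern does not occur in the sequence.
    """
    neg_inf = float("-inf")
    # best[j] = best utility of matching the first j pattern elements so far
    best = [0] + [neg_inf] * len(pattern)
    for element in qsequence:
        # Iterate j downwards so one sequence element matches at most one
        # pattern element within a single occurrence.
        for j in range(len(pattern), 0, -1):
            items = pattern[j - 1]
            if best[j - 1] != neg_inf and all(i in element for i in items):
                gain = sum(element[i] for i in items)
                best[j] = max(best[j], best[j - 1] + gain)
    return None if best[-1] == neg_inf else best[-1]


qseq = [{"a": 2, "b": 6}, {"a": 8}, {"b": 1, "c": 4}, {"c": 10}]
print(sequence_utility([("a",), ("c",)], qseq))  # 18, from a=8 then c=10
```

The pattern occurs four times in this one sequence, with utilities 6, 12, 12, and 18, which hints at why both pruning and threshold raising are harder for sequences than for itemsets.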

Rathore et al. (Reference Rathore, Dawar, Goyal, Patel, Deshpande, Ravindran and Ranu2016) proposed an effective method, named TUP (Top-k Utility ePisode mining), to mine the top-k high-utility episodes (HUEs) from a complex event sequence. The authors developed a basic method, named TUP-Basic, that dynamically maintains a sorted list of size k containing the top-k HUEs. TUP-Basic calculates the minimum occurrence, utility, and Episode-Weighted Utilization (EWU) of 1-length episodes by scanning the dataset only once. However, it generates numerous candidates because the threshold starts at 0, and exploring the exponential search-space is computationally expensive. To raise the minimum utility threshold, an effective pre-insertion strategy is proposed that pre-inserts the concurrent event sets. It raises the minimum utility threshold from 0 to 13 before the start of the mining process. To further enhance the efficiency of the mining process, the EWU strategy is proposed to deal effectively with frequency and utility. It explores the episodes with a high EWU value first. The TUP-Combined strategy combines the features of both the pre-insertion and EWU strategies. The effectiveness of pre-insertion, TUP-EWU, and TUP-Combined is measured in terms of runtime and memory usage. It is observed that the pre-insertion strategy performs better for the total runtime and number of generated candidate episodes on sparse datasets. On the other hand, TUP-EWU and TUP-Combined perform better for total runtime and memory usage on dense datasets. However, the performance of TUP-EWU is worse on sparse datasets because of a few short high-utility episodes.

Sequential pattern mining is widely used to extract gene regulation sequential patterns. However, it incurs the following challenges: (1) It depends on the frequency or support. (2) It is a tedious task to determine the threshold value. One possible solution is to design top-k HUP algorithms that work efficiently on gene regulation data. But the major issues are: (1) The threshold is unknown in advance. (2) The threshold must be raised while still retaining the complete set of top-k patterns. To resolve these problems, Zihayat et al. (Reference Zihayat, Davoudi and An2016) proposed a novel algorithm, named TU-SEQ (Top-k Utility-based gene regulation SEQuential pattern discovery), the first work of its kind, that considers a utility model based on gene importance and degree of expression to mine the top-k high utility gene regulation sequential patterns (top-k HUGSs). TU-SEQ needs only a user-specified number k and a disease as an objective to find the k highest-utility gene regulation sequences, and it guarantees the complete set of top-k patterns. The authors proposed a baseline algorithm, named TU-SEQ

![]() $_{Base}$