No CrossRef data available.

Published online by Cambridge University Press: 19 December 2024

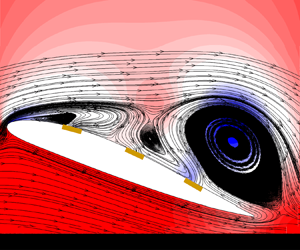

Active flow control based on reinforcement learning has received much attention in recent years. Indeed, the requirement for substantial data for trial-and-error in reinforcement learning policies has posed a significant impediment to their practical application, which also serves as a limiting factor in the training of cross-case agents. This study proposes an in-context active flow control policy learning framework grounded in reinforcement learning data. A transformer-based policy improvement operator is set up to model the process of reinforcement learning as a causal sequence and autoregressively give actions with sufficiently long context on new unseen cases. In flow separation problems, this framework demonstrates the capability to successfully learn and apply efficient flow control strategies across various airfoil configurations. Compared with general reinforcement learning, this learning mode without the need for updating the network parameter has even higher efficiency. This study presents an effective novel technique in using a single transformer model to address the flow separation active flow control problem on different airfoils. Additionally, the study provides an innovative demonstration of incorporating reinforcement-learning-based flow control with aerodynamic shape optimization, leading to collective enhancement in performance. This method efficiently lessens the training burden of the new flow control policy during shape optimization, and opens up a promising avenue for interdisciplinary intelligent co-design of future vehicles.