Introduction

The definition of citizen science is very broad and can encompass many differing degrees of public involvement (Gibb 2019). In recent years, the concept has often been applied to ‘crowd science’, where large groups of volunteers participate in scientific research (Heigl et al. 2019; Haklay et al. 2021). Although the term ‘citizen scientist’ has faced resistance in archaeology for potentially excluding specific groups, such as indigenous communities, it remains the most widely accepted term (Liebenberg et al. 2021). Archaeology has used citizen science for decades, pre-dating its current popularity; volunteer participation occurs at multiple levels in excavation projects and surveys (Smith 2014; Gibb 2019), and the emergence of large-scale, crowd-sourced projects has seen thousands of participants collaborating online. Examples of the latter include the registration of finds by metal-detectorists (Kars & Heeren 2018; Dobat et al. 2020) and the creation of peer-to-peer collaboration platforms (Wilkins 2020; Wernke et al. 2024). The popularity of high-profile projects in astrophysics such as Galaxy Zoo, where participants identified galaxies from shapes on telescope images (Willett et al. 2017), and similar projects in other scientific fields (Jones et al. 2018, 2020) demonstrates the potential for such an approach in archaeology. Specifically, the analysis of high-resolution remote sensing images with the assistance of citizen scientists has gained in popularity (Duckers 2013; Lin et al. 2014). Such projects rely on multiple participants classifying a single image and then aggregating the results to obtain expert-quality datasets (Swanson et al. 2016).

Although these projects showcase the potential of volunteer scientists, concerns and criticisms regarding the quality of these datasets persist (Dickinson et al. 2012; Deckers et al. 2018). A significant obstacle is that the datasets generated by untrained and unsupervised volunteers are inherently messy and noisy (Kosmala et al. 2016; Swanson et al. 2016; Clare et al. 2019). This noisiness has led some researchers to dismiss crowd-sourced results as “analytically useless” (Casana 2020: 595). While we fundamentally disagree with this assessment, it must be acknowledged that the quality of crowd-sourced data requires critical assessment before further analysis can proceed (Balázs et al. 2021).

In this article, we address this criticism by testing and validating citizen science data drawn from our large-scale citizen science project called Heritage Quest (Erfgoed Gezocht in Dutch), where volunteers classified thousands of lidar images via an online platform (Lambers et al. 2019). We present our investigations into the overall quality of user classifications, inter-user agreement (consensus) and the quality of the classifications as assessed by a ground-based survey.

Background: the Heritage Quest project

Our citizen science project focuses on two regions in the central Netherlands that share similarities in terms of geology, land-use and history: the Veluwe and the Utrechtse Heuvelrug (Figure 1). These regions are characterised by ice-pushed ridges formed during the Saale glacial period (c. 400 000–130 000 years BP), which were subsequently partly covered by cover-sand deposits during the Weichselian glacial period (115 000–11 700 years BP) (Berendsen 2004). The result of these processes is an undulating landscape with significant variation in elevation. Forests and heathland covered the area from the Neolithic period (5500–2000 BC) onwards, surrounded by marshes and river valleys. Gradually increasing deforestation from prehistoric times (Doorenbosch 2013) expanded further in the Middle Ages (c. AD 1000–1500), leading to the formation of large drift-sand areas (Koster 2009). In the late nineteenth and early twentieth centuries, large parts of the area were reforested, resulting in today's extensive forests interspersed with heathlands.

Figure 1. The Heritage Quest research areas (dashed outline, Utrechtse Heuvelrug on the left, the Veluwe on the right) on an elevation map of the Netherlands. Inset) location of research area (black squares) and known barrows (black dots) within the Netherlands (elevation model: Nationaal Georegister 2023; co-ordinates in Amersfoort/RD New, EPSG: 28992) (figure by authors).

Both study regions contain well-preserved archaeological remains located either in heathland or under forest cover. Prehistoric barrows, Celtic field systems, charcoal kilns, hollow roads and landweren (long distance land boundaries dating to the Middle Ages) are among the more common objects found in the area. In the field of computer vision, the term ‘feature’ refers to the properties of an image, while an ‘object’ refers to real-world entities (Traviglia et al. 2016: 14). Within this article the term ‘objects’ is therefore used for archaeological features, such as barrows. Lidar imagery is essential in detecting these archaeological objects, which are often obscured by dense vegetation cover.

To analyse the lidar imagery (generated from lidar data provided by the Actueel Hoogtebestand Nederland; Table 1) from both the Veluwe and the Utrechtse Heuvelrug, we used the Zooniverse, a web-based platform that allows people to participate in citizen science projects or ‘people-powered research’ without any specialised background, training or expertise (Simpson et al. 2014). In our Zooniverse project, Heritage Quest, we asked participants to mark any potential barrow, Celtic field, cart track and charcoal kiln within small lidar tiles (Figure 2). These tiles were obtained by dividing the lidar image of the entire area into tiles of 300 × 300m (600 × 600 pixels) with five per cent (30 pixels) overlap on all sides. Participants were presented with two different lidar visualisations (see Figure 2), a shaded relief and a simple local relief model (Kokalj & Hesse 2017), to assist them in their classification. The first visualisation was more intuitive for volunteers to interpret, while the second allowed for better visibility of faint traces that would otherwise be difficult to distinguish on a shaded relief map. The latter visualisation improved the detection of Celtic fields significantly.
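The tile geometry given above implies a ground resolution of 0.5m per pixel. The following minimal Python sketch shows how a position within a tile could be converted to map co-ordinates under that geometry. The function names, the north-up orientation, the top-left pixel origin and the example tile corner are illustrative assumptions and are not taken from the project's own software.

```python
# Tile geometry as stated in the text.
TILE_SIZE_M = 300.0   # tile edge length in metres
TILE_SIZE_PX = 600    # tile edge length in pixels
OVERLAP_PX = 30       # overlap with neighbouring tiles (five per cent)


def pixel_size_m():
    """Ground distance covered by one pixel, in metres."""
    return TILE_SIZE_M / TILE_SIZE_PX


def pixel_to_world(col, row, tile_min_x, tile_max_y):
    """Convert a pixel position inside a tile to map co-ordinates.

    Assumption (hypothetical): the tile is north-up and the pixel origin
    is the top-left corner, so rows increase southwards.
    """
    res = pixel_size_m()
    return (tile_min_x + col * res, tile_max_y - row * res)


if __name__ == "__main__":
    # Hypothetical tile corner, for illustration only.
    print(pixel_size_m())
    print(pixel_to_world(120, 450, 180000.0, 460000.0))
```

Under these assumptions, a click in the bottom-right corner of a tile lies 300m east and 300m south of the tile's top-left corner.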

Table 1. Meta-information for the lidar imagery dataset, the so-called Actueel Hoogtebestand Nederland (Nationaal Georegister 2023).

Figure 2. Top image) overview of the interface of the Heritage Quest project on the Zooniverse platform. The image shows both visualisations with a shaded relief image on the left and a simple local relief model on the right. Participants could click on either to mark locations. At any point they could also write comments or questions on this image in the forum. Lower images) segments of the more detailed field guide, providing in-depth information on detecting archaeological objects on lidar imagery (figure by authors).

The user interface was bilingual Dutch/English, ensuring that international citizen scientists, as well as Dutch-speaking volunteers, could participate. Every lidar image was classified by at least 15 different users for the Veluwe and 60 users for the Utrechtse Heuvelrug. A dedicated staff member, assisted by a team of citizen scientists, monitored user-engagement and provided feedback and online support on an accompanying forum throughout the project. When a volunteer joined the project, they were provided with a short tutorial on how to operate the website and how to identify archaeological objects in the images. A comprehensive field guide (see Figure 2) was always available and included many examples of the objects we were interested in, tips on how to identify positive and negative examples (e.g. a roundabout on a road network compared to an actual prehistoric barrow), general background information on the archaeological objects and the region under investigation, as well as an introduction to archaeological prospection and remote sensing in general.

The project was launched in May 2019 on the Veluwe and succeeded in mapping the entire 1780km² of this area in approximately five months. In total, 2063 users participated, between them classifying 396 552 tiles. Volunteers were asked to place points on the locations of potential barrows and charcoal kilns and to draw polygons covering areas where Celtic fields were detected. Each tile was classified by a minimum of 15 volunteers before being retired and removed from the set of available tiles. In April 2020, the Utrechtse Heuvelrug project launched with 4572 users who classified 300 971 tiles covering an area of 350km². The workflow for the Utrechtse Heuvelrug focused on identifying barrows, Celtic field-systems and medieval cart tracks, rather than charcoal kilns, as preliminary observations of the images indicated that kilns were very rare in the region. We increased the number of classifications needed before a tile was retired, from 30 to 60, after more than 46 000 tiles were classified on the first day; even so, the entire area was investigated within a month. Taking the results from both projects together, approximately 6.3 per cent of the entire land surface of the Netherlands was classified.

Aggregating classifications in Heritage Quest

A significant challenge in any citizen science project involving large numbers of participants is creating meaningful data from inherently noisy sources (Swanson et al. 2016; Rosenthal et al. 2018). Most of the volunteers who participated in our project were not trained as archaeologists, and certainly not as remote sensing specialists knowledgeable in the detection of archaeological objects on lidar imagery. Nevertheless, the quality of the output data can be increased by having the same task repeated by several participants (Swanson et al. 2016; Rosenthal et al. 2018). When each image is investigated by multiple participants, a consensus (inter-user agreement) emerges. Objects that fit the requirements given to the citizen scientists in the tutorial will be identified by more participants, while more enigmatic objects will be selected by fewer participants. Moreover, accidental errors are easily filtered out as two volunteers will rarely mark the (exact) same location by accident.

In this article, we focus on aggregating the results from barrow classifications. In total, 222 699 individual barrow classifications were registered across both areas (see Table 2). These individual classifications include some errors, or misidentifications, but also locations that were clicked on dozens of times. To aggregate the results of the online project, the data from the Zooniverse (i.e. all individual classifications or ‘clicks’) were converted into geospatial entities (i.e. points) with real-world co-ordinates and an additional field (count), containing a single integer (1). Subsequently, a chain of processing tools in the open-access geographic information system software QGIS (v. 3.16, QGIS Development Team 2017) was used to turn these points into polygons incorporating the number of classifications per polygon (Algorithm 1): all points were buffered (with an empirically determined 4m radius) and dissolved to turn them into polygons. These polygons were converted into individual, separate features with the Multipart_to_singleparts processing tool. Finally, the number of classifications (points) within these polygons was counted through the Join_attributes_by_location (summary) tool (Figure 3).
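The logic of the buffer, dissolve and count chain can be illustrated outside QGIS. The sketch below is not the QGIS workflow itself but a pure-Python stand-in: it relies on the fact that two circular buffers of equal radius overlap when their centres are at most twice that radius apart, so chains of such points end up in the same dissolved polygon. The function name, the centroid output and the example co-ordinates are assumptions made for illustration; the 4m radius is the value stated in the text.

```python
import math

BUFFER_RADIUS_M = 4.0  # buffer radius stated in the text


def aggregate_clicks(points, radius=BUFFER_RADIUS_M):
    """Group clicks whose buffers overlap and count the clicks per group.

    Returns a list of (centroid_x, centroid_y, count) tuples, largest
    group first. Centroids stand in for the dissolved polygons.
    """
    parent = list(range(len(points)))

    def find(i):
        # Union-find lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    # Pairwise comparison is quadratic: acceptable for a sketch, but a
    # spatial index would be needed for hundreds of thousands of clicks.
    for i in range(len(points)):
        for j in range(i + 1, len(points)):
            if math.dist(points[i], points[j]) <= 2 * radius:
                parent[find(i)] = find(j)

    groups = {}
    for i, point in enumerate(points):
        groups.setdefault(find(i), []).append(point)

    result = []
    for members in groups.values():
        n = len(members)
        cx = sum(x for x, _ in members) / n
        cy = sum(y for _, y in members) / n
        result.append((cx, cy, n))
    return sorted(result, key=lambda r: -r[2])


if __name__ == "__main__":
    # Hypothetical clicks: three near one mound, one isolated.
    clicks = [(0.0, 0.0), (3.0, 0.0), (6.0, 0.0), (100.0, 100.0)]
    for location in aggregate_clicks(clicks):
        print(location)
```

Because groups grow through chains of overlapping buffers, a row of clicks spaced less than 8m apart merges into a single consensus location even when its ends are far apart.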

Table 2. Factsheet of the results from the Heritage Quest project in both regions.

Note that charcoal kilns were researched in the Veluwe region, but since we did not expect these to be common on the Utrechtse Heuvelrug we asked volunteers to mark medieval cart tracks instead. The latter feature was often flagged by volunteers during the Veluwe project in the forum and we therefore decided to mark these as well.

Figure 3. Algorithm showing the aggregation process in QGIS (figure by authors).

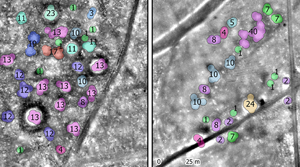

An analysis of the application of the above workflow demonstrates its usability, even in areas where barrows are close together (Figure 4A–C). Occasionally this processing results in the lumping of disparate objects in very close proximity (within 4m) into a single location (Figure 4D) or the artificial separation of a single location into multiple locations when the classifications are overly spread out (more than 4m away; Figure 4A). These issues occur rarely, however, as the average diameter, shape and form of the barrows in the investigated areas are quite stable (see Bourgeois 2013).

Figure 4. Results from aggregation at dense concentrations of potential barrows: A) aggregation results from a closely spaced group of barrows (all locations with 12 or more classifications are known burial mounds); B) aggregation results of a group of burial mounds within a Celtic field (volunteers were able to correctly identify all known burial mounds, each with 12 or more classifications); C) aggregation results of a previously known urnfield on the Veluwe consisting of at least 28 low burial mounds (Verlinde & Hulst 2010: fig. 53). The volunteers have potentially discovered a much larger number of burial mounds than previously known. D) aggregation results of a previously known line of burial mounds. All previously known barrows have been identified, as well as a number of previously unknown mounds. Note that here, the classifications tend to blend into one another if the mounds are close, with closely spaced objects being added together (i.e. providing 24 or even 40 classifications) (figure by authors).

The resulting aggregation created 77 014 individual consensus locations in the Veluwe (37 812) and the Utrechtse Heuvelrug (39 202). These consist of locations identified by between one and 243 individual users, although the higher end of this scale was only reached a few times, if an object was located in the overlap zone between different tiles.

To ensure the accuracy of the aggregated classifications, we corrected the data on the basis of land-use. Two processes have had a negative impact on the preservation and visibility of barrows in the Netherlands. Firstly, (post)medieval agriculture, urbanisation and infrastructure development have systematically erased all above-ground traces of barrows in these regions. Secondly, areas affected by erosion and sedimentation caused by wind (drift-sand) exhibit a negative correlation with the presence of barrows (Bourgeois 2013: 40–47; Verschoof-van der Vaart et al. 2020). Almost all preserved prehistoric barrows in the central Netherlands have been found in areas covered with forest or vegetation such as natural grasslands or heather.

As such, we expected to find new potential barrows only in forested areas, heath- or grasslands, which were not affected by drift-sand. The chance of discovering unknown above-ground barrows outside of these areas is close to zero (Verschoof-van der Vaart et al. 2020). Therefore, we removed all consensus locations outside of areas covered with forest, heather or grasslands, using the map of present-day land-use created by Centraal Bureau voor de Statistiek (Statistics Netherlands) (Nationaal Georegister 2023; Figure 5). In most cases the inter-user agreement in these areas was low; in total, the removed locations accounted for 22–30 per cent of all consensus locations. We also eliminated all locations in known drift-sand areas based on the geomorphological map of the Netherlands 2019 by the Basisregistratie Ondergrond (Nationaal Georegister 2023) and the recent drift-sand map by the Bryologische en Lichenologische Werkgroep and Wageningen University (Sparrius & Riksen 2019). Our fieldwork identified several additional areas of drift-sand and, as all consensus locations we investigated in drift-sand areas were determined to be sand dunes (Verschoof-van der Vaart et al. 2022a), consensus locations were also removed from these newly identified areas. Finally, we removed all already known and recorded barrows using the Archeologisch Monumenten Register (AMR2) from the State Service for Cultural Heritage (RCE). In total, we retained 22 206 consensus locations (Figure 5).
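The selection steps described above amount to three successive filters. A minimal Python sketch follows, assuming that each consensus location has already been attributed with its land-use class, a drift-sand flag and a known-barrow flag (in practice through spatial overlays with the maps cited above). The field names and class labels are hypothetical.

```python
# Land-use classes in which unknown barrows are expected (from the text);
# the string labels themselves are illustrative assumptions.
KEEP_LAND_USE = {"forest", "heath", "grassland"}


def select_candidates(locations):
    """Keep consensus locations that pass all three selection steps.

    Each location is a dict with the hypothetical keys 'land_use',
    'in_drift_sand' and 'known_barrow'.
    """
    return [
        loc for loc in locations
        if loc["land_use"] in KEEP_LAND_USE   # step 1: land-use
        and not loc["in_drift_sand"]          # step 2: drift-sand areas
        and not loc["known_barrow"]           # step 3: already recorded
    ]


if __name__ == "__main__":
    # Hypothetical records, for illustration only.
    sample = [
        {"id": 1, "land_use": "forest", "in_drift_sand": False, "known_barrow": False},
        {"id": 2, "land_use": "arable", "in_drift_sand": False, "known_barrow": False},
        {"id": 3, "land_use": "heath", "in_drift_sand": True, "known_barrow": False},
    ]
    print([loc["id"] for loc in select_candidates(sample)])
```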

Figure 5. Workflow illustrating the selection processes to identify potential newly discovered locations within the dataset (figure by authors).

Assessing the quality of the consensus locations

The main challenge we faced was assessing the overall quality of the remaining 22 000-plus consensus locations. Evaluating the data based on known barrows in the region proved problematic because these are primarily scheduled monuments that have been restored by adding a layer of material, often sand, on top of the mound. This increases their height significantly, while vegetation is regularly cleared making these restored mounds significantly easier to detect on lidar imagery than the unknown (and therefore unrestored) barrows. Thus, the detection of these known barrows does not inform us how well the volunteers were able to correctly distinguish natural or modern topographical features from ancient barrows. Using these known barrows to assess the quality would only inform us if the consensus locations conformed to already known and restored prehistoric barrows.

To address this issue, we, together with students and volunteers, investigated 219 individual consensus locations in the field to establish whether they were prehistoric barrows. Using Mergin Maps, an open-source app that uses the internal GPS of a smartphone to present geospatial data, we located the consensus locations. We then collected at least three hand coring samples from across each consensus location: one outside, one in the flank and one (slightly off) the centre of the elevation. Coring is required as a simple visual inspection is often not sufficient for the identification of barrows. Many natural topographical features look very similar to burial mounds and present-day dense vegetation obscures their overall shape, making coring and inspection of the internal build-up of the feature necessary for identification. Based on the coring data, each individual consensus location was then interpreted as either an ancient anthropogenic mound or a natural mound, the latter almost always either wind-blown deposits or glacial meltwater relics. Earlier fieldwork by other researchers had previously assessed 161 of our consensus locations either through coring or small test-trenches. These were added to our dataset, resulting in a total of 380 investigated consensus locations, of which 136 were located in the Utrechtse Heuvelrug and 244 in the Veluwe, with the locations distributed throughout landscapes of different geomorphological types. The results of the fieldwork are published elsewhere (Verschoof-van der Vaart et al. 2022a, 2022b & 2022c).

Of these 380 consensus locations, 226 were interpreted as barrows, providing an average precision (equal to true positives divided by the sum of true positives and false positives; Verschoof-van der Vaart et al. 2020) of 0.59. However, this performance changes significantly when the number of volunteers that agreed on a given consensus location is taken into account (i.e. the inter-user agreement; Figure 6). For consensus locations with an inter-user agreement of less than seven, the precision (here calculated as the ratio of barrows versus natural topographic features) fluctuates between 0.21 and 0.41. The precision significantly increases to 0.75 for an inter-user agreement of 10, and further improves to around 0.82 for an inter-user agreement of 12 or greater. With an inter-user agreement of 15 or more, the precision reaches 0.85.
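The precision measure used here is easy to reproduce. The sketch below, in Python, first checks the overall figure from the counts given in the text (226 barrows among 380 investigated locations) and then shows how precision could be computed for a minimum inter-user agreement. The function names and the small example dataset are hypothetical, and treating the threshold as cumulative ('this agreement or higher') is an assumption.

```python
def precision(true_positives, false_positives):
    """Precision = TP / (TP + FP)."""
    total = true_positives + false_positives
    if total == 0:
        raise ValueError("no validated locations to evaluate")
    return true_positives / total


def precision_at_threshold(validated, min_agreement):
    """Precision over locations with at least `min_agreement` votes.

    `validated` is an iterable of (agreement, is_barrow) pairs, where
    is_barrow records the outcome of the field validation.
    """
    outcomes = [is_barrow for agreement, is_barrow in validated
                if agreement >= min_agreement]
    true_positives = sum(outcomes)
    return precision(true_positives, len(outcomes) - true_positives)


if __name__ == "__main__":
    # Overall precision from the counts reported in the text.
    print(round(precision(226, 380 - 226), 2))

    # Hypothetical validated sample, for illustration only.
    sample = [(1, False), (2, False), (8, True),
              (12, True), (15, True), (15, False)]
    print(precision_at_threshold(sample, 12))
```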

Figure 6. Precision versus inter-user agreement based on fieldwork validated consensus locations. The top panel shows the precision for the Veluwe, the centre panel for the Utrechtse Heuvelrug and the bottom panel the overall precision for the entire project. The error bars indicate examples where the anthropogenic nature of the mound could be established, but not conclusively if it represented a barrow. Note that the 15 classifications also contain objects that have been classified by more than 15 contributors due to overlap between images, aggregation of multiple objects into one, or in the case of the Utrechtse Heuvelrug project, due to a higher retirement rate of the image (figure by authors).

Discussion

The results of our study demonstrate that citizen scientists can effectively identify prehistoric barrows in lidar imagery, particularly when there is a high level of inter-user agreement. Extrapolation of the precision from our ground-truthed subsample to all consensus locations indicates that the volunteers have likely discovered about a thousand potential barrows, even when only the consensus locations identified by more than seven volunteers are considered (Figure 7). These have a greater than 50 per cent chance of indeed being prehistoric barrows. This figure would double the current number of known barrows in the central Netherlands (Bourgeois 2013) and has far reaching implications for research into the communities who built these mounds, as well as for heritage management.
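An extrapolation of this kind can be sketched as a weighted sum: for each level of inter-user agreement, the number of unvalidated consensus locations is multiplied by the precision measured in the field at that level. The Python example below illustrates the arithmetic only. The function name is an assumption, and the counts and precision values are invented for the example; they are not the project's figures.

```python
def expected_barrows(counts_by_agreement, precision_by_agreement,
                     min_agreement=1):
    """Expected number of true barrows among consensus locations.

    Both arguments are dicts keyed by inter-user agreement. Only levels
    at or above `min_agreement` contribute to the sum.
    """
    return sum(
        count * precision_by_agreement[agreement]
        for agreement, count in counts_by_agreement.items()
        if agreement >= min_agreement
    )


if __name__ == "__main__":
    # Hypothetical numbers, for illustration only.
    counts = {1: 100, 8: 50, 12: 20}
    measured_precision = {1: 0.2, 8: 0.6, 12: 0.8}
    # 'More than seven volunteers' corresponds to min_agreement=8.
    print(expected_barrows(counts, measured_precision, min_agreement=8))
```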

Figure 7. Overview of the locations of all new potential barrows with high (red points) or middle high (orange points) probabilities, known barrows (small black points) and drift-sand (grey shaded areas) (co-ordinates in Amersfoort/RD New, EPSG: 28992) (figure by authors).

However, we also highlight the challenges of using crowd-sourced data for archaeological research; in particular, the noisy nature of the data makes it difficult to assess the quality of the consensus locations. The distinction between natural and anthropogenic elevations on lidar imagery is often difficult to make, even for trained archaeologists (Banaszek et al. 2018). Our fieldwork aimed to address this and allowed us to determine the precision of classifications and how this varied depending on the level of inter-user agreement. By validating a sample of the consensus locations, we could determine that the precision increased substantially as the level of inter-user agreement increased. This suggests that our consensus-based approach can be used to complement traditional, expert-led field-survey methods that might otherwise be too time consuming or expensive to cover a similar area. In our project, investigating the dense forests of the Veluwe and Utrechtse Heuvelrug in their entirety with traditional fieldwork would simply not be possible. Furthermore, expert-led field survey or desk-based assessment may also result in incomplete and error-prone datasets (e.g. Kaptijn 2009: 42–59). Studies using satellite images as base data for archaeological prospection have shown how mapping results may vary considerably between archaeologists (Sadr 2016) depending on their level of training and experience (Snyder & Haas 2024). Thus, independent data, either collected by citizen researchers as in Heritage Quest or by computational approaches (Verschoof-van der Vaart & Lambers 2022), are an important complementary data source for heritage management, as they often include archaeological objects not found by experts. The map we have now generated can be effectively used by local stakeholders, such as municipalities and the state forestry service, to monitor and manage these important features.

Although precision increased with higher levels of inter-user agreement, even low consensus locations still had a non-zero chance of being prehistoric barrows. As the results show, if only one volunteer classified a location as a barrow, it still had a 35 per cent chance of being a barrow. However, it is worth noting that this number is probably inflated owing to the discovery of a Late Prehistoric urnfield during our fieldwork. In this case, the urnfield consisted of a dozen very small mounds of only 0.2–0.3m in elevation that most volunteers did not classify as barrows, yet one of our most prolific users, who classified more than 20 000 images, did identify the small and low mounds as potential barrows. To avoid confusion, the tutorial focused on isolated barrows and omitted the much more difficult to recognise urnfield mounds. Correcting for this urnfield, the actual probability of a location marked only once being a barrow will be lower, closer to 15–20 per cent. Nevertheless, the discovery of this urnfield suggests that there may be certain types of barrows that are particularly challenging to identify for volunteers, and in fact even for experts. This is an important caveat to keep in mind: while the consensus-based approach used in this study was effective at identifying a large number of prehistoric barrows, it is possible that some were still missed. Future research could explore ways to improve the detection of these more challenging types of barrows, perhaps through the use of more advanced survey methods or machine learning algorithms.

The latter could be a promising avenue to improve the results obtained through a volunteer-based approach such as the one we have developed. Previously, we have argued for such an approach where human expertise and machine learning algorithms could be used in a collaborative, integrated and iterative process (Lambers et al. 2019). We feel this could help improve accuracy and the identification process while still leveraging the knowledge and experience of archaeologists. Such a human-in-the-loop approach could also overcome some of the limitations facing machine learning algorithms, in particular the recognition of patterns that are novel or outside the norm (Verschoof-van der Vaart & Lambers 2022). Within our project we only asked volunteers to classify three different object classes but they were also able to flag specific tiles with their own tags and add them to the forum. One of the categories that they systematically flagged comprised traces of a new type of prehistoric land parcelling system, comparable to Celtic fields, which was hitherto unknown but suggests landscape organisation on a much larger scale than previously believed and the need for an upward revision of population density estimates (Arnoldussen et al. 2022).

Finally, our study has broader implications for citizen science and public engagement within archaeology. By involving thousands of volunteers in the research process, this project not only generated valuable data but also helped to raise awareness and interest in archaeology. Many of our volunteers have become vocal ambassadors of their local archaeological heritage. Local initiatives have been implemented in several of the provinces and municipalities involved, from teaching prehistory and lidar technology in schools (https://www.reizenindetijd.nl/themas/mijn-huis-staat-in/kaartkijken-met-de-ahn/) to nature conservation groups incorporating traces of prehistoric landscapes in their excursions. Information on the project also features in several popular books (Neefjes & Bleumink 2021; Tonk 2022). This highlights the potential of citizen science not only as a research tool but also as a means of fostering public engagement and interest in archaeology.

Conclusion

Results from our study suggest that a volunteer-based approach to identifying prehistoric barrows complements traditional, expert-led field survey methods. With higher inter-user agreement, the accuracy of identifying prehistoric barrows increases significantly. Based on our fieldwork and an assessment of the quality of the consensus locations we can establish that the precision increases above 0.82 with an inter-user agreement of 12 or higher. Even a lower consensus of 7 or higher still has a precision of 0.7 on average. Taking our results into consideration we have arguably doubled the number of known barrows within the research area. Furthermore, our approach proved to be cost-effective in analysing a large research area in a relatively short period of time, producing results that are of fundamental importance for research, heritage planning and wider community awareness of local heritage.

Acknowledgements

We thank all citizen scientists who devoted so much of their time to the Heritage Quest project. They are the foundation of this research.

Funding statement

The Heritage Quest project is a collaboration between Leiden University, Erfgoed Gelderland and Landschap Erfgoed Utrecht and has been funded by the provinces of Gelderland and Utrecht, the Fonds voor Cultuurparticipatie, the Cultuur- en Erfgoedpact Noord Veluwe, the National Park Utrechtse Heuvelrug, the Municipality of Arnhem and the KF Heinfonds. This publication uses data generated via the Zooniverse.org platform, development of which is funded by generous support, including a Global Impact Award from Google and a grant from the Alfred P. Sloan Foundation.