1. Introduction

Robustness is a core concern in machine learning, as models are deployed in classification tasks such as facial recognition [Reference Xu, Raja, Ramachandra and Busch36], medical imaging [Reference Paschali, Conjeti, Navarro and Navab25] and identifying traffic signs in self-driving cars [Reference Deng, Zheng, Zhang, Chen, Lou and Kim13]. Deep learning models exhibit a concerning security risk – small perturbations imperceptible to the human eye can cause a neural net to misclassify an image [Reference Biggio, Corona, Maiorca, Nelson, Šrndić, Laskov, Giacinto and Roli9, Reference Szegedy, Zaremba and Sutskever32]. The machine learning literature has proposed many defenses, but many of these techniques remain poorly understood. This paper analyzes the statistical consistency of a popular defense method that involves minimizing an adversarial surrogate risk.

The central goal in a classification task is minimizing the proportion of mislabeled data-points – also known as the classification risk. Minimizers to the classification risk are easy to compute analytically and are known as Bayes classifiers. In the adversarial setting, each point is perturbed by a malicious adversary before the classifier makes a prediciton. The proportion of mislabelled data under such an attack is called the adversarial classification risk, and minimizers to this risk are called adversarial Bayes classifiers. Unlike the standard classification setting, computing minimizers to the adversarial classification risk is a nontrivial task [Reference Bhagoji, Cullina and Mittal8, Reference Pydi and Jog27]. Further studies [Reference Frank15, Reference Gnecco-Heredia, Chevaleyre, Negrevergne, Meunier and Pydi18, Reference Pydi and Jog28, Reference Trillos and Murray33, Reference Trillos, Jacobs and Kim35] investigate additional properties of these minimizers, and Frank [Reference Frank15] describes a notion of uniqueness for adversarial Bayes classifiers. The main result in this paper will connect this notion of uniqueness the statistical consistency of a popular defense method.

The empirical adversarial classification error is a discrete notion and minimizing this quantity is computationally intractable. Instead, typical machine learning algorithms minimize a surrogate risk in place of the classification error. In the robust setting, the adversarial training algorithm uses a surrogate risk that computes the supremum of loss over the adversary’s possible attacks, which we refer to as adversarial surrogate risks. However, one must verify that minimizing this adversarial surrogate will also minimize the classification risk. A loss function is adversarially consistent for a particular data distribution if every minimizing sequence of the associated adversarial surrogate risk also minimizes the adversarial classification risk. A loss is simply called adversarially consistent if it is adversarially consistent for all possible data distributions. Surprisingly, Meunier et al. [Reference Meunier, Ettedgui, Pinot, Chevaleyre and Atif23] show that no convex surrogate is adversarially consistent, in contrast to the standard classification setting where most convex losses are statistically consistent [Reference Bartlett, Jordan and McAuliffe6, Reference Lin22, Reference Zhang24, Reference Steinwart31, Reference Zhang37].

Our contributions. We relate the statistical consistency of losses in the adversarial setting to the uniqueness of the adversarial Bayes classifier. Specifically, under reasonable assumptions, a convex loss is adversarially consistent for a specific data distribution iff the adversarial Bayes classifier is unique.

Prior work [Reference Frank15] further demonstrates several distributions for which the adversarial Bayes classifier is unique, and thus a typical convex loss would be consistent. Understanding general conditions under which uniqueness occurs is an open question.

Paper outline. Section 2 discusses related works, and Section 3 presents the problem background. Section 4 states our main theorem and presents some examples. Subsequently, Section 5 discusses intermediate results necessary for proving our main theorem. Next, our consistency results are proved in Sections 6 and 7. Appendices A and B present deferred proofs from Section 5, while Appendix C presents deferred proofs on surrogate risks from Sections 5 and 6. Finally, Appendix D presents deferred proofs from Section 7.

2. Related works

Our results are inspired by prior work that showed that no convex loss is adversarially consistent [Reference Awasthi, Mao, Mohri, Zhong, Chaudhuri, Jegelka, Song, Szepesvari, Niu and Sabato3, Reference Meunier, Ettedgui, Pinot, Chevaleyre and Atif23] yet a wide class of adversarial losses is adversarially consistent [Reference Frank and Niles-Weed16]. These consistency results rely on the theory of surrogate losses, studied by Bartlett et al. [Reference Bartlett, Jordan and McAuliffe6], Lin [Reference Lin22] in the standard classification setting; and by Frank and Niles-Weed Frank and Niles-Weed [Reference Frank and Niles-Weed17], Li and Telgarsky [Reference Li and Telgarsky21] in the adversarial setting. Furthermore, [Reference Awasthi, Mao, Mohri and Zhong2, Reference Bao, Scott and Sugiyama5, Reference Steinwart31] study a property of related to consistency called calibration, which [Reference Meunier, Ettedgui, Pinot, Chevaleyre and Atif23] relate to consistency. Complimenting this analysis, another line of research studies

![]() $\mathcal {H}$

-consistency, which refines the concept of consistency to specific function classes [Reference Awasthi, Mao, Mohri, Zhong, Chaudhuri, Jegelka, Song, Szepesvari, Niu and Sabato3, Reference Long26]. Our proof combines results on losses with minimax theorems for various adversarial risks, as studied by [Reference Frank and Niles-Weed16, Reference Frank and Niles-Weed17, Reference Pydi and Jog28, Reference Trillos, Jacobs and Kim34].

$\mathcal {H}$

-consistency, which refines the concept of consistency to specific function classes [Reference Awasthi, Mao, Mohri, Zhong, Chaudhuri, Jegelka, Song, Szepesvari, Niu and Sabato3, Reference Long26]. Our proof combines results on losses with minimax theorems for various adversarial risks, as studied by [Reference Frank and Niles-Weed16, Reference Frank and Niles-Weed17, Reference Pydi and Jog28, Reference Trillos, Jacobs and Kim34].

Furthermore, our result leverages recent results on the adversarial Bayes classifier, which are extensively studied by [Reference Awasthi, Frank and Mohri4, Reference Bhagoji, Cullina and Mittal8, Reference Bungert, Trillos and Murray10, Reference Frank and Niles-Weed16, Reference Pydi and Jog27, Reference Pydi and Jog28]. Specifically, [Reference Awasthi, Frank and Mohri4, Reference Bhagoji, Cullina and Mittal8, Reference Bungert, Trillos and Murray10] prove the existence of the adversarial Bayes classifier, while Trillos and Murray [Reference Trillos and Murray33] derive necessary conditions that describe the boundary of the adversarial Bayes classifier. Frank [Reference Frank15] defines the notion of uniqueness up to degeneracy and proves that in one dimension, under reasonable distributional assumptions, every adversarial Bayes classifier is equivalent up to degeneracy to an adversarial Bayes classifier which satisfies the necessary conditions of [Reference Trillos and Murray33]. Finally, [Reference Bhagoji, Cullina and Mittal8, Reference Pydi and Jog27] also calculate the adversarial Bayes classifier for distributions in dimensions higher than one by finding an optimal coupling, but whether this method can calculate the equivalence classes under equivalence up to degeneracy remains an open question.

3. Notation and background

3.1 Surrogate risks

This paper investigates binary classification on

![]() $\mathbb {R}^d$

with labels

$\mathbb {R}^d$

with labels

![]() $\{-1,+1\}$

. Class

$\{-1,+1\}$

. Class

![]() $-1$

is distributed according to a measure

$-1$

is distributed according to a measure

![]() ${\mathbb P}_0$

while class

${\mathbb P}_0$

while class

![]() $+1$

is distributed according to measure

$+1$

is distributed according to measure

![]() ${\mathbb P}_1$

. A classifier is a Borel set

${\mathbb P}_1$

. A classifier is a Borel set

![]() $A$

and the classification risk of a set

$A$

and the classification risk of a set

![]() $A$

is the expected proportion of errors when label

$A$

is the expected proportion of errors when label

![]() $+1$

is predicted on

$+1$

is predicted on

![]() $A$

and label

$A$

and label

![]() $-1$

is predicted on

$-1$

is predicted on

![]() $A^C$

:

$A^C$

:

A minimizer to

![]() $R$

is called a Bayes classifier.

$R$

is called a Bayes classifier.

However, minimizing the empirical classification risk is a computationally intractable problem [Reference Ben-David, Eiron and Long7]. A common approach is to instead learn a function

![]() $f$

and then threshold at zero to obtain the classifier

$f$

and then threshold at zero to obtain the classifier

![]() $A=\{{\mathbf x}\,:\, f({\mathbf x})\gt 0\}$

. We define the classification risk of a function

$A=\{{\mathbf x}\,:\, f({\mathbf x})\gt 0\}$

. We define the classification risk of a function

![]() $f$

by

$f$

by

Figure 1. Several common loss functions for classification along with the indicator

![]() ${\mathbf {1}}_{\alpha \leq 0}$

.

${\mathbf {1}}_{\alpha \leq 0}$

.

In order to learn

![]() $f$

, machine learning algorithms typically minimize a better-behaved alternative to the classification risk called a surrogate risk. To obtain this risk, we replace the indicator functions in (1) with the loss

$f$

, machine learning algorithms typically minimize a better-behaved alternative to the classification risk called a surrogate risk. To obtain this risk, we replace the indicator functions in (1) with the loss

![]() $\phi$

, resulting in:

$\phi$

, resulting in:

We restrict to losses with similar properties to the indicator functions in (1) yet are easier to optimize. In particular we require:

Assumption 1.

The loss

![]() $\phi$

is nonincreasing, continuous, and

$\phi$

is nonincreasing, continuous, and

![]() $\lim _{\alpha \to \infty } \phi (\alpha )=0$

.

$\lim _{\alpha \to \infty } \phi (\alpha )=0$

.

See Figure 1 for a comparison of the indicator function and a several common losses. Losses on

![]() $\mathbb {R}$

-valued functions in machine learning typically satisfy Assumption1.

$\mathbb {R}$

-valued functions in machine learning typically satisfy Assumption1.

3.2 Adversarial surrogate risks

In the adversarial setting, a malicious adversary corrupts each data point. We model these corruptions as bounded by

![]() $\epsilon$

in some norm

$\epsilon$

in some norm

![]() $\|\cdot \|$

. The adversary knows both the classifier

$\|\cdot \|$

. The adversary knows both the classifier

![]() $A$

and the label of each data point. Thus, a point

$A$

and the label of each data point. Thus, a point

![]() $({\mathbf x},+1)$

is misclassified when it can be displaced into the set

$({\mathbf x},+1)$

is misclassified when it can be displaced into the set

![]() $A^C$

by a perturbation of size at most

$A^C$

by a perturbation of size at most

![]() $\epsilon$

. This statement can be conveniently written in terms of a supremum. For any function

$\epsilon$

. This statement can be conveniently written in terms of a supremum. For any function

![]() $g\,:\,\mathbb {R}^d\to \mathbb {R}$

, define

$g\,:\,\mathbb {R}^d\to \mathbb {R}$

, define

where

![]() $\overline {B_\epsilon ({\mathbf x})}=\{{\mathbf x}^{\prime}\,:\,\|{\mathbf x}^{\prime}-{\mathbf x}\|\leq \epsilon \}$

is the ball of allowed perturbations. The expected error rate of a classifier

$\overline {B_\epsilon ({\mathbf x})}=\{{\mathbf x}^{\prime}\,:\,\|{\mathbf x}^{\prime}-{\mathbf x}\|\leq \epsilon \}$

is the ball of allowed perturbations. The expected error rate of a classifier

![]() $A$

under an adversarial attack is then

$A$

under an adversarial attack is then

which is known as the adversarial classification risk.Footnote 1 Minimizers of

![]() $R^\epsilon$

are called adversarial Bayes classifiers.

$R^\epsilon$

are called adversarial Bayes classifiers.

Just like (1), we define

![]() $R^\epsilon (f)=R^\epsilon (\{f\gt 0\})$

:

$R^\epsilon (f)=R^\epsilon (\{f\gt 0\})$

:

Again, minimizing an empirical adversarial classification risk is computationally intractable. A surrogate to the adversarial classification risk is formulated asFootnote 2

3.3 The statistical consistency of surrogate risks

Learning algorithms typically minimize a surrogate risk using an iterative procedure, thereby producing a sequence of functions

![]() $f_n$

. One would hope that that

$f_n$

. One would hope that that

![]() $f_n$

also minimizes that corresponding classification risk. This property is referred to as statistical consistency.Footnote

3

$f_n$

also minimizes that corresponding classification risk. This property is referred to as statistical consistency.Footnote

3

Definition 1.

-

• If every sequence of functions

$f_n$

that minimizes

$f_n$

that minimizes

$R_\phi$

also minimizes

$R_\phi$

also minimizes

$R$

for the distribution

$R$

for the distribution

${\mathbb P}_0,{\mathbb P}_1$

, then the loss

${\mathbb P}_0,{\mathbb P}_1$

, then the loss

$\phi$

is consistent for the distribution

$\phi$

is consistent for the distribution

${\mathbb P}_0,{\mathbb P}_1$

. If

${\mathbb P}_0,{\mathbb P}_1$

. If

$R_\phi$

is consistent for every distribution

$R_\phi$

is consistent for every distribution

${\mathbb P}_0,{\mathbb P}_1$

, we say that

${\mathbb P}_0,{\mathbb P}_1$

, we say that

$\phi$

is consistent.

$\phi$

is consistent.

-

• If every sequence of functions

$f_n$

that minimizes

$f_n$

that minimizes

$R_\phi ^\epsilon$

also minimizes

$R_\phi ^\epsilon$

also minimizes

$R^\epsilon$

for the distribution

$R^\epsilon$

for the distribution

${\mathbb P}_0,{\mathbb P}_1$

, then the loss

${\mathbb P}_0,{\mathbb P}_1$

, then the loss

$\phi$

is adversarially consistent for the distribution

$\phi$

is adversarially consistent for the distribution

${\mathbb P}_0,{\mathbb P}_1$

. If

${\mathbb P}_0,{\mathbb P}_1$

. If

$R_\phi ^\epsilon$

is adversarially consistent for every distribution

$R_\phi ^\epsilon$

is adversarially consistent for every distribution

${\mathbb P}_0,{\mathbb P}_1$

, we say that

${\mathbb P}_0,{\mathbb P}_1$

, we say that

$\phi$

is adversarially consistent.

$\phi$

is adversarially consistent.

A case of particular interest is convex

![]() $\phi$

, as these losses are ubiquitous in machine learning. In the non-adversarial context, Theorem2 of [Reference Bartlett, Jordan and McAuliffe6] shows that a convex loss

$\phi$

, as these losses are ubiquitous in machine learning. In the non-adversarial context, Theorem2 of [Reference Bartlett, Jordan and McAuliffe6] shows that a convex loss

![]() $\phi$

is consistent iff

$\phi$

is consistent iff

![]() $\phi$

is differentiable at zero and

$\phi$

is differentiable at zero and

![]() $\phi ^{\prime}(0)\lt 0$

. In contrast, Meunier et al. [Reference Meunier, Ettedgui, Pinot, Chevaleyre and Atif23] show that no convex loss is adversarially consistent. Further results of [Reference Frank and Niles-Weed16] characterize the adversarially consistent losses in terms of the function

$\phi ^{\prime}(0)\lt 0$

. In contrast, Meunier et al. [Reference Meunier, Ettedgui, Pinot, Chevaleyre and Atif23] show that no convex loss is adversarially consistent. Further results of [Reference Frank and Niles-Weed16] characterize the adversarially consistent losses in terms of the function

![]() $C_\phi ^*$

:

$C_\phi ^*$

:

Theorem 1.

The loss

![]() $\phi$

is adversarially consistent if and only if

$\phi$

is adversarially consistent if and only if

![]() $C_\phi ^*(1/2)\lt \phi (0)$

.

$C_\phi ^*(1/2)\lt \phi (0)$

.

Notice that all convex losses satisfy

![]() $C_\phi ^*(1/2)=\phi (0)$

: By evaluating at

$C_\phi ^*(1/2)=\phi (0)$

: By evaluating at

![]() $\alpha =0$

, one can conclude that

$\alpha =0$

, one can conclude that

![]() $C_\phi ^*(1/2)= \inf _\alpha C_\phi (1/2,\alpha )\leq C_\phi (1/2,0)=\phi (0)$

. However,

$C_\phi ^*(1/2)= \inf _\alpha C_\phi (1/2,\alpha )\leq C_\phi (1/2,0)=\phi (0)$

. However,

due to convexity. Notice that Theorem1 does not preclude the adversarial consistency of a loss satisfying

![]() $C_\phi ^*(1/2)=\phi (0)$

for some particular

$C_\phi ^*(1/2)=\phi (0)$

for some particular

![]() ${\mathbb P}_0,{\mathbb P}_1$

. Prior work [Reference Frank and Niles-Weed16, Reference Meunier, Ettedgui, Pinot, Chevaleyre and Atif23] provides a counterexample to consistency only for a single, atypical distribution. The goal of this paper is characterizing when adversarial consistency fails for losses satisfying

${\mathbb P}_0,{\mathbb P}_1$

. Prior work [Reference Frank and Niles-Weed16, Reference Meunier, Ettedgui, Pinot, Chevaleyre and Atif23] provides a counterexample to consistency only for a single, atypical distribution. The goal of this paper is characterizing when adversarial consistency fails for losses satisfying

![]() $C_\phi ^*(1/2)=\phi (0)$

.

$C_\phi ^*(1/2)=\phi (0)$

.

4. Main result

Prior work has shown that there always exists minimizers to the adversarial classification risk, which are referred to as adversarial Bayes classifiers (see Theorem3 below). Furthermore, Frank [Reference Frank15] developed a notion of uniqueness for adversarial Bayes classifiers.

Definition 2.

The adversarial Bayes classifiers

![]() $A_1$

and

$A_1$

and

![]() $A_2$

are equivalent up to degeneracy if any Borel set

$A_2$

are equivalent up to degeneracy if any Borel set

![]() $A$

with

$A$

with

![]() $A_1\cap A_2\subset A\subset A_1\cup A_2$

is also an adversarial Bayes classifier. The adversarial Bayes classifier is unique up to degeneracy if any two adversarial Bayes classifiers are equivalent up to degeneracy.

$A_1\cap A_2\subset A\subset A_1\cup A_2$

is also an adversarial Bayes classifier. The adversarial Bayes classifier is unique up to degeneracy if any two adversarial Bayes classifiers are equivalent up to degeneracy.

When the measure

is absolutely continuous with respect to Lebesgue measure, then equivalence up to degeneracy is an equivalence relation [Reference Frank15, Theorem 3.3]. The central result of this paper relates the consistency of convex losses to the uniqueness of the adversarial Bayes classifier.

Theorem 2.

Assume that

![]() $\mathbb P$

is absolutely continuous with respect to Lebesgue measure and let

$\mathbb P$

is absolutely continuous with respect to Lebesgue measure and let

![]() $\phi$

be a loss with

$\phi$

be a loss with

![]() $C_\phi ^*(1/2)=\phi (0)$

. Then

$C_\phi ^*(1/2)=\phi (0)$

. Then

![]() $\phi$

is adversarially consistent for the distribution

$\phi$

is adversarially consistent for the distribution

![]() ${\mathbb P}_0$

,

${\mathbb P}_0$

,

![]() ${\mathbb P}_1$

iff the adversarial Bayes classifier is unique up to degeneracy.

${\mathbb P}_1$

iff the adversarial Bayes classifier is unique up to degeneracy.

Prior results of Frank [Reference Frank15] provide the tools for verifying when the adversarial Bayes classifier is unique up to degeneracy for a wide class of one dimensional distributions. Below we highlight two interesting examples. In the examples below, the function

![]() $p_1$

will represent the density of

$p_1$

will represent the density of

![]() ${\mathbb P}_1$

and the function

${\mathbb P}_1$

and the function

![]() $p_0$

will represent the density of

$p_0$

will represent the density of

![]() ${\mathbb P}_0$

.

${\mathbb P}_0$

.

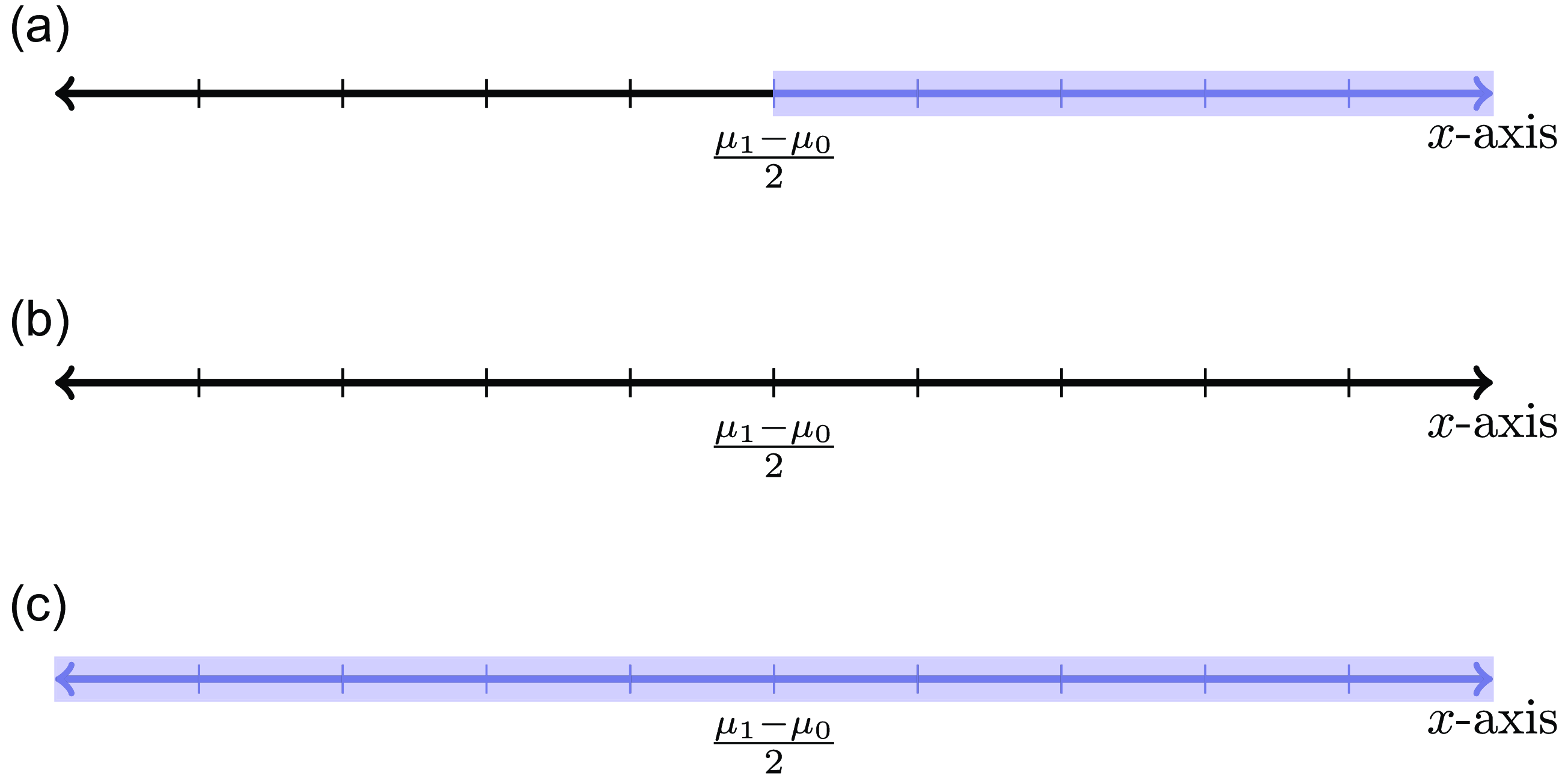

Figure 2. The adversarial Bayes classifier for two gaussians with equal variances and differing means. We assume in this figure that

![]() $\mu _1\gt \mu _0$

. The shaded blue area depicts the region inside the adversarial Bayes classifier. Figure 2a depicts an adversarial Bayes when

$\mu _1\gt \mu _0$

. The shaded blue area depicts the region inside the adversarial Bayes classifier. Figure 2a depicts an adversarial Bayes when

![]() $\epsilon \leq (\mu _1-\mu _0)/2$

and Figure 2b and 2c depict the adversarial Bayes classifier when

$\epsilon \leq (\mu _1-\mu _0)/2$

and Figure 2b and 2c depict the adversarial Bayes classifier when

![]() $\epsilon \geq (\mu _1-\mu _0)/2$

. (See [Reference Frank15, Example 4.1] for a justification of these illustrations.) the adversarial Bayes classifiers in Figure 2b and 2c are not equivalent up to degeneracy.

$\epsilon \geq (\mu _1-\mu _0)/2$

. (See [Reference Frank15, Example 4.1] for a justification of these illustrations.) the adversarial Bayes classifiers in Figure 2b and 2c are not equivalent up to degeneracy.

-

• Consider mean zero gaussians with different variances:

$p_0(x)=\frac 1 {2\sqrt {2\pi } \sigma _0}e^{-x^2/2\sigma _0^2}$

and

$p_0(x)=\frac 1 {2\sqrt {2\pi } \sigma _0}e^{-x^2/2\sigma _0^2}$

and

$p_1(x)=\frac 1 {2\sqrt {2\pi } \sigma _1}e^{-x^2/2\sigma _1^2}$

. The adversarial Bayes classifier is unique up to degeneracy for all

$p_1(x)=\frac 1 {2\sqrt {2\pi } \sigma _1}e^{-x^2/2\sigma _1^2}$

. The adversarial Bayes classifier is unique up to degeneracy for all

$\epsilon$

[Reference Frank15, Example 4.2].

$\epsilon$

[Reference Frank15, Example 4.2]. -

• Consider gaussians with variance

$\sigma$

and means

$\sigma$

and means

$\mu _0$

and

$\mu _0$

and

$\mu _1$

:

$\mu _1$

:

$p_0(x)=\frac 1 {\sqrt {2\pi } \sigma }e^{-(x-\mu _0)^2/2\sigma ^2}$

and

$p_0(x)=\frac 1 {\sqrt {2\pi } \sigma }e^{-(x-\mu _0)^2/2\sigma ^2}$

and

$p_1(x)=\frac 1 {\sqrt {2\pi } \sigma }e^{-(x-\mu _1)^2/2\sigma ^2}$

. Then the adversarial Bayes classifier is unique up to degeneracy iff

$p_1(x)=\frac 1 {\sqrt {2\pi } \sigma }e^{-(x-\mu _1)^2/2\sigma ^2}$

. Then the adversarial Bayes classifier is unique up to degeneracy iff

$\epsilon \lt |\mu _1-\mu _0|/2$

[Reference Frank15, Example 4.1]. See Figure 2 for an illustration of the adversarial Bayes classifiers for this distribution.

$\epsilon \lt |\mu _1-\mu _0|/2$

[Reference Frank15, Example 4.1]. See Figure 2 for an illustration of the adversarial Bayes classifiers for this distribution.

Theorem2 implies that a convex loss is always adversarially consistent for the first gaussian mixture above. Furthermore, a convex loss is adversarially consistent for the second gaussian mixture when the perturbation radius

![]() $\epsilon$

is small compared to the differences between the means. However, Frank [Reference Frank15, Example 4.5] provides an example of a distribution for which the adversarial Bayes classifier is not unique up to degeneracy for all

$\epsilon$

is small compared to the differences between the means. However, Frank [Reference Frank15, Example 4.5] provides an example of a distribution for which the adversarial Bayes classifier is not unique up to degeneracy for all

![]() $\epsilon \gt 0$

, even though the Bayes classifier is unique. At the same time, one would hope that if the Bayes classifier is unique and

$\epsilon \gt 0$

, even though the Bayes classifier is unique. At the same time, one would hope that if the Bayes classifier is unique and

![]() ${\mathbb P}_0$

,

${\mathbb P}_0$

,

![]() ${\mathbb P}_1$

are sufficiently regular, then the adversarial Bayes classifier would be unique up to degeneracy for sufficiently small

${\mathbb P}_1$

are sufficiently regular, then the adversarial Bayes classifier would be unique up to degeneracy for sufficiently small

![]() $\epsilon$

. In general, understanding when the adversarial Bayes classifier is unique up to degeneracy for well-behaved distributions is an open problem.

$\epsilon$

. In general, understanding when the adversarial Bayes classifier is unique up to degeneracy for well-behaved distributions is an open problem.

The examples above rely on the techniques of [Reference Frank15] for calculating the equivalence classes under uniqueness up to degeneracy. Frank [Reference Frank15] proves that in one dimension, if

![]() $\mathbb P$

is absolutely continuous with respect to Lebesgue measure, every adversarial Bayes classifier is equivalent up to degeneracy to an adversarial Bayes classifier whose boundary points are strictly more than

$\mathbb P$

is absolutely continuous with respect to Lebesgue measure, every adversarial Bayes classifier is equivalent up to degeneracy to an adversarial Bayes classifier whose boundary points are strictly more than

![]() $2\epsilon$

apart [Reference Frank15, Theorem 3.5]. Therefore, to find all adversarial Bayes classifiers under equivalence under degeneracy, it suffices to consider all sets whose boundary points satisfy the first order necessary conditions obtained by differentiating the adversarial classification risk of a set

$2\epsilon$

apart [Reference Frank15, Theorem 3.5]. Therefore, to find all adversarial Bayes classifiers under equivalence under degeneracy, it suffices to consider all sets whose boundary points satisfy the first order necessary conditions obtained by differentiating the adversarial classification risk of a set

![]() $A$

with respect to its boundary points [Reference Frank15, Theorem 3.7]. The corresponding statement in higher dimensions is false – there exist distributions for which no adversarial Bayes classifier has enough regularity to allow for an equivalent statement. For instance, Bungert et al. [Reference Bungert, Trillos and Murray10] demonstrate a distribution for which there is no adversarial Bayes classifier with two-sided tangent balls at all points in the boundary. Developing a general method for calculating these equivalence classes in dimensions higher than one remains an open problem.

$A$

with respect to its boundary points [Reference Frank15, Theorem 3.7]. The corresponding statement in higher dimensions is false – there exist distributions for which no adversarial Bayes classifier has enough regularity to allow for an equivalent statement. For instance, Bungert et al. [Reference Bungert, Trillos and Murray10] demonstrate a distribution for which there is no adversarial Bayes classifier with two-sided tangent balls at all points in the boundary. Developing a general method for calculating these equivalence classes in dimensions higher than one remains an open problem.

Proposition2 in Section 6 presents a condition under which one can conclude consistency without the absolute continuity assumption. This result proves consistency whenever the optimal adversarial classification risk is zero, see the discussion after Proposition2 for details. Consequently, if

![]() $\mathrm {supp} {\mathbb P}_0$

and

$\mathrm {supp} {\mathbb P}_0$

and

![]() $\mathrm {supp} {\mathbb P}_1$

are separated by more than

$\mathrm {supp} {\mathbb P}_1$

are separated by more than

![]() $2\epsilon$

, then consistent losses are always adversarially consistent for such distributions. On the other hand, our analysis of the reverse direction of Theorem2 requires the absolute continuity assumption. Using Proposition2 to further understand consistency is an open question.

$2\epsilon$

, then consistent losses are always adversarially consistent for such distributions. On the other hand, our analysis of the reverse direction of Theorem2 requires the absolute continuity assumption. Using Proposition2 to further understand consistency is an open question.

5. Preliminary results

5.1 Minimizers of standard risks

Minimizers to the classification risk can be expressed in terms of the measure

![]() $\mathbb P$

and the function

$\mathbb P$

and the function

![]() $\eta =d{\mathbb P}_1/d{\mathbb P}$

. The risk

$\eta =d{\mathbb P}_1/d{\mathbb P}$

. The risk

![]() $R$

in terms of these quantities is

$R$

in terms of these quantities is

and

![]() $\inf _A R(A)=\int C^*(\eta )d{\mathbb P}$

where the functions

$\inf _A R(A)=\int C^*(\eta )d{\mathbb P}$

where the functions

![]() $C\,:\,[0,1]\times \{0,1\}\to \mathbb {R}$

and

$C\,:\,[0,1]\times \{0,1\}\to \mathbb {R}$

and

![]() $C^*\,:\,[0,1]\to \mathbb {R}$

are defined by

$C^*\,:\,[0,1]\to \mathbb {R}$

are defined by

Thus, if

![]() $A$

is a minimizer of

$A$

is a minimizer of

![]() $R$

, then

$R$

, then

![]() ${\mathbf {1}}_A$

must minimize the function

${\mathbf {1}}_A$

must minimize the function

![]() $C(\eta, \cdot )$

$C(\eta, \cdot )$

![]() $\mathbb P$

-almost everywhere. Consequently, the sets

$\mathbb P$

-almost everywhere. Consequently, the sets

are both Bayes classifiers.

Similarly, one can compute the infimum of

![]() $R_\phi$

by expressing the risk in terms of the quantities

$R_\phi$

by expressing the risk in terms of the quantities

![]() $\mathbb P$

and

$\mathbb P$

and

![]() $\eta$

:

$\eta$

:

and

![]() $\inf _f R_\phi (f)=\int C_\phi ^*(\eta ({\mathbf x}))d{\mathbb P}({\mathbf x})$

where the functions

$\inf _f R_\phi (f)=\int C_\phi ^*(\eta ({\mathbf x}))d{\mathbb P}({\mathbf x})$

where the functions

![]() $C_\phi (\eta, \alpha )$

and

$C_\phi (\eta, \alpha )$

and

![]() $C_\phi ^*(\eta )$

are defined by

$C_\phi ^*(\eta )$

are defined by

for

![]() $\eta \in [0,1]$

. Thus, a minimizer

$\eta \in [0,1]$

. Thus, a minimizer

![]() $f$

of

$f$

of

![]() $R_\phi$

must minimize

$R_\phi$

must minimize

![]() $C_\phi (\eta ({\mathbf x}),\cdot )$

almost everywhere according to the probability measure

$C_\phi (\eta ({\mathbf x}),\cdot )$

almost everywhere according to the probability measure

![]() $\mathbb P$

. Because

$\mathbb P$

. Because

![]() $\phi$

is continuous, the function

$\phi$

is continuous, the function

maps each

![]() $\eta$

to the smallest minimizer of

$\eta$

to the smallest minimizer of

![]() $C_\phi (\eta, \cdot )$

. Consequently, the function

$C_\phi (\eta, \cdot )$

. Consequently, the function

minimizes

![]() $C_\phi (\eta ({\mathbf x}),\cdot )$

at each point

$C_\phi (\eta ({\mathbf x}),\cdot )$

at each point

![]() $\mathbf x$

. Next, we will argue this function is measurable, and therefore is a minimizer of the risk

$\mathbf x$

. Next, we will argue this function is measurable, and therefore is a minimizer of the risk

![]() $R_\phi$

.

$R_\phi$

.

Lemma 1.

The function

![]() $\alpha _\phi \,:\,[0,1]\to \overline {\mathbb {R}}$

that maps

$\alpha _\phi \,:\,[0,1]\to \overline {\mathbb {R}}$

that maps

![]() $\eta$

to the smallest minimizer of

$\eta$

to the smallest minimizer of

![]() $C_\phi (\eta, \cdot )$

is non-decreasing.

$C_\phi (\eta, \cdot )$

is non-decreasing.

The proof of this result is presented below Lemma7 in Appendix C. Because

![]() $\alpha _\phi$

is monotonic, the composition in (9) is always measurable, and thus this function is a minimizer of

$\alpha _\phi$

is monotonic, the composition in (9) is always measurable, and thus this function is a minimizer of

![]() $R_\phi$

. Allowing for minimizers in extended real numbers

$R_\phi$

. Allowing for minimizers in extended real numbers

![]() $\overline {\mathbb {R}}=\{-\infty, +\infty \}\cup \mathbb {R}$

is necessary for certain losses – for instance when

$\overline {\mathbb {R}}=\{-\infty, +\infty \}\cup \mathbb {R}$

is necessary for certain losses – for instance when

![]() $\phi$

is the exponential loss, then

$\phi$

is the exponential loss, then

![]() $C_\phi (1,\alpha )= e^{-\alpha }$

does not assume its infimum on

$C_\phi (1,\alpha )= e^{-\alpha }$

does not assume its infimum on

![]() $\mathbb {R}$

.

$\mathbb {R}$

.

5.2 Dual problems for the adversarial risks

The proof Theorem2 relies on a dual formulation of the adversarial classification problem involving the Wasserstein-

![]() $\infty$

metric. Informally, a measure

$\infty$

metric. Informally, a measure

![]() ${\mathbb Q}^{\prime}$

is within

${\mathbb Q}^{\prime}$

is within

![]() $\epsilon$

of

$\epsilon$

of

![]() $\mathbb Q$

in the Wasserstein-

$\mathbb Q$

in the Wasserstein-

![]() $\infty$

metric if one can produce

$\infty$

metric if one can produce

![]() ${\mathbb Q}^{\prime}$

by perturbing each point in

${\mathbb Q}^{\prime}$

by perturbing each point in

![]() $\mathbb {R}^d$

by at most

$\mathbb {R}^d$

by at most

![]() $\epsilon$

under the measure

$\epsilon$

under the measure

![]() $\mathbb Q$

. The formal definition of the Wasserstein-

$\mathbb Q$

. The formal definition of the Wasserstein-

![]() $\infty$

metric involves couplings between probability measures: a coupling between two Borel measures

$\infty$

metric involves couplings between probability measures: a coupling between two Borel measures

![]() $\mathbb Q$

and

$\mathbb Q$

and

![]() ${\mathbb Q}^{\prime}$

with

${\mathbb Q}^{\prime}$

with

![]() ${\mathbb Q}(\mathbb {R}^d)={\mathbb Q}^{\prime}(\mathbb {R}^d)$

is a measure

${\mathbb Q}(\mathbb {R}^d)={\mathbb Q}^{\prime}(\mathbb {R}^d)$

is a measure

![]() $\gamma$

on

$\gamma$

on

![]() $\mathbb {R}^d\times \mathbb {R}^d$

with marginals

$\mathbb {R}^d\times \mathbb {R}^d$

with marginals

![]() $\mathbb Q$

and

$\mathbb Q$

and

![]() ${\mathbb Q}^{\prime}$

:

${\mathbb Q}^{\prime}$

:

![]() $\gamma (A\times \mathbb {R}^d)={\mathbb Q}(A)$

and

$\gamma (A\times \mathbb {R}^d)={\mathbb Q}(A)$

and

![]() $\gamma (\mathbb {R}^d\times A)={\mathbb Q}^{\prime}(A)$

for any Borel set

$\gamma (\mathbb {R}^d\times A)={\mathbb Q}^{\prime}(A)$

for any Borel set

![]() $A$

. The set of all such couplings is denoted

$A$

. The set of all such couplings is denoted

![]() $\Pi ({\mathbb Q},{\mathbb Q}^{\prime})$

. The Wasserstein-

$\Pi ({\mathbb Q},{\mathbb Q}^{\prime})$

. The Wasserstein-

![]() $\infty$

distance between the two measures is then

$\infty$

distance between the two measures is then

Theorem 2.6 of [Reference Jylhä19] proves that this infimum is always assumed. Equivalently,

![]() $W_\infty ({\mathbb Q},{\mathbb Q}^{\prime})\leq \epsilon$

iff there is a coupling between

$W_\infty ({\mathbb Q},{\mathbb Q}^{\prime})\leq \epsilon$

iff there is a coupling between

![]() $\mathbb Q$

and

$\mathbb Q$

and

![]() ${\mathbb Q}^{\prime}$

supported on

${\mathbb Q}^{\prime}$

supported on

Let

![]() ${{\mathcal {B}}^\infty _{\epsilon }}({\mathbb Q})=\{{\mathbb Q}^{\prime}\,:\, W_\infty ({\mathbb Q},{\mathbb Q}^{\prime})\leq \epsilon \}$

be the set of measures within

${{\mathcal {B}}^\infty _{\epsilon }}({\mathbb Q})=\{{\mathbb Q}^{\prime}\,:\, W_\infty ({\mathbb Q},{\mathbb Q}^{\prime})\leq \epsilon \}$

be the set of measures within

![]() $\epsilon$

of

$\epsilon$

of

![]() $\mathbb Q$

in the

$\mathbb Q$

in the

![]() $W_\infty$

metric. The minimax relations from prior work leverage a relationship between the Wasserstein-

$W_\infty$

metric. The minimax relations from prior work leverage a relationship between the Wasserstein-

![]() $\infty$

metric and the integral of the supremum function over an

$\infty$

metric and the integral of the supremum function over an

![]() $\epsilon$

-ball.

$\epsilon$

-ball.

Lemma 2.

Let

![]() $E$

be a Borel set. Then

$E$

be a Borel set. Then

Proof. Let

![]() ${\mathbb Q}^{\prime}$

be a measure in

${\mathbb Q}^{\prime}$

be a measure in

![]() ${{\mathcal {B}}^\infty _{\epsilon }} ({\mathbb Q})$

, and let

${{\mathcal {B}}^\infty _{\epsilon }} ({\mathbb Q})$

, and let

![]() $\gamma ^*$

be a coupling between these two measures supported on

$\gamma ^*$

be a coupling between these two measures supported on

![]() $\Delta _\epsilon$

. Then if

$\Delta _\epsilon$

. Then if

![]() $({\mathbf x},{\mathbf x}^{\prime})\in \Delta _\epsilon$

, then

$({\mathbf x},{\mathbf x}^{\prime})\in \Delta _\epsilon$

, then

![]() ${\mathbf x}^{\prime}\in \overline {B_\epsilon ({\mathbf x})}$

and thus

${\mathbf x}^{\prime}\in \overline {B_\epsilon ({\mathbf x})}$

and thus

![]() $S_\epsilon ({\mathbf {1}}_E)({\mathbf x})\geq {\mathbf {1}}_E({\mathbf x}^{\prime})$

$S_\epsilon ({\mathbf {1}}_E)({\mathbf x})\geq {\mathbf {1}}_E({\mathbf x}^{\prime})$

![]() $\gamma ^*$

-a.e. Consequently,

$\gamma ^*$

-a.e. Consequently,

Taking a supremum over all

![]() ${\mathbb Q}^{\prime}\in {{\mathcal {B}}^\infty _{\epsilon }}({\mathbb Q})$

proves the result.

${\mathbb Q}^{\prime}\in {{\mathcal {B}}^\infty _{\epsilon }}({\mathbb Q})$

proves the result.

Lemma2 implies:

\begin{align*} \inf _f R^\epsilon (f)\geq \inf _f\sup _{\substack {{\mathbb P}_1^{\prime}\in {\mathcal {B}}_\epsilon ({\mathbb P}_1)\\ {\mathbb P}_0^{\prime}\in {\mathcal {B}}_\epsilon ({\mathbb P}_0) }}\int {\mathbf {1}}_{f\leq 0} d{\mathbb P}_1^{\prime}+\int {\mathbf {1}}_{f\gt 0} d{\mathbb P}_0^{\prime}. \end{align*}

\begin{align*} \inf _f R^\epsilon (f)\geq \inf _f\sup _{\substack {{\mathbb P}_1^{\prime}\in {\mathcal {B}}_\epsilon ({\mathbb P}_1)\\ {\mathbb P}_0^{\prime}\in {\mathcal {B}}_\epsilon ({\mathbb P}_0) }}\int {\mathbf {1}}_{f\leq 0} d{\mathbb P}_1^{\prime}+\int {\mathbf {1}}_{f\gt 0} d{\mathbb P}_0^{\prime}. \end{align*}

Does equality hold and can one swap the infimum and the supremum? Frank and Niles-Weed [Reference Frank and Niles-Weed16], Pydi and Jog [Reference Pydi and Jog28] answer this question in the affirmative:

Theorem 3.

Let

![]() ${\mathbb P}_0$

,

${\mathbb P}_0$

,

![]() ${\mathbb P}_1$

be finite Borel measures. Define

${\mathbb P}_1$

be finite Borel measures. Define

where the function

![]() $C^*$

is defined in (

4

). Then

$C^*$

is defined in (

4

). Then

and furthermore equality is attained for some

![]() $f^*$

,

$f^*$

,

![]() ${\mathbb P}_0^*$

,

${\mathbb P}_0^*$

,

![]() ${\mathbb P}_1^*$

.

${\mathbb P}_1^*$

.

See Theorem1 of [Reference Frank and Niles-Weed16] for a proof. Theorems6, 8, and 9 of [Reference Frank and Niles-Weed17] show an analogous minimax theorem for surrogate risks.

Theorem 4.

Let

![]() ${\mathbb P}_0$

,

${\mathbb P}_0$

,

![]() ${\mathbb P}_1$

be finite Borel measures. Define

${\mathbb P}_1$

be finite Borel measures. Define

with the function

![]() $C_\phi ^*$

is defined in (

7

). Then

$C_\phi ^*$

is defined in (

7

). Then

and furthermore equality is attained for some

![]() $f^*$

,

$f^*$

,

![]() ${\mathbb P}_0^*$

,

${\mathbb P}_0^*$

,

![]() ${\mathbb P}_1^*$

.

${\mathbb P}_1^*$

.

Just like

![]() $R_\phi$

, the risk

$R_\phi$

, the risk

![]() $R_\phi ^\epsilon$

may not have an

$R_\phi ^\epsilon$

may not have an

![]() $\mathbb {R}$

-valued minimizer. However, Lemma8 of [Reference Frank and Niles-Weed16] states that

$\mathbb {R}$

-valued minimizer. However, Lemma8 of [Reference Frank and Niles-Weed16] states that

5.3 Minimizers of adversarial risks

A formula analogous to (9) defines minimizers to adversarial risks. Let

![]() $I_\epsilon$

denote the infimum of a function over an

$I_\epsilon$

denote the infimum of a function over an

![]() $\epsilon$

ball:

$\epsilon$

ball:

Lemma 24 of [Reference Frank and Niles-Weed17] and Theorem9 of [Reference Frank and Niles-Weed17] prove the following result:

Theorem 5.

There exists a function

![]() $\hat \eta \,:\, \mathbb {R}^d\to [0,1]$

and measures

$\hat \eta \,:\, \mathbb {R}^d\to [0,1]$

and measures

![]() ${\mathbb P}_0^*\in {{\mathcal {B}}^\infty _{\epsilon }} ({\mathbb P}_0)$

,

${\mathbb P}_0^*\in {{\mathcal {B}}^\infty _{\epsilon }} ({\mathbb P}_0)$

,

![]() ${\mathbb P}_1^*\in {{\mathcal {B}}^\infty _{\epsilon }} ({\mathbb P}_1)$

for which

${\mathbb P}_1^*\in {{\mathcal {B}}^\infty _{\epsilon }} ({\mathbb P}_1)$

for which

I)

$\hat \eta =\eta ^*$

$\hat \eta =\eta ^*$

${\mathbb P}^*$

-a.e., where

${\mathbb P}^*$

-a.e., where

${\mathbb P}^*={\mathbb P}_0^*+{\mathbb P}_1^*$

and

${\mathbb P}^*={\mathbb P}_0^*+{\mathbb P}_1^*$

and

$\eta ^*=d{\mathbb P}_1^*/d{\mathbb P}^*$

$\eta ^*=d{\mathbb P}_1^*/d{\mathbb P}^*$

-

II)

$I_\epsilon (\hat \eta )({\mathbf x})=\hat \eta ({\mathbf x}^{\prime})$

$I_\epsilon (\hat \eta )({\mathbf x})=\hat \eta ({\mathbf x}^{\prime})$

$\gamma _0^*$

-a.e. and

$\gamma _0^*$

-a.e. and

$S_\epsilon (\hat \eta )({\mathbf x})=\hat \eta ({\mathbf x}^{\prime})$

$S_\epsilon (\hat \eta )({\mathbf x})=\hat \eta ({\mathbf x}^{\prime})$

$\gamma _1^*$

-a.e., where

$\gamma _1^*$

-a.e., where

$\gamma _0^*$

,

$\gamma _0^*$

,

$\gamma _1^*$

are couplings between

$\gamma _1^*$

are couplings between

${\mathbb P}_0$

,

${\mathbb P}_0$

,

${\mathbb P}_0^*$

and

${\mathbb P}_0^*$

and

${\mathbb P}_1$

,

${\mathbb P}_1$

,

${\mathbb P}_1^*$

supported on

${\mathbb P}_1^*$

supported on

$\Delta _\epsilon$

.

$\Delta _\epsilon$

. -

III) The function

$\alpha _\phi (\hat \eta ({\mathbf x}))$

is a minimizer of

$\alpha _\phi (\hat \eta ({\mathbf x}))$

is a minimizer of

$R_\phi ^\epsilon$

for any loss

$R_\phi ^\epsilon$

for any loss

$\phi$

, where

$\phi$

, where

$\alpha _\phi$

is the function defined in (

8

).

$\alpha _\phi$

is the function defined in (

8

).

The function

![]() $\hat \eta$

can be viewed as the conditional probability of label

$\hat \eta$

can be viewed as the conditional probability of label

![]() $+1$

under an ‘optimal’ adversarial attack [Reference Frank and Niles-Weed17]. Just as in the standard learning scenario, the function

$+1$

under an ‘optimal’ adversarial attack [Reference Frank and Niles-Weed17]. Just as in the standard learning scenario, the function

![]() $\alpha (\hat \eta ({\mathbf x}))$

may be

$\alpha (\hat \eta ({\mathbf x}))$

may be

![]() $\overline {\mathbb {R}}$

-valued. Item 3 is actually a consequence of Item 1 and Item 2: Item 1 and Item 2 imply that

$\overline {\mathbb {R}}$

-valued. Item 3 is actually a consequence of Item 1 and Item 2: Item 1 and Item 2 imply that

![]() $R_\phi ^\epsilon (\alpha _\phi (\hat \eta ))={\bar {R}_\phi }({\mathbb P}_0^*,{\mathbb P}_1^*)$

and Theorem4 then implies that

$R_\phi ^\epsilon (\alpha _\phi (\hat \eta ))={\bar {R}_\phi }({\mathbb P}_0^*,{\mathbb P}_1^*)$

and Theorem4 then implies that

![]() $\alpha _\phi (\hat \eta )$

minimizes

$\alpha _\phi (\hat \eta )$

minimizes

![]() $R_\phi ^\epsilon$

and

$R_\phi ^\epsilon$

and

![]() ${\mathbb P}_0^*$

,

${\mathbb P}_0^*$

,

![]() ${\mathbb P}_1^*$

maximize

${\mathbb P}_1^*$

maximize

![]() $\bar {R}_\phi$

. (A similar argument is provided later in this paper in Lemma6 of Appendix D.1.) Furthermore, the relation

$\bar {R}_\phi$

. (A similar argument is provided later in this paper in Lemma6 of Appendix D.1.) Furthermore, the relation

![]() $R_\phi ^\epsilon (\alpha _\phi (\hat \eta ))={\bar {R}_\phi }({\mathbb P}_0^*,{\mathbb P}_1^*)$

also implies

$R_\phi ^\epsilon (\alpha _\phi (\hat \eta ))={\bar {R}_\phi }({\mathbb P}_0^*,{\mathbb P}_1^*)$

also implies

Lemma 3.

The

![]() ${\mathbb P}_0^*$

,

${\mathbb P}_0^*$

,

![]() ${\mathbb P}_1^*$

of Theorem

5

maximize

${\mathbb P}_1^*$

of Theorem

5

maximize

![]() $\bar {R}_\phi$

over

$\bar {R}_\phi$

over

![]() ${{\mathcal {B}}^\infty _{\epsilon }}({\mathbb P}_0)\times {{\mathcal {B}}^\infty _{\epsilon }}({\mathbb P}_1)$

for every

${{\mathcal {B}}^\infty _{\epsilon }}({\mathbb P}_0)\times {{\mathcal {B}}^\infty _{\epsilon }}({\mathbb P}_1)$

for every

![]() $\phi$

.

$\phi$

.

We emphasize that a formal proof of Theorems5 and 3 is not included in this paper, and refer to Lemma 26 and Theorem9 of [Reference Frank and Niles-Weed17] for full arguments.

Next, we derive some further results about the function

![]() $\hat \eta$

. Recall that Bayes classifiers can be constructed by thesholding the conditional probability

$\hat \eta$

. Recall that Bayes classifiers can be constructed by thesholding the conditional probability

![]() $\eta$

at

$\eta$

at

![]() $1/2$

, see Equation 5). The function

$1/2$

, see Equation 5). The function

![]() $\hat \eta$

plays an analogous role for adversarial learning.

$\hat \eta$

plays an analogous role for adversarial learning.

Theorem 6.

Let

![]() $\hat \eta$

be the function described by Theorem

5

. Then the sets

$\hat \eta$

be the function described by Theorem

5

. Then the sets

![]() $\{\hat \eta \gt 1/2\}$

and

$\{\hat \eta \gt 1/2\}$

and

![]() $\{\hat \eta \geq 1/2\}$

are adversarial Bayes classifiers. Furthermore, any adversarial Bayes classifier

$\{\hat \eta \geq 1/2\}$

are adversarial Bayes classifiers. Furthermore, any adversarial Bayes classifier

![]() $A$

satisfies

$A$

satisfies

and

See Appendix A for a formal proof; these properties follow direction from Item 1 and Item 2 of Theorem5. Equations (11) and (12) imply that the sets

![]() $\{\hat \eta \gt 1/2\}$

and

$\{\hat \eta \gt 1/2\}$

and

![]() $\{\hat \eta \geq 1/2\}$

can be viewed as ‘minimal’ and ‘maximal’ adversarial Bayes classifiers.

$\{\hat \eta \geq 1/2\}$

can be viewed as ‘minimal’ and ‘maximal’ adversarial Bayes classifiers.

Theorem6 is proved in Appendix A– Item 1 and Item 2 imply that

![]() $ R^\epsilon (\{\hat \eta \gt 1/2\})=\bar R({\mathbb P}_0^*,{\mathbb P}_1^*)=R^\epsilon (\hat \eta \geq 1/2\})$

and consequently Theorem3 implies that

$ R^\epsilon (\{\hat \eta \gt 1/2\})=\bar R({\mathbb P}_0^*,{\mathbb P}_1^*)=R^\epsilon (\hat \eta \geq 1/2\})$

and consequently Theorem3 implies that

![]() $\{\hat \eta \gt 1/2\}$

,

$\{\hat \eta \gt 1/2\}$

,

![]() $\{\hat \eta \geq 1/2\}$

minimize

$\{\hat \eta \geq 1/2\}$

minimize

![]() $R^\epsilon$

and

$R^\epsilon$

and

![]() ${\mathbb P}_0^*$

,

${\mathbb P}_0^*$

,

![]() ${\mathbb P}_1^*$

maximize

${\mathbb P}_1^*$

maximize

![]() $\bar R$

. This proof technique is analogous to the approach employed by [Reference Frank and Niles-Weed17] to establish Theorem5. Lastly, uniqueness up to degeneracy can be characterized in terms of these

$\bar R$

. This proof technique is analogous to the approach employed by [Reference Frank and Niles-Weed17] to establish Theorem5. Lastly, uniqueness up to degeneracy can be characterized in terms of these

![]() ${\mathbb P}_0^*$

,

${\mathbb P}_0^*$

,

![]() ${\mathbb P}_1^*$

.

${\mathbb P}_1^*$

.

Theorem 7.

Assume that

![]() $\mathbb P$

is absolutely continuous with respect to Lebesgue measure. Then the following are equivalent:

$\mathbb P$

is absolutely continuous with respect to Lebesgue measure. Then the following are equivalent:

A) The adversarial Bayes classifier is unique up to degeneracy

B)

${\mathbb P}^*(\eta ^*=1/2)=0$

, where

${\mathbb P}^*(\eta ^*=1/2)=0$

, where

${\mathbb P}^*={\mathbb P}_0^*+{\mathbb P}_1^*$

and

${\mathbb P}^*={\mathbb P}_0^*+{\mathbb P}_1^*$

and

$\eta ^*=d{\mathbb P}_1^*/d{\mathbb P}^*$

for the measures

$\eta ^*=d{\mathbb P}_1^*/d{\mathbb P}^*$

for the measures

${\mathbb P}_0^*,{\mathbb P}_1^*$

of Theorem

5

.

${\mathbb P}_0^*,{\mathbb P}_1^*$

of Theorem

5

.

See Appendix B for a proof of Theorem7. In relation to prior work – the proof of [Reference Frank15, Theorem 3.4] shows Theorem7 but a full proof of Theorem7 is included in this paper for clarity as [Reference Frank15] did not discuss the role of the function

![]() $\hat \eta$

.

$\hat \eta$

.

6. Uniqueness up to degeneracy implies consistency

Before presenting the full proof of consistency, we provide an overview of the strategy of this argument. First, a minimizing sequence of

![]() $R_\phi ^\epsilon$

must satisfy the approximate complementary slackness conditions derived in [Reference Frank and Niles-Weed16, Proposition 4].

$R_\phi ^\epsilon$

must satisfy the approximate complementary slackness conditions derived in [Reference Frank and Niles-Weed16, Proposition 4].

Proposition 1.

Assume that the measures

![]() ${\mathbb P}_0^*\in {{\mathcal {B}}^\infty _{\epsilon }}({\mathbb P}_0)$

,

${\mathbb P}_0^*\in {{\mathcal {B}}^\infty _{\epsilon }}({\mathbb P}_0)$

,

![]() ${\mathbb P}_1^*\in {{\mathcal {B}}^\infty _{\epsilon }}({\mathbb P}_1)$

maximize

${\mathbb P}_1^*\in {{\mathcal {B}}^\infty _{\epsilon }}({\mathbb P}_1)$

maximize

![]() $\bar {R}_\phi$

. Then any minimizing sequence

$\bar {R}_\phi$

. Then any minimizing sequence

![]() $f_n$

of

$f_n$

of

![]() $R_\phi ^\epsilon$

must satisfyr

$R_\phi ^\epsilon$

must satisfyr

where

![]() ${\mathbb P}^*={\mathbb P}_0^*+{\mathbb P}_1^*$

and

${\mathbb P}^*={\mathbb P}_0^*+{\mathbb P}_1^*$

and

![]() $\eta ^*=d{\mathbb P}_1^*/d{\mathbb P}^*$

.

$\eta ^*=d{\mathbb P}_1^*/d{\mathbb P}^*$

.

We will show that when

![]() ${\mathbb P}^*(\eta ^*=1/2)=0$

, every sequence of functions satisfying (13) and (14) must minimize

${\mathbb P}^*(\eta ^*=1/2)=0$

, every sequence of functions satisfying (13) and (14) must minimize

![]() $R^\epsilon$

. Specifically, we will prove that every minimizing sequence

$R^\epsilon$

. Specifically, we will prove that every minimizing sequence

![]() $f_n$

of

$f_n$

of

![]() $R_\phi ^\epsilon$

must satisfy

$R_\phi ^\epsilon$

must satisfy

for the measures

![]() ${\mathbb P}_0^*$

,

${\mathbb P}_0^*$

,

![]() ${\mathbb P}_1^*$

in Theorem5. Consequently,

${\mathbb P}_1^*$

in Theorem5. Consequently,

![]() ${\mathbb P}^*(\eta ^*=1/2)=0$

would imply that

${\mathbb P}^*(\eta ^*=1/2)=0$

would imply that

![]() $\limsup _{n\to \infty } R^\epsilon (f_n)\leq \bar R({\mathbb P}_0^*,{\mathbb P}_1^*)$

and the strong duality relation in Theorem3 implies that

$\limsup _{n\to \infty } R^\epsilon (f_n)\leq \bar R({\mathbb P}_0^*,{\mathbb P}_1^*)$

and the strong duality relation in Theorem3 implies that

![]() $f_n$

must in fact be a minimizing sequence of

$f_n$

must in fact be a minimizing sequence of

![]() $R^\epsilon$

.

$R^\epsilon$

.

Next, we summarize the argument establishing Equation 15. We make several simplifying assumptions in the following discussion. First, we assume that the functions

![]() $\phi$

,

$\phi$

,

![]() $\alpha _\phi$

are strictly monotonic and that for each

$\alpha _\phi$

are strictly monotonic and that for each

![]() $\eta$

, there is a unique value of

$\eta$

, there is a unique value of

![]() $\alpha$

for which

$\alpha$

for which

![]() $\eta \phi (\alpha )+(1-\eta )\phi (-\alpha )=C_\phi ^*(\eta )$

. (For instance, the exponential loss

$\eta \phi (\alpha )+(1-\eta )\phi (-\alpha )=C_\phi ^*(\eta )$

. (For instance, the exponential loss

![]() $\phi (\alpha )=e^{-\alpha }$

satisfies these requirements.) Let

$\phi (\alpha )=e^{-\alpha }$

satisfies these requirements.) Let

![]() $\gamma _1^*$

be a coupling between

$\gamma _1^*$

be a coupling between

![]() ${\mathbb P}_1$

and

${\mathbb P}_1$

and

![]() ${\mathbb P}_1^*$

supported on

${\mathbb P}_1^*$

supported on

![]() $\Delta _\epsilon$

.

$\Delta _\epsilon$

.

Because

![]() $C_\phi (\eta ^*,f_n)\geq C_\phi ^*(\eta ^*)$

, the condition (13) implies that

$C_\phi (\eta ^*,f_n)\geq C_\phi ^*(\eta ^*)$

, the condition (13) implies that

![]() $C_\phi (\eta ^*,f_n)$

converges to

$C_\phi (\eta ^*,f_n)$

converges to

![]() $C_\phi ^*(\eta ^*)$

in

$C_\phi ^*(\eta ^*)$

in

![]() $L^1({\mathbb P}^*)$

, and the assumption that there is a single value of

$L^1({\mathbb P}^*)$

, and the assumption that there is a single value of

![]() $\alpha$

for which

$\alpha$

for which

![]() $\eta \phi (\alpha )+(1-\eta )\phi (-\alpha )=C_\phi ^*(\eta )$

implies that the function

$\eta \phi (\alpha )+(1-\eta )\phi (-\alpha )=C_\phi ^*(\eta )$

implies that the function

![]() $\phi (f_n({\mathbf x}^{\prime}))$

must converge to

$\phi (f_n({\mathbf x}^{\prime}))$

must converge to

![]() $\phi (\alpha _\phi (\eta ^*({\mathbf x}^{\prime}))$

in

$\phi (\alpha _\phi (\eta ^*({\mathbf x}^{\prime}))$

in

![]() $L^1({\mathbb P}_1^*)$

. Similarly, because Lemma2 states that

$L^1({\mathbb P}_1^*)$

. Similarly, because Lemma2 states that

![]() $S_\epsilon (\phi \circ f_n)({\mathbf x})\geq \phi \circ f_n({\mathbf x}^{\prime})$

$S_\epsilon (\phi \circ f_n)({\mathbf x})\geq \phi \circ f_n({\mathbf x}^{\prime})$

![]() $\gamma _1^*$

-a.e., Equation 14) implies that

$\gamma _1^*$

-a.e., Equation 14) implies that

![]() $S_\epsilon (\phi \circ f_n)({\mathbf x})-\phi \circ f_n({\mathbf x}^{\prime})$

converges to 0 in

$S_\epsilon (\phi \circ f_n)({\mathbf x})-\phi \circ f_n({\mathbf x}^{\prime})$

converges to 0 in

![]() $L^1(\gamma _1^*)$

. Consequently

$L^1(\gamma _1^*)$

. Consequently

![]() $S_\epsilon (\phi \circ f_n)({\mathbf x})$

must converge to

$S_\epsilon (\phi \circ f_n)({\mathbf x})$

must converge to

![]() $\phi (\alpha _\phi (\eta ^*({\mathbf x}^{\prime})))$

in

$\phi (\alpha _\phi (\eta ^*({\mathbf x}^{\prime})))$

in

![]() $L^1(\gamma _1^*)$

. As

$L^1(\gamma _1^*)$

. As

![]() $L^1$

convergence implies convergence in measure [Reference Folland14, Proposition 2.29], one can conclude that

$L^1$

convergence implies convergence in measure [Reference Folland14, Proposition 2.29], one can conclude that

for any

![]() $c\gt 0$

. The lower semi-continuity of

$c\gt 0$

. The lower semi-continuity of

![]() $\alpha \mapsto {\mathbf {1}}_{\alpha \leq 0}$

implies that

$\alpha \mapsto {\mathbf {1}}_{\alpha \leq 0}$

implies that

![]() $\int S_\epsilon ({\mathbf {1}}_{f_n\leq 0})d{\mathbb P}_1\leq \int {\mathbf {1}}_{S_\epsilon (\phi (f_n))({\mathbf x})\geq \phi (0)}d{\mathbb P}_1$

and furthermore (17) implies

$\int S_\epsilon ({\mathbf {1}}_{f_n\leq 0})d{\mathbb P}_1\leq \int {\mathbf {1}}_{S_\epsilon (\phi (f_n))({\mathbf x})\geq \phi (0)}d{\mathbb P}_1$

and furthermore (17) implies

Next, we will also assume that

![]() $\alpha _\phi ^{-1}$

is continuous and

$\alpha _\phi ^{-1}$

is continuous and

![]() $\alpha _\phi (1/2)=0$

. (The exponential loss satisfies these requirements as well.) Due to our assumptions on

$\alpha _\phi (1/2)=0$

. (The exponential loss satisfies these requirements as well.) Due to our assumptions on

![]() $\phi$

and

$\phi$

and

![]() $\alpha _\phi$

, the quantity

$\alpha _\phi$

, the quantity

![]() $\phi ^{-1}(\phi (0)-c)$

is strictly smaller than 0, and consequently,

$\phi ^{-1}(\phi (0)-c)$

is strictly smaller than 0, and consequently,

![]() $\alpha _\phi ^{-1}\circ \phi ^{-1}(\phi (0)-c)$

is strictly smalaler than

$\alpha _\phi ^{-1}\circ \phi ^{-1}(\phi (0)-c)$

is strictly smalaler than

![]() $1/2$

. However, if

$1/2$

. However, if

![]() $\alpha _\phi ^{-1}$

is continuous, one can choose

$\alpha _\phi ^{-1}$

is continuous, one can choose

![]() $c$

small enough so that

$c$

small enough so that

![]() ${\mathbb P}^*( |\eta -1/2|\lt 1/2- \alpha _\phi ^{-1}\circ \phi ^{-1}(\phi (0)-c))\lt \delta$

for any

${\mathbb P}^*( |\eta -1/2|\lt 1/2- \alpha _\phi ^{-1}\circ \phi ^{-1}(\phi (0)-c))\lt \delta$

for any

![]() $\delta \gt 0$

when

$\delta \gt 0$

when

![]() ${\mathbb P}^*(\eta ^*=1/2)=0$

. This choice of

${\mathbb P}^*(\eta ^*=1/2)=0$

. This choice of

![]() $c$

along with (18) proves (15).

$c$

along with (18) proves (15).

To avoid the prior assumptions on

![]() $\phi$

and

$\phi$

and

![]() $\alpha$

, we prove that when

$\alpha$

, we prove that when

![]() $\eta$

is bounded away from

$\eta$

is bounded away from

![]() $1/2$

and

$1/2$

and

![]() $\alpha$

is bounded away from the minimizers of

$\alpha$

is bounded away from the minimizers of

![]() $C_\phi (\eta, \cdot )$

, then

$C_\phi (\eta, \cdot )$

, then

![]() $C_\phi (\eta, \alpha )$

is bounded away from

$C_\phi (\eta, \alpha )$

is bounded away from

![]() $C_\phi ^*(\eta )$

.

$C_\phi ^*(\eta )$

.

Lemma 4.

Let

![]() $\phi$

be a consistent loss. For all

$\phi$

be a consistent loss. For all

![]() $r\gt 0$

, there is a constant

$r\gt 0$

, there is a constant

![]() $k_r\gt 0$

and an

$k_r\gt 0$

and an

![]() $\alpha _r\gt 0$

for which if

$\alpha _r\gt 0$

for which if

![]() $|\eta -1/2|\geq r$

and

$|\eta -1/2|\geq r$

and

![]() $\textrm {sign}(\eta -1/2) \alpha \leq \alpha _r$

then

$\textrm {sign}(\eta -1/2) \alpha \leq \alpha _r$

then

![]() $C_\phi (\eta, \alpha _r)-C_\phi ^*(\eta )\geq k_r$

, and this

$C_\phi (\eta, \alpha _r)-C_\phi ^*(\eta )\geq k_r$

, and this

![]() $\alpha _r$

satisfies

$\alpha _r$

satisfies

![]() $\phi (\alpha _r)\lt \phi (0)$

.

$\phi (\alpha _r)\lt \phi (0)$

.

See Appendix C for a proof. A minor modification of the argument above our main result:

Proposition 2.

Assume there exist

![]() ${\mathbb P}_0^*\in {{\mathcal {B}}^\infty _{\epsilon }} ({\mathbb P}_0)$

,

${\mathbb P}_0^*\in {{\mathcal {B}}^\infty _{\epsilon }} ({\mathbb P}_0)$

,

![]() ${\mathbb P}_1^*\in {{\mathcal {B}}^\infty _{\epsilon }}({\mathbb P}_1)$

that maximize

${\mathbb P}_1^*\in {{\mathcal {B}}^\infty _{\epsilon }}({\mathbb P}_1)$

that maximize

![]() $\bar {R}_\phi$

for which

$\bar {R}_\phi$

for which

![]() ${\mathbb P}^*(\eta ^*=1/2)=0$

. Then any consistent loss is adversarially consistent.

${\mathbb P}^*(\eta ^*=1/2)=0$

. Then any consistent loss is adversarially consistent.

When

![]() $\mathbb P$

is absolutely continuous with respect to Lebesgue measure, uniqueness up to degeneracy of the adversarial Bayes classifier implies the assumptions of this proposition due to Theorem7. However, this result applies even to distributions which are not absolutely continuous with respect to Lebesgue measure. For instance, if the optimal classification risk is zero, the

$\mathbb P$

is absolutely continuous with respect to Lebesgue measure, uniqueness up to degeneracy of the adversarial Bayes classifier implies the assumptions of this proposition due to Theorem7. However, this result applies even to distributions which are not absolutely continuous with respect to Lebesgue measure. For instance, if the optimal classification risk is zero, the

![]() ${\mathbb P}^*(\eta ^*=1/2)=0$

. To show this statement, notice that if

${\mathbb P}^*(\eta ^*=1/2)=0$

. To show this statement, notice that if

![]() $\inf _A R^\epsilon (A)=0$

, then Theorem3 implies that for any measures

$\inf _A R^\epsilon (A)=0$

, then Theorem3 implies that for any measures

![]() ${\mathbb P}_0^{\prime}\in {{\mathcal {B}}^\infty _{\epsilon }}({\mathbb P}_0)$

,

${\mathbb P}_0^{\prime}\in {{\mathcal {B}}^\infty _{\epsilon }}({\mathbb P}_0)$

,

![]() ${\mathbb P}_1^{\prime}\in {{\mathcal {B}}^\infty _{\epsilon }}({\mathbb P}_1)$

, one can conclude that

${\mathbb P}_1^{\prime}\in {{\mathcal {B}}^\infty _{\epsilon }}({\mathbb P}_1)$

, one can conclude that

![]() ${\mathbb P}^{\prime}(\eta ^{\prime}=1/2)=0$

, where

${\mathbb P}^{\prime}(\eta ^{\prime}=1/2)=0$

, where

![]() ${\mathbb P}^{\prime}={\mathbb P}_0^{\prime}+{\mathbb P}_1^{\prime}$

and

${\mathbb P}^{\prime}={\mathbb P}_0^{\prime}+{\mathbb P}_1^{\prime}$

and

![]() $\eta ^{\prime}=d{\mathbb P}_1^{\prime}/d{\mathbb P}^{\prime}$

.

$\eta ^{\prime}=d{\mathbb P}_1^{\prime}/d{\mathbb P}^{\prime}$

.

Proof of Proposition

2. We will show that every minimizing sequence of

![]() $R_\phi ^\epsilon$

must satisfy (15) and (16). These equations together with the assumption

$R_\phi ^\epsilon$

must satisfy (15) and (16). These equations together with the assumption

![]() ${\mathbb P}^*(\eta ^*=1/2)=0$

imply that

${\mathbb P}^*(\eta ^*=1/2)=0$

imply that

The strong duality result of Theorem3 then implies that

![]() $f_n$

must be a minimizing sequence of

$f_n$

must be a minimizing sequence of

![]() $R^\epsilon$

.

$R^\epsilon$

.

Let

![]() $\delta$

be arbitrary. Due to the assumption

$\delta$

be arbitrary. Due to the assumption

![]() ${\mathbb P}^*(\eta ^*=1/2)=0$

, one can pick an

${\mathbb P}^*(\eta ^*=1/2)=0$

, one can pick an

![]() $r$

for which

$r$

for which

Next, let

![]() $\alpha _r$

,

$\alpha _r$

,

![]() $k_r$

be as in Lemma4. Let

$k_r$

be as in Lemma4. Let

![]() $\gamma _i^*$

be couplings between

$\gamma _i^*$

be couplings between

![]() ${\mathbb P}_i$

and

${\mathbb P}_i$

and

![]() ${\mathbb P}_i^*$

supported on

${\mathbb P}_i^*$

supported on

![]() $\Delta _\epsilon$

. Lemma2 implies that

$\Delta _\epsilon$

. Lemma2 implies that

![]() $S_\epsilon (\phi \circ f_n)({\mathbf x})\geq \phi \circ f_n({\mathbf x}^{\prime})$

$S_\epsilon (\phi \circ f_n)({\mathbf x})\geq \phi \circ f_n({\mathbf x}^{\prime})$

![]() $\gamma _1^*$

-a.e., and thus (14) implies that

$\gamma _1^*$

-a.e., and thus (14) implies that

![]() $S_\epsilon (\phi \circ f_n)({\mathbf x})- \phi \circ f_n({\mathbf x}^{\prime})$

converges to 0 in

$S_\epsilon (\phi \circ f_n)({\mathbf x})- \phi \circ f_n({\mathbf x}^{\prime})$

converges to 0 in

![]() $L^1(\gamma _1^*)$

. Because convergence in

$L^1(\gamma _1^*)$

. Because convergence in

![]() $L^1$

implies convergence in measure [Reference Folland14, Proposition 2.29], the quantity

$L^1$

implies convergence in measure [Reference Folland14, Proposition 2.29], the quantity

![]() $S_\epsilon (\phi \circ f_n)({\mathbf x})- \phi \circ f_n({\mathbf x}^{\prime})$

converges to 0 in

$S_\epsilon (\phi \circ f_n)({\mathbf x})- \phi \circ f_n({\mathbf x}^{\prime})$

converges to 0 in

![]() $\gamma _1^*$

-measure. Similarly, one can conclude that

$\gamma _1^*$

-measure. Similarly, one can conclude that

![]() $S_\epsilon (\phi \circ -f_n)({\mathbf x})- \phi \circ -f_n({\mathbf x}^{\prime})$

converges to zero in

$S_\epsilon (\phi \circ -f_n)({\mathbf x})- \phi \circ -f_n({\mathbf x}^{\prime})$

converges to zero in

![]() $\gamma _0^*$

-measure. Analogously, as

$\gamma _0^*$

-measure. Analogously, as

![]() $C_\phi ^*(\eta ^*,f_n)\geq C_\phi ^*(\eta ^*)$

, Equation 13) implies that

$C_\phi ^*(\eta ^*,f_n)\geq C_\phi ^*(\eta ^*)$

, Equation 13) implies that

![]() $C_\phi ^*(\eta ^*,f_n)$

converges in

$C_\phi ^*(\eta ^*,f_n)$

converges in

![]() ${\mathbb P}^*$

-measure to

${\mathbb P}^*$

-measure to

![]() $C_\phi ^*(\eta ^*)$

. Therefore, Proposition1 implies that one can choose

$C_\phi ^*(\eta ^*)$

. Therefore, Proposition1 implies that one can choose

![]() $N$

large enough so that

$N$

large enough so that

![]() $n\gt N$

implies

$n\gt N$

implies

and

![]() ${\mathbb P}^*(C_\phi ^*(\eta ^*, f_n)\gt C_\phi ^*(\eta ^*)+k_r)\lt \delta$

. The relation

${\mathbb P}^*(C_\phi ^*(\eta ^*, f_n)\gt C_\phi ^*(\eta ^*)+k_r)\lt \delta$

. The relation

![]() ${\mathbb P}^*(C_\phi ^*(\eta ^*, f_n)\gt C_\phi ^*(\eta ^*)+k_r)\lt \delta$

implies

${\mathbb P}^*(C_\phi ^*(\eta ^*, f_n)\gt C_\phi ^*(\eta ^*)+k_r)\lt \delta$

implies

due to Lemma4. Because

![]() $\phi$

is non-increasing,

$\phi$

is non-increasing,

![]() ${\mathbf {1}}_{f_n\leq 0}\leq {\mathbf {1}}_{\phi \circ f_n\geq \phi (0)}$

and since the function

${\mathbf {1}}_{f_n\leq 0}\leq {\mathbf {1}}_{\phi \circ f_n\geq \phi (0)}$

and since the function

![]() $z \mapsto {\mathbf {1}}_{z\geq \phi (0)}$

is upper semi-continuous,

$z \mapsto {\mathbf {1}}_{z\geq \phi (0)}$

is upper semi-continuous,

Now (20) implies that for

![]() $n\gt N$

,

$n\gt N$

,

![]() $S_\epsilon (\phi \circ f_n)({\mathbf x})\lt (\phi \circ f_n)({\mathbf x}^{\prime})+\phi (0)-\phi (\alpha _r)$

outside a set of

$S_\epsilon (\phi \circ f_n)({\mathbf x})\lt (\phi \circ f_n)({\mathbf x}^{\prime})+\phi (0)-\phi (\alpha _r)$

outside a set of

![]() $\gamma _1^*$

-measure

$\gamma _1^*$

-measure

![]() $\delta$

and thus

$\delta$

and thus

Next, the monotonicity of

![]() $\phi$

implies that

$\phi$

implies that

![]() ${\mathbb P}_1^*(\phi \circ f_n({\mathbf x}^{\prime})\gt \phi (\alpha _r))\leq {\mathbb P}_1^*(f_n\lt \alpha _r)$

and thus

${\mathbb P}_1^*(\phi \circ f_n({\mathbf x}^{\prime})\gt \phi (\alpha _r))\leq {\mathbb P}_1^*(f_n\lt \alpha _r)$

and thus

by (19). Next, (22) implies

![]() ${\mathbb P}_1^*(\eta ^*\geq 1/2+r, f_n\leq \alpha _r)\lt \delta$

and consequently

${\mathbb P}_1^*(\eta ^*\geq 1/2+r, f_n\leq \alpha _r)\lt \delta$

and consequently

Because

![]() $\delta$

is arbitrary, this relation implies (15). Next, to prove (16), observe that

$\delta$

is arbitrary, this relation implies (15). Next, to prove (16), observe that

![]() ${\mathbf {1}}_{f\geq 0}={\mathbf {1}}_{-f\leq 0}$

, and thus the inequalities (23) and (24) hold with

${\mathbf {1}}_{f\geq 0}={\mathbf {1}}_{-f\leq 0}$

, and thus the inequalities (23) and (24) hold with

![]() $-f_n$

in place of

$-f_n$

in place of

![]() $f_n$

,

$f_n$

,

![]() ${\mathbb P}_0,$

${\mathbb P}_0,$

![]() ${\mathbb P}_0^*$

,

${\mathbb P}_0^*$

,

![]() $\gamma _0^*$

in place of

$\gamma _0^*$

in place of

![]() ${\mathbb P}_1,{\mathbb P}_1^*$

,

${\mathbb P}_1,{\mathbb P}_1^*$

,

![]() $\gamma _1^*$

, and (21) in place of (20). Equation 22 further implies

$\gamma _1^*$

, and (21) in place of (20). Equation 22 further implies

![]() ${\mathbb P}_1^*(\eta ^*\leq 1/2-r, f_n\geq -\alpha _r)\lt \delta$

, and these relations imply (16).

${\mathbb P}_1^*(\eta ^*\leq 1/2-r, f_n\geq -\alpha _r)\lt \delta$

, and these relations imply (16).

7. Consistency requires uniqueness up to degeneracy

We prove the reverse direction of Theorem2 by constructing a sequence of functions

![]() $f_n$

that minimize

$f_n$

that minimize

![]() $R_\phi ^\epsilon$

for which

$R_\phi ^\epsilon$

for which

![]() $R^\epsilon (f_n)$

is constant in

$R^\epsilon (f_n)$

is constant in

![]() $n$

and not equal to the minimal adversarial Bayes risk.

$n$

and not equal to the minimal adversarial Bayes risk.

Proposition 3.

Assume that

![]() $\mathbb P$

is absolutely continuous with respect to Lebesgue measure and that the adversarial Bayes classifier is not unique up to degeneracy. Then any consistent loss

$\mathbb P$

is absolutely continuous with respect to Lebesgue measure and that the adversarial Bayes classifier is not unique up to degeneracy. Then any consistent loss

![]() $\phi$

satisfying

$\phi$

satisfying

![]() $C_\phi ^*(1/2)=\phi (0)$

is not adversarially consistent.

$C_\phi ^*(1/2)=\phi (0)$

is not adversarially consistent.

We will outline the proof in this section below, and the full argument is presented in Appendix D. Before discussing the components of this argument, we illustrate this construction using the adversarial Bayes classifiers depicted in Figure 2. In this example, when

![]() $\epsilon \leq (\mu _1-\mu _0)/2$

, two adversarial Bayes classifiers are

$\epsilon \leq (\mu _1-\mu _0)/2$

, two adversarial Bayes classifiers are

![]() $A_1=\emptyset$

and

$A_1=\emptyset$

and

![]() $A_2=\mathbb {R}$

, as depicted in Figure 2b and 2c. These sets are not equivalent up to degeneracy, and in particular the set

$A_2=\mathbb {R}$

, as depicted in Figure 2b and 2c. These sets are not equivalent up to degeneracy, and in particular the set

![]() $\{1\}$

is not an adversarial Bayes classifier. However, the fact that both

$\{1\}$

is not an adversarial Bayes classifier. However, the fact that both

![]() $\emptyset$

and

$\emptyset$

and

![]() $\mathbb {R}$

are adversarial Bayes classifiers suggests that

$\mathbb {R}$

are adversarial Bayes classifiers suggests that

![]() $\hat \eta (x) \equiv 1/2$

on all of

$\hat \eta (x) \equiv 1/2$

on all of

![]() $\mathbb {R}$

, and thus

$\mathbb {R}$

, and thus

![]() $f(x)\equiv 0$

is a minimizer of

$f(x)\equiv 0$

is a minimizer of

![]() $R_\phi ^\epsilon$

. Consequently, the function sequence

$R_\phi ^\epsilon$

. Consequently, the function sequence

\begin{align*} f_n(x)=\begin{cases} \frac 1 n &\text {if }x\in \{1\}\\ -\frac 1n&\text {otherwise} \end{cases} \end{align*}

\begin{align*} f_n(x)=\begin{cases} \frac 1 n &\text {if }x\in \{1\}\\ -\frac 1n&\text {otherwise} \end{cases} \end{align*}

is a minimizing sequence of

![]() $R_\phi ^\epsilon$

for which

$R_\phi ^\epsilon$

for which

![]() $R^\epsilon (f_n)$

is constant in

$R^\epsilon (f_n)$

is constant in

![]() $n$

and not equal to the adversarial Bayes risk.

$n$

and not equal to the adversarial Bayes risk.

To generalize this construction to an arbitrary distribution, one must find a set

![]() $\tilde A$

contained between two adversarial Bayes classifiers that is not itself an adversarial Bayes classifier. The absolute continuity assumption is key in this step of the argument. Theorem6 together with a result of [Reference Frank15] imply that when

$\tilde A$

contained between two adversarial Bayes classifiers that is not itself an adversarial Bayes classifier. The absolute continuity assumption is key in this step of the argument. Theorem6 together with a result of [Reference Frank15] imply that when

![]() $\mathbb P$

is absolutely continuous with respect to Lebesgue measure, the adversarial Bayes classifier is unique iff

$\mathbb P$

is absolutely continuous with respect to Lebesgue measure, the adversarial Bayes classifier is unique iff

![]() $\{\hat \eta \gt 1/2\}$

and

$\{\hat \eta \gt 1/2\}$

and

![]() $\{\hat \eta \geq 1/2\}$

are equivalent up to degeneracy, see Appendix D for a proof.

$\{\hat \eta \geq 1/2\}$

are equivalent up to degeneracy, see Appendix D for a proof.

Lemma 5.

Assume

![]() $\mathbb P$

is absolutely continuous with respect to Lebesgue measure. Then adversarial Bayes classifier is unique up to degeneracy iff the adversarial Bayes classifiers

$\mathbb P$

is absolutely continuous with respect to Lebesgue measure. Then adversarial Bayes classifier is unique up to degeneracy iff the adversarial Bayes classifiers

![]() $\{\hat \eta \gt 1/2\}$

and

$\{\hat \eta \gt 1/2\}$

and

![]() $\{\hat \eta \geq 1/2\}$

are equivalent up to degeneracy.

$\{\hat \eta \geq 1/2\}$

are equivalent up to degeneracy.

Consequently, if the adversarial Bayes classifier is not unique up to degeneracy, then there is a set

![]() $\tilde A$

that is not an adversarial Bayes classifier but