Recent years have been a relatively tumultuous time in the history of psychological science. The past decade saw the publication of a landmark paper that attempted to replicate 100 studies and estimated that just 39 percent of studies published in top psychology journals were replicable (Open Science Collaboration, 2015). There was also a surplus of studies failing to replicate high-profile effects that had long been taken as fact (e.g., Hagger et al., 2016; Harris et al., 2013; Wagenmakers et al., 2016). Taken together, these findings suddenly made the foundations of much psychological research seem very shaky.

As with similar evidence in other scientific fields (e.g., biomedicine, criminology), these findings have led to a collective soul-searching dubbed the “replication crisis” or the “credibility revolution” (Nelson et al., 2018; Vazire, 2018). Clearly, something about the way scientists had gone about their work in the past wasn’t effective at uncovering replicable findings, and changes were badly needed. An impressive collection of meta-scientific studies (i.e., studies about scientists and scientific practices) has revealed major shortcomings in standard research and statistical methods (e.g., Button et al., 2013; John et al., 2012; Nuijten et al., 2016; Simmons et al., 2011). These studies point to a clear way to improve not only replicability but also the accuracy of scientific conclusions: open science.

Open science refers to a radically transparent approach to the research process. “Open” refers to sharing – making accessible – parts of the research process that have traditionally been known only to an individual researcher or research team. In a standard research article, authors summarize their research methods and their findings, leaving out many details along the way. Among other things, open science includes sharing research materials (protocols) in full, making data and analysis code publicly available, and pre-registering (i.e., making plans public) study designs, hypotheses, and analysis plans.

Psychology has previously gone through periods of unrest similar to the 2010s, with methodologists and statisticians making persuasive pleas for more transparency and rigor in research (e.g., Bakan, 1966; Cohen, 1994; Kerr, 1998; Meehl, 1978). Yet, it is only now with improvements in technology and research infrastructure, together with concerted efforts in journals and scientific societies by reformers, that changes have begun to stick (Spellman, 2015).

Training in open science practices is now a required part of becoming a research psychologist. The goal of this chapter is to briefly review the shortcomings in scientific practice that open science practices address and then to give a more detailed account of open science itself. We’ll consider what it means to work openly and offer pragmatic advice for getting started.

1. Why Open Science?

When introducing new researchers to the idea of open science, the need for such practices seems obvious and self-evident. Doesn’t being a scientist logically imply an obligation to transparently show one’s work and subject it to rigorous scrutiny? Yet, abundant evidence reveals that researchers have not historically lived up to this ideal and that the failure to do transparent, rigorous work has hindered scientific progress.

1.1 Old Habits Die Hard

Several factors in the past combined to create conditions that encouraged researchers to avoid open science practices. First, incentives in academic contexts have not historically rewarded such behaviors and, in some cases, may have actually punished them (Smaldino & McElreath, 2016). Publications are the coin of the realm for getting ahead in an academic career, and jobs, promotions, and accolades can sometimes be awarded based on the number of publications rather than their quality.

Second, human biases conspire to fool us into thinking we have discovered something when we actually have not (Bishop, 2020). For instance, confirmation bias allows us to selectively interpret results in ways that support our pre-existing beliefs or theories, which may be flawed. Self-serving biases might cause defensive reactions when critics point out errors in our methods or conclusions. Adopting open science practices can expose researchers to cognitive discomfort (e.g., pre-existing beliefs are challenged; higher levels of transparency mean that critics are given ammunition), which we might naturally seek to avoid.

Finally, psychology uses an apprenticeship model of researcher training, which means that the practices of new researchers might only be as good as the practices of the more senior academics training them. When questionable research practices are taught as normative by research mentors, higher-quality open science practices might be dismissed as methodological pedantry.

Given the abundant evidence of flaws in psychology’s collective body of knowledge, we now know how important it is to overcome the hurdles described here and transition to a higher standard of practice. Incentives are changing, and open science practices are becoming the norm at many journals (Nosek et al., 2015). A new generation of researchers is being trained to employ more rigorous practices. And although the cognitive biases just discussed might be some of the toughest problems to overcome, greater levels of transparency in the publishing process help fortify the ability of the peer review process to serve as a check on researcher biases.

1.2 Benefits of Open Science Practices

A number of benefits of open science practices are worth emphasizing. First, increases in transparency make it possible for errors to be detected and for science to self-correct. The self-correcting nature of science is often heralded as a key feature that distinguishes scientific approaches from other ways of knowing. Yet, self-correction is difficult, if not impossible, when details of research are routinely withheld (Vazire & Holcombe, 2020).

Second, openly sharing research materials (protocols), analysis code, and data provides new opportunities to build on research and adds value above and beyond what a single study would add. For example, future researchers can more easily replicate a study’s methods if they have access to a full protocol and materials; secondary data analysts and meta-analysts can perform novel analyses on raw data if those data are shared.

Third, collaborative work becomes easier when teams employ the careful documentation habits that followers of open science practices have honed. Even massive collaborations across time and location become possible when research materials and data are shared following similar standards (Moshontz et al., 2018).

Finally, the benefits of open science practices accrue not only to the field at large, but also to individual researchers. Working openly provides a tangible record of your contributions as a researcher, which may be useful when it comes to applying for funding, awards, or jobs.

Markowetz (2015) describes five “selfish” reasons to work reproducibly, namely: (a) to avoid “disaster” (i.e., major errors), (b) because it’s easier, (c) to smooth the peer review process, (d) to allow others to build on your work, and (e) to build your reputation. Likewise, McKiernan et al. (2016) review the ample evidence that open science practices are associated with more citations, more media coverage, more funding and job offers, and a larger network of collaborators. Allen and Mehler (2019) review benefits (along with challenges) specifically for early career researchers.

All of this is not to say that there are not costs or downsides to some of the practices discussed here. For one thing, learning and implementing new techniques takes time, although experience shows that you’ll become faster and more efficient with practice. Additionally, unsupportive research mentors or other senior collaborators can make it challenging to embrace open science practices. The power dynamics in such relationships may mean that there is little flexibility in the practices that early career researchers can employ. Trying to propose new techniques can be stressful and might strain advisor-advisee relationships, but see Kathawalla et al. (2021) for rebuttals to these issues and other common worries.

In spite of these persistent challenges and the old pressures working against the adoption of open science practices, I hope to convince you that the benefits of working openly are numerous – both to the field and to individual researchers. As a testament to changing norms and incentives, open science practices are spreading and taking hold in psychology (Christensen, Freese, et al., 2019; Tenney et al., 2021). Let us consider in more detail what we actually mean by open science practices.

2. Planning Your Research

Many open science practices boil down to forming or changing your work habits so that more parts of your work are available to be observed by others. But like other healthy habits (eating healthy food, exercising), open science practices may take some initial effort to put into place. You may also find that what works well for others doesn’t work well for you, and it may take some trial and error to arrive at a workflow that is both effective and sustainable. However, the benefits that you’ll reap from establishing these habits – both immediate and delayed – are well worth putting in the effort. It may not seem like it, but there is no better time in your career to begin than now.

Likewise, you may find that many open science practices are most easily implemented early in the research process, during the planning stages. But fear not: if a project is already underway, we’ll consider ways to add transparency to the research process at later stages as well. Here, we’ll discuss using the Open Science Framework (https://osf.io), along with pre-registration and registered reports, as you plan your research.

2.1 Managing the Open Science Workflow: The Open Science Framework

The Open Science Framework (OSF; https://osf.io) is a powerful research management tool. A tool like OSF allows you to keep all stages of the research process organized in one location. OSF is also not tied to any specific academic institution, so you won’t have to worry about transferring your work when you inevitably change jobs (perhaps several times). Other tools exist that can do many of the things OSF can (some researchers like to use GitHub, figshare, or Zenodo, for instance), but OSF was specifically created for managing scientific research and has a number of features that make it uniquely suited for the task. OSF’s core functions include (but are not limited to) long-term archival of research materials, analysis code, and data; a flexible but robust pre-registration tool; and support for collaborative workflow management. Later in the chapter, we’ll discuss the ins and outs of each of these practices, but here I want to review a few of the ways that OSF is specialized for these functions.

The main unit of work on OSF is the “project.” Each project has a stable URL and the potential to create an associated digital object identifier (DOI). This means that researchers can make reference to OSF project pages in their research articles without worry that links will cease to function or shared content will become unavailable. A sizable preservation fund promises that content shared on OSF will remain available for at least 50 years, even if the service should cease to operate. This stability makes OSF well-suited to host part of the scientific record.

A key feature of projects is that they can be made public (accessible to all) or private (accessible only to contributors). This feature allows you to share your work publicly when you are ready, whether that is immediately or only after a project is complete. Another feature is that projects can be shared using “view-only” links. These links have the option to remove contributor names to enable the materials shared in a project to be accessible to peer reviewers at journals that use masked review.

Projects can have any number of contributors, making it possible to easily work collaboratively even with a large team. An activity tracker gives a detailed and complete account of changes to the project (e.g., adding or removing a file, editing the project wiki page), so you always know who did what, and when, within a project. Another benefit is the ability to easily connect OSF to other tools (e.g., Google Drive, GitHub) to further enhance OSF’s capabilities.

Within projects, it is possible to create nested “components.” Components have their own URLs, DOIs, privacy settings, and contributor lists. It is possible, for instance, to create a component within a project and to restrict access to that component alone while making the rest of the project publicly accessible. If particular parts of a project are sensitive or confidential, components can be a useful way to maintain the privacy of that information. Similarly, it may be necessary for some members of a research group to have access to parts of a research project and for others not to have access. Components allow researchers this fine-grained level of control.

Finally, OSF’s pre-registration function allows projects and components to be “frozen” (i.e., saved as time-stamped copies that cannot be edited). Researchers can opt to pre-register their projects using one of many templates, or they can simply upload the narrative text of their research plans. In this way, researchers and editors can be confident about which elements of a study were pre-specified and which were informed by the research process or outcomes.

The review of OSF’s features here is necessarily brief. Soderberg (2018) provides a step-by-step guide for getting started with OSF. Tutorials are also available on the Center for Open Science’s YouTube channel. I recommend selecting a project – perhaps one for which you are the lead contributor – to try out OSF and get familiar with its features in greater detail. Later, you may want to consider using a project template, like the one that I use in my lab (Corker, 2016), to standardize the appearance and organization of your OSF projects.

2.2 Pre-Registration and Registered Reports

Learning about how to pre-register research involves much more than just learning how to use a particular tool (like OSF) to complete the registration process. As with other research methods, training and practice are needed to become skilled at this key open science technique (Tackett et al., 2020). Pre-registration refers to publicly reporting study designs, hypotheses, and/or analysis plans prior to the onset of a research project. Additionally, the pre-registered plan should be shared in an accessible repository, and it should be “read-only” (i.e., not editable after posting). As we’ll see, there are several reasons a researcher might choose to pre-register, along with a variety of benefits of doing so, but the most basic function of the practice is that pre-registration clearly delineates the parts of a research project that were specified before the onset of a project from those parts that were decided on along the way or based on observed data.

Depending on their goals, researchers might pre-register for different reasons (da Silva Frost & Ledgerwood, 2020; Ledgerwood, 2018; Navarro, 2019). First, researchers may want to constrain particular data analytic choices prior to encountering the data. Doing so makes it clear to the researchers, and to readers, that the presented analysis is not merely the one most favorable to the authors’ predictions, nor the one with the lowest p-value. Second, researchers might desire to specify theoretical predictions prior to encountering a result. In so doing, they set up conditions that enable a strong test of the theory, including the possibility for falsification of alternative hypotheses (Platt, 1964). Third, researchers may seek to increase the transparency of their research process, documenting particular plans and, crucially, when those plans were made. In addition to the scientific benefits of transparency, pre-registration can also facilitate more detailed planning than usual, potentially increasing research quality as potential pitfalls are caught early enough to be remedied.

Some of these reasons are more applicable to certain types of research than others, but nearly all research can benefit from some form of pre-registration. For instance, some research is descriptive and does not test hypotheses stemming from a theory. Other research might feature few or no statistical analyses. The theory testing or analytic constraint functions of pre-registration might not be applicable in these instances. However, increased transparency and enhanced planning stand to benefit many kinds of research (but see Devezer et al., 2021, for a critical take on the value of pre-registration).

A related but distinct practice is Registered Reports (Chambers, 2013). In a registered report, authors submit a study proposal – usually as a manuscript consisting of a complete introduction, proposed method, and proposed analysis section – to a journal that offers the format. The manuscript (known at that point as “stage 1”) is then peer-reviewed, after which it can be rejected, accepted, or receive a revise and resubmit. Crucially, once the stage 1 manuscript is accepted (most likely after revision following peer review), the journal agrees to publish the final paper regardless of the statistical significance of results, provided the agreed upon plan has been followed – a phase of publication known as “in-principle acceptance.” Once results are in, the paper (at this point known as a “stage 2” manuscript) goes out again for peer review to verify that the study was executed as agreed.

When stage 1 proposals are published (either as stand-alone manuscripts or as supplements to the final stage 2 manuscripts), registered reports allow readers to confirm which parts of a study have been planned ahead of time, just like ordinary pre-registrations. Likewise, registered reports limit strategic analytic flexibility, allow strong tests of hypotheses, and increase the transparency of research. Crucially, however, registered reports also address publication bias, because papers are not accepted or rejected on the basis of the outcome of the research. Furthermore, the two-stage peer-review process has an even greater potential to improve study quality, because researchers receive the benefit of peer critique during the design phase of a study when there is still time to correct flaws. Finally, because the publication process is overseen by an editor, undisclosed deviations from the pre-registered plan may be less likely to occur than they are with unreviewed pre-registration. Pragmatically, registered reports might be especially worthwhile in contentious areas of study where it is useful to jointly agree on a critical test ahead of time with peer critics. Authors can also enjoy the promise of acceptance of the final product prior to investing resources in data collection.

Table 11.1 lists guidance and templates that have been developed across different subfields and research methods to enable nearly any study to be pre-registered.

A final conceptual distinction is worth brief mention. Pre-registrations are documentation of researchers’ plans for their studies (in systematic reviews of health research, these documents are known as protocols). When catalogued and searchable, pre-registrations form a registry. In the United States, the most common study registry is clinicaltrials.gov, because the National Institutes of Health requires studies that it funds to be registered there. PROSPERO (Page et al., 2018) is the main registry for health-related systematic reviews. Entries in clinicaltrials.gov and PROSPERO must follow a particular format, and adhering to that format may or may not fulfill researchers’ pre-registration goals (for analytic constraint, for hypothesis testing, or for increasing transparency). For instance, when registering a study in clinicaltrials.gov, researchers must declare their primary outcomes (i.e., dependent variables) and distinguish them from secondary outcomes, but they are not required to submit a detailed analysis plan. A major benefit of study registries is to track the existence of studies independent of final publications. Registries also allow the detection of questionable research practices like outcome switching (e.g., Goldacre et al., 2019). However, entries in clinicaltrials.gov and PROSPERO fall short in many ways when it comes to achieving the various goals of pre-registration discussed above. It is important to distinguish brief registry entries from more detailed pre-registrations and protocols.

Table 11.1 Guides and templates for pre-registration

3. Doing the Research

Open science considerations are as relevant when you are actually conducting your research as they are when you are planning it. One of the things you have surely already learned in your graduate training is that research projects often take a long time to complete. It may be several months, or perhaps even longer, after you have planned a study and collected the data before you are actually finalizing a manuscript to submit for publication. Even once an initial draft is completed, you will again have a lengthy wait while the paper is reviewed, after which time you will invariably have to return to the project for revisions. To make matters worse, as your career unfolds, you will begin to juggle multiple such projects simultaneously. Put briefly: you need a robust system of documentation to keep track of these many projects.

In spite of the importance of this topic, most psychology graduate programs have little in the way of formal training in these practices. Here, I will provide an overview of a few key topics in this area, but you would be well served to dig more deeply into this area on your own. In particular, Briney (2015) provides a book-length treatment on data management practices. (Here “data” is used in the broad sense to mean information, which includes but extends beyond participant responses.) Henry (2021a, 2021b) provides an overview of many relevant issues as well. Another excellent place to look for help in this area is your university library. Librarians are experts in data management, and libraries often host workshops and give consultations to help researchers improve their practices.

Several practices are part of the array of options available to openly document your research process. Here, I’ll introduce open lab notebooks, open protocols/materials, and open data/analysis code. Klein et al. (2018) provide a detailed, pragmatic look at these topics, highlighting considerations around what to share, how to share, and when to share.

3.1 Open Lab Notebooks

One way to track your research as it unfolds is to keep a detailed lab notebook. Recently, some researchers have begun to keep open, digital lab notebooks (Campbell, 2018). Put briefly, open lab notebooks allow outsiders to access the research process in its entirety in real time (Bradley et al., 2011). Open lab notebooks might include entries for data collected, experiments run, analyses performed, and so on. They can also include accounts of decisions made along the way – for instance, to change an analysis strategy or to modify the participant recruitment protocol. Open lab notebooks are a natural complement to pre-registration insofar as a pre-registration spells out a plan for a project, and the lab notebook documents the execution (or alteration) of that plan. In fact, for some types of research, where the a priori plan is relatively sparse, an open lab notebook can be an especially effective way to transparently document exploration as it unfolds.

On a spectrum from completely open research to completely opaque research, the practice of keeping an open lab notebook marks the far (open) end of the scale. For some projects (or some researchers) the costs of keeping a detailed open lab notebook in terms of time and effort might greatly exceed the scientific benefits for transparency and record keeping. Other practices may achieve similar goals more efficiently, but for some projects, the practice could prove invaluable. To decide whether an open lab notebook is right for you, consider the examples given in Campbell (2018). You can also see an open notebook in action here: https://osf.io/3n964/ (Koessler et al., 2019).

3.2 Open Protocols and Open Materials

A paper’s Method section is designed to describe a study protocol – that is, its design, participants, procedure, and materials – in enough detail that an independent researcher could replicate the study. In actuality, many key details of study protocols are omitted from Method sections (Errington, 2019). To remedy this information gap, researchers should share full study protocols, along with the research materials themselves, as supplemental files. Protocols can include things like complete scripts for experimental research assistants, video demonstrations of techniques (e.g., a participant interaction or a neurochemical assay), and full copies of study questionnaires. The goal is for another person to be able to execute a study fully without any assistance from the original author.

Research materials that have been created specifically for a particular study – for instance, the actual questions asked of participants or program files for an experimental task – are especially important to share. If existing materials are used, the source where those materials can be accessed should be cited in full. If there are limitations on the availability of materials, which might be the case if materials are proprietary or have restricted access for ethical reasons, those limitations should be disclosed in the manuscript.

3.3 Reproducible Analyses, Open Code, and Open Data

One of the basic features of scientific research products is that they should be independently reproducible. A finding that can only be recreated by one person is a magic trick, not a scientific finding. Here, reproducible means that results can be recreated using the same data originally used to make a claim. By contrast, replicability implies the repetition of a study’s results using different data (e.g., a new sample). Note also that a finding can be reproducible, or even replicable, but not be a valid or accurate representation of reality (Vazire et al., 2020). Reproducibility can be thought of as a minimally necessary precursor to later validity claims. In psychology, analyses of quantitative data very often form the backbone of our scientific claims. Yet, the reproducibility of data analytic procedures may never be checked, or if it is checked, findings may not be reproducible (Obels et al., 2020; Stodden et al., 2018). Even relatively simple errors in reporting threaten the accuracy of the research literature (Nuijten et al., 2016).

Luckily, these problems are fixable, if we are willing to put in the effort. Specifically, researchers should share the code underlying their analyses and, when legally and ethically permissible, they should share their data. But beyond just sharing the “finished product,” it may be helpful to think about preparing your data and code to share while the project is actually under way (Klein et al., 2018).

Whenever possible, analyses should be conducted using analysis code – also known as scripting or syntax – rather than by using point-and-click menus in statistical software or doing hand calculations in spreadsheet programs. To further enhance the reproducibility of reported results, you can write your results section using a language called R Markdown. Succinctly, R Markdown combines descriptive text with results (e.g., statistics, counts) drawn directly from analyses. When results are prepared in this way, there is no need to worry about typos or other transcription errors making their way into your paper, because numbers from results are pulled directly from statistical output. Additionally, if there is a change to the data – say, if analyses need to be re-run on a subset of cases – the result text will automatically update with little effort.
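The general idea of generating result text from the analysis itself can be sketched in any scripting language. The following is a minimal illustration in Python rather than R Markdown; the data values, variable names, and the results_sentence function are hypothetical and serve only to show numbers flowing directly from computation into prose.

```python
from statistics import mean, stdev

# Hypothetical response times (ms) for two conditions; illustrative values only.
control = [512, 498, 530, 541, 507, 523]
treatment = [478, 465, 492, 488, 470, 481]


def describe(scores):
    """Return the mean and sample standard deviation of a list of scores."""
    return mean(scores), stdev(scores)


def results_sentence(control_scores, treatment_scores):
    """Build the results text directly from the computed statistics.

    No number is typed by hand, so the sentence cannot drift out of
    sync with the data it describes.
    """
    m_c, sd_c = describe(control_scores)
    m_t, sd_t = describe(treatment_scores)
    return (
        f"Mean response time was {m_c:.2f} ms (SD = {sd_c:.2f}, "
        f"n = {len(control_scores)}) in the control condition and "
        f"{m_t:.2f} ms (SD = {sd_t:.2f}, n = {len(treatment_scores)}) "
        f"in the treatment condition, a difference of {m_c - m_t:.2f} ms."
    )


# Full sample.
print(results_sentence(control, treatment))

# Re-running on a subset of cases updates every number in the text.
print(results_sentence(control[:4], treatment[:4]))
```

Because the sentence is rebuilt each time the script runs, dropping or adding cases changes the reported values without any manual editing, which is the property that R Markdown provides for a full manuscript.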

Peikert and Brandmaier (2019) describe a possible workflow to achieve reproducible results using R Markdown along with a handful of other tools. Rouder (2016) details a process for sharing data as it is generated – so-called “born open” data. This method also preserves the integrity of original data. When combined with Peikert and Brandmaier’s technique, the potential for errors to affect results or reporting is greatly diminished.

Regardless of the particular scripting language that you use to analyze your data, the code, along with the data itself, should be well documented to enable use by others, including reviewers and other researchers. You will want to produce a codebook, also known as a data dictionary, to accompany your data and code. Buchanan et al. (2021) describe the ins and outs of data dictionaries. Arslan (2019) writes about an automated process for codebook generation using R statistical software.
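To make the idea of a data dictionary concrete, here is a minimal sketch in Python of how one might be assembled partly by script. It is not the approach described in the works cited above; the data set, the variable descriptions, and the build_codebook function are all hypothetical. The sketch assumes that descriptions are written by hand, while types, missing-value counts, and observed ranges are derived from the data.

```python
import csv
import io

# Hypothetical data set; in practice this would be read from the shared data file.
RAW_CSV = """participant_id,condition,age,rt_ms
p01,control,23,512
p02,treatment,31,478
p03,control,,530
p04,treatment,27,465
"""

# Human-written descriptions; these cannot be inferred from the data themselves.
DESCRIPTIONS = {
    "participant_id": "Anonymized participant identifier",
    "condition": "Experimental condition",
    "age": "Self-reported age in years",
    "rt_ms": "Mean response time in milliseconds",
}


def is_number(value):
    """Return True if the string can be read as a number."""
    try:
        float(value)
        return True
    except ValueError:
        return False


def build_codebook(csv_text, descriptions):
    """Return one codebook entry (a dict) per column of the data set."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    codebook = []
    for name in rows[0].keys():
        values = [row[name] for row in rows]
        present = [v for v in values if v != ""]  # empty cells count as missing
        numeric = bool(present) and all(is_number(v) for v in present)
        if numeric:
            numbers = [float(v) for v in present]
            observed = f"{min(numbers):g} to {max(numbers):g}"
        else:
            observed = ", ".join(sorted(set(present)))
        codebook.append({
            "variable": name,
            "description": descriptions.get(name, "NOT YET DOCUMENTED"),
            "type": "numeric" if numeric else "text",
            "n_missing": len(values) - len(present),
            "observed_values": observed,
        })
    return codebook


for entry in build_codebook(RAW_CSV, DESCRIPTIONS):
    print(entry)
```

Flagging undescribed variables as "NOT YET DOCUMENTED" is one way to make gaps in the documentation visible before the data are shared.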

3.4 Version Control

When it comes to tracking research products in progress, a crucial concept is known as version control. A version control system permits contributors to a paper or other product (such as analysis code) to automatically track who made changes to the text and when they made them. Rather than saving many copies of a file in different locations and under different names, there is only one copy of a version-controlled file, but because changes are tracked, it is possible to roll back a file to an earlier version (for instance, if an error is detected). On large collaborative projects, it is vital to be able to work together simultaneously and to be able to return to an earlier version of the work if needed.

Working with version-controlled files decreases the potential for mistakes in research to go undetected. Rouder et al. (2019) describe practices, including the use of version control, that help to minimize mistakes and improve research quality. Vuorre and Curley (2018) provide specific guidance for using Git, one of the most popular version control systems. An additional benefit of learning to use these systems is their broad applicability in non-academic research settings (e.g., at technology and health companies). Indeed, developing skills in domain-general areas like statistics, research design, and programming will broaden the array of opportunities available to you when your training is complete.

3.5 Working Openly Facilitates Teamwork and Collaboration

Keeping an open lab notebook, sharing a complete research protocol, or producing a reproducible analysis script that runs on open data might seem laborious compared to closed research practices, but there are advantages of these practices beyond the scientific benefits of working transparently. Detailed, clear documentation is needed for any collaborative research, and the need might be especially great in large teams. Open science practices can even facilitate massive collaborations, like those managed by the Psychological Science Accelerator (PSA; Moshontz et al., 2018). The PSA is a global network of over 500 laboratories that coordinates large investigations of democratically selected study proposals. It enables even teams with limited resources to study important questions at a large enough scale to yield rich data and precise answers. Open science practices are baked into all parts of the research process, and indeed, such efforts would not be feasible or sustainable without these standard operating procedures.

Participating in a large collaborative project, such as one run by the PSA, is an excellent way to develop your open science skillset. It can be exciting and quite rewarding to work in such a large team, but in so doing, there is also the opportunity to learn from the many other collaborators on the project.

4. Writing It Up: Open Science and Your Manuscript

The most eloquent study with the most interesting findings is scientifically useless until the findings are communicated to the broader research community. Indeed, scientific communication may be the most important part of the research process. Yet skillfully communicating results isn’t about mechanically relaying the outcomes of hypothesis tests. Rather, it’s about writing that leaves the reader with a clear conclusion about the contribution of a project. In addition to being narratively compelling, researchers employing open science practices will also want to transparently and honestly describe the research process. Adept readers may sense a conflict between these two goals – crafting a compelling narrative vs. being transparent and honest – but in reality, both can be achieved.

4.1 Writing Well and Transparently

Gernsbacher (2018) provides detailed guidance on preparing a high-quality manuscript (with a clear narrative) while adhering to open science practices. She writes that the best articles are transparent, reproducible, clear, and memorable. To achieve clarity and memorability, authors must attend to good writing practices like writing short sentences and paragraphs and seeking feedback. These techniques are not at odds with transparency and reproducibility, which can be achieved through honest, detailed, and clear documentation of the research process. Even higher levels of detail can be achieved by including supplemental files along with the main manuscript.

One issue, of course, is how to decide which information belongs in the main paper versus the supplemental materials. A guiding principle is to organize your paper to help the reader understand the paper’s contribution while transparently describing what you’ve done and learned. Gernsbacher (2018) advises having an organized single file as a supplement to ease the burden on reviewers and readers. A set of well-labeled and organized folders in your OSF project (e.g., Materials, Data, Analysis Code, Manuscript Files) can also work well. Consider including a “readme” file or other descriptive text to help readers understand your file structure.
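As one illustration of a labeled folder structure with an accompanying readme, the following sketch creates the four example folders named above and writes a short description of each. The folder descriptions and the file name README.txt are assumptions made for the example, and the script writes to a temporary directory rather than to a real project.

```python
from pathlib import Path
import tempfile

# Folder names follow the example in the text; descriptions are hypothetical.
FOLDERS = {
    "Materials": "Stimuli, questionnaires, and study protocols.",
    "Data": "Raw and processed data files, plus the codebook.",
    "Analysis Code": "Scripts that reproduce every reported result.",
    "Manuscript Files": "Drafts, preprint, and supplemental files.",
}


def scaffold(root):
    """Create the project folders and a readme that explains each one."""
    root = Path(root)
    lines = ["Project file structure", ""]
    for name, purpose in FOLDERS.items():
        (root / name).mkdir(parents=True, exist_ok=True)
        lines.append(f"{name}/: {purpose}")
    readme = root / "README.txt"
    readme.write_text("\n".join(lines) + "\n", encoding="utf-8")
    return readme


# Demonstrate in a temporary directory so nothing is left behind.
with tempfile.TemporaryDirectory() as tmp:
    print(scaffold(tmp).read_text(encoding="utf-8"))
```

Setting up the structure at the start of a project, rather than at submission, makes it easier to keep files where readers will later expect to find them.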

If a project is pre-registered, it is important that all of the plans (and hypotheses, if applicable) in the study are addressed in the main manuscript. Even results that are not statistically significant deserve discussion in the paper. If planned methods have changed, this is normal and absolutely fine. Simply disclose the change (along with accompanying rationale) in the paper, or better yet, file an addendum to your pre-registration when the change is made before proceeding. Likewise, when analysis plans change, disclose the change in the final paper. If the originally planned analysis strategy and the preferred strategy are both valid techniques, and others might disagree about which strategy is best, present results using both strategies. The details of the comparative analyses can be placed in a supplement, but discuss the analyses in the main text of the paper.

A couple of additional tools to assist with writing your open science manuscript are worth mentioning. First, Aczel et al. (2020) provide a consensus-based transparency checklist that authors can complete to confirm that they have made all relevant transparency-based disclosures in their papers. The checklist can also be shared (e.g., on OSF) alongside a final manuscript to help guide readers through the disclosures. Second, R Markdown can be used to draft the entire text of your paper, not just the results section. Doing so allows you to render the final paper using a particular typesetting style more easily. More importantly, the full paper will then be reproducible. Rather than work from scratch, you may want to use the papaja package (Aust & Barth, 2020), which provides an R Markdown template. Many researchers also like to use papaja in concert with Zotero (www.zotero.org/), an open-source reference manager.

4.2 Selecting a Journal for Your Research

Beyond questions of a journal’s topical reach and its reputation in the field, different journals have different policies when it comes to open science practices. When selecting a journal, you will want to review that journal’s submission guidelines to ensure that you understand and comply with its requirements. Another place to look for guidance on a journal’s stance on open science practices is editorial statements. These statements usually appear within the journal itself, but if the journal is owned by a society, they may also appear in society publications (e.g., American Psychological Association Monitor, Association for Psychological Science Observer).

Many journals are signatories of the TOP (Transparency and Openness Promotion) Guidelines, which specify three different levels of adoption for eight different transparency standards (Nosek et al., 2015; see also https://topfactor.org). Journals with policies at level 1 require authors to disclose details about their studies in their manuscripts – for instance, whether the data associated with studies are available. At level 2, sharing of study components (e.g., materials, data, or analysis code) is required for publication, with exceptions granted for valid legal and ethical restrictions on sharing. At level 3, the journal or its designee verifies the shared components – for instance, a journal might check whether a study’s results can be reproduced from shared analysis code. Importantly, journals can adopt different levels of transparency for the different standards. For instance, a journal might adopt level 1 (disclose) for pre-registration of analysis plans, but level 3 (verify) for study materials. Again, journal submission guidelines, along with editorial statements, provide guidance as to the levels adopted for each standard.

Some journals also offer badges for adopting transparent practices. At participating journals, authors declare whether they have pre-registered a study or shared materials and/or data, and the journal then marks the resulting paper with up to three badges (pre-registration, open data, open materials) indicating the availability of the shared content.

A final consideration is the pre-printing policies of a journal. Almost certainly, you will want the freedom to share your work on a preprint repository like PsyArXiv (https://psyarxiv.com). Preprint repositories allow authors to share their research ahead of publication, either before submitting the work for peer review at a journal or after the peer-review process is complete. Some repositories deem the latter class of manuscripts “post-prints” to distinguish them from papers that have not yet been published in a journal. Sharing early copies of your work will enable you to get valuable feedback prior to journal submission. Even if you are not ready to share a pre-publication copy of your work, sharing the final post-print increases access to the work – especially for those without access through a library, including researchers in many countries, scholars without university affiliations, and the general public. Manuscripts shared on PsyArXiv are indexed on Google Scholar, increasing their discoverability.

You can check the policies of your target journal at the Sherpa Romeo database (https://v2.sherpa.ac.uk/romeo/). The journals with the most permissive policies allow sharing of the author copy of a paper (i.e., what you send to the journal, not the typeset version) immediately on disciplinary repositories like PsyArXiv. Other journals impose an embargo on sharing of perhaps one or two years. A very small number of journals will not consider manuscripts that have been shared as preprints. It’s best to understand a journal’s policy before choosing to submit there.

Importantly, sharing pre- or post-print copies of your work is free to do, and it greatly increases the reach of your work. Another option (which may even be a requirement depending on your research funder) is to publish your work in a fully open-access journal (called “gold” open access) or in a traditional journal with the option to pay for your article to be made open access (called “hybrid” open access). Gold open-access journals use the fees from articles to cover the costs of publishing, but articles are free to read for everyone without a subscription. Hybrid journals, on the other hand, charge libraries large subscription fees (as they do with traditional journals), and they charge authors who opt to have their articles made open access, effectively doubling the journal’s revenue without incurring additional costs. The fees for hybrid open access are almost never worth it, given that authors can usually make their work accessible for free using preprint repositories.

Fees to publish your work in a gold open-access journal currently vary from around US$1000 on the low end to US$3000 or more on the high end. Typically, a research funder pays these fees, but if not, there may be funds available from your university library or research support office. Some journals offer fee waivers for authors who lack access to grant or university funding for these costs. Part of open science means making the results of research as accessible as possible. Gold open-access journals are one means of achieving this goal, but preprint repositories play a critical role as well.

5. Coda: The Importance of Community

Certainly, there are many tools and techniques to learn when it comes to open science practices. When you are just beginning, you will likely want to take it slow to avoid becoming overwhelmed. Additionally, not every practice described here will be relevant for every project. With time, you will learn to deploy the tools you need to serve a particular project’s goals. Yet, it is also important not to delay beginning to use these practices. Now is the time in your career where you are forming habits that you will carry with you for many years. You want to lay a solid foundation for yourself, and a little effort to learn a new skill or technology now will pay off down the road.

One of the best ways to get started with open science practices is to join a supportive community of other researchers who are also working towards the same goal. Your region or university might have a branch of ReproducibiliTea (https://reproducibilitea.org/), a journal club devoted to discussing and learning about open science practices. If it doesn’t, you could gather a few friends and start one, or you could join one of the region-free online clubs. Twitter is another excellent place to keep up to date on new practices, and it’s also great for developing a sense of community. Another option is to attend the annual meeting of the Society for the Improvement of Psychological Science (SIPS; http://improvingpsych.org). The SIPS meeting features workshops to learn new techniques, alongside active sessions (hackathons and unconferences) where researchers work together to develop new tools designed to improve psychological methods and practices. Interacting with other scholars provides an opportunity to learn from one another, but also provides important social support. Improving your research practices is a career-long endeavor; it is surely more fun not to work alone.

Acknowledgments

Thank you to Julia Bottesini and Sarah Schiavone for their thoughtful feedback. All errors and omissions are my own.

1. Reasons for Presenting Research

There are several pros and cons to evaluate when deciding whether to submit your research to a conference. There is value in sharing your science with others at the conference, such as professors, students, clinicians, teachers, and other professionals who might be able to use your work to advance their own work. As a personal gain, your audience may provide feedback, which may be invaluable to you in your professional development. Presenting research at conferences also allows for the opportunity to meet potential future advisors, employers, collaborators, or colleagues. Conferences are ideal settings for networking and, in fact, many conferences have forums organized for this exact purpose (e.g., job openings listed on a bulletin board and networking luncheons). The downsides to submitting your work to a conference include the time commitment of writing and constructing the presentation, the potential for rejection from the reviewers, the anxiety inherent in formal presentations, and the potential time and expenses of traveling to the meeting. Although we do believe that the benefits of presenting at conferences outweigh the costs, you should carefully consider your own list of pros and cons before embarking on this experience.

2. Presentation Venues

There are many different outlets for presenting research findings, ranging from departmental colloquia to international conferences. The decision to submit a proposal to one conference over another should be guided by both practical and professional reasoning. In selecting a convention, you might consider the following questions: Is this the audience to whom I wish to disseminate my findings? Are there other professionals that I would like to meet attending this conference? Are the other presentations of interest to me? Are the philosophies of the association consistent with my perspectives and training needs? Can I afford to travel to this location? Will my institution provide funding for the cost of this conference? Will my presentation be ready in time for the conference? Am I interested in visiting the city that is hosting the conference? Do the dates of the conference interfere with personal or professional obligations? Will this conference provide the opportunity to network with colleagues and friends? Is continuing education credit offered? Since the COVID-19 pandemic, many meetings have been held virtually, which provides a lower-cost option, but networking is more challenging and linking a vacation to the conference is no longer possible. Fortunately, there is a range of options and you should be able to find a venue that satisfies most of your professional presenting needs.

3. Types of Presentations

After selecting a conference, you must decide on the type of presentation. In general, presentation categories are similar across venues and include poster and oral presentations (e.g., papers, symposia, panel discussions) and workshops. In general, poster presentations are optimal for disseminating preliminary or pilot findings, whereas well-established findings, cutting-edge research, and conceptual/theoretical issues often are reserved for oral presentations and workshops. A call for abstracts or proposals is often distributed by the institution hosting the conference and announces particular topics of interest for presentations. If you are unsure about whether your research is best suited for a poster or oral presentation or workshop, refer to the call for abstracts or proposals and consult with more experienced colleagues. Keynote and invited addresses are other types of conference proceedings typically delivered by esteemed professionals or experts in the field. It is important to note that not all conferences use the same terminology, especially when comparing conferences across countries. For example, a “workshop” at one conference might be a full-day interactive training session and at another conference it might indicate a briefer oral presentation. The following sections are organized in accord with common formats found in many conferences.

The most common types of conference presentations (poster presentations, symposia, panel discussions, and workshops) deserve further discussion. Typically, these scientific presentations follow a consistent format, which is similar to the layout of a research manuscript. For example, first you might introduce the topic, highlight related prior work, outline the purpose and hypotheses of the study, review the methodology, and, lastly, present and discuss salient results and implications (see Drotar, 2000).

3.1 Poster Presentations

Poster presentations are the most common medium through which researchers disseminate findings. In this format, researchers summarize their primary aims, results, and conclusions in an easily digestible manner on a poster board. Poster sessions vary in duration, often ranging from 1 to 2 hours. Authors typically are present with their posters for the duration of the session to discuss their work with interested colleagues. Poster presentations are relatively less formal and more personal than other presentation formats, with the discussion of projects often assuming a conversational quality. That said, it is important to be prepared to answer challenging questions about the work. Typically, many posters within a particular theme (e.g., health psychology) are displayed in a large room so that audiences might walk around the room and talk one-to-one with the authors. Thus, poster sessions are particularly well-suited for facilitating networking and meeting with researchers working in similar areas.

Pragmatically, conference reviewers accept more posters for presentation than symposia, panel discussions, and workshops, and thus, the acceptance criteria are typically more lenient. Relatedly, researchers might choose posters to present findings from small projects or from preliminary or pilot studies. Symposia, panel discussions, and workshops allow for the formal presentation of more ground-breaking findings or of multiple studies. Poster presentations are an opportune time for students to become familiarized with disseminating findings and mingling with other researchers in the field.

3.2 Research Symposia

Symposia involve the aggregation of several individuals who present on a common topic. Depending on time constraints, 4–6 papers typically are featured, each lasting roughly 20 minutes, and often representing different viewpoints or facets of a broader topic. For example, a symposium on the etiology of anxiety disorders might be comprised of four separate papers representing the role of familial influences, biological risk factors, peer relationships, and emotional conditioning on the development of maladaptive anxiety. As a presenter, you might discuss one project or the findings from a few studies. Like a master of ceremonies, the symposium Chair typically organizes the entire symposium by selecting presenters, guiding the topics and style of presentation, and introducing the topic and presenters at the beginning of the symposium. In addition to these duties, the Chair often will present a body of work or a few studies at the beginning of the symposium. In addition to the Chair and presenters, a Discussant can be part of a symposium. The Discussant concludes the symposium by summarizing key findings from each paper, integrating the studies, and making more broad-based conclusions and directions for future research. Although a Discussant is privy to the presenters’ papers prior to the symposium in order to prepare the summary comments, he or she will often take notes during the presenters’ talks to augment any prepared commentary. Presenters are often researchers of varying levels of experience, while Chairs and Discussants are usually senior investigators. The formal presentation is often followed by a period for audience inquiry and discussion.

3.3 Panel Discussions

Panel discussions are similar to research symposia in that several professionals come together to discuss a common topic. Panel discussions, however, generally tend to be less formal and structured and more interactive and animated than symposia. For example, Discussants can address each other and interject comments throughout the discussion. Similar to symposia, these presentations involve the discussion of one or more important topics in the field by informed Discussants. As with symposia presentations, the Chair typically organizes these semi-formal discussions by contacting potential speakers and communicating the discussion topic and their respective roles.

3.4 Workshops

Conference workshops typically are longer (e.g., lasting at least three hours) and provide more in-depth, specialized training than symposia and panel discussions. It is not uncommon for workshop presenters to adopt a format similar to a structured seminar, in which mini-curricula are followed. Due to the length and specialized training involved, most workshop presenters enhance their presentations by incorporating interactive (e.g., role-plays) and multimedia (e.g., video clips) components. Workshops often are organized such that the information is geared for beginner, intermediate, or advanced professionals. Often conferences are organized such that participation in workshops must be reserved in advance and there might be additional fees associated with attendance. The cost should be balanced with the opportunity of obtaining unique training in a specialized area. Workshops are most often presented by seasoned professionals; however, more junior presenters with specialized skills/knowledge might conduct a workshop.

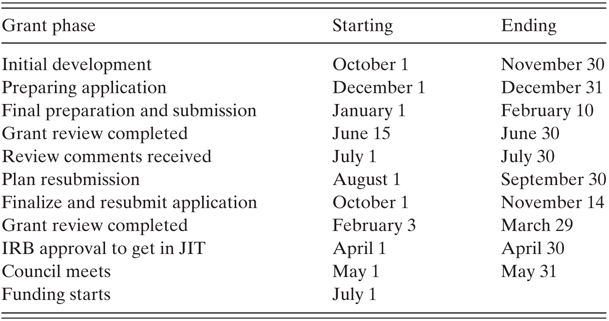

4. The Application Process

After selecting a venue and deciding on a presentation type, the next step is to submit an application to the conference you wish to attend. The application process typically involves submitting a brief abstract (e.g., 200–300 words) describing the primary aims, methods, results, and conclusions of your study. For symposia and other oral presentations, the selection committee might request an outline of your talk, curriculum vitae from all presenters, and a time schedule or presentation agenda. Some conferences might also request information regarding the educational objectives and goals of your presentation. One essential rule is to closely adhere to the directions for submissions to the conference. For example, if there is a word limit for a poster abstract submission, make sure that you do not exceed the number of words. Whereas some reviewers might not notice or mind, others might view it as unprofessional and possibly disrespectful, and as an easy decision rule for rejecting a submission.

Although the application process itself is straightforward, there are differences in opinion regarding whether and when it is advisable to submit your research. A commonly asked question is whether a poster or paper can be presented twice. Many would agree that it is acceptable to present the same data twice if the conferences draw different audiences (e.g., regional versus national conferences). Another issue to consider is when, or at what stage, a project should be submitted for presentation. Submitting research prior to analyzing your data can be risky. It would be unfortunate, for example, to submit prematurely, such as during the data collection phase, only to find that your results are not ready in time for the conference. Although some might be willing to take this risk, remember that it is worse to present low-quality work than not to present at all.

5. Preparing and Conducting Presentations

5.1 Choosing an Appropriate Outfit

Dress codes for conference proceedings typically are not formally stated; however, data suggest that perceptions of graduate student professionalism and competence are influenced by dress (e.g., Gorham et al., 1999). Although the appropriateness of certain attire is likely to vary, a good rule of thumb is to err on the side of professionalism. You also might consider the dress of your audience, and dress in an equivalent or more formal fashion. Although there will be people at conferences wearing unique styles of dress, students and professionals still early in their careers are best advised to dress professionally. It can be helpful to ask people who have already attended the conference what would be appropriate to wear. In addition to selecting your outfit, there are several preparatory steps you can take to help ensure a successful presentation.

5.2 Preparing for Poster Presentations

5.2.1 The Basics

The first step in preparing a poster is to be cognizant of the specific requirements put forth by the selected venue. For example, very specific guidelines often are provided, detailing the amount of board space available for each presenter (typically a 4-foot by 6-foot standing board is available). To ensure the poster will fit within the allotted space, it may be helpful to physically lay it out prior to the conference. This also may help to reduce future distress, given that back-to-back poster sessions are the norm; knowing how to arrange the poster in advance obviates the need to do so hurriedly in the few minutes between sessions. If you are using PowerPoint to design your poster, you can adjust the size of your layout to match the conference requirements.

5.2.2 Tips For Poster Construction

The overriding goal for poster presentations is to summarize your study using an easily digestible, reader-friendly format (Grech, 2018b). As you will discover from viewing other posters, there are many different styles to do this. If you have the resources, professional printers can create large glossy posters that are well received. However, cutting large construction paper to use as a mat for laser-printed poster pages can also appear quite professional. Some companies will even print the poster on a fabric material, so it can be folded up and stored easily in a suitcase for travel to and from the conference. Regardless of the framing, it is advisable to use consistent formatting (e.g., same style and font size throughout the poster), large font sizes (e.g., at least 20-point font for text and 40-point font for headings), and alignment of graphics and text (Zerwic et al., 2010). Another suggestion for enhancing readability and visual appeal is to use bullets, figures, and tables to illustrate important findings. Generally speaking, brief phrases (as opposed to wordy paragraphs) should be used to summarize pertinent points. It has been suggested to limit horizontal lines to 10 or fewer words and avoid using more than four colors (Zerwic et al., 2010). In short, it is important to keep your presentation succinct and avoid overcrowding on pages. Although there are a variety of fonts available and poster boards come in all colors imaginable, it is best to keep the poster professional. In other words, Courier, Arial, or Times New Roman are probably the best fonts to use because they are easy to read and they will not distract or detract from the central message of the poster (i.e., your research). In addition, dark font (e.g., blue, black) on a light background (e.g., yellow, white) is easier to read in brightly lit rooms, which is the norm for poster sessions. Be mindful of appropriately acknowledging any funding agencies or other organizations (e.g., universities) on the poster or in the oral presentation slides. Recently, the format of posters has shifted. The “better poster” format (https://youtu.be/1RwJbhkCA58) includes the main research finding in large text in the center of the poster with extra details and figures on the sides (Figure 12.1). This allows conference attendees to quickly assess whether the research is of interest to them and if they would like more detailed information.

Figure 12.1 Poster formats.

5.2.3 What To Bring

When preparing for a poster presentation, consider which materials might be either necessary or potentially useful to bring. For instance, it might be wise to bring tacks (Grech, 2018b). It also is advisable to create handouts summarizing the primary aims and findings and to distribute these to interested colleagues. The number of copies one provides often depends on the size of the conference and the number of individuals attending a particular poster session. We have found that for larger conferences, 20 handouts are a good minimum. In general, handouts are in high demand and supplies are quickly depleted, in which case, you should be equipped with a notepad to obtain the names and addresses of individuals interested in receiving the handout via mail or e-mail. With the “better poster” format, researchers often include a QR code on their poster. The QR code can link attendees to a copy of the poster, contact information of the researchers, or other relevant electronic documents.

5.2.4 Critically Evaluate Other Posters

We also recommend critically evaluating other posters at conferences and posters previously used by colleagues. You will notice great variability in poster style and formatting, with some researchers using glossy posters with colored photographs and others using plain white paper and black text. Make mental notes regarding the effective and ineffective presentation of information. What attracted you to certain posters? Which colors stood out and were the most readable? Such informal evaluations likely will be invaluable when making decisions on aspects such as poster formatting, colors, font, and style.

5.2.5 Prepare Your Presentation

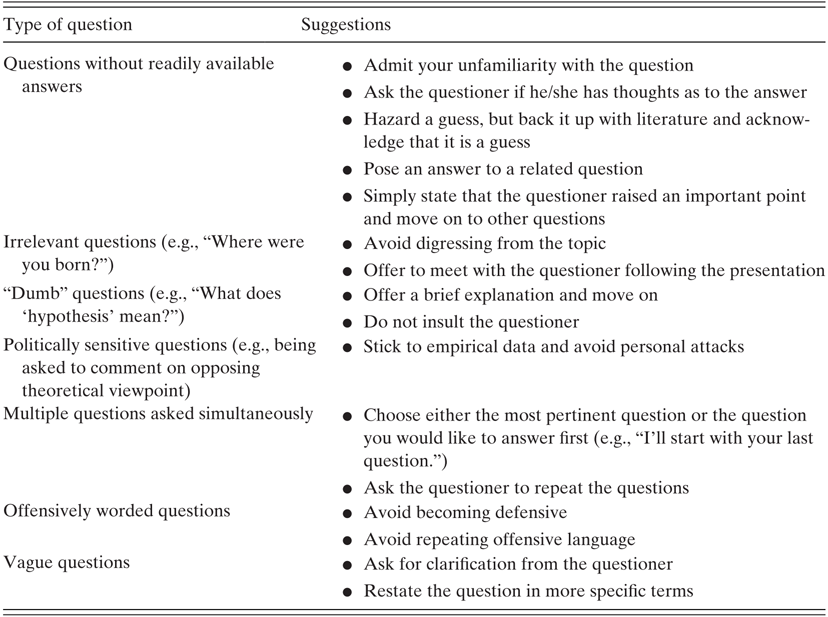

Poster session attendees will often approach your poster and ask you to summarize your study, so it is wise to prepare a brief overview of your study (e.g., 2 minutes). In addition, practice describing any figures or graphs displayed on your poster. Finally, attendees will often ask questions about your study (e.g., “What are the clinical implications?”, “What are some limitations to your study?”, “What do you recommend for future studies?”), so it may be helpful to have colleagues review your poster and ask questions. Table 12.1 provides some suggestions as to how to handle difficult questions.

Table 12.1 Handling difficult questions

| Type of question | Suggestions |
|---|---|
| Questions without readily available answers | |
| Irrelevant questions (e.g., “Where were you born?”) | |
| “Dumb” questions (e.g., “What does ‘hypothesis’ mean?”) | |
| Politically sensitive questions (e.g., being asked to comment on opposing theoretical viewpoint) | |
| Multiple questions asked simultaneously | |
| Offensively worded questions | |
| Vague questions | |

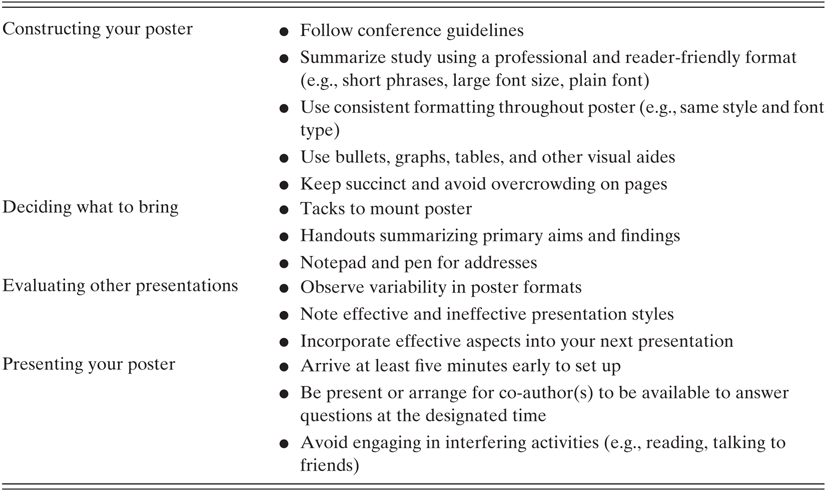

5.3 Conducting Poster Presentations

In general, presenting a poster is straightforward: tack the poster to the board at the beginning of the session, stand next to the poster and discuss the details of the project with interested viewers, and remove the poster at the end of the session. However, we have found that a surprisingly high number of presenters do not adequately fulfill these tasks. Arriving at the poster session at least five minutes early will allow you to find your allocated space, unpack your poster, and decide where to mount it on the board. When a poster consists of multiple frames, it might be easiest to lay out the frames on the floor before tacking them up on the board.

During the poster session, remember this fundamental rule: be present. It is permissible to browse other posters in the same session; however, always arrange for a co-author or another colleague knowledgeable about the study to staff the poster while you are away. Another guideline is to be available to answer questions and discuss the project with interested parties. In other words, refrain from reading, chatting with friends, or engaging in other activities that interfere with being available to discuss the study. At the conclusion of the poster session, it is important to quickly remove your poster so subsequent presenters have ample time to set up their posters. Suggestions for preparing and presenting posters are summarized in Table 12.2.

Table 12.2 Suggestions for poster presentations

| Constructing your poster |

|

| Deciding what to bring |

|

| Evaluating other presentations |

|

| Presenting your poster |

|

5.4 Preparing for Oral Presentations

5.4.1 The Basics

Similar to poster sessions, it is important to be familiar with and adhere to program requirements when preparing for oral presentations. For symposia, this might include sending an outline of your talk to the Chair and Discussant several weeks in advance and staying within a specified time limit when giving your talk. Although the Chair often will ensure that the talks adhere to the theme and do not excessively overlap, the presenter also can do this via active communication with the Chair, Discussant, and other presenters.

5.4.2 What To Bring

As with poster presentations, it is useful to anticipate and remember to bring necessary and potentially useful materials. For instance, individuals using PowerPoint should bring their slides in paper form in case of equipment failure. Equipment such as microphones is often available upon request; however, it is the presenter’s responsibility to reserve equipment in advance.

5.4.3 Critically Evaluate Other Presenters

By carefully observing other presenters, you might learn valuable ways to enhance your own presentations. Examine the format of the presentation, the level of detail provided, and the types and quality of audiovisual stimuli. Also try to note the vocal quality (e.g., intonation, pitch, pace, use of filler terms such as “um”), facial characteristics (e.g., smiling, eye contact with audience members), body movements (e.g., pacing, hand gestures), and other subtle aspects that can help or hinder presentations.

5.4.4 Practice, Practice, Practice

In terms of presentation delivery, repeated practice is essential for effective preparation (see Williams, 1995). For many people, students and seasoned professionals alike, public speaking can elicit significant levels of distress. Given extensive data supporting the beneficial effects of exposure to feared stimuli (see Wolpe, 1977), repeated rehearsal is bound to produce positive outcomes, including increased comfort, increased familiarity with content, and decreased levels of anxiety. Additionally, practicing will help presenters hone their presentation skills and develop a more effective presentational style. We recommend practicing in front of an “audience” and soliciting feedback regarding both content and presentational style. Solicit feedback on every aspect of your presentation from the way you stand to the content of your talk. It might be helpful to rehearse in front of informed individuals (e.g., mentors, graduate students, research groups) who ask relevant and challenging questions and subsequently provide constructive feedback (Grech, 2018a; Wellstead et al., 2017). Based on this feedback, determine which suggestions should be incorporated and modify your presentation accordingly. As a general rule, practice and hone your presentation to the point that you are prepared to present without any crutches (e.g., notes, overheads, slides).

5.4.5 Be Familiar and Anticipate

As much as possible, try to familiarize yourself with the audience both before and during the actual presentation (Baum & Boughton, 2016; Regula, 2020). By having background information, you can better tailor your talk to meet the professional levels and needs of those in attendance. It may be particularly helpful to have some knowledge regarding the educational background and general attitudes and interests of the audience (e.g., is the audience composed of laypeople and/or professionals in the field? What are the listeners’ general attitudes toward the topic and toward you as the speaker? Is the audience more interested in practical applications or in design and scientific rigor?). Are you critiquing previous work by authors who may be in the audience? By conducting an informal “audience analysis,” you will be better equipped to adapt your talk to meet the particular needs and interests of the audience. It is important to have a clear message that you want the audience to take with them after the presentation (Regula, 2020). Most conference-goers will be seeing many presentations during the conference, so having a clear take-home message can make it easier for the audience to remember the key points.

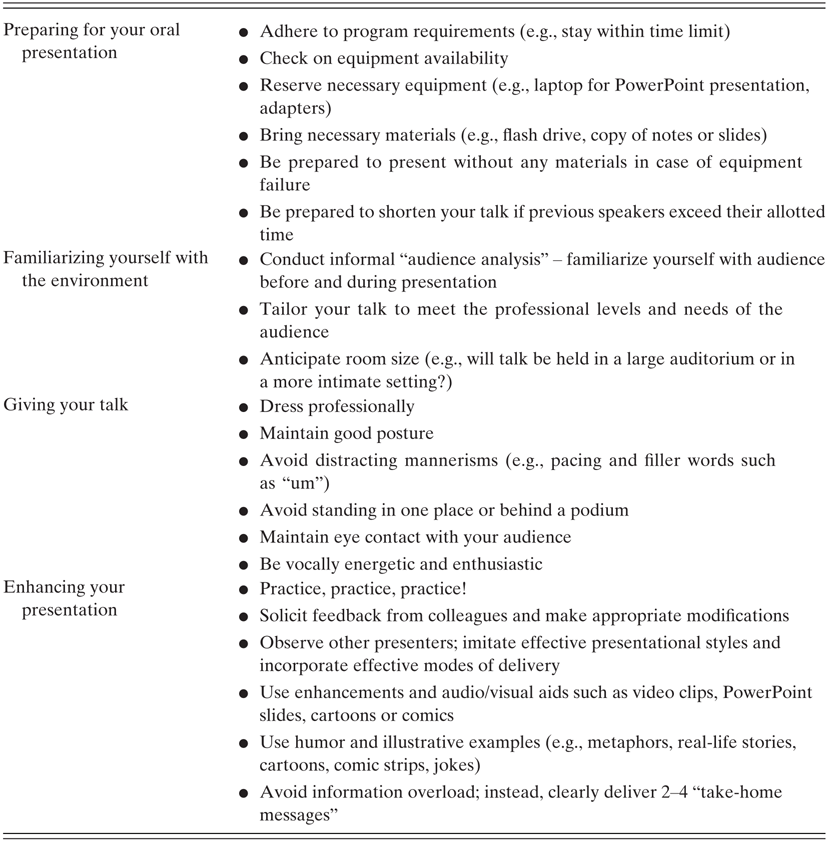

Similarly, it might be helpful to have some knowledge about key logistical issues, such as room size and availability of equipment (Baum & Boughton, 2016). For example, will the presentation take place in a large, auditorium-like room or in a more intimate setting with the chairs arranged in a semi-circle? If the former, will a microphone be available? Is there a podium at the front of the room that might influence where you will stand? Given the dimensions of the room, where should the slide projector be positioned? Although it may be impossible to answer all such questions, it is a good idea to have a general sense of where the presentation will take place and who will be attending. It may also help to rearrange the seating so that latecomers do not pose a distraction (Baum & Boughton, 2016). Suggestions for preparing and conducting oral presentations are summarized in Table 12.3.

Table 12.3 Oral presentations

| Preparing for your oral presentation |

|

| Familiarizing yourself with the environment |

|

| Giving your talk |

|

| Enhancing your presentation |

|

If the conference is virtual, it is critical to become familiar with the software or online platform well in advance of a synchronous event. For example, practicing with the camera and microphone and watching recordings of yourself will allow you to fine-tune the presentation and audio quality. Whether the presentation is synchronous or asynchronous, there are a number of tips for optimizing video presentations, including how to position the camera, how to light the presenter, behaviors to include or avoid, and what to consider in terms of the background. Given the variability and subjectivity of video presentation suggestions, we encourage readers to research this extensive topic to personalize and optimize their virtual presentations.

5.5 Conducting Oral Presentations

5.5.1 Using Audiovisual Enhancements

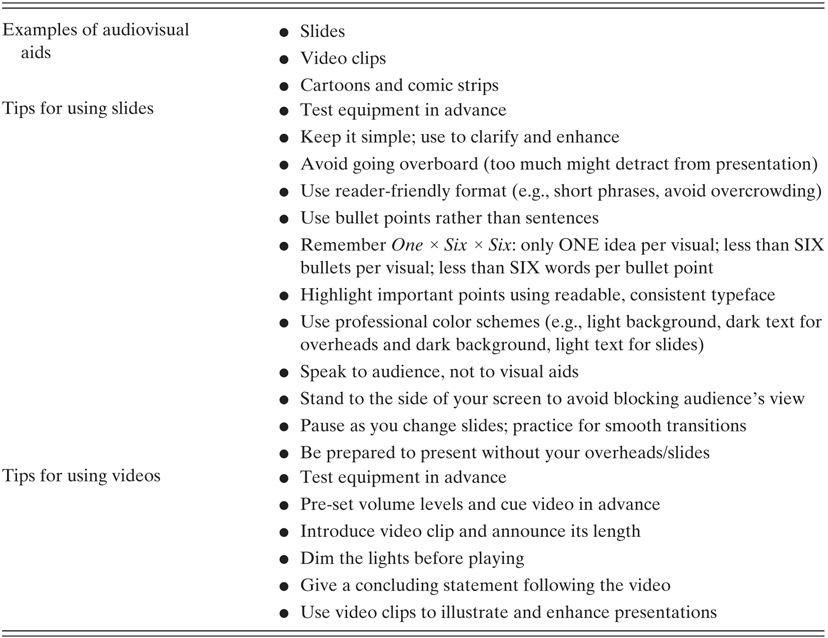

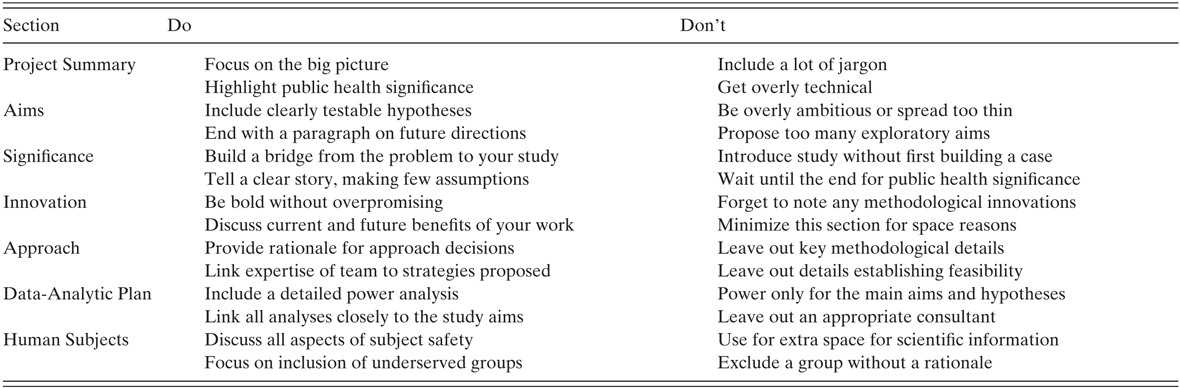

One strategy for enhancing oral presentations is to use audio/visual stimuli, such as slides or props (e.g., Grech, 2019; Hoff, 1988; Wilder, 1994; see Table 12.4). When using visual enhancements, keep them simple, and clearly highlight important points using a readable and consistent typeface (Blome et al., 2017; Grech, 2018a). Information should be easily assimilated and reader-friendly, which generally means limiting text to a few phrases rather than complete sentences or paragraphs and using sufficiently large font sizes (e.g., 36- to 48-point font for titles and 24- to 36-point font for text). In addition, it is a good idea to keep titles to one line and bullets to no more than two lines of information. Color schemes should be relatively subdued and “professional” in appearance. For slide presentations, a dark background and light text might be easier to read. Use fonts without serifs (e.g., Arial) as opposed to fonts with serifs (e.g., Times New Roman; Lefor & Maeno, 2016). Some conferences prefer a particular slide size, so it can be important to check their requirements before designing the presentation. Additionally, depending on the room setup, it can be hard for the audience to see the bottom of slides, so it can be helpful to avoid placing text near the bottom of the slide. See Figure 12.2 for examples of a poor and a good slide for an oral presentation.

Table 12.4 Using audiovisual enhancements

| Examples of audiovisual aids |

|

| Tips for using slides |

|

| Tips for using videos |

|

Figure 12.2 Sample poor and good slides for an oral presentation.

Using audiovisual aids, such as video clips, also can contribute substantially to the overall quality and liveliness of a presentation. When incorporating video clips, pre-set volume levels and cue up the video in advance. We also recommend announcing the length of the video, dimming lights, and giving a concluding statement following the video.

Multimedia equipment and audiovisual aids have the potential to liven up even the most uninspiring presentations; however, be cautious about becoming overly dependent on any medium. Rather, be fully prepared to deliver a high-quality presentation without the use of enhancements. It also might be wise to prepare a solid “back-up plan” in case your original mode of presentation must be abandoned due to equipment failure or some other unforeseen circumstance. Back-up overheads, for example, might rescue a presenter who learns of equipment failures minutes before presenting.

When using slides, it is important to avoid “going overboard” with information (Blome et al., 2017; Regula, 2020). Many of us will present research with which we are intimately familiar and in which we are invested. With projects that are particularly near and dear (e.g., theses and dissertations), it may be tempting to tell the audience as much as possible. It is not necessary, for example, to describe the intricacies of the data collection procedure and present every pre-planned and post-hoc analysis, along with a multitude of significant and non-significant F-values and coefficients. Such information overload might bore audience members, who are unlikely to care about or remember so many fine-grained details. Instead of committing this common presentation blunder, present key findings in a bulleted, easy-to-read format rather than sentences. To avoid overcrowding of slides and overheads, you might remember the One × Six × Six rule of thumb: only ONE idea per visual, fewer than SIX bullets per visual, and fewer than SIX words per bullet (Regula, 2020; see Figure 12.2).
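For presenters who draft their bullets as plain text, the countable parts of the One × Six × Six rule can be checked with a few lines of Python. This is a minimal sketch, assuming each slide is given as a list of bullet strings; the function name `check_one_six_six` is hypothetical, and the "one idea per visual" part of the rule still requires human judgment.

```python
def check_one_six_six(bullets, max_bullets=6, max_words=6):
    """Return warnings for one slide under the One x Six x Six rule of thumb.

    The rule is read strictly here: fewer than six bullets per slide and
    fewer than six words per bullet. Words are counted by splitting on spaces.
    """
    problems = []
    if len(bullets) >= max_bullets:
        problems.append(
            f"{len(bullets)} bullets; aim for fewer than {max_bullets}"
        )
    for number, bullet in enumerate(bullets, start=1):
        word_count = len(bullet.split())
        if word_count >= max_words:
            problems.append(
                f"bullet {number} has {word_count} words; aim for fewer than {max_words}"
            )
    return problems


# Example slide: the third bullet is a full sentence and gets flagged.
slide = [
    "Exposure reduced anxiety",
    "Effects held at follow-up",
    "We collected data from participants across three separate university sites",
]
for problem in check_one_six_six(slide):
    print(problem)
```

Treat any warning as a prompt to shorten the bullet or split the slide, not as a hard limit.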

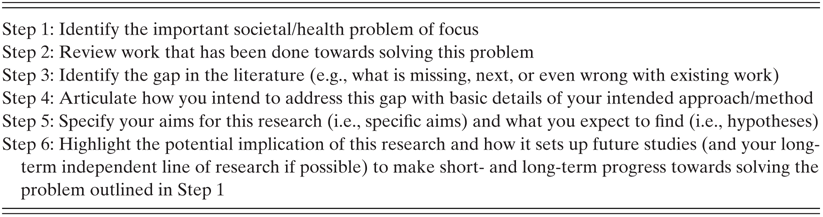

The length of your oral presentation will vary depending on time restrictions, but there are some general guidelines for how to structure your presentation. Zerwic et al. (2010) proposed a possible structure for research presentations, which should include title, acknowledgments, background, specific aims, methods, results, conclusions, and future directions sections. They also recommended how many slides to allocate to each section: the title, acknowledgments, background, specific aims, conclusions, and future directions sections each take up one slide, and the majority of your slides focus on the methods and results sections.
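The arithmetic behind this allocation can be sketched in Python. The source specifies only that six sections take one slide each and that the remaining slides go to methods and results; how to divide the remainder between those two sections is not stated, so the near-even split below (with any odd slide going to results) is purely an assumption, as is the function name `allocate_slides`.

```python
SINGLE_SLIDE_SECTIONS = [
    "Title", "Acknowledgments", "Background",
    "Specific aims", "Conclusions", "Future directions",
]


def allocate_slides(total_slides):
    """Return a section -> slide count plan in presentation order."""
    remaining = total_slides - len(SINGLE_SLIDE_SECTIONS)
    if remaining < 2:
        raise ValueError("need at least 8 slides to cover every section")
    # Assumption (not from the source): split the remainder roughly evenly,
    # giving the extra slide to results when the remainder is odd.
    methods = remaining // 2
    results = remaining - methods
    return {
        "Title": 1,
        "Acknowledgments": 1,
        "Background": 1,
        "Specific aims": 1,
        "Methods": methods,
        "Results": results,
        "Conclusions": 1,
        "Future directions": 1,
    }


# Example: a 15-slide talk leaves 9 slides for methods and results.
for section, count in allocate_slides(15).items():
    print(f"{section}: {count}")
```

With short talks the methods and results sections may no longer hold the majority of slides, in which case the one-slide sections may need to be combined.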

In short, remember and hold fast to this basic dictum: Audio/visual aids should be used to clarify and enhance (Cohen, 1990; Grech, 2018a; Wilder, 1994). Aids that detract, confuse, or bore one’s audience should not be used (soliciting feedback from colleagues and peers will assist in this selection process; Regula, 2020). Overly colorful and ornate visuals or excessive slide animation, for example, might detract and distract from the content of the presentation (Lefor & Maeno, 2016). Likewise, visual aids containing superfluous text might encourage audience members to read your slides rather than attend to your presentation. Keeping visuals simple also might prevent another presentation faux pas: reading verbatim from slides.