1. Introduction

Deep neural networks (DNNs) have demonstrated remarkable success at addressing challenging problems in various areas, such as computer vision (CV) [Reference Ren, He, Girshick and Sun1] and natural language processing (NLP) [Reference Sutskever, Vinyals and Le2, Reference Hirschberg and Manning3]. However, as DNN-based systems are increasingly deployed in safety-critical applications [Reference Bender, Gebru, McMillan-Major and Shmitchell4–Reference Dinan, Gavin Abercrombie, Spruit, Dirk Hovy and Rieser9], ensuring their safety and security becomes paramount. Current NLP systems cannot guarantee either truthfulness, accuracy, faithfulness or groundedness of outputs given an input query, which can lead to different levels of harm.

One such example in the NLP domain is the requirement for a chatbot to correctly disclose its non-human identity when prompted by the user to do so. Several pieces of legislation have recently been proposed that would enshrine this requirement in law [Reference Kop10, 11]. To comply with these new laws, the underlying DNN of the chatbot (or the sub-system responsible for identifying these queries) must, in theory, be 100% accurate in its recognition of such a query. However, a central theme of generative linguistics, going back to von Humboldt, is that language is ‘an infinite use of finite means’, i.e. there exist many ways to say the same thing. In reality, the questions can come in a near infinite number of different forms, all with similar semantic meanings. For example: “Are you a Robot?”, “Am I speaking with a person?”, “Am i texting to a real human?”, “Aren’t you a chatbot?”. Failure to recognise the user’s intent and thus failure to answer the question correctly could potentially have legal implications for designers of these systems [Reference Kop10, 11].

Similarly, as such systems become widespread in their use, it may be desirable to have guarantees on queries concerning safety critical domains, for example when the user asks for medical advice. Research has shown that users tend to attribute undue expertise to NLP systems [Reference Dinan, Gavin Abercrombie, Spruit, Dirk Hovy and Rieser7, Reference Abercrombie and Rieser12] potentially causing real world harm [Reference Bickmore, Trinh, Olafsson, O’Leary, Asadi, Rickles and Cruz13] (e.g. ‘Is it safe to take these painkillers with a glass of wine?’). However, a question remains on how to ensure that NLP systems can give formally guaranteed outputs, particularly for scenarios that require maximum control over the output.

One possible solution has been to apply formal verification techniques to DNNs, which aim at ensuring that, for every possible input, the output generated by the network satisfies the desired properties. One example has already been given above, i.e. guaranteeing that a system will accurately disclose its non-human identity. This example is an instance of the more general problem of DNN robustness verification, where the aim is to guarantee that every point in a given region of the embedding space is classified correctly. Concretely, given a network $N: \mathbb{R}^{m} \rightarrow \mathbb{R}^{n}$, one first defines subspaces $\mathcal{S}_1, \ldots, \mathcal{S}_{l}$ of the vector space $\mathbb{R}^{m}$. For example, one can define “$\epsilon\textrm{-cubes}$” or “$\epsilon\textrm{-balls}$” (Footnote 1) around all input vectors given by the dataset in question (in which case the number of $\mathcal{S}_1, \ldots, \mathcal{S}_{l}$ will correspond to the number of samples in the given dataset). Then, using a separate verification algorithm $\mathcal{V}$, we verify whether $N$ is robust for each $\mathcal{S}_i$, i.e. whether $N$ assigns the same class to all vectors contained in $\mathcal{S}_i$. Note that each $\mathcal{S}_i$ is itself infinite (i.e. continuous), and thus $\mathcal{V}$ is usually based on equational reasoning, abstract interpretation or bound propagation (see related work in Section 2). The subset of $\mathcal{S}_1, \ldots, \mathcal{S}_{l}$ for which $N$ is proven robust forms the set of verified subspaces of the given vector space (for $N$). The percentage of verified subspaces is called the verification success rate (or verifiability). Given $\mathcal{S}_1, \ldots, \mathcal{S}_{l}$, we say a DNN $N_{1}$ is more verifiable than $N_{2}$ if $N_{1}$ has a higher verification success rate on $\mathcal{S}_1, \ldots, \mathcal{S}_{l}$. Despite not providing a formal guarantee about the entire embedding space, this result is useful as it provides guarantees about the behaviour of the network over a large set of unseen inputs.
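The definitions in this paragraph can be written compactly. The following is only a sketch in the notation of this section. Two things are assumptions made here for illustration: reading "assigns the same class" as agreement of the highest-scoring output, and reading the $\epsilon\textrm{-cube}$ and $\epsilon\textrm{-ball}$ around a vector $x$ as $\ell_\infty$ and $\ell_2$ neighbourhoods respectively. The helper name $\mathrm{class}_N$ is likewise introduced only for this sketch.

$$\mathrm{class}_N(x) = \arg\max_{1 \le j \le n} N(x)_j, \qquad N \text{ is robust on } \mathcal{S}_i \iff \forall x, x' \in \mathcal{S}_i.\; \mathrm{class}_N(x) = \mathrm{class}_N(x')$$

$$\epsilon\textrm{-cube}(x) = \{x' \in \mathbb{R}^{m} \mid \|x' - x\|_\infty \le \epsilon\}, \qquad \epsilon\textrm{-ball}(x) = \{x' \in \mathbb{R}^{m} \mid \|x' - x\|_2 \le \epsilon\}$$

$$\textrm{verification success rate of } N = \frac{\left|\{\, i \in \{1, \ldots, l\} \mid \mathcal{V} \text{ proves } N \text{ robust on } \mathcal{S}_i \,\}\right|}{l} \times 100\%$$

Under this reading, $N_{1}$ is more verifiable than $N_{2}$ exactly when the last quantity is larger for $N_{1}$ than for $N_{2}$ on the same $\mathcal{S}_1, \ldots, \mathcal{S}_{l}$.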

Existing verification approaches primarily focus on computer vision (CV) tasks, where images are seen as vectors in a continuous space and every point in the space corresponds to a valid image. In contrast, sentences in NLP form a discrete domain (Footnote 2), making it challenging to apply traditional verification techniques effectively. In particular, taking an NLP dataset $\mathcal{Y}$ to be a set of sentences ${s}_1, \ldots, {s}_{q}$ written in natural language, an embedding $E$ is a function that maps a sentence to a vector in $\mathbb{R}^{m}$. The resulting vector space is called the embedding space. Due to the discrete nature of the set $\mathcal{Y}$, the reverse of the embedding function $E^{-1}: \mathbb{R}^{m} \rightarrow \mathcal{Y}$ is undefined for some elements of $\mathbb{R}^{m}$. This problem is known as the “problem of the embedding gap”. Sometimes, one uses the term to more generally refer to any discrepancies that $E$ introduces, for example, when it maps dissimilar sentences close together in $\mathbb{R}^{m}$. We use the term in both the mathematical and the NLP sense.

Mathematically, the general (geometric) “DNN robustness verification” approach of defining and verifying subspaces of $\mathbb{R}^{m}$ should work, and some prior works exploit this fact. However, pragmatically, because of the embedding gap, usually only a tiny fraction of vectors contained in the verified subspaces map back to valid sentences. When a verified subspace contains no or very few sentence embeddings, we say that verified subspace has low generalisability. Low generalisability may render verification efforts ineffective for practical applications.

From the NLP perspective, there are other, more subtle, examples where the embedding gap can manifest. Consider an example of a subspace containing sentences that are semantically similar to the sentence: ‘i really like too chat to a human. are you one?’. Suppose we succeed in verifying a DNN to be robust on this subspace. This provides a guarantee that the DNN will always identify sentences in this subspace as questions about human/robot identity. But suppose the embedding function $E$ wrongly embeds sentences belonging to an opposite class into this subspace. For example, the LLM Vicuna [Reference Chiang, Li and Lin14] generates the following sentence as a rephrasing of the previous one: ‘Do you take pleasure in having a conversation with someone?’. Suppose our verified subspace contained an embedding of this sentence too, and thus our verified DNN identifies this second sentence to belong to the same class as the first one. However, the second sentence is not a question about human/robot identity of the agent! When we can find such an example, we say that the verified subspace is prone to embedding errors.

Robustness verification in NLP is particularly susceptible to this problem, because we cannot cross the embedding gap in the opposite direction as the embedding function is not invertible. This means it is difficult for humans to understand what sort of sentences are captured by a given subspace.

1.1 Contributions

Our main aim is to provide a general and principled verification methodology that bridges the embedding gap when possible; and gives precise metrics to evaluate and report its effects in any case. The contributions split into two main groups, depending on whether the embedding gap is approached from mathematical or NLP perspective.

1.1.1 Contributions part 1: characterisation of verifiable subspaces and general NLP Verification Pipeline

We start by showing, through a series of experiments, that purely geometric approaches to NLP verification (such as those based on the $\epsilon\textrm{-ball}$ [Reference Shi, Zhang, Chang, Huang and Hsieh15]) suffer from the verifiability-generalisability trade-off: that is, when one metric improves, the other deteriorates. Figure 1 gives a good idea of the problem: the smaller the $\epsilon\textrm{-balls}$ are, the more verifiable they are, and the less generalisable. To the best of our knowledge, this phenomenon has not been reported in the literature before (in the NLP context). We propose a general method for measuring generalisability of the verified subspaces, based on algorithmic generation of semantic attacks on sentences included in the given verified semantic subspace.

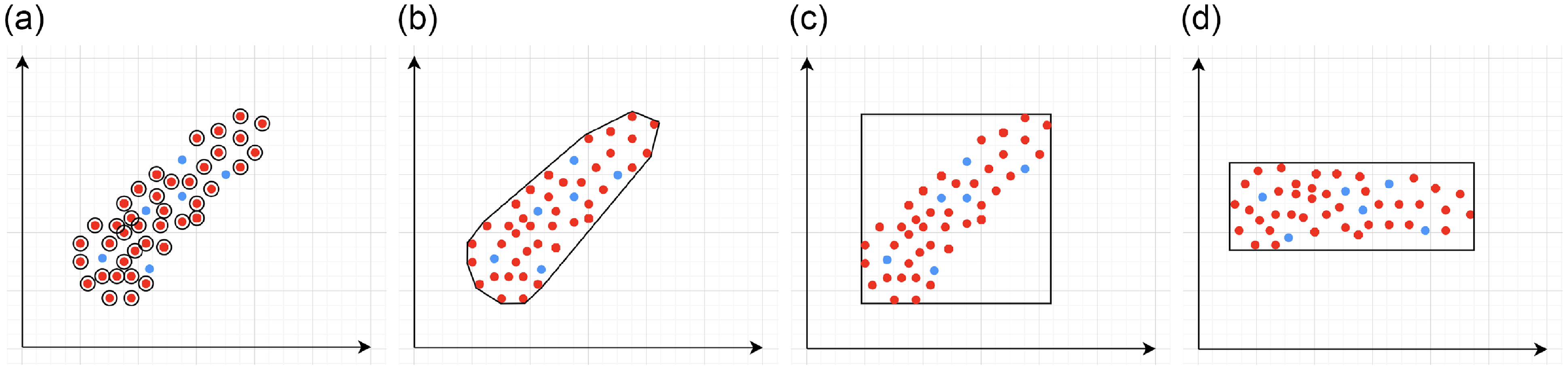

Figure 1. An example of verifiable but not generalisable $\epsilon\textrm{-balls}$ (a), convex-hull around selected embedded points (b), hyper-rectangle around same points (c) and rotation of such hyper-rectangle (d) in 2-dimensions. The red dots represent sentences in the embedding space from the training set belonging to one class, while the turquoise dots are embedded sentences from the test set belonging to the same class.

An alternative method to the purely geometric approach is to construct subspaces of the embedding space based on the semantic perturbations of sentences (first attempts to do this appeared in [Reference Casadio, Arnaboldi, Daggitt, Isac, Dinkar, Kienitz, Rieser and Komendantskaya16–Reference Zhang, Albarghouthi and D’Antoni19]). Concretely, the idea is to form each $\mathcal{S}_i$ by embedding a sentence $s$ and $n$ semantic perturbations of $s$ into the real vector space and enclosing them inside some geometric shape. Ideally, this shape should be the convex hull around the $n+1$ embedded sentences (see Figure 1); however, calculating convex hulls with sufficient precision is computationally infeasible for a high number of dimensions. Thus, simpler shapes, such as hyper-cubes and hyper-rectangles, are used in the literature. We propose a novel refinement of these ideas, by including the method of a hyper-rectangle rotation in order to increase the shape precision (see Figure 1). We will call the resulting shapes semantic subspaces (in contrast to those obtained purely geometrically).
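To illustrate the difference between an axis-aligned hyper-rectangle and a rotated one, the following is a minimal sketch in Python with NumPy. It is not the paper's implementation: the rotation is assumed here to follow the principal axes of the embedded points (computed by an SVD), which is only one plausible way to rotate the box, and all function names (`hyper_rectangle`, `rotated_hyper_rectangle`, `contains`, `volume`) and the toy 2-dimensional points are hypothetical.

```python
import numpy as np

def hyper_rectangle(points):
    """Axis-aligned bounding box: per-dimension lower and upper bounds."""
    points = np.asarray(points, dtype=float)
    return points.min(axis=0), points.max(axis=0)

def rotated_hyper_rectangle(points):
    """Bounding box aligned with the principal axes of the points.

    Assumption: the rotation comes from an SVD of the centred points;
    the text does not commit to this particular choice.
    """
    points = np.asarray(points, dtype=float)
    centre = points.mean(axis=0)
    # Rows of vt form an orthonormal basis of the whole space.
    _, _, vt = np.linalg.svd(points - centre, full_matrices=True)
    rotated = (points - centre) @ vt.T
    return centre, vt, rotated.min(axis=0), rotated.max(axis=0)

def contains(box, x, tol=1e-9):
    """Check whether x lies inside a rotated hyper-rectangle."""
    centre, vt, lower, upper = box
    z = (np.asarray(x, dtype=float) - centre) @ vt.T
    return bool(np.all(z >= lower - tol) and np.all(z <= upper + tol))

def volume(lower, upper):
    """Volume of a box given its per-axis bounds."""
    return float(np.prod(upper - lower))

# Toy stand-ins for the embeddings of a sentence and its perturbations.
pts = np.array([[0.0, 0.0], [1.0, 1.0], [2.0, 2.0], [3.0, 3.2], [1.0, 1.4]])
lo, hi = hyper_rectangle(pts)
box = rotated_hyper_rectangle(pts)
print("axis-aligned volume:", volume(lo, hi))
print("rotated volume:", volume(box[2], box[3]))
```

For points that lie roughly along a diagonal, as in this toy data, the rotated box encloses the same points with a smaller volume, so it excludes more of the space that contains no embedded sentences. A membership test such as `contains` could also be used to count how many held-out embeddings fall inside a subspace, although the precise generalisability metric is defined later in the paper.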

A few questions have been left unanswered in the previous work [Reference Casadio, Arnaboldi, Daggitt, Isac, Dinkar, Kienitz, Rieser and Komendantskaya16–Reference Zhang, Albarghouthi and D’Antoni19]. First, because generalisability of the verified subspaces is not reported in the literature, we cannot know whether the prior semantically-informed approaches are better in that respect than purely geometric methods. If they are better in both verifiability and generalisability, it is unclear whether the improvement should be attributed to:

• the fact that verified semantic subspaces simply have an optimal volume (for the verifiability-generalisability trade-off), or

• the improved precision of verified subspaces that comes from using the semantic knowledge.

Through a series of experiments, we confirm that semantic subspaces are more verifiable and more generalisable than their geometric counterparts. Moreover, by comparing the volumes of the obtained verified semantic and geometric subspaces, we show that the improvement is partly due to finding an optimal size of subspaces (for the given embedding space), and partly due to improvement in shape precision.

The second group of unresolved questions concerns the robust training regimes that are used in NLP verification as a means of improving verifiability of subspaces in prior works [Reference Casadio, Arnaboldi, Daggitt, Isac, Dinkar, Kienitz, Rieser and Komendantskaya16–Reference Zhang, Albarghouthi and D’Antoni19]. It was not clear what made robust training successful:

• was it because additional examples generally improved the precision of the decision boundary (in which case dataset augmentation would have a similar effect);

• was it because adversarial examples specifically improved adversarial robustness (in which case simple $\epsilon\textrm{-ball}$ PGD attacks would have a similar effect); or

• did the knowledge of semantic subspaces play the key role?

Through a series of experiments we show that the last of these is the case. In order to do this, we formulate a semantically robust training method that uses projected gradient descent on semantic subspaces (rather than on $\epsilon\textrm{-balls}$, as the famous PGD algorithm does [Reference Madry, Makelov, Schmidt, Tsipras and Vladu20]). We use different forms of semantic perturbations, at character, word and sentence levels (alongside the standard PGD training and data augmentation) to perform semantically robust training. We conclude that semantically robust training generally wins over the standard robust training methods. Moreover, the more sophisticated the semantic perturbations used in semantically robust training, the more verifiable the resulting neural network is (at no cost to generalisability). For example, using the strongest form of attack (the polyjuice attack [Reference Wu, Ribeiro, Heer and Weld21]) in semantically robust training, we obtain DNNs that are more verifiable irrespective of the way the verified subspaces are formed.
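The idea of running projected gradient descent inside a semantic subspace rather than an $\epsilon\textrm{-ball}$ can be sketched as follows. This is a minimal illustration under several assumptions that are not taken from the paper: the subspace is an axis-aligned hyper-rectangle (so projection is a per-coordinate clip), a linear softmax classifier stands in for the DNN so that the input gradient has a closed form, and the update uses the sign of the gradient as in standard $\ell_\infty$ PGD. All names and numbers are hypothetical.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax of a logit vector."""
    z = z - z.max()
    e = np.exp(z)
    return e / e.sum()

def loss_and_grad(W, b, x, y):
    """Cross-entropy loss of a linear classifier and its gradient w.r.t. x."""
    p = softmax(W @ x + b)
    onehot = np.zeros_like(p)
    onehot[y] = 1.0
    return float(-np.log(p[y])), W.T @ (p - onehot)

def pgd_in_box(W, b, x, y, lower, upper, step=0.1, iters=20):
    """Search the hyper-rectangle [lower, upper] for a high-loss point."""
    x_adv = np.clip(x, lower, upper)
    for _ in range(iters):
        _, g = loss_and_grad(W, b, x_adv, y)
        # Ascend the loss, then project back onto the box by clipping.
        x_adv = np.clip(x_adv + step * np.sign(g), lower, upper)
    return x_adv

# Toy two-class example; the box plays the role of a semantic subspace.
W = np.eye(2)
b = np.zeros(2)
x = np.array([1.0, 0.0])
lower = np.array([0.5, -0.5])
upper = np.array([1.5, 0.5])
x_adv = pgd_in_box(W, b, x, 0, lower, upper)
start_loss, _ = loss_and_grad(W, b, x, 0)
adv_loss, _ = loss_and_grad(W, b, x_adv, 0)
print(x_adv, start_loss, adv_loss)
```

In a robust training loop, the point `x_adv` would be fed back into the training loss in place of (or alongside) `x`. The only change from $\epsilon\textrm{-ball}$ PGD in this sketch is the projection step, which clips to the bounds of the subspace instead of to a fixed radius around `x`.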

As a result, we arrive at a fully parametric approach to NLP verification that disentangles the four components:

• choice of the semantic attack (on the NLP side),

• semantic subspace formation in the embedding space (on the geometric side),

• semantically robust training (on the machine learning side),

• choice of the verification algorithm (on the verification side).

We argue that this approach opens the way for more principled NLP verification methods that reduce the effects of the embedding gap, and for the generation of more transparent NLP verification benchmarks. We implement a tool, ANTONIO, that generates NLP verification benchmarks based on the above choices. This paper is the first to use a complete SMT-based verifier (namely Marabou [Reference Wu, Isac, Zeljić, Tagomori, Daggitt, Kokke and Barrett22]) for NLP verification.

1.1.2 Contributions part 2: NLP verification pipeline in use: an NLP perspective on the embedding gap

We test the theoretical results by suggesting an NLP verification pipeline, a general methodology that starts with NLP analysis of the dataset and obtaining semantically similar perturbations that together characterise the semantic meaning of a sentence; proceeds with embedding of the sentences into the real vector space and defining semantic subspaces around embeddings of semantically similar sentences; and culminates with using these subspaces for both training and verification. This clear division into stages allows us to formulate practical NLP methods for minimising the effects of the embedding gap. In particular, we show that the quality of the generated sentence perturbations may be improved through the use of human evaluation, cosine similarity and ROUGE-N. We introduce the novel embedding error metric as an effective practical way to measure the quality of the embedding functions. Through a detailed case study, we show how geometric and NLP intuitions can be put to work to obtain DNNs that are more verifiable over semantic subspaces that generalise better and are less prone to embedding errors. Perhaps more importantly, the proposed methodology opens the way for transparency in reporting NLP verification results, something that this domain will benefit from if it reaches the stage of practical deployment of NLP verification pipelines.
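As an illustration of two of the automatic measures mentioned above, the following sketch computes cosine similarity between two vectors and a recall-style ROUGE-N score between a sentence and a candidate perturbation. It assumes whitespace tokenisation, lower-casing and the recall variant of ROUGE-N; how these measures are actually applied in the pipeline is described later in the paper, and the function names here are hypothetical.

```python
import math
from collections import Counter

def cosine_similarity(u, v):
    """Cosine of the angle between two vectors given as sequences of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)

def ngrams(tokens, n):
    """Multiset of the n-grams occurring in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def rouge_n_recall(reference, candidate, n=1):
    """Fraction of reference n-grams that also occur in the candidate."""
    ref = ngrams(reference.lower().split(), n)
    cand = ngrams(candidate.lower().split(), n)
    total = sum(ref.values())
    if total == 0:
        return 0.0
    # Clip each n-gram's count by its count in the candidate.
    overlap = sum(min(count, cand[gram]) for gram, count in ref.items())
    return overlap / total

original = "are you a robot"
perturbed = "are you a human"
print(rouge_n_recall(original, perturbed, n=1))
print(rouge_n_recall(original, perturbed, n=2))
```

ROUGE-N measures surface overlap, whereas cosine similarity would be applied to the embeddings of the two sentences; the two can disagree, which is one reason to report both.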

Paper Outline. From here, the paper proceeds as follows. Section 2 gives an extensive literature review encompassing DNN verification methods generally, and NLP verification methods in particular. The section culminates with distilling a common “NLP verification pipeline” encompassing the existing literature. Based on the understanding of major components of the pipeline, the rest of the paper focuses on improving understanding or implementation of its components. Section 3 formally defines the components of the pipeline in a general mathematical notation, which abstracts away from particular choices of sentence perturbation, sentence embedding, training and verification algorithms. The central notion the section introduces is that of geometric and semantic subspaces. Section 4 makes full use of this general definition, and shows that semantic subspaces play a pivotal role in improving verification and training of DNNs in NLP. This section formally defines the generalisability metric and considers the problem of the generalisability-verifiability trade-off. Through thorough empirical evaluation, it shows that a principled approach to defining semantic subspaces can help to improve both generalisability and verifiability of DNNs, thus reducing the effects of the trade-off. Section 5 further tests the NLP verification pipelines using state-of-the-art NLP tools, and analyses the effects of the embedding gap from the NLP perspective; in particular, it introduces a method of measuring the embedding error and reporting this metric alongside verifiability and generalisability. Section 6 concludes the paper and discusses future work.

2. Related work

2.1 DNN verification

Formal verification is an active field across several domains including hardware [Reference Kroening and Paul23, Reference Patankar, Jain and Bryant24], software [Reference Jourdan, Laporte, Blazy, Leroy and Pichardie25], network protocols [Reference Metere and Arnaboldi26] and many more [Reference Woodcock, Larsen, Bicarregui and Fitzgerald27]. However, it was only recently that this became applicable to the field of machine learning [Reference Katz, Barrett, Dill, Julian and Kochenderfer28]. An input query to a verifier consists of a subspace within the embedding space and a target subspace of outputs, typically a target output class. The verifier then returns either true, false or unknown. True indicates that there exists an input within the given input subspace whose output falls within the given output subspace, often accompanied by an example of such input. False indicates that no such input exists. Several verifiers are popular in DNN verification and competitions [Reference Bak, Liu and Johnson29–Reference Singh, Ganvir, Püschel and Vechev32]. We can divide them into 2 main categories: complete verifiers which return true/false and incomplete verifiers which return true/unknown. While complete verifiers are always deterministic, incomplete verifiers may be probabilistic. Unlike deterministic verification, probabilistic verification is not sound and a verifier may incorrectly output true with a very low probability (typically 0.01%).

Complete Verification based on Linear Programming & Satisfiability Modulo Theories (SMT) solving. Generally, SMT solving is a group of methods for determining the satisfiability of logical formulas with respect to underlying mathematical theories such as real arithmetic, bit-vectors or arrays [Reference Barrett and Tinelli33]. These methods extend traditional satisfiability (SAT) solving by incorporating domain-specific reasoning, making them particularly useful for verifying complex systems. In the context of neural network verification, SMT solvers encode network behaviours and safety properties as logical constraints, enabling rigorous checks for violations of specifications [Reference Albarghouthi34]. When the activation functions are piecewise linear (e.g. ReLU), the DNN can be encoded by conjunctions and disjunctions of linear inequalities and thus linear programming algorithms can be directly applied to solve the satisfiability problem. A state-of-the-art tool is Marabou [Reference Wu, Isac, Zeljić, Tagomori, Daggitt, Kokke and Barrett22], which answers queries about neural networks and their properties in the form of constraint satisfaction problems. Marabou takes the network as input and first applies multiple pre-processing steps to infer bounds for each node in the network. It applies the algorithm ReLUplex [Reference Katz, Barrett, Dill, Julian and Kochenderfer28], a combination of Simplex [Reference Dantzig35] search over linear constraints, modified to work for networks with piece-wise linear activation functions. With time, Marabou grew into a complex prover with multiple heuristics supplementing the original ReLUplex algorithm [Reference Wu, Isac, Zeljić, Tagomori, Daggitt, Kokke and Barrett22], for example it now includes mixed-integer linear programming (MILP) [Reference Winston36] and abstract interpretation based algorithms which we survey below. 
MILP-based approaches [Reference Cheng, Nührenberg and Ruess37–Reference Tjeng, Xiao and Tedrake39] encode the verification problem as a mixed-integer linear programming problem, in which the constraints are linear inequalities and the objective is represented by a linear function. Thus, the DNN verification problem can be precisely encoded as a MILP problem. For example, ERAN [Reference Singh, Gehr, Mirman, Püschel, Vechev, Bengio, Wallach, Larochelle, Grauman, Cesa-Bianchi and Garnett40] combines abstract interpretation with the MILP solver GUROBI [41]. As the discussion of Branch and Bound (BaB) methodologies later in this section shows, the verification community has effectively consolidated these diverse approaches. Modern verifiers, such as $\alpha\beta$-CROWN [Reference Xu, Zhang, Wang, Wang, Jana, Lin and Hsieh42, Reference Wang, Zhang, Xu, Lin, Jana, Hsieh and Kolter43], take full advantage of this combination and effectively balance efficiency with precision.
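To make the encoding of a piecewise linear activation concrete, consider a single neuron $y = \mathrm{ReLU}(x)$. The following is a sketch of the standard disjunctive and mixed-integer ("big-M" style) formulations, assuming that bounds $l \le x \le u$ with $l < 0 < u$ have already been computed. The symbols $y$, $l$, $u$ and the binary variable $a$ are introduced here for illustration only.

$$y = \max\{x, 0\} \iff (x \ge 0 \,\wedge\, y = x) \;\vee\; (x \le 0 \,\wedge\, y = 0)$$

$$y \ge 0, \qquad y \ge x, \qquad y \le u \cdot a, \qquad y \le x - l\,(1 - a), \qquad a \in \{0, 1\}$$

With $a = 1$ the inequalities force $y = x$ (and hence $x \ge 0$); with $a = 0$ they force $y = 0$ (and hence $x \le 0$). The disjunction in the first line is therefore captured exactly by linear constraints plus one integer variable per neuron.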

Incomplete Verification based on Abstract Interpretation takes inspiration from the domain of abstract interpretation, and mainly uses linear relaxations on ReLU neurons, resulting in an over-approximation of the initial constraint. Abstract interpretation was first developed by Cousot and Cousot [Reference Cousot and Cousot44] in 1977. It formalises the idea of abstraction of mathematical structures, in particular those involved in the specification of properties and proof methods of computer systems [Reference Cousot45] and it has since been used in many applications [Reference Cousot and Cousot46]. Specifically, for DNN verification, this technique can model the behaviour of a network using an abstract domain that captures the possible range of values the network can output for a given input. Abstract interpretation-based verifiers can define a lower bound and an upper bound of the output of each ReLU neuron as linear constraints, which define a region called ReLU polytope that gets propagated through the network. To propagate the bounds, one can use interval bound propagation (IBP) [Reference Wong and Kolter47–Reference Mirman, Gehr and Vechev50]. The strength of IBP-based methods lies in their efficiency; they are faster than alternative approaches and demonstrate superior scalability. However, their primary limitation lies in the inherently loose bounds they produce [Reference Gowal, Dvijotham, Stanforth, Bunel, Qin, Uesato, Arandjelovic, Mann and Kohli48]. This drawback becomes particularly pronounced in the case of deeper neural networks, typically those with more than 10 layers [Reference Li, Xie and Li51], where they cannot certify non-trivial robustness properties. 
Other methods that are less efficient but produce tighter bounds are based on polyhedra abstraction, such as CROWN [Reference Zhang, Weng, Chen, Hsieh and Daniel52] and DeepPoly [Reference Singh, Gehr, Püschel and Vechev53], or based on multi-neuron relaxation, such as PRIMA [Reference Müller, Makarchuk, Singh, Püschel and Vechev54]. The abstract interpretation tool CORA [Reference Althoff55] uses polyhedral abstractions and reachability analysis for formal verification of neural networks. It integrates various set representations, such as zonotopes, and algorithms to compute reachable sets for both continuous and hybrid systems, providing tighter bounds in verification tasks. Another mature tool in this category is ERAN [Reference Singh, Gehr, Mirman, Püschel, Vechev, Bengio, Wallach, Larochelle, Grauman, Cesa-Bianchi and Garnett40], which uses abstract domains (DeepPoly) with custom multi-neuron relaxations (PRIMA) to support fully-connected, convolutional and residual networks with ReLU, Sigmoid, Tanh and Maxpool activations. Note that, having lost completeness, these tools can work with a more general class of neural networks (e.g. neural networks with non-linear layers).
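Interval bound propagation, the simplest of the bound-propagation methods discussed above, can be sketched in a few lines. The following Python/NumPy code is an illustration rather than any tool's actual implementation: it assumes a small fully-connected ReLU network given as a list of `(W, b)` pairs, uses $\epsilon\textrm{-cubes}$ around the inputs, and reports the fraction of cubes on which robustness is proven. The function names, the toy network and the data are all hypothetical.

```python
import numpy as np

def ibp_bounds(layers, lower, upper):
    """Propagate the box [lower, upper] through affine layers with ReLU between them."""
    for k, (W, b) in enumerate(layers):
        centre, radius = (upper + lower) / 2, (upper - lower) / 2
        mid = W @ centre + b
        rad = np.abs(W) @ radius
        lower, upper = mid - rad, mid + rad
        if k < len(layers) - 1:  # no ReLU after the output layer
            lower, upper = np.maximum(lower, 0), np.maximum(upper, 0)
    return lower, upper

def verified_by_ibp(layers, x, eps, label):
    """True if IBP proves that every point of the eps-cube around x gets class `label`."""
    x = np.asarray(x, dtype=float)
    lo, hi = ibp_bounds(layers, x - eps, x + eps)
    # Sound but incomplete: False means "unknown", not "counterexample found".
    return bool(lo[label] > np.delete(hi, label).max())

def success_rate(layers, xs, labels, eps):
    """Fraction of eps-cubes for which robustness is proven."""
    proven = sum(verified_by_ibp(layers, x, eps, y) for x, y in zip(xs, labels))
    return proven / len(xs)

# Toy two-layer network and three labelled inputs.
layers = [
    (np.eye(2), np.zeros(2)),
    (np.array([[1.0, -1.0], [-1.0, 1.0]]), np.zeros(2)),
]
xs = [[2.0, 0.0], [0.0, 2.0], [1.0, 0.95]]
labels = [0, 1, 0]
print(success_rate(layers, xs, labels, eps=0.1))
```

The third input lies close to the decision boundary, so its cube cannot be verified even for a small `eps`. This mirrors the point made in the text that the bounds produced by interval propagation are loose: a failure to verify does not by itself show that a misclassified point exists.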

Modern Neural Network Verifiers. Modern verifiers are complex tools that take advantage of a combination of complete and incomplete methods as well as additional heuristics. The term Branch and Bound (BaB) [Reference Wang, Zhang, Xu, Lin, Jana, Hsieh and Kolter43, Reference Gehr, Mirman, Drachsler-Cohen, Tsankov, Chaudhuri and Vechev56–Reference Zhang, Wang, Xu, Li, Li, Jana, Hseih and Kolter61] often refers to the method that relies on the piecewise linear property of DNNs: since the function computed by each ReLU neuron, $\mathrm{ReLU}(x) = \max\{x, 0\}$, is piecewise linear, we can consider its two linear pieces $x\geq 0$, $x\leq 0$ separately. A BaB verification approach, as the name suggests, consists of two parts: branching and bounding. It first derives a lower bound and an upper bound, then, if the lower bound is positive it terminates with ‘verified’, else, if the upper bound is non-positive it terminates with ‘not verified’ (bounding). Otherwise, the approach recursively chooses a neuron to split into two branches (branching), resulting in two linear constraints. Then bounding is applied to both constraints and if both are satisfied the verification terminates, otherwise the other neurons are split recursively. When all neurons are split, the branch will contain only linear constraints, and thus the approach applies linear programming to compute the constraint and verify the branch. It is important to note that BaB approaches themselves are neither inherently complete nor incomplete. BaB is an algorithm for splitting problems into sub-problems and requires a solver to resolve the linear constraints. The completeness of the verification depends on the combination of BaB and the solver used. Multi-Neuron Guided Branch-and-Bound (MN-BaB) [Reference Ferrari, Mueller, Jovanović and Vechev59] is a state-of-the-art neural network verifier that builds on the tight multi-neuron constraints proposed in PRIMA [Reference Müller, Makarchuk, Singh, Püschel and Vechev62] and leverages these constraints within a BaB framework to yield an efficient, GPU-based dual solver. Another state-of-the-art tool is $\alpha \beta$-CROWN [Reference Xu, Zhang, Wang, Wang, Jana, Lin and Hsieh42, Reference Wang, Zhang, Xu, Lin, Jana, Hsieh and Kolter43], a neural network verifier based on an efficient linear bound propagation framework and branch-and-bound. It can be accelerated efficiently on GPUs and can scale to relatively large convolutional networks (e.g. $10^7$ parameters). It also supports a wide range of neural network architectures (e.g. CNN, ResNet and various activation functions).
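The bound-then-branch loop can be sketched in a few lines of Python. Note the simplifications, all of which are assumptions made for illustration: the sketch branches on input intervals rather than on ReLU neurons, it uses plain interval bounds instead of a linear-programming or dual solver, and the property checked is that a scalar output is positive on the whole input box. The names `ibp_output_bounds` and `branch_and_bound` and the toy network are hypothetical.

```python
import numpy as np

def ibp_output_bounds(lo, hi, layers):
    """Interval bounds on the scalar output of a ReLU network over the box [lo, hi]."""
    for i, (W, b) in enumerate(layers):
        centre, radius = (lo + hi) / 2.0, (hi - lo) / 2.0
        mid, rad = W @ centre + b, np.abs(W) @ radius
        lo, hi = mid - rad, mid + rad
        if i < len(layers) - 1:              # ReLU on hidden layers only
            lo, hi = np.maximum(lo, 0.0), np.maximum(hi, 0.0)
    return float(lo[0]), float(hi[0])

def branch_and_bound(lo, hi, layers, max_depth=20):
    """Check whether f(x) > 0 for every x in the box [lo, hi]."""
    out_lo, out_hi = ibp_output_bounds(lo, hi, layers)
    if out_lo > 0.0:                         # bounding: property holds on this box
        return "verified"
    if out_hi <= 0.0:                        # bounding: property fails on this box
        return "not verified"
    if max_depth == 0:                       # give up: bounds remain inconclusive
        return "unknown"
    d = int(np.argmax(hi - lo))              # branching: bisect the widest input dimension
    mid = (lo[d] + hi[d]) / 2.0
    left_hi, right_lo = hi.copy(), lo.copy()
    left_hi[d], right_lo[d] = mid, mid
    left = branch_and_bound(lo, left_hi, layers, max_depth - 1)
    if left == "not verified":
        return left
    right = branch_and_bound(right_lo, hi, layers, max_depth - 1)
    if right == "not verified":
        return right
    return "verified" if left == right == "verified" else "unknown"

# Hypothetical toy network: f(x) = ReLU(x + 1) - ReLU(x) + 0.5 on x in [-1, 1].
layers = [
    (np.array([[1.0], [1.0]]), np.array([1.0, 0.0])),
    (np.array([[1.0, -1.0]]), np.array([0.5])),
]
result = branch_and_bound(np.array([-1.0]), np.array([1.0]), layers)
```

On the full box the bounds are inconclusive (the lower bound is negative and the upper bound positive), but after one split at zero each half has a positive lower bound, so the property is verified. The explicit "unknown" outcome reflects the point made above: whether the overall procedure is complete depends on the bounding method and the solver it is paired with.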

Probabilistic Incomplete Verification approaches add random noise to models to smooth them, and then derive certified robustness for these smoothed models. This field is commonly referred to as Randomised Smoothing, given that these approaches provide probabilistic guarantees of robustness, and all current probabilistic verification techniques are tailored for smoothed models [Reference Lecuyer, Atlidakis, Geambasu, Hsu and Jana63–Reference Mohapatra, Ko, Weng, Chen, Liu and Daniel68]. Given that our work focuses on deterministic approaches, here we only report the existence of this line of work without going into details.

Note that these existing verification approaches primarily focus on CV tasks, where images are seen as vectors in a continuous space and every point in the space corresponds to a valid image, while sentences in NLP form a discrete domain, making it challenging to apply traditional verification techniques effectively.

In this work, we use both an abstract interpretation-based incomplete verifier (ERAN [Reference Singh, Gehr, Mirman, Püschel, Vechev, Bengio, Wallach, Larochelle, Grauman, Cesa-Bianchi and Garnett40]) and an SMT-based complete verifier (Marabou [Reference Wu, Isac, Zeljić, Tagomori, Daggitt, Kokke and Barrett22]) in order to demonstrate the effect that the choice of a verifier may bring and demonstrate common trends.

2.2 Geometric representations in DNN verification

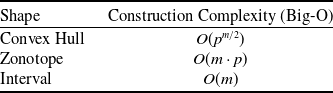

Geometric representations form the backbone of many DNN verification techniques, enabling the encoding and manipulation of input and output bounds during analysis. Among these, hyper-rectangles, including $\epsilon \textrm {-cubes}$, are the most widely used due to their simplicity and efficiency in over-approximating neural network behaviours [Reference Wong and Kolter47, Reference Gowal, Dvijotham, Stanforth, Bunel, Qin, Uesato, Arandjelovic, Mann and Kohli48]. These representations are computationally lightweight, making them highly scalable to large networks. However, they often produce loose approximations, particularly in deeper or more complex architectures, which can limit the precision of the verification results [Reference Gowal, Dvijotham, Stanforth, Bunel, Qin, Uesato, Arandjelovic, Mann and Kohli48]. Other representations, such as zonotopes [Reference Singh, Gehr, Püschel and Vechev53, Reference Gehr, Mirman, Drachsler-Cohen, Tsankov, Chaudhuri and Vechev56, Reference Singh, Gehr, Püschel and Vechev69], offer tighter approximations and better capture the linear dependencies between neurons but at a higher computational cost. Polyhedra-based methods, as employed in tools like DeepPoly [Reference Singh, Gehr, Püschel and Vechev53] and PRIMA [Reference Müller, Makarchuk, Singh, Püschel and Vechev54], provide even more precise abstractions by considering multi-dimensional relationships between neurons. However, these methods trade off efficiency for precision, making them less scalable to large and deep networks. Ellipsoidal representations [Reference Althoff55] are another class of geometric abstractions that provide compact and smooth bounds for neural network outputs. These representations are particularly useful for capturing the effects of continuous transformations in hybrid systems and other control applications. However, operations such as intersection and propagation through non-linear layers can be computationally intensive, which limits their applicability in large-scale neural network verification tasks. The dominance of hyper-rectangles in the field stems from their balance of computational simplicity and generality.
Nonetheless, ongoing research continues to explore how alternative shapes, hybrid approaches or adaptive representations might better meet the demands of increasingly complex neural network architectures.
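The difference in precision between hyper-rectangles and zonotopes can be seen on a small example. The sketch below, in Python with NumPy, pushes the same input cube through two affine maps using both representations. It covers affine layers only, since non-linear layers require additional relaxations, and the function names and matrices are assumptions chosen for illustration.

```python
import numpy as np

def box_affine(lo, hi, W, b):
    """Hyper-rectangle [lo, hi] mapped through x -> W @ x + b (interval arithmetic)."""
    centre, radius = (lo + hi) / 2.0, (hi - lo) / 2.0
    mid, rad = W @ centre + b, np.abs(W) @ radius
    return mid - rad, mid + rad

def zonotope_affine(centre, gens, W, b):
    """Zonotope {centre + gens @ e : e in [-1, 1]^k} mapped through x -> W @ x + b (exact)."""
    return W @ centre + b, W @ gens

def zonotope_bounds(centre, gens):
    """Tightest axis-aligned box that contains the zonotope."""
    radius = np.abs(gens).sum(axis=1)
    return centre - radius, centre + radius

# Input: the cube [-1, 1]^2, written both as a box and as a zonotope.
lo, hi = np.array([-1.0, -1.0]), np.array([1.0, 1.0])
centre, gens = np.zeros(2), np.eye(2)

# Two affine maps: y = (x1 + x2, x1 - x2), then z = y1 + y2 = 2 * x1.
W1, b1 = np.array([[1.0, 1.0], [1.0, -1.0]]), np.zeros(2)
W2, b2 = np.array([[1.0, 1.0]]), np.zeros(1)

box_lo, box_hi = box_affine(*box_affine(lo, hi, W1, b1), W2, b2)
zon_lo, zon_hi = zonotope_bounds(*zonotope_affine(*zonotope_affine(centre, gens, W1, b1), W2, b2))
```

The box yields the interval [-4, 4] for z, while the zonotope yields the exact range [-2, 2], because its generators record that y1 and y2 both depend on the same inputs. The price is a generator matrix that must be stored and multiplied at every layer.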

2.3 Robust training

Verifying DNNs poses significant challenges if they are not appropriately trained. The fundamental issue lies in the failure of DNNs, including even sophisticated models, to meet essential verification properties, such as robustness [Reference Casadio, Komendantskaya, Daggitt, Kokke, Katz, Amir and Rafaeli70]. To enhance robustness, various training methodologies have been proposed. It is noteworthy that, although robust training by projected gradient descent [Reference Madry, Makelov, Schmidt, Tsipras and Vladu20, Reference Goodfellow, Shlens and Szegedy71, Reference Kolter and Madry72] predates verification, contemporary approaches are often related to, or derived from, the corresponding verification methods by optimising verification-inspired regularisation terms or injecting specific data augmentation during training. In practice, after robust training, the model usually achieves higher certified robustness and is more likely to satisfy the desired verification properties [Reference Casadio, Komendantskaya, Daggitt, Kokke, Katz, Amir and Rafaeli70]. Thus, robust training is a strong complement to robustness verification approaches.

Robust training techniques can be classified into several large groups:

- data augmentation [Reference Rebuffi, Gowal, Calian, Stimberg, Wiles and Mann73],
- adversarial training [Reference Madry, Makelov, Schmidt, Tsipras and Vladu20, Reference Goodfellow, Shlens and Szegedy71] including property-driven training [Reference Fischer, Balunovic, Drachsler-Cohen, Gehr, Zhang, Vechev, Chaudhuri and Salakhutdinov74, Reference Slusarz, Komendantskaya, Daggitt, Stewart, Stark, Piskac and Voronkov75],
- IBP training [Reference Gowal, Dvijotham, Stanforth, Bunel, Qin, Uesato, Arandjelovic, Mann and Kohli48, Reference Zhang, Chen, Xiao, Gowal, Stanforth, Li, Boning and Hsieh76] and other forms of certified training [Reference Müller, Eckert, Fischer and Vechev77], or
- a combination thereof [Reference Casadio, Komendantskaya, Daggitt, Kokke, Katz, Amir and Rafaeli70, Reference Zhang, Albarghouthi and D’Antoni78].

Data augmentation involves the creation of synthetic examples through the application of diverse transformations or perturbations to the initial training data. These generated instances are then incorporated into the original dataset to enhance the training process. Adversarial training entails identifying worst-case examples at each epoch during the training phase and calculating an additional loss on these instances. State-of-the-art adversarial training relies on gradient-based attack algorithms, such as the single-step FGSM [Reference Goodfellow, Shlens and Szegedy71] and the iterative, projected gradient descent-based PGD [Reference Madry, Makelov, Schmidt, Tsipras and Vladu20]. Certified training methods focus on providing mathematical guarantees about the model’s behaviour within certain bounds. Among them, we can name IBP training [Reference Gowal, Dvijotham, Stanforth, Bunel, Qin, Uesato, Arandjelovic, Mann and Kohli48, Reference Zhang, Chen, Xiao, Gowal, Stanforth, Li, Boning and Hsieh76] techniques, which impose intervals or bounds on the predictions or activations of the model, ensuring that the model’s output lies within a specific range with high confidence.
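The search for worst-case examples that underlies adversarial training can be sketched as follows. This is a minimal illustration in Python with NumPy, assuming a logistic-regression model so that the input gradient has a closed form, and perturbations bounded in the infinity norm. The function names, weights and hyperparameters are hypothetical, and a real training loop would additionally compute the loss on the returned example and update the model.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def loss(x, y, w, b):
    """Binary cross-entropy of a logistic-regression model on one example."""
    p = sigmoid(w @ x + b)
    return -(y * np.log(p) + (1.0 - y) * np.log(1.0 - p))

def loss_grad_x(x, y, w, b):
    """Gradient of the loss with respect to the input x (closed form for this model)."""
    return (sigmoid(w @ x + b) - y) * w

def fgsm_attack(x, y, w, b, eps):
    """Single step of size eps in the direction of the gradient sign."""
    return x + eps * np.sign(loss_grad_x(x, y, w, b))

def pgd_attack(x, y, w, b, eps, step, n_steps):
    """Repeated gradient-sign steps, each projected back onto the eps-cube around x."""
    x_adv = x.copy()
    for _ in range(n_steps):
        x_adv = x_adv + step * np.sign(loss_grad_x(x_adv, y, w, b))
        x_adv = np.clip(x_adv, x - eps, x + eps)   # projection step
    return x_adv

# Hypothetical model and input.
w, b = np.array([1.0, -2.0]), 0.1
x, y = np.array([0.5, 0.2]), 1.0
x_pgd = pgd_attack(x, y, w, b, eps=0.1, step=0.04, n_steps=5)
x_fgsm = fgsm_attack(x, y, w, b, eps=0.1)
```

For a linear model the two attacks end at the same corner of the cube; on deeper networks the iterative variant typically finds stronger examples because the gradient changes along the path.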

Note that all techniques mentioned above can be categorised based on whether they primarily augment the data (such as data augmentation) or augment the loss function (as seen in adversarial, IBP and certified training). Augmenting the data tends to be efficient, although it may not help against stronger adversarial attacks. Conversely, methods that manipulate the loss functions directly are more resistant to strong adversarial attacks but often come with higher computational costs. Ultimately, the choice between altering data or loss functions depends on the specific requirements of the application and the desired trade-offs between performance, computational complexity and robustness guarantees.

2.4 NLP robustness

There exists a substantial body of research dedicated to enhancing the adversarial robustness of NLP systems [Reference Zhang, Sheng, Alhazmi and Li79–Reference Dong, Luu, Ji and Liu85]. These efforts aim to mitigate the vulnerability of NLP models to adversarial attacks and improve their resilience in real-world scenarios [Reference Wang, Wang, Wang, Wang and Ye80, Reference Wang, Wang and Yang81] and mostly employ data augmentation techniques [Reference Feng, Gangal, Wei, Chandar, Vosoughi, Mitamura and Hovy86, Reference Dhole, Gangal, Gehrmann, Gupta, Li, Mahamood and Zhang87]. In NLP, we can distinguish perturbations based on three main criteria:

- where and how the perturbations occur,
- whether they are altered automatically using some defined rules (vs. generated by humans or large language models (LLMs)) and
- whether they are adversarial (as opposed to random).

In particular, perturbations can occur at the character, word or sentence level [Reference Cheng, Jiang and Macherey88–Reference Cao, Li, Fang, Zhou, Gao, Zhan and Tao90] and may involve deletion, insertion, swapping, flipping, substitution with synonyms, concatenation with characters or words, or insertion of numeric or alphanumeric characters [Reference Liang, Li, Su, Bian, Li and Shi91–Reference Lei, Cao, Li, Zhou, Fang and Pechenizkiy93]. For instance, in character level adversarial attacks, Belinkov et al. [Reference Belinkov and Bisk94] introduce natural and synthetic noise to input data, while Gao et al. [Reference Gao, Lanchantin, Soffa and Qi95] and Li et al. [Reference Li, Ji, Du, Li and Wang96] identify crucial words within a sentence and perturb them accordingly. Word level attacks can be categorised, according to the perturbation method employed, into gradient-based [Reference Liang, Li, Su, Bian, Li and Shi91, Reference Samanta and Mehta97], importance-based [Reference Ivankay, Girardi, Marchiori and Frossard98, Reference Di, Jin, Zhou and Szolovits99] and replacement-based [Reference Alzantot, Sharma, Elgohary, Ho, Srivastava and Chang100–Reference Pennington, Socher and Manning102] strategies. Moreover, Moradi et al. [Reference Moradi and Samwald103] introduce rule-based non-adversarial perturbations at both the character and word levels. Their method simulates various types of noise typically caused by spelling mistakes, typos and other similar errors. In sentence level adversarial attacks, some perturbations [Reference Jia and Liang104, Reference Wang and Bansal105] are created so that they do not impact the original label of the input and can be incorporated as a concatenation in the original text. In such scenarios, the expected behaviour from the model is to maintain the original output, and the attack can be deemed successful if the label/output of the model is altered.
Additionally, non-rule-based sentence perturbations can be obtained through prompting LLMs [Reference Chiang, Li and Lin14, Reference Wu, Ribeiro, Heer and Weld21] to generate rephrasing of the inputs. By augmenting the training data with these perturbed examples, models are exposed to a more diverse range of linguistic variations and potential adversarial inputs. This helps the models to generalise better and become more robust to different types of adversarial attacks. To help with this task, the NLP community has gathered a dataset of adversarial attacks named AdvGLUE [Reference Wang, Xu and Wang106], which aims to be a principled and comprehensive benchmark for NLP robustness measurements.
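As an illustration of rule-based character-level perturbations, the following Python sketch applies a swap, deletion or insertion to one randomly chosen word of a sentence. It is written in the spirit of the typo-like noise described above but does not reproduce the exact rules of any cited work; all function names and the example sentence are assumptions.

```python
import random

def swap_adjacent(word, rng):
    """Swap two neighbouring characters (a common typing error)."""
    if len(word) < 2:
        return word
    i = rng.randrange(len(word) - 1)
    chars = list(word)
    chars[i], chars[i + 1] = chars[i + 1], chars[i]
    return "".join(chars)

def delete_char(word, rng):
    """Drop one character; single-character words are left unchanged."""
    if len(word) < 2:
        return word
    i = rng.randrange(len(word))
    return word[:i] + word[i + 1:]

def insert_char(word, rng, alphabet="abcdefghijklmnopqrstuvwxyz"):
    """Insert one random lowercase letter at a random position."""
    i = rng.randrange(len(word) + 1)
    return word[:i] + rng.choice(alphabet) + word[i:]

def perturb_sentence(sentence, operation, seed=0):
    """Apply a character-level operation to one randomly chosen word."""
    rng = random.Random(seed)            # seeded for reproducibility
    words = sentence.split()
    if not words:
        return sentence
    j = rng.randrange(len(words))
    words[j] = operation(words[j], rng)
    return " ".join(words)

original = "Are you human"
variants = [perturb_sentence(original, op) for op in (swap_adjacent, delete_char, insert_char)]
```

Each variant differs from the original by a single edit, so the intended label (here, a query about non-human identity) is expected to be preserved, which is what makes such perturbations usable for data augmentation.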

In this work we employ PGD-based adversarial training as the method to enhance the robustness and verifiability of our models against gradient-based adversarial attacks. For non-adversarial perturbations, we create rule-based perturbations at the character and word level as in Moradi et al. [Reference Moradi and Samwald103] and non-rule-based perturbations at the sentence level using PolyJuice [Reference Wu, Ribeiro, Heer and Weld21] and Vicuna [Reference Chiang, Li and Lin14]. We thus cover most combinations of the three choices above (bypassing only human-generated adversarial attacks, as there is insufficient data to admit the systematic evaluation that is important for this study).

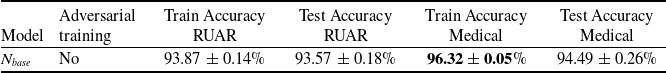

2.5 Datasets and use cases used in NLP verification

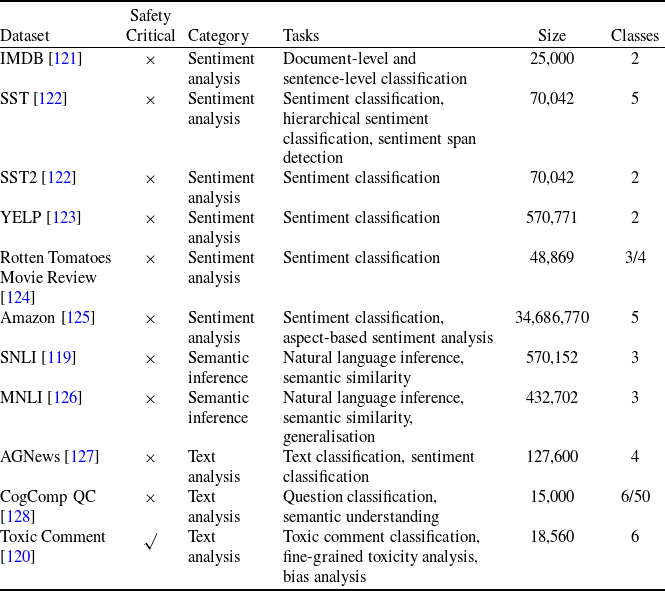

Existing NLP verification datasets. Table 1 summarises the main features and tasks of the datasets used in NLP verification. Despite their diverse origins and applications, the datasets in the literature are usually binary or multi-class text classification problems. Furthermore, datasets can be sensitive to perturbations, i.e. perturbations can have non-trivial impact on label consistency. For example, Jia et al. [Reference Jia, Raghunathan, Göksel and Liang17] use IBP with the SNLI [Reference Bowman, Angeli, Potts, Manning, Màrquez, Callison-Burch and Su119]Footnote 3 dataset (see Tables 1 and 2) to show that word perturbations (e.g. ‘good’ to ‘best’) can change whether one sentence entails another. Some works such as Jia et al. [Reference Jia, Raghunathan, Göksel and Liang17] try to address this label consistency, while others do not.

Table 1. Summary of the main features of the datasets used in NLP verification

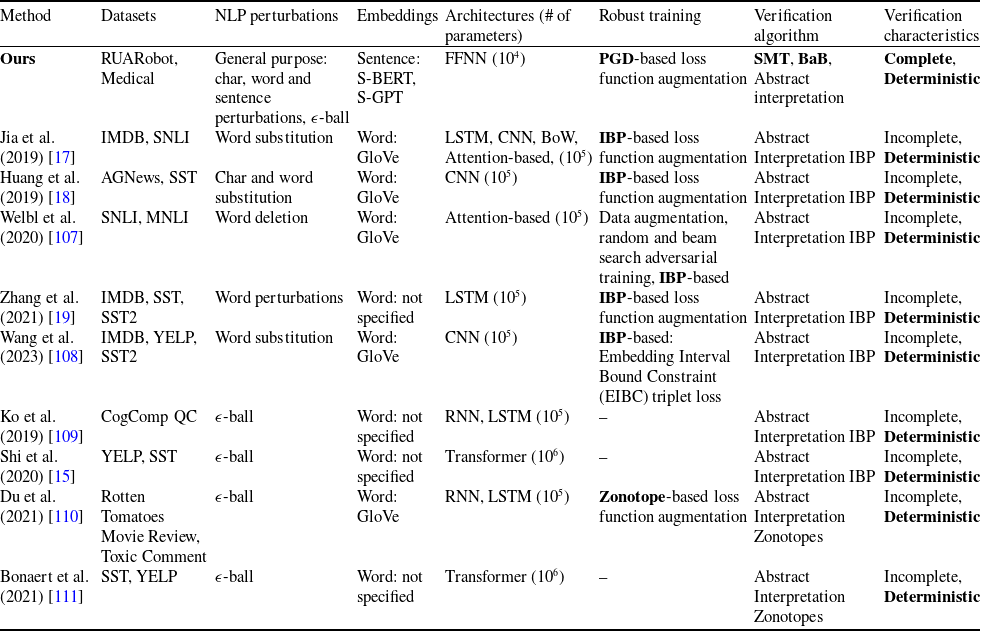

Table 2. Summary of the main features of the existing NLP verification approaches. In bold are state-of-the-art methods

Additionally, we find that the previous research on NLP verification does not utilise safety critical datasets (which strongly motivates the choice of datasets in alternative verification domains), with the exception of Du et al. [Reference Du, Ji, Shen, Zhang, Li, Shi, Fang, Yin, Beyah and Wang110], who use the Toxic Comment dataset [Reference Adam120]. Other papers do not provide detailed motivation for their dataset choices; these may simply reflect datasets that are commonly used in NLP benchmarks (IMDB etc.).

2.5.1 Datasets proposed in this paper

In this paper, we focus on two existing datasets that model safety-critical scenarios. These two datasets have not previously been applied or explored in the context of NLP verification. Both are driven by real-world use cases of safety-critical NLP applications, i.e. applications for which law enforcement and safety demand formal guarantees of “good” DNN behaviour.

Chatbot Disclosure (R-U-A-Robot Dataset [Reference Gros, Li and Yu129]). The first case study is motivated by new legislation which states that a chatbot must not mislead people about its artificial identity [Reference Kop10, 11]. Given that the regulatory landscape surrounding NLP models (particularly LLMs and generative AI) is rapidly evolving, similar legislation could be widespread in the future – with recent calls for the US Congress to formalise such disclosure requirements [Reference Altman, Marcus and Montgomery130]. The prohibition on deceptive conduct act may apply to the outputs generated by NLP systems if used commercially [Reference Atleson131], and at minimum a system must guarantee a truthful response when asked about its agency [Reference Gros, Li and Yu129, Reference Abercrombie, Curry, Dinkar, Rieser and Talat132]. Furthermore, the burden of this should be placed on the designers of NLP systems, and not on the consumers.

Our first safety critical case is the R-U-A-Robot dataset [Reference Gros, Li and Yu129], a written English dataset consisting of 6800 variations on queries relating to the intent of ‘Are you a robot?’, such as ‘I’m a man, what about you?’. The dataset was created via a context-free grammar template, crowd-sourcing and pre-existing data sources. It consists of 2,720 positive examples (where given the query, it is appropriate for the system to state its non-human identity), 3,400 negative examples and 680 ‘ambiguous-if-clarify’ examples (where it is unclear whether the system is required to state its identity). The dataset was created to promote transparency, which may be required when the user receives unsolicited phone calls from artificial systems. Given systems like Google Duplex [Reference Leviathan and Matias133], and the criticism it received for human-sounding outputs [Reference Lieu134], it is also highly plausible for the user to be deceived regarding the outputs generated by other NLP-based systems [Reference Atleson131]. Thus we choose this dataset to understand how to enforce such disclosure requirements. We collapse the positive and ambiguous examples into one label, following the principle of ‘better be safe than sorry’, i.e. prioritising a high recall system.

Medical Safety Dataset. Another scenario one might consider is that inappropriate outputs of NLP systems have the potential to cause harm to human users [Reference Bickmore, Trinh, Olafsson, O’Leary, Asadi, Rickles and Cruz13]. For example, a system may give a user false impressions of its ‘expertise’ and generate harmful advice in response to medically related user queries [Reference Dinan, Gavin Abercrombie, Spruit, Dirk Hovy and Rieser7]. In practice, it may be desirable for the system to avoid answering such queries. Thus, we choose the Medical safety dataset [Reference Abercrombie and Rieser12], a dataset consisting of 2,917 risk-graded medical and non-medical queries (1,417 and 1,500 examples, respectively). The dataset was constructed by collecting questions posted on Reddit, in subreddits such as r/AskDocs. The medical queries have been labelled by experts and crowd annotators for both relevance and levels of risk (i.e. non-serious, serious to critical) following established World Economic Forum (WEF) risk levels designated for chatbots in healthcare [Reference Forum135]. Given the scarcity of the latter two labels, we merge the medical queries of different risk levels into one class, creating an in-domain/out-of-domain classification task for medical queries. Additionally, we consider only the medical queries that were labelled as such by expert medical practitioners. Thus, this dataset will facilitate discussion on how to guarantee that a system recognises medical queries, in order to avoid generating medical output.

An additional benefit of these two datasets is that they are distinct semantically, i.e. the R-U-A-Robot dataset contains several semantically similar, but lexically different queries, while the medical safety dataset contains semantically diverse queries. For both datasets, we utilise the same data splits as given in the original papers and refer to the final binary labels as positive and negative. The positive label in the R-U-A-Robot dataset implies a sample where it is appropriate to disclose non-human identity, while in the medical safety dataset it implies an in-domain medical query.

2.6 Previous NLP verification approaches

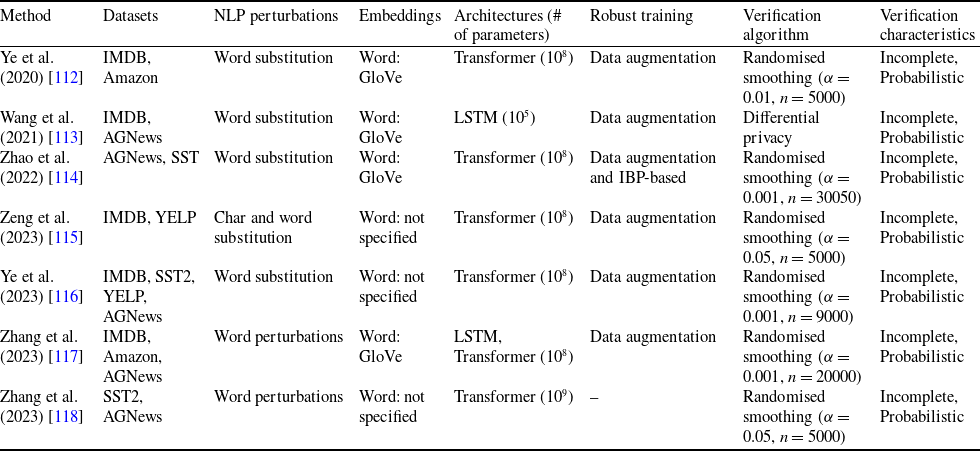

Although DNN verification studies have predominantly focused on CV, there is a growing body of research exploring the verification of NLP. This research can be categorised into three main approaches: using IBP, zonotopes and randomised smoothing. Tables 2 and 3 show a comparison of these approaches. To the best of our knowledge, this paper is the first one to use an SMT-based verifier for this purpose and compare it with an abstract interpretation-based verifier on the same benchmarks.

Table 3. Summary of the main features of the existing randomised smoothing approaches

NLP Verification via Interval Bound Propagation. The first technique successfully adopted from the CV domain for verifying NLP models was the IBP. IBP was used for both training and verification with the aim to minimise the upper bound on the maximum difference between the classification boundary and the input perturbation region. It was achieved by augmenting the loss function with a term that penalises large perturbations. Specifically, IBP incorporates interval bounds during the forward propagation phase, adding a regularisation term to the loss function that minimises the distance between the perturbed and unperturbed outputs. This facilitated the minimisation of the perturbation region in the last layer, ensuring it remained on one side of the classification boundary. As a result, the adversarial region becomes tighter and can be considered certifiably robust. Notably, Jia et al. [Reference Jia, Raghunathan, Göksel and Liang17] proposed certified robust models on word substitutions in text classification. The authors employed IBP to optimise the upper bound over perturbations, providing an upper bound over the discrete set of perturbations in the word vector space. Similarly, POPQORN [Reference Ko, Lyu, Weng, Daniel, Wong and Lin109] introduced robustness guarantees for RNN-based networks by handling the non-linear activation functions of complicated RNN structures (like LSTMs and GRUs) using linear bounds. Later, Shi et al. [Reference Shi, Zhang, Chang, Huang and Hsieh15] developed a verification algorithm for transformers with self-attention layers. This algorithm provides a lower bound to ensure the probability of the correct label remains consistently higher than that of the incorrect labels. Furthermore, Huang et al. 
[Reference Huang, Stanforth, Welbl, Dyer, Yogatama, Gowal, Dvijotham and Kohli18] introduced a verification and verifiable training method with a tighter over-approximation in the style of the Simplex algorithm [Reference Katz, Barrett, Dill, Julian and Kochenderfer28]. To make the network verifiable, they defined the convex hull of all the original unperturbed inputs as a space of perturbations. By employing the IBP algorithm, they generated robustness bounds for each neural network layer. Later on, Welbl et al. [Reference Welbl, Huang, Stanforth, Gowal, Dvijotham, Szummer and Kohli107] departed from the previous approaches by using IBP to address the under-sensitivity issue. They designed and formally verified the ‘under-sensitivity specification’ that a model should not become more confident as arbitrary subsets of input words are deleted. Recently, Zhang et al. [Reference Zhang, Albarghouthi and D’Antoni19] introduced Abstract Recursive Certification (ARC) to verify the robustness of LSTMs. ARC defines a set of programmatically perturbed string transformations to construct a perturbation space. By memoising the hidden states of strings in the perturbation space that share a common prefix, ARC can efficiently calculate an upper bound while avoiding redundant hidden state computations. Finally, Wang et al. [Reference Wang, Yang, Di and He108] improved on the work of Jia et al. by introducing Embedding Interval Bound Constraint (EIBC). EIBC is a new loss that constrains the word embeddings in order to tighten the IBP bounds.

The strength of IBP-based methods is their efficiency and speed, while their main limitation is the bounds’ looseness, further accentuated if the neural network is deep.

NLP Verification via Propagating Zonotopes. Another popular verification technique applied to various NLP models is based on propagating zonotopes, which produces tighter bounds than IBP methods. One notable contribution in this area is Cert-RNN [Reference Du, Ji, Shen, Zhang, Li, Shi, Fang, Yin, Beyah and Wang110], a robust certification framework for RNNs that overcomes the limitations of POPQORN. The framework maintains inter-variable correlation and accelerates the non-linearities of RNNs for practical uses. Cert-RNN utilises zonotopes [Reference Ghorbal, Goubault and Putot136] to encapsulate input perturbations and can verify the properties of the output zonotopes to determine certifiable robustness. This results in improved precision and tighter bounds, leading to a significant speedup compared to POPQORN. Analogously, Bonaert et al. [Reference Bonaert, Dimitrov, Baader and Vechev111] propose DeepT, a certification method for large transformers. It is specifically designed to verify the robustness of transformers against synonym replacement-based attacks. DeepT employs multi-norm zonotopes to achieve larger robustness radii in the certification and can work with networks much larger than those considered by Shi et al.

Methods that propagate zonotopes produce much tighter bounds than IBP-based methods and can therefore be used with deeper networks. However, they use geometric methods and do not take into account semantic considerations (e.g. they do not use semantic perturbations).

NLP Verification via Randomised Smoothing. Randomised smoothing [Reference Cohen, Rosenfeld and Kolter137] is another technique for verifying the robustness of deep language models that has recently grown in popularity due to its scalability [Reference Ye, Gong and Liu112–Reference Zhang, Zhang and Hou118]. The idea is to leverage randomness during inference to create a smoothed classifier that is more robust to small perturbations in the input. This technique can also be used to give certified guarantees against adversarial perturbations within a certain radius. Generally, randomised smoothing begins by training a regular neural network on a given dataset. During the inference phase, to classify a new sample, noise is randomly sampled from the predetermined distribution multiple times. These instances of noise are then injected into the input, resulting in noisy samples. Subsequently, the base classifier generates predictions for each of these noisy samples. The final prediction is determined by the class with the highest frequency of predictions, thereby shaping the smoothed classifier. To certify the robustness of the smoothed classifier against adversarial perturbations within a specific radius centred around the input, randomised smoothing calculates the likelihood of agreement between the base classifier and the smoothed classifier when noise is introduced to the input. If this likelihood exceeds a certain threshold, it indicates the certified robustness of the smoothed classifier within the radius around the input.
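The prediction step of a smoothed classifier can be sketched as below, in Python with NumPy. The sketch assumes isotropic Gaussian noise added to a continuous input vector, as in the formulation of Cohen et al. [Reference Cohen, Rosenfeld and Kolter137], where the certified $L_2$ radius is $\sigma \Phi^{-1}(\underline{p_A})$ for a lower confidence bound $\underline{p_A}$ on the probability of the top class; NLP approaches often use discrete noise such as word substitutions instead. The function names and the toy base classifier are hypothetical, and the statistical estimation of the lower bound is deliberately left out.

```python
import numpy as np
from statistics import NormalDist

def smoothed_predict(base_classifier, x, sigma, n_samples, num_classes, rng):
    """Majority vote of the base classifier over Gaussian-perturbed copies of x."""
    noise = rng.normal(0.0, sigma, size=(n_samples, x.shape[0]))
    votes = np.array([base_classifier(x + delta) for delta in noise])
    counts = np.bincount(votes, minlength=num_classes)
    return int(np.argmax(counts)), counts

def certified_radius(p_lower, sigma):
    """L2 radius sigma * Phi^{-1}(p_lower); p_lower must be strictly below 1.

    p_lower is assumed to be a valid lower confidence bound on the top-class
    probability; computing that bound is not shown here.
    """
    if p_lower <= 0.5:
        return 0.0                       # no certificate: abstain
    return sigma * NormalDist().inv_cdf(p_lower)

# Hypothetical base classifier: class 1 if the first coordinate is positive.
def toy_classifier(v):
    return int(v[0] > 0.0)

rng = np.random.default_rng(0)
label, counts = smoothed_predict(toy_classifier, np.array([2.0, 0.0]),
                                 sigma=0.5, n_samples=1000, num_classes=2, rng=rng)
radius = certified_radius(0.975, sigma=0.5)
```

Because the guarantee rests on a sampled estimate, it holds only up to a chosen confidence level, which is the sense in which these certificates are probabilistic rather than deterministic.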

The main advantage of randomised smoothing-based methods is their scalability: recent approaches are tested on larger transformers such as BERT and Alpaca. However, their main issue is that they are probabilistic approaches, meaning they give certifications only up to a certain probability. In this work, we focus on deterministic approaches, hence we only report these works in Table 3 for completeness without delving deeper into each paper here. All randomised smoothing-based approaches use data augmentation obtained by semantic perturbations.

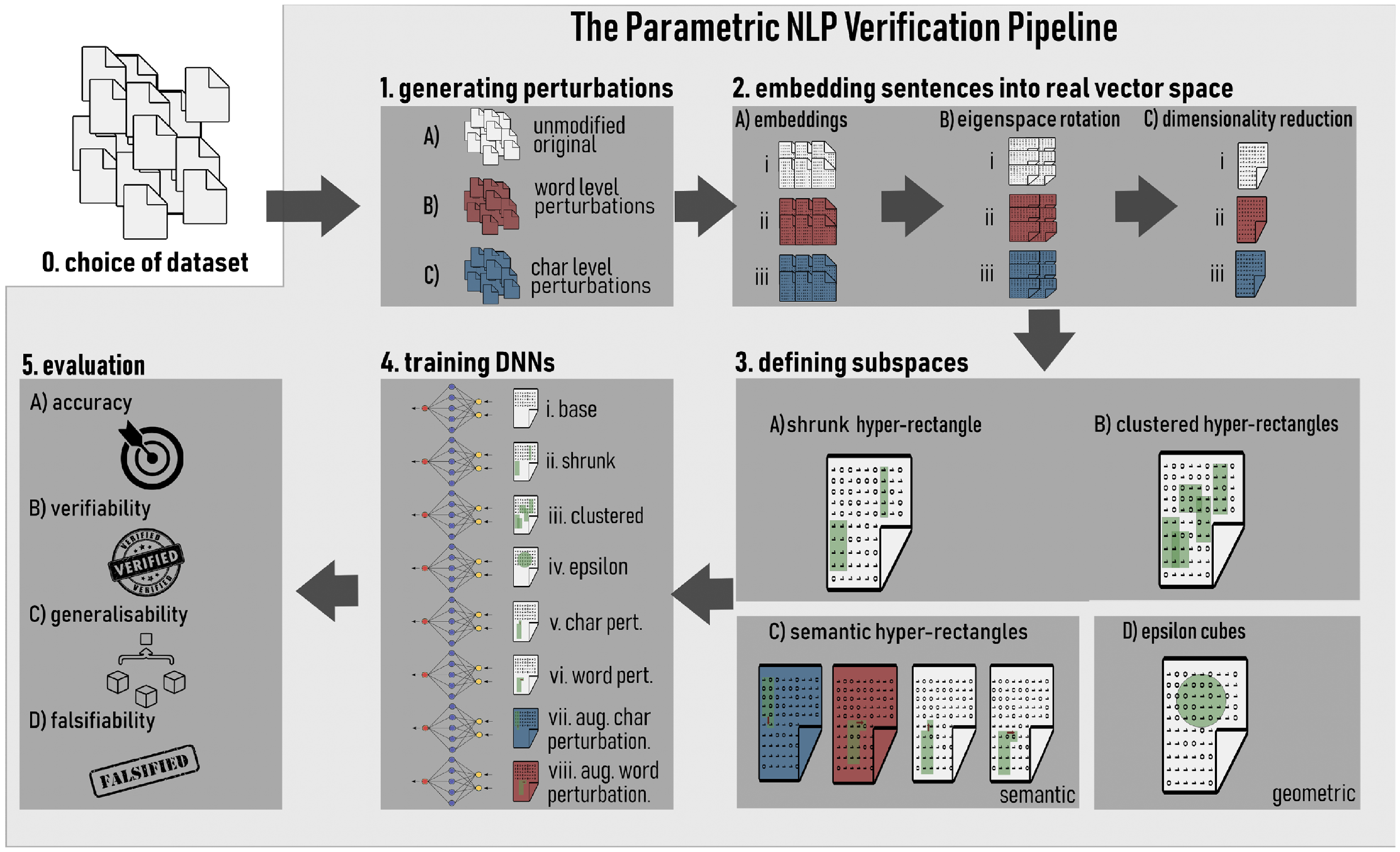

To systematically compare the existing body of research, we distil an “NLP verification pipeline” that is common across many related papers. This pipeline is outlined diagrammatically in Figure 2, while Tables 2 and 3 provide a detailed breakdown, with columns corresponding to each stage of the pipeline. It proceeds in stages:

1. Given an NLP dataset, generate semantic perturbations on sentences that it contains. The semantic perturbations can be of different kinds: character, word or sentence level. IBP and randomised smoothing use word and character perturbations, whereas abstract interpretation papers usually do not use any semantic perturbations. Tables 2 and 3 give the exact mapping of perturbation methods to papers. Our method allows the use of all existing semantic perturbations; in particular, we implement character and word-level perturbations as in Moradi et al. [Reference Moradi and Samwald103], and sentence-level perturbations with PolyJuice [Reference Wu, Ribeiro, Heer and Weld21] and Vicuna.

2. Embed the semantic perturbations into continuous spaces. The cited papers use the word embeddings GloVe [Reference Pennington, Socher and Manning102]; we use the sentence embeddings S-BERT and S-GPT.

3. Working on the embedding space, use geometric or semantic perturbations to define geometric or semantic subspaces around perturbed sentences. In IBP papers, semantic subspaces are defined as “bounds” derived from admissible semantic perturbations. In abstract interpretation papers, geometric subspaces are given by $\epsilon$-cubes and $\epsilon \textrm {-balls}$ around each embedded sentence. Our paper generalises the notion of $\epsilon$-cubes by defining “hyper-rectangles” on sets of semantic perturbations. The hyper-rectangles generalise $\epsilon$-cubes both geometrically and semantically, by allowing the analysis of subspaces that are drawn around several (embedded) semantic perturbations of the same sentence. We could adapt our methods to work with hyper-ellipses and thus directly generalise $\epsilon \textrm {-balls}$ (the difference boils down to using the $\ell _2$ norm instead of $\ell _\infty$ when computing geometric proximity of points); however, hyper-rectangles are more efficient to compute, which determined our choice of shapes in this paper.

4. Use the geometric/semantic subspaces to train a classifier to be robust to change of label within the given subspaces. We generally call such training either robust training or semantically robust training, depending on whether the subspaces it uses are geometric or semantic. A custom semantically robust training algorithm is used in IBP papers, while abstract interpretation papers usually skip this step or use (adversarial) robust training. See Tables 2 and 3 for further details. In this paper, we adapt the famous PGD algorithm [Reference Madry, Makelov, Schmidt, Tsipras and Vladu20] that was initially defined for geometric subspaces ($\epsilon \textrm {-balls}$) to work with semantic subspaces (hyper-rectangles) to obtain a novel semantic training algorithm.

5. Use the geometric/semantic subspaces to verify the classifier’s behaviour within those subspaces. The papers [Reference Jia, Raghunathan, Göksel and Liang17–Reference Zhang, Albarghouthi and D’Antoni19, Reference Welbl, Huang, Stanforth, Gowal, Dvijotham, Szummer and Kohli107, Reference Wang, Yang, Di and He108] use IBP algorithms and the papers [Reference Shi, Zhang, Chang, Huang and Hsieh15, Reference Ko, Lyu, Weng, Daniel, Wong and Lin109–Reference Bonaert, Dimitrov, Baader and Vechev111] use abstract interpretation; in both cases, the verification is incomplete and deterministic. See the ‘Verification algorithm’ and ‘Verification characteristics’ columns of Tables 2 and 3. We use the SMT-based tool Marabou (complete and deterministic) and the abstract-interpretation tool ERAN (incomplete and deterministic).
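Stages 3 and 4 of the pipeline above can be illustrated with a small sketch of the geometric objects involved. It assumes the simplest possible construction, an axis-aligned bounding box around the embedded perturbations of one sentence, which may differ from the exact hyper-rectangle construction used later in the paper. All function names and the toy two-dimensional vectors are hypothetical. The `project` helper shows the clipping step that a PGD-style search would use to stay inside a box.

```python
from typing import List, Sequence, Tuple

Vector = List[float]
Box = Tuple[Vector, Vector]  # (lower corner, upper corner)


def epsilon_cube(centre: Vector, eps: float) -> Box:
    """Geometric subspace: the l_infinity ball of radius eps around one point."""
    return [c - eps for c in centre], [c + eps for c in centre]


def bounding_hyper_rectangle(points: Sequence[Vector]) -> Box:
    """Semantic subspace (simplified): smallest axis-aligned box that
    contains all embedded perturbations of the same sentence."""
    if not points:
        raise ValueError("need at least one embedded sentence")
    lower = [min(column) for column in zip(*points)]
    upper = [max(column) for column in zip(*points)]
    return lower, upper


def contains(box: Box, x: Vector) -> bool:
    """True if x lies inside the box (boundaries included)."""
    lower, upper = box
    return all(l <= xi <= u for l, xi, u in zip(lower, x, upper))


def project(box: Box, x: Vector) -> Vector:
    """Clip x coordinate-wise into the box. For an axis-aligned box this is
    the Euclidean projection, as needed by a PGD-style projection step."""
    lower, upper = box
    return [min(max(xi, l), u) for l, xi, u in zip(lower, x, upper)]


# Toy 2-D "embeddings" of a sentence and two of its perturbations.
embedded = [[0.0, 1.0], [0.5, 0.2], [0.2, 0.6]]
rectangle = bounding_hyper_rectangle(embedded)
cube = epsilon_cube(embedded[0], eps=0.25)

print(rectangle)                        # box spanning all three points
print(cube)                             # box around the first point only
print(project(rectangle, [0.9, 0.0]))   # nearest point inside the rectangle
```

An $\epsilon$-cube is the special case of a hyper-rectangle whose sides all have length $2\epsilon$ and whose centre is a single embedded sentence, which is the sense in which hyper-rectangles generalise $\epsilon$-cubes.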

Tables 2 and 3 summarise differences and similarities of the above NLP verification approaches against ours. To the best of our knowledge, we are the first to use SMT-based complete methods in NLP verification and we show how they achieve higher verifiability than abstract interpretation verification approaches (ERAN and CORA) or IBP and BaB ($\alpha \beta$-CROWN), thanks to the increased precision of the ReLUplex algorithm that underlies Marabou.

Furthermore, our study is the first to demonstrate that the construction of semantic subspaces can happen independently of the choice of the training and verification algorithms. Likewise, although training and verification build upon the defined (semantic) subspaces, the actual choice of the training and verification algorithms can be made independently of the method used to define the semantic subspaces. This separation, and the general modularity of our approach, facilitates a comprehensive examination and comparison of the two key components involved in any NLP verification process:

• effects of the verifiability-generalisability trade-off for verification with geometric and semantic subspaces;

• relation between the volume/shape of semantic subspaces and verifiability of neural networks obtained via semantic training with these subspaces.

These two aspects have not been considered in the literature before.

3 The parametric NLP verification pipeline

This section presents a parametric NLP verification pipeline, shown in Figure 2 diagrammatically. We call it “parametric” because each component within the pipeline is defined independently of the others and can be taken as a parameter when studying other components. The parametric nature of the pipeline allows for the seamless integration of state-of-the-art methods at every stage, and for more sophisticated experiments with those methods. The following section provides a detailed exposition of the methodological choices made at each step of the pipeline.

Figure 2. Visualisation of the NLP verification pipeline followed in our approach.

3.1 Semantic perturbations

As discussed in Section 2.6, we require semantic perturbations for creating semantic subspaces. To do so, we consider three kinds of perturbations—i.e. character, word and sentence level. This systematically accounts for different variations of the samples.

Character and word-level perturbations are created via a rule-based method proposed by Moradi et al. [Reference Moradi and Samwald103] to simulate different kinds of noise one could expect from spelling mistakes, typos, etc. These perturbations are non-adversarial and can be generated automatically. Moradi et al. [Reference Moradi and Samwald103] found that NLP models are sensitive to such small errors, while in practice this should not be the case. Character-level perturbation types include randomly inserting, deleting, replacing, swapping or repeating a character of the data sample. At the character level, we do not apply letter case changing, given that it does not change the sentence-level representation of the sample. Nor do we apply perturbations to commonly misspelled words, given that only a small percentage of the most commonly misspelled words occur in our datasets. Perturbation types at the word level include randomly repeating or deleting a word, changing the ordering of the words, the verb tense, singular verbs to plural verbs or adding negation to the data sample. At the word level, we omit replacement with synonyms, as this is accounted for via sentence rephrasing. On the medical safety dataset, we do not apply negation, as it creates label ambiguities (e.g. ‘pain when straightening knee’ $\rightarrow$ ‘no pain when straightening knee’). We also omit singular/plural and verb tense changes on this dataset, given that human annotators would experience difficulties with this task (e.g. rephrase the following in plural/with changed tense – ‘peritonsillar abscess drainage aftercare.. please help’). Note that the Medical dataset contains several sentences without a verb (like the one above) for which it is impossible to pluralise or change the tense of the verb.

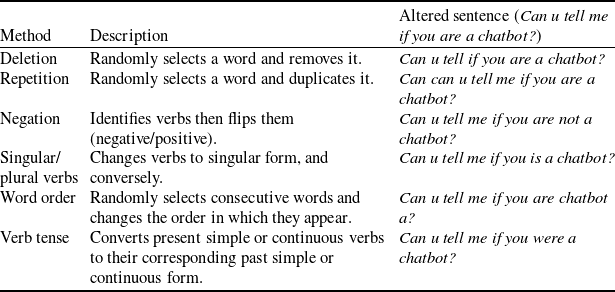

Further examples of character and word rule-based perturbations can be found in Tables 4 and 5.

Table 4. Character-level perturbations: their types and examples of how each type acts on a given sentence from the R-U-A-robot dataset [Reference Gros, Li and Yu129]. Perturbations are applied to randomly selected words that have 3 or more characters; the first and last characters of a word are never perturbed.

Table 5. Word-level perturbations: their types and examples of how each type acts on a given sentence from the R-U-A-robot dataset [Reference Gros, Li and Yu129].
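The five character-level perturbation types and the constraints stated in the caption of Table 4 can be sketched as below. This is an illustrative re-implementation under assumptions, not the code of Moradi et al.: the function names are hypothetical, words are obtained by whitespace splitting, inserted or replacement characters are drawn from lowercase ASCII letters, and swapping is restricted to words of at least 4 characters so that the first and last characters stay untouched.

```python
import random
import string

KINDS = ("insert", "delete", "replace", "swap", "repeat")


def perturb_word(word: str, kind: str, rng: random.Random) -> str:
    """Apply one character-level perturbation to the interior of a word.

    The first and last characters are never modified. Requires len(word) >= 3
    (>= 4 for "swap").
    """
    if kind == "swap":
        j = rng.randrange(1, len(word) - 2)  # swap positions j and j + 1
        return word[:j] + word[j + 1] + word[j] + word[j + 2:]
    i = rng.randrange(1, len(word) - 1)      # index of an interior character
    if kind == "insert":
        return word[:i] + rng.choice(string.ascii_lowercase) + word[i:]
    if kind == "delete":
        return word[:i] + word[i + 1:]
    if kind == "replace":
        options = [c for c in string.ascii_lowercase if c != word[i].lower()]
        return word[:i] + rng.choice(options) + word[i + 1:]
    if kind == "repeat":
        return word[:i] + word[i] + word[i:]
    raise ValueError(f"unknown perturbation kind: {kind}")


def perturb_sentence(sentence: str, kind: str, rng: random.Random) -> str:
    """Perturb one randomly chosen eligible word of the sentence.

    Returns the sentence unchanged if no word is long enough.
    """
    words = sentence.split()
    min_len = 4 if kind == "swap" else 3
    eligible = [k for k, w in enumerate(words) if len(w) >= min_len]
    if not eligible:
        return sentence
    k = rng.choice(eligible)
    words[k] = perturb_word(words[k], kind, rng)
    return " ".join(words)


rng = random.Random(0)
for kind in KINDS:
    print(kind, "->", perturb_sentence("Are you a robot?", kind, rng))
```

Note that swapping two identical neighbouring characters leaves the word unchanged, so a production implementation might resample in that case.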

Sentence-level perturbations. We experiment with two types of sentence-level perturbations, particularly due to the complicated nature of the medical queries (e.g. it is non-trivial to rephrase queries such as this – ‘peritonsillar abscess drainage aftercare.. please help’). We do so by either using Polyjuice [Reference Wu, Ribeiro, Heer and Weld21] or vicuna-13b.Footnote 4 Polyjuice is a general-purpose counterfactual generator that allows for control over perturbation types and locations, trained by fine-tuning GPT-2 on multiple datasets of paired sentences. Vicuna is a state-of-the-art open source chatbot trained by fine-tuning LLaMA [138] on user-shared conversations collected from ShareGPT.Footnote 5 For Vicuna, we use the following prompt to generate variations on our data samples ‘Rephrase this sentence 5 times: “[Example]”.’ For example, from the sentence “How long will I be contagious?”, we can obtain “How many years will I be contagious?” or “Will I be contagious for long?” and so on.

We will use the notation $\mathcal {P}$ to refer to a perturbation algorithm abstractly.

Semantic similarity of perturbations. In later sections we will assume that the perturbations we use produce sentences that are semantically similar to the originals. However, precisely defining or measuring semantic similarity is a challenge in its own right, as the semantic meaning of sentences can be subjective and context-dependent, which makes evaluating their similarity intractable. Nevertheless, Subsection 5.5.2 will discuss and use several metrics for calculating semantic similarity of sentences, modulo some simplifying assumptions.

3.2 NLP embeddings

The next component of the pipeline is the embeddings. Embeddings play a crucial role in NLP verification as they map textual data into continuous vector spaces, in a way that should capture semantic relationships and contextual information.

Given the set of all strings, $\mathbb {S}$, an NLP dataset $\mathcal {Y} \subset \mathbb {S}$ is a set of sentences ${s}_1, \ldots, {s}_{q}$ written in natural language. The embedding $E$ is a function that maps a string in $\mathbb {S}$ to a vector in ${\mathbb {R}}^{m}$. The vector space ${\mathbb {R}}^{m}$ is called the embedding space. Ideally, $E$ should reflect the semantic similarities between sentences in $\mathbb {S}$, i.e. the more semantically similar two sentences ${s}_i$ and ${s}_j$ are, the closer the distance between

![]() $E({s}_i)$