Introduction

In the last decade, expectations for engaging patients and other stakeholders throughout the conceptualization, conduct, and dissemination of research have become an established part of the clinical and translational research culture [Reference Manafò, Petermann, Vandall-Walker and Mason-Lai1–Reference Price, Albarqouni and Kirkpatrick3]. Funders such as the Patient-Centered Outcomes Research Institute (PCORI) and increasingly the National Institutes of Health (NIH) encourage or require partnerships with stakeholders throughout the research process [Reference Selker and Wilkins4,Reference Fleurence, Selby and Odom-Walker5]. A stakeholder is an individual or group responsible for or affected by health- and healthcare-related decisions that can be informed by research evidence and includes groups like payers, practitioners, and policymakers as well as patients, families, and communities [Reference Concannon, Meissner and Grunbaum6]. Stakeholder engagement can improve public commitment to research, make research and the products of research more patient-centered, and enhance the likelihood of successful dissemination and implementation in real-world settings [Reference Klein, Gold and Sullivan7–Reference Bodison, Sankaré and Anaya9]. General principles and processes of participatory research [Reference Kwon, Tandon, Islam, Riley and Trinh-Shevrin10,Reference Kirwan, de Wit and Frank11] have been well established. There are multiple methods for stakeholder engagement in research, and empirical evidence on the strengths and weaknesses of specific methods is emerging [Reference Harrison, Auerbach and Anderson12,Reference Bishop, Elliott and Cassidy13], though gaps remain regarding the comparative effectiveness of various engagement methods in relation to specific research contexts and purposes [Reference Pearson, Duran and Oetzel14].

Researchers seeking guidance on appropriate methods of stakeholder engagement for their projects may turn to Clinical and Translational Science Award (CTSA) programs or others that offer training and consultation on community and stakeholder engagement [Reference DiGirolamo, Geller, Tendulkar, Patil and Hacker15,Reference Shea, Young and Powell16], but these programs typically have limited resources (i.e., expert personnel) for providing consultations. Scalable infrastructure could support improvements in stakeholder-engaged research [Reference Hendricks, Shanafelt, Riggs, Call and Eder17], and self-directed, web-based interactive tools are emerging solutions across clinical and translational research [Reference Meissner, Cottler, Eder and Michener18,Reference Ford, Rabin, Morrato and Glasgow19].

The Data Science to Patient Value (“D2V”) initiative at the University of Colorado Anschutz Medical Campus supports advanced research in data science and data-driven health care, including through pilot award funding and other research support. The D2V Dissemination, Implementation, Communication, Ethics and Engagement (DICE) core consults with researchers who wish to engage stakeholders throughout the process of designing, conducting, and disseminating their work. The process of “designing for dissemination” requires engagement of patients, clinicians, and other health system stakeholders, and the DICE core includes leaders from our local CTSA, bioethicists, clinicians, health services researchers, D&I scientists, and communication, instructional design, and user-centered design experts (see supplementary material for details on DICE core team composition). As with other consultation services, however, the core is a limited resource that cannot meet all campus needs.

The D2V DICE core therefore undertook an iterative process of design, development, and testing of an interactive web-based tool (henceforth “webtool”) to guide researchers in learning about, selecting, and using a variety of methods for stakeholder-engaged research for their grant writing, protocol planning, implementation, and evidence dissemination.

The design process addressed: 1) What education and expert guidance do health researchers need to select and use engagement methods? 2) What criteria of engagement methods and the research context are relevant to decisions about which method to use? 3) What features of a webtool would help researchers with self-directed selection and use of engagement methods?

Methods

Overview of Design and Development Process

The design and development of the engagement methods webtool was guided by user-centered design processes (Fig. 1). The DICE core followed the Design Thinking stages described by IDEO.org: Empathize, Define, Ideate, Prototype, and Test [20]. Design Thinking stages are iterative in nature, such that progress from one stage to another often returns to prior stages with new insights. The team participated in a self-paced Design Thinking course from IDEO.org during early stages of the project. Multiple prototypes were developed, revised, and refined over time.

Fig. 1. Stakeholder engagement navigator webtool design thinking process. CCTSI, Colorado Clinical and Translational Sciences Institute; D2V, Data Science to Patient Value.

Intended Audience and Context for Use

The webtool is designed with health services researchers in mind, though the engagement methods cataloged and the information on stakeholder engagement provided are not limited to use in health services research. The webtool is designed for use by researchers at all career levels and in any stage of a research project, from planning through implementation to dissemination.

Empathize Stage

During “Empathize” stage activities, our goal was to understand the educational and resource needs of our intended audience and to catalog existing resources. Steps in this stage included D2V pilot grantee consultations and educational events, ethnographic interviews, a literature review of engagement methods, and an environmental scan of comparable engagement method and consumer product selection tools, websites, and recommender and filtering systems.

DICE core members conducted formal consultations with D2V pilot grantees (n = 7) across two annual funding cycles, using these as opportunities to explore current resources available to help investigators select methods and to understand when and why researchers make decisions about engagement methods for their research. The DICE core also collaborated with the Colorado Clinical and Translational Sciences Institute (CCTSI)’s community engagement core to provide a required 2-hour educational workshop on principles of community engagement for pilot grantees; workshop evaluations revealed unmet needs for guidance on why to engage stakeholders, what to engage them in, and how to engage them.

A literature review combined with the expertise of the DICE core team identified an initial list of about 40 engagement methods, many from the appendix of Engaging Stakeholders To Identify and Prioritize Future Research Needs by O’Haire et al. [Reference O’Haire, McPheeters and Nakamoto21]. This list was eventually refined to a total of 31.

The environmental scan revealed two exemplar engagement selection webtools: Engage2020’s Action Catalogue [22] (a general societal engagement tool from the European Commission) and Participedia (a global crowdsourcing platform for participatory political processes) [23]. Neither tool focuses on the specific needs of health researchers, but both provided valuable design ideas for our webtool (see supplementary material). A final Empathize stage activity was four ethnographic interviews with research faculty and staff who provide expert consultation and guidance on research methods, including engagement methods. Insights from the environmental scan and ethnographic interviews led to "how might we" design questions, focusing on three areas: 1) helping investigators understand the stakeholder engagement process as well as the timeline and resources involved; 2) working with investigators to identify their goals in performing stakeholder engagement; and 3) guiding investigators to understand what resources they already have, which ones they need, and how best to apply them.

Define Stage

The purpose of the “Define” stage was to clarify and state the core needs and problems of users. Define stage activities included 1) development of user personas and use cases for the webtool and 2) classification of the 31 engagement methods according to criteria relevant to selection and use. The team developed 5 use cases to illustrate the needs of our key audience (health services researchers) with varying levels of experience with stakeholder engagement methods (see supplementary material). These were used to clarify which design features were important to which types of users and to reduce costs by preventing errant design up front.

Formal cataloging of the key criteria of each engagement method was based on three steps: a card-sorting activity, a team review, and user surveys. A group of twelve national experts in stakeholder engagement and six members of the DICE team met in person to undertake a card-sorting activity [Reference Spencer and Warfel24] in which they were asked to organize the list of methods into high-level categories (see supplementary material). Group discussion yielded the idea that the term “methods” did not sufficiently encompass all types of engagement activities; some were more appropriately called “approaches” (e.g., high-level frameworks that do not specify a set process, such as Community-Based Participatory Research), while “methods” were defined as sets of activities with explicit, step-by-step procedures.

The DICE team then undertook a method review process designed to classify each of the 31 methods according to seven dimensions: consistency with the definition of engagement, including both 1) bidirectionality (the method supports collaborative discussion with two-way communication rather than unidirectional data collection) and 2) “longitudinality” (the method supports relationships over time with stakeholders rather than a single interaction), 3) purpose of engagement (including “Identify and explore new perspectives or understanding,” “Expand and diversify stakeholder outreach,” and “Disseminate findings to relevant audiences,” adapted from PCORI’s Engagement Rubric [25]), 4) cost (high, medium, or low), 5) duration (time required for any given stakeholder interaction), 6) level of training/expertise needed to carry out the method effectively, and 7) strength of the evidence base. These dimensions were identified as relevant to choosing an engagement method, both scientifically (e.g., appropriateness of the method for achieving engagement goals) and pragmatically (e.g., budget, access to stakeholders, team expertise, and timeline).

The method review process was similar to an NIH-style grant review; two reviewers were assigned to review detailed descriptions of each method and complete a form indicating scores for each of the dimensions using a 9-point scale (see supplementary material). In addition, reviewers were asked to select relevant engagement purposes for each method from the list of purposes above [25]. The review panel convened for discussion, with the primary and secondary reviewers providing their ratings and justification for their selections. A key decision was that methods that did not align with the definition of engagement (i.e., supporting bidirectional, longitudinal engagement) were relabeled as “tools” rather than methods.
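As a rough illustration of how paired reviewer scores might be combined, the Python sketch below averages two reviewers' ratings and labels a strategy a “method” or a “tool” according to the two engagement-definition dimensions. Several elements are assumptions made for illustration and are not described in the source: the function names, the direction of the 9-point scale (higher is assumed to mean stronger alignment), and the numeric cutoff. The actual labels were reached through panel discussion rather than a fixed rule.

```python
from statistics import mean

# The two dimensions that define engagement in the review.
ENGAGEMENT_DIMENSIONS = ("bidirectionality", "longitudinality")

# Hypothetical cutoff on the 9-point scale; the source does not report one.
ASSUMED_CUTOFF = 5.0


def average_scores(primary, secondary):
    """Average the primary and secondary reviewers' scores per dimension."""
    for scores in (primary, secondary):
        for dimension, value in scores.items():
            if not 1 <= value <= 9:
                raise ValueError(f"{dimension} score {value} is outside 1-9")
    return {dim: mean((primary[dim], secondary[dim])) for dim in primary}


def label_strategy(primary, secondary, cutoff=ASSUMED_CUTOFF):
    """Return 'method' if both engagement dimensions meet the cutoff, else 'tool'."""
    averaged = average_scores(primary, secondary)
    meets_definition = all(averaged[dim] >= cutoff for dim in ENGAGEMENT_DIMENSIONS)
    return "method" if meets_definition else "tool"
```

A strategy that scores well on longitudinality but poorly on bidirectionality would be labeled a “tool” under this rule, because both dimensions must meet the assumed cutoff.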

To determine user perspectives on important criteria for method selection, the DICE core and a CCTSI partner worked together to administer a 5-item feature prioritization survey at a community engagement seminar in February 2020 (see supplementary material). Twenty-one respondents ranked the importance of website features and the helpfulness and importance of method selection criteria, and conveyed their interest in using an engagement method selection tool.

Ideate Stage

The “Ideate” stage purpose was to develop an organizing framework and brainstorm webtool content, features, and organization. Based on Empathize and Define stage activities, it was determined that the webtool should include three core features: education on the principles and purposes of stakeholder engagement, a method selection tool, and guidance on seeking expert consultation. Ultimately, a simple filtering technique was selected. The tool would assess user engagement goals and resources, use this assessment to filter recommended methods, and then offer informational sheets, called “strategy fact sheets,” for the selected method or tool(s). This system would also easily allow addition of more methods and tools over time.

Next, the DICE core storyboarded the layout and features of the webtool, led by a team member with expertise in instructional design. This process generated the initial informational and navigational architecture, which was further tested and refined by reference to the use cases and how each would progress, step-by-step, through the method selection process (see supplementary material).

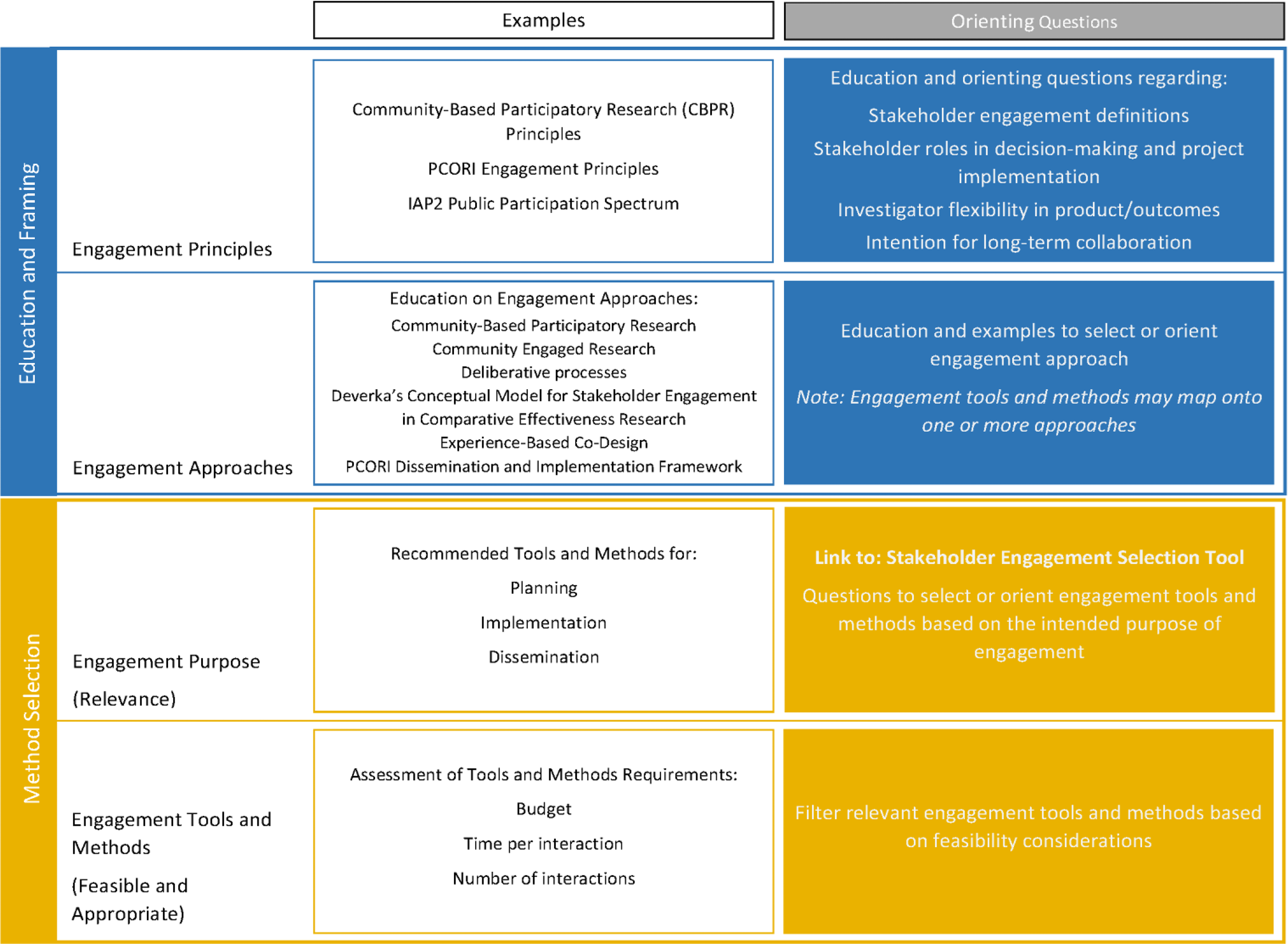

In parallel with storyboarding, we developed an organizing framework reflecting the prioritized webtool content (Fig. 2) and educational content conveying principles and purposes of engagement and defining engagement approaches, methods, and tools.

Fig. 2. Stakeholder engagement method navigator webtool organizing framework. IAP2, International Association of Public Participation; PCORI, Patient-Centered Outcomes Research Institute.

Prototype and Test Stages

Prototyping and testing were carried out to iterate successively more interactive, complete prototypes, and to evaluate usability and usefulness. During the Design Thinking course, the DICE core developed an initial static prototype using the JustInMind prototyping and wireframing tool available at JustInMind.com (Justinmind © 2021) [26]. This prototype specified general content and desired functionality to provide users with a sample of engagement strategies based on a few criteria.

Following the storyboarding and using the organizing framework, DICE core members developed an interactive prototype that included pages for educational content and the selection tool. After several rounds of iteration based on input from team members, a team member with expertise in user-centered design led contextual inquiry [Reference Whiteside, Bennett and Holtzblatt27] user testing (see supplementary material) with four individuals representing key user types, identified based on the use cases developed during the Define stage. The resulting webtool underwent further task-oriented Think Aloud [Reference Trulock28] testing (see supplementary material) with 16 participants attending a virtual pragmatic research conference in August 2020. Participants were separated into breakout rooms and navigated through the website with the goal of completing specific tasks while vocalizing their cognitive process aloud. Users were given hypothetical scenarios (e.g., “You are a junior researcher with a small budget being asked to find a method suitable for engaging patients on a national scale”) and then instructed to navigate the website within that scenario. User feedback guided one additional round of iteration, yielding the V1.0 webtool.

Results

The V1.0 webtool can be accessed at DICEMethods.org. Select screenshots from the tool are shown in Fig. 3. Overall, webtool design was informed by 77 unique individuals, including 7 D2V pilot grantees, 4 participants in ethnographic interviews, 12 external engagement experts, 20 usability testers, 21 survey respondents, and 14 DICE core team members.

Fig. 3. Screenshots from the stakeholder engagement method navigator webtool.

Education and Guidance in Engagement Method Selection

The first question we sought to answer was: What education and expert guidance do health researchers need to select and use engagement methods?

Insights from the Empathize and Define stages included the following: Researchers understand that stakeholder engagement is valuable and want to include it in their research design and implementation; however, researchers are not familiar with stakeholder engagement methods. Researchers need an efficient means to learn how to conduct stakeholder engagement and to include engagement methods in grant proposals.

These findings drove team discussion and synthesis around the content and scope of education and guidance to be included in the webtool. Educating investigators on the basics of stakeholder engagement was as important as guiding method selection. As a result, the webtool includes two main sections, an “Education Hub” and a “Find Engagement Strategies” section, which take up equal real estate at the top of the website. The Education Hub includes a section on stakeholder engagement basics, such as definitions of stakeholder engagement, its importance in research, a breakdown of core principles of stakeholder engagement, and a guide for identifying stakeholders and establishing their roles. For those already familiar with stakeholder engagement, the Education Hub also includes “A Deeper Dive,” which describes how to distinguish among approaches, methods, and tools and provides users with details on the different engagement approaches they might use as frameworks for their projects.

Engagement Method Cataloging and Selection Criteria

The second question we sought to answer was: What criteria of engagement methods and the research context are relevant to decisions about which method to use?

A consultation intake form developed for D2V engagement consultations was an early prototype for gathering method selection criteria and included details such as stage of clinical or translational research (T1-T4), types of stakeholders to be engaged, engagement purpose, available funding to pay stakeholders, and more features selected to help guide expert consultations – only some of which were ultimately included in the selection tool (see supplementary material). From the card-sorting activity conducted during the Define stage, potential method classifications suggested by engagement experts included longitudinality, deliberative approaches, hypothesis-generating methods, and modality (virtual or in-person).

From team sensemaking following the Empathize, Define, and Ideate stage activities, the DICE core ultimately determined that the set of 31 engagement strategies should first be distinguished as approaches, methods, or tools (see supplementary material). We developed definitions for each of these terms, which can be found in Table 1. Four distinguishing criteria relevant to engagement method selection emerged, addressing both scientific fit (purpose of engagement) and practical fit with resources (budget, duration of individual engagement interactions, and timeline). Results from the feature prioritization survey were used to determine how these criteria would be prioritized by the filtering process. Ordered by the level of importance most often selected by respondents, the most important criterion in determining what method to use for stakeholder engagement in research was “How well the method achieves the specific goal,” followed by “Skills/personnel required to conduct the engagement method,” “Strengths or evidence base supporting the method,” “Time required to conduct the engagement method,” and “Cost required to conduct the engagement method.” Based on these findings, engagement purpose became the primary filter for the method selection tool.

Table 1. Stakeholder engagement approaches, methods, and tools: definitions, explanations, and examples

Within the method selection feature of the webtool, engagement purposes are categorized by research stage (planning, implementation, and/or dissemination). Users then further refine recommended methods based on anticipated budget (e.g., accounting for personnel effort, stakeholder incentives, other materials), timeline for project completion, and anticipated availability of the stakeholders (e.g., would they be available for only brief interactions or potentially able to attend longer sessions?). DICE core members developed orienting questions (Fig. 3a) for each distinguishing feature, which are posed both at the beginning of the selection tool (so that researchers may gather what they will need to know in advance) and at the corresponding step in the selection tool. For each method and tool, “strategy fact sheets” describe information on budget, time frame, workload, appropriate applications, materials and personnel needed, and a “how-to” section.
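The selection logic described above can be sketched, under stated assumptions, as a simple filter over a catalog of strategies. The Python below is illustrative only and is not the webtool's actual implementation: the `Strategy` fields, the numeric ordering of budget levels, the use of months for timeline, and the placeholder catalog entries (“Strategy A” through “Strategy C”) are all hypothetical. Only the purpose labels are taken from the text. The sketch also drops non-matching strategies outright, which is a simplification.

```python
from dataclasses import dataclass

# Ordered levels so budgets can be compared; the numeric mapping is an assumption.
LEVELS = {"low": 1, "medium": 2, "high": 3}


@dataclass(frozen=True)
class Strategy:
    name: str                  # placeholder name, not a real method
    purposes: frozenset        # engagement purposes the strategy can serve
    budget: str                # "low", "medium", or "high"
    min_months: int            # hypothetical minimum project timeline
    needs_long_sessions: bool  # whether stakeholders must attend longer sessions


EXPLORE = "Identify and explore new perspectives or understanding"
OUTREACH = "Expand and diversify stakeholder outreach"
DISSEMINATE = "Disseminate findings to relevant audiences"

# Hypothetical catalog entries for illustration.
CATALOG = [
    Strategy("Strategy A", frozenset({EXPLORE}), "low", 1, False),
    Strategy("Strategy B", frozenset({EXPLORE, DISSEMINATE}), "high", 6, True),
    Strategy("Strategy C", frozenset({OUTREACH}), "medium", 3, False),
]


def recommend(catalog, purpose, max_budget, months_available, long_sessions_ok):
    """Filter by purpose first, then by budget, timeline, and stakeholder availability."""
    matches = []
    for strategy in catalog:
        if purpose not in strategy.purposes:
            continue  # purpose is the primary filter
        if LEVELS[strategy.budget] > LEVELS[max_budget]:
            continue  # costs more than the anticipated budget
        if strategy.min_months > months_available:
            continue  # does not fit the project timeline
        if strategy.needs_long_sessions and not long_sessions_ok:
            continue  # stakeholders are available only for brief interactions
        matches.append(strategy.name)
    return matches
```

For example, `recommend(CATALOG, EXPLORE, "medium", 12, True)` keeps only the placeholder “Strategy A,” because “Strategy B” exceeds the medium budget.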

Engagement Navigator Webtool Features

Our final question was: What features of a webtool would help researchers with self-directed selection and use of engagement methods?

Based on the environmental scan of comparable tools from the Empathize stage, we developed a modification of an interactive “bubble” feature that displayed results on Engage2020’s Action Catalogue [22]. We carried this concept over into our webtool but streamlined the user experience by first asking screening questions about the stage of research in which the user plans to incorporate stakeholder engagement activities and their purpose for engaging stakeholders. These questions narrow down the engagement methods and tools in the interactive results display to only those applicable to the user’s project. As the user inputs additional information about their project, the methods and tools that are less applicable decrease in size but remain accessible so that the user is still able to learn about them if they wish.
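One way such a results display could scale its bubbles is sketched below in Python. This is a hypothetical illustration, not the webtool's code: the function name, the attribute keys, and the size values are assumptions. The idea is that each strategy's bubble shrinks in proportion to the share of the user's answers it fails to match, but never drops below a minimum size, so every strategy stays visible and selectable.

```python
def bubble_sizes(strategies, answers, max_size=100, min_size=30):
    """Map each strategy name to a display size based on matched answers.

    strategies: dict of name -> dict of attribute values (hypothetical keys)
    answers:    dict of attribute -> value chosen by the user so far
    Sizes are in arbitrary display units; both bounds are assumed values.
    """
    sizes = {}
    for name, attributes in strategies.items():
        if answers:
            matched = sum(
                1 for key, wanted in answers.items() if attributes.get(key) == wanted
            )
            fraction = matched / len(answers)
        else:
            fraction = 1.0  # no answers yet: all bubbles start at full size
        # Less applicable strategies shrink toward min_size but never disappear.
        sizes[name] = round(min_size + (max_size - min_size) * fraction)
    return sizes
```

Because the minimum size is greater than zero, a strategy that matches none of the user's answers is de-emphasized rather than hidden.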

The contextual inquiry usability testing from the iterative Prototype and Test stage activities informed design changes. Most design changes to early prototypes related to usability of the engagement method selection results page (see supplementary material). In general, users felt that there was too much content on the results page and the cognitive effort to make sense of the page was overly burdensome. Therefore, the design team prioritized streamlining the filtering system feedback by refining visual cues and the visual layout of the content. The results of the Think Aloud usability testing further refined the webtool prototype (see supplementary material). Overall, results from this round of user testing highlighted changes in the visual cues, words, or phrasing used in the interface rather than in the interface functionality itself; that is, user requests focused on quick comprehension.

Discussion

Using an iterative, multi-stage design thinking process, the DICE core identified researcher needs and desired features of a webtool that would facilitate education and guidance in selection of stakeholder engagement methods. As depicted in our organizing framework, the webtool educational content incorporates engagement principles, approaches, and frameworks, while a method selection tool guides users through a methods filtering interface. Our V1.0 webtool includes educational content for beginners (“The Basics”) and more advanced scholars (“A Deeper Dive”). Relevant distinguishing features of engagement methods included both the scientific relevance of the methods for specific engagement goals and feasibility and appropriateness considerations (budgets, timelines, stakeholder availability, and team expertise).

Some novel design-based conclusions stemmed from following user experience (UX) best practices during the development process [Reference Gualtieri29]. The DICE core spent time with end-users to understand our audience, who generally think “less is more” with regard to academic web-based content and dislike content and/or visual overload. The concise and playful selector tool allowed end-users to accomplish the goal of identifying appropriate stakeholder engagement strategies easily and efficiently. A balance was achieved in decreasing cognitive load when manipulating the system, while acknowledging that this audience has a high baseline cognitive capability.

Since we began our design process, PCORI released their web-based Engagement Tool and Resource Repository (https://www.pcori.org/engagement/engagement-resources/Engagement-Tool-Resource-Repository), which provides links to resources to support conduct and evaluation of engagement efforts, organized by resource focus, phase of research, health conditions, stakeholder type, and populations. The PCORI repository contains multiple resources for researchers but does not provide recommendations on selecting engagement methods as our webtool does.

Implications for CTSAs

This work advances the science and practice of clinical and translational research in two ways. First, we demonstrate the utility (but resource intensiveness) of design thinking methods for developing these sorts of web-based research guidance tools. To be done well, webtool development requires substantial investments of time and financial resources; in our case, a Dean’s transformational research initiative was the main source of funding, with additional support from the CCTSI. Second, this work informs how researchers choose and curate methods for stakeholder engagement in clinical and translational research. We used an NIH-style review process to rate methods based on our experience and the findings of a literature review. This revealed an obvious research gap: The evidence base behind most engagement methods is limited, and few randomized controlled trials (RCTs) exist that evaluate methods or compare them head-to-head. The literature describes how researchers use different methods of engagement and the positive impact engagement has on research but is relatively silent on whether using particular engagement methods for specific purposes could better or more efficiently achieve the different goals of engagement [Reference Price, Albarqouni and Kirkpatrick3,Reference Gierisch, Hughes, Williams, Gordon and Goldstein30,Reference Forsythe, Carman and Szydlowski31]. Just as particular scientific methods are uniquely suited for answering specific questions, our project advances the science of engagement by allowing researchers to begin tailoring engagement methods to the task at hand.

Next Steps

Next steps for the Stakeholder Engagement Navigator webtool include developing and implementing a dissemination strategy, designing and conducting evaluations to assess real-world utility, and devising processes to help ensure that the webtool is maintained, updated, and sustained over time.

Supplementary material

To view supplementary material for this article, please visit https://doi.org/10.1017/cts.2021.850.

Acknowledgements

The authors wish to acknowledge the contributions of Suzanne Millward for guiding the DICE team through the beginning stages of the Design Thinking process, Erika Blacksher for substantive contributions to the design of the webtool, the valuable input received from user testing participants, as well as the support of the University of Colorado’s Data Science to Patient Value initiative. Supported by NIH/NCATS Colorado CTSA Grant Number UL1 TR002535. Contents are the authors’ sole responsibility and do not necessarily represent official NIH views.

Disclosures

The authors have no conflicts of interest to declare.