
$L^4$ norm of Littlewood polynomials

Published online by Cambridge University Press: 15 March 2024

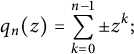

This paper concerns the  $L^4$ norm of Littlewood polynomials on the unit circle which are given by

$L^4$ norm of Littlewood polynomials on the unit circle which are given by  $$ \begin{align*}q_n(z)=\sum_{k=0}^{n-1}\pm z^k;\end{align*} $$

$$ \begin{align*}q_n(z)=\sum_{k=0}^{n-1}\pm z^k;\end{align*} $$ $\{-1,1\}$. Let

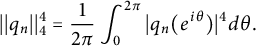

$\{-1,1\}$. Let  $$ \begin{align*}||q_n||_4^4=\frac{1}{2\pi}\int_0^{2\pi}|q_n(e^{i\theta})|^4 d\theta.\end{align*} $$

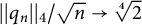

$$ \begin{align*}||q_n||_4^4=\frac{1}{2\pi}\int_0^{2\pi}|q_n(e^{i\theta})|^4 d\theta.\end{align*} $$ $||q_n||_4/\sqrt {n}\rightarrow \sqrt [4]{2}$ almost surely as

$||q_n||_4/\sqrt {n}\rightarrow \sqrt [4]{2}$ almost surely as  $n\to \infty $. This improves a result of Borwein and Lockhart (2001, Proceedings of the American Mathematical Society 129, 1463–1472), who proved the corresponding convergence in probability. Computer-generated numerical evidence for the a.s. convergence has been provided by Robinson (1997, Polynomials with plus or minus one coefficients: growth properties on the unit circle, M.Sc. thesis, Simon Fraser University). We indeed present two proofs of the main result. The second proof extends to cases where we only need to assume a fourth moment condition.

$n\to \infty $. This improves a result of Borwein and Lockhart (2001, Proceedings of the American Mathematical Society 129, 1463–1472), who proved the corresponding convergence in probability. Computer-generated numerical evidence for the a.s. convergence has been provided by Robinson (1997, Polynomials with plus or minus one coefficients: growth properties on the unit circle, M.Sc. thesis, Simon Fraser University). We indeed present two proofs of the main result. The second proof extends to cases where we only need to assume a fourth moment condition.
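The limit can be checked numerically with a small simulation. The sketch below is an illustration only, not the method of the paper or of Robinson's thesis. It assumes the signs are independent and uniform on $\{-1,1\}$, and it uses Parseval's identity: $||q_n||_4^4$ equals the sum of the squared aperiodic autocorrelations $c_k=\sum_j a_j a_{j+k}$ of the coefficient sequence. The function names `l4_norm_fourth_power` and `normalized_l4` are hypothetical.

```python
import numpy as np


def l4_norm_fourth_power(coeffs):
    """Return ||q||_4^4 for q(z) = sum_k coeffs[k] * z**k with real coefficients.

    By Parseval, the mean of |q|^4 over the unit circle equals the sum of the
    squared autocorrelations c_k, k = -(n-1), ..., n-1.
    """
    a = np.asarray(coeffs, dtype=np.int64)
    # mode="full" returns every lag from -(n-1) to n-1.
    c = np.correlate(a, a, mode="full")
    return int(np.sum(c * c))


def normalized_l4(n, rng):
    """Sample one random Littlewood polynomial and return ||q_n||_4 / sqrt(n)."""
    # Assumption: independent signs, each +1 or -1 with probability 1/2.
    signs = rng.choice([-1, 1], size=n)
    return l4_norm_fourth_power(signs) ** 0.25 / np.sqrt(n)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    target = 2 ** 0.25
    for n in (100, 1000, 4000):
        print(n, normalized_l4(n, rng), target)
```

Under the same independence assumption, the expected value of $||q_n||_4^4$ is $2n^2-n$, which is consistent with the limit $\sqrt[4]{2}$. The direct autocorrelation costs $O(n^2)$ operations; an FFT-based correlation would scale better for large $n$.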

Y. Duan is supported by the NNSF of China (Grant No. 12171075) and the Science and Technology Research Project of Education Department of Jilin Province (Grant No. JJKH20241406KJ). X. Fang is supported by the NSTC of Taiwan (Grant No. 112-2115-M-008-010-MY2).