1. Introduction

The study of passive tracer transport is of fundamental importance in characterizing turbulence. However, predicting a chaotic dynamical trajectory over long times is infeasible (Lorenz Reference Lorenz1963), so one must switch to a statistical perspective to make headway on transport properties. From the analysis of dispersion by Taylor (Reference Taylor1922), operator notions of mixing from Knobloch (Reference Knobloch1977), computations of ‘effective diffusivity’ by Avellaneda & Majda (Reference Avellaneda and Majda1991), simplified models of turbulence by Pope (Reference Pope2011), rigorous notions of mixing in terms of Sobolev norms by Thiffeault (Reference Thiffeault2012) or upper bounds on transport of Hassanzadeh, Chini & Doering (Reference Hassanzadeh, Chini and Doering2014), a variety of different approaches have been developed to elucidate fundamental properties of turbulence.

In addition to furthering our understanding of turbulence, there are practical applications for turbulence closures. In particular, Earth Systems Models require closure relations for the transport of unresolved motions (Schneider et al. Reference Schneider, Lan, Stuart and Teixeira2017); however, the closure relations are marred by structural and parametric uncertainty, requiring ad hoc tuning to compensate for biases. Structural biases are associated with scaling laws and closure assumptions between turbulent fluxes and gradients. Modern studies are bridging the gap by incorporating more complex physics and novel scaling laws, see as examples the works of Tan et al. (Reference Tan, Kaul, Pressel, Cohen, Schneider and Teixeira2018) and Gallet & Ferrari (Reference Gallet and Ferrari2020), but the correct functional forms to represent fluxes still need to be discovered.

The multi-scale nature of turbulent flows, coherent structures and the interconnection of reacting chemical species and prognostic fields suggest that fluxes are better modelled using nonlinear and non-local operators in space, time and state. Data-driven methods relying on flexible interpolants can significantly reduce structural bias, but often at the expense of interpretability, generalizability or efficiency. Thus, understanding scaling laws and functional forms of turbulence closures is still necessary to physically constrain data-driven methods and decrease their computational expense. A promising avenue for significant progress, lying at the intersection of theory and practice, is the calculation of closure relations for passive tracers.

The present work addresses a fundamentally different question than that of short-time Lagrangian statistics, such as the works of Taylor (Reference Taylor1922), Moffatt (Reference Moffatt1983), Weeks, Urbach & Swinney (Reference Weeks, Urbach and Swinney1996) and Falkovich, Gawȩ dzki & Vergassola (Reference Falkovich, Gawȩ dzki and Vergassola2001), since our focus is on Eulerian statistically steady statistics. Consequently, we develop new non-perturbative techniques to address the question at hand. In particular, the use of path integrals or Taylor expansions is stymied since perturbation methods based on local information often yield irredeemably non-convergent expansions for strong flow fields and long time limits. The calculations herein are more akin to calculating a ground state in quantum mechanics rather than short-time particle scattering.

A middle ground is the work of Gorbunova et al. (Reference Gorbunova, Pagani, Balarac, Canet and Rossetto2021), which analyses short-time Eulerian two-point statistics and makes connections to the Lagrangian approach. There they use renormalization groups as the workhorse for calculations. The most similar prior work regarding problem formulation is the upper bound analysis of Hakulinen (Reference Hakulinen2003), in which all moments of a stochastically advected passive tracer were considered. The scope was different because the goal was to understand all moments of the resulting stochastic tracer field advected by the Gaussian model of Kraichnan (Reference Kraichnan1968). Here we focus on exact expressions for the ensemble mean flux caused by a general class of flow fields. Although we only focus on the ensemble mean flux of a passive tracer, we do not make restrictions on the temporal statistics of the stochastic flow field. Furthermore, the methodology we outline extends to other moments of the flow field and short-time statistics, although we emphasize neither.

We make arguments akin to those by Kraichnan (Reference Kraichnan1968) to motivate the operator approach, but our calculation method is fundamentally different. Given that the goal is to construct an operator rather than estimate a diffusivity tensor acting on the ensemble mean gradients or deriving upper bounds, we take a field-theoretic perspective (Hopf Reference Hopf1952). Doing so allows us to derive a coupled set of partial differential equations representing conditional mean tracers where the conditional averages are with respect to different flow states. The flux associated with fluctuations is then a Schur complement of the resulting linear system with respect to statistical ‘perturbation’ variables. Moreover, if the flow statistics are given by a continuous-time Markov process with a small finite state space, the Schur complement becomes tractable to compute analytically. Hereafter, we refer to the flux associated with fluctuations as the turbulent flux (which is sometimes also called an ‘eddy flux’), and the corresponding operator is the turbulent diffusivity operator (alternatively an ‘effective diffusivity operator’).

The paper is organized as follows. In § 2, we formulate the closure problem and recast it as one of solving partial differential equations. In § 3, we give two examples: a one-dimensional (1-D) tracer advected by an Ornstein–Uhlenbeck process and a tracer advected by a flow field with an arbitrary spatial structure that switches between three states. Section 4 outlines the general theory, and we apply it to a travelling stochastic wave in a channel in § 5. Appendices supplement the body of the manuscript. Appendix A shows how a ‘white-noise’ limit reduces the turbulent diffusivity to a local diffusivity tensor and how the present theory encompasses the class of flow fields of Kraichnan (Reference Kraichnan1968) in the ‘white-noise’ limit. Appendix B provides a direct field-theoretic derivation of arguments in § 2, and Appendix C provides a heuristic overview of obtaining continuous-time Markov processes with finite state space and their statistics from deterministic or stochastic dynamical systems.

2. Problem formulation

We consider the advection and diffusion of an ensemble of passive tracers ![]() $\theta _{\omega }$

$\theta _{\omega }$

by a stochastic flow field ![]() $\boldsymbol {\tilde {u}}_{\omega } (\boldsymbol {x}, t)$ where

$\boldsymbol {\tilde {u}}_{\omega } (\boldsymbol {x}, t)$ where ![]() $\omega$ labels the ensemble member. Here,

$\omega$ labels the ensemble member. Here, ![]() $s$ is a deterministic mean zero source term and

$s$ is a deterministic mean zero source term and ![]() $\kappa$ is a diffusivity constant. (For laboratory flows,

$\kappa$ is a diffusivity constant. (For laboratory flows, ![]() $\kappa$ would be the molecular diffusivity; for larger-scale problems, we rely on the fact that away from boundaries, the ensemble mean advective flux (but not necessarily other statistics) may still be much larger than the diffusive flux. Thus, the exact value of

$\kappa$ would be the molecular diffusivity; for larger-scale problems, we rely on the fact that away from boundaries, the ensemble mean advective flux (but not necessarily other statistics) may still be much larger than the diffusive flux. Thus, the exact value of ![]() $\kappa$ will not matter.) Our target is to obtain a meaningful equation for the ensemble mean,

$\kappa$ will not matter.) Our target is to obtain a meaningful equation for the ensemble mean,

which requires a computationally amenable expression for the mean advective flux, ![]() $\langle \boldsymbol {\tilde {u}} \theta \rangle$, in terms of the statistics of the flow field,

$\langle \boldsymbol {\tilde {u}} \theta \rangle$, in terms of the statistics of the flow field, ![]() $\boldsymbol {\tilde {u}}_{\omega } (\boldsymbol {x}, t)$, and the ensemble average of the tracer,

$\boldsymbol {\tilde {u}}_{\omega } (\boldsymbol {x}, t)$, and the ensemble average of the tracer, ![]() $\langle \theta \rangle$. Thus, the closure problem is to find an operator

$\langle \theta \rangle$. Thus, the closure problem is to find an operator ![]() ${O}$ that relates the ensemble mean,

${O}$ that relates the ensemble mean, ![]() $\langle \theta \rangle$, to the ensemble mean advective-flux,

$\langle \theta \rangle$, to the ensemble mean advective-flux, ![]() $\langle \boldsymbol {\tilde {u}} \theta \rangle$, i.e.

$\langle \boldsymbol {\tilde {u}} \theta \rangle$, i.e.

We show how to define (and solve for) the operator ![]() ${O}$. We do so by writing down the Fokker–Planck/master equation for the joint Markov process

${O}$. We do so by writing down the Fokker–Planck/master equation for the joint Markov process ![]() $(\boldsymbol {\tilde {u}}_{\omega } , \theta _{\omega })$ corresponding to (2.1), integrating with respect to all possible tracer field configurations and manipulating the resulting system of partial differential equations. The operator will be linear with respect to its argument and depend on the statistics of the flow field.

$(\boldsymbol {\tilde {u}}_{\omega } , \theta _{\omega })$ corresponding to (2.1), integrating with respect to all possible tracer field configurations and manipulating the resulting system of partial differential equations. The operator will be linear with respect to its argument and depend on the statistics of the flow field.

We assume all tracer ensemble members to have the same initial condition and, thus, the ensemble average here is with respect to different flow realizations. The only source of randomness comes from different flow realizations. Throughout the manuscript, we assume homogeneous Neumann boundary conditions for the tracer and zero wall-normal flow for the velocity field when boundaries are present. Combined with the assumption that the source term is mean zero, these restrictions imply that the tracer average is conserved.

For the statistics of the flow field, we consider a continuous-time Markov process with known statistics, as characterized by the generator of the process. When the state space of the flow is finite with ![]() $N$ states, we label all possible flow configurations corresponding to steady flow fields by

$N$ states, we label all possible flow configurations corresponding to steady flow fields by ![]() $\boldsymbol {u}_n(\boldsymbol {x})$, where

$\boldsymbol {u}_n(\boldsymbol {x})$, where ![]() $n$ is the associated state index. We will keep with the convention of using the

$n$ is the associated state index. We will keep with the convention of using the ![]() $\boldsymbol {\tilde {u}}$ as a dynamic variable and

$\boldsymbol {\tilde {u}}$ as a dynamic variable and ![]() $\boldsymbol {u}$ as a fixed flow structure. These choices represent the flow as a Markov jump process where the jumps occur between flow fields with a fixed spatial structure.

$\boldsymbol {u}$ as a fixed flow structure. These choices represent the flow as a Markov jump process where the jumps occur between flow fields with a fixed spatial structure.

Physically, we think of these states as representing coherent structures in a turbulent flow. (This is viewed as a finite volume discretization in function space where the states are the ‘cell averages’ of a control volume in function space. Thus, the transition probability matrix becomes an approximated Perron–Frobenius operator of the turbulent flow.) Suri et al. (Reference Suri, Tithof, Grigoriev and Schatz2017) provide the experimental and numerical motivation for this philosophy in the context of an approximate Kolmogorov flow. By its very nature, a turbulent flow that is chaotic and unpredictable over long time horizons is modelled as being semi-unpredictable through our choice. Over short horizons, the probability of remaining in a given state is large. The flow is limited to moving to a subset of likely places in phase space in the medium term. Over long time horizons, the most one can say about the flow is related to the likelihood of being found in the appropriate subset of phase space associated with the statistically steady state.

Thus, we proceed by characterizing the probability, ![]() $\mathbb {P}$, of transitioning from state

$\mathbb {P}$, of transitioning from state ![]() $n$ to state

$n$ to state ![]() $m$ by a transition matrix

$m$ by a transition matrix ![]() $\mathscr {P}(\tau )$,

$\mathscr {P}(\tau )$,

The transition probability is defined through its relation to the generator ![]() $\mathcal {Q}$,

$\mathcal {Q}$,

where ![]() $\exp ( \mathcal {Q} \tau )$ is a matrix exponential. Each entry of

$\exp ( \mathcal {Q} \tau )$ is a matrix exponential. Each entry of ![]() $\mathscr {P}(\tau )$ must be positive. Furthermore, for each

$\mathscr {P}(\tau )$ must be positive. Furthermore, for each ![]() $\tau$, the columns of

$\tau$, the columns of ![]() $\mathscr {P}(\tau )$ sum to one since the total probability must always sum to one. Similarly,

$\mathscr {P}(\tau )$ sum to one since the total probability must always sum to one. Similarly, ![]() $\mathcal {Q}$'s off-diagonal terms must be positive and the column sum of

$\mathcal {Q}$'s off-diagonal terms must be positive and the column sum of ![]() $\mathcal {Q}$ must be zero. (Indeed, to first order,

$\mathcal {Q}$ must be zero. (Indeed, to first order, ![]() $\exp (\mathcal {Q} \,{\rm d}t) = \mathbb {I} + \mathcal {Q} \,{\rm d}t$. The positivity requirement of the transition probability

$\exp (\mathcal {Q} \,{\rm d}t) = \mathbb {I} + \mathcal {Q} \,{\rm d}t$. The positivity requirement of the transition probability ![]() $\mathscr {P}({\rm d}t)=\exp (\mathcal {Q} \,{\rm d}t)$ necessitates the positivity of

$\mathscr {P}({\rm d}t)=\exp (\mathcal {Q} \,{\rm d}t)$ necessitates the positivity of ![]() $\mathcal {Q}$'s off-diagonal terms and thus, in turn, the negativity of its diagonal terms.)

$\mathcal {Q}$'s off-diagonal terms and thus, in turn, the negativity of its diagonal terms.)

We denote the probability of an ensemble member, ![]() $\boldsymbol {\tilde {u}}_{\omega }$, being found at state

$\boldsymbol {\tilde {u}}_{\omega }$, being found at state ![]() $m$ at time

$m$ at time ![]() $t$ by

$t$ by ![]() $\mathcal {P}_m(t)$,

$\mathcal {P}_m(t)$,

The evolution equation for ![]() $\mathcal {P}_m(t)$ is the master equation,

$\mathcal {P}_m(t)$ is the master equation,

We assume that (2.7) has a unique steady state and denote the components of the steady state by ![]() $P_m$.

$P_m$.

We have used several ‘P’ at this stage, and their relation is:

(i)

$\mathbb {P}$ denotes a probability;

$\mathbb {P}$ denotes a probability;(ii)

$\mathscr {P}(\tau )$ denotes the transition probability matrix for a time

$\mathscr {P}(\tau )$ denotes the transition probability matrix for a time  $\tau$ in the future;

$\tau$ in the future;(iii)

$\mathcal {P}_m(t)$ denotes the probability of being in state

$\mathcal {P}_m(t)$ denotes the probability of being in state  $m$ at time

$m$ at time  $t$. The algebraic relation

(2.8)holds;

$t$. The algebraic relation

(2.8)holds; \begin{equation} \sum_m \mathcal{P}_m(t+\tau) \boldsymbol{\hat{e}}_m = \mathscr{P}(\tau) \sum_n \mathcal{P}_n(t) \boldsymbol{\hat{e}}_n \end{equation}

\begin{equation} \sum_m \mathcal{P}_m(t+\tau) \boldsymbol{\hat{e}}_m = \mathscr{P}(\tau) \sum_n \mathcal{P}_n(t) \boldsymbol{\hat{e}}_n \end{equation}(iv) in addition, we use

$P_m$ for the statistically steady probability of being found in state

$P_m$ for the statistically steady probability of being found in state  $m$, in the limit

(2.9)

$m$, in the limit

(2.9) \begin{equation} \lim_{t \rightarrow \infty} \mathcal{P}_m(t) = P_m . \end{equation}

\begin{equation} \lim_{t \rightarrow \infty} \mathcal{P}_m(t) = P_m . \end{equation}

We exploit the information about the flow field to infer the mean statistics of the passive tracer ![]() $\theta _\omega$. We do so by conditionally averaging the tracer field

$\theta _\omega$. We do so by conditionally averaging the tracer field ![]() $\theta _\omega$ with respect to a given flow state

$\theta _\omega$ with respect to a given flow state ![]() $\boldsymbol {u}_m$. More precisely, given the stochastic partial differential equation,

$\boldsymbol {u}_m$. More precisely, given the stochastic partial differential equation,

we shall obtain equations for probability weighted conditional means of ![]() $\theta _\omega$,

$\theta _\omega$,

Empirically, the probability weighted conditional average is computed by examining all ensemble members at a fixed time step, adding up only the ensemble members that are currently being advected by state ![]() $\boldsymbol {u}_m$ and then dividing by the total number of ensemble members. We will show that the evolution equation for

$\boldsymbol {u}_m$ and then dividing by the total number of ensemble members. We will show that the evolution equation for ![]() $\varTheta _m$ is

$\varTheta _m$ is

As we shall see, the explicit dependence on the generator in (2.14) yields considerable information. We recover the equation for the tracer ensemble mean, (2.2), by summing (2.14) over the index ![]() $m$, using

$m$, using ![]() $\langle \theta \rangle = \sum _m \varTheta _m$,

$\langle \theta \rangle = \sum _m \varTheta _m$, ![]() $\sum _m \mathcal {Q}_{mn} = \boldsymbol {0}$, and

$\sum _m \mathcal {Q}_{mn} = \boldsymbol {0}$, and ![]() $\sum _m \mathcal {P}_{m} = 1$,

$\sum _m \mathcal {P}_{m} = 1$,

\begin{align} \partial_t \sum_m \varTheta_m + \boldsymbol{\nabla} \boldsymbol{\cdot} \left( \sum_m \boldsymbol{u}_m \varTheta_m - \kappa \boldsymbol{\nabla} \sum_m \varTheta_m \right) &= s(\boldsymbol{x}) \end{align}

\begin{align} \partial_t \sum_m \varTheta_m + \boldsymbol{\nabla} \boldsymbol{\cdot} \left( \sum_m \boldsymbol{u}_m \varTheta_m - \kappa \boldsymbol{\nabla} \sum_m \varTheta_m \right) &= s(\boldsymbol{x}) \end{align}The presence of the generator when taking conditional averages is similar to the entrainment hypothesis in the atmospheric literature. See, for example, Tan et al. (Reference Tan, Kaul, Pressel, Cohen, Schneider and Teixeira2018) for its use in motivating a turbulence closure; however, here, we derive the result from the direct statistical representation instead of hypothesizing its presence from a dynamical argument.

We give a brief derivation of (2.13) and (2.14) using a discretization of the advection-diffusion equation here. For an alternative derivation where we forego discretization, see Appendix B, and for a brief overview of the connection between the discrete, continuous and mixed master equation, see Appendix C or, in a simpler context, the work of Hagan, Doering & Levermore (Reference Hagan, Doering and Levermore1989). Most of the terms in (2.14) are obtained by applying a conditional average to (2.11), commuting with spatial derivatives when necessary and then multiplying through by ![]() $\mathcal {P}_m$; however, the primary difficulty lies in proper treatment of the conditional average of the temporal derivative. We circumvent the problem in a roundabout manner: discretize the advection-diffusion equation, write down the resulting master equation, compute moments of the probability distribution and then take limits to restore the continuum nature of the advection-diffusion equation.

$\mathcal {P}_m$; however, the primary difficulty lies in proper treatment of the conditional average of the temporal derivative. We circumvent the problem in a roundabout manner: discretize the advection-diffusion equation, write down the resulting master equation, compute moments of the probability distribution and then take limits to restore the continuum nature of the advection-diffusion equation.

A generic discretization (in any number of dimensions) of (2.11) is of the form

\begin{equation} \frac{{\rm d}}{{\rm d}t} \theta^i + \sum_{jkc} A_{ijk}^c \tilde{u}^{k,c}_\omega \theta^j - \sum_j D_{ij} \theta^j = s^i \end{equation}

\begin{equation} \frac{{\rm d}}{{\rm d}t} \theta^i + \sum_{jkc} A_{ijk}^c \tilde{u}^{k,c}_\omega \theta^j - \sum_j D_{ij} \theta^j = s^i \end{equation}

for some tensor ![]() $A_{ijk}^c$, representing advection, and matrix

$A_{ijk}^c$, representing advection, and matrix ![]() $D_{ij}$, representing diffusion. Here, each

$D_{ij}$, representing diffusion. Here, each ![]() $i,j$ and

$i,j$ and ![]() $k$ corresponds to a spatial location, and the index

$k$ corresponds to a spatial location, and the index ![]() $c$ corresponds to a component of the velocity field

$c$ corresponds to a component of the velocity field ![]() $\boldsymbol {\tilde {u}}$. The variable

$\boldsymbol {\tilde {u}}$. The variable ![]() $\theta ^i$ is the value of the tracer at grid location

$\theta ^i$ is the value of the tracer at grid location ![]() $i$ and

$i$ and ![]() $v^{k,c}$ is the value of the

$v^{k,c}$ is the value of the ![]() $c$th velocity component and grid location

$c$th velocity component and grid location ![]() $k$. The master equation for the joint probability density for each component

$k$. The master equation for the joint probability density for each component ![]() $\theta ^i$ and Markov state

$\theta ^i$ and Markov state ![]() $m$,

$m$, ![]() $\rho _m( \boldsymbol {\theta } )$, where

$\rho _m( \boldsymbol {\theta } )$, where ![]() $\boldsymbol {\theta } = (\theta ^1, \theta ^2,\ldots )$ and the

$\boldsymbol {\theta } = (\theta ^1, \theta ^2,\ldots )$ and the ![]() $m$-index denotes a particular Markov state, is a combination of the Liouville equation for (2.17) and the transition rate equation for (2.10),

$m$-index denotes a particular Markov state, is a combination of the Liouville equation for (2.17) and the transition rate equation for (2.10),

\begin{equation} \partial_t \rho_m = \sum_{i} \frac{\partial}{\partial \theta^i} \left[ \left( \sum_{jkc} A_{ijk}^c u^{k,c}_m \theta^j - \sum_j D_{ij} \theta^j - s^i \right ) \rho_m \right] + \sum_n \mathcal{Q}_{mn} \rho_n . \end{equation}

\begin{equation} \partial_t \rho_m = \sum_{i} \frac{\partial}{\partial \theta^i} \left[ \left( \sum_{jkc} A_{ijk}^c u^{k,c}_m \theta^j - \sum_j D_{ij} \theta^j - s^i \right ) \rho_m \right] + \sum_n \mathcal{Q}_{mn} \rho_n . \end{equation}Define the following moments:

We obtain an equation for ![]() $\mathcal {P}_m$ by integrating (2.18) by

$\mathcal {P}_m$ by integrating (2.18) by ![]() ${\rm d}\boldsymbol {\theta }$ to yield

${\rm d}\boldsymbol {\theta }$ to yield

as expected from (2.7). The equation for ![]() $\varTheta _m^\ell$ is obtained by multiplying (2.18) by

$\varTheta _m^\ell$ is obtained by multiplying (2.18) by ![]() $\theta ^\ell$ and then integrating with respect to

$\theta ^\ell$ and then integrating with respect to ![]() ${\rm d}\boldsymbol {\theta }$,

${\rm d}\boldsymbol {\theta }$,

\begin{equation} \frac{{\rm d}}{{\rm d}t} \varTheta_m^\ell ={-}\sum_{jkc} A_{\ell jk}^c u^{k,c}_m \varTheta^j_m + \sum_j D_{\ell j} \varTheta^j_m + s^\ell \mathcal{P}_m + \sum_n \mathcal{Q}_{mn} \varTheta_n^\ell , \end{equation}

\begin{equation} \frac{{\rm d}}{{\rm d}t} \varTheta_m^\ell ={-}\sum_{jkc} A_{\ell jk}^c u^{k,c}_m \varTheta^j_m + \sum_j D_{\ell j} \varTheta^j_m + s^\ell \mathcal{P}_m + \sum_n \mathcal{Q}_{mn} \varTheta_n^\ell , \end{equation}

where we integrated by parts on the ![]() $\int \,{\rm d} \boldsymbol {\theta } \theta ^\ell \partial _{\theta ^i} \bullet$ term. Upon taking limits of (2.21), we arrive at (2.13) and (2.14), repeated here for convenience,

$\int \,{\rm d} \boldsymbol {\theta } \theta ^\ell \partial _{\theta ^i} \bullet$ term. Upon taking limits of (2.21), we arrive at (2.13) and (2.14), repeated here for convenience,

We compare (2.23) to the direct application of the conditional average to (2.11) followed by multiplication with ![]() $\mathcal {P}_m$ to infer

$\mathcal {P}_m$ to infer

In summary, for an ![]() $m$-dimensional advection-diffusion equation and

$m$-dimensional advection-diffusion equation and ![]() $N$ Markov states, (2.13) and (2.14) are a set of

$N$ Markov states, (2.13) and (2.14) are a set of ![]() $N$-coupled

$N$-coupled ![]() $m$-dimensional advection-diffusion equations with

$m$-dimensional advection-diffusion equations with ![]() $N$ different steady velocities. When

$N$ different steady velocities. When ![]() $c$ continuous variables describe the statistics of the flow field, the resulting equation set becomes an

$c$ continuous variables describe the statistics of the flow field, the resulting equation set becomes an ![]() $m+c$ dimensional system. Stated differently, if the statistics of

$m+c$ dimensional system. Stated differently, if the statistics of ![]() $\boldsymbol {\tilde {u}}_{\omega }$ are characterized by transitions between a continuum of states associated with variables

$\boldsymbol {\tilde {u}}_{\omega }$ are characterized by transitions between a continuum of states associated with variables ![]() $\boldsymbol {\alpha } \in \mathbb {R}^c$ and Fokker–Planck operator

$\boldsymbol {\alpha } \in \mathbb {R}^c$ and Fokker–Planck operator ![]() $\mathcal {F}_{\boldsymbol {\alpha }}$, then the conditional averaging procedure yields

$\mathcal {F}_{\boldsymbol {\alpha }}$, then the conditional averaging procedure yields

where ![]() $\mathcal {P} = \mathcal {P}(\boldsymbol {\alpha }, t)$,

$\mathcal {P} = \mathcal {P}(\boldsymbol {\alpha }, t)$, ![]() $\varTheta = \varTheta (\boldsymbol {x}, \boldsymbol {\alpha }, t)$ and

$\varTheta = \varTheta (\boldsymbol {x}, \boldsymbol {\alpha }, t)$ and ![]() $\boldsymbol {u} = \boldsymbol {u}(\boldsymbol {x}, \boldsymbol {\alpha })$. Equations (2.22) and (2.23) are thought of as finite volume discretizations of flow statistics in (2.25) and (2.26). We give an explicit example in § 3.1 and another in § 5.

$\boldsymbol {u} = \boldsymbol {u}(\boldsymbol {x}, \boldsymbol {\alpha })$. Equations (2.22) and (2.23) are thought of as finite volume discretizations of flow statistics in (2.25) and (2.26). We give an explicit example in § 3.1 and another in § 5.

Our primary concern in this work is to use (2.22) and (2.23), or analogously (2.25) and (2.26), to calculate meaningful expressions for ![]() $\langle \boldsymbol {\tilde {u}} \theta \rangle$; however, we shall first take a broader view to understand the general structure of the turbulent fluxes. We attribute the following argument to Weinstock (Reference Weinstock1969) but use different notation and make additional simplifications.

$\langle \boldsymbol {\tilde {u}} \theta \rangle$; however, we shall first take a broader view to understand the general structure of the turbulent fluxes. We attribute the following argument to Weinstock (Reference Weinstock1969) but use different notation and make additional simplifications.

Applying the Reynolds decomposition

yields

The perturbation equation is rewritten as

This equation is an infinite system (or finite, depending on the number of ensemble members) of coupled partial differential equations between the different ensemble members. The ensemble members are coupled due to the turbulent flux, ![]() $\langle \boldsymbol {\tilde {u}}' \theta ' \rangle$. The key observation is to notice that the terms on the left-hand side involve the perturbation variables and not the ensemble mean of the gradients. Assuming it is possible to find the inverse, the Green's function for the large linear system is used to yield

$\langle \boldsymbol {\tilde {u}}' \theta ' \rangle$. The key observation is to notice that the terms on the left-hand side involve the perturbation variables and not the ensemble mean of the gradients. Assuming it is possible to find the inverse, the Green's function for the large linear system is used to yield

where we also have to integrate with respect to the measure defining the different ensemble members through ![]() ${\rm d}\mu _{\omega '}$. In our notation, this implies

${\rm d}\mu _{\omega '}$. In our notation, this implies ![]() $\langle \theta \rangle = \int \,{\rm d} \mu _{\omega } \theta _\omega$. We use this expression to rewrite the turbulent flux as

$\langle \theta \rangle = \int \,{\rm d} \mu _{\omega } \theta _\omega$. We use this expression to rewrite the turbulent flux as

We make two simplifications for illustrative purposes.

(i) All ensemble averages are independent of time.

(ii) The flow is incompressible, i.e.

$\boldsymbol {\nabla } \boldsymbol {\cdot } \boldsymbol {\tilde {u}} = 0$.

$\boldsymbol {\nabla } \boldsymbol {\cdot } \boldsymbol {\tilde {u}} = 0$.

Equation (2.32) becomes

We perform the ![]() $t', \alpha, \omega$ integrals first to define the turbulent diffusivity tensor kernel as

$t', \alpha, \omega$ integrals first to define the turbulent diffusivity tensor kernel as

\begin{align} \langle \boldsymbol{\tilde{u}}' \theta' \rangle &={-}\int \,{\rm d}\boldsymbol{x}' \underbrace{\int \,{\rm d}t' \,{\rm d}\mu_{\omega} \,{\rm d}\mu_{\omega'} \left[ \boldsymbol{\tilde{u}}'_\omega(\boldsymbol{x}, t) \otimes \boldsymbol{\tilde{u}}_{\omega'}'(\boldsymbol{x}' , t') \mathcal{G}_{\omega \omega'}(\boldsymbol{x}, t | \boldsymbol{x}' , t' ) \right] }_{\boldsymbol{\mathcal{K}}(\boldsymbol{x}|\boldsymbol{x}')} \boldsymbol{\cdot} \boldsymbol{\nabla} \langle \theta \rangle (\boldsymbol{x}' ), \end{align}

\begin{align} \langle \boldsymbol{\tilde{u}}' \theta' \rangle &={-}\int \,{\rm d}\boldsymbol{x}' \underbrace{\int \,{\rm d}t' \,{\rm d}\mu_{\omega} \,{\rm d}\mu_{\omega'} \left[ \boldsymbol{\tilde{u}}'_\omega(\boldsymbol{x}, t) \otimes \boldsymbol{\tilde{u}}_{\omega'}'(\boldsymbol{x}' , t') \mathcal{G}_{\omega \omega'}(\boldsymbol{x}, t | \boldsymbol{x}' , t' ) \right] }_{\boldsymbol{\mathcal{K}}(\boldsymbol{x}|\boldsymbol{x}')} \boldsymbol{\cdot} \boldsymbol{\nabla} \langle \theta \rangle (\boldsymbol{x}' ), \end{align}

The independence of ![]() $\boldsymbol {\mathcal {K}}$ with respect to

$\boldsymbol {\mathcal {K}}$ with respect to ![]() $t$ follows from the time-independence of

$t$ follows from the time-independence of ![]() $\langle \boldsymbol {\tilde {u}}' \theta ' \rangle$ and

$\langle \boldsymbol {\tilde {u}}' \theta ' \rangle$ and ![]() $\langle \theta \rangle$. In total, we see

$\langle \theta \rangle$. In total, we see

An insight from (2.36) is the dependence of turbulent fluxes ![]() $\langle \boldsymbol {\tilde {u}}' \theta ' \rangle$ at location

$\langle \boldsymbol {\tilde {u}}' \theta ' \rangle$ at location ![]() $\boldsymbol {x}$ as a weighted sum of gradients of the mean variable

$\boldsymbol {x}$ as a weighted sum of gradients of the mean variable ![]() $\langle \theta \rangle$ at locations

$\langle \theta \rangle$ at locations ![]() $\boldsymbol {x}'$. The operator is linear and amenable to computation, even in turbulent flows (Bhamidipati, Souza & Flierl Reference Bhamidipati, Souza and Flierl2020).

$\boldsymbol {x}'$. The operator is linear and amenable to computation, even in turbulent flows (Bhamidipati, Souza & Flierl Reference Bhamidipati, Souza and Flierl2020).

We consider the spectrum for the turbulent diffusivity operator ![]() $\int \,{\rm d} \boldsymbol {x}' \mathcal {K}(\boldsymbol {x} | \boldsymbol {x}' ) \bullet$ as a characterization of turbulent mixing by the flow field

$\int \,{\rm d} \boldsymbol {x}' \mathcal {K}(\boldsymbol {x} | \boldsymbol {x}' ) \bullet$ as a characterization of turbulent mixing by the flow field ![]() $\boldsymbol {\tilde {u}}_{\omega } (\boldsymbol {x}, t)$. We comment that the operator

$\boldsymbol {\tilde {u}}_{\omega } (\boldsymbol {x}, t)$. We comment that the operator ![]() $\int \,{\rm d} \boldsymbol {x}' \mathcal {K}(\boldsymbol {x} | \boldsymbol {x}' ) \bullet$ is a mapping from vector fields to vector fields, whereas the kernel

$\int \,{\rm d} \boldsymbol {x}' \mathcal {K}(\boldsymbol {x} | \boldsymbol {x}' ) \bullet$ is a mapping from vector fields to vector fields, whereas the kernel ![]() $\mathcal {K}(\boldsymbol {x} | \boldsymbol {x}' )$ is a mapping from two positions to a tensor.

$\mathcal {K}(\boldsymbol {x} | \boldsymbol {x}' )$ is a mapping from two positions to a tensor.

For example, consider a 1-D problem in a periodic domain ![]() $x \in [0, 2 {\rm \pi})$. If

$x \in [0, 2 {\rm \pi})$. If ![]() $\mathcal {K}(x | x' ) = \kappa _e \delta (x - x')$ for some positive constant

$\mathcal {K}(x | x' ) = \kappa _e \delta (x - x')$ for some positive constant ![]() $\kappa _e$, the spectrum of the operator is flat and turbulent mixing remains the same on every length scale. If

$\kappa _e$, the spectrum of the operator is flat and turbulent mixing remains the same on every length scale. If ![]() $\mathcal {K}(x| x' ) = -\kappa _e \partial _{xx}^2 \delta (x - x')$, then the rate of mixing increases with increasing wavenumber and one gets hyperdiffusion. Lastly, if

$\mathcal {K}(x| x' ) = -\kappa _e \partial _{xx}^2 \delta (x - x')$, then the rate of mixing increases with increasing wavenumber and one gets hyperdiffusion. Lastly, if ![]() $\int \,{{\rm d} x}' \mathcal {K}(x | x' ) \bullet = (\kappa _e - \partial _{xx})^{-1}$, then the kernel is non-local and the rate of mixing decreases at smaller length scales.

$\int \,{{\rm d} x}' \mathcal {K}(x | x' ) \bullet = (\kappa _e - \partial _{xx})^{-1}$, then the kernel is non-local and the rate of mixing decreases at smaller length scales.

In the following section, we calculate ![]() $\int \,{\rm d} \boldsymbol {x}' \mathcal {K}(\boldsymbol {x} | \boldsymbol {x}' ) \bullet$ directly from the conditional equations, discuss the general structure in § 4 and then apply the methodology to a wandering wave in a channel in § 5.

$\int \,{\rm d} \boldsymbol {x}' \mathcal {K}(\boldsymbol {x} | \boldsymbol {x}' ) \bullet$ directly from the conditional equations, discuss the general structure in § 4 and then apply the methodology to a wandering wave in a channel in § 5.

3. Examples

We now go through two examples to understand the implications of (2.13) and (2.14). The two examples illustrate different aspects of the conditional averaging procedure. The first aspect is the ability to approximate continuous stochastic processes as one with a finite state space, i.e. a Markov jump process. The second aspect is to obtain closed-form formulae for a Markov jump process. In both cases, we use the methodology to calculate turbulent diffusivities for statistically steady states.

The first example is the advection of a passive tracer in a 1-D periodic domain by an Ornstein–Uhlenbeck process: perhaps the simplest class of problems amenable to detailed analysis. See Pappalettera (Reference Pappalettera2022) for a mathematical treatment of this problem without source terms. The second example further builds intuition for the statistical significance of the conditionally averaging procedure by examining a three-velocity state approximation to an Ornstein–Uhlenbeck in an abstract ![]() $n$-dimensional setting and a concrete two-dimensional (2-D) setting.

$n$-dimensional setting and a concrete two-dimensional (2-D) setting.

3.1. Ornstein–Uhlenbeck process in one dimension

Consider the advection of a 1-D tracer by an Ornstein–Uhlenbeck (OU) process in a ![]() $2 {\rm \pi}$ periodic domain. The equations of motion are

$2 {\rm \pi}$ periodic domain. The equations of motion are

where ![]() $s$ is a mean zero source,

$s$ is a mean zero source, ![]() $\omega$ labels the ensemble member and we choose

$\omega$ labels the ensemble member and we choose ![]() $\kappa = 0.01$ for the diffusivity constant. We shall calculate the turbulent diffusivity operator for this system in two different ways. The first will be by simulating the equations and the second will be by using numerical approximations of the conditional mean equations.

$\kappa = 0.01$ for the diffusivity constant. We shall calculate the turbulent diffusivity operator for this system in two different ways. The first will be by simulating the equations and the second will be by using numerical approximations of the conditional mean equations.

Since the flow field ![]() $u$ is independent of the spatial variable and the domain is periodic, we decompose the tracer equation using Fourier modes and calculate a diffusivity as a function of wavenumber

$u$ is independent of the spatial variable and the domain is periodic, we decompose the tracer equation using Fourier modes and calculate a diffusivity as a function of wavenumber ![]() $k$, wavenumber by wavenumber. Decomposing

$k$, wavenumber by wavenumber. Decomposing ![]() $\theta _\omega$ as

$\theta _\omega$ as

\begin{equation} \theta_\omega(x, t) = \sum_{n={-}\infty}^{\infty} \hat{\theta}^n_\omega(t) {\rm e}^{{\rm i} k_n x} \quad\text{and}\quad s(x) = \sum_{n={-}\infty}^{\infty} \hat{s}^n {\rm e}^{{\rm i} k_n x}, \end{equation}

\begin{equation} \theta_\omega(x, t) = \sum_{n={-}\infty}^{\infty} \hat{\theta}^n_\omega(t) {\rm e}^{{\rm i} k_n x} \quad\text{and}\quad s(x) = \sum_{n={-}\infty}^{\infty} \hat{s}^n {\rm e}^{{\rm i} k_n x}, \end{equation}

where ![]() $k_n = n$, yields

$k_n = n$, yields

which are ordinary differential equations for each wavenumber ![]() $k_n$ with no coupling between wavenumbers. We define a ‘turbulent diffusivity’ on a wavenumber by wavenumber basis with

$k_n$ with no coupling between wavenumbers. We define a ‘turbulent diffusivity’ on a wavenumber by wavenumber basis with

\begin{equation} \kappa_T(k_n) ={-}\frac{\langle \tilde{u} \hat{\theta}^n \rangle}{\langle {\rm i} k_n \hat{\theta}^n \rangle}, \end{equation}

\begin{equation} \kappa_T(k_n) ={-}\frac{\langle \tilde{u} \hat{\theta}^n \rangle}{\langle {\rm i} k_n \hat{\theta}^n \rangle}, \end{equation}

which is a flux divided by a gradient. A local diffusivity would be independent of wavenumber. Here the averaging operator, ![]() $\langle {\cdot } \rangle$, is an average over all ensemble members. This quantity is independent of the source wavenumber amplitude,

$\langle {\cdot } \rangle$, is an average over all ensemble members. This quantity is independent of the source wavenumber amplitude, ![]() $\hat {s}^n$, for non-zero sources and conventions for scaling the Fourier transform. With this observation in place, we take our source term to be

$\hat {s}^n$, for non-zero sources and conventions for scaling the Fourier transform. With this observation in place, we take our source term to be ![]() $s(x) = \delta (x) - 1$ so that

$s(x) = \delta (x) - 1$ so that ![]() $\hat {s}^n = 1$ for all

$\hat {s}^n = 1$ for all ![]() $n \neq 0$ and

$n \neq 0$ and ![]() $\hat {s}^0 = 0$. We numerically simulate (3.1) and (3.2) in spectral space until a time

$\hat {s}^0 = 0$. We numerically simulate (3.1) and (3.2) in spectral space until a time ![]() $t=25$ using

$t=25$ using ![]() $10^6$ ensemble members and then perform the ensemble average at this fixed time. We have finished the description for the first method of calculation. We now move on to using the conditional mean equations directly.

$10^6$ ensemble members and then perform the ensemble average at this fixed time. We have finished the description for the first method of calculation. We now move on to using the conditional mean equations directly.

The conditional mean equations recover the same result as the continuous ensemble mean. We show this through numerical discretization. The conditional mean equation version of (3.1) and (3.2) is

where ![]() $\varTheta = \varTheta (x, u, t)$ and

$\varTheta = \varTheta (x, u, t)$ and ![]() $\mathcal {P} = \mathcal {P}(u, t)$. We make the observation

$\mathcal {P} = \mathcal {P}(u, t)$. We make the observation

To discretize the ![]() $u$ variable, we invoke a finite volume discretization by integrating with respect to

$u$ variable, we invoke a finite volume discretization by integrating with respect to ![]() $N = N'+1$ control volumes

$N = N'+1$ control volumes ![]() $\varOmega _m = [(2m - 1 - N') / \sqrt {N'},(2m + 1 - N') / \sqrt {N'}]$ for

$\varOmega _m = [(2m - 1 - N') / \sqrt {N'},(2m + 1 - N') / \sqrt {N'}]$ for ![]() $m = 0,\ldots, N'$, a procedure which is detailed in Appendix C.2. The result is

$m = 0,\ldots, N'$, a procedure which is detailed in Appendix C.2. The result is

where

and ![]() $\mathcal {Q}_{mm'} = ( -N' \delta _{mm'} + m' \delta _{(m+1)m'} + (N'-m')\delta _{(m-1)m'} )/2$ and

$\mathcal {Q}_{mm'} = ( -N' \delta _{mm'} + m' \delta _{(m+1)m'} + (N'-m')\delta _{(m-1)m'} )/2$ and ![]() $m = 0 ,\ldots, N'$. This approximation is the same as that used by Hagan et al. (Reference Hagan, Doering and Levermore1989) to represent the Ornstein–Uhlenbeck process by Markov jump processes, but now justified as a finite volume discretization. Observe that for

$m = 0 ,\ldots, N'$. This approximation is the same as that used by Hagan et al. (Reference Hagan, Doering and Levermore1989) to represent the Ornstein–Uhlenbeck process by Markov jump processes, but now justified as a finite volume discretization. Observe that for ![]() $N = 2$, we have a dichotomous velocity process

$N = 2$, we have a dichotomous velocity process

and for ![]() $N=3$, we have a generalization

$N=3$, we have a generalization

similar to the models of Davis et al. (Reference Davis, Flierl, Wiebe and Franks1991) and Ferrari, Manfroi & Young (Reference Ferrari, Manfroi and Young2001). The presence of an evolution equation for ![]() $\mathcal {P}_n$ accounts for statistically non-stationary states of the flow field. Alternative discretizations are possible. For example, using a spectral discretization for the

$\mathcal {P}_n$ accounts for statistically non-stationary states of the flow field. Alternative discretizations are possible. For example, using a spectral discretization for the ![]() $u$ variable via Hermite polynomials converges to the continuous system at a faster rate; however, the resulting discrete system no longer has an interpretation as a Markov jump process for three or more state variables.

$u$ variable via Hermite polynomials converges to the continuous system at a faster rate; however, the resulting discrete system no longer has an interpretation as a Markov jump process for three or more state variables.

Next, taking the Fourier transform in ![]() $x$ yields

$x$ yields

We solve for the steady-state solution

and then compute

\begin{equation} \kappa_E(k_n)

={-}\frac{\displaystyle\sum_m u_m \hat{\varTheta}_m^n}{\displaystyle \sum_m {\rm i}

k_n \hat{\varTheta}_m^n } \Rightarrow

-\frac{\displaystyle\int_{-\infty}^\infty \,{\rm d}u \hat{\varTheta}^n u

}{\displaystyle\int_{-\infty}^\infty \,{\rm d}u ({\rm i} k_n

\hat{\varTheta}^n) } \end{equation}

\begin{equation} \kappa_E(k_n)

={-}\frac{\displaystyle\sum_m u_m \hat{\varTheta}_m^n}{\displaystyle \sum_m {\rm i}

k_n \hat{\varTheta}_m^n } \Rightarrow

-\frac{\displaystyle\int_{-\infty}^\infty \,{\rm d}u \hat{\varTheta}^n u

}{\displaystyle\int_{-\infty}^\infty \,{\rm d}u ({\rm i} k_n

\hat{\varTheta}^n) } \end{equation}

for various choices of discrete states. The calculations in the numerator and denominator are equivalent to ensemble averages.

We compare the two methods of calculation in figure 1. We see that increasing the number of states from ![]() $N=2$ to

$N=2$ to ![]() $N=15$ yields, for each wavenumber, ensemble averages similar to those of the Ornstein–Uhlenbeck process. The explicit formula for

$N=15$ yields, for each wavenumber, ensemble averages similar to those of the Ornstein–Uhlenbeck process. The explicit formula for ![]() $N=3$ is given in the next section. We stop at

$N=3$ is given in the next section. We stop at ![]() $N = 15$ states since all wavenumbers in the plot are within 1 % of one another. The

$N = 15$ states since all wavenumbers in the plot are within 1 % of one another. The ![]() $n=0$ wavenumber is plotted for convenience as well and, in the case of the present process, also happens to correspond to the velocity autocorrelation. The correspondence of all velocity states (including the Ornstein–Uhlenbeck process) at wavenumber

$n=0$ wavenumber is plotted for convenience as well and, in the case of the present process, also happens to correspond to the velocity autocorrelation. The correspondence of all velocity states (including the Ornstein–Uhlenbeck process) at wavenumber ![]() $k_n=0$ is particular to this system and is not a feature that holds in general. The ‘large scale limit’, corresponding to wavenumber

$k_n=0$ is particular to this system and is not a feature that holds in general. The ‘large scale limit’, corresponding to wavenumber ![]() $k_n = 0$, can often be considered an appropriate local diffusivity definition.

$k_n = 0$, can often be considered an appropriate local diffusivity definition.

Figure 1. Wavenumber diffusivities. We show the turbulent diffusivity estimate as a function of wavenumber. Here we see that different wavenumbers, or equivalently length scales, produce different estimates of the turbulent diffusivity. Furthermore, we show that the different N-state approximations yield an increasingly better approximation to the Ornstein–Uhlenbeck empirical estimate.

Although we use the finite volume discretization of velocity states as approximations to the Ornstein–Uhlenbeck process, they also constitute a realizable stochastic process in the form of a Markov jump process. Thus, each finite state case can be simulated from whence the turbulent diffusivity estimate in figure 1 is exact rather than an approximation. We choose a particular example with ![]() $N=3$ in the following section.

$N=3$ in the following section.

Furthermore, each wavenumber ![]() $k_n$ yields a different estimate of the turbulent diffusivity. In particular, the decrease of turbulent diffusivity as a function of wavenumber implies non-locality. Choosing a forcing that is purely sinusoidal of a given mode would yield different estimates of turbulent fluxes. For example, forcing with

$k_n$ yields a different estimate of the turbulent diffusivity. In particular, the decrease of turbulent diffusivity as a function of wavenumber implies non-locality. Choosing a forcing that is purely sinusoidal of a given mode would yield different estimates of turbulent fluxes. For example, forcing with ![]() $s(x) = \sin (x)$ would yield a diffusivity estimate of

$s(x) = \sin (x)$ would yield a diffusivity estimate of ![]() $\kappa _T(1) \approx 0.58$, whereas forcing with

$\kappa _T(1) \approx 0.58$, whereas forcing with ![]() $s(x) = \sin (2x)$ would yield a diffusivity estimate of

$s(x) = \sin (2x)$ would yield a diffusivity estimate of ![]() $\kappa _T(2) \approx 0.34$, as implied by figure 1.

$\kappa _T(2) \approx 0.34$, as implied by figure 1.

In the present case, the inverse Fourier transform of the turbulent diffusivity yields the turbulent diffusivity operator in real space. Since products in wavenumber space are circular convolutions in real space, we naturally guarantee the translation invariance of the kernel for the present case. The turbulent diffusivity kernels are shown in figure 2. Thus, the equation for the ensemble mean is

where ![]() $*$ is a circular convolution with the kernel in figure 2.

$*$ is a circular convolution with the kernel in figure 2.

Figure 2. Kernels for N-state systems. Here we show the turbulent diffusivity kernels for several approximations of the Ornstein–Uhlenbeck diffusivity kernel. We compare all kernels to the ![]() $N = 15$ state kernel, which is a good approximation to the OU process kernel. We see that the width of the kernel is comparable to the domain. The non-locality of the kernel is a consequence of the turbulent diffusivity estimate differing on a wavenumber per wavenumber basis.

$N = 15$ state kernel, which is a good approximation to the OU process kernel. We see that the width of the kernel is comparable to the domain. The non-locality of the kernel is a consequence of the turbulent diffusivity estimate differing on a wavenumber per wavenumber basis.

To illustrate the action of a non-local operator, we show the implied flux for a gradient that is presumed to have the functional form

The flux computed from the convolution with the ![]() $N=15$ state kernel

$N=15$ state kernel

is shown in figure 3. Even though the gradient changes sign, we see that the flux remains purely negative, i.e. the flux is up-gradient at particular points of the domain. Furthermore, the figure 3(b) does not resemble a straight line through the origin, as would be the case for a local diffusivity. The red portion highlights the ‘up-gradient’ part of the flux-gradient relation. We point out these features to illustrate the limitations of a purely local flux-gradient relationship.

Figure 3. Flux prediction from the kernel. Given the ensemble mean gradient (green), we convolve with the ![]() $N=15$ state kernel to get the flux (yellow) in panel (a). We see that the gradient changes sign while the flux always remains negative. To further illustrate the effect of non-locality, we show flux versus gradient in panel (b). The blue regions are down-gradient and the red regions are up-gradient. A local diffusivity estimate would be a straight line through the origin, whose slope determines the diffusivity constant.

$N=15$ state kernel to get the flux (yellow) in panel (a). We see that the gradient changes sign while the flux always remains negative. To further illustrate the effect of non-locality, we show flux versus gradient in panel (b). The blue regions are down-gradient and the red regions are up-gradient. A local diffusivity estimate would be a straight line through the origin, whose slope determines the diffusivity constant.

This section focused on a flow field with a simple spatial and temporal structure. In the next section, we derive the turbulent diffusivity operator for a flow with arbitrary spatial structure but with a simple statistical temporal structure: a three-state velocity field whose amplitude is an approximation to the Ornstein–Uhlenbeck process.

3.2. A generalized three-state system with a 2-D example

For this example, we consider a Markov process that transitions between three incompressible states ![]() $\boldsymbol {u}_1(\boldsymbol {x}) = \boldsymbol {u}(\boldsymbol {x})$,

$\boldsymbol {u}_1(\boldsymbol {x}) = \boldsymbol {u}(\boldsymbol {x})$, ![]() $\boldsymbol {u}_2(\boldsymbol {x}) = 0$ and

$\boldsymbol {u}_2(\boldsymbol {x}) = 0$ and ![]() $\boldsymbol {u}_3(\boldsymbol {x}) = -\boldsymbol {u}(\boldsymbol {x})$. We use the three-state approximation to the Ornstein–Uhlenbeck process, in which case we let the generator be

$\boldsymbol {u}_3(\boldsymbol {x}) = -\boldsymbol {u}(\boldsymbol {x})$. We use the three-state approximation to the Ornstein–Uhlenbeck process, in which case we let the generator be

\begin{equation} \mathcal{Q} = \gamma \begin{bmatrix} -1 & 1/2 & 0 \\ 1 & -1 & 1 \\ 0 & 1/2 & -1 \end{bmatrix}, \end{equation}

\begin{equation} \mathcal{Q} = \gamma \begin{bmatrix} -1 & 1/2 & 0 \\ 1 & -1 & 1 \\ 0 & 1/2 & -1 \end{bmatrix}, \end{equation}

where ![]() $\gamma > 0$. This generator implies that the flow field stays in each state for an exponentially distributed amount of time, with each state's rate parameter

$\gamma > 0$. This generator implies that the flow field stays in each state for an exponentially distributed amount of time, with each state's rate parameter ![]() $\gamma$. Flow fields

$\gamma$. Flow fields ![]() $\boldsymbol {u}_1$ and

$\boldsymbol {u}_1$ and ![]() $\boldsymbol {u}_3$ always transition to state

$\boldsymbol {u}_3$ always transition to state ![]() $\boldsymbol {u}_2$ and

$\boldsymbol {u}_2$ and ![]() $\boldsymbol {u}_2$ transitions to either

$\boldsymbol {u}_2$ transitions to either ![]() $\boldsymbol {u}_1$ or

$\boldsymbol {u}_1$ or ![]() $\boldsymbol {u}_3$ with

$\boldsymbol {u}_3$ with ![]() $50\,\%$ probability. This stochastic process is a generalization of the model considered in the previous section and the class of problems considered by Ferrari et al. (Reference Ferrari, Manfroi and Young2001) since the flow field can have spatial structure and is not limited to one dimension.

$50\,\%$ probability. This stochastic process is a generalization of the model considered in the previous section and the class of problems considered by Ferrari et al. (Reference Ferrari, Manfroi and Young2001) since the flow field can have spatial structure and is not limited to one dimension.

The three-state system is viewed as a reduced-order statistical model for any flow field that randomly switches between clockwise or counterclockwise advection, such as the numerical and experimental flows of Sugiyama et al. (Reference Sugiyama, Ni, Stevens, Chan, Zhou, Xi, Sun, Grossmann, Xia and Lohse2010). In their work, the numerical computations of 2-D Rayleigh–Bénard convection in a rectangular domain with no-slip boundary conditions along all walls yielded large-scale convection rolls that flipped orientation at random time intervals upon particular choices of Rayleigh and Prandtl numbers. In addition, they showed that the phenomenology could be observed in a laboratory experiment with a setup similar to that of Xia, Sun & Zhou (Reference Xia, Sun and Zhou2003). In general, we expect reducing the statistics of a flow to a continuous-time Markov process with discrete states to be useful and lead to tractable analysis in fluid flows characterized by distinct well-separated flow regimes with seemingly random transitions between behaviours. Consequently, a similar construction is possible for more complex three-dimensional flow fields, such as those of Brown & Ahlers (Reference Brown and Ahlers2007), or geophysical applications (Zhang, Zhang & Tian Reference Zhang, Zhang and Tian2022).

Proceeding with calculations, we note that the eigenvectors of the generator are

\begin{equation} \boldsymbol{v}^1 = \begin{bmatrix} 1/4 \\ 1/2 \\ 1/4 \end{bmatrix},\quad \boldsymbol{v}^2 = \begin{bmatrix} 1/2 \\ 0 \\ -1/2 \end{bmatrix} \quad \text{and}\quad \boldsymbol{v}^3 = \begin{bmatrix} 1/4 \\ -1/2 \\ 1/4 \end{bmatrix} \end{equation}

\begin{equation} \boldsymbol{v}^1 = \begin{bmatrix} 1/4 \\ 1/2 \\ 1/4 \end{bmatrix},\quad \boldsymbol{v}^2 = \begin{bmatrix} 1/2 \\ 0 \\ -1/2 \end{bmatrix} \quad \text{and}\quad \boldsymbol{v}^3 = \begin{bmatrix} 1/4 \\ -1/2 \\ 1/4 \end{bmatrix} \end{equation}

with respective eigenvalues ![]() $\lambda ^1 = 0$,

$\lambda ^1 = 0$, ![]() $\lambda ^2 = - \gamma$ and

$\lambda ^2 = - \gamma$ and ![]() $\lambda ^3 = - 2 \gamma$.

$\lambda ^3 = - 2 \gamma$.

The statistically steady three-state manifestation of (2.13) and (2.14) is

We define a transformation using the eigenvectors of the generator ![]() $\mathcal {Q}$,

$\mathcal {Q}$,

\begin{equation} \begin{bmatrix} 1/4 & 1/2 & 1/4 \\ 1/2 & 0 & -1/2 \\ 1/4 & -1/2 & 1/4 \end{bmatrix} \begin{bmatrix} \varphi_1 \\ \varphi_2 \\ \varphi_3 \end{bmatrix} = \begin{bmatrix} \varTheta_1 \\ \varTheta_2 \\ \varTheta_3 \end{bmatrix} \Leftrightarrow \begin{bmatrix} \varphi_1 \\ \varphi_2 \\ \varphi_3 \end{bmatrix} = \begin{bmatrix} 1 & 1 & 1 \\ 1 & 0 & -1 \\ 1 & -1 & 1 \end{bmatrix} \begin{bmatrix} \varTheta_1 \\ \varTheta_2 \\ \varTheta_3 \end{bmatrix} \end{equation}

\begin{equation} \begin{bmatrix} 1/4 & 1/2 & 1/4 \\ 1/2 & 0 & -1/2 \\ 1/4 & -1/2 & 1/4 \end{bmatrix} \begin{bmatrix} \varphi_1 \\ \varphi_2 \\ \varphi_3 \end{bmatrix} = \begin{bmatrix} \varTheta_1 \\ \varTheta_2 \\ \varTheta_3 \end{bmatrix} \Leftrightarrow \begin{bmatrix} \varphi_1 \\ \varphi_2 \\ \varphi_3 \end{bmatrix} = \begin{bmatrix} 1 & 1 & 1 \\ 1 & 0 & -1 \\ 1 & -1 & 1 \end{bmatrix} \begin{bmatrix} \varTheta_1 \\ \varTheta_2 \\ \varTheta_3 \end{bmatrix} \end{equation}resulting in the equations

We comment that ![]() $\varphi _1 = \langle \theta \rangle$, and that

$\varphi _1 = \langle \theta \rangle$, and that ![]() $\varphi _2$ and

$\varphi _2$ and ![]() $\varphi _3$ are thought of as perturbation variables. Furthermore, the turbulent flux is

$\varphi _3$ are thought of as perturbation variables. Furthermore, the turbulent flux is ![]() $\langle \boldsymbol {\tilde {u}}' \theta ' \rangle = \boldsymbol {u} \varphi _2$. We eliminate dependence on the perturbation variables

$\langle \boldsymbol {\tilde {u}}' \theta ' \rangle = \boldsymbol {u} \varphi _2$. We eliminate dependence on the perturbation variables ![]() $\varphi _2$ and

$\varphi _2$ and ![]() $\varphi _3$ by first solving for

$\varphi _3$ by first solving for ![]() $\varphi _3$ in terms of

$\varphi _3$ in terms of ![]() $\varphi _2$,

$\varphi _2$,

and then solving for ![]() $\varphi _2$ in terms of

$\varphi _2$ in terms of ![]() $\varphi _1$,

$\varphi _1$,

And finally, we write our equation for the ensemble mean as

from whence we extract the turbulent diffusivity operator

We point out a few salient features of (3.42).

For large ![]() $\kappa$, we have

$\kappa$, we have

The inverse Helmholtz operator, ![]() $(\gamma - \kappa \Delta )^{-1}$, damps high spatial frequency components of ensemble mean gradients. Thus, the operator's eigenvalues decrease as one examines increasingly fine-scale structure. Intuitively, as one examines a small-scale structure, the presence of diffusivity leads to lower turbulent fluxes, expressing that it is challenging to transport something that immediately diffuses.

$(\gamma - \kappa \Delta )^{-1}$, damps high spatial frequency components of ensemble mean gradients. Thus, the operator's eigenvalues decrease as one examines increasingly fine-scale structure. Intuitively, as one examines a small-scale structure, the presence of diffusivity leads to lower turbulent fluxes, expressing that it is challenging to transport something that immediately diffuses.

A second observation pertains to the presence of the eigenvalue of the generator in the operator. If the flow field changes rapidly, transitioning between the disparate states, then ![]() $\gamma$ is large and one can expect the turbulent diffusivity to be local. In other words, the flow does not stay sufficiently long time near a coherent structure. More concretely, larger transition rates correspond to an enhanced locality in the turbulent diffusivity operator since, as

$\gamma$ is large and one can expect the turbulent diffusivity to be local. In other words, the flow does not stay sufficiently long time near a coherent structure. More concretely, larger transition rates correspond to an enhanced locality in the turbulent diffusivity operator since, as ![]() $\gamma \rightarrow \infty$,

$\gamma \rightarrow \infty$,

Observe that (3.44) is the integrated autocorrelation of the stochastic flow field. Whether or not non-local effects matter depends on the characteristic time scale of the flow field, ![]() $L U^{-1}$ as well as the characteristic time scale for diffusion

$L U^{-1}$ as well as the characteristic time scale for diffusion ![]() $L^2 / \kappa$, as it compares to the characteristic time scale for transitioning between states,

$L^2 / \kappa$, as it compares to the characteristic time scale for transitioning between states, ![]() $\gamma ^{-1}$.

$\gamma ^{-1}$.

We see that the ![]() $\kappa \rightarrow 0$ limit retains a non-local feature since

$\kappa \rightarrow 0$ limit retains a non-local feature since

and the operator ![]() $(\boldsymbol {u}\boldsymbol {\cdot } \boldsymbol {\nabla })(\boldsymbol {u} \boldsymbol {\cdot } \boldsymbol {\nabla })$ can have a significant spatial structure. To further understand this point, we simplify by using the example from the previous section. Using the 1-D example in Fourier space and using the usual transcriptions

$(\boldsymbol {u}\boldsymbol {\cdot } \boldsymbol {\nabla })(\boldsymbol {u} \boldsymbol {\cdot } \boldsymbol {\nabla })$ can have a significant spatial structure. To further understand this point, we simplify by using the example from the previous section. Using the 1-D example in Fourier space and using the usual transcriptions ![]() $\Delta \rightarrow -k^2$,

$\Delta \rightarrow -k^2$, ![]() $\boldsymbol {\nabla } \rightarrow \iota k$, and with

$\boldsymbol {\nabla } \rightarrow \iota k$, and with ![]() $u > 0$, the kernel is

$u > 0$, the kernel is

\begin{align} \dfrac{u^2}{2(\gamma + \kappa k^2) + \dfrac{u^2 k^2}{(\kappa k^2 + 2 \gamma)}} \rightarrow u \dfrac{ \dfrac{2 \gamma}{u} }{ \dfrac{4\gamma^2}{u^2} + k^2} \Rightarrow \mathcal{K}(x, x') = \dfrac{u}{2} \exp \left( -\dfrac{2 \gamma}{u} \left| x - x' \right| \right), \end{align}

\begin{align} \dfrac{u^2}{2(\gamma + \kappa k^2) + \dfrac{u^2 k^2}{(\kappa k^2 + 2 \gamma)}} \rightarrow u \dfrac{ \dfrac{2 \gamma}{u} }{ \dfrac{4\gamma^2}{u^2} + k^2} \Rightarrow \mathcal{K}(x, x') = \dfrac{u}{2} \exp \left( -\dfrac{2 \gamma}{u} \left| x - x' \right| \right), \end{align}

where in the last line, we used the inverse Fourier transform on the real line to simplify the structure of the kernel. (Accounting for finite domain effects yields the Fourier series of a hyperbolic cosine.) The left-most expression corresponds to the blue dots in figure 1 for ![]() $N=3$,

$N=3$, ![]() $u = \sqrt {2}$,

$u = \sqrt {2}$, ![]() $\gamma = 1$ and

$\gamma = 1$ and ![]() $\kappa = 0.01$ for each wavenumber

$\kappa = 0.01$ for each wavenumber ![]() $k \in \{0, 1, 2,\ldots, 7 \}$. In the right-most expression, we see the mean free path

$k \in \{0, 1, 2,\ldots, 7 \}$. In the right-most expression, we see the mean free path ![]() $\ell \equiv u / (2\gamma )$ as the characteristic width of the non-local kernel. Observe that by rescaling

$\ell \equiv u / (2\gamma )$ as the characteristic width of the non-local kernel. Observe that by rescaling ![]() $u \rightarrow \sqrt {2 \gamma } u$ and taking the limit as

$u \rightarrow \sqrt {2 \gamma } u$ and taking the limit as ![]() $\gamma \rightarrow \infty$ yields a delta function, a point that we come back to in § 4.3 and Appendix A. Furthermore, the weak velocity amplitude limit

$\gamma \rightarrow \infty$ yields a delta function, a point that we come back to in § 4.3 and Appendix A. Furthermore, the weak velocity amplitude limit ![]() $u \rightarrow 0$ is also local.

$u \rightarrow 0$ is also local.

To further explicate the conditional average procedure, we compare empirical conditional averages of (2.10) and (2.11) (specialized to the present system) to the direct conditionally averaged equations (3.36)–(3.38). Concretely, we use the flow field and source term

in a ![]() $2 {\rm \pi}$ periodic domain and

$2 {\rm \pi}$ periodic domain and ![]() $\gamma = 1$ for the transition rate. We employ a 2-D pseudo-spectral Fourier discretization with

$\gamma = 1$ for the transition rate. We employ a 2-D pseudo-spectral Fourier discretization with ![]() $48 \times 48$ grid points and evolve both systems to a time

$48 \times 48$ grid points and evolve both systems to a time ![]() $t=25$, which suffices for the flow and tracer to ‘forget’ its initial condition and reach a statistically steady state. For the stochastic differential equation, we use 10 000 ensemble members. We emphasize that the turbulent diffusivity operator is independent of the source term, and we make a choice for illustrative purposes.

$t=25$, which suffices for the flow and tracer to ‘forget’ its initial condition and reach a statistically steady state. For the stochastic differential equation, we use 10 000 ensemble members. We emphasize that the turbulent diffusivity operator is independent of the source term, and we make a choice for illustrative purposes.

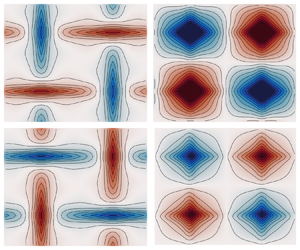

Figure 4 summarizes the result of comparing two different calculation methods. To compute the conditional averages, for example, ![]() $\varTheta _1$ at time

$\varTheta _1$ at time ![]() $t=25$, we proceed as follows. At the time

$t=25$, we proceed as follows. At the time ![]() $t=25$, we tag all fields that are currently being advected by

$t=25$, we tag all fields that are currently being advected by ![]() $\boldsymbol {u}_1$, add them up and then divide by the number of ensemble members, which in the present case is 10 000. This sequence of calculations yields

$\boldsymbol {u}_1$, add them up and then divide by the number of ensemble members, which in the present case is 10 000. This sequence of calculations yields ![]() $\varTheta _1$, shown in figure 4(a). The conditional means

$\varTheta _1$, shown in figure 4(a). The conditional means ![]() $\varTheta _2$ and

$\varTheta _2$ and ![]() $\varTheta _3$ are obtained similarly. We then calculate the conditional mean equations directly in the bottom row. All fields are plotted with respect to the same colour scale. For convenience, we also show the ensemble average, which here is

$\varTheta _3$ are obtained similarly. We then calculate the conditional mean equations directly in the bottom row. All fields are plotted with respect to the same colour scale. For convenience, we also show the ensemble average, which here is ![]() $\langle \theta \rangle = \varphi _1 = \varTheta _1 + \varTheta _2 + \varTheta _3$. The empirical averages have a slight asymmetry due to finite sampling effects. For reference, the source and stream function of the flow field are shown in the last column of the figure.

$\langle \theta \rangle = \varphi _1 = \varTheta _1 + \varTheta _2 + \varTheta _3$. The empirical averages have a slight asymmetry due to finite sampling effects. For reference, the source and stream function of the flow field are shown in the last column of the figure.

Figure 4. Three-state system. Here, we show the stream function, source term, conditional averages and the ensemble mean obtained using two different calculation methods. The first calculation method uses an empirical average with 10 000 ensemble members, and the second method uses the equations from the text. Both are shown at a final time of ![]() $T = 25$ time units where the statistical steady state has been reached.

$T = 25$ time units where the statistical steady state has been reached.

When the tracers are being advected by flow field ![]() $\boldsymbol {u}_1$, we see that the red in the stream function corresponds to a counterclockwise flow and the blue to a clockwise flow. The negative source concentration corresponding to the top left and bottom right parts of the domain are then transported vertically, whereas the positive source, located in the top right and bottom left of the domain, is transported horizontally. When advected by flow field

$\boldsymbol {u}_1$, we see that the red in the stream function corresponds to a counterclockwise flow and the blue to a clockwise flow. The negative source concentration corresponding to the top left and bottom right parts of the domain are then transported vertically, whereas the positive source, located in the top right and bottom left of the domain, is transported horizontally. When advected by flow field ![]() $\boldsymbol {u}_3$, the clockwise and counterclockwise roles reverse, and positive tracer sources are transported vertically and negative tracer sources are transported horizontally. When advected by

$\boldsymbol {u}_3$, the clockwise and counterclockwise roles reverse, and positive tracer sources are transported vertically and negative tracer sources are transported horizontally. When advected by ![]() $\boldsymbol {u}_2 = 0$, the conditional averages reflect the source term closely.

$\boldsymbol {u}_2 = 0$, the conditional averages reflect the source term closely.

Now that we have presented two examples, in the next section, we generalize by expressing the turbulent diffusivity operator in terms of the spectrum of the generator.

4. General approach

The previous example had the following pattern:

(i) compute the eigenvectors of the generator

$\mathcal {Q}$;

$\mathcal {Q}$;(ii) transform the equations into a basis that diagonalizes

$\mathcal {Q}$;

$\mathcal {Q}$;(iii) separate the mean equation from the perturbation equations;

(iv) solve for the perturbation variables in terms of the mean variable.

Here we aim to gather the above procedure in the general case where we have access to the eigenvectors of ![]() $\mathcal {Q}$. Furthermore, in the last example, we claimed that the local turbulent diffusivity approximation, as calculated by neglecting the effects of diffusion and perturbation gradients, is equivalent to calculating the integrated auto-correlation of the Markov process. We justify that claim in § 4.3.

$\mathcal {Q}$. Furthermore, in the last example, we claimed that the local turbulent diffusivity approximation, as calculated by neglecting the effects of diffusion and perturbation gradients, is equivalent to calculating the integrated auto-correlation of the Markov process. We justify that claim in § 4.3.

4.1. Notation

Let us establish a notation for the general procedure. We again let ![]() $\mathcal {Q}$ denote the generator with corresponding transition probability matrix

$\mathcal {Q}$ denote the generator with corresponding transition probability matrix ![]() $\mathscr {P}(\tau )$ given by the matrix exponential

$\mathscr {P}(\tau )$ given by the matrix exponential

The entries of the matrix ![]() $[\mathscr {P}(\tau )]_{mn}$ denote the transition probability of state

$[\mathscr {P}(\tau )]_{mn}$ denote the transition probability of state ![]() $n$ to the state

$n$ to the state ![]() $m$. In each column of the transition matrix, the sum of the entries is one. We assume a unique zero eigenvalue for

$m$. In each column of the transition matrix, the sum of the entries is one. We assume a unique zero eigenvalue for ![]() $\mathcal {Q}$ with all other eigenvalues negative. We also assume that the eigenvalues can be ordered in such a way that they are decreasing, i.e.

$\mathcal {Q}$ with all other eigenvalues negative. We also assume that the eigenvalues can be ordered in such a way that they are decreasing, i.e. ![]() $\lambda _1 = 0$,

$\lambda _1 = 0$, ![]() $\lambda _2 < 0$ and

$\lambda _2 < 0$ and ![]() $\lambda _i \leq \lambda _j$ for

$\lambda _i \leq \lambda _j$ for ![]() $i > j$ with

$i > j$ with ![]() $j \geq 2$. These choices result in a unique statistical steady state which we denote by the vector

$j \geq 2$. These choices result in a unique statistical steady state which we denote by the vector ![]() $\boldsymbol {v}_1$ with the property

$\boldsymbol {v}_1$ with the property

and similarly for the left eigenvector, ![]() $\boldsymbol {w}_1$. We denote the entries of

$\boldsymbol {w}_1$. We denote the entries of ![]() $\boldsymbol {v}_1$ and

$\boldsymbol {v}_1$ and ![]() $\boldsymbol {w}_1$ by column vectors

$\boldsymbol {w}_1$ by column vectors

\begin{equation} \boldsymbol{v}_1 = \begin{bmatrix} P_1 \\ P_2 \\ \vdots \\ P_M \end{bmatrix} \quad \text{and}\quad \boldsymbol{w}_1 = \begin{bmatrix} 1 \\ 1 \\ \vdots \\ 1 \end{bmatrix}, \end{equation}

\begin{equation} \boldsymbol{v}_1 = \begin{bmatrix} P_1 \\ P_2 \\ \vdots \\ P_M \end{bmatrix} \quad \text{and}\quad \boldsymbol{w}_1 = \begin{bmatrix} 1 \\ 1 \\ \vdots \\ 1 \end{bmatrix}, \end{equation}

where ![]() $M$ is the number of states. We assume that the eigenvector

$M$ is the number of states. We assume that the eigenvector ![]() $\boldsymbol {v}_1$ is normalized such that

$\boldsymbol {v}_1$ is normalized such that ![]() $\sum _m P_m = 1$. Consequently,

$\sum _m P_m = 1$. Consequently, ![]() $\boldsymbol {w}_1 \boldsymbol {\cdot } \boldsymbol {v}_1 = \boldsymbol {w}_1^{\rm T} \boldsymbol {v}_1 = 1$. We introduce unit vectors

$\boldsymbol {w}_1 \boldsymbol {\cdot } \boldsymbol {v}_1 = \boldsymbol {w}_1^{\rm T} \boldsymbol {v}_1 = 1$. We introduce unit vectors ![]() $\hat {\boldsymbol {e}}_m$ whose

$\hat {\boldsymbol {e}}_m$ whose ![]() $m$th entry is zero and all other entries are zero, e.g.

$m$th entry is zero and all other entries are zero, e.g.

\begin{equation} \hat{\boldsymbol{e}}_1 = \begin{bmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{bmatrix} ,\quad \hat{\boldsymbol{e}}_2 = \begin{bmatrix} 0 \\ 1 \\ \vdots \\ 0 \end{bmatrix} \quad \text{and}\quad \hat{\boldsymbol{e}}_M = \begin{bmatrix} 0 \\ 0 \\ \vdots \\ 1 \end{bmatrix} . \end{equation}

\begin{equation} \hat{\boldsymbol{e}}_1 = \begin{bmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{bmatrix} ,\quad \hat{\boldsymbol{e}}_2 = \begin{bmatrix} 0 \\ 1 \\ \vdots \\ 0 \end{bmatrix} \quad \text{and}\quad \hat{\boldsymbol{e}}_M = \begin{bmatrix} 0 \\ 0 \\ \vdots \\ 1 \end{bmatrix} . \end{equation}

Thus, ![]() $\boldsymbol {v}_1 = \sum _m P_m \boldsymbol {\hat {e}}_m$,

$\boldsymbol {v}_1 = \sum _m P_m \boldsymbol {\hat {e}}_m$, ![]() $\boldsymbol {w}_1 = \boldsymbol {\hat {e}}_m$. Furthermore,

$\boldsymbol {w}_1 = \boldsymbol {\hat {e}}_m$. Furthermore, ![]() $\boldsymbol {\hat {e}}_m \boldsymbol {\cdot } \boldsymbol {v}_1 = P_m$ and

$\boldsymbol {\hat {e}}_m \boldsymbol {\cdot } \boldsymbol {v}_1 = P_m$ and ![]() $\boldsymbol {\hat {e}}_m \boldsymbol {\cdot } \boldsymbol {w}_1 = 1$ for each

$\boldsymbol {\hat {e}}_m \boldsymbol {\cdot } \boldsymbol {w}_1 = 1$ for each ![]() $m$.

$m$.

For the discussion that follows, we will assume that the matrix ![]() $\mathcal {Q}$ has an eigenvalue decomposition. In general, we denote the right eigenvectors of

$\mathcal {Q}$ has an eigenvalue decomposition. In general, we denote the right eigenvectors of ![]() $\mathcal {Q}$ by

$\mathcal {Q}$ by ![]() $\boldsymbol {v}_i$ for

$\boldsymbol {v}_i$ for ![]() $i = 1, \ldots, M$ and the left eigenvectors by

$i = 1, \ldots, M$ and the left eigenvectors by ![]() $\boldsymbol {w}_i$ for

$\boldsymbol {w}_i$ for ![]() $i = 1,\ldots, M$. These vectors are all associated with eigenvalues

$i = 1,\ldots, M$. These vectors are all associated with eigenvalues ![]() $\lambda _i$ for

$\lambda _i$ for ![]() $i=1,\ldots, M$, where

$i=1,\ldots, M$, where ![]() $i=1$ denotes the unique eigenvalue

$i=1$ denotes the unique eigenvalue ![]() $\lambda _1 = 0$. We recall that the left eigenvectors can be constructed from the right eigenvectors by placing the right eigenvectors in the columns of a matrix

$\lambda _1 = 0$. We recall that the left eigenvectors can be constructed from the right eigenvectors by placing the right eigenvectors in the columns of a matrix ![]() $V$, computing the inverse

$V$, computing the inverse ![]() $V^{-1}$ and extracting the rows of the inverse. The aforementioned procedure guarantees the normalization

$V^{-1}$ and extracting the rows of the inverse. The aforementioned procedure guarantees the normalization ![]() $\boldsymbol {w}_j \boldsymbol {\cdot } \boldsymbol {v}_i = \boldsymbol {w}_i^{\rm T} \boldsymbol {v}_j = \delta _{ij}$. Thus, we have the relations

$\boldsymbol {w}_j \boldsymbol {\cdot } \boldsymbol {v}_i = \boldsymbol {w}_i^{\rm T} \boldsymbol {v}_j = \delta _{ij}$. Thus, we have the relations

With notation now in place, we observe that the operators ![]() $\mathcal {Q}$ and

$\mathcal {Q}$ and ![]() $\mathscr {P}(\tau )$ are characterized by their spectral decomposition

$\mathscr {P}(\tau )$ are characterized by their spectral decomposition

We now introduce our Markov states as steady vector fields. The use of several vector spaces imposes a burden on notation: the vector spaces associated with Markov states, ensemble members and the vector field ![]() $\boldsymbol {u}$. Instead of using overly decorated notation with an excessive number of indices, we introduce the convention that

$\boldsymbol {u}$. Instead of using overly decorated notation with an excessive number of indices, we introduce the convention that ![]() $\boldsymbol {u}$ will always belong to the vector space associated with the vector field, and all other vectors are associated with the vector space of Markov states. For example, if we have two flow states

$\boldsymbol {u}$ will always belong to the vector space associated with the vector field, and all other vectors are associated with the vector space of Markov states. For example, if we have two flow states ![]() $\boldsymbol {u}_1$ and

$\boldsymbol {u}_1$ and ![]() $\boldsymbol {u}_2$, then

$\boldsymbol {u}_2$, then

4.2. Spectral representation

With this notation now in place, the statistically steady equations

are represented as the matrix system

\begin{equation} \sum_{m} \hat{\boldsymbol{e}}_m \boldsymbol{\nabla} \boldsymbol{\cdot} \left( \boldsymbol{u}_m \varTheta_m \right)= \sum_{m} \hat{\boldsymbol{e}}_m \kappa \Delta \varTheta_m + s \boldsymbol{v}_1 + \mathcal{Q} \left(\sum_{m} \hat{\boldsymbol{e}}_m \varTheta_m \right), \end{equation}

\begin{equation} \sum_{m} \hat{\boldsymbol{e}}_m \boldsymbol{\nabla} \boldsymbol{\cdot} \left( \boldsymbol{u}_m \varTheta_m \right)= \sum_{m} \hat{\boldsymbol{e}}_m \kappa \Delta \varTheta_m + s \boldsymbol{v}_1 + \mathcal{Q} \left(\sum_{m} \hat{\boldsymbol{e}}_m \varTheta_m \right), \end{equation}

where we made use of ![]() $\sum _m \boldsymbol {\hat {e}}_m P_m = \boldsymbol {v}_1$. We now re-express (4.10) in terms of a basis that uses the eigenvectors of the transition matrix. Define components

$\sum _m \boldsymbol {\hat {e}}_m P_m = \boldsymbol {v}_1$. We now re-express (4.10) in terms of a basis that uses the eigenvectors of the transition matrix. Define components ![]() $\varphi _n$ by the change of basis formula

$\varphi _n$ by the change of basis formula