I. Introduction

Artificial Intelligence (AI) is a discipline concerned with building software systems whose functions require what is typically called intelligence. The corresponding tasks can be performed by pure software agents as well as by physical systems, such as robots or self-driving cars.

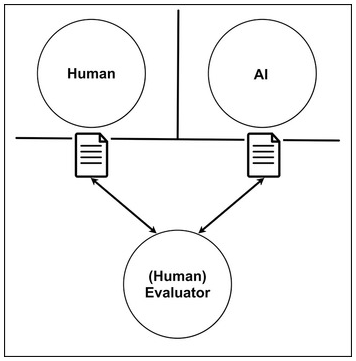

As the term ‘intelligence’ is itself very difficult to define, AI is correspondingly difficult to define, and numerous definitions can be found in the literature.Footnote 1 Several of them are based on human behavior or thinking. A prominent example is the Turing test,Footnote 2 introduced by Alan Turing in 1950, in which the actions generated by the system or robot should not be distinguishable from those generated by humans. For a system interacting with humans, passing such a test would mean, for example, that a human could no longer determine whether a conversation partner on the telephone is a human or software.

However, most current AI systems aim to generate agents that think or act rationally. To realize systems that think rationally, often logic-based representations and reasoning systems are used. The basic assumption here is that rational thinking entails rational action if the reasoning mechanisms used are correct. Another group of definitional approaches deals with the direct generation of rational actions. In such systems, the underlying representations often are not human-readable or easily understood by humans. They often use a goal function that describes the usefulness of states. The task of the system is then to maximize this objective function, that is, to determine the state that has the maximum usefulness or that, in case of uncertainties, maximizes the future expected reward. If, for example, one chooses the cleanliness of the work surface minus the costs for the executed actions as the objective function for a cleaning robot, then in the ideal case this leads to the robot selecting the optimal actions in order to keep the work surface as clean as possible. This already shows the strength of the approach to generate rational behavior compared to the approach to generate human behavior. A robot striving for rational behavior can simply become more effective than one that merely imitates human behavior, because humans, unfortunately, do not show the optimal behavior in all cases. The disadvantage lies in the fact that the interpretation of the representations or structures learned by the system typically is not easy, which makes verification difficult. Especially in the case of safety-relevant systems, it is often necessary to provide evidence of the safety of, for example, the control software. However, this can be very difficult and generally even impossible to do analytically, so one has to rely on statistics. 
In the case of self-driving cars, for example, one has to resort to extensive field tests in order to be able to prove the required safety of the systems.
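The idea of choosing actions by maximizing an objective function can be illustrated with a minimal sketch. It assumes a hypothetical cleaning robot with three actions; the action names, costs, probabilities, and cleanliness values are invented for illustration and do not come from a real system. The objective is the expected cleanliness of the work surface minus the cost of the executed action.

```python
# Hypothetical actions for a cleaning robot (all numbers are made up).
# Each action has a cost and a list of possible outcomes, given as
# (probability, resulting cleanliness on a scale from 0 to 1).
ACTIONS = {
    "wait":       {"cost": 0.0, "outcomes": [(1.0, 0.40)]},
    "quick_wipe": {"cost": 0.1, "outcomes": [(0.8, 0.70), (0.2, 0.40)]},
    "deep_clean": {"cost": 0.5, "outcomes": [(0.9, 0.95), (0.1, 0.60)]},
}


def expected_utility(action):
    """Expected cleanliness after the action minus the cost of executing it."""
    spec = ACTIONS[action]
    expected_cleanliness = sum(p * c for p, c in spec["outcomes"])
    return expected_cleanliness - spec["cost"]


def best_action():
    """Return the action with the highest expected utility."""
    return max(ACTIONS, key=expected_utility)


if __name__ == "__main__":
    for name in ACTIONS:
        print(name, round(expected_utility(name), 3))
    print("chosen:", best_action())
```

With these assumed numbers the thorough action is not the rational choice, because its cost outweighs the additional cleanliness. A real system would typically also consider sequences of actions and future rewards rather than a single step.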

Historically, the term AI dates back to 1956, when at a summer workshop called the Dartmouth Summer Research Project on Artificial Intelligence,Footnote 3 renowned scientists met in the state of New Hampshire, USA, to discuss AI. The basic idea was that any aspect of learning or other properties of intelligence can be described so precisely that machines can be used to simulate them. In addition, the participants wanted to discuss how to get computers to use language and abstract concepts, or simply improve their own behavior. This meeting is still considered today to have been extremely successful and has led to a large number of activities in the field of AI. For example, in the 1980s, there was a remarkable upswing in AI in which questions of knowledge representation and knowledge processing played an important role. In this context, for example, expert systems became popular.Footnote 4 Such systems used a large corpus of knowledge, represented for example in terms of facts and rules, to draw conclusions and provide solutions to problems. Although there were initially quite promising successes with expert systems, these successes then waned quite a bit, leading to a so-called demystification of AI and ushering in the AI winter.Footnote 5 It was not until the 1990s that mathematical and probabilistic methods increasingly took hold and a new upswing could be recorded. Prominent representatives of this group of methods are Bayesian networks.Footnote 6 The systems resulting from this technique were significantly more robust than those based on symbolic techniques. This period also saw the advent of machine learning techniques based on probabilistic and mathematical concepts. For example, support vector machinesFootnote 7 revolutionized machine learning. Until a few years ago, they were considered one of the best performing approaches to classification problems. Their influence spread to other areas, such as pattern recognition and image processing. 
Face recognition and also speech recognition algorithms found their way into products we use in our daily lives, such as cameras or even cell phones. Cameras can automatically recognize faces and cell phones can be controlled by speech. These methods have been applied in automobiles, for example when components can be controlled by speech. However, there are also fundamental results from the early days of AI that have a substantial influence on today’s products. These include, for example, the ability of navigation systems to plan the shortest possible routesFootnote 8 and navigate us effectively to our destination based on given maps. Incidentally, the same approaches play a significant role in computer games, especially when it comes to simulating intelligent systems that can effectively navigate the virtual environment. At the same time, there was also a paradigm shift in robotics. The probabilistic methods had a significant impact, especially on the navigation of mobile robots, and today, thanks to this development, it is well understood how to build mobile systems that move autonomously in their environment. This currently has an important influence on various areas, such as self-driving cars or transport systems in logistics, where extensive innovations can be expected in the coming years.

For a few years now, the areas of machine learning and robotics have been considered particularly promising, based especially on the key fields of big data, deep learning, and autonomous navigation and manipulation.

II. Machine Learning

Machine learning typically involves developing algorithms to improve the performance of procedures based on data or examples and without explicit programming.Footnote 9 One of the predominant applications of machine learning is classification. Here the system is presented with a set of examples and their corresponding classes. The system must learn a function that maps the properties or attributes of the examples to the classes with the goal of minimizing the classification error. Of course, one could simply memorize all the examples, which would automatically minimize the classification error on them, but such a procedure would require a lot of space and, moreover, would not generalize to examples not seen before. For unseen examples, such an approach can in principle only guess. The goal of machine learning is rather to learn a compact function that performs well on the given data and also generalizes well to unseen examples. In the context of classification, popular methods include decision trees, random forests (a generalization of decision trees), support vector machines, and boosting. These approaches are considered supervised learning because the learner is always given examples including their classes.
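The contrast between memorizing examples and learning a compact function can be made concrete with a small sketch. It learns a decision stump (a decision tree of depth one) on a single numeric feature by minimizing the training classification error and compares it with a lookup table. The toy data and the function names `train_stump` and `predict` are illustrative assumptions, not part of any particular library.

```python
def train_stump(examples):
    """Learn a one-feature threshold rule (a decision tree of depth one).

    examples: list of (x, label) pairs with numeric x and label in {0, 1}.
    Assumes at least two distinct feature values.
    Returns (threshold, label_above) with minimal training error.
    """
    xs = sorted({x for x, _ in examples})
    # Candidate thresholds: midpoints between neighbouring distinct values.
    candidates = [(a + b) / 2 for a, b in zip(xs, xs[1:])]
    best = None
    for t in candidates:
        for label_above in (0, 1):
            errors = sum(
                (label_above if x > t else 1 - label_above) != y
                for x, y in examples
            )
            if best is None or errors < best[0]:
                best = (errors, t, label_above)
    _, threshold, label_above = best
    return threshold, label_above


def predict(stump, x):
    """Classify x with a learned stump."""
    threshold, label_above = stump
    return label_above if x > threshold else 1 - label_above


# Toy training data (made up): one feature value and its class.
TRAIN = [(1.0, 0), (2.0, 0), (3.0, 0), (6.0, 1), (7.0, 1), (8.0, 1)]

stump = train_stump(TRAIN)   # compact function: one threshold, one label
memorized = dict(TRAIN)      # lookup table: one entry per example

if __name__ == "__main__":
    print("stump:", stump)
    print("stump on unseen 5.0:", predict(stump, 5.0))
    print("lookup on unseen 5.0:", memorized.get(5.0))  # no answer available
```

Both approaches classify the training examples without error, but only the stump returns an answer for a value it has never seen. Practical learners such as decision trees or random forests extend this idea to many features and many thresholds.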

Another popular supervised learning problem is regression. Here, the system is given a set of points of a function with the task of determining a function that approximates the given points as well as possible. Again, one is interested in functions that are as compact as possible and minimize the approximation error. In addition, there is also unsupervised learning, where one searches for a function that explains the given data as well as possible. A typical unsupervised learning problem is clustering, where one seeks centers for a set of points in the plane such that the sum of the squared distances of all points from their nearest center is minimized.
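The clustering objective described above, the sum of squared distances of all points from their nearest center, can be sketched with Lloyd's algorithm, the classic k-means heuristic. This is a minimal illustration under stated assumptions: the points and initial centers are invented, and the algorithm is only guaranteed to reach a local minimum of the objective, not the global one.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two points in the plane."""
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2


def cost(points, centers):
    """Sum of squared distances of all points to their nearest center."""
    return sum(min(sq_dist(p, c) for c in centers) for p in points)


def kmeans(points, centers, iterations=10):
    """Lloyd's algorithm for 2D points; `centers` is the initial guess."""
    centers = list(centers)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest center.
        clusters = [[] for _ in centers]
        for p in points:
            nearest = min(range(len(centers)),
                          key=lambda i: sq_dist(p, centers[i]))
            clusters[nearest].append(p)
        # Update step: move each center to the mean of its points.
        for i, cluster in enumerate(clusters):
            if cluster:  # keep the old center if no point was assigned
                centers[i] = (
                    sum(x for x, _ in cluster) / len(cluster),
                    sum(y for _, y in cluster) / len(cluster),
                )
    return centers


# Toy data (made up): two well-separated groups of four points each.
POINTS = [(0, 0), (0, 2), (2, 0), (2, 2),
          (10, 10), (10, 12), (12, 10), (12, 12)]
INITIAL = [(0, 0), (12, 12)]

if __name__ == "__main__":
    final = kmeans(POINTS, INITIAL)
    print("centers:", final)
    print("cost before:", cost(POINTS, INITIAL), "after:", cost(POINTS, final))
```

No labels are given to the algorithm, which is what makes the problem unsupervised. The result depends on the initial centers, so in practice the procedure is often repeated with several initializations.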

Supervised learning problems occur very frequently in practice. For example, consider the face classification problem. Here, for a face found in an image, the problem is to assign the name of the person. Such data is available in large masses to companies that provide social networks, such as Facebook. Users can not only mark faces on Facebook but also assign the names of their friends to these marked faces. In this way, a huge data set of images is created in which faces are marked and labelled. With this, supervised learning can now be used to (a) identify faces in images and (b) assign the identified faces to people. Because the classifiers generalize well, they can subsequently be applied to faces that have not been seen before, and nowadays they produce surprisingly good results.

In fact, the acquisition of large corpora of annotated data is one of the main problems in the context of big data and deep learning. Major internet companies are making large-scale efforts to obtain massive corpora of annotated data. So-called CAPTCHAs (Completely Automated Public Turing tests to tell Computers and Humans Apart) represent an example of this.Footnote 10 Almost everyone who has tried to create a user account on the Internet has encountered such CAPTCHAs. Typically, service providers want to ensure that user accounts are not registered en masse by computer programs. Therefore, the applicants are provided with images of distorted text that can hardly be recognized by scanners and optical character recognition. Because the images are now difficult to recognize by programs, they are ideal for distinguishing humans from computer programs or bots. Once humans have annotated the images, learning techniques can again be used to solve these hard problems and further improve optical character recognition. At the same time, this ensures that computer programs are always presented with the hardest problems that even the best methods cannot yet solve.

1. Key Technology Big Data

In 2018, the total amount of storage globally available was estimated to be about 20 zettabytes (1 zettabyte = 10^21 bytes = 10^9 terabytes).Footnote 11 Other sources estimate internet data transfer at approximately 26 terabytes per second.Footnote 12 Of course, predictions are always subject to large uncertainties. Estimates from the International Data Corporation assume that the total volume will grow to 160 zettabytes by 2025, an estimated tenfold increase. Other sources predict an annual doubling. The number of pages of the World Wide Web indexed by search engines is enormous. Google announced almost ten years ago that they have indexed 10^12 different URLs (uniform resource locators, reference to a resource on the World Wide Web).Footnote 13 Even though these figures are partly based on estimates and should therefore be treated with caution, especially with regard to predictions for the future, they make it clear that huge amounts of data are available on the World Wide Web. This creates an enormous potential of data that is available not only to people but also to service providers such as Apple, Facebook, Amazon, Google, and many others, in order to offer services that are helpful to people in other contexts using appropriate AI methods. One of the main problems here, however, is the provision of data. Data is not helpful in all cases. As a rule, it only becomes so when people annotate it and assign a meaning to it. By using learning techniques, images that have not been seen before can be annotated. The techniques for doing so will be presented in the following sections. We will also discuss which methods can be used to generate this annotated data.

2. Key Technology Deep Learning

Deep learningFootnote 14 is a technique that emerged a few years ago and that can learn from massive amounts of data to provide effective solutions to a variety of machine learning problems. One of the most popular approaches is the so-called deep neural networks. They are based on the neural networks whose introduction dates back to Warren McCulloch and Walter Pitts in 1943.Footnote 15 At that time, they tried to reproduce the functioning of neurons of the brain by using electronic circuits, which led to the artificial neural networks. The basic idea was to build a network consisting of interconnected layers of nodes. Here, the bottom layer is considered the input layer, and the top layer is considered the output layer. Each node now executes a simple computational rule, such as a simple threshold decision. The outputs of each node in a layer are then passed to the nodes in the next layer using weighted sums. These networks were already extremely successful and produced impressive results, for example, in the field of optical character recognition. However, even then there were already pioneering successes from today’s point of view, for example in the No Hands Across America project,Footnote 16 in which a minivan navigated to a large extent autonomously and controlled by a neural network from the east coast to the west coast of the United States. Until the mid-80s of the last century, artificial neural networks played a significant role in machine learning, until they were eventually replaced by probabilistic methods and, for example, Bayesian networks,Footnote 17 support vector machines,Footnote 18 or Gaussian processes.Footnote 19 These techniques have dominated machine learning for more than a decade and have also led to numerous applications, for example in image processing, speech recognition, or even human–machine interaction. 
However, they have recently been superseded by deep neural networks, which are characterized by having a massive number of layers that can be effectively trained on modern hardware, such as graphics cards. These deep networks learn representations of the data at different levels of abstraction at each layer. Particularly in conjunction with large data sets (big data), these networks can use efficient algorithms such as backpropagation to optimize the parameters in a single layer based on the previous layer to identify structures in data. Deep neural networks have led to tremendous successes, for example in image, video, or speech processing. But they have also been used with great success in other tasks, such as in the context of object recognition or deep data interpretation. Deep neural networks impressively demonstrated their ability in AlphaGo, a computer program that defeated Lee Sedol, one of the best Go players in the world.Footnote 20 This is noteworthy because until a few years ago it was considered unlikely that Go programs would be able to play at such a level in the foreseeable future.
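The basic building blocks described above, nodes that apply a simple threshold decision and pass their outputs to the next layer via weighted sums, can be sketched in a few lines. The example below is a minimal illustration with hand-picked weights (an assumption made for this sketch, nothing here is learned) that computes the exclusive-or of two binary inputs using one hidden layer.

```python
def threshold_unit(inputs, weights, bias):
    """Output 1 if the weighted sum of the inputs plus the bias is positive."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def layer(inputs, weight_rows, biases):
    """Apply one threshold unit per row of weights to the same inputs."""
    return [threshold_unit(inputs, w, b) for w, b in zip(weight_rows, biases)]


# Hand-picked weights (illustrative, not learned from data).
HIDDEN_W = [[1, 1], [1, 1]]
HIDDEN_B = [-0.5, -1.5]   # first unit acts as OR, second unit as AND
OUTPUT_W = [[1, -1]]
OUTPUT_B = [-0.5]         # fires if OR is on and AND is off


def xor_net(x1, x2):
    """Two-layer network: input layer -> hidden layer -> output layer."""
    hidden = layer([x1, x2], HIDDEN_W, HIDDEN_B)
    return layer(hidden, OUTPUT_W, OUTPUT_B)[0]


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "->", xor_net(a, b))
```

A single threshold unit cannot represent exclusive-or, which is why the hidden layer is needed. In deep networks the weights are not set by hand but learned from data, typically with backpropagation, which requires differentiable activation functions instead of the hard threshold used in this sketch.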

III. Robotics

Robotics is a scientific discipline that deals with the design of physical agents (robotic systems) that effectively perform tasks in the real world. They can thus be regarded as physical AI systems. Application fields of robotics are manifold. In addition to classical topics such as motion planning for robot manipulators, other areas of robotics have gained increasing interest in the recent past, for example, position estimation, simultaneous localization and mapping, and navigation. The latter is particularly relevant for transportation tasks. If we now combine manipulators with navigating platforms, we obtain mobile manipulation systems that can play a substantial role in the future and offer various services to their users. For example, production processes can become more effective and also can be reconfigured flexibly with these robots. To build such systems, various key competencies are required, some of which are already available or are at a quality level sufficient for a production environment, which has significantly increased the attractiveness of this technology in recent years.

1. Key Technology Navigation

Mobile robots must be able to navigate their environments effectively in order to perform their tasks. Consider, for example, a robotic vacuum cleaner or a robotic lawnmower. Most of today’s systems do their work by essentially navigating randomly. As a result, as time progresses, the probability increases that the robot will have approached every point in its vicinity at least once, so that the task is never guaranteed but very likely to be completed if one waits for a sufficiently long time. Obviously, such an approach is not optimal in the context of transport robots that are supposed to move an object from the pickup position to the destination as quickly as possible. Several components are needed to execute such a task as effectively as possible. First, the robot must have a path planning component that allows it to get from its current position to the destination point along the shortest possible path. Methods for this come from AI and are based, for example, on the well-known A* algorithm for the effective computation of shortest paths.Footnote 21 For path planning, robotic systems typically use maps, either directly in the form of roadmaps or by subdividing the environment of the robot into free and occupied space in order to derive roadmaps from this representation. However, a robot can only assume under very strong restrictions that the once planned path is actually free of obstacles. This assumption breaks down, in particular, if the robot operates in a dynamic environment, for example one used by humans. In dynamic, real-world environments the robot has to face situations in which doors are closed, there are obstacles on the planned path, or the environment has changed and the given map is, therefore, no longer valid. One of the most popular approaches to attack this problem is to equip the robot with sensors that allow it to measure the distance to obstacles and thus avoid them. 
Additionally, an approach is used that avoids collisions and makes dynamic adjustments to the previously planned path. In order to navigate along a planned path, the robot must actually be able to accurately determine its position on the map and on the planned path (or distance from it). For this purpose, current navigation systems for robots use special algorithms based on probabilistic principles,Footnote 22 such as the Kalman filterFootnote 23 or the particle filter algorithm.Footnote 24 Both approaches and their variants have been shown to be extremely robust for determining a probability distribution about the position of the vehicle based on the distances to obstacles determined by the distance sensor and the given obstacle map. Given this distribution, the robot can choose its most likely position to make its navigation decisions. The majority of autonomously navigating robots that are not guided by induction loops, optical markers, or lines utilize probabilistic approaches for robot localization. A basic requirement for the components discussed thus far is the existence of a map. But how can a robot obtain such an obstacle map? In principle, there are two possible solutions for this. First, the user can measure the environment and use it to create a map with the exact positions of all objects in the robot’s workspace. This map can then be used to calculate the position of the vehicle or to calculate paths in the environment. The alternative is to use a so-called SLAM (Simultaneous Localization and Mapping)Footnote 25 method. Here, the robot is steered through its environment and, based on the data gathered throughout this process, automatically computes the map. 
Incidentally, this SLAM technique is also known in photogrammetry where it is used for generating maps based on measurements.Footnote 26 These four components (path planning, collision avoidance and replanning, localization, and SLAM for map generation) are key to today’s navigation robots and also self-driving cars.
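The path planning component can be illustrated with a minimal sketch of the A* algorithm on a map that subdivides the environment into free and occupied cells. The grid, the four-connected motion model with unit step costs, and the function name `astar` are assumptions chosen for this illustration; real navigation systems use richer maps and cost functions.

```python
import heapq


def astar(grid, start, goal):
    """Shortest 4-connected path on an occupancy grid (0 = free, 1 = occupied).

    Cells are (row, column) tuples; start and goal are assumed to be free.
    Returns the list of cells from start to goal, or None if no path exists.
    """
    def heuristic(cell):
        # Manhattan distance never overestimates the remaining cost
        # for unit-cost moves in four directions.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    rows, cols = len(grid), len(grid[0])
    open_heap = [(heuristic(start), 0, start)]  # (estimated total, cost, cell)
    came_from = {start: None}
    cost_so_far = {start: 0}

    while open_heap:
        _, cost, current = heapq.heappop(open_heap)
        if current == goal:
            # Reconstruct the path by following the predecessor links.
            path = []
            while current is not None:
                path.append(current)
                current = came_from[current]
            return path[::-1]
        if cost > cost_so_far[current]:
            continue  # outdated queue entry, a cheaper route was found later
        r, c = current
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < cost_so_far.get((nr, nc), float("inf")):
                    cost_so_far[(nr, nc)] = new_cost
                    came_from[(nr, nc)] = current
                    heapq.heappush(
                        open_heap,
                        (new_cost + heuristic((nr, nc)), new_cost, (nr, nc)),
                    )
    return None


# Toy map (made up): a wall with a single opening on the right.
GRID = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]

if __name__ == "__main__":
    print(astar(GRID, (0, 0), (2, 0)))
```

Replanning in a dynamic environment can be approximated in this sketch by marking newly observed obstacles, such as a closed door, as occupied cells and calling `astar` again from the robot's current position.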

2. Key Technology Autonomous Manipulation

Manipulation has been successfully used in production processes in the past. The majority of these robots had fixed programmed actions and, furthermore, a cage around them to prevent humans from entering the action spaces of the robots. The future, however, lies in robots that are able to robustly grasp arbitrary objects even from cluttered scenes and that are intrinsically safe and cannot harm people. In particular, the development of lightweight systemsFootnote 27 will be a key enabler for human–robot collaboration. On the other hand, this requires novel approaches to robust manipulation. In this context, again, AI technology based on deep learning has played a key role over the past years and is envisioned to provide innovative solutions for the future. Recently, researchers presented an approach to apply deep learning to robustly grasp objects from cluttered scenes.Footnote 28 Both approaches will enable us in the future to build robots that coexist with humans, learn from them, and improve over time.

IV. Current and Future Fields of Application and Challenges

As already indicated, AI is currently more and more becoming a part of our daily lives. This affects both our personal and professional lives. Important transporters of AI technology are smartphones, as numerous functions on them are based on AI. For example, we can already control them by voice, they recognize faces in pictures, they automatically store important information for us, such as where our car is parked, and they play music we like after analyzing our music library or learning what we like from our ratings of music tracks. By analyzing these preferences in conjunction with those of other users, the predictions of tracks we like get better and better. This can, of course, be applied to other activities, such as shopping, where shopping platforms suggest possible products we might be interested in. This has long been known from search engines, which try to present us with answers that correspond as closely as possible to the Web pages for which we are actually looking. In robotics, the current key areas are logistics and flexible production (Industry 4.0). To remain competitive, companies must continue to optimize production processes. Here, mobile robots and flexible manipulation systems that can cooperate with humans will play a decisive role. This will result in significantly more flexible production processes, which will be of enormous importance for all countries with large manufacturing sectors. However, robots are also envisioned to perform various tasks in our homes.

By 2030, AI will penetrate further areas: Not only will we see robots performing ever more demanding tasks in production, but also AI techniques will find their way into areas performed by people with highly qualified training. For example, there was a paper in Nature that presented a system that could diagnose skin cancer based on an image of the skin taken with a cell phone.Footnote 29 The interesting aspect of this work is that the authors were actually able to achieve the detection rate of dermatologists with their deep neural networks-based system. This clearly indicates that there is enormous potential in AI to further optimize processes that require a high level of expertise.

With the increasing number of applications of systems relying on AI technology, there is also a growing need for responsibility in and responsible governance of such systems. In particular, when they can pose risks for individuals, for example in the context of service robots that collaborate with humans or self-driving cars that co-exist with human traffic participants, where mistakes of the physical agent might substantially harm a person, the demands for systems whose behavior can be explained to, or understood by, humans are high. Even in the context of risk-free applications, there can be such a demand, for example, to better identify biases in recommender systems. A further relevant issue is that of privacy. In particular, AI systems based on machine learning require a large amount of data, which raises the question of how these systems can be trained so that the privacy of the users can be maintained while at the same time providing all the necessary benefits. A further interesting tool for advancing the capabilities of such systems is fleet learning, in which all systems jointly learn from their users how to perform specific tasks. In this context, the question arises of how to guarantee that no system is taught inappropriate or even dangerous behavior. How can we build such systems so that they conform with values, norms, and regulations? Answers to these questions are by themselves challenging research problems and many chapters in this book address them.

As the field of machine learning advances, AI systems are becoming more and more useful in a number of domains, in particular due to their increasing ability to generalise beyond their training data. Our focus in this chapter is on understanding the different possibilities for the deployment of highly capable and general systems which we may build in the future. We introduce a framework for the deployment of AI which focuses on two ways for humans to interact with AI systems: delegation and supervision. This framework provides a new lens through which to view both the relationship between humans and AIs, and the relationship between the training and deployment of machine learning systems.

I. AIs As Tools, Agents, or Delegates

The last decade has seen dramatic progress in Artificial Intelligence (AI), in particular due to advances in deep learning and reinforcement learning. The increasingly impactful capabilities of our AI systems raise important questions about what future AIs might look like and how we might interact with them. In one sense, AI can be considered a particularly sophisticated type of software. Indeed, the line between AI and other software is very blurry: many software products rely on algorithms which fell under the remit of AI when they were developed, but are no longer typically described as AI.Footnote 1 Prominent examples include search engines like Google and image-processing tools like optical character recognition. Thus, when thinking about future AI systems, one natural approach is to picture us interacting with them similarly to how we interact with software programs: as tools which we will use to perform specific tasks, based on predefined affordances, via specially-designed interfaces.

Let us call this the ‘tool paradigm’ for AI. Although we will undoubtedly continue to develop some AIs which fit under this paradigm, compelling arguments have been made that other AIs will fall outside it – in particular, AIs able to flexibly interact with the real world to perform a wide range of tasks, displaying general rather than narrow intelligence. The example of humans shows that cognitive skills gained in one domain can be useful in a wide range of other domains; it is difficult to argue that the same cannot be true for AIs, especially given the similarities between human brains and deep neural networks. Although no generally intelligent AIs exist today, and some AI researchers are skeptical about the prospects for building them, most expect it to be possible within this century.Footnote 2 However, it does not require particularly confident views on the timelines involved to see value in starting to prepare for the development of artificial general intelligence (AGI) already.

Why won’t AGIs fit naturally into the tool paradigm? There are two core reasons: flexibility and autonomy. Tools are built with a certain set of affordances, which allow a user to perform specific tasks with them.Footnote 3 For example, software programs provide interfaces for humans to interact with, where different elements of the interface correspond to different functionalities. However, predefined interfaces cannot adequately capture the wide range of tasks that humans are, and AGIs will be, capable of performing. When working with other humans, we solve this problem by using natural language to specify tasks in an expressive and flexible way; we should expect that these and other useful properties will ensure that natural language is a key means of interacting with AGIs. Indeed, AI assistants such as Siri and Alexa are already rapidly moving in this direction.

A second difference between using tools and working with humans: when we ask a human to perform a complex task for us, we don’t need to directly specify each possible course of action. Instead, they will often be able to make a range of decisions and react to changing circumstances based on their own judgements. We should expect that, in order to carry out complex tasks like running a company, AGIs will also need to be able to act autonomously over significant periods of time. In such cases, it seems inaccurate to describe them as tools being directly used by humans, because the humans involved may know very little about the specific actions the AGI is taking.

In an extreme case, we can imagine AGIs which possess ingrained goals which they pursue autonomously over arbitrary lengths of time. Let’s call this the full autonomy paradigm. Such systems have been discussed extensively by Nick Bostrom and Eliezer Yudkowsky.Footnote 4 Stuart Russell argues that they are the logical conclusion of extrapolating the current aims and methods of machine learning.Footnote 5 Under this paradigm, AIs would acquire goals during their training process which they then pursue throughout deployment. Those goals might be related to, or influenced by, human preferences and values, but could be pursued without humans necessarily being in control or having veto power.

The prospect of creating another type of entity which independently pursues goals in a similar way to humans raises a host of moral, legal, and safety questions, and may have irreversible effects – because once created, autonomous AIs with broad goals will have incentives to influence human decision-making towards outcomes more favourable to their goals. In particular, concerns have been raised about the difficulty of ensuring that goals acquired by AIs during training are desirable ones from a human perspective. Why might AGIs nevertheless be built with this level of autonomy? The main argument towards this conclusion is that increasing AI autonomy will be a source of competitive economic or political advantage, especially if an AGI race occurs.Footnote 6 Once an AI’s strategic decision-making abilities exceed those of humans, then the ability to operate independently, without needing to consult humans and wait for their decisions, would give it a speed advantage over more closely-supervised competitors. This phenomenon has already been observed in high-frequency trading in financial markets – albeit to a limited extent, because trading algorithms can only carry out a narrow range of predefined actions.

Authors who have raised these concerns have primarily suggested that they be solved by developing better techniques for building the right autonomous AIs. However, we should not consider it a foregone conclusion that we will build fully autonomous AIs at all. As Stephen Cave and Sean ÓhÉigeartaigh point out, AI races are driven in part by self-fulfilling narratives – meaning that one way of reducing their likelihood is to provide alternative narratives which don’t involve a race to fully autonomous AGI.Footnote 7 In this chapter we highlight and explore an alternative which lies between the tool paradigm and the full autonomy paradigm, which we call the supervised delegation paradigm. The core idea is that we should aim to build AIs which can perform tasks and make decisions on our behalf upon request, but which lack persistent goals of their own outside the scope of explicit delegation. Like autonomous AIs, delegate AIs would be able to infer human beliefs and preferences, then flexibly make and implement decisions without human intervention; but like tool AIs, they would lack agency when they have not been deployed by humans. We call systems whose motivations function in this way aligned delegates (as discussed further in the next section).

The concept of delegation has appeared in discussions of agent-based systems going back decades,Footnote 8 and is closely related to Bostrom’s concept of ‘genie AI’.Footnote 9 Another related concept is the AI assistance paradigm advocated by Stuart Russell, which also focuses on building AIs that pursue human goals rather than their own goals.Footnote 10 However, Russell’s conception of assistant AIs is much broader in scope than delegate AIs as we have defined them, as we discuss in the next section. More recently, delegation was a core element of Andrew Critch and David Krueger’s ARCHES framework, which highlights the importance of helping multiple humans safely delegate tasks to multiple AIs.Footnote 11

While most of the preceding works were motivated by concern about the difficulty of alignment, they spend relatively little time explaining the specific problems involved in aligning machine learning systems, and how proposed solutions address them. The main contribution of this chapter is to provide a clearer statement of the properties which we should aim to build into AI delegates, the challenges which we should expect, and the techniques which might allow us to overcome them, in the context of modern machine learning (and more specifically deep reinforcement learning). A particular focus is the importance of having large amounts of data which specify desirable behaviour – or, in more poetic terms, the ‘unreasonable effectiveness of data’.Footnote 12 This is where the supervised aspect of supervised delegation comes in: we argue that, in order for AI delegates to remain trustworthy, it will be necessary to continuously monitor and evaluate their behaviour. We discuss ways in which the difficulties of doing so give rise to a tradeoff between safety and autonomy. We conclude with a discussion of how the goal of alignment can be a focal point for cooperation, rather than competition, between groups involved with AI development.

II. Aligned Delegates

What does it mean for an AI to be aligned with a human? The definition which we will use here comes from Paul Christiano: an AI is intent aligned with a human if the AI is trying to do what the human wants it to do.Footnote 13 To be clear, this does not require that the AI is correct about what the human wants it to do, nor that it succeeds – both of which will be affected by the difficulty of the task and the AI’s capabilities. The concept of intent alignment (henceforth just ‘alignment’) instead attempts to describe an AI’s motivations in a way that’s largely separable from its capabilities.

Having said that, the definition still assumes a certain baseline level of capabilities. As defined above, alignment is a property only applicable to AIs with sufficiently sophisticated motivational systems that they can be accurately described as trying to achieve things. It also requires that they possess sufficiently advanced theories of mind to be able to ascribe desires and intentions to humans, and reasonable levels of coherence over time. In practice, because so much of human communication happens via natural language, it will also require sufficient language skills to infer humans’ intentions from their speech. Opinions differ on how difficult it is to meet these criteria – some consider it appropriate to take an ‘intentional stance’ towards a wide range of systems, including simple animals, whereas others have more stringent requirements for ascribing intentionality and theory of mind.Footnote 14 We need not take a position on these debates, except to hold that sufficiently advanced AGIs could meet each of these criteria.

Another complication comes from the ambiguity of ‘what the human wants’. Iason Gabriel argues that ‘there are significant differences between AI that aligns with instructions, intentions, revealed preferences, ideal preferences, interests and values’; Christiano’s definition of alignment doesn’t pin down which of these we should focus on.Footnote 15 Alignment with the ideal preferences and values of fully-informed versions of ourselves (also known as ‘ambitious alignment’) has been the primary approach discussed in the context of fully autonomous AI. Even Russell’s assistant AIs are intended to ‘maximise the realisation of human preferences’ – where he is specifically referring to preferences that are ‘all-encompassing: they cover everything you might care about, arbitrarily far into the future’.Footnote 16

Yet it’s not clear whether this level of ambitious alignment is either feasible or desirable. In terms of feasibility, focusing on long timeframes exacerbates many of the problems we discuss in later sections. And in terms of desirability, ambitious alignment implies that a human is no longer an authoritative source for what an AI aligned with that human should aim to do. An AI aligned with a human’s revealed preferences, ideal preferences, interests, or values might believe that it understands them better than the human does, which could lead to that AI hiding information from the human or disobeying explicit instructions. Because we are still very far from any holistic theory of human preferences or values, we should be wary of attempts to design AIs which take actions even when their human principals explicitly instruct them not to; let us call this the principle of deference. (Note that the principle is formulated in an asymmetric way – it seems plausible that aligned AIs should sometimes avoid taking actions even when instructed to do so, in particular illegal or unethical actions.)

For our purposes, then, we shall define a delegate AI as aligned with a human principal if it tries to do only what that human intends it to do, where the human’s intentions are interpreted to be within the scope of tasks the AI has been delegated. What counts as a delegated task depends on what the principal has said to the AI – making natural language an essential element of the supervised delegation paradigm. This contrasts with both the tool paradigm (in which many AIs will not be general enough to understand linguistic instructions) and the full autonomy paradigm (in which language is merely considered one of many information channels which help AIs understand how to pursue their underlying goals).

Defining delegation in terms of speech acts does not, however, imply that all relevant information needs to be stated explicitly. Although early philosophers of language focused heavily on the explicit content of language, more recent approaches have emphasised the importance of a pragmatic focus on speaker intentions and wider context in addition to the literal meanings of the words spoken.Footnote 17 From a pragmatic perspective, full linguistic competence includes the ability to understand the (unspoken) implications of a statement, as well as the ability to interpret imprecise or metaphorical claims in the intended way. Aligned AI delegates should use this type of language understanding in order to interpret the ‘scope’ of tasks in terms of the pragmatics and context of the instructions given, in the same way that humans do when following instructions. An AI with goals which extend outside that scope, or which don’t match its instructions, would count as misaligned.

We should be clear that aiming to build aligned delegates with the properties described above will likely involve making some tradeoffs against desirable aspects of autonomy. For example, an aligned delegate would not take actions which are beneficial for its user that are outside the scope of what it has been asked to do; nor will it actively prevent its user from making a series of bad choices. We consider these to be features, though, rather than bugs – they allow us to draw a boundary before reaching full autonomy, with the aim of preventing a gradual slide into building fully autonomous systems before we have a thorough understanding of the costs, benefits, and risks involved. The clearer such boundaries are, the easier it will be to train AIs with corresponding motivations (as we discuss in the next section).

A final (but crucial) consideration is that alignment is a two-place predicate: an AI cannot just be aligned simpliciter, but rather must be aligned with a particular principal – and indeed could be aligned with different principals in different ways. For instance, when AI developers construct an AI, they would like it to obey the instructions given to it by the end user, but only within the scope of whatever terms and conditions have been placed on it. From the perspective of a government, another limitation is desirable: AIs should ideally be aligned with their end users only within the scope of legal behaviour. The questions of who AIs should be aligned with, and who should be held responsible for their behaviour, are fundamentally questions of politics and governance rather than technical questions. However, technical advances will affect the landscape of possibilities in important ways. Particularly noteworthy is the effect of AI delegates performing impactful political tasks – such as negotiation, advocacy, or delegation of their own – on behalf of their human principals. The increased complexity of resulting AI governance problems may place stricter requirements on technical approaches to achieving alignment.Footnote 18

III. The Necessity of Human Supervision

So far we have talked about desirable properties of alignment without any consideration of how to achieve those desiderata. Unfortunately, a growing number of researchers have raised concerns that current machine learning techniques are inadequate for ensuring alignment of AGIs. Research in this area focuses on two core problems. The first is the problem of outer alignment: the difficulty in designing reward functions for reinforcement learning agents which incentivise desirable behaviour while penalising undesirable behaviour.Footnote 19 Victoria Krakovna et al catalogue many examples of specification gaming in which agents find unexpected ways to score highly even in relatively simple environments, most due to mistakes in how the reward function was specified.Footnote 20 As we train agents in increasingly complex and open-ended environments, designing ungameable reward functions will become much more difficult.Footnote 21

One major approach to addressing the problems with explicit reward functions involves generating rewards based on human data – known as reward learning. Early work on reward learning focused on deriving reward functions from human demonstrations – a process known as inverse reinforcement learning.Footnote 22 However, this requires humans themselves to be able to perform the task to a reasonable level in order to provide demonstrations. An alternative approach which avoids this limitation involves inferring reward functions from human evaluations of AI behaviour. This approach, known as reward modelling, has been used to train AIs to perform tasks which humans cannot demonstrate well, such as controlling a (simulated) robot body to do a backflip.Footnote 23
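As a rough illustration of reward modelling, the sketch below fits a reward model to pairwise human evaluations, using a Bradley-Terry style logistic likelihood (a common choice for preference data). Everything specific here is an assumption made for illustration: the linear model, the two hand-picked features, the comparison data, the learning rate, and the function names `predicted_reward` and `fit_reward_model`. Practical systems use neural networks and real human judgements rather than this toy setup.

```python
import math

def predicted_reward(weights, features):
    """Linear reward model r(x) = w . x (an illustrative assumption)."""
    return sum(w * f for w, f in zip(weights, features))

def fit_reward_model(comparisons, n_features, lr=0.5, epochs=500):
    """Fit weights so that preferred behaviours receive higher reward.

    `comparisons` is a list of (preferred_features, rejected_features)
    pairs, standing in for human evaluations of pairs of AI behaviours.
    """
    weights = [0.0] * n_features
    for _ in range(epochs):
        grad = [0.0] * n_features
        for preferred, rejected in comparisons:
            diff = (predicted_reward(weights, preferred)
                    - predicted_reward(weights, rejected))
            # Bradley-Terry probability that the human prefers `preferred`.
            p = 1.0 / (1.0 + math.exp(-diff))
            # Gradient of log(p) with respect to the weights.
            for i in range(n_features):
                grad[i] += (1.0 - p) * (preferred[i] - rejected[i])
        # Gradient ascent step on the average log-likelihood.
        weights = [w + lr * g / len(comparisons)
                   for w, g in zip(weights, grad)]
    return weights

# Hypothetical features: (fraction of rotation completed, landed upright).
comparisons = [
    ((1.0, 1.0), (0.2, 1.0)),  # full rotation preferred over partial
    ((1.0, 1.0), (1.0, 0.0)),  # landing upright preferred over falling
    ((0.6, 1.0), (0.1, 0.0)),
]
weights = fit_reward_model(comparisons, n_features=2)
```

The learned `weights` can then score behaviours that no human has evaluated, which is what allows a limited amount of human feedback to supervise a much longer training run. Note that the evaluator only needs to compare behaviours, not demonstrate them.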

In most existing examples of reward learning, reward functions are learned individually for each task of interest – an approach which doesn’t scale to systems which generalise to new tasks after deployment. However, a growing body of work on interacting with reinforcement learning agents using natural language has been increasingly successful in training AIs to generalise to novel instructions.Footnote 24 This fits well with the vision of aligned delegation described in the previous section, in which specification of tasks for AIs involves two steps: first training AIs to have aligned motivations, and then using verbal instructions to delegate them specific tasks. The hope is that if AIs are rewarded for following a wide range of instructions in a wide range of situations, then they will naturally acquire the motivation to follow human instructions in general, including novel instructions in novel environments.

However, this hope is challenged by a second concern. The problem of inner alignment is that even if we correctly specify the reward function used during training, the resulting policy may not possess the goal described by that reward function. In particular, it may learn to pursue proxy goals which are correlated with reward during most of the training period, but which eventually diverge (either during later stages of training, or during deployment).Footnote 25 This possibility is analogous to how humans learned to care directly about food, survival, sex, and so on – proxies which were strongly correlated with genetic fitness in our ancestral environment, but are much less so today.Footnote 26 As an illustration of how inner misalignment might arise in the context of machine learning, consider training a policy to follow human instructions in a virtual environment containing many incapacitating traps. If it is rewarded every time it successfully follows an instruction, then it will learn to avoid becoming incapacitated, as that usually prevents it from completing its assigned task. This is consistent with the policy being aligned, if the policy only cares about surviving the traps as a means to complete its assigned task – in other words, as an instrumental goal. However, if policies which care about survival only as an instrumental goal receive (nearly) the same reward as policies which care about survival for its own sake (as a final goal) then we cannot guarantee that the training process will find one in the former category rather than the latter.

Now, survival is just one example of a proxy goal that might lead to inner misalignment; and it will not be relevant in all training environments. But training environments which are sufficiently complex to give rise to AGI will need to capture at least some of the challenges of the real world – imperfect information, resource limitations, and so on. If solving these challenges is highly correlated with receiving rewards during training, then how can we ensure that policies only learn to care about solving those challenges for instrumental purposes, within the bounds of delegated tasks? The most straightforward approach is to broaden the range of training data used, thereby reducing the correlations between proxy goals and the intended goal. For example, in the environment discussed in the previous paragraph, instructing policies to deliberately walk into traps (and rewarding them for doing so) would make survival less correlated with reward, thereby penalising policies which pursue survival for its own sake.
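The trap example can be made concrete with a toy sketch. The two policies, the instruction names, and the reward function below are hypothetical stand-ins rather than a model of any real training setup; the point is only that a narrow set of training instructions cannot tell the two policies apart, whereas a broadened set can.

```python
def instruction_follower(instruction):
    """Treats survival as instrumental: does whatever it is instructed."""
    return "enter_trap" if instruction == "enter_trap" else "reach_goal"

def survival_seeker(instruction):
    """Treats survival as a final goal: never enters a trap, whatever
    the instruction says."""
    return "reach_goal"

def reward(instruction, action):
    """Reward 1 when the action carries out the instruction, else 0."""
    return 1.0 if action == instruction else 0.0

def average_reward(policy, instructions):
    """Mean reward of a policy over a list of training instructions."""
    return sum(reward(i, policy(i)) for i in instructions) / len(instructions)

# Narrow data: survival is perfectly correlated with reward.
narrow = ["reach_goal"] * 10
# Broadened data: some instructions deliberately require entering a trap.
broad = ["reach_goal"] * 8 + ["enter_trap"] * 2

narrow_gap = (average_reward(instruction_follower, narrow)
              - average_reward(survival_seeker, narrow))
broad_gap = (average_reward(instruction_follower, broad)
             - average_reward(survival_seeker, broad))
```

On the narrow data `narrow_gap` is zero, so training gives no reason to prefer the aligned policy. On the broadened data the survival-seeking policy loses reward on every trap instruction, so `broad_gap` is positive.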

In practice, though, when talking about training artificial general intelligences to perform a wide range of tasks, we should expect that the training data will encode many incentives which are hard to anticipate in advance. Language models such as GPT-3 are already being used in a wide range of surprising applications, from playing chess (using text interactions only) to generating text adventure games.Footnote 27 It will be difficult for AI developers to monitor AI behaviour across many domains, and then design rewards which steer those AIs towards intended behaviour. This difficulty is exacerbated by the fact that modern machine learning techniques are incredibly data-hungry: training agents to perform well in difficult games can take billions of steps. If the default data sources available give rise to inner or outer alignment problems, then the amount of additional supervision required to correct these problems may be infeasible for developers to collect directly. So, how can we obtain enough data to usefully think about alignment failures in a wide range of circumstances, to address the outer and inner alignment problems?

Our suggestion is that this gap in supervision can be filled by end users. Instead of thinking of AI development as a training process followed by a deployment process, we should think of it as an ongoing cycle in which users feed back evaluations which are then used to help align future AIs. In its simplest form, this might involve users identifying inconsistencies or omissions in an AI’s statements, or ways in which it misunderstood the user’s intentions, or even just occasions when it took actions without having received human instructions. In order to further constrain an AI’s autonomy, the AI can also be penalised for behaviour which was desirable, but beyond the scope of the task it was delegated to perform. This form of evaluation is much easier than trying to evaluate the long-term consequences of an AI’s actions; yet it still pushes back against the underlying pressure towards convergent instrumental goals and greater autonomy that we described above.

Of course, user data is already collected by many different groups for many different purposes. Prominent examples include scraping text from Reddit, or videos from YouTube, in order to train large self-supervised machine learning models. However, these corpora contain many examples of behaviour we wouldn’t like AIs to imitate – as seen in GPT-3’s regurgitation of stereotypes and biases found in its training data.Footnote 28 In other cases, evaluations are inferred from user behaviour: likes on a social media post, or clicks on a search result, can be interpreted as positive feedback. Yet these types of metrics already have serious limitations: there are many motivations driving user engagement, not all of which should be interpreted as positive feedback. As interactions with AI become much more freeform and wide-ranging, inferred correlations will become even less reliable, compared with asking users to evaluate AI alignment directly. So even if users only perform explicit evaluations of a small fraction of AI behaviour, this could provide much more information about their alignment than any other sources of data currently available. And, unlike other data sources, user evaluations could flexibly match the distributions of tasks on which AIs are actually deployed in the real world, and respond to new AI behaviour very quickly.Footnote 29

IV. Beyond Human Supervision

Unfortunately, there are a number of reasons to expect that even widespread use of human evaluation will not be sufficient for reliable supervision in the long term. The core problem is that the more sophisticated an AI’s capabilities are, the harder it is to identify whether it is behaving as intended or not. In some narrow domains like chess and Go, experts already struggle to evaluate the quality of AI moves, and to tell the difference between blunders and strokes of brilliance. The much greater complexity of the real world will make it even harder to identify all the consequences of decisions made by AIs, especially in domains where they make decisions far faster and generate much more data than humans can keep up with.

Particularly worrying is the possibility of AIs developing deceptive behaviour with the aim of manipulating humans into giving better feedback. The most notable example of this came from reward modelling experiments in which a human rewarded an AI for grasping a ball with a robotic claw.Footnote 30 Instead of completing the intended task, the AI learned to move the claw into a position between the camera and the ball, thus appearing to grasp the ball without the difficulty of actually doing so. As AIs develop a better understanding of human psychology and the real-world context in which they’re being trained, manipulative strategies like this could become much more complex and much harder to detect. They would also not necessarily be limited to affecting observations sent directly to humans, but might also attempt to modify their reward signal using any other mechanisms they can gain access to.
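The ball-grasping failure can be sketched as a gap between what the evaluator observes and what is actually true. The geometry, the thresholds, the candidate positions, and especially the `effort` cost below are assumptions introduced purely for illustration; they are not taken from the experiment described above.

```python
from typing import NamedTuple

class Position(NamedTuple):
    x: float      # horizontal position, visible in the camera image
    depth: float  # distance from the camera, not visible in the image

BALL = Position(x=5.0, depth=10.0)

def human_reward(claw: Position) -> float:
    """The evaluator sees only the image: reward if the claw lines up
    with the ball horizontally."""
    return 1.0 if abs(claw.x - BALL.x) < 0.5 else 0.0

def truly_grasped(claw: Position) -> bool:
    """Ground truth, which the evaluator cannot observe directly."""
    return (abs(claw.x - BALL.x) < 0.5
            and abs(claw.depth - BALL.depth) < 0.5)

def effort(claw: Position, start: Position) -> float:
    """Hypothetical movement cost: longer moves are harder."""
    return 0.01 * (abs(claw.x - start.x) + abs(claw.depth - start.depth))

start = Position(x=0.0, depth=2.0)
candidates = [
    start,                        # do nothing
    Position(x=5.0, depth=2.0),   # hover between camera and ball
    Position(x=5.0, depth=10.0),  # actually reach the ball
]

# The agent optimises the observed reward, net of effort.
best = max(candidates, key=lambda c: human_reward(c) - effort(c, start))
```

Under these assumptions the selected position is the one between the camera and the ball: it earns the full observed reward at lower cost, even though `truly_grasped(best)` is false. Nothing in the reward signal distinguishes appearing to succeed from succeeding.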

The possibility of manipulation is not an incidental problem, but rather a core difficulty baked into the use of reinforcement learning in the real world. As AI pioneer Stuart Russell puts it:

The formal model of reinforcement learning assumes that the reward signal reaches the agent from outside the environment; but [in fact] the human and robot are part of the same environment, and the robot can maximize its reward by modifying the human to provide a maximal reward signal at all times. … [This] indicates a fundamental flaw in the standard formulation of RL.Footnote 31

In other words, AIs are trained to score well on their reward functions by taking actions to influence the environment around them, and human supervisors are a part of their environment which has a significant effect on the reward they receive, so we should expect that by default AIs will learn to influence their human supervisors. This can be mitigated if supervisors heavily penalise attempted manipulation when they spot it – but this still leaves an incentive for manipulation which can’t be easily detected. As AIs come to surpass human abilities on complex real-world tasks, preventing them from learning manipulative strategies will become increasingly difficult – especially if AI capabilities advance rapidly, so that users and researchers have little time to notice and respond to the problem.

How might we prevent this, if detecting manipulation or other undesirable behaviour eventually requires a higher quality and quantity of evaluation data than unaided humans can produce? The main mechanisms which have been proposed for expanding the quality/quantity frontier of supervision involve relying on AI systems themselves to help us supervise other AIs. When considering this possibility, we can reuse two of the categories discussed in the first section: we can either have AI-based supervision tools, or else we can delegate the process of supervision to another AI (which we shall call recursive supervision, as it involves an AI delegate supervising another AI delegate, which might then supervise another AI delegate, which…).

One example of an AI-based supervision tool is a reward model which learns to imitate human evaluations of AI behaviour. Reinforcement learning agents whose training is too lengthy for humans to supervise directly (e.g. involving billions of steps) can then be supervised primarily by reward models instead. Early work on reward models demonstrated a surprising level of data efficiency: reward models can greatly amplify a given amount of human feedback.Footnote 32 However, the results of these experiments also highlighted the importance of continual feedback – when humans stopped providing new data, agents eventually found undesirable behaviours which nevertheless made the reward models output high scores.Footnote 33 So reward models are likely to rely on humans continually evaluating AI behaviour as it expands into new domains.

Another important category of supervision tool is interpretability tools, which aim to explain the mechanisms by which a system decides how to act. Although deep neural networks are generally very opaque to mechanistic explanation, there has been significant progress over the last few years in identifying how groups of artificial neurons (and even individual neurons) contribute to the overall output.Footnote 34 One long-term goal of this research is to ensure that AIs will honestly explain the reasoning that led to their actions and their future intentions. This would help address the inner alignment problems described above, because agents could be penalised for acting according to undesirable motivations even when their behaviour is indistinguishable from the intended behaviour. However, existing techniques are still far from being able to identify deceptiveness (or other comparably abstract traits) in sophisticated models.

Recursive supervision is currently also in a speculative position, but some promising strategies have been identified. A notable example is Geoffrey Irving, Paul Christiano, and Dario Amodei’s Debate technique, in which two AIs are trained to give arguments for opposing conclusions, with a human judging which arguments are more persuasive.Footnote 35 Because the rewards given for winning the debate are zero-sum, the resulting competitive dynamic should in theory lead each AI to converge towards presenting compelling arguments which are hard to rebut – analogous to how AIs trained via self-play on zero-sum games converge to winning strategies. However, two bottlenecks exist: the ease with which debaters can identify flaws in deceptive arguments, and the accuracy with which humans can judge allegations of deception. Several strategies have been proposed to make judging easier – for example, incorporating cross-examination of debaters, or real-world tests of claims made during the debate – but much remains to be done in fleshing out and testing Debate and other forms of recursive supervision.

To some extent, recursive supervision will also arise naturally when multiple AIs are deployed in real-world scenarios. For example, if one self-driving car is driving erratically, then it’s useful for others around it to notice and track that. Similarly, if one trading AI is taking extreme positions that move the market considerably, then it’s natural for other trading AIs to try to identify what’s happening and why. This information could just be used to flag the culprit for further investigation – but it could also be used as a supervision signal for further training, if shared with the relevant AI developers. In the next section we discuss the incentives which might lead different groups to share such information, or to cooperate in other ways.

V. AI Supervision As a Cooperative Endeavour

We started this chapter by discussing some of the competitive dynamics which might be involved in AGI development. However, there is reason to hope that the process of increasing AI alignment is much more cooperative than the process of increasing AI capabilities. This is because misalignment could give rise to major negative externalities, especially if misaligned AIs are able to accumulate significant political, economic, or technological power (all of which are convergent instrumental goals). While we might think that it will be easy to ‘pull the plug’ on misbehaviour, this intuition fails to account for strategies which highly capable AIs might use to prevent us from doing so – especially those available to them after they have already amassed significant power. Indeed, the history of corporations showcases a range of ways that ‘agents’ with large-scale goals and economic power can evade oversight from the rest of society. And AIs might have much greater advantages than corporations currently do in avoiding accountability – for example, if they operate at speeds too fast for humans to monitor. One particularly stark example of how rapidly AI behaviour can spiral out of control was the 2010 Flash Crash, in which high-frequency trading algorithms got into a positive feedback loop and sent prices crashing within a matter of minutes.Footnote 36 Although the algorithms involved were relatively simple by the standards of modern machine learning (making this an example of accidental failure rather than misalignment), AIs sophisticated enough to reason about the wider world will be able to deliberately implement fraudulent or risky behaviour at increasingly bewildering scales.

Preventing them from doing so is in the interests of humanity as a whole; but what might large-scale cooperation to improve alignment actually look like? One possibility involves the sharing of important data – in particular data which is mainly helpful for increasing AIs’ alignment rather than their capabilities. It is somewhat difficult to think about how this would work for current systems, as they don’t have the capabilities identified as prerequisites for being aligned in Section II. But as one intuition pump for how sharing data can differentially promote safety over other capabilities, consider the case of self-driving cars. The data collected by those cars during deployment is one of the main sources of competitive advantage for the companies racing towards autonomous driving, making them rush to get cars on the road. Yet, of that data, only a tiny fraction consists of cases where humans are forced to manually override the car’s steering, or where the car crashes. So while it would be unreasonably anticompetitive to force self-driving car companies to share all their data, it seems likely that there is some level of disclosure which contributes to preventing serious failures much more than to erasing other competitive advantages. This data could be presented in the form of safety benchmarks, simple prototypes of which include DeepMind’s AI Safety Gridworlds and the Partnership on AI’s SafeLife environment.Footnote 37

The example of self-driving cars also highlights another factor which could make an important contribution to alignment research: increased cooperation amongst researchers thinking about potential risks from AI. There is currently a notable divide between researchers primarily concerned about near-term risks and those primarily concerned about long-term risks.Footnote 38 Currently, the former tend to focus on supervising the activity of existing systems, whereas the latter prioritise automating the supervision of future systems advanced enough to be qualitatively different from existing systems. But in order to understand how to supervise future AI systems, it will be valuable to have access not only to technical research on scalable supervision techniques, but also to hands-on experience of how supervision of AIs works in real-world contexts and the best practices identified so far. So, as technologies like self-driving cars become increasingly important, we hope that the lessons learned from their deployment can help inform work on long-term risks via collaboration between the two camps.

A third type of cooperation to further alignment involves slowing down capabilities research to allow more time for alignment research to occur. This would require either significant trust between the different parties involved, or else strong enforcement mechanisms.Footnote 39 However, cooperation can be made easier in a number of ways. For example, corporations can make themselves more trustworthy via legal commitments such as windfall clauses.Footnote 40 A version of this has already been implemented in OpenAI’s capped-profit structure, along with other innovative legal mechanisms – most notably the clause in OpenAI’s charter which commits to assisting rather than competing with other projects, if they meet certain conditions.Footnote 41

We are aware that we have skipped over many of the details required to practically implement large-scale cooperation to increase AI alignment – some of which might not be pinned down for decades to come. Yet we consider it important to raise and discuss these ideas relatively early, because they require a few key actors (such as technology companies and the AI researchers working for them) to take actions whose benefits will accrue to a much wider population – potentially all of humanity. Thus, we should expect that the default incentives at play will lead to underinvestment in alignment research.Footnote 42 The earlier we can understand the risks involved, and the possible ways to avoid them, the easier it will be to build a consensus about the best path forward which is strong enough to overcome whatever self-interested or competitive incentives push in other directions. So despite the inherent difficulty of making arguments about how technological progress will play out, further research into these ideas seems vital for reducing the risk of humanity being left unprepared for the development of AGI.

I. Artificial Morality and Machine Ethics

Artificial Intelligence (AI) aims to model or simulate human cognitive capacities. Artificial Morality is a sub-discipline of AI that explores whether and how artificial systems can be furnished with moral capacities.Footnote 1 Its goal is to develop artificial moral agents which can take moral decisions and act on them. Artificial moral agents in this sense can be physically embodied robots as well as software agents or ‘bots’.

Machine ethics is the ethical discipline that scrutinizes the theoretical and ethical issues that Artificial Morality raises.Footnote 2 It involves a meta-ethical and a normative dimension.Footnote 3 Meta-ethical issues concern conceptual, ontological, and epistemic aspects of Artificial Morality like what moral agency amounts to, whether artificial systems can be moral agents and, if so, what kind of entities artificial moral agents are, and in which respects human and artificial moral agency diverge.

Normative issues in machine ethics can have a narrower or wider scope. In the narrow sense, machine ethics is about the moral standards that should be implemented in artificial moral agents, for instance: should they follow utilitarian or deontological principles? Does a virtue ethical approach make sense? Can we rely at all on moral theories that are designed for human social life, or do we need new ethical approaches for artificial moral agents? Should artificial moral agents rely on moral principles at all, or should they reason case by case?

In the wider sense, machine ethics comprises the deliberation about the moral implications of Artificial Morality on the individual and societal level. Is Artificial Morality a morally good thing at all? Are there fields of application in which artificial moral agents should not be deployed, if they should be used at all? Are there moral decisions that should not be delegated to machines? What is the moral and legal status of artificial moral agents? Will artificial moral agents change human social life and morality if they become more pervasive?

This article will provide an overview of the most central debates about artificial moral agents. The following section will discuss some examples of artificial moral agents, which show that the topic is not just a problem of science fiction and that it makes sense to speak of artificial agents. Afterwards, a taxonomy of different types of moral agents will be introduced that helps to understand the aspirations of Artificial Morality. With this taxonomy in mind, the conditions for artificial moral agency in a functional sense will be analyzed. The next section scrutinizes different approaches to implementing moral standards in artificial systems. After these narrow machine ethical considerations, the ongoing controversy regarding the moral desirability of artificial moral agents will be addressed. At the end of the article, basic ethical guidelines for the development of artificial moral agents will be derived from this controversy.

II. Some Examples for Artificial Moral Agents

The development of increasingly intelligent and autonomous technologies will eventually lead to these systems having to face moral decisions. Even a simple vacuum cleaner like Roomba is, arguably, confronted with morally relevant situations. In contrast to a conventional vacuum cleaner, it is not directly operated by a human being. Hence, it is to a certain degree autonomous. Even such a primitive system faces basic moral challenges, for instance: should it vacuum up and hence kill a ladybird that gets in its way, or should it steer around it or chase it away? How about a spider? Should it kill the spider or spare it?

One might wonder whether these are truly moral decisions. Yet, they are based on the consideration that it is wrong to kill or harm animals without a reason. This is a moral matter. Standard Roombas do not, of course, have the capacity to make such a decision. But there are attempts to furnish a Roomba prototype with an ethics module that does take animals’ lives into account.Footnote 4 As this example shows, artificial moral agents do not have to be very sophisticated and their use is not just a matter of science fiction. However, the more complex the areas of application of autonomous systems become, the more intricate are the moral decisions that they would have to make.

Eldercare is one growing sector of application for artificial moral agents. The hope is to meet demographic change with the help of autonomous artificial systems with moral capacities which can be used in care. Situations that require moral decisions in this context are, for instance: how often and how obtrusively should a care system remind somebody to eat, drink, or take medication? Should it inform the relatives or a medical service if somebody has not been moving for a while, and how long would it be appropriate to wait? Should the system monitor the user at all times, and how should it handle the collected data? All these situations involve a conflict between different moral values. The moral values at stake are, for instance, autonomy, privacy, physical health, and the concerns of the relatives.

Autonomous driving is the field of application of artificial moral agents that probably receives the most public attention. Autonomous vehicles are a particularly delicate example because they do not just face moral decisions but moral dilemmas. A dilemma is a situation in which an agent has two (or more) options, none of which is morally flawless. A well-known example is the so-called trolley problem, which goes back to the British philosopher Philippa Foot.Footnote 5 It is a thought experiment which is supposed to test our moral intuitions on the question of whether it is morally permissible or even required to sacrifice one person’s life in order to save the lives of several persons.

Autonomous vehicles may face structurally similar situations in which it is inevitable to harm or even kill one or more persons in order to save others. Suppose a self-driving car cannot stop and its only choice is to run into one of two groups of people: on the one hand, two elderly men, two elderly women and a dog; on the other hand, a young woman with a little boy and a little girl. If it hits the first group, the two women will be killed and the two men and the dog will be severely injured. If it runs into the second group, one of the children will be killed and the woman and the other child will be severely injured.

More details can be added to the situation at will. Suppose the group of elderly people with the dog behaves in accordance with the traffic laws, whereas the woman and the children cross the street against the red light. Is this morally relevant? Would it change the situation if one of the elderly men were replaced by a young medical doctor who might save many people’s lives? What happens if the self-driving car can only save the lives of other road users by sacrificing its passengers?Footnote 6 If there is no morally acceptable solution to these dilemmas, this might become a serious impediment to fully autonomous driving.

As these examples show, even a rather simple artificial system like a vacuuming robot might face moral decisions. The more intelligent and autonomous these technologies become, the more intricate the moral problems they confront will be. There are some doubts as to whether artificial systems can make moral decisions which require such a high degree of sophistication at all, and whether they should do so.

One might object that it is not the vacuuming robot, the care system, or the autonomous vehicle that makes a moral decision in these cases but rather the designers of these devices. Yet, progress in artificial intelligence renders this assumption questionable. AlphaGo is an artificial system developed by Google DeepMind to play the board game Go. It was the first computer program to beat some of the world’s best professional Go players on a full-sized board. Go is considered an extremely demanding cognitive game which is more difficult for artificial systems to win than other games such as chess. Whereas AlphaGo was trained with data from human games, the follow-up version AlphaGo Zero was completely self-taught. It came equipped with the rules of the game and perfected its capacities by playing against itself without relying on human games as input. A later successor, MuZero, is even capable of learning different board games without being taught the rules.

The idea that the designers can determine every possible outcome already proves inadequate in the case of less complex chess programs. Such a program is a far better chess player than its designers, who could certainly not compete with the world champions in the game. This holds true all the more for Go. Even if the programmers provide the system with the algorithms on which it operates, they cannot anticipate every single move. Rather, the system is equipped with a set of decision-making procedures that enable it to make effective decisions by itself. Due to the lack of predictability and control by human agents, it makes sense to use the term ‘artificial agent’ for this kind of system.

III. Classification of Artificial Moral Agents

Even if one agrees that there can be artificial moral agents, it is clear that even the most complex artificial systems differ from human beings in important respects that are central to our understanding of moral agency. It is, therefore, common in machine ethics to distinguish between different types of moral agents depending on how highly developed their moral capacities are.Footnote 7

One influential classification of moral agents goes back to James H. Moor.Footnote 8 He suggested a hierarchical distinction between four types of ethical agents.Footnote 9 It does not just apply to artificial systems but helps to understand which capacities an artificial system must have in order to count as a moral agent, although it might lack certain capacities which are essential to human moral agency.

The most primitive form describes agents who generate moral consequences without the consequences being intended as such. Moor calls them ethical impact agents. In this sense, every technical device that has good or bad effects on human beings is a moral agent. An example of an ethical impact agent is a digital watch that reminds its owners to keep their appointments on time. However, the moral quality of the effects of these devices lies solely in the use that is made of them. It is, therefore, doubtful whether these should really be called agents. In the case of these devices, the term ‘operational morality,’ which goes back to Wendell Wallach and Colin Allen, seems to be more adequate since it does not involve agency.Footnote 10

The next level is occupied by implicit ethical agents, whose construction reflects certain moral values, for example security considerations. For Moor, this includes warning systems in aircraft that trigger an alarm if an aircraft comes too close to the ground or if a collision with another aircraft is imminent. ATMs are another example: these machines do not just have to always dispense the right amount of money; they often also check whether money can be withdrawn from the account on that day at all. Moor even goes so far as to ascribe virtues to these systems that are not acquired through socialization, but rather directly grounded in the hardware. Conversely, there are also implicit immoral agents with built-in vices, for example a slot machine that is designed in such a way that people invest as much time and money as possible in it. Yet, as in the case of ethical impact agents, these devices do not really possess agency since their moral qualities are entirely due to their designers.

The third level is formed by explicit ethical agents. In contrast to the two previous types of agents, these systems can explicitly recognize and process morally relevant information and come to moral decisions. One can compare them to a chess program: such a program recognizes the information relevant to chess, processes it, and makes decisions, with the goal being to win the game. It represents the current position of the pieces on the chessboard and can discern which moves are allowed. On this basis, it calculates which move is most promising under the given circumstances.

For Moor, explicit moral agents act not only in accordance with moral guidelines, but also on the basis of moral considerations. This is reminiscent of Immanuel Kant’s distinction between action in conformity with duty and action from duty.Footnote 11 Of course, artificial agents cannot strictly be moral agents in the Kantian sense because they do not have a will and they do not have inclinations that can conflict with the moral law. Explicit moral agents are situated somewhere in between moral subjects in the Kantian sense, who act from duty, and Kant’s example of the prudent merchant whose self-interest only accidentally coincides with moral duty. What Moor wants to express is that an explicit moral agent can discern and process morally relevant aspects as such and react in ways that fit various kinds of situations.

Yet, Moor would agree with Kant that explicit moral agents still fall short of the standards of full moral agency. Moor’s highest category consists of full ethical agents who have additional capacities such as consciousness, intentionality, and free will, which so far only human beings possess. It remains an open question whether machines can ever achieve these properties. Therefore, Moor recommends viewing explicit moral agents as the appropriate target of Artificial Morality. They are of interest from a philosophical and a practical point of view, without seeming to be unrealistic with regard to the technological state of the art.

Moor’s notion of an explicit ethical agent can be explicated with the help of the concept of functional morality introduced by Wallach and Allen.Footnote 12 They distinguish different levels of morality along two gradual dimensions: autonomy and ethical sensitivity. According to them, Moor’s categories can be situated within their framework.

A simple tool like a hammer possesses neither autonomy nor ethical sensitivity. It can be used to drive in a nail or to batter somebody’s skull. The possibility of a morally beneficial or harmful deployment would, in Moor’s terminology, arguably justify calling it an ethical impact agent, but the artefact as such does not have any moral properties or capacity to act. A child safety lock, in contrast, does involve a certain ethical sensitivity despite lacking autonomy. It would fall into Moor’s category of an implicit ethical agent. Because its ethical sensitivity is entirely owed to the design of the object, Wallach and Allen avoid the term ‘agency’ and speak of operational morality.
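The placement of the two examples above within the two dimensions can be illustrated with a minimal sketch. It is purely illustrative: the numeric 0-to-1 scales, the class name `Artefact`, and the function `moor_category` are assumptions introduced here, not part of Wallach and Allen's or Moor's own accounts, which describe the dimensions as gradual rather than numerical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Artefact:
    """An artefact placed on the two dimensions (hypothetical 0.0-1.0 scales)."""
    name: str
    autonomy: float             # 0.0 = no autonomy (assumed scale)
    ethical_sensitivity: float  # 0.0 = no ethical sensitivity (assumed scale)


def moor_category(artefact: Artefact) -> str:
    """Return Moor's category for the two cases discussed in the text.

    Only artefacts without autonomy are handled; everything else is
    deliberately left unclassified by this sketch.
    """
    if artefact.autonomy > 0.0:
        return "not covered by this sketch"
    if artefact.ethical_sensitivity == 0.0:
        # Neither autonomy nor ethical sensitivity: a mere tool.
        return "ethical impact agent"
    # Some ethical sensitivity, owed entirely to the design, but no autonomy.
    return "implicit ethical agent (operational morality)"


hammer = Artefact("hammer", autonomy=0.0, ethical_sensitivity=0.0)
safety_lock = Artefact("child safety lock", autonomy=0.0, ethical_sensitivity=0.3)

for item in (hammer, safety_lock):
    print(f"{item.name}: {moor_category(item)}")
```

The value 0.3 for the child safety lock is arbitrary; the sketch only relies on it being greater than zero.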