1. Introduction

This tutorial will start with simple chatbots, showing how to respond to prompts. These examples will introduce some opportunities for improvement such as hallucinations and timeliness. Retrieval-augmented generation (RAG) addresses some of these opportunities by adding the ability to upload files just-in-time.

2. Simple chat with openAI

This section will start with simple chats. Later, we will discuss RAGs that upload files just-in-time. Here is a simple example of a chat with OpenAI (and no uploading of files):Footnote a

-

• Input to ChatGPT:

system: You are a helpful assistant.

user: Who won the world series in 2020?

assistant: The Los Angeles Dodgers won the World Series in 2020.

user: Where was it played?

-

• Response from ChatGPT:

The 2020 World Series was played at Globe Life Field in Arlington, Texas.

Details on how to run this example and other examples in this paper are posted on GitHub.Footnote b Unfortunately, it may be difficult to replicate the exact outputs in this paper because chatbots are not always repeatable/deterministic. There are also concerns about ChatGPT’s behavior changing over time (Chen, Zaharia, and Zou Reference Chen, Zaharia and Zou2023).

The input to ChatGPT is a sequence of message objects. The message object contains two required fields: role and content. Examples of roles are system, user, and assistant.

2.1 Guard rails

The following example is like the example above, except that we ask ChatGPT to predict the future:

-

• Input to ChatGPT:

system: You are a helpful assistant.

user: Who won the world series in 2035?

-

• Response from ChatGPT:

I’m sorry, but I am unable to provide real-time information or details about future events as I exist in the present. You may want to check the latest sports news or websites for updates on the World Series winners in 2035.

ChatGPT is constructed with guard rails designed to reduce “hallucinations.” “Hallucination” is a technical term for “making stuff up.” Chatbots have a tendency to spread misinformation. Guard rails help in easy cases like this, though it is not hard for an adversary to find small modifications to input prompts that will crash through the guard rails.

2.2 Timeliness

The next example replaces “2035” with “most recent.” The point of this modification is to make it clear that we are using an old model that was trained in 2021:

-

• Input to ChatGPT:

system: You are a helpful assistant.

user: Who won the most recent world series?

-

• Response from ChatGPT:

The Atlanta Braves won the most recent World Series in 2021.

We used an old model because OpenAI charges more for more recent models. But even so, since training large language models (LLMs) is expensive, it is prohibitively expensive for OpenAI to continuously update their models. The following chat shows that phrases like “most recent” call out differences between training time and inference time. According to Google and Wikipedia, the Texas Rangers won the most recent World Series (in 2023), but ChatGPT-3 says the Atlanta Braves won (in 2021). It is easier to keep Google and Wikipedia up to date than ChatGPT. In Subsection 3.2, we will show how RAG can work around the need to continuously update models.

2.3 Hallucinations when asked to discuss content that goes beyond training data

The next example was designed to elicit misinformation. The prompt presupposes that we have been talking about an (unspecified) paper on psycholinguistics. One might hope there would be a guard rail that would catch this prompt and respond, “I’m sorry, but I don’t know what paper you are referring to.” As we will see below, there is a guard rail that catches similar prompts. Unfortunately, in this case, the response below starts with a pivot to a different question: “What is Psycholinguistics?” After that pivot, the response continues by hallucinating what might be in the unspecified paper.

-

• Input to ChatGPT:

system: You are a helpful assistant.

user: Please summarize the paper on psycholinguistics.

-

• Response from ChatGPT:

Sure! Psycholinguistics is a field that studies the psychological processes involved in language comprehension and production. The paper discusses various topics in psycholinguistics, such as how language is processed in the brain, how we acquire language skills, and how language is used in communication. It also explores issues related to bilingualism, language development in children, and the impact of language disorders on individuals. Overall, the paper provides insights into how the human mind processes and understands language.

Robustness is a challenge for guard rails. A small change to the prompt can have a large impact. Consider the following chat where we replace a period (in the chat above) with a question mark (in the chat below). The guard rails are more effective with the question mark, at least in this particular instance:

-

• Input to ChatGPT:

system: You are a helpful assistant.

user: Please summarize the paper on psycholinguistics?

-

• Response from ChatGPT:

I would need more specific information to provide an accurate answer. There are numerous papers on the topic of psycholinguistics written by various authors. If you have a specific paper or author in mind, please provide more details so I can assist you better.

More generally, hallucinations are a challenge for LLMs when asked to discuss content that goes beyond training data. A lawyer once found himself in trouble when he trusted ChatGPT to help write a brief.Footnote c

There was just one hitch: No one – not the airline’s lawyers, not even the judge himself – could find the decisions or the quotations cited and summarized in the brief.

A study on legal hallucinations from Yale and Stanford (Dahl et al. Reference Dahl, Magesh, Suzgun and Ho2024) reports that the problem is “alarmingly prevalent, occurring between 69% of the time with ChatGPT 3.5 and 88% with Llama 2, when these models are asked specific, verifiable questions about random federal court cases.”

3. Opportunities for RAG

The examples above illustrate three opportunities for improvement:

-

More robust guard rails: Too easy to crash through existing guard rails (Nasr et al. Reference Nasr, Carlini, Hayase, Jagielski, Cooper, Ippolito, Choquette-Choo, Wallace, Tramèr and Lee2023),

-

Pivots/Hallucinations: Chatbots tend to pivot and/or hallucinate when asked to discuss content that goes beyond the training set such as an unspecified paper (Subsection 2.3), and

-

Timeliness: Training time

$\neq$

inference time (Subsection 2.2)

$\neq$

inference time (Subsection 2.2)

Critics of large language models (LLMs) and chatbots bring up many classic topics in Philosophy of Language, Artificial Intelligence and Creative Writing such as:Footnote d

-

1. Hallucinations and Misinformation: Fact-checking, Grounding, Attribution

-

2. Knowledge Acquisition: Timeliness, PlagiarismFootnote e (Nasr et al. Reference Nasr, Carlini, Hayase, Jagielski, Cooper, Ippolito, Choquette-Choo, Wallace, Tramèr and Lee2023)

-

3. Knowledge Representation: Lexical Semantics, Ontologies, World Knowledge, Semantics of Time and Space, Technical Terminology, Domain Specific Knowledge

-

4. Reference: Co-reference, Given/New, Use/Mention,Footnote f Intensional Logic,Footnote g Possible Worlds,Footnote h BeliefFootnote i

-

5. Discourse Structure: Grice’s Maxims (Grice Reference Grice1975), Perspective, Character Development,Footnote j Plot (and Plot Twists)

-

6. Problem Solving: Planning (Kautz and Allen Reference Kautz and Allen1986), Common Sense Reasoning (McCarthy Reference McCarthy and Minsky1969), Problem Decomposition

-

7. Explanation (Ribeiro, Singh, and Guestrin Reference Ribeiro, Singh and Guestrin2016)

RAG addresses some of these opportunities by adding a feature to upload documents just-in-time. Another motivation for uploading documents just-in-time involves private data. LLMs are trained on massive amounts of public data, but most documents are private. Suppose, for example, we are building a chatbot for customer support. Users will have questions about their bills. If we can upload their private bills just-in-time, then we can address their questions in the context of their bill. Otherwise, the chatbot can do little more than discuss generic FAQs about billing questions in general, but not specifically about their bill.

3.1 Recipe

Four implementations of RAG are posted on our GitHub: (1) src/OpenAI/Rag.py, (2) src/LangChain/Rag.py, (3) src/transformers/Rag.py,and (4) src/VecML/Rag.py. They all use the following recipe:

-

1. Upload one or more files

-

2. Parse files into chunks

-

3. Embed chunks as vectors in a vector space

-

4. Index vectors with an approximate nearest neighbor (ANN) algorithm such as ANNOYFootnote k or FAISSFootnote l (Johnson, Douze, and Jégou Reference Johnson, Douze and Jégou2019; Douze et al. Reference Douze, Guzhva, Deng, Johnson, Szilvasy, Mazaré, Lomeli, Hosseini and Jégou2024)

-

5. Retrieve chunks near prompt (using ANN)

-

6. Generate responses

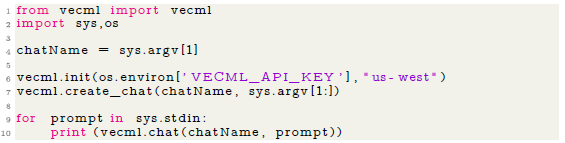

All four RAG.py programs share the same interface. They take a list of files to upload on the command line. Input prompts are read from standard input, and output responses are written to standard output. The programs are short, less than 100 lines; the VecML program is particularly short:

Some of the shorter RAG.py programs hide various steps in the recipe above behind APIs running on the cloud.

There are also a few programs, chat.py. These programs read prompts from standard input and write responses to standard output, but unlike RAG.py programs, the chat.py programs do not upload files.

3.2 Timeliness and a simple example of RAG

This paper will not attempt to discuss all of the opportunities raised above, except to suggest that RAG can help with a few of them. Consider the timeliness opportunity in Subsection 2.2; obviously, users do not want “day-old donuts” like who won the World Series when the bot was trained a few years ago.

On the web, information ages at different rates. Before the web, news was typically relevant for a news cycle, though breaking news was already an exception. These days, news typically ages more quickly than it used to, but even so, the half-life of news is probably a few hours. Some information sources age more quickly such as stock prices, and other information sources age more slowly such as the academic literature. Within the academic literature, some venues (arXiv and conferences) age more quickly than others (archival journals). Crawlers for search engines like Google and Bing need to prioritize crawling by trading-off these aging properties with demand. Since crawlers cannot afford to crawl all pages all the time, they need to crawl pages often enough to keep up with aging, but if that is not possible, then they should prioritize pages that are more likely to be requested.

How does RAG help with timeliness? Rather than use LLMs as is, and attempt “closed-book question-answering” with an “out-of-date book,” RAG uses R (retrieval/search) and A (augmentation) to update “the book” (knowledge base) just-in-time. For example, if we want to know who won the most recent World Series, then it might help to do a search for documents on that topic such as this.Footnote m RAG makes it possible to upload files before generating responses to the prompt. On the GitHub,Footnote n we work though this example in detail:

Without RAG, an LLM trained on 2021 data would likely hallucinate when asked about 2023. RAG fills in gaps in the knowledge base by uploading a pdf file, sample_files/World_Series/*pdf, a version of the text from footnoteFootnote m , and uses that content to update the knowledge base on this topic from 2021 to cover 2023. In this way, RAG works around the need to continuously retrain the bot, by creating a just-in-time mechanism for updating the knowledge base.

3.3 References to background context and RAG

RAG can also help with references to the discourse context. If we upload a paper on psycholinguistics, then it can address the prompt: “Please summarize the paper on psycholinguistics.”

The directory, sample_files/papers, contains two pdf files for Church and Hanks (Reference Church and Hanks1990) and Lin (Reference Lin1998). We would like to ask the bot to summarize (Church and Hanks Reference Church and Hanks1990), but since the bot is unlikely to understand citations, we will refer to the two papers with phrases such as

-

• the paper on psycholinguistics

-

• the paper on clustering

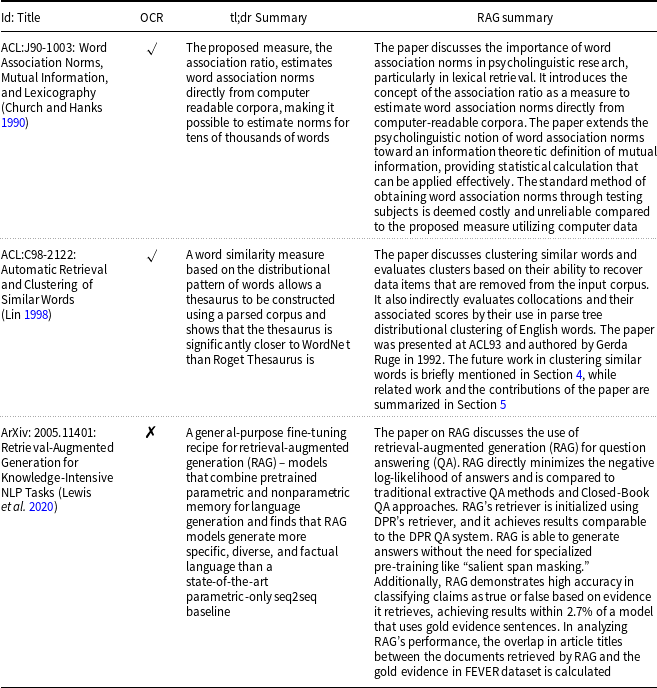

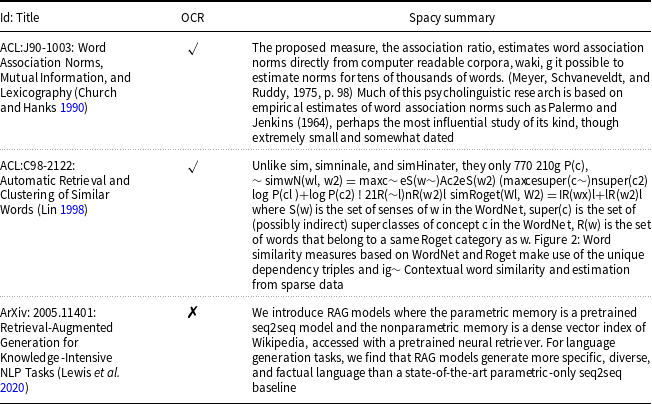

The program listing above produces the RAG summaries in Table 1. Summaries from RAG are impressive, at least on first impression. However, on further reflection, after reading a number of such summaries, it becomes clear that there are many opportunities for improvement.

Table 1. RAG summaries are longer than tl;dr summaries from Semantic Scholar

RAG summaries are probably worse than summaries from previous technologies such as tl;dr (too long; did not read) summaries from Semantic Scholar (S2).Footnote o Table 1 compares RAG summaries with tl;dr summaries; S2 tl;dr summaries are shorter, and probably more to the point, though it is hard to define “more to the point” precisely.

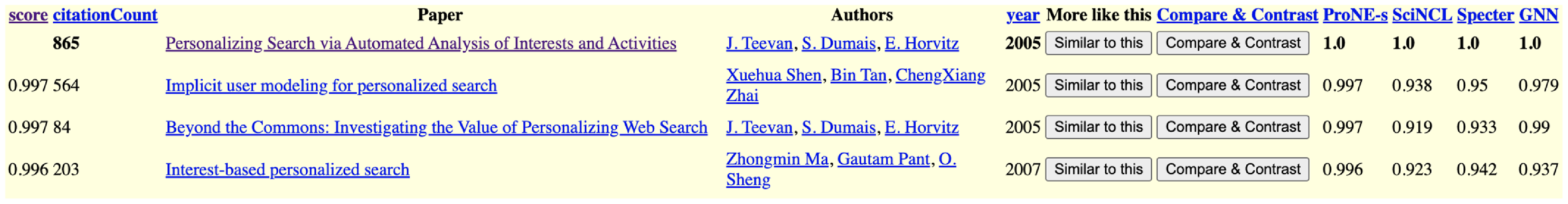

Figure 1. The query (top line) is followed by recommendations.

3.4 Scenario: compare and contrast recommendations

Figure 1 shows a recommendation engine.Footnote p We would like to provide users with explanations that work for them. Users probably do not want to know how the different recommendation engines work. It is probably not helpful to discuss details such as deep networks, embeddings, approximate nearest neighbors, and spectral clustering on citation graphs. Rather, we propose to offer users buttons like the “compare & contrast” buttons in Fig. 1. Users can click on one of these buttons if they want to know why a paper is being recommended. The implementation of these buttons is based on RAG. In this way, RAG can be used to address some of the explanation opportunities mentioned above. If a user clicks on one of these buttons, the relevant papers will be uploaded to a RAG system and then the RAG system will be prompted to not only summarize the relevant papers one at a time but also to compare and contrast pairs of papers at a time.Footnote q

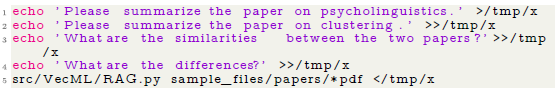

3.5 Compare and contrast with VecML.com

Our GitHub provides several versions of RAG.py. In addition to the OpenAI version mentioned above, there is also a version based on VecML.com.Footnote r Here is an example that uses the VecML.com version of RAG.py:

The VecML code for RAG.pyFootnote s is even shorter than the OpenAI code for RAG.py.Footnote t As mentioned above, all of the RAG.py programs take one or more papers on the command line and upload them to the cloud. Then they read prompts from standard input and print responses to standard output.

If we run the VecML RAG.py program on two ACL papers, Church and Hanks (Reference Church and Hanks1990), Lin (Reference Lin1998), using the prompts below:

-

1. Please summarize the paper on psycholinguistics.

-

2. Please summarize the paper on clustering.

-

3. What are the similarities between the two papers?

-

4. What are the differences?

We obtain the following results:

-

Summarize (Church and Hanks 1990): The paper on psycholinguistics discusses the importance of word association norms in psycholinguistic research, particularly in the area of lexical retrieval. It mentions that subjects respond quicker to words that are highly associated with each other. While noun–noun word associations like “doctor/nurse” are extensively studied, less attention is given to associations among verbs, function words, adjectives, and other non-nouns. The paper concludes by linking the psycholinguistic notion of word association norms to the information-theoretic concept of mutual information, providing a more precise understanding of word associations.

-

Summarize (Lin 1998): The paper discusses a triangulation approach for clustering concordance lines into word senses based on usage rather than intuitive meanings. It highlights the superficiality of defining a word measure for clustering words without explicit preprocessing tools such as Church’s parts program or Hindle’s parser. The paper briefly mentions future work on clustering similar words and reviews related work while summarizing its contributions.

-

Similarities: The similarities between the two papers include a focus on analyzing language data, using distributional patterns of words, evaluating similarity measures for creating a thesaurus, and discussing the importance of smoothing methods in language processing tasks.

-

Differences: The differences between the two thesaurus entries can be measured based on the cosine coefficient of their feature vectors. In this case, the differences are represented in the relationships between the words listed in each entry. For example, in the given entries, “brief (noun)” is associated with words like “differ,” “scream,” “compete,” and “add,” while “inform” and “notify” are related to each other in the second entry. These associations indicate the semantic relationships and differences between the words in each entry.

Like summaries, output from the compare-and-contrast button looks impressive on first impression, but on further reflection, the responses can be long-winded, and sometimes seem to miss the point. It is not clear that the bot “understands” concepts such as similarities and differences. The bot’s discussion of differences is not addressing differences between the two papers, but rather differences within one of the papers among lexical items. We have obtained slightly better responses by rephrasing the prompts in various ways, but we will not discuss such complications here since this tutorial is intended to provide a gentle introduction (as opposed to SOTA-chasing).

That said, as an area chair and senior area chair for many conferences, we have seen many reviews from unmotivated (and unqualified) reviewers. It is not clear that RAG is worse than bad reviews, though there are obvious ethics concerns with using RAG (and unmotivated/unqualified reviewers) for high-stakes decisions with long-term implications for careers.

4. RAG is not magic

4.1 Problem decomposition

Uploading documents just-in-time addresses some of the opportunities raised above, but not all. Consider problem decomposition. Chatbots can easily add two small numbers, but they cannot add two large numbers. Children have the reverse problem. They struggle with memorizing multiplication and addition tables, but they have little trouble decomposing the sum of two big numbers into more manageable tasks. You do not have to teach a child fancy principles like superposition. They just get it.

A popular workaround to this problem is Chain-of-Thought (CoT) Prompting (Wei et al. Reference Wei, Wang, Schuurmans, Bosma, Xia, Chi, Le and Zhou2022). Since chatbots struggle with decomposing larger tasks into more manageable subtasks, the community has discovered that chatbots will be more successful if prompts come already predecomposed. In this way, CoT is seen by most researchers as an advance, but it might be more appropriate to think of it as a workaround. Just as a parent might cut up a child’s dinner to prevent choking incidents, so too, CoT Prompting cuts up prompts into more manageable bite-size chunks.

In short, chatbots are not magic. Chatbots will be more successful if we spoon-feed them. RAG spoon-feeds them by inserting the relevant content into the input before invoking the response, and CoT Prompting spoon feeds them by cutting up prompts into bite-size pieces to prevent hallucinations.

4.2 Opportunities for improvement

There are a couple of opportunities to improve the example above:

-

1. OCR errors: garbage in

$\rightarrow$

garbage out

$\rightarrow$

garbage out -

2. KISS (keep it simple, stupid):

-

(a) It is safer to process fewer files at a time, and

-

(b) To decompose prompts into smaller subtasks (CoT reasoning)

-

Consider OCR errors. RAG tends to process documents in very simple ways, typically as a sequence of chunks, where each chunk is a sequence of no more than 512 subword units. In fact, documents are much more complicated than that. Many documents contain tables, figures, equations, references, footnotes, headers, footers and much more. There are many OCR errors in older pdf files on the ACL Anthology, as illustrated in Table 2.

4.3 OCR errors and spaCy summarizations

It is remarkable how well RAG does on documents with OCR errors. Older technologies such as spaCyFootnote u are less robust to OCR errors, as discussed on our GitHub.Footnote v Table 2 shows that OCR errors are more challenging for spaCy than RAG.

Table 2. OCR errors are more challenging for spaCy than RAG

5. Where is the RAG literature going?

The literature on RAG has been exploding recently. There are 381 references in Zhao et al. (Reference Zhao, Zhang, Yu, Wang, Geng, Fu, Yang, Zhang and Cui2024), of which 168 (44%) were published in 2023 or 2024. Given the volume, veracity (and recency) of the literature on RAG, it is difficult to see where it is going at this early point in time, but the list below suggests that benchmarking is a hot topic. The field is convinced that RAG is an important advance, but the field is still trying to figure out how to measure progress.

-

Surveys: Gao et al. (Reference Gao, Xiong, Gao, Jia, Pan, Bi, Dai, Sun and Wang2023), Zhao et al. (Reference Zhao, Zhang, Yu, Wang, Geng, Fu, Yang, Zhang and Cui2024)Footnote w

-

Tutorials: DSPyFootnote x and some even more gentle introductions to RAG than this paper: LangChain Explained in 13 Minutes,Footnote y Langchain RAG Tutorial,Footnote z Learn RAG From Scratch Footnote aa and Simple Local Rag Footnote ab

-

Benchmarking: CRAG,Footnote ac RGBFootnote ad (Chen et al. Reference Chen, Lin, Han and Sun2024), KILTFootnote ae , Footnote af (Petroni et al. Reference Petroni, Piktus, Fan, Lewis, Yazdani, De Cao, Thorne, Jernite, Karpukhin, Maillard, Plachouras, Rocktäschel and Riedel2021), ARESFootnote ag (Saad-Falcon et al. Reference Saad-Falcon, Khattab, Potts and Zaharia2023), TruLens,Footnote ah CRUD-RAGFootnote ai (Lyu et al. Reference Lyu, Li, Niu, Xiong, Tang, Wang, Wu, Liu, Xu and Chen2024), MIRAGEFootnote aj (Xiong et al. Reference Xiong, Jin, Lu and Zhang2024), EXAM (Sander and Dietz, Reference Sander and Dietz2021), CLAPNQFootnote ak (Rosenthal et al. Reference Rosenthal, Sil, Florian and Roukos2024), Retrieval-QA,Footnote al Hallucination Leaderboard,Footnote am RAGAsFootnote an (Es et al. Reference Es, James, Espinosa Anke and Schockaert2024), HaluEval (Li et al. Reference Li, Cheng, Zhao, Nie and Wen2023), PHDFootnote ao (Yang, Sun, and Wan Reference Yang, Sun and Wan2023), and RAGTruthFootnote ap (Wu et al. Reference Wu, Zhu, Xu, Shum, Niu, Zhong, Song and Zhang2023)

Benchmarks focus the research community on specific opportunities. For example, the RGB Benchmark is designed to address four opportunities in English (and Chinese) datasets:

-

1. Noise robustness (includes timeliness opportunities),

-

2. Negative rejection (guard rails),

-

3. Information integration (answering questions by combining two or more documents), and

-

4. Counterfactual robustness (robustness to factual errors in documents)

CLAPNQ (Cohesive Long-form Answers from Passages in Natural Questions) addresses guard rails plus four new opportunities:

-

1. Faithfulness (answer must be grounded in gold passage),

-

2. Conciseness (answer must be short, excluding information unrelated to gold answer),

-

3. Completeness (answer must cover all information in gold passage)

-

4. Cohesiveness

CLAPNQ is based on the natural questions (NQ) benchmark (Kwiatkowski et al. Reference Kwiatkowski, Palomaki, Redfield, Collins, Parikh, Alberti, Epstein, Polosukhin, Devlin, Lee, Toutanova, Jones, Kelcey, Chang, Dai, Uszkoreit, Le and Petrov2019). Another recent paper on NQ (Cuconasu et al. Reference Cuconasu, Trappolini, Siciliano, Filice, Campagnano, Maarek, Tonellotto and Silvestri2024) shows that performance improves with some conditions and degrades with others. They considered (1) gold context (from benchmark), (2) relevant documents that contain correct answers, (3) related documents that do not contain correct answers, and (4) irrelevant random documents. It may not be surprising that (3) degrades performance, but their main (surprising) result is (4) improves performance.

The benchmarking approach is likely to make progress on many of these topics, though we have concerns about guard rails (and hallucinations). Bots tend to go “off the rails” when they fail to find evidence. It is impressive how much progress the field has made with zero-shot reasoning recently, but even so, reasoning in the absence of evidence is challenging. Failure to find fallacies are hard.

Maybe we can make progress on hallucinations by measuring average scores on benchmarks, or maybe we need a different approach such as theoretical guarantees (from theoretical computer science) or confidence intervals (from statistics). Statistics distinguishes the first moment (expected value) from the second moment (variance). When there is little evidence to support a prediction, confidence intervals are used to prevent hallucinations. Perhaps benchmarks should become more like calibration in statistics. When we have such and such evidence, how likely is the bot to be correct? Guard rails should be deployed when confidence fails to reach significance.

6. Conclusions

This tutorial (and the accompanying GitHub) showed a number of implementations of chatbots and RAG using tools from OpenAI, LangChain, HuggingFace, and VecML. RAG improves over chatbots by adding the ability to upload files just-in-time. Chatbots are trained on massive amounts of public data. By adding the ability to upload files just-in-time, RAG addresses a number of gaps in the chatbot’s knowledge base such as timeliness, references to background knowledge, private data, etc. Gaps in the knowledge base can lead to hallucinations. By filling in many of these gaps just-in-time, RAG reduces the chance of hallucinations.