1 Introduction and motivation

The motivation of the work presented in this paper stems from automatic static cost analysis and verification of logic programs (Debray et al., Reference Debray, Lin and Hermenegildo1990; Debray and Lin, Reference Debray and Lin1993; Debray et al., Reference Debray, Lopez-Garcia, Hermenegildo and Lin1997; Navas et al., Reference Navas, Mera, Lopez-Garcia and Hermenegildo2007; Serrano et al., Reference Serrano, Lopez-Garcia and Hermenegildo2014; Lopez-Garcia et al., Reference Lopez-Garcia, Klemen, Liqat and Hermenegildo2016, Reference Lopez-Garcia, Darmawan, Klemen, Liqat, Bueno and Heremenegildo2018). The goal of such analysis is to infer information about the resources used by programs without actually running them with concrete data and present such information as functions of input data sizes and possibly other (environmental) parameters. We assume a broad concept of resource as a numerical property of the execution of a program, such as number of resolution steps, execution time, energy consumption, memory, number of calls to a predicate, and number of transactions in a database. Estimating in advance the resource usage of computations is useful for a number of applications, such as automatic program optimization, verification of resource-related specifications, detection of performance bugs, helping developers make resource-related design decisions, security applications (e.g., detection of side channel attacks), or blockchain platforms (e.g., smart-contract gas analysis and verification).

The challenge we address originates from the established approach of setting up recurrence relations representing the cost of predicates, parameterized by input data sizes (Wegbreit, Reference Wegbreit1975; Rosendahl, Reference Rosendahl1989; Debray et al., Reference Debray, Lin and Hermenegildo1990; Debray and Lin, Reference Debray and Lin1993; Debray et al., Reference Debray, Lopez-Garcia, Hermenegildo and Lin1997; Navas et al., Reference Navas, Mera, Lopez-Garcia and Hermenegildo2007; Albert et al., Reference Albert, Arenas, Genaim and Puebla2011; Serrano et al., Reference Serrano, Lopez-Garcia and Hermenegildo2014; Lopez-Garcia et al., Reference Lopez-Garcia, Klemen, Liqat and Hermenegildo2016), which are then solved to obtain closed forms of such recurrences (i.e., functions that provide either exact, or upper/lower bounds on resource usage in general). Such approach can infer different classes of functions (e.g., polynomial, factorial, exponential, summation, or logarithmic).

The applicability of these resource analysis techniques strongly depends on the capabilities of the component in charge of solving (or safely approximating) the recurrence relations generated during the analysis, which has become a bottleneck in some systems.

A common approach to automatically solving such recurrence relations consists of using a Computer Algebra System (CAS) or a specialized solver. However, this approach poses several difficulties and limitations. For example, some recurrence relations contain complex expressions or recursive structures that most of the well-known CASs cannot solve, making it necessary to develop ad-hoc techniques to handle such cases. Moreover, some recurrences may not have the form required by such systems because an input data size variable does not decrease, but increases instead. Note that a decreasing-size variable could be implicit in the program, that is it could be a function of a subset of input data sizes (a ranking function), which could be inferred by applying established techniques used in termination analysis (Podelski and Rybalchenko, Reference Podelski and Rybalchenko2004). However, such techniques are usually restricted to linear arithmetic.

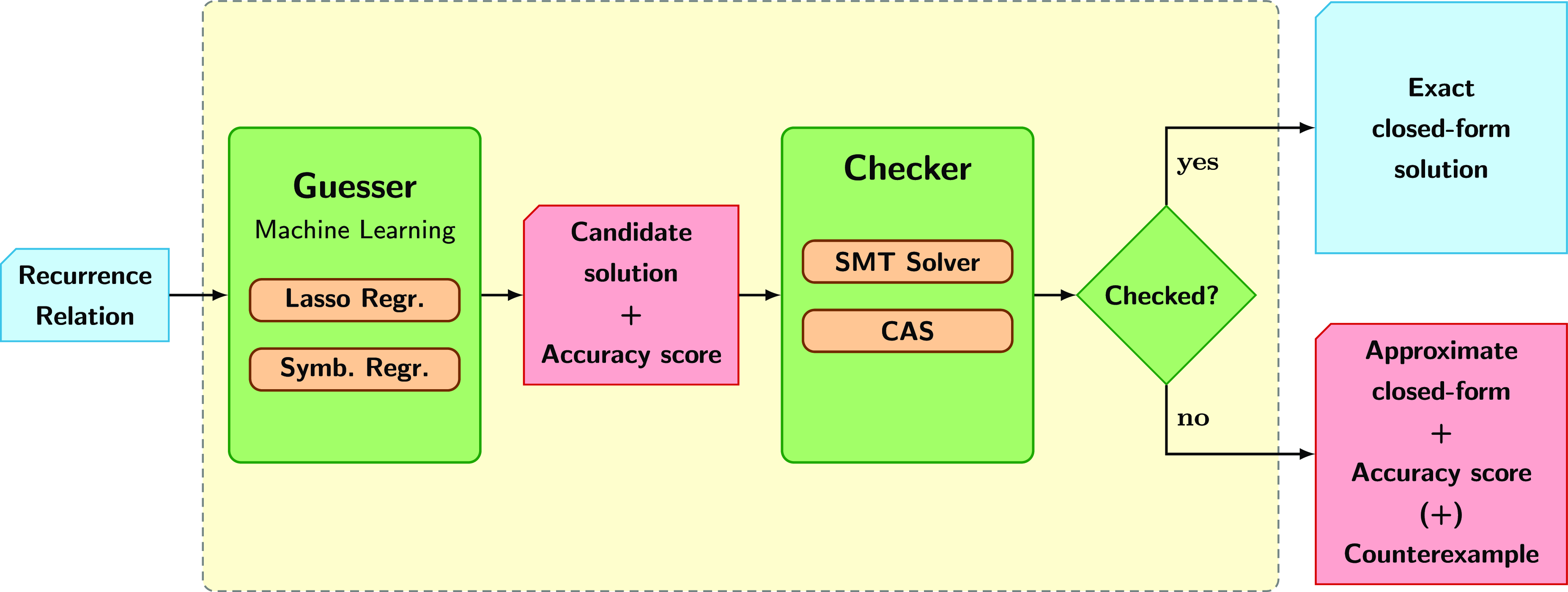

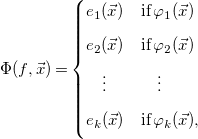

In order to address this challenge we have developed a novel, general method for solving arbitrary, constrained recurrence relations. It is a guess and check approach that uses machine learning techniques for the guess stage and a combination of an SMT-solver and a CAS for the check stage (see Figure 1). To the best of our knowledge, there is no other approach that does this. The resulting closed-form function solutions can be of different kinds, such as polynomial, factorial, exponential or logarithmic.

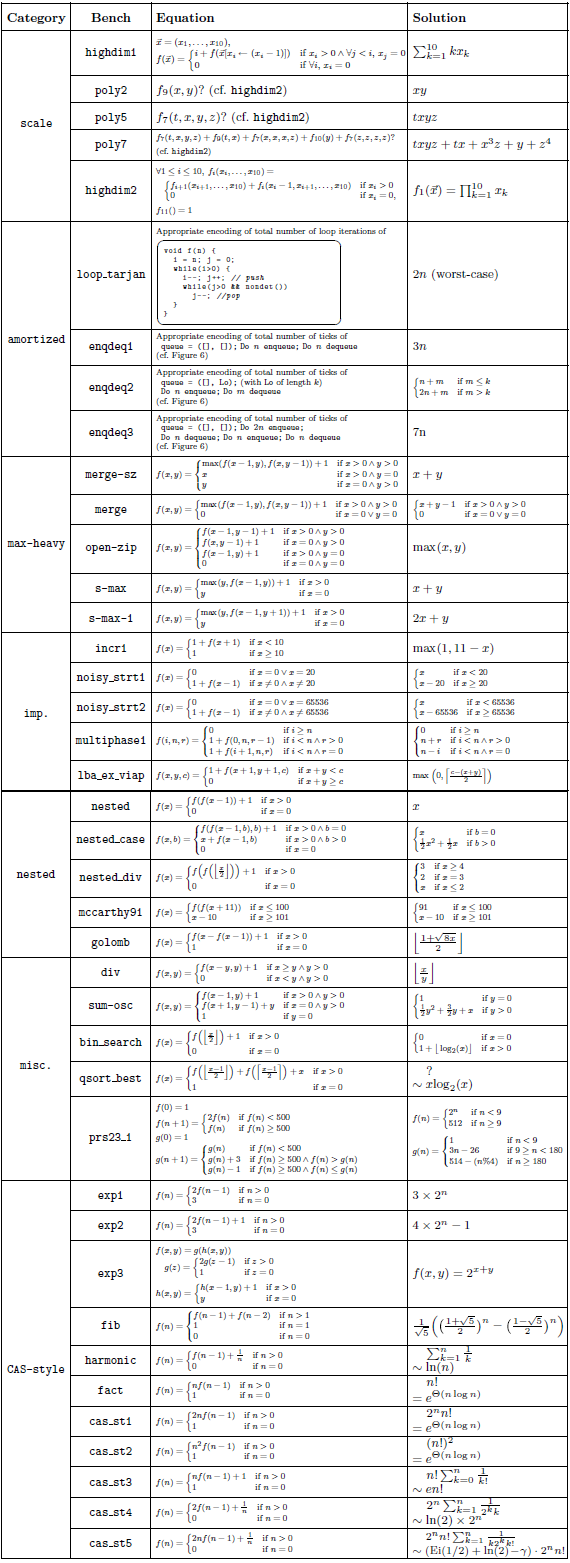

Our method is parametric in the guess procedure used, providing flexibility in the kind of functions that can be inferred, trading off expressivity with efficiency and theoretical guarantees. We present the results of two instantiations, based on sparse linear regression (with non-linear templates) and symbolic regression. Solutions to our equations are first evaluated on finite samples of the input space, and then, the chosen regression method is applied. In addition to obtaining exact solutions, the search and optimization algorithms typical of machine learning methods enable the efficient discovery of good approximate solutions in situations where exact solutions are too complex to find. These approximate solutions are particularly valuable in certain applications of cost analysis, such as granularity control in parallel/distributed computing. Furthermore, these methods allow exploration of model spaces that cannot be handled by complete methods such as classical linear equation solving (Table 1).

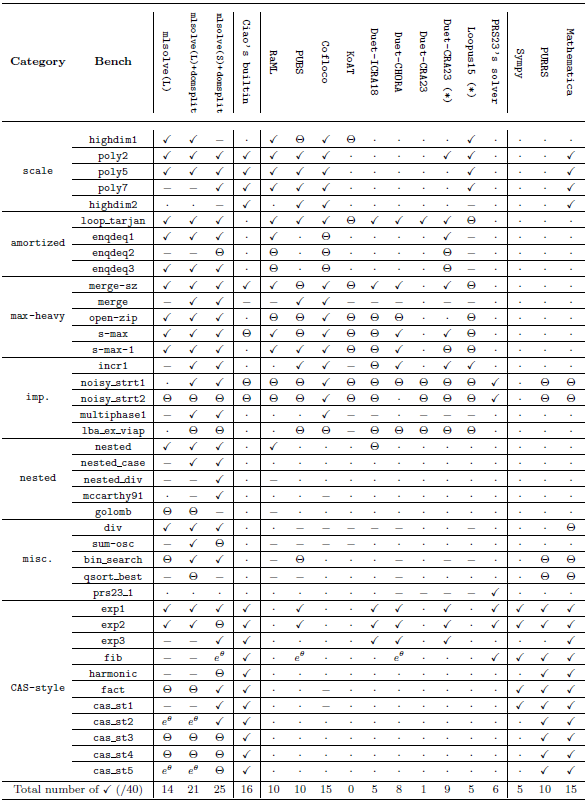

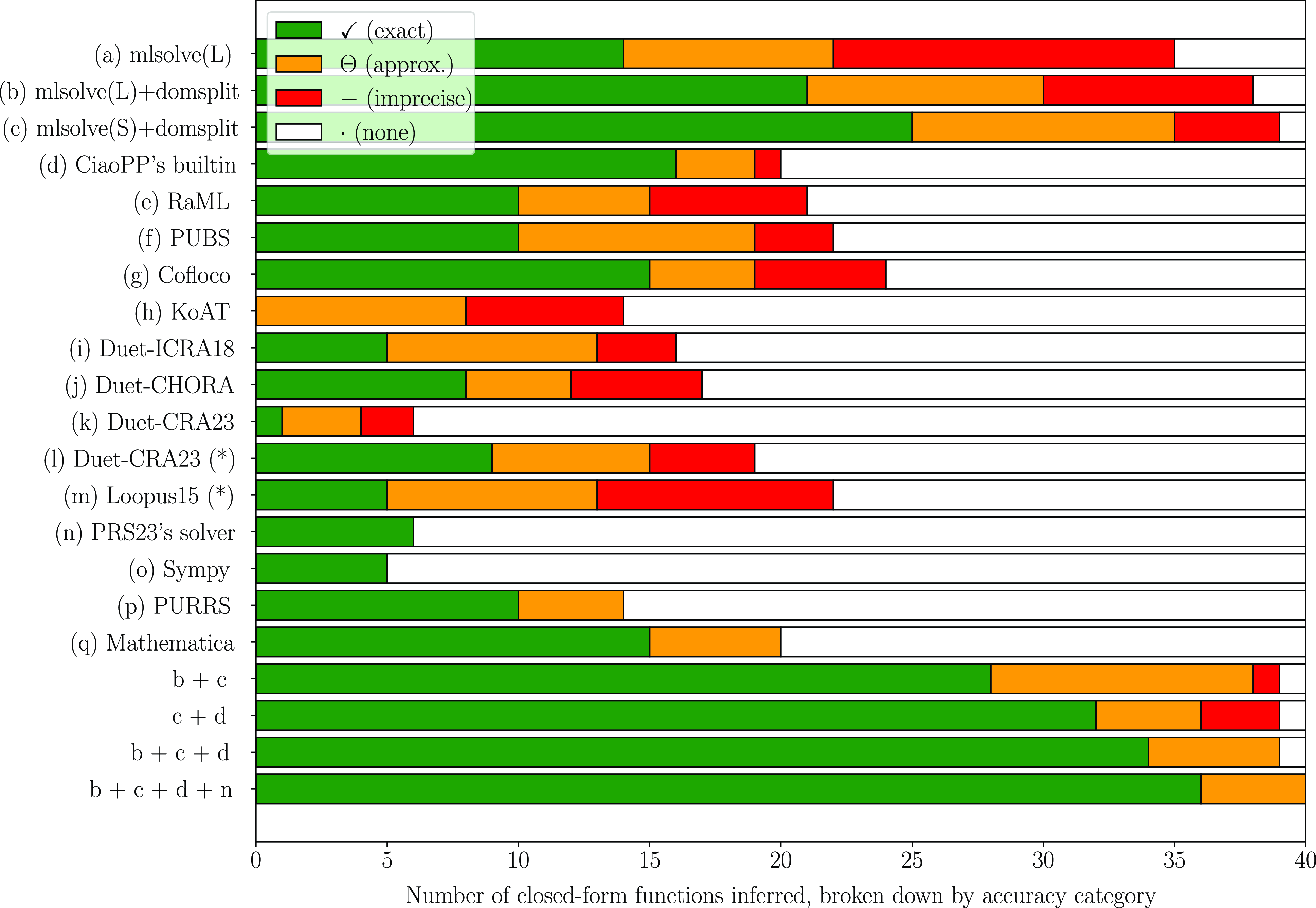

Fig 1. Architecture of our novel machine learning-based recurrence solver.

The rest of this paper is organized as follows. Section 2 gives and overview of our novel guess and check approach. Then, Section 3 provides some background information and preliminary notation. Section 4 presents a more detailed, formal and algorithmic description of our approach. Section 5 describes the use of our approach in the context of static cost analysis of (logic) programs. Section 6 comments on our prototype implementation as well as its experimental evaluation and comparison with other solvers. Finally, Section 7 discusses related work, and Section 8 summarizes some conclusions and lines for future work. Additionally, Appendix B provides complementary data to the evaluation of Section 6 and Table 2.

2 Overview of our approach

We now give an overview of our approach and its two stages already mentioned, illustrated in Figure 1: guess a candidate closed-form function, and check whether such function is actually a solution of the recurrence relation.

Given a recurrence relation for a function

![]() $f(\vec{x})$

, solving it means to find a closed-form expression

$f(\vec{x})$

, solving it means to find a closed-form expression

![]() $\hat{f}(\vec{x})$

defining a function, on the appropriate domain, that satisfies the relation. We say that

$\hat{f}(\vec{x})$

defining a function, on the appropriate domain, that satisfies the relation. We say that

![]() $\hat{f}$

is a closed-form expression whenever it does not contain any subexpression built using

$\hat{f}$

is a closed-form expression whenever it does not contain any subexpression built using

![]() $\hat{f}$

(i.e.,

$\hat{f}$

(i.e.,

![]() $\hat{f}$

is not recursively defined), although we will often additionally aim for expressions built only on elementary arithmetic functions, for example constants, addition, subtraction, multiplication, division, exponential, or perhaps rounding operators and factorial. We will use the following recurrence as an example to illustrate our approach.

$\hat{f}$

is not recursively defined), although we will often additionally aim for expressions built only on elementary arithmetic functions, for example constants, addition, subtraction, multiplication, division, exponential, or perhaps rounding operators and factorial. We will use the following recurrence as an example to illustrate our approach.

2.1 The “guess” stage

As already stated, our method is parametric in the guess procedure utilized, and we instantiate it with both sparse linear and symbolic regression in our experiments. However, for the sake of presentation, we initially focus on the former to provide an overview of our approach. Subsequently, we describe the latter in Section 3. Accordingly, any possible model we can obtain through sparse linear regression (which constitutes a candidate solution) must be an affine combination of a predefined set of terms that we call base functions. In addition, we aim to use only a small number of such base functions. That is, a candidate solution is a function

![]() $\hat{f}(\vec{x})$

of the form

$\hat{f}(\vec{x})$

of the form

where the

![]() $t_i$

are arbitrary functions on

$t_i$

are arbitrary functions on

![]() $\vec{x}$

from a set

$\vec{x}$

from a set

![]() $\mathcal{F}$

of candidate base functions, which are representative of common complexity orders, and the

$\mathcal{F}$

of candidate base functions, which are representative of common complexity orders, and the

![]() $\beta _i$

’s are the coefficients (real numbers) that are estimated by regression, but so that only a few coefficients are nonzero.

$\beta _i$

’s are the coefficients (real numbers) that are estimated by regression, but so that only a few coefficients are nonzero.

For illustration purposes, assume that we use the following set

![]() $\mathcal{F}$

of base functions:

$\mathcal{F}$

of base functions:

where each base function is represented as a lambda expression. Then, the sparse linear regression is performed as follows.

-

1. Generate a training set

$\mathcal{T}$

. First, a set

$\mathcal{T}$

. First, a set

$\mathcal{I} = \{ \vec{x}_1, \ldots ,\vec{x}_{n} \}$

of input values to the recurrence function is randomly generated. Then, starting with an initial

$\mathcal{I} = \{ \vec{x}_1, \ldots ,\vec{x}_{n} \}$

of input values to the recurrence function is randomly generated. Then, starting with an initial

$\mathcal{T} = \emptyset$

, for each input value

$\mathcal{T} = \emptyset$

, for each input value

$\vec{x}_i \in \mathcal{I}$

, a training case

$\vec{x}_i \in \mathcal{I}$

, a training case

$s_i$

is generated and added to

$s_i$

is generated and added to

$\mathcal{T}$

. For any input value

$\mathcal{T}$

. For any input value

$\vec{x} \in \mathcal{I}$

, the corresponding training case

$\vec{x} \in \mathcal{I}$

, the corresponding training case

$s$

is a pair of the formwhere

$s$

is a pair of the formwhere \begin{equation*} s = \big (\langle b_1, \ldots , b_{p} \rangle ,\,r\big ), \end{equation*}

\begin{equation*} s = \big (\langle b_1, \ldots , b_{p} \rangle ,\,r\big ), \end{equation*}

$b_i ={\lbrack \hspace{-0.3ex}\lbrack } t_i{\rbrack \hspace{-0.3ex}\rbrack }_{\vec{x}}$

for

$b_i ={\lbrack \hspace{-0.3ex}\lbrack } t_i{\rbrack \hspace{-0.3ex}\rbrack }_{\vec{x}}$

for

$1 \leq i \leq p$

, and

$1 \leq i \leq p$

, and

${\lbrack \hspace{-0.3ex}\lbrack } t_i{\rbrack \hspace{-0.3ex}\rbrack }_{\vec{x}}$

represents the result (a scalar) of evaluating the base function

${\lbrack \hspace{-0.3ex}\lbrack } t_i{\rbrack \hspace{-0.3ex}\rbrack }_{\vec{x}}$

represents the result (a scalar) of evaluating the base function

$t_i \in \mathcal{F}$

for input value

$t_i \in \mathcal{F}$

for input value

$\vec{x}$

, where

$\vec{x}$

, where

$\mathcal{F}$

is a set of

$\mathcal{F}$

is a set of

$p$

base functions, as already explained. The (dependent) value

$p$

base functions, as already explained. The (dependent) value

$r$

(also a constant number) is the result of evaluating the recurrence

$r$

(also a constant number) is the result of evaluating the recurrence

$f(\vec{x})$

that we want to solve or approximate, in our example, the one defined in Eq. (1). Assuming that there is an

$f(\vec{x})$

that we want to solve or approximate, in our example, the one defined in Eq. (1). Assuming that there is an

$\vec{x} \in \mathcal{I}$

such that

$\vec{x} \in \mathcal{I}$

such that

$\vec{x} = \langle 5 \rangle$

, its corresponding training case

$\vec{x} = \langle 5 \rangle$

, its corresponding training case

$s$

in our example will be

$s$

in our example will be \begin{equation*} \begin {array}{r@{\quad}l@{\quad}l} s &=& \big (\langle {\lbrack \hspace {-0.3ex}\lbrack } x {\rbrack \hspace {-0.3ex}\rbrack }_{5}, {\lbrack \hspace {-0.3ex}\lbrack } x^2 {\rbrack \hspace {-0.3ex}\rbrack }_{5}, {\lbrack \hspace {-0.3ex}\lbrack } x^3 {\rbrack \hspace {-0.3ex}\rbrack }_{5}, {\lbrack \hspace {-0.3ex}\lbrack } \lceil \log _2(x) \rceil {\rbrack \hspace {-0.3ex}\rbrack }_{5}, \ldots \rangle ,\, \mathbf {f(5)}\big ) \\[5pt] &=& \big (\langle 5, 25, 125, 3, \ldots \rangle ,\, \mathbf {5}\big ). \\[5pt] \end {array} \end{equation*}

\begin{equation*} \begin {array}{r@{\quad}l@{\quad}l} s &=& \big (\langle {\lbrack \hspace {-0.3ex}\lbrack } x {\rbrack \hspace {-0.3ex}\rbrack }_{5}, {\lbrack \hspace {-0.3ex}\lbrack } x^2 {\rbrack \hspace {-0.3ex}\rbrack }_{5}, {\lbrack \hspace {-0.3ex}\lbrack } x^3 {\rbrack \hspace {-0.3ex}\rbrack }_{5}, {\lbrack \hspace {-0.3ex}\lbrack } \lceil \log _2(x) \rceil {\rbrack \hspace {-0.3ex}\rbrack }_{5}, \ldots \rangle ,\, \mathbf {f(5)}\big ) \\[5pt] &=& \big (\langle 5, 25, 125, 3, \ldots \rangle ,\, \mathbf {5}\big ). \\[5pt] \end {array} \end{equation*}

-

2. Perform sparse regression using the training set

$\mathcal{T}$

created above in order to find a small subset of base functions that fits it well. We do this in two steps. First, we solve an

$\mathcal{T}$

created above in order to find a small subset of base functions that fits it well. We do this in two steps. First, we solve an

$\ell _1$

-regularized linear regression to learn an estimate of the non-zero coefficients of the base functions. This procedure, also called lasso (Hastie et al., Reference Hastie, Tibshirani and Wainwright2015), was originally introduced to learn interpretable models by selecting a subset of the input features. This happens because the

$\ell _1$

-regularized linear regression to learn an estimate of the non-zero coefficients of the base functions. This procedure, also called lasso (Hastie et al., Reference Hastie, Tibshirani and Wainwright2015), was originally introduced to learn interpretable models by selecting a subset of the input features. This happens because the

$\ell _1$

(sum of absolute values) penalty results in some coefficients becoming exactly zero (unlike an

$\ell _1$

(sum of absolute values) penalty results in some coefficients becoming exactly zero (unlike an

$\ell ^2_2$

penalty, which penalizes the magnitudes of the coefficients but typically results in none of them being zero). This will typically discard most of the base functions in

$\ell ^2_2$

penalty, which penalizes the magnitudes of the coefficients but typically results in none of them being zero). This will typically discard most of the base functions in

$\mathcal{F}$

, and only those that are really needed to approximate our target function will be kept. The level of penalization is controlled by a hyperparameter

$\mathcal{F}$

, and only those that are really needed to approximate our target function will be kept. The level of penalization is controlled by a hyperparameter

$\lambda \ge 0$

. As commonly done in machine learning (Hastie et al., Reference Hastie, Tibshirani and Wainwright2015), the value of

$\lambda \ge 0$

. As commonly done in machine learning (Hastie et al., Reference Hastie, Tibshirani and Wainwright2015), the value of

$\lambda$

that generalizes optimally on unseen (test) inputs is found via cross-validation on a separate validation set (generated randomly in the same way as the training set). The result of this first sparse regression step are coefficients

$\lambda$

that generalizes optimally on unseen (test) inputs is found via cross-validation on a separate validation set (generated randomly in the same way as the training set). The result of this first sparse regression step are coefficients

$\beta _1,\dots ,\beta _{p}$

(typically many of which are zero), and an independent coefficient

$\beta _1,\dots ,\beta _{p}$

(typically many of which are zero), and an independent coefficient

$\beta _0$

. In a second step, we keep only those coefficients (and their corresponding terms

$\beta _0$

. In a second step, we keep only those coefficients (and their corresponding terms

$t_i$

) for which

$t_i$

) for which

$|\beta _i|\geq \epsilon$

(where the value of

$|\beta _i|\geq \epsilon$

(where the value of

$\epsilon \ge 0$

is determined experimentally). We find that this post-processing results in solutions that better estimate the true non-zero coefficients.

$\epsilon \ge 0$

is determined experimentally). We find that this post-processing results in solutions that better estimate the true non-zero coefficients. -

3. Finally, our method performs again a standard linear regression (without

$\ell _1$

regularization) on the training set

$\ell _1$

regularization) on the training set

$\mathcal{T}$

, but using only the base functions selected in the previous step. In our example, with

$\mathcal{T}$

, but using only the base functions selected in the previous step. In our example, with

$\epsilon = 0.05$

, we obtain the model

$\epsilon = 0.05$

, we obtain the model \begin{equation*} \begin {array}{r@{\quad}l} \hat {f}(x) = 1.0 \cdot x. & \end {array} \end{equation*}

\begin{equation*} \begin {array}{r@{\quad}l} \hat {f}(x) = 1.0 \cdot x. & \end {array} \end{equation*}

A final test set

![]() $\mathcal{T}_{\text{test}}$

with input set

$\mathcal{T}_{\text{test}}$

with input set

![]() $\mathcal{I}_{\text{test}}$

is then generated (in the same way as the training set) to obtain a measure

$\mathcal{I}_{\text{test}}$

is then generated (in the same way as the training set) to obtain a measure

![]() $R^2$

of the accuracy of the estimation. In this case, we obtain a value

$R^2$

of the accuracy of the estimation. In this case, we obtain a value

![]() $R^2 = 1$

, which means that the estimation obtained predicts exactly the values for the test set. This does not prove that the

$R^2 = 1$

, which means that the estimation obtained predicts exactly the values for the test set. This does not prove that the

![]() $\hat{f}$

is a solution of the recurrence, but this makes it a candidate solution for verification. If

$\hat{f}$

is a solution of the recurrence, but this makes it a candidate solution for verification. If

![]() $R^2$

were less than

$R^2$

were less than

![]() $1$

, it would mean that the function obtained is not a candidate (exact) solution, but an approximation (not necessarily a bound), as there are values in the test set that cannot be exactly predicted.

$1$

, it would mean that the function obtained is not a candidate (exact) solution, but an approximation (not necessarily a bound), as there are values in the test set that cannot be exactly predicted.

Currently, the set of base functions

![]() $\mathcal{F}$

is fixed; nevertheless, we plan to automatically infer better, more problem-oriented sets by using different heuristics, as we comment on in Section 8. Alternatively, as already mentioned, our guessing method is parametric and can also be instantiated to symbolic regression, which mitigates this limitation by creating new expressions, that is new “templates”, beyond linear combinations of

$\mathcal{F}$

is fixed; nevertheless, we plan to automatically infer better, more problem-oriented sets by using different heuristics, as we comment on in Section 8. Alternatively, as already mentioned, our guessing method is parametric and can also be instantiated to symbolic regression, which mitigates this limitation by creating new expressions, that is new “templates”, beyond linear combinations of

![]() $\mathcal{F}$

. However, only shallow expressions are reachable in acceptable time by symbolic regression’s exploration strategy: timeouts will often occur if solutions can only be expressed by deep expressions.

$\mathcal{F}$

. However, only shallow expressions are reachable in acceptable time by symbolic regression’s exploration strategy: timeouts will often occur if solutions can only be expressed by deep expressions.

2.2 The “check” stage

Once a function that is a candidate solution for the recurrence has been guessed, the second step of our method tries to verify whether such a candidate is actually a solution. To do so, the recurrence is encoded as a first order logic formula where the references to the target function are replaced by the candidate solution whenever possible. Afterwards, we use an SMT-solver to check whether the negation of such formula is satisfiable, in which case we can conclude that the candidate is not a solution for the recurrence. Otherwise, if such formula is unsatisfiable, then the candidate function is an exact solution. Sometimes, it is necessary to consider a precondition for the domain of the recurrence, which is also included in the encoding.

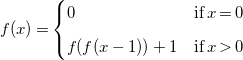

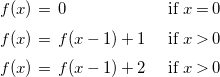

To illustrate this process, Expression (2) below

\begin{equation} \begin{array}{l@{\quad}l} f(x) = \begin{cases} 0 & \textrm{if}\; x = 0 \\[5pt] f(f(x-1)) + 1 & \textrm{if}\; x \gt 0 \end{cases} \end{array} \end{equation}

\begin{equation} \begin{array}{l@{\quad}l} f(x) = \begin{cases} 0 & \textrm{if}\; x = 0 \\[5pt] f(f(x-1)) + 1 & \textrm{if}\; x \gt 0 \end{cases} \end{array} \end{equation}

shows the recurrence we target to solve, for which the candidate solution obtained previously using (sparse) linear regression is

![]() $\hat{f}(x) = x$

(for all

$\hat{f}(x) = x$

(for all

![]() $x\geq 0$

). Now, Expression (3) below shows the encoding of the recurrence as a first order logic formula.

$x\geq 0$

). Now, Expression (3) below shows the encoding of the recurrence as a first order logic formula.

Finally, Expression (4) below shows the negation of such formula, as well as the references to the function name substituted by the definition of the candidate solution. We underline both the subexpressions to be replaced, and the subexpressions resulting from the substitutions.

It is easy to see that Formula (4) is unsatisfiable. Therefore,

![]() $\hat{f}(x) = x$

is an exact solution for

$\hat{f}(x) = x$

is an exact solution for

![]() $f(x)$

in the recurrence defined by Eq. (1).

$f(x)$

in the recurrence defined by Eq. (1).

For some cases where the candidate solution contains transcendental functions, our implementation of the method uses a CAS to perform simplifications and transformations, in order to obtain a formula supported by the SMT-solver. We find this combination of CAS and SMT-solver particularly useful, since it allows us to solve more problems than only using one of these systems in isolation.

3 Preliminaries

3.1 Notations

We use the letters

![]() $x$

,

$x$

,

![]() $y$

,

$y$

,

![]() $z$

to denote variables and

$z$

to denote variables and

![]() $a$

,

$a$

,

![]() $b$

,

$b$

,

![]() $c$

,

$c$

,

![]() $d$

to denote constants and coefficients. We use

$d$

to denote constants and coefficients. We use

![]() $f, g$

to represent functions, and

$f, g$

to represent functions, and

![]() $e, t$

to represent arbitrary expressions. We use

$e, t$

to represent arbitrary expressions. We use

![]() $\varphi$

to represent arbitrary boolean constraints over a set of variables. Sometimes, we also use

$\varphi$

to represent arbitrary boolean constraints over a set of variables. Sometimes, we also use

![]() $\beta$

to represent coefficients obtained with (sparse) linear regression. In all cases, the symbols can be subscribed.

$\beta$

to represent coefficients obtained with (sparse) linear regression. In all cases, the symbols can be subscribed.

![]() $\mathbb{N}$

and

$\mathbb{N}$

and

![]() $\mathbb{R}^+$

denote the sets of non-negative integer and non-negative real numbers, respectively, both including

$\mathbb{R}^+$

denote the sets of non-negative integer and non-negative real numbers, respectively, both including

![]() $0$

. Given two sets

$0$

. Given two sets

![]() $A$

and

$A$

and

![]() $B$

,

$B$

,

![]() $B^A$

is the set of all functions from

$B^A$

is the set of all functions from

![]() $A$

to

$A$

to

![]() $B$

. We use

$B$

. We use

![]() $\vec{x}$

to denote a finite sequence

$\vec{x}$

to denote a finite sequence

![]() $\langle x_1,x_2,\ldots ,x_{p}\rangle$

, for some

$\langle x_1,x_2,\ldots ,x_{p}\rangle$

, for some

![]() $p\gt 0$

. Given a sequence

$p\gt 0$

. Given a sequence

![]() $S$

and an element

$S$

and an element

![]() $x$

,

$x$

,

![]() $\langle x| S\rangle$

is a new sequence with first element

$\langle x| S\rangle$

is a new sequence with first element

![]() $x$

and tail

$x$

and tail

![]() $S$

. We refer to the classical finite-dimensional

$S$

. We refer to the classical finite-dimensional

![]() $1$

-norm (Manhattan norm) and

$1$

-norm (Manhattan norm) and

![]() $2$

-norm (Euclidean norm) by

$2$

-norm (Euclidean norm) by

![]() $\ell _1$

and

$\ell _1$

and

![]() $\ell _2$

, respectively, while

$\ell _2$

, respectively, while

![]() $\ell _0$

denotes the “norm” (which we will call a pseudo-norm) that counts the number of non-zero coordinates of a vector.

$\ell _0$

denotes the “norm” (which we will call a pseudo-norm) that counts the number of non-zero coordinates of a vector.

3.2 Recurrence relations

In our setting, a recurrence relation (or recurrence equation) is just a functional equation on

![]() $f:{\mathcal{D}}\to{\mathbb{R}}$

with

$f:{\mathcal{D}}\to{\mathbb{R}}$

with

![]() ${\mathcal{D}} \subset{\mathbb{N}}^{m}$

,

${\mathcal{D}} \subset{\mathbb{N}}^{m}$

,

![]() $m \geq 1$

, that can be written as

$m \geq 1$

, that can be written as

where

![]() $\Phi :{\mathbb{R}}^{\mathcal{D}}\times{\mathcal{D}}\to{\mathbb{R}}$

is used to define

$\Phi :{\mathbb{R}}^{\mathcal{D}}\times{\mathcal{D}}\to{\mathbb{R}}$

is used to define

![]() $f(\vec{x})$

in terms of other values of

$f(\vec{x})$

in terms of other values of

![]() $f$

. In this paper we consider functions

$f$

. In this paper we consider functions

![]() $f$

that take natural numbers as arguments but output real values, for example, corresponding to costs such as energy consumption, which need not be measured as integer values in practice. Working with real values also makes optimizing for the regression coefficients easier. We restrict ourselves to the domain

$f$

that take natural numbers as arguments but output real values, for example, corresponding to costs such as energy consumption, which need not be measured as integer values in practice. Working with real values also makes optimizing for the regression coefficients easier. We restrict ourselves to the domain

![]() $\mathbb{N}^{m}$

because in our motivating application, cost/size analysis, the input arguments to recurrences represent data sizes, which take non-negative integer values. Technically, our approach may be easily extended to recurrence equations on functions with domain

$\mathbb{N}^{m}$

because in our motivating application, cost/size analysis, the input arguments to recurrences represent data sizes, which take non-negative integer values. Technically, our approach may be easily extended to recurrence equations on functions with domain

![]() $\mathbb{Z}^{m}$

.

$\mathbb{Z}^{m}$

.

A system of recurrence equations is a functional equation on multiple

![]() $f_i:{\mathcal{D}}_i\to{\mathbb{R}}$

, that is

$f_i:{\mathcal{D}}_i\to{\mathbb{R}}$

, that is

![]() $\forall i,\,\forall{\vec{x}\in{\mathcal{D}}_i},\; f_i(\vec{x}) = \Phi _i(f_1,\ldots \!,f_r,\vec{x})$

. Inequations and non-deterministic equations can also be considered by making

$\forall i,\,\forall{\vec{x}\in{\mathcal{D}}_i},\; f_i(\vec{x}) = \Phi _i(f_1,\ldots \!,f_r,\vec{x})$

. Inequations and non-deterministic equations can also be considered by making

![]() $\Phi$

non-deterministic, that is

$\Phi$

non-deterministic, that is

![]() $\Phi :{\mathbb{R}}^{\mathcal{D}}\times{\mathcal{D}}\to{\mathcal{P}}({\mathbb{R}})$

and

$\Phi :{\mathbb{R}}^{\mathcal{D}}\times{\mathcal{D}}\to{\mathcal{P}}({\mathbb{R}})$

and

![]() $\forall{\vec{x}\in{\mathcal{D}}},\;f(\vec{x}) \in \Phi (f,\vec{x})$

. In general, such equations may have any number of solutions. In this work, we focus on deterministic recurrence equations, as we will discuss later.

$\forall{\vec{x}\in{\mathcal{D}}},\;f(\vec{x}) \in \Phi (f,\vec{x})$

. In general, such equations may have any number of solutions. In this work, we focus on deterministic recurrence equations, as we will discuss later.

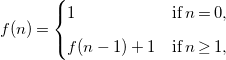

Classical recurrence relations of order

![]() $k$

are recurrence relations where

$k$

are recurrence relations where

![]() ${\mathcal{D}}={\mathbb{N}}$

,

${\mathcal{D}}={\mathbb{N}}$

,

![]() $\Phi (f,n)$

is a constant when

$\Phi (f,n)$

is a constant when

![]() $n \lt k$

and

$n \lt k$

and

![]() $\Phi (f,n)$

depends only on

$\Phi (f,n)$

depends only on

![]() $f(n-k),\ldots \!,f(n-1)$

when

$f(n-k),\ldots \!,f(n-1)$

when

![]() $n \geq k$

. For example, the following recurrence relation of order

$n \geq k$

. For example, the following recurrence relation of order

![]() $k=1$

, where

$k=1$

, where

![]() $\Phi (f,n)= f(n-1) + 1$

when

$\Phi (f,n)= f(n-1) + 1$

when

![]() $n \geq 1$

, and

$n \geq 1$

, and

![]() $\Phi (f,n)= 1$

when

$\Phi (f,n)= 1$

when

![]() $n \lt 1$

(or equivalently,

$n \lt 1$

(or equivalently,

![]() $n = 0$

), that is

$n = 0$

), that is

\begin{equation} f(n) = \begin{cases} 1 & \textrm{if}\; n = 0, \\[5pt] f(n-1) + 1 & \textrm{if}\; n \geq 1, \end{cases} \end{equation}

\begin{equation} f(n) = \begin{cases} 1 & \textrm{if}\; n = 0, \\[5pt] f(n-1) + 1 & \textrm{if}\; n \geq 1, \end{cases} \end{equation}

has the closed-form function

![]() $f(n) = n + 1$

as a solution.

$f(n) = n + 1$

as a solution.

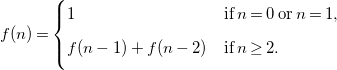

Another example, with order

![]() $k=2$

, is the historical Fibonacci recurrence relation, where

$k=2$

, is the historical Fibonacci recurrence relation, where

![]() $\Phi (f,n)=f(n-1)+f(n-2)$

when

$\Phi (f,n)=f(n-1)+f(n-2)$

when

![]() $n\geq 2$

, and

$n\geq 2$

, and

![]() $\Phi (f,n)= 1$

when

$\Phi (f,n)= 1$

when

![]() $n \lt 2$

:

$n \lt 2$

:

\begin{equation} f(n) = \begin{cases} 1 & \textrm{if}\; n = 0\; \textrm{or}\; n = 1, \\[5pt] f(n-1) + f(n-2) & \textrm{if}\; n \geq 2. \\[5pt] \end{cases} \end{equation}

\begin{equation} f(n) = \begin{cases} 1 & \textrm{if}\; n = 0\; \textrm{or}\; n = 1, \\[5pt] f(n-1) + f(n-2) & \textrm{if}\; n \geq 2. \\[5pt] \end{cases} \end{equation}

![]() $\Phi$

may be viewed as a recursive definition of “the” solution

$\Phi$

may be viewed as a recursive definition of “the” solution

![]() $f$

of the equation, with the caveat that the definition may be non-satisfiable or partial (degrees of freedom may remain). We define below an evaluation strategy

$f$

of the equation, with the caveat that the definition may be non-satisfiable or partial (degrees of freedom may remain). We define below an evaluation strategy

![]() $\mathsf{EvalFun}$

of this recursive definition, which, when it terminates for all inputs, provides a solution to the equation. This will allow us to view recurrence equations as programs that can be evaluated, enabling us to easily generate input-output pairs, on which we can perform regression to attempt to guess a symbolic solution to the equation.

$\mathsf{EvalFun}$

of this recursive definition, which, when it terminates for all inputs, provides a solution to the equation. This will allow us to view recurrence equations as programs that can be evaluated, enabling us to easily generate input-output pairs, on which we can perform regression to attempt to guess a symbolic solution to the equation.

This setting can be generalized to partial solutions to the equation, that is partial functions

![]() $f:{\mathcal{D}}\rightharpoonup{\mathbb{R}}$

such that

$f:{\mathcal{D}}\rightharpoonup{\mathbb{R}}$

such that

![]() $f(\vec{x}) = \Phi (f,\vec{x})$

whenever they are defined at

$f(\vec{x}) = \Phi (f,\vec{x})$

whenever they are defined at

![]() $\vec{x}$

.

$\vec{x}$

.

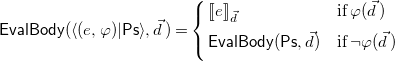

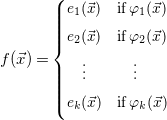

We are interested in constrained recurrence relations, where

![]() $\Phi$

is expressed piecewise as

$\Phi$

is expressed piecewise as

\begin{equation} \Phi (f,\vec{x}) = \begin{cases} e_1(\vec{x}) & \textrm{if}\; \varphi _1(\vec{x}) \\[5pt] e_2(\vec{x}) & \textrm{if}\; \varphi _2(\vec{x}) \\[5pt] \quad \vdots & \quad \vdots \\[5pt] e_k(\vec{x}) & \textrm{if}\; \varphi _k(\vec{x}), \\[5pt] \end{cases} \end{equation}

\begin{equation} \Phi (f,\vec{x}) = \begin{cases} e_1(\vec{x}) & \textrm{if}\; \varphi _1(\vec{x}) \\[5pt] e_2(\vec{x}) & \textrm{if}\; \varphi _2(\vec{x}) \\[5pt] \quad \vdots & \quad \vdots \\[5pt] e_k(\vec{x}) & \textrm{if}\; \varphi _k(\vec{x}), \\[5pt] \end{cases} \end{equation}

with

![]() $f:{\mathcal{D}}\to{\mathbb{R}}$

,

$f:{\mathcal{D}}\to{\mathbb{R}}$

,

![]() ${\mathcal{D}} = \{\vec{x}\,|\,\vec{x} \in{\mathbb{N}}^k \wedge \varphi _{\text{pre}}(\vec{x})\}$

for some boolean constraint

${\mathcal{D}} = \{\vec{x}\,|\,\vec{x} \in{\mathbb{N}}^k \wedge \varphi _{\text{pre}}(\vec{x})\}$

for some boolean constraint

![]() $\varphi _{\text{pre}}$

, called the precondition of

$\varphi _{\text{pre}}$

, called the precondition of

![]() $f$

, and

$f$

, and

![]() $e_i(\vec{x})$

,

$e_i(\vec{x})$

,

![]() $\varphi _i(\vec{x})$

are respectively arbitrary expressions and constraints over both

$\varphi _i(\vec{x})$

are respectively arbitrary expressions and constraints over both

![]() $\vec{x}$

and

$\vec{x}$

and

![]() $f$

. We further require that

$f$

. We further require that

![]() $\Phi$

is always defined, that is,

$\Phi$

is always defined, that is,

![]() $\varphi _{\text{pre}} \models \vee _i\,\varphi _i$

. A case such that

$\varphi _{\text{pre}} \models \vee _i\,\varphi _i$

. A case such that

![]() $e_i,\,\varphi _i$

do not contain any call to

$e_i,\,\varphi _i$

do not contain any call to

![]() $f$

is called a base case, and those that do are called recursive cases. In practice, we are only interested in equations with at least one base case and one recursive case.

$f$

is called a base case, and those that do are called recursive cases. In practice, we are only interested in equations with at least one base case and one recursive case.

A challenging class of recurrences that can be tackled with our approach is that of “nested” recurrences, where recursive cases may contain nested calls

![]() $f(f(\ldots ))$

.

$f(f(\ldots ))$

.

We assume that the

![]() $\varphi _i$

are mutually exclusive, so that

$\varphi _i$

are mutually exclusive, so that

![]() $\Phi$

must be deterministic. This is not an important limitation for our motivating application, cost analysis, and in particular the one performed by the CiaoPP system. Such cost analysis can deal with a large class of non-deterministic programs by translating the resulting non-deterministic recurrences into deterministic ones. For example, assume that the cost analysis generates the following recurrence (which represents an input/output size relation).

$\Phi$

must be deterministic. This is not an important limitation for our motivating application, cost analysis, and in particular the one performed by the CiaoPP system. Such cost analysis can deal with a large class of non-deterministic programs by translating the resulting non-deterministic recurrences into deterministic ones. For example, assume that the cost analysis generates the following recurrence (which represents an input/output size relation).

\begin{equation*} \begin {array}{l@{\quad}l} f(x) \, = \; 0 & \text { if } x = 0 \\[5pt] f(x) \, = \; f(x-1) + 1 & \text { if } x \gt 0 \\[5pt] f(x) \, = \; f(x-1) + 2 & \text { if } x \gt 0 \end {array} \end{equation*}

\begin{equation*} \begin {array}{l@{\quad}l} f(x) \, = \; 0 & \text { if } x = 0 \\[5pt] f(x) \, = \; f(x-1) + 1 & \text { if } x \gt 0 \\[5pt] f(x) \, = \; f(x-1) + 2 & \text { if } x \gt 0 \end {array} \end{equation*}

Then, prior to calling the solver, the recurrence is transformed into the following two deterministic recurrences, the solution of which would be an upper or lower bound on the solution to the original recurrence. For upper bounds:

and for lower bounds:

Our regression technique correctly infers the solution

![]() $f(x) = 2 x$

in the first case, and

$f(x) = 2 x$

in the first case, and

![]() $f(x) = x$

in the second case. We have access to such program transformation, that recovers determinism by looking for worst/best cases, under some hypotheses, outside of the scope of this paper, on the kind of non-determinism and equations that are being dealt with.

$f(x) = x$

in the second case. We have access to such program transformation, that recovers determinism by looking for worst/best cases, under some hypotheses, outside of the scope of this paper, on the kind of non-determinism and equations that are being dealt with.

We now introduce an evaluation strategy of recurrences that allows us to be more consistent with the termination of programs than the more classical semantics consisting only of maximally defined partial solutions. Let

![]() $\mathsf{def}(\Phi )$

denote a sequence

$\mathsf{def}(\Phi )$

denote a sequence

![]() $\langle (e_1(\vec{x}),\varphi _1(\vec{x})),\ldots ,(e_k(\vec{x}),\varphi _k(\vec{x}))\rangle$

defining a (piecewise) constrained recurrence relation

$\langle (e_1(\vec{x}),\varphi _1(\vec{x})),\ldots ,(e_k(\vec{x}),\varphi _k(\vec{x}))\rangle$

defining a (piecewise) constrained recurrence relation

![]() $\Phi$

on

$\Phi$

on

![]() $f$

, where each element of the sequence is a pair representing a case. The evaluation of the equation for a concrete value

$f$

, where each element of the sequence is a pair representing a case. The evaluation of the equation for a concrete value

![]() $\vec{{d}}$

, denoted

$\vec{{d}}$

, denoted

![]() $\mathsf{EvalFun}(f(\vec{{d}}))$

, is defined as follows.

$\mathsf{EvalFun}(f(\vec{{d}}))$

, is defined as follows.

\begin{equation*} \mathsf {EvalBody}\big (\langle (e,\varphi )|\mathsf {Ps}\rangle , \vec {{d}}\,\big ) = \left\{\begin {array}{l@{\quad}l} {\lbrack \hspace {-0.3ex}\lbrack } e {\rbrack \hspace {-0.3ex}\rbrack }_{\vec {{d}}} & \textrm{if}\; \varphi \big (\vec {{d}}\,\big ) \\[5pt] \mathsf {EvalBody}(\mathsf {Ps}, \vec {{d}}) & \textrm{if}\; \neg \varphi \big (\vec {{d}}\,\big ) \\[5pt] \end {array}\right. \end{equation*}

\begin{equation*} \mathsf {EvalBody}\big (\langle (e,\varphi )|\mathsf {Ps}\rangle , \vec {{d}}\,\big ) = \left\{\begin {array}{l@{\quad}l} {\lbrack \hspace {-0.3ex}\lbrack } e {\rbrack \hspace {-0.3ex}\rbrack }_{\vec {{d}}} & \textrm{if}\; \varphi \big (\vec {{d}}\,\big ) \\[5pt] \mathsf {EvalBody}(\mathsf {Ps}, \vec {{d}}) & \textrm{if}\; \neg \varphi \big (\vec {{d}}\,\big ) \\[5pt] \end {array}\right. \end{equation*}

The goal of our regression strategy is to find an expression

![]() $\hat{f}$

representing a function

$\hat{f}$

representing a function

![]() ${\mathcal{D}}\to{\mathbb{R}}$

such that, for all

${\mathcal{D}}\to{\mathbb{R}}$

such that, for all

![]() $\vec{{d}} \in{\mathcal{D}}$

,

$\vec{{d}} \in{\mathcal{D}}$

,

-

•

$\text{ If }\mathsf{EvalFun}(f(\vec{{d}})) \text{ terminates, then } \mathsf{EvalFun}(f(\vec{{d}})) ={\lbrack \hspace{-0.3ex}\lbrack } \hat{f}{\rbrack \hspace{-0.3ex}\rbrack }_{\vec{{d}}}$

, and

$\text{ If }\mathsf{EvalFun}(f(\vec{{d}})) \text{ terminates, then } \mathsf{EvalFun}(f(\vec{{d}})) ={\lbrack \hspace{-0.3ex}\lbrack } \hat{f}{\rbrack \hspace{-0.3ex}\rbrack }_{\vec{{d}}}$

, and -

•

$\hat{f}$

does not contain any recursive call in its definition.

$\hat{f}$

does not contain any recursive call in its definition.

If the above conditions are met, we say that

![]() $\hat{f}$

is a closed form for

$\hat{f}$

is a closed form for

![]() $f$

. In the case of (sparse) linear regression, we are looking for expressions

$f$

. In the case of (sparse) linear regression, we are looking for expressions

where

![]() $\beta _i \in{\mathbb{R}}$

, and

$\beta _i \in{\mathbb{R}}$

, and

![]() $t_i$

are expressions over

$t_i$

are expressions over

![]() $\vec{x}$

, not including recursive references to

$\vec{x}$

, not including recursive references to

![]() $\hat{f}$

.

$\hat{f}$

.

For example, consider the following Prolog program which does not terminate for a call q(X) where X is bound to a positive integer.

The following recurrence relation for its cost (in resolution steps) can be set up.

A CAS will give the closed form

![]() $\mathtt{C}_{\mathtt{q}}(x) = 1 - x$

for such recurrence, however, the cost analysis should give

$\mathtt{C}_{\mathtt{q}}(x) = 1 - x$

for such recurrence, however, the cost analysis should give

![]() $\mathtt{C}_{\mathtt{q}}(x) = \infty$

for

$\mathtt{C}_{\mathtt{q}}(x) = \infty$

for

![]() $x\gt 0$

.

$x\gt 0$

.

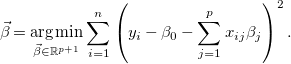

3.3 (Sparse) linear regression

Linear regression (Hastie et al., Reference Hastie, Tibshirani and Friedman2009) is a statistical technique used to approximate the linear relationship between a number of explanatory (input) variables and a dependent (output) variable. Given a vector of (input) real-valued variables

![]() $X = (X_1,\ldots ,X_{p})^T$

, we predict the output variable

$X = (X_1,\ldots ,X_{p})^T$

, we predict the output variable

![]() $Y$

via the model

$Y$

via the model

which is defined through the vector of coefficients

![]() $\vec{\beta } = (\beta _0,\ldots ,\beta _{p})^T \in \mathbb{R}^{p+1}$

. Such coefficients are estimated from a set of observations

$\vec{\beta } = (\beta _0,\ldots ,\beta _{p})^T \in \mathbb{R}^{p+1}$

. Such coefficients are estimated from a set of observations

![]() $\{(\langle x_{i1},\ldots ,x_{ip}\rangle ,y_i)\}_{i=1}^{n}$

so as to minimize a loss function, most commonly the sum of squares

$\{(\langle x_{i1},\ldots ,x_{ip}\rangle ,y_i)\}_{i=1}^{n}$

so as to minimize a loss function, most commonly the sum of squares

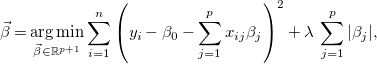

\begin{equation} \vec{\beta } = \underset{\vec{\beta } \in \mathbb{R}^{p+1}}{\textrm{arg min}}\sum _{i=1}^{n}\left(y_i - \beta _0 - \sum _{j=1}^{p} x_{ij}\beta _j\right)^2. \end{equation}

\begin{equation} \vec{\beta } = \underset{\vec{\beta } \in \mathbb{R}^{p+1}}{\textrm{arg min}}\sum _{i=1}^{n}\left(y_i - \beta _0 - \sum _{j=1}^{p} x_{ij}\beta _j\right)^2. \end{equation}

Sometimes (as is our case) some of the input variables are not relevant to explain the output, but the above least-squares estimate will almost always assign nonzero values to all the coefficients. In order to force the estimate to make exactly zero the coefficients of irrelevant variables (hence removing them and doing feature selection), various techniques have been proposed. The most widely used one is the lasso (Hastie et al., Reference Hastie, Tibshirani and Wainwright2015), which adds an

![]() $\ell _1$

penalty on

$\ell _1$

penalty on

![]() $\vec{\beta }$

(i.e., the sum of absolute values of each coefficient) to Expression 11, obtaining

$\vec{\beta }$

(i.e., the sum of absolute values of each coefficient) to Expression 11, obtaining

\begin{equation} \vec{\beta } = \underset{\vec{\beta } \in \mathbb{R}^{p+1}}{\textrm{arg min}}\sum _{i=1}^{n}\left(y_i - \beta _0 - \sum _{j=1}^{p} x_{ij}\beta _j\right)^2 + \lambda \,\sum _{j=1}^{p}|\beta _j|, \end{equation}

\begin{equation} \vec{\beta } = \underset{\vec{\beta } \in \mathbb{R}^{p+1}}{\textrm{arg min}}\sum _{i=1}^{n}\left(y_i - \beta _0 - \sum _{j=1}^{p} x_{ij}\beta _j\right)^2 + \lambda \,\sum _{j=1}^{p}|\beta _j|, \end{equation}

where

![]() $\lambda \ge 0$

is a hyperparameter that determines the level of penalization: the greater

$\lambda \ge 0$

is a hyperparameter that determines the level of penalization: the greater

![]() $\lambda$

, the greater the number of coefficients that are exactly equal to

$\lambda$

, the greater the number of coefficients that are exactly equal to

![]() $0$

. The lasso has two advantages over other feature selection techniques for linear regression. First, it defines a convex problem whose unique solution can be efficiently computed even for datasets where either of

$0$

. The lasso has two advantages over other feature selection techniques for linear regression. First, it defines a convex problem whose unique solution can be efficiently computed even for datasets where either of

![]() $n$

or

$n$

or

![]() $p$

are large (almost as efficiently as a standard linear regression). Second, it has been shown in practice to be very good at estimating the relevant variables. In fact, the regression problem we would really like to solve is that of Expression 11 but subject to the constraint that

$p$

are large (almost as efficiently as a standard linear regression). Second, it has been shown in practice to be very good at estimating the relevant variables. In fact, the regression problem we would really like to solve is that of Expression 11 but subject to the constraint that

![]() $\left \lVert (\beta _1,\ldots ,\beta _{p})^T\right \rVert _0 \le K$

, that is, that at most

$\left \lVert (\beta _1,\ldots ,\beta _{p})^T\right \rVert _0 \le K$

, that is, that at most

![]() $K$

of the

$K$

of the

![]() $p$

coefficients are non-zero, an

$p$

coefficients are non-zero, an

![]() $\ell _0$

-constrained problem. Unfortunately, this is an NP-hard problem. However, replacing the

$\ell _0$

-constrained problem. Unfortunately, this is an NP-hard problem. However, replacing the

![]() $\ell _0$

pseudo-norm with the

$\ell _0$

pseudo-norm with the

![]() $\ell _1$

-norm has been observed to produce good approximations in practice (Hastie et al., Reference Hastie, Tibshirani and Wainwright2015).

$\ell _1$

-norm has been observed to produce good approximations in practice (Hastie et al., Reference Hastie, Tibshirani and Wainwright2015).

3.4 Symbolic regression

Symbolic regression is a regression task in which the model space consists of all mathematical expressions on a chosen signature, that is expression trees with variables or constants for leaves and operators for internal nodes. To avoid overfitting, objective functions are designed to penalize model complexity, in a similar fashion to sparse linear regression techniques. This task is much more ambitious: rather than searching over the vector space spanned by a relatively small set of base functions as we do in sparse linear regression, the search space is enormous, considering any possible expression which results from applying a set of mathematical operators in any combination. For this reason, heuristics such as evolutionary algorithms are typically used to search this space, but runtime still remains a challenge for deep expressions.

The approach presented in this paper is parametric in the regression technique used, and we instantiate it with both (sparse) linear and symbolic regression in our experiments (Section 6). We will see that symbolic regression addresses some of the limitations of (sparse) linear regression, at the expense of time. Our implementation is based on the symbolic regression library PySR (Cranmer, Reference Cranmer2023), a multi-population evolutionary algorithm paired with classical optimization algorithms to select constants. In order to avoid overfitting and guide the search, PySR penalizes model complexity, defined as a sum of individual node costs for nodes in the expression tree, where a predefined cost is assigned to each possible type of node.

In our application context, (sparse) linear regression searches for a solution to the recurrence equation in the affine space spanned by candidate functions, that is

![]() $\hat{f} = \beta _0 + \sum _i \beta _i t_i$

with

$\hat{f} = \beta _0 + \sum _i \beta _i t_i$

with

![]() $t_i\in \mathcal{F}$

, while symbolic regression may choose any expression built on the chosen operators. For example, consider equation exp3 of Table 1, whose solution is

$t_i\in \mathcal{F}$

, while symbolic regression may choose any expression built on the chosen operators. For example, consider equation exp3 of Table 1, whose solution is

![]() $(x,y)\mapsto 2^{x+y}$

. This solution cannot be expressed using (sparse) linear regression and the set of candidates

$(x,y)\mapsto 2^{x+y}$

. This solution cannot be expressed using (sparse) linear regression and the set of candidates

![]() $\{\lambda xy.x,\lambda xy.y,\lambda xy.x^2,\lambda xy.y^2,\lambda xy.2^x,\lambda xy.2^y\}$

, but can be found with symbolic regression and operators

$\{\lambda xy.x,\lambda xy.y,\lambda xy.x^2,\lambda xy.y^2,\lambda xy.2^x,\lambda xy.2^y\}$

, but can be found with symbolic regression and operators

![]() $\{+(\cdot ,\cdot ), \times (\cdot ,\cdot ), 2^{(\cdot )}\}$

.

$\{+(\cdot ,\cdot ), \times (\cdot ,\cdot ), 2^{(\cdot )}\}$

.

4 Algorithmic description of the approach

In this section we describe our approach for generating and checking candidate solutions for recurrences that arise in resource analysis.

4.1 A first version

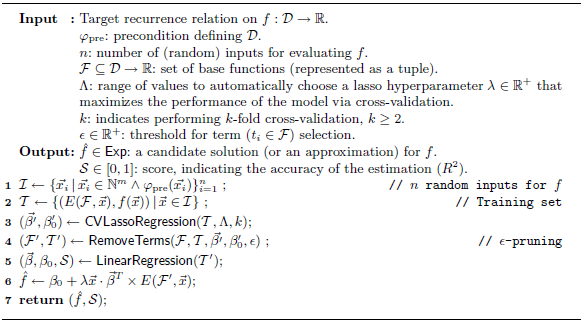

Algorithms1 and 2 correspond to the guesser and checker components, respectively, which are illustrated in Figure 1. For the sake of presentation, Algorithm1 describes the instantiation of the guess method based on lasso linear regression. It receives a recurrence relation for a function

![]() $f$

to solve, a set of base functions, and a threshold to decide when to discard irrelevant terms. The output is a closed-form expression

$f$

to solve, a set of base functions, and a threshold to decide when to discard irrelevant terms. The output is a closed-form expression

![]() $\hat{f}$

for

$\hat{f}$

for

![]() $f$

, and a score

$f$

, and a score

![]() $\mathcal{S}$

that reflects the accuracy of the approximation, in the range

$\mathcal{S}$

that reflects the accuracy of the approximation, in the range

![]() $[0,1]$

. If

$[0,1]$

. If

![]() $\mathcal{S} \approx 1$

, the approximation can be considered a candidate solution. Otherwise,

$\mathcal{S} \approx 1$

, the approximation can be considered a candidate solution. Otherwise,

![]() $\hat{f}$

is an approximation (not necessarily a bound) of the solution.

$\hat{f}$

is an approximation (not necessarily a bound) of the solution.

Algorithm 1. Candidate Solution Generation (Guesser).

4.1.1 Generate

In line 1 we start by generating a set

![]() $\mathcal{I}$

of random inputs for

$\mathcal{I}$

of random inputs for

![]() $f$

. Each input

$f$

. Each input

![]() $\vec{x_i}$

is an

$\vec{x_i}$

is an

![]() $m$

-tuple verifying precondition

$m$

-tuple verifying precondition

![]() $\varphi _{\text{pre}}$

, where

$\varphi _{\text{pre}}$

, where

![]() $m$

is the number of arguments of

$m$

is the number of arguments of

![]() $f$

. In line 2 we produce the training set

$f$

. In line 2 we produce the training set

![]() $\mathcal{T}$

. The (explanatory) inputs are generated by evaluating the base functions in

$\mathcal{T}$

. The (explanatory) inputs are generated by evaluating the base functions in

![]() $\mathcal{F} = \langle t_1, t_2, \ldots , t_{p} \rangle$

with each tuple

$\mathcal{F} = \langle t_1, t_2, \ldots , t_{p} \rangle$

with each tuple

![]() $\vec{x} \in \mathcal{I}$

. This is done by using function

$\vec{x} \in \mathcal{I}$

. This is done by using function

![]() $E$

, defined as follows.

$E$

, defined as follows.

We also evaluate the recurrence equation for input

![]() $\vec{x}$

, and add the observed output

$\vec{x}$

, and add the observed output

![]() $f(\vec{x})$

as the last element in the corresponding training case.

$f(\vec{x})$

as the last element in the corresponding training case.

4.1.2 Regress

In line 3 we generate a first linear model by applying function

![]() $\mathsf{CVLassoRegression}$

to the generated training set, which performs a sparse linear regression with lasso regularization. As already mentioned, lasso regularization requires a hyperparameter

$\mathsf{CVLassoRegression}$

to the generated training set, which performs a sparse linear regression with lasso regularization. As already mentioned, lasso regularization requires a hyperparameter

![]() $\lambda$

that determines the level of penalization for the coefficients. Instead of using a single value for

$\lambda$

that determines the level of penalization for the coefficients. Instead of using a single value for

![]() $\lambda$

,

$\lambda$

,

![]() $\mathsf{CVLassoRegression}$

uses a range of possible values, applying cross-validation on top of the linear regression to automatically select the best value for that parameter, from the given range. In particular,

$\mathsf{CVLassoRegression}$

uses a range of possible values, applying cross-validation on top of the linear regression to automatically select the best value for that parameter, from the given range. In particular,

![]() $k$

-fold cross-validation is performed, which means that the training set is split into

$k$

-fold cross-validation is performed, which means that the training set is split into

![]() $k$

parts or folds. Then, each fold is taken as the validation set, training the model with the remaining

$k$

parts or folds. Then, each fold is taken as the validation set, training the model with the remaining

![]() $k-1$

folds. Finally, the performance measure reported is the average of the values computed in the

$k-1$

folds. Finally, the performance measure reported is the average of the values computed in the

![]() $k$

iterations. The result of this function is the vector of coefficients

$k$

iterations. The result of this function is the vector of coefficients

![]() $\vec{\beta ^{\prime }}$

, together with the intercept

$\vec{\beta ^{\prime }}$

, together with the intercept

![]() $\beta _0^{\prime }$

. These coefficients are used in line 4 to decide which base functions are discarded before the last regression step. Note that

$\beta _0^{\prime }$

. These coefficients are used in line 4 to decide which base functions are discarded before the last regression step. Note that

![]() $\mathsf{RemoveTerms}$

removes the base functions from

$\mathsf{RemoveTerms}$

removes the base functions from

![]() $\mathcal{F}$

together with their corresponding output values from the training set

$\mathcal{F}$

together with their corresponding output values from the training set

![]() $\mathcal{T}$

, returning the new set of base functions

$\mathcal{T}$

, returning the new set of base functions

![]() $\mathcal{F}^{\prime }$

and its corresponding training set

$\mathcal{F}^{\prime }$

and its corresponding training set

![]() $\mathcal{T}^{\prime }$

. In line 5, standard linear regression (without regularization or cross-validation) is applied, obtaining the final coefficients

$\mathcal{T}^{\prime }$

. In line 5, standard linear regression (without regularization or cross-validation) is applied, obtaining the final coefficients

![]() $\vec{\beta }$

and

$\vec{\beta }$

and

![]() $\beta _0$

. Additionally, from this step we also obtain the score

$\beta _0$

. Additionally, from this step we also obtain the score

![]() $\mathcal{S}$

of the resulting model. In line 6 we set up the resulting closed-form expression, given as a function on the variables in

$\mathcal{S}$

of the resulting model. In line 6 we set up the resulting closed-form expression, given as a function on the variables in

![]() $\vec{x}$

. Note that we use the function

$\vec{x}$

. Note that we use the function

![]() $E$

to bind the variables in the base functions to the arguments of the closed-form expression. Finally, the closed-form expression and its corresponding score are returned as the result of the algorithm.

$E$

to bind the variables in the base functions to the arguments of the closed-form expression. Finally, the closed-form expression and its corresponding score are returned as the result of the algorithm.

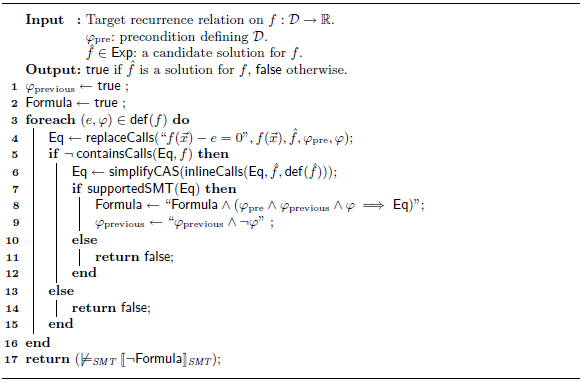

4.1.3 Verify

Algorithm 2. Solution Checking (Checker).

Algorithm2 mainly relies on an SMT-solver and a CAS. Concretely, given the constrained recurrence relation on

![]() $f:{\mathcal{D}} \to{\mathbb{R}}$

defined by

$f:{\mathcal{D}} \to{\mathbb{R}}$

defined by

\begin{equation*} f(\vec {x}) = \small \begin {cases} e_1(\vec {x}) & \textrm{if}\; \varphi _1(\vec {x}) \\[5pt] e_2(\vec {x}) & \textrm{if}\; \varphi _2(\vec {x}) \\[5pt] \quad \vdots & \quad \vdots \\[5pt] e_k(\vec {x}) & \textrm{if}\; \varphi _k(\vec {x}) \\[5pt] \end {cases} \end{equation*}

\begin{equation*} f(\vec {x}) = \small \begin {cases} e_1(\vec {x}) & \textrm{if}\; \varphi _1(\vec {x}) \\[5pt] e_2(\vec {x}) & \textrm{if}\; \varphi _2(\vec {x}) \\[5pt] \quad \vdots & \quad \vdots \\[5pt] e_k(\vec {x}) & \textrm{if}\; \varphi _k(\vec {x}) \\[5pt] \end {cases} \end{equation*}

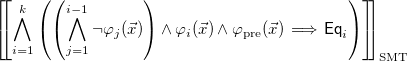

our algorithm constructs the logic formula

\begin{equation} \left[\hspace{-0.7ex}\left[ \bigwedge \limits _{i=1}^k \left (\left (\bigwedge \limits _{j=1}^{i-1} \neg \varphi _j(\vec{x}) \right ) \wedge \varphi _i(\vec{x}) \wedge \varphi _{\text{pre}}(\vec{x}) \implies \mathsf{Eq}_i \right )\right]\hspace{-0.7ex}\right]_{\text{SMT}} \end{equation}

\begin{equation} \left[\hspace{-0.7ex}\left[ \bigwedge \limits _{i=1}^k \left (\left (\bigwedge \limits _{j=1}^{i-1} \neg \varphi _j(\vec{x}) \right ) \wedge \varphi _i(\vec{x}) \wedge \varphi _{\text{pre}}(\vec{x}) \implies \mathsf{Eq}_i \right )\right]\hspace{-0.7ex}\right]_{\text{SMT}} \end{equation}

where operation

![]() ${\lbrack \hspace{-0.3ex}\lbrack } e{\rbrack \hspace{-0.3ex}\rbrack }_{\text{SMT}}$

is the translation of any expression

${\lbrack \hspace{-0.3ex}\lbrack } e{\rbrack \hspace{-0.3ex}\rbrack }_{\text{SMT}}$

is the translation of any expression

![]() $e$

to an SMT-LIB expression, and

$e$

to an SMT-LIB expression, and

![]() $\mathsf{Eq}_i$

is the result of replacing in

$\mathsf{Eq}_i$

is the result of replacing in

![]() $f(\vec{x}) = e_i(\vec{x})$

each occurrence of

$f(\vec{x}) = e_i(\vec{x})$

each occurrence of

![]() $f$

(a priori written as an uninterpreted function) by the definition of the candidate solution

$f$

(a priori written as an uninterpreted function) by the definition of the candidate solution

![]() $\hat{f}$

(by using

$\hat{f}$

(by using

![]() $\mathsf{replaceCalls}$

in line 4), and performing a simplification (by using

$\mathsf{replaceCalls}$

in line 4), and performing a simplification (by using

![]() $\mathsf{simplifyCAS}$

in line 6). The function

$\mathsf{simplifyCAS}$

in line 6). The function

![]() $\mathsf{replaceCalls}(\mathsf{expr},f(\vec{x}^\prime ),\hat{f},\varphi _{\text{pre}},\varphi )$

replaces every subexpression in

$\mathsf{replaceCalls}(\mathsf{expr},f(\vec{x}^\prime ),\hat{f},\varphi _{\text{pre}},\varphi )$

replaces every subexpression in

![]() $\mathsf{expr}$

of the form

$\mathsf{expr}$

of the form

![]() $f(\vec{x}^\prime )$

by

$f(\vec{x}^\prime )$

by

![]() $\hat{f}(\vec{x}^\prime )$

, if

$\hat{f}(\vec{x}^\prime )$

, if

![]() $\varphi \implies \varphi _{\text{pre}}(\vec{x}^\prime )$

. When checking Formula 13, all variables are assumed to be integers. As an implementation detail, to work with Z3 and access a large arithmetic language (including rational division), variables are initially declared as reals, but integrality constraints

$\varphi \implies \varphi _{\text{pre}}(\vec{x}^\prime )$

. When checking Formula 13, all variables are assumed to be integers. As an implementation detail, to work with Z3 and access a large arithmetic language (including rational division), variables are initially declared as reals, but integrality constraints

![]() $\large (\bigwedge _i \vec{x}_i = \lfloor \vec{x}_i \rfloor \large )$

are added to the final formula. Note that this encoding is consistent with the evaluation (

$\large (\bigwedge _i \vec{x}_i = \lfloor \vec{x}_i \rfloor \large )$

are added to the final formula. Note that this encoding is consistent with the evaluation (

![]() $\mathsf{EvalFun}$

) described in Section 3.

$\mathsf{EvalFun}$

) described in Section 3.

The goal of

![]() $\mathsf{simplifyCAS}$

is to obtain (sub)expressions supported by the SMT-solver. This typically allows simplifying away differences of transcendental functions, such as exponentials and logarithms, for which SMT-solvers like Z3 currently have extremely limited support, often dealing with them as if they were uninterpreted functions. For example, log2 is simply absent from SMT-LIB, although it can be modelled with exponentials, and reasoning abilities with exponentials are very limited: while Z3 can check that

$\mathsf{simplifyCAS}$

is to obtain (sub)expressions supported by the SMT-solver. This typically allows simplifying away differences of transcendental functions, such as exponentials and logarithms, for which SMT-solvers like Z3 currently have extremely limited support, often dealing with them as if they were uninterpreted functions. For example, log2 is simply absent from SMT-LIB, although it can be modelled with exponentials, and reasoning abilities with exponentials are very limited: while Z3 can check that

![]() $2^x - 2^x = 0$

, it cannot check (without further help) that

$2^x - 2^x = 0$

, it cannot check (without further help) that

![]() $2^{x+1}-2\cdot 2^x=0$

. Using

$2^{x+1}-2\cdot 2^x=0$

. Using

![]() $\mathsf{simplifyCAS}$

, the latter is replaced by

$\mathsf{simplifyCAS}$

, the latter is replaced by

![]() $0=0$

which is immediately checked.

$0=0$

which is immediately checked.

Finally, the algorithm asks the SMT-solver for models of the negated formula (line 17). If no model exists, then it returns

![]() $\mathsf{true}$

, concluding that

$\mathsf{true}$

, concluding that

![]() $\hat{f}$

is an exact solution to the recurrence, that is

$\hat{f}$

is an exact solution to the recurrence, that is

![]() $\hat{f}(\vec{x}) = f(\vec{x})$

for any input

$\hat{f}(\vec{x}) = f(\vec{x})$

for any input

![]() $\vec{x} \in{\mathcal{D}}$

such that

$\vec{x} \in{\mathcal{D}}$

such that

![]() $\mathsf{EvalFun}(f(\vec{x}))$

terminates. Otherwise, it returns

$\mathsf{EvalFun}(f(\vec{x}))$

terminates. Otherwise, it returns

![]() $\mathsf{false}$

. Note that, if we are not able to express

$\mathsf{false}$

. Note that, if we are not able to express

![]() $\hat{f}$

using the syntax supported by the SMT-solver, even after performing the simplification by

$\hat{f}$

using the syntax supported by the SMT-solver, even after performing the simplification by

![]() $\mathsf{simplifyCAS}$

, then the algorithm finishes returning

$\mathsf{simplifyCAS}$

, then the algorithm finishes returning

![]() $\mathsf{false}$

.

$\mathsf{false}$

.

4.2 Extension: Domain splitting

For the sake of exposition, we have only presented a basic combination of Algorithms1 and 2, but the core approach generate, regress and verify can be generalized to obtain more accurate results. Beyond the use of different regression algorithms (replacing lines 3–6 in Algorithm1, for example, with symbolic regression as presented in Section 3), we can also decide to apply Algorithm1 separately on multiple subdomains of

![]() $\mathcal{D}$

: we call this strategy domain splitting. In other words, rather than trying to directly infer a solution on

$\mathcal{D}$

: we call this strategy domain splitting. In other words, rather than trying to directly infer a solution on

![]() $\mathcal{D}$

by regression, we do the following.

$\mathcal{D}$

by regression, we do the following.

-

• Partition

$\mathcal{D}$

into subdomains

$\mathcal{D}$

into subdomains

${\mathcal{D}}_i$

.

${\mathcal{D}}_i$

. -

• Apply (any variant of) Algorithm1 on each

${\mathcal{D}}_i$

, that is generate input-output pairs, and regress to obtain candidates

${\mathcal{D}}_i$

, that is generate input-output pairs, and regress to obtain candidates

$\hat{f}_i:{\mathcal{D}}_i\to \mathbb{R}$

.

$\hat{f}_i:{\mathcal{D}}_i\to \mathbb{R}$

. -

• This gives a global candidate

$\hat{f} : x \mapsto \{\hat{f}_i \text{ if } x\in{\mathcal{D}}_i\}$

, that we can then attempt to verify (Algorithm2).

$\hat{f} : x \mapsto \{\hat{f}_i \text{ if } x\in{\mathcal{D}}_i\}$

, that we can then attempt to verify (Algorithm2).

A motivation for doing so is the observation that it is easier for regression algorithms to discover expressions of “low (model) complexity”, that is expressions of low depth on common operators for symbolic regression and affine combinations of common functions for (sparse) linear regression (note that model complexity of the expression is not to be confused with the computational complexity of the algorithm whose cost is represented by the expression, that is, the asymptotic rate of growth of the corresponding function). We also observe that our equations often admit solutions that can be described piecewise with low (model) complexity expressions, where equivalent global expressions are somehow artificial: they have high (model) complexity, making them hard or impossible to find. In other words, equations with “piecewise simple” solutions are more common than those with “globally simple” solutions, and the domain splitting strategy is able to decrease complexity for this common case by reduction to the more favorable one.

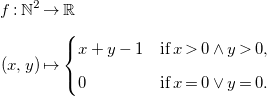

For example, consider equation merge in Table 1, representing the cost of a merge function in a merge-sort algorithm. Its solution is

\begin{align*} f : \mathbb{N}^2 &\to \mathbb{R}\\[5pt] (x,y) &\mapsto \begin{cases} x+y-1 & \textrm{if}\; x\gt 0 \land y\gt 0, \\[5pt] 0 & \textrm{if}\; x=0 \lor y=0. \end{cases} \end{align*}

\begin{align*} f : \mathbb{N}^2 &\to \mathbb{R}\\[5pt] (x,y) &\mapsto \begin{cases} x+y-1 & \textrm{if}\; x\gt 0 \land y\gt 0, \\[5pt] 0 & \textrm{if}\; x=0 \lor y=0. \end{cases} \end{align*}

It admits a piecewise affine description, but no simple global description as a polynomial, although we can admittedly write it as

![]() $\min (x,1)\times \min (y,1)\times (x+y-1)$

, which is unreachable by (sparse) linear regression for any reasonable set of candidates, and of challenging depth for symbolic regression.

$\min (x,1)\times \min (y,1)\times (x+y-1)$

, which is unreachable by (sparse) linear regression for any reasonable set of candidates, and of challenging depth for symbolic regression.

To implement domain splitting, there are two challenges to face: (1) partition the domain into appropriate subdomains that make regression more accurate and (2) generate random input points inside each subdomain.

In our implementation (Section 6), we test a very simple version of this idea, were generation is handled by a trivial rejection strategy, and where the domain is partitioned using the conditions

![]() $\varphi _i(\vec{x})\wedge \bigwedge _{j=1}^{i-1}\neg \varphi _j(\vec{x})$

that define each clause of the input equation.

$\varphi _i(\vec{x})\wedge \bigwedge _{j=1}^{i-1}\neg \varphi _j(\vec{x})$

that define each clause of the input equation.

In other words, our splitting strategy is purely syntactic. More advanced strategies could learn better subdomains, for example by using a generalization of model trees, and are left for future work. However, as we will see in Section 6, our naive strategy already provides good improvements compared to Section 4.1. Intuitively, this seems to indicate that a large part of the “disjunctive behavior” of solutions originates from the “input disjunctivity” in the equation (which, of course, can be found in other places than the

![]() $\varphi _i$

, but this is a reasonable first approximation).

$\varphi _i$

, but this is a reasonable first approximation).

Finally, for the verification step, we can simply construct an SMT formula corresponding to the equation as in Section 4.1, using an expression defined by cases for

![]() $\hat{f}$

, for example with the ite construction in SMT-LIB.

$\hat{f}$

, for example with the ite construction in SMT-LIB.

5 Our approach in the context of static cost analysis of (logic) programs

In this section, we describe how our approach could be used in the context of the motivating application, Static Cost Analysis. Although it is general, and could be integrated into any cost analysis system based on recurrence solving, we illustrate its use in the context of the CiaoPP system. Using a logic program, we first illustrate how CiaoPP sets up recurrence relations representing the sizes of output arguments of predicates and the cost of such predicates. Then, we show how our novel approach is used to solve a recurrence relation that cannot be solved by CiaoPP.

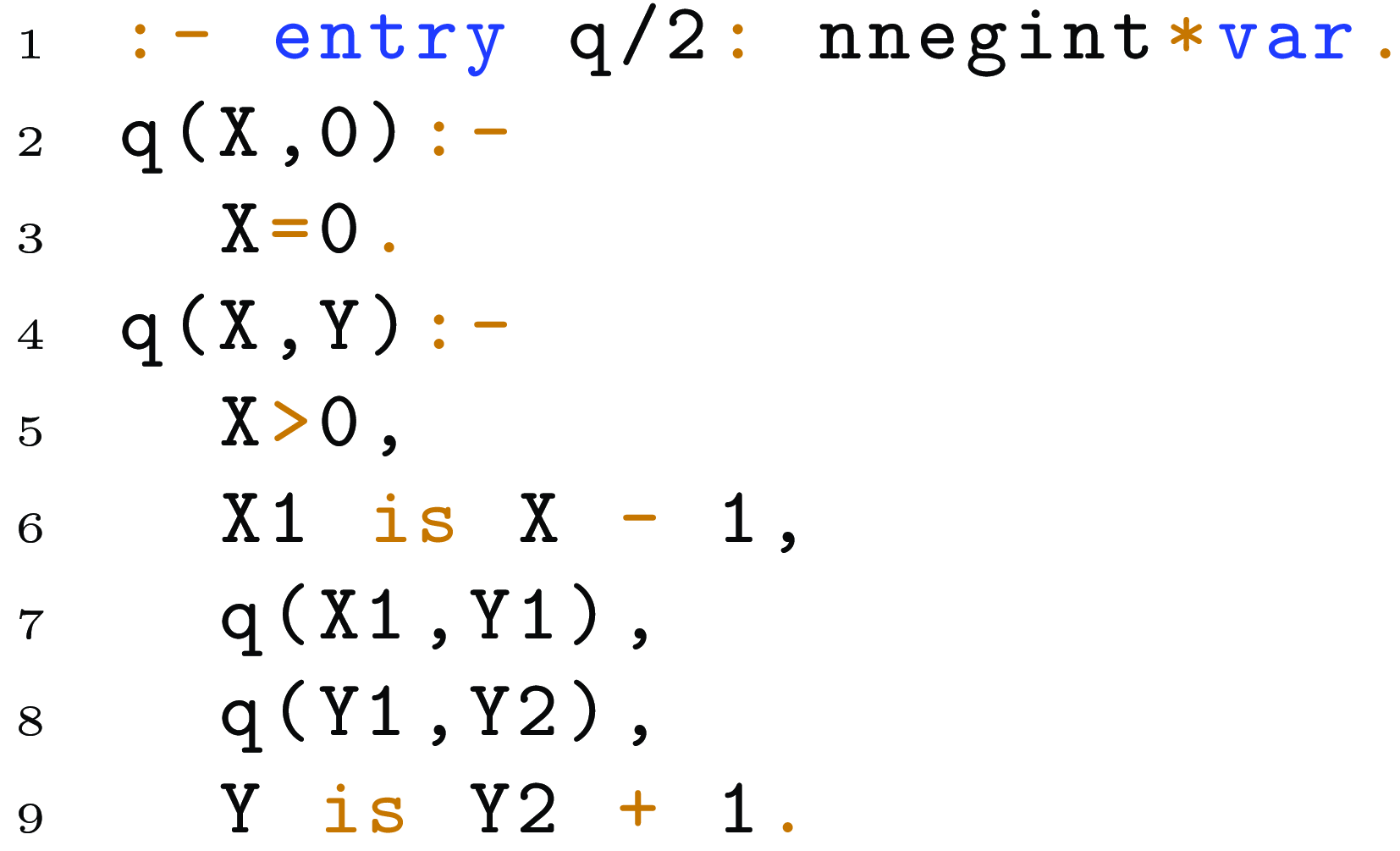

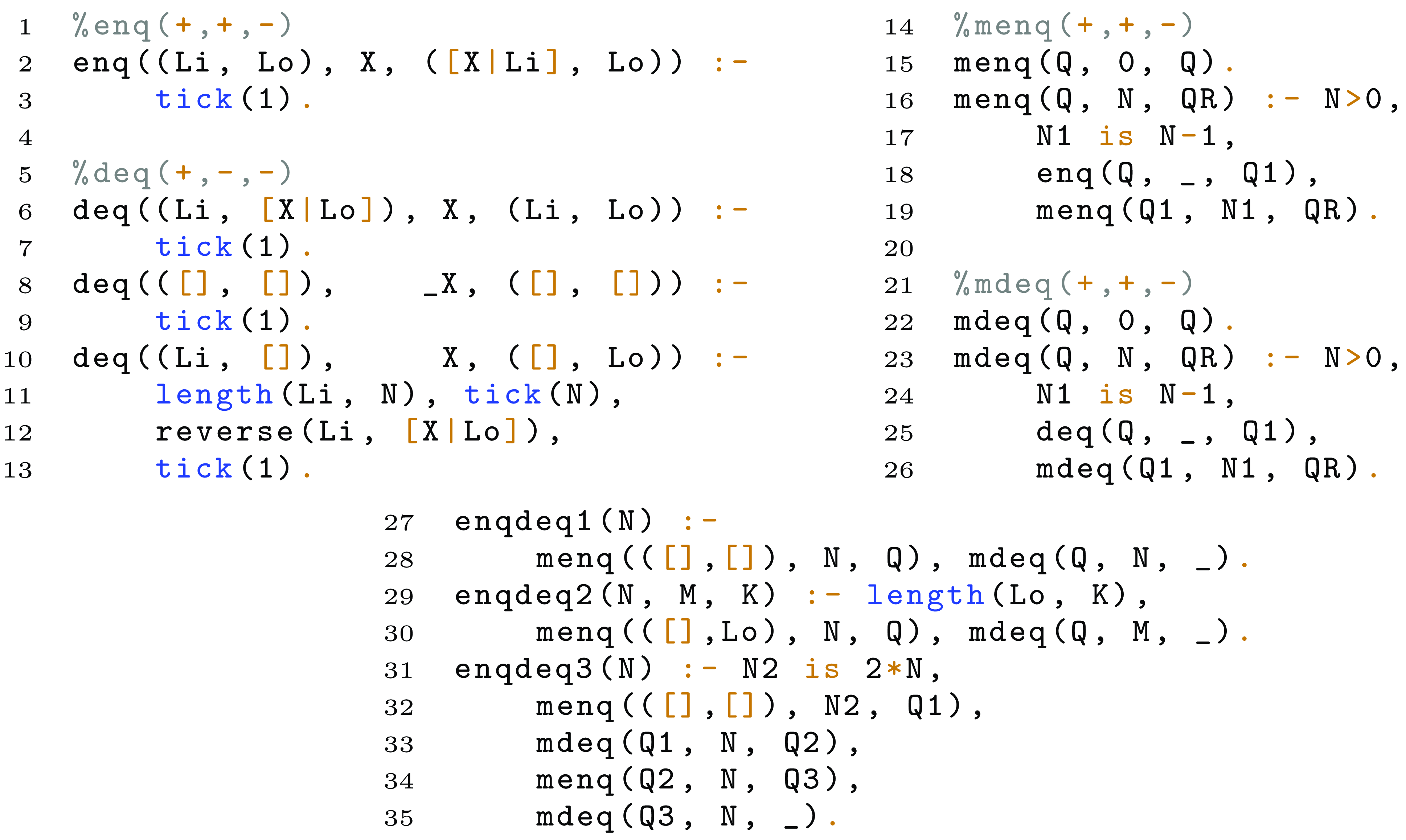

Example 1. Consider predicate q/2 in Figure 2, and calls to it where the first argument is bound to a non-negative integer and the second one is a free variable.Footnote 1 Upon success of these calls, the second argument is bound to an non-negative integer too. Such calling mode, where the first argument is input and the second one is output, is automatically inferred by CiaoPP (see Hermenegildo et al. (Reference Hermenegildo, Puebla, Bueno and Garcia2005) and its references).

Fig 2. A program with a nested recursion.

The CiaoPP system first infers size relations for the different arguments of predicates, using a rich set of size metrics (see Navas et al. (Reference Navas, Mera, Lopez-Garcia and Hermenegildo2007); Serrano et al. (Reference Serrano, Lopez-Garcia and Hermenegildo2014) for details). Assume that the size metric used in this example, for the numeric argument X is the actual value of it (denoted int(X)). The system will try to infer a function

![]() $\mathtt{S}_{\mathtt{q}}(x)$

that gives the size of the output argument of q/2 (the second one), as a function of the size (

$\mathtt{S}_{\mathtt{q}}(x)$

that gives the size of the output argument of q/2 (the second one), as a function of the size (

![]() $x$

) of the input argument (the first one). For this purpose, the following size relations for

$x$

) of the input argument (the first one). For this purpose, the following size relations for

![]() $\mathtt{ S}_{\mathtt{q}}(x)$

are automatically set up (the same as the recurrence in Eq. (1) used in Section 2 as example).

$\mathtt{ S}_{\mathtt{q}}(x)$

are automatically set up (the same as the recurrence in Eq. (1) used in Section 2 as example).

The first and second recurrence correspond to the first and second clauses respectively (i.e., base and recursive cases). Once recurrence relations (either representing the size of terms, as the ones above, or the computational cost of predicates, as the ones that we will see later) have been set up, a solving process is started.

Nested recurrences, as the one that arise in this example, cannot be handled by most state-of-the-art recurrence solvers. In particular, the modular solver used by CiaoPP fails to find a closed-form function for the recurrence relation above. In contrast, the novel approach that we propose obtains the closed form

![]() $\hat{\mathtt{S}}_{\mathtt{q}}(x) = x$

, which is an exact solution of such recurrence (as shown in Section 2).

$\hat{\mathtt{S}}_{\mathtt{q}}(x) = x$

, which is an exact solution of such recurrence (as shown in Section 2).

Once the size relations have been inferred, CiaoPP uses them to infer the computational cost of a call to q/2. For simplicity, assume that in this example, such cost is given in terms of the number of resolution steps, as a function of the size of the input argument, but note that CiaoPP’s cost analysis is parametric with respect to resources, which can be defined by the user by means of a rich assertion language, so that it can infer a wide range of resources, besides resolution steps. Also for simplicity, we assume that all builtin predicates, such as arithmetic/comparison operators have zero cost (in practice there is a “trust” assertion for each builtin that specifies its cost as if it had been inferred by the analysis).

In order to infer the cost of a call to q/2, represented as

![]() $\mathtt{C}_{\mathtt{q}}(x)$

, CiaoPP sets up the following cost relations, by using the size relations inferred previously.

$\mathtt{C}_{\mathtt{q}}(x)$

, CiaoPP sets up the following cost relations, by using the size relations inferred previously.

We can see that the cost of the second recursive call to predicate p/2 depends on the size of the output argument of the first recursive call to such predicate, which is given by function

![]() $\mathtt{S}_{\mathtt{q}}(x)$

, whose closed form

$\mathtt{S}_{\mathtt{q}}(x)$

, whose closed form

![]() $\mathtt{S}_{\mathtt{q}}(x) = x$

is computed by our approach, as already explained. Plugging such closed form into the recurrence relation above, it can now be solved by CiaoPP, obtaining

$\mathtt{S}_{\mathtt{q}}(x) = x$

is computed by our approach, as already explained. Plugging such closed form into the recurrence relation above, it can now be solved by CiaoPP, obtaining

![]() $\mathtt{C}_{\mathtt{q}}(x) = 2^{x+1} - 1$

.

$\mathtt{C}_{\mathtt{q}}(x) = 2^{x+1} - 1$

.

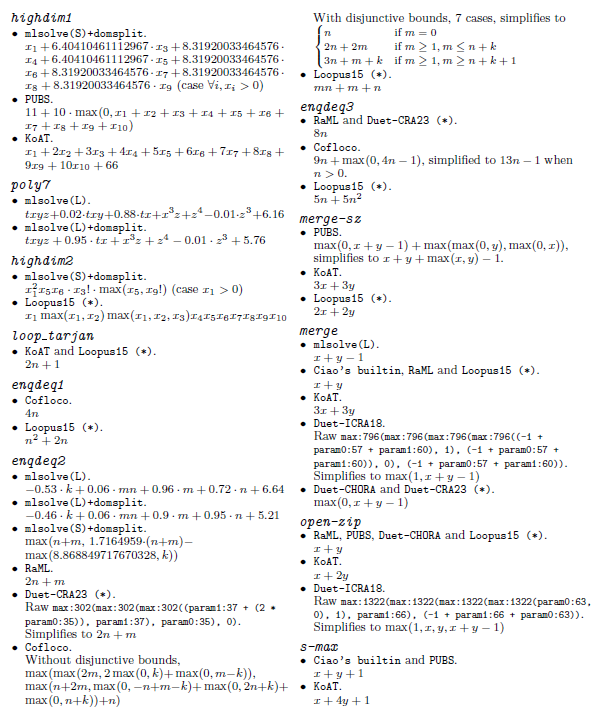

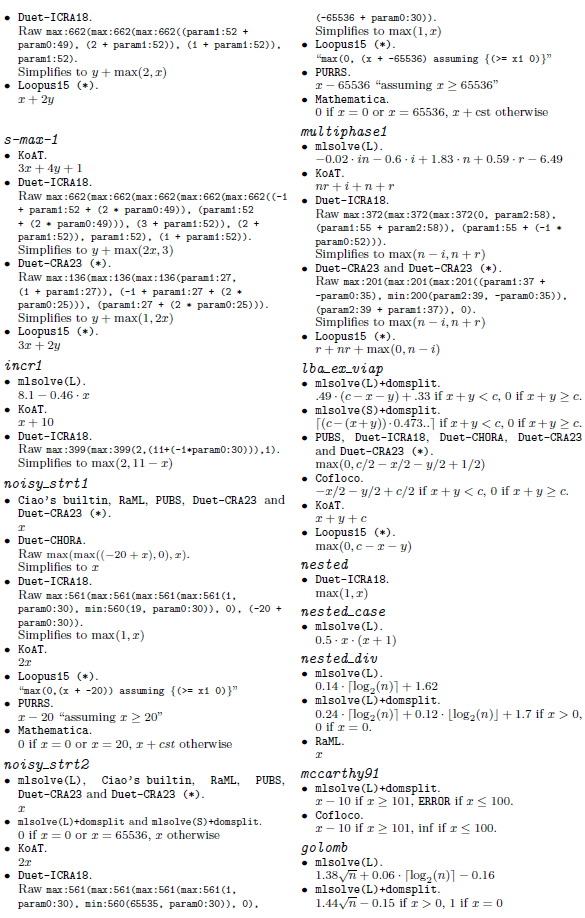

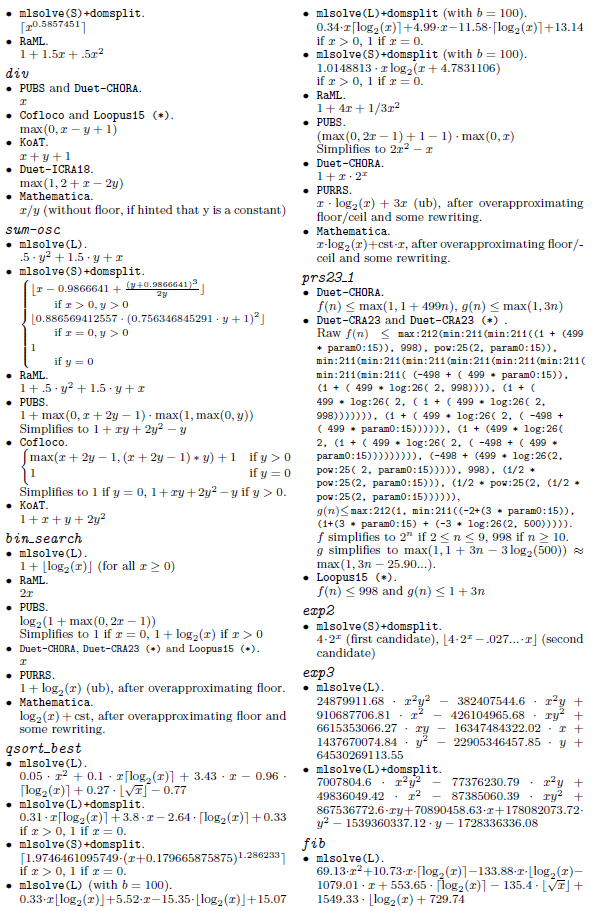

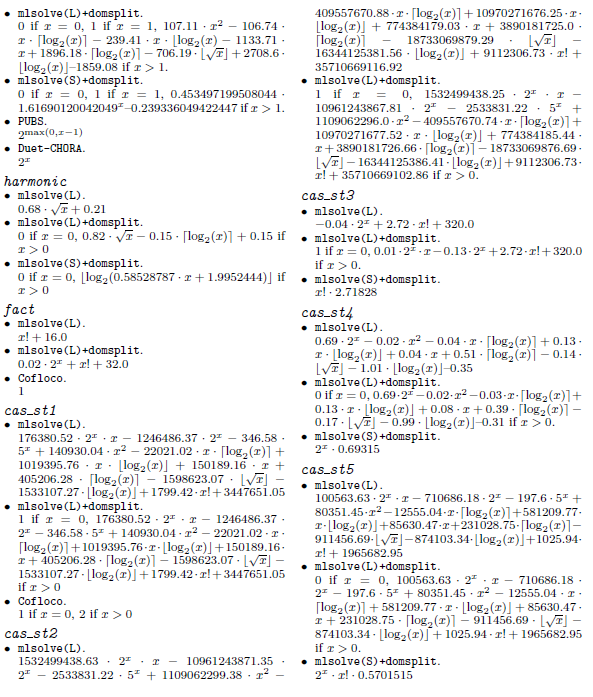

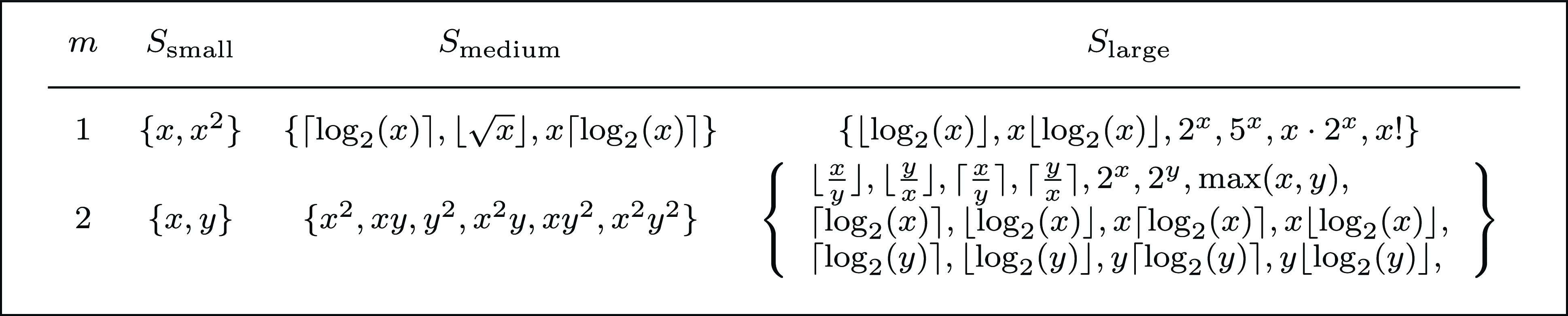

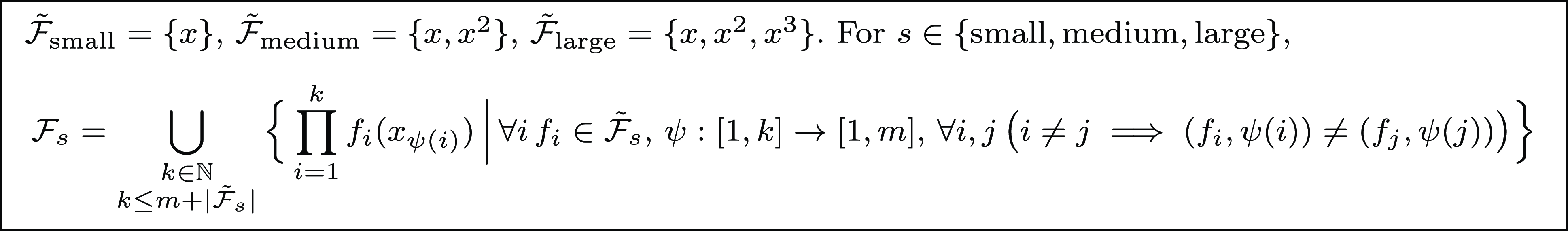

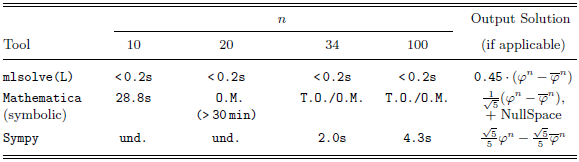

6 Implementation and experimental evaluation