1. Introduction

The Rayleigh–Taylor instability (RTI) occurs when a perturbed interface between two fluids of differing density undergoes an acceleration directed from the more dense to the less dense fluid. This instability was first described by Rayleigh (1883) and was later expanded upon by Taylor (1950). The RTI is relevant in a number of natural contexts such as atmospheric inversions and cloud physics (Schultz et al. 2006), astrophysics (Arnett et al. 1989; Cabot & Cook 2006) and art (Zhou 2017a). The RTI also appears in a number of industrial contexts such as combustion (Biferale et al. 2011) and inertial confinement fusion (ICF) (Lindl et al. 2014). These are only a few of the areas in which the RTI appears, and a more thorough summary is presented by Zhou (2017a,b).

The RTI is frequently considered in the context of the mixing of two components, but in many practical applications, including ICF, the RTI can occur in the presence of more than two components. Prior investigations of the RTI with multiple fluid layers have been conducted through experiment (Jacobs & Dalziel 2005; Adkins et al. 2017; Suchandra & Ranjan 2023), simulation (Youngs 2009, 2017; Morgan 2022a,b) and modelling (Mikaelian 1983, 1990, 1996, 2005; Parhi & Nath 1991; Yang & Zhang 1993). Three-layer RTI configurations differ in several ways from the more commonly considered two-layer case. Of particular note, the addition of a third layer results in two interfaces, and the stability of each interface is controlled by the densities of the fluids on either side of it. Jacobs & Dalziel (2005) considered the special case where the upper and lower layers consist of the same fluid with a density $\rho_1$, and the middle layer consists of a fluid with density $\rho_2$ such that $\rho_1 > \rho_2$. This results in an unstable interface between the upper and middle layers, and a stable interface between the middle and lower layers. They then showed that a self-similar three-layer RTI mixing layer in such a configuration grows linearly with time, as opposed to the quadratic growth predicted in the two-layer case. Jacobs & Dalziel found the slope, $\gamma$, of this linear growth to be $\gamma = 0.49\pm 0.03$ in their experiments with an Atwood number of $0.002$. Suchandra & Ranjan (2023) found $\gamma = 0.41\pm 0.01$ for their three-layer experiments with Atwood numbers of $0.3$ and $0.6$.
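One way to distinguish the linear three-layer growth from the quadratic two-layer growth in a mixing-layer width history $h(t)$ is to fit the exponent $\theta$ in $h \propto t^\theta$ on logarithmic axes. The sketch below is a minimal illustration under stated assumptions: the histories are synthetic power laws in arbitrary units (not data from either experiment), the virtual time origin is assumed to be zero, and the normalization used to define $\gamma$ in the experiments is not reproduced.

```python
import numpy as np

def growth_exponent(t, h):
    """Fit h = c * t**theta by least squares in log-log space.

    Returns (theta, c). Assumes t > 0 and h > 0, i.e. any virtual time
    origin has already been subtracted from t.
    """
    slope, intercept = np.polyfit(np.log(t), np.log(h), 1)
    return slope, np.exp(intercept)

# Hypothetical, noise-free synthetic histories (arbitrary units):
# a two-layer-like quadratic history and a three-layer-like linear one.
t = np.linspace(1.0, 10.0, 50)
h_two_layer = 0.05 * t**2
h_three_layer = 0.4 * t

theta2, _ = growth_exponent(t, h_two_layer)
theta3, c3 = growth_exponent(t, h_three_layer)
print(f"two-layer-like exponent:   {theta2:.3f}")
print(f"three-layer-like exponent: {theta3:.3f} (prefactor {c3:.3f})")
```

With real width histories the fitted exponent is sensitive to the assumed time origin and to the early, non-self-similar transient, so in practice the fit would be restricted to the late-time range.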

In ICF applications, Rayleigh–Taylor (and Richtmyer–Meshkov) induced mixing between the layers of capsule material and the deuterium–tritium (DT) fuel contributes to degradation of capsule yield (Haan et al. 2011). The CD Symcap experiments, for example, were a set of separated reactant experiments fielded on the National Ignition Facility (NIF) with the goal of studying the amount of mixing that occurs during a capsule implosion (Casey et al. 2014). The CD Symcap experiments consisted of a recessed layer of deuterated plastic (CD) separated from tritium gas in the centre of the capsule by an inert plastic layer (CH). As a result, the measured DT yield signal provides a measure of the amount of mixing between the two reactant layers. However, in experiments such as CD Symcap, as well as in other ICF capsules with multiple layers, the thermonuclear (TN) output is not simply a function of mixing between the two reactant materials, and the impact of the inert layer must be considered as well. Understanding the factors that influence instability-driven mixing in ICF targets, as well as the effects of mixing on capsule performance, has been cited as a significant technical challenge in the pursuit of fusion ignition and greater fusion yields at the NIF (Lindl 1998; Smalyuk et al. 2019; Abu-Shawareb et al. 2024).

Experiments such as these have motivated the development of models that allow the computational codes used to design ICF capsules to better predict the influence of turbulence and mixing on capsule yield. Generally, an equation for the average reaction rate can be written as

where $\overline{\dot{r}_{\gamma,\alpha\beta}}$ is the average binary reaction rate with product $\gamma$ and reactants $\alpha$ and $\beta$, $R_{atomic}$ is the average reaction rate for atomically mixed reactants and $(\cdots)$ represents an augmentation of the reaction rate due to turbulent mixing. One important aspect to consider in developing such a model is its behaviour in the ‘no-mix limit’, that is, whether the model returns to the correct physical limit for immiscible components. Existing models for reacting flow have treated this augmentation term in different ways, and with different results for the no-mix limit. Ristorcelli (2017) presents a model for reacting flow with mixing that recovers the no-mix limit based on the assumption that the mixing conforms to a beta distribution. However, this model is not extensible to mixtures with more than two components. Morgan et al. (2018a) present another model describing the reaction rate in binary mixtures based on a truncated expansion of the reaction rate equation. A model that is applicable to mixing between an arbitrary number of components is presented by Morgan (2022b), though this model does not recover the no-mix limit. It is therefore desirable to construct a model for an arbitrary number of mixing components that also recovers the no-mix limit. A principal motivation for the present work is thus to generate a validated dataset against which an improved reaction rate model can be evaluated.
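The no-mix limit can be made concrete for the simplest case of a binary reaction with a fixed reactivity. There the mean rate obeys the exact identity $\overline{n_A n_B} = \bar{n}_A \bar{n}_B + \overline{n_A' n_B'}$, so an estimate built only from mean concentrations (the atomically mixed rate) overpredicts reactions when the reactants occupy separate cells, while adding the covariance recovers zero. The sketch below is illustrative only: the reactants A and B, the constant `sigma_v` and the synthetic cell fields are hypothetical, and none of the cited models, which work with mass fractions and must also handle variable density, temperature-dependent reactivity and additional components, is reproduced.

```python
import numpy as np

def mean_rate_estimates(n_a, n_b, sigma_v=1.0):
    """Compare estimates of the averaged binary rate <sigma v> n_a n_b.

    sigma_v is held constant (an isothermal assumption), so only the
    concentration statistics matter. Returns (exact, atomic, with_cov).
    """
    exact = sigma_v * np.mean(n_a * n_b)              # cell-resolved average
    atomic = sigma_v * np.mean(n_a) * np.mean(n_b)    # atomically mixed estimate
    cov = np.mean((n_a - n_a.mean()) * (n_b - n_b.mean()))
    with_cov = atomic + sigma_v * cov                 # first plus second moments
    return exact, atomic, with_cov

rng = np.random.default_rng(0)
n_cells = 10_000

# No-mix limit: each cell holds only reactant A or only reactant B.
is_a = rng.random(n_cells) < 0.5
n_a_nomix = np.where(is_a, 1.0, 0.0)
n_b_nomix = np.where(is_a, 0.0, 1.0)

# Atomically mixed limit: both reactants uniformly present everywhere.
n_a_mixed = np.full(n_cells, 0.5)
n_b_mixed = np.full(n_cells, 0.5)

cases = {"no-mix": (n_a_nomix, n_b_nomix), "atomic": (n_a_mixed, n_b_mixed)}
for label, (na, nb) in cases.items():
    exact, atomic, with_cov = mean_rate_estimates(na, nb)
    print(f"{label:7s} exact={exact:.4f} atomic={atomic:.4f} with_cov={with_cov:.4f}")
```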

The CD Symcap experiments represent a valuable dataset for a three-component reacting mixing problem. However, these experiments were complex in design and had somewhat limited diagnostic data available. Additionally, due to the challenging range of physical scales involved, direct numerical simulation (DNS) of ICF capsules such as CD Symcap remains intractable (Bender et al. 2021), and many computational efforts to simulate ICF capsules employ turbulent mix models or simplify the problem to one or two dimensions (Raman et al. 2012; Casey et al. 2014; Smalyuk et al. 2014; Weber et al. 2014; Khan et al. 2016; Gatu Johnson et al. 2017, 2018). The present work therefore adopts a simpler approach based on the non-reacting three-component RTI experiments of Suchandra & Ranjan (2023) and Jacobs & Dalziel (2005). Direct numerical simulation of this simplified configuration is first validated through comparison with available experimental data. The benchmarked simulation is then extended to a reacting configuration.

This work consists of two parts that are discussed separately. The first part considers a DNS of a Rayleigh–Taylor mixing layer with three components. The physical configuration and fluid properties of this simulation are based on the experiments of Jacobs & Dalziel (2005) as well as Suchandra & Ranjan (2023). Confidence in the present simulations is established through comparison with experimental data as well as through a rigorous numerical convergence study. The computational dataset is then analysed to extract characteristic length scales using turbulence spectra and two-point spatial correlation techniques. Additionally, joint probability statistics of mixing concentration are examined and compared against several multivariate beta distributions of increasing complexity. While similar analyses have been performed in the past for two-component RTI mixing (Ristorcelli & Clark 2004), as well as for three-component passive scalar mixing (Perry & Mueller 2018), to the best of the authors’ knowledge, this work is the first to examine these statistics for a three-component RTI mixing problem.
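Two-point correlation length scales of the kind mentioned above are commonly obtained from the autocorrelation along a periodic direction. The sketch below shows one common convention, which is not necessarily the one used in § 4: it computes the normalized autocorrelation with the FFT (Wiener–Khinchin theorem) and integrates it with the trapezoidal rule up to its first zero crossing. The function name is hypothetical, and the check uses a single cosine mode, whose integral length under this convention is $1/k$.

```python
import numpy as np

def integral_length(signal, dx):
    """Integral length scale from the normalized two-point autocorrelation.

    Assumes a periodic direction (as for the x and y axes here). The
    autocorrelation is computed with the FFT and integrated with the
    trapezoidal rule up to its first zero crossing.
    """
    fluct = signal - signal.mean()
    spec = np.fft.rfft(fluct)
    corr = np.fft.irfft(spec * np.conj(spec), n=fluct.size)
    corr = corr / corr[0]                      # normalize so that R(0) = 1
    half = corr[: fluct.size // 2]             # separations from 0 to L/2
    nonpos = np.nonzero(half <= 0.0)[0]
    stop = nonpos[0] if nonpos.size else half.size
    seg = half[:stop]
    return dx * (seg.sum() - 0.5 * (seg[0] + seg[-1]))

n = 256
x = np.linspace(0.0, 2.0 * np.pi, n, endpoint=False)
dx = x[1] - x[0]
k = 4
print(integral_length(np.cos(k * x), dx))  # analytic value is 1/k
```

For turbulent signals the first-zero cut-off is a choice; integrating to the half-domain separation or fitting an exponential tail are common alternatives that give somewhat different values.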

The second part of this work focuses on the development of an improved model for the average reaction rate in a multicomponent mixing layer. Analysis is first presented to demonstrate that the model of Morgan (2022a) can be extended to include higher-order statistical moments that were previously neglected while also respecting the no-mix limit. This improved model is then evaluated against the simulation data generated in the first part of this work. A single time instant from the DNS calculation, representing significant, although not complete, mixing of the three components, is numerically transformed to treat the mixing components as either ‘premixed’ or ‘non-premixed’ deuterium and tritium. The flow field is computationally frozen and a TN burn calculation is performed. The results from this high-fidelity calculation are then compared with a one-dimensional calculation utilizing the improved model.

The remainder of this work is organized as follows. Section 2 discusses the configuration of the simulation, including a description of the numerical methods used, the computational domain, the fluids used and the initial conditions. The next several sections focus on the results generated from these simulations. First, § 3 presents a comparison of these simulation results with experimental data, as well as verification that these simulations have converged and achieved DNS resolution. Section 4 presents additional analysis of this flow beyond that presented in the experiments, focusing on the turbulent aspects of the flow, including analysis of turbulent length scales utilizing spectra and two-point correlations. An evaluation of the joint probability distribution of the concentrations of the mixing components is also presented. Section 5 introduces the proposed model for reacting flow in this configuration and compares the model with a calculation based on the DNS data. Lastly, § 6 summarizes the conclusions of this work.

2. Problem set-up

2.1. Numerical methods

The simulations presented in this work are conducted in two stages. The first stage involves simulating the temporal evolution of a non-reacting three-layer RTI mixing layer and validating the simulation through comparison with available experimental data from Jacobs & Dalziel (2005) and Suchandra & Ranjan (2023). As such, the material properties in this stage are chosen to be similar to those of the experiments. The second stage is conducted to validate and assess an improved model for the influence of mixing on reaction rates. This is accomplished in the present study by transforming the simulation state from the first stage into an ICF-relevant configuration and simulating the mixing layer as it undergoes TN burn. This calculation is performed both by utilizing the simulation data directly and through the improved reaction rate model. Hydrodynamic evolution of the mixing layer is disabled in this second stage so that the mass fraction covariances do not evolve as a result of hydrodynamic motion during the TN burn process. This approach is not meant to represent the physics of ICF targets, but is useful for evaluating the reaction rate model in the idealized case where the second-moment concentration statistics are known exactly (i.e. without the need for a coupled model for hydrodynamic evolution), thus simplifying the comparison between the simulation data and the model. In the first stage of the simulation, a high-order numerical scheme is desirable to capture all of the scales of turbulence with minimal numerical dissipation. In the second stage, TN burn physics, radiation diffusion and tabular equations of state are required. To accommodate these differing computational requirements, separate codes are utilized for each stage. This two-stage approach has been used previously in simulations of a reacting Rayleigh–Taylor mixing layer (Morgan et al. 2018a; Morgan 2022b), and the following paragraphs outline the computational codes utilized in each stage.

In the first stage, the Miranda hydrodynamics code (Cook 2007, 2009; Cabot & Cook 2006; Morgan et al. 2017) is utilized to simulate the temporal evolution of an RTI mixing layer with three components in a configuration similar to the experiments of Jacobs & Dalziel (2005) and Suchandra & Ranjan (2023). Miranda has seen extensive use in compressible, multicomponent turbulent mixing problems (Cook, Cabot & Miller 2004; Cabot & Cook 2006; Olson & Cook 2007; Olson et al. 2011; Olson & Greenough 2014a,b; Tritschler et al. 2014; Morgan et al. 2018a; Campos & Morgan 2019; Morgan 2022a,b; Ferguson, Wang & Morgan 2023). Miranda solves the compressible Navier–Stokes equations for a non-reacting, multicomponent mixture,

where $\rho$ is the density, $t$ is the time, $u_i$ is the velocity along axis $i$, $x_i$ is the spatial coordinate in axis $i$, $Y_\alpha$ is the mass fraction of species $\alpha$, $J_{\alpha,i}$ is the diffusive mass flux of species $\alpha$, $p$ is the pressure, $\sigma_{ij}$ is the viscous stress tensor, $g_j$ is the gravitational acceleration (body force per unit mass) in axis $j$, $E$ is the total energy and $q_i$ is the heat flux in axis $i$. These governing equations are solved using a tenth-order compact finite-difference scheme in space and a fourth-order explicit Runge–Kutta scheme in time. Miranda models the subgrid transfer of energy using an artificial fluid large-eddy simulation (AFLES) approach, in which an eighth-order filter is applied to selectively add artificial contributions to the viscosity, diffusivity and thermal conductivity. Further information on Miranda, including specifics of the AFLES approach, is presented in Appendix A.1. Section 3.1 demonstrates that the influence of Miranda's artificial fluid approach is negligible in the present study.
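The spatial discretization named above can be illustrated with a minimal sketch of a tenth-order compact (Padé-type) first derivative on a periodic grid. The coefficients are the standard tenth-order pentadiagonal values of Lele (1992), which satisfy the Taylor-series matching conditions through tenth order; it is an assumption that Miranda uses this particular stencil in its periodic directions, and its non-periodic boundary closures, filters and banded solvers are not represented. A dense linear solve is used purely for clarity.

```python
import numpy as np

# Tenth-order pentadiagonal compact scheme (coefficients after Lele 1992):
#   BETA*f'[i-2] + ALPHA*f'[i-1] + f'[i] + ALPHA*f'[i+1] + BETA*f'[i+2]
#     = A*(f[i+1]-f[i-1])/(2h) + B*(f[i+2]-f[i-2])/(4h) + C*(f[i+3]-f[i-3])/(6h)
ALPHA, BETA = 1.0 / 2.0, 1.0 / 20.0
A, B, C = 17.0 / 12.0, 101.0 / 150.0, 1.0 / 100.0

def compact_derivative_periodic(f, h):
    """First derivative of periodic samples f with uniform grid spacing h."""
    n = f.size
    lhs = np.eye(n)
    for offset, coef in ((1, ALPHA), (2, BETA)):
        # interior off-diagonals
        lhs += coef * (np.eye(n, k=offset) + np.eye(n, k=-offset))
        # wrap-around corner entries that make the system cyclic (periodic)
        lhs += coef * (np.eye(n, k=n - offset) + np.eye(n, k=-(n - offset)))
    # np.roll(f, -m)[i] == f[i+m] with periodic wrap-around
    rhs = (A * (np.roll(f, -1) - np.roll(f, 1)) / (2.0 * h)
           + B * (np.roll(f, -2) - np.roll(f, 2)) / (4.0 * h)
           + C * (np.roll(f, -3) - np.roll(f, 3)) / (6.0 * h))
    return np.linalg.solve(lhs, rhs)

n = 32
x = np.linspace(0.0, 2.0 * np.pi, n, endpoint=False)
dfdx = compact_derivative_periodic(np.sin(x), x[1] - x[0])
err = np.max(np.abs(dfdx - np.cos(x)))
print(f"max error with {n} points: {err:.2e}")
```

The implicit left-hand side is what gives compact schemes their near-spectral resolution for a given stencil width, which is why such schemes suit the low-dissipation requirement of the first stage.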

In the second stage, a single time instant at which there is significant, although not complete, mixing between all three layers is imported into the Ares code (Sharp 1978; Darlington, McAbee & Rodrigue 2001), where the mixing layer is simulated as it undergoes TN burn. Ares has been used extensively in the simulation of ICF targets and experiments (Raman et al. 2012; Casey et al. 2014; Smalyuk et al. 2014; Weber et al. 2014; Khan et al. 2016; Gatu Johnson et al. 2017, 2018; Bender et al. 2021). In this stage, the mixing components are replaced with ICF-relevant materials. The materials considered in this stage include non-reactive CH plastic as well as deuterium (D) and tritium (T), in the form of either CD and tritium gas in a non-premixed configuration, or a mixture of deuterium and tritium (DT) in a premixed configuration. As such, only a single TN reaction is considered, ${\rm D}+{\rm T}\rightarrow {}^{4}{\rm He}+{\rm n}$.

The rate of reaction with products $\gamma$ and reactants $\alpha$ and $\beta$ is described by $\dot{r}_{\gamma,\alpha\beta} = \langle \sigma v \rangle_{\alpha\beta}\, n_\alpha n_\beta$ for the distinct reactants considered here, where $\langle \sigma v \rangle_{\alpha\beta}$ is the reactivity, that is, the product of the reaction cross-section and the relative velocity averaged over a Maxwellian velocity distribution, and $n_\alpha$ and $n_\beta$ are the particle number densities of the reactants. The reactivity is interpolated using the TDFv2.3 library (Warshaw 2001). The ${\rm D}+{\rm T}$ reaction considered here has an average thermal energy of $17.59\,{\rm MeV}$ per reaction. Local deposition of this energy is assumed, such that the average thermal energy is removed from the ion energy field. Charged-particle energy is deposited in the same volume with a split between the ion and electron energies, determined according to the Corman–Spitzer model (Corman et al. 1975). Neutrons are assumed to escape the problem immediately, and the energy carried by neutron products is removed from the system. Thermal effects and the apportionment of average thermal energy between reactants are determined following the method of Warshaw (2001), and the ion–electron coupling coefficient is determined via the method of Brysk (1974). Further details on the Ares code are presented in Appendix A.2.
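For distinct reactants such as D and T, the volumetric rate takes the form $\langle\sigma v\rangle_{\rm DT}\, n_{\rm D} n_{\rm T}$, with number densities obtained from the mass density and mass fractions as $n_\alpha = \rho Y_\alpha / m_\alpha$. The sketch below evaluates this rate and splits the 17.59 MeV per reaction into the locally deposited alpha-particle share (about 3.5 MeV) and the neutron share (about 14.1 MeV) that leaves the system. The function name, the reactivity value (roughly the D–T value near 10 keV), the density and the mass fractions are hypothetical placeholders (Ares interpolates the reactivity from TDF tables), and the Corman–Spitzer ion/electron split of the deposited energy is not modelled.

```python
AMU_G = 1.66053907e-24           # atomic mass unit in grams
MEV_J = 1.602176634e-13          # joules per MeV
M_D, M_T = 2.014102, 3.016049    # atomic masses of D and T in amu
E_TOTAL_MEV = 17.59              # energy per D + T reaction
E_ALPHA_MEV = 3.52               # charged-particle (alpha) share; neutron carries the rest

def dt_rate_and_heating(rho, y_d, y_t, sigma_v):
    """Return (rate [1/(cm^3 s)], alpha heating [W/cm^3], neutron loss [W/cm^3]).

    rho in g/cm^3, mass fractions y_d and y_t, reactivity sigma_v in cm^3/s.
    """
    n_d = rho * y_d / (M_D * AMU_G)         # deuterium number density, cm^-3
    n_t = rho * y_t / (M_T * AMU_G)         # tritium number density, cm^-3
    rate = sigma_v * n_d * n_t              # no 1/2 factor: reactants are distinct
    heating = rate * E_ALPHA_MEV * MEV_J    # deposited locally
    neutron_loss = rate * (E_TOTAL_MEV - E_ALPHA_MEV) * MEV_J  # escapes the problem
    return rate, heating, neutron_loss

# Hypothetical state: near-equimolar DT at 0.25 g/cm^3, placeholder reactivity.
rate, heat, loss = dt_rate_and_heating(rho=0.25, y_d=0.4, y_t=0.6, sigma_v=1.1e-16)
print(f"rate = {rate:.3e} /cm^3/s, alpha heating = {heat:.3e} W/cm^3, "
      f"neutron loss = {loss:.3e} W/cm^3")
```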

The proposed reaction rate model is solved using the Ares code coupled with the modular RANSBox library (Morgan et al. 2024). Reynolds-averaged Navier–Stokes (RANS) calculations are performed on a one-dimensional computational mesh with grid spacing in the $z$ dimension set identical to the DNS grid spacing to permit direct comparison with DNS data. First and second moments of species concentrations (i.e. mean mass fractions and mass fraction covariances) in the RANS calculations are taken directly from the DNS at the same time instant chosen for TN burn. In this way, the reaction rate model may be assessed independently of the accuracy of any coupled model for the evolution of the mass fraction covariances, such as the $k$–$L$–$a$–$\mathcal{C}$ model (Morgan 2022b) or the $R$–$2L$–$a$–$\mathcal{C}$ model (Morgan, Ferguson & Olson 2023).
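Taking first and second moments from the DNS for the one-dimensional calculation amounts to averaging over the homogeneous $x$ and $y$ directions at each height. The sketch below assumes density-weighted (Favre) averaging, which is an assumption since the averaging operator is not specified in this section; the function and array names are hypothetical, and the arrays are assumed to be indexed (x, y, z).

```python
import numpy as np

def favre_moments(rho, y_a, y_b):
    """Plane-averaged Favre mean mass fractions and covariance versus z.

    Arrays are shaped (nx, ny, nz); averages are taken over the homogeneous
    x and y directions. Returns (ya_tilde, yb_tilde, cov_ab), each (nz,).
    """
    rho_bar = rho.mean(axis=(0, 1))
    ya_t = (rho * y_a).mean(axis=(0, 1)) / rho_bar
    yb_t = (rho * y_b).mean(axis=(0, 1)) / rho_bar
    ya_pp = y_a - ya_t            # Favre fluctuations, broadcast along z
    yb_pp = y_b - yb_t
    cov = (rho * ya_pp * yb_pp).mean(axis=(0, 1)) / rho_bar
    return ya_t, yb_t, cov

# Hypothetical synthetic field: two unmixed materials in random cells with
# variable density, on a small (8, 8, 4) grid.
rng = np.random.default_rng(1)
rho = rng.uniform(0.5, 2.0, (8, 8, 4))
y_a = (rng.random((8, 8, 4)) < 0.3).astype(float)
y_b = 1.0 - y_a
ya_t, yb_t, cov = favre_moments(rho, y_a, y_b)
print(ya_t.shape, cov)
```

For unmixed cells like these the covariance equals $-\tilde{Y}_a \tilde{Y}_b$, the most negative value it can take, which is the no-mix configuration a reaction rate model must respect.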

2.2. Computational set-up

The present work aims to study the RTI in a three-layer configuration. The experiments of Suchandra & Ranjan (2023) and Jacobs & Dalziel (2005) provide useful experimental data for a three-layer Rayleigh–Taylor driven flow, and so the present simulations aim to be similar in configuration to those experiments to permit reasonable comparison. However, the present simulations do not attempt to exactly replicate either experiment. The experiments consist of three layers of fluid subject to Earth's gravitational acceleration, with the upper and lower layers being denser than the middle layer. This results in an unstable interface between the upper and middle layers, and a stable interface between the middle and lower layers. The experiments of Jacobs & Dalziel stabilize the upper unstable interface through the use of a splitter plate that is withdrawn to initiate the experiment. Suchandra & Ranjan, in contrast, utilize three initially separated streams of gas, flowing with a mean velocity, that meet at the entrance to a test section where they are allowed to mix. The initial conditions of the simulation, discussed in detail in § 2.3, are chosen to approximate the perturbations that these approaches induce on the interface.

The computational domain is set up to be similar to the experimental configuration. It consists of three layers of fluid, with layer 1 located at the top of the domain, layer 3 located at the bottom of the domain and layer 2 located between layers 1 and 3. Gravitational acceleration is applied in the $-z$ direction. This results in two interfaces, one between layers 1 and 2, and the other between layers 2 and 3. The fluid properties in each layer are set such that the upper (1–2) interface is initially unstable and the lower (2–3) interface is initially stable. Additionally, the fluid properties used in layers 1 and 3 are chosen to be identical. A schematic of the domain, including the location of each layer of fluid and the direction of gravity, is presented in figure 1.

Figure 1. Schematic representation of the computational domain. The location and index of the three layers are indicated by the circled numerals. The location of the origin and orientation of the axes are indicated by the triad. The origin is located at the midpoint of the domain in the $x$ and $y$ dimensions, and at the vertical midpoint of the initial middle layer location in the $z$ dimension.

The problem domain is rectangular, with dimensions of $L_x=40\,{\rm cm}$, $L_y = 40\,{\rm cm}$ and $L_z=80\,{\rm cm}$ in the $x$, $y$ and $z$ axes, respectively. These dimensions are chosen to be similar to the test section utilized in the experiments of Suchandra & Ranjan (2023), though a few modifications are made to account for numerical limitations. In particular, the experimental test section used by Suchandra & Ranjan is several metres long, rendering simulation of the entire test section computationally intractable. To address this constraint, the frozen turbulence hypothesis of Taylor (1938) is invoked to transform the spatially developing mixing layer into a temporally developing one. This approach was also used by Suchandra & Ranjan in the presentation of their results. Conceptually, this approach considers a box of fluid that starts attached to the trailing edge of the splitter plate used to separate the fluids. The box then moves at a constant velocity equal to the mean flow velocity relative to the splitter plate. Thus, a spatially developing mixing layer with a mean flow relative to the splitter plate instead appears as a temporally developing layer with no mean flow from the perspective of the box, with elapsed time related to downstream distance through the mean flow velocity. This allows the streamwise axis to be shortened to match the cross-stream direction, a choice that results in a domain with a square horizontal cross-section. The vertical axis in the simulation is set to be $80\,{\rm cm}$, which is somewhat taller than the experimental test section height of $60\,{\rm cm}$. This change is made to address numerical stability issues associated with the mixing layer approaching the upper domain edge.

The domain boundaries are located at $\pm 20\,{\rm cm}$ in the $x$ and $y$ axes, and at $-20$ and $+60\,{\rm cm}$ in the vertical axis. The boundary conditions used for this problem are periodic on the $\pm 20\,{\rm cm}$ faces in $x$ and $y$, and no-penetration on the top ($+60\,{\rm cm}$) and bottom ($-20\,{\rm cm}$) faces in $z$. The middle layer is initially located with its midpoint at $z=0$, with an initial layer thickness of $h_{2,0} = 3.2\,{\rm cm}$, placing the initial interfaces at $z = \pm 1.6\,{\rm cm}$. This results in a greater amount of vertical space above the initial middle layer location than below it. This asymmetry, as with the increased length of the domain in the vertical axis discussed previously, is chosen to eliminate numerical stability issues as the mixing layer approaches the upper domain edge and to allow the developing mixing layer to remain approximately equidistant from the upper and lower domain boundaries.

The fluid properties in the present simulations are chosen to match those used in the experiments of Suchandra & Ranjan (Reference Suchandra and Ranjan2023). Atmospheric pressure and temperature were not reported in the experiment, so a temperature of $T_{atm}=290\,{\rm K}$ and a pressure of $P_{atm}=101.3\,{\rm kPa}$ are assumed. The upper and lower layers of fluid in the experiment consisted of air, and the middle layer consisted of a mixture of air and helium, with the ratio of these two components controlled to achieve a desired Atwood number. The densities of the pure gas components in the simulation are calculated from ideal gas relationships using the molecular weights and ratios of specific heats given in table 1. The properties of the middle layer are calculated from the volume fractions of air and helium required to achieve a specified Atwood number, using Miranda's mixture equation of state (Cook Reference Cook2009).
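As a rough illustration of how the middle-layer composition follows from a target Atwood number, the sketch below treats both gases as ideal, mixes them linearly by volume fraction at the assumed $T_{atm}$ and $P_{atm}$, and takes $A_{12} = (\rho_1 - \rho_2)/(\rho_1 + \rho_2)$ with pure air as fluid 1. The molar masses are assumed textbook values rather than the entries of table 1, the Atwood number in the usage line is illustrative, and this simple mixing rule stands in for, and is not, Miranda's mixture equation of state.

```python
R_UNIVERSAL = 8.314462618  # universal gas constant, J/(mol K)

# Assumed textbook molar masses in kg/mol; the values in table 1 may differ slightly.
M_AIR = 28.97e-3
M_HE = 4.003e-3


def ideal_gas_density(pressure_pa, temperature_k, molar_mass):
    """Ideal-gas density rho = P M / (R T)."""
    return pressure_pa * molar_mass / (R_UNIVERSAL * temperature_k)


def helium_volume_fraction(atwood, pressure_pa=101.3e3, temperature_k=290.0):
    """Helium volume fraction in an air/helium middle layer that gives
    A_12 = (rho_1 - rho_2) / (rho_1 + rho_2) against pure air above it.

    Ideal gases at equal P and T mix linearly by volume fraction, so
    rho_2 = X_He * rho_He + (1 - X_He) * rho_air.
    """
    rho_air = ideal_gas_density(pressure_pa, temperature_k, M_AIR)
    rho_he = ideal_gas_density(pressure_pa, temperature_k, M_HE)
    rho_mid = rho_air * (1.0 - atwood) / (1.0 + atwood)  # invert the Atwood definition
    x_he = (rho_air - rho_mid) / (rho_air - rho_he)
    if not 0.0 <= x_he <= 1.0:
        raise ValueError("Atwood number is not reachable with an air/helium mixture")
    return x_he, rho_air, rho_mid


x_he, rho_air, rho_mid = helium_volume_fraction(0.1)  # illustrative Atwood number
print(f"X_He = {x_he:.3f}, rho_air = {rho_air:.3f} kg/m^3, rho_2 = {rho_mid:.3f} kg/m^3")
```

Because both densities scale with $P/T$, the resulting volume fraction depends only on the molar masses and the Atwood number; the assumed $T_{atm}$ and $P_{atm}$ affect the absolute densities but not the composition.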

Table 1. The molecular weight ($M_f$), ratio of specific heats ($\gamma$) and dynamic viscosity ($\mu _f$) of each pure fluid used in the present work.

The fluid contributions to the total viscosity, diffusivity and thermal conductivity are found from the fluid properties of each layer. The dynamic viscosity of the upper and lower fluid layers is set directly from the values in table 1, and Miranda's mixture equation of state is used to calculate an effective viscosity for the middle layer mixture. The fluid contribution to the bulk viscosity is neglected. The fluid contribution to the thermal conductivity is calculated as

\begin{equation} \kappa_f = \frac{c_p \mu_f}{{\textit {Pr}}}, \end{equation}

where $c_p$ is the specific heat at constant pressure and ${\textit {Pr}} = 0.7$ is the Prandtl number. Finally, the fluid contribution to the diffusivity is calculated as a binary species diffusivity

\begin{equation} D_f = \frac{\mu_f}{\rho\, {Sc}}, \end{equation}

where ${Sc} = 0.22$ is the Schmidt number. Note that all of these properties are further adjusted to maintain dynamic similarity between simulation and experiment, owing to restrictions imposed by the compressible nature of the Miranda code. These adjustments are discussed in greater detail in § 2.4.
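Assuming the conventional definitions ${\textit {Pr}} = c_p \mu_f / \kappa_f$ and ${Sc} = \mu_f/(\rho D_f)$, with the ideal-gas specific heat $c_p = \gamma R/((\gamma - 1)M_f)$, the unscaled fluid contributions can be sketched as follows. The air-like viscosity and density in the usage line are illustrative assumptions, not values from table 1, and the similarity scaling of § 2.4 is not applied here.

```python
R_UNIVERSAL = 8.314462618  # J/(mol K)
PRANDTL = 0.7   # Pr from the text
SCHMIDT = 0.22  # Sc from the text


def specific_heat_cp(gamma, molar_mass):
    """Ideal-gas specific heat at constant pressure, c_p = gamma R / ((gamma - 1) M)."""
    return gamma * R_UNIVERSAL / ((gamma - 1.0) * molar_mass)


def fluid_transport(mu, rho, gamma, molar_mass):
    """Thermal conductivity and binary diffusivity implied by the fixed
    Prandtl and Schmidt numbers (SI units)."""
    cp = specific_heat_cp(gamma, molar_mass)
    kappa = cp * mu / PRANDTL            # W/(m K)
    diffusivity = mu / (rho * SCHMIDT)   # m^2/s
    return kappa, diffusivity


# Illustrative air-like inputs (assumed, not taken from table 1).
kappa, diff = fluid_transport(mu=1.8e-5, rho=1.2, gamma=1.4, molar_mass=28.97e-3)
print(f"kappa = {kappa:.4f} W/(m K), D = {diff:.3e} m^2/s")
```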

Two sets of simulation data are generated in the present work to study different aspects of the simulation. The first dataset, termed the ‘single realization’ set, consists of simulations run on successively refined computational meshes with an identical initial condition in order to assess convergence of the solution. These meshes are outlined in table 2. The base resolution for this problem is $60\times 60\times 120$ cells in the $x$, $y$ and $z$ axes, respectively, with these zone counts chosen to give square zones. Each refined mesh is then generated by integer multiplication of the base mesh resolution, which ensures that square zones are maintained for all computational meshes. Each mesh is labelled $R_N$, where $N$ indicates the multiplication factor used.

Table 2. Computational grids used in the present simulations. Grids are named according to $R_N$, where $N$ is the integer used to multiply the zone counts in each axis of the base ($R_1$) grid to arrive at the refined grid.
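The zone counts and spacings of the $R_N$ meshes follow directly from the domain extents given above. This minimal sketch, in which the refinement factors in the loop are illustrative, checks that the spacing is identical in all three axes and reports how many zones span the initial middle layer.

```python
BASE_CELLS = (60, 60, 120)        # R_1 zone counts in x, y, z
DOMAIN_CM = (40.0, 40.0, 80.0)    # domain extents in x, y, z (cm)
H20_CM = 3.2                      # initial middle-layer thickness (cm)


def refined_mesh(n):
    """Zone counts and spacings (cm) of mesh R_n."""
    cells = tuple(n * c for c in BASE_CELLS)
    spacing = tuple(length / c for length, c in zip(DOMAIN_CM, cells))
    return cells, spacing


for n in (1, 2, 4):
    cells, (dx, dy, dz) = refined_mesh(n)
    print(f"R_{n}: {cells}, dx = {dx:.4f} cm, zones across h_2,0: {H20_CM / dx:.1f}")
```

On the $R_4$ mesh, for example, each zone is $1/6\,{\rm cm}$ on a side and roughly 19 zones span the initial middle layer.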

The second set of simulations, termed the ‘ensemble’ dataset, is motivated by the fact that the experimental statistics of Suchandra & Ranjan (Reference Suchandra and Ranjan2023) and Jacobs & Dalziel (Reference Jacobs and Dalziel2005) were captured over independent realizations of the flow with inherent randomness in their initial state. It is thus worthwhile to assess the influence of the randomness of the initial condition by conducting multiple runs with varied initial conditions and comparing the results. This dataset consists of the ‘single realization’ results from the $R_4$ mesh plus eight additional simulations, also run on the $R_4$ mesh. The random number generator seeds used to generate the upper and lower interfaces are set to different values for each run, chosen such that no seed value is repeated across runs on either interface. This ensures that each of the nine simulations has an independent initial state. The mean value of a parameter of interest, together with a 95 % confidence bound on that mean, is calculated from all runs to measure the uncertainty in the calculated mean values owing to the influence of initial conditions.
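The text does not state how the 95 % confidence bound is computed; one common choice for a small ensemble is a Student-$t$ interval on the mean. The sketch below assumes that choice, hard-codes the critical value for nine runs (eight degrees of freedom) to stay within the standard library, and uses synthetic numbers in place of real ensemble statistics.

```python
import math
import statistics

# Two-sided 95 % Student-t critical value for 8 degrees of freedom (nine runs).
T_CRIT_95_DOF8 = 2.306004


def mean_with_ci95(samples, t_crit=T_CRIT_95_DOF8):
    """Ensemble mean and the half-width of its 95 % confidence interval.

    The default t_crit is only valid for nine samples; pass the appropriate
    critical value for other ensemble sizes.
    """
    n = len(samples)
    mean = statistics.mean(samples)
    half_width = t_crit * statistics.stdev(samples) / math.sqrt(n)  # t * s / sqrt(n)
    return mean, half_width


# Synthetic values standing in for one statistic from the nine R_4 runs.
mean, hw = mean_with_ci95([1, 2, 3, 4, 5, 6, 7, 8, 9])
print(f"{mean:.3f} +/- {hw:.3f}")
```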

2.3. Initial conditions

The interfaces in the experiments of Jacobs & Dalziel (Reference Jacobs and Dalziel2005) and Suchandra & Ranjan (Reference Suchandra and Ranjan2023) are unforced, so the initial perturbations to the interfaces are not well quantified. Davies Wykes & Dalziel (Reference Davies Wykes and Dalziel2014) conducted experiments in a similar configuration, albeit with only two fluid layers, that also used a splitter plate to initially separate the mixing fluids. Figure 5 of that work provides a photograph of the development of the perturbations in space, giving a useful visual depiction of the general shape of the interface perturbations. This photograph suggests that the initial perturbations are composed of two components. The first is a two-dimensional, low-mode component parallel to the axis of plate withdrawal, most likely induced by vortex shedding off the back of the splitter plate. The second is a three-dimensional, high-mode component that is likely induced by random imperfections in the splitter plate and other factors that break the symmetry of the otherwise two-dimensional streamwise component. In the present work, the initial perturbations in the experiments of Jacobs & Dalziel and Suchandra & Ranjan are assumed to be similar to those of Davies Wykes & Dalziel, owing to the similarity in how the initial interface is formed. This suggests that an appropriate initial perturbation for this configuration consists of a two-dimensional, low-mode component in the streamwise ($x$) direction and a three-dimensional, high-mode component imposed uniformly across the interface.

Bender et al. (Reference Bender2021) present a method to specify an initial condition that combines a specified initial perturbation with unknown defects present in the design and construction of an ICF capsule. This results in an initial perturbation profile consisting of a two-dimensional principal component and a three-dimensional noise component. Accordingly, the functional form of the initial perturbations used in the present work is based on the specification of Bender et al. Initial conditions of a similar form were also discussed by Thornber et al. (Reference Thornber, Drikakis, Youngs and Williams2010), Schilling & Latini (Reference Schilling and Latini2010) and Thornber et al. (Reference Thornber2017). The general calculation procedure used to define the initial interface is outlined in the following paragraphs, and the reader is referred to appendix D of Bender et al. (Reference Bender2021) for further detail.

First, it is useful to define the wavenumber components in $x$ and $y$ as

such that the magnitude of the wavevector is $k_{i,j} = \sqrt { k_{x,i}^2 + k_{y,j}^2 }$. Here $i$ and $j$ are integers in the range $[N_{min}, N_{max}]$, with this range set independently for the principal ($p$) and noise ($n$) components of the perturbation spectrum. The amplitudes of the principal and noise components of the initial perturbation spectrum are denoted $A_p$ and $A_n$, respectively, and the total perturbation height is $A = A_p + A_n$.

The streamwise, or principal, perturbation component arises from the influence of the splitter plate that initially separates the fluid layers. It is calculated as a one-dimensional Fourier series in $x$ that is extruded along $y$ to generate a two-dimensional interface,

where $A_p$ is the principal perturbation amplitude, $a_{p,i}$ is the amplitude of mode number $i$, $\phi _i$ is a random phase offset and the summation is over the integers $i$ in the range $[N_{p,min} = 10,~N_{p,max} = 20]$. The standard deviation of the perturbation height across the resulting two-dimensional interface is calculated, and the profile is rescaled to a specified standard deviation, chosen to be $0.09\, {\rm cm}$ for the principal component in this work. The range of mode numbers and the standard deviation of the principal component were chosen to give the mixing layer growth that best matched the available experimental data.
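The principal component can be sketched in Python with NumPy. The wavenumber definition $k_{x,i} = 2\pi i/L_x$ with $L_x = 40\,{\rm cm}$, the unit mode amplitudes $a_{p,i} = 1$ and the uniform phase distribution are assumptions made for illustration and may differ from the definitions in appendix D of Bender et al. (Reference Bender2021); the function name and seed are hypothetical.

```python
import numpy as np


def principal_component(nx, ny, length_cm=40.0, n_min=10, n_max=20,
                        target_std_cm=0.09, seed=0):
    """Streamwise (principal) perturbation: a 1-D Fourier series in x with
    random phases, rescaled to a target standard deviation and extruded in y.

    Assumes k_{x,i} = 2 pi i / L_x and unit mode amplitudes a_{p,i}.
    """
    rng = np.random.default_rng(seed)
    x = np.arange(nx) * (length_cm / nx)   # periodic grid; origin shift is irrelevant
    profile = np.zeros(nx)
    for i in range(n_min, n_max + 1):
        k_x = 2.0 * np.pi * i / length_cm
        phi = rng.uniform(0.0, 2.0 * np.pi)  # random phase offset phi_i
        profile += np.cos(k_x * x + phi)
    profile *= target_std_cm / profile.std()  # rescale to the specified std
    return np.tile(profile, (ny, 1))          # extrude along y -> shape (ny, nx)


a_p = principal_component(240, 240)  # R_4 resolution in x and y
```

Rescaling by the standard deviation makes the final amplitude independent of the assumed $a_{p,i}$ normalization, which only shapes the relative weight of the modes.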

The noise component of the initial perturbation varies in both $x$ and $y$ and is imposed uniformly across the initial interface. Functionally, this perturbation component has the form

$$\begin{align} A_n(x,y) &= \sum_i \sum_j \eta^{(1)}_{i,j} \cos\left( k_{x,i} x \right) \cos\left( k_{y,j} y \right) + \eta_{i,j}^{(2)} \cos\left( k_{x,i} x \right) \sin\left( k_{y,j} y \right) \nonumber\\ &\quad+ \eta_{i,j}^{(3)} \sin\left( k_{x,i} x \right) \cos\left( k_{y,j} y \right) + \eta_{i,j}^{(4)} \sin\left( k_{x,i} x \right) \sin\left( k_{y,j} y \right), \end{align}$$

where $\eta _{i,j}^{(\cdots )}$ are amplitude coefficients drawn from a normal distribution with zero mean and a variance defined as

The factor $P$ is 1 if $k_{min} \leq k \leq k_{max}$ and 0 otherwise, where $k_{min}$ and $k_{max}$ are the wavenumbers associated with $N_{n,min}$ and $N_{n,max}$, respectively. The summation takes place over the integers $i,j$ in the range $[N_{n,min}=30,~N_{n,max}=35]$. The full two-dimensional random noise perturbation profile, $A_n$, is then rescaled in the same way as the principal perturbation profile, to a specified standard deviation in interface perturbation height of $0.09\, {\rm cm}$. As with the principal component, the range of mode numbers and the specified standard deviation were chosen to give the mixing layer growth that best matched the available experimental data.
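A corresponding sketch of the band-limited noise component follows. Under the assumed wavenumber definition $k = 2\pi n/L$, restricting both $i$ and $j$ to $[30, 35]$ would give $\sqrt{i^2 + j^2} \geq 30\sqrt{2} > 35$, so that $P = 0$ for every mode; this sketch therefore assumes that the band $[N_{n,min}, N_{n,max}]$ applies to the wavevector magnitude, with $i$ and $j$ running from 0 to $N_{n,max}$. The unit-variance coefficients inside the band, the function name and the seed are likewise assumptions.

```python
import numpy as np


def noise_component(nx, ny, length_cm=40.0, n_min=30, n_max=35,
                    target_std_cm=0.09, seed=1):
    """Band-limited random noise perturbation A_n(x, y).

    Assumptions: k = 2 pi n / L, unit-variance eta coefficients inside the band,
    and i, j running over 0..n_max with the band test applied to |k|.
    """
    rng = np.random.default_rng(seed)
    x = np.arange(nx) * (length_cm / nx)
    y = np.arange(ny) * (length_cm / ny)
    xx, yy = np.meshgrid(x, y)  # arrays of shape (ny, nx)
    profile = np.zeros((ny, nx))
    for i in range(0, n_max + 1):
        k_x = 2.0 * np.pi * i / length_cm
        cos_x, sin_x = np.cos(k_x * xx), np.sin(k_x * xx)
        for j in range(0, n_max + 1):
            # P = 1 only when k_min <= |k| <= k_max; compared in integer mode
            # numbers to avoid round-off at the band edges.
            if not n_min**2 <= i * i + j * j <= n_max**2:
                continue
            k_y = 2.0 * np.pi * j / length_cm
            cos_y, sin_y = np.cos(k_y * yy), np.sin(k_y * yy)
            eta = rng.standard_normal(4)  # eta^(1..4)_{i,j}
            profile += (eta[0] * cos_x * cos_y + eta[1] * cos_x * sin_y
                        + eta[2] * sin_x * cos_y + eta[3] * sin_x * sin_y)
    profile *= target_std_cm / profile.std()  # rescale to the specified std
    return profile


a_n = noise_component(240, 240)
```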

The principal and noise components of the initial perturbation spectrum are calculated independently and then added together to produce a single perturbation amplitude. This process is repeated separately for the upper and lower interfaces, with a different random number generator seed used to generate the random phase offsets for each interface. Figure 2 depicts the initial state of the middle layer of fluid for the single realization simulation, showing the initial perturbations on the upper and lower interfaces as well as the difference between the perturbations in the streamwise ($x$) and cross-stream ($y$) axes.
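The two perturbed interfaces bound the middle layer. The sketch below (hypothetical helper names) builds a sharp 0/1 marker of the middle layer from upper and lower perturbation fields, with the unperturbed interfaces at $z = \pm h_{2,0}/2$, and uses one possible seeding scheme that never reuses a seed across interfaces or runs. The sharp marker is a simplification: it is not the mass fraction $Y_2$ plotted in figure 2, and small random stand-in fields replace the full principal-plus-noise profiles.

```python
import numpy as np


def interface_seeds(run):
    """Hypothetical seeding scheme: distinct seeds for each interface and run."""
    return 2 * run, 2 * run + 1  # (upper, lower)


def middle_layer_indicator(a_upper, a_lower, z, h20=3.2):
    """Sharp 0/1 marker of the middle layer on a (nz, ny, nx) grid, with the
    unperturbed interfaces at z = +h20/2 and z = -h20/2 (layer centred on z = 0)."""
    z_top = 0.5 * h20 + a_upper          # (ny, nx)
    z_bot = -0.5 * h20 + a_lower         # (ny, nx)
    zz = z[:, None, None]                # broadcast over the x-y plane
    return ((zz >= z_bot) & (zz < z_top)).astype(float)


# Cell-centred z grid of the R_4 mesh (dz = 1/6 cm from -20 to +60 cm).
dz = 80.0 / 480
z = -20.0 + dz * (np.arange(480) + 0.5)
seeds = [interface_seeds(run) for run in range(9)]
rng_up, rng_lo = (np.random.default_rng(s) for s in seeds[0])
a_up = 0.09 * rng_up.standard_normal((8, 8))   # stand-in perturbations (cm)
a_lo = 0.09 * rng_lo.standard_normal((8, 8))
y2 = middle_layer_indicator(a_up, a_lo, z)
```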

Figure 2. An image of the initial condition depicting the mass fraction of the middle layer, $Y_2$, from 0 (red) to 1 (blue).

Finally, the present simulations assume an initially quiescent state, $\boldsymbol {U}(\boldsymbol {x})=0$. This choice, together with the form of the initial perturbations described in this section, means that the initial state of the present simulations most closely resembles the state immediately after plate withdrawal in the experiments of Jacobs & Dalziel (Reference Jacobs and Dalziel2005), or the case in the experiments of Suchandra & Ranjan (Reference Suchandra and Ranjan2023) where the mean flow velocities of all three gas streams are exactly matched. It should be noted, however, that exactly matching the mean velocities of the three gas streams is difficult in practice for the latter experiment, and any mismatch may introduce an influence of shear into the resulting statistics. The present simulations do not attempt to reproduce this effect, and some differences from the experimental results may be expected depending on the strength of this influence.

2.4. Non-dimensionalization

For comparison with experimental data, the non-dimensional scalings used by Suchandra & Ranjan (Reference Suchandra and Ranjan2023) and Jacobs & Dalziel (Reference Jacobs and Dalziel2005) are adopted here. Jacobs & Dalziel find that the width of a self-similar three-layer mixing layer grows linearly in time according to

\begin{equation} h_c = \gamma \sqrt{A_{12} g h_{2,0}}\, t, \end{equation}

where $A_{12}$ is the Atwood number between the upper and middle layers, $h_{2,0}$ is the initial middle layer thickness, $g$ is gravity, $t$ is time and $\gamma$ is an unknown growth coefficient (not to be confused with the ratio of specific heats in table 1). Equation (2.13) can then be non-dimensionalized by the initial layer thickness to find

\begin{equation} \frac{h_c}{h_{2,0}} = \gamma \sqrt{\frac{A_{12} g}{h_{2,0}}} t = \frac{1}{\max\left[{\bar{C}}\right]}, \end{equation}

where the right-hand side of (2.14) is found by considering the conservation of a passive scalar, $C$, with an initial value of 1 in the middle layer and 0 elsewhere, such that

Therefore, the non-dimensional mixing layer width grows linearly with a non-dimensional time defined as

\begin{equation} \tau = \sqrt{\frac{ A_{12} g }{h_{2,0}}} t \end{equation}

such that $h_c / h_{2,0} = \gamma \tau$.
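These scalings can be evaluated directly from simulation output. In this sketch, $\tau = \sqrt{A_{12} g / h_{2,0}}\, t$, $h_c$ is taken as $h_{2,0}$ divided by the peak of the plane-averaged passive scalar, and $\gamma$ is estimated by a least-squares line through $h_c/h_{2,0}$ against $\tau$. The fitting step, the value $g = 9.81\,{\rm m\,s^{-2}}$ and the helper names are assumptions for illustration; the text does not say how $\gamma$ is extracted.

```python
import numpy as np


def nondimensional_time(t, atwood, h20, g=9.81):
    """tau = sqrt(A_12 g / h_{2,0}) t, in SI units."""
    return np.sqrt(atwood * g / h20) * t


def mixing_width(c_bar, h20):
    """h_c = h_{2,0} / max(C_bar) from a plane-averaged passive-scalar profile."""
    return h20 / np.max(c_bar)


def fit_growth_coefficient(tau, h_c, h20):
    """Least-squares slope gamma of h_c / h_{2,0} against tau (with an intercept)."""
    slope, _intercept = np.polyfit(tau, np.asarray(h_c) / h20, 1)
    return slope


# A scalar profile whose peak has dropped to 0.5 implies h_c = 2 h_{2,0}.
print(mixing_width(np.array([0.0, 0.25, 0.5, 0.25, 0.0]), 0.032))
```

Allowing an intercept in the fit keeps the early, non-self-similar growth from biasing the slope, though the appropriate fitting window would depend on the data.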

Miranda is a compressible code with an explicit fourth-order Runge–Kutta time integration scheme, and so has a time-step limit for numerical stability related to the acoustic Courant–Friedrichs–Lewy condition (Cook Reference Cook2007). Meanwhile, the experiments take place over several seconds. Together, these two factors make simulating the same physical time range as the experiment intractable. It is therefore desirable to compress the physical time range to be simulated in order to make this problem computationally feasible. Equation (2.16) indicates that this can be accomplished by increasing the Atwood number, decreasing the middle layer thickness or increasing the magnitude of gravity. The Atwood number is fixed to facilitate comparison with experiment, and grid resolution restricts how far the middle layer thickness can be decreased. This leaves gravity as the only parameter that can be varied, and it is therefore scaled by a factor of $N$ such that $g_{sim} = N g_{exp}$.

Reducing the physical time over which the simulation takes place requires consideration of how other fluid parameters, in particular viscosity and diffusivity, must be scaled to maintain dynamic similarity with the experiment. The Reynolds number is a natural choice for establishing a scaling factor between the two cases. Suchandra & Ranjan (Reference Suchandra and Ranjan2023) define a characteristic Reynolds number in their experiments as

\begin{equation} {\textit {Re}} = \frac{h\, u'_{z,{rms}}}{\nu}, \end{equation}

where $h$ is the mixing layer width, $u'_{z,{rms}}$ is the maximum of the vertical velocity fluctuations and $\nu$ is an average mixture viscosity. However, the effect of compressing the simulation time on $u'_{z,{rms}}$ is not clear a priori, which makes it difficult to establish a scaling relationship using (2.17). Instead, a generic Reynolds number may be defined as

\begin{equation} {\textit {Re}} = \frac{U L}{\nu} = \frac{L^2}{T \nu}, \end{equation}

where $L$, $U$ and $T$ are characteristic length, velocity and time scales. The right-hand side of (2.18) follows from $U = L / T$, which removes the dependence on the characteristic velocity scale in favour of the characteristic length and time scales, which are better known a priori. Requiring that this generic Reynolds number be the same in the experiment and simulation, and assuming that the characteristic length scale is the same in the two cases, yields

\begin{equation} \frac{\nu_{sim}}{\nu_{exp}} \sim \frac{T_{exp}}{T_{sim}}. \end{equation}

A relevant time scale to compare the two cases is now required, for which (2.16) may be used. Substituting this into the above expression and noting that $g_{sim} = N g_{exp}$ yields

\begin{equation} \frac{\nu_{sim}}{\nu_{exp}} \sim \frac{T_{exp}}{T_{sim}} \sim \frac{ \sqrt{ h_{2,0} / (A_{12} g_{exp}) } }{ \sqrt{ h_{2,0} / (A_{12} N g_{exp}) } } = \sqrt{N}. \end{equation}

Therefore, the kinematic viscosity of each fluid is increased by a factor of $\sqrt {N}$ in the simulation, and the time over which the instability develops is compressed by the same factor. A value of $N=800$ is chosen for the present work to reduce the simulated physical time to a computationally feasible range. A posteriori analysis finds the maximum Mach number to be $M \lessapprox 0.05$, indicating that this time compression does not introduce significant compressibility effects. This time compression also implicitly assumes that the mean flow velocity used for Taylor's frozen turbulence hypothesis, discussed in § 2.2, is likewise increased by a factor of $\sqrt {N}$. Additional a posteriori analysis finds that the maximum value of $u_{x,{rms}}$ is less than $10\,\%$ of this scaled mean flow velocity, so the time compression does not meaningfully affect the frozen flow assumption.

Scaling the kinematic viscosity in this way also scales the dynamic viscosity, diffusivity and thermal conductivity of the fluids. A priori application of the scaling relationship in (2.20) to the present simulations resulted in a Reynolds number, calculated using (2.17), approximately twice that of the experiment. Accordingly, the ratio $\nu _{sim} / \nu _{exp}$ was set to $2 \sqrt {N}$ to maintain a Reynolds number similar to that of the experiment.
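The similarity argument can be checked numerically. The sketch below (hypothetical helper names) computes the scale factors for $N = 800$ and confirms that scaling $\nu$ by $\sqrt{N}$ while compressing time by $\sqrt{N}$ leaves the generic Reynolds number $L^2/(T\nu)$ unchanged, whereas the additional factor of 2 adopted above halves it. The length, time and viscosity values in the checks are arbitrary.

```python
import math


def similarity_factors(n, reynolds_correction=2.0):
    """Scale factors for g_sim = N g_exp: time shrinks by sqrt(N), and the
    kinematic viscosity (and with it mu, D and kappa) grows by
    reynolds_correction * sqrt(N)."""
    sqrt_n = math.sqrt(n)
    return {"gravity": float(n), "time": 1.0 / sqrt_n,
            "viscosity": reynolds_correction * sqrt_n}


def generic_reynolds(length, time, nu):
    """Re = U L / nu = L**2 / (T nu), with U = L / T."""
    return length**2 / (time * nu)


f = similarity_factors(800)
print(f"viscosity x {f['viscosity']:.2f}, time x {f['time']:.4f}")
```

For $N = 800$ this gives a viscosity factor of about 56.6 and a time factor of about 0.035.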

2.5. Averaging

Variables in turbulent flows may be decomposed into an average value plus fluctuations about that mean. Both the Reynolds and Favre decompositions are considered in the present work. An arbitrary scalar, $f$, may be decomposed according to

\begin{equation} f = {\bar {f}} + f' = {\tilde {f}} + f'', \end{equation}

where ${\bar {f}}$ is the Reynolds-averaged value of $f$, $f'$ are the fluctuations of $f$ about ${\bar {f}}$, ${\tilde {f}}$ is the Favre average and $f''$ are the fluctuations of $f$ about ${\tilde {f}}$. The Favre and Reynolds averages are related through the density, $\rho$, according to

\begin{equation} {\tilde {f}} = \frac{\overline{\rho f}}{\bar{\rho}}. \end{equation}

Averages in both cases are computed over all $x,y$ for a fixed value of $z$, owing to the doubly periodic nature of this problem. The averaged variables are therefore functions of $z$ only.
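The plane-averaged Reynolds and Favre operators translate directly into array operations. A minimal NumPy sketch, assuming fields stored as arrays of shape $(n_z, n_y, n_x)$ on a uniform grid, so that a plain mean over the two horizontal axes is the plane average (the function names are illustrative):

```python
import numpy as np


def reynolds_average(f):
    """Plane average over the periodic x-y directions of a (nz, ny, nx) field."""
    return f.mean(axis=(1, 2))


def favre_average(rho, f):
    """Density-weighted average: f~ = mean(rho f) / mean(rho), per z-plane."""
    return reynolds_average(rho * f) / reynolds_average(rho)


def fluctuations(rho, f):
    """Return f' = f - f_bar and f'' = f - f~, broadcast back onto the 3-D grid."""
    f_prime = f - reynolds_average(f)[:, None, None]
    f_dprime = f - favre_average(rho, f)[:, None, None]
    return f_prime, f_dprime
```

By construction, $\overline{f'} = 0$ and $\overline{\rho f''} = 0$ on every plane, while $\overline{f''}$ is generally non-zero when density varies across the plane.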

3. Results: validation

3.1. Direct numerical simulation

This study seeks to perform DNS of a three-layer Rayleigh–Taylor unstable flow. Therefore, a useful first step in the validation of these results is to establish whether these simulations have sufficient resolution to achieve this goal. The DNS regime can be broadly described as the regime where all scales of turbulence are resolved, the flow physics are governed by the physical properties of the fluid, and purely numerical contributions are negligible. This section will demonstrate the latter two points, and additional analysis demonstrating that all relevant scales of this flow are fully resolved by the computational mesh will be presented in § 4.1.

Olson & Greenough (Reference Olson and Greenough2014a) present a set of parameters that can be used to identify the transition from well-resolved large-eddy simulation (LES) to DNS. In particular, this transition may be identified as the point at which the total numerical contributions to the viscosity and diffusivity become smaller than the physical contributions to these quantities. Olson & Greenough express this as $\langle ({\cdot })_a / ({\cdot })_f \rangle < 1$, where $({\cdot })_a$ are the artificial contributions arising from purely numerical sources and $({\cdot })_f$ is the physical contribution arising from the properties of the fluids.

The present work considers three contributions to the total fluid properties. The first is the physical contribution, $({\cdot })_f$, that arises from the material properties of the fluid. The second is the explicit contribution arising from Miranda's artificial fluid approach, $({\cdot })_a$, calculated according to the method described in Appendix A.1. Miranda outputs the sum of the physical and AFLES terms at each grid point, and this output is used for these first two contributions. The third consists of the implicit contributions to viscosity and diffusivity arising from the use of a numerical method to solve the governing equations. These contributions depend on how well the computational grid resolves the flow field and must be calculated from the simulation data a posteriori.

A method for calculating an effective viscosity and diffusivity arising from the use of a numerical method is described by Olson & Greenough (2014a), and this method is used here to estimate this contribution. The grid viscosity is approximated as

where $\mu _G$ is the grid-dependent viscosity, $\rho$ is the fluid density, $S$ is the magnitude of the strain-rate tensor and $\Delta x$ is the grid spacing. Here $C_\mu$ is a code-dependent coefficient, with a value of $8.11$ determined by Olson & Greenough for the Miranda code, corresponding to the transition from LES to DNS when $\mu _G / \mu _f = 1$. A similar definition is given for the grid-dependent diffusivity as

where $c_s$ is the local speed of sound and $Y$ is a mass fraction, chosen in this work to be the middle layer mass fraction, $Y_2$. Here $C_D$ is again a code-dependent coefficient, with a value of $0.039$ determined by Olson & Greenough (2014a) for the Miranda code, corresponding to the transition from LES to DNS when $D_G / D_f = 1$. These contributions lead to a natural definition of the total fluid viscosity and diffusivity as

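\begin{equation} \mu_t = \mu_f + \mu_a + \mu_G \end{equation}

and

\begin{equation} D_t = D_f + D_a + D_G, \end{equation}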

which allow the DNS transition criterion of Olson & Greenough (2014a) to be expressed in a similar form as

\begin{equation} \max\left[ {\overline{ \left( \frac{({\cdot})_t}{({\cdot})_f} \right) }} \right] < 2, \end{equation}


where the right-hand side of the inequality is now $2$ instead of $1$ owing to the inclusion of the molecular fluid property in the definition of the total fluid property in the present work. Because the total already contains the physical contribution, requiring a ratio below $2$ is equivalent to requiring the combined artificial and grid contributions to be smaller than the physical contribution. The maximum value of ${\overline { ( {\cdot } )_t / ( {\cdot } )_f }}$ is selected at each time instant in order to obtain a single value of this ratio for each fluid property.
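As an illustration only, the reduction described above (pointwise ratio, averaging, then a maximum) might be evaluated from gridded output along the lines of the Python sketch below. It assumes NumPy arrays indexed $(x, y, z)$ with $z$ aligned with the acceleration, takes the overline to denote an average over $x$–$y$ planes and the maximum to be taken over $z$; these are assumptions, and the function names are hypothetical rather than part of Miranda.

```python
import numpy as np


def max_plane_averaged_ratio(prop_fa, prop_grid, prop_f):
    """Max over z of the x-y plane average of (.)_t / (.)_f.

    prop_fa   -- physical + artificial (AFLES) contribution, shape (nx, ny, nz)
    prop_grid -- a-posteriori grid contribution, shape (nx, ny, nz)
    prop_f    -- physical (molecular) contribution, shape (nx, ny, nz)
    """
    prop_t = prop_fa + prop_grid          # total = f + a + G
    ratio = prop_t / prop_f               # pointwise (.)_t / (.)_f
    plane_avg = ratio.mean(axis=(0, 1))   # assumed overline: x-y plane average
    return float(plane_avg.max())         # one value per time instant


def satisfies_dns_criterion(prop_fa, prop_grid, prop_f, threshold=2.0):
    """True when the max plane-averaged ratio is below the threshold (2 here)."""
    return max_plane_averaged_ratio(prop_fa, prop_grid, prop_f) < threshold


if __name__ == "__main__":
    # Synthetic fields for illustration only (not simulation data).
    mu_f = np.full((4, 4, 8), 1.0e-5)
    mu_a = np.zeros_like(mu_f)
    mu_a[:, :, 3] = 0.5e-5                # artificial viscosity active in one plane
    mu_G = np.full_like(mu_f, 0.2e-5)
    print(max_plane_averaged_ratio(mu_f + mu_a, mu_G, mu_f))  # about 1.7
    print(satisfies_dns_criterion(mu_f + mu_a, mu_G, mu_f))   # True
```

The same function applies unchanged to the diffusivity by passing $D_f + D_a$, $D_G$ and $D_f$.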

The ratios of the total to physical fluid properties for viscosity and diffusivity, calculated through (3.5) on successively refined simulation grids, are plotted as functions of time in figure 3. These simulations begin to satisfy this criterion in the mechanical fields between the $R_4$ and $R_8$ meshes, and further refined meshes demonstrate convergence of the effective viscosity towards the physical viscosity. The criterion is similarly satisfied for the scalar fields starting around the $R_4$ mesh, with successive refinements resulting in the effective diffusivity approaching the physical diffusivity.

The convergence of these simulations may also be established by noting the factor of $\Delta x^4$ in (3.1) and (3.2). If the flow gradients are fully resolved, they become independent of the grid, and the values of these two expressions should then decrease proportionally to $\Delta x^4$. The maximum values of ${\overline {\mu _G / \mu _f}}$ and ${\overline {D_G / D_f}}$ at a non-dimensional time of $\tau =8.2$ are presented in figure 4. These figures show a clear change in the slope of decay between coarse and fine resolutions, which occurs as the flow gradients become fully resolved rather than being influenced by the numerical method. Furthermore, the grid viscosity and diffusivity decay at a rate very close to $\Delta x^4$ on the highest resolution meshes. This is further evidence that these simulations have reached a state where all flow gradients are fully resolved and are not influenced by the numerical method.
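The decay rate discussed above can be quantified as a local exponent between two successive meshes, $p = \ln(r_1/r_2)/\ln(\Delta x_1/\Delta x_2)$, where $r$ is the maximum plane-averaged grid-to-physical ratio and mesh 1 is coarser than mesh 2; $p$ should approach $4$ once the gradients are resolved. The Python sketch below is a minimal illustration: the function names are hypothetical, and the numbers in the example are synthetic, chosen only to mimic a change of slope, not values read from figure 4.

```python
import numpy as np


def local_decay_exponents(dx, ratio):
    """Exponent p_k with ratio ~ dx**p_k between meshes k and k+1.

    dx    -- grid spacings ordered from coarsest to finest
    ratio -- max plane-averaged mu_G/mu_f (or D_G/D_f) on each mesh
    """
    dx = np.asarray(dx, dtype=float)
    ratio = np.asarray(ratio, dtype=float)
    return np.log(ratio[:-1] / ratio[1:]) / np.log(dx[:-1] / dx[1:])


def fitted_decay_exponent(dx, ratio):
    """Least-squares slope of log(ratio) against log(dx) over the given meshes."""
    slope, _intercept = np.polyfit(np.log(dx), np.log(ratio), 1)
    return float(slope)


if __name__ == "__main__":
    # Synthetic values: slow decay on the coarsest pair, dx**4 decay afterwards.
    dx = [1.0, 0.5, 0.25, 0.125]
    ratio = [2.0, 1.0, 1.0 / 16, 1.0 / 256]
    print(local_decay_exponents(dx, ratio))          # approximately [1. 4. 4.]
    print(fitted_decay_exponent(dx[1:], ratio[1:]))  # approximately 4.0
```

A two-point exponent is sensitive to noise in the ratio, whereas a least-squares fit over the finest meshes gives a single summary rate; either can be compared against the expected value of $4$.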

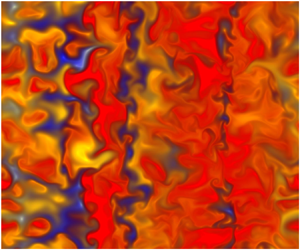

3.2. Qualitative comparison

It is useful to qualitatively compare the experimental results with those obtained from these simulations, as this provides a measure of the similarity between the numerical and physical results, particularly with regard to whether the simulation is in a similar flow regime to the experiment. The present simulations do not attempt to exactly reproduce the experimental state of either Jacobs & Dalziel or Suchandra & Ranjan, so a directly comparable simulation image is unlikely to be found. Instead, multiple planes from the simulation are presented to give a general sense of the state of the mixing layer, including large-scale behaviour as well as small-scale structures. This also conveys how the randomness of the initial perturbation influences the qualitative appearance of the mixing layer. Figure 5 depicts five $x$–$z$ planes of the middle layer mass fraction from the simulation at (a,d) $y=\pm 9\, {\rm cm}$, (b,e) $y=\pm 3.7\, {\rm cm}$ and (c) $y=0\, {\rm cm}$, as well as (f) the Mie scattering visualization image from figure 5(a) of Suchandra & Ranjan (2023). The simulation images depict a subset of the simulation domain in order to produce images with a physical scale similar to that of the experimental image. The horizontal extents of the simulation images are $x=[-15, 15]\, {\rm cm}$ and the vertical extents are $z=[-10,20]\, {\rm cm}$. These images are presented at a non-dimensional time of $\tau =8.2$ in both simulation and experiment.

Figure 5. Visualization of the middle fluid layer at $\tau =8.2$. Images (a–e) depict $x$–$z$ planes of the middle layer mass fraction from the simulation at (a,d) $y=\pm 9\, {\rm cm}$, (b,e) $y=\pm 3.7\, {\rm cm}$ and (c) $y=0\, {\rm cm}$. Also shown is (f) a Mie scattering visualization of the middle layer from figure 5(a) of Suchandra & Ranjan (2023).

One immediately notable difference between the experimental and simulation images is that the experimental images show a high degree of streakiness, suggesting more small-scale entrainment and less diffusive mixing of the unseeded gas than is observed in the simulation. This likely arises from the Mie scattering diagnostic used to generate the experimental image: the tracer particles used in Mie scattering behave as Lagrangian tracers rather than exactly following and diffusing with the gas into which they are seeded (Anderson et al. 2015).

Good qualitative agreement is observed between experiment and simulation in terms of overall mixing layer extents and large-scale structure size. Agreement at medium-to-small scales is also generally good, though the size and number of small-scale structures in the simulation appear slightly different from the experiment depending on the image considered. A significant amount of small-scale mixing can nonetheless be observed in the fluctuations of the mass fraction fields, particularly within the large structures. That said, the Reynolds number analysis in § 3.3.2 and the length scale analysis in § 4.1 indicate good overall agreement between experiment and simulation at this time, so the qualitative differences in scale size are likely an artifact of the specific planes considered. Nevertheless, the range of scales observed indicates that the present simulations are in a similar flow regime to the experiment.

3.3. Experimental comparison

This section compares the present simulations with available experimental data from Jacobs & Dalziel (2005) and Suchandra & Ranjan (2023) in order to validate them. It should be emphasized that the present simulations consider a configuration similar to those experiments but do not attempt to exactly reproduce either experiment. Of particular note is the potential influence of shear in the experiments of Suchandra & Ranjan, discussed in § 2.3. Thus, some of the experimental results may include an influence from shear that is not reproduced in the present simulations, and some disagreement in these cases may be expected.

3.3.1. Mixing layer width

The first metric to be considered is the mixing layer width as a function of time. Equation (2.14) shows that the mixing layer width can be defined in terms of the maximum value of a conserved passive scalar, $C$, with a value of 0 outside the middle layer and an initial value of 1 inside the middle layer. The present work uses the definition $C = \rho Y_2 / \rho _2$, where $\rho$ is the density, $Y_2$ is the mass fraction of the middle fluid and $\rho _2$ is the density of the pure middle fluid, so that $\rho Y_2$ is the partial density of the middle fluid. This definition satisfies the assumptions of (2.14) with the exception that $\rho Y_2$ is not strictly a passive scalar.
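A minimal Python sketch of the scalar defined above, and of the maximum of its horizontally averaged profile, is given below. It assumes fields indexed $(x, y, z)$, assumes the maximum in (2.14) is taken over an $x$–$y$ plane-averaged profile, and treats $\rho_2$ as a single constant reference density of the middle fluid; the function names are hypothetical, and the width formula of (2.14) itself is not reproduced.

```python
import numpy as np


def middle_layer_scalar(rho, Y2, rho2):
    """C = rho * Y2 / rho2; equals 1 in unmixed middle fluid of density rho2, 0 outside."""
    return rho * Y2 / rho2


def max_plane_averaged_scalar(rho, Y2, rho2):
    """Return (max over z of the x-y average of C, the averaged profile)."""
    C = middle_layer_scalar(rho, Y2, rho2)
    C_bar = C.mean(axis=(0, 1))   # assumed: average over x-y planes at each z
    return float(C_bar.max()), C_bar


if __name__ == "__main__":
    # Synthetic, unmixed three-layer column (illustrative densities only).
    rho2 = 1.2
    nz = 6
    rho = np.full((2, 2, nz), 1.0)
    Y2 = np.zeros((2, 2, nz))
    rho[:, :, 2:4] = rho2                 # middle layer occupies z-indices 2 and 3
    Y2[:, :, 2:4] = 1.0
    c_max, profile = max_plane_averaged_scalar(rho, Y2, rho2)
    print(c_max)      # 1.0
    print(profile)    # [0. 0. 1. 1. 0. 0.]
```

As the middle fluid mixes with the outer layers, the averaged profile spreads and its maximum falls below 1, which is the behaviour a width defined through this maximum relies on.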

Figure 6 depicts the mixing layer width as a function of time for (a) the single realization case as a function of mesh resolution and (b) the ensemble averaged case, with a mean mixing layer width and associated 95 % confidence interval of the mean. Also shown are the experimental data points from Suchandra & Ranjan (2023) and Jacobs & Dalziel (2005). The data points from the experiments of Suchandra & Ranjan arise from four separate experimental runs: two runs using a Mie scattering diagnostic at $\tau \approx 8.2$ and $\tau \approx 15.8$, and two additional runs using a particle image velocimetry (PIV)/planar laser induced fluorescence (PLIF) diagnostic at $\tau =11.1$ and $\tau =17.3$. The PIV/PLIF data are indicated by a black dot surrounded by a red circle in figure 6 to distinguish them from the Mie scattering data. Finally, a line corresponding to a value of