Impact Statement

This paper discusses methods born from the fusion of physics and machine learning, known as physics-enhanced machine learning (PEML) schemes. By considering their characteristics, this work clarifies and categorizes PEML techniques, aiding researchers and users to targetedly select methods on the basis of specific problem characteristics and requirements. The discussion of PEML techniques is framed around a survey of recent applications/developments of PEML in the field of structural mechanics. A running example of a Duffing oscillator is used to highlight the traits and potential of diverse PEML approaches. Additionally, code is provided to foster transparency and collaboration. The work advocates the pivotal role of PEML in advancing computing for engineering through the merger of physics-based knowledge and machine learning capabilities.

1. Introduction

With the increase in both computing power and data availability, machine learning (ML) and deep learning (DL) are in scientific and engineering applications (Reich, Reference Reich1997; Hey et al., Reference Hey, Butler, Jackson and Thiyagalingam2020; Zhong et al., Reference Zhong, Zhang, Bagheri, Burken, Gu, Li, Ma, Marrone, Ren and Schrier2021; Cuomo et al., Reference Cuomo, Di Cola, Giampaolo, Rozza, Raissi and Piccialli2022). Such methods have shown enormous potential in yielding efficient and accurate estimates over highly complex domains, such as those with high-dimensionality, or ill-posed problem definitions. The use of data-driven methods is wide reaching in science, from fields such as fluid dynamics (Zhang and Duraisamy, Reference Zhang and Duraisamy2015), geoscience (Bergen et al., Reference Bergen, Johnson, de Hoop and Beroza2019), bioinformatics (Olson et al., Reference Olson, Cava, Mustahsan, Varik and Moore2018), and more (Brunton and Kutz, Reference Brunton and Kutz2022). Data-driven schemes are particularly suited for the case of monitored systems, where the availability of data is ensured via the measurement of engineering quantities through the use of appropriate sensors (Sohn et al., Reference Sohn, Farrar, Hemez, Shunk, Stinemates, Nadler and Czarnecki2003; Farrar and Worden, Reference Farrar and Worden2007; Lynch, Reference Lynch2007).

However, such data-driven models are known to be restricted to the domain of the instance in which the data was collected; that is, they lack generalisability (O’Driscoll et al., Reference O’Driscoll, Lee and Fu2019; Karniadakis et al., Reference Karniadakis, Kevrekidis, Lu, Perdikaris, Wang and Yang2021), as a result of a lack of physical connotation. This challenge is often met when dealing with data-driven approaches for environmental and operational normalization (Cross et al., Reference Cross, Worden and Chen2011; Avendaño-Valencia et al., Reference Avendaño-Valencia, Chatzi, Koo and Brownjohn2017); it is impossible, or impractical, to collect data over the full environmental/operational (E/O) envelope (Figueiredo et al., Reference Figueiredo, Park, Farrar, Worden and Figueiras2011). Particularly to what concerns data gathered from large-scale engineered systems, it is common to meet a scarcity of training samples across a system’s comprehensive operational envelope (Sohn, Reference Sohn2007). These variables frequently exhibit intricate and non-stationary patterns changing over time. Consequently, the limited pool of labeled samples available for training or cross-validation can fall short of accurately capturing the intrinsic relationships for scientific discovery tasks, potentially resulting in misleading extrapolations (D’Amico et al., Reference D’Amico, Myers, Sykes, Voss, Cousins-Jenvey, Fawcett, Richardson, Kermani and Pomponi2019). This scarcity of representative samples sets scientific problems apart from more mainstream concerns like language translation or object recognition, where copious amounts of labeled or unlabeled data have underpinned recent advancements in deep learning (Jordan and Mitchell, Reference Jordan and Mitchell2015; Sharifani and Amini, Reference Sharifani and Amini2023). Discussions on and examples of the challenge posed by comparatively small datasets in scientific machine learning can be found in Shaikhina et al. (Reference Shaikhina, Lowe, Daga, Briggs, Higgins and Khovanova2015), Zhang and Ling (Reference Zhang and Ling2018), and Dou et al. (Reference Dou, Zhu, Merkurjev, Ke, Chen, Jiang, Zhu, Liu, Zhang and Wei2023).

While black box data-driven schemes are often sufficient for delivering an actionable system model, able to act as an estimator or classifier, a common pursuit within the context of mechanics lies in knowledge discovery (Geyer et al., Reference Geyer, Singh and Chen2021; Naser, Reference Naser2021; Cuomo et al., Reference Cuomo, Di Cola, Giampaolo, Rozza, Raissi and Piccialli2022). In this case, it is imperative to deliver models that are explainable/interpretable and generalizable (Linardatos et al., Reference Linardatos, Papastefanopoulos and Kotsiantis2020). This entails revealing and comprehending the cause-and-effect mechanisms underpinning the workings of a particular engineered system. Consequently, even if a black-box model attains marginally superior accuracy, its inability to unravel the fundamental underlying processes renders it inadequate for furthering downstream scientific applications (Langley et al., Reference Langley1994). Conversely, an interpretable model rooted in explainable theories is better poised to guard against the learning of spurious data-driven patterns that lack interpretability (Molnar, Reference Molnar2020). This becomes particularly crucial for practices where predictive models are of the essence for risk-based assessment and decision support, such as the domains of structural health monitoring (Farrar and Worden, Reference Farrar and Worden2012) and resilience (Shadabfar et al., Reference Shadabfar, Mahsuli, Zhang, Xue, Ayyub, Huang and Medina2022).

In modeling complex systems, there is a need for a balanced approach that combines physics-based and data-driven models (Pawar et al., Reference Pawar, San, Nair, Rasheed and Kvamsdal2021). Modern engineering systems, involve complex materials, geometries, and often intricate energy harvesting and vibration mitigation mechanisms, which may be associated with complex mechanics and failure patterns (Duenas-Osorio and Vemuru, Reference Duenas-Osorio and Vemuru2009; Van der Meer et al., Reference Van der Meer, Sluys, Hallett and Wisnom2012; Kim et al., Reference Kim, Jin, Lee and Kang2017). This results in behavior that cannot be trivially described purely on the basis of data observations or via common, and often simplified, modeling assumptions. In efficiently modeling such systems, a viable approach is to integrate the aspect of physics, which is linked to forward modeling with the aspect of learning from data (via machine learning tools), which can account for modeling uncertainties and imprecision. This fusion has been referred to via the term “physics-enhanced machine learning (PEML)” (Faroughi et al., Reference Faroughi, Pawar, Fernandes, Das, Kalantari and Mahjour2022), which we also adopt herein. This term is used to denote that, in some form, prior physics knowledge is embedded to the learner (O’Driscoll et al., Reference O’Driscoll, Lee and Fu2019; Choudhary et al., Reference Choudhary, Lindner, Holliday, Miller, Sinha and Ditto2020; Xiaowei et al., Reference Xiaowei, Shujin and Hui2021). which typically results in more interpretable models.

In this work, we focus on applications of PEML in the domain of structural mechanics; a field that impacts the design, building, monitoring, maintenance, and disuse of critical structures and infrastructures. Some of the greatest impact comes from large-scale infrastructure, such as bridges, wind turbines, and transport systems. However, accurate and robust numerical models of complex structures are non-trivial to establish for tasks such as Digital Twinning (DT) and Structural Health Monitoring (SHM), where both precision and computational efficiency are of the essence (Farrar and Worden, Reference Farrar and Worden2012; Yuan et al., Reference Yuan, Zargar, Chen and Wang2020). This has motivated the increased adoption of ML or DL approaches for generating models of such structures, overcoming the challenges presented by the complexity. Further to the extended use in the DT and SHM contexts, data-driven approaches have further been adopted for optimizing the design of materials and structures (Guo et al., Reference Guo, Yang, Yu and Buehler2021; Sun et al., Reference Sun, Burton and Huang2021). Multi-scale modeling of structures has also benefited from the use of ML approaches, typically via the replacement of computationally costly representative volume element simulations with ML models, such as neural networks (Huang et al., Reference Huang, Fuhg, Weißenfels and Wriggers2020) or support vector regression and random forest regression (Reimann et al., Reference Reimann, Nidadavolu, Hassan, Vajragupta, Glasmachers, Junker and Hartmaier2019).

In order to contextualize PEML for use within the realm of structural mechanics applications, we here employ a characterization that adopts the idea of a spectrum, as opposed to a categorization in purely white, black, and gray box models. This is inspired by the categorization put forth in the recent works of Cross et al. (Reference Cross, Gibson, Jones, Pitchforth, Zhang and Rogers2022), which discusses the placement of PEML methods on a two-dimensional spectrum of physics and data, and Faroughi et al. (Reference Faroughi, Pawar, Fernandes, Das, Kalantari and Mahjour2022) which categorizes these schemes based on the implementation of physics within the ML architecture. In the context of the previously used one-dimensional spectrum, the “darker” end of the said spectrum relies more heavily on data, whilst the “lighter” end relies more heavily on the portion of physics that is considered known (Figure 1). One can envision this one-dimensional spectrum lying equivalently along the diagonal from the red (top left), to the blue (bottom right) corners of the two-dimensional spectrum. Under this definition, “off-the-shelf” ML approaches more customarily fit the black end of the spectrum, while purely analytical solutions would sit on white end of the spectrum (Rebillat et al., Reference Rebillat, Monteiro and Mechbal2023). Generally, the position along this spectrum is driven by both the amount of data available, and the level of physics constraints that are applied. However, it is important to note, that the inclusion of data is not a requirement for PEML. An example of the latter is found in methods such as physics-informed neural networks (PINNs) (Raissi et al., Reference Raissi, Perdikaris and Karniadakis2019), which exploit the capabilities of ML methods to act as forward modelers, where no observations or measured data are necessarily used for the formulation of the loss function. In these cases, prescribed boundary/initial conditions, physics equations, and system inputs are provided and the ML algorithm is used to “learn” the solutions; one such method is the observation-absent PINN (Rezaei et al., Reference Rezaei, Harandi, Moeineddin, Xu and Reese2022).

Figure 1. The spectrum of physics-enhanced machine learning (PEML) schemes is surveyed in this paper.

The reliance on physics can be quantified in terms of the level of strictness of the physics model prescription. The level of strictness refers to the degree to which the prescribed model form incorporates and adheres to the underlying physical principles, and concurrently defines the set of systems which the prescribed model can emulate. For example, when system parameters are assumed known, the physics is more strictly prescribed. Using a solid mechanics example, a strictly prescribed model would correspond to the use of a specified a Finite Element model as the underlying physics structure. This strictness is somewhat relaxed when it is assumed that the model parameters are uncertain and subject to updating (Papadimitriou and Katafygiotis, Reference Papadimitriou and Katafygiotis2004). An example of a low degree of strictness corresponds to prescribing the system output as a function of the derivative of system inputs with respect to time; such a more loose prescription would be capable of emulating structural dynamics system (Bacsa et al., Reference Bacsa, Lai, Liu, Todd and Chatzi2023), as well as further system and problem types, such as heat transfer (Dhadphale et al., Reference Dhadphale, Unni, Saha and Sujith2022), or virus spreading (Núñez et al., Reference Núñez, Barreiro, Barrio and Rackauckas2023). In this work, the vertical axis of the PEML spectrum is defined in terms of the reliance on the imposed physics-based model form, which is earlier referred to as the level of strictness. A separate notion to consider, which is not reflected in the included axes, pertains to the level of constraint of the employed PEML architecture, which describes the degree to which the learner must adhere to a prescribed model. As an example, residual modeling techniques (Christodoulou and Papadimitriou, Reference Christodoulou and Papadimitriou2007) have a relatively low level of constraint, as the solution space is not limited to that which is posited by the physics model. The combination of the strictness in the prescription of a model form and the learner constraints defines the overall flexibility of the PEML scheme; this refers to its capability to emulate systems of varying types and complexities (Karniadakis et al., Reference Karniadakis, Kevrekidis, Lu, Perdikaris, Wang and Yang2021).

When selecting the type, or “genre” of PEML model, the confidence in the physics that is known a priori guides the selection of the appropriate reliance on physics in the form of the level of strictness of the prescribed model and/or constraints of the learner. Different prior knowledge can be in the form of an appropriate model structure, that is an equivalent MDOF system, or an appropriate finite element model, or it could be that appropriate material/property values are prescribed. The term appropriate here refers to the availability of adequate information on models and parameters, which approximate well the behavior and traits of the true system. If one wishes to delve deeper into such a categorization, the level of knowledge can be appraised in further sub-types, that is, the discrete number of, or confidence in, known material parameters, or the complexity of the model in relation to the real structure.

The remainder of the paper is organized into methods corresponding to different collective areas over the PEML spectrum, as indicated in Figure 1. We initiate with a discussion and corresponding examples that are closer to a white-box approach in Section 3, where physics-based models of specified form are fused with data via a Bayesian Filtering (BF) approach. This is followed by Section 4, which shows a brief survey of solely data-driven methods, which embed no prior knowledge on the underlying physics. After introducing instances of methods that are situated near the extreme corners of the spectrum (black- and white-box), the main motivation of the paper, namely the overview of PEML schemes initiates. The breakdown into the subsections of PEML techniques is driven by a combination of the reliance on the prescription of the physics model form and the method of physics embedding, these are broken down below and their naming conventions are explained.

Firstly, Section 5 surveys and discusses physics-guided machine learning (PGML) techniques, in which the physics model prescriptions are embedded as proposed solutions, and act in parallel to the data-driven learner in the full PEML model architecture. PGML schemes steer the learner toward a desired solution by prescribing models with a relatively large degree of strictness, therefore neighboring the similarly strict construct of BF methods. Physics-guided methods often benefit from a reduced data requirement since the physics embedding allows for estimation in the absence of dense observations from the system. However, depending on the formulation, or the type of method used, such schemes can still suffer from data-sparsity. In Section 6, physics-informed methods are presented, which correspond to a heavier reliance on data, while still retaining a moderate reliance on the prescribed physics. These schemes are so named as physics is embedded as prior information, from which an objective or loss function is constructed, which the learner is prompted to follow. Compared to physics-guided methods, in physics-informed schemes, the physics is embedded in a less constrained manner, that is it is weakly imposed. In this sense, such schemes are often formed by way of minimizing a loss or objective function, which vanishes when all the imposed physics are satisfied. The survey portion of the paper concludes with a discussion on physics-encoded learners in Section 7. Physics-encoded methods embed the imposed physics directly within the architecture of the learner, via selection of operators, kernels, or transforms. As a result, these methods are often less reliant on the model form (e.g., they may simply impose derivatives), but they are highly constrained in the fact that this imposed model is always adhered to. The position of PgNNs and PINNs compared to constrained Gaussian Processes (CGPs) is less indicative of the higher requirement of CGPs for data, but more indicative of the lower requirement of PgNNs and PINNs for data, as these methods are capable of proposing viable solutions with fewer data. However, this is dependent on the physics and ML model form, thus arrows are included to indicate their mobility on the spectrum.

Throughout the paper, a working example of a single-degree-of-freedom Duffing oscillator, the details of which are offered in Section 2, is used to demonstrate the methods surveyed. As previously mentioned, the code used to generate the fundamental versions of these methods is provided alongside this paper in a GitHub repository.Footnote 1 This code is written in Python and primarily built with the freely available Pytorch package (Paszke et al., Reference Paszke, Gross, Massa, Lerer, Bradbury, Chanan, Killeen, Lin, Gimelshein, Antiga, Desmaison, Kopf, Yang, DeVito, Raison, Tejani, Chilamkurthy, Steiner, Fang, Bai, Chintala, Wallach, Larochelle, Beygelzimer, d’Alché-Buc, Fox and Garnett2019).

2. A working example

Aiding the survey and discussion in each aspect of PEML, an example of a dynamic system will be used throughout the paper to provide a tangible example for the reader. A variety of PEML methods will be applied to the presented model, the aim of which is not to showcase any particularly novel applications of the methods, but to help illustrate and discuss the characteristics of the PEML variants for a simple example, while highlighting emerging schemes and their placement across the spectrum of Figure 1. To this end, we employ a single-degree-of-freedom (SDOF) Duffing oscillator, shown in Figure 2a, as a running example. The equation of motion of this oscillator is defined as,

where the values for the physical parameters

![]() $ m $

,

$ m $

,

![]() $ c $

,

$ c $

,

![]() $ k $

, and

$ k $

, and

![]() $ {k}_3 $

are 10 kg, 1Ns/m, 15 N/m, and 100N/m3, respectively. To be consistent with problem formulations in this paper, this is defined in state-space form as follows:

$ {k}_3 $

are 10 kg, 1Ns/m, 15 N/m, and 100N/m3, respectively. To be consistent with problem formulations in this paper, this is defined in state-space form as follows:

where

![]() $ \mathbf{z}={\left\{u,\dot{u}\right\}}^T $

is the system state, and the state matrices are,

$ \mathbf{z}={\left\{u,\dot{u}\right\}}^T $

is the system state, and the state matrices are,

$$ \mathbf{A}=\left[\begin{array}{cc}0& 1\\ {}-{m}^{-1}k& -{m}^{-1}c\end{array}\right],\hskip2em {\mathbf{A}}_n=\left[\begin{array}{c}0\\ {}-{m}^{-1}{k}_3\end{array}\right],\hskip2em \mathbf{B}=\left[\begin{array}{c}0\\ {}{m}^{-1}\end{array}\right]. $$

$$ \mathbf{A}=\left[\begin{array}{cc}0& 1\\ {}-{m}^{-1}k& -{m}^{-1}c\end{array}\right],\hskip2em {\mathbf{A}}_n=\left[\begin{array}{c}0\\ {}-{m}^{-1}{k}_3\end{array}\right],\hskip2em \mathbf{B}=\left[\begin{array}{c}0\\ {}{m}^{-1}\end{array}\right]. $$

Figure 2. (a) Diagram of the working example used throughout this paper, corresponding to a Duffing Oscillator; instances of the (b) displacement (top) and forcing signal (bottom) produced during simulation.

For this example, the forcing signal of the system consists of a random-phase multi-sine signal containing frequencies of 0.7, 0.85, 1.6, and 1.8 rad/s. The Duffing oscillator is simulated using a 4th-order Runge–Kutta integration. The forcing and resulting displacement for 1024 samples at an equivalent sample rate of 8.525 Hz is shown in Figure 2b. These data are then used as the ground truth for the examples shown throughout the paper.

2.1. A note on data and domains

The interdisciplinary nature of PEML can lead to confusion regarding the terms defining the data and domain for the model. To enhance clarity for readers with diverse backgrounds, we provide clarifications on the nomenclature in this paper. Firstly, data refers to all measured or known values that are used in the overall architecture/methodology, not exclusively that which is used as inputs to the ML model, or as target observations. This may include, but is not limited to, measured data, system parameters, and scaling information. In the context of the example above, the data encompasses measured values of the state,

![]() $ {\mathbf{z}}^{\ast } $

, and force,

$ {\mathbf{z}}^{\ast } $

, and force,

![]() $ {f}^{\ast } $

, along with system parameters

$ {f}^{\ast } $

, along with system parameters

![]() $ m,c,k,{k}_3 $

(where the asterisk denotes observations of a value). Importantly, the use of the term observation data is akin to the classic ML definition of training data, which is the scope of data used in traditional learning paradigms which minimize the discrepancy between the model output and some observed target values. This change is employed here as the training stages in many instances of PEML demonstrate the learner’s ability to make predictions beyond the scope of these observations of target values.

$ m,c,k,{k}_3 $

(where the asterisk denotes observations of a value). Importantly, the use of the term observation data is akin to the classic ML definition of training data, which is the scope of data used in traditional learning paradigms which minimize the discrepancy between the model output and some observed target values. This change is employed here as the training stages in many instances of PEML demonstrate the learner’s ability to make predictions beyond the scope of these observations of target values.

This goal of extending the scope of prediction also prompts a clarification of the term domain. The domain here is similar to the definition of the domain of a function, representing the set of values passed as input to the model—in this case, the set of time values,

![]() $ t $

. The domain where measured values of the model output are available, is termed the observation domain,

$ t $

. The domain where measured values of the model output are available, is termed the observation domain,

![]() $ {\Omega}_o $

. The overall domain in which the model is trained, and predictions can be made, is the collocation domain,

$ {\Omega}_o $

. The overall domain in which the model is trained, and predictions can be made, is the collocation domain,

![]() $ {\Omega}_c $

. For example, if one provides measurements of the state for the first third of the signal in Figure 2b but proposes the model to learn (and therefore predict) over the full signal range, the observation domain would be

$ {\Omega}_c $

. For example, if one provides measurements of the state for the first third of the signal in Figure 2b but proposes the model to learn (and therefore predict) over the full signal range, the observation domain would be

![]() $ {\Omega}_o\in 0\le t\le 40s $

and the collocation domain

$ {\Omega}_o\in 0\le t\le 40s $

and the collocation domain

![]() $ {\Omega}_c\in 0\le t\le 120s $

. It is crucial to note that these domains are not restricted to a range (scope), and the discrete nature of the observation domain influences the motivation for interpolation schemes. For example, in sparse data recovery schemes, the observation domain can be defined in a discrete manner. Figure 3 provides a visualization of commonly used domain types for a selection of schemes which can employ PEML methods.

$ {\Omega}_c\in 0\le t\le 120s $

. It is crucial to note that these domains are not restricted to a range (scope), and the discrete nature of the observation domain influences the motivation for interpolation schemes. For example, in sparse data recovery schemes, the observation domain can be defined in a discrete manner. Figure 3 provides a visualization of commonly used domain types for a selection of schemes which can employ PEML methods.

Figure 3. Visualization of domain definitions for schemes and motivations that can employ PEML. The blue areas represent the continuous collocation domain, and the red dots represent the coverage and sparsity of the discrete observation domain. The dashed and solid lines represent the scope of the collocation and observation domains, respectively.

3. White box case: physics-based Bayesian filtering

Prior to overviewing the mentioned PEML classes and their adoption within the SHM and twinning context, we briefly recall a class of methods, which is situated near the white-box end of the spectrum in Figure 1, that is, Bayesian Filtering (BF). Perhaps one of the most typical examples of a hybrid approach to monitoring of dynamical systems is delivered in such BF estimators, which couple a system model (typically in state-space form), with sparse and noisy monitoring data. The employed state-space model can be either derived via a data-driven approach, for example, via the use of a system identification approach such as a Stochastic Subspace Identification (Peeters and De Roeck, Reference Peeters and De Roeck2001), or alternatively, it may be inferred on the basis of a priori assumed numerical (e.g., finite element) model. We here refer to the former case, which we refer to as physics-based Bayesian Filtering. Such Bayesian filters can be used for estimation tasks of different complexity, including pure response (state) estimation, joint or dual state-parameter estimation (Chatzi and Smyth, Reference Chatzi and Smyth2009), input-state estimation (Eftekhar Azam et al., Reference Eftekhar Azam, Chatzi and Papadimitriou2015; Maes et al., Reference Maes, Gillijns and Lombaert2018; Sedehi et al., Reference Sedehi, Papadimitriou, Teymouri and Katafygiotis2019; Vettori et al., Reference Vettori, Lorenzo, Peeters and Chatzi2023b), joint state-parameter-input identification (Dertimanis et al., Reference Dertimanis, Chatzi, Azam and Papadimitriou2019), or damage detection (Erazo et al., Reference Erazo, Sen, Nagarajaiah and Sun2019). Bayesian filters draw their potency from their capacity to deal with uncertainties stemming from modeling errors, disturbances, lacking information on the structural system’s configuration, and noise corruption. However, they are limited by the requirement for a model structure, which should be representative of the system’s dynamics.

In the general case, the equation of motion of a multi-degree of freedom linear time-invariant (LTI) dynamic system can be formulated as:

where

![]() $ \mathbf{u}(t)\in {\mathrm{\mathbb{R}}}^{n_{dof}} $

is the vector of displacements, often linked to the Degrees of Freedom (DOFs) of a numerical system model,

$ \mathbf{u}(t)\in {\mathrm{\mathbb{R}}}^{n_{dof}} $

is the vector of displacements, often linked to the Degrees of Freedom (DOFs) of a numerical system model,

![]() $ \mathbf{M}\in {\mathrm{\mathbb{R}}}^{n_{dof}\times {n}_{dof}} $

,

$ \mathbf{M}\in {\mathrm{\mathbb{R}}}^{n_{dof}\times {n}_{dof}} $

,

![]() $ \mathbf{D}\in {\mathrm{\mathbb{R}}}^{n_{dof}\times {n}_{dof}} $

, and

$ \mathbf{D}\in {\mathrm{\mathbb{R}}}^{n_{dof}\times {n}_{dof}} $

, and

![]() $ \mathbf{K}\in {\mathrm{\mathbb{R}}}^{n_{dof}\times {n}_{dof}} $

denote the mass, damping, and stiffness matrices respectively;

$ \mathbf{K}\in {\mathrm{\mathbb{R}}}^{n_{dof}\times {n}_{dof}} $

denote the mass, damping, and stiffness matrices respectively;

![]() $ \mathbf{f}(t)\in {\mathrm{\mathbb{R}}}^{n_i} $

(with

$ \mathbf{f}(t)\in {\mathrm{\mathbb{R}}}^{n_i} $

(with

![]() $ {n}_i $

representing the number of loads) is the input vector and

$ {n}_i $

representing the number of loads) is the input vector and

![]() $ {\mathbf{S}}_i\in {\mathrm{\mathbb{R}}}^{n_{dof}\times {n}_i} $

is a Boolean input shape matrix for load assignment. As an optional step, a Reduced Order Model (ROM) can be adopted, often derived via superposition of modal contributions

$ {\mathbf{S}}_i\in {\mathrm{\mathbb{R}}}^{n_{dof}\times {n}_i} $

is a Boolean input shape matrix for load assignment. As an optional step, a Reduced Order Model (ROM) can be adopted, often derived via superposition of modal contributions

![]() $ \mathbf{u}(t)\approx \varPsi \mathbf{p}(t) $

, where

$ \mathbf{u}(t)\approx \varPsi \mathbf{p}(t) $

, where

![]() $ \varPsi \in {\mathrm{\mathbb{R}}}^{n_{dof}\times {n}_r} $

is the reduction basis and

$ \varPsi \in {\mathrm{\mathbb{R}}}^{n_{dof}\times {n}_r} $

is the reduction basis and

![]() $ \mathbf{p}\in {\mathrm{\mathbb{R}}}^{n_r} $

is the vector of the generalized coordinates of the system, with

$ \mathbf{p}\in {\mathrm{\mathbb{R}}}^{n_r} $

is the vector of the generalized coordinates of the system, with

![]() $ {n}_r $

denoting the reduced system dimension. This allows to rewrite equation (3) as:

$ {n}_r $

denoting the reduced system dimension. This allows to rewrite equation (3) as:

where the mass, damping, stiffness, and input shape matrices of the reduced system are obtained as

![]() $ {\mathbf{M}}_r={\varPsi}^T\mathbf{M}\varPsi $

,

$ {\mathbf{M}}_r={\varPsi}^T\mathbf{M}\varPsi $

,

![]() $ {\mathbf{D}}_r={\varPsi}^T\mathbf{D}\varPsi $

,

$ {\mathbf{D}}_r={\varPsi}^T\mathbf{D}\varPsi $

,

![]() $ {\mathbf{K}}_r={\varPsi}^T\mathbf{K}\varPsi $

, and

$ {\mathbf{K}}_r={\varPsi}^T\mathbf{K}\varPsi $

, and

![]() $ {\mathbf{S}}_r={\varPsi}^T{\mathbf{S}}_i $

.

$ {\mathbf{S}}_r={\varPsi}^T{\mathbf{S}}_i $

.

Assuming the availability of response measurements,

![]() $ {\mathbf{x}}_k\in {\mathrm{\mathbb{R}}}^m $

, at a finite set of

$ {\mathbf{x}}_k\in {\mathrm{\mathbb{R}}}^m $

, at a finite set of

![]() $ m $

DOFs, such an LTI system can be eventually brought into a combined deterministic-stochastic state-space model, which forms the basis of application of Bayesian filtering schemes (Vettori et al., Reference Vettori, Di Lorenzo, Peeters, Luczak and Chatzi2023a):

$ m $

DOFs, such an LTI system can be eventually brought into a combined deterministic-stochastic state-space model, which forms the basis of application of Bayesian filtering schemes (Vettori et al., Reference Vettori, Di Lorenzo, Peeters, Luczak and Chatzi2023a):

$$ \left\{\begin{array}{l}{\mathbf{z}}_k={\mathbf{A}}_d{\mathbf{z}}_{k-1}+{\mathbf{B}}_d{\mathbf{f}}_{k-1}+{\mathbf{w}}_{k-1}\\ {}{\mathbf{x}}_k={\mathbf{Cz}}_k+{\mathbf{Gf}}_k+{\mathbf{v}}_k.\end{array}\right., $$

$$ \left\{\begin{array}{l}{\mathbf{z}}_k={\mathbf{A}}_d{\mathbf{z}}_{k-1}+{\mathbf{B}}_d{\mathbf{f}}_{k-1}+{\mathbf{w}}_{k-1}\\ {}{\mathbf{x}}_k={\mathbf{Cz}}_k+{\mathbf{Gf}}_k+{\mathbf{v}}_k.\end{array}\right., $$

where the state vector

![]() $ {\mathbf{z}}_k={[{{\mathbf{p}}_k}^T\hskip0.5em {{\dot{\mathbf{p}}}_k}^T]}^T\in {\unicode{x211D}}^{2{n}_r} $

reflects a random variable following a Gaussian distribution with mean

$ {\mathbf{z}}_k={[{{\mathbf{p}}_k}^T\hskip0.5em {{\dot{\mathbf{p}}}_k}^T]}^T\in {\unicode{x211D}}^{2{n}_r} $

reflects a random variable following a Gaussian distribution with mean

![]() $ {\hat{\mathbf{z}}}_k\in {\mathrm{\mathbb{R}}}^{2{n}_r} $

and covariance matrix

$ {\hat{\mathbf{z}}}_k\in {\mathrm{\mathbb{R}}}^{2{n}_r} $

and covariance matrix

![]() $ {\mathbf{P}}_k\in {\mathrm{\mathbb{R}}}^{2{n}_r\times 2{n}_r} $

. Stationary zero-mean uncorrelated white noise sources

$ {\mathbf{P}}_k\in {\mathrm{\mathbb{R}}}^{2{n}_r\times 2{n}_r} $

. Stationary zero-mean uncorrelated white noise sources

![]() $ {\mathbf{w}}_k $

and

$ {\mathbf{w}}_k $

and

![]() $ {\mathbf{v}}_k $

of respective covariance

$ {\mathbf{v}}_k $

of respective covariance

![]() $ {\mathbf{Q}}_k:{\mathbf{w}}_k\sim \mathcal{N}\left(0,{\mathbf{Q}}_k\right) $

and

$ {\mathbf{Q}}_k:{\mathbf{w}}_k\sim \mathcal{N}\left(0,{\mathbf{Q}}_k\right) $

and

![]() $ {\mathbf{R}}_k:{\mathbf{v}}_k\sim \mathcal{N}\left(0,{\mathbf{R}}_k\right) $

are introduced to account for model uncertainties and measurement noise. A common issue in BF schemes lies in calibrating the defining noise covariance parameters, which is often tackled via offline schemes (Odelson et al., Reference Odelson, Rajamani and Rawlings2006), or online variants, as those proposed recently for more involved inference tasks (Kontoroupi and Smyth, Reference Kontoroupi and Smyth2016; Yang et al., Reference Yang, Nagayama and Xue2020; Vettori et al., Reference Vettori, Di Lorenzo, Peeters, Luczak and Chatzi2023a).

$ {\mathbf{R}}_k:{\mathbf{v}}_k\sim \mathcal{N}\left(0,{\mathbf{R}}_k\right) $

are introduced to account for model uncertainties and measurement noise. A common issue in BF schemes lies in calibrating the defining noise covariance parameters, which is often tackled via offline schemes (Odelson et al., Reference Odelson, Rajamani and Rawlings2006), or online variants, as those proposed recently for more involved inference tasks (Kontoroupi and Smyth, Reference Kontoroupi and Smyth2016; Yang et al., Reference Yang, Nagayama and Xue2020; Vettori et al., Reference Vettori, Di Lorenzo, Peeters, Luczak and Chatzi2023a).

Bayesian filters exploit this hybrid formulation to extract an improved posterior estimate of the complete response of the system

![]() $ {\mathbf{z}}_k $

, that is even in unmeasured DOFs, on the basis of a “predict” and “update” procedure. Variants of these filters are formed to operate on linear (Kalman Filter—KF) or nonlinear systems (Extended KF—EKF, Unscented KF—UKF, Particle Filter—PF, etc.) for diverse estimation tasks. Moreover, depending on the level of reduction achieved, BF estimators can feasibly operate in real, or near real-time. It becomes, however, obvious that these estimators are restricted by the rather strictly imposed model form.

$ {\mathbf{z}}_k $

, that is even in unmeasured DOFs, on the basis of a “predict” and “update” procedure. Variants of these filters are formed to operate on linear (Kalman Filter—KF) or nonlinear systems (Extended KF—EKF, Unscented KF—UKF, Particle Filter—PF, etc.) for diverse estimation tasks. Moreover, depending on the level of reduction achieved, BF estimators can feasibly operate in real, or near real-time. It becomes, however, obvious that these estimators are restricted by the rather strictly imposed model form.

In order to exemplify the functionality of such Bayesian filters for the purpose of system identification and state (response) prediction, we present the application of two nonlinear variants of the Kalman Filter on our Duffing oscillator working example. The system is simulated using the model parameters and inputs defined in Section 2. We further assume that the system is monitored via the use of a typical vibration sensor, namely an accelerometer, which delivers a noisy measurement of

![]() $ \overset{"}{u} $

. We further contaminate the simulated acceleration with zero mean Gaussian noise corresponding to 8.5% Root Mean Square (RMS) noise to signal ratio. For the purpose of this simulation, we assume accurate knowledge of the model form describing the dynamics, on the basis of engineering intuition. However, we assume that the model parameters are unknown, or rather uncertain. The UKF and PF are adopted in order to identify the unknown system parameters, namely the linear stiffness

$ \overset{"}{u} $

. We further contaminate the simulated acceleration with zero mean Gaussian noise corresponding to 8.5% Root Mean Square (RMS) noise to signal ratio. For the purpose of this simulation, we assume accurate knowledge of the model form describing the dynamics, on the basis of engineering intuition. However, we assume that the model parameters are unknown, or rather uncertain. The UKF and PF are adopted in order to identify the unknown system parameters, namely the linear stiffness

![]() $ k $

, mass,

$ k $

, mass,

![]() $ m $

, and nonlinear stiffness

$ m $

, and nonlinear stiffness

![]() $ {k}_3 $

. The parameter identification is achieved via augmenting the state vector to include the time-invariant parameters. A random walk assumption is made on the evolution of the parameters. The UKF employs a further augmentation of the state to include two dimensions for the process and measurement noise sources, resulting in this case in

$ {k}_3 $

. The parameter identification is achieved via augmenting the state vector to include the time-invariant parameters. A random walk assumption is made on the evolution of the parameters. The UKF employs a further augmentation of the state to include two dimensions for the process and measurement noise sources, resulting in this case in

![]() $ 2\times 9+1=19 $

Sigma points to simulate the system. It further initiates from an initial guess on the unknown parameters, set as:

$ 2\times 9+1=19 $

Sigma points to simulate the system. It further initiates from an initial guess on the unknown parameters, set as:

![]() $ {k}_0=1 $

N/m,

$ {k}_0=1 $

N/m,

![]() $ {c}_0=0.5 $

Ns/m,

$ {c}_0=0.5 $

Ns/m,

![]() $ {k}_{30}=40 $

N/m3, which is significantly off with respect to the true parameters. The PF typically employs a larger number of sample points in an effort to more appropriately approximate the posterior distribution of the state. We here employ 2000 sample points and initiate the parameter space in the interval

$ {k}_{30}=40 $

N/m3, which is significantly off with respect to the true parameters. The PF typically employs a larger number of sample points in an effort to more appropriately approximate the posterior distribution of the state. We here employ 2000 sample points and initiate the parameter space in the interval

![]() $ k\in \left\{5\;20\right\} $

N/m,

$ k\in \left\{5\;20\right\} $

N/m,

![]() $ c\in \left\{0.5\;2\right\} $

Ns/m,

$ c\in \left\{0.5\;2\right\} $

Ns/m,

![]() $ {k}_3\in \left\{50\;160\right\} $

N/m3. In all cases, a zero mean Gaussian process noise of covariance

$ {k}_3\in \left\{50\;160\right\} $

N/m3. In all cases, a zero mean Gaussian process noise of covariance

![]() $ 1e-18 $

(added to the velocity states) and a zero mean Gaussian measurement noise of covariance

$ 1e-18 $

(added to the velocity states) and a zero mean Gaussian measurement noise of covariance

![]() $ 1e-18 $

is assumed. Figure 4 demonstrates the results of the filter for the purpose of state estimation (left subplot) and parameter estimation (right subplot). The plotted result reveals a closer matching of the states for the UKF, while both filters sufficiently approximate the unknown parameters. More details on the implementation of these filters are found in Chatzi and Smyth (Reference Chatzi and Smyth2009), Chatzi et al. (Reference Chatzi, Smyth and Masri2010), and Kamariotis et al. (Reference Kamariotis, Sardi, Papaioannou, Chatzi and Straub2023), while a Python library is made available in association with the following tutorial on Nonlinear Bayesian filtering (Tatsis et al., Reference Tatsis, Dertimanis and Chatzi2023).

$ 1e-18 $

is assumed. Figure 4 demonstrates the results of the filter for the purpose of state estimation (left subplot) and parameter estimation (right subplot). The plotted result reveals a closer matching of the states for the UKF, while both filters sufficiently approximate the unknown parameters. More details on the implementation of these filters are found in Chatzi and Smyth (Reference Chatzi and Smyth2009), Chatzi et al. (Reference Chatzi, Smyth and Masri2010), and Kamariotis et al. (Reference Kamariotis, Sardi, Papaioannou, Chatzi and Straub2023), while a Python library is made available in association with the following tutorial on Nonlinear Bayesian filtering (Tatsis et al., Reference Tatsis, Dertimanis and Chatzi2023).

Figure 4. (a) State (response) estimation results for the nonlinear SDOF working example, assuming the availability of acceleration measurements and precise knowledge of the model form, albeit under the assumption of unknown model parameters. The performance is illustrated for use of the UKF and PF, contrasted against the reference simulation; (b) Parameter estimation convergence via use of the UKF and PF contrasted against the reference values for the nonlinear SDOF working example.

Recent advances/applications of Bayesian filtering in structural mechanics include the following works. The problem of virtual sensing has been further explored in Tatsis et al. (Reference Tatsis, Dertimanis, Papadimitriou, Lourens and Chatzi2021, Reference Tatsis, Agathos, Chatzi and Dertimanis2022) adopting a sub-structuring formulation, which allows to tackle problems, where only a portion of the domain is monitored. For clarity, sub-structuring involves dividing a complex domain into smaller, more manageable components, which are solved independently before integrated back into the full structure. Employing a lower level of reliance on the physics model form, by embedding physical concepts in the form of physics-domain knowledge, Tchemodanova et al. (Reference Tchemodanova, Sanayei, Moaveni, Tatsis and Chatzi2021) proposed a novel approach, where they combined a modal expansion with an augmented Kalman filter for output-only virtual sensing of vibration measurements. Greś et al. (Reference Greś, Döhler, Andersen and Mevel2021) proposed a Kalman filter-based approach to perform subspace identification on output-only data, where in the input force is unmeasured. In this case, only the periodic nature of the input force is known, and so this (unparameterized) information is also embedded within the model learning architecture. In comparison to filtering techniques with an assumed known force, this approach is less reliant on the physics model prescription, and so this approach has the advantage that it may be applied to a wide variety of similar problems/instances. The problem of unknown inputs has recently led to the adoption of Gaussian Process Latent Force Models (GPLFMs), which move beyond the typical assumption of a random walk model, that are meant to describe the evolution of the input depending on the problem at hand (Nayek et al., Reference Nayek, Chakraborty and Narasimhan2019; Rogers et al., Reference Rogers, Worden and Cross2020; Vettori et al., Reference Vettori, Lorenzo, Peeters and Chatzi2023b; Zou et al., Reference Zou, Lourens and Cicirello2023). Such an approach now moves toward a gray-like method (as discussed in the later sections), since Gaussian Processes, which are trained on sample data are required for data-driven inference and characterization of the unknown input model.

In relaxing the strictness of the imposed physics model, BF inference schemes can include model parameters in the inference task. Such an example is delivered in joint or dual state-parameter estimation methods (Dertimanis et al., Reference Dertimanis, Chatzi, Azam and Papadimitriou2019; Teymouri et al., Reference Teymouri, Sedehi, Katafygiotis and Papadimitriou2023), which are further extended to state-input-parameter estimation schemes. In this context, Naets et al. (Reference Naets, Croes and Desmet2015) couple reduced-order modeling with Extended Kalman Filters to achieve online state-input-parameter estimation, while Dertimanis et al. (Reference Dertimanis, Chatzi, Azam and Papadimitriou2019) combine a dual and an Unscented Kalman filter, to this end; the former for estimating the unknown structural excitation, and the latter for the combined state-parameter estimation. Naturally, when the inference task targets multiple quantities, it is important to ensure sufficiency of the available observations, a task which can be achieved by checking appropriate observability identifiability, and invertibility criteria (Chatzis et al., Reference Chatzis, Chatzi and Smyth2015; Maes et al., Reference Maes, Chatzis and Lombaert2019; Shi and Chatzis, Reference Shi and Chatzis2022). Feng et al. (Reference Feng, Li and Lu2020) proposed a “sparse Kalman filter,” using Bayesian logic, to effectively localize and reconstruct time-domain force signals on a fixed beam. As another example in the context of damage detection strategies, Nandakumar and Jacob (Reference Nandakumar and Jacob2021) presented a method for identifying cracks in a structure, from the state space model, using a combined Observer Kalman filter identification, and Eigen Realization Algorithm methods. Another approach to overcome to challenge of model-system discrepancy is to utilize ML approaches along with BF techniques to “bridge the gap,” but more will be discussed on this in Section 5, as these are no longer white-box models.

4. The black box case: deep learning models

Many modern ML methods are based on, or form extensions of, perhaps one of the most well-known methods, the neural network (NN). The NN can be used as a universal function approximator, where more complex models will generally require deeper and/or wider networks. When using multiple layers within the network, the method falls in the deep-learning (DL) class. For a regression problem, the aim of an NN is to determine an estimate of the mapping from the input

![]() $ \mathbf{x} $

, to the output

$ \mathbf{x} $

, to the output

![]() $ \mathbf{y} $

. A fully connected, feed-forward NN is formed by

$ \mathbf{y} $

. A fully connected, feed-forward NN is formed by

![]() $ N $

hidden layers, each with

$ N $

hidden layers, each with

![]() $ {n}^{(N)} $

nodes. The nodes of each layer are connected to every node in the next layer and the values are passed through an activation function

$ {n}^{(N)} $

nodes. The nodes of each layer are connected to every node in the next layer and the values are passed through an activation function

![]() $ \sigma $

. For

$ \sigma $

. For

![]() $ N $

hidden layers, the output of the neural network can be defined as,

$ N $

hidden layers, the output of the neural network can be defined as,

where

![]() $ \mathbf{W}=\left\{{\mathbf{w}}^1,\dots, {\mathbf{w}}^N\right\} $

and

$ \mathbf{W}=\left\{{\mathbf{w}}^1,\dots, {\mathbf{w}}^N\right\} $

and

![]() $ \mathbf{B}=\left\{{\mathbf{b}}^1,\dots, {\mathbf{b}}^N\right\} $

are the weights and biases of the network, respectively. The aim of the training stage is to then determine the network parameters

$ \mathbf{B}=\left\{{\mathbf{b}}^1,\dots, {\mathbf{b}}^N\right\} $

are the weights and biases of the network, respectively. The aim of the training stage is to then determine the network parameters

![]() $ \Theta =\left\{\mathbf{W},\mathbf{B}\right\} $

, which is done by minimizing an objective function defined so that when the value vanishes, the solution is satisfied.

$ \Theta =\left\{\mathbf{W},\mathbf{B}\right\} $

, which is done by minimizing an objective function defined so that when the value vanishes, the solution is satisfied.

$$ {L}_o={\left\langle {\mathbf{y}}^{\ast }-{\mathcal{N}}_{\mathbf{y}}\right\rangle}_{\Omega_o},\hskip2em {\left\langle \bullet \right\rangle}_{\Omega_{\kappa }}=\frac{1}{N_{\kappa }}\sum \limits_{x\in {\Omega}_{\kappa }}{\left\Vert \bullet \right\Vert}^2. $$

$$ {L}_o={\left\langle {\mathbf{y}}^{\ast }-{\mathcal{N}}_{\mathbf{y}}\right\rangle}_{\Omega_o},\hskip2em {\left\langle \bullet \right\rangle}_{\Omega_{\kappa }}=\frac{1}{N_{\kappa }}\sum \limits_{x\in {\Omega}_{\kappa }}{\left\Vert \bullet \right\Vert}^2. $$

At the other end of the spectrum of a white-box (model-based) approach, where the system dynamics are transparent and therefore largely prescribed, thus lies a black-box approach, employing naive DL schemes to achieve stochastic representations of monitored systems. Linking to the BF structure described previously, Variational Autoencoders (VAE) have been extended with a temporal transition process on the latent space dynamics in order to infer dynamic models from sequential observation data (Bayer and Osendorfer, Reference Bayer and Osendorfer2015). This approach offers greater flexibility than a scheme that relies on a prescribed physics-based model form, since VAEs are more apt to learning arbitrary nonlinear dynamics. The obvious shortcoming is that, typically, the inferred latent space need not be linked to coordinates of physical connotation. This renders such schemes more suitable for inferring dynamical features, and even condition these on operational variables (Mylonas et al., Reference Mylonas, Abdallah and Chatzi2021), but largely unsuitable for reproducing system response in a virtual sensing context. Following such a scheme, Stochastic Recurrent Networks (STORN) (Bayer and Osendorfer, Reference Bayer and Osendorfer2015) and Deep Markov Models (DMMs) (Krishnan et al., Reference Krishnan, Shalit and Sontag2016), which are further referred to as Dynamic Variational Autoencoders (DVAEs), have been applied for inferring dynamics in a black box context with promising results in speech analysis, music synthesis, medical diagnosis and dynamics (Vlachas et al., Reference Vlachas, Arampatzis, Uhler and Koumoutsakos2022). In structural dynamics, in particular, previous work of the authoring team Simpson et al. (Reference Simpson, Dervilis and Chatzi2021) argues that use of the AutoeEncoder (AE) essentially leads in capturing a system’s Nonlinear Normal Modes (NNMs), with a better approximation achieved when a VAE is employed (Simpson et al., Reference Simpson, Tsialiamanis, Dervilis, Worden, Chatzi, Brake, Renson, Kuether and Tiso2023). It is reminded that, while potent in delivering compressed representations, these DL methods do not learn interpretable latent spaces.

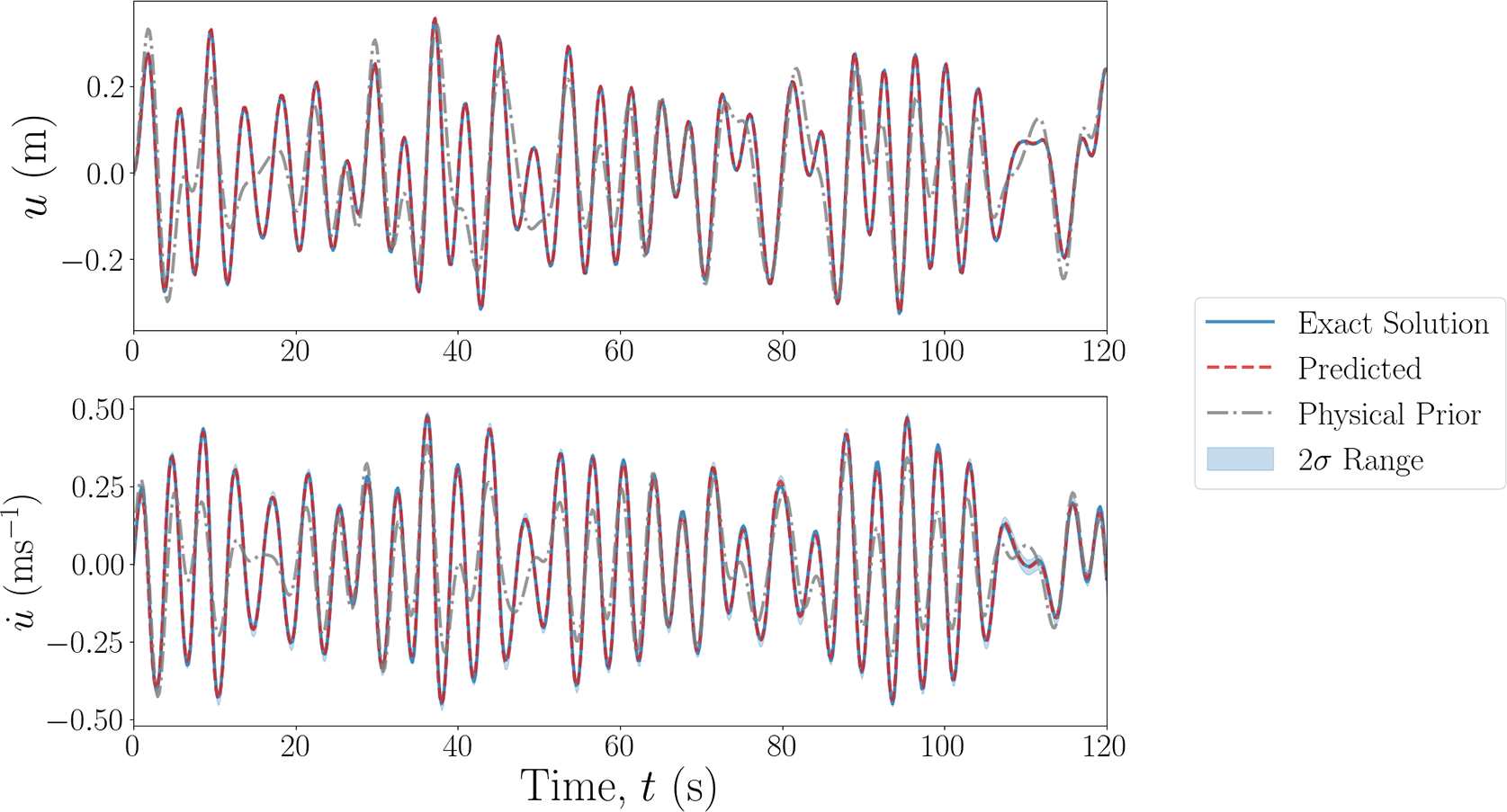

In this paper, a rudimentary black-box method is demonstrated to provide a simple example of ML applied to the case scenario. Only one black-box approach is shown here to keep the focus overall to PEML techniques. Figure 5 shows the results of applying DMM to the working example. The

![]() $ 2\sigma $

uncertainty is also included, however, in this case, it is difficult to observe on the figure, as the uncertainty is small as a result of the low level of noise within the data. The data is generated for the time interval of 0 to 120 seconds with a sampling rate of 5 Hz and the displacement is assumed to be the only measurement. All the transition and observation models, as described by Krishnan et al. (Reference Krishnan, Shalit and Sontag2016), are modeled by black-box neural networks, specifically DMMs. While it is observed that the latent representation captures certain patterns of observed data, it lacks physical interpretability.

$ 2\sigma $

uncertainty is also included, however, in this case, it is difficult to observe on the figure, as the uncertainty is small as a result of the low level of noise within the data. The data is generated for the time interval of 0 to 120 seconds with a sampling rate of 5 Hz and the displacement is assumed to be the only measurement. All the transition and observation models, as described by Krishnan et al. (Reference Krishnan, Shalit and Sontag2016), are modeled by black-box neural networks, specifically DMMs. While it is observed that the latent representation captures certain patterns of observed data, it lacks physical interpretability.

Figure 5. Predicted latent representations versus exact solutions of displacement (top) and velocity (bottom) using the DMM applied to the working example. Displacement is assumed to be the only measurement. The blue bounding boxes represent the estimated

![]() $ 2\sigma $

range.

$ 2\sigma $

range.

5. Light gray PEML schemes

When the prior physics knowledge of a system is relatively well-described, that is it captures most of the physics of the true system, it is possible to rely on this knowledge as a relatively strong bias, while further exploiting learning schemes to capture any model mismatch. The term model mismatch or model-system discrepancy refers to the portion of the true system’s behavior (or response) which remains uncaught by the known physics. As a result of the larger degree of reliance on the physics-based model form, we here refer to this class of methods as “light-gray.” We will first discuss a survey of machine-learning-enhanced Bayesian filtering methods, which are still mostly driven by physics knowledge embedded in the BF technique. This is then followed by a section on Physics-Guided Neural Networks, which use the universal-approximation capabilities of deep-learning to determine a model of model-system discrepancy.

5.1. ML-enhanced Bayesian filtering

As previously stated, classical Bayesian filtering requires the model form to be known a priori, implying that the resulting accuracy will depend on how exhaustive this model is. To overcome the inaccuracies that result from model-system discrepancy, ML can be infused with BF techniques to improve inference potential. To this end, Tatsis et al. (Reference Tatsis, Agathos, Chatzi and Dertimanis2022) propose to fuse BF with a Covariance Matrix Adaptation scheme, to extract the unknown position and location of flaws in the inverse problem setting of crack identification, while simultaneously achieving virtual sensing. The latter is the outcome of a hierarchical BF approach powered by reduced order modeling.

Using a different approach, Revach et al. (Reference Revach, Shlezinger, Ni, Escoriza, Van Sloun and Eldar2022) employ a neural network within a Kalman filter scheme to discover the full form of partially known and observed dynamics. By exploiting the nonlinear estimation capabilities of the NN, they managed to overcome the challenges of model constraint, that are common in filtering methods (Aucejo et al., Reference Aucejo, De Smet and Deü2019). Using a similar approach, but with a different motivation, Angeli et al. (Reference Angeli, Desmet and Naets2021) combined Kalman filtering with a deep-learning architecture to perform model-order reduction, by learning the mapping from the full-system coordinates, to a minimal coordinate latent space.

5.2. Physics-guided neural networks

In physics-guided machine learning (PGML), deep learning techniques are employed to capture the discrepancy between an explicitly defined model based on prior knowledge and the true system from which data is attained. The goal is to fine-tune the overall model’s parameters (i.e., the prior and ML model) in a way that the physical prior knowledge steers the training process toward the desired direction. By doing so, the model can be guided to learn latent quantities that align with the known physical principles of the system. This ensures that the resulting model is not only accurate in its predictions but also possesses physically interpretable latent representations. At this stage, we would like to remind the reader of the definitions of physics model strictness and physics constraint given in Section 1. PGML approaches employ a relatively high reliance on the physics-based model form, in that the assumed physics is imposed in a strict form. However, in order to allow for simulation of model discrepancy, the level of physics constraint is relatively low, which means that the learner is not forced to narrowly follow this assumed prescribed form. The relaxation of constraints differs between PGML and physics-informed (PIML) approaches; PGML techniques often reduce constraints through the use of bias or residual modeling, whereas PIML schemes employ physics into the loss function as a target solution (i.e., the solutions are weakly imposed).

One of the key advantages of physics-guided machine learning is its ability to incorporate domain knowledge into the learning process. This is particularly beneficial in scenarios where data may be limited, noisy, or expensive to obtain. In comparison to PEML techniques that lay lower on our prescribed spectrum, PGML methods can offer an increased level of interpretability. By steering the model’s learning process with physics-based insights, it can more effectively generalize to unseen data and maintain a coherent understanding of the underlying physical mechanisms.

The training process in physics-guided models involves two key components: (1) Incorporating Prior Knowledge: Prior knowledge on the physics of the system is integrated into the network architecture, or as part of the model; (2) Capturing Discrepancy: Deep learning models excel in learning from data, even when this contradicts prior knowledge. As a result, during training, such a model will gradually adapt and learn to account for discrepancies between the prior knowledge and the true dynamics of the system. This adaptability allows the model to converge toward a more accurate representation of the underlying physics.

Conceptually similar to estimating a residual modeler, Liu et al. (Reference Liu, Lai and Chatzi2021, Reference Liu, Lai, Bacsa and Chatzi2022) proposed a probabilistic physics-guided framework termed a Physics-guided Deep Markov Model (PgDMM) for inferring the characteristics and latent structure of nonlinear dynamical systems from measurement data. It addresses the shortcoming of black-box deep generative models (such as the DMM) in terms of lacking physical interpretation and failing to recover a structured representation of the learned latent space. To overcome this, the framework combines physics-based models of the partially known physics with a DMM scheme, resulting in a hybrid modeling approach. The proposed framework leverages the expressive power of DL while imposing physics-driven restrictions on the latent space, through structured transition and emission functions, to enhance performance and generalize predictive capabilities. The authors demonstrate the benefits of this fusion through improved performance on simulation examples and experimental case studies of nonlinear systems.

Both residual modeling and PgNNs, in general, share a common objective of easing the training objective of neural networks. Residual modeling achieves this by easing the learning process through a general approximation, while PgNNs incorporate physical knowledge to provide reliable predictions even in data-limited situations. However, regarding specific implementations, there are differences between these two methods. Residual modeling operates without the need for specific domain knowledge, focusing instead on the residuals in a more general-purpose application. This makes it broadly applicable across various standard machine learning tasks, especially in the domain of computer vision. PgNNs, on the other hand, explicitly incorporate physical laws into the model, adding interpretability related to these physical models. PgNNs can also work in a general setting with any prior models to fit the residuals, but the key idea is to use physical prior model to obtain a physically interpretable latent representation from neural networks. This makes PgNNs particularly suited for problems where adherence to physical laws is paramount. Additionally, while residual networks rely heavily on data for learning, PgNNs can leverage physical models to make predictions even with limited data, demonstrating their utility in data-constrained environments.

To demonstrate how a physical prior model can impact the training of DL models, we apply the PgDMM to our working example, using the same data generation settings as explained in Section 4. In this case, instead of approaching the system with no prior information, we introduce a physical prior model into the DMM to guide the training process. This physical prior model is a linear model that excludes the cubic term in equation (1), which replicates a knowledge gap in the form of additional system complexities. The results are shown in Figure 6. It can be observed that the predictions for both displacements and velocities align well with the ground truth. The estimation uncertainty is slightly higher for velocities, which is expected since they are unobserved quantities. It is important to note that the system displays significant nonlinearity due to the presence of a cubic term with a large coefficient, causing the linear approximation to deviate noticeably from the true system dynamics. However, the learning-based model within the framework still captures this discrepancy and reconstructs the underlying dynamics through the guided training process.

Figure 6. Predictions versus exact solutions of displacement (top) and velocity (bottom) using the PgDMM applied to the working example. Displacement is assume to be the only measurement. The gray dash-dot line is the physical prior model and the blue bounding boxes represent the estimated

![]() $ 2\sigma $

range.

$ 2\sigma $

range.

A similar physics-guided RNN was proposed by Yu et al. (Reference Yu, Yao and Liu2020), which consists of two parts: physics-based layers and data-driven layers, where physics-based layers encode the underlying physics into the network and the residual block computes a residual value which reflects the consistency of the prediction results with the known physics and needs to be optimized toward zero.

Instead of modeling the residual of the prior model, a physics-guided Neural Network (PgNN), proposed by Karpatne et al. (Reference Karpatne, Watkins, Read and Kumar2017), not only ingests the output of a physics-based model in the neural network framework, but also uses a novel physics-based learning objective to ensure the learning of physically consistent predictions, as based on domain knowledge. Similarly, the authors proposed a Physics-guided Recurrent Neural Network scheme (PGRNN, Jia et al., Reference Jia, Willard, Karpatne, Read, Zwart, Steinbach and Kumar2019) that contains two parallel recurrent structures—a standard RNN flow and an energy flow to be able to capture the variation of energy balance over time. While the standard RNN flow models the temporal dependencies that better fit observed data, the energy flow aims to regularize the temporal progression of the model in a physically consistent fashion. Furthermore, in another PgNN proposed by Robinson et al. (Reference Robinson, Pawar, Rasheed and San2022), the information from the known part of the system is injected into an intermediate layer of the neural network.

The physics-guided deep neural network (PGDNN), proposed by Huang et al. (Reference Huang, Yin and Liu2022), uses a cross-physics-data domain loss function to fuse features extracted from both the physical domain and data domain, which evaluates the discrepancy between the output of a FE model and the measured signals from the real structure. With the physical guidance of the FE model, the learned PGDNN model can be well generalized to identify test data of unknown damages. The authors also use the same idea in bridge damage identification under moving vehicle loads (Yin et al., Reference Yin, Huang and Liu2023). Similarly, Zhang and Sun (Reference Zhang and Sun2021) presented usage of the FE model as an implicit representation of scientific knowledge underlying the monitored structure and incorporates the output of FE model updating into the NN model setup and learning.

In Chen and Liu (Reference Chen and Liu2021), the physics knowledge is incorporated into the neural network by means of imposing appropriate constraints on weights, biases or both. Muralidhar et al. (Reference Muralidhar, Bu, Cao, He, Ramakrishnan, Tafti and Karpatne2020) used physics-based prior model, physical intermediate variables, and physics-guided loss functions to learn physically interpretable quantities such as pressure field and velocity field.

6. Gray PEML schemes

The motivations which drive the “middle ground” of PEML will—naturally—vary depending on the nature of the information deficiency, and whether this is a physics knowledge gap or data scarcity. For example, the knowledge could be made up of a number of possible physical phenomena, or the system could be known but the parameters not. Or, with the opposite problem, it may be possible to capture data well in one domain, but be limited in the relative resolution of other domains (e.g., temporal vs. spatial). These are just a few of the many examples which motivate the use of “gray” PEML techniques. In this section, two such approaches are surveyed and discussed; first dictionary methods are shown, which select a suitably sparse representation of the model via linear superposition from a dictionary of candidate functions. The second technique discussed is the physics-informed neural network, which weakly imposes conditions on the model output in order to steer the learner. This differs from the previously discussed physics-guided approaches, where the physics restrictions are strongly imposed by way of a proposed solution that instantiates an inductive bias to the learner. As will be discussed in detail below, the latter technique can be applied in a variety of ways, each embedding different prior knowledge and beliefs, allowing for flexibility in its application.

6.1. Dictionary methods

One of the biggest challenges faced in the practical application of structural mechanics in engineering, is the presence of irregular, unknown, or ill-defined nonlinearities in the system. Another challenge may occur from variation in the parameters which govern the prescribed model of the system, which could be from environmental changes, or from consequential changes such as damage. This motivates less reliance on the physics-based model form, to allow for freedom in physical-digital system discrepancy whilst satisfying known physics. One approach to reducing the reliance on the prescribed physics model form is by having the learner estimate the definition of the model, which may be the sole, or additional, objective of the learner. Dictionary methods are well-positioned as a solution for less strict physics embedding, where the model is determined from a set of possible model solutions, allowing freedom in a semi-discrete manner.

The problem of estimating the existence, type, or strength of the model-governing physics is described as that of equation discovery. This inverse problem can often be very computationally expensive, due to the large number of forward model calculations required to evaluate the current estimate of the parameters (Frangos et al., Reference Frangos, Marzouk, Willcox and van Bloemen Waanders2010). When determining the presence of governing equation components, often the identification is drawn from a family of estimated equations. For example, the matching-pursuit algorithm selects the most sparse representation of a signal from a dictionary of physics-based functions (Vincent and Bengio, Reference Vincent and Bengio2002).

For dictionary-based approaches, the idea is to determine an estimate of the model output as some combination of bases or “atoms.” Often, these bases are formed as candidate functions of the input data, and are compiled into a dictionary-matrix

![]() $ \Theta \left(\mathbf{x}\right) $

. Then, linear algebra is used to represent the target signal from a sparse representation of this dictionary and a coefficient matrix

$ \Theta \left(\mathbf{x}\right) $

. Then, linear algebra is used to represent the target signal from a sparse representation of this dictionary and a coefficient matrix

![]() $ \Xi $

.

$ \Xi $

.

And so, the aim of the learner is to determine a suitably sparse solution of

![]() $ \Xi $

, via some objective function. Typically, sparsity-promoting optimisation methods are used, such as LASSO (Tibshirani, Reference Tibshirani1996). The coefficient matrix can be constrained to be binary values, thus operating as a simple mask of the candidate function, or can be allowed to contain continuous values, and thus can simultaneously determine estimated system parameters which are used in the candidate functions. For the case of dynamic systems, a specific algorithm was developed by Brunton et al. (Reference Brunton, Proctor and Kutz2016a) for sparse discovery of nonlinear systems. The team also showed how this could be used in control by including the control parameters in the dictionary definition (Brunton et al., Reference Brunton, Proctor and Kutz2016b). Kaiser et al. (Reference Kaiser, Kutz and Brunton2018) extended this nonlinear dictionary learning approach to improve control of a dynamic system where data is lacking. To do so, they extended the method to include the effects of actuation for better forward prediction.

$ \Xi $

, via some objective function. Typically, sparsity-promoting optimisation methods are used, such as LASSO (Tibshirani, Reference Tibshirani1996). The coefficient matrix can be constrained to be binary values, thus operating as a simple mask of the candidate function, or can be allowed to contain continuous values, and thus can simultaneously determine estimated system parameters which are used in the candidate functions. For the case of dynamic systems, a specific algorithm was developed by Brunton et al. (Reference Brunton, Proctor and Kutz2016a) for sparse discovery of nonlinear systems. The team also showed how this could be used in control by including the control parameters in the dictionary definition (Brunton et al., Reference Brunton, Proctor and Kutz2016b). Kaiser et al. (Reference Kaiser, Kutz and Brunton2018) extended this nonlinear dictionary learning approach to improve control of a dynamic system where data is lacking. To do so, they extended the method to include the effects of actuation for better forward prediction.

In an example of dictionary-based learning, Flaschel et al. (Reference Flaschel, Kumar and De Lorenzis2021) and Thakolkaran et al. (Reference Thakolkaran, Joshi, Zheng, Flaschel, De Lorenzis and Kumar2022) showed a method for unsupervised learning of the constitutive laws governing an isotropic or anisotropic plate. The approach is not only unsupervised, needing only displacement and force data, it is directly inferrable in a physical manner. The authoring team then extended this work further to include a Bayesian estimation (Joshi et al., Reference Joshi, Thakolkaran, Zheng, Escande, Flaschel, De Lorenzis and Kumar2022), allowing for quantified uncertainty in the model of the constitutive laws.

Another practical example: Ren et al. (Reference Ren, Han, Yu, Skjetne, Leira, Sævik and Zhu2023) used nonlinear dynamic identification to successfully predict the forward behavior of a 6DOF ship model, including coupling effects between the rigid body and water. In this work, the dictionary method is combined with a numerical method to predict the state of the system in a short time window ahead. This facet is often found when applying DM-based approaches to practical examples, as the method is intrinsically a model discovery approach, and so a solution step is required if model output prediction is required.

Data-driven approaches to equation discovery will often require an assumption of the physics models being exhaustive of the “true” solution; that is all the parameters being estimated will fully define the model, or the solution will lie within the proposed family of equations. One of the challenges faced with deterministic methods, such as LASSO (Tibshirani, Reference Tibshirani1996), is their sensitivity to hyperparameters (Brunton et al., Reference Brunton, Proctor and Kutz2016a); a potential manifestation of which is the estimation of a combination of two similar models, which is a less accurate estimate of the “true” solution than each of these models individually. Bayesian approaches can help to overcome this issue, by instead providing a stochastic estimate of the model, and enforcing sparsity (Park and Casella, Reference Park and Casella2008).

Fuentes et al. (Reference Fuentes, Nayek, Gardner, Dervilis, Rogers, Worden and Cross2021) show a Bayesian approach for nonlinear dynamic system identification which simultaneously selects the model, and estimates the parameters of the model. Similarly, Nayek et al. (Reference Nayek, Fuentes, Worden and Cross2021) identify types and strengths of nonlinearities by utilizing spike-and-slab priors in the identification scheme. As these priors are analytically intractable, this allowed them to be used along with a MCMC sampling procedure to generate posterior distributions over the parameters. Abdessalem et al. (Reference Abdessalem, Dervilis, Wagg and Worden2018) showed a method for approximate Bayesian computation of model selection and parameter estimation of dynamic structures, for cases where the likelihood is either intractable or cannot be approached in closed form.

6.2. Physics-informed neural networks

Raissi et al. (Reference Raissi, Perdikaris and Karniadakis2019) showed that by exploiting automatic differentiation that is common in the practical implementation of neural networks, one can embed physics that is known in the form of ordinary/partial differential equations (ODEs/PDEs). Given a system of known ODEs/PDEs which define the physics, where the sum of these ODEs/PDEs should equal zero, an objective function is formed which can be estimated using automatic differentiation over the network. If one was to apply a PINN to estimate the state of the example in Figure 2, over the collocation domain

![]() $ {\Omega}_c $

, using equation (2), the physics-informed loss function becomes,

$ {\Omega}_c $

, using equation (2), the physics-informed loss function becomes,

where

![]() $ {\partial}_t{\mathcal{N}}_{\mathbf{z}} $

is the estimated first-order derivative of the state using automatic differentiation, and the system parameters are

$ {\partial}_t{\mathcal{N}}_{\mathbf{z}} $

is the estimated first-order derivative of the state using automatic differentiation, and the system parameters are

![]() $ \theta =\left\{m,c,k,{k}_3\right\} $

. Boundary conditions are embedded into PINNs in one of two ways; the first is to embed them in a “soft” manner, where an additional loss term is included based on a defined boundary condition

$ \theta =\left\{m,c,k,{k}_3\right\} $

. Boundary conditions are embedded into PINNs in one of two ways; the first is to embed them in a “soft” manner, where an additional loss term is included based on a defined boundary condition

![]() $ \xi \left(\mathbf{y}\right) $

(Sun et al., Reference Sun, Gao, Pan and Wang2020). Given the boundary domain

$ \xi \left(\mathbf{y}\right) $

(Sun et al., Reference Sun, Gao, Pan and Wang2020). Given the boundary domain

![]() $ \mathrm{\partial \Omega}\in {\Omega}_c $

,

$ \mathrm{\partial \Omega}\in {\Omega}_c $

,

The second case, so-called “hard” boundary conditions, involves directly masking the outputs of the network with the known boundary conditions,

where often gradated masks are used to avoid asymptotic gradients in the optimization (Sun et al., Reference Sun, Gao, Pan and Wang2020). In the case of estimating the state over a specified time window, the boundary condition becomes the initial condition (i.e., the state at

![]() $ t=0 $

).

$ t=0 $

).

The total objective function of the PINN is then formed as the weighted sum of the observation, physics, and boundary condition losses,

The flexibility of the PINN can then be highlighted by considering how the belief of the architecture is changed when selecting the corresponding objective weighting parameters

![]() $ {\lambda}_k $

. By considering the weighting parameters as 1 or 0, Table 1 shows how the selection of objective terms to include changes the application type of the PINN. The column titled

$ {\lambda}_k $

. By considering the weighting parameters as 1 or 0, Table 1 shows how the selection of objective terms to include changes the application type of the PINN. The column titled

![]() $ {\Omega}_c $

indicates the domain used for the PDE objective term, where the significant difference is when the observation domain is used, and therefore the PINN becomes akin to a system identification problem solution.

$ {\Omega}_c $

indicates the domain used for the PDE objective term, where the significant difference is when the observation domain is used, and therefore the PINN becomes akin to a system identification problem solution.

Table 1. Summary of PINN application types, and the physics-enhanced machine learning genre/category each would be grouped into

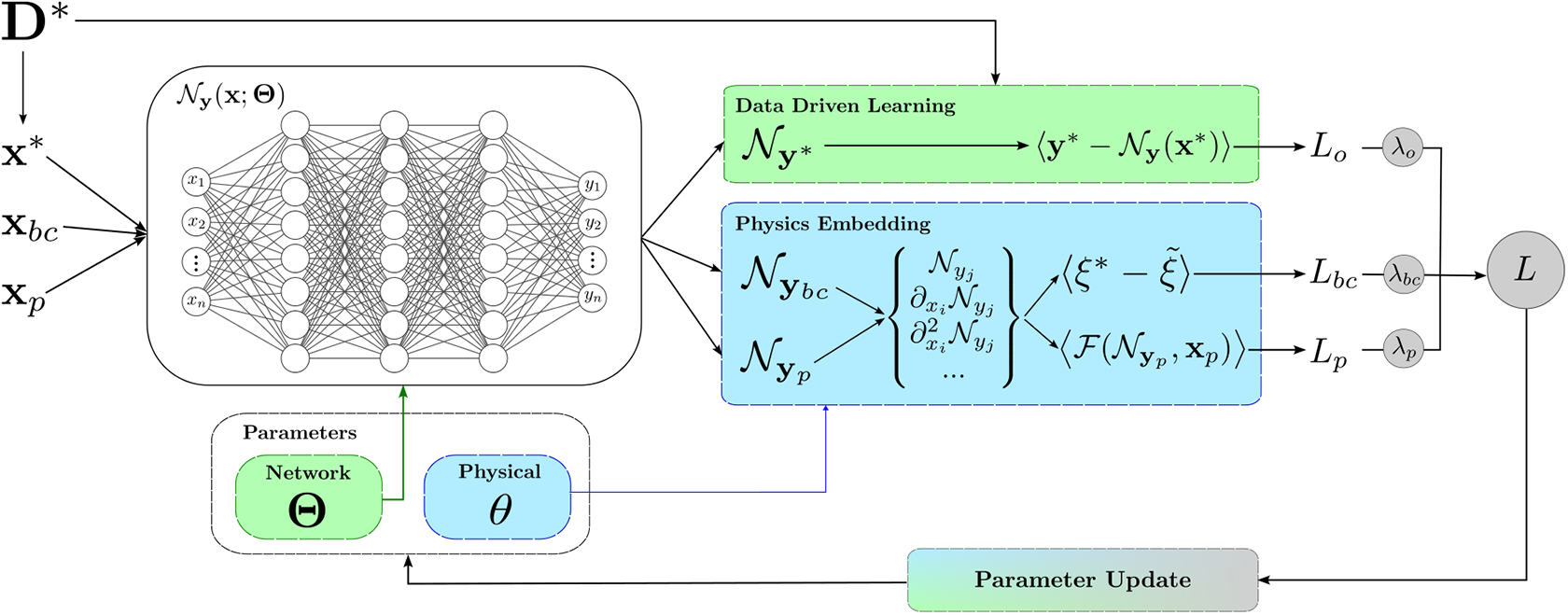

Figure 7 shows the framework of a generic PINN, highlighting where the framework, specifically the loss function formulation, can be broken down into the data-driven and physics-embedding components. It is also possible to include the system parameters

![]() $ \theta $

as unknowns which are determined as part of the optimisation process, that is,

$ \theta $

as unknowns which are determined as part of the optimisation process, that is,

![]() $ \Theta =\left\{\mathbf{W},\mathbf{B},\theta \right\} $