Introduction

We agree with Starke et al. (2020): despite the current lack of direct clinical applications, artificial intelligence (AI) will undeniably transform the future of psychiatry. AI has led to algorithms that can perform increasingly complex tasks by interpreting and learning from data (Dobrev, 2012). AI applications in psychiatry are receiving more attention, with a 3-fold increase in the number of PubMed/MEDLINE articles on AI in psychiatry over the past 3 years (N = 567 results). The impact of AI on the entire psychiatric profession is likely to be significant (Brown, Story, Mourão-Miranda, & Baker, 2019; Grisanzio et al., 2018; Huys, Maia, & Frank, 2016; Torous, Stern, Padmanabhan, Keshavan, & Perez, 2015). These effects will be felt not only through the advent of advanced applications in brain imaging (Starke et al., 2020) but also through the stratification and refinement of our clinical categories, a more profound challenge which ‘lies in its long-embattled nosology’ (Kendler, 2016).

These technical challenges are subsumed by ethical ones. In particular, the risk of non-transparency and reductionism in psychiatric practice is a burning issue. Clinical medicine has already developed the overarching ethical principles of respect for autonomy, non-maleficence, beneficence, and justice (Beauchamp & Childress, 2001). The principle of Explainability should be added to this list, specifically with regard to the issues raised by AI (Floridi et al., 2018). Explainability concerns the understanding of how a given algorithm works (Intelligibility) and who is responsible for the way it works (Accountability). We fully agree with Starke et al. (2020) that Explainability is essential and constitutes a real challenge for future developments in AI. In addition, however, we think that this ethical issue requires dedicated pedagogical training that must be underpinned by a solid epistemological framework.

The practice of young physicians depends primarily on core educational principles (Chen, Joshi, & Ghassemi, 2020; Pinto Dos Santos et al., 2019). Raising awareness about ethics requires sound training covering the multiple aspects of AI, from its history and underlying principles to the challenges of current applications and even its promotion for the future of psychiatry. Furthermore, young scientists and physicians must be trained in the interdisciplinary challenges that lie ahead of them by becoming fully versed in the philosophy of ethics, computer science, cognitive neuroscience, computational psychiatry, and clinical practice. They should learn how to identify which technology can help in a given clinical context, to interpret and understand the results (with the potential errors, biases, or clinical inapplicability), and ultimately to explain those results both to patients and other health professionals (McCoy et al., 2020). Training physicians in this way to be at ease in both AI and medicine has already become the norm in some prestigious institutions, as attested by the partnerships between the University of Toronto and the Vector Institute or between Harvard Medical School and MIT. Concerted efforts to develop such dual competencies will enable a new academic ecosystem to emerge that will bring together AI and clinical practice in psychiatry.

However, if training in such dual competency is to be effective, it needs strong epistemological foundations. Indeed, without minimal instruction in core concepts, models and theories, such a pedagogical program could result in the massive misuse of algorithms. Epistemology is specifically the science of the nature and grounds of knowledge that coordinates sets of concepts, models and theories, thus allowing knowledge to be structured. While clinical observations form the building blocks of medicine, clinical reasoning corresponds to how physicians establish diagnoses and prognoses and solve therapeutic problems. AI certainly offers new opportunities for assembling these bricks, but it must be accompanied by a new epistemological framework to structure all the emerging ethical and clinical issues. Therefore, while we fully support the pedagogical integration of AI in medicine, we also argue strongly for the teaching of medical epistemology. Learning to formalize medical theories based on AI – and not just models based on AI – seems as important as attempting to apply continually evolving and changing techniques (Muthukrishna & Henrich, 2019).

To translate the ethical principles proposed by Starke et al. (2020) into clinical practice, such an epistemological and methodological framework could be built upon the principles recently proposed by McCoy et al. (2020) and by Torous et al. (2015) with regard to pedagogy in AI and neuroscience, respectively. McCoy et al. (2020) propose instilling durable fundamental concepts about AI while avoiding technical specifics, stating that it is ‘important for students to have a robust conceptual understanding of AI and the structure of clinical data science’. They go on to suggest that there is a need to ‘introduce frameworks for approaching ethical considerations, both clinically and at a systems level. The content of such consideration should allow students to appreciate fairness, accountability, and transparency as core AI.’ Torous et al. (2015) propose including neuroscientific methods in psychiatry residency training to face the challenge of Explainability. In particular, they encourage real-time ‘circuit-specific discussions of brain-symptom relationships across the care of psychiatric patients,’ to enable medical students to understand and select ‘psychological and biologically informed treatments,’ keeping in mind the necessity to render new models and theories in psychiatry intelligible. This approach may help in understanding the stratification of psychiatric categories, the definition of micro-phenotypes and the dynamics of clusters in the context of staging models, i.e. the ebb and flow of the core symptoms of a psychiatric manifestation (McGorry & Nelson, 2019), as opposed to the macro-phenotypes defined by the traditional rigid categorical diagnoses provided by the DSM. It may even help in circumventing the Bernardian dichotomy between psychiatric manifestations (phenotypes) and causal mechanisms (endotypes), and in structuring the definition of diagnostic, prognostic and predictive biomarkers (McGorry et al., 2014).

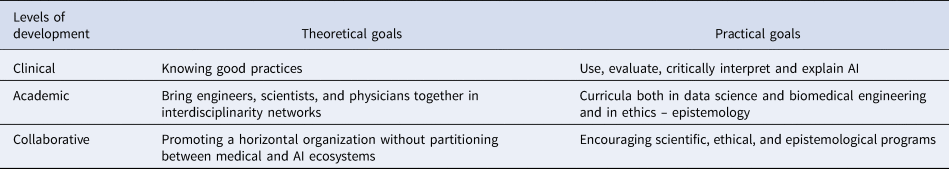

An epistemological framework for teaching AI in medicine could also convey some educational principles for clinical practice (Table 1):

(1) At the clinical level, teach medical students how to use, evaluate, critically interpret and explain AI results, especially by knowing which practices are acceptable (Poldrack, Huckins, & Varoquaux, 2019) and the difference between prediction and inference (Bzdok, Engemann, Grisel, Varoquaux, & Thirion, 2018).

(2) At the academic level, develop curricula both in data science and biomedical engineering and in ethics and epistemology to develop cross-disciplinary collaboration between engineers, scientists and physicians, with the help of interdisciplinary networks.

(3) At the collaborative level, promote a horizontal organization without partitioning between medical and AI ecosystems by encouraging scientific, ethical and epistemological programs.

Table 1. Pedagogical challenges for modern psychiatry

While medical education is currently facing the progress of AI, ethics and epistemology offer two structuring frameworks to constrain the associated issues and to allow the development of relevant educational programs.

On the one hand, psychiatry is beginning to appropriate the 5P values of medicine (Personalized, Preventive, Participative, Predictive and Pluri-expert/Populational) and the 5V vision of data (Volume, Velocity, Variety, Veracity, and Value). On the other, AI is now embracing both neuroscience and the 4EA approach (Embodied, Embedded, Enacted, Extended, Affective). Following the lead of the Society for Neuroscience and the Neuroscience Core Concepts (McNerney, Chang, & Spitzer, 2009), we assume that the future of medical epistemology will be based on a theoretical and methodological framework that requires to-ing and fro-ing between how scientists appropriate the concepts of philosophy (AI in epistemology) and how philosophers appropriate those of science (epistemology in AI) in a dynamic, grounded and evolutive manner (Pradeu & Carosella, 2006). Tomorrow's physicians should be aware of these epistemological backgrounds so that well-planned, practical AI systems may be developed that foster trust and confidence between them and their patients.