Surgical site infections (SSIs) are among the most common healthcare-associated infections (HAIs) in surgical patients.Reference Najjar and Smink1 SSIs are associated with a substantial clinical and financial burden due to their negative impact on patient health and the increased costs associated with treatment and extended hospitalization.Reference Badia, Casey, Petrosillo, Hudson, Mitchell and Crosby2, Reference Jenks, Laurent, McQuarry and Watkins3 On average, these infections occur in 2%–4% of surgical patients, but the infection rates and severity vary across surgical procedures.4, 5 Colorectal surgery is associated with the highest risk of infection, which ranges from 15% to 30% of these patients.Reference Keenan, Speicher, Thacker, Walter, Kuchibhatla and Mantyh6

The incidence of SSIs and other HAIs are monitored in infection surveillance programs. These programs routinely collect data on HAI rates, which are used as reference data for hospitals and healthcare providers.Reference Awad7 Data can be applied to evaluate the quality of care, to identify where improvements are needed, and to support and facilitate implementation of new preventive measures. As a result, infection surveillance can be used to reduce SSI-related morbidity and costs. To ensure adequate and reliable surveillance, uniform ascertainment of infection is crucial. International definitions were therefore developed to provide a standardized approach to diagnosing and reporting SSIs.Reference Horan, Gaynes, Martone, Jarvis and Emori8 SSI surveillance is traditionally performed by extensive manual review of medical records. Consequently, surveillance is labor intensive and time-consuming. As the number of surgical procedures continues to rise, the total time spent on surveillance likely will increase as well.Reference Berríos-Torres, Umscheid and Bratzler9

The objective of this study was to develop an algorithm for the surveillance of deep SSIs after colorectal surgery to reduce the number of medical records that require full manual review.

Methods

Study design

This retrospective cohort study was conducted in the Amphia Hospital (Breda, The Netherlands), a teaching hospital that participates in the national HAI surveillance program. Medical records of all patients who undergo colorectal surgery are manually reviewed by trained infection control practitioners for the identification of deep SSI. Deep SSIs are defined according to the Centers for Disease Control and Prevention (CDC) criteria and comprise deep incisional and organ/space infections that manifest within 30 days after surgery.Reference Horan, Gaynes, Martone, Jarvis and Emori8 Postoperative complications that developed after discharged were either reported when the patient was referred back to the hospital or during a postoperative outpatient clinic visit ∼30 days after surgery. For the present analysis, we used surveillance data from January 2012 through December 2015. Infection surveillance was performed by the same infection control practitioners throughout the entire study period. A multidisciplinary group of infection control practitioners, surgeons, and clinical microbiologists discussed cases to reach consensus on the diagnosis when necessary.

Patients who were categorized as having a contaminated wound at the time of the surgical procedure (ie, wound class 4) were excluded because these wounds were already infected at the time of the surgical procedure. Demographic and clinical patient data and data on the surgical procedure were collected by manual chart review, except for data on postoperative use of antibiotics and diagnostic radiological procedures, which were automatically extracted.

We designed the analysis by following the TRIPOD statement for prediction modeling.Reference Moons, Altman and Reitsma10 Candidate model predictors were selected based on literature and included known risk factors or outcomes for deep SSI.Reference Jenks, Laurent, McQuarry and Watkins3, Reference Fry11, Reference Owens and Stoessel12, Reference Coello, Charlett, Wilson, Ward, Pearson and Borriello13 One predictor was selected for every 10 events. The administration of preoperative oral and perioperative intravenous antibiotic prophylaxis, American Society of Anesthesiologists (ASA) score classification, wound class, level of emergency, blood loss during the procedure, surgical technique were selected as preoperative and operative candidate predictors. Preoperative oral antibiotic prophylaxis comprised a 3-day course of colistin and tobramycin. Perioperative intravenous prophylaxis was administered according to the national guideline.Reference Bauer, van de Garde, van Kasteren, Prins and Vos14 Surgical technique was categorized into open, laparoscopic, and robotic laparoscopic procedures. Laparoscopic procedures that were converted to open were categorized as open procedures. The postoperative predictors length of stay, hospital readmission, reoperation, mortality, and in-hospital antibiotic use and abdominal radiologic procedures were assessed 30 days after the primary surgical procedure. Data on 30-day mortality were collected from the hospital database.

Statistical analysis

Univariable associations between baseline characteristics and candidate predictors and deep SSI were estimated using the Student t test or the Mann-Whitney U test for continuous variables, and the Fisher exact or χReference Badia, Casey, Petrosillo, Hudson, Mitchell and Crosby2 test for categorical variables. We analyzed missing data by comparing patients with complete data with patients who had 1 or more missing values in the outcome or in the model predictors. Missing data were subsequently imputed using multiple imputation by chained equations (MICE), and 10 datasets were created.Reference Buuren and Groothuis-Oudshoorn15 Predictive mean matching and logistic regression were used as imputation techniques for continuous and binary variables, respectively. Rubin’s rule was applied to calculate pooled results.Reference Rubin16 We built the model using multivariable logistic regression analysis with backward selection. The Akaike information criterion (AIC) was used to determine whether the model fit could be improved by deleting predictors. Predictors were deleted until the AIC could not be further reduced. The final model was validated internally with bootstrapping to correct for optimism (500 samples).Reference Canty and Ripley17

We determined discriminatory power and calibration to evaluate the statistical performance of the model.Reference Robin, Turck and Hainard18, Reference Harrell19 Discrimination was defined as the area under the receiver operating characteristic (ROC) curve (AUC). Calibration of the model was tested by plotting the predicted probabilities against the observed outcomes in the cohort. The final step was to evaluate the clinical applicability of the model. A predicted probability threshold was selected corresponding to an excellent sensitivity and an acceptable specificity to ensure that the vast majority of cases would be detected. When the individual predicted probabilities exceeded the predefined threshold, the model identified the medical record as having had a high probability for deep SSI, and the medical record was kept for full manual review. Not exceeding the threshold led to immediate classification of the record as ‘no deep SSI.’ At the threshold, the associated sensitivity, specificity, positive predictive value, and negative predictive value were calculated. As an exploratory analysis, we also assessed the diagnostic performance of the strongest predictor. P < .05 (2-sided) was considered statistically significant. All statistical analyses were performed using R version 1.0.143.20

Results

In total, 1,717 patients underwent colorectal surgery in the study period, of whom 111 (6.5%) with a contaminated wound (wound class 4) were excluded. Of the 1,606 remaining patients, 129 patients acquired a deep SSI (8.0%). Baseline characteristics are presented in Table 1. The median age was 68 years and 55.7% of patients were male. Compared with patients without SSI, patients who acquired an SSI less frequently received prophylactic oral antibiotics before surgery (44.2% vs 60.3%); more frequently had ASA scores of ≥2 (43.1% vs 28.7%); and had increased risks of reoperation (79.1% vs 6.1%), readmission (24.8% vs 8.9%), and death (8.5% vs 2.3%). Baseline characteristics for patients with complete data and for patients with 1 or more missing values are shown in Supplementary Table 1 online. Moreover, 109 (6.8%) were missing data for at least 1 of the covariables (6.8%) Patients with complete data differed significantly from those with missing data, which supported the use of multiple imputation to reduce the risk of bias due to missing data.

Table 1. Baseline Characteristics (Before Imputation)

Note. ICU, intensive care unit; IQR, interquartile range; SSI, surgical site infection; BMI, body mass index.

a Data are presented as no. (%) or median (IQR).

b P values are the estimated univariable associations between the variable and deep SSI.

c % missing data: ASA classification, 5.5%; perioperative antibiotic prophylaxis, 0.85%; surgical approach, 0.44%; BMI, 1.75%.

d Performed at least 25 colorectal surgical procedures in 1 year.

e Multiple surgical incisions during the same surgical procedure, excludes creation of ostomy.

f 75th percentiles of duration of surgery accounting for the n type of resection and for the surgical approach, according to PREZIES reference values.21

g Evaluation of the postoperative outcomes occurred 30 d after the index procedure.

h Starting 48 h after the primary procedure.

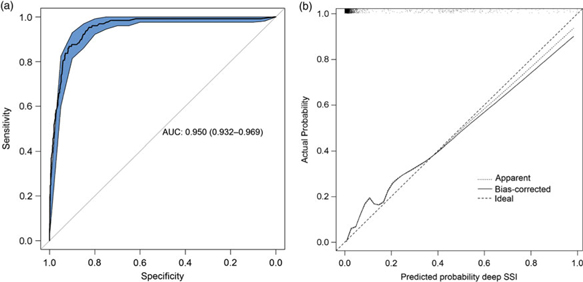

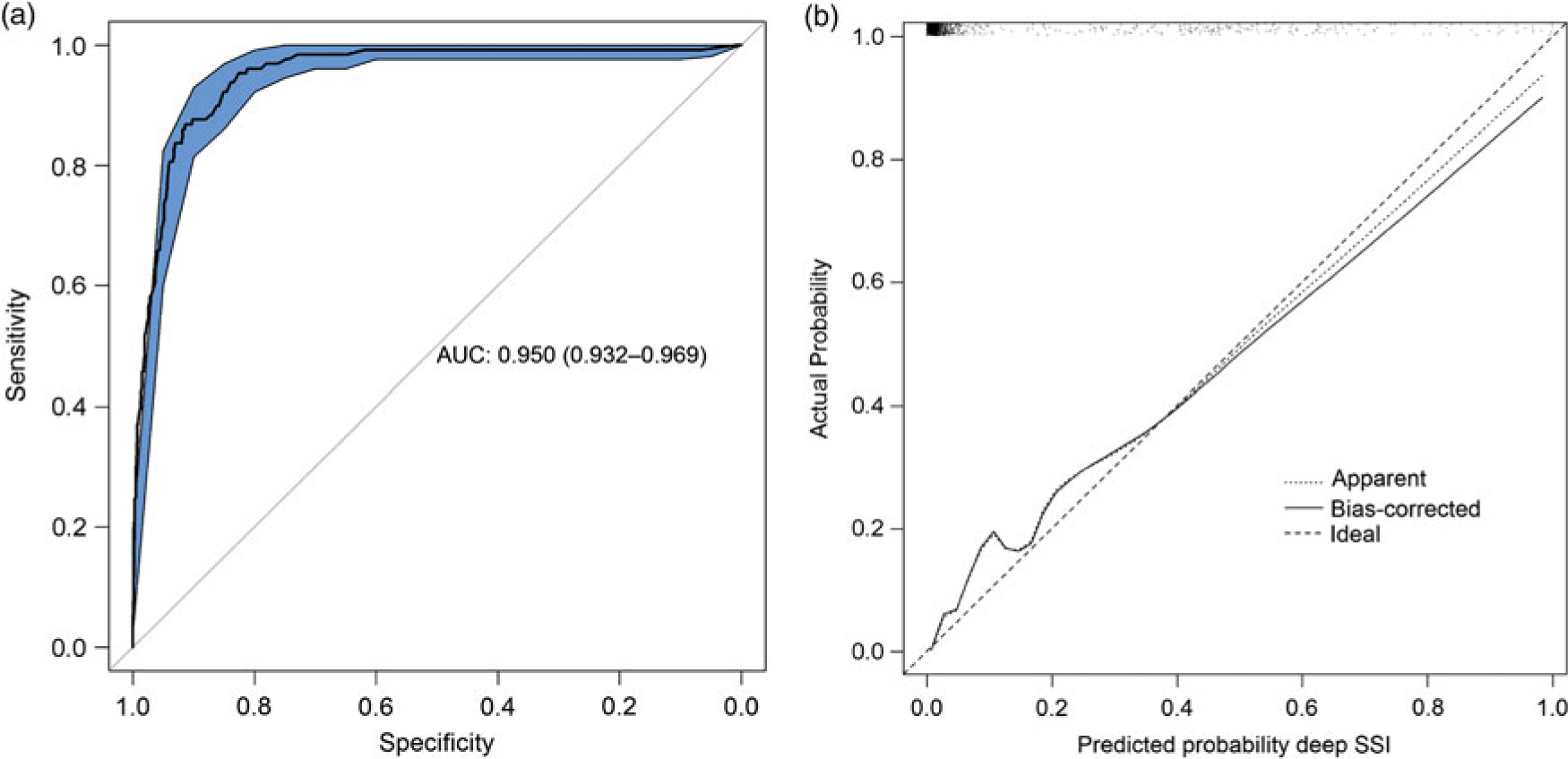

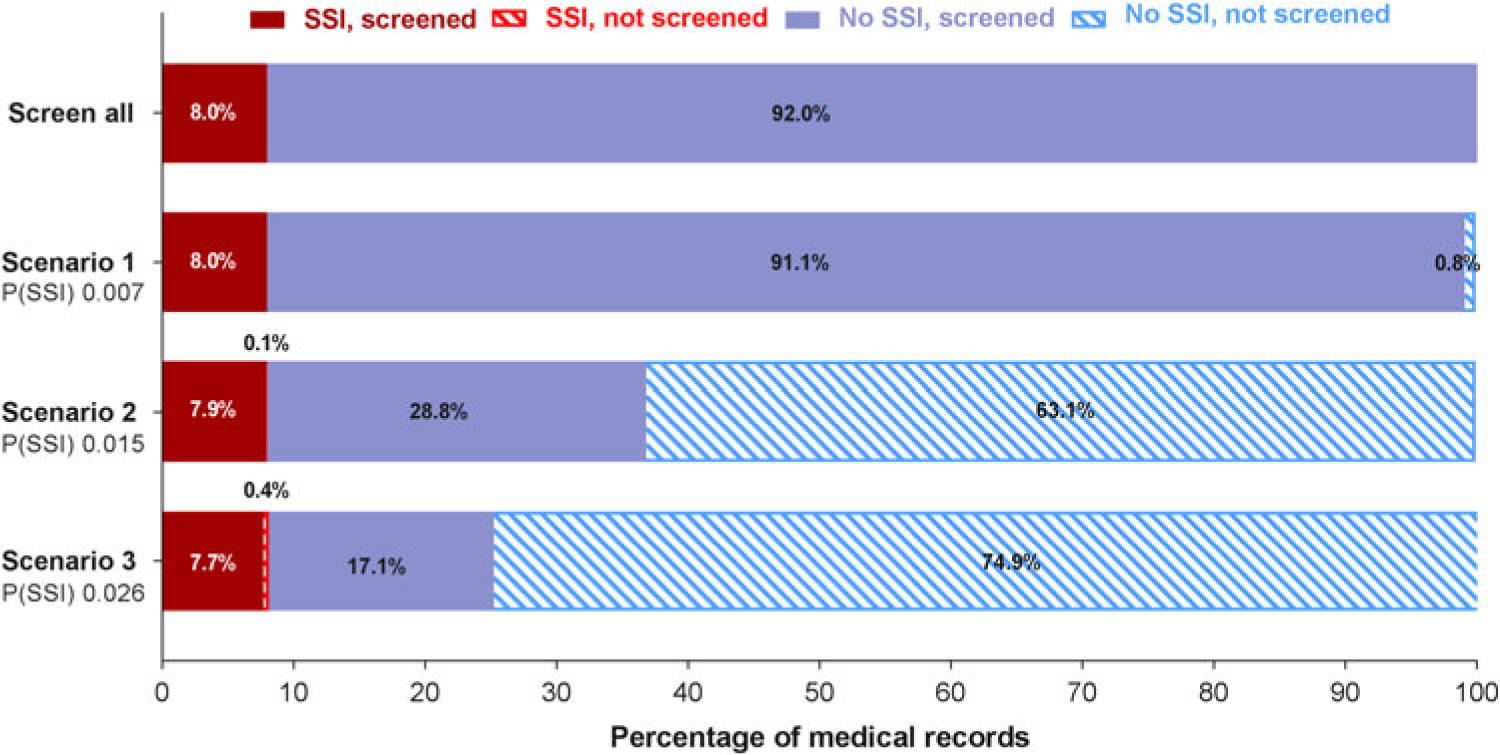

The final diagnostic model, including bias-corrected estimates, is shown in Table 2. The predictors retained in the model after backward selection were wound class, reoperation, readmission, length of stay, and death. The prediction rule is illustrated in Supplementary Fig. 1 online. The discriminatory power of the final model was 0.950 (95% CI, 0.932–0.969) (Fig. 1A), with good calibration (Fig. 1B). The calibration slope that was used to shrink the estimates was 0.978, indicating slight overprediction of the model before bootstrapping. To assess the clinical applicability of the model (Fig. 2), we compared complete chart review with the results produced by the final model when we used 3 different predicted probability thresholds.

Table 2. Final Model for the Prediction of Deep SSIa

Note. CI, confidence interval; OR, odds ratio; SSI, surgical site infection.

a Final logistic regression model after backward selection on Akaike information criterion Intercept: −5.234. ORs and CIs were corrected for optimism by bootstrapping (2,000 samples). The following predictors were not retained in the model: ASA classification, level of emergency, preoperative oral antibiotic prophylaxis, blood loss during surgery, surgical approach, administration of antibiotics and the requests for radiology of the abdomen. All patients (n= 1,616) had complete data for all 5 parameters.

Fig. 1. Statistical model performance. A. ROC curve with discriminatory power expressed as AUC (AUC, 0.950; 95% CI, 0.932–0.969). B. Calibration plot of the model. Calibration refers to the correspondence between the probability of SSI predicted by the model and the actual probability of infection. The diagonal line represents perfect (ideal) calibration; the dotted line represents the actual calibration; and the black line represents calibration after bootstrapping. The slope of the linear predictor was 0.978, indicating slight overprediction before bootstrapping. Note. ROC, receiver operating characteristic; AUC, area under the curve; CI, confidence interval; SSI, surgical site infection.

Fig. 2. Clinical applicability of the prediction model. Manual screening of all files is compared with screening a subset of files that are preselected by the prediction model. Three scenarios with different cutoffs in predicted probability for SSI are presented. When the predicted probability for a patient exceeds the cutoff value, the patient file will be identified as a possible SSI and will be retained for manual review. If the threshold is not exceeded, the patient file will be discarded immediately. The solid bars represent the files that are manually screened; the striped bars represent the files that are discarded. When the predicted probability cut off increases, the number of files that need to be reviewed manually decreases. The number of missed SSI cases (ie, false negatives) will increase. Note. P(SSI), predicted probability for surgical site infection.

At the predicted probability threshold of 0.015, the model had a 98.5% sensitivity, 68.7% specificity, 21.5% positive predictive value, and 99.8% negative predictive value for predicting deep SSI (Table 3). The number of medical records that required manual review was reduced from 100% to 36.7% (from 1,606 to 590 records). Finally, the diagnostic performance of the strongest predictor (reoperation) was evaluated. Reoperation was strongly associated with deep SSI and had good discriminatory power (AUC, 0.865; 95% CI, 0.829–0.901), with 79.1% sensitivity, 93.7% specificity, 53.1% positive predictive value, and 98.1% negative predictive value. With this single predictor, the number of charts to review manually was reduced to 11.9% (from 1,606 to 192 records).

Table 3. Contingency Table for the Prediction of Deep Surgical Site Infection (SSI)

Note. CI, confidence interval; NPV, negative predictive value; P(SSI), probability of surgical site infection; PPV, positive predictive value.

Discussion

We developed a 5-parameter diagnostic model that was able to identify 98.5% of all deep SSIs with a 99.8% negative predictive value. Use of the model could reduce the number of medical records that required full manual review from 1,606 (100%) to 590 (36.7%), at the cost of 2 missed deep SSIs (1.6%). Increasing the predicted probability threshold would allow for further reduction of workload but would also increase the likelihood of missed SSIs. Using only reoperation to detect deep SSI, the number of medical records for complete manual review was substantially reduced, although this method is associated with a higher false-negative rate and lower discriminatory power compared to the full model. Nevertheless, we suggest that reoperation should be included as a model parameter when new algorithms are developed for colorectal SSI surveillance. We set the model threshold at an excellent sensitivity so that it could detect a high percentage of patients with SSI. The trade-off for this high sensitivity was a relatively high rate of false positives due to a moderate specificity, which we accepted because the model would be used to identify the patients with a high probability of SSI whose records would then be reviewed. Thus, the lower specificity would not affect case finding. The moderate false-positive rate decreases the efficiency of the model. However, SSI surveillance that uses the model to identify patients whose records need to be reviewed will be more efficient than reviewing every patient’s record. Because this model has a high sensitivity, it could be used for quality improvement purposes and for benchmarking.

Previous studies of drain-related meningitis,Reference Van Mourik, Troelstra and Van Der Sprenkel22 bloodstream infections,Reference Leal, Gregson, Church, Henderson, Ross and Laupland23 and SSI after orthopedic proceduresReference Menendez, Janssen and Ring24 found that algorithms performed well compared with manual surveillance. For the surveillance of SSIs after gastrointestinal surgery, algorithms have been developed using several approaches, such as Bayesian network modelling (AUC, 0.89),Reference Sohn, Larson, Habermann, Naessens, Alabbad and Liu25 logistic regression modeling (AUC, 0.89),Reference Branch-Elliman, Strymish, Itani and Gupta26 or machine learning (AUC, 0.82).Reference Hu, Simon, Arsoniadis, Wang, Kwaan and Melton27

We made several efforts to secure generalizability and validity of our findings. We aimed to take an objective approach in model development by selecting predictors on theoretical grounds exclusively and by performing backward selection using the statistical model fit. Subsequently, the model was internally validated and, as such, we attempted to reduce the risk of overprediction. We obtained complete data on the outcome with the reference standard, and we did not change the definition of SSI the during the study period, which prevents selection bias due to partial verification.

Several limitations should be addressed. The use of routine care data as well as retrospective data collection is inevitably associated with missing information. Proper handling of missing data reduces the risk of bias and improves precision. We applied multiple imputation (MICE) to handle the missing data, which has been demonstrated previously to improve the performance of automated detection of SSI.Reference Hu, Simon, Arsoniadis, Wang, Kwaan and Melton27 When this model is used in clinical practice, missing data cannot be dealt with using the same method, which is an issue with applying prediction models in general. In the case of missing values, the model cannot calculate a predicted probability. Using backward selection in algorithm development, the number of parameters is reduced which also limits the amount of information needed for adequate diagnostic performance. As such, the clinical applicability will be enhanced because the risk of missing values is reduced. We had no missing data in any of the model covariables; thus, we expect that missing data will not be an important issue when the algorithm is applied in clinical practice.

Another limitation is that the model was developed on data derived from a single hospital. The parameters in the final model should be available in most medical records. Hospitals wishing to validate the algorithm in their colorectal surgical population should therefore ensure that all of the parameters are available electronically. Also, external validation is essential before the model can be applied in practice. Ideally, data derived from multiple hospitals are used to confirm model performance in other settings and future studies may also investigate less data-driven methods of model development for semiautomated surveillance.

In conclusion, we developed a 5-parameter diagnostic model that identified 98.5% of the patients who acquired a deep SSI and reduced the number of medical records requiring complete manual screening by 63.4%. These results can be used to develop semiautomatic surveillance of deep SSIs after colorectal surgery.

Supplementary material

To view supplementary material for this article, please visit https://doi.org/10.1017/ice.2019.36

Author ORCIDs

Tessa Mulder, 0000-0003-4556-0338

Acknowledgments

The authors would like to thank the infection control practitioners of the Department of Infection Control of the Amphia Hospital in Breda, The Netherlands.

Financial support

No financial support was provided relevant to this article.

Conflicts of interest

All authors report no conflicts of interest relevant to this article.