1 Introduction

Think of your favorite philosophical problem. You’ve likely thought about it for a long time, and you must have some idea regarding how to resolve it. And odds are you know someone who has thought about it for at least as long, whose credentials are as good as yours, and whose solution to the problem is incompatible with yours. If so, you’re in disagreement with an epistemic peer.Footnote 1 Should this fact make you less confident that your solution to the problem is the correct one? According to the so-called conciliatory or conciliationist views, it should. This answer has much intuitive appeal: the problem is complex, you are not infallible, and one straightforward explanation for the disagreement is that you’ve made a subtle mistake when reasoning. An equally good explanation is that your opponent has made a mistake. But given that there’s no good reason to favor the latter, reducing confidence still seems appropriate.Footnote 2

But their intuitive appeal notwithstanding, conciliatory views are said to run into problems when applied to themselves, or when answering the question of what one should do when disagreeing about the epistemic significance of disagreement. The problems are most transparent and easiest to explain for the more extreme conciliatory views. According to such views, when you hold a well-reasoned belief that X and an equally informed colleague disagrees with you about whether X, you should lower your confidence in X dramatically, or, to state it in terms of categorical beliefs, you should abandon your belief and suspend judgment on the issue.

Let’s imagine that you’ve reasoned your way toward such a view, and that you’re the sort of person who acts on the views they hold. Imagine further that you have a well-reasoned belief on some other complex issue, say, you believe that we have libertarian free will. Now, to your dismay, you find yourself in a crossfire: your friend Milo, a metaphysician, thinks that there’s no libertarian free will, while your friend Evelyn, an epistemologist, thinks that one should not abandon one’s well-reasoned belief when faced with a disagreeing peer. Call this scenario Double Disagreement. The question now is how you should adjust your beliefs. For starters, your conciliatory view appears to self-defeat, or call for abandoning itself. Just instantiate X with it! There’s disagreement of the right sort, and so you should abandon your view. And to make matters worse, there’s something in the vicinity of inconsistency around the corner. Think about what you are to do about your belief in free will. Since there’s no antecedent reason to start by applying the conciliatory view to itself, you have two lines of argument supporting opposing conclusions: that you should abandon your belief in the existence of free will, and that it’s not the case that you should. On the one hand, it’d seem that you should drop the belief, in light of your disagreement with Milo and your conciliatory view. On the other, there’s the following line of argument too. Your conciliatory view self-defeats, and, once it does, your disagreement with Milo loses its epistemic significance. But if the disagreement isn’t significant for you, then it’s fine for you to keep your belief in the existence of free will, implying that it’s not the case that you should abandon it.Footnote 3

Although this was sketchy and quick, you should agree that the advocates of strong conciliatory views face a challenge: it looks like their views issue inconsistent recommendations in Double Disagreement and other scenarios sharing its structure. What’s more, Christensen [8], Elga [16], and others have forcefully argued that this challenge generalizes to all types of conciliatory views, whether they call for strong, moderate, or even minimal conciliation.Footnote 4 We’ll call this challenge the self-defeat objection to conciliatory views.Footnote 5

Advocates of conciliatory views have taken this objection very seriously, offering various ingenious responses to it. Thus, Bogardus [3] argues that we have a special rational insight into the truth of conciliationism, making it immune from disagreements about it.Footnote 6 Elga proposes modifying conciliationism, with a view to making beliefs in it exempt from its scope of application. Christensen [8] suggests that cases like Double Disagreement are inherently unfortunate or tragic, and that what they reveal is that conciliatory views can lead to inevitable violations of “epistemic ideals,” as opposed to showing that these views themselves are mistaken. Matheson [33] invokes “higher-order recommendations” and evidentialism to respond to the objection.Footnote 7 And Pittard [37] argues that the agent who finds herself in Double Disagreement has no way of “deferring” to her opponent both at the level of belief and the level of reasoning, and that, therefore, it’s rational for her to refuse to abandon her belief in conciliationism. Even without looking at these responses in any more detail, it should be clear that they all either incur intuitive costs or substantially modify conciliationism.Footnote 8 So the advocates of conciliatory views should welcome a less committal and more conservative response to the objection.

One of the two main goals of this paper, then, is to develop such a response. The second one is to present a formal model that captures the core idea behind conciliatory views using the resources from the defeasible logic paradigm. This model, I contend, is particularly useful for exploring the structure of conciliatory reasoning in cases like Double Disagreement and, therefore, also working out a response to the self-defeat objection. In a word, it’ll help us see two things. First, the recommendations that conciliatory views issue in such cases aren’t, in fact, inconsistent. And second, in those cases where these recommendations may appear incoherent to us—that is, when they say, roughly, that you should abandon your belief in conciliationism and still conciliate in response to the disagreement about free will—they actually call for the correct and perfectly reasonable response.Footnote 9

The remainder of this paper is structured as follows. Section 2 sets up the model and sharpens the objection: Section 2.1 formulates a defeasible reasoner, or a simple logic with a consequence relation at its core; Section 2.2 embeds the core idea behind conciliationism in it; and Section 2.3 turns to cases like Double Disagreement, leading to a formal version of the concern that conciliatory views self-defeat. We then address it in two steps. Section 3 is concerned with a largely technical problem, but, by solving it, we will have shown that conciliatory views do not issue inconsistent recommendations when they turn on themselves. Section 4, in turn, is concerned with explaining why even those recommendations of conciliatory views that may, at first, strike us as incoherent actually call for rational responses. The key role in this is played by the notion of (rational) degrees of confidence. These three sections are followed by a brief conclusion and an appendix, verifying the main observations.

2 Conciliatory reasoning in default logic

2.1 Basic defeasible reasoner

This section defines a simple defeasible reasoner. The particular reasoner we’ll be working with is a form of default logic.Footnote

10

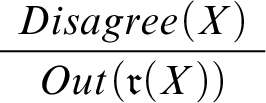

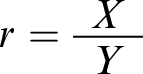

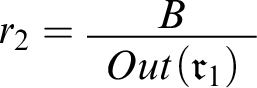

The core idea behind it is to supplement the standard (classical) logic with a special set of default rules representing defeasible generalizations, with a view of being able to derive a stronger set of conclusions from a given set of premises. We assume the language of ordinary propositional logic as our background and represent default rules as pairs of (vertically) ordered formulas: where X and Y are arbitrary propositions,

will stand for the rule that lets us conclude Y from X by default. To take an example, let B be the proposition that Tweety is a bird and F the proposition that Tweety flies. Then

will stand for the rule that lets us conclude Y from X by default. To take an example, let B be the proposition that Tweety is a bird and F the proposition that Tweety flies. Then

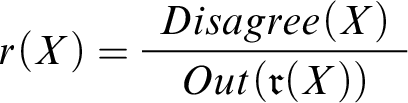

says that we can conclude that Tweety flies as soon as we have established that he is a bird. We use the letter r (with subscripts) to denote default rules, and make use of the functions

says that we can conclude that Tweety flies as soon as we have established that he is a bird. We use the letter r (with subscripts) to denote default rules, and make use of the functions

![]() $Premise[\cdot ]$

and

$Premise[\cdot ]$

and

![]() $Conclusion[\cdot ]$

to pick out, respectively, the premise and the conclusion of some given rule: if

$Conclusion[\cdot ]$

to pick out, respectively, the premise and the conclusion of some given rule: if

, then

, then

![]() $Premise[r] = X$

and

$Premise[r] = X$

and

![]() $Conclusion[r] = Y$

. The second function can be applied to sets of rules too: where

$Conclusion[r] = Y$

. The second function can be applied to sets of rules too: where

![]() $\mathcal {S}$

is a set of default rules,

$\mathcal {S}$

is a set of default rules,

![]() $Conclusion[\mathcal {S}]$

picks out the conclusions of all rules in

$Conclusion[\mathcal {S}]$

picks out the conclusions of all rules in

![]() $\mathcal {S}$

, or, formally,

$\mathcal {S}$

, or, formally,

![]() $Conclusion[\mathcal {S}] = \{Conclusion[r]: r\in \mathcal {S}\}$

.

$Conclusion[\mathcal {S}] = \{Conclusion[r]: r\in \mathcal {S}\}$

.

We envision an agent reasoning on the basis of a two-part structure $\langle \mathcal{W},\mathcal{R}\rangle$ where $\mathcal{W}$ is a set of ordinary propositional formulas (the hard information, or the information that the agent is certain of) and $\mathcal{R}$ is a set of default rules (the rules the agent relies on when reasoning). We call such structures contexts and denote them by the letter c (with subscripts).

Definition 1 (Contexts)

A context c is a structure of the form $\langle \mathcal{W},\mathcal{R}\rangle$, where $\mathcal{W}$ is a set of propositional formulas and $\mathcal{R}$ is a set of default rules.

Let’s illustrate the notion using a standard example from the artificial intelligence literature, the Tweety Triangle: in the first step, the reasoning agent learns that Tweety is a bird and concludes that Tweety flies. In the second, it learns that Tweety is a penguin, retracts the previous conclusion, and concludes that Tweety doesn’t fly. Since the scenario unfolds in two steps, there are two contexts, $c_1 = \langle \mathcal{W},\mathcal{R}\rangle$ and $c_2 = \langle \mathcal{W}',\mathcal{R}\rangle$. Let B and F be as before, and let P stand for the proposition that Tweety is a penguin. The hard information $\mathcal{W}$ of $c_1$ must include B and $P\supset B$, expressing an instance of the fact that all penguins are birds. The set of rules $\mathcal{R}$ of $c_1$ (and $c_2$), in turn, contains the two rules $r_1 = \frac{B}{F}$ and $r_2 = \frac{P}{\neg F}$. The first lets the reasoner infer that Tweety can fly, by default, once it has concluded that Tweety is a bird. The second lets the reasoner infer that Tweety cannot fly, by default, once it has concluded that he is a penguin. Notice that $r_1$ and $r_2$ can be thought of as instances of two sensible, yet defeasible principles for reasoning, namely, that birds usually fly and that penguins usually do not. As for $c_2 = \langle \mathcal{W}',\mathcal{R}\rangle$, it is just like $c_1$, except that its hard information also contains P, saying that Tweety is a penguin.

Now we will specify a procedure determining which formulas follow from any given context. It will rely on an intermediary notion of a proper scenario. Given some context $c = \langle \mathcal{W},\mathcal{R}\rangle$, any subset of its rules $\mathcal{R}$ counts as a scenario based on c, but proper scenarios are going to be special, in the sense that, by design, they will contain all and only those rules from $\mathcal{R}$ of which we’ll want to say that they should be applied or that they should be in force. As long as this is kept in mind, the following definition of consequence should make good intuitive sense:Footnote 11

Definition 2 (Consequence)

Let $c = \langle \mathcal{W},\mathcal{R}\rangle$ be a context. Then the statement X follows from c, written as $c \mathrel{|\!\sim} X$, just in case $\mathcal{W}\cup Conclusion[\mathcal{S}]\vdash X$ for each proper scenario $\mathcal{S}$ based on c.

Of course, the notion of a proper scenario still has to be defined. It will emerge as a combination of three conditions on the rules included in them. The first of these captures the intuitive idea that a rule has to come into operation, or that it has to be triggered:

Definition 3 (Triggered rules)

Let $c = \langle \mathcal{W},\mathcal{R}\rangle$ be a context and $\mathcal{S}$ a scenario based on it. Then the default rules from $\mathcal{R}$ that are triggered in $\mathcal{S}$ are those that belong to the set $Triggered_{\mathcal{W},\mathcal{R}}(\mathcal{S}) = \{r \in \mathcal{R}: \mathcal{W}\cup Conclusion[\mathcal{S}]\vdash Premise[r]\}$.

Applying this definition to the empty scenario $\emptyset$, against the background of the context $c_1$, it’s easy to see that $Triggered_{\mathcal{W},\mathcal{R}}(\emptyset) = \{r_1\}$: the hard information $\mathcal{W} = \{B, P\supset B\}$ entails B, and $B = Premise[r_1]$.
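The computation of $Triggered_{\mathcal{W},\mathcal{R}}(\emptyset)$ for $c_1$ can be replayed mechanically. What follows is a minimal Python sketch and not part of the formal apparatus: the tuple encoding of formulas, the brute-force truth-table test `entails`, and the names `RULES`, `W1`, and `triggered` are all assumptions made for illustration.

```python
from itertools import product

# Hypothetical encoding: an atom is a string, ("not", f) is negation,
# and ("imp", f, g) is the material conditional f ⊃ g.
def atoms(f):
    if isinstance(f, str):
        return {f}
    return set().union(*(atoms(g) for g in f[1:]))

def holds(f, v):
    if isinstance(f, str):
        return v[f]
    if f[0] == "not":
        return not holds(f[1], v)
    if f[0] == "imp":
        return (not holds(f[1], v)) or holds(f[2], v)
    raise ValueError(f"unknown connective: {f[0]!r}")

def entails(premises, goal):
    """Classical entailment, checked by enumerating all valuations."""
    names = sorted(atoms(goal).union(*(atoms(p) for p in premises)))
    for values in product([False, True], repeat=len(names)):
        v = dict(zip(names, values))
        if all(holds(p, v) for p in premises) and not holds(goal, v):
            return False
    return True

# Rules are stored as name -> (premise, conclusion).
RULES = {"r1": ("B", "F"), "r2": ("P", ("not", "F"))}
W1 = ["B", ("imp", "P", "B")]  # hard information of c_1

def triggered(hard, rules, scenario):
    """Rules whose premise follows from the hard information plus the
    conclusions of the rules in the scenario (Definition 3)."""
    base = list(hard) + [rules[name][1] for name in sorted(scenario)]
    return {name for name, (premise, _) in rules.items()
            if entails(base, premise)}

print(sorted(triggered(W1, RULES, set())))  # ['r1']
```

Calling `triggered(W1 + ["P"], RULES, set())` instead returns both rule names, since with P among the hard information the premise of each rule is entailed.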

The need for further conditions on proper scenarios reveals itself once we apply $Triggered(\cdot)$ to any scenario against the background of $c_2$. While both rules $r_1$ and $r_2$ come out triggered in every scenario based on it, the only scenario that seems intuitively correct is $\{r_2\}$. This means that we need to specify a further condition, precluding the addition of $r_1$ to $\{r_2\}$. And here’s one that does the trick:

Definition 4 (Conflicted rules)

Let $c = \langle \mathcal{W},\mathcal{R}\rangle$ be a context, and $\mathcal{S}$ a scenario based on it. Then the rules from $\mathcal{R}$ that are conflicted in the context of $\mathcal{S}$ are those that belong to the set $Conflicted_{\mathcal{W},\mathcal{R}}(\mathcal{S}) = \{r\in \mathcal{R}: \mathcal{W}\cup Conclusion[\mathcal{S}]\vdash \neg Conclusion[r]\}$.

Notice that we have $Conflicted_{\mathcal{W},\mathcal{R}}(\{r_2\}) = \{r_1\}$, saying that the rule $r_1$ is conflicted in the context of the scenario $\{r_2\}$, as desired. Now consider the following preliminary definition for proper scenarios:

Definition 5 (Proper scenarios, first pass)

Let $c = \langle \mathcal{W},\mathcal{R}\rangle$ be a context and $\mathcal{S}$ a scenario based on it. Then $\mathcal{S}$ is a proper scenario based on c just in case

$$ \begin{array}{llll} \mathcal{S} &= &\{ r\in \mathcal{R} : & r \in Triggered_{\mathcal{W},\mathcal{R}}(\mathcal{S}), \\ &&& r\notin Conflicted_{\mathcal{W},\mathcal{R}}(\mathcal{S}) \}. \end{array} $$

The definition gives us the correct result when applied to $c_1$, since the singleton $\mathcal{S}_1 = \{r_1\}$ comes out as its only proper scenario. But it falls flat when applied to $c_2$, since both $\mathcal{S}_1$ and $\mathcal{S}_2 = \{r_2\}$ qualify as proper. There are multiple ways to resolve this problem formally. The one I adopt here is motivated by the broader goal of having the resources which will let us model conciliatory reasoning.
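The verdict on the first-pass definition can be checked by enumerating every subset of the rules and testing the fixed-point condition of Definition 5 directly. The sketch below does this in Python under illustrative assumptions: formulas are encoded as nested tuples, entailment is decided by truth tables, and the names `RULES`, `W1`, `W2`, and `proper_first_pass` are hypothetical.

```python
from itertools import combinations, product

# Hypothetical encoding: atoms are strings, ("not", f) is negation,
# ("imp", f, g) is the material conditional f ⊃ g.
def atoms(f):
    if isinstance(f, str):
        return {f}
    return set().union(*(atoms(g) for g in f[1:]))

def holds(f, v):
    if isinstance(f, str):
        return v[f]
    if f[0] == "not":
        return not holds(f[1], v)
    return (not holds(f[1], v)) or holds(f[2], v)  # "imp"

def entails(premises, goal):
    """Classical entailment, checked by enumerating all valuations."""
    names = sorted(atoms(goal).union(*(atoms(p) for p in premises)))
    for values in product([False, True], repeat=len(names)):
        v = dict(zip(names, values))
        if all(holds(p, v) for p in premises) and not holds(goal, v):
            return False
    return True

RULES = {"r1": ("B", "F"), "r2": ("P", ("not", "F"))}
W1 = ["B", ("imp", "P", "B")]  # hard information of c_1
W2 = W1 + ["P"]                # hard information of c_2

def proper_first_pass(hard, rules):
    """All scenarios S with S = Triggered(S) minus Conflicted(S)."""
    names = sorted(rules)
    found = []
    for k in range(len(names) + 1):
        for combo in combinations(names, k):
            base = list(hard) + [rules[n][1] for n in combo]
            keep = {n for n, (prem, concl) in rules.items()
                    if entails(base, prem)                  # triggered
                    and not entails(base, ("not", concl))}  # not conflicted
            if keep == set(combo):
                found.append(sorted(combo))
    return found

print(proper_first_pass(W1, RULES))  # [['r1']]
print(proper_first_pass(W2, RULES))  # [['r1'], ['r2']]
```

The second line of output shows the problem: for $c_2$ the condition is satisfied by two distinct scenarios, so it cannot by itself single out $\{r_2\}$.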

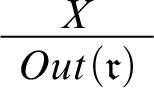

We introduce a new type of exclusionary rules, letting the reasoner take other rules out of consideration. To be able to formulate such rules, we extend the background language in two ways. First, we introduce rule names: every default rule $r_X$ is assigned a unique name $\mathfrak{r}_X$; the Fraktur script is used to distinguish rule names from the rules themselves. Second, we introduce a special predicate $Out(\cdot)$, with a view to forming expressions of the form $Out(\mathfrak{r}_X)$. The intended meaning of $Out(\mathfrak{r}_X)$ is that the rule $r_X$ is excluded or taken out of consideration.Footnote 12 For concreteness, let $\mathfrak{r}_1$ be the name of the familiar rule $r_1$. Then $Out(\mathfrak{r}_1)$ says that $r_1$ is excluded.

With names and the new predicate in hand, we can formulate a second negative condition on a rule’s inclusion in a proper scenario:

Definition 6 (Excluded rules)

Let $c = \langle \mathcal{W},\mathcal{R}\rangle$ be a context, and $\mathcal{S}$ a scenario based on this context. Then the rules from $\mathcal{R}$ that are excluded in the context of $\mathcal{S}$ are those that belong to the set $Excluded_{\mathcal{W},\mathcal{R}}(\mathcal{S}) = \{r\in \mathcal{R}: \mathcal{W}\cup Conclusion[\mathcal{S}]\vdash Out(\mathfrak{r})\}$.

Our full definition of proper scenarios, then, runs thus:Footnote 13

Definition 7 (Proper scenarios)

Let $c = \langle \mathcal{W},\mathcal{R}\rangle$ be a context and $\mathcal{S}$ a scenario based on it. Then $\mathcal{S}$ is a proper scenario based on c just in case

$$ \begin{array}{llll} \mathcal{S} &= &\{ r\in \mathcal{R} : & r \in Triggered_{\mathcal{W},\mathcal{R}}(\mathcal{S}), \\ &&& r\notin Conflicted_{\mathcal{W},\mathcal{R}}(\mathcal{S}), \\ &&& r\notin Excluded_{\mathcal{W},\mathcal{R}}(\mathcal{S}) \}. \end{array} $$

According to this definition, a proper scenario $\mathcal{S}$ contains all and only those rules that are triggered and neither conflicted nor excluded in it.

Returning to the example, $c_1$ and $c_2$ must be supplemented with the rule $r_3 = \frac{P}{Out(\mathfrak{r}_1)}$, saying that the rule $r_1$ must be taken out of consideration in case Tweety is a penguin. This should make good sense. Since penguins form a peculiar type of birds, it’s not reasonable to base one’s conclusion that a penguin flies on the idea that birds usually do. It’s easy to see that $\mathcal{S}_1$ is still the only proper scenario based on $c_1$, and that $\mathcal{S}_3 = \{r_2,r_3\}$ is the only proper scenario based on $c_2$. So the addition of $r_3$ leaves us with a unique proper scenario in each case.Footnote 14 And this gives us the intuitively correct results: given our definition of consequence, the formula F, saying that Tweety is able to fly, follows from $c_1$, and the formula $\neg F$, saying that Tweety isn’t able to fly, follows from $c_2$.
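The results for the supplemented contexts can be reproduced with a small brute-force version of the reasoner. This is a sketch under stated assumptions rather than a definitive implementation: formulas are nested tuples, $Out(\mathfrak{r}_1)$ is treated as the plain atom `"Out(r1)"`, entailment is decided by truth tables, and the names `RULES`, `W1`, `W2`, `proper_scenarios`, and `follows` are hypothetical.

```python
from itertools import combinations, product

# Hypothetical encoding: atoms are strings, ("not", f) is negation,
# ("imp", f, g) is the material conditional f ⊃ g.
def atoms(f):
    if isinstance(f, str):
        return {f}
    return set().union(*(atoms(g) for g in f[1:]))

def holds(f, v):
    if isinstance(f, str):
        return v[f]
    if f[0] == "not":
        return not holds(f[1], v)
    return (not holds(f[1], v)) or holds(f[2], v)  # "imp"

def entails(premises, goal):
    """Classical entailment, checked by enumerating all valuations."""
    names = sorted(atoms(goal).union(*(atoms(p) for p in premises)))
    for values in product([False, True], repeat=len(names)):
        v = dict(zip(names, values))
        if all(holds(p, v) for p in premises) and not holds(goal, v):
            return False
    return True

# r3 is the exclusionary rule; its conclusion is the atom "Out(r1)".
RULES = {"r1": ("B", "F"),
         "r2": ("P", ("not", "F")),
         "r3": ("P", "Out(r1)")}
W1 = ["B", ("imp", "P", "B")]  # hard information of c_1
W2 = W1 + ["P"]                # hard information of c_2

def proper_scenarios(hard, rules):
    """Scenarios equal to their triggered, unconflicted, unexcluded rules."""
    names = sorted(rules)
    found = []
    for k in range(len(names) + 1):
        for combo in combinations(names, k):
            base = list(hard) + [rules[n][1] for n in combo]
            keep = {n for n, (prem, concl) in rules.items()
                    if entails(base, prem)                  # triggered
                    and not entails(base, ("not", concl))   # not conflicted
                    and not entails(base, f"Out({n})")}     # not excluded
            if keep == set(combo):
                found.append(sorted(combo))
    return found

def follows(hard, rules, goal):
    """Definition 2: goal is entailed under every proper scenario.
    Vacuously true if there happen to be no proper scenarios."""
    return all(entails(list(hard) + [rules[n][1] for n in s], goal)
               for s in proper_scenarios(hard, rules))

print(proper_scenarios(W1, RULES))         # [['r1']]
print(proper_scenarios(W2, RULES))         # [['r2', 'r3']]
print(follows(W1, RULES, "F"))             # True
print(follows(W2, RULES, ("not", "F")))    # True
```

Enumerating all subsets is exponential in the number of rules, which is harmless for three rules but would not scale; the point here is only to mirror the definitions one for one.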

With this, our basic defeasible reasoner is complete. We interpret it as a model reasoner: if it outputs X in the context c, then it’s rational for one to, or one ought to, believe that X in the situation c stands for. And if the reasoner doesn’t output X in c, then it’s not rational for one to, or it’s not the case that one ought to, believe that X in the situation c stands for. It may look like the model is committed to the all-or-nothing picture of doxastic attitudes, but it actually can accommodate degree-of-confidence talk as well—more on this in Section 4.

I will sometimes represent contexts as inference graphs. The ones in Figure 1 represent the two contexts capturing the Tweety Triangle. Here’s how such graphs should be read: a black node at the bottom of the graph (that is, a node that isn’t grayed out) represents an atomic formula from the hard information. A double link from X to Y stands for a proposition of the form $X\supset Y$. A single link from X to Y stands for an ordinary default rule of the form $\frac{X}{Y}$, while a crossed out single link from X to Y stands for a default rule of the form $\frac{X}{\neg Y}$. A crossed out link that starts from a node X and points to another link stands for an exclusionary default of the form $\frac{X}{Out(\mathfrak{r})}$, with the second link representing the rule r.

Fig. 1 Tweety Triangle, $c_1$ (left) and $c_2$ (right).

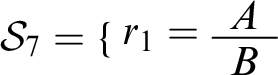

2.2 Capturing conciliationism

Now let’s see how the core idea motivating conciliatory views can be captured in the defeasible reasoner. As a first step, consider the following case, in which the conciliatory response seems particularly intuitive:

Mental Math. My friend and I have been going out to dinner for many years. We always tip 20% and divide the bill equally, and we always do the math in our heads. We’re quite accurate, but on those occasions where we’ve disagreed in the past, we’ve been right equally often. This evening seems typical, in that I don’t feel unusually tired or alert, and neither my friend nor I have had more wine or coffee than usual. I get $43 in my mental calculation, and become quite confident of this answer. But then my friend says she got $45. I dramatically reduce my confidence that $43 is the right answer.Footnote 15

Mental Math describes fairly complex reasoning, and we shouldn’t miss three of its features: first, we can distinguish two components in it, the mathematical calculations and the reasoning prompted by the friend’s announcement. What’s more, it seems perfectly legitimate to call the former the agent’s first-order reasoning and the latter her second-order reasoning. Second, the agent’s initial confidence in $43 being the correct answer is based on her calculations, and it gets reduced because the agent becomes suspicious of them. And third, the friend’s announcement provides a very good reason for the agent to suspect that she may have erred in her calculations.

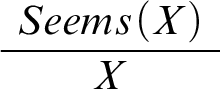

Bearing this in mind, let’s capture the agent’s reasoning in the model. To this end, we introduce a new predicate $Seems(\cdot)$ to our language. Now, $Seems(X)$ is meant to express the thought that the agent has reasoned to the best of her ability about whether some proposition X is true and come to the conclusion that it is true as a result. I doubt that there’s much informative we can say about the reasoning implied in $Seems(\cdot)$. One thing should be clear though: it’s going to depend on X and thus also differ from one case to another. If X is a mathematical claim, $Seems(X)$ implies calculations of the sort described in Mental Math. If X is a philosophical claim, $Seems(X)$ implies a careful philosophical investigation. Also, note that $Seems(X)$ is perfectly compatible with $\neg X$. Since the agent is fallible, the fact that she has reasoned to the best of her ability about the issue doesn’t guarantee that the conclusion is correct.

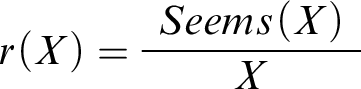

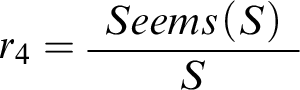

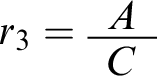

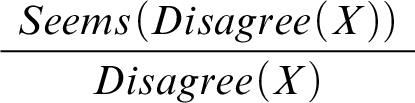

Presumably, though, situations where the agent’s best reasoning leads her astray are relatively rare, and so it’s reasonable for her to go by her best reasoning. After all, she doesn’t have any other alternative. This motivates the following default rule schema:

- Significance of first-order reasoning: $\frac{Seems(X)}{X}$, or if your best first-order (or domain-specific) reasoning suggests that X, conclude X by default.

In Mental Math, we would instantiate the schema with the rule

, with S standing for the proposition that my share of the bill is $43. What the friend’s announcement brings into question, then, is exactly the connection between

, with S standing for the proposition that my share of the bill is $43. What the friend’s announcement brings into question, then, is exactly the connection between

![]() $Seems(S)$

and S. While my mental calculations usually are reliable, now and then I make a mistake. The announcement suggests that this may have happened when I reasoned toward S.

$Seems(S)$

and S. While my mental calculations usually are reliable, now and then I make a mistake. The announcement suggests that this may have happened when I reasoned toward S.

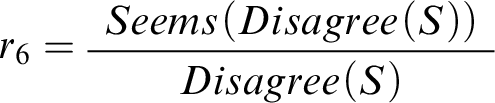

To capture the effects of the announcement, we use another designated predicate

![]() $Disagree(\cdot )$

. The formula

$Disagree(\cdot )$

. The formula

![]() $Disagree(X)$

is meant to express the idea that the agent is in genuine disagreement about whether X. And I say genuine to distinguish the sorts of disagreements that conciliationists take to be epistemically significant from merely apparent disagreements, such as verbal disagreements and disagreements based on misunderstandings.Footnote

16

So

$Disagree(X)$

is meant to express the idea that the agent is in genuine disagreement about whether X. And I say genuine to distinguish the sorts of disagreements that conciliationists take to be epistemically significant from merely apparent disagreements, such as verbal disagreements and disagreements based on misunderstandings.Footnote

16

So

![]() $Disagree(S)$

means that there’s a genuine disagreement—between me and my friend—over whether my share of the bill is $43. We capture the effects of this disagreement by means of the default rule

$Disagree(S)$

means that there’s a genuine disagreement—between me and my friend—over whether my share of the bill is $43. We capture the effects of this disagreement by means of the default rule

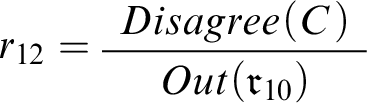

, which says, roughly, that in case there’s genuine disagreement about whether S, the rule

, which says, roughly, that in case there’s genuine disagreement about whether S, the rule

![]() $r_4$

, which lets me conclude S on the basis of

$r_4$

, which lets me conclude S on the basis of

![]() $Seems(S)$

, is to be excluded. The rule

$Seems(S)$

, is to be excluded. The rule

![]() $r_5$

, then, is what expresses the distinctively conciliatory component of the complex reasoning discussed in Mental Math.

$r_5$

, then, is what expresses the distinctively conciliatory component of the complex reasoning discussed in Mental Math.

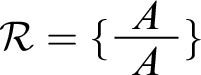

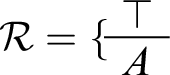

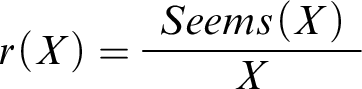

Now notice that

![]() $r_5$

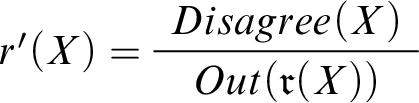

too instantiates a default rule schema, namely:

$r_5$

too instantiates a default rule schema, namely:

- Significance of disagreement: $\frac{Disagree(X)}{Out(\mathfrak {r})}$, where r is the rule $\frac{Seems(X)}{X}$, or if there’s genuine disagreement about whether X, stop relying on your first-order reasoning about X by default.

This schema expresses the core idea motivating conciliatory views, or the core of conciliationism, in our model.Footnote 17

As a first pass, we might try to express Mental Math in the context $c_3 = \langle \mathcal {W},\mathcal {R}\rangle $ where $\mathcal {W} = \{Seems(S), Disagree(S)\}$ and $\mathcal {R} = \{r_4,r_5\}$ (see Figure 2 for a picture). While S doesn’t follow from $c_3$, as desired, this context doesn’t represent some important features of the scenario. In particular, it misleadingly suggests that the agent doesn’t reason to the conclusion that there’s genuine disagreement over the bill’s amount, but, rather, starts off knowing it for a fact. Admittedly, the description glosses over this component of the reasoning, but it’s clearly implied by the background story: “... my friend and I have been going out to dinner for many years ... we’ve been right equally often ... neither my friend nor I have had more wine or coffee than usual.” And nothing stands in the way of capturing the reasoning implied by this description by instantiating the familiar schema $\frac{Seems(X)}{X}$ with $Disagree(S)$.

Fig. 2 Mental Math, first pass.

We represent Mental Math using the pair of contexts $c_4 = \langle \mathcal {W},\mathcal {R}\rangle $ and $c_5 = \langle \mathcal {W}', \mathcal {R}\rangle $, with the first standing for the situation before the friend’s announcement and the second right after it. The set $\mathcal {W}$ is comprised of the formula $Seems(S)$, and $\mathcal {W}'$ of the formulas $Seems(S)$ and $Seems(Disagree(S))$. Both contexts share the same set of rules $\mathcal {R}$, comprised of the familiar rules $r_4$ and $r_5$, as well as the new rule $r_6 = \frac{Seems(Disagree(S))}{Disagree(S)}$, another instance of the first-order reasoning schema. The two contexts are depicted in Figure 3. It’s not hard to verify that $\{r_4\}$ is the unique proper scenario based on $c_4$, and that, therefore, S follows from $c_4$, suggesting that, before the announcement, it’s rational for the agent to believe that her share of the bill is \$43. As for $c_5$, here again we have only one proper scenario, namely, $\{r_5,r_6\}$. This implies that S does not follow from $c_5$, suggesting that, after the announcement, it’s not rational for the agent to believe that her share of the bill is \$43. So our model delivers the intuitive result.

Fig. 3 Mental Math, final, $c_4$ (left) and $c_5$ (right).

It also supports the following take on conciliationism: it’s not a simple view, on which you’re invariably required to give up your belief in X as soon as you find yourself in disagreement over X with an epistemic peer—or, perhaps, as soon as it’s rational for you to think that you’re in such a disagreement. Instead, it’s a more structured view, saying roughly the following: if your best first-order (or domain-specific) reasoning suggests that X and it’s rational for you to believe that you’re in disagreement over X with an epistemic peer, then, under normal circumstances, you should bracket your first-order reasoning about X and avoid forming beliefs on its basis.Footnote 18
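The two verification claims made for $c_4$ and $c_5$ can be replayed mechanically. What follows is a minimal sketch under stated assumptions, not the paper’s own implementation: it takes the three conditions on proper scenarios from Section 2.1 to be that a rule is triggered, not conflicted, and not excluded; it treats every formula as an atomic string; and it lets set membership stand in for derivability, which is adequate only because these contexts contain nothing but atoms. The names `Rule`, `negate`, `proper_scenarios`, `names`, and `follows` are hypothetical, and the premises and conclusions given to `r4`, `r5`, and `r6` are read off the prose glosses of those rules.

```python
from itertools import combinations
from typing import NamedTuple


class Rule(NamedTuple):
    name: str        # e.g. "r4"
    premise: str     # triggering condition, Premise[r]
    conclusion: str  # Conclusion[r]


def negate(formula: str) -> str:
    """Syntactic negation on atomic strings (assumption: '~' marks negation)."""
    return formula[1:] if formula.startswith("~") else "~" + formula


def proper_scenarios(hard_info, rules):
    """Brute-force search for scenarios S that coincide with the set of rules
    that are triggered, not conflicted, and not excluded relative to W and S."""
    found = []
    for size in range(len(rules) + 1):
        for candidate in combinations(rules, size):
            facts = set(hard_info) | {r.conclusion for r in candidate}
            admitted = {
                r for r in rules
                if r.premise in facts                   # triggered
                and negate(r.conclusion) not in facts   # not conflicted
                and f"Out({r.name})" not in facts       # not excluded
            }
            if admitted == set(candidate):
                found.append(admitted)
    return found


def names(scenarios):
    """Show each scenario as a set of rule names."""
    return [{r.name for r in s} for s in scenarios]


def follows(formula, hard_info, rules):
    """X follows iff it holds in every proper scenario
    (membership stands in for derivability)."""
    return all(
        formula in set(hard_info) | {r.conclusion for r in s}
        for s in proper_scenarios(hard_info, rules)
    )


# Rules shared by both contexts (contents assumed from the prose glosses).
R = [
    Rule("r4", "Seems(S)", "S"),
    Rule("r5", "Disagree(S)", "Out(r4)"),
    Rule("r6", "Seems(Disagree(S))", "Disagree(S)"),
]
W_before = {"Seems(S)"}                        # hard information of c4
W_after = {"Seems(S)", "Seems(Disagree(S))"}   # hard information of c5

print(names(proper_scenarios(W_before, R)))  # a single scenario holding r4
print(names(proper_scenarios(W_after, R)))   # a single scenario holding r5 and r6
print(follows("S", W_before, R), follows("S", W_after, R))  # True False
```

The search enumerates every subset of the rules, so it is exponential in their number. That is harmless for three rules but would not scale to large contexts.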

2.3 Disagreements over disagreement

Now we can turn to the self-defeat objection. Here’s the scenario I used to illustrate it, presented from the first-person perspective:

Double Disagreement. I consider myself an able philosopher with special interests in metaphysics and social epistemology. I’ve reasoned very carefully about the vexed topic of free will, and I’ve come to the conclusion that we have libertarian free will. I’ve also spent a fair amount of time thinking about the issues surrounding peer disagreement, becoming convinced that conciliationism is correct and that one has to give up one’s well-reasoned opinion when faced with a disagreeing peer. Then, to my surprise, I discover that my friend metaphysician Milo disagrees with me about the existence of free will, and that my friend epistemologist Evelyn disagrees with me about conciliationism.Footnote 19

Elga [16] famously argued that cases like this show that conciliatory views are inconsistent.Footnote 20 His line of reasoning runs roughly thus. On the one hand, conciliationism seems to recommend that I abandon my belief in the existence of free will in response to my disagreement with Milo. On the other, conciliationism seems to recommend that I do not abandon my belief in the existence of free will. How? Well, it recommends that I abandon my belief in conciliationism in response to my disagreement with Evelyn. But with this belief gone, my disagreement with Milo seems to lose its epistemic significance for me, implying that it must be okay for me to retain the belief in the existence of free will. Putting the two together, conciliationism appears to support two inconsistent conclusions, that I ought to abandon the belief in free will, and that it’s not the case that I ought to.

Now let’s see if our model reasoner supports this train of thought. The first step is to capture Double Disagreement in a context, and here already we face a difficulty: we need to model the agent’s becoming convinced that conciliationism is correct. We know that conciliationism can be modeled as a specific reasoning policy, but we don’t yet know how to model the reasoning that puts such a policy in place. What we’ll do, then, is start with a partial representation, and then gradually add the missing pieces. Let C stand for the proposition that conciliationism is correct (think of C as a placeholder to be made precise later on) and L for the proposition that we have libertarian free will. As our first pass, we represent Double Disagreement as the context $c_6 = \langle \mathcal {W},\mathcal {R}\rangle $ where $\mathcal {W}$ is comprised of $Seems(L)$, $Seems(C)$, $Seems(Disagree(L))$, and $Seems(Disagree(C))$, and where $\mathcal {R}$ contains the following rules:

-

: If my first-order reasoning about free will suggests that it exists, I conclude that it does indeed exist by default.

: If my first-order reasoning about free will suggests that it exists, I conclude that it does indeed exist by default. -

: If my (first-order) reasoning about my disagreement with Milo suggests that it’s genuine, I conclude that this disagreement is genuine by default.

: If my (first-order) reasoning about my disagreement with Milo suggests that it’s genuine, I conclude that this disagreement is genuine by default. -

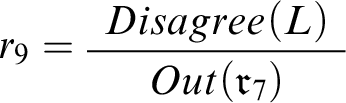

: If there’s genuine disagreement over free will, I back off from my first-order reasoning about it by default.

: If there’s genuine disagreement over free will, I back off from my first-order reasoning about it by default. -

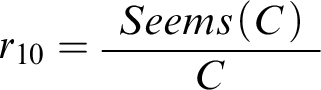

: If my reasoning about the epistemic significance of disagreement suggests that conciliationism is correct, I conclude that it is by default.

: If my reasoning about the epistemic significance of disagreement suggests that conciliationism is correct, I conclude that it is by default. -

: If my reasoning about my disagreement with Evelyn suggests that it’s genuine, I conclude that this disagreement is genuine by default.

: If my reasoning about my disagreement with Evelyn suggests that it’s genuine, I conclude that this disagreement is genuine by default. -

: If there’s genuine disagreement over conciliationism, I back off from my first-order reasoning about it by default.

: If there’s genuine disagreement over conciliationism, I back off from my first-order reasoning about it by default.

The context $c_6$ is depicted in Figure 4.

Fig. 4 Double Disagreement, first pass.

There’s one proper scenario based on

![]() $c_6$

, namely,

$c_6$

, namely,

![]() $\{r_{8},r_{9},r_{11},r_{12}\}$

, and this implies that neither C, nor L follow from this context. There’s nothing inconsistent here, but the reasoner’s response may seem odd: it doesn’t draw the conclusion that conciliationism is correct, and yet backs off from its reasoning about free will on distinctively conciliatory grounds. (I’ll have much more to say about this seeming oddness below.) However, the main problem is that, in

$\{r_{8},r_{9},r_{11},r_{12}\}$

, and this implies that neither C, nor L follow from this context. There’s nothing inconsistent here, but the reasoner’s response may seem odd: it doesn’t draw the conclusion that conciliationism is correct, and yet backs off from its reasoning about free will on distinctively conciliatory grounds. (I’ll have much more to say about this seeming oddness below.) However, the main problem is that, in

![]() $c_6$

, there’s no connection between the proposition saying that conciliationism is correct and the conciliatory reasoning policy, or between C and the rules

$c_6$

, there’s no connection between the proposition saying that conciliationism is correct and the conciliatory reasoning policy, or between C and the rules

![]() $r_{9}$

and

$r_{9}$

and

![]() $r_{12}$

.

$r_{12}$

.

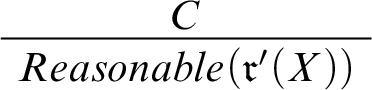

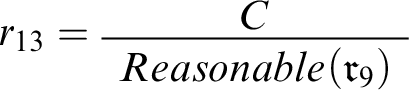

We’re going to put the connection in place, proceeding in two steps and starting by linking C and $r_9$. To this end, let’s focus on a curtailed version of Double Disagreement in which I never find out that Evelyn disagrees with me over conciliationism; call this case Disagreement with Milo. We can express it in the context $c_7 = \langle \mathcal {W},\mathcal {R}\rangle $, which is like $c_6$, except that its hard information $\mathcal {W}$ lacks the formula $Seems(Disagree(C))$, and its set of rules lacks $r_{11}$ and $r_{12}$.Footnote 21

Since C says that conciliationism is correct and

![]() $r_9$

is an instance of the conciliatory schema

$r_9$

is an instance of the conciliatory schema

, it seems reasonable to arrange things in such a way that C is what puts

, it seems reasonable to arrange things in such a way that C is what puts

![]() $r_9$

in place. To this end, we extend our language with another designated predicate

$r_9$

in place. To this end, we extend our language with another designated predicate

![]() $Reasonable(\cdot )$

. Where

$Reasonable(\cdot )$

. Where

![]() $Out(\mathfrak {r})$

says that r is taken out of consideration,

$Out(\mathfrak {r})$

says that r is taken out of consideration,

![]() $Reasonable(\mathfrak {r})$

says that r is a prima facie reasonable rule to follow, or that r is among the rules that the agent could base her conclusions on. The formula

$Reasonable(\mathfrak {r})$

says that r is a prima facie reasonable rule to follow, or that r is among the rules that the agent could base her conclusions on. The formula

![]() $Reasonable(\mathfrak {r}_9)$

, in particular, says that

$Reasonable(\mathfrak {r}_9)$

, in particular, says that

![]() $r_9$

is a prima facie reasonable rule to follow.Footnote

22

We’re going to have to update our defeasible reasoner so that it can take this predicate into account. But first let’s connect C and

$r_9$

is a prima facie reasonable rule to follow.Footnote

22

We’re going to have to update our defeasible reasoner so that it can take this predicate into account. But first let’s connect C and

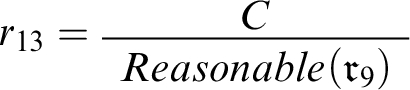

![]() $r_9$

by adding the rule

$r_9$

by adding the rule

to

to

![]() $c_7$

. The complete context is depicted in Figure 5. Notice that the graph depicting it includes two types of links we haven’t seen before. First, there’s a single link (standing for

$c_7$

. The complete context is depicted in Figure 5. Notice that the graph depicting it includes two types of links we haven’t seen before. First, there’s a single link (standing for

![]() $r_{13}$

) that points to another one and that isn’t crossed out. From now on, links of this type will represent rules of the form

$r_{13}$

) that points to another one and that isn’t crossed out. From now on, links of this type will represent rules of the form

. Second, there’s a dashed link (standing for

. Second, there’s a dashed link (standing for

![]() $r_9$

). From now on, links of this type will represent rules that, intuitively, the reasoner can start relying on only after it has concluded that they are prima facie reasonable to follow.Footnote

23

$r_9$

). From now on, links of this type will represent rules that, intuitively, the reasoner can start relying on only after it has concluded that they are prima facie reasonable to follow.Footnote

23

Fig. 5 Disagreement with Milo.

An easy check reveals that $Reasonable(\mathfrak {r}_9)$ and C do, while L does not follow from $c_7$. This is the intuitive result, but we can’t rest content with what we have here. To see why, consider the context $c_8 = \langle \mathcal {W}\cup \{Out(\mathfrak {r}_{10})\},\mathcal {R}\rangle $ that extends the hard information of $c_7$ with the formula $Out(\mathfrak {r}_{10})$, saying that the rule $r_{10} = \frac{Seems(C)}{C}$ is to be taken out of consideration. It can be verified that the only proper scenario based on $c_8$ is $\{r_8,r_9\}$, and that, therefore, neither C, nor $Reasonable(\mathfrak {r}_9)$, nor L follows from it. But this is the wrong result: the formula L doesn’t follow because the rule supporting it gets excluded by the rule $r_9$, which was supposed to depend on the reasoner concluding C and $Reasonable(\mathfrak {r}_9)$. Thus, a rule that, intuitively, should have no effects whatsoever ends up precluding the reasoner from deriving L.
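The behaviour of the unrevised reasoner on $c_7$ and $c_8$ can be checked with a small brute-force search. This is a sketch under assumptions rather than the paper’s implementation: formulas are atomic strings, membership replaces derivability, the three conditions on proper scenarios are taken to be triggered, not conflicted, and not excluded, and the rule contents are read off the informal glosses of the rules. All identifiers (`Rule`, `negate`, `proper_scenarios`, `names`) are hypothetical.

```python
from itertools import combinations
from typing import NamedTuple


class Rule(NamedTuple):
    name: str
    premise: str
    conclusion: str


def negate(formula: str) -> str:
    """Syntactic negation on atomic strings (assumption: '~' marks negation)."""
    return formula[1:] if formula.startswith("~") else "~" + formula


def proper_scenarios(hard_info, rules):
    """Original (three-condition) notion: S must equal the set of rules that
    are triggered, not conflicted, and not excluded relative to W and S."""
    found = []
    for size in range(len(rules) + 1):
        for candidate in combinations(rules, size):
            facts = set(hard_info) | {r.conclusion for r in candidate}
            admitted = {
                r for r in rules
                if r.premise in facts
                and negate(r.conclusion) not in facts
                and f"Out({r.name})" not in facts
            }
            if admitted == set(candidate):
                found.append(admitted)
    return found


def names(scenarios):
    return [{r.name for r in s} for s in scenarios]


# Rules of c7 (and c8); contents assumed from the prose glosses.
R = [
    Rule("r7", "Seems(L)", "L"),
    Rule("r8", "Seems(Disagree(L))", "Disagree(L)"),
    Rule("r9", "Disagree(L)", "Out(r7)"),
    Rule("r10", "Seems(C)", "C"),
    Rule("r13", "C", "Reasonable(r9)"),
]
W7 = {"Seems(L)", "Seems(C)", "Seems(Disagree(L))"}
W8 = W7 | {"Out(r10)"}  # c8 adds Out(r10) to the hard information

c7_scenarios = names(proper_scenarios(W7, R))
c8_scenarios = names(proper_scenarios(W8, R))
print(c7_scenarios)  # one scenario: r8, r9, r10, r13
print(c8_scenarios)  # one scenario: r8, r9
```

In the second context `r9` still fires even though `Reasonable(r9)` is never derived, which is the defect just described: the unrevised reasoner simply ignores the new predicate.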

To make the new predicate and rules like $r_9$ do real work, the inner workings of the reasoner have to be modified. And my general strategy here is to let the reasoner use a rule r only on the condition that it can infer a formula of the form $Reasonable(\mathfrak {r})$, just like currently it can use a rule r only on the condition that it can infer its triggering condition, $Premise[r]$. So, from now on, the reasoner is allowed to use a rule r only in case it can infer both $Premise[r]$ and $Reasonable(\mathfrak {r})$. And there are two ways for it to infer a formula of the form $Reasonable(\mathfrak {r})$: from the hard information or by means of other rules. One immediate implication of this is that some $Reasonable$-formulas are going to have to be included in the hard information. But this should make good sense: the presence of $Reasonable(\mathfrak {r})$ in $\mathcal {W}$ can be understood in terms of the reasoner taking r to be a prima facie reasonable rule to follow from the outset.Footnote 24

In Section 2.1, the central notion of a proper scenario was defined by appealing to three conditions on rules. Now we amend it by adding a fourth one:

Definition 8 (Reasonable rules)

Let $c = \langle \mathcal {W},\mathcal {R}\rangle $ be a context, and $\mathcal {S}$ a scenario based on it. Then the rules from $\mathcal {R}$ that are prima facie reasonable (to follow) in the context of the scenario $\mathcal {S}$ are those that belong to the set $Reasonable_{\mathcal {W},\mathcal {R}}(\mathcal {S}) = \{r \in \mathcal {R}: \mathcal {W}\cup Conclusion[\mathcal {S}]\vdash Reasonable(\mathfrak {r})\}$.

Definition 9 (Proper scenarios, revised)

Let $\langle \mathcal {W},\mathcal {R}\rangle $ be a context and $\mathcal {S}$ a scenario based on it. Then $\mathcal {S}$ is a proper scenario based on $\langle \mathcal {W},\mathcal {R}\rangle $ just in case

$$ \begin{align*}\begin{array}{llll} \mathcal{S} &= &\{ r\in \mathcal{R} : & r\in Reasonable_{\mathcal{W},\mathcal{R}}(\mathcal{S}), \\ &&& r \in Triggered_{\mathcal{W},\mathcal{R}}(\mathcal{S}), \\ &&& r\notin Conflicted_{\mathcal{W},\mathcal{R}}(\mathcal{S}), \\ &&& r\notin Excluded_{\mathcal{W},\mathcal{R}}(\mathcal{S}) \}. \end{array} \end{align*} $$

With this, we’re all done. There’s no need to change the definition of consequence.Footnote 25 The next observation shows that our revised reasoner is a conservative generalization of the original one—the proof is provided in the Appendix:

Observation 2.1. Let $c = \langle \mathcal {W},\mathcal {R}\rangle $ be an arbitrary regular context where no $Reasonable$-formulas occur or, more precisely, a context where no subformula of any of the formulas in $\mathcal {W}$ or any of the premises or conclusions of the rules in $\mathcal {R}$ is of the form $Reasonable(\mathfrak {r})$. Then there’s a context $c' = \langle \mathcal {W}\cup \{Reasonable(\mathfrak {r}):r\in \mathcal {R}\},\mathcal {R}\rangle $ that’s equivalent to c. Or more explicitly, X follows from c if and only if X follows from $c'$ for all X such that no subformula of X is of the form $Reasonable(\mathfrak {r})$.

Our final rendering of Disagreement with Milo is the context

![]() $c_9 = \langle \mathcal {W},\mathcal {R}\rangle $

, which is like

$c_9 = \langle \mathcal {W},\mathcal {R}\rangle $

, which is like

![]() $c_7$

, except for its hard information

$c_7$

, except for its hard information

![]() $\mathcal {W}$

also includes the formulas saying that the reasoner takes the rules

$\mathcal {W}$

also includes the formulas saying that the reasoner takes the rules

![]() $r_7$

,

$r_7$

,

![]() $r_8$

,

$r_8$

,

![]() $r_{10}$

, and

$r_{10}$

, and

![]() $r_{13}$

to be prima facie reasonable to follow, that is,

$r_{13}$

to be prima facie reasonable to follow, that is,

![]() $\mathcal {W}$

includes the formulas

$\mathcal {W}$

includes the formulas

![]() $Reasonable(\mathfrak {r}_{7})$

,

$Reasonable(\mathfrak {r}_{7})$

,

![]() $Reasonable(\mathfrak {r}_{8})$

,

$Reasonable(\mathfrak {r}_{8})$

,

![]() $Reasonable(\mathfrak {r}_{10})$

, and

$Reasonable(\mathfrak {r}_{10})$

, and

![]() $Reasonable(\mathfrak {r}_{13})$

. It’s not difficult to see that the only proper scenario based on

$Reasonable(\mathfrak {r}_{13})$

. It’s not difficult to see that the only proper scenario based on

![]() $c_9$

is

$c_9$

is

![]() $\{r_8,r_9,r_{10},r_{13}\}$

, and so that C does, while L doesn’t follow from this context. Thus, our analysis suggests that the correct response to the scenario is to stick to the belief in conciliationism and to abandon the belief in free will. This seems perfectly intuitive.Footnote

26

$\{r_8,r_9,r_{10},r_{13}\}$

, and so that C does, while L doesn’t follow from this context. Thus, our analysis suggests that the correct response to the scenario is to stick to the belief in conciliationism and to abandon the belief in free will. This seems perfectly intuitive.Footnote

26

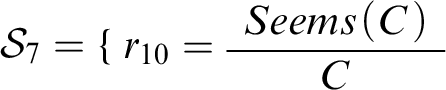

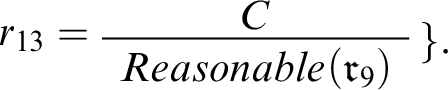
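The revised notion of a proper scenario from Definition 9 can be tried out on $c_9$ in a few lines. The sketch below is an illustration under assumptions, not the paper’s implementation: formulas are atomic strings, set membership stands in for $\vdash$, the Triggered, Conflicted, and Excluded conditions are assumed to follow the same pattern as the Reasonable condition in Definition 8, and the rule contents are read off the informal glosses. The identifiers `Rule`, `negate`, `proper_scenarios`, `names`, and `follows` are hypothetical. The second context checked, which adds $Out(\mathfrak {r}_{10})$ to the hard information of $c_9$, is a variant not spelled out in the text.

```python
from itertools import combinations
from typing import NamedTuple


class Rule(NamedTuple):
    name: str
    premise: str
    conclusion: str


def negate(formula: str) -> str:
    """Syntactic negation on atomic strings (assumption: '~' marks negation)."""
    return formula[1:] if formula.startswith("~") else "~" + formula


def proper_scenarios(hard_info, rules):
    """Revised notion: S must equal the set of rules that are reasonable,
    triggered, not conflicted, and not excluded relative to W and S."""
    found = []
    for size in range(len(rules) + 1):
        for candidate in combinations(rules, size):
            facts = set(hard_info) | {r.conclusion for r in candidate}
            admitted = {
                r for r in rules
                if f"Reasonable({r.name})" in facts     # reasonable (new)
                and r.premise in facts                  # triggered
                and negate(r.conclusion) not in facts   # not conflicted
                and f"Out({r.name})" not in facts       # not excluded
            }
            if admitted == set(candidate):
                found.append(admitted)
    return found


def names(scenarios):
    return [{r.name for r in s} for s in scenarios]


def follows(formula, hard_info, rules):
    """X follows iff it holds in every proper scenario."""
    return all(
        formula in set(hard_info) | {r.conclusion for r in s}
        for s in proper_scenarios(hard_info, rules)
    )


R = [
    Rule("r7", "Seems(L)", "L"),
    Rule("r8", "Seems(Disagree(L))", "Disagree(L)"),
    Rule("r9", "Disagree(L)", "Out(r7)"),
    Rule("r10", "Seems(C)", "C"),
    Rule("r13", "C", "Reasonable(r9)"),
]
W9 = {
    "Seems(L)", "Seems(C)", "Seems(Disagree(L))",
    "Reasonable(r7)", "Reasonable(r8)", "Reasonable(r10)", "Reasonable(r13)",
}
W9_out = W9 | {"Out(r10)"}  # variant: the rule supporting C is taken out

print(names(proper_scenarios(W9, R)))              # one scenario: r8, r9, r10, r13
print(follows("C", W9, R), follows("L", W9, R))    # True False
print(names(proper_scenarios(W9_out, R)))          # one scenario: r7, r8
print(follows("L", W9_out, R))                     # True
```

On these assumptions, once nothing supports `Reasonable(r9)`, the rule `r9` no longer blocks the reasoner from deriving L, which is the behaviour the revision was meant to secure.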

Now let’s zoom in on the other half of the story in Double Disagreement, forgetting about free will for a second and restricting attention to conciliationism. Our preliminary formalization included the formulas $Seems(C)$ and $Seems(Disagree(C))$, as well as the following three rules: $r_{10} = \frac{Seems(C)}{C}$, $r_{11} = \frac{Seems(Disagree(C))}{Disagree(C)}$, and $r_{12} = \frac{Disagree(C)}{Out(\mathfrak {r}_{10})}$. We found it wanting because it didn’t connect C and $r_{12}$. Now, however, we can complete this formalization by supplementing it with the rule $r_{14} = \frac{C}{Reasonable(\mathfrak {r}_{12})}$, as well as $Reasonable$-formulas saying that $r_{10}$, $r_{11}$, and $r_{14}$ are prima facie reasonable rules. The resulting context $c_{10}$ is depicted in Figure 6. (Note that here and elsewhere formulas of the form $Reasonable(\mathfrak {r})$ are not explicitly represented.)

Fig. 6 Disagreement with Evelyn.

As it turns out, however, there are no proper scenarios based on $c_{10}$.Footnote 27 This is bad news for the advocates of conciliatory views, since no proper scenarios means that X follows from $c_{10}$ for any formula X whatsoever: on our definition of consequence, a formula X follows from a context $c = \langle \mathcal {W},\mathcal {R}\rangle $ if and only if $\mathcal {W}\cup Conclusion[\mathcal {S}]\vdash X$ for every proper scenario $\mathcal {S}$ based on c. But when there are no proper scenarios, every formula satisfies the right-hand side of the biconditional vacuously, and so every formula follows from the context. What’s more, the context capturing the entire story recounted in Double Disagreement delivers the same result. Merging $c_9$ and $c_{10}$, we acquire the context $c_{11}$, our final representation of the scenario (see Figure 7). Yet again, there are no proper scenarios based on $c_{11}$, and X follows from $c_{11}$ for all X. Thus, our carefully designed model reasoner seems to suggest that the correct conciliatory response to Double Disagreement is to conclude everything.Footnote 28

Fig. 7 Double Disagreement, final.
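The vacuity behind this result can be made concrete with a minimal Python sketch. It assumes a drastically simplified notion of derivability (a formula counts as derivable just in case it is a member of the relevant set), and the names `follows`, `world` and `proper_scenarios` are illustrative choices, not part of the formal model.

```python
def follows(formula, world, proper_scenarios):
    """X follows from a context iff W ∪ Conclusion[S] derives X for every proper scenario S.

    Assumptions: each proper scenario is represented by the set of its
    conclusions, and derivability is simplified to set membership.
    """
    return all(formula in (world | conclusions) for conclusions in proper_scenarios)


world = {"A"}

# One proper scenario: only what W and the scenario's conclusions support follows.
one_scenario = [{"B"}]
print(follows("B", world, one_scenario))  # True
print(follows("C", world, one_scenario))  # False

# No proper scenarios: the universal condition holds vacuously for any formula.
print(follows("C", world, []))      # True
print(follows("not C", world, []))  # True
```

The last two calls show the problem in miniature: with an empty list of proper scenarios, both a formula and its negation come out as consequences.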

We can think of this problem as the formal version of the self-defeat objection to conciliatory views that we started with. In the end, putting forth a model (conciliatory) reasoner which suggests that concluding everything is the correct response to scenarios of a certain shape is much like advancing a (conciliatory) view that can issue inconsistent recommendations. The fact that this formal problem obtains lends support to Elga’s pessimistic conclusion that conciliatory views are inherently flawed.

Luckily, though, the problem can be addressed.

3 Moving to argumentation theory

The fact that conciliationism turns on itself in Double Disagreement is only part of the reason why our model reasoner suggests concluding everything. Its other part is something of a technical nuisance: default logic—which our reasoner is based on—isn’t particularly well-suited to handle contexts containing what we can call vicious cycles or self-defeating chains of rules.Footnote 29 The main goal of this section is to clear this nuisance. Once it’s been cleared, we’ll see that conciliatory views do not have to issue inconsistent directives in Double Disagreement and other scenarios like it.

In order to be in a position to handle contexts containing vicious cycles adequately (including the context $c_{11}$, which expresses Double Disagreement and happens to contain such a cycle in the form of the chain of rules $r_{10}$–$r_{14}$–$r_{12}$), we go beyond default logic and draw on the resources of a more general formal framework called abstract argumentation theory. As Dung [13] has shown in his seminal work, default logic, as well as many other formalisms for defeasible reasoning, can be seen as special cases of argumentation theory. One implication of this is that it’s possible to formulate an argumentation theory-based reasoner that picks out the same consequence relation as our default logic-based reasoner from the previous section. And that’s just what we’re going to do. For, first, a simple tweak to the new reasoner will let it handle cycles adequately, and, second, we’ll need the additional resources of argumentation theory later anyway (to capture degrees of confidence in Section 4).

The remainder of this section is structured as follows. Sections 3.1–3.3 introduce abstract argumentation, relate it to default logic, and formulate the more sophisticated reasoner. Section 3.4 returns to Double Disagreement, explains how this reasoner handles it, and contrasts its recommendations with the proposals for responding to the self-defeat objection from the literature.

3.1 Argument frameworks

In default logic, conclusions are derived on the basis of contexts. In argumentation theory, they are derived on the basis of argument (or argumentation) frameworks. Formally, such frameworks are pairs of the form $\langle \mathcal {A}, \leadsto \rangle $, where $\mathcal {A}$ is a set of arguments (the elements of which can be anything) and $\leadsto $ is a defeat relation among them.Footnote 30 Thus, for any two arguments $\mathcal {S}$ and $\mathcal {S}'$ in $\mathcal {A}$, the relation $\leadsto $ can tell us whether $\mathcal {S}$ defeats $\mathcal {S}'$ or not.Footnote 31 We denote argument frameworks with the letter $\mathcal {F}$. What argumentation theory does is provide a number of sensible ways for selecting the set of winning arguments of any given framework $\mathcal {F}$, the set which, in its turn, determines the conclusions that can be drawn on the basis of $\mathcal {F}$. Since the frameworks we focus on will be constructed from contexts, argumentation theory will let us determine the conclusions that can be drawn on the basis of any given context c.
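To make the idea of a framework and of "selecting winning arguments" more tangible, here is a minimal Python sketch. It represents a framework as a set of argument labels plus a set of ordered pairs for the defeat relation, and it computes stable extensions, one of the standard selection methods in Dung-style argumentation. This is an assumption made for illustration: the reasoner developed in this paper may select winning arguments differently, and the labels `a`, `b`, `c` are hypothetical.

```python
from itertools import combinations


def stable_extensions(arguments, defeats):
    """Return every stable extension of the framework (arguments, defeats).

    A set E is stable iff it is conflict-free (no member defeats a member)
    and every argument outside E is defeated by some member of E.
    """
    args = sorted(arguments)
    result = []
    for size in range(len(args) + 1):
        for candidate in combinations(args, size):
            chosen = set(candidate)
            conflict_free = not any((a, b) in defeats for a in chosen for b in chosen)
            defeats_rest = all(
                any((a, b) in defeats for a in chosen) for b in set(args) - chosen
            )
            if conflict_free and defeats_rest:
                result.append(chosen)
    return result


# Hypothetical framework: "a" defeats "b", and "b" defeats "c".
chain = stable_extensions({"a", "b", "c"}, {("a", "b"), ("b", "c")})
print([sorted(e) for e in chain])  # [['a', 'c']]

# Hypothetical three-argument defeat cycle: no stable extension exists.
cycle = stable_extensions({"a", "b", "c"}, {("a", "b"), ("b", "c"), ("c", "a")})
print([sorted(e) for e in cycle])  # []
```

The second call illustrates, on a toy scale, how a cyclic defeat structure can leave a selection method with no winning set at all.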

Our logic-based reasoner relies on the notion of a proper scenario to determine the consequences of a context. This notion specifies something like the necessary and sufficient conditions for a rule’s counting as admissible or good (that the rule be reasonable, triggered, not conflicted, and not excluded), and the reasoner can be thought of as selecting such rules in one single step. However, nothing stands in the way of selecting the good rules in a more stepwise fashion. That is, instead of jumping from a context to the scenario containing all and only the admissible rules, we could first select all scenarios whose members satisfy the positive conditions (reasonable and triggered) and later filter out the scenarios some of whose members violate the remaining negative conditions (not conflicted and not excluded). Let’s restate the idea, using our formal notation: starting with a context $c = \langle \mathcal {W},\mathcal {R}\rangle $, in the first step we’d select all and only those scenarios $\mathcal {S} \subseteq \mathcal {R}$ such that, for every r in $\mathcal {S}$,

- $\mathcal {W}\cup Conclusion[\mathcal {S}]\vdash Reasonable(r)\& Premise[r]$,

and, in the second step, we’d filter out all of those scenarios $\mathcal {S}$ for which it holds that there’s some r in $\mathcal {S}$ such that

- $\mathcal {W}\cup Conclusion[\mathcal {S}]\vdash \neg Conclusion[r]$ or $\mathcal {W}\cup Conclusion[\mathcal {S}]\vdash Out(\mathfrak {r})$.

After the second step, we’d have access to all and only the good rules. Applying argumentation theory to contexts can be naturally thought of as proceeding in these two steps. The scenarios selected in the first step are the arguments of the argumentation framework based on the given context. And the scenarios that remain standing after the second step are the winning arguments of the framework. The definition of an argument based on a context, then, runs thus:

Definition 10 (Arguments)

Let $c = \langle \mathcal {W},\mathcal {R}\rangle $ be a context and $\mathcal {S}$ a scenario based on it, $\mathcal {S} \subseteq \mathcal {R}$. Then $\mathcal {S}$ is an argument based on c just in case $\mathcal {S} \subseteq Triggered_{\mathcal {W},\mathcal {R}}(\mathcal {S})$ and $\mathcal {S} \subseteq Reasonable_{\mathcal {W},\mathcal {R}}(\mathcal {S})$. The set of arguments based on c is the set $Arguments(c) = \{\mathcal {S}\subseteq \mathcal {R} : \mathcal {S}\text { is an argument based on }c\}$.
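Read computationally, Definition 10 and the two-step picture that motivates it amount to something like the following Python sketch. Several things here are assumptions made for illustration: rules are encoded as (name, premise, conclusion) triples, formulas are plain strings, derivability is simplified to set membership, and the context is hypothetical rather than one of the numbered contexts of this paper. The function `survives_filter` mirrors only the informal second step described above. It does not implement the defeat relation or the selection of winning arguments.

```python
from itertools import combinations

# A rule is a (name, premise, conclusion) triple; formulas are plain strings.
# Assumption: derivability is simplified to set membership, so no real
# propositional reasoning is performed.


def facts(world, scenario):
    """W ∪ Conclusion[S] under the membership simplification."""
    return set(world) | {conclusion for _, _, conclusion in scenario}


def is_argument(world, scenario):
    """Step 1: every rule in S is triggered and reasonable relative to S."""
    known = facts(world, scenario)
    return all(
        premise in known and f"Reasonable({name})" in known
        for name, premise, _ in scenario
    )


def arguments(world, rules):
    """Enumerate Arguments(c) by brute force over all subsets of the rules."""
    ordered = sorted(rules)
    return [
        set(subset)
        for size in range(len(ordered) + 1)
        for subset in combinations(ordered, size)
        if is_argument(world, subset)
    ]


def survives_filter(world, scenario):
    """Step 2: no rule in S is conflicted or excluded relative to S."""
    known = facts(world, scenario)
    return not any(
        f"not {conclusion}" in known or f"Out({name})" in known
        for name, _, conclusion in scenario
    )


def names(scenario):
    """Sorted rule names, for readable output."""
    return sorted(name for name, _, _ in scenario)


# Hypothetical context, not one of the paper's numbered contexts.
world = {"A", "Reasonable(r1)", "Reasonable(r2)", "Reasonable(r3)", "not D"}
rules = {("r1", "A", "B"), ("r2", "B", "C"), ("r3", "A", "D")}

args = arguments(world, rules)
print([names(s) for s in args])
# [[], ['r1'], ['r3'], ['r1', 'r2'], ['r1', 'r3'], ['r1', 'r2', 'r3']]
print([names(s) for s in args if survives_filter(world, s)])
# [[], ['r1'], ['r1', 'r2']]
```

Note that the scenario containing only r2 is not an argument, since its premise B is available only once r1 is included, and that every scenario containing r3 is filtered out in the second step because the hard information contains the negation of its conclusion.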

To see the definition at work, let’s apply it to a toy case. Consider the context $c_{12} =\langle \mathcal {W},\mathcal {R}\rangle $ where $\mathcal {W}$ contains A, $Reasonable(\mathfrak {r}_1)$