1. Introduction

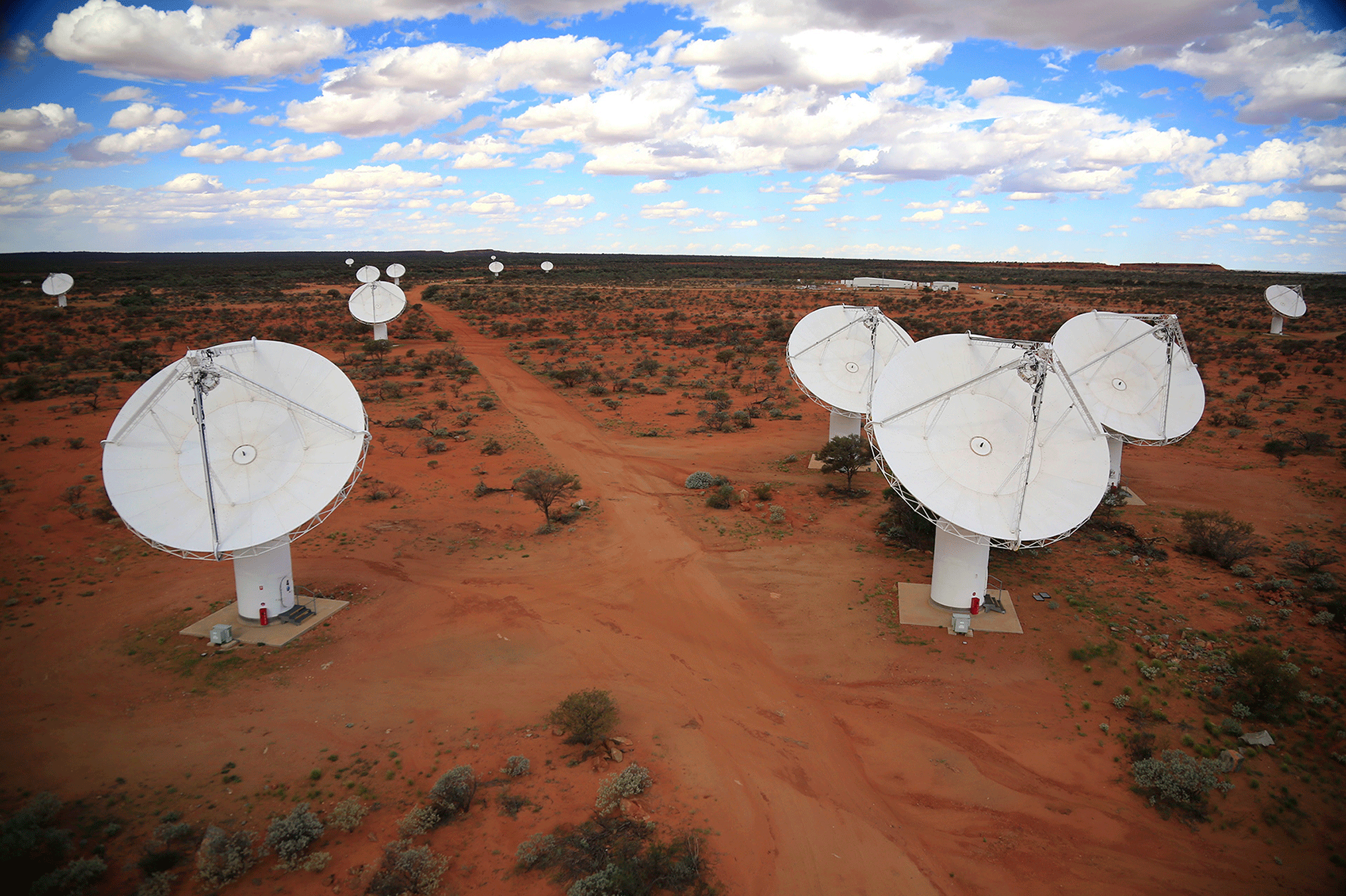

ASKAP is a Square Kilometre Array (SKA) precursor telescope located at Australia’s SKA site, the Murchison Radio-Astronomy Observatory (MRO). This radio telescope was developed using new phased array feed (PAF) technology to achieve high survey speed by observing with a wide instantaneous field of view. The design concept is described in more detail by DeBoer et al. (2009) and the science goals by Johnston et al. (2007). Figure 1 shows a photograph of ASKAP’s core taken in 2018.

After more than 10 years of design, prototyping, construction, and commissioning, ASKAP became fully operational in 2019. During construction, we developed a second generation of electronics (Hampson et al. 2012) based on lessons learnt from prototype hardware (Schinckel et al. 2011; Hotan et al. 2014; McConnell et al. 2016), along with many iterations of firmware and software. Thousands of components have been installed and connected together to form one of the most complicated and powerful radio astronomy signal processors ever developed.

The first interferometry between PAF-equipped antennas at the MRO was conducted using a prototype system known as the Boolardy Engineering Test Array (BETA; Hotan et al. 2014). This consisted of six antennas fitted with first-generation receiver systems (Schinckel et al. 2011). Beyond teaching us how to improve ASKAP’s system design, BETA contributed to astrophysical research (e.g., Serra et al. 2015b; Harvey-Smith et al. 2016; Hobbs et al. 2016; Heywood et al. 2016; Allison et al. 2017; Moss et al. 2017), including the discovery of neutral hydrogen in a young radio galaxy at redshift $z=0.44$ through an absorption line search (Allison et al. 2015). BETA also demonstrated real-time spatial radio-frequency interference (RFI) mitigation (Hellbourg, Bannister, & Hotan 2016).

A diverse early science programme conducted on a subset of 12–18 antennas fitted with the second-generation PAF systems also produced a wide range of results, showcasing the utility of a wide-field radio telescope at gigahertz frequencies. See Section 15 for more details.

In 2019, we commenced a programme of pilot surveys with the full array and an all-sky survey known as the Rapid ASKAP Continuum Survey (RACS), demonstrating the full science capability of the telescope for the first time.

Figure 1. CSIRO’s Australian Square Kilometre Array Pathfinder (ASKAP) telescope.

With up to 36 beams per antenna and 36 antennas, ASKAP produces a torrent of raw data (approximately 100 Tbit s$^{-1}$). This is correlated and averaged at the observatory, producing an output visibility data stream of up to 2.4 GB s$^{-1}$ that is sent to the Pawsey Supercomputing Centre in Perth, some 600 km south of the telescope, for image processing. This gives the research community a hint of the challenges to come in the era of the SKA.

This paper describes the technical details of ASKAP and documents key performance metrics based on commissioning data. Future papers in the series will describe the image processing software and performance metrics in more detail. We describe ASKAP’s design (Section 1.1) followed by information about the observatory site (Section 2), an overview of the system and its components (Section 3), and more detailed information about key subsystems (Sections 4–12). We report on measured performance metrics in Section 13, the site radio frequency environment in Section 14, the telescope operations model in Section 15, and future upgrade options in Section 16.

1.1. Designing a radio telescope for surveys

The primary design goal of ASKAP was to maximise survey speed, the rate at which the telescope can observe a given area of sky to a certain sensitivity limit (Johnston et al. Reference Johnston2007). Compared to existing telescopes, it was clear that high survey speed could be achieved by building an array of many small antennas to keep the primary beam size large, provide sensitivity over multiple spatial scales, and achieve good surface brightness sensitivity. Such an array would have many baselines (antenna pairs) and would, therefore, produce a very large amount of data and cause the computational cost of imaging to dominate array design.

ASKAP uses PAF receivers to achieve a 31$\,\textrm{deg}^{2}$ instantaneous field of view at $800\,\textrm{MHz}$. PAFs capture more information from each antenna and can be a more cost-effective way to increase survey speed than using a much larger number of smaller antennas with cryogenic single-pixel feeds (Chippendale, Colegate, & O’Sullivan 2007).

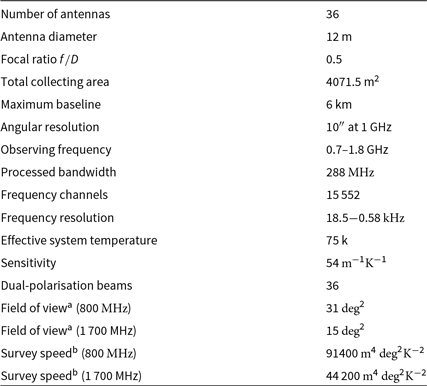

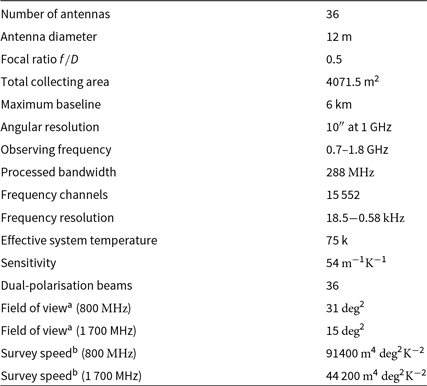

Table 1. Key parameters of the ASKAP telescope

$^{\text{a}}$Calculated via (15).

$^{\text{b}}$Calculated via (14).

The survey speed of a radio telescope array also depends on bandwidth (Bunton 2003a) and the precise nature of the survey parameters (Johnston & Grey 2006; Johnston et al. 2007), but can be broadly represented by the figure of merit (Bunton 2003a; DeBoer et al. 2009; Bunton & Hay 2010)

$$\textrm{SS} = \left(\frac{A_{\text{e}}}{T_{\text{sys}}}\right)^{2}\Omega_{\text{FoV}}, \qquad (1)$$

where $A_{\text{e}}$ is the total effective area of all antennas, $T_{\text{sys}}$ is the system equivalent noise temperature, and $\Omega_{\text{FoV}}$ is the instantaneous (processed) field of view. Effective area is related to the physical area $A$ of the antennas via $A_{\text{e}}=\eta A$, where $\eta$ is the antenna efficiency.

Table 1 summarises key indicative performance parameters of the ASKAP array, using (1) to measure survey speed and $A_{\text{e}}/T_{\text{sys}}$ to measure sensitivity. A nominal measured value of 75 K is used for the effective system temperature $T_{\text{sys}}/\eta$. With 36 dual-polarisation beams, ASKAP achieves a 31$\,\textrm{deg}^{2}$ field of view at $800\,\textrm{MHz}$, but this reduces to 15$\,\textrm{deg}^{2}$ at $1700\,\textrm{MHz}$ (McConnell 2017b). Field of view and survey speed may be increased at the high end of the band in the future by processing more than 36 beams. See Section 13 for more details on the definition and measurement of sensitivity, field of view, and the resulting survey speed.
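As a rough illustration, the sketch below evaluates the figure of merit $(A_{\text{e}}/T_{\text{sys}})^{2}\,\Omega_{\text{FoV}}$ for the two field-of-view values quoted above. It assumes $A_{\text{e}}/T_{\text{sys}} = A/(T_{\text{sys}}/\eta)$, with the nominal 75 K applying at both 800 and 1700 MHz and $A$ taken as the physical area of 36 dishes of 12 m diameter. The function name is hypothetical, and the numbers are indicative only; they are not a reproduction of Table 1.

```python
import math

def survey_speed_fom(n_ant, dish_diameter_m, tsys_over_eta_k, fov_deg2):
    """Indicative survey speed (A_e/T_sys)^2 * Omega_FoV in m^4 K^-2 deg^2."""
    total_area_m2 = n_ant * math.pi * (dish_diameter_m / 2.0) ** 2
    # A_e / T_sys = eta * A / T_sys = A / (T_sys / eta)
    ae_over_tsys = total_area_m2 / tsys_over_eta_k
    return ae_over_tsys ** 2 * fov_deg2

# Assumption: the nominal T_sys/eta = 75 K applies at both frequencies.
ss_800 = survey_speed_fom(36, 12.0, 75.0, 31.0)
ss_1700 = survey_speed_fom(36, 12.0, 75.0, 15.0)
print(f"SS(800 MHz)  ~ {ss_800:.3g} m^4 K^-2 deg^2")
print(f"SS(1700 MHz) ~ {ss_1700:.3g} m^4 K^-2 deg^2")
```

Because $A_{\text{e}}/T_{\text{sys}}$ enters squared, a given fractional reduction in $T_{\text{sys}}/\eta$ raises survey speed faster than the same fractional gain in field of view.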

ASKAP’s large field of view and increased survey speed came at the expense of sensitivity, since cooling each PAF to cryogenic temperatures was not economical on the scale required at the time of design. This resulted in a beamformed $T_{\text{sys}}/\eta$ in the range 60–80 K over most of the frequency range, or a system equivalent flux density (SEFD) of approximately 1 800 Jy for a single antenna (see Section 13).
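The quoted SEFD follows from $\textrm{SEFD} = 2k_{\text{B}}T_{\text{sys}}/A_{\text{e}} = 2k_{\text{B}}(T_{\text{sys}}/\eta)/A$. The minimal check below assumes that $A$ is the physical area of a single 12 m dish. The helper name is hypothetical.

```python
import math

K_B = 1.380649e-23   # Boltzmann constant, J/K
JY = 1e-26           # 1 jansky in W m^-2 Hz^-1

def sefd_jy(tsys_over_eta_k, dish_diameter_m=12.0):
    """Single-antenna SEFD = 2 k (T_sys/eta) / A, returned in Jy."""
    area_m2 = math.pi * (dish_diameter_m / 2.0) ** 2
    return 2.0 * K_B * tsys_over_eta_k / area_m2 / JY

for t in (60.0, 75.0, 80.0):
    print(f"T_sys/eta = {t:.0f} K -> SEFD ~ {sefd_jy(t):.0f} Jy")
```

At 75 K this gives roughly 1 830 Jy, in line with the approximate 1 800 Jy above. The 60–80 K range spans roughly 1 460–1 950 Jy.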

ASKAP’s $T_{\text{sys}}$ is dominated by low-noise amplifier (LNA) noise, in part because the LNA uses a transistor (ATF-35143, circa 2006) that is now quite old. Sensitivity could be improved significantly in the future by updating the room-temperature LNA design with new transistors (Shaw & Hay 2015; Weinreb & Shi 2019) or by scaling up the manufacturability and affordability of cryogenic PAF technology like that under development for the Parkes 64 m telescope (Dunning et al. 2016, 2019).

Pilot survey observations (see Section 15) have tested many different observing modes, providing direct experience of the sensitivity that can be achieved within practical constraints. In many cases, we approach the thermal noise limit. However, for some observing modes (particularly with short integration times), other factors such as deconvolution errors contribute to an elevated noise floor. The values given in Table 2 should provide a realistic basis for planning observations, and may improve as updates are made to the telescope. The data used to compile Table 2 were obtained using different beam arrangements and processing strategies, so some variation is expected on top of the intrinsic spectral behaviour, as shown in Figure 22.

Importantly, the measured noise can rise above the theoretical value when the data are weighted differently from natural weighting. ASKAP uses a preconditioning approach with robust weighting. The robustness parameter has an effect similar to that described by Briggs (1995), ranging from $-2.0$ (uniform weighting) to 2.0 (natural weighting). The robustness values typically used for pilot survey processing were 0.0 for continuum imaging at low frequencies, $-0.5$ for continuum imaging in the mid-band, and 0.5 for spectral imaging in the low and mid-bands. The noise increases by factors of approximately 2.5, 1.5, and 1.2 for robustness values of $-0.5$, 0.0, and 0.5, respectively.
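These factors can be related to a theoretical value. The sketch below first applies the standard radiometer equation for a naturally weighted Stokes I image, $\sigma = \textrm{SEFD}/\bigl(\eta_{\text{c}}\sqrt{n_{\text{pol}}N(N-1)\Delta\nu\,t}\bigr)$, and then multiplies by the approximate weighting penalties quoted above. It assumes an SEFD of 1 800 Jy for every beam across the band, a correlator efficiency $\eta_{\text{c}}=1$, and no deconvolution or confusion contribution. This is an illustrative calculation, not the procedure used to compile Table 2, and the names are hypothetical.

```python
import math

# Approximate noise increase relative to natural weighting, keyed by
# robustness, taken from the text (2.0 = natural weighting, factor 1 by definition).
WEIGHTING_PENALTY = {2.0: 1.0, 0.5: 1.2, 0.0: 1.5, -0.5: 2.5}

def thermal_noise_jy(sefd_jy, n_ant, bandwidth_hz, t_int_s,
                     n_pol=2, eta_corr=1.0):
    """Naturally weighted Stokes I noise for one beam (radiometer equation)."""
    n_ordered_pairs = n_ant * (n_ant - 1)   # twice the number of baselines
    return sefd_jy / (eta_corr * math.sqrt(n_pol * n_ordered_pairs
                                           * bandwidth_hz * t_int_s))

# Assumption: SEFD = 1800 Jy, 36 antennas, 288 MHz, 1 h on source.
sigma_nat = thermal_noise_jy(1800.0, 36, 288e6, 3600.0)
for robust in (2.0, 0.5, 0.0, -0.5):
    sigma = sigma_nat * WEIGHTING_PENALTY[robust]
    print(f"robust={robust:+.1f}: ~{sigma * 1e6:.0f} uJy/beam")
```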

Table 2. RMS noise measured in pilot survey phase I data. RMS noise per beam is given as the minimum and average over all observations in CASDA, then the minimum scaled to a standard ${1}\,\textrm{h}$ and $288\,\textrm{MHz}$ (for continuum, above the line) or ${18.5}\,\textrm{kHz}$ (for spectral line, below the line)
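The caption refers to scaling the minimum RMS to a standard integration time and bandwidth. Assuming purely thermal noise, $\sigma \propto 1/\sqrt{\Delta\nu\,t}$, such a rescaling can be sketched as follows. The function name and example inputs are hypothetical, and images with an elevated noise floor will not scale this cleanly.

```python
import math

def scale_rms_to_standard(rms, t_obs_s, bandwidth_hz,
                          t_ref_s=3600.0, bw_ref_hz=288e6):
    """Rescale a thermal-noise-limited RMS to a reference time and bandwidth.

    Assumes sigma is proportional to 1 / sqrt(bandwidth * time)."""
    return rms * math.sqrt((t_obs_s * bandwidth_hz) / (t_ref_s * bw_ref_hz))

# Hypothetical continuum example: 25 uJy/beam measured in 10 h over 288 MHz.
print(scale_rms_to_standard(25e-6, 10 * 3600.0, 288e6))
# Hypothetical spectral-line example: 8 h cube already at 18.5 kHz resolution.
print(scale_rms_to_standard(1.5e-3, 8 * 3600.0, 18.5e3, bw_ref_hz=18.5e3))
```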

Most of ASKAP’s observing time will be dedicated to large-scale survey projects, and the observatory will provide science-ready data products via a public archive (see Section 12). ASKAP’s deep survey projects are expected to discover millions of new radio sources in the southern sky (Johnston et al. 2007). The large instantaneous field of view also opens up new parameter space for the study of transient sources. ASKAP will excel at wide-area, high-cadence surveys for slow transients; wide-area surveys for spectral line emission and absorption; rapid, wide-field searches for gravitational-wave counterparts; and the discovery and localisation of fast transients via 1 ms cadence autocorrelations and a deep voltage buffer (up to 14 s).

2. The Murchison Radio-Astronomy Observatory

ASKAP is located on a remote site in Western Australia, within the Australian Radio Quiet Zone Western Australia, which was specifically established for the SKA and its precursors (Wilson, Storey, & Tzioumis 2013; Wilson et al. 2016). The total power in RFI signals over the ASKAP band at this site is typically more than an order of magnitude less than ASKAP’s system noise power (Chippendale & Wormnes 2013). Legislation regulates the use of radio transmitters within 260 km of the site (ACMA 2014), helping to ensure that the environment will remain as favourable as possible for radio astronomy into the future. Further protection is afforded by carefully testing and, if necessary, shielding all electronic equipment installed at the site (Beresford & Li 2013).

The observatory site (see Figure 2) is roughly 315 km north-east of Geraldton, the largest town in the region. It is operated by the Australian Commonwealth Scientific and Industrial Research Organisation (CSIRO) and hosts the Murchison Widefield Array (MWA) (Tingay et al. 2013; Wayth et al. 2018) and EDGES (Bowman et al. 2018) telescopes in addition to ASKAP. In future, the low-frequency component of the SKA will be established nearby.

Figure 2. Diagram showing the relative location and size of the Murchison Radio-Astronomy Observatory, with the final inset showing ASKAP antennas as blue dots and service tracks as white lines.

In order to prevent ASKAP’s infrastructure from creating RFI, all processing hardware is contained within a dual-shielded central control building (Abeywickrema et al. 2013) that attenuates any radio emission by 160 dB. Signal processing and computational systems are housed inside the second layer of shielding, while office and workshop space are inside the first layer. The building provides working space for maintenance crews but no on-site accommodation. The number of people on site is kept to a minimum, and crews are housed in the Boolardy station accommodation complex, roughly 40 km from the telescope.

3. System overview

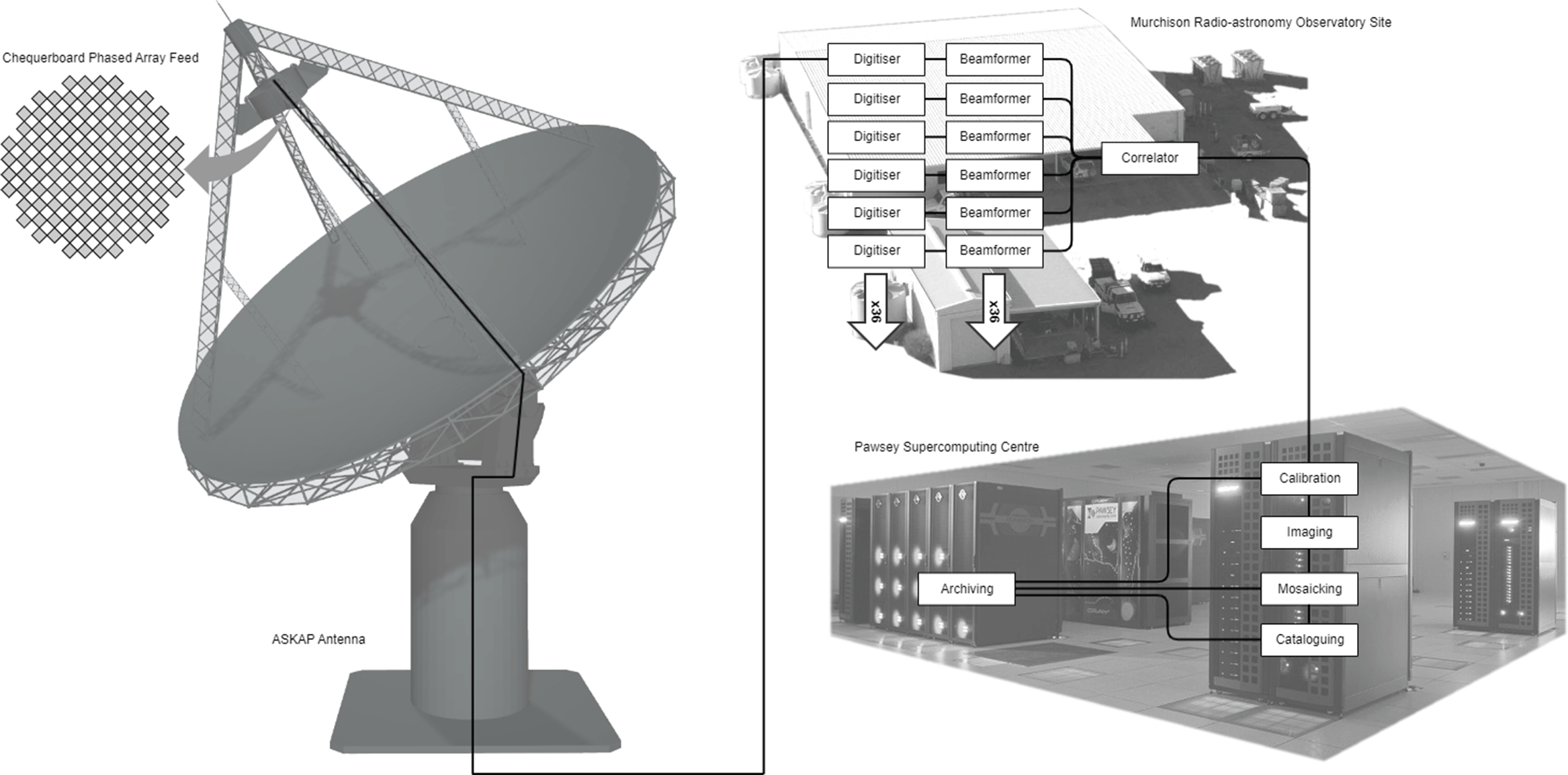

Figure 3 shows the major components of the telescope and highlights how data flows from the antennas through to the image archive. Each subsystem is described in more detail in the sections below.

Figure 3. Overview of key ASKAP subsystems and data flow.

ASKAP consists of 36 paraboloidal reflector antennas, each 12$\,\textrm{m}$ in diameter, with a chequerboard PAF at the primary focus. Within each PAF, the radio frequency signals received by 188 active feed elements (94 per polarisation) are converted into analogue optical signals. The optical signal from each element is transmitted over its own dedicated optical fibre back to the central control building. See Section 5 for more details.

Inside the control building, each PAF element signal is digitised and then passed through a coarse oversampled polyphase filter bank with 1 MHz channel spacing. This generates 640 or 768 channels depending on which of three frequency bands is being used. The digital receiver system selects 384 of these channels and sends them via digital optical communications links and a passive optical cross-connect to the beamformers.

The beamformers compute the weighted sum (see Section 9) across PAF elements for each frequency channel and antenna, forming 36 dual-polarisation beams over $336\,\textrm{MHz}$. The beamformed signals then pass into a fine filter bank that divides each 1 MHz channel into 54 channels of 18.5 kHz width, for a total of 18 144 fine channels. Bandwidth can be exchanged for higher frequency resolution by up to a factor of 32 using zoom modes. See Section 7 for more details. The beamformer also generates 1 ms power averages of the signal for each 1 MHz channel of each beam; these are processed on site to search for fast transients.
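The channel bookkeeping in this paragraph, together with the 288 MHz correlated bandwidth described below, can be checked with a few lines of arithmetic. The zoom-mode loop is illustrative only. It assumes that the number of correlated fine channels stays fixed, so a zoom factor narrows both the channel width and the total bandwidth by the same amount. The constant names are hypothetical.

```python
COARSE_CHANNEL_HZ = 1.0e6   # coarse filter bank channel spacing
FINE_PER_COARSE = 54        # fine channels per 1 MHz coarse channel
BEAMFORMED_MHZ = 336        # bandwidth over which beams are formed
CORRELATED_MHZ = 288        # maximum correlated bandwidth

fine_width_hz = COARSE_CHANNEL_HZ / FINE_PER_COARSE
n_fine_beamformed = BEAMFORMED_MHZ * FINE_PER_COARSE
n_fine_correlated = CORRELATED_MHZ * FINE_PER_COARSE

print(f"fine channel width: {fine_width_hz / 1e3:.2f} kHz")  # 18.52 kHz
print(f"fine channels formed: {n_fine_beamformed}")            # 18144
print(f"fine channels correlated: {n_fine_correlated}")        # 15552

# Assumption: zoom factor Z divides both channel width and bandwidth by Z.
for zoom in (1, 2, 4, 8, 16, 32):
    print(f"zoom x{zoom:2d}: {fine_width_hz / zoom:8.1f} Hz channels, "
          f"{CORRELATED_MHZ / zoom:6.1f} MHz total")
```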

The fine channels are sent via digital optical links and an optical cross-connect to the correlator, which computes and accumulates the visibilities for each baseline. The correlator has the capacity to process 15 552 fine channels for a maximum bandwidth of $288\,\textrm{MHz}$. These visibilities are sent directly to the Pawsey Supercomputing Centre in the city of Perth via a long-haul underground optical fibre network, with no buffering at the observatory. Three petabytes of disk buffer is available at Pawsey, where raw visibilities are formatted and written in parallel to a number of Common Astronomy Software Applications (CASA) MeasurementSet files (version 2; Kemball & Wieringa 2000).
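A back-of-the-envelope estimate shows how the visibility rate of up to 2.4 GB s$^{-1}$ quoted in the introduction arises. It also shows why the number of baselines, $N(N-1)/2$, drives data volume. The sketch makes several assumptions that this section does not specify: four polarisation products, 8-byte single-precision complex visibilities, autocorrelations included, and a correlator integration time of about 5 s. It ignores flags, weights, and metadata.

```python
N_ANT = 36
N_BEAMS = 36
N_CHAN = 15_552          # correlated fine channels (288 MHz)
N_POL_PRODUCTS = 4       # assumption: XX, XY, YX, YY
BYTES_PER_VIS = 8        # assumption: single-precision complex
T_INT_S = 5.0            # assumption: correlator integration time

n_baselines = N_ANT * (N_ANT - 1) // 2   # cross-correlations only
n_products = n_baselines + N_ANT         # plus autocorrelations

bytes_per_dump = n_products * N_BEAMS * N_CHAN * N_POL_PRODUCTS * BYTES_PER_VIS
rate_gb_s = bytes_per_dump / T_INT_S / 1e9
print(f"{n_baselines} baselines, {bytes_per_dump / 1e9:.1f} GB per dump, "
      f"~{rate_gb_s:.2f} GB/s")
```

Doubling the number of antennas would roughly quadruple this rate, which is why imaging cost dominated the array design.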

The visibility data are imaged offline in batch processing mode using a custom software package called ASKAPsoft (Guzman et al. 2019). The Pawsey Supercomputing Centre currently maintains a dedicated compute cluster called Galaxy for the purpose of imaging ASKAP science data. This system is due to be replaced with a more powerful supercomputer in 2021. See Section 11 for more details.

Data products are made available to astronomers via a public archive, described in Section 12. As shown in Figure 3, the archive can store calibrated visibility data (averaged in frequency to reduce disk space usage), mosaicked image cubes (but typically not individual beam images) and source catalogues.

3.1. Control and monitoring

ASKAP’s control system is built on the Experimental Physics and Industrial Control System (EPICS) library. Each major hardware subsystem has an associated EPICS input/output controller (IOC), which is a software application that issues commands and gathers monitoring information via network transactions. Several servers located at the MRO run these IOCs, and there is a dedicated monitoring and control network in addition to the office network and astronomical data network. The design of the control system is described in Guzman & Humphreys (2010).

The digital signal processing hardware was designed with a common command and control interface that translates instructions from the network into firmware registers on field-programmable gate arrays (FPGAs) throughout the system. A common software library was written to support the hardware layer and this library is used by the EPICS IOCs. Various other commodity electronics devices (such as drive controllers and power supplies) are also used, requiring different network protocols at the IOC level.

3.2. Power infrastructure

Due to its remote location, the MRO is not connected to a mains power grid. The site is supplied by a hybrid solar–diesel power station purpose-built with sufficient RFI shielding to protect the observatory from stray radio emissions. The power station is located approximately 6 km from the core of the telescope to further aid in RFI mitigation. The solar component of the power station can supply 1.6 MW (enough to run the entire site during the day) and is linked to a battery bank with 2.5 MWh capacity. Several redundant diesel generators are the main power source at night.

Power is transmitted via underground high-voltage cable to the central control building. Distribution to the antennas is on six independently switched underground tracks that roughly follow the surface roads and power several antennas each. A transformer at each antenna pad steps the high-voltage input down to three-phase 415 V for the equipment in the antenna pedestal.

3.3. Cooling infrastructure

ASKAP’s digital signal processing hardware in the central control building consumes 280 kW of power and requires a commensurate amount of cooling. This is achieved by circulating chilled water to each electronics cabinet in the central building, where internal heat exchange units extract heat from the air. The cooling system vents this waste heat into a geothermal exchange system. When the air temperature is low, a water-to-air heat exchanger can be put in series, extending the life of the geothermal system. The entire cooling system was designed to minimise radio frequency emissions, as described in Abeywickrema et al. (Reference Abeywickrema, Allen, Ardern, Schinckel, Leitch, Wilson and Beresford2013).

3.4. Network infrastructure

The network between Perth and the MRO consists of approximately 900 km of optical fibre using carrier-grade transmission equipment. The network was designed and is managed jointly by CSIRO and AARNet (the Australian Academic and Research Network). Using dense wavelength division multiplexing, the system can support up to 80 channels on a single fibre pair. Over this distance, optical amplification is required every 80–100 km. Only two of these channels are used for production services, each lit by a 100 Gbit s$^{-1}$ transponder split out into ${10 \times {10}\,\mathrm{Gbit\,s^{-1}}}$ services. The first runs directly to the Pawsey Supercomputing Centre in Perth, with $4 \times {10}\,\mathrm{Gbit\,s^{-1}}$ used for ASKAP data and one 10 Gbit s$^{-1}$ service carrying MWA data. The second 100 Gbit s$^{-1}$ link provides services between Perth, Geraldton, and the MRO, such as CSIRO internal network connectivity, IP telephony, and monitoring and control traffic.
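A quick headroom check indicates that the four 10 Gbit s$^{-1}$ ASKAP services run at roughly half capacity at peak. It assumes the peak visibility rate of 2.4 GB s$^{-1}$ (decimal gigabytes) from the introduction and ignores protocol overhead. The constant names are hypothetical.

```python
ASKAP_LINK_GBIT_S = 4 * 10    # 4 x 10 Gbit/s services for ASKAP data
PEAK_VIS_GBYTE_S = 2.4        # peak visibility output from the introduction

peak_gbit_s = PEAK_VIS_GBYTE_S * 8
utilisation = peak_gbit_s / ASKAP_LINK_GBIT_S
print(f"peak {peak_gbit_s:.1f} Gbit/s on {ASKAP_LINK_GBIT_S} Gbit/s "
      f"-> {utilisation:.0%} of the ASKAP allocation")
```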

There are additional transponders to support SKA development activities at Curtin University and for long-haul network performance testing undertaken by AARNet and CSIRO. Currently, there is 500 Gbit s$^{-1}$ of lit capacity between Perth and the MRO using 4 of the 80 available channels. There is also a parallel network consisting of two 1 Gbit s$^{-1}$ links directly patched to fibre pairs between Geraldton and the MRO. This provides network services at the various locations en route and acts as a backup network should the transmission system fail; however, it does not protect against a fibre cable cut.

The network at the MRO itself is a dual-core design. One core handles all the telescope data and connects the digitisers and beamformers. The second core handles the monitoring and control of the telescope as well as the general-purpose network at the MRO. Separating these systems across two cores provides better control and resilience. The data network is large: its core router has over 2 400 network ports.

The second core makes extensive use of virtual local area networks (VLANs) to partition traffic. This avoids telescope monitor and control commands being mixed with unrelated network traffic. VLANs provide different network connections at relevant locations across the MRO.

The conditions at the MRO present some challenges to network equipment; the antenna pedestals are not cooled, so industrial switches are used, providing greater reliability and enabling connectivity for the varied range of equipment that has been installed at the observatory. The flexible network design allows for services to be provided to several clients located at the observatory, helping to broaden the research taking place at the site.

4. Antenna design

ASKAP’s antennas were designed, constructed, and installed by the 54th Research Institute of China Electronics Technology Group Corporation (CETC54) to CSIRO’s specification (CSIRO 2008). The antennas are 12 m diameter unshaped prime-focus paraboloidal reflectors with a focal ratio $f/D$ of 0.5. They have a solid surface, specified up to 10 GHz, and are mounted on a novel ‘sky-mount’ that can roll the entire reflector, quadrupod, and feed structure about the optical axis (DeBoer et al. 2009). Slew rates of 1$\deg\, {\rm s}^{-1}$ in elevation and 3${\deg\,{\rm s}^{-1}}$ in azimuth and roll mean that ASKAP can slew to and track an arbitrary position on the sky within one minute of request.

Continuous rise-to-set tracking of most sources is possible above the antenna elevation limit of $15^{\circ}$. From the observatory’s latitude of $26.7^{\circ}$ S, the telescope can observe sources between $-90^{\circ}$ and $+48^{\circ}$ declination, although sources closer to the northern limit spend less time above the horizon. The PAF’s wide field of view extends this reach by another $2.5^{\circ}$.
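The northern limit follows from the transit elevation $90^{\circ}-|\delta-\phi|$, which falls to $15^{\circ}$ at $\delta = 90^{\circ}+\phi-15^{\circ} \approx +48.3^{\circ}$ for $\phi=-26.7^{\circ}$. The sketch below also estimates how many sidereal hours per day a source spends above the elevation limit, using the standard relation $\sin e = \sin\phi\sin\delta + \cos\phi\cos\delta\cos H$. It ignores refraction, the extra reach from the PAF field of view, and roll-axis limits. The function names are hypothetical.

```python
import math

LAT_DEG = -26.7       # observatory latitude
EL_LIMIT_DEG = 15.0   # antenna elevation limit

def transit_elevation(dec_deg, lat_deg=LAT_DEG):
    """Elevation of a source at upper culmination (meridian transit)."""
    return 90.0 - abs(dec_deg - lat_deg)

def hours_above_limit(dec_deg, lat_deg=LAT_DEG, el_min_deg=EL_LIMIT_DEG):
    """Sidereal hours per day that a source spends above el_min_deg."""
    phi, dec, el = (math.radians(x) for x in (lat_deg, dec_deg, el_min_deg))
    denom = math.cos(phi) * math.cos(dec)
    if abs(denom) < 1e-12:   # source at a celestial pole: constant elevation
        return 24.0 if transit_elevation(dec_deg, lat_deg) >= el_min_deg else 0.0
    cos_h0 = (math.sin(el) - math.sin(phi) * math.sin(dec)) / denom
    cos_h0 = max(-1.0, min(1.0, cos_h0))   # clip: never rises / never sets
    h0_deg = math.degrees(math.acos(cos_h0))
    return 2.0 * h0_deg / 15.0             # 15 deg of hour angle per hour

northern_limit = 90.0 + LAT_DEG - EL_LIMIT_DEG   # ~ +48.3 deg
print(f"northern limit ~ {northern_limit:+.1f} deg")
for dec in (-80, -45, 0, 30, 45):
    print(f"dec {dec:+3d}: {hours_above_limit(dec):5.2f} h above limit")
```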

4.1. Mechanical design and axes of motion

The antenna structure consists of a steel pedestal and support frame, topped with solid panels made of non-metallic honeycomb sandwiched between aluminium sheets. The feed is located at the prime focus.

Due to the small size of the reflectors and the comparatively large size of the PAF, special consideration was given to the strength and rigidity of the prime focus support structure. The robust feed legs, specified to support a 200 kg PAF receiver, block 4% of the total collecting area with the symmetric antenna design used. However, the symmetric design produces more uniform offset beams, in both total intensity and polarisation.

The use of a PAF with offset beams also makes it necessary to track parallactic angle on the sky during an observation. This is typically done with an equatorial mount, which creates extra mechanical complexity. For ASKAP, a unique hybrid approach was developed. CSIRO designed a simple azimuth–elevation mount that included a third axis of rotation for the reflector itself.

While it would be possible to track parallactic angle by continuously updating the electronic beamformer weights or rotating just the feed, the third axis exchanges a small amount of mechanical complexity for greatly reduced computational complexity at the time of calibration and imaging, since it maintains the angular relationship of the feed elements with respect to the support structure.

Unlike the azimuth axis, the antenna polarisation axis (also known as the roll axis) does not have overlapping range limits and can only be driven to slightly less than $\pm 180^{\circ}$ from its neutral position. This means it is not possible to continuously track northern sources without unwrapping at transit or beginning the track at an offset roll angle.
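The need to unwrap for northern sources can be seen from the standard parallactic-angle formula $q = \operatorname{atan2}\bigl(\sin H,\ \tan\phi\cos\delta - \sin\delta\cos H\bigr)$. The sketch below assumes that the roll axis must follow $q$ up to a constant offset, and it uses one common sign convention. It is not ASKAP’s drive-control code, and the function name is hypothetical.

```python
import math

def parallactic_angle_deg(hour_angle_deg, dec_deg, lat_deg=-26.7):
    """Parallactic angle q in degrees, in the range (-180, 180]."""
    h, dec, phi = (math.radians(x) for x in (hour_angle_deg, dec_deg, lat_deg))
    q = math.atan2(math.sin(h),
                   math.tan(phi) * math.cos(dec) - math.sin(dec) * math.cos(h))
    return math.degrees(q)

# A northern source transits north of the zenith at the MRO, so q passes
# through +/-180 deg at transit. A southern source passes smoothly through 0.
for ha in (-30.0, -1.0, 1.0, 30.0):
    print(f"H={ha:+5.1f} deg: q(dec=+20) = {parallactic_angle_deg(ha, 20.0):+7.2f}, "
          f"q(dec=-60) = {parallactic_angle_deg(ha, -60.0):+7.2f}")
```

The jump from about $-179^{\circ}$ to $+179^{\circ}$ for the $\delta=+20^{\circ}$ source is what a roll axis limited to just under $\pm180^{\circ}$ cannot follow continuously.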

The antennas were specified to operate up to 10 GHz, providing the flexibility to consider higher frequency receiver upgrades in future. A single ASKAP antenna was successfully used for several years with a single-pixel feed for VLBI observations at 8.4 GHz (Kadler et al. 2016). Preparation for a large-scale receiver system upgrade to higher frequencies should include direct measurements of atmospheric water vapour to assess the suitability of the site itself. Photogrammetry was used to measure both the location of the PAF at the focus and the reflector accuracy after installation. The rms deviation from the expected parabolic shape is typically less than 1 mm. This is consistent with measurements of the first antenna during test assembly at the factory (Feng, Li, & Li 2010).
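A surface accuracy of about 1 mm rms leaves headroom up to 10 GHz. The sketch below shows this using the standard Ruze formula, $\eta_{\text{s}} = \exp[-(4\pi\varepsilon/\lambda)^{2}]$, which assumes small, random, spatially uncorrelated surface errors. It takes $\varepsilon = 1$ mm as an upper bound, based on the typical value quoted above. The function name is hypothetical.

```python
import math

C = 299_792_458.0  # speed of light, m/s

def ruze_efficiency(rms_error_m, freq_hz):
    """Ruze surface efficiency exp(-(4 pi eps / lambda)^2)."""
    wavelength_m = C / freq_hz
    return math.exp(-(4.0 * math.pi * rms_error_m / wavelength_m) ** 2)

# Assumption: 1 mm rms surface error at all frequencies.
for f_ghz in (0.8, 1.7, 8.4, 10.0):
    eta = ruze_efficiency(1e-3, f_ghz * 1e9)
    print(f"{f_ghz:5.1f} GHz: surface efficiency ~ {eta:.3f}")
```

Under this assumption the surface efficiency is roughly 0.84 at 10 GHz. At ASKAP’s PAF frequencies the loss is well under 1%.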

4.2. Wind limits and storm monitoring

The ASKAP antennas were specified to operate under conditions similar to those of other radio telescopes. The antennas stow if the peak wind speed exceeds 45 km h$^{-1}$ during the preceding 30 min. We find that very little observing time is lost as a result.
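The stow rule above amounts to a rolling-window maximum. The class below is a hypothetical sketch of that logic only. It does not model the real observatory software, any un-stow hysteresis, or the storm-stow triggers described next, and its names are invented for illustration.

```python
from collections import deque

STOW_THRESHOLD_KMH = 45.0
WINDOW_S = 30 * 60

class WindStowMonitor:
    """Hypothetical: stow if the peak wind in the last 30 min exceeds 45 km/h."""

    def __init__(self, threshold_kmh=STOW_THRESHOLD_KMH, window_s=WINDOW_S):
        self.threshold = threshold_kmh
        self.window = window_s
        self.samples = deque()   # (timestamp_s, speed_kmh), oldest first

    def update(self, t_s, speed_kmh):
        """Add a reading (monotonic timestamps) and return True to stow."""
        self.samples.append((t_s, speed_kmh))
        # Drop readings older than the look-back window.
        while self.samples and self.samples[0][0] < t_s - self.window:
            self.samples.popleft()
        peak = max(speed for _, speed in self.samples)
        return peak > self.threshold

mon = WindStowMonitor()
print(mon.update(0, 30.0))      # False
print(mon.update(600, 50.0))    # True: gust above threshold
print(mon.update(1800, 20.0))   # True: gust still inside the 30 min window
print(mon.update(2500, 20.0))   # False: gust has aged out
```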

Strong winds at the MRO are usually associated with oncoming storm fronts. These can cause the wind speed to rise extremely rapidly, sometimes faster than the time it takes the antennas to stow. On a few occasions, this has led to drive errors during the stow procedure as wind loading exceeded the safe operating range of the motors. To prevent this, we have implemented a storm stow system that uses satellite meteorological data and lightning detectors to sense approaching storm fronts and stow the antennas before they arrive (Indermuehle et al. 2018a).

Not all nearby storms lead to high winds at the telescope, but associated lightning activity usually produces extensive radio-frequency interference. False positive stow triggers are, therefore, not a major concern as astronomy data would be impacted by a storm in any case.

4.3. Array configuration

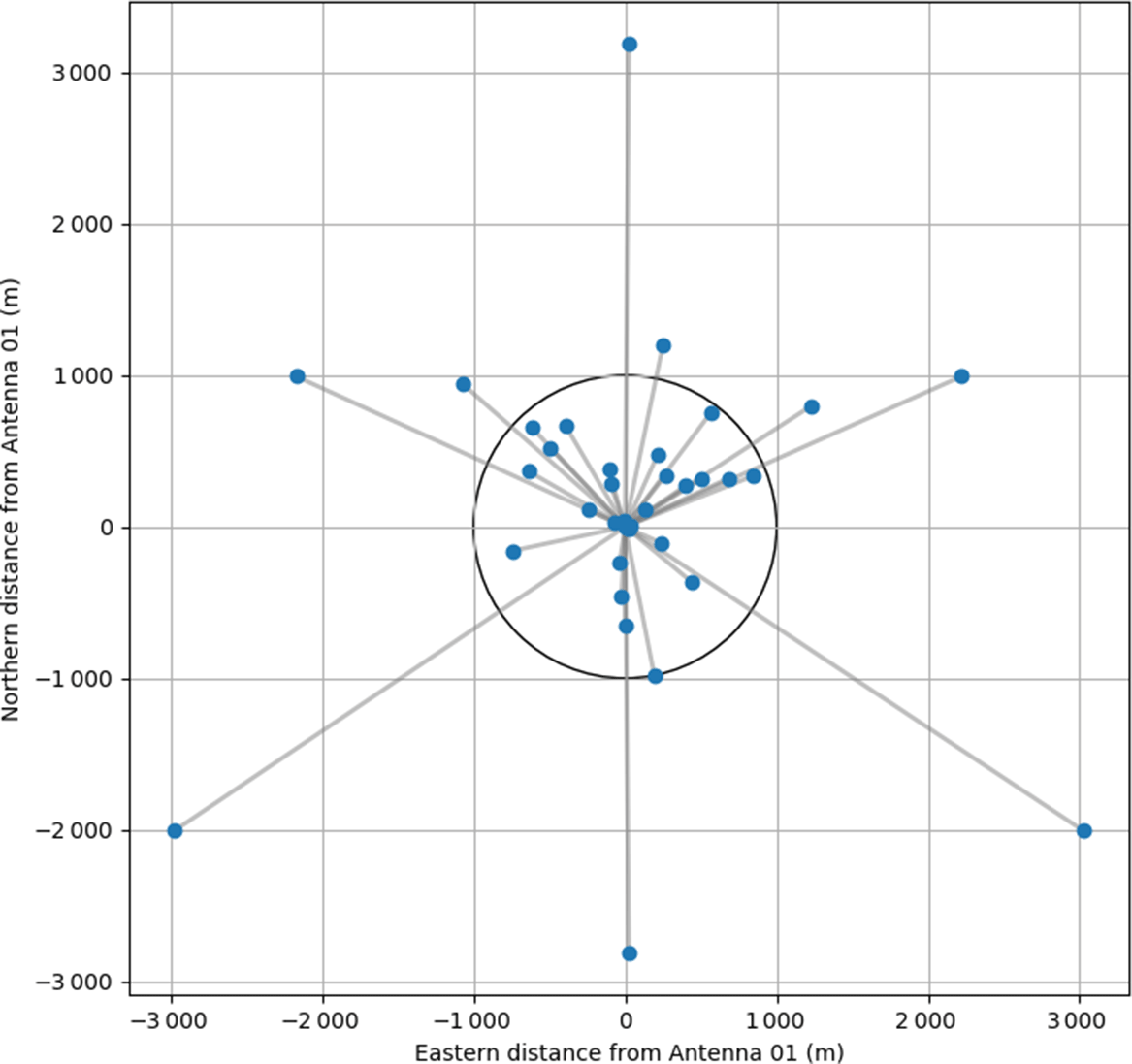

Of the 36 ASKAP antennas, 27 were placed to provide a Gaussian distribution of spatial scales with a point spread function of 30$^{\prime\prime}$ (Gupta et al. 2008). Three additional antennas were added to the core of the array to increase surface brightness sensitivity, and another six antennas were added on longer baselines (up to 6 km) in a Reuleaux triangle (see Figure 4) to provide improved resolution (approximately 10$^{\prime\prime}$) for compact sources. Antennas are plotted relative to ASKAP antenna 1, which is located at $26.6970007225^{\circ}\textrm{S}$, $116.6314242861^{\circ}\textrm{E}$ and an elevation of 361 m.

Figure 4. Location of each ASKAP antenna plotted relative to Antenna 1. A circle of radius 1 km is drawn for scale. The outer six antennas form a Reuleaux triangle and provide approximately 10$^{\prime\prime}$ resolution. The dense cluster of antennas in the core provides excellent surface brightness sensitivity.

ASKAP has excellent instantaneous (u,v) coverage (Gupta et al. 2008), making snapshot surveys and equatorial imaging possible.
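As a rough check on the quoted resolution, the diffraction limit of a single baseline is $\theta \approx \lambda / B$. The sketch below evaluates it for the longest (6 km) baselines. At 1.4 GHz it gives about 7.4$^{\prime\prime}$; the synthesised beam of the full array is typically somewhat broader than this because it depends on the whole baseline distribution and on the image weighting. The function name is illustrative.

```python
import math

C = 299_792_458.0                          # speed of light in vacuum, m/s
RAD_TO_ARCSEC = 180.0 / math.pi * 3600.0   # radians to arcseconds


def diffraction_resolution_arcsec(freq_hz, baseline_m):
    """Rough angular resolution lambda / B of one baseline, in arcseconds."""
    wavelength_m = C / freq_hz
    return wavelength_m / baseline_m * RAD_TO_ARCSEC


for f_ghz in (0.7, 1.4, 1.8):
    theta = diffraction_resolution_arcsec(f_ghz * 1e9, 6000.0)
    print(f"{f_ghz} GHz over 6 km: {theta:.1f} arcsec")
```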

5. ASKAP phased array feed

5.1. Design background

The first production prototype PAFs for ASKAP used copper coaxial cables for signal transport, with digitisation inside the antenna pedestal (Hotan et al. 2014). Six of these units were constructed for assessment in the field. Aperture array measurements (Schinckel et al. 2011; Chippendale et al. 2014), tests at the focus of a 12 m antenna located at the Parkes observatory (DeBoer et al. 2009; Chippendale et al. 2010; Sarkissian et al. 2017), and the BETA array at the MRO (Hotan et al. 2014) revealed several issues with the Mk I PAF. These included effective system temperatures in excess of 150 K (more than twice the design requirement) in the upper half of the frequency band (McConnell et al. 2016) and the need to remove the PAF fully from the focus of the antenna to perform internal maintenance.

To better assess the performance of prototype PAF designs, Chippendale et al. (2014, 2015, 2016a) developed an aperture array method of determining the noise temperature of a PAF beam using measurements of a microwave absorber, the radio sky, and broadband noise transmitted from a reference antenna. This was a valuable step in the testing process, as it helped validate electromagnetic modelling of the PAF that in turn enabled improvements in sensitivity (Shaw, Hay, & Ranga 2012). Aperture array testing was also practical because it allowed PAF prototypes to be tested rapidly on the ground instead of at the focus of an antenna.

During these early tests, research into RF over fibre showed that the PAF signals could be transported to the central site for processing rather than being digitised at the antenna. A major redesign was initiated to incorporate experience from the Mk I tests and take advantage of new technological developments. The ASKAP Design Enhancements (ADE) project developed a Mk II system with lower overall cost, improved maintainability, and effective system temperature less than 80 K across most of the band. LNAs could be changed without removing the PAF from the dish, and sensitive electronics were relocated from the antenna pedestal to the climate-controlled central building. All 36 ASKAP antennas are now fitted with Mk II PAFs and digital systems.

Figure 5. Photograph of a Mk II PAF installed on one of the ASKAP antennas at the MRO. The chequerboard surface is visible, along with the composite case and air vents for the cooling system. Power and optical cables attach via several bulkheads on the side of the case.

5.2. Planar chequerboard connected array

The ASKAP PAFs fill a region of the antenna’s focal plane with small receptors that consist of flat, square conductive patches (see Figure 5) printed on a circuit board substrate to form a planar connected-array antenna (Hay et al. 2007; Hay & O’Sullivan 2008). The patch spacing is 90 mm. A row of patches can be considered as a row of bow-tie antennas connected edge to edge, forming a linearly connected array. The feed point at the centre of each ‘bow-tie’ is differential, and both orthogonal linear polarisations are available due to the two-dimensional nature of the grid. Each element has a radiation pattern that would over-illuminate the dish surface, but the beamforming process combines these elements to create an efficient illumination pattern for a given direction on the sky.
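To put the 90 mm patch spacing in context, the short Python sketch below expresses it in free-space wavelengths across the 700–1 800 MHz range of the PAF. It is simple arithmetic (spacing × f / c); the function names are illustrative only.

```python
C = 299_792_458.0        # speed of light in vacuum, m/s
PATCH_SPACING_M = 0.090  # 90 mm patch spacing quoted in the text


def spacing_in_wavelengths(freq_hz, spacing_m=PATCH_SPACING_M):
    """Element spacing expressed in free-space wavelengths at freq_hz."""
    return spacing_m * freq_hz / C


def half_wavelength_frequency_hz(spacing_m=PATCH_SPACING_M):
    """Frequency at which the spacing equals exactly half a wavelength."""
    return C / (2.0 * spacing_m)


for f_mhz in (700, 1200, 1800):
    print(f"{f_mhz} MHz: {spacing_in_wavelengths(f_mhz * 1e6):.2f} wavelengths")
```

On this arithmetic the spacing ranges from about 0.21 wavelengths at 700 MHz to about 0.54 wavelengths at 1 800 MHz, crossing half a wavelength near 1.67 GHz.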

The precise geometry of the chequerboard surface was determined through electromagnetic simulation (Hay, O’Sullivan, & Mittra 2011). Matching the simulation results to experimental data from the Mk I system was a key step to ensure that design modifications could be assessed prior to manufacturing. Co-design of the chequerboard array and its LNAs was critical for achieving high sensitivity. An initial impedance target for the LNA and matching network design was derived by optimising for maximum beamformed sensitivity over the field of view (Hay 2010). Slight modifications were made to the shape of the surface elements on the Mk II design to improve the impedance match with the LNAs in the upper observing band (1 400–1 800 MHz), and therefore reduce the system temperature.

The surface panels are bonded to a ground plane via several centimetres of non-conductive honeycomb backing, which provides structural rigidity as well as insulation. Twin-wire feed lines are used to connect the corners of each ‘bow-tie’ element to LNAs housed underneath the ground plane. A conformal coating is applied to the outward-facing surface for protection from dust and weather.

5.3. Composite enclosure

The density of electronics inside the PAF and the sometimes harsh environmental conditions at the focus of the antenna make the design of the enclosure extremely challenging. As well as keeping the internal systems dry, free of dust, and thermally isolated from the external environment, the enclosure must provide more than 30 dB of extra RF shielding (Beresford & Bunton 2013). Experience with the BETA array showed that poorly shielded enclosures led to significant leakage of noise that could correlate between PAF elements and between nearby antennas.

The Mk II enclosure is a moulded composite structure that is bonded to the metal ground plane and shielded with carbon fibre. Four access hatches at the top are fitted with RF gaskets to reduce radio frequency leakage. The access hatches allow replacement of faulty electronics modules, even when a PAF is installed at the focus of an antenna.

5.4. Dominoes and monitoring

Each pair of LNAs is housed in a self-contained RF-shielded enclosure known as a domino. The domino design was developed for the Mk II PAF to improve shielding of the components with the highest gain, and also to improve modularity and maintainability. The dominoes are bolted directly to the ground plane to provide conductive heat transfer and can be removed individually for maintenance.

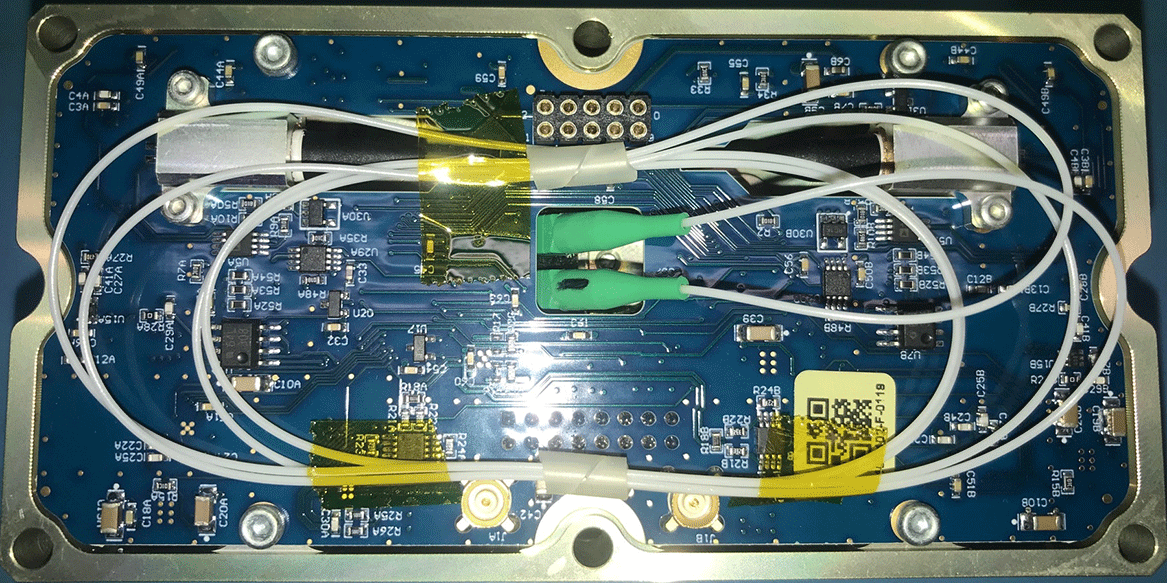

Each domino has three layers that contain differential LNAs, filters, and electro-optical converters stacked in order of distance from the ground plane. A photograph of the interior of each layer is shown in Figures 6, 7, and 8, and a transparent view of the assembled stack is shown in Figure 9. The signal path through the domino layers is shown in the upper left of Figure 10 in the boxes marked LNA, FILTER, and TRANSMITTER.

Figure 6. Photograph of the low-noise amplifier layer in the domino, referred to elsewhere as LNA.

Figure 7. Photograph of the filter layer in the domino, referred to elsewhere as FILTER.

The top of each domino provides connections for two fibre-optic cables that carry the analogue RF output, and for a copper ribbon cable that supplies DC power and supports monitoring and control within the PAF. Because the monitoring electronics are close to the LNAs, front-end monitoring has a very high risk of generating RFI. ASKAP’s PAF monitoring system was therefore designed to be low impact, with no continuous clock signal and RFI filters on all wired connections within the enclosure. The monitoring system is based on the Serial Peripheral Interface (SPI) bus and is shown in the lower right part of Figure 10. Transmission of data packets may generate RFI, but most monitoring is disabled during an observation, with short updates between scheduling blocks to maintain a record of system health. Control of each PAF is managed by a front-end controller (FEC) unit that converts instructions from an EPICS IOC into optical signals for the PAF and returns monitoring data to the software layer.

5.5. Ground plane and cooling

The Mk I PAFs employed water cooling inside their enclosures, using a heat exchange unit in the PAF and fans to circulate cool air throughout the electronics. Although this provided good cooling capacity, it required housing a water chiller at each antenna pedestal (with associated RFI issues) and pumping water up to the focus of the antenna. In addition, the possibility of condensation around the chilled water inlet or water leakage inside the PAF enclosure was a significant concern.

Figure 8. Photograph of the optical transmitter layer in the domino, referred to elsewhere as TRANSMITTER.

Figure 9. Diagram showing the assembled domino with transparent walls. The LNA card is closest to the bottom of the stack, followed by the filter card in the middle and the optical transmitter at the top.

The Mk II PAFs use heat pipes embedded in the ground plane to transport heat to the edge of the enclosure. Further heat pipes connect to thermoelectric coolers (TECs, as shown in Figure 10 and seen around the edge of the PAF in Figure 5) that transport the heat to the outside of the case, where a ducted fan blows ambient air over heat sink fins. This system has proven effective at keeping the internal temperature as much as 15$^{\circ}$C below ambient. It can be regulated via the voltage applied to each TEC. A servo loop keeps the internal temperature at a steady 25$^{\circ}$C set point (which can be changed in software if necessary).

The PAFs were designed to operate in ambient temperatures up to 45$^{\circ}$C. This threshold is rarely exceeded except during the most extreme summer conditions. The cooling system is capable of maintaining safe internal operating temperatures in all conditions experienced to date. However, it is not always possible to keep the internal temperature fixed. The optimal internal temperature of 25$^{\circ}$C is exceeded when ambient temperatures rise above 35$^{\circ}$C, which is quite common during summer. The PAF is fitted with an automatic safety shutdown that is triggered when the internal air temperature exceeds 50$^{\circ}$C.

The exact cooling capacity is slightly different for each unit. When the cooling capacity is exceeded, the internal temperature of the PAF begins to track the external ambient temperature, with a constant offset of approximately 10$^{\circ}$C. This can cause amplitude calibration errors during astronomical data processing, as the gain of the analogue electronics depends on their operating temperature. The impact of this on image quality has yet to be determined, and it may be possible to correct using time-dependent self-calibration, a temperature-based lookup table, or the on-dish calibration (ODC) system described in the next section.
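The thermal behaviour described above can be summarised by a simple piecewise model: the internal temperature is held at the 25$^{\circ}$C set point until the cooling saturates, then tracks ambient with an offset of about 10$^{\circ}$C. With these numbers the crossover falls at 35$^{\circ}$C ambient, consistent with the text. This is a hedged sketch: the offset varies from unit to unit, and the model is not intended for cold conditions, which are not described here.

```python
SET_POINT_C = 25.0        # servo set point quoted in the text
TRACKING_OFFSET_C = 10.0  # approximate offset once cooling saturates (varies per unit)
SHUTDOWN_C = 50.0         # internal air temperature that triggers safety shutdown


def internal_temperature(ambient_c, set_point=SET_POINT_C, offset=TRACKING_OFFSET_C):
    """Held at the set point until cooling saturates, then ambient minus a fixed offset."""
    return max(set_point, ambient_c - offset)


def shutdown_triggered(ambient_c):
    """True if the modelled internal temperature exceeds the shutdown threshold."""
    return internal_temperature(ambient_c) > SHUTDOWN_C


for ambient in (30.0, 35.0, 40.0, 45.0):
    print(ambient, internal_temperature(ambient), shutdown_triggered(ambient))
```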

Figure 10. The analogue signal path for a single PAF element from the feed to the ADC in the digital receiver. After the chequerboard feed elements, the signal encounters a low-noise amplifier (LNA), followed by switchable bandpass filters (BPF) and power amplifiers (PA). The transmitter module provides an option to introduce phase switching ($\phi_{\textrm{SW}}$), after which the signal is used to modulate a laser diode for transmission over single-mode optical fibre (SMF) from the antennas to the control building. After analogue-to-digital conversion (ADC), the digital signal processing (DSP) stage begins. PAF monitoring and control (M/C) is distributed to all elements via a system based on the serial peripheral interface (SPI) standard. Cooling inside the PAF is provided by a series of 8 thermoelectric cooler (TEC) modules. Direct current (DC) and extremely low voltage alternating current (ELVAC) power the various subsystems inside the PAF. The on-dish calibration system consists of a log-periodic dipole antenna (LPDA) mounted at the dish vertex and connected to an optical receive module (ROAR) fed by a noise source (CICADA) located in the front-end control (FEC) module. This can be driven synchronously using binary atomic time (BAT) events loaded via a gigabit ethernet (1Ge) interface.

5.6. On-dish calibration

For a dish with a single feed, amplitude calibration is often obtained by placing a switched noise source in the feed that adds a known amount of power to both polarisations when activated. To determine the system amplitude gain, the power induced by the noise source is synchronously demodulated and measured with respect to the background.
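A minimal Python sketch of the single-feed scheme just described, assuming an idealised total-power detector whose output is proportional to the system temperature, plus the injected calibration temperature when the source is on. The function name and the toy numbers are hypothetical; practical systems also handle switching transients and slow drifts.

```python
from statistics import mean


def demodulate_noise_cal(powers, states, t_cal_k):
    """Estimate gain and system temperature from a switched noise source.

    powers:  measured total powers (arbitrary units), one per integration
    states:  True where the noise source was on, False where it was off
    t_cal_k: equivalent noise temperature injected by the source, in K
    """
    p_on = mean(p for p, on in zip(powers, states) if on)
    p_off = mean(p for p, on in zip(powers, states) if not on)
    gain = (p_on - p_off) / t_cal_k   # power units per kelvin
    t_sys_k = p_off / gain            # background power expressed in kelvin
    return gain, t_sys_k


# Toy data: gain of 2 units/K, T_sys = 50 K, T_cal = 5 K, source toggled each integration.
powers = [100.0, 110.0] * 4
states = [False, True] * 4
print(demodulate_noise_cal(powers, states, 5.0))
```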

Individually injecting a calibration signal into each PAF element is non-trivial due to the number of active elements. Instead, a common noise signal is radiated from the dish vertex to the PAF, illuminating all elements as uniformly as possible. The calibration signal is typically set to inject around 15 K equivalent noise temperature into the PAF, but this can be adjusted within the range 100 mK–200 K as required. We expect to reduce the calibration signal level as we gain experience in its use. The calibration noise is currently on continuously during all observations, but it is largely cancelled by beamforming with maximum signal-to-noise ratio beamformer weights, resulting in less than a 1% increase in beamformed system temperature (Chippendale, Button, & Lourenço 2018).
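The toy model below illustrates why maximum signal-to-noise ratio weights can suppress a common calibration signal. It assumes real-valued vectors, uncorrelated receiver noise of equal power in each element, and a calibration contribution that enters the noise covariance as a rank-one term, $\mathbf{R} = \sigma^2\mathbf{I} + p\,\mathbf{c}\mathbf{c}^{T}$. The weights $\mathbf{w} = \mathbf{R}^{-1}\mathbf{a}$ are the standard maximum-SNR solution for a steering vector $\mathbf{a}$, computed here with the Sherman–Morrison identity. ASKAP's actual weights are derived from measured array covariance matrices, so this is only a sketch.

```python
def dot(u, v):
    return sum(x * y for x, y in zip(u, v))


def max_snr_weights(a, c, p_cal, sigma2=1.0):
    """w = R^-1 a for R = sigma2*I + p_cal*c c^T, via Sherman-Morrison."""
    scale = p_cal * dot(c, a) / (sigma2 + p_cal * dot(c, c))
    return [(ai - scale * ci) / sigma2 for ai, ci in zip(a, c)]


def noise_power(w, c, p_cal, sigma2=1.0):
    """Beamformed noise w^T R w (receiver noise plus calibration noise)."""
    return sigma2 * dot(w, w) + p_cal * dot(w, c) ** 2


def snr(w, a, c, p_cal, sigma2=1.0):
    """Output SNR for a unit-power signal arriving with steering vector a."""
    return dot(w, a) ** 2 / noise_power(w, c, p_cal, sigma2)


a = [1.0, 0.8, 0.3, 0.1, 0.0, 0.0]   # hypothetical focused beam on a few elements
c = [1.0] * 6                        # calibration signal illuminating all elements equally
w = max_snr_weights(a, c, p_cal=0.5)
print(snr(a, a, c, 0.5), snr(w, a, c, 0.5))  # conventional vs maximum-SNR weights
```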

Using a radiated calibration signal has the disadvantage that it can leak into neighbouring antennas, but the signal power is such that the correlated contribution in a nearby antenna is less than that due to leakage of amplifier noise. Such common noise contributions could be further mitigated via Walsh modulation of the phase switches incorporated into each domino module; however, this has not been found necessary.
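As a hedged illustration of Walsh modulation in general (not a description of any ASKAP firmware), the sketch below builds Walsh functions as rows of a Sylvester–Hadamard matrix, in natural rather than sequency order. Multiplying by ±1 corresponds to 0°/180° phase switching. A signal switched with one antenna's pattern averages to zero when demodulated with another antenna's pattern; the assignment of rows to antennas is hypothetical.

```python
def hadamard(n):
    """Sylvester construction of an n x n Hadamard matrix (n a power of 2).

    Each row is a +/-1 Walsh function (natural, not sequency, ordering).
    """
    h = [[1]]
    while len(h) < n:
        h = [row + row for row in h] + [row + [-x for x in row] for row in h]
    return h


def demodulate(signal, pattern):
    """Multiply by a +/-1 switching pattern and average over one period."""
    return sum(s * p for s, p in zip(signal, pattern)) / len(pattern)


# Hypothetical example: antenna A switches its leaked signal with row 1,
# antenna B demodulates with row 2, so the leaked contribution cancels.
walsh = hadamard(8)
leak_into_b = [0.1 * p for p in walsh[1]]
print(demodulate(leak_into_b, walsh[2]))
```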

Processing one or two calibration signals is a small engineering overhead compared to the 188 signals generated by each PAF. This allows the injected calibration signal to be digitised and correlated with the signals from all PAF elements, yielding an amplitude and phase calibration for each port. Corrections are then made to the beamformer weights to maintain stable beam patterns and sensitivity as described in Section 9.6.
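The per-port calibration described above can be sketched as follows, assuming complex baseband samples, a beamformer output of the form $y = \sum_i w_i x_i$, and the aim of holding each product $w_i g_i$ constant. The function names and this conjugation convention are assumptions; the correction procedure actually used is the one described in Section 9.6.

```python
def estimate_gain(element, reference):
    """Complex gain of one port relative to the injected reference.

    Correlation estimate: g = sum(x * conj(r)) / sum(|r|^2).
    """
    num = sum(x * r.conjugate() for x, r in zip(element, reference))
    den = sum(abs(r) ** 2 for r in reference)
    return num / den


def corrected_weight(weight, gain_at_weight_calc, gain_now):
    """Rescale a weight so that weight * gain is unchanged (assumes y = sum(w_i x_i))."""
    return weight * gain_at_weight_calc / gain_now


# Hypothetical example: the port gain drifts from 1 to 0.4 + 0.3j.
reference = [1 + 0j, 1j, -1 + 0j, -1j]
g_now = 0.4 + 0.3j
element = [g_now * r for r in reference]
g_est = estimate_gain(element, reference)
print(g_est, corrected_weight(1 + 0j, 1 + 0j, g_est))
```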

The on-dish calibration system consists of several modules, as shown in Figure 11. There is one Cicada module per antenna, located in the central building. During normal operation, it generates an independent noise signal that is directly modulated onto an optical carrier and sent to the antenna on single-mode fibre. At the antenna, the optical signal is detected and the resulting electrical signal is sent to a small log-periodic dipole array antenna (LPDA, $G=4\,\textrm{dBi}$) located at the vertex of the 12 m dish, which points at the PAF and is oriented so that its linear polarisation is at 45$^{\circ}$ to that of the PAF elements. The illumination of the PAF is close to uniform, but there is a path length difference of up to 21 mm from the central elements to the edge. To a first approximation, the signal injected into all PAF elements is the same.
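The 21 mm path length difference can be expressed as a delay and as a frequency-dependent phase offset. The Python sketch below does this arithmetic, assuming propagation at the vacuum speed of light. The delay is about 70 ps, which corresponds to roughly 45° of phase at 1.8 GHz; because these offsets are set by fixed geometry, they do not change with time. The function names are illustrative.

```python
C = 299_792_458.0     # speed of light in vacuum, m/s
PATH_DIFF_M = 0.021   # maximum centre-to-edge path difference quoted in the text


def path_delay_s(diff_m=PATH_DIFF_M):
    """Extra propagation delay for the longer path, in seconds."""
    return diff_m / C


def path_phase_deg(freq_hz, diff_m=PATH_DIFF_M):
    """Phase offset corresponding to the path difference at freq_hz, in degrees."""
    return 360.0 * freq_hz * diff_m / C


print(f"delay: {path_delay_s() * 1e12:.0f} ps")
for f_mhz in (700, 1800):
    print(f"{f_mhz} MHz: {path_phase_deg(f_mhz * 1e6):.1f} deg")
```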

Figure 11. Diagram showing key components and signal paths for ASKAP’s on-dish calibration (ODC) system.

The Cicada also generates an optical copy of the noise signal. Half the power is sent directly to the corresponding antenna’s digital receiver and half is sent to the antenna on the same multicore fibre cable as the PAF signals. This copy is reflected by a Faraday mirror in the antenna pedestal and returns to the Cicada, then continues into the digital receiver. In the digital receiver, both optical signals are processed in the same way as the PAF signals, becoming inputs to the array covariance matrix and a higher sensitivity calibration correlator that forms all correlation products between a nominated reference signal and the PAF signals.

The direct signal from the Cicada gives the best estimates of gain and phase variation. The signal returned from the Faraday mirror allows the optical path to the LPDA to be calibrated via a round-trip phase measurement. This allows the phase variation of the full analogue optical path to be characterised. The system also provides a way to inject a common calibration signal into the PAFs on all dishes, though this is not routinely used.

Although the main purpose of the ODC system is to measure complex gain and provide updates to the beamformer weights, it can also be used to improve system diagnostics in areas of RF continuity, RF distortion assessment, and filter-bank frequency integrity. The main contribution of the ODC at present is to stop beams from being completely destroyed by phase slopes corresponding to unpredictable delay changes that occur with each full reset of the analogue-to-digital converters (ADCs). With further research, we hope to use the ODC to stabilise PAF beam (voltage) patterns over time to better than 1% at the half-power points (Hayman et al. 2010).
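A delay change $\tau$ produces a phase slope $\phi(f) = -2\pi f \tau + \phi_0$ across frequency (the sign depends on convention). A minimal sketch of recovering $\tau$ from measured phases by unwrapping and a least-squares straight-line fit is shown below. It assumes the phase changes by less than π between adjacent channels, and it is not the algorithm used by ASKAP.

```python
import math


def unwrap(phases):
    """Remove 2*pi jumps between consecutive phase samples (radians)."""
    out = [phases[0]]
    for p in phases[1:]:
        step = p - out[-1]
        step -= 2 * math.pi * round(step / (2 * math.pi))  # wrap step into [-pi, pi]
        out.append(out[-1] + step)
    return out


def delay_from_phase_slope(freqs_hz, phases_rad):
    """Least-squares delay tau from phi(f) = -2*pi*f*tau + phi0."""
    ph = unwrap(phases_rad)
    n = len(freqs_hz)
    f_mean = sum(freqs_hz) / n
    p_mean = sum(ph) / n
    num = sum((f - f_mean) * (p - p_mean) for f, p in zip(freqs_hz, ph))
    den = sum((f - f_mean) ** 2 for f in freqs_hz)
    return -(num / den) / (2 * math.pi)
```

For example, wrapped phases generated from a 5 ns delay over 64 channels spaced by 1 MHz return an estimate of 5 ns.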

6. Analogue signal processing

6.1. Design background

In the BETA version of the ASKAP PAF (Schinckel et al. 2011; Hotan et al. 2014), all analogue signal processing and digitisation occurred in the antenna pedestal. At the time of the BETA design, photonics for radio-frequency-over-fibre (RFoF) signal transport appeared too expensive and too unproven to deploy on the scale of a 6 768-element array for radio astronomy.

The BETA system comprised two shielded cabinets packed full of equipment and occupying most of the space in each antenna pedestal, along with a chiller plant located on the concrete antenna foundation pad to keep both the cabinets and PAF cooled. The chillers required continuous maintenance and the pedestal electronics were not easily accessible due to the confined space. Containment of radiated emissions was less than satisfactory due to the single layer of shielding and the large number of filtered conductive connections to the outside world. Even so, BETA served well to verify basic PAF performance and signal processing algorithms and was a pivotal step to the more advanced Mk II design.

For the Mk II version of ASKAP described here, the price of high-linearity uncooled distributed feedback (DFB) laser diodes had fallen. Investigations of the performance of low-cost RFoF analogue signal transport using these DFB components were undertaken with fibre spans up to 6 km. It was found that, with suitable precautions, a directly modulated DFB laser solution could meet astronomy requirements (Beresford, Cheng, & Roberts 2017a). The cost of the optical transmitter was reduced to one hundred dollars, rather than several hundred dollars for cooled laser devices or thousands of dollars for the high-quality externally modulated lasers traditionally used in broadband applications.

This allowed all analogue electronics, except the PAF itself, to be moved to the central building, which eliminated self RFI problems, lowered cost, and improved accessibility and maintainability of the analogue signal processing hardware.

6.2. LNA

Each domino houses the low-noise amplifiers (LNAs) for a pair of PAF elements (Figure 6), and there are 94 dominoes in each PAF. ASKAP’s LNA design forms an active balun by connecting the twin-wire feed line of one connected-array element on the PAF surface directly into two single-ended LNAs with a passive output-side balun (Shaw et al. 2012). A passive input-side balun would have introduced too much loss. The differential input complicates the measurement of LNA noise temperature, so an innovative method was developed by Shaw et al. (2012). The measured LNA minimum noise temperature $T_{\text{min}}$ of the current design is 30 K in the middle of the frequency range (Shaw & Hay 2015).

6.3. Filter

After the LNA, the signal encounters selectable anti-aliasing filters (Beresford et al. 2017b), as shown in Figure 7. These filters define four observing bands, summarised in Table 3 and Figure 14. The fourth band was included for assessment of the low-frequency performance of the PAF and has not been used for astronomy.

Table 3. ASKAP receiver bands

6.4. Transmitter

After filtering, the signal is converted from electrical to optical for transmission back to the central building. The optical RFoF transmitter (Beresford et al. 2017c,b; Hampson et al. 2012) is shown in Figure 8. The RF signal is directly added to the laser diode DC bias current and modulates the optical power. An average power control loop ensures a constant optical level, compensating for component ageing. The low loss in the single-mode fibre allows the signal to be transported several kilometres. Each antenna connects to the central processing building via underground fibre-optic cables containing 216 cores per antenna, 188 of which carry RFoF PAF signals. Three additional fibres are used for the RFoF ODC system described in Section 5.6.

Figure 12. Distortion performance, measured as the spurious-free dynamic range (SFDR), of the RF over fibre link.

6.5. Optical link design considerations

All observing bands are sub-octave, as they lie in the second or third Nyquist zone of the analogue-to-digital converter (ADC). This mitigates the second-order harmonic distortion of the RFoF link, because all second-order products of in-band signals (harmonics, sums, and differences) then fall outside the band. Radio astronomy signal paths are designed to respond linearly to their input, and this was an important consideration for the optical link as well. For systems operating close to linearity, small departures are often characterised using the input or output intercept point (IIP or OIP) for various polynomial orders. The ASKAP RFoF link has an OIP1 of $-10\,$dBm, an OIP2 of 8.3 dBm, and an OIP3 of 6 dBm (Beresford, Cheng, & Roberts 2017a), as shown in Figure 12. These figures degrade marginally as the fibre span increases (Beresford et al. 2017c). The nominal RFI-free RFoF link output signal level is $-47\,$dBm (0 dBc); relative intensity noise (RIN) and shot noise define the output noise floor at $-72\,$dBm ($-25\,$dBc).

For narrow-band RFI equal in power to the system noise at the RFoF output (0 dBc), the second-order distortion is $-53\,$dBc and the third-order distortion is $-102\,$dBc. The strongest narrow-band RFI is due to aviation Automatic Dependent Surveillance-Broadcast (ADS-B) transmitters on aircraft flying over the observatory at high altitude. This can be as high as 29 dBc for short periods of time (minutes) if the aircraft passes overhead.

Placing half of the anti-aliasing filter after the RFoF link eliminates the severe in-band second-order response from the RFoF link, as well as out-of-band relative intensity noise and shot noise (Beresford et al. 2017c). This allows the system to handle an extra 17 dB of RFI (third-order intermodulation at $-53\,$dBc, see Figure 12). The other half of the anti-aliasing filter, preceding the RFoF link, removes out-of-band RFI that could corrupt the link. Adjusting the attenuator in the domino transmitter (see Figure 10) can reduce the signal level on the RFoF link to increase the compression headroom, but at the cost of increased additive noise. With nominal settings, the RFoF link adds 1.5 K to the receiver noise temperature. Decreasing the power on the RFoF link by 3 dB gives 3.7 dB of extra headroom for RFI but adds 1.9 K to the receiver noise temperature for the most distant antennas.
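The roughly 17 dB figure can be checked with the usual rule that an $n$th-order distortion product rises by $n$ dB for each dB of input. With levels expressed relative to the system noise (0 dBc), third-order products start at $-102\,$dBc and reach the $-53\,$dBc second-order level quoted above after an increase of $(-53 + 102)/3 \approx 16.3$ dB, close to the quoted value. The sketch assumes ideal power-law scaling well below compression; the function names are illustrative.

```python
def intermod_level_dbc(level_at_0dbc, order, rfi_increase_db):
    """Level of an n-th order product after raising the RFI by rfi_increase_db.

    Levels are in dB relative to the system noise (0 dBc); in the ideal
    power-law regime an n-th order product rises n dB per dB of input.
    """
    return level_at_0dbc + order * rfi_increase_db


def extra_headroom_db(level_at_0dbc, order, limit_dbc):
    """RFI increase (dB) at which an n-th order product reaches limit_dbc."""
    return (limit_dbc - level_at_0dbc) / order


# Third-order products start at -102 dBc and may rise to the -53 dBc level.
print(round(extra_headroom_db(-102.0, 3, -53.0), 1))
```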

6.6. Optical receiver

The conversion from optical back to electrical signals occurs in the digital receiver (see Section 7.1). Further amplification raises the electrical signals to the level needed to drive the analogue-to-digital converter (ADC) in the digital receiver ($-35\,$dBm). The ADC has a specified spurious-free dynamic range (SFDR) of approximately 61 dB. The RF signal chain has an SFDR of approximately 48 dB. Marginal degradation of SFDR occurs for longer fibre spans due to the optical loss of 0.35 dB km$^{-1}$. The longest fibre span in the array is 6 km.
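To a first approximation, the RF penalty of the fibre loss can be estimated from the 0.35 dB km$^{-1}$ figure above. The sketch assumes ideal square-law (direct) detection, in which photocurrent is proportional to optical power and RF power to the square of photocurrent, so each dB of optical loss costs about 2 dB of RF signal. For the longest 6 km span this gives about 2.1 dB of optical loss and 4.2 dB of RF loss. The function names are illustrative.

```python
FIBRE_LOSS_DB_PER_KM = 0.35   # optical loss quoted in the text


def optical_loss_db(length_km, loss_db_per_km=FIBRE_LOSS_DB_PER_KM):
    """Optical power loss over a fibre span, in dB."""
    return loss_db_per_km * length_km


def rf_loss_db(length_km, loss_db_per_km=FIBRE_LOSS_DB_PER_KM):
    """RF power loss for ideal square-law detection: twice the optical loss in dB."""
    return 2.0 * optical_loss_db(length_km, loss_db_per_km)


for km in (1.0, 3.0, 6.0):
    print(f"{km} km: optical {optical_loss_db(km):.2f} dB, RF {rf_loss_db(km):.2f} dB")
```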

7. Digital signal processing

The next stage in the ASKAP signal chain consists of a distributed, custom digital signal processing (DSP) system built on field-programmable gate array (FPGA) technology (Hampson et al. 2012). This system is responsible for the following key processing steps:

1. sampling the PAF analogue RF signals;

2. channelising the band with $1\,\textrm{MHz}$ coarse resolution;

3. selecting channels for further processing;

4. beamforming on the selected coarse channels;

5. applying time-varying coarse delays to align wavefronts with an error of at most half a sample at $1\,\textrm{MHz}$;

6. further channelising the beamformed output to the final frequency resolution of the correlator;

7. applying a time-varying phase slope across $1\,\textrm{MHz}$ channels to provide fine delay control (fraction of coarse delay step); and

8. for each beam, cross-correlating the beam voltages.
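Steps 5 and 7 split a geometric delay into a coarse part, applied in whole samples, and a fine residual, applied as a phase slope across each $1\,\textrm{MHz}$ channel. A minimal sketch follows, assuming one coarse sample per 1 µs (as implied by the half-sample error at $1\,\textrm{MHz}$) and a $-2\pi f \tau$ phase convention; the names are illustrative and are not taken from ASKAP firmware.

```python
import math

COARSE_SAMPLE_S = 1e-6   # assumed coarse sample period for 1 MHz channels


def split_delay(tau_s, coarse_s=COARSE_SAMPLE_S):
    """Split a delay into whole coarse samples plus a residual of at most half a sample."""
    n = round(tau_s / coarse_s)
    return n, tau_s - n * coarse_s


def fine_delay_phases(residual_s, offsets_hz):
    """Phases (radians) applied at frequency offsets within a channel for the residual delay."""
    return [-2 * math.pi * f * residual_s for f in offsets_hz]


n, residual = split_delay(3.3e-6)
print(n, residual, fine_delay_phases(residual, [-0.5e6, 0.0, 0.5e6]))
```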

Processing is implemented in three stages: first the digital receiver, then the beamformer, and finally the correlator. The result is the cross-correlation of beam voltages for each fine channel across all antenna pairs and polarisation products (the raw visibilities) for the specified phase centre. Each beam is correlated with itself (autocorrelation) and with each corresponding beam that points in the same direction from every other ASKAP antenna. Correlations between beams pointing in different directions are not calculated.
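Because each beam is correlated only with the beams pointing in the same direction on other antennas, the number of products grows with the number of antenna pairs, not with pairs of beams. The sketch below counts products per fine channel, assuming four polarisation products and treating the number of beams as a parameter (36 is used in the example purely for illustration).

```python
def correlation_products(n_antennas, n_beams, n_pol_products=4, include_autos=True):
    """Number of correlation products per fine channel.

    Only like-pointing beams are correlated, so each beam contributes one
    set of antenna-pair products (plus autocorrelations if requested).
    """
    pairs = n_antennas * (n_antennas - 1) // 2
    if include_autos:
        pairs += n_antennas
    return pairs * n_pol_products * n_beams


print(correlation_products(36, 1))    # one beam
print(correlation_products(36, 36))   # illustrative 36-beam case
```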

7.1. Digital receiver

ASKAP’s digital receiver (Brown et al. 2014) is implemented with 12 Dragonfly modules (see Figure 13) for each antenna, each processing 16 optical signals. The 192 signals include 188 from each PAF, 2 calibration signals, and 2 spares for future applications such as RFI mitigation.

Figure 13. The Dragonfly digital receiver module, designed by CSIRO specifically for ASKAP. There are 12 per antenna.

The ADC devices used for ASKAP are National Semiconductor ADC12D1600 parts that have 12-bit resolution with an effective number of bits (ENOB) of 9 and a full-power analogue bandwidth of 2.8 GHz. The sample clock is generated in the Dragonfly synthesiser module as described in Section 7.6.
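The quoted ENOB can be related to signal-to-noise ratio with the standard formula for an ideal quantiser driven by a full-scale sine wave, $\textrm{SNR} \approx 6.02\,N + 1.76$ dB. A short sketch, assuming that textbook relation: 9 effective bits correspond to about 55.9 dB, compared with 74.0 dB for an ideal 12-bit converter. The function names are illustrative.

```python
def ideal_snr_db(bits):
    """SNR of an ideal quantiser with a full-scale sine-wave input, in dB."""
    return 6.02 * bits + 1.76


def enob_from_sinad(sinad_db):
    """Effective number of bits corresponding to a measured SINAD, in dB."""
    return (sinad_db - 1.76) / 6.02


print(ideal_snr_db(9), ideal_snr_db(12))
```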

The ASKAP digital receiver has a bandpass sampling architecture. It directly samples the PAF signals at RF (Figure 10) without the need for analogue frequency conversion. The full 700–1 800 MHz frequency range of the PAF is covered by three selectable and overlapping sampling bands (see Table 3 in Section 6).

Each sampling band is designed to match a Nyquist zone of the ADC. Two sampling frequencies, 1 280 and 1 536 MHz, are used to sample at RF with instantaneous bandwidths of $640\,\textrm{MHz}$ and $768\,\textrm{MHz}$, respectively. Figure 14 illustrates how the sampling bands correspond to sampling frequency, how they overlap, and how much usable RF band is left after filtering. The lower and upper observing bands are located in the second and third Nyquist zones, respectively, of the ADC running at 1 280 MHz, while band 2 is located in the second Nyquist zone of the ADC running at 1 536 MHz and overlaps the adjacent bands at the other sampling frequency. The sampling frequency and corresponding FPGA firmware are configured at the start of an observation.
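The folding that makes direct RF sampling work can be sketched in a few lines of Python. The hypothetical helper below reports which Nyquist zone a real tone falls in, where it lands after sampling, and whether the spectrum is inverted: tones in even zones fold back, so ascending RF maps to descending baseband frequency. The three test frequencies are arbitrary points inside the bands described above.

```python
def nyquist_fold(f_rf_mhz, fs_mhz):
    """Return (zone, baseband frequency in MHz, inverted?) for a real RF tone.

    Zone k covers [(k - 1) * fs/2, k * fs/2). Even zones fold back, so
    ascending RF frequency maps to descending baseband frequency.
    """
    half = fs_mhz / 2
    zone = int(f_rf_mhz // half) + 1
    offset = f_rf_mhz - (zone - 1) * half
    inverted = zone % 2 == 0
    f_bb = half - offset if inverted else offset
    return zone, f_bb, inverted


for f_rf, fs in [(1000, 1280), (1000, 1536), (1500, 1280)]:
    print(f"{f_rf} MHz sampled at {fs} MHz ->", nyquist_fold(f_rf, fs))
```

Tones in the second zone at either sampling frequency come out inverted, whereas those in the third zone at 1 280 MHz keep their natural order.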

An overview of the signal path following the ADC, for a single PAF element, is shown in Figure 15. Also shown, in unshaded boxes, are a number of other ancillary signal statistics and monitoring modules. In operation, the ADC histogram is particularly useful as it shows whether the signal is Gaussian and helps to set the signal level into the ADC.
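The kind of check the ADC histogram supports can be sketched in Python. The code below histograms 12-bit ADC codes and reports the RMS level in ADC counts, the fraction of clipped samples, and the excess kurtosis, which is near zero for Gaussian noise and near -1.5 for a strong sinusoid such as narrow-band RFI. The test signals, the 30-count RMS level, and the function names are illustrative assumptions, not ASKAP operating settings.

```python
import math
import random
from collections import Counter

ADC_BITS = 12
ADC_MIN = -(1 << (ADC_BITS - 1))     # -2048
ADC_MAX = (1 << (ADC_BITS - 1)) - 1  # 2047


def quantise(v):
    """Round to the nearest ADC code and clip to the 12-bit range."""
    return max(ADC_MIN, min(ADC_MAX, round(v)))


def adc_stats(codes):
    """Histogram, RMS (ADC counts), excess kurtosis and clipped fraction."""
    n = len(codes)
    hist = Counter(codes)
    mean = sum(codes) / n
    var = sum((c - mean) ** 2 for c in codes) / n
    m4 = sum((c - mean) ** 4 for c in codes) / n
    excess_kurtosis = m4 / var ** 2 - 3   # ~0 for Gaussian noise
    clipped = (hist[ADC_MIN] + hist[ADC_MAX]) / n
    return hist, math.sqrt(var), excess_kurtosis, clipped


rng = random.Random(1)
noise = [quantise(rng.gauss(0, 30)) for _ in range(50_000)]
tone = [quantise(100 * math.sin(2 * math.pi * 0.01237 * n)) for n in range(50_000)]
_, rms_noise, k_noise, clip_noise = adc_stats(noise)
_, rms_tone, k_tone, _ = adc_stats(tone)
print(rms_noise, k_noise, clip_noise, k_tone)
```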

The sampled PAF element voltages feed directly into a polyphase filter bank (PFB) with an oversampling ratio of 32/27 (Tuthill et al. Reference Tuthill, Hampson, Bunton, Brown, Neuhold, Bateman, de Souza and Joseph2012). The PFB forms channels spaced by $1\,\textrm{MHz}$, each with a sample rate of $32/27\,\textrm{MHz} \approx 1.185\,\textrm{MHz}$. As the channels also have a noise bandwidth of $1\,\textrm{MHz}$, they will be referred to as $1\,\textrm{MHz}$ channels. The oversampling provides substantial gains in sub-band fidelity and downstream processing capabilities with only a modest increase in system complexity (Bunton Reference Bunton2003b; Tuthill et al. Reference Tuthill, Hampson, Bunton, Brown, Neuhold, Bateman, de Souza and Joseph2012). The channel-dependent frequency rotation within each output channel that is introduced by oversampling is compensated for within the PFB implementation so that the output 1 MHz channels are all correctly DC-centred before being forwarded on to the beamformer subsystem (Tuthill et al. Reference Tuthill, Hampson, Bunton, Harris, Brown, Ferris and Bateman2015).
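The oversampling arithmetic can be made concrete with exact fractions. The Python sketch below assumes, for illustration only, an FFT-based PFB whose length $N$ equals the sampling frequency divided by the $1\,\textrm{MHz}$ spacing and whose decimation factor is $D = 27N/32$. It then computes how far each channel centre sits from DC when the channel is brought to baseband by decimation alone. With critical sampling this offset would be a whole number of cycles and vanish, but at 32/27 oversampling it is a multiple of 1/32 cycle per sample, so a correction phasor that repeats every 32 output samples removes it. This illustrates the principle of the compensation cited above, not the published implementation; the function names are hypothetical.

```python
import cmath
import math
from fractions import Fraction

OVERSAMPLING = Fraction(32, 27)
SPACING_MHZ = Fraction(1)              # 1 MHz channel spacing


def pfb_geometry(fs_mhz):
    """FFT length N, decimation D and output rate for the assumed PFB structure."""
    n_fft = Fraction(fs_mhz) / SPACING_MHZ   # bins spaced by 1 MHz across fs
    decimation = n_fft / OVERSAMPLING        # D = N * 27/32
    return n_fft, decimation, Fraction(fs_mhz) / decimation


def residual_rotation(k, out_rate_mhz=OVERSAMPLING):
    """Offset from DC, in cycles per output sample and reduced to [0, 1), of the
    centre of channel k (k MHz) when it is brought down by decimation alone."""
    return (k * SPACING_MHZ / out_rate_mhz) % 1


def derotation(k, m):
    """Phasor that cancels the residual rotation at output sample m of channel k."""
    return cmath.exp(-2j * math.pi * float(residual_rotation(k)) * m)


for fs in (1280, 1536):
    n_fft, d, rate = pfb_geometry(fs)
    print(fs, n_fft, d, rate, float(rate))
```

Under these assumptions the two sampling frequencies give decimation factors of 1 080 and 1 296, and both produce the same $32/27\,\textrm{MHz}$ channel sample rate.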

Figure 14. ASKAP digital system sampling bands. For convenience, we refer to these as bands 1, 2, and 3 in left-to-right order elsewhere in this document. The labels indicate whether the frequency channel order is inverted or not, with respect to the natural ascending order.

Figure 15. The signal path for a single PAF element through the ASKAP digital receiver ADC and PFB.

The PFB produces either 768 or 640 $1\,\textrm{MHz}$ channels across the selected observing band (Figure 14), for sampling frequencies of 1 536 or 1 280 MHz, respectively. In both cases, the channel sample rate is the same and the channel centre frequency is an integer multiple of $1\,\textrm{MHz}$. The output of the PFB for each channel is the complex-valued analytic envelope of the full-band signal component within that sub-band, i.e. the single-sided spectrum in that sub-band translated to DC (Rice Reference Rice1982). Equivalently, the output for each channel can be generated by multiplying the input by $e^{2 \pi ift}$, where $f$ is the channel centre frequency, followed by low-pass filtering and decimation to the output sample rate.
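The equivalent single-channel operation can be checked numerically. In the Python sketch below, a crude boxcar average stands in for the PFB prototype filter, and the sampling rate, channel centre, and tone frequency are arbitrary illustrative values. The mixer is written as $e^{-2\pi i f t}$, the sign that moves the positive-frequency half of the spectrum to DC when tones are written with $e^{+2\pi i f t}$; the exponent in the text corresponds to the opposite sign convention. A real tone slightly above the channel centre then emerges as a complex tone at the positive offset frequency, showing that only one side of the spectrum survives.

```python
import cmath
import math


def channelise_one(x, fs, fc, decim):
    """Mix a real signal to DC at fc, low-pass filter, and decimate.

    The low-pass filter is a boxcar average over `decim` samples (a stand-in
    for the PFB prototype filter); one output sample is kept per block.
    """
    mixed = [v * cmath.exp(-2j * math.pi * fc * n / fs) for n, v in enumerate(x)]
    return [sum(mixed[s:s + decim]) / decim
            for s in range(0, len(mixed) - decim + 1, decim)]


def tone_frequency(z, rate):
    """Estimate a complex tone's frequency from its mean lag-1 phase step."""
    lag1 = sum(b * a.conjugate() for a, b in zip(z, z[1:]))
    return cmath.phase(lag1) * rate / (2 * math.pi)


fs, fc, f0, decim = 64.0, 10.0, 10.25, 16   # arbitrary units
x = [math.cos(2 * math.pi * f0 * n / fs) for n in range(4096)]
z = channelise_one(x, fs, fc, decim)
print(tone_frequency(z, fs / decim))        # approximately f0 - fc = +0.25
```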

Of the total number of output channels from the PFB, only 384 channels are selected for transport to the beamformer subsystem for further processing. These are transported as 8 data streams, each with 48 channels. However, cost constraints and computing limitations mean that only seven of these data streams are beamformed and only six are correlated. This limits the total bandwidth available for imaging (see Section 11) to $6 \times 48 \times 1\,\textrm{MHz} = 288\,\textrm{MHz}$.

The channel selection process is very flexible and permits contiguous or non-contiguous groups of channels to be selected and also multiple copies of the same channels to be selected and transported to different downstream end points. The complex task of managing channel selection is done by the Telescope Operating System (TOS) software. At present, only contiguous bands are implemented to simplify visibility data storage and imaging. In future, we plan to support two or more split bands for purposes such as avoiding satellite interference and observing widely separated spectral lines.
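The selection rules above can be expressed as a small packing step. The Python sketch below is hypothetical: it takes any list of 384 PFB channel indices (contiguous, non-contiguous, or with repeats), splits it into eight 48-channel streams, and assumes for illustration that the first seven streams are the beamformed ones and the first six the correlated ones, which reproduces the 336 MHz and 288 MHz totals quoted in the text.

```python
CHANNELS_PER_STREAM = 48
N_STREAMS = 8          # 8 x 48 = 384 channels leave the digital receiver
N_BEAMFORMED = 7       # streams processed by the installed beamformers (assumed first 7)
N_CORRELATED = 6       # streams processed by the correlator (assumed first 6)


def pack_streams(selected, n_pfb_channels):
    """Pack a list of PFB channel indices into 48-channel data streams.

    `selected` may be contiguous, non-contiguous, or contain repeats; it must
    supply exactly 384 entries, each a valid PFB output channel.
    """
    if len(selected) != CHANNELS_PER_STREAM * N_STREAMS:
        raise ValueError("expected 384 channel selections")
    if any(not 0 <= c < n_pfb_channels for c in selected):
        raise ValueError("channel index outside the PFB output")
    return [selected[i * CHANNELS_PER_STREAM:(i + 1) * CHANNELS_PER_STREAM]
            for i in range(N_STREAMS)]


# Contiguous selection (the mode currently used) from a 640-channel band.
streams = pack_streams(list(range(100, 484)), n_pfb_channels=640)
beamformed_mhz = sum(len(s) for s in streams[:N_BEAMFORMED])   # 1 MHz per channel
correlated_mhz = sum(len(s) for s in streams[:N_CORRELATED])
print(beamformed_mhz, correlated_mhz)
```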

Bit growth is permitted through the PFB to ensure that the DSP system meets dynamic range and noise floor requirements, so the coarse $1\,\textrm{MHz}$ channels are output as 16-bit real and 16-bit imaginary two’s complement (signed) integers. The resulting aggregate data rate at the output of the ASKAP digital receiver and PFB is approximately $100\,\textrm{Tbit}\,\textrm{s}^{-1}$. This is efficiently transported to the beamformer using a custom point-to-point packetised streaming protocol that has very low overheads. The physical transport medium consists of 10 368 multimode optical fibres in 864 12-fibre ribbons. The fibres within each ribbon route to different locations, and the fibre reordering is achieved using custom passive optical cross-connects.
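The quoted aggregate rate follows from figures given earlier, as the Python sketch below checks. It assumes that all 384 selected channels from all 192 ports of each of ASKAP's 36 antennas are transported at the $32/27\,\textrm{MHz}$ channel sample rate with 32 bits per complex sample, and it ignores packet overheads.

```python
from fractions import Fraction

N_ANTENNAS = 36                               # ASKAP antennas
PORTS_PER_ANTENNA = 192                       # 188 PAF + 2 calibration + 2 spare
CHANNELS_TRANSPORTED = 384                    # selected 1 MHz channels
CHANNEL_RATE_HZ = Fraction(32, 27) * 10**6    # oversampled channel sample rate
BITS_PER_SAMPLE = 32                          # 16-bit real + 16-bit imaginary
FIBRES, RIBBONS = 10_368, 864

rate_bps = (N_ANTENNAS * PORTS_PER_ANTENNA * CHANNELS_TRANSPORTED
            * CHANNEL_RATE_HZ * BITS_PER_SAMPLE)
per_fibre_bps = rate_bps / FIBRES             # assumes an even share per fibre
fibres_per_ribbon = FIBRES // RIBBONS

print(float(rate_bps) / 1e12, float(per_fibre_bps) / 1e9, fibres_per_ribbon)
```

This gives about 100.7 Tbit s⁻¹ in total, or just under 10 Gbit s⁻¹ per fibre if the load is shared evenly across the 10 368 fibres.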

7.2. Beamformer

Output from the digital receiver for a single antenna is processed by seven Redback modules (Hampson et al. Reference Hampson, Brown, Bunton, Neuhold, Chekkala, Bateman and Tuthill2014), as shown in Figure 16. Each receives $48\,\textrm{MHz}$ of bandwidth from all PAF elements for a single antenna and can generate 36 independent dual-polarisation beams. With seven modules, 336 MHz out of $384\,\textrm{MHz}$ available from the digital receiver is processed. Wiring for the eighth module is in place, but cost constraints meant it was not installed. Each Redback has six processing FPGAs, which are fully interconnected electrically. The interconnect is used to distribute data so that each FPGA has $8\,\textrm{MHz}$ of data from all 192 digitised ports (188 PAF elements + 2 calibration signals + 2 spare ports).

The signal path through the beamformer is shown in Figure 17. The beamformer engine produces up to 72 independent beams on the sky, each a linear combination of $M$ digitised port voltages ${\textbf{x}=\left[ {{x}_{1}},{{x}_{2}},...,{{x}_{M}} \right]^{T}}$. The beamforming operation is defined by

$$y(n) = \textbf{w}^H \textbf{x}(n),$$

where $y(n)$ is the beam voltage, $\textbf{w}^H$ is the conjugate transpose of the beamformer weights and $\textbf{x}(n)$ is the vector of port voltages for a single frequency channel at time sample $n$. The beamformer weights are complex valued and may be varied independently for each $1\,\textrm{MHz}$ channel of each beam. The weights are represented as 14-bit real and 14-bit imaginary two’s complement (signed) integers.
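The beamforming operation is a weighted sum over ports for each channel and time sample. The Python sketch below evaluates $y(n) = \textbf{w}^H\textbf{x}(n)$ for one sample, first with floating-point weights and then with weights quantised to 14-bit two's complement real and imaginary parts. The steering phases, the port count, and the scale factor that maps weights onto the integer range are hypothetical choices; the text does not specify how the weights are scaled.

```python
import cmath

WEIGHT_BITS = 14
W_MAX = (1 << (WEIGHT_BITS - 1)) - 1   # 8191
W_MIN = -(1 << (WEIGHT_BITS - 1))      # -8192


def quantise_weight(w, scale):
    """Scale a complex weight and round its real/imaginary parts to 14-bit integers."""
    def q(v):
        return max(W_MIN, min(W_MAX, round(v * scale)))
    return complex(q(w.real), q(w.imag))


def beamform(weights, x):
    """y = w^H x for one channel and one time sample: sum of conj(w_m) * x_m."""
    return sum(w.conjugate() * xm for w, xm in zip(weights, x))


M = 4                                          # hypothetical number of ports
phases = [0.3 * m for m in range(M)]           # hypothetical steering phases
x = [cmath.exp(1j * p) for p in phases]        # port voltages of a matched signal
w = [cmath.exp(1j * p) / M for p in phases]    # matched weights, |w| = 1/M
scale = W_MAX * M                              # maps |w| = 1/M onto the full range
wq = [quantise_weight(wm, scale) for wm in w]
y_float = beamform(w, x)
y_quant = beamform(wq, x) / scale
print(abs(y_float), abs(y_quant))
```

Because the weights are conjugated before multiplication, matched weights add the port voltages coherently, and 14-bit rounding perturbs the result only at the level of about one part in $10^4$ in this example.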

Table 4. Number of ports per polarisation per beam for various numbers of dual-polarisation beams

Figure 16. Redback module used for beamformer and correlator. Field-programmable gate arrays (FPGAs) used for digital signal processing are hidden beneath cooling fans. Banks of dynamic random access memory (DRAM) are visible beside each FPGA.

The number of ports $M$ that are weighted into each beam can be as many as all 192 ports from the digital receiver for one ASKAP antenna, but it reduces to 60 ports as the number of beams increases to the full complement of 72 single-polarisation beams. The reduction of input ports with beams is summarised in Table 4 and is required due to hardware resource limitations in the beamformer. The selection of $M$ ports from 192 can be specified arbitrarily for each $1\,\textrm{MHz}$ channel of each beam.

The system is typically configured to produce 36 dual-polarised beams. The beamformed voltages for each $1\,\textrm{MHz}$ channel pass into a second polyphase filter bank, which performs a final fine frequency channelisation. For normal operation, the frequency resolution is $18.5\,\textrm{kHz}$.
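The $18.5\,\textrm{kHz}$ resolution is consistent with a simple construction, assumed here rather than stated in the text: a 64-point fine filter bank running on the $32/27\,\textrm{MHz}$ channel samples yields a spacing of exactly $1/54\,\textrm{MHz} \approx 18.52\,\textrm{kHz}$, so 54 fine channels would span each $1\,\textrm{MHz}$ channel and the remaining 10 would fall in the oversampled band edges. The Python sketch below does that arithmetic with exact fractions.

```python
from fractions import Fraction

COARSE_RATE_MHZ = Fraction(32, 27)   # sample rate of each 1 MHz channel
FFB_LENGTH = 64                       # assumed fine-filter-bank length

fine_spacing_mhz = COARSE_RATE_MHZ / FFB_LENGTH      # spacing of the fine channels
kept_per_coarse = Fraction(1) / fine_spacing_mhz      # fine channels within 1 MHz
discarded = FFB_LENGTH - kept_per_coarse              # channels in the oversampled edges
total_fine = 288 * kept_per_coarse                    # over the 288 MHz correlated band

print(float(fine_spacing_mhz) * 1e3, kept_per_coarse, discarded, total_fine)
```

Under the same assumption, the 288 MHz correlated bandwidth would contain 15 552 fine channels.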

A key advantage of beamforming on 1 MHz channels is that the beamformer can be most efficiently implemented mid-stage as 336 separate narrow-band beamformers, one per $1\,\textrm{MHz}$ channel (Van Veen & Buckley Reference Van Veen and Buckley1988). This allows beamforming with complex weights, as opposed to the frequency-dependent weighting with FIR filters that would be needed if beamforming operated on the ADC voltages. Furthermore, since the fine filter bank (FFB) operates on beam voltages rather than raw PAF element voltages, the FFB data throughput is reduced by a factor of approximately three (188 PAF elements/72 beams ≈ 2.6). This reduction also means that although three 12-fibre ribbons are needed to input data to a Redback module, only one is needed at the output, that is, two fibres per FPGA, each carrying $4\,\textrm{MHz}$ of beamformed data.

A key data product for many beamforming algorithms is the array covariance matrix (ACM), which is defined as

$$\textbf{R} = \left\langle \left(\textbf{x}-{\upmu}_{\textbf{x}}\right)\left(\textbf{x}-{\upmu}_{\textbf{x}}\right)^{H} \right\rangle, \qquad (3)$$

where ${\textbf{x}=\left[ {{x}_{1}},{{x}_{2}},...,{{x}_{K}} \right]^{T}}$ is a vector of $K$ array element voltages assumed to be stationary discrete-time stochastic processes, ${{\upmu }_{\textbf{x}}}$ is the element-wise mean value of $\textbf{x}$, and $\langle\cdot\rangle$ denotes expectation. For the ASKAP signal model, the $x_i$ are the frequency-channelised PAF element voltages, which are complex-valued random time series whose statistics are assumed to be stationary with zero mean over the beam calibration interval. Under these conditions, the elements of $\textbf{R}$ in (3) are adequately approximated for each $1\,\textrm{MHz}$ channel by the time average