1. Introduction

1.1. Literature on Likelihood Ratio Test

The likelihood ratio test (LRT) is one of the most popular methods for comparing nested models. When two nested models satisfy certain regularity conditions, the p-value of an LRT is obtained by comparing the LRT statistic with a $\chi^2$ distribution whose degrees of freedom equal the difference in the number of free parameters between the two models. This reference distribution is suggested by the asymptotic theory of the LRT known as Wilks’ theorem (Wilks 1938).
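The classical recipe can be sketched in a few lines (this is an illustration, not code from this note). The LRT statistic is twice the difference between the maximized log-likelihoods of the two nested models, and its p-value is the upper tail of the $\chi^2$ distribution with the stated degrees of freedom. The function name `lrt_pvalue` and the log-likelihood values are hypothetical. Only `scipy.stats.chi2` is a real library API.

```python
from scipy.stats import chi2


def lrt_pvalue(loglik_null: float, loglik_alt: float, df: int) -> tuple[float, float]:
    """Return the LRT statistic and its p-value under the Wilks chi-square reference.

    loglik_null: maximized log-likelihood of the smaller (null) model.
    loglik_alt:  maximized log-likelihood of the larger (alternative) model.
    df:          difference in the number of free parameters between the models.
    """
    # The LRT statistic is twice the gain in maximized log-likelihood.
    stat = 2.0 * (loglik_alt - loglik_null)
    # Wilks' theorem: under H0 and regularity conditions, stat is approx. chi2(df).
    # chi2.sf is the upper-tail probability 1 - CDF.
    pvalue = chi2.sf(stat, df)
    return stat, pvalue


# Hypothetical log-likelihood values, for illustration only.
stat, p = lrt_pvalue(loglik_null=-1050.3, loglik_alt=-1046.1, df=5)
print(f"LRT = {stat:.2f}, p = {p:.4f}")
```

The examples below show that this last step, using `chi2.sf` with the naive degrees of freedom, is exactly what can go wrong for models with latent variables.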

However, in statistical inference for models with latent variables (e.g., factor analysis, item factor analysis for categorical data, structural equation models, random effects models, finite mixture models), the $\chi^2$ approximation suggested by Wilks’ theorem is often found not to hold. Various published studies show that the LRT is not valid under certain violations or conditions. These include small sample size, a wrong model under the alternative hypothesis, a large number of items, non-normally distributed variables, unique variances equal to zero and lack of identifiability. Such violations lead to over-factoring and over-rejection; see, e.g., Hakstian et al. (1982), Liu and Shao (2003), Hayashi et al. (2007), Asparouhov and Muthén (2009), Wu and Estabrook (2016), Deng et al. (2018), Shi et al. (2018), Yang et al. (2018) and Auerswald and Moshagen (2019). There is also a substantial literature on the effect of testing at the boundary of the parameter space. This issue arises when testing the significance of variance components in random effects models, as well as in structural equation models (SEM) with linear or nonlinear constraints (see Stram and Lee 1994, 1995; Dominicus et al. 2006; Savalei and Kolenikov 2008; Davis-Stober 2009; Wu and Neale 2013; Du and Wang 2020).

Theoretical investigations have shown that certain regularity conditions of Wilks’ theorem are not always satisfied when comparing nested models with latent variables. Takane et al. (2003) and Hayashi et al. (2007) were among those who pointed out that models requiring a choice of dimensionality (e.g., principal component analysis, latent class models, factor models) have points of irregularity in their parameter space. In some cases, these irregularities invalidate the use of the LRT. Specifically, such issues arise in factor analysis when comparing models with different numbers of factors, but not when comparing a factor model against the saturated model. The LRT for comparing a $q$-factor model against the saturated model does follow a $\chi^2$ distribution under mild conditions. Now consider nested models with different numbers of factors, where the $q$-factor model is the true one and is tested against a model with $(q+k)$ factors. In this case, the LRT statistic is likely not $\chi^2$-distributed, because one or more of the regularity conditions is violated. This is in line with the two basic assumptions required by the asymptotic theory for factor analysis and SEM: identifiability of the parameter vector and non-singularity of the information matrix (see Shapiro 1986 and references therein). More specifically, Hayashi et al. (2007) focus on exploratory factor analysis and on the problem that arises when the number of factors exceeds the true number of factors. This can lead to rank deficiency and non-identifiability of the model parameters, which correspond to violations of the two regularity conditions. These findings go back to Geweke and Singleton (1980) and Amemiya and Anderson (1990). In particular, Geweke and Singleton (1980) studied the behavior of the LRT in small samples. They concluded that, when the regularity conditions of Wilks’ theorem are not satisfied, the asymptotic theory appears to be misleading for all sample sizes they considered.
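The parameter counting behind these $\chi^2$ reference distributions can be sketched as follows. The sketch assumes the usual exploratory setup, in which a $q$-factor model for $J$ indicators has $Jq$ loadings and $J$ unique variances, minus $q(q-1)/2$ identification constraints. The function names are illustrative only. For the comparison against the saturated model, this count gives the familiar $[(J-q)^2-(J+q)]/2$ degrees of freedom. For $q$ versus $q+k$ factors, it gives the count that Wilks’ theorem would suggest, which, as discussed above, may not yield the correct reference distribution.

```python
def efa_free_params(J: int, q: int) -> int:
    """Free parameters of an exploratory q-factor model for J indicators.

    J*q loadings + J unique variances, minus q*(q-1)/2 constraints that remove
    the rotational indeterminacy (e.g., fixing a_12 = 0 when q = 2).
    """
    return J * q + J - q * (q - 1) // 2


def df_vs_saturated(J: int, q: int) -> int:
    # An unrestricted J x J covariance matrix has J(J+1)/2 free parameters.
    return J * (J + 1) // 2 - efa_free_params(J, q)


def df_nested(J: int, q: int, k: int) -> int:
    # Naive Wilks-style count for a q-factor model against a (q + k)-factor model.
    return efa_free_params(J, q + k) - efa_free_params(J, q)


print(df_vs_saturated(6, 1))  # 9
print(df_nested(6, 1, 1))     # 5
```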

1.2. Our Contributions

The contribution of this note is twofold. First, we discuss situations under which Wilks’ theorem for the LRT may fail. Through three examples, we provide a more complete picture of this issue in models with latent variables. Second, we introduce a unified asymptotic theory for the LRT. This theory covers Wilks’ theorem as a special case and provides the correct asymptotic reference distribution for the LRT when Wilks’ theorem fails. It does not seem to have received enough attention in psychometrics, even though it has long been established in statistics (Chernoff 1954; van der Vaart 2000; Drton 2009). In this note, we provide a tutorial on this theory by presenting the theorems in a more accessible way and providing illustrative examples.

1.3. Examples

To further illustrate the issue with the classical theory for the LRT, we provide three examples. These examples suggest that the $\chi^2$ approximation can perform poorly and give p-values that are either too conservative or too liberal.

Example 1

(Exploratory factor analysis) Consider a dimensionality test in exploratory factor analysis (EFA). For ease of exposition, we consider two hypothesis testing problems: (a) testing a one-factor model against a two-factor model and (b) testing a one-factor model against a saturated multivariate normal model with an unrestricted covariance matrix. Hayashi et al. (2007) considered similar examples, in which similar phenomena were observed.

1(a). Suppose that we have $J$ mean-centered continuous indicators, $\mathbf{X} = (X_1, \ldots, X_J)^\top$, which follow a $J$-variate normal distribution $N(\mathbf{0}, \boldsymbol{\Sigma})$. The one-factor model parameterizes $\boldsymbol{\Sigma}$ as

$$\boldsymbol{\Sigma} = \mathbf{a}_1\mathbf{a}_1^\top + \boldsymbol{\Delta},$$

where $\mathbf{a}_1 = (a_{11}, \ldots, a_{J1})^\top$ contains the loading parameters and $\boldsymbol{\Delta} = \mathrm{diag}(\delta_1, \ldots, \delta_J)$ is a diagonal matrix with diagonal entries $\delta_1, \ldots, \delta_J$. Here, $\boldsymbol{\Delta}$ is the covariance matrix of the unique factors. Similarly, the two-factor model parameterizes $\boldsymbol{\Sigma}$ as

$$\boldsymbol{\Sigma} = \mathbf{a}_1\mathbf{a}_1^\top + \mathbf{a}_2\mathbf{a}_2^\top + \boldsymbol{\Delta},$$

where $\mathbf{a}_2 = (a_{12}, \ldots, a_{J2})^\top$ contains the loading parameters for the second factor, and we set $a_{12} = 0$ to ensure model identifiability. The one-factor model is clearly nested within the two-factor model. Comparing these two models is equivalent to testing

$$H_0: \mathbf{a}_2 = \mathbf{0} \quad \text{versus} \quad H_1: \mathbf{a}_2 \neq \mathbf{0}.$$

If Wilks’ theorem holds, then under $H_0$ the LRT statistic should asymptotically follow a $\chi^2$ distribution with $J-1$ degrees of freedom, which is the number of free entries in $\mathbf{a}_2$.
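One way to see why a regularity condition can fail here is to note that $\boldsymbol{\Sigma}$ depends on $\mathbf{a}_2$ only through the quadratic term $\mathbf{a}_2\mathbf{a}_2^\top$. Its derivative with respect to $a_{i2}$ is $\mathbf{e}_i\mathbf{a}_2^\top + \mathbf{a}_2\mathbf{e}_i^\top$, which vanishes at $\mathbf{a}_2 = \mathbf{0}$. The sketch below assumes the parameterization of Example 1(a) and uses hypothetical loading values rather than those in Table 1. It computes the Jacobian of $\mathrm{vech}(\boldsymbol{\Sigma})$ with respect to the free entries of $\mathbf{a}_2$. This Jacobian has full column rank $J-1$ away from $H_0$ but is identically zero under $H_0$. As a result, the rows and columns of the Fisher information corresponding to $\mathbf{a}_2$ vanish there, and the information matrix is singular.

```python
import numpy as np


def jacobian_sigma_wrt_a2(a2: np.ndarray, free_idx: list[int]) -> np.ndarray:
    """Jacobian of vech(Sigma) with respect to the free second-factor loadings.

    Sigma = a1 a1^T + a2 a2^T + Delta, so only the a2 a2^T term depends on a2,
    and d(a2 a2^T)/d a2_i = e_i a2^T + a2 e_i^T (a1 and Delta drop out).
    """
    J = a2.shape[0]
    rows, cols = np.tril_indices(J)  # vech keeps the lower-triangular entries
    jac = np.empty((rows.size, len(free_idx)))
    for col, i in enumerate(free_idx):
        e_i = np.zeros(J)
        e_i[i] = 1.0
        d_sigma = np.outer(e_i, a2) + np.outer(a2, e_i)
        jac[:, col] = d_sigma[rows, cols]
    return jac


J = 6
free_idx = list(range(1, J))  # a_12 = 0 is fixed, so a_22, ..., a_J2 are free

a2_alt = np.array([0.0, 0.5, 0.4, 0.6, 0.3, 0.7])  # hypothetical values off H0
a2_null = np.zeros(J)                              # the point a_2 = 0 under H0

print(np.linalg.matrix_rank(jacobian_sigma_wrt_a2(a2_alt, free_idx)))   # 5
print(np.linalg.matrix_rank(jacobian_sigma_wrt_a2(a2_null, free_idx)))  # 0
```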

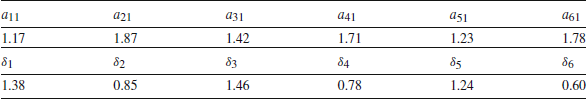

Table 1. Values of the true parameters for the simulations in Example 1.

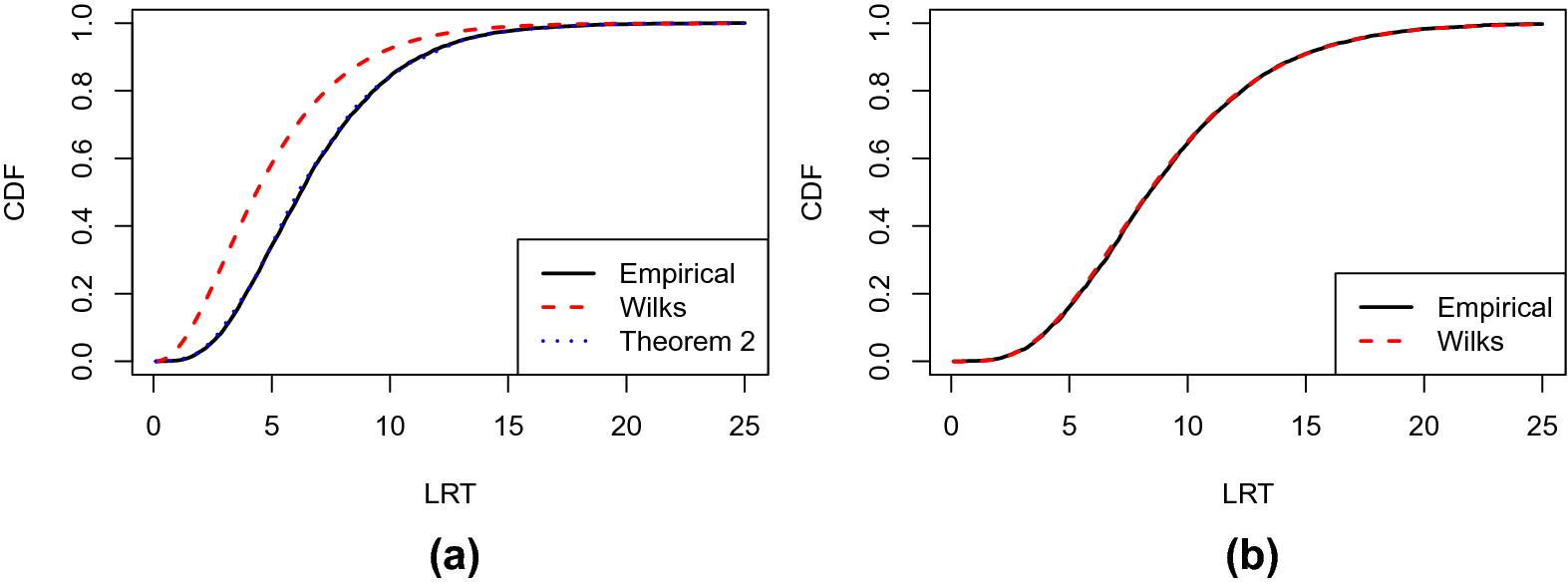

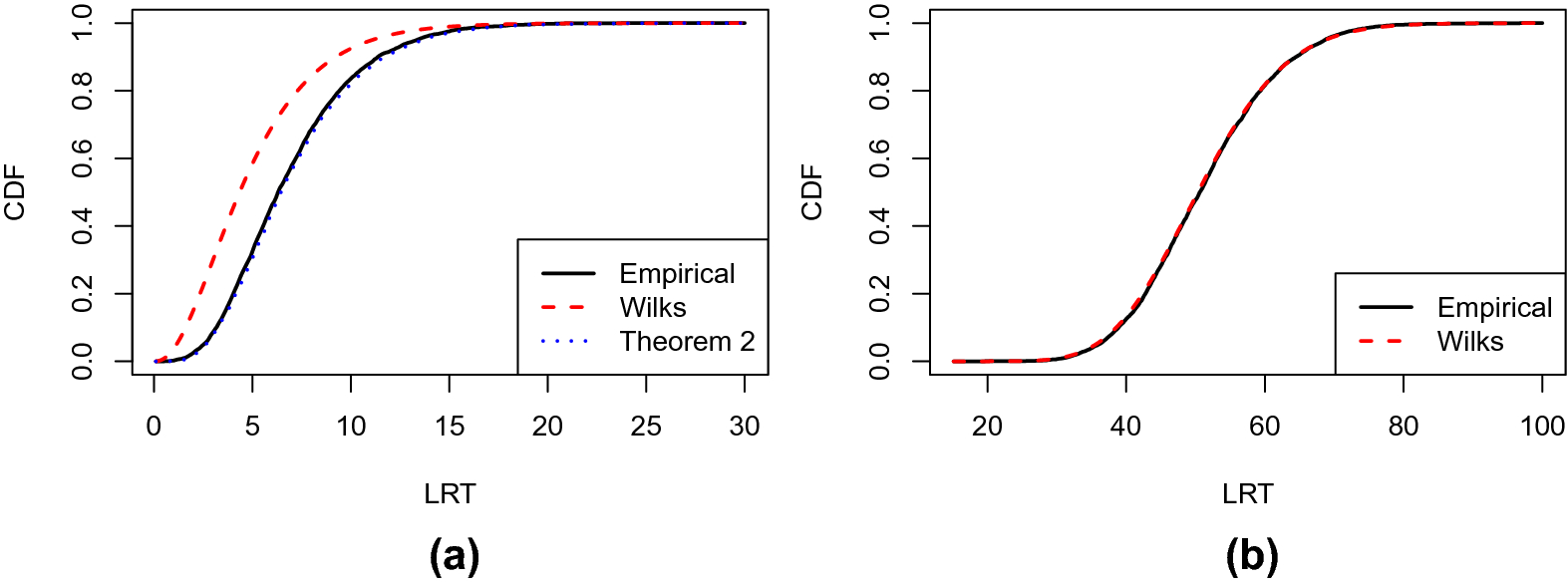

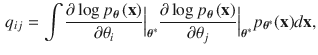

Figure 1.

(a) Results of Example 1(a). The black solid line shows the empirical CDF of the LRT statistic, based on 5000 independent simulations. The red dashed line shows the CDF of the $\chi^2$ distribution with 5 degrees of freedom, as suggested by Wilks’ theorem. The blue dotted line shows the CDF of the reference distribution suggested by Theorem 2. (b) Results of Example 1(b). The black solid line shows the empirical CDF of the LRT statistic, and the red dashed line shows the CDF of the $\chi^2$ distribution with 9 degrees of freedom, as suggested by Wilks’ theorem.

We now provide a simulated example. Data are generated from a one-factor model with $J = 6$ indicators and $N = 5000$ observations. The true parameter values are given in Table 1. We generate 5000 independent datasets. For each dataset, we compute the LRT statistic for comparing the one- and two-factor models. Results are presented in panel (a) of Fig. 1. The black solid line shows the empirical cumulative distribution function (CDF) of the LRT statistic, and the red dashed line shows the CDF of the $\chi^2$ distribution suggested by Wilks’ theorem. A substantial discrepancy can be observed between the two CDFs. Specifically, the $\chi^2$ CDF tends to lie above the empirical CDF. In other words, the LRT statistic tends to be stochastically larger than the $\chi^2$ reference distribution, so p-values based on this $\chi^2$ distribution tend to be too small (liberal). In fact, if we reject $H_0$ at the 5% significance level based on these p-values, the actual type I error is 10.8%. These results suggest that Wilks’ theorem fails in this example.
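The empirical type I error reported above is the fraction of null-generated LRT statistics whose $\chi^2$-based p-value falls below the nominal level. A minimal sketch of that computation follows. The helper name is hypothetical. The inputs are synthetic draws (an exact $\chi^2_5$ sample and an artificially inflated copy), not the simulated LRT statistics behind Fig. 1, so the printed rates are not meant to reproduce the 10.8% figure.

```python
import numpy as np
from scipy.stats import chi2


def empirical_type1_error(lrt_stats, df: int, alpha: float = 0.05) -> float:
    """Fraction of null-generated LRT statistics rejected by the chi-square(df) test."""
    pvalues = chi2.sf(np.asarray(lrt_stats, dtype=float), df)
    return float(np.mean(pvalues < alpha))


rng = np.random.default_rng(0)
# Sanity check: if the statistics really are chi2(5), the rate is close to 0.05.
calibrated = chi2.rvs(df=5, size=200_000, random_state=rng)
# Hypothetical miscalibration: statistics inflated by 30% are rejected far more often.
inflated = 1.3 * calibrated

print(empirical_type1_error(calibrated, df=5))
print(empirical_type1_error(inflated, df=5))
```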

1(b). When testing the one-factor model against the saturated model, the LRT statistic is asymptotically $\chi^2$-distributed if Wilks’ theorem holds. The $\chi^2$ distribution has $J(J+1)/2 - 2J$ degrees of freedom. Here, $J(J+1)/2$ is the number of free parameters in an unrestricted covariance matrix $\boldsymbol{\Sigma}$ and $2J$ is the number of parameters in the one-factor model; with $J = 6$, this gives $21 - 12 = 9$. In panel (b) of Fig. 1, the black solid line shows the empirical CDF of the LRT statistic based on 5000 independent simulations, and the red dashed line shows the CDF of the $\chi^2$ distribution with 9 degrees of freedom. The two curves almost overlap, suggesting that Wilks’ theorem holds here.
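To reproduce the flavor of Example 1(b), one can fit a one-factor model by normal-theory maximum likelihood and compute the LRT statistic against the saturated model as $N$ times the minimized ML discrepancy. The sketch below does this, but it is not the authors’ code. The generating values are hypothetical (not Table 1), a single replication is shown with a smaller $N$, and the optimizer (`scipy.optimize.minimize` with BFGS on log unique variances) is one convenient choice among many. Repeating the fit over many simulated datasets would give an empirical CDF comparable to panel (b) of Fig. 1.

```python
import numpy as np
from scipy.optimize import minimize
from scipy.stats import chi2


def ml_discrepancy(theta, S):
    """Normal-theory ML discrepancy F(Sigma, S) for a one-factor model.

    theta = (a_1, ..., a_J, log delta_1, ..., log delta_J); the log scale keeps
    the unique variances positive, so Sigma is always positive definite.
    """
    J = S.shape[0]
    a, delta = theta[:J], np.exp(theta[J:])
    sigma = np.outer(a, a) + np.diag(delta)
    _, logdet_sigma = np.linalg.slogdet(sigma)
    _, logdet_s = np.linalg.slogdet(S)
    return logdet_sigma + np.trace(np.linalg.solve(sigma, S)) - logdet_s - J


def lrt_one_factor_vs_saturated(X):
    N, J = X.shape
    S = X.T @ X / N  # ML covariance estimate when the mean is known to be zero
    # Hypothetical starting values: loadings 0.5, unique variances half of diag(S).
    start = np.concatenate([np.full(J, 0.5), np.log(np.diag(S) / 2)])
    fit = minimize(ml_discrepancy, start, args=(S,), method="BFGS")
    stat = N * fit.fun  # at the MLE: 2 * (loglik_saturated - loglik_one_factor)
    df = J * (J + 1) // 2 - 2 * J
    return stat, df, chi2.sf(stat, df)


rng = np.random.default_rng(1)
J, N = 6, 2000
a_true = np.array([0.8, 0.7, 0.6, 0.7, 0.8, 0.6])  # hypothetical, not Table 1
delta_true = np.full(J, 0.5)                        # hypothetical unique variances
factor = rng.standard_normal((N, 1))
X = factor * a_true + rng.standard_normal((N, J)) * np.sqrt(delta_true)

stat, df, p = lrt_one_factor_vs_saturated(X)
print(f"LRT = {stat:.2f}, df = {df}, p = {p:.3f}")
```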

Example 2

(Exploratory item factor analysis) We further give an example of exploratory item factor analysis (IFA) for binary data, in which phenomena similar to those in Example 1 are observed. Again, we consider two hypothesis testing problems: (a) testing a one-factor model against a two-factor model and (b) testing a one-factor model against a saturated multinomial model for a binary random vector.

2(a). Suppose that we have a $J$-dimensional response vector, $\mathbf{X} = (X_1, \ldots, X_J)^\top$, where all the entries are binary-valued, i.e., $X_j \in \{0, 1\}$. It follows a categorical distribution, satisfying

$$P(\mathbf{X} = \mathbf{x}) = \pi_{\mathbf{x}}, \quad \mathbf{x} \in \{0, 1\}^J,$$

where

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$$\pi _{\mathbf {x}}\ge 0$$\end{document}

![]() and

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$$\sum _{{\mathbf {x}}\in \{0, 1\}^J} \pi _{{\mathbf {x}}} = 1$$\end{document}

and

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$$\sum _{{\mathbf {x}}\in \{0, 1\}^J} \pi _{{\mathbf {x}}} = 1$$\end{document}

![]() .

.
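As a small illustration of the saturated multinomial model used in problem (b), the following Python sketch computes the maximum likelihood estimate of $\pi_{\mathbf{x}}$, which under this model is simply the sample proportion of each response pattern. The $2^J$ proportions sum to one, which leaves $2^J - 1$ free parameters. The helper name `saturated_mle` and the toy data are hypothetical.

```python
from collections import Counter
from itertools import product


def saturated_mle(responses, J):
    """MLE of pi_x for every x in {0,1}^J under the saturated multinomial model.

    The MLE is the empirical proportion of respondents with pattern x;
    patterns that never occur get probability zero.
    """
    N = len(responses)
    counts = Counter(tuple(r) for r in responses)
    return {x: counts.get(x, 0) / N for x in product((0, 1), repeat=J)}


# Hypothetical toy data: N = 4 respondents, J = 3 binary items.
data = [(1, 0, 1), (1, 0, 1), (0, 0, 0), (1, 1, 1)]
pi_hat = saturated_mle(data, J=3)
print(len(pi_hat), pi_hat[(1, 0, 1)])  # 8 0.5
```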

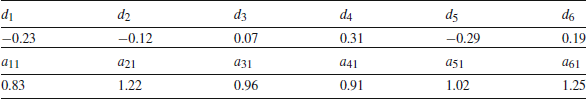

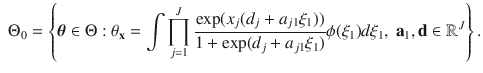

The exploratory two-factor IFA model parameterizes $\pi_{\mathbf{x}}$ by

$$\pi_{\mathbf{x}} = \int_{\mathbb{R}} \int_{\mathbb{R}} \prod_{j=1}^J \frac{\exp\{x_j (d_j + a_{j1} \xi_1 + a_{j2} \xi_2)\}}{1 + \exp(d_j + a_{j1} \xi_1 + a_{j2} \xi_2)} \, \phi(\xi_1) \phi(\xi_2) \, d\xi_1 \, d\xi_2,$$

where $\phi(\cdot)$ is the probability density function of the standard normal distribution. This model is also known as a multidimensional two-parameter logistic (M2PL) model (Reckase 2009). Here, the $a_{jk}$s are known as the discrimination parameters and the $d_j$s as the easiness parameters. We denote $\mathbf{a}_1 = (a_{11}, \ldots, a_{J1})^\top$ and $\mathbf{a}_2 = (a_{12}, \ldots, a_{J2})^\top$. For model identifiability, we set $a_{12} = 0$. When $a_{j2} = 0$ for $j = 2, \ldots, J$, the two-factor model degenerates to the one-factor model. Similar to Example 1(a), if Wilks’ theorem holds, the LRT statistic should asymptotically follow a $\chi^2$ distribution with $J - 1$ degrees of freedom, the difference between the $3J - 1$ free parameters of the two-factor model and the $2J$ free parameters of the one-factor model.
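To make the M2PL parameterization concrete, the following Python sketch approximates $\pi_{\mathbf{x}}$ for all $2^J$ patterns with a tensor-product Gauss-Hermite rule (NumPy's `hermegauss`, whose weight function is $e^{-z^2/2}$), and checks numerically that setting $\mathbf{a}_2 = \mathbf{0}$ reproduces the one-factor model. The function names, the number of quadrature points, and the parameter values are illustrative assumptions, not the settings used in this paper.

```python
import itertools

import numpy as np
from numpy.polynomial.hermite_e import hermegauss


def _pattern_probs(eta, w):
    """Integrate P(X = x | xi) over quadrature nodes for every x in {0,1}^J.

    eta: (Q, J) linear predictors d_j + sum_k a_jk * xi_k at each node.
    w:   (Q,) quadrature weights for the N(0, 1) factor distribution.
    """
    J = eta.shape[1]
    p1 = 1.0 / (1.0 + np.exp(-eta))                            # P(X_j = 1 | xi)
    patterns = np.array(list(itertools.product((0, 1), repeat=J)))
    # exp{x_j eta_j} / (1 + exp{eta_j}) is p1 if x_j = 1 and 1 - p1 if x_j = 0
    cond = np.where(patterns[:, None, :] == 1, p1[None], 1.0 - p1[None])
    return patterns, np.prod(cond, axis=2) @ w                 # (2^J,) probabilities


def _std_normal_rule(n_quad):
    nodes, weights = hermegauss(n_quad)                        # weight exp(-z^2 / 2)
    return nodes, weights / np.sqrt(2.0 * np.pi)               # weights now sum to 1


def two_factor_probs(a, d, n_quad=21):
    """pi_x under the exploratory two-factor M2PL; a is (J, 2), d is (J,)."""
    nodes, weights = _std_normal_rule(n_quad)
    xi = np.array(list(itertools.product(nodes, nodes)))       # (Q^2, 2) grid of (xi_1, xi_2)
    w = np.outer(weights, weights).ravel()                     # matching product weights
    eta = np.asarray(d)[None, :] + xi @ np.asarray(a).T
    return _pattern_probs(eta, w)


def one_factor_probs(a1, d, n_quad=21):
    """pi_x under the one-factor model; a1 is (J,), d is (J,)."""
    nodes, weights = _std_normal_rule(n_quad)
    eta = np.asarray(d)[None, :] + np.outer(nodes, a1)
    return _pattern_probs(eta, weights)


# Hypothetical parameters for J = 4 items; a_12 = 0 for identifiability.
a1 = np.array([1.0, 0.8, 1.2, 0.5])
a2 = np.array([0.0, 0.7, -0.4, 0.9])
d = np.array([0.2, -0.5, 0.0, 0.3])

_, pi_two = two_factor_probs(np.column_stack([a1, a2]), d)
_, pi_deg = two_factor_probs(np.column_stack([a1, np.zeros(4)]), d)
_, pi_one = one_factor_probs(a1, d)
print(pi_two.sum())                  # close to 1
print(np.allclose(pi_deg, pi_one))   # True: a_2 = 0 gives the one-factor model
```

When $\mathbf{a}_2 = \mathbf{0}$ the integrand no longer depends on $\xi_2$, so the inner quadrature sum equals one and the two computations agree up to rounding, mirroring the degeneracy described in the text.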

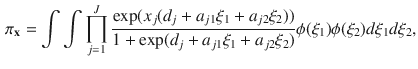

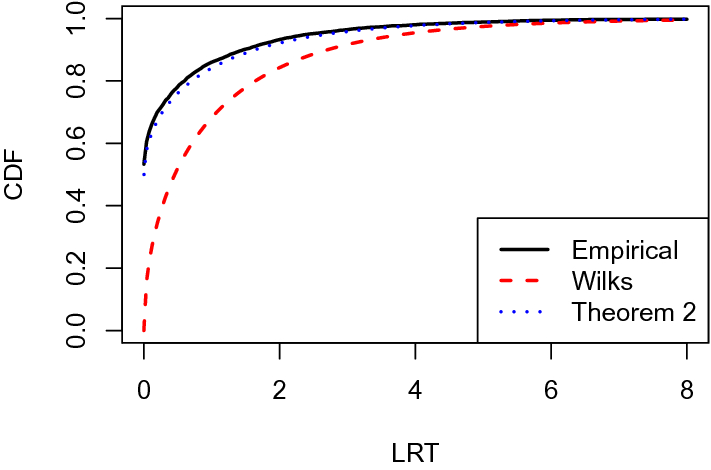

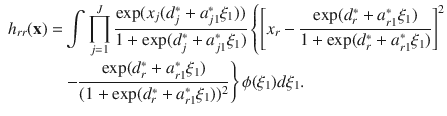

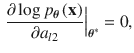

Simulation results suggest the failure of this $\chi^2$ approximation. In Fig. 2, we provide plots similar to those in Fig. 1, based on 5000 datasets simulated from a one-factor IFA model with sample size $N = 5000$ and $J = 6$. The true parameters of this IFA model are given in Table 2. The result is shown in panel (a) of Fig. 2, where a pattern similar to that in panel (a) of Fig. 1 for Example 1(a) is observed.
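The data-generating step of this simulation can be sketched in Python as follows. This is a minimal sketch under stated assumptions: the helper name `simulate_one_factor_ifa` is hypothetical, and because the entries of Table 2 are not reproduced here, the item parameters below are placeholders rather than the true values behind Fig. 2.

```python
import math
import random


def simulate_one_factor_ifa(N, a, d, seed=None):
    """Draw N binary response vectors from a one-factor IFA model.

    xi_i ~ N(0, 1) and, given xi_i, the X_ij are independent with
    P(X_ij = 1 | xi_i) = 1 / (1 + exp(-(d_j + a_j * xi_i))).
    """
    rng = random.Random(seed)
    data = []
    for _ in range(N):
        xi = rng.gauss(0.0, 1.0)                      # latent factor score
        row = tuple(
            int(rng.random() < 1.0 / (1.0 + math.exp(-(dj + aj * xi))))
            for aj, dj in zip(a, d)
        )
        data.append(row)
    return data


# Placeholder parameters for J = 6 items (NOT the values in Table 2).
a = [1.0, 1.2, 0.8, 1.5, 0.9, 1.1]
d = [0.0, -0.5, 0.5, 0.2, -0.3, 0.4]
sample = simulate_one_factor_ifa(N=5000, a=a, d=d, seed=2024)
```

Each replication would then fit the one- and two-factor models to such a sample by maximum likelihood and record $\lambda_N$; that fitting step is not shown here.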

Table 2. Values of the true parameters for the simulations in Example 2.

Figure 2.

a Results of Example 2(a). The black solid line shows the empirical CDF of the LRT statistic, based on 5000 independent simulations. The red dashed line shows the CDF of the $\chi^2$ distribution with 5 degrees of freedom as suggested by Wilks’ theorem. The blue dotted line shows the CDF of the reference distribution suggested by Theorem 2. b Results of Example 2(b). The black solid line shows the empirical CDF of the LRT statistic, and the red dashed line shows the CDF of the $\chi^2$ distribution with 51 degrees of freedom as suggested by Wilks’ theorem

2(b). When testing the one-factor IFA model against the saturated model, the LRT statistic is asymptotically $\chi^2$ if Wilks’ theorem holds, with $2^J - 1 - 2J$ degrees of freedom (51 when $J = 6$). Here, $2^J - 1$ is the number of free parameters in the saturated model, and $2J$ is the number of parameters in the one-factor IFA model. The result is given in panel (b) of Fig. 2. Similar to Example 1(b), the empirical CDF and the CDF implied by Wilks’ theorem are very close to each other, suggesting that Wilks’ theorem holds here.
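The parameter counting behind both reference distributions in Example 2 can be checked with a few lines of Python; the helper names are hypothetical.

```python
def ifa_parameter_counts(J):
    """Free-parameter counts for the three models compared in Example 2."""
    return {
        "one_factor": 2 * J,        # a_j1 and d_j for j = 1, ..., J
        "two_factor": 3 * J - 1,    # a_j1, a_j2 and d_j, with a_12 fixed at 0
        "saturated": 2 ** J - 1,    # pi_x over {0,1}^J, minus the sum-to-one constraint
    }


def wilks_df(J):
    """Degrees of freedom Wilks' theorem would assign in Examples 2(a) and 2(b)."""
    n = ifa_parameter_counts(J)
    return n["two_factor"] - n["one_factor"], n["saturated"] - n["one_factor"]


print(wilks_df(6))  # (5, 51), matching the reference curves in Fig. 2
```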

Example 3

(Random effects model) Our third example considers a random intercept model. Consider two-level data with individuals at level 1 nested within groups at level 2. Let $X_{ij}$ be data from the $j$th individual in the $i$th group, where $i = 1, \ldots, N$ and $j = 1, \ldots, J$. For simplicity, we assume all the groups have the same number of individuals. Assume the following random effects model,

$$X_{ij} = \beta_0 + \mu_i + \epsilon_{ij},$$

where $\beta_0$ is the overall mean across all the groups, $\mu_i \sim N(0, \sigma_1^2)$ characterizes the difference between the mean for group $i$ and the overall mean, and $\epsilon_{ij} \sim N(0, \sigma_2^2)$ is the individual-level residual.

Testing for between-group variability under this model is equivalent to testing

$$H_0: \sigma_1^2 = 0 \quad \text{versus} \quad H_1: \sigma_1^2 > 0.$$

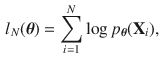

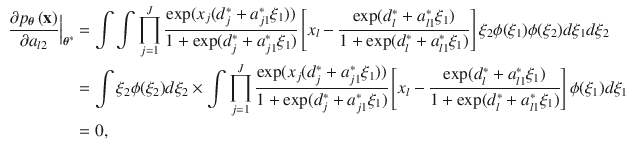

If Wilks’ theorem holds, then the LRT statistic should follow a

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$$\chi ^2$$\end{document}

![]() distribution with one degree of freedom. We conduct a simulation study and show the results in Fig. 3. In this figure, the black solid line shows the empirical CDF of the LRT statistic, based on 5000 independent simulations from the null model with

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$$N = 200$$\end{document}

distribution with one degree of freedom. We conduct a simulation study and show the results in Fig. 3. In this figure, the black solid line shows the empirical CDF of the LRT statistic, based on 5000 independent simulations from the null model with

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$$N = 200$$\end{document}

![]() ,

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$$J= 20$$\end{document}

,

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$$J= 20$$\end{document}

![]() ,

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$$\beta _0 = 0$$\end{document}

,

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$$\beta _0 = 0$$\end{document}

![]() , and

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$$\sigma ^2_2 = 1$$\end{document}

, and

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$$\sigma ^2_2 = 1$$\end{document}

![]() . The red dashed line shows the CDF of the

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$$\chi ^2$$\end{document}

. The red dashed line shows the CDF of the

\documentclass[12pt]{minimal}

\usepackage{amsmath}

\usepackage{wasysym}

\usepackage{amsfonts}

\usepackage{amssymb}

\usepackage{amsbsy}

\usepackage{mathrsfs}

\usepackage{upgreek}

\setlength{\oddsidemargin}{-69pt}

\begin{document}$$\chi ^2$$\end{document}

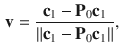

![]() distribution with one degree of freedom. As we can see, the two CDFs are not close to each other, and the empirical CDF tends to stochastically dominate the theoretical CDF suggested by Wilks’ theorem. It suggests the failure of Wilks’ theorem in this example.

distribution with one degree of freedom. As we can see, the two CDFs are not close to each other, and the empirical CDF tends to stochastically dominate the theoretical CDF suggested by Wilks’ theorem. It suggests the failure of Wilks’ theorem in this example.

This kind of phenomenon has been observed when the null model lies on the boundary of the parameter space, in which case the regularity conditions of Wilks’ theorem do not hold. In such settings, the LRT statistic has been shown to often follow asymptotically a mixture of $\chi^2$ distributions (e.g., Shapiro 1985; Self and Liang 1987), instead of a $\chi^2$ distribution. As will be shown in Sect. 2, such a mixture of $\chi^2$ distributions can be derived from a general theory for LRT.
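For the balanced random intercept model, the maximum likelihood fits have closed forms, which makes the boundary effect easy to reproduce. With $\mathrm{SSW} = \sum_{i,j} (X_{ij} - \bar X_{i\cdot})^2$ and $\mathrm{SSB} = J \sum_i (\bar X_{i\cdot} - \bar X_{\cdot\cdot})^2$, the unrestricted MLEs of $\sigma_2^2$ and $\sigma_2^2 + J\sigma_1^2$ are $\mathrm{SSW}/\{N(J-1)\}$ and $\mathrm{SSB}/N$. When the second is smaller than the first, the constraint $\sigma_1^2 \ge 0$ binds and $\lambda_N = 0$. The Python sketch below simulates under the null settings of Fig. 3 and compares the result with the $\chi^2_1$ CDF and with the 50:50 mixture of a point mass at zero and $\chi^2_1$, the standard boundary result for a single variance component (Self and Liang 1987). The helper names are hypothetical, and 200 replications are used instead of 5000 to keep the run short. This is an illustration only, not the construction of Theorem 2.

```python
import math
import random


def random_intercept_lrt(X):
    """ML likelihood ratio statistic for H0: sigma_1^2 = 0 vs H1: sigma_1^2 > 0
    in the balanced model X_ij = beta_0 + mu_i + eps_ij (X: N groups of size J)."""
    N, J = len(X), len(X[0])
    means = [sum(row) / J for row in X]
    grand = sum(means) / N
    ssw = sum((x - m) ** 2 for row, m in zip(X, means) for x in row)
    ssb = J * sum((m - grand) ** 2 for m in means)
    s_w = ssw / (N * (J - 1))        # unrestricted MLE of sigma_2^2
    s_b = ssb / N                    # unrestricted MLE of sigma_2^2 + J * sigma_1^2
    if s_b <= s_w:                   # estimate of sigma_1^2 falls on the boundary 0
        return 0.0
    s_0 = (ssw + ssb) / (N * J)      # MLE of sigma_2^2 under H0
    lam = N * J * math.log(s_0) - N * (J - 1) * math.log(s_w) - N * math.log(s_b)
    return max(lam, 0.0)             # guard against tiny negative rounding error


def chi2_1_cdf(x):
    """CDF of chi^2 with 1 df: P(Z^2 <= x) = erf(sqrt(x / 2))."""
    return math.erf(math.sqrt(x / 2.0)) if x > 0 else 0.0


def mixture_cdf(x):
    """CDF of 0.5 * (point mass at 0) + 0.5 * chi^2_1."""
    return 0.5 + 0.5 * chi2_1_cdf(x) if x >= 0 else 0.0


def simulate_null(reps, N=200, J=20, beta0=0.0, sigma2_sq=1.0, seed=1):
    """LRT statistics from data generated under H0 (sigma_1^2 = 0)."""
    rng = random.Random(seed)
    sd = math.sqrt(sigma2_sq)
    return [
        random_intercept_lrt([[beta0 + rng.gauss(0.0, sd) for _ in range(J)] for _ in range(N)])
        for _ in range(reps)
    ]


lams = simulate_null(reps=200)
for q in (0.5, 1.0, 2.706):
    emp = sum(l <= q for l in lams) / len(lams)
    print(f"x={q}: empirical {emp:.3f}, chi2_1 {chi2_1_cdf(q):.3f}, mixture {mixture_cdf(q):.3f}")
```

Roughly half of the simulated statistics are exactly zero, which is why the empirical CDF sits above the $\chi^2_1$ curve.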

Figure 3. The black solid line shows the empirical CDF of the LRT statistic, based on 5000 independent simulations. The red dashed line shows the CDF of the $\chi^2$ distribution with one degree of freedom as suggested by Wilks’ theorem. The blue dotted line shows the CDF of the mixture of $\chi^2$ distributions suggested by Theorem 2 (Color figure online)

We now explain why Wilks’ theorem does not hold in Examples 1(a), 2(a), and 3. We first introduce some generic notation. Suppose that we have i.i.d. observations $\mathbf{X}_1, \ldots, \mathbf{X}_N$ from a parametric model $\mathcal{P}_{\Theta} = \{P_{\boldsymbol{\theta}}: \boldsymbol{\theta} \in \Theta \subset \mathbb{R}^k\}$, where $\mathbf{X}_i = (X_{i1}, \ldots, X_{iJ})^\top$. We assume that the distributions in $\mathcal{P}_{\Theta}$ are dominated by a common $\sigma$-finite measure $\nu$, with respect to which they have probability density functions $p_{\boldsymbol{\theta}}: \mathbb{R}^J \rightarrow [0, \infty)$. Let $\Theta_0 \subset \Theta$ be a submodel; we are interested in testing

$$H_0: \boldsymbol{\theta} \in \Theta_0 \quad \text{versus} \quad H_1: \boldsymbol{\theta} \in \Theta \setminus \Theta_0.$$

Let $p_{\boldsymbol{\theta}^*}$ be the true model for the observations, where $\boldsymbol{\theta}^* \in \Theta_0$.

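The likelihood function is defined as

$$L_N(\boldsymbol{\theta}) = \prod_{i=1}^N p_{\boldsymbol{\theta}}(\mathbf{X}_i),$$

and the LRT statistic is defined as

$$\lambda_N = 2 \Big\{ \sup_{\boldsymbol{\theta} \in \Theta} \log L_N(\boldsymbol{\theta}) - \sup_{\boldsymbol{\theta} \in \Theta_0} \log L_N(\boldsymbol{\theta}) \Big\}.$$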

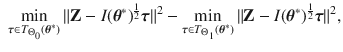

Under suitable regularity conditions, Wilks’ theorem implies that, when the null hypothesis holds, the LRT statistic $\lambda_N$ is asymptotically $\chi^2$ distributed, with degrees of freedom equal to the difference in the number of free parameters between $\Theta$ and $\Theta_0$.
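To illustrate what Wilks’ theorem asserts in a case where its regularity conditions do hold, the sketch below simulates $\lambda_N$ under the null hypothesis in the same hypothetical toy model ($N(\mu, \sigma^2)$ data, $H_0: \mu = 0$, one degree of freedom) and compares the empirical rejection rate at the nominal 5% level, and the mean of $\lambda_N$, with those of $\chi^2_1$. The sample size, number of replications and true variance are illustrative assumptions.

```python
import numpy as np
from scipy.stats import chi2


def simulate_null_lrt(n_obs, n_reps, rng):
    """Draw n_reps values of lambda_N for H0: mu = 0 in the toy N(mu, sigma2) model,
    generating every data set under H0 (true theta* = (0, 4), an interior point)."""
    y = rng.normal(loc=0.0, scale=2.0, size=(n_reps, n_obs))
    s2_hat = y.var(axis=1)              # MLE of sigma2 over Theta (ddof=0)
    s2_tilde = np.mean(y ** 2, axis=1)  # MLE of sigma2 over Theta_0 (mu fixed at 0)
    # Closed form of 2 * (sup_Theta l_N - sup_Theta0 l_N) for this model
    return n_obs * np.log(s2_tilde / s2_hat)


rng = np.random.default_rng(1)
lams = simulate_null_lrt(n_obs=200, n_reps=5000, rng=rng)
crit = chi2.ppf(0.95, df=1)  # nominal 5% critical value of chi^2_1
empirical_size = np.mean(lams > crit)
print(f"empirical size at nominal 0.05: {empirical_size:.3f}")
print(f"mean of lambda_N (chi^2_1 has mean 1): {lams.mean():.3f}")
```

When the conditions of the theorem hold, both printed numbers should be close to their $\chi^2_1$ targets (0.05 and 1), up to Monte Carlo error.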

Wilks’ theorem for the LRT requires several regularity conditions; see, e.g., Theorem 12.4.2 of Lehmann and Romano (2006). Two of these conditions are violated by the examples above. First, $\boldsymbol{\theta}^*$ must be an interior point of $\Theta$. This condition fails in Example 3 when $\Theta$ is taken to be $\{(\beta_0, \sigma_1^2, \sigma_2^2): \beta_0 \in \mathbb{R}, \sigma_1^2 \in [0, \infty), \sigma_2^2 \in [0, \infty)\}$, because the null model then lies on the boundary of the parameter space. Second, the expected Fisher information matrix at $\boldsymbol{\theta}^*$, $I(\boldsymbol{\theta}^*) = E_{\boldsymbol{\theta}^*}[\nabla l_N(\boldsymbol{\theta}^*)\nabla l_N(\boldsymbol{\theta}^*)^\top]/N$, must be strictly positive definite. As summarized in Lemma 1, this condition fails in Examples 1(a) and 2(a) when $\Theta$ is taken to be the parameter space of the corresponding two-factor model. Interestingly, however, when the one-factor model is compared with the saturated model, as in Examples 1(b) and 2(b), the Fisher information matrix is strictly positive definite in both simulated examples.
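Both failed conditions can be illustrated numerically. The Python sketch below uses two hypothetical toy settings, assumed here for illustration and not taken from Examples 1 to 3. In (a), $y_i \sim N(\mu, 1)$ with $\Theta = [0, \infty)$ and $\Theta_0 = \{0\}$, so the true value $\mu^* = 0$ lies on the boundary; then $\lambda_N = N \max(\bar y, 0)^2$, about half of the simulated statistics are exactly zero, and the $\chi^2_1$ cutoff rejects about 2.5% of the time instead of 5%. In (b), an orthogonal normal linear factor model with $J = 4$ variables (an illustrative choice) is evaluated at a one-factor truth: the per-observation information $\tfrac{1}{2}\operatorname{tr}(\Sigma^{-1}\partial_a\Sigma\,\Sigma^{-1}\partial_b\Sigma)$ of a zero-mean normal model, computed with central finite differences, is singular in the two-factor parameterization but positive definite in the saturated parameterization of the same covariance matrix.

```python
import numpy as np
from scipy.stats import chi2

# --- (a) True parameter on the boundary of Theta ---------------------------
# Toy model (hypothetical): y_i ~ N(mu, 1), Theta = [0, inf), Theta_0 = {0}.
# Maximizing l_N over mu >= 0 gives mu_hat = max(ybar, 0), hence
# lambda_N = 2 * (l_N(mu_hat) - l_N(0)) = N * max(ybar, 0) ** 2.
rng = np.random.default_rng(2)
n_obs, n_reps = 100, 20000
ybar = rng.normal(size=(n_reps, n_obs)).mean(axis=1)  # data generated with mu* = 0
lam_boundary = n_obs * np.maximum(ybar, 0.0) ** 2
frac_zero = np.mean(lam_boundary == 0.0)  # expected to be about one half
size_chi2_1 = np.mean(lam_boundary > chi2.ppf(0.95, df=1))
print(f"P(lambda_N = 0) ~ {frac_zero:.3f}; size with chi^2_1 cutoff ~ {size_chi2_1:.3f}")


# --- (b) Singular Fisher information ---------------------------------------
def fisher_info_normal(sigma_fn, theta, h=1e-5):
    """Per-observation Fisher information of N(0, Sigma(theta)):
    I_ab = 0.5 * tr(Sigma^{-1} dSigma_a Sigma^{-1} dSigma_b),
    with dSigma_a = dSigma/dtheta_a from central finite differences."""
    theta = np.asarray(theta, dtype=float)
    sigma_inv = np.linalg.inv(sigma_fn(theta))
    d_sigma = []
    for a in range(theta.size):
        step = np.zeros_like(theta)
        step[a] = h
        d_sigma.append((sigma_fn(theta + step) - sigma_fn(theta - step)) / (2 * h))
    k = theta.size
    info = np.empty((k, k))
    for a in range(k):
        for b in range(k):
            info[a, b] = 0.5 * np.trace(sigma_inv @ d_sigma[a] @ sigma_inv @ d_sigma[b])
    return info


J = 4  # number of observed variables (illustrative)


def sigma_two_factor(theta):
    """Orthogonal two-factor model; theta = (loadings 1, loadings 2, unique variances)."""
    lam1, lam2, psi = theta[:J], theta[J:2 * J], theta[2 * J:]
    return np.outer(lam1, lam1) + np.outer(lam2, lam2) + np.diag(psi)


def sigma_saturated(theta):
    """Saturated model; theta holds the lower triangle (vech) of Sigma."""
    lower = np.zeros((J, J))
    lower[np.tril_indices(J)] = theta
    return lower + np.tril(lower, -1).T


# True model has one factor: the second loading vector is zero.
lam1_star = np.array([0.8, 0.7, 0.6, 0.5])
theta_two = np.concatenate([lam1_star, np.zeros(J), 1.0 - lam1_star ** 2])
sigma_star = sigma_two_factor(theta_two)
theta_sat = sigma_star[np.tril_indices(J)]

eig_two = np.linalg.eigvalsh(fisher_info_normal(sigma_two_factor, theta_two))
eig_sat = np.linalg.eigvalsh(fisher_info_normal(sigma_saturated, theta_sat))
print("smallest eigenvalue, two-factor parameterization:", eig_two.min())
print("smallest eigenvalue, saturated parameterization:", eig_sat.min())
```

In (b), the zero eigenvalues come from the second-factor loadings: $\Sigma$ depends on $\boldsymbol{\lambda}_2$ only through $\boldsymbol{\lambda}_2\boldsymbol{\lambda}_2^\top$, whose derivative vanishes at $\boldsymbol{\lambda}_2 = \mathbf{0}$, so the corresponding rows and columns of the information matrix are zero.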

Lemma 1

-