The dramatic increase in life expectancy during the twentieth century surely ranks as one of the greatest achievements of medical science. Most babies born in 1900 did not live past the age of 50; today, those born in Westernised countries can expect to live into their 70s and 80s and beyond( Reference Suzman and Beard 1 ), a trend which is likely to continue at least for the next decade or so( Reference Kontis, Bennett and Mathers 2 ). Nevertheless, at the population level, increased longevity brings with it unexpected challenges in the form of age-related diseases. As well as placing a heavy economic burden on healthcare systems, these carry serious human costs. Amongst such disorders, perhaps the most pernicious is dementia, which shares many mechanisms with less severe conditions such as subjective memory impairment (SMI; more recently termed subjective cognitive decline), mild cognitive impairment (MCI) and non-clinical age-related cognitive decline.

The World Health Organisation estimates that there is a new case of dementia every 4 s( Reference Prince, Guerchet and Prina 3 ), a figure which illustrates the urgency of the global health crisis associated with cognitive decline. Such decline, however, is far from inevitable. While there is a genetic influence in dementia (a three-generation family history roughly doubles the lifetime probability of a dementia diagnosis), it is estimated that <2 % of Alzheimer's disease (AD) cases are due to single-gene mutations with approximately 13 % of cases having a clear autosomal-dominant pattern of inheritance( Reference Bekris, Yu and Bird 4 ). Higher estimates of genetic influences on non-pathological age-related cognitive decline suggest that approximately 50 % of the variance may be explained by genetic factors( Reference Deary, Yang and Davies 5 ), with the remainder assumed to be attributable to environmental and lifestyle influences. This has driven the search for achievable ways to modify the expression of age-related neurocognitive decline and progression to AD. There is now increasing evidence that candidate modifying factors include exercise( Reference Kontis, Bennett and Mathers 2 ) and, importantly for this review, nutritional processes. There is also growing evidence that many of these modifiable influences on dementia risk also modify non-clinical cognitive decline and may be set in motion many years before the manifestation of impaired cognitive function.
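The ‘every 4 s’ figure can be converted into an annual count with simple arithmetic. This assumes, for illustration only, that new cases accrue uniformly over a 365·25-day year; it is not an independent epidemiological estimate.

```latex
\frac{365.25 \times 24 \times 3600~\mathrm{s\,yr^{-1}}}{4~\mathrm{s\ per\ case}}
  = \frac{31\,557\,600}{4}
  = 7\,889\,400 \approx 7.9 \times 10^{6}~\text{new cases per year}
```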

The most common form of dementia is AD, accounting for some 60–80 % of dementia cases( 6 ). Distinguishing pathological from non-pathological cognitive ageing is challenging, partly because many of the markers for AD are also observed to some degree in normal ageing, as well as in other cognitive phenotypes such as SMI and MCI. Individuals with SMI perceive their memory to be declining but perform as well as their peers on objective cognitive tests. Nevertheless, they have reduced grey matter volume in the temporal lobe( Reference Jessen, Feyen and Freymann 7 ), which houses structures important for memory processing, and increased levels of brain activation during cognitive tasks( Reference Rodda, Dannhauser and Cutinha 8 ). This suggests that there may be central compensatory mechanisms during early phases of cognitive decline, with greater activation required to maintain previous levels of cognitive performance. MCI is more severe than SMI and is associated with significantly reduced cognitive function; unlike in AD, however, the ability to conduct activities of daily living is spared. Both SMI and MCI are associated with increased risk of future dementia( Reference Jessen, Wolfsgruber and Wiese 9 ), with 30–40 % of those with MCI converting to AD over a given 5-year period( Reference Ward, Tardiff and Dye 10 ). SMI also increases the risk of later dementia, especially in those who go on to be classified as having MCI( Reference Jessen, Wolfsgruber and Wiese 9 ), leading to the suggestion of a three-stage model (SMI, then MCI, then dementia). This risk is particularly high when SMI co-presents with worry( Reference Jessen, Wolfsgruber and Wiese 9 ), raising the intriguing possibility that subjective concern about declining memory is linked to a more severe, organic prodromal state. However, while both conditions can be harbingers of dementia, some individuals stabilise and do not progress to more severe stages.
This suggests that MCI and, especially, SMI may be viable targets for preventative interventions, including nutritional ones.
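A five-year conversion figure is sometimes easier to compare across studies when expressed per year. The sketch below converts the 30–40 % five-year MCI-to-AD range into an equivalent constant annual probability. It assumes, purely for illustration, that the yearly risk is constant and independent from year to year, which real conversion rates need not satisfy; the function name `annualised_rate` is hypothetical.

```python
def annualised_rate(cumulative_risk: float, years: float) -> float:
    """Constant annual probability that yields `cumulative_risk` over `years`.

    Hypothetical model: solves 1 - (1 - p_annual) ** years == cumulative_risk,
    i.e. the same conversion probability applies independently every year.
    """
    if not 0.0 <= cumulative_risk < 1.0:
        raise ValueError("cumulative_risk must lie in [0, 1)")
    return 1.0 - (1.0 - cumulative_risk) ** (1.0 / years)


# The 30-40 % five-year MCI-to-AD conversion range quoted in the text.
for five_year in (0.30, 0.40):
    print(f"{five_year:.0%} over 5 years ~ {annualised_rate(five_year, 5):.1%} per year")
```

Under these assumptions the quoted range corresponds to roughly 6·9–9·7 % per year.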

The first-line pharmacotherapy for AD is dominated by the cholinesterase inhibitor (ChEI) family of drugs, introduced in 1997. Two decades later, there is a general consensus that the ChEI have not realised their initial promise. The drugs can treat the symptoms of AD, but their impact on the disease itself has been modest. As the name suggests, their common mode of action is to inhibit the enzyme cholinesterase, resulting in elevated levels of the neurotransmitter acetylcholine, which is important for memory and attentional functions and is depleted in the AD brain. ChEI offer moderate respite at best and are effective only at earlier stages of the disorder. They have a relatively small therapeutic window due to low tolerance in many patients( Reference Deardorff, Feen and Grossberg 11 ), with a 30 % dropout rate in clinical trials. Interestingly, certain herbs with ChEI properties, including species of Salvia (sage), appear to be better tolerated than their pharmaceutical counterparts. Extracts of Salvia have been shown to acutely improve memory and attention in healthy young and older populations( Reference Kennedy, Dodd and Robertson 12 – Reference Tildesley, Kennedy and Perry 15 ), with two small trials in cognitively impaired populations also showing promise( Reference Miroddi, Navarra and Quattropani 16 ).

The relative contribution of the ChEI properties of Salvia to these effects is not known, as the herb affects many cognition-relevant processes( Reference Miroddi, Navarra and Quattropani 16 ). Indeed, one reason for the relative lack of success of pharmaceutical ChEI in the treatment of AD is their restricted mode of action. Like other degenerative diseases, AD can be considered the product of a pathological cascade, in this case involving progressively accelerating, reciprocal, neurotoxic interactions between oxidative stress, inflammatory responses, compromised cerebral metabolism, neurofibrillary tangle generation and β-amyloid deposition, amongst other processes which include damage to the cholinergic system( Reference Jack, Knopman and Jagust 17 ). The relative contribution of each of these is unknown and likely differs in idiosyncratic ways from person to person. Given the multifaceted nature of AD, it is unsurprising that ‘magic bullet’ approaches to halting cognitive decline have been relatively unsuccessful. In part this is because they do not match well with our present understanding of central nervous system function, in which growth in knowledge has been characterised by increasing complexity; it seems unlikely that there will be a single, unifying ‘double helix’ of brain function. The lack of success of central nervous system pharmaceutical pipelines in developing treatments for brain senescence has led to investigations into the modifiable processes which underlie neurocognitive decline. These include (but are not restricted to) cognitive activity and education, exercise and nutritional factors. Some examples of the latter are outlined in the following sections.

Factors influencing age-related cognitive decline

There is increasing evidence that many of the risk factors for cognitive decline may be detectable in mid-life, with several of these overlapping with those for CVD( Reference Qiu and Fratiglioni 18 ). Such processes include poorer glycaemic control, compromised vascular function, oxidative stress, inflammation, perturbations of the hypothalamic–pituitary–adrenocortical axis and microbiotic dysbiosis (Fig. 1).

Fig. 1. Influences on neurocognitive ageing including therapeutic targets for delaying age-related neurocognitive decline. Pharmaceutical approaches are largely restricted to effects on neurotransmitter systems (grey panel). Processes within the green-shaded area represent realistic nutritional targets. Age and genetics (unshaded area) remain non-modifiable factors. HPA, hypothalamic–pituitary–adrenal axis.

The interactions between these factors and cognitive decline may be reciprocal or even reflect reverse causality. Childhood cognitive ability is a strong predictor of both age-related cognitive decline and many later-life health indices( Reference Jessen, Wolfsgruber and Wiese 9 ), suggesting that both may be manifestations of some core mechanism(s). Irrespective of the underlying influences, the cluster of processes depicted in Fig. 1 is known to be modifiable by dietary interventions. Indeed, it has been suggested that, despite a projected 3-fold increase in dementia prevalence by 2050, the age-specific risk of dementia may be decreasing in some Western countries, due in part to ‘[rising] levels of education and more widespread and successful treatment of key cardiovascular risk factors’( Reference Langa 19 ). Such treatment includes better nutrition advice, again supporting the concept of dietary interventions positively modifying the trajectory of cognitive decline in senescence. The following sections present a selective review of nutritional/dietary interventions that have the potential to modify cognitive function.

Various epidemiological studies have shown an association between certain dietary factors (both patterns and specific bioactive nutrients) and protection against cognitive decline. These include the Mediterranean dietary pattern( Reference Hardman, Kennedy and Macpherson 20 ) and specific dietary components such as the flavonoids( Reference Nurk, Refsum and Drevon 21 ), a class of approximately 5000 compounds believed to contribute to the health benefits of fruit and vegetable intake. Several epidemiological studies have identified an association between flavonoid intake and better cognitive function( Reference Nurk, Refsum and Drevon 21 – Reference Camfield, Stough and Farrimond 24 ). Although useful, such studies may miss ‘third-factor’ influences on both cognition and diet which, sometimes by definition, are presently unknown. These include genetic polymorphisms and microbiome differences. In the latter case, there is presently intense research focus on the so-called gut–brain axis, which has become something of a new frontier for brain research in health and disease. Increasing evidence points to bidirectional cross-signalling between the gut microbiota and brain, including via microbiotic metabolites, the immune system and the vagus nerve( Reference Sandhu, Sherwin and Schellekens 25 ). The intestinal microbiome is a rich source of signalling molecules and can be rapidly modified by diet( Reference Albenberg and Wu 26 ), raising the possibility of another nutritional target which influences brain function (e.g. using pre- or probiotics). Despite huge potential and compelling evidence from animal studies, results from early controlled human trials have been mixed. It is unclear to what extent probiotic supplementation achieves the primary goal of altering the microbiota composition( Reference Kristensen, Bryrup and Allin 27 ).
Interventions specifically aimed at redressing microbiomic dysbiosis enhanced cognition in a cohort with dementia( Reference Akbari, Asemi and Kakhaki 28 ), but did not improve stress or cognitive function in healthy volunteers( Reference Kelly, Allen and Temko 29 ). A recent systematic review reported positive effects on anxiety and depression in five out of ten included studies( Reference Pirbaglou, Katz and de Souza 30 ). Some of these mixed results may be due to methodological issues. Better understanding of optimal treatment characteristics, including duration and type of intervention will doubtless emerge in the coming years and may herald an era of ‘psychobiotics’.

Trajectories of age-related cognitive change

Many of the same processes involved in cognitive pathology are integral mechanisms in non-clinical ageing and clearly contribute to population-level, age-associated cognitive decline. There is, however, enormous variation in the trajectory of cognitive decline, both across individuals and across cognitive domains( Reference Wilson, Beckett and Barnes 31 ). Broadly, age-related decline is observed in ‘fluid’ cognitive abilities, including memory (especially working memory), processing speed and executive functioning. Conversely, so-called ‘crystallised’ abilities, e.g. general knowledge and some verbal skills, remain relatively unscathed by the ageing process( Reference Ward, Tardiff and Dye 10 , Reference Deary, Corley and Gow 32 ). It should be noted that, even though domains such as working memory and processing speed decline with age, there is large individual variability, with some people maintaining high functioning well into old age( Reference Wilson, Beckett and Barnes 31 ). Again, while some of this variance can be explained by genetic factors, a great deal remains which is influenced by environmental and lifestyle processes, including nutrition.

As with cognitive abilities, there are substantial individual differences in age-related changes to the neural substrates which underpin cognitive performance. Longitudinal studies are rare but indicate an adult global cerebral atrophy rate of approximately 0·8 % per year( Reference Crivello, Tzourio-Mazoyer and Tzourio 33 ). Nevertheless, some structures, such as the visual and entorhinal cortices, are relatively spared, while others, such as the cerebellum and hippocampus, show a more striking decline( Reference Park and Reuter-Lorenz 34 ), again with marked variability across individuals( Reference Callaghan, Freund and Draganski 35 ). The hippocampus is particularly important in the context of modifiable structure–function relationships. It is critically involved in binding together disparate elements of experience (time, location, environment, actions, specific stimuli, etc.) into specific memories( Reference Konkel and Cohen 36 ). Hippocampal size is reduced in a cluster of diet-modifiable disorders, including diabetes, obesity and hypertension( Reference Monti, Baym and Cohen 37 ). It is one of two mammalian brain structures which have been identified as having the capacity for adult neurogenesis (the birth of new neurons). Crucially, there is increasing evidence that lifestyle factors such as exercise can increase human hippocampal volume( Reference Erickson, Voss and Prakash 38 ). In animals, such increases in volume are accompanied by elevated rates of neurogenesis, which can be stimulated by exercise( Reference Pereira, Huddleston and Brickman 39 ) and by nutritional interventions such as diets rich in flavanols( Reference Van Praag, Lucero and Yeo 40 ) or n-3 fatty acids( Reference Luchtman and Song 41 ). This again supports the notion that the neural substrates of cognitive performance are plastic and are viable targets for nutritional interventions in human subjects.
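To see what a global atrophy rate of 0·8 % per year implies over decades, the sketch below compounds it. It assumes, purely for illustration, that the same proportion of the remaining volume is lost every year (geometric decay); the function name `remaining_volume` is hypothetical, and real atrophy varies by structure and individual and tends to accelerate with age.

```python
def remaining_volume(annual_loss: float, years: int) -> float:
    """Fraction of baseline volume left after `years` of constant proportional loss.

    Hypothetical geometric-decay model: each year the same fraction
    (`annual_loss`) of the *remaining* volume is lost.
    """
    return (1.0 - annual_loss) ** years


for years in (10, 20, 30):
    left = remaining_volume(0.008, years)  # 0.8 % per year, from the text
    print(f"after {years} years: {left:.1%} of baseline remains")
```

Under this simple model, 0·8 % per year compounds to a loss of roughly a fifth (about 21 %) of baseline volume over 30 years.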

Neuroimaging and nutritional interventions

Unlike those of pharmaceuticals, the effects of dietary interventions can be subtle (although standardised extracts of the herbs Ginseng and Bacopa improve cognitive performance with effect sizes comparable with those of the US Food and Drug Administration-approved drug modafinil, the cognitive enhancer du jour( Reference Neale, Camfield and Reay 42 )). The advent of computerised cognitive tests has helped to advance the field. Compared with paper-and-pencil psychometric assessment, these tests are exquisitely sensitive, detecting slight changes in accuracy and resolving changes in response times at the millisecond level. This is particularly important given that speed of processing declines with ageing.

It is beyond the scope of the present paper to cover all randomised controlled trials in this area; the following sections, therefore, give a flavour of the results of such studies, focusing on those which have included the application of neuroimaging methodology.

Using neuroimaging to detect structural/functional changes associated with dietary interventions can be important for a number of reasons. If nothing else, such studies can confirm that the treatment is centrally active, that is, that some component of the ingested substance is bioavailable and, directly or indirectly, changes the pattern of activation during cognitive processing.

Neuroimaging can also provide important insights into the mechanism of action of nutritional interventions. There are various neuroimaging modalities, each with its own advantages and disadvantages. Structural imaging, often performed using MRI, can discern the size of neuroanatomical regions or, specifically, of grey matter (reflecting most structures other than the myelin sheath). The method is used to quantify changes in whole-brain volume or in specific neuroanatomical loci such as the hippocampus and its subfields. Other sophisticated measures have been developed, such as diffusion tensor imaging, which can visualise white matter (essentially myelin), an important constituent of the tracts which convey electrochemical traffic between brain structures. Age-related declines in white matter integrity have been shown to mediate perceptual slowing in ageing( Reference Bucur, Madden and Spaniol 43 ). White matter integrity is related to vitamin D status( Reference Karakis, Pase and Beiser 44 ) and can be improved by nutritional change, including n-3 fatty acid supplementation( Reference Janssen and Kiliaan 45 , Reference Witte, Kerti and Hermannstädter 46 ), suggesting it may be a viable target for nutrient interventions.

As well as structural changes, neuroimaging can be used to detect changes in functional activation using several methods. Each has advantages and can be viewed in terms of its spatial and temporal resolution, that is, how well the method can measure where and when, respectively, neural events occur.

Electroencephalography (EEG) was developed in the first half of the twentieth century and detects changes in electrical activity associated with neuronal firing. It has a temporal resolution on the millisecond scale (matching the timescale of the activity underpinning actual cognitive events). It has, however, poor spatial resolution, since the signal is smeared by the skull. Continuous EEG recordings are typically divided into traditional frequency bands of δ (<4 Hz), θ (4–7 Hz), α (8–15 Hz), β (15–30 Hz) and γ (30+ Hz), with lower frequencies dominating during relaxation and higher frequencies during cognitive processing.
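The division of a continuous recording into frequency bands can be illustrated with a short sketch that assigns discrete Fourier transform (DFT) power to the bands listed above. Treating each band as a half-open interval [low, high), so that the boundaries at 4, 8, 15 and 30 Hz each belong to exactly one band (and the 7–8 Hz gap is assigned to θ), is an assumption made here, as are the sampling rate and the synthetic signal. Published studies typically use windowed, FFT-based spectral estimators rather than this naive DFT.

```python
import math

# Band edges in Hz, following the text. Half-open intervals [low, high) are an
# assumption so that every frequency falls into exactly one band.
BANDS = {
    "delta": (0.0, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 15.0),
    "beta": (15.0, 30.0),
    "gamma": (30.0, math.inf),
}


def band_powers(signal, fs):
    """Sum DFT power into EEG bands for a real-valued signal sampled at fs Hz.

    A naive O(N^2) DFT is used for readability; it is not how real EEG
    pipelines estimate spectra.
    """
    n = len(signal)
    powers = {name: 0.0 for name in BANDS}
    for k in range(1, n // 2 + 1):  # skip k = 0 (the DC offset)
        freq = k * fs / n
        re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(signal))
        im = -sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(signal))
        for name, (low, high) in BANDS.items():
            if low <= freq < high:
                powers[name] += re * re + im * im
                break
    return powers


# Synthetic one-second "relaxed" trace (hypothetical data): a strong 10 Hz
# alpha rhythm plus a weaker 20 Hz beta component.
fs = 128  # sampling rate in Hz (assumed)
eeg = [math.sin(2 * math.pi * 10 * i / fs) + 0.3 * math.sin(2 * math.pi * 20 * i / fs)
       for i in range(fs)]
powers = band_powers(eeg, fs)
print(max(powers, key=powers.get))  # prints "alpha"
```

Because both test frequencies complete a whole number of cycles in the one-second window, their power falls into single DFT bins; with real recordings, windowing and averaging are needed to limit spectral leakage between bands.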

EEG methodology has revealed central effects of several nutritional interventions( Reference Camfield and Scholey 47 ). Acute administration of the green tea polyphenol epigallocatechin gallate reduced subjective stress and increased α, β and θ wave activity in healthy young volunteers( Reference Scholey, Downey and Ciorciari 48 ). Human EEG studies also provide evidence of relaxant properties of other tea components( Reference Kobayashi, Nagato and Aoi 49 , Reference Juneja, Chu and Okubo 50 ). Two hundred milligrams of the green tea amino acid l-theanine, but not 50 mg, increased α-wave activity in the occipital and parietal regions of the brain within 40 min of ingestion when administered to resting participants. A different study found a decrease in α-wave activity following 250 mg l-theanine when measured during performance of an attention task in which cues directed attention to either visual or auditory stimuli( Reference Gomez-Ramirez, Higgins and Rycroft 51 ). While these findings may appear contradictory, they may instead indicate that l-theanine has differing EEG effects during attentional processing (requiring focus) as opposed to at rest. In particular, the data can be explained by the fact that, as well as the classic relaxation-associated ‘tonic’ α, there is also a ‘phasic’ α wave. This is believed to reflect the inhibition of brain areas whose activity would render the current type of processing less efficient; a so-called distracter suppression mechanism. This interpretation was confirmed in a follow-up study in which theanine again facilitated the EEG component of attentional switching, this time when the cue signalled a stimulus to the left or right visual field rather than switching between visual and auditory modalities( Reference Gomez-Ramirez, Kelly and Montesi 52 ).

More recently, magnetoencephalography (MEG) has been developed. Rather than changes in electrical activity, MEG detects fluctuations in the magnetic fields generated by neuronal populations. Because these fields pass through bone relatively undistorted, MEG combines millisecond temporal resolution with considerably better spatial resolution than EEG. The brain's magnetic field is approximately 50 femtotesla (i.e. 10⁻⁹ that of the earth's magnetic field), making MEG methodologically challenging. The technique requires magnetically shielded housing and arrays of recording magnetometers (superconducting quantum interference devices) which are bathed in liquid helium.
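The ‘10⁻⁹’ comparison follows directly from the two field strengths, taking the earth's field as roughly 50 µT (it varies between about 25 and 65 µT across the planet's surface):

```latex
\frac{B_{\text{brain}}}{B_{\text{earth}}}
  \approx \frac{50 \times 10^{-15}~\mathrm{T}}{50 \times 10^{-6}~\mathrm{T}}
  = 10^{-9}
```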

Pharmaco-MEG is a developing discipline, and MEG studies of the central effects of nutritional interventions are lacking. One exception is an application of MEG to the study of the acute anti-stress effects of a drink containing 200 mg l-theanine( Reference White, de Klerk and Woods 53 ). Compared with placebo, theanine reduced multitasking-induced workload stress at 1 h post-drink only, whereas cortisol followed a different pattern, being reduced at 3 h only. Additionally, MEG revealed that resting-state α oscillatory activity was significantly greater in posterior sensors 2 h after active treatment. This effect was only apparent in those higher in trait anxiety, emphasising the need for control and/or measurement of initial situational and dispositional factors in this type of study. The change in resting-state α oscillatory activity was not correlated with the change in subjective anxiety, stress or cortisol response, suggesting that further research is required to assess the functional relevance of these treatment-related changes in resting α activity( Reference White, de Klerk and Woods 53 ).

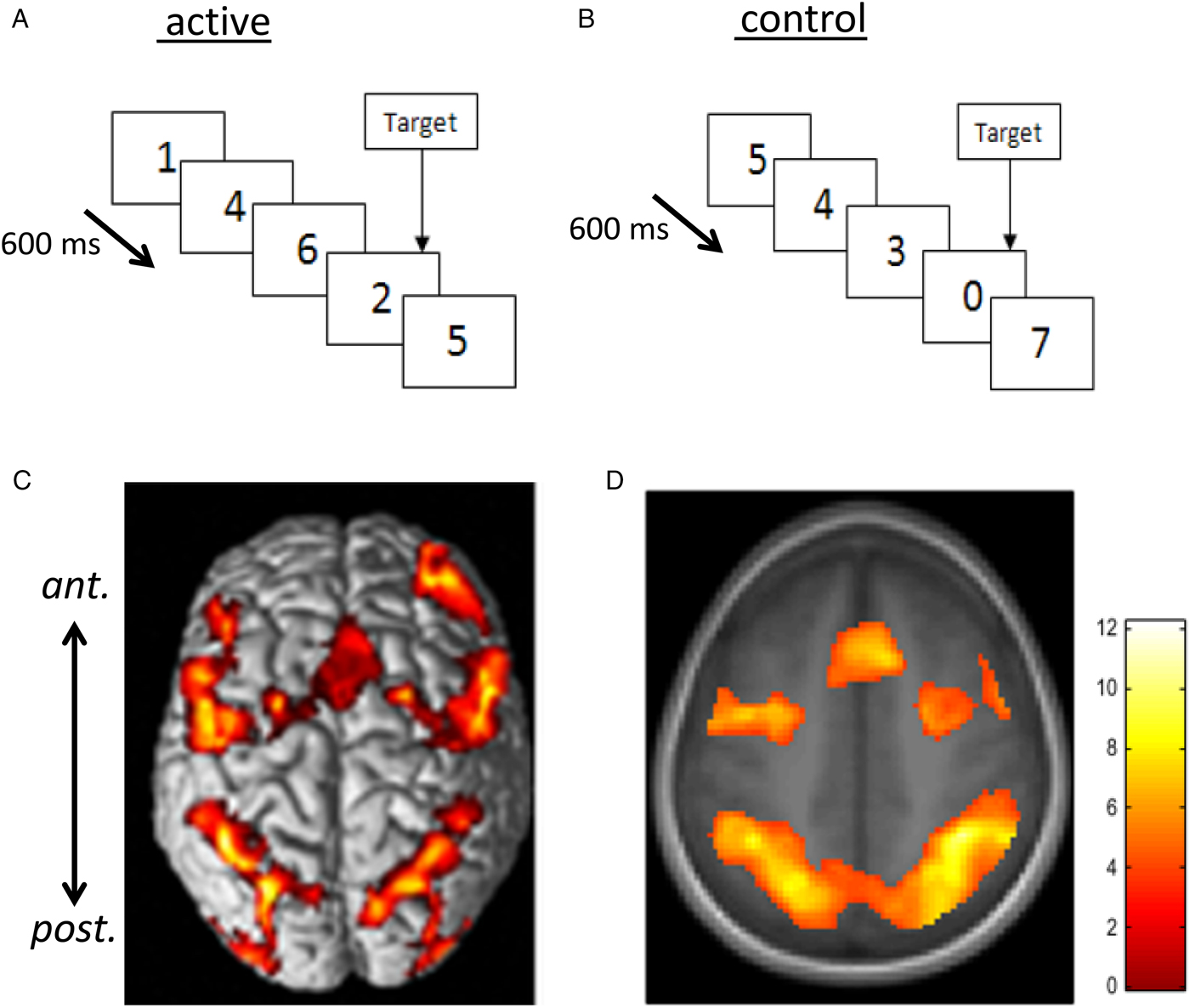

Many neuroimaging studies utilise functional MRI (fMRI) which reflects neuronal activation by measuring the amount and ratio between oxygenated and deoxygenated haemoglobin (the blood oxygen level dependent or BOLD signal) in target regions of interest. fMRI was developed over 25 years ago( Reference Belliveau, Kennedy and McKinstry 54 ) and has undergone significant advances in the past decades. While the spatial resolution of fMRI is relatively good, to the level of a cubic millimetre or so (representing perhaps tens of thousands of neurons), the method has poor temporal resolution compared with EEG and MEG. Activation is typically measured over a second or two and averaged over many trials. Many cognitive tasks involve perceptual and motor responses (e.g. attending to stimuli and pressing a button) which themselves produce region-specific central activation. Therefore, fMRI methodologies attempt to isolate the signal associated with cognitive processing only by subtracting the activation associated with a control task, which includes similar perceptual and motor elements but not the cognitive process (see Fig. 2).
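The subtraction logic can be sketched in a few lines. The example assumes, for illustration only, that each trial yields one signal value per volume element (voxel) and that active and control trials share the same voxel order; the function name `subtraction_contrast` and all signal values are hypothetical. Real analyses fit a general linear model that includes a model of the haemodynamic response, rather than subtracting raw means.

```python
from statistics import mean


def subtraction_contrast(active_trials, control_trials):
    """Per-voxel difference between mean active-task and mean control-task signal.

    Each trial is a list of signal values, one per voxel, in the same voxel
    order for every trial. A simplified stand-in for GLM contrasts.
    """
    n_voxels = len(active_trials[0])
    return [
        mean(trial[v] for trial in active_trials) - mean(trial[v] for trial in control_trials)
        for v in range(n_voxels)
    ]


# Hypothetical signals for three voxels: a visual and a motor voxel respond
# equally in both tasks, whereas a "working memory" voxel responds more
# strongly only in the active task.
active = [[102.0, 101.0, 103.0], [104.0, 99.0, 105.0]]
control = [[103.0, 100.0, 100.0], [103.0, 100.0, 100.0]]
print(subtraction_contrast(active, control))  # prints [0.0, 0.0, 4.0]
```

Activity shared by both tasks (perception and button presses) cancels to zero, leaving only the voxel whose response depends on the cognitive process of interest.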

Fig. 2. Rapid visual information processing (RVIP) as an example of a functional MRI task used to capture the effects of nutritional interventions. Panel A shows the active task, which requires the subject to respond to three consecutive odd or three consecutive even digits when single digits are presented every 600 ms. In the control task (B), matched for perceptual and motor activity, subjects see a similar stimulus stream but respond to the presentation of the ‘0’ digit only (i.e. there is minimal cognitive load). When activation measured during B is subtracted from that during A, there is consistent activation in a well-characterised working memory circuit consisting of more anterior (ant.) frontal and supplementary motor areas and more posterior (post.) parietal and cerebellar regions linked with attention and working memory. C and D illustrate this pattern of activation from( Reference White, Cox and Hughes 77 , Reference Neale, Johnston and Hughes 90 ), with warmer colours depicting greater activation. Increased activation within this circuit has been found following multivitamin mineral supplementation( Reference White, Cox and Hughes 77 ); see Fig. 3.
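The response rules of the two RVIP conditions in Fig. 2 can be written down explicitly. This sketch flags the stimulus positions at which a response is required in each task; the function names, the example streams and the decision to count overlapping runs are assumptions, not a published task specification.

```python
def rvip_targets(digits):
    """Indices at which an active-task RVIP target is completed.

    A target is the third of three consecutive odd, or three consecutive even,
    digits. Scoring every overlapping run (so 3, 5, 7, 9 contains two targets)
    is an assumption of this sketch; task implementations differ.
    """
    return [
        i for i in range(2, len(digits))
        if digits[i] % 2 == digits[i - 1] % 2 == digits[i - 2] % 2
    ]


def control_targets(digits):
    """Indices at which the control task requires a response (the digit 0)."""
    return [i for i, d in enumerate(digits) if d == 0]


STIMULUS_INTERVAL_MS = 600  # one digit every 600 ms, as in Fig. 2

stream = [2, 7, 3, 5, 8, 4, 6, 1]  # hypothetical active-task stream
print([i * STIMULUS_INTERVAL_MS for i in rvip_targets(stream)])  # prints [1800, 3600]
print(control_targets([4, 0, 9, 0]))  # prints [1, 3]
```

The active rule requires holding the parity of the two preceding digits in mind, whereas the control rule requires only recognising a single digit; this is why subtracting control-task activation isolates the working memory component.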

The methodology is becoming increasingly sophisticated, and several well-controlled fMRI studies have shown changes in neural activity associated with nutritional interventions. These include multivitamins, caffeine, n-3 fatty acids, glucose and flavanols (including from tea and cocoa). Each is dealt with in detail in( Reference Kobayashi, Nagato and Aoi 49 ), and several are covered in the following sections.

Cocoa flavanols (CF) have a number of potential health benefits, including in the neurocognitive domain( Reference Scholey and Owen 55 ), where the most effective dose appears to be approximately 400–500 mg CF. Acute administration enhances mental function, particularly during mentally effortful processing( Reference Massee, Ried and Pase 56 , Reference Scholey, French and Morris 57 ). The differential effects according to mental effort suggest that increased cognitive load is itself associated with greater central activation, in the form of recruitment of more neural tissue. Through neurovascular coupling, this should be accompanied by greater localised blood flow and oxygen utilisation, as reflected by a higher BOLD signal.

In addition to localising patterns of activation associated with specific cognitive tasks, MRI can be used to measure other aspects of brain function. Arterial spin labelling allows quantification of cerebral blood flow, or perfusion. Two studies have reported increased cerebral blood flow 2 h following CF administration: the first examined whole-brain cerebral blood flow( Reference Francis, Head and Morris 58 ), while a more recent study resolved specific regions with greater perfusion( Reference Lamport, Pal and Moutsiana 59 ). The latter reported increased perfusion in areas including the anterior cingulate cortex and left parietal lobe. The anterior cingulate cortex is associated with numerous cognitive processes, including goal-directed behaviour and attention, while the parietal lobe is activated during working memory tasks.

CF consistently improve aspects of vascular functioning, including those which are directly related to cognition-relevant neural processes. The dentate gyrus subfield of the hippocampus atrophies differentially during ageing. It is particularly important for specific aspects of memory, including pattern separation: the ability to correctly discriminate similar, overlapping sets of stimuli as distinct memories. Twelve-week CF supplementation resulted in increased dentate gyrus cerebral blood volume, which correlated with scores on a pattern recognition test( Reference Brickman, Khan and Provenzano 60 ). The dentate gyrus is one of the few areas of the adult brain capable of neurogenesis, and in rodents cerebral blood volume is a marker of neurogenesis( Reference Pereira, Huddleston and Brickman 39 ). This finding could have significant implications: if CF (or other flavonoid) supplementation can promote neurogenesis, there are obvious applications in delaying cognitive decline. However, it should be noted that this was a small trial (n 37, parallel groups), that half the cohort also underwent an exercise regimen and that the dropout rate was relatively high. A more recent trial has confirmed that both 520 and 993 mg CF can improve cognitive functions, including working memory, in older individuals, as well as some of the processes depicted in Fig. 1, including indices of glucose utilisation( Reference Mastroiacovo, Kwik-Uribe and Grassi 61 ).

As well as detecting regional activation during task performance, fMRI can be used to examine structural and functional connections between brain regions (the latter is the focus of the Human Connectome Project). The human brain is disproportionately metabolically active, typically accounting for about 2 % of body weight but consuming some 20 % of metabolic resources in the form of oxygen and glucose. Unlike other tissues, the brain stores negligible amounts of glucose. It follows that neurocognitive function is exquisitely sensitive to fluctuations in glucose availability, with poor glucose control observed in ageing and dementia (to the extent that AD has been termed type 3 diabetes( Reference Kandimalla, Thirumala and Reddy 62 )). Cognitive function can be reliably improved simply by administering a glucose drink. Clearly, there are health issues associated with the administration of sugar; nevertheless, this manipulation has provided a useful prototype for examining acute cognitive enhancement( Reference Scholey, Macpherson and Sunram-Lea 63 – Reference Scholey, Sunram-Lea and Greer 65 ). In an fMRI study using an emotional episodic memory task, glucose both improved task performance and increased signal intensity in structures known to encode episodic memories( Reference Parent, Krebs-Kraft and Ryan 66 ). Additionally, increased connectivity was observed between the same brain regions that showed enhanced activation during task performance.
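The brain's disproportionate demand can be quantified from the two rounded percentages for body weight and metabolic resources. Writing m = 0·02 for the brain's share of body mass and e = 0·20 for its share of metabolic demand, its demand per unit mass relative to the whole-body average, and relative to the rest of the body, is:

```latex
\frac{e}{m} = \frac{0.20}{0.02} = 10,
\qquad
\frac{e/m}{(1-e)/(1-m)} = \frac{0.20/0.02}{0.80/0.98} = 10 \times \frac{0.98}{0.80} = 12.25
```

Gram for gram, brain tissue therefore uses roughly ten times the body average and about twelve times the rest of the body.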

Magnetic resonance can also be used to measure the global cerebral rate of oxygen consumption. This has not yet been applied to dietary interventions, save for a single publication reporting reduced cerebral rate of oxygen consumption following glucose loading( Reference Xu, Liu and Pascual 67 ). The sensitivity to glucose suggests that the method may have applications in nutrition intervention studies.

More recently, magnetic resonance spectroscopy (MRS) has been successfully applied to the detection and quantification of various brain molecules. Proton MRS is the most commonly used methodology in human subjects (although other methods, including ³¹P, ¹³C and ¹⁹F MRS, have also been used). Proton MRS uses the signals emitted by hydrogen nuclei (protons) to measure central metabolite concentrations( Reference Novotny, Fulbright and Pearl 68 ). Many central molecules are invisible to MRS, with the most readily detectable resonance peaks being from N-acetyl aspartate, choline, creatine (Cr), glutamate/glutamine and myo-inositol( Reference Rae 69 ). N-acetyl aspartate is a putative marker of neuronal density or viability and may index neurogenesis. Choline is enriched in membranes and is a marker of membrane turnover. Cr plays a key role in energy metabolism, while glutamate/glutamine represents the major excitatory neurotransmitter family and also correlates with metabolic activity. Myo-inositol is a precursor of second messengers involved in cell signalling and is also a marker of glial density. A small number of studies have examined age-related changes in these molecules, with somewhat inconsistent findings( Reference Maudsley, Domenig and Govind 70 , Reference Chiu, Mak and Yau 71 ). Generally, levels of N-acetyl aspartate and glutamate/glutamine decline (possibly reflecting reduced neuronal number), while Cr and choline increase with age( Reference Maudsley, Domenig and Govind 70 , Reference Marjańska, McCarten and Hodges 72 ). Two small studies have used MRS to examine nutritional interventions. In the first, 6 weeks of Cr supplementation improved cognitive performance and increased central Cr levels( Reference Rae, Digney and McEwan 73 ). In the second, central glucose was measured using MRS( Reference Haley, Knight-Scott and Simnad 74 ): oral glucose ingestion resulted in a measurable increase in the glucose MRS peak in eight AD patients compared with fourteen healthy young and fourteen age-matched controls. This suggests that ingested glucose accumulates rather than being metabolised in the AD brain, reinforcing the notion that an impaired ability to utilise central glucose contributes to the pathology of AD.

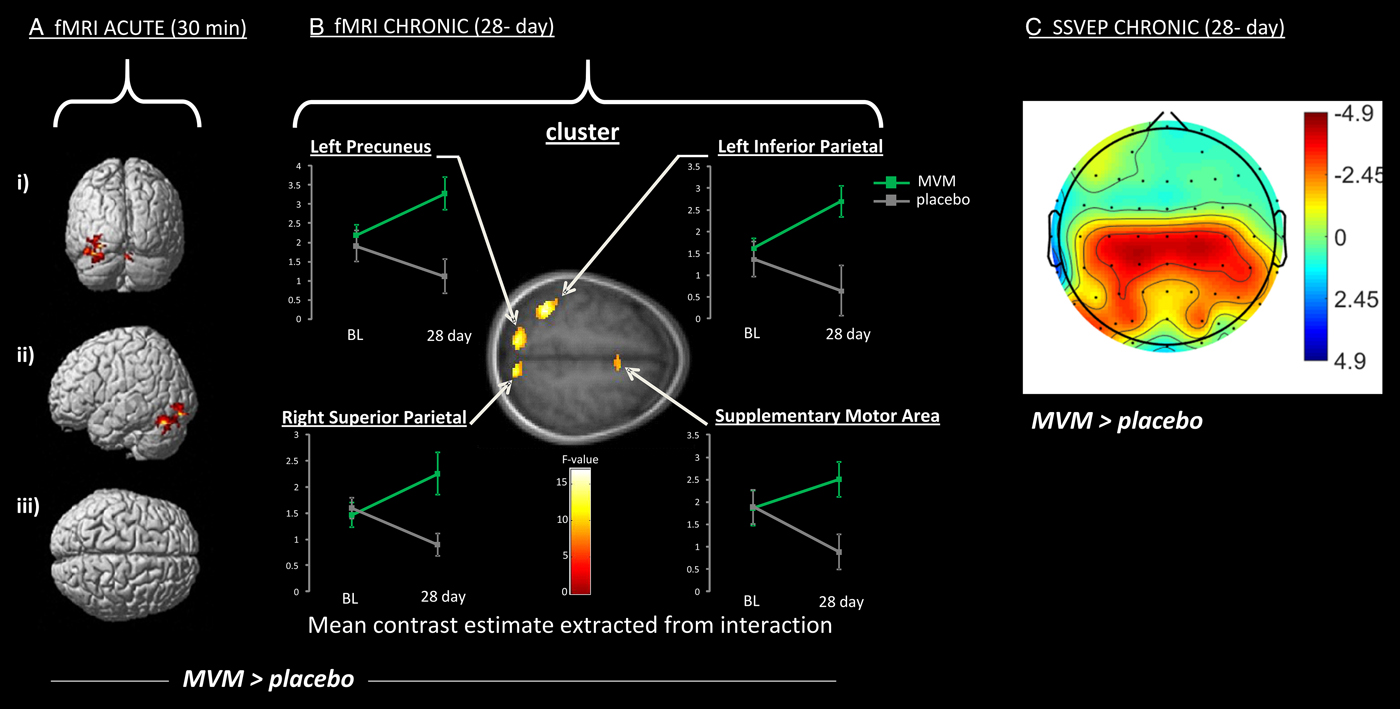

Several studies have examined the effects of vitamin and mineral supplementation on brain structure and function. These have focused on either single vitamins or broad-spectrum multivitamin mineral (MVM) preparations. Changes in patterns of activation have been associated with MVM supplementation both acutely( Reference Scholey, Bauer and Neale 75 , Reference White, Camfield and Maggini 76 ) and following 4 weeks of supplementation( Reference White, Cox and Hughes 77 ). One three-arm crossover study compared placebo with MVM both with and without added guaraná. Focusing here on the MVM-only arm, pilot fMRI data (n 6) indicated increased activation in a neural circuit associated with executive attentional functioning in healthy young adults in the hour following a single dose of MVM( Reference Scholey, Bauer and Neale 75 ). In the same study, a specialised EEG methodology, steady-state visually evoked potential, was applied to a larger cohort (n 20) and revealed increased excitation in frontal regions coupled with inhibition in posterior regions during an attentional task( Reference White, Camfield and Maggini 76 ); see Fig. 3.

Fig. 3. Increased activation of a working memory network following administration of a broad-spectrum B vitamin and mineral (MVM) preparation compared with placebo (MVM > placebo), using two neuroimaging modalities (warmer colours indicate a greater difference from placebo). A and B show increased activation measured using functional MRI (fMRI) during the Rapid visual information processing task (as depicted in Fig. 2). Significantly increased activation is shown 30 min (A) and 28 d (B) following supplementation. Specifically, A depicts activation of cerebellar regions in posterior (i), lateral (ii) and superior (iii) views, while B depicts clusters of increased activation following 28 d MVM supplementation, including parietal structures (precuneus and superior and inferior parietal lobes) and frontal regions (supplementary motor area). Graphs present levels of activation at baseline (BL) and following 28 d for MVM and placebo. C shows a network of activation similar to that in B during a spatial working memory task, measured using an electrophysiological technique, steady-state visual evoked potential (SSVEP). Effects follow the same 28-d intervention and illustrate the more diffuse visualisation obtained with this technique. Data are adapted from( Reference Scholey, Bauer and Neale 75 , Reference White, Cox and Hughes 77 ).

Acute neurocognitive effects of MVM administration, while not frequently reported, are not without precedent, and in this context the absence of evidence should not be taken as evidence of absence. For example, acute mood and cognitive effects have been reported in children( Reference Haskell, Scholey and Jackson 78 ) and older adults( Reference Macpherson, Rowsell and Cox 79 ), while in healthy young adults MVM administration was associated with acute increases in central blood flow, energy expenditure and fat oxidation( Reference Kennedy, Stevenson and Jackson 80 ). Clearly, further work is needed to better characterise the acute effects of MVM and the underlying mechanisms of action.

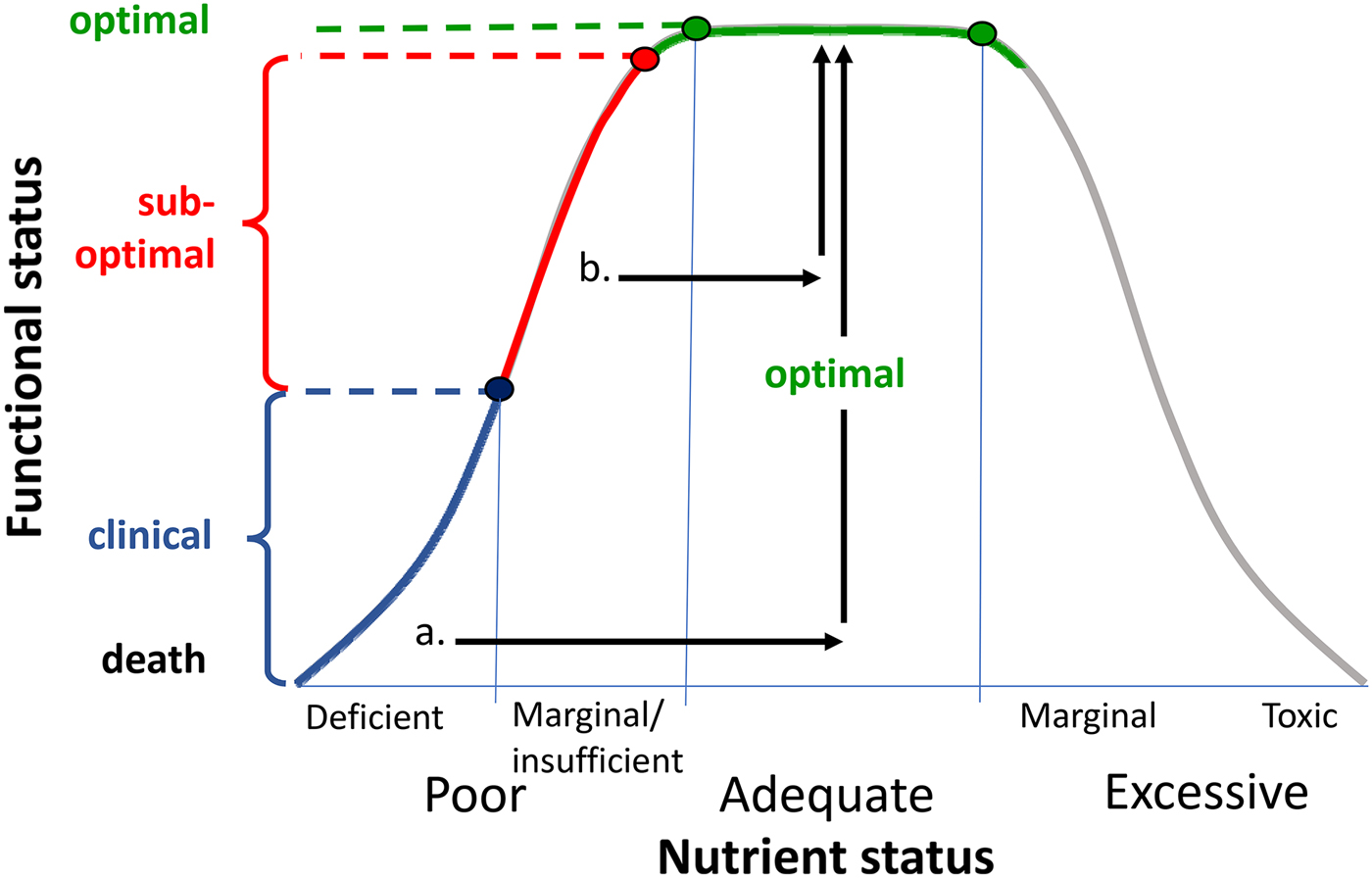

The fact that these effects were evident in healthy young adults raises the question of what role initial nutrient status may play in the observed effects. In the 4-week MVM trial, the neurocognitive benefits were accompanied by positive shifts in both mood and blood B vitamin levels( Reference White, Cox and Peters 81 ). This suggests that it may be possible to optimise nutrient status and improve function in individuals who have sub-optimal levels, or ‘insufficiency’, rather than frank nutrient deficiency (see Fig. 4). This challenges the dogma that benefits of MVM supplementation will be observed only in individuals with a clinical nutrient deficiency. Indeed, a recent report on >10 000 adults in the US National Health and Nutrition Examination Survey (NHANES) indicates that any MVM supplementation lowers the odds of deficiency in four of the five nutrients with recognised markers of deficiency, and reduces insufficiency in fifteen of the seventeen nutrients examined( Reference Blumberg, Frei and Fulgoni 82 ).

Fig. 4. Representation of the relationship between nutrient status and functional status (adapted from( Reference Morris and Tangney 91 )). Frank nutrient deficiency (blue) manifests as clinical symptoms (y-axis), which may be treated by optimising nutrient status (arrow a). There is growing evidence that suboptimal nutrient status (also termed marginal nutrient deficiency or nutrient insufficiency( Reference Popper 85 )) may be associated with subclinical functional deficits. These may be reversed by nutrient supplementation (arrow b).

Indeed, there is growing evidence for neurocognitive benefits of MVM supplementation in non-clinical populations. For example, in a 16-week trial of MVM supplementation in healthy young adults, improved attentional performance in males was significantly correlated with increased levels of vitamin B6( Reference Pipingas, Camfield and Stough 83 ). This suggests a functional role for changes in B6, despite the fact that the group was not deficient in B6 (see( Reference Kennedy 84 ) for an overview of the role of B vitamins in this context). This raises the question of what proportion of the population have suboptimal nutrient levels that may have functional behavioural consequences( Reference Popper 85 ). While frank clinical deficiencies may be found in up to 10–20 % of the population in Westernised countries (depending on the nutrient in question), the figure is much higher for marginal deficiencies, with estimates of vitamin B12 insufficiency as high as 40–50 % or more of the US and UK adult populations( Reference Tucker, Rich and Rosenberg 86 , Reference Moat, Ashfield-Watt and Powers 87 ).

More recently, B vitamin supplementation has been applied to clinical cognitive decline in individuals with MCI( Reference de Jager 88 ). In the VITACOG trial, 156 adults aged over 70 years with MCI underwent structural MRI at baseline and at 24 months following either placebo or a B vitamin preparation. Those in the vitamin group showed less grey matter atrophy (0·5 % v. 3·7 % in the placebo group); this effect was more marked in those with higher baseline homocysteine (0·6 % v. 5·2 %). It should be noted that high and low homocysteine groups were derived by median split and no participant met the criterion for clinically elevated homocysteine (150 pmol/l). Again, this supports the potential for improved neurocognitive outcomes following supplementation in non-deficient individuals, with effects more pronounced in those with suboptimal nutrient status (Fig. 4).
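To make the size of the VITACOG effect easier to compare across subgroups, the atrophy figures quoted above can be expressed as a relative reduction. This is purely illustrative arithmetic on those reported percentages, not an analysis reported by the trial authors; A_placebo and A_vitamin are labels introduced here for the percentage grey matter atrophy over 24 months in each group.

$$
\begin{aligned}
\text{Relative reduction} &= \frac{A_{\mathrm{placebo}} - A_{\mathrm{vitamin}}}{A_{\mathrm{placebo}}} \\[4pt]
\text{All participants:}\quad \frac{3.7 - 0.5}{3.7} &\approx 0.86 \\[4pt]
\text{Higher baseline homocysteine:}\quad \frac{5.2 - 0.6}{5.2} &\approx 0.88
\end{aligned}
$$

On these figures, atrophy in the vitamin group was roughly one-seventh (all participants) to one-ninth (higher homocysteine) of that in the placebo group. This is consistent with the larger benefit in those with less favourable baseline status.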

Nutrition and neurocognitive scaffolding

What can these methodologies tell us about the mechanisms that might underlie cognitive enhancement from nutritional interventions? One clue might come from the Scaffolding theory of aging and cognition (STAC). In its original form, the STAC model proposed that ageing is characterised by a balance between negative and positive neural plasticity( Reference Park and Reuter-Lorenz 34 ), and that cognitive decline reflects a preponderance of the former over the latter. Negative plasticity includes neural challenges and functional deterioration. Neural challenges include the previously described structural deterioration of grey and white matter that occurs with ageing. Functional deterioration describes dysregulation of neural activity, including reduced task-related recruitment of the hippocampus and related structures during memory encoding, and maladaptive patterns of activity in the default mode network. The STAC model argues that, at the same time, positive plasticity occurs in the form of compensatory scaffolding, which acts to counter these negative structural and functional changes. In brain imaging studies, this compensatory scaffolding manifests as greater activation (i.e. more recruitment) of frontal and parietal regions, and increased bilateral recruitment in tasks that may be lateralised in younger individuals. Later revisions of the model speculate that neurogenesis may also form part of scaffolding mechanisms( Reference Reuter-Lorenz and Park 89 ). It is notable that the neuroimaging examples described earlier include the possibility of frontal recruitment being increased by multivitamin/nutrient supplementation and of neurogenesis being facilitated by flavanol and n-3 supplementation.
Importantly, the STAC model suggests that it is possible to promote neural scaffolding, including via ‘various lifestyle activities including exercise, intellectual engagement and new learning, as well as more formal cognitive training interventions’( Reference Reuter-Lorenz and Park 89 ). The select neuroimaging examples described earlier suggest that nutritional interventions may also be added to this list.

In conclusion, converging evidence from epidemiology, mechanistic studies and clinical trials suggests that nutritional interventions may offer a realistic option for offsetting neurocognitive decline. Such interventions include whole dietary change, select food components, well-characterised botanical extracts and nutrient supplementation. Since cognitive processes are influenced by multiple biological systems, positive effects of nutrition are likely to be pleiotropic. Recent studies employing a range of biomarkers and neuroimaging methodologies have begun to shed light on the changes in neural substrates, including neurocognitive scaffolding, that underlie the benefits of nutrient interventions. In turn, such studies are helping to identify biologically plausible targets for nutrients. Thus, there is presently a realistic opportunity for a ‘virtuous cycle’, in which evidence-led interventions and mechanistic studies reciprocally inform each other to further develop nutritional interventions for neurocognition. It should be noted that many potentially beneficial, centrally active nutrients have a history of safe consumption. Compared with traditional pharmaceutical drug development pipelines, where many candidate treatments fail at early stages, this may allow more rapid translation of research findings into benefits for neurocognitive health.

Financial Support

None.

Conflicts of Interest

The author has received research funding, consultancy, honoraria and travel costs from various nutrition and supplement industry bodies.

Authorship

The author had sole responsibility for all aspects of preparation of this paper.