1. Introduction

Cognitively adequate models of language have been a subject of central interest in areas as diverse as philosophy, (computational) linguistics, artificial intelligence, cognitive science, neurology, and intermediate disciplines. Much effort in natural language processing (NLP) has been devoted to obtain representations of linguistic units,Footnote a such as words, that can capture language syntax, semantics,Footnote b and other linguistic aspects for computational processing. One of the primary and successful models for the representation of word semantics are vector space models (VSMs) introduced by Salton, Wong, and Yang (Reference Salton, Wong and Yang1975) and its variations, such as word space models (Schütze Reference Schütze1993), hyperspace analogue to language (Lund and Burgess Reference Lund and Burgess1996), latent semantic analysis (Deerwester et al. Reference Deerwester, Dumais, Furnas, Landauer and Harshman1990), and more recently neural word embeddings, such as word2vec (Mikolov et al. Reference Mikolov, Chen, Corrado and Dean2013a) and neural language models, such as BERT (Devlin et al. Reference Devlin, Chang, Lee and Toutanova2019). In VSMs, a vector representation in a continuous vector space of some fixed dimension is created for each word in the text. VSMs have been empirically justified by results from cognitive science (Gärdenfors Reference Gärdenfors2000).

One influential approach to produce word vector representations in VSMs are distributional representations, which are generally based on the distributional hypothesis first introduced by Harris (Reference Harris1954). The distributional hypothesis presumes that “difference of meaning correlates with difference of distribution” (Harris Reference Harris1954, p. 156). Based on this hypothesis, “words that occur in the same contexts tend to have similar meanings” (Pantel Reference Pantel2005, p. 126), and the meaning of words is defined by contexts in which they (co-)occur. Depending on the specific model employed, these contexts can be either local (the co-occurring words) or global (a sentence or a paragraph or the whole document). In VSMs, models that are obtained based on the distributional hypothesis are called distributional semantic models (DSMs). Word meaning is then modeled as an n-dimensional vector, derived from word co-occurrence counts in a given context. In these models, words with similar distributions tend to have closer representations in the vector space. These approaches to semantics share the usage-based perspective on meaning; that is, representations focus on the meaning of words that comes from their usage in a context. In this way, semantic relationships between words can also be understood using the distributional representations and by measuring the distance between vectors in the vector space (Mitchell and Lapata Reference Mitchell and Lapata2010). Vectors that are close together in this space have similar meanings and vectors that are far away are distant in meaning (Turney and Pantel Reference Turney and Pantel2010). In addition to mere co-occurrence information, some DSMs also take into account the syntactic relationship of word pairs, such as subject–verb relationship, for constructing their vector representations (Padó and Lapata Reference Padó and Lapata2007; Baroni and Lenci Reference Baroni and Lenci2010). Therefore, dependency relations contribute to the construction of the semantic space and capture more linguistic knowledge. These dependency relations are asymmetric and hence reflect the word position and order information in the word vector construction. In these models, text preprocessing is required for building the model, as lexico-syntactic relations have to be extracted first.

Many recent approaches utilize machine learning techniques with the distributional hypothesis to obtain continuous vector representations that reflect the meanings in natural language. One example is word2vec, proposed by Mikolov et al. (Reference Mikolov, Chen, Corrado and Dean2013a, b), which is supposed to capture both syntactic and semantic aspects of words. In general, VSMs have proven to perform well in a number of tasks requiring computation of semantic closeness between words, such as synonymy identification (Landauer and Dumais Reference Landauer and Dumais1997), automatic thesaurus construction (Grefenstette Reference Grefenstette1994), semantic priming and word sense disambiguation (Padó and Lapata Reference Padó and Lapata2007), and many more.

Early VSMs represented each word separately, without considering representations of larger units like phrases or sentences. Consequently, the compositionality properties of language were not considered in VSMs (Mitchell and Lapata Reference Mitchell and Lapata2010). According to Frege’s principle of compositionality (Frege Reference Frege1884), “The meaning of an expression is a function of the meanings of its parts and of the way they are syntactically combined” (Partee Reference Partee2004, p.153). Therefore, the meaning of a complex expression in a natural language is determined by its syntactic structure and the meanings of its constituents (Halvorsen and Ladusaw Reference Halvorsen and Ladusaw1979). On sentence level, the meaning of a sentence such as White mushrooms grow quickly is a function of the meaning of the noun phrase White mushrooms combined as a subject with the meaning of the verb phrase grow quickly. Each phrase is also derived from the combination of its constituents. This way, semantic compositionality allows us to construct long grammatical sentences with complex meanings (Baroni, Bernardi, and Zamparelli Reference Baroni, Bernardi and Zamparelli2014). Approaches have been developed that obtain meaning above the word level and introduce compositionality for DSMs in NLP. These approaches are called compositional distributional semantic models (CDSMs). CDSMs propose word representations and vector space operations (such as vector addition) as the composition operation. Mitchell and Lapata (Reference Mitchell and Lapata2010) propose a framework for vector-based semantic composition in DSMs. They define additive or multiplicative functions for the composition of two vectors and show that compositional approaches generally outperform non-compositional approaches which treat a phrase as the union of single lexical items. Word2vec models also exhibit good compositionality properties using standard vector operations (Mikolov et al. Reference Mikolov, Chen, Corrado and Dean2013a, b). However, these models cannot deal with lexical ambiguity and representations are non-contextualized. Very recently, contextualized (or context-aware) word representation models, such as transformer-based models like BERT (Devlin et al. Reference Devlin, Chang, Lee and Toutanova2019), have been introduced. These models learn to construct distinct representations for different meanings of the words based on their occurrence in different contexts. Moreover, they consider the word order of input text for training the final representations by adding the positional information of words to their representations. These models compute word representations using large neural-based architectures. Moreover, training such models needs rich computational resources. Due to their expensive computational requirements, compressed versions of BERT have been introduced, such as DistilBERT (Sanh et al. Reference Sanh, Debut, Chaumond and Wolf2019). They have shown state-of-the-art performance in downstream NLP tasks, and we refer the reader interested in contextualized word representations to the work by Devlin et al. (Reference Devlin, Chang, Lee and Toutanova2019). Our focus in this article is on light-weight computations of word representations in a given context and the dynamic composition of word representations using algebraic operations.

Despite its simplicity and light-weight computations, one of the downsides of using vector addition (or other commutative operations like the component-wise product) as the compositionality operation is that word order information is inevitably lost. To overcome this limitation while maintaining light-weight computations for compositional representations, this article describes an alternative, word-order-sensitive approach for compositional word representations, called compositional matrix-space models (CMSMs). In such models, word matrices instead of vectors are used as word representations and compositionality is realized via iterated matrix multiplication.

Contributions. The contribution of this work can be grouped into two categories:

-

(1). On the formal, theoretical side, we propose CMSMs as word-level representation models and provide advantageous properties of these models for NLP, showing that they are able to simulate most of the known vector-based compositionality operations and that several CMSMs can be combined into one in a straightforward way. We also investigate expressiveness and computational properties of the languages accepted of a CMSM-based grammar model, called matrix grammars. This contribution is an extended and revised account of results by Rudolph and Giesbrecht (Reference Rudolph and Giesbrecht2010).

-

(2). On the practical side, we provide an exemplary experimental investigation of the practical applicability of CMSMs in English by considering two NLP applications: compositional sentiment analysis and compositionality prediction of short phrases. We chose these two tasks for practical investigations since compositionality properties of the language play an important role in such tasks. For this purpose, we develop two different machine learning techniques for the mentioned tasks and evaluate the performance of the learned model against other distributional compositional models from the literature. By means of these investigations we show that

-

there are scalable methods for learning CMSMs from linguistic corpora and

-

in terms of model quality, the learned models are competitive with other state-of-the-art approaches while requiring significantly fewer parameters.

-

This contribution addresses the question “how to acquire CMSMs automatically in large-scale and for specific purposes” raised by Rudolph and Giesbrecht (Reference Rudolph and Giesbrecht2010). Preliminary results of this contribution concerning the sentiment analysis task have been published by Asaadi and Rudolph (Reference Asaadi and Rudolph2017). In this article, we extend them with hitherto unpublished investigations on compositionality prediction.

Structure. The structure of the article is as follows. We first review compositional distributional models in literature and provide the related work for the task of compositional sentiment analysis and semantic compositionality prediction in Section 2. Then, to introduce CMSMs, we start by providing the necessary basic notions in linear algebra in Section 3. In Section 4, we give a formal account of the concept of compositionality, introduce CMSMs, and argue for the plausibility of CMSMs in the light of structural and functional considerations. Section 5 demonstrates beneficial theoretical properties of CMSMs: we show how common VSM approaches to compositionality can be captured by CMSMs, while they are likewise able to cover symbolic approaches; moreover, we demonstrate how several CMSMs can be combined into one model.

In view of these advantageous properties, CMSMs seem to be a suitable candidate in a diverse range of different tasks of NLP. In Section 6, we focus on ways to elicit information from matrices to leverage CMSMs for NLP tasks like scoring or classification. These established beneficial properties motivate a practical investigation of CMSMs in NLP applications. Therefore, methods for training such models need to be developed, for example, by leveraging appropriate machine learning techniques.

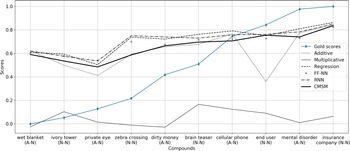

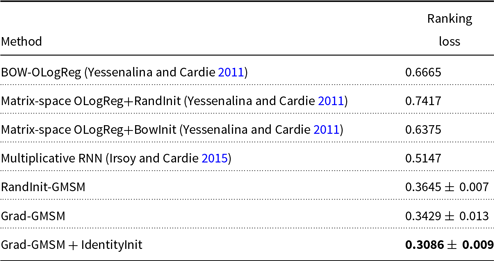

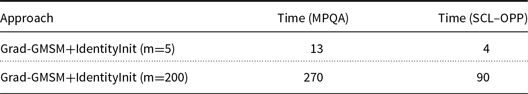

Hence, we address the problem of learning CMSMs in Section 7. Thereby, we focus on a gradient descent method but apply diverse optimizations to increase the method’s efficiency and performance. We propose to apply a two-step learning strategy where the output of the first step serves as the initialization for the second step. The results of the performance evaluation of our learning methods on two tasks are studied in Section 8.2.2. In the first part of the experiments, we investigate our learning method for CMSMs on the task of compositionality prediction of multi-word expressions (MWE). Compositionality prediction is important in downstream NLP tasks such as statistical machine translation (Enache, Listenmaa, and Kolachina Reference Enache, Listenmaa and Kolachina2014; Weller et al. Reference Weller, Cap, Müller, Schulte im Walde and Fraser2014), word-sense disambiguation (McCarthy, Keller, and Carroll Reference McCarthy, Keller and Carroll2003), and text summarization (ShafieiBavani et al. Reference ShafieiBavani, Ebrahimi, Wong and Chen2018) where a method is required to detect whether the words in a phrase are used in a compositional meaning. Therefore, we choose to evaluate the proposed method for CMSMs on the ability to detect the compositionality of phrases. In the second part of the experiments, we evaluate our method on the task of fine-grained sentiment analysis. We choose this task since it allows a direct comparison against two closely related techniques proposed by Yessenalina and Cardie (Reference Yessenalina and Cardie2011) and Irsoy and Cardie (Reference Irsoy and Cardie2015), which also trains a CMSM. We finally conclude by discussing the strengths and limitations of CMSMs in Section 9.

As stated earlier, this article is a consolidated, significantly revised, and considerably extended exposition of work presented in earlier conference and workshop papers (Rudolph and Giesbrecht Reference Rudolph and Giesbrecht2010; Asaadi and Rudolph Reference Asaadi and Rudolph2017).

2. Related work

We were not the first to suggest an extension of classical VSMs to higher-order tensors. Early attempts to apply matrices instead of vectors to text data came from research in information retrieval (Gao, Wang, and Wang Reference Gao, Wang and Wang2004; Liu et al. Reference Liu, Zhang, Yan, Chen, Liu, Bai and Chien2005; Antonellis and Gallopoulos Reference Antonellis and Gallopoulos2006; Cai, He, and Han Reference Cai, He and Han2006). Most proposed models in information retrieval still use a vector-based representation as the basis and then mathematically convert vectors into tensors, without linguistic justification of such a transformation, or they use metadata or ontologies to initialize the models (Sun, Tao, and Faloutsos Reference Sun, Tao and Faloutsos2006; Chew et al. Reference Chew, Bader, Kolda and Abdelali2007; Franz et al. Reference Franz, Schultz, Sizov and Staab2009; Van de Cruys Reference Van de Cruys2010). However, to the best of our knowledge, we were the first to propose an approach of realizing compositionality via consecutive matrix multiplication. In this section, a comprehensive review of related work on existing approaches to modeling words as matrices, distributional semantic compositionality, compositional methods for sentiment analysis, and compositionality prediction of MWEs is provided.

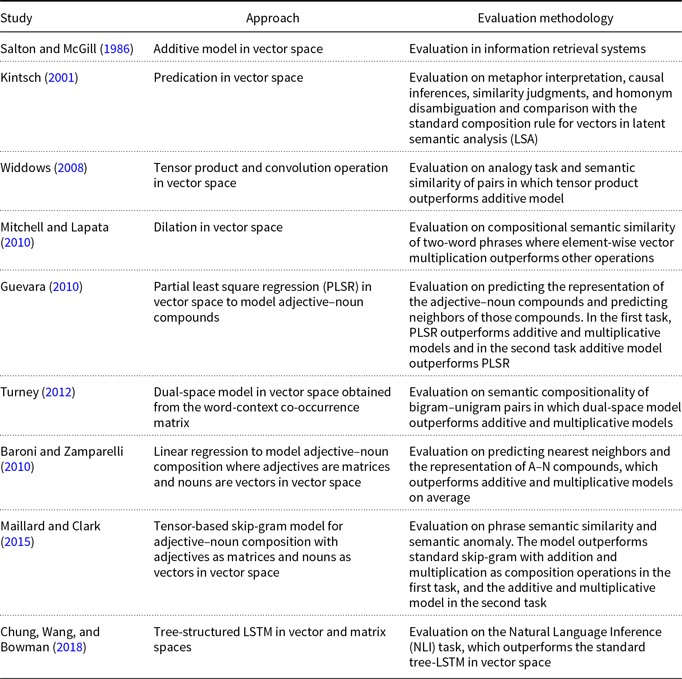

Compositional Distributional Semantic Models. In compositional distributional semantics, different approaches for learning word representations and diverse ways of realizing semantic compositionality are studied. In the following, we discuss the related vector space approaches, which are summarized in Table 1. However, be reminded that our compositional approach will be formulated in matrix space as opposed to vector space.

Table 1. Summary of the literature review in semantic compositionality

Salton and McGill (Reference Salton and McGill1986) introduce vector addition in VSMs as a composition method, which is the most common method. Given two words

![]() $w_i$

and

$w_i$

and

![]() $w_j$

and their associated d-dimensional semantic vector representations

$w_j$

and their associated d-dimensional semantic vector representations

![]() ${\textbf{u}} \in \mathbb{R}^d$

and

${\textbf{u}} \in \mathbb{R}^d$

and

![]() ${\textbf{v}} \in \mathbb{R}^d$

, respectively, vector addition is defined as follows:

${\textbf{v}} \in \mathbb{R}^d$

, respectively, vector addition is defined as follows:

where

![]() $\textbf{p} \in \mathbb{R}^d$

is the resulting compositional representation of the phrase

$\textbf{p} \in \mathbb{R}^d$

is the resulting compositional representation of the phrase

![]() $w_iw_j$

and f is called the composition function. Despite its simplicity, the additive method is not a suitable method of composition because vector addition is commutative. Therefore, it is not sensitive to word order in the sentence, which is a natural property of human language.

$w_iw_j$

and f is called the composition function. Despite its simplicity, the additive method is not a suitable method of composition because vector addition is commutative. Therefore, it is not sensitive to word order in the sentence, which is a natural property of human language.

Among the early attempts to provide more compelling compositional functions in VSMs is the work of Kintsch (Reference Kintsch2001) who is using a more sophisticated composition function to model predicate–argument structures. Kintsch (Reference Kintsch2001) argues that the neighboring words “strengthen features of the predicate that are appropriate for the argument of the predication” (p. 178). For instance, the predicate run depends on the noun as its argument and has a different meaning in, for example, “the horse runs” and “the ship runs before the wind.” Thus, different features are used for composition based on the neighboring words. Also, not all features of a predicate vector are combined with the features of the argument, but only those that are appropriate for the argument.

An alternative seminal work on compositional distributional semantics is by Widdows (Reference Widdows2008). Widdows proposes a number of more advanced vector operations well-known from quantum mechanics for semantic compositionality, such as tensor product and convolution operation to model composition in vector space. Given two vectors

![]() ${\textbf{u}} \in \mathbb{R}^{d}$

and

${\textbf{u}} \in \mathbb{R}^{d}$

and

![]() ${\textbf{v}} \in \mathbb{R}^{d}$

, the tensor product of two vectors is a matrix

${\textbf{v}} \in \mathbb{R}^{d}$

, the tensor product of two vectors is a matrix

![]() $Q \in \mathbb{R}^{d \times d}$

with

$Q \in \mathbb{R}^{d \times d}$

with

![]() $Q(i,j) = {\textbf{u}}(i){\textbf{v}}(j)$

. Since the number of dimensions increases by tensor product, the convolution operation was introduced to compress the tensor product operation to

$Q(i,j) = {\textbf{u}}(i){\textbf{v}}(j)$

. Since the number of dimensions increases by tensor product, the convolution operation was introduced to compress the tensor product operation to

![]() $\mathbb{R}^d$

space. Widdows shows the ability of the introduced compositional models to reflect the relational and phrasal meanings on a simplified analogy task and semantic similarity which outperform additive models.

$\mathbb{R}^d$

space. Widdows shows the ability of the introduced compositional models to reflect the relational and phrasal meanings on a simplified analogy task and semantic similarity which outperform additive models.

Mitchell and Lapata (Reference Mitchell and Lapata2010) formulate semantic composition as a function

![]() $m = f(w_1,w_2,R,K)$

where R is a relation between

$m = f(w_1,w_2,R,K)$

where R is a relation between

![]() $w_1$

and

$w_1$

and

![]() $w_2$

and K is additional knowledge. They evaluate the model with a number of addition and multiplication operations for vector combination and introduce dilation as another composition operation. The dilation method decomposes

$w_2$

and K is additional knowledge. They evaluate the model with a number of addition and multiplication operations for vector combination and introduce dilation as another composition operation. The dilation method decomposes

![]() ${\textbf{v}}$

into a parallel and an orthogonal component to

${\textbf{v}}$

into a parallel and an orthogonal component to

![]() ${\textbf{u}}$

and then stretches the parallel component to adjust

${\textbf{u}}$

and then stretches the parallel component to adjust

![]() ${\textbf{v}}$

along

${\textbf{v}}$

along

![]() ${\textbf{u}}$

:

${\textbf{u}}$

:

where

![]() $\lambda$

is the dilation factor and p is the composed vector. Therefore,

$\lambda$

is the dilation factor and p is the composed vector. Therefore,

![]() ${\textbf{u}}$

affects relevant elements of vector

${\textbf{u}}$

affects relevant elements of vector

![]() ${\textbf{v}}$

in the composition. Evaluation is done on their developed compositional semantic similarity dataset of two-word phrases. They conclude that element-wise vector multiplication outperforms additive models and non-compositional approaches in the semantic similarity of complex expressions.

${\textbf{v}}$

in the composition. Evaluation is done on their developed compositional semantic similarity dataset of two-word phrases. They conclude that element-wise vector multiplication outperforms additive models and non-compositional approaches in the semantic similarity of complex expressions.

Giesbrecht (Reference Giesbrecht2009) evaluates four vector composition operations (addition, element-wise multiplication, tensor product, convolution) in vector space on the task of identifying multi-word units. The evaluation results of the three studies (Widdows Reference Widdows2008; Giesbrecht Reference Giesbrecht2009; Mitchell and Lapata Reference Mitchell and Lapata2010) are not conclusive in terms of which vector operation performs best; the different outcomes might be attributed to the underlying word space models; for example, the models of Widdows (Reference Widdows2008) and Giesbrecht (Reference Giesbrecht2009) feature dimensionality reduction while that of Mitchell and Lapata (Reference Mitchell and Lapata2010) does not.

Guevara (Reference Guevara2010) proposes a linear regression model for adjective–noun (A–N) compositionality. He trains a generic function to compose any adjective and noun vectors and produce the A–N representation. The model which is learned by partial least square regression (PLSR) outperforms additive and multiplicative models in predicting the vector representation of A–Ns. However, the additive model outperforms PLSR in predicting the nearest neighbors in the vector space. As opposed to this work, semantic compositionality in our approach is regardless of the parts of speech (POS), and therefore, the model can be trained to represent different compositional compounds with various POS tags.

Some approaches for obtaining distributional representation of words in VSMs have also been extended to compositional distributional models. Turney (Reference Turney2012) proposes a dual-space model for semantic compositionality. He creates two VSMs from the word-context co-occurrence matrix, one from the noun as the context of the words (called domain space) and the other from the verb as the context of the word (called function space). Therefore, the dual-space model consists of a domain space for determining the similarity in topic or subject, and a function space for computing the similarity in role or relationship. He evaluates the dual-space model on the task of similarity of compositions for pairs of bigram–unigram in which bigram is a noun phrase and unigram is a noun. He shows that the introduced dual-space model outperforms additive and multiplicative models.

Few approaches using matrices for distributional representations of words have been introduced more recently, which are then used for capturing compositionality. A method to drive a distributional representation of A–N phrases is proposed by Baroni and Zamparelli (Reference Baroni and Zamparelli2010) where the adjective serves as a linear function mapping the noun vector to another vector in the same space, which presents the A–N compound. In this method, each adjective has a matrix representation. Using linear regression, they train separate models for each adjective. They evaluate the performance of the proposed approach in predicting the representation of A–N compounds and predicting their nearest neighbors. Results show that their model outperforms additive and multiplicative models on average. A limitation of this model is that a separate model is trained for each adjective, and there is no global training model for adjectives. This is in contrast to our proposed approach in this work.

Maillard and Clark (Reference Maillard and Clark2015) describe a compositional model for learning A–N pairs where, first, word vectors are trained using the skip-gram model with negative sampling (Mikolov et al. Reference Mikolov, Sutskever, Chen, Corrado and Dean2013b). Then, each A–N phrase is considered as a unit, and adjective matrices are trained by optimizing the skip-gram objective function for A–N phrase vectors. The phrase vectors of the objective function are obtained by multiplying the adjective matrix with its noun vector. Noun vectors in this step are fixed. Results on the phrase semantic similarity task show that the model outperforms the standard skip-gram with addition and multiplication as the composition operations. Moreover, the model outperforms additive and multiplicative models in the semantic anomaly task.

More recently, Chung et al. (Reference Chung, Wang and Bowman2018) introduced a learning method for a matrix-based compositionality model using a deep learning architecture. They propose a tree-structured long short-term memory (LSTM) approach for the task of natural language inference (NLI) to learn the word matrices. In their method, the model learns to transform the pre-trained input word embeddings (e.g., word2vec) to word matrix embeddings (lift layer). Then word matrices are composed hierarchically using matrix multiplication to obtain the representation of sentences (composition layer). The sentence representations are then used to train a classifier for the NLI task.

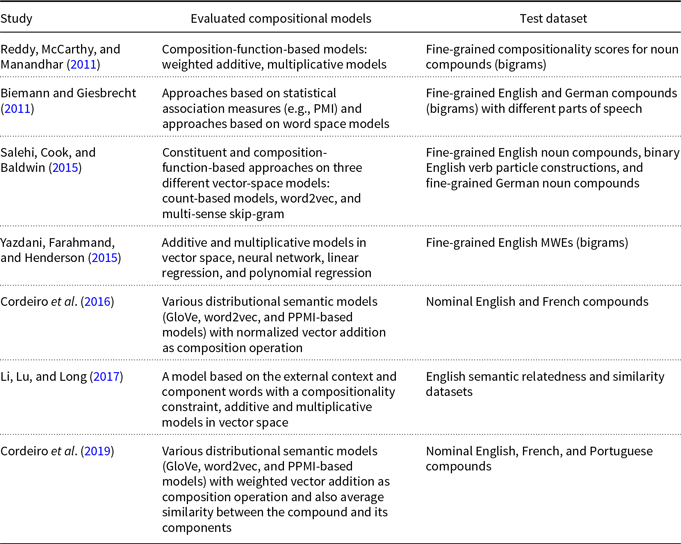

Semantic Compositionality Evaluation. Table 2 summarizes the literature on techniques to evaluate the existing compositional models on capturing semantic compositionality.

Table 2. Summary of the literature review in compositionality prediction

Reddy et al. (Reference Reddy, McCarthy and Manandhar2011) study the performance of compositional distributional models on compositionality prediction of multi-word compounds. For this purpose, they provide a dataset of noun compounds with fine-grained compositionality scores as well as literality scores for constituent words based on human judgments. They analyze both constituent-based models and composition-function-based models regarding compositionality prediction of the proposed compounds. In constituent-based models, they study the relations between the contribution of constituent words and the judgments on compound compositionality. They argue if a word is used literally in a compound, most probably it shares a common co-occurrence with the corresponding compound. Therefore, they evaluate different composition functions applied on constituent words and compute their similarity with the literality scores of phrases. In composition-function-based models, they evaluate weighted additive and multiplicative composition functions on their dataset and investigate the similarity between the composed word vector representations and the compound vector representation. Results show that in both models, additive composition outperforms other functions.

Biemann and Giesbrecht (Reference Biemann and Giesbrecht2011) aim at extracting non-compositional phrases using automatic distributional models that assign a compositionality score to a phrase. This score denotes the extent to which the compositionality assumption holds for a given expression. For this purpose, they created a dataset of English and German phrases which attracted several models ranging from statistical association measures and word space models submitted in a shared task of SemEval’11.

Salehi et al. (Reference Salehi, Cook and Baldwin2015) explore compositionality prediction of MWEs using constituent-based and composition-function-based approaches on three different VSMs, consisting of count-based models, word2vec, and multi-sense skip-gram model. In constituent-based models, they study the relation between the contribution of constituent words and the judgments on compound compositionality. In the composition-function-based models, they study the additive model in vector space on compositionality.

Yazdani et al. (Reference Yazdani, Farahmand and Henderson2015) then explore different compositional models ranging from simple to complex models such as neural networks (NNs) for non-compositionality prediction of a dataset of MWEs. The dataset is created by Farahmand, Smith, and Nivre (Reference Farahmand, Smith and Nivre2015), which consists of MWEs annotated with non-compositionality judgments. Representation of words is obtained from word2vec of Mikolov et al. (Reference Mikolov, Chen, Corrado and Dean2013a) and the models are trained using compounds extracted from a Wikipedia dump corpus, assuming that most compounds are compositional. Therefore, the trained models are expected to give a relatively high error to non-compositional compounds. They improve the accuracy of the models using latent compositionality annotation and show that this method improves the performance of nonlinear models significantly. Their results show that polynomial regression model with quadratic degree outperforms other models.

Cordeiro et al. (Reference Cordeiro, Ramisch, Idiart and Villavicencio2016) and their extended work (Cordeiro et al. Reference Cordeiro, Villavicencio, Idiart and Ramisch2019) are closely related to our work regarding the compositionality prediction task. They explore the performance of unsupervised vector addition and multiplication over various DSMs (GloVe, word2vec, and PPMI-based models) regarding predicting semantic compositionality of noun compounds over previously proposed English and French datasets in Cordeiro et al. (Reference Cordeiro, Ramisch, Idiart and Villavicencio2016) and a combination of previously and newly proposed English, French, and Portuguese datasets in Cordeiro et al. (Reference Cordeiro, Villavicencio, Idiart and Ramisch2019). Normalized vector addition in Cordeiro et al. (Reference Cordeiro, Ramisch, Idiart and Villavicencio2016) is considered as the composition function, and the performance of word embeddings is investigated using different setting of parameters for training them.

Cordeiro et al. (Reference Cordeiro, Villavicencio, Idiart and Ramisch2019) consider a weighted additive model as the composition function in which the weights of head and modifier words in the compounds range from 0 to 1, meaning that the similarity between head only word and the compound, the similarity between modifier only word and the compound, as well as the similarity between equally weighted head and modifier words and the compound are evaluated. Moreover, they consider the average of the similarity between head-compound pair and modifier-compound pair and compute the correlation between the average similarity score and the human judgments on the compositionality of compound. In both works, they also study the impact of corpus preprocessing on capturing compositionality with DSMs. Furthermore, the influence of different settings of DSMs parameters and corpus size for training is studied. In our work, we evaluate our proposed compositional model using their introduced English dataset. We compare the performance of our model with the weighted additive model as well as other unsupervised and supervised models and provide a more comprehensive collection of compositional models for evaluation. In the weighted additive model, we report the best model obtained by varying the weights of the head and modifier words of the compound.

In a work by Li et al. (Reference Li, Lu and Long2017), a hybrid method to learn the representation of MWEs from their external context and constituent words with a compositionality constraint is proposed. The main idea is to learn MWE representations based on a weighted linear combination of both external context and component words, where the weight is based on the compositionality of the MWEs. Evaluations are done on the task of semantic similarity and semantic relatedness between bigrams and unigrams. Recent deep learning techniques also focus on modeling the compositionality of more complex texts without considering the compositionality of the smaller parts such as Wu and Chi (Reference Wu and Chi2017), which is out of the scope of our study. None of the mentioned works, however, have investigated the performance of CMSMs in compositionality prediction of short phrases on MWE datasets.

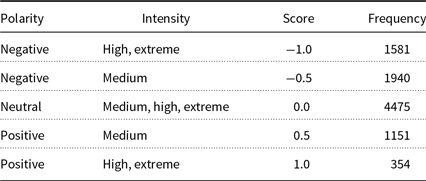

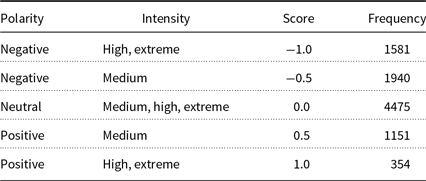

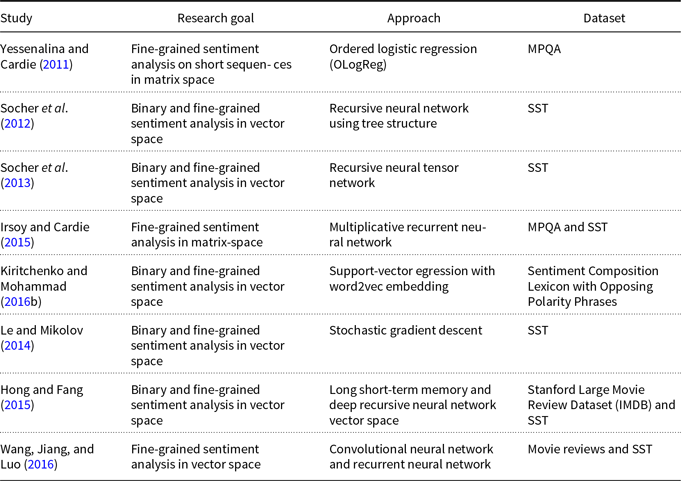

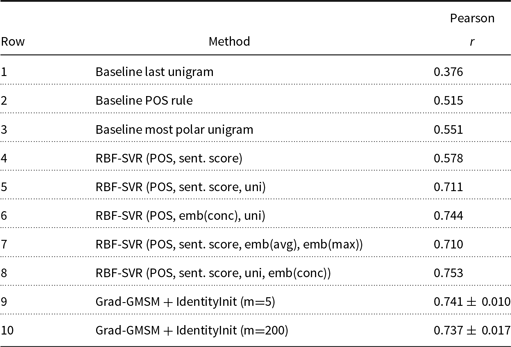

Compositional Sentiment Analysis. There is a lot of research interest in the task of sentiment analysis in NLP. The task is to classify the polarity of a text (negative, positive, neutral) or assign a real-valued score, showing the polarity and intensity of the text. In the following, we review the literature, which is summarized in Table 3.

Table 3. Summary of the literature review in compositional sentiment analysis. SST denotes Stanford Sentiment Treebank dataset

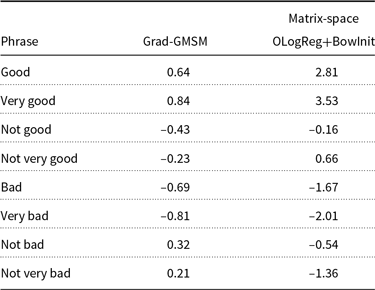

Yessenalina and Cardie (Reference Yessenalina and Cardie2011) propose the first supervised learning technique for CMSMs in fine-grained sentiment analysis on short sequences after it was introduced by Rudolph and Giesbrecht (Reference Rudolph and Giesbrecht2010). This work is closely related to ours as we propose learning techniques for CMSMs in the task of fine-grained sentiment analysis. Yessenalina and Cardie (Reference Yessenalina and Cardie2011) apply ordered logistic regression (OLogReg) with constraints on CMSMs to acquire a matrix representation of words. The learning parameters in their method include the word matrices as well as a set of thresholds (also called constraints), which indicate the intervals for sentiment classes since they convert the sentiment classes to ordinal labels. They argue that the learning problem for CMSMs is not a convex problem, so it must be trained carefully and specific attention has to be devoted to a good initialization, to avoid getting stuck in local optima. Therefore, they propose a model for ordinal scale sentiment prediction and address the optimization problem using OLogReg with constraints on sentiment intervals to relax the non-convexity. Finally, the trained model assigns real-valued sentiment scores to phrases. We address this issue in our proposed learning method for CMSMs. As opposed to Yessenalina and Cardie (Reference Yessenalina and Cardie2011)’s work, we address a sentiment regression problem directly and our learning method does not need to constrain the sentiment scores to the certain intervals. Therefore, the number of parameters to learn is reduced to only word matrices.

Recent approaches have focused on learning different types of NNs for sentiment analysis, such as the work of Socher et al. (Reference Socher, Huval, Manning and Ng2012) and (2013). Moreover, the superiority of multiplicative composition has been confirmed in their studies. Socher et al. (Reference Socher, Huval, Manning and Ng2012) propose a recursive NN which learns the vector representations of phrases in a tree structure. Each word and phrase is represented by a vector

![]() ${\textbf{v}}$

and a matrix M. When two constituents in the tree are composed, the matrix of one is multiplied with the vector of the other constituent. Therefore, the composition function is parameterized by the words that participate in it. Socher et al. (Reference Socher, Huval, Manning and Ng2012) predict the binary (only positive and negative) sentiment classes and fine-grained sentiment scores using the trained recursive NN on their developed Stanford Sentiment Treebank dataset. This means that new datasets must be preprocessed to generate the parse trees for evaluating the proposed method. A problem with this method is that the number of parameters becomes very large as it needs to store a matrix and a vector for each word and phrase in the tree together with the fully labeled parse tree. In contrast, our CMSM does not rely on parse trees, and therefore, preprocessing of the dataset is not required. Each word is represented only with matrices where the compositional function is the standard matrix multiplication, which replaces the recursive computations with a sequential computation.

${\textbf{v}}$

and a matrix M. When two constituents in the tree are composed, the matrix of one is multiplied with the vector of the other constituent. Therefore, the composition function is parameterized by the words that participate in it. Socher et al. (Reference Socher, Huval, Manning and Ng2012) predict the binary (only positive and negative) sentiment classes and fine-grained sentiment scores using the trained recursive NN on their developed Stanford Sentiment Treebank dataset. This means that new datasets must be preprocessed to generate the parse trees for evaluating the proposed method. A problem with this method is that the number of parameters becomes very large as it needs to store a matrix and a vector for each word and phrase in the tree together with the fully labeled parse tree. In contrast, our CMSM does not rely on parse trees, and therefore, preprocessing of the dataset is not required. Each word is represented only with matrices where the compositional function is the standard matrix multiplication, which replaces the recursive computations with a sequential computation.

Socher et al. (Reference Socher, Perelygin, Wu, Chuang, Manning, Ng and Potts2013) address the issue of the high number of parameters in the work by Socher et al. (Reference Socher, Huval, Manning and Ng2012) by introducing a recursive neural tensor network in which a global tensor-based composition function is defined. In this model, a tensor layer is added to their standard recursive NN where the vectors of two constituents are multiplied with a shared third-order tensor in this layer and then passed to the standard layer. The output is a composed vector of words which is then composed with the next word in the same way. The model is evaluated on both fine-grained and binary (only positive and negative) sentiment classification of phrases and sentences. Similar to Socher et al. (Reference Socher, Huval, Manning and Ng2012), a fully labeled parse tree is needed. In contrast, our model in this work does not rely on parse trees.

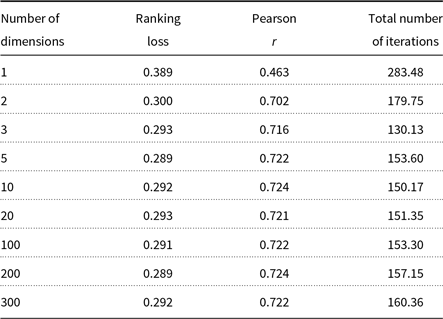

Irsoy and Cardie (Reference Irsoy and Cardie2015) propose a multiplicative recurrent NN (mRNN) for fine-grained sentiment analysis inspired from CMSMs (Rudolph and Giesbrecht Reference Rudolph and Giesbrecht2010). They show that their proposed architecture is more generic than CMSM and outperforms additive NNs in sentiment analysis. In their architecture, a shared third-order tensor is multiplied with each word vector input to obtain the word matrix in CMSMs. They use pre-trained word vectors of dimension 300 from word2vec (Mikolov et al. Reference Mikolov, Sutskever, Chen, Corrado and Dean2013b) and explore different sizes of matrices extracted from the shared third-order tensor. The results on the task of sentiment analysis are compared to the work by Yessenalina and Cardie (Reference Yessenalina and Cardie2011). We also compare the results of our model training on the same task to this approach since it is closely related to our work. However, in our approach, we do not use word vectors as input. Instead, the input word matrices are trained directly without using a shared tensor. We show that our model performs better while using fewer dimensions.

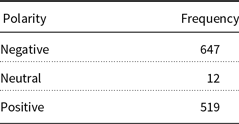

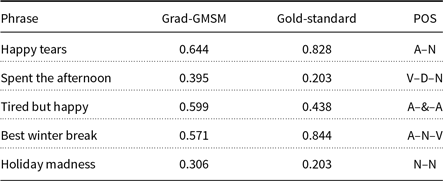

Kiritchenko and Mohammad (Reference Kiritchenko and Mohammad2016a) create a dataset of unigrams, bigrams, and trigrams, which contains specific phrases with at least one negative and one positive word. For instance, a phrase “happy tears” contains a positive-carrying sentiment word (happy) and a negative word (tears). They analyze the performance of support-vector regression (SVR) with different features on the developed dataset. We show that our approach can predict the sentiment score of such phrases in matrix space with a much lower number of features than SVR.

There are a number of deep NN models on the task of sentiment compositional analysis such as Hong and Fang (Reference Hong and Fang2015) who apply LSTM and deep recursive-NNs, and Wang et al. (Reference Wang, Jiang and Luo2016) who combine convolutional NNs and recurrent NNs leading to a significant improvement in sentiment analysis of short text. Le and Mikolov (Reference Le and Mikolov2014) also propose paragraph vector to represent long texts such as sentences and paragraphs, which is applied in the task of binary and fine-grained sentiment analysis. The model consists of a vector for each paragraph as well as the word vectors, which are concatenated to predict the next word in the context. Vectors are trained using stochastic gradient descent method. These techniques do not focus on training word representations that can be readily composed and therefore are not comparable directly to our proposed model.

3. Preliminaries

In this section, we recap some aspects of linear algebra to the extent needed for our considerations about CMSMs. For a more thorough treatise, we refer the reader to a linear algebra textbook (such as Strang Reference Strang1993).

Vectors. Given a natural number n, an n-dimensional vector

![]() ${\textbf{v}}$

over the reals can be seen as a list (or tuple) containing n real numbers

${\textbf{v}}$

over the reals can be seen as a list (or tuple) containing n real numbers

![]() $r_1,\ldots,r_n \in \mathbb{R}$

, written

$r_1,\ldots,r_n \in \mathbb{R}$

, written

![]() ${\textbf{v}} = (r_1\quad r_2\quad \cdots\quad r_n)$

. Vectors will be denoted by lowercase bold font letters and we will use the notation

${\textbf{v}} = (r_1\quad r_2\quad \cdots\quad r_n)$

. Vectors will be denoted by lowercase bold font letters and we will use the notation

![]() ${\textbf{v}}(i)$

to refer to the ith entry of vector

${\textbf{v}}(i)$

to refer to the ith entry of vector

![]() ${\textbf{v}}$

. As usual, we write

${\textbf{v}}$

. As usual, we write

![]() $\mathbb{R}^n$

to denote the set of all n-dimensional vectors with real-valued entries. Vectors can be added entry-wise, that is,

$\mathbb{R}^n$

to denote the set of all n-dimensional vectors with real-valued entries. Vectors can be added entry-wise, that is,

![]() $(r_1\quad \cdots\quad r_n) + (r^{\prime}_1\quad \cdots\quad r^{\prime}_n) = (r_1\!+\!r^{\prime}_1\quad \cdots\quad r_n\!+\!r^{\prime}_n)$

. Likewise, the entry-wise product (also known as Hadamard product) is defined by

$(r_1\quad \cdots\quad r_n) + (r^{\prime}_1\quad \cdots\quad r^{\prime}_n) = (r_1\!+\!r^{\prime}_1\quad \cdots\quad r_n\!+\!r^{\prime}_n)$

. Likewise, the entry-wise product (also known as Hadamard product) is defined by

![]() $(r_1\ \ \cdots\ \ r_n)\ \odot\ (r^{\prime}_1\ \ \cdots\ \ r^{\prime}_n) = (r_1\cdot r^{\prime}_1\ \ \cdots\ \ r_n\cdot r^{\prime}_n)$

.

$(r_1\ \ \cdots\ \ r_n)\ \odot\ (r^{\prime}_1\ \ \cdots\ \ r^{\prime}_n) = (r_1\cdot r^{\prime}_1\ \ \cdots\ \ r_n\cdot r^{\prime}_n)$

.

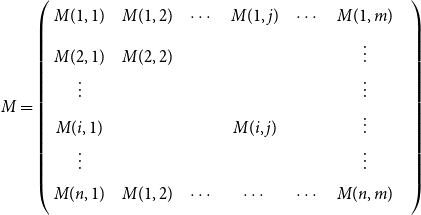

Matrices. Given two natural numbers n and m, an

![]() $n\times m$

matrix over the reals is an array of real numbers with n rows and m columns. We will use capital letters to denote matrices and, given a matrix M we will write M(i,j) to refer to the entry in the ith row and the jth column:

$n\times m$

matrix over the reals is an array of real numbers with n rows and m columns. We will use capital letters to denote matrices and, given a matrix M we will write M(i,j) to refer to the entry in the ith row and the jth column:

\begin{equation*} \mbox{$M =$} \left(\begin{array}{@{\ }c@{\quad}c@{\quad}c@{\quad}c@{\quad}c@{\quad}c@{\quad}} M(1,1) & M(1,2) & \cdots & M(1,j) & \cdots & M(1,m) \\[4pt] M(2,1) & M(2,2) & & & & \vdots \\[1pt] \vdots & & & & & \vdots \\[1pt] M(i,1) & & & M(i,j) & & \vdots \\[1pt] \vdots & & & & & \vdots \\[1pt] M(n,1) & M(1,2) & \cdots & \cdots & \cdots & M(n,m) \\[4pt] \end{array}\right) \end{equation*}

\begin{equation*} \mbox{$M =$} \left(\begin{array}{@{\ }c@{\quad}c@{\quad}c@{\quad}c@{\quad}c@{\quad}c@{\quad}} M(1,1) & M(1,2) & \cdots & M(1,j) & \cdots & M(1,m) \\[4pt] M(2,1) & M(2,2) & & & & \vdots \\[1pt] \vdots & & & & & \vdots \\[1pt] M(i,1) & & & M(i,j) & & \vdots \\[1pt] \vdots & & & & & \vdots \\[1pt] M(n,1) & M(1,2) & \cdots & \cdots & \cdots & M(n,m) \\[4pt] \end{array}\right) \end{equation*}

The set of all

![]() $n\times m$

matrices with real number entries is denoted by

$n\times m$

matrices with real number entries is denoted by

![]() $\mathbb{R}^{n\times m}$

. Obviously, m-dimensional vectors can be seen as

$\mathbb{R}^{n\times m}$

. Obviously, m-dimensional vectors can be seen as

![]() $1 \times m$

matrices. A matrix can be transposed by exchanging columns and rows: given the

$1 \times m$

matrices. A matrix can be transposed by exchanging columns and rows: given the

![]() $n\times m$

matrix M, its transposed version

$n\times m$

matrix M, its transposed version

![]() $M^T$

is an

$M^T$

is an

![]() $m\times n$

matrix defined by

$m\times n$

matrix defined by

![]() $M^T(i,j) = M(j,i)$

.

$M^T(i,j) = M(j,i)$

.

Third-order Tensors. A third-order tensor of dimension

![]() $d \times n \times m$

over real values is a d-array of

$d \times n \times m$

over real values is a d-array of

![]() $n \times m$

matrices. Third-order tensors are denoted by uppercase bold font letters, and

$n \times m$

matrices. Third-order tensors are denoted by uppercase bold font letters, and

![]() ${\bf T}(i,j,k)$

refers to row j and column k of matrix i in T.

${\bf T}(i,j,k)$

refers to row j and column k of matrix i in T.

![]() $\mathbb{R}^{d \times n \times m}$

indicates the set of all tensors with real number elements.

$\mathbb{R}^{d \times n \times m}$

indicates the set of all tensors with real number elements.

Linear Mappings. Beyond being merely array-like data structures, matrices correspond to a certain type of functions, so-called linear mappings, having vectors as input and output. More precisely, an

![]() $n\times m$

matrix M applied to an m-dimensional vector

$n\times m$

matrix M applied to an m-dimensional vector

![]() ${\textbf{v}}$

yields an n-dimensional vector

${\textbf{v}}$

yields an n-dimensional vector

![]() ${\textbf{v}}'$

(written:

${\textbf{v}}'$

(written:

![]() ${\textbf{v}} M = {\textbf{v}}'$

) according to

${\textbf{v}} M = {\textbf{v}}'$

) according to

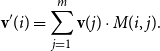

\begin{equation*}{\textbf{v}}'(i) = \sum_{j=1}^m {\textbf{v}}(j)\cdot M(i,j).\end{equation*}

\begin{equation*}{\textbf{v}}'(i) = \sum_{j=1}^m {\textbf{v}}(j)\cdot M(i,j).\end{equation*}

Linear mappings can be concatenated, giving rise to the notion of standard matrix multiplication: we write

![]() $M_1 M_2$

to denote the matrix that corresponds to the linear mapping defined by applying first

$M_1 M_2$

to denote the matrix that corresponds to the linear mapping defined by applying first

![]() $M_1$

and then

$M_1$

and then

![]() $M_2$

. Formally, the matrix product of the

$M_2$

. Formally, the matrix product of the

![]() $n\times \ell$

matrix

$n\times \ell$

matrix

![]() $M_1$

and the

$M_1$

and the

![]() $\ell \times m$

matrix

$\ell \times m$

matrix

![]() $M_2$

is an

$M_2$

is an

![]() $n\times m$

matrix

$n\times m$

matrix

![]() $M = M_1 M_2$

defined by

$M = M_1 M_2$

defined by

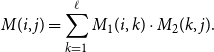

\begin{equation*}M(i,j) = \sum_{k=1}^\ell M_1(i,k)\cdot M_2(k,j).\end{equation*}

\begin{equation*}M(i,j) = \sum_{k=1}^\ell M_1(i,k)\cdot M_2(k,j).\end{equation*}

Note that the matrix product is associative (i.e.,

![]() $(M_1M_2)M_3 = M_1(M_2M_3)$

always holds, thus parentheses can be omitted) but not commutative (

$(M_1M_2)M_3 = M_1(M_2M_3)$

always holds, thus parentheses can be omitted) but not commutative (

![]() $M_1M_2 = M_2M_1$

does not hold in general, that is, the order of the multiplied matrices matters).

$M_1M_2 = M_2M_1$

does not hold in general, that is, the order of the multiplied matrices matters).

Permutations. Given a natural number n, a permutation on

![]() $\{1\ldots n\}$

is a bijection (i.e., a mapping that is one-to-one and onto)

$\{1\ldots n\}$

is a bijection (i.e., a mapping that is one-to-one and onto)

![]() $\Phi{\,:\,}\{1\ldots n\} \to \{1\ldots n\}$

. A permutation can be seen as a “reordering scheme” on a list with n elements: the element at position i will get the new position

$\Phi{\,:\,}\{1\ldots n\} \to \{1\ldots n\}$

. A permutation can be seen as a “reordering scheme” on a list with n elements: the element at position i will get the new position

![]() $\Phi(i)$

in the reordered list. Likewise, a permutation can be applied to a vector resulting in a rearrangement of the entries. We write

$\Phi(i)$

in the reordered list. Likewise, a permutation can be applied to a vector resulting in a rearrangement of the entries. We write

![]() $\Phi^n$

to denote the permutation corresponding to the n-fold application of

$\Phi^n$

to denote the permutation corresponding to the n-fold application of

![]() $\Phi$

and

$\Phi$

and

![]() $\Phi^{-1}$

to denote the permutation that “undoes”

$\Phi^{-1}$

to denote the permutation that “undoes”

![]() $\Phi$

.

$\Phi$

.

Given a permutation

![]() $\Phi$

, the corresponding permutation matrix

$\Phi$

, the corresponding permutation matrix

![]() $M_\Phi$

is defined by

$M_\Phi$

is defined by

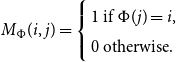

\begin{equation*}M_\Phi(i,j) = \left\{\begin{array}{l}1 \mbox{ if }\Phi(j)=i,\\0 \mbox{ otherwise.}\\\end{array}\right.\end{equation*}

\begin{equation*}M_\Phi(i,j) = \left\{\begin{array}{l}1 \mbox{ if }\Phi(j)=i,\\0 \mbox{ otherwise.}\\\end{array}\right.\end{equation*}

Then, obviously permuting a vector according to

![]() $\Phi$

can be expressed in terms of matrix multiplication as well, since we obtain, for any vector

$\Phi$

can be expressed in terms of matrix multiplication as well, since we obtain, for any vector

![]() ${\textbf{v}}\in\mathbb{R}^n$

,

${\textbf{v}}\in\mathbb{R}^n$

,

Likewise, iterated application (

![]() $\Phi^n$

) and the inverses

$\Phi^n$

) and the inverses

![]() $\Phi^{-n}$

carry over naturally to the corresponding notions in matrices.

$\Phi^{-n}$

carry over naturally to the corresponding notions in matrices.

4. A matrix-based model of compositionality

Frege’s principle of compositionality states that “the meaning of an expression is a function of the meanings of its parts and of the way they are syntactically combined” (Partee Reference Partee2004, p. 153). Also, according to Partee, ter Meulen, and Wall (Reference Partee, ter Meulen and Wall1993, p. 334) the mathematical formulation of the compositionality principle involves “representing both the syntax and the semantics as algebras and the semantic interpretation as a homomorphic mapping from the syntactic algebra into the semantic algebra.”

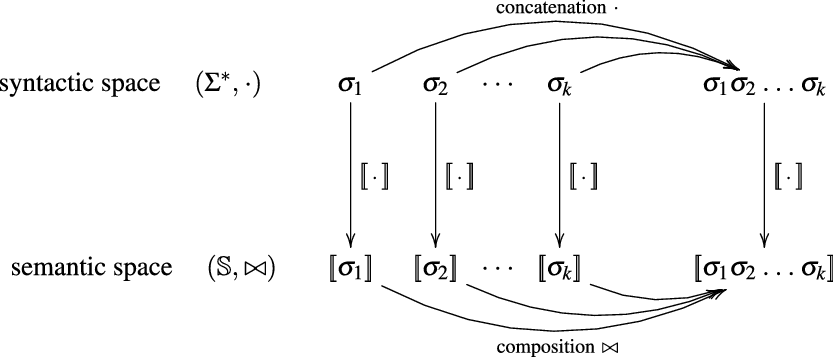

The underlying principle of compositional semantics is that the meaning of a composed sequence can be derived from the meaning of its constituent tokensFootnote

c

by applying a composition operation. More formally, the underlying idea can be described mathematically as follows: given a mapping

![]() $\mbox{$[\,\!\![$}{\,\cdot\,}\mbox{$]\,\!\!]$}: \Sigma \to \mathbb{S}$

from a set of tokens (words)

$\mbox{$[\,\!\![$}{\,\cdot\,}\mbox{$]\,\!\!]$}: \Sigma \to \mathbb{S}$

from a set of tokens (words)

![]() $\Sigma$

into some semantic space

$\Sigma$

into some semantic space

![]() $\mathbb{S}$

(the elements of which we will simply call “meanings”), we find a semantic composition operation

$\mathbb{S}$

(the elements of which we will simply call “meanings”), we find a semantic composition operation

![]() $\bowtie: \mathbb{S}^* \to \mathbb{S}$

mapping sequences of meanings to meanings such that the meaning of a sequence of tokens

$\bowtie: \mathbb{S}^* \to \mathbb{S}$

mapping sequences of meanings to meanings such that the meaning of a sequence of tokens

![]() $s=\sigma_1\sigma_2\ldots\sigma_k$

can be obtained by applying

$s=\sigma_1\sigma_2\ldots\sigma_k$

can be obtained by applying

![]() $\bowtie$

to the sequence

$\bowtie$

to the sequence

![]() $\mbox{$[\,\!\![$}{\sigma_1}\mbox{$]\,\!\!]$}\mbox{$[\,\!\![$}{\sigma_2}\mbox{$]\,\!\!]$}\ldots\mbox{$[\,\!\![$}{\sigma_k}\mbox{$]\,\!\!]$}$

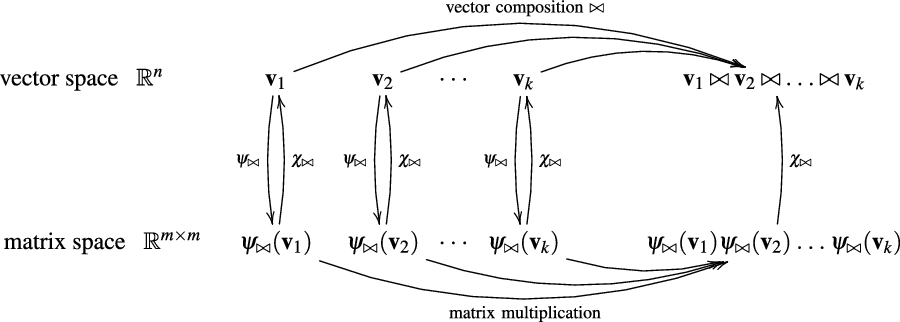

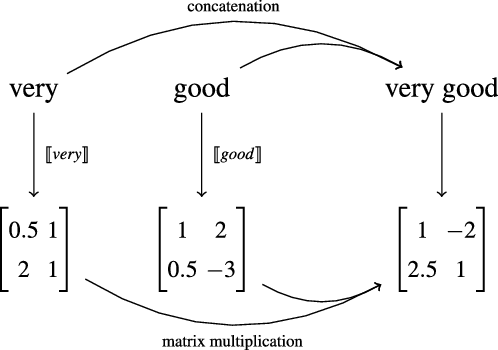

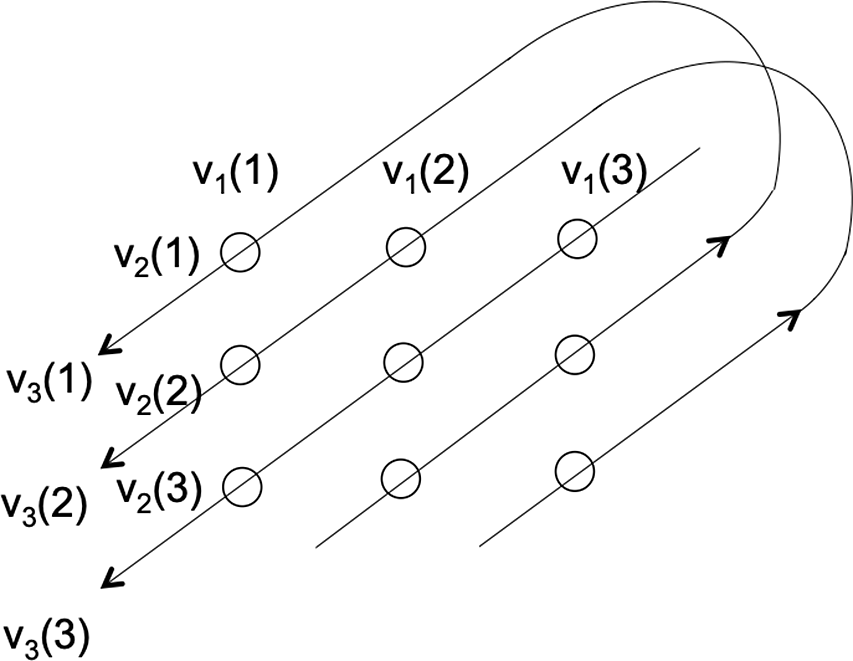

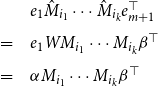

. This situation, displayed in Figure 1, qualifies

$\mbox{$[\,\!\![$}{\sigma_1}\mbox{$]\,\!\!]$}\mbox{$[\,\!\![$}{\sigma_2}\mbox{$]\,\!\!]$}\ldots\mbox{$[\,\!\![$}{\sigma_k}\mbox{$]\,\!\!]$}$

. This situation, displayed in Figure 1, qualifies

![]() $\mbox{$[\,\!\![$}{\cdot}\mbox{$]\,\!\!]$}$

as a homomorphism between

$\mbox{$[\,\!\![$}{\cdot}\mbox{$]\,\!\!]$}$

as a homomorphism between

![]() $(\Sigma^*,\cdot)$

and

$(\Sigma^*,\cdot)$

and

![]() $(\mathbb{S},\bowtie)$

.

$(\mathbb{S},\bowtie)$

.

Figure 1. Semantic mapping as homomorphism.

A great variety of linguistic models are subsumed by this general idea ranging from purely symbolic approaches (like type systems and categorial grammars) to statistical models (like vector space and word space models). At the first glance, the underlying encodings of word semantics as well as the composition operations differ significantly. However, we argue that a great variety of them can be incorporated – and even freely inter-combined – into a unified model where the semantics of simple tokens and complex phrases is expressed by matrices and the composition operation is standard matrix multiplication that considers the position of tokens in the sequence.

More precisely, in CMSMs, we have

![]() $\mathbb{S} = \mathbb{R}^{m\times m}$

, that is, the semantic space consists of quadratic matrices, and the composition operator

$\mathbb{S} = \mathbb{R}^{m\times m}$

, that is, the semantic space consists of quadratic matrices, and the composition operator

![]() $\bowtie$

coincides with matrix multiplication as introduced in Section 3.

$\bowtie$

coincides with matrix multiplication as introduced in Section 3.

We next provide an argument in favor of CMSMs due to their “algebraic plausibility.” Most linear-algebra-based operations that have been proposed to model composition in language models (such as vector addition or the Hadamard product) are both associative and commutative. Thereby, they realize a multiset (or bag-of-words) semantics which makes them oblivious of structural differences of phrases conveyed through word order. For instance, in an associative and commutative model, the statements “Oswald killed Kennedy” and “Kennedy killed Oswald” would be mapped to the same semantic representation. For this reason, having commutativity “built-in” in language models seems a very arguable design decision.

On the other hand, language is inherently stream-like and sequential; thus, associativity alone seems much more justifiable. Ambiguities which might be attributed to non-associativity (such as the different meanings of the sentence “The man saw the girl with the telescope.”) can be resolved easily by contextual cues.

As mentioned before, matrix multiplication is associative but non-commutative, whence we propose it as more adequate for modeling compositional semantics of language.

5. The power of CMSMs

In the following, we argue that CMSMs have diverse desirable properties from a theoretical perspective, justifying our confidence that they can serve as a generic approach to modeling compositionality in natural language.

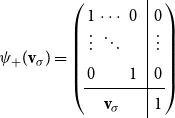

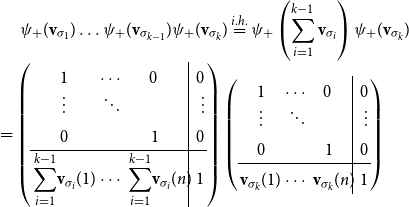

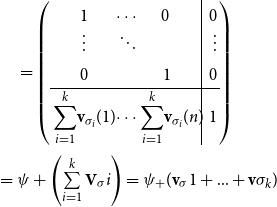

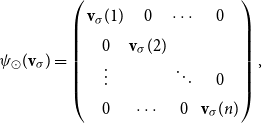

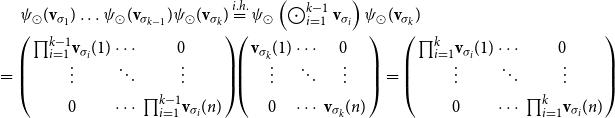

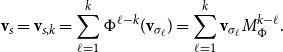

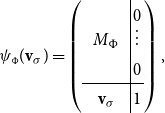

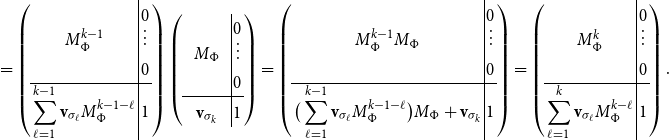

5.1 CMSMs capture compositional VSMs

In VSMs, numerous vector operations have been used to model composition (Widdows Reference Widdows2008). We show how common composition operators can be simulated by CMSMs.Footnote

d

For each such vector composition operation

![]() $\bowtie: \mathbb{R}^n \times \mathbb{R}^n \to \mathbb{R}^n$

, we will provide a pair of functions

$\bowtie: \mathbb{R}^n \times \mathbb{R}^n \to \mathbb{R}^n$

, we will provide a pair of functions

![]() $\psi_{\bowtie}: \mathbb{R}^n \to \mathbb{R}^{m\times m}$

and

$\psi_{\bowtie}: \mathbb{R}^n \to \mathbb{R}^{m\times m}$

and

![]() $\chi_{\bowtie}: \mathbb{R}^{m\times m} \to \mathbb{R}^n$

satisfying

$\chi_{\bowtie}: \mathbb{R}^{m\times m} \to \mathbb{R}^n$

satisfying

![]() $\chi_{\bowtie}(\psi_{\bowtie}({\textbf{v}})))={\textbf{v}}$

for all

$\chi_{\bowtie}(\psi_{\bowtie}({\textbf{v}})))={\textbf{v}}$

for all

![]() ${\textbf{v}} \in \mathbb{R}^n$

. These functions translate between the vector representation and the matrix representation in a way such that for all

${\textbf{v}} \in \mathbb{R}^n$

. These functions translate between the vector representation and the matrix representation in a way such that for all

![]() ${\textbf{v}}_1, \ldots, {\textbf{v}}_k \in \mathbb{R}^n$

holds

${\textbf{v}}_1, \ldots, {\textbf{v}}_k \in \mathbb{R}^n$

holds

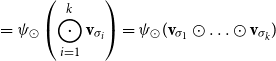

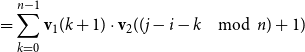

where

![]() $\psi_{\bowtie}({\textbf{v}}_i)\psi_{\bowtie}({\textbf{v}}_j)$

denotes matrix multiplication of the matrices assigned to

$\psi_{\bowtie}({\textbf{v}}_i)\psi_{\bowtie}({\textbf{v}}_j)$

denotes matrix multiplication of the matrices assigned to

![]() ${\textbf{v}}_i$

and

${\textbf{v}}_i$

and

![]() ${\textbf{v}}_j$

. This allows us to simulate a

${\textbf{v}}_j$

. This allows us to simulate a

![]() $\bowtie$

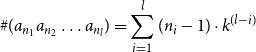

-compositional VSM by a matrix-space model where matrix multiplication is the composition operation (see Figure 2). We can in fact show that vector addition, element-wise vector multiplication, holographic reduced representation, and permutation based composition approaches are captured by CMSMs. See Appendix A for detailed discussion and proofs.

$\bowtie$

-compositional VSM by a matrix-space model where matrix multiplication is the composition operation (see Figure 2). We can in fact show that vector addition, element-wise vector multiplication, holographic reduced representation, and permutation based composition approaches are captured by CMSMs. See Appendix A for detailed discussion and proofs.

Figure 2. Simulating compositional VSM via CMSMs.

5.2 CMSMs capture symbolic approaches

Now we will elaborate on symbolic approaches to language, that is, discrete grammar formalisms, and show how they can conveniently be embedded into CMSMs. This might come as a surprise, as the apparent likeness of CMSMs to VSMs may suggest incompatibility to discrete settings.

Group Theory. Group theory and grammar formalisms based on groups and pre-groups play an important role in computational linguistics (Lambek Reference Lambek1958; Dymetman Reference Dymetman1998). From the perspective of our compositionality framework, those approaches employ a group (or pre-group)

![]() $(G,\cdot)$

as the semantic space

$(G,\cdot)$

as the semantic space

![]() $\mathbb{S}$

where the group operation (often written as multiplication) is used as composition operation

$\mathbb{S}$

where the group operation (often written as multiplication) is used as composition operation

![]() $\bowtie$

.

$\bowtie$

.

According to Cayley’s theorem (Cayley Reference Cayley1854), every group G is isomorphic to a permutation group on some set S. Hence, assuming finiteness of G and consequently S, we can encode group-based grammar formalisms into CMSMs in a straightforward way using permutation matrices of size

![]() $|S|\times|S|$

.

$|S|\times|S|$

.

Regular Languages. Regular languages constitute a basic type of languages characterized by a symbolic formalism. We will show how to select the assignment

![]() $\mbox{$[\,\!\![$}{\,\cdot\,}\mbox{$]\,\!\!]$}$

for a CMSM such that the matrix associated with a token sequence exhibits whether this sequence belongs to a given regular language, that is, if it is accepted by a given finite state automaton. As usual, we define a nondeterministic finite automaton

$\mbox{$[\,\!\![$}{\,\cdot\,}\mbox{$]\,\!\!]$}$

for a CMSM such that the matrix associated with a token sequence exhibits whether this sequence belongs to a given regular language, that is, if it is accepted by a given finite state automaton. As usual, we define a nondeterministic finite automaton

![]() $\mathcal{A}=(Q,\Sigma,\Delta,Q_\textrm{I},Q_\textrm{F})$

with

$\mathcal{A}=(Q,\Sigma,\Delta,Q_\textrm{I},Q_\textrm{F})$

with

![]() $Q=\{q_0,\ldots,q_{m-1}\}$

being the set of states,

$Q=\{q_0,\ldots,q_{m-1}\}$

being the set of states,

![]() $\Sigma$

the input alphabet,

$\Sigma$

the input alphabet,

![]() $\Delta \subseteq Q \times \Sigma \times Q$

the transition relation, and

$\Delta \subseteq Q \times \Sigma \times Q$

the transition relation, and

![]() $Q_\textrm{I}$

and

$Q_\textrm{I}$

and

![]() $Q_\textrm{F}$

being the sets of initial and final states, respectively.

$Q_\textrm{F}$

being the sets of initial and final states, respectively.

Then we assign to every token

![]() $\sigma \in \Sigma$

the

$\sigma \in \Sigma$

the

![]() $m\times m$

matrix

$m\times m$

matrix

![]() $\mbox{$[\,\!\![$}{\sigma}\mbox{$]\,\!\!]$}=M$

with

$\mbox{$[\,\!\![$}{\sigma}\mbox{$]\,\!\!]$}=M$

with

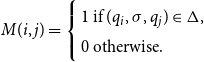

\begin{equation*}M(i,j) = \left\{\begin{array}{l}1 \mbox{ if }(q_i,\sigma,q_j)\in\Delta,\\0 \mbox{ otherwise.}\\\end{array}\right.\end{equation*}

\begin{equation*}M(i,j) = \left\{\begin{array}{l}1 \mbox{ if }(q_i,\sigma,q_j)\in\Delta,\\0 \mbox{ otherwise.}\\\end{array}\right.\end{equation*}

Hence essentially, the matrix M encodes all state transitions which can be caused by the input

![]() $\sigma$

. Likewise, for a sequence

$\sigma$

. Likewise, for a sequence

![]() $s=\sigma_1\ldots\sigma_k\in \Sigma^*$

, the matrix

$s=\sigma_1\ldots\sigma_k\in \Sigma^*$

, the matrix

![]() $M_s := \mbox{$[\,\!\![$}{\sigma_1}\mbox{$]\,\!\!]$}\ldots \mbox{$[\,\!\![$}{\sigma_k}\mbox{$]\,\!\!]$}$

will encode all state transitions mediated by s.

$M_s := \mbox{$[\,\!\![$}{\sigma_1}\mbox{$]\,\!\!]$}\ldots \mbox{$[\,\!\![$}{\sigma_k}\mbox{$]\,\!\!]$}$

will encode all state transitions mediated by s.

5.3 Intercombining CMSMs

Another central advantage of the proposed matrix-based models for word meaning is that several matrix models can be easily combined into one. Again assume a sequence

![]() $s=\sigma_1\ldots\sigma_k$

of tokens with associated matrices

$s=\sigma_1\ldots\sigma_k$

of tokens with associated matrices

![]() $\mbox{$[\,\!\![$}{\sigma_1}\mbox{$]\,\!\!]$}, \ldots, \mbox{$[\,\!\![$}{\sigma_k}\mbox{$]\,\!\!]$}$

according to one specific model and matrices

$\mbox{$[\,\!\![$}{\sigma_1}\mbox{$]\,\!\!]$}, \ldots, \mbox{$[\,\!\![$}{\sigma_k}\mbox{$]\,\!\!]$}$

according to one specific model and matrices

![]() $\mbox{$(\,\!\![$}{\sigma_1}\mbox{$]\,\!\!)$}, \ldots, \mbox{$(\,\!\![$}{\sigma_k}\mbox{$]\,\!\!)$}$

according to another.

$\mbox{$(\,\!\![$}{\sigma_1}\mbox{$]\,\!\!)$}, \ldots, \mbox{$(\,\!\![$}{\sigma_k}\mbox{$]\,\!\!)$}$

according to another.

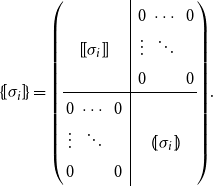

Then we can combine the two models into one

![]() $\mbox{$\{\,\!\![$}{\ \cdot\ }\mbox{$]\,\!\!\}$}$

by assigning to

$\mbox{$\{\,\!\![$}{\ \cdot\ }\mbox{$]\,\!\!\}$}$

by assigning to

![]() $\sigma_i$

the matrix

$\sigma_i$

the matrix

\begin{equation*}\mbox{$\{\,\!\![$}{\sigma_i}\mbox{$]\,\!\!\}$} =\left(\begin{array}{ccc@{\ \ }|cccc}& & & & 0 & \cdots & 0 \\& \mbox{$[\,\!\![$}{\sigma_i}\mbox{$]\,\!\!]$} & & & \vdots & \ddots & \\& & & & 0 & & 0 \\\hline0 & \cdots & 0 & & & & \\\vdots & \ddots & & & & \mbox{$(\,\!\![$}{\sigma_i}\mbox{$]\,\!\!)$} & \\0 & & 0 & & & & \\\end{array}\right)\! .\end{equation*}

\begin{equation*}\mbox{$\{\,\!\![$}{\sigma_i}\mbox{$]\,\!\!\}$} =\left(\begin{array}{ccc@{\ \ }|cccc}& & & & 0 & \cdots & 0 \\& \mbox{$[\,\!\![$}{\sigma_i}\mbox{$]\,\!\!]$} & & & \vdots & \ddots & \\& & & & 0 & & 0 \\\hline0 & \cdots & 0 & & & & \\\vdots & \ddots & & & & \mbox{$(\,\!\![$}{\sigma_i}\mbox{$]\,\!\!)$} & \\0 & & 0 & & & & \\\end{array}\right)\! .\end{equation*}

By doing so, we obtain the correspondence

\begin{equation*}\mbox{$\{\,\!\![$}{\sigma_1}\mbox{$]\,\!\!\}$}\ldots\mbox{$\{\,\!\![$}{\sigma_k}\mbox{$]\,\!\!\}$} =\left(\begin{array}{c@{\ }c@{\ }cc|cc@{\ }c@{\ }c}& & & & & 0 & \cdots & 0 \\& \mbox{$[\,\!\![$}{\sigma_1}\mbox{$]\,\!\!]$}\ldots\mbox{$[\,\!\![$}{\sigma_k}\mbox{$]\,\!\!]$} & & & & \vdots & \ddots & \\& & & & & 0 & & 0 \\\hline0 & \cdots & 0 & & & & & \\\vdots & \ddots & & & & & \mbox{$(\,\!\![$}{\sigma_1}\mbox{$]\,\!\!)$}\ldots\mbox{$(\,\!\![$}{\sigma_k}\mbox{$]\,\!\!)$} & \\0 & & 0 & & & & & \\\end{array}\right)\! .\end{equation*}

\begin{equation*}\mbox{$\{\,\!\![$}{\sigma_1}\mbox{$]\,\!\!\}$}\ldots\mbox{$\{\,\!\![$}{\sigma_k}\mbox{$]\,\!\!\}$} =\left(\begin{array}{c@{\ }c@{\ }cc|cc@{\ }c@{\ }c}& & & & & 0 & \cdots & 0 \\& \mbox{$[\,\!\![$}{\sigma_1}\mbox{$]\,\!\!]$}\ldots\mbox{$[\,\!\![$}{\sigma_k}\mbox{$]\,\!\!]$} & & & & \vdots & \ddots & \\& & & & & 0 & & 0 \\\hline0 & \cdots & 0 & & & & & \\\vdots & \ddots & & & & & \mbox{$(\,\!\![$}{\sigma_1}\mbox{$]\,\!\!)$}\ldots\mbox{$(\,\!\![$}{\sigma_k}\mbox{$]\,\!\!)$} & \\0 & & 0 & & & & & \\\end{array}\right)\! .\end{equation*}

In other words, the semantic compositions belonging to two CMSMs can be executed “in parallel.” Mark that by providing non-zero entries for the upper right and lower left matrix part, information exchange between the two models can be easily realized.

6. Eliciting linguistic information from matrix representations

In the previous sections, we have argued in favor of using quadratic matrices as representatives for the meaning of words and – by means of composition – phrases. The matrix representation of a phrase thus obtained then arguably carries semantic information encoded in a certain way. This necessitates a “decoding step” where the information of interest is elicited from the matrix representation and is represented in different forms.

In the following, we will discuss various possible ways of eliciting the linguistic information from the matrix representation of phrases. Thereby we distinguish if this information is in the form of a vector, a scalar, or a boolean value. Proofs for the given theorems and propositions can be found in Appendix B.

6.1 Vectors

Vectors can represent various syntactic and semantic information of words and phrases and are widely used in many NLP tasks. The information in matrix representations in CMSMs can be elicited in a vector shape allowing for their comparison and integration with other NLP vector-space approaches. There are numerous options for a vector extraction function

![]() $\chi: \mathbb{R}^{m\times m} \to \mathbb{R}^n$

, among them the different functions

$\chi: \mathbb{R}^{m\times m} \to \mathbb{R}^n$

, among them the different functions

![]() $\chi_{\bowtie}$

, introduced in Section 5.1.

$\chi_{\bowtie}$

, introduced in Section 5.1.

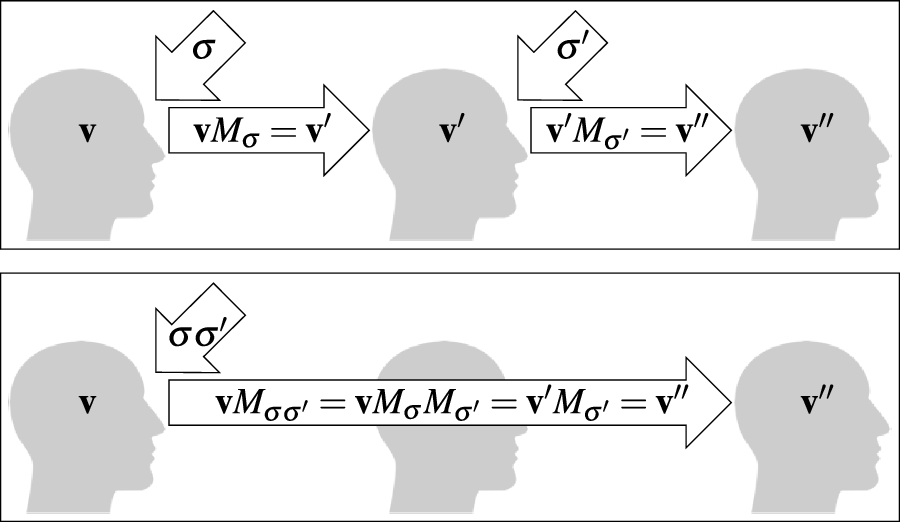

One alternative option can be derived from an idea already touched in the second part of Section 5.2, according to which CMSMs can be conceived as state transition systems, where states are represented by vectors, and multiplying a state-vector with a matrix implements a transition from the corresponding state to another. We will provide a speculative neuropsychological underpinning of this idea in Section 9. If we assume that processing an input sequence will always start from a fixed initial state

![]() $\alpha \in {R}^{m}$

, then the state after processing

$\alpha \in {R}^{m}$

, then the state after processing

![]() $s = \sigma_1\ldots \sigma_k$

will be

$s = \sigma_1\ldots \sigma_k$

will be

![]() $\alpha M_{\sigma_1}\ldots M_{\sigma_k} = \alpha M_{s}$

. Consequently, one simple but plausible vector extraction operation would be given by the function

$\alpha M_{\sigma_1}\ldots M_{\sigma_k} = \alpha M_{s}$

. Consequently, one simple but plausible vector extraction operation would be given by the function

![]() $\chi_\alpha$

where the vector v associated with a matrix M is

$\chi_\alpha$

where the vector v associated with a matrix M is

6.2 Scalars

Scalars (i.e., real values) may also represent semantic information in NLP tasks, such as semantic similarity degree in similarity tasks or sentiment score in sentiment analysis. Also, the information in scalar form requires less storage than matrices or vectors. To map a matrix

![]() $M \in \mathbb{R}^{m\times m}$

to a scalar value, we may employ any

$M \in \mathbb{R}^{m\times m}$

to a scalar value, we may employ any

![]() $m^2$

-ary function which takes as input all entries of M and delivers a scalar value. There are plenty of options for such a function. In this article, we will be focusing on the class of functions brought about by two mapping vectors from

$m^2$

-ary function which takes as input all entries of M and delivers a scalar value. There are plenty of options for such a function. In this article, we will be focusing on the class of functions brought about by two mapping vectors from

![]() $\mathbb{R}^m$

, called

$\mathbb{R}^m$

, called

![]() $\alpha$

and

$\alpha$

and

![]() $\beta$

, mapping a matrix M to the scalar value r via

$\beta$

, mapping a matrix M to the scalar value r via

Again, we can motivate this choice along the lines of transitional plausibility. If, as argued in the previous section,

![]() $\alpha$

represents an “initial mental state,” then, for a sequence s, the vector

$\alpha$

represents an “initial mental state,” then, for a sequence s, the vector

![]() $v_s = \alpha M_s \in \mathbb{R}^m$

represents the mental state after receiving the sequence s. Then

$v_s = \alpha M_s \in \mathbb{R}^m$

represents the mental state after receiving the sequence s. Then

![]() $r_s = \alpha M_s \beta^\top = v_s\beta^\top$

is the scalar obtained from a linear combination of the entries of

$r_s = \alpha M_s \beta^\top = v_s\beta^\top$

is the scalar obtained from a linear combination of the entries of

![]() $v_s$

, that is,

$v_s$

, that is,

![]() $r_s=b_1\cdot v(1) + \ldots + b_m\cdot v(m)$

, where

$r_s=b_1\cdot v(1) + \ldots + b_m\cdot v(m)$

, where

![]() $\beta = (b_1 \quad \cdots \quad b_m)$

.

$\beta = (b_1 \quad \cdots \quad b_m)$

.

Clearly, choosing appropriate “mapping vectors”

![]() $\alpha$

and

$\alpha$

and

![]() $\beta$

will dependent on the NLP task and the problem to be solved. However, it turns out that with a proper choice of the token-to-matrix mapping, we can restrict

$\beta$

will dependent on the NLP task and the problem to be solved. However, it turns out that with a proper choice of the token-to-matrix mapping, we can restrict

![]() $\alpha$

and

$\alpha$

and

![]() $\beta$

to a very specific form.

$\beta$

to a very specific form.

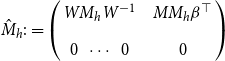

To this end, let

which only moderately restricts the expressivity of our model as made formally precise in the following theorem.

Theorem 1 Given matrices

![]() $M_1,\ldots,M_\ell \in \mathbb{R}^{m\times m}$

and vectors

$M_1,\ldots,M_\ell \in \mathbb{R}^{m\times m}$

and vectors

![]() $\alpha,\beta \in \mathbb{R}^{m}$

, there are matrices

$\alpha,\beta \in \mathbb{R}^{m}$

, there are matrices

![]() $\hat{M}_1,\ldots,\hat{M}_\ell \in \mathbb{R}^{(m+1)\times (m+1)}$

such that for every sequence

$\hat{M}_1,\ldots,\hat{M}_\ell \in \mathbb{R}^{(m+1)\times (m+1)}$

such that for every sequence

![]() $i_1\cdots i_k$

of numbers from

$i_1\cdots i_k$

of numbers from

![]() $\{1,\ldots,\ell\}$

holds

$\{1,\ldots,\ell\}$

holds

In words, this theorem guarantees that for every CMSM-based scoring model with arbitrary vectors

![]() $\alpha$

and

$\alpha$

and

![]() $\beta$

there is another such model (with dimensionality increased by one), where

$\beta$

there is another such model (with dimensionality increased by one), where

![]() $\alpha$

and

$\alpha$

and

![]() $\beta$

are distinct unit vectors. This theorem justifies our choice mentioned above.

$\beta$

are distinct unit vectors. This theorem justifies our choice mentioned above.

6.3 Boolean values

Boolean values can be also obtained from matrix representations. Obviously, any function

![]() $\zeta: \mathbb{R}^{m\times m} \to \{\textrm{true},\textrm{false}\}$

can be seen as a binary classifier which accepts or rejects a sequence of tokens as being part of the formal language

$\zeta: \mathbb{R}^{m\times m} \to \{\textrm{true},\textrm{false}\}$

can be seen as a binary classifier which accepts or rejects a sequence of tokens as being part of the formal language

![]() $L_\zeta$

defined by

$L_\zeta$

defined by

One option for defining such a function

![]() $\zeta$

is to first obtain a scalar (for instance using the mapping discussed before), as described in the preceding section and then compare that scalar against a given threshold value.Footnote

e

Of course, one can also perform several such comparisons. This idea gives rise to the notion of matrix grammars.

$\zeta$

is to first obtain a scalar (for instance using the mapping discussed before), as described in the preceding section and then compare that scalar against a given threshold value.Footnote

e

Of course, one can also perform several such comparisons. This idea gives rise to the notion of matrix grammars.

Definition 1 (Matrix Grammars). Let

![]() $\Sigma$

be an alphabet. A matrix grammar

$\Sigma$

be an alphabet. A matrix grammar

![]() $\mathcal{M}$

of degree m is defined as the pair

$\mathcal{M}$

of degree m is defined as the pair

![]() $\langle\ [\!\!\,[ \cdot ]\,\!\!],\ AC\rangle$

where

$\langle\ [\!\!\,[ \cdot ]\,\!\!],\ AC\rangle$

where

![]() $[\!\!\,[ \cdot ]\,\!\!]$

is a mapping from

$[\!\!\,[ \cdot ]\,\!\!]$

is a mapping from

![]() $\Sigma$

to

$\Sigma$

to

![]() $m\times m$

matrices and

$m\times m$

matrices and

![]() $AC = \{\langle\alpha_1,\beta_1,r_1\rangle, \ldots, \langle\alpha_\ell,\beta_\ell,r_\ell\rangle\}$

with

$AC = \{\langle\alpha_1,\beta_1,r_1\rangle, \ldots, \langle\alpha_\ell,\beta_\ell,r_\ell\rangle\}$

with

![]() $\alpha_1,\beta_1,\ldots,\alpha_\ell,\beta_\ell \in \mathbb{R}^m$

and

$\alpha_1,\beta_1,\ldots,\alpha_\ell,\beta_\ell \in \mathbb{R}^m$

and

![]() $r_1,\ldots,r_\ell\in \mathbb{R}$

is a finite set of acceptance conditions. The language generated by

$r_1,\ldots,r_\ell\in \mathbb{R}$

is a finite set of acceptance conditions. The language generated by

![]() $\mathcal{M}$

(denoted by

$\mathcal{M}$

(denoted by

![]() $L(\mathcal{M})$

) contains a token sequence

$L(\mathcal{M})$

) contains a token sequence

![]() $\sigma_1\ldots\sigma_k\in\Sigma^*$

exactly if

$\sigma_1\ldots\sigma_k\in\Sigma^*$

exactly if

![]() $\alpha_i [\!\!\,[ \sigma_1 ]\,\!\!] \ldots [\!\!\,[ \sigma_k ]\,\!\!] \beta^T_i \geq r_i$

for all

$\alpha_i [\!\!\,[ \sigma_1 ]\,\!\!] \ldots [\!\!\,[ \sigma_k ]\,\!\!] \beta^T_i \geq r_i$

for all

![]() $i\in\{1,\ldots, \ell\}$

. We will call a language L matricible if

$i\in\{1,\ldots, \ell\}$

. We will call a language L matricible if

![]() $L=L(\mathcal{M})$

for some matrix grammar

$L=L(\mathcal{M})$

for some matrix grammar

![]() $\mathcal{M}$

.

$\mathcal{M}$

.

Then, the following proposition is a direct consequence from the preceding section.

Proposition 1 Regular languages are matricible.

However, as demonstrated by the subsequent examples, many non-regular and even non-context-free languages are also matricible, hinting at the expressivity of matrix grammars.

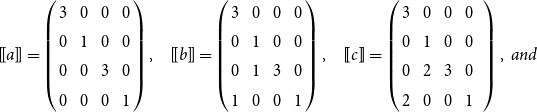

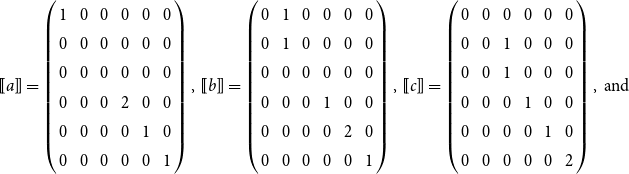

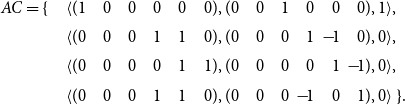

Example 1 Define

![]() $\mathcal{M}\langle\ \mbox{$[\,\!\![$}{\,\cdot\,}\mbox{$]\,\!\!]$},\ AC\rangle$

with

$\mathcal{M}\langle\ \mbox{$[\,\!\![$}{\,\cdot\,}\mbox{$]\,\!\!]$},\ AC\rangle$

with

![]() $\Sigma = \{a, b, c\}$

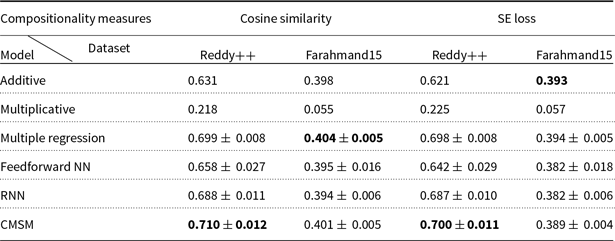

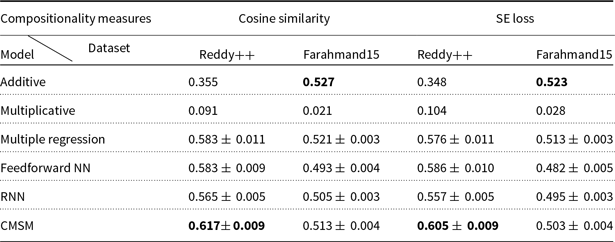

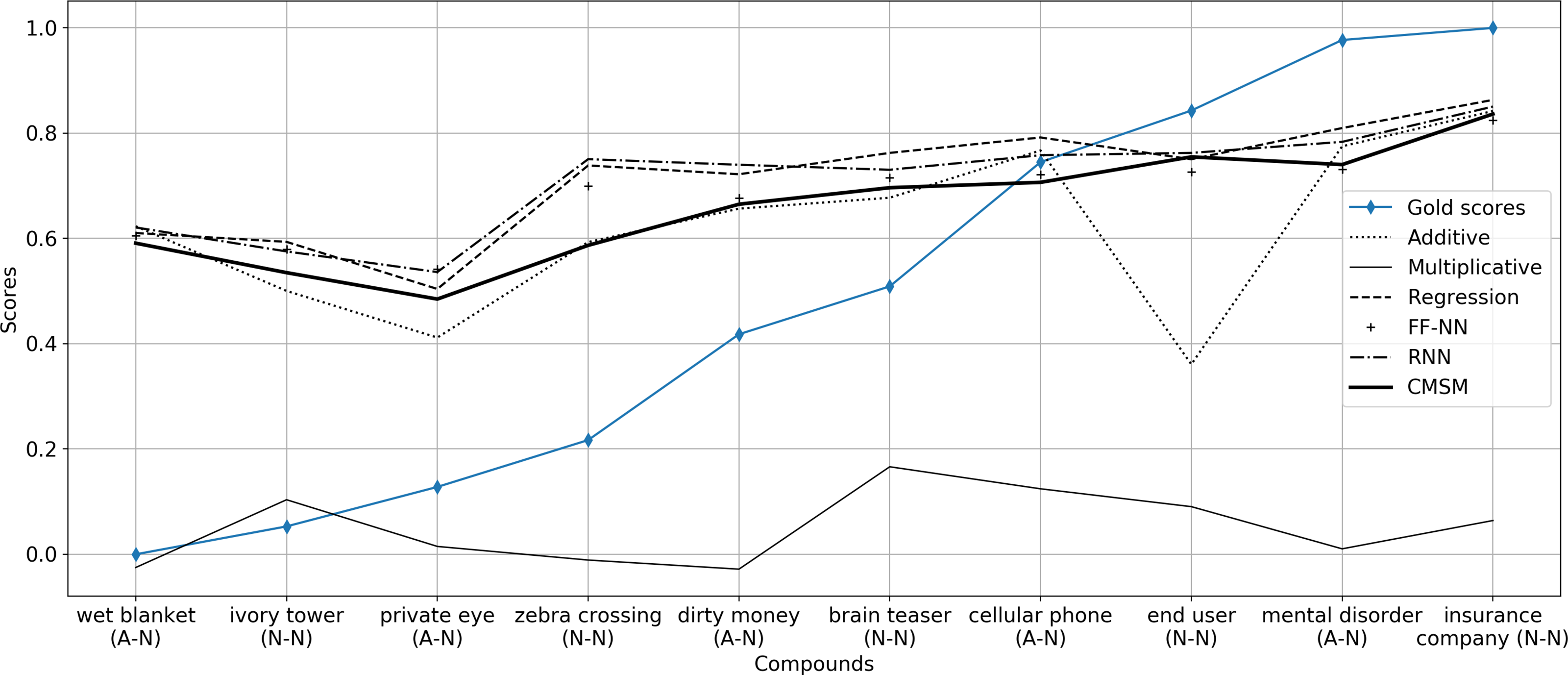

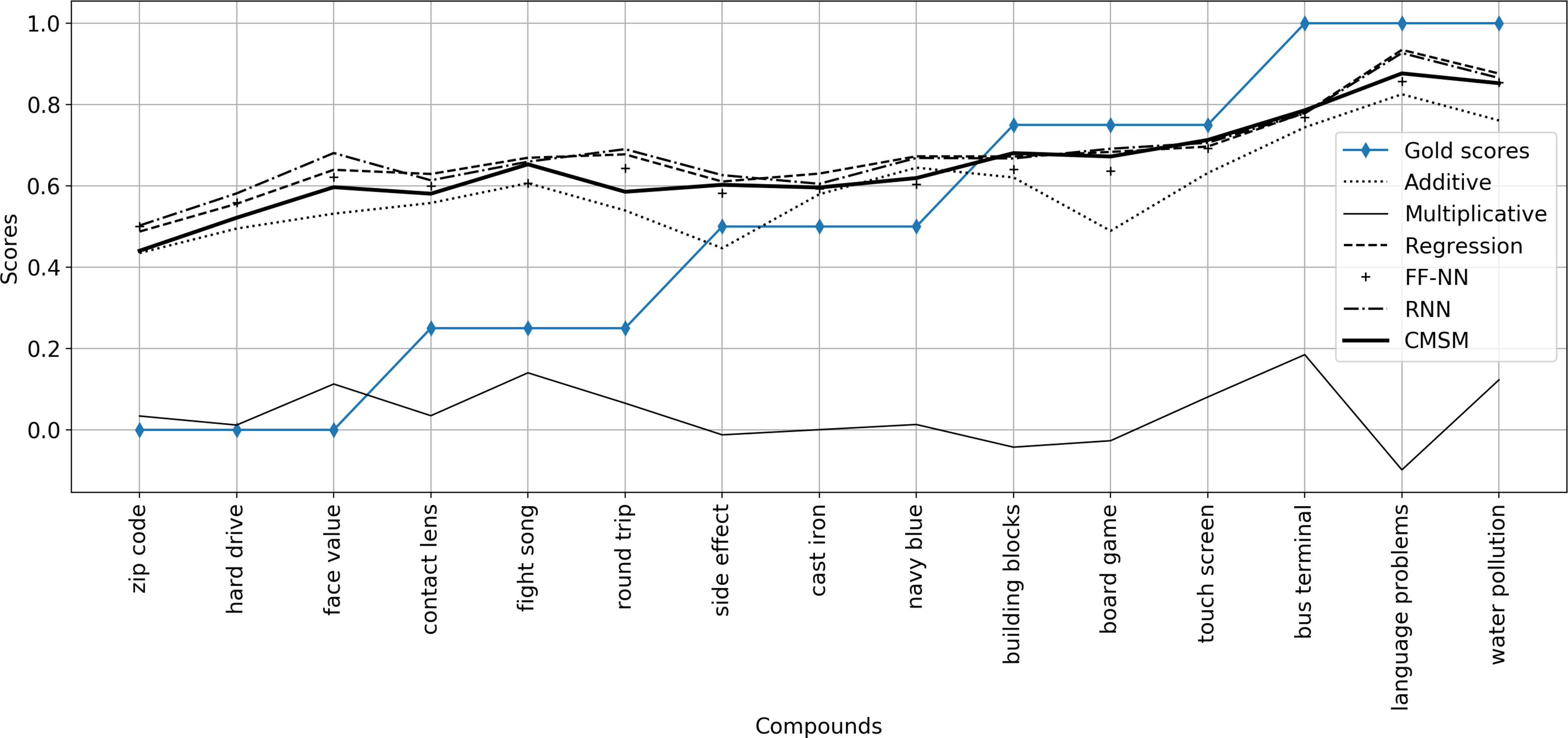

as well as