Introduction

Data-driven information is everywhere today and springs from vast sources, ranging from the internet to bodily functions. Yet developing meaningful ways of information extraction, processing, and representation remains a necessity. Such information management must not only be meaningful but also conform to societal needs for sustainability. Any technological attempt at energy-efficient management of information can be traced back to the never-ending quest to understand and imitate the inner workings of the brain that give rise to intelligence. Harnessing brain efficiency at the technological level can be condensed to the term “brain-inspired computing.”

An intelligent agent is a system that perceives and interacts with the environment in order to achieve its goals in an autonomous and rational manner. This interaction is dynamic; therefore, intelligent agents are adaptive and have learning capabilities that improve their performance over time. Interaction is also bidirectional, which means that a fully functional system consists of sensors to acquire data from the environment, processing units to perceive the environment, and actuators that act upon that environment. Nevertheless, the borderline between these elements is blurred, as properties of one element can fuse into another. Indeed, this also applies to living organisms wherein sensing, processing, and actuation are not centralized into a single entity, but rather are distributed all over the body.Reference Kandel and Mack1 Intelligent agents can take the form of software or hardware.

A popular approach for software-based agents is the representation of information processing aspects found in biological systems with artificial neural networks (ANNs), a field commonly known as machine learning or artificial intelligence. This approach is based on executing algorithms that loosely represent the function of the nervous system on traditional computer architectures. Today, ANNs have spread across a variety of domains, including object/pattern and spoken language recognition, data mining in research fields such as chemistry and medicine, robotics, autonomous driving, as well as strategy planning for decision-/policymaking.Reference LeCun, Bengio and Hinton2,Reference Silver, Huang, Maddison, Guez, Sifre, van den Driessche, Schrittwieser, Antonoglou, Panneershelvam, Lanctot, Dieleman, Grewe, Nham, Kalchbrenner, Sutskever, Lillicrap, Leach, Kavukcuoglu, Graepel and Hassabis3 Although ANNs are successful in these shorter-term applications, they still face major challenges in approaching the level of biological intelligence and energy efficiency.Reference Heaven4–Reference Marcus6 This insufficiency stems mainly from the fact that ANNs are an abstract representation of the nervous system.Reference Heaven4–Reference Marcus6

On the other hand, functions of neural processing can be directly emulated with actual electronic devices and circuits in hardware agents. This hardware-based paradigm of brain-inspired processing is also known as neuromorphic computing.Reference Mead7,Reference Indiveri, Linares-Barranco, Hamilton, van Schaik, Etienne-Cummings, Delbruck, Liu, Dudek, Häfliger, Renaud, Schemmel, Cauwenberghs, Arthur, Hynna, Folowosele, Saighi, Serrano-Gotarredona, Wijekoon, Wang and Boahen8 Prominent examples of neuromorphic computing with contemporary silicon technology include IBM's TrueNorth and Intel's Loihi chip.Reference Merolla, Arthur, Alvarez-Icaza, Cassidy, Sawada, Akopyan, Jackson, Imam, Guo, Nakamura, Brezzo, Vo, Esser, Appuswamy, Taba, Amir, Flickner, Risk, Manohar and Modha9,Reference Rajendran, Sebastian, Schmuker, Srinivasa and Eleftheriou10 Also of particular interest, owing to its potential energy efficiency and scaling advantages over silicon technology, is the direct mapping of ANNs on dedicated circuits made of emerging, non-CMOS technologies such as memristors.Reference Prezioso, Merrikh-Bayat, Hoskins, Adam, Likharev and Strukov11–Reference Wang, Wu, Burr, Hwang, Wang, Xia and Yang13 Key computational tasks related to ANNs can be executed more directly with these emerging technologies. Although promising, technical challenges such as reliability, device-to-device variation, and large-scale integration still hinder the transfer of these emerging neuromorphic computing technologies to the domain of consumer electronics.Reference Adam, Khiat and Prodromakis14

In contrast to software, an actual biological system requires a physical form of interfacing with actual devices and circuits. This combination of hardware and biological systems constitutes an unconventional type of hybrid intelligent agent that merges the two domains.Reference Park, Lee, Kim, Seo, Go and Lee15–Reference van Doremaele, Gkoupidenis and van de Burgt18 Such a hybrid approach is requisite for the local processing of biological signals, extraction of specific patterns, and realization of devices that act upon the environment in a biologically relevant fashion.Reference van Doremaele, Gkoupidenis and van de Burgt18 This bidirectionality requires that both domains, hardware and biological, speak a similar language and operate on the same time scale to enable real-time interaction.Reference Broccard, Joshi, Wang and Cauwenberghs16 In other words, the development of hybrid agents capable of achieving this is an essential requirement for fully autonomous bioelectronics applications that face specific bandwidth constraints in communication. Such agents will potentially enhance human–machine interaction in areas such as neurorehabilitation, neuroengineering, and prosthetics, as well as in basic research.Reference Broccard, Joshi, Wang and Cauwenberghs16,Reference Wan, Cai, Wang, Qian, Huang and Chen19–Reference Lee and Lee21

The field of hardware-based neuromorphic computing has rapidly grown over the past several years, with the main advancements driven by the development of inorganic materials and devices.Reference Tsai, Ambrogio, Narayanan, Shelby and Burr22,Reference Li, Wang, Midya, Xia and Yang23 Although successful, this approach has revealed limitations in certain aspects of neuromorphic computing. Hardware-based agents, for instance, are still struggling to reach the energy efficiency of the brain.Reference Tang, Yuan, Shen, Wang, Rao, He, Sun, Li, Zhang, Li, Gao, Qian, Bi, Song, Yang and Wu24 In addition, biorealistic emulation (or biophysical realism) of the neural processing functions is questionable in many cases of inorganic devices, since the emulation is mainly based on electronic devices.Reference Tang, Yuan, Shen, Wang, Rao, He, Sun, Li, Zhang, Li, Gao, Qian, Bi, Song, Yang and Wu24

However, biological processing relies on ionic, chemical, and biochemical signals and its basic building blocks are immersed in a common electrochemical environment.Reference Kandel and Mack1 Moreover, direct interfacing of inorganic devices with biology poses obstacles. Many inorganic devices are unable to operate in biologically relevant environments, due to the fact that their microscopic mechanisms are in many cases sensitive to moisture.Reference Valov and Tsuruoka25 Therefore, their operation demands complex packaging, or indirect contact with a biological environment.Reference Gupta, Serb, Khiat, Zeitler, Vassanelli and Prodromakis26 This leads to unavoidable mismatch between neuromorphic devices and biology that hinders further development of hybrid agents.

Among other emerging technologies, devices based on organic materials are the latest entry in the list for brain-inspired computing.Reference van de Burgt, Melianas, Keene, Malliaras and Salleo27,Reference Sun, Fu and Wan28 Organic materials have shown a level of maturity and enjoy widespread acceptance; for instance, organic light-emitting diodes (OLEDs) are already in consumer electronics.Reference Oh, Shin, Nam, Ahn, Cha and Yeo29,Reference Chen, Lee, Lin, Chen and Wu30 The field of organic bioelectronics is also rapidly advancing, demonstrating a viable route for organic materials in interfacing biology with electronics.Reference Someya, Bao and Malliaras31,Reference Rogers, Malliaras and Someya32

This work provides a brief historical overview of the basic biophysical and artificial models of the building blocks that are found in the nervous system. These models are the main source of inspiration for the realization of devices for brain-like computing. We also review the latest advancements in organic neuromorphic devices for the implementation of hardware and hybrid intelligent agents (for more detailed reading about organic neuromorphic electronics, please refer to the topical reviewsReference van de Burgt, Melianas, Keene, Malliaras and Salleo27,Reference Ling, Koutsouras, Kazemzadeh, Burgt, Yan and Gkoupidenis33,Reference Goswami, Goswami and Venkatesan34). A comparison between biophysical and artificial models of the nervous system is provided, indicating the gap between them in several aspects of neural processing. Due to their intrinsic properties, organic neuromorphic devices have the potential to more precisely capture the diversity of biological neural processing and therefore enhance biophysical realism. Finally, we briefly discuss future challenges and directions for the field of organic neuromorphic devices.

Biological neural processing

The basic building blocks of biological networks are the neurons and synapses (Figure 1a).Reference Kandel and Mack1 A typical neuron consists of the soma, axon, and dendrites. Neurons are electrically excitable cells that produce, process, and transmit the basic communication event in biological processing. This event is an electrical impulse, the action potential, which consists of an ionic current flowing through the cell. Input signals are collected from the dendrites in the soma and accumulate over time. Above a certain threshold, the neuron fires an action potential that propagates through the axon toward the next neuron. Neurons are connected with each other through nanogap junctions (~nm), the synapses.

Figure 1. Neural processing: Biological versus artificial implementation. (a) The basic building blocks of biological neural processing are the neurons and synapses. Neurons are electrically excitable cells that produce action potentials. Input signals that are collected at the dendrites accumulate over time, and above a certain threshold, the neuron fires an action potential toward the next neuron. Neurons are connected with each other through the synapses. Electrical activity at a presynaptic neuron modulates the connection strength between the pre- and postsynaptic neuron. The connection strength is also known as synaptic weight, w. (b) Artificial implementation of a neuron. McCulloch–Pitts neuron: Binary inputs are summed toward an output with a stepwise activation function f as a threshold, and the neuron returns a binary output. Perceptron: In a perceptron, synaptic weights wi are added to the inputs of a McCulloch–Pitts neuron for taking into account the connection strength between neurons. The activation function f in perceptrons is nonlinear. Although not biologically realistic, perceptron still represents the basic building block of contemporary artificial neural networks.Reference McCulloch and Pitts37,Reference Rosenblatt40

A synapse conveys electrical or chemical signals between pre- and postsynaptic neurons. There are two types of synapses, chemical and electrical. In chemical synapses, action potentials that arrive at the presynaptic neuron trigger the release of chemical messengers from the presynaptic to the postsynaptic neuron. These messengers, known as neurotransmitters, bind to specific receptors of the postsynaptic terminal and thereby modulate the voltage of the postsynaptic neuron. In electrical synapses, the voltage of the postsynaptic neuron is modulated with ionic currents through the synapse when action potentials arrive at the presynaptic neuron. In both cases, electrical activity at the presynaptic neuron modulates the connection strength between the pre- and postsynaptic neurons. This connection strength can be quantified and is also known as synaptic weight, w.

Variation of w over time, known as synaptic plasticity, happens in a range of time scales. Short-term plasticity lasts for milliseconds to minutes, while long-term plasticity persists for longer periods of time, ranging from minutes to the lifetime of the brain itself.Reference Abbott and Regehr35,Reference Lamprecht and LeDoux36 Short-term plasticity serves various computational tasks, while long-term synaptic changes support the development of neural networks, and are considered as the biological substrate of learning and memory.Reference Abbott and Regehr35 On top of that, biological neural networks are immersed in a common, “wet” electrochemical environment that consists of various ionic and biochemical carriers of information. This “wet” environment is the source of information carriers and provides unprecedented modes of communication between distant neural populations. The plethora of phenomena found in biological neural processing is the main source of inspiration for the artificial implementation of neural networks and brain-like computing.

Organic devices for brain-inspired computing

Artificial implementation

The field of ANNs aims to mimic and harness the capability of the brain to process information in a highly efficient manner. The history of ANNs can be traced back to the early 1940s, when McCulloch and Pitts introduced a simplified model of a neuron (Figure 1b).Reference McCulloch and Pitts37 A McCulloch–Pitts neuron receives binary inputs, and by adding them toward an output with a stepwise activation function as a threshold, it returns a binary output. In that sense, McCulloch–Pitts neurons are able to perform simple Boolean functions. Indeed, when arranged in a network, McCulloch–Pitts neurons capture the essence of logical computation. Even though the McCulloch–Pitts model is only loosely analogous to biological neurons, it reproduces some key principles of neural processing such as the summation of presynaptic inputs in the dendrites, and the binary “all-or-nothing” action potential in neurons through a stepwise activation function.Reference Koch38 Although the introduction of McCulloch–Pitts neurons represents a milestone in ANNs, the rigidity of the model becomes obvious after closer examination. Every input has the same significance toward its summation at the output, while simultaneously this significance cannot be dynamically modulated. Soon after the introduction of McCulloch–Pitts neurons, it became apparent, by the pioneering work of Hebb, that the activity-dependent development or adaptability of biological neural networks was the essence of learning.Reference Hebb39
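The behavior of a McCulloch–Pitts neuron described above can be illustrated with a minimal Python sketch. The function names and the threshold values chosen for the AND and OR gates are illustrative assumptions, not taken from the original model description.

```python
def mcculloch_pitts(inputs, threshold):
    """Binary threshold unit: sum the binary inputs and compare to a threshold.

    All inputs carry the same significance (no weights), as in the
    McCulloch-Pitts model. The threshold value is chosen by the user.
    """
    if any(x not in (0, 1) for x in inputs):
        raise ValueError("inputs must be binary (0 or 1)")
    # Stepwise activation: "all-or-nothing" output.
    return 1 if sum(inputs) >= threshold else 0


def logic_and(a, b):
    # Both inputs must be active to reach the threshold.
    return mcculloch_pitts([a, b], threshold=2)


def logic_or(a, b):
    # A single active input is enough to reach the threshold.
    return mcculloch_pitts([a, b], threshold=1)


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "AND:", logic_and(a, b), "OR:", logic_or(a, b))
```

Because every input counts equally and nothing can be adjusted except the threshold, the sketch also makes the rigidity of the model visible: there is no parameter that could be tuned by learning.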

A revised version of the McCulloch–Pitts model, known as a perceptron, was introduced by Rosenblatt in the late 1950s (Figure 1b).Reference Rosenblatt40 A weight, wi, is now assigned to every input, for expressing the strength of influence of each input xi to the output y, and is analogous to the connection strength between neurons through a synapse. This tunable weight wi allows for training the network by modulating wi, in order to perform specific computational tasks through repetitive presentation of (labeled or known) examples. This process captures the essence of biological learning, in which the repetition of a task or external stimulus induces memory toward a desired behavior/function. Mathematically, the output of a perceptron is given by the weighted summation of inputs or  $y=f\left({\sum\nolimits_{i}}{w_{i} x_{i}}\right)$. f is the activation function, which defines the output for a given set of inputs and is an abstract representation of the firing rate of action potentials in biological neurons.Reference Hodgkin and Huxley41 Here, f is a stepwise function in binary McCulloch–Pitts neurons and nonlinear in perceptrons.Reference Ding, Qian and Zhou42 Although primitive in form, perceptrons still constitute the predecessor building blocks of contemporary ANNs that enabled a wide range of real-world applications and are still useful in many simple classification applications.
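As a rough illustration of how tunable weights enable training through repeated presentation of labeled examples, the following Python sketch applies the classic perceptron learning rule to the AND function. The learning rate, the number of epochs, the step activation, and the use of a constant first input $x_0 = 1$ (so that its weight acts as a bias) are assumptions made for this example only.

```python
def step(z):
    # Stepwise activation function f (assumed here for simplicity).
    return 1 if z >= 0 else 0


def predict(weights, x):
    # y = f(sum_i w_i * x_i)
    return step(sum(w * xi for w, xi in zip(weights, x)))


def train_perceptron(samples, epochs=20, learning_rate=1):
    """Perceptron learning rule: w_i <- w_i + lr * (target - y) * x_i."""
    weights = [0] * len(samples[0][0])
    for _ in range(epochs):
        errors = 0
        for x, target in samples:
            error = target - predict(weights, x)
            if error != 0:
                errors += 1
                weights = [w + learning_rate * error * xi
                           for w, xi in zip(weights, x)]
        if errors == 0:  # every example is classified correctly
            break
    return weights


# Labeled examples for AND; the leading 1 is the constant bias input x_0.
AND_SAMPLES = [((1, 0, 0), 0), ((1, 0, 1), 0), ((1, 1, 0), 0), ((1, 1, 1), 1)]

trained_weights = train_perceptron(AND_SAMPLES)

if __name__ == "__main__":
    for x, target in AND_SAMPLES:
        print(x[1:], "->", predict(trained_weights, x), "(target", target, ")")
```

The same loop cannot learn functions that are not linearly separable, such as XOR, which is why such functions require a network of units rather than a single perceptron.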

The figure of merit for the implementation of hardware-based ANNs with actual electronic devices is the emulation and implementation of the synaptic weight, w, as it allows for trainable circuits. In CMOS-based neuromorphic electronics, w is mapped in a combination of binary memories (4-, 8-bit), while in emerging non-CMOS electronics, w is mapped in the resistance level of a nonvolatile memory device. A nonvolatile memory cell is an electronic device that can store single or multiple memory states (defined as a resistance level) for a certain period of time, commonly termed data (or state) retention. The states can be modulated with an external stimulus (for instance electrical, optical, chemical, or mechanical); they can also be accessed/read by a low-amplitude external stimulus that does not interfere with the memory states. The mapping of w requires devices to be tuned in an analog fashion or exhibit multiple resistance states, to emulate the gradual coupling strength between biological neurons.Reference Tsai, Ambrogio, Narayanan, Shelby and Burr22,Reference Burr43

In contrast to the other extreme of traditional binary memories (with 0s and 1s), gradual programming of memory allows for fine-tuning of the relationship between the inputs and the output of a perceptron during the weight update in a training process.Reference Tsai, Ambrogio, Narayanan, Shelby and Burr22,Reference Burr43 Although biological synapses are nonlinear (refer to the next section), linear and symmetric programming of analog memories is preferable for practical purposes, as it allows for weight update without any prior knowledge of the running weight (blind update).Reference Jacobs-Gedrim, Agarwal, Knisely, Stevens, Heukelom, Hughart, Niroula, James and Marinella44 Consequently, blind update relaxes the need for continuous access/reading of the memory state after each programming cycle, and this reduces the computational cost during training. In fact, this is essential for parallel updating and training.Reference Keene, Melianas, Fuller, van de Burgt, Talin and Salleo45
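Why linear and symmetric programming permits a blind update can be sketched with two toy device models in Python. Both models, the normalized conductance range, the number of states, and the saturation rate are hypothetical choices for illustration and do not describe any specific device from the literature.

```python
G_MIN, G_MAX = 0.0, 1.0                 # normalized conductance range (assumed)
N_STATES = 100                          # number of analog states (assumed)
STEP = (G_MAX - G_MIN) / N_STATES       # conductance change per pulse


def linear_pulse(g, polarity):
    """Ideal linear, symmetric device: every pulse changes g by the same step."""
    return min(G_MAX, max(G_MIN, g + polarity * STEP))


def saturating_pulse(g, polarity, rate=0.05):
    """Toy nonlinear device: the step shrinks as g approaches its bounds."""
    if polarity > 0:
        return g + rate * (G_MAX - g)
    return g - rate * (g - G_MIN)


def apply_pulses(pulse_fn, g, n_pulses, polarity):
    for _ in range(n_pulses):
        g = pulse_fn(g, polarity)
    return g


def blind_pulse_count(delta_w):
    """Number and polarity of pulses for a desired weight change.

    No read of the present state is needed; this is only valid for the
    linear device (and away from the conductance bounds).
    """
    polarity = 1 if delta_w > 0 else -1
    return round(abs(delta_w) / STEP), polarity


if __name__ == "__main__":
    n, pol = blind_pulse_count(0.1)
    for start in (0.2, 0.6):
        lin = apply_pulses(linear_pulse, start, n, pol) - start
        sat = apply_pulses(saturating_pulse, start, n, pol) - start
        print(f"start={start}: linear change={lin:.3f}, nonlinear change={sat:.3f}")
```

For the linear model the same pulse train produces the same weight change from any starting state, whereas for the saturating model the change depends on the starting state, so the state would have to be read before each update.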

A variety of neuromorphic functions have been recently demonstrated with organic devices made of electrochemical,Reference Gkoupidenis, Schaefer, Garlan and Malliaras46–Reference Lei, Liu, Xia, Gao, Xu, Wang, Yin and Liu53 electronic,Reference Alibart, Pleutin, Guérin, Novembre, Lenfant, Lmimouni, Gamrat and Vuillaume54 and ferroelectricReference Amiri, Heidler, Müllen, Gkoupidenis and Asadi55–Reference Tian, Liu, Yan, Wang, Zhao, Zhong, Xiang, Sun, Peng, Shen, Lin, Dkhil, Meng, Chu, Tang and Duan57 phenomena. Moreover, basic aspects of short- and long-term synaptic plasticity have been emulated with organic devices over the past few years (Figure 2a–b).Reference Gkoupidenis, Schaefer, Garlan and Malliaras46,Reference Gkoupidenis, Schaefer, Strakosas, Fairfield and Malliaras47,Reference van de Burgt, Lubberman, Fuller, Keene, Faria, Agarwal, Marinella, Talin and Salleo49,Reference Goswami, Matula, Rath, Hedström, Saha, Annamalai, Sengupta, Patra, Ghosh, Jani, Sarkar, Motapothula, Nijhuis, Martin, Goswami, Batista and Venkatesan51,Reference Majumdar, Tan, Qin and van Dijken56–Reference Fuller, Keene, Melianas, Wang, Agarwal, Li, Tuchman, James, Marinella, Yang, Salleo and Talin67 Such devices have shown excellent analog memory phenomena (~500 states)Reference van de Burgt, Lubberman, Fuller, Keene, Faria, Agarwal, Marinella, Talin and Salleo49 and endurance ($>10^{9}$ switching cycles),Reference Goswami, Matula, Rath, Hedström, Saha, Annamalai, Sengupta, Patra, Ghosh, Jani, Sarkar, Motapothula, Nijhuis, Martin, Goswami, Batista and Venkatesan51,Reference Fuller, Keene, Melianas, Wang, Agarwal, Li, Tuchman, James, Marinella, Yang, Salleo and Talin67 with ultralow operation voltage (~mV),Reference van de Burgt, Lubberman, Fuller, Keene, Faria, Agarwal, Marinella, Talin and Salleo49 low switching energy (~fJ/μm²),Reference Keene, Melianas, Fuller, van de Burgt, Talin and Salleo45 and sufficient data retention characteristics (~hours).Reference Keene, Melianas, van de Burgt and Salleo68 Among various device concepts, electrochemical devices based on organic mixed-conductors have recently emerged for brain-inspired computing (organic electrochemical transistors or OECTs, and electrochemical organic neuromorphic devices or ENODes).Reference van de Burgt, Melianas, Keene, Malliaras and Salleo27,Reference Ling, Koutsouras, Kazemzadeh, Burgt, Yan and Gkoupidenis33 Mapping synaptic weights is facile in electrochemical organic neuromorphic devices, as almost linear tuning of the device resistance is achieved by proper programming conditions and by the use of a third, gate terminal that decouples the read and write actions.Reference Keene, Melianas, Fuller, van de Burgt, Talin and Salleo45,Reference Fuller, Keene, Melianas, Wang, Agarwal, Li, Tuchman, James, Marinella, Yang, Salleo and Talin67 In situ polymerization of the active organic material during the device operation even allows for an evolvable type of organic electrochemical transistor that emulates the formation of new synapses in biological networks (synaptogenesis).Reference Gerasimov, Gabrielsson, Forchheimer, Stavrinidou, Simon, Berggren and Fabiano66,Reference Chklovskii, Mel and Svoboda69,Reference Eickenscheidt, Singler and Stieglitz70 A particularly appealing aspect is that organic neuromorphic devices are compatible with low-cost, additive fabrication techniques such as inkjet printing.Reference Liu, Liu, Li, Lau, Wu, Zhang, Li, Chen, Fu, Draper, Cao and Zhou65,Reference Molina-Lopez, Gao, Kraft, Zhu, Öhlund, Pfattner, Feig, Kim, Wang, Yun and Bao71 Moreover, organic neuromorphic devices exhibit unconventional form factors such as integration in flexible/stretchable substrates,Reference Lee, Oh, Xu, Kim, Kim, Kang, Kim, Son, Tok, Park, Bao and Lee72,Reference Kim, Chortos, Xu, Liu, Oh, Son, Kang, Foudeh, Zhu, Lee, Niu, Liu, Pfattner, Bao and Lee73 and operation in aqueous environments.Reference Gkoupidenis, Schaefer, Garlan and Malliaras46 These unconventional form factors are of particular interest for the fusion of bioelectronics and neuromorphics as well as for emulating the actual neural environment, which is in principle “wet.” Organic devices and small-scale circuits have also been leveraged for the realization of neuro-inspired sensory and actuation systems.Reference Lee, Oh, Xu, Kim, Kim, Kang, Kim, Son, Tok, Park, Bao and Lee72,Reference Kim, Chortos, Xu, Liu, Oh, Son, Kang, Foudeh, Zhu, Lee, Niu, Liu, Pfattner, Bao and Lee73

Figure 2. Organic devices for brain-inspired computing. (a) Organic nonvolatile memory devices can be used for mapping the synaptic weight, w, of a perceptron in an artificial neural network (ANN). An electrochemical organic neuromorphic device (ENODe) exhibits excellent analog memory phenomena (for emulating short- and long-term synaptic plasticity functions) and endurance with ultralow operation voltage, low switching energy, and sufficient data retention characteristics (the conductance of the channel can be modulated in an analog fashion by applying a series of input pulses).Reference van de Burgt, Lubberman, Fuller, Keene, Faria, Agarwal, Marinella, Talin and Salleo49 (b) Organic neuromorphic devices are compatible with low-cost fabrication techniques such as inkjet printing.Reference Molina-Lopez, Gao, Kraft, Zhu, Öhlund, Pfattner, Feig, Kim, Wang, Yun and Bao71 (c) Mapping of the perceptron function in crossbar configuration. Every cell in the crossbar consists of an analog memory device and an access device. The perceptron function, or the weighted summation of inputs $\sum\nolimits_{i} w_{i} x_{i}$, is a direct result of Ohm's law and Kirchhoff's current law, $I=\sum\nolimits_{i} w_{i} v_{i}$, in a crossbar array.Reference van de Burgt, Melianas, Keene, Malliaras and Salleo27 (d) ANN with ENODes. Every cell consists of an ENODe and an ionic diode as an access device (ionic floating-gate memory). The network is trained in parallel operation to function as an exclusive OR (XOR) logic gate.Reference Fuller, Keene, Melianas, Wang, Agarwal, Li, Tuchman, James, Marinella, Yang, Salleo and Talin67 (e) Concepts of local data processing and feature extraction in bioelectronics with neuromorphic systems based on organic devices. In this example, a neuromorphic system would be able to detect brain seizures and initiate the operation of a drug delivery device for suppressing the seizure. Operation of the system in a closed-loop manner is essential for fully autonomous applications.Reference van Doremaele, Gkoupidenis and van de Burgt18

The next logical outcome for brain-inspired computing with organic materials is the implementation of ANNs, since the emergence of analog memory devices allows for the mapping of synaptic weights in a network (Figure 2c–e). Of particular interest when implementing hardware-based ANNs is the mapping of the perceptron function $\sum\nolimits_{i} w_{i} x_{i}$ (weighted summation of inputs) in a crossbar configuration (Figure 2c).Reference van de Burgt, Melianas, Keene, Malliaras and Salleo27 This function can be mapped directly in crossbars according to Ohm's law and Kirchhoff's current law, $I=\sum\nolimits_{i} w_{i} v_{i}$, where $v_{i}$ is an input voltage, the weight $w_{i}$ is represented by the conductance of the corresponding cell, and I is the total current of a crossbar column. Functional mapping of the perceptron operation in a crossbar configuration requires that parasitic communication (or sneak paths) between neighboring cells be eliminated.Reference Tsai, Ambrogio, Narayanan, Shelby and Burr22 To prevent these issues, access devices are commonly used that allow programming of selected devices only.Reference Chen74 Parasitic-free operation allows for parallel device access that reduces the computational time and cost dramatically during training.
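The column-current relation $I=\sum\nolimits_{i} w_{i} v_{i}$ can be sketched numerically in Python for an idealized crossbar. The sketch assumes ideal cells without sneak paths or wire resistance; the function name, the array layout (rows as inputs, columns as outputs), and the numerical values are hypothetical.

```python
def crossbar_column_currents(conductances, voltages):
    """Ideal crossbar read-out.

    conductances[i][j] is the conductance (weight) of the cell connecting
    input row i to output column j; voltages[i] is the voltage applied to
    row i. Each cell contributes a current G_ij * v_i, and the
    contributions add up on the shared column wire:
        I_j = sum_i G_ij * v_i
    """
    n_rows = len(conductances)
    if n_rows != len(voltages):
        raise ValueError("one input voltage per crossbar row is required")
    n_cols = len(conductances[0])
    return [
        sum(conductances[i][j] * voltages[i] for i in range(n_rows))
        for j in range(n_cols)
    ]


if __name__ == "__main__":
    # Hypothetical 2x2 crossbar in arbitrary units.
    G = [[1.0, 2.0],
         [3.0, 4.0]]
    v = [0.5, 1.0]
    print(crossbar_column_currents(G, v))  # [3.5, 5.0]
```

All column currents are obtained in a single read step, which is the hardware counterpart of a vector-matrix multiplication.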

Toward this approach, small-scale ANNs with organic devices have been demonstrated.Reference Fuller, Keene, Melianas, Wang, Agarwal, Li, Tuchman, James, Marinella, Yang, Salleo and Talin67,Reference Demin, Erokhin, Emelyanov, Battistoni, Baldi, Iannotta, Kashkarov and Kovalchuk75–Reference Battistoni, Erokhin and Iannotta77 These networks were programmed to execute the function of basic logic gates. In a network that has been trained to perform a logic gate function, combinations of inputs result in specific network outputs. Therefore, these gates are able to classify various combinations of inputs for simple decision-making or logical reasoning processes (if this and that condition is true, then the result is ...). Recently, a path toward scalable organic-based networks has been shown for parallel programming/training, by combining electrochemical organic neuromorphic devices with an ionic diode (memristive switching device) as the access device.Reference Fuller, Keene, Melianas, Wang, Agarwal, Li, Tuchman, James, Marinella, Yang, Salleo and Talin67 Of particular interest for future applications is the use of such ANNs in a more generic neuromorphic system, a hybrid agent,Reference Tarabella, D'Angelo, Cifarelli, Dimonte, Romeo, Berzina, Erokhina and Iannotta78–Reference Desbiefa, di Lauro, Casalini, Guerina, Tortorella, Barbalinard, Kyndiah, Murgi, Cramer, Biscarini and Vuillaumea81 for local signal processing in bioelectronics.Reference van Doremaele, Gkoupidenis and van de Burgt18 Indeed, concepts of local processing and feature extraction can minimize data transfer from the acquisition site to peripheral electronics, thereby allowing for fully autonomous applications in bioelectronics.

Biophysical realism

Major efforts toward the description of biophysics in biological neural processing were made almost in parallel with the early development of ANNs. In the early 1950s, Hodgkin and Huxley provided an accurate and rigorous description for the origin and propagation of electrical signals in biological neurons.Reference Hodgkin and Huxley41,Reference Hodgkin, Huxley and Katz82–Reference Hodgkin and Huxley84 The Hodgkin–Huxley model provides a detailed description of the initiation process of the basic communication event in biological neural processing, namely the action potential. The model describes the time-dependent relationship between the voltage across a biological lipid bilayer membrane and the ionic flow through it.

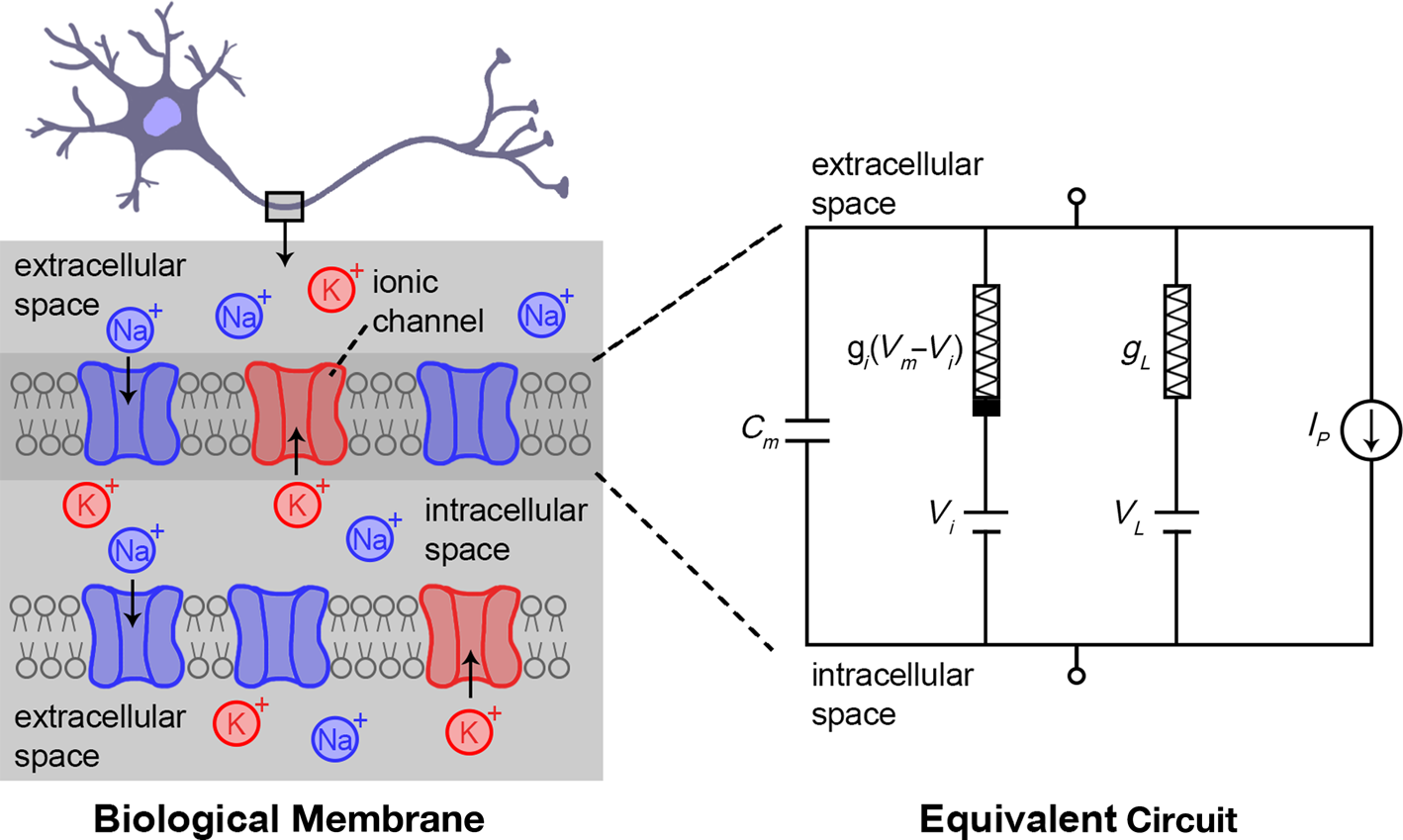

Figure 3 depicts a neuron and a more detailed description of the cell membrane, which is surrounded by a common, water-based electrolyte in the outer (extracellular) and the inner (intracellular) membrane space. The electrolyte contains various ionic species, such as Na+, K+, and Cl–, as well as neurotransmitters, which are chemical carriers of information. The equivalent circuit of the membrane between the extracellular and intracellular space consists mainly of the following elements: (1) Voltage-gated (voltage-sensitive) ion channels that are selective to specific ions (e.g., Na+, K+, Cl–). (2) An ionic leakage current across the membrane. (3) An ionic current source that expresses the active transport of ionic species by proteins that act as ion pumps (ATP-powered) from/to the common extracellular space, through the membrane.

Figure 3. Hodgkin–Huxley model of biological neurons that describes in detail the initiation/temporal response of action potentials in biological cells, with an illustration of a neuronal biological membrane.Reference Kandel and Mack1,Reference Hodgkin, Huxley and Katz82–Reference Hodgkin and Huxley84 The neuron itself as well as the membrane are surrounded by the extracellular medium, a global electrolyte that contains various ionic species (Na+, K+, Cl–). The membrane also encloses the intracellular medium that is similar to the extracellular space. The membrane forms a capacitor due to the difference in ionic concentrations between the intracellular and extracellular space. Various elements/mechanisms on the membrane surface are acting in parallel and the equivalent circuit of the membrane is depicted on the right. The sum of all ionic current contributions through the membrane is $I_m(t,V_m)=\sum\nolimits_i I_i(t,V_m) + I_L + I_P$, with $I_i(t,V_m)$ being the current of the $i$th voltage-gated ion channel, $I_L$ the leakage current, and $I_P$ the ionic current through ion pumps. The current of the $i$th voltage-gated ion channel is $I_i(t,V_m)=g_i(t,V_m)\cdot(V_m - V_i)$, where $g_i(t,V_m)$ is the channel conductance and $V_i$ is the voltage difference of the voltage-gated channel. Similarly, the leakage current of the membrane is $I_L(V_m)=g_L\cdot(V_m - V_L)$, with $g_L$ being the leakage conductance and $V_L$ its potential difference. The total ionic current through the membrane is $I_m(t,V_m)=C_m\cdot\frac{dV_m(t)}{dt}$, where $t$ is time, $V_m$ is the voltage, and $C_m$ is the capacitance of the membrane.

The set of nonlinear equations of Figure 3 describes in detail the initiation of action potentials in neuron cells, as a result of a complex exchange of ionic species between the outer and inner part of the membrane. Through this ionic exchange, neurons integrate ionic signals over time and this alters the membrane potential, $V_m$. When $V_m$ exceeds a certain threshold, neurons fire an action potential and the membrane then returns to the equilibrium potential. A relatively simple model for describing such spiking neural behavior is the leaky integrate-and-fire model.Reference Abbott85 The Hodgkin–Huxley model is computationally quite expensive, particularly in simulating large networks. To avoid these expensive calculations, approximations such as the FitzHugh–Nagumo model have been developed.Reference FitzHugh86,Reference FitzHugh87 It is notable that other elements might also exist in the membrane model of Figure 3, such as neurotransmitter-gated ion channels that permit ion flow under the influence of specific chemical species, the neurotransmitters.Reference Koch and Segev88
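The integrate, threshold, and reset behavior described above can be sketched with the leaky integrate-and-fire model. The snippet below is a minimal forward-Euler simulation, not a Hodgkin–Huxley solver; the function name, the parameter values, and the units (mV, ms, nA, MΩ) are illustrative assumptions rather than values from the cited references.

```python
# Leaky integrate-and-fire neuron, forward-Euler integration (illustrative sketch).
# Parameter values and units (mV, ms, nA, MOhm) are assumptions for demonstration.

def simulate_lif(input_current, t_total=100.0, dt=0.1, tau_m=10.0,
                 v_rest=-65.0, v_threshold=-50.0, v_reset=-65.0,
                 resistance=10.0):
    """Return the spike times (ms) produced by a constant input current (nA)."""
    v = v_rest
    spike_times = []
    n_steps = int(round(t_total / dt))
    for k in range(n_steps):
        # Leak pulls v toward v_rest; the input current drives it upward.
        dv = (-(v - v_rest) + resistance * input_current) * dt / tau_m
        v += dv
        if v >= v_threshold:
            # Threshold crossing: record a spike and reset the membrane.
            spike_times.append(k * dt)
            v = v_reset
    return spike_times


weak = simulate_lif(1.0)    # steady state stays below threshold: no spikes
strong = simulate_lif(2.0)  # steady state exceeds threshold: periodic spiking
print(len(weak), len(strong))
```

With these assumed parameters, a weak input never drives the membrane to threshold, while a stronger input produces a regular spike train whose rate grows with the input current. The model trades the ion-channel detail of the Hodgkin–Huxley description for a single linear differential equation and a reset rule.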

A closer examination and comparison of the artificial implementation (a perceptron) with a biophysical neuron (Hodgkin–Huxley model) leads to the conclusion that, although successful from a technological point of view, the former is an abstract representation of biological neurons without reflecting the diversity/wealth of actual neural processing (Figure 4).Reference Tang, Yuan, Shen, Wang, Rao, He, Sun, Li, Zhang, Li, Gao, Qian, Bi, Song, Yang and Wu24,Reference Zeng and Sanes89

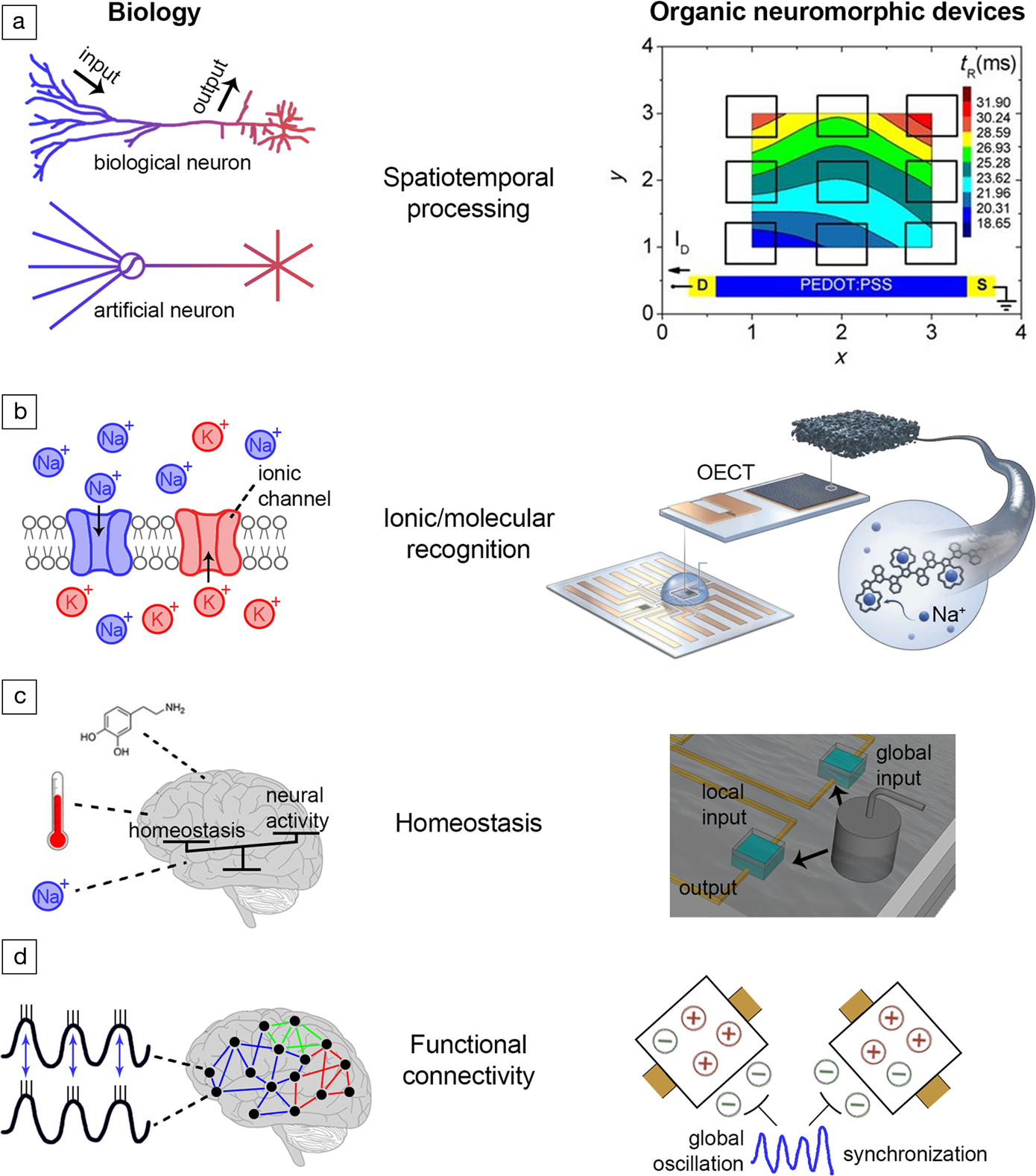

Figure 4. Biophysical realism in electrochemical organic neuromorphic devices. (a) Spatiotemporal processing. Left: Biological neural processing is spatiotemporal. In biological neurons, inputs/outputs are distributed over space, while in artificial neurons (i.e., a perceptron) inputs/outputs are concentrated in single points in space and the spatial aspect is missing.Reference Spruston and Kath99,Reference Branco, Clark and Häusser100 Right: The response of organic electrochemical transistors (OECTs) is spatiotemporal: the response time of OECTs depends on the distance between the input (gate) and output (drain) terminals.Reference Gkoupidenis, Koutsouras, Lonjaret, Fairfield and Malliaras117,Reference Gkoupidenis, Rezaei-Mazinani, Proctor, Ismailova and Malliaras118 (b) Ionic/molecular recognition. Left: In a neuronal membrane, voltage- and neurotransmitter-gated ionic channels offer ionic and molecular recognition.Reference Kandel and Mack1 Right: Ionic recognition in OECTs (selectivity of Na+ over K+ ions) is introduced by engineering the organic material of the gate electrode.Reference Wustoni, Combe, Ohayon, Akhtar, McCulloch and Inal119 (c) Homeostasis. Left: In the brain, global parameters such as temperature, ionic and neurotransmitter concentrations collectively regulate neural networks.Reference Turrigiano and Nelson104,Reference Abbott and Nelson105 Right: Homeostasis in OECTs is induced by using a global input for the collective addressing of a device array.Reference Koutsouras, Malliaras and Gkoupidenis124 (d) Functional connectivity. Left: Macroscopic electrical oscillations in the brain synchronize distant brain regions and induce a functional type of connectivity between them.Reference Varela, Lachaux, Rodriguez and Martinerie115,Reference Gkoupidenis127 Right: Global voltage oscillations synchronize an array of OECT devices, each one receiving stochastic and independent input signals. The devices are functionally connected through the global oscillation.Reference Koutsouras, Prodromakis, Malliaras, Blom and Gkoupidenis126,Reference Gkoupidenis127 Note: PEDOT:PSS, poly(3,4-ethylenedioxythiophene)-poly(styrene sulfonate).

A perceptron is static as it performs floating-point arithmetic, while the Hodgkin–Huxley model describes the time-dependent response. Time-domain processing is exceptionally important in biological networks, in which processing is event-based through sequences of action potentials, but also energy-efficient, as these events are of low amplitude and relatively sparse (the amplitude is in the range of ~μV-mV and usually <200 events/s, in different modes such as spike bursting).Reference Stein, Gossen and Jones90 Memory and learning in biological networks are facilitated by plasticity that is dependent on the relative timing of spikes that arrive at the synapses, with time correlation between spikes or even spike rates playing an important role in the synaptic modulation.Reference Bi and Poo91,Reference Bi and Poo92 Spiking neural networks (SNNs) more precisely capture the phenomena of biological neural processing, with spike timing and rate incorporated in their operation for information encoding.Reference Gerstner, Kistler, Naud and Paninski93–Reference Roy, Jaiswal and Panda95 At a lower level, devices and small-scale inorganic circuits that emulate the spiking behavior and temporal processing of neurons are also emerging.Reference Pickett, Medeiros-Ribeiro and Williams96–Reference Abu-Hassan, Taylor, Morris, Donati, Bortolotto, Indiveri, Paton and Nogaret98 Moreover, organic devices for oscillatory or spiking neuromorphic electronics have also been recently reported.Reference Lee, Oh, Xu, Kim, Kim, Kang, Kim, Son, Tok, Park, Bao and Lee72,Reference Kim, Chortos, Xu, Liu, Oh, Son, Kang, Foudeh, Zhu, Lee, Niu, Liu, Pfattner, Bao and Lee73
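Plasticity that depends on the relative timing of spikes is often summarized with a pair-based spike-timing-dependent plasticity (STDP) rule. The sketch below uses an exponential timing window, which is a common phenomenological choice and an assumption here; the amplitudes, time constants, and function name are hypothetical and are not fitted to the cited experiments.

```python
# Pair-based STDP weight update with an exponential timing window (sketch).
# Amplitudes and time constants are assumed values, not experimental fits.
import math


def stdp_weight_change(delta_t, a_plus=0.01, a_minus=0.012,
                       tau_plus=20.0, tau_minus=20.0):
    """Weight change for one spike pair, with delta_t = t_post - t_pre in ms."""
    if delta_t > 0:
        # Presynaptic spike precedes the postsynaptic spike: potentiation.
        return a_plus * math.exp(-delta_t / tau_plus)
    if delta_t < 0:
        # Postsynaptic spike precedes the presynaptic spike: depression.
        return -a_minus * math.exp(delta_t / tau_minus)
    return 0.0


for delta_t in (-40.0, -10.0, 10.0, 40.0):
    print(delta_t, stdp_weight_change(delta_t))
```

Under this rule, the sign of the weight change is set by the order of the two spikes, and its magnitude decays as the spikes move further apart in time, so closely correlated spikes modulate the synapse most strongly.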

In contrast to an artificial neuron, biological neural processing is spatiotemporal, as it happens not only in time, but also in space, with input/output signals distributed in the dendrites of neurons acting as delay lines with nonconstant impedance (Figure 4a).Reference Spruston and Kath99 This distribution induces a range of time delays between inputs and outputs along neurons, and even equips them with the ability to discriminate or decode time-dependent signal patterns.Reference Branco, Clark and Häusser100 The output of such neurons is sensitive to specific temporal sequences, and this is a key property for sensory processing (vision, audition), which by definition occurs in the time domain.Reference Hawkins and Blakeslee101 In biological neural processing, time is inherently embedded in physical space since propagation of ionic/molecular signals is finite and slow, in contrast to contemporary electronics.Reference Carr and Konishi102,Reference Das, Dodda and Das103 Spatial dependence is not usually considered in ANNs, as input signals are summed in single points in space.
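The role of dendrites as delay lines can be illustrated with a toy model in which each input reaches the soma after a delay proportional to its distance. This is a deliberately simplified sketch: the constant propagation velocity, the exponential response kernel, and all names and values are assumptions, and the nonconstant impedance of real dendrites is ignored.

```python
# Toy delay-line model of sequence discrimination in a dendrite (sketch).
# Constant velocity and an exponential kernel are simplifying assumptions.
import math


def somatic_peak(activation_times, distances, velocity=1.0, tau=2.0,
                 dt=0.1, t_max=60.0):
    """Peak of the summed somatic response for inputs placed along a dendrite."""
    # Each input arrives at the soma after a distance-dependent delay.
    arrivals = [t + d / velocity for t, d in zip(activation_times, distances)]
    peak = 0.0
    n_steps = int(round(t_max / dt))
    for k in range(n_steps + 1):
        t = k * dt
        # Sum the decaying responses of all inputs that have already arrived.
        total = sum(math.exp(-(t - a) / tau) for a in arrivals if t >= a)
        peak = max(peak, total)
    return peak


distances = [10.0, 20.0, 30.0]  # proximal, middle, distal input sites

# Distal input first: the delays compensate and all inputs arrive together.
inward = somatic_peak([20.0, 10.0, 0.0], distances)
# Proximal input first: the delays spread the arrivals further apart.
outward = somatic_peak([0.0, 10.0, 20.0], distances)
print(inward, outward)
```

In this toy setting, the same three inputs produce a much larger somatic peak when activated in the distal-to-proximal order, which is one simple way a spatially distributed neuron can become sensitive to a specific temporal sequence.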

Biological neural processing involves exchanges of various ionic and molecular species, hence there is diversity in information carriers, with each carrier type holding a specific role in processing. Accordingly, biological neurons possess the cellular machinery for recognizing and selectively processing a variety of information carriers (Figure 4b). For instance, voltage-gated and neurotransmitter-gated ion channels offer ionic and molecular recognition, respectively.Reference Kandel and Mack1 Moreover, in chemical synapses the synaptic strength is modulated by chemical messengers, the neurotransmitters. The description of those ionic/molecular processes is absent in ANNs, as the synaptic weight is modeled only by a real number without considering the specificity of those ionic and molecular markers.

Biological neural networks are immersed in a common electrochemical environment in extracellular space (e.g., an electrolyte). Global concentrations of information carriers in extracellular space also collectively regulate the neural network activity under certain constraints in a top-down manner, a phenomenon broadly known as homeostasis (Figure 4c).Reference Turrigiano and Nelson104–Reference Delvendahl and Müller106 This top-down regulation imposes additional degrees of collective influence on the network activity, or even training of networks in a chemical manner (for instance in pharmacological treatment, neurotransmitters, drugs, or neurotoxins).Reference Huang, Schlüter and Dong107 Neuromodulators, such as dopamine, are known to influence neural networks on a global scale and their presence is associated with reward or aversion during behavioral tasks.Reference Pignatelli and Bonci108 The essence of homeostasis, in a broader perspective, is to preserve the neural environment under certain physiological conditions that are crucial for the existence of life. Therefore, homeostasis is believed to be a basic manifestation of intelligence, as it is closely related to the ultimate goal of living systems, which is self-preservation.Reference Man and Damasio109,Reference Turner110 Moreover, the excitability of neurons, and as such their tendency to produce action potentials, can also be regulated globally through the voltage-gated ionic channels.Reference Debanne, Inglebert and Russier111 Although a common input that loosely resembles homeostasis is used in ANNs (also known as a bias), global ionic and biochemical phenomena are rarely considered in practice.

Neural activity is also influenced by electrical perturbations of the common electrolyte in extracellular space.Reference Buzsáki, Anastassiou and Koch112,Reference Anastassiou, Perin, Markram and Koch113 This activity can be orchestrated by oscillatory electrical perturbations that are commonly found in the brain (Figure 4d). These electrical oscillations even synchronize the activity of groups of neurons that form distant networks.Reference Gupta, Singh and Stopfer114–Reference Fell and Axmacher116 Synchronization of different neural groups thus induces a functional type of connectivity (that lasts for short periods of time), since these groups share a common, time-dependent, neural activity due to the common oscillation they receive.

Features of biophysical realism that are mere abstractions in artificial neurons or ANNs are akin to the operation principles of organic neuromorphic devices based on mixed conductors (Figure 4). Organic electrochemical transistors (OECTs) have the ability for spatiotemporal information processing (Figure 4a).Reference Gkoupidenis, Schaefer, Garlan and Malliaras46,Reference Gkoupidenis, Koutsouras, Lonjaret, Fairfield and Malliaras117 The operation of OECTs in electrolytes permits the realization of multiterminal transistors, in which a single channel can be probed with an array of gates. The response of such a device depends not only on time, but also on the distance between the device terminals.Reference Gkoupidenis, Koutsouras, Lonjaret, Fairfield and Malliaras117,Reference Gkoupidenis, Rezaei-Mazinani, Proctor, Ismailova and Malliaras118 As a result, spatially distributed voltage inputs on the gate electrode array correlate with the output of the transistor, which gives the multiterminal device the ability to discriminate between different stimulus orientations. This behavior is analogous to biological phenomena, such as the ability of the primary visual cortex to detect edges from visual stimuli. This and similar functions in a single organic electronic device enable the emulation of complex spatiotemporal processing functions with compact device configurations, or even the discrimination of biological (sensory) signals that span over space and time.

The intrinsic properties of OECTs and their materials also enable the introduction of ionic or even molecular recognition (Figure 4b). By engineering the chemical structure of the active materials (i.e., channel or gate material), OECTs are capable of ionic signal discrimination in sensing, for instance selectivity of Na+ over K+ ions, or selectivity for Ca2+ or NH4+ ions.Reference Wustoni, Combe, Ohayon, Akhtar, McCulloch and Inal119–Reference Keene, Fogarty, Cooke, Casadevall, Salleo and Parlak121 Selective detection of neurotransmitters such as dopamine in the presence of other interfering chemical compounds is also possible with OECTs.Reference Gualandi, Tonelli, Mariani, Scavetta, Marzocchi and Fraboni122,Reference Tang, Lin, Chan and Yan123 The above examples emphasize the potential of OECTs to emulate the selectivity of ionic channels in processing (voltage- or neurotransmitter-gated channels in biological membranes), or to decouple the variety of information carriers that are present in the biological environment.

The operation of an array of OECTs in common/global electrolytes can be leveraged in order to induce global regulation of the array (Figure 4c).Reference Koutsouras, Malliaras and Gkoupidenis124 A common gate electrode can collectively address or modulate the output of the device array. Global regulation depends on the electrolyte concentration, a behavior which is reminiscent of biological homeostasis.Reference Koutsouras, Malliaras and Gkoupidenis124,Reference Gkoupidenis, Koutsouras and Malliaras125 In the actual neural environment, homeostatic or global parameters such as temperature, neurotransmitters, as well as ionic/chemical species regulate the activity of large neural networks (for instance various pharmacological agents induce or suppress collective neural activity, namely epileptic events).Reference Turrigiano and Nelson104,Reference Abbott and Nelson105 At the level of neuromorphic functions, homeostasis allows for the existence of a “global pool” of information carriers that regulate or interact with a device array in a range of spatiotemporal scales on top of single device behavior.

Due to the inherent capability of a device array to operate in common electrolytes, the response of the device array when receiving stochastic input signals can be synchronized with a global oscillatory input signal (Figure 4d).Reference Koutsouras, Prodromakis, Malliaras, Blom and Gkoupidenis126,Reference Gkoupidenis127 Although not physically connected with metal lines, such devices are functionally connected through the global oscillatory input as they share a common correlation over time. In the brain, apart from actual structurally connected networks, networks also exist that share common activity over short periods of time and are thus functionally connected.Reference Fell and Axmacher116 Phenomena such as functional connectivity can be harnessed for inducing a transient link between different blocks in a neuromorphic circuit.
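The idea that devices become functionally connected through a shared oscillation, without any physical link between them, can be illustrated numerically. The sketch below is not a model of OECT physics: it assumes that each device output is simply its own independent Gaussian noise plus a common sinusoidal term, and all names and parameter values are hypothetical.

```python
# Functional connectivity through a shared oscillation (illustrative sketch).
# Assumption: device output = independent noise + common sinusoidal input.
import math
import random


def device_outputs(n_devices, n_steps, osc_amplitude, period=50, seed=0):
    """Output traces of devices that share one global oscillatory input."""
    rng = random.Random(seed)
    traces = []
    for _ in range(n_devices):
        trace = [rng.gauss(0.0, 1.0)  # independent stochastic input
                 + osc_amplitude * math.sin(2 * math.pi * k / period)
                 for k in range(n_steps)]
        traces.append(trace)
    return traces


def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


without_osc = device_outputs(2, 2000, osc_amplitude=0.0)
with_osc = device_outputs(2, 2000, osc_amplitude=3.0)

r_off = pearson(without_osc[0], without_osc[1])
r_on = pearson(with_osc[0], with_osc[1])
print(r_off, r_on)
```

Without the global oscillation the two traces are essentially uncorrelated, while the shared oscillation introduces a strong correlation over time. The correlation disappears again once the oscillation is removed, which mirrors the transient nature of functional links.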

Conclusions

Organic devices for brain-inspired computing have rapidly evolved over the past few years, delivering metrics that are in some cases competitive with or even outperform their inorganic counterparts (for instance low-power operation, analog memory phenomena, tunable linearity during weight update). With these properties, organic devices can enable applications for neuromorphic computing and ANNs in domains such as local data processing, training and feature extraction in energy-restricted environments. Their facile interfacing with biology also opens new avenues in neuromorphic sensing, actuation and closed-loop control in bioelectronics.

Nevertheless, materials development, long-term reliability and large-scale integration are major challenges that need to be addressed for real-world applications. Toward this direction, the materials that are used for organic neuromorphic devices should be air-stable, or able to be encapsulated with oxygen or moisture barriers. Thin-film deposition processes should also be further improved in terms of reliability and spatial homogeneity in order to pave the way for large-scale integration. Moreover, parasitic reactions should be minimized in electrochemical devices in order to avoid device degradation or any lethal reaction byproducts for living organisms in the case of biointerfacing. Major challenges still exist to downscale device metrics (for instance operation voltages) in order to approach those of biological phenomena such as action potentials. Especially for biointerfacing, the intrinsic device noise is critical and additional investigations are therefore needed for further improvements.

In the longer term, it is now well recognized that ANNs still face major challenges in approaching biological levels of intelligence and therefore neuroscience-driven development is essential for revisiting the computational primitives of the brain. Toward this approach, organic devices have shown the potential for biophysical realism in neuromorphics, by emulating aspects of biological neural processing that are nontrivial to access with their inorganic counterparts. As an illustration, biological aspects of homeostasis, functional connectivity and ionic/molecular recognition arise naturally in such neuromorphic devices with inherent sensing capabilities. Ultimately, improving metrics and ANN-driven development in the shorter term, combined with neuroscience-driven development of novel device concepts in the longer term, will allow organic materials to be an enabling technology for innovations in human-oriented neuromorphic computing.

Acknowledgments

Y.v.d.B. acknowledges funding from the European Union's Horizon 2020 Research and Innovation Program, Grant Agreement No. 802615.

Yoeri van de Burgt has led the Neuromorphic Engineering Group at Eindhoven University of Technology since 2016, and was a visiting professor at the University of Cambridge, UK, in 2017. He obtained his PhD degree at Eindhoven University of Technology, The Netherlands, in 2014. He briefly worked at a high-tech startup in Switzerland, after which he obtained a postdoctoral fellowship in the Department of Materials Science and Engineering at Stanford University. He was recently awarded an ERC Starting Grant from the European Commission. In 2019, he was elected as a Massachusetts Institute of Technology innovator Under 35 Europe. His research focuses on organic neuromorphic materials and electrochemical transistors. Van de Burgt can be reached by email at [email protected].

Paschalis Gkoupidenis has been a group leader in the Department of Molecular Electronics at the Max Planck Institute for Polymer Research, Germany, since 2017. He earned his PhD degree in materials science from the National Centre of Scientific Research “Demokritos,” Greece, in 2014. His doctoral research focused on ionic transport mechanisms of organic electrolytes, and physics of ionic-based devices and of nonvolatile memories. He conducted postdoctoral research in 2015 in the Department of Bioelectronics at École des Mines de Saint-Étienne, France, focusing on the design and development of organic neuromorphic devices based on electrochemical concepts. Gkoupidenis can be reached by email at [email protected].
