1. Introduction

To tackle the hard core of unemployment, OECD countries devote substantial resources to job creation programmes (also referred to as direct employment or public employment programmes). [Footnote 1] As an element of “new” or “active” welfare states (e.g. Taylor-Gooby et al., 2015; van Berkel, 2010), such programmes are used particularly in Europe, aiming to increase the employability and labour market participation of the most disadvantaged unemployed. In a broader social policy perspective, these programmes reflect a “human capital development” rather than a “work first” approach, meaning that the focus is on increasing employability and long-run employment chances, rather than immediate employment integration (cf. Lindsay et al., 2007).

However, scientific evaluations have been rather pessimistic about these programmes’ effectiveness (e.g. Card et al., 2010, 2018; Kluve, 2010). More specifically, evaluations found that job creation programmes have unfavourable effects on the young and short-term unemployed; for the long-term unemployed, findings are more promising (Card et al., 2010, 2018). In some contexts, furthermore, women tend to experience better programme effects than men (Dengler, 2019). Thus, evaluators have urged policymakers to use job creation schemes sparingly, by focusing them on well-defined target groups.

We re-evaluate a German job creation programme for unemployed welfare benefit recipients, known as One-Euro-Jobs (OEJs), after a major reform in 2012 that addressed evaluators’ concerns. In line with the broader evidence on job creation programmes, previous evaluations pointed out an imprecise targeting of OEJs, as relatively well-employable persons had been allocated to the programme in its early years (Hohmeyer and Kopf, 2009; Hohmeyer and Wolff, 2012; Huber et al., 2011; Kettner and Rebien, 2007; Thomsen and Walter, 2010; Wolff and Hohmeyer, 2006). Such misallocation is widely regarded as a source of lock-in effects (reduced job search intensity due to programme participation) and stigma effects (being perceived as “economically and socially disadvantaged”, Burtless, 1985: 114; see also Bonoli and Liechti, 2018; Calmfors, 1994), which may explain the adverse effects found for some groups of participants.

The 2012 reform of OEJs aimed at reducing such misallocation and sharpening the focus on the hardest-to-place. In particular, the reform emphasized the “last resort” nature of OEJs by clarifying that the programme is strictly subordinate to regular job placement and other active labour market policy (ALMP) measures. That is, jobseekers may be assigned to OEJs only if no other placement or programme suits their needs and capabilities, implying that only the least employable should be placed in the programme. According to theory and previous evidence, focusing on the hardest-to-place should result in better OEJ participation effects. Another relevant aspect of the reform was the abolition of qualification elements within OEJs. Examples of qualification elements include job application training, obtaining a schooling degree, training in communication and social skills, and continued vocational training (Uhl et al., 2016).

To compensate for this loss of on-the-job training opportunities, the legislator encouraged the use of other ALMP programmes in combination with OEJs. Although such combinations may suit the needs of participants, they may decrease the employment effects of OEJs by pushing participants into so-called programme careers. It is therefore unclear ex ante how the reform has affected the employment effects of OEJs.

Using rich administrative data and statistical matching methods, we provide the first post-reform evaluation of OEJs, focusing mainly on employment effects. In order to assess whether the reform has improved OEJs’ efficacy, we disentangle possible reasons why the post-reform effects may differ from pre-reform effects (as found by earlier evaluations). Therein, we check whether OEJs trigger participation in further ALMP programmes.

Our study should be of interest beyond the specific cases of OEJs and Germany. First, our findings may also apply to similar programmes that exist (or existed) in other countries, including the UK’s “Work Programme” (Department for Work and Pensions, 2012), the Australian “Work for the Dole” programme (Department of Education, Skills and Employment, 2021), and the Swedish work experience placement (cf. Tisch and Wolff, 2015). Second, the OEJ reform is an example of policymaking responsive to scientific evaluation, with both policymakers and evaluators focusing on a typical problem of programme design (targeting). To some extent, thus, we evaluate not only a policy, but also the usefulness of previous evaluators’ advice.

The remainder of this article is organized as follows. Section 2 describes the institutional background. Section 3 highlights details of the reform and discusses its ex-ante implications for OEJs’ effectiveness, based on theory and previous evidence. It further provides aggregate descriptive evidence on OEJs, whereas section 4 presents the data, sample, and methodology used in our evaluation analysis. Section 5 presents our evaluation results and a comparison with the results of pre-reform evaluations. Section 6 discusses our findings in the light of the ex-ante considerations and concludes.

2. Institutional background

The programme we evaluate is officially called Arbeitsgelegenheiten (work opportunities), but more commonly referred to as One-Euro-Jobs (OEJs). OEJs are one of the most frequently used ALMP programmes for unemployed recipients of unemployment benefit II (UB II), the German basic income support. They are temporary (usually three to twelve months), part-time (usually 20-30 hours per week) jobs, and participants receive a compensation of one to two Euros per hour, which is not deducted from their welfare benefits. Jobseekers who are offered an OEJ but refuse to participate can be sanctioned with benefit cuts; OEJs therefore involve an important compulsory element and can be labelled a “workfare” programme (cf. Hohmeyer and Wolff, 2018; van Ours, 2007).

Regarding OEJs’ content, the programme is the least ambitious German ALMP measure, meaning that it is not intended to qualify participants for regular employment, but merely to enable some work-related activity at all, and to increase their (basic) employability. The programme thus focuses on people strongly detached from the labour market. OEJs provide access to important functions of work that go beyond financial resources, such as a daily work routine and social contact outside of one’s own family (cf. Jahoda, 1982). The immediate goal of OEJs is thus to stabilize participants’ life situation and to increase their employability by letting them gain some work experience. In contrast, self-sufficient employment (overcoming one’s need for UB II) is at best a long-term goal. [Footnote 2] To reach the latter goal, OEJs can be combined with or followed by other, more ambitious programmes.

On April 1st, 2012, a major ALMP reform brought several legal changes regarding OEJs. In the next section, we discuss in detail those elements of the reform, as well as other contemporaneous changes, which appear relevant to the programme’s efficacy. Less relevant aspects of the reform are presented in the Appendix section “Elements of the reform”.

3. Ex-ante implications of the reform, descriptive indicators, and contemporaneous changes

3.1. Changes in participant selection

In order to assess whether the reform has improved OEJs’ efficacy, we distinguish three main dimensions in which relevant changes have occurred contemporaneously with, though not necessarily due to, the reform. The first dimension is OEJs’ participant selection. This was explicitly addressed by the reform and justified by previous evaluation results (cited above). Due to their nature as the least ambitious ALMP programme, OEJs were subordinate to all other measures even before the reform. However, there had been a de facto exception for the youngest (<25 years) and oldest (>57 years) unemployed UB II recipients: they had to be offered an OEJ immediately if caseworkers could not currently find a regular job or (only for the young group) apprenticeship for them. This exception was abolished by the reform. Thereby, the reform required OEJs to be consistently targeted at very hard-to-place jobseekers.

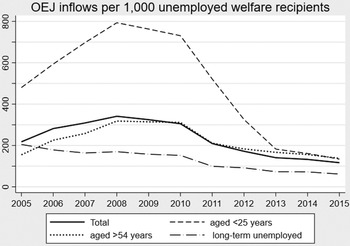

In fact, the annual number of inflows into OEJs started to decrease even before the 2012 reform: from 310 OEJ inflows per 1,000 unemployed welfare recipients in 2006-2008 to 116 per 1,000 in 2015 (Figure 1). The bulk of this decrease was a result of budget cuts enacted in 2009/2010. In the years since the 2012 reform, the number of OEJ inflows per 1,000 unemployed welfare recipients decreased less sharply, but the composition of OEJ participants changed markedly. The OEJ inflows per 1,000 unemployed welfare recipients among people aged under 25 years decreased sharply whereas the respective rates of older (over 54 years) and long-term unemployed people stayed relatively stable since 2012.

Figure 1. OEJ inflows per 1,000 unemployed welfare recipients, 2005-2015 (annual average)

Note to Figure 1: Data source: Statistics Department of the Federal Employment Agency; own calculations.

To assess whether these changes in participant composition could be a result of the 2012 reform, we compare our descriptive statistics with those of earlier studies. Hohmeyer and Kopf (2009) and Hohmeyer (2012) found that the programme was not well targeted in the year of its introduction (2005). Comparing matched non-participants (identified by Propensity Score Matching) with all eligible non-participants in their respective samples, these studies found that the matched persons were positively selected in terms of later employment outcomes. That is, a positive selection of unemployed had been allocated to OEJs, contrary to the programme’s rationale. In contrast, using the same statistical approach for a participant cohort of 2007, Kiesel and Wolff (2018) found a negative selection of OEJ participants: in their sample, matched controls’ employment rates, 36 months after programme entry, were some 6-18% lower than those of all potential controls (see Table A1 in the Appendix). In our study, with OEJ participants as of 2012, the corresponding differences are between −21 and −33%, indicating that OEJs increasingly address the right persons.
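The selection comparison above can be illustrated with a minimal sketch. It assumes that the reported percentages are relative differences between the employment rate of matched controls and that of all potential controls; the function name and the input rates are hypothetical and are not taken from Table A1.

```python
def relative_selection(matched_rate, all_rate):
    """Relative difference between the employment rate of matched controls
    and that of all potential controls (negative = negative selection)."""
    if all_rate <= 0:
        raise ValueError("all_rate must be positive")
    return (matched_rate - all_rate) / all_rate


# Hypothetical rates for illustration only (not from the article's tables).
gap = relative_selection(matched_rate=0.08, all_rate=0.10)
print(f"Relative selection gap: {gap:.0%}")
```

A negative value means that the persons resembling OEJ participants have worse employment prospects than the average eligible non-participant, which is the pattern the programme's rationale calls for.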

Put together, these findings suggest that OEJ participants were positively (and thus wrongly) selected at the very beginning of the programme, but this had been corrected as early as 2007 and seems to have slightly further improved between 2007 and 2012. In the light of these findings, the reform seems to have had only a minor impact on participant selection. According to previous studies (notably, Hohmeyer and Kopf, 2009; Hohmeyer and Wolff, 2012), this stricter targeting should have improved OEJs’ employment effects by reducing lock-in effects, presumably because the programme’s aim and design (developing employability through very basic work experience) are tailored to a hard-to-place target group.

Stricter targeting may also affect employment effects through increasing or decreasing stigma effects. First, (overly) strict targeting may cause stigmatization, as participating in a programme restricted to the hardest-to-place may be perceived as a signal of low individual productivity (Burtless, 1985; Calmfors, 1994; Fervers, 2019). If potential employers are aware of the worsened participant composition, they may assess any OEJ participant as hardly employable. Thus, stricter targeting may increase stigmatization and thereby decrease the average treatment effect on the treated (ATT). Second, however, employers may evaluate programme participation differently for harder- and easier-to-place applicants who have previously participated in OEJs. According to Liechti et al. (2017), employers evaluate programme participation negatively only for the easier-to-place, and instead positively for the harder-to-place. In the case of OEJs (which address the hardest-to-place, all the more after the reform), the stricter targeting may thus prevent or reduce stigmatization. Considering both channels of effects (lock-in and stigma effects), the overall effect of the change in participant selection on the ATT therefore is ambiguous.

3.2. Changes in programme design

The second dimension in which OEJs have changed is programme design. The reform abolished qualification elements within OEJs, reducing the programme to its core function as a mere work opportunity. As ALMP programmes focusing on human capital accumulation showed relatively positive employment effects (Card et al., 2018), this abolition might have worsened OEJs’ effectiveness, all the more since the hard-to-place target group should particularly benefit from human capital accumulation (ibid.). Furthermore, even before the reform, employers cited OEJ participants’ lack of skills as one of the main reasons why they refrain from hiring them as regular workers after the OEJ ends, pointing to a need for skill acquisition (Moczall and Rebien, 2015).

To compensate for the abolished qualification elements, caseworkers can combine OEJs with other programmes, notably the so-called schemes for activation and integration (SAI). [Footnote 3] Since OEJs leave relatively little time to participate in other programmes at the same time, such combinations tend to take the form of sequences, i.e. OEJs being preceded or followed by other programmes rather than running in parallel with them. In our sample, some 19% of the OEJ participants went on to participate in SAI within twelve months after OEJ participation; 16% of them had already participated in SAI in the twelve months before OEJ participation. Yet, only 3% of the same OEJ participants also participated in SAI during OEJ participation (which usually takes between three and twelve months).

Indeed, empirical studies have shown that some ALMP programmes, and OEJs in particular, may be part of longer sequences of programmes (Dengler, 2015; Dengler and Hohmeyer, 2010). However, such programme sequences may keep participants from taking up regular jobs for longer periods (“programme careers”). Some empirical studies have explicitly studied sequential programme participation as a particular form of treatment (e.g. Lechner and Wiehler, 2013). However, there is little evidence on further programme participation as an actual outcome of OEJ participation. The only exception we are aware of is Hohmeyer (2009), who found that OEJs have a positive effect on participating in other ALMP programmes and in further OEJs. Extending the evidence base, we also estimate OEJs’ effects on subsequent programme participation. To the extent that OEJs do trigger programme careers (a plausible hypothesis given Hohmeyer’s (2009) findings), OEJ participation should result in a prolonged lock-in period and, thus, decreased employment chances, at least in the short run.

Another relevant programme design feature is sectoral composition (cf. Sowa et al., 2012). Most importantly in the present context, Kiesel and Wolff (2018) found that OEJs have relatively good employment effects if they are placed in health care, childcare, and youth welfare, but are quite ineffective if placed in environmental protection, rural conservation, and infrastructure improvement. The authors attribute this pattern to the relatively favourable development of labour demand in the former group of sectors.

In fact, the share of OEJ inflows in low-demand sectors has continuously increased since the mid-2000s, and the share of inflows in high-demand sectors, which was low from the beginning, has decreased. [Footnote 4] On average, female OEJ participants are placed in higher-demand sectors than men, but even for women, roughly half of all OEJs were placed in low-demand sectors by 2012 (see Figure A1 in the Appendix). OEJs’ sectoral composition was not directly addressed by the 2012 reform, but perhaps indirectly: the reform introduced a stricter competition-neutrality criterion that may be easier to satisfy in low-demand sectors, where OEJs yield unfavourable employment effects. This might lead to worse employment effects of OEJs. However, as the development of the sectoral structure has been rather steady, it seems not to have been caused by the reform.

3.3. Changes in business cycle conditions

The third dimension along which changes have occurred that (may) affect OEJs’ efficacy is the business cycle. Previous evidence found rather unambiguously that ALMP efficacy is countercyclical, i.e. effects are better when overall labour market performance is weak (e.g. Card et al., 2018; Forslund et al., 2011; Kluve, 2010; Lechner and Wunsch, 2009). This may suggest that, as hiring becomes more selective during downturns, ALMP programmes increase the labour market value of participants and thus improve their position relative to other jobseekers, even though total competition for jobs is stronger than in upswings.

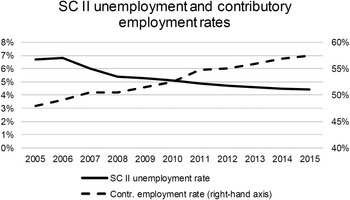

Considering the relevant period from the introduction of OEJs (2005) to the end of our observation period (2015), the German labour market performed increasingly well, with no substantial downturn even in the economic crisis of 2008/2009. [Footnote 5] Considering only unemployed UB II recipients, the unemployment rate has fallen from 6.7 to 4.4% while the contributory employment rate (employees liable to social security as a share of the labour force) has increased from 48 to 57% (see Figure 2). [Footnote 6] Therefore, the baseline hazard of leaving unemployment for contributory employment, even for UB II recipients, has increased substantially. However, this need not indicate better employment outcomes for OEJ participants, a very specific (and, as shown above, significantly changed) subgroup of the UB II unemployed. Given the countercyclical pattern documented in the just-cited literature, the improved labour market conditions would rather suggest that OEJs have become less effective in the years around the 2012 reform. [Footnote 7]

Figure 2. SC II unemployment and contributory employment

Note to Figure 2: Data source: Statistics Department of the Federal Employment Agency; own calculations.

We therefore frame our comparison of pre- and post-reform evaluation results along three dimensions: participant heterogeneity, sectoral heterogeneity, and aggregate labour market performance. Empirically, we can consider the first two dimensions for an explicit comparison of evaluation results; regarding the third dimension, we rely on a more general body of literature on ALMP programme effectiveness and business cycle conditions. As for the abolition of qualification elements, we cannot consider this change empirically for lack of previous empirical evidence on the importance of qualification elements. In addition, we analyse whether OEJs trigger “programme careers” to inform our interpretation of results. The changes that occurred due to or contemporaneously with the reform along these dimensions have theoretically ambiguous implications for OEJs’ effectiveness. We therefore try to trace our evaluation findings, and their deviations from those of previous evaluations, to these various channels of effects.

4. Data and methods

4.1. Data sources and sample

We use rich administrative data of the Institute for Employment Research (IAB) which cover the populations of employees liable to social security, registered unemployed, registered jobseekers, benefit recipients, and ALMP programme participants. [Footnote 8] In brief, the data contain detailed labour market biographies, sociodemographic characteristics, amounts of benefits and sanctions, wage income, detailed household-level information, and regional labour market data. For a comprehensive documentation of the Integrated Employment Biographies (IEB), one of the main IAB data products we use, see e.g. Antoni et al. (2019).

The database from which our sample is drawn consists of registered unemployed UB II recipients as of June 30th, 2012 (the sampling date). All of our sample members were non-working and in principle eligible for OEJs on that date. That is, we draw a stock sample of unemployed, despite the well-known criticism that this results in oversampling of long-term unemployed (e.g. Biewen et al., 2014). We do so because the eligible population (hard-to-place unemployed UB II recipients) and the nature of the programme differ fundamentally from the setup in Biewen et al. and other seminal methodological studies. For a detailed discussion of this methodological choice, see Appendix section “Stock sample versus inflow sample”.

In defining the treatment, the focus is on OEJ participants who entered OEJs between July and October 2012 (the “treatment” group). [Footnote 9] This time frame is short enough to limit any potential heterogeneity between the earliest and latest treated individuals, yet long enough to yield sufficient observation numbers, even after splitting the sample further for heterogeneity analyses. The “control” group (or waiting group) consists of sample members who did not enter OEJs during the same four months, but could do so later. In order to measure pre- and post-treatment outcomes at specific points in time, for the controls we draw hypothetical random programme start dates from the distribution of actual programme starts in the treatment group. Our treatment definition is thus “static”, as opposed to the “dynamic treatment assignment” proposed by Sianesi (2004). Similar to our stock sample, this methodological choice can be justified on the grounds of massive differences in the institutional setups in Sianesi (2004) versus the present study (see Appendix section “Static versus dynamic treatment assignment”).
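The assignment of hypothetical start dates to controls can be sketched as follows. This is a minimal illustration that assumes simple sampling with replacement from the empirical distribution of treated start dates; the function name, the seed, and the example dates are hypothetical, and the actual procedure may differ in detail (for instance, by drawing months rather than exact days).

```python
import random
from datetime import date


def draw_hypothetical_starts(treated_starts, n_controls, seed=0):
    """Draw one hypothetical programme start date per control, sampling with
    replacement from the observed start dates of the treated."""
    rng = random.Random(seed)  # fixed seed so the draw is reproducible
    return [rng.choice(treated_starts) for _ in range(n_controls)]


# Hypothetical start dates within the July-October 2012 entry window.
treated_starts = [
    date(2012, 7, 2),
    date(2012, 8, 1),
    date(2012, 8, 15),
    date(2012, 10, 1),
]
control_starts = draw_hypothetical_starts(treated_starts, n_controls=6)
```

Because each control's date is drawn from the treated distribution, outcomes of both groups can be measured relative to a comparable reference month.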

In all steps of the analysis, the sample is split between men and women, as well as between East [Footnote 10] and West Germany, to account for the systematic differences in labour supply behaviour between sexes and the structural economic differences between East and West. Table 1, panel A presents the overall sample size (observation numbers by sex, region, and treatment status). Our sampling design, definition of the treatment group, and sample restrictions are closely in line with those of Hohmeyer and Wolff (2012) and Kiesel and Wolff (2018), to whom we compare our findings. For further details on the estimation sample, see Appendix section “Sample restrictions”.

Table 1. Sample structure by region, sex, and treatment status

*Weights: 1 (Treated), 5 (Controls, under-sampled). **Field of activity does not apply to control observations (all potential controls can be matched to treated in any field of activity). ***Total number of treated is smaller than in the total (net) samples because field of activity is not reported by Approved Local Providers (a minority of jobcentres run exclusively by the local municipality). Data sources: IEB, LHG, XMTH; own calculations.

4.2. Identification approach and matching quality

The main aim of the following analysis is to identify the effect of OEJs on participants as the average treatment effect on the treated (ATT), as formulated in the potential outcomes framework (Roy, 1951; Rubin, 1974):

$$ATT = E\left[ Y\left( 1 \right) \mid D = 1 \right] - E\left[ Y\left( 0 \right) \mid D = 1 \right],$$

where $Y\left( D \right)$ denotes the outcome $Y$ as a function of treatment status $D$ (1 = treated, 0 = control). Since the last term is obviously counterfactual, in the estimated ATT it is substituted by the outcomes of non-treated individuals:

$$\widehat{ATT} = E\left[ Y\left( 1 \right) \mid D = 1 \right] - E\left[ Y\left( 0 \right) \mid D = 0 \right].$$

Accounting for the large number of relevant covariates (control variables), propensity score matching (PSM; Rosenbaum and Rubin, 1983) is used to estimate the ATT, which is then given as

$$\widehat{ATT}_{PSM} = E_{P\left( X \right) \mid D = 1}\left\{ E\left[ Y\left( 1 \right) \mid D = 1, P\left( X \right) \right] - E\left[ Y\left( 0 \right) \mid D = 0, P\left( X \right) \right] \right\},$$

where $P\left( X \right)$ is the propensity score, estimated as the prediction of treatment status obtained from a Probit regression on the explanatory variables $X$. The PSM estimate of the ATT is thus the mean difference in outcomes between treated and matched controls. For further methodological details, see Appendix section “Identifying assumptions and matching quality”.

We estimated the propensity score using a Probit regression of the treatment dummy on a rich set of covariates, including sociodemographics, household and partner characteristics, the last regular job’s characteristics, regional context indicators, and detailed labour market biography indicators. For covariate summary statistics, see Tables A6-A10 in the Appendix. As for the matching algorithm, we considered various procedures, using the psmatch2 command in Stata (Leuven and Sianesi, 2003). Our preferred algorithm is nearest-neighbour matching with five nearest neighbours (NN5 matching) and without calliper. We assessed matching quality by the mean standardized bias (MSB; Rosenbaum and Rubin, 1985) and the Pseudo R² of the covariates with respect to treatment status. The MSB was found to be in the order of 0.4 (varying slightly across sex-region groups) and the Pseudo R² between 0.001 and 0.002, meaning that almost no significant differences in covariate means remained between treated and matched controls (Table A2 in the Appendix). The table also reports the numbers of treated for whom the common support assumption is violated, showing that common support limitations are negligible. Overall, the findings are reassuring that the identifying assumptions are satisfied for the treatment and outcomes of interest.
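The matching step and the balance check described above can be sketched in a few lines of Python. This is not the psmatch2 implementation: it assumes that propensity scores have already been estimated, matches with replacement without calliper, and computes the standardized bias with variances taken from the full (unmatched) samples, which is one common convention. All function names and data are hypothetical.

```python
from statistics import mean, variance


def nn_match(ps_treated, ps_controls, k=5):
    """For each treated unit, return the indices of the k controls with the
    closest propensity scores (with replacement, no calliper)."""
    matches = []
    for p in ps_treated:
        ranked = sorted(range(len(ps_controls)),
                        key=lambda j: abs(ps_controls[j] - p))
        matches.append(ranked[:k])
    return matches


def att(y_treated, y_controls, matches):
    """Mean difference between each treated outcome and the average outcome
    of its matched controls."""
    diffs = [y - mean([y_controls[j] for j in m])
             for y, m in zip(y_treated, matches)]
    return mean(diffs)


def standardized_bias(x_treated, x_controls, matches):
    """Standardized bias (in %) of one covariate after matching.
    Assumption: the denominator uses full-sample variances."""
    matched_mean = mean([mean([x_controls[j] for j in m]) for m in matches])
    pooled_sd = ((variance(x_treated) + variance(x_controls)) / 2) ** 0.5
    return 100 * (mean(x_treated) - matched_mean) / pooled_sd


# Hypothetical toy data: 2 treated, 12 potential controls.
ps_treated = [0.30, 0.60]
ps_controls = [0.10, 0.28, 0.31, 0.33, 0.35, 0.29,
               0.58, 0.61, 0.62, 0.59, 0.65, 0.90]
y_treated = [0, 0]                                    # employment dummies
y_controls = [0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 1]
age_treated = [30.0, 50.0]
age_controls = [20.0, 30.0, 30.0, 30.0, 30.0, 30.0,
                50.0, 50.0, 50.0, 50.0, 50.0, 60.0]

matches = nn_match(ps_treated, ps_controls, k=5)
att_hat = att(y_treated, y_controls, matches)
bias_age = standardized_bias(age_treated, age_controls, matches)
```

Averaging `standardized_bias` over all covariates would give a mean standardized bias in the sense used above; in this toy example the matched controls reproduce the treated group's mean age exactly, so the bias for age is zero.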

To further demonstrate the validity of our matching approach, we present pre- and post-treatment employment outcomes, starting 84 months before (hypothetical) programme start in Appendix Figure A2. As we include quarterly pre-treatment employment rates in the covariate set, treated and controls have virtually the same employment rates in each of the 84 pre-treatment months covered by our observation period, but begin to diverge precisely at the (hypothetical) programme start date. Therefore, we are highly confident that the covariate balance achieved provides us with a solid ground for a causal interpretation of the ATT.

5. Econometric results

5.1. Overall results

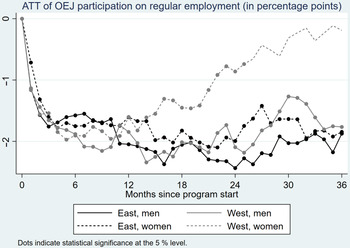

This section presents the ATT on the labour market outcomes of OEJ participants, notably regular (contributory, unsubsidized) employment, measured as a dummy variable for each of the 36 months after (hypothetical) programme start. [Footnote 11] The ATT on regular employment is presented in Figure 3, which indicates that OEJs have a significantly negative effect on the regular employment rate of the treated, ranging between circa −2.5 and −0.5 percentage points. The effect converges to about −2 percentage points towards the end of the observation period, for all groups except women in West Germany. This exception probably relates to their more frequent participation in OEJ sectors characterized by higher labour demand (see Figure A1). To gauge the quantitative importance of these effects, note that matched controls’ employment rates 24 months after programme start range between 9% (East) and 11% (West). Thus, the estimated ATTs are rather substantial relative to the baseline employment probability (roughly −20%).
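As a rough check of the relative magnitude, assume an ATT of about −2 percentage points and a baseline employment rate of about 10%, the midpoint of the 9% and 11% reported for matched controls (both round figures are simplifications for illustration):

$$\frac{-0.02}{0.10} = -0.20,$$

i.e. a reduction of about one fifth relative to the counterfactual employment probability.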

Figure 3. Employment effects of OEJs

Note to Figure 3: Data sources: IEB, LHG, LST-S, XMTH; own calculations.

The results of several robustness checks and sensitivity analyses are presented in the Appendix section “Robustness checks”. One such check worth pointing out is a jobcentre-exact matching algorithm, which accounts for local jobcentres’ discretion in interpreting assignment rules. This alternative algorithm did not yield substantially different results, suggesting that regional heterogeneity in OEJ assignment practices does not bias our results or render them imprecise. Other robustness checks are difference-in-differences matching (additionally controlling for time-invariant unobserved heterogeneity between treated and matched controls), entropy balancing (a flexible and very efficient weighting scheme to achieve covariate balance), and a Rosenbaum bounds sensitivity analysis. None of these calls our conclusions into question fundamentally. We are aware that our results may lack external validity: as we focus on OEJ participants and their comparable counterparts shortly after the 2012 reform, our results may not extend to later populations of OEJ participants.
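To illustrate the idea behind difference-in-differences matching, the following sketch compares before-after changes in the outcome rather than outcome levels, so that any time-invariant difference between a treated person and the matched controls cancels out. It is a simplified illustration under assumed inputs (a given set of matches and one pre- and one post-treatment outcome per person), not the estimator used in the Appendix; all names and data are hypothetical.

```python
from statistics import mean


def did_matching_att(pre_t, post_t, pre_c, post_c, matches):
    """Average, over treated units, of the treated before-after change minus
    the mean before-after change of the matched controls."""
    diffs = []
    for i, matched in enumerate(matches):
        change_treated = post_t[i] - pre_t[i]
        change_controls = mean([post_c[j] - pre_c[j] for j in matched])
        diffs.append(change_treated - change_controls)
    return mean(diffs)


# Hypothetical employment dummies before and after programme start.
pre_t, post_t = [0, 1], [1, 1]
pre_c, post_c = [0, 0, 1], [0, 1, 1]
matches = [[0, 1], [2]]  # indices of the controls matched to each treated

did_att = did_matching_att(pre_t, post_t, pre_c, post_c, matches)
```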

5.2. Participant and sectoral heterogeneity

In order to better compare our results with those of previous (pre-reform) studies, participants are further separated by how long they have been out of work (i.e. the time passed since their last regular job). This is a proxy measure of labour market detachment. Four subgroups are considered: (i) those who have never had a regular job (14% of the total sample), [Footnote 12] (ii) those whose last regular job ended at most one year ago (26%), (iii) more than one up to five years ago (46%), and (iv) more than five years ago (14%); see Table 1, panel B for observation numbers.

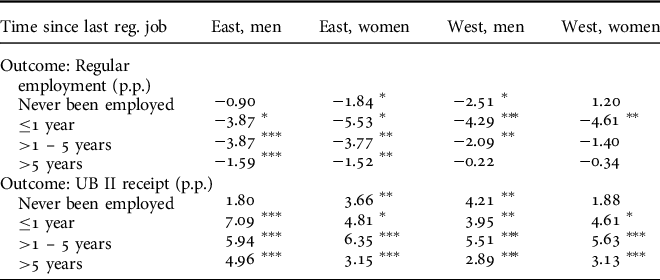

To summarize the employment results (Table 2), OEJs’ effects are found to be most detrimental for those employed until relatively recently (whose last regular job ended at most one year ago). This result is fully in line with previous studies such as Hohmeyer and Wolff (2012), who argued that easier-to-place participants may benefit less from OEJs because the programme does not address their specific needs. With limited variation between regions and sexes, these OEJ participants suffer employment rate effects around −5 percentage points, three years after programme start. For the never-employed, the results are not clear-cut, which is not surprising given that this group is rather heterogeneous, including relatively young people but also older unemployed with extremely long unemployment durations.

Table 2. Treatment effects (ATT) by time since last regular job, 3 years after programme start

Legend: * p<.05; ** p<.01; *** p<.001. Data sources: IEB, LHG, LST-S, XMTH; own calculations.

Building on Kiesel and Wolff (Reference Kiesel and Wolff2018), we classify OEJs’ sectors as high-demand, low-demand, and others as explained above (see Table 1, panel C for observation numbers). The ATT on regular employment is displayed in Figure A3 in the Appendix. As would be expected given the high share of OEJ participants in low-demand sectors, the estimates suggest that the overall results are driven by these (and other, i.e. residual) sectors. In contrast, in high-demand sectors, employment effects turn non-negative rather quickly, and in the case of West Germany, even significantly positive in the longer run (two to three years after programme start). This pattern of results supports Kiesel and Wolff’s (Reference Kiesel and Wolff2018) conclusion that demand in the regular labour market is related to OEJs’ efficacy via their changing structure in terms of sectors.

5.3. OEJs’ effects on further ALMP programme participation

Although OEJs are the least ambitious programme in Germany, the negative employment effects we found even in the medium run hint at some prolonged lock-in effects. Thus, we consider subsequent participation in other ALMP programmes as another outcome, as such sequences might prevent labour market integration (cf. Dengler, Reference Dengler2015; Dengler and Hohmeyer, Reference Dengler and Hohmeyer2010; Hohmeyer, Reference Hohmeyer2009). Accounting for the possibility – explicitly intended by the legislator – of combining OEJs with SAI, we focus on subsequent participation in SAI. We find that, after a steep drop in the first months after programme start (likely due to lock-in), participating in OEJs significantly increases the probability of participating in SAI (Figure 4). The effect stabilizes at about 1 percentage point, for both sexes and regions, in the medium run. Since the counterfactual (matched controls’) participation rate in SAI is around 3% (closer to 2.5% in East Germany and 3.5% in West Germany), this is a rather substantial magnitude in relative terms.
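As a rough back-of-the-envelope illustration of what “substantial in relative terms” means here, the rounded figures quoted above (an effect of about 1 percentage point against counterfactual participation rates of roughly 3%, 2.5%, and 3.5%) imply approximately the following relative increases. These ratios are only indicative, since both the effect and the baseline rates are rounded:

$$
\frac{1\ \text{pp}}{3\%} \approx 33\% \ \text{(overall)}, \qquad
\frac{1\ \text{pp}}{2.5\%} = 40\% \ \text{(East)}, \qquad
\frac{1\ \text{pp}}{3.5\%} \approx 29\% \ \text{(West)}.
$$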

Figure 4. Effects of OEJs on participation in SAI

Note to Figure 4: Data sources: IEB, LHG, LST-S, XMTH; own calculations.

5.4. Pre- versus post-reform effects of OEJs

The above results cannot be compared with those of previous evaluations without substantial qualifications, as much else has changed in parallel with the reform. However, guided by our theoretical discussion, we attempt to at least roughly assess how the effects of OEJs differ after versus before the reform, and hence potentially due to the reform. To this end, we build on Hohmeyer and Wolff (Reference Hohmeyer and Wolff2012), henceforth HW, as well as Kiesel and Wolff (Reference Kiesel and Wolff2018), henceforth KW, as the most closely related studies. Both used data and methods very similar to ours. In particular, we largely adopted HW’s approach to participant heterogeneity, and we built directly on KW in addressing sectoral heterogeneity.

The subgroups constructed by HW and KW, respectively, do not perfectly match our categorization. However, we can make them broadly comparable in both cases. Regarding participant structure (the comparison with HW), we use three rather than four categories, displayed in Table 3. Panels A1 and A2 juxtapose HW’s and our sample’s composition, showing that our sample comprises OEJ participants who have been jobless for longer.Footnote 13 Applying HW’s participant composition to our corresponding subgroup estimates 20 months after programme start (the end of HW’s observation period), we obtain counterfactual ATT estimates (line B2) that can, broadly, be compared with HW’s estimates (line B1) in order to see whether the deviation of our results from HW’s is driven by differences in participant composition. The evidence clearly rejects this hypothesis, as our counterfactual ATTs differ strongly from HW’s, in most cases even in sign. Conversely, the counterfactual ATTs are much closer to the ones we actually estimated (line B3). Thus, changes in participant selection do not seem to explain the large differences between the results of this pre-reform evaluation and those of our evaluation. This is despite the fact that the same pre-reform evaluation found a positive selection of OEJ participants from the pool of eligible unemployed, whereas we find a negative selection.
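The reweighting step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the exact procedure behind Table 3: it assumes that the counterfactual ATT is a simple share-weighted average of subgroup ATTs, the function name `counterfactual_att` is hypothetical, and all numbers are invented for illustration rather than taken from HW or from our estimates.

```python
from typing import Mapping


def counterfactual_att(subgroup_att: Mapping[str, float],
                       reference_shares: Mapping[str, float]) -> float:
    """Share-weighted average of subgroup ATTs under a reference composition.

    Assumption: the aggregate ATT is the weighted mean of subgroup ATTs,
    with weights given by the subgroup shares among participants.
    """
    if set(subgroup_att) != set(reference_shares):
        raise ValueError("subgroup labels must match in both mappings")
    total = sum(reference_shares.values())
    if total <= 0:
        raise ValueError("reference shares must sum to a positive number")
    # Normalise the shares so that they need not sum exactly to one.
    return sum(subgroup_att[g] * reference_shares[g] / total
               for g in subgroup_att)


# Hypothetical numbers for illustration only (NOT the values in Table 3).
own_att = {"recent": -0.05, "medium": -0.02, "long_or_never": 0.00}
own_shares = {"recent": 0.2, "medium": 0.5, "long_or_never": 0.3}
ref_shares = {"recent": 0.5, "medium": 0.3, "long_or_never": 0.2}

actual = counterfactual_att(own_att, own_shares)          # own composition
counterfactual = counterfactual_att(own_att, ref_shares)  # reference composition
print(f"actual: {actual:.3f}, counterfactual: {counterfactual:.3f}")
```

If the counterfactual value stays close to the actual one, as in lines B2 and B3 of Table 3, differences in composition alone cannot account for the gap to the reference study.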

Table 3. Calculation of counterfactual ATT applying HW’s OEJ participant structure

*Statistically significant at the 5% level. **Statistical significance not assessed. Data sources: Hohmeyer and Wolff (2012), IEB, LHG, LST-S, XMTH; own calculations.

In Table 4, we perform the same exercise for a comparison with KW. Again, some qualifications are necessary to make the results comparable. First, we aggregated the sectors considered by KW into the broader categories high-demand, low-demand, and other, as before. Second, we had to extrapolate KW’s estimates, which refer to 72 months after programme start, to 36 months after programme start (not reported by KW), assuming that the relative deviations of each sector’s ATT from the total-sample ATT (reported by KW also for 36 months after programme start) are the same at both points in time. As in Table 3, panels A1 and A2 in Table 4 confirm the ‘lower-demand’ composition of OEJ participations (treatments) by sector in our study as compared with KW. More importantly, our counterfactual ATT estimates (applying KW’s sectoral composition to our sector-specific estimates) hardly deviate from our actual estimates, and they come nowhere close to KW’s.
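The extrapolation assumption can be written out as follows. The notation is introduced here purely for illustration and reads “relative deviation” as a proportional deviation: let $\widehat{ATT}_{s,t}$ denote KW’s estimate for sector $s$ at $t$ months after programme start, and $\widehat{ATT}_{t}$ the corresponding total-sample estimate. Assuming equal relative deviations at both points in time (and $\widehat{ATT}_{72} \neq 0$),

$$
\frac{\widehat{ATT}_{s,36} - \widehat{ATT}_{36}}{\widehat{ATT}_{36}}
= \frac{\widehat{ATT}_{s,72} - \widehat{ATT}_{72}}{\widehat{ATT}_{72}}
\quad\Longrightarrow\quad
\widehat{ATT}_{s,36} = \widehat{ATT}_{36} \cdot \frac{\widehat{ATT}_{s,72}}{\widehat{ATT}_{72}} .
$$

Under this reading, each sector’s 36-month value is the reported 36-month total-sample ATT scaled by that sector’s ratio to the total-sample ATT at 72 months.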

Table 4. Calculation of counterfactual ATT applying KW’s OEJ sectoral structure

*Statistical significance not assessed, as the reported numbers are synthetic composites of estimates rather than estimates themselves. Data sources: Kiesel and Wolff (2018), IEB, LHG, LST-S, XMTH; own calculations.

In sum, neither the pre- versus post-reform differences in participant selection (partly a result of the reform) nor those in sectoral composition can, except to a very small extent, explain the strong negative deviation of our estimated employment effects from those of pre-reform evaluations. Thus, we need to turn to other pre- versus post-reform changes that may explain this deviation. The first of these changes is the abolition of qualification elements, which was part of the reform. Since no previous study has investigated the employment effects of OEJs with versus without qualification elements, we can consider this potential channel of effects only indirectly. We do so by drawing on the effect of OEJs on subsequent participation in other, similar programmes, because caseworkers are likely to compensate for the loss of qualification elements by using subsequent programmes to follow up on OEJs. Our findings (section 5.3) are consistent with this presumption, suggesting that OEJs trigger longer sequences of programmes. In the worst case, such sequences may amount to “programme careers” that shield participants from the regular labour market. To some extent, this may explain the rather persistent negative employment effects of the reformed OEJs.

The second pre- versus post-reform change that may explain the deviation of our findings from previous studies concerns the business cycle. It is beyond the scope of this study to quantify the correlation between cyclical conditions and OEJs’ employment effects – this would amount to a study of its own, similar to, e.g., Lechner and Wunsch (Reference Lechner and Wunsch2009). However, such studies suggest a countercyclical pattern of ALMP programme effects, whereas no such negative relationship was found for OEJs with regard to regional labour market conditions (Hohmeyer and Wolff, Reference Hohmeyer and Wolff2007). Our findings are in line with a countercyclical pattern, suggesting that being treated with OEJs becomes less valuable in a prosperous labour market. Indeed, as employment chances improve during prosperity, the lock-in effect during treatment is likely to become stronger, all the more so if it is prolonged by subsequent programme participation. Thus, a change in programme design (abolition of qualification elements) in combination with cyclical conditions may largely explain the relatively unfavourable employment effects we found.

6. Discussion and conclusion

This study evaluates One-Euro-Jobs (OEJs), a German workfare programme, after a major reform in 2012. The reform aimed to restrict participation in the programme to very hard-to-place unemployed welfare benefit recipients, responding to previous evaluation results that pointed out insufficient targeting (e.g. Hohmeyer and Wolff, Reference Hohmeyer and Wolff2012). Descriptive findings indicate that the stricter targeting rules work, at least to some degree. At the same time, OEJs are increasingly placed in sectors where labour demand is rather low and its trend negative (Kiesel and Wolff, Reference Kiesel and Wolff2018). Furthermore, the reform has abolished qualification elements within OEJs. We hypothesize that caseworkers compensate for this by assigning OEJ participants to subsequent programmes.

Using rich administrative data and matching methods, we find strong evidence that the reformed OEJs mostly have negative employment effects throughout the three years after programme start. The effects are less detrimental for participants who have been out of work for a relatively long time. Furthermore, OEJs placed in sectors with relatively high or rising labour demand yield better employment effects. These patterns of results are in line with previous evaluations, but overall, our findings seem quite disappointing compared with the findings of pre-reform evaluations. However, this comparison can only be drawn cautiously, after adjusting for a number of relevant changes that have occurred contemporaneously with, but not necessarily due to, the reform.

We therefore performed a simple decomposition exercise focusing on intertemporal changes in OEJs’ participant structure and sectoral composition. Participant structure might affect OEJs’ effectiveness through more or less severe lock-in and stigma effects. OEJs’ sectoral composition may render the programme more or less effective due to sectoral heterogeneity of demand in the regular labour market. However, neither of these intertemporal changes can explain the differences between our estimated employment effects and those of pre-reform evaluations.

A business-cycle explanation seems more plausible, as the literature suggests a countercyclical relationship between aggregate labour market performance and ALMP programme effects (e.g. Card et al., Reference Card, Kluve and Weber2018). This explanation receives further support from our finding that OEJs have a positive effect on subsequent ALMP programme participation, likely prolonging their lock-in effect. This adverse effect should be more severe in times of overall economic prosperity, as participants’ counterfactual (non-treated) employment outcomes improve. Since the reform abolished the optional qualification elements of OEJs, the reform may even have caused this effect (OEJs triggering programme careers), as caseworkers may substitute longer programme sequences for the abolished qualification elements.

To conclude, OEJs in their post-reform design appear to be more of a last resort, although not exclusively so, than a first step towards integration into self-sufficient employment. Although targeting has become stricter (as intended), OEJs still exert substantial lock-in effects, particularly on the more employable participants – meaning that our findings would be even worse without these improvements in targeting. Furthermore, contemporaneous changes in business cycle conditions and programme design might have had a negative impact offsetting the (apparently small) gains in efficacy from better targeting. At best, the reformed OEJs trigger subsequent participation in other ALMP programmes, which – although “locking in” participants for longer periods – may improve their long-run labour market outcomes. Researchers and policymakers should therefore consider the interactions between cyclical conditions, participant selection, and the design not just of individual programmes, but also of programme sequences. Such complex and partly unpredictable interactions may affect programme effectiveness substantially.

Acknowledgements

We thank Joachim Wolff for invaluable help and advice. Many thanks also to conference participants at ESPE 2019 and ESPAnet 2019 and seminar participants at IAB for valuable comments, and to Aylin Steyer, Natalie Bella, Hanna Brosch, Veronika Knize, and Markus Wolf for excellent research assistance. We further thank the two anonymous reviewers for their fruitful comments. All remaining errors are ours.

Competing interests

The authors declare none.

Supplementary material

To view supplementary material for this article, please visit https://doi.org/10.1017/S0047279421000313.