1. Introduction

When the heat released by a heat source, such as a flame, is sufficiently in phase with the acoustic waves of a confined environment, such as a gas turbine or a rocket, thermoacoustic oscillations may occur (Rayleigh 1878). Thermoacoustic oscillations manifest themselves as large-amplitude vibrations, which can be detrimental to gas-turbine reliability (e.g. Lieuwen 2012), and can be destructive in high-power-density motors such as rocket engines (e.g. Culick & Kuentzmann 2006). The objective of manufacturers is to design devices that are thermoacoustically stable, which is the goal of optimisation, and to suppress a thermoacoustic oscillation if it occurs, which is the goal of control (e.g. Magri 2019). Both optimisation and control rely on a mathematical model, which provides predictions of the key physical variables, such as the acoustic pressure and the heat-release rate. The accurate prediction of thermoacoustic oscillations, however, remains one of the most challenging problems faced by power generation, heating and propulsion manufacturers (e.g. Dowling & Morgans 2005; Noiray et al. 2008; Lieuwen 2012; Poinsot 2017; Juniper & Sujith 2018).

The prediction of thermoacoustic dynamics – even in simple systems – is challenging for three reasons. First, thermoacoustics is a multi-physics phenomenon. For a thermoacoustic oscillation to occur, three physical subsystems (flame, acoustics and hydrodynamics) interact constructively with each other (e.g. Lieuwen 2012; Magri 2019). Second, thermoacoustics is a nonlinear phenomenon (e.g. Sujith & Unni 2020). In general, the flame's heat release responds nonlinearly to acoustic perturbations (Dowling 1999), and the hydrodynamics are typically turbulent (e.g. Huhn & Magri 2020b). Third, thermoacoustics is sensitive to perturbations to the system. In the linear regime, small changes to the system's parameters, such as the flame time delay, can cause arbitrarily large changes of the eigenvalue growth rates at exceptional points (Mensah et al. 2018; Orchini et al. 2020). In the nonlinear regime, small changes to the system's parameters can cause a variety of nonlinear bifurcations of the solution. As a design parameter is varied in a small range, thermoacoustic oscillations may become chaotic, by either period doubling or Ruelle–Takens–Newhouse scenarios (Gotoda et al. 2011, 2012; Kabiraj & Sujith 2012; Kashinath, Waugh & Juniper 2014; Orchini, Illingworth & Juniper 2015; Huhn & Magri 2020b), or by intermittency bifurcation scenarios (Nair, Thampi & Sujith 2014; Nair & Sujith 2015).
The rich bifurcation behaviour has an impact on the effectiveness of control strategies, which may work for periodic oscillations with a dominant frequency, but may not work as effectively for multi-frequency oscillations. Additionally, unpredictable changes in the operating conditions and turbulence, which can be modelled as random phenomena (Nair & Sujith 2015; Noiray 2017), increase the uncertainty in the prediction of the quantities of interest.

Thermoacoustics can be modelled with a hierarchy of assumptions and computational costs. Large-eddy simulations make assumptions only on the finer flow scales, which makes the final solution high-fidelity, but computationally expensive (Poinsot 2017). Euler and Helmholtz solvers compute the acoustics that evolve on a prescribed mean flow, which makes the solution medium-fidelity and computationally less expensive than turbulent simulations (e.g. Nicoud et al. 2007). Commonly, this is achieved with flame models, which capture the heat-release response to acoustic perturbations with transfer functions (e.g. Noiray et al. 2008; Silva et al. 2013) and distributed time delays (Polifke 2020). Other medium-fidelity and medium-cost methods are based on flame-front tracking (e.g. Pitsch & de Lageneste 2002) and simple chemistry models (e.g. Magri & Juniper 2014), to name only a few. On the other hand, low-order models based on travelling waves and standing waves (Dowling 1995) provide low-fidelity solutions, but with low computational cost. These low-order models capture only the dominant physical mechanisms, which are the flame time delay, the flame strength (or index), and the damping. Low-order models, which are the subject of this study, are attractive to practitioners because they provide quick estimates of the quantity of interest. Along with modelling, accurate experimental data are becoming more accessible and available (O'Connor, Acharya & Lieuwen 2015). To monitor the thermoacoustic behaviour in both real engines and academic rigs (such as the Rijke tube), the pressure is measured experimentally by microphones (Lieuwen & Yang 2005; Kabiraj et al. 2012a). Microphones sample the pressure amplitude at typically high rates, which generates large datasets in real time. Except when required for diagnostics and a posteriori parameter identification (among many others, Polifke et al. 2001; Schuermans 2003; Lieuwen & Yang 2005; Noiray et al. 2008; Noiray 2017; Polifke 2020), the data are useful if they can be used in real time, i.e. on the fly, to correct (or update) our knowledge of the thermoacoustic states. The sequential assimilation method that we develop bypasses the need to store data, which enables the real-time assimilation of data as well as on-the-fly parameter estimation.

To summarise, in thermoacoustics, we have three ingredients to improve the design: (i) a human being, who identifies the physical mechanisms that need to be modelled depending on the objectives and resources; (ii) a mathematical model, which provides estimates of the physical states; and (iii) experimental data, which provide a quantitative measure of the system's observables. A model is good if the human being identifies the physical mechanisms needed to formulate a mathematical model that provides the system's states compatibly with the experimental data. The overarching objective of this paper is to propose a method to make qualitatively accurate low-order models quantitatively (more) accurate every time that reference data become available. The ingredients for this are: a physical low-order model, which provides the states; data, which provide the observables; and a statistical method, which finds the most likely model by assimilating the data in the model. In weather forecasting, this process is known as data assimilation (Sasaki 1955). Data assimilation techniques have been applied to oceanographic studies (Eckart 1960), aerospace control (Gelb 1974), robotics, geosciences and cognitive sciences (Reich & Cotter 2015), to name only a few. Data assimilation is a principled method, which, in contrast to traditional machine learning, uses a physical model to provide a prediction on the solution (the forecast), which is updated when observations become available to provide a corrected state (the analysis) (Reich & Cotter 2015). The analysis is an estimator of the physical state (the true state), which is more accurate than the forecast.

1.1. Data assimilation

Data assimilation methods can be divided into two main approaches (Lewis, Lakshmivarahan & Dhall 2006): (i) variational and (ii) statistical assimilation methods. Variational data assimilation requires the minimisation of a cost functional – e.g. a Mahalanobis (semi)norm – in terms of a control variable to obtain a single optimal analysis state (Bannister 2017). The most common variational methods are 3D-VAR and 4D-VAR, which are used widely in weather centres such as the Met Office in the UK or the European Centre for Medium-Range Weather Forecasts, and in academic research (Bannister 2008). In thermoacoustics, variational data assimilation was introduced by Traverso & Magri (2019), who found the optimal thermoacoustic states given reference data from pressure observations. Because variational methods need batches of data, they are not naturally suited to real-time inference. On the other hand, statistical data assimilation combines concepts of probability and estimation theory. The aim of statistical data assimilation is to compute the probability distribution function of a numerical model to combine it statistically with data from observations. Because the probability distribution function is high-dimensional, the practitioner is often interested in capturing only the first and second statistical moments of it. In reduced-order modelling, this was achieved in flame tracking methods by Yu, Juniper & Magri (2019), who implemented ensemble Kalman filters and smoothers to learn the flame parameters on the fly. In high-fidelity methods in reacting flows, data assimilation with ensemble Kalman filters has been applied in large-eddy simulation of premixed flames to predict local extinctions in a jet flame (Labahn et al. 2019), and in under-resolved turbulent simulation to predict autoignition events (Magri & Doan 2020). The ensemble Kalman filter has also been applied successfully to non-reacting flow systems that show high nonlinearities, such as the estimation of turbulent near-wall flows (Colburn, Cessna & Bewley 2011), uncertainties in Reynolds-averaged Navier–Stokes (RANS) equations (Xiao et al. 2016), and aerodynamic flows (da Silva & Colonius 2018). Statistical data assimilation based on Bayesian methods, which enable real-time prediction in contrast to variational methods, was introduced in thermoacoustics by Nóvoa & Magri (2020).

1.2. Model bias

Commonly, data assimilation methods are derived under the assumption that forecast errors are random with zero mean (Evensen 2009), or, in other words, the error is unbiased. However, in addition to state and parameter uncertainties, low-order models are affected by model uncertainty, which manifests as an error bias. Modelling the model bias is an active research area. To produce an unbiased analysis, both forecast and observation biases need to be estimated (Dee & da Silva 1998). Friedland (1969) developed the separate Kalman filter to estimate the bias, which is a two-stage sequential filtering process that addresses the estimation of a constant bias term, but its application is limited to linear processes. Drécourt, Madsen & Rosbjerg (2006) extended the implementation of the separate Kalman filter and compared it to the coloured noise Kalman filter, which augments the state vector with an auto-regressive model that describes the bias. They proposed a feedback implementation of these methods, which allows a time-correlated representation of the bias, but the accuracy is limited by the prescribed model of the bias. Houtekamer & Zhang (2016) reviewed techniques that involve multi-physical parametrisation to reduce the model bias in atmospheric data assimilation, which are more computationally expensive than single-model approaches. More recently, there have been studies that estimated model-related errors in the assimilation cycle. Rubio, Chamoin & Louf (2019) proposed a data-based approach to model the error that arises from the truncation of proper generalised decomposition modes, which was integrated into a Bayesian inference method.
Da Silva & Colonius (2020) proposed a low-rank representation of the observation discretisation bias, based as well on an auto-regressive model. They performed parameter estimation with an ensemble Kalman filter to calibrate the parameters of the auto-regressive model. These works modelled successfully the truncation and discretisation bias, but they did not model the physical model bias, which is a more general form of error that will be tackled in this paper. The estimation of a nonlinear dynamical state in the presence of a model bias remains an open problem. We propose a framework to obtain an unbiased analysis in thermoacoustic low-fidelity models by inferring the model bias. This consists of the combination of data assimilation with a recurrent neural network, which infers the model error of the low-fidelity model of the thermoacoustic system.

Recurrent neural networks are data-driven techniques that are designed to learn temporal correlations in time series (Rumelhart, Hinton & Williams 1986), with a variety of applications in time series forecasting. In fluid mechanics, recurrent neural networks have been employed to model unsteady flow around bluff bodies (Hasegawa et al. 2020), and as an optimisation tool for gliding control (Novati, Mahadevan & Koumoutsakos 2019). Among recurrent neural networks, echo state networks based on reservoir computing, which are universal approximators (Grigoryeva & Ortega 2018), proved to be successful in learning nonlinear correlations in data (Maass, Natschläger & Markram 2002; Jaeger & Haas 2004) and ergodic properties (Huhn & Magri 2022). Training an echo state network consists of a simple linear regression problem. This is a simple and computationally cheap task with respect to the back propagation required in other architectures (such as long short-term memory networks), which makes echo state networks suited to real-time assimilation. Because no gradient descent is necessary, vanishing/exploding gradient problems do not occur in echo state networks. In chaotic flows, echo state networks have been used, for instance, to learn and optimise the time average of thermoacoustic dynamics (Huhn & Magri 2020a, 2022), to predict turbulent dynamics with physical constraints (Doan, Polifke & Magri 2021), and to predict the statistics of extreme events in turbulent flows (Racca & Magri 2021).
In this paper, we propose to model the model bias with an echo state network, which is a more versatile and general tool than auto-regressive models (Aggarwal 2018, p. 306).

1.3. Objectives and structure

The objectives of this paper are fivefold. First, we develop a sequential data assimilation method for a low-order model to self-adapt and self-correct any time that reference data become available. The method, which is based on Bayesian inference, provides the maximum a posteriori estimate of the model prediction, i.e. the most likely prediction. Second, we apply the methodology to infer the thermoacoustic states and heat-release parameters on the fly without storing data. Third, we analyse the performance of the data assimilation algorithm on a twin experiment with synthetic data, and interpret the results physically. Fourth, we propose practical rules for thermoacoustic data assimilation. Fifth, we extend the data assimilation method to account for a biased thermoacoustic model. This method is tested by assimilating observations from a model with non-ideal boundary conditions, a mean flow and the simulation of the flame front with a kinematic model (Dowling 1999). The simulation of the flame dynamics is suitable for a time-domain approach, and it overcomes the limitations of flame response models.

The paper is structured as follows. Section 2 provides a description of the nonlinear thermoacoustic model with the data assimilation technique and its implementation for thermoacoustics. Section 3 presents the method developed for state and parameter estimation. Section 4 presents the method developed for combining data assimilation with an echo state network. Section 5 presents the nonlinear characterisation of the thermoacoustic dynamics. Section 6 shows the results for non-chaotic regimes, whereas § 7 shows and discusses the results for chaotic solutions. Section 8 shows and discusses the results for unbiased state and parameter estimation for a high-fidelity limit cycle. A final discussion and conclusions end the paper in § 9.

2. Thermoacoustic data assimilation

We consider a nonlinear thermoacoustic model, $\mathcal {T}$, as

where $\boldsymbol {\psi }$ is the state of the system, $\boldsymbol {\alpha }$ is the vector of the system's parameters, $\boldsymbol {y}$ are the observables from reference data, $\boldsymbol {F}$ is a nonlinear operator, which, in general, is differential, $\boldsymbol {\delta }$ is a model error, $\mathcal {G}$ is a nonlinear map from the state space to the observable state, and $\boldsymbol {\epsilon }$ is the observation error. The thermoacoustic problem on which we focus is: ‘given some data (observables) $\boldsymbol {y}$, and a mathematical model $\mathcal {T}$, what are the most likely physical states $\boldsymbol {\psi }$ and parameters $\boldsymbol {\alpha }$ of the system?’ To answer this, we use a Bayesian approach in the well-posed maximum a posteriori estimation framework. Although the framework is versatile, in the following subsections, we specify the low-order model $\boldsymbol {F}$, the data $\boldsymbol {y}$, and the data assimilation approach.
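For reference, a form of $\mathcal {T}$ that is consistent with the definitions above can be sketched as follows. The additive placement of the model error $\boldsymbol {\delta }$ and of the observation error $\boldsymbol {\epsilon }$ is an assumption made for illustration, not a statement taken from the text.

```latex
\begin{equation*}
\mathcal{T}:\quad
\boldsymbol{F}(\boldsymbol{\psi},\boldsymbol{\alpha}) + \boldsymbol{\delta} = \boldsymbol{0},
\qquad
\boldsymbol{y} = \mathcal{G}(\boldsymbol{\psi}) + \boldsymbol{\epsilon}.
\end{equation*}
```

In this reading, the first relation is the (imperfect) governing model and the second relates the state to the (noisy) observables.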

2.1. Qualitative nonlinear thermoacoustic model

The system consists of an open-ended tube containing a heat source, such as a flame or an electrically heated gauze. Because the tube is sufficiently long with respect to the diameter, the cut-on frequency is such that only longitudinal acoustic waves propagate. This is known as the Rijke tube, which is a common laboratory-scale device that has been employed in a variety of fundamental studies (Heckl 1990; Balasubramanian & Sujith 2008; Juniper 2011; Magri et al. 2013). This device is represented in figure 1. The Rijke model used in this work is described by Balasubramanian & Sujith (2008) and Juniper (2011). The flow is assumed to be a perfect gas, the mean flow is sufficiently slow such that its effects are neglected in the acoustic propagation, and viscous and body forces are neglected. The acoustics are governed by the dimensionless linearised momentum and energy conservation equations

where $u'$ is the acoustic velocity, $p'$ is the acoustic pressure, $\dot{Q}$ is the heat-release rate, $x_{f}$ is the flame location, ${\delta }$ is the Dirac delta distribution, which models the heat source as a point source (compact assumption), and $\zeta$ is the damping factor, which encapsulates the acoustic energy radiated from both ends of the duct, and the thermoviscous losses in boundary layers. The non-dimensional heat-release rate perturbation, ${\dot{Q}}$, is modelled with a qualitative nonlinear time-delayed model (Heckl 1990):

where $\beta$ is the strength of the source, $u'_{{f}}$ is the acoustic velocity at the flame location, and ${\tau }$ is the time delay. The heat-release rate is a key thermoacoustic parameter for the system's stability. The dimensionless variables in (2.3)–(2.4) and the dimensional variables (with $\,\tilde {\;}\,$) are related as $x = {\tilde {x}}/{\tilde {L}_0}$, where ${\tilde {L}_0}$ is the length of the tube, $t = {\tilde {t}}{\tilde {c}_0}/\tilde {L}_0$ (where $\tilde {c}_0$ is the mean speed of sound), $u' = {\tilde {u}'}/{\tilde {c}_0}$, $\rho ' = {\tilde {\rho }'}/{\tilde {\rho }_0}$ (where $\tilde {\rho }_0$ is the mean density), $p' = {\tilde {p}'}/({\tilde {\rho }_0\,\tilde {c}_0^2})$, $\dot{Q} = {\tilde {\dot{Q}}'(\gamma -1)}/({\tilde {\rho }_0\,\tilde {c}_0^3})$ (where $\gamma$ is the heat capacity ratio), and $\delta ({x}-{x}_{{f}}) = {\tilde {\delta }(\tilde {x}-\tilde {x}_{{f}})}{\tilde {L}_0}$. The open-ended boundary conditions are ideal, which means that the acoustic pressure is zero, i.e. $p'=0$ at $x=\{0,1\}$. By separation of variables, the acoustic velocity and pressure are decomposed into Galerkin modes as (Zinn & Lores 1971)

\begin{equation} u'(x,t)=\sum^{N_m}_{j=1}\eta_j(t)\cos{(\,j{\rm \pi} x)},\quad p'(x,t)={-}\sum^{N_m}_{j=1}\dfrac{\dot{\eta}_j(t)}{j{\rm \pi}}\sin{(\,j{\rm \pi} x)}, \end{equation}


where $\cos (\,j{\rm \pi} x)$ and $\sin (\,j{\rm \pi} x)$ are the eigenfunctions of the acoustic velocity and pressure, respectively, when $\zeta =0$ and $\dot{Q}=0$, and $N_m$ is the number of acoustic modes kept in the decomposition. Substituting (2.5a,b) into (2.3), multiplying (2.3b) by $\sin {(k{\rm \pi} x)}$, and integrating over $x=[0,1]$, yields the governing ordinary differential equations, which represent physically a set of nonlinearly coupled oscillators:

where the damping term is defined by modal components $\zeta _j=C_1\,j^2+C_2\sqrt {j}$, which is motivated physically in Landau & Lifshitz (1987). The damping coefficients $C_1$ and $C_2$ are assumed to be constant. For reasons that will be explained in § 2.3, we introduce an advection equation to eliminate mathematically the time-delayed velocity term (Huhn & Magri 2020b):

where $v$ is a dummy variable that travels with non-dimensional velocity $\tau ^{-1}$ in a dummy spatial domain $X$ such that

Equations (2.8a,b) are discretised with a Chebyshev method (Trefethen 2000) with $N_c + 1$ points in the interval $0\leqslant X\leqslant 1$.
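For reference, the relations described in this subsection can be sketched as follows. These forms are assumptions: they follow the standard Rijke-tube model of the literature cited above (Heckl 1990; Juniper 2011; Huhn & Magri 2020b) and are written with the symbols defined in this section, but signs and normalisations should be checked against those sources. The first line is the linearised momentum and energy conservation, the second is the time-delayed heat-release law, the third is the pair of modal equations obtained by projecting the energy equation onto $\sin (k{\rm \pi} x)$ with the Galerkin expansion (2.5a,b), and the fourth is the advection equation that carries the delayed velocity.

```latex
\begin{gather*}
\frac{\partial u'}{\partial t} + \frac{\partial p'}{\partial x} = 0, \qquad
\frac{\partial p'}{\partial t} + \frac{\partial u'}{\partial x} + \zeta\, p' - \dot{Q}\,\delta(x - x_f) = 0, \\
\dot{Q} = \beta\left(\sqrt{\left|\tfrac{1}{3} + u'_f(t-\tau)\right|} - \sqrt{\tfrac{1}{3}}\right), \\
\frac{\mathrm{d}\eta_j}{\mathrm{d}t} - j\pi\left(\frac{\dot{\eta}_j}{j\pi}\right) = 0, \qquad
\frac{\mathrm{d}}{\mathrm{d}t}\left(\frac{\dot{\eta}_j}{j\pi}\right) + j\pi\,\eta_j
  + \zeta_j\,\frac{\dot{\eta}_j}{j\pi} + 2\,\dot{Q}\sin(j\pi x_f) = 0, \\
\frac{\partial v}{\partial t} + \frac{1}{\tau}\frac{\partial v}{\partial X} = 0, \quad 0\leqslant X\leqslant 1, \qquad
v(X=0,t) = u'_f(t), \qquad u'_f(t-\tau) = v(X=1,t).
\end{gather*}
```

Under these assumptions, the advection equation has the solution $v(X,t) = u'_f(t-\tau X)$, so the delayed velocity needed by the heat-release law is read at $X=1$ and the system no longer depends explicitly on its past.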

Figure 1. Schematic of an open-ended duct with a heat source (also known as the Rijke tube). The heat released by the compact heat source is indicated by the vertical dotted line. The blue vertical lines indicate microphones located equidistantly.

In a state-space notation, the thermoacoustic problem is governed by

\begin{equation} \left.\begin{gathered} \frac{{\rm d}\boldsymbol{{\psi}}}{{\rm d}t} = \boldsymbol{F}(\boldsymbol{{\alpha}}; \boldsymbol{{\psi}}), \quad \boldsymbol{{\psi}}(t=0) = \boldsymbol{{\psi}}_0, \\ \boldsymbol{y}= \boldsymbol{\mathsf{M}}(\boldsymbol{{x}})\,\boldsymbol{\psi}, \end{gathered}\right\} \end{equation}

where the state vector $\boldsymbol{\psi}\equiv(\boldsymbol{\eta}, \boldsymbol{\dot{\eta}}, \boldsymbol{v})^\mathrm{T}\in\mathbb{R}^{2N_m+N_c}$ is the column concatenation of the acoustic amplitudes $\boldsymbol{\eta} \equiv (\eta_1,\eta_2,\ldots,\eta_{N_m})^\mathrm{T}\in\mathbb{R}^{N_m}$ and $\boldsymbol{\dot{\eta}} \equiv ({\dot{\eta}_1}/{\rm \pi},{\dot{\eta}_2}/{(2{\rm \pi})},\ldots,{\dot{\eta}_{N_m}}/{(N_m{\rm \pi})})^\mathrm{T}\in\mathbb{R}^{N_m}$, and the dummy velocity variables $\boldsymbol{v}\equiv(v_1, v_2,\ldots, v_{N_c})^\mathrm{T}\in\mathbb{R}^{N_c}$, which arise from the discretisation of (2.7). Also, the thermoacoustic parameters are contained in the vector $\boldsymbol{\alpha}=(\beta, \tau, \zeta)^\mathrm{T}\in\mathbb{R}^{N_P}$; $\boldsymbol {F}$ represents the nonlinear operator that consists of (2.6a), (2.6b) and (2.7), $\boldsymbol {F}: \mathbb {R}^{2N_m+N_c+N_P}\rightarrow \mathbb {R}^{2N_m+N_c}$; and $\boldsymbol{\mathsf{M}}(\boldsymbol {{x}})$ is the measurement operator, which maps the state to the observable space at $\boldsymbol {{x}}$. The expression of the measurement operator depends on the nature of the observables being assimilated, as explained in § 3. To work with a reduced-order model that captures qualitatively the essential dynamics, we use $N_m=10$ acoustic modes. For the advection equation, $N_c = 10$ ensures numerical convergence (Huhn & Magri 2020b). The number of degrees of freedom of the reduced-order model is $N= 2N_m + N_c=30$. The initial value problem (2.9) is solved with an automatic step size control method that combines fourth- and fifth-order Runge–Kutta methods (Shampine & Reichelt 1997).
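The state layout and the linear observation $\boldsymbol{y}=\boldsymbol{\mathsf{M}}(\boldsymbol{x})\,\boldsymbol{\psi}$ can be illustrated with a minimal sketch. It assumes that the observables are acoustic pressures at a few microphones, so that each row of $\boldsymbol{\mathsf{M}}$ follows from the pressure expansion in (2.5a,b); this choice of observable, the microphone positions and the function names are hypothetical and serve only as an illustration.

```python
import math

N_M = 10  # number of acoustic modes (value quoted in the text)
N_C = 10  # number of advection variables (value quoted in the text)


def pressure_measurement_operator(mic_locations, n_modes=N_M, n_cheb=N_C):
    """Build M so that M @ psi gives p'(x) at each microphone.

    Assumes psi = (eta, eta_dot/(j*pi), v), i.e. the layout of the state
    vector in the text, and pressure observables (an assumption).
    """
    rows = []
    for x in mic_locations:
        eta_block = [0.0] * n_modes  # pressure does not depend on eta
        # p'(x, t) = -sum_j (eta_dot_j / (j*pi)) * sin(j*pi*x), from (2.5b)
        eta_dot_block = [-math.sin(j * math.pi * x)
                         for j in range(1, n_modes + 1)]
        v_block = [0.0] * n_cheb     # nor on the dummy advection variables
        rows.append(eta_block + eta_dot_block + v_block)
    return rows


def observe(M, psi):
    """Apply the measurement operator: y = M psi."""
    return [sum(m * s for m, s in zip(row, psi)) for row in M]


# Hypothetical microphone positions along the tube (not from the text)
mics = [0.2, 0.4, 0.6, 0.8]
M = pressure_measurement_operator(mics)

# Example state in which only the first acoustic mode is active
psi = [0.0] * (2 * N_M + N_C)
psi[N_M] = 0.5  # entry holding eta_dot_1 / pi
y = observe(M, psi)
```

With this layout, $\boldsymbol{\mathsf{M}}$ has one row per microphone and $N=30$ columns, and a microphone placed at either open end would return zero pressure, in agreement with the ideal boundary conditions.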

2.2. Qualitative and quantitative accuracy

We say that a low-order model is qualitatively correct when it captures the key physical parameters/mechanisms (e.g. the time delay). Although a low-order model may be physically motivated, it is subject to three sources of errors: (i) uncertainty in the state; (ii) uncertainty in the parameters; and (iii) bias in the model, i.e. the low-order equation does not contain all the terms necessary to model the phenomenon (the model bias is equivalently referred to as the model error). Data assimilation methods combine the forecast of a low-order model with observations from either real experiments or high-fidelity simulations, which reduces the bias in the state (§ 3.1) and/or in the parameters of the model (§ 3.2). However, traditional data assimilation methods do not tackle the model bias because they assume that the forecast model is unbiased. In § 4, we propose an echo state network as a method to estimate the model bias, thereby closing the low-order model equations in the data assimilation. In summary, the aim of data assimilation is to make a qualitatively accurate model more quantitatively correct.

2.3. Data assimilation

Data assimilation optimally combines the prediction from an imperfect model with data from observations to improve the knowledge of the system's state. The updated solution (analysis) optimally combines the information from the observations $\boldsymbol {y}$ and the model solution (forecast) with their uncertainties. In order to (i) update the system's knowledge any time that data become available, and (ii) not store the data during the entire operation, we assimilate sequentially assuming that the process is a Markovian process. The concept of Bayesian update is key to this process, as explained in § 2.3.1.

2.3.1. Bayesian update

In a Bayesian framework, we quantify our confidence in a model by a probability measure. Hence we update our confidence in the model predictions every time we have reference data from observations. The rigorous framework to achieve this is probability theory, as explained in Cox's theorem (Jaynes 2003).

To set a probabilistic framework at time $t=t_k$, the state $\boldsymbol {\psi }_k$ and reference observations $\boldsymbol {y}_k$ are assumed to be realisations of their corresponding random variables acting on the sample spaces $\varOmega _{\boldsymbol {\psi }}=\mathbb {R}^{2N_m+N_c}$ and $\varOmega _{\boldsymbol {y}}=\mathbb {R}^{N_{\boldsymbol {y}}}$. Because we transformed the time-delayed problem into an initial value problem, the solution of (2.9) at the present depends on the solution at the previous time step only. In other words, we transformed a non-Markovian system into a Markovian system, which simplifies the design of the Bayesian update. We quantify our confidence in a quantity through a probability $\mathcal {P}$:

\begin{equation} \mathcal{P}(\boldsymbol{\psi}_k \,|\, \boldsymbol{\psi}_{k-1}, \boldsymbol{\alpha}, \boldsymbol{F}), \quad \mathcal{P}(\boldsymbol{y}_k \,|\, \boldsymbol{\psi}_k, \boldsymbol{\alpha}, \boldsymbol{F}), \end{equation}

where $\lvert$ denotes that the quantity on the left is conditioned on the knowledge of the quantities on the right. The leftmost probability answers the question: ‘given a model $\boldsymbol {F}$, a set of parameters $\boldsymbol {\alpha }$, and the state $\boldsymbol {\psi }_{k-1}$, what is the probability that the state takes the value $\boldsymbol {\psi }_k$?’ The rightmost probability answers the question: ‘if we forecast the state $\boldsymbol {\psi }_k$ from the model, what is the probability that we observe $\boldsymbol {y}_k$?’ We assume that the observations are statistically independent and uncorrelated with respect to the forecast. To update our knowledge of the system, the prior knowledge from the reduced-order model and the reference observations are combined through Bayes’ rule

\begin{equation} \mathcal{P}(\boldsymbol{\psi}_k \,|\, \boldsymbol{y}_k, \boldsymbol{\alpha}, \boldsymbol{F}) = \frac{\mathcal{P}(\boldsymbol{y}_k \,|\, \boldsymbol{\psi}_k, \boldsymbol{\alpha}, \boldsymbol{F})\, \mathcal{P}(\boldsymbol{\psi}_k, \boldsymbol{\alpha}, \boldsymbol{F})}{\mathcal{P}(\boldsymbol{y}_k, \boldsymbol{\alpha}, \boldsymbol{F})}. \end{equation}

First, $\mathcal {P}(\boldsymbol {\psi }_k, \boldsymbol {\alpha }, \boldsymbol {F})$ is the prior, which measures the knowledge of our system prior to observing $\boldsymbol {y}_k$. The prior evolves through the Chapman–Kolmogorov equation (Jazwinski 2007), which involves multi-dimensional integrals. To solve the Chapman–Kolmogorov equation numerically, we use an ensemble method by integrating the model equations (§ 2.3.2), which provide a forecast on the state. Second, $\mathcal {P}(\boldsymbol {y}_k | \boldsymbol {\psi }_k, \boldsymbol {\alpha }, \boldsymbol {F})$ is the likelihood (2.10b), which measures the confidence that we have in our model prediction. The likelihood is prescribed (see § 2.3.2). Third, $\mathcal {P}(\boldsymbol {y}_k, \boldsymbol {\alpha }, \boldsymbol {F})$ is the evidence, which is the probability that the observable takes on the value $\boldsymbol {y}_k$. This can be prescribed from the knowledge of the experimental uncertainties. Finally, $\mathcal {P}(\boldsymbol {\psi }_k | \boldsymbol {y}_k, \boldsymbol {\alpha }, \boldsymbol {F})$ is the posterior, which measures the knowledge that we have on the state, $\boldsymbol {\psi }_k$, after we have observed $\boldsymbol {y}_k$. Here, we will select the most probable value of $\boldsymbol {\psi }_k$ in the posterior (i.e. the mode) as the best estimator of the state (maximum a posteriori approach, which is a well-posed approach in inverse problems). The best estimator is called analysis in weather forecasting (Tarantola 2005). Equation (2.11) provides the Bayesian update, which is key to this work and sequential data assimilation.
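As an illustration of the Bayesian update and of the maximum a posteriori estimate, the following minimal sketch applies Bayes' rule to a scalar state on a grid. It is not the method used in this work (which relies on ensembles); the Gaussian prior and likelihood, the numerical values, and the names `gaussian`, `bayes_update` and `psi_map` are assumptions chosen for the example. Here the evidence is obtained by numerical normalisation.

```python
import numpy as np

def gaussian(x, mean, std):
    """Gaussian probability density evaluated at x."""
    return np.exp(-0.5 * ((x - mean) / std) ** 2) / (std * np.sqrt(2.0 * np.pi))

def bayes_update(grid, prior, likelihood):
    """Posterior on a uniform grid: likelihood times prior, divided by the evidence."""
    dx = grid[1] - grid[0]
    unnormalised = likelihood * prior
    evidence = unnormalised.sum() * dx  # numerical integral over the state
    return unnormalised / evidence

# Hypothetical scalar example: a forecast (prior) and one noisy observation y = 0.
grid = np.linspace(-5.0, 5.0, 2001)
prior = gaussian(grid, mean=1.0, std=1.0)        # forecast knowledge of the state
likelihood = gaussian(0.0, mean=grid, std=0.5)   # P(y = 0 | state), as a function of the state
posterior = bayes_update(grid, prior, likelihood)

# Maximum a posteriori estimate: the mode of the posterior.
psi_map = grid[np.argmax(posterior)]
```

For two Gaussians, the mode lies between the forecast mean and the observation, closer to whichever has the smaller standard deviation.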

2.3.2. Stochastic ensemble filtering for sequential assimilation

For brevity, we will omit the subscript $k$, unless it becomes necessary for clarity. We focus on a qualitative reduced-order model in which (i) the bias on the solution is negligible, (ii) the uncertainty on the state is represented by a covariance, (iii) the probability density function of the state is assumed to be symmetrical around the mean, and (iv) the dynamics at regime do not present frequent extreme events, i.e. the tails of the probability density function are not heavy. In § 4, we relax assumption (i) by introducing a methodology to estimate the bias of the solution, i.e. the model error.

The probability distribution to employ is the distribution that maximises the information entropy (Jaynes 1957), which, in this scenario, is the Gaussian distribution. Therefore, the system's forecast and the observations are assumed to follow Gaussian distributions, i.e. $\boldsymbol {{\psi }}^{{f}} \sim \mathcal {N}(\boldsymbol {{\psi }},\boldsymbol{\mathsf{C}}^{f}_{\psi \psi })$ and $\boldsymbol {{y}} \sim \mathcal {N}({M}\boldsymbol {{\psi }},\boldsymbol{\mathsf{C}}_{\epsilon \epsilon })$, respectively, where $\mathcal {N}$ denotes the normal distribution, with the first argument being the mean, and the second argument being the covariance matrix. The forecast and observation covariance matrices are $\boldsymbol{\mathsf{C}}^{f}_{\psi \psi }$ and $\boldsymbol{\mathsf{C}}_{\epsilon \epsilon }$, respectively. If the dynamics were linear, then the Bayesian update (2.11) would be solved exactly by the Kalman filter equations (Kalman 1960)
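In the notation of this section, the textbook Kalman analysis step can be written as below. This is a reconstruction from the standard form rather than a quotation: the gain symbol $\boldsymbol{\mathsf{K}}$ is introduced here for compactness, and the first and second lines are assumed to correspond to (2.12a) and (2.12b), respectively.

\begin{equation} \left.\begin{gathered} \boldsymbol{\psi}^{a} = \boldsymbol{\psi}^{f} + \boldsymbol{\mathsf{K}}\,(\boldsymbol{y} - \boldsymbol{\mathsf{M}}\boldsymbol{\psi}^{f}), \\ \boldsymbol{\mathsf{C}}^{a}_{\psi\psi} = (\mathbb{I} - \boldsymbol{\mathsf{K}}\boldsymbol{\mathsf{M}})\,\boldsymbol{\mathsf{C}}^{f}_{\psi\psi}, \quad \boldsymbol{\mathsf{K}} = \boldsymbol{\mathsf{C}}^{f}_{\psi\psi}\boldsymbol{\mathsf{M}}^{\rm T}\left(\boldsymbol{\mathsf{C}}_{\epsilon\epsilon} + \boldsymbol{\mathsf{M}}\boldsymbol{\mathsf{C}}^{f}_{\psi\psi}\boldsymbol{\mathsf{M}}^{\rm T}\right)^{-1}, \end{gathered}\right\} \end{equation}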

where the superscripts $a$ and $f$ denote analysis and forecast, respectively. Equation (2.12a) corrects the model prediction by weighting the statistical distance between the observations (data) and the forecast, according to the prediction and observation covariances (Evensen 2003). The observation error covariance has to be prescribed based on the knowledge of the experimental methodology used.

In an ensemble method, the distribution is represented by the sample statistics

\begin{equation} \bar{\boldsymbol{\psi}} \approx \frac{1}{m}\sum_{i=1}^m \boldsymbol{\psi}^i, \quad \boldsymbol{\mathsf{C}}_{\boldsymbol{\psi}\boldsymbol{\psi}} \approx \frac{1}{m-1}\,\boldsymbol{\varPsi}\boldsymbol{\varPsi}^{\rm T}, \end{equation}

where the $i$th column of the matrix $\boldsymbol {\varPsi }$ is the deviation from the mean of the $i$th realisation, $\boldsymbol {\psi }^i - \bar {\boldsymbol {\psi }}$, and $m$ is the number of ensemble members. Because (2.13a,b) is a Monte Carlo Markov chain integration, the sampling error scales as ${O}(m^{-1/2})$. The key idea of ensemble filters is to group forecast states from a numerical model (the ensemble) to obtain, on filtering, the analysis state. Ensemble methods describe the state's uncertainty by the spread in the ensemble at a given time to avoid the explicit formulation of the covariance matrices (Livings, Dance & Nichols 2008). The algorithmic procedure is as follows. First, the initial condition is integrated forward in time to provide the forecast state $\boldsymbol {{\psi }}^{{f}}$. Second, experimental observations $\boldsymbol {{y}}$ are assimilated statistically into the forecast to obtain the analysis state $\boldsymbol {{\psi }}^{{a}}$, which, in turn, becomes the initial condition for the next time step. The forecast accumulates errors over the integration period, which are reduced in the assimilation stage through observations with their experimental uncertainties. If the model is qualitatively correct and unbiased, after a sufficient number of assimilations, the ensemble concentrates around the true value. This sequential filtering process on one ensemble member is shown in figure 2. The process is repeated in parallel for the other ensemble members.
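The sample statistics of the ensemble can be sketched in a few lines. The snippet below is illustrative only: the ensemble is stored column-wise in a matrix of size $N \times m$, and the function name `ensemble_statistics`, the state size and the ensemble size are assumptions made for the example.

```python
import numpy as np

def ensemble_statistics(A):
    """Sample mean and covariance of an ensemble stored column-wise in A (N x m)."""
    m = A.shape[1]
    mean = A.mean(axis=1)
    Psi = A - mean[:, None]        # deviations from the mean, one column per member
    C = Psi @ Psi.T / (m - 1)      # sample covariance with the 1/(m-1) normalisation
    return mean, C

# Hypothetical ensemble: N = 3 state variables, m = 50 members.
rng = np.random.default_rng(seed=0)
A = rng.normal(size=(3, 50))
mean, C = ensemble_statistics(A)
```

The covariance is built from the deviation matrix only, so it never needs to be propagated in time; it is recomputed from the ensemble whenever it is required.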

Figure 2. Conceptual schematic of a sequential filtering process: truth (green); observations and their uncertainties (red); forecast state and uncertainties (orange); and analysis state and uncertainties (blue). The circles represent pictorially the spread of the probability density functions: the larger the circles, the larger the uncertainty.

2.3.3. Ensemble square-root Kalman filter

In the ensemble Kalman filter (2.12), each ensemble member is updated with the assimilation of independently perturbed observation data. However, this method provides a sub-optimal solution that, in some cases, does not preserve the ensemble mean and is affected by sampling errors of the observations (Evensen 2003). Moreover, the ensemble Kalman filter may require a fairly large ensemble to compensate for the sampling errors of the observations (Sakov & Oke 2008). The ensemble square-root Kalman filter (EnSRKF), which is an ensemble-transform Kalman filter, overcomes these issues (Livings et al. 2008). The key idea of the EnSRKF is to update the ensemble mean and deviations instead of each ensemble member. The EnSRKF for $m$ ensemble members and a state vector of size $N$ reads
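With the symbols defined in the text that follows, one consistent way to write these equations is given below. This is a reconstruction that follows the mean-preserving square-root form of Livings et al. (2008), obtained by inserting the sample covariance into the Kalman update; it should be checked against the derivation in Appendix A.

\begin{equation} \left.\begin{gathered} \boldsymbol{\mathsf{A}}^{a} = \bar{\boldsymbol{\mathsf{A}}}^{a} + \boldsymbol{\varPsi}^{a}, \\ \bar{\boldsymbol{\mathsf{A}}}^{a} = \bar{\boldsymbol{\mathsf{A}}}^{f} + \boldsymbol{\varPsi}^{f}(\boldsymbol{\mathsf{M}}\boldsymbol{\varPsi}^{f})^{\rm T}\left[(m-1)\,\boldsymbol{\mathsf{C}}_{\epsilon\epsilon} + \boldsymbol{\mathsf{M}}\boldsymbol{\varPsi}^{f}(\boldsymbol{\mathsf{M}}\boldsymbol{\varPsi}^{f})^{\rm T}\right]^{-1}(\boldsymbol{\mathsf{Y}} - \boldsymbol{\mathsf{M}}\bar{\boldsymbol{\mathsf{A}}}^{f}), \\ \boldsymbol{\varPsi}^{a} = \boldsymbol{\varPsi}^{f}\,\boldsymbol{\mathsf{V}}\,(\mathbb{I} - \boldsymbol{\varSigma})^{1/2}\,\boldsymbol{\mathsf{V}}^{\rm T}, \\ \boldsymbol{\mathsf{V}}\boldsymbol{\varSigma}\boldsymbol{\mathsf{V}}^{\rm T} = (\boldsymbol{\mathsf{M}}\boldsymbol{\varPsi}^{f})^{\rm T}\left[(m-1)\,\boldsymbol{\mathsf{C}}_{\epsilon\epsilon} + \boldsymbol{\mathsf{M}}\boldsymbol{\varPsi}^{f}(\boldsymbol{\mathsf{M}}\boldsymbol{\varPsi}^{f})^{\rm T}\right]^{-1}\boldsymbol{\mathsf{M}}\boldsymbol{\varPsi}^{f}, \end{gathered}\right\} \end{equation}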

where $\boldsymbol{\mathsf{A}} = (\boldsymbol {{\psi }}_1, \boldsymbol {{\psi }}_2, \dots, \boldsymbol {{\psi }}_m)\in \mathbb {R}^{N \times m }$ is the matrix that contains the ensemble members as columns, $\bar {\boldsymbol{\mathsf{A}}}= (\bar {\boldsymbol {\psi }}, \dots, \bar {\boldsymbol {\psi }})\in \mathbb {R}^{N \times m}$ contains the mean analysis states in each column, $\boldsymbol{\mathsf{Y}}=(\boldsymbol {{y}},\dots,\boldsymbol {{y}})\in \mathbb {R}^{q \times m}$ is the matrix containing the $q$ observations repeated $m$ times, the identity matrix is represented by $\mathbb {I}$, and $\boldsymbol{\mathsf{V}}$ and $\boldsymbol{\varSigma}$ are the orthogonal matrix of eigenvectors and the diagonal matrix of eigenvalues, respectively, from singular value decomposition. The largest matrices required in the EnSRKF algorithm have dimension $N \times m$ and $m \times m$, therefore the storage requirements are significantly smaller than those of non-ensemble based filters. In addition, this filter is non-intrusive and suitable for parallel computation. A derivation of the EnSRKF can be found in Appendix A.
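A minimal sketch of one square-root analysis step is given below, assuming a linear measurement operator and a symmetric square root of the transform matrix (the mean-preserving choice). The function name `ensrkf_analysis`, the argument names and the test dimensions are assumptions for illustration, not the implementation used in this work.

```python
import numpy as np

def ensrkf_analysis(A_f, y, M, C_ee):
    """One ensemble square-root Kalman filter analysis step (illustrative sketch).

    A_f  : (N, m) forecast ensemble, one member per column
    y    : (q,)   observation vector
    M    : (q, N) linear measurement operator
    C_ee : (q, q) observation error covariance
    """
    m = A_f.shape[1]
    mean_f = A_f.mean(axis=1, keepdims=True)
    Psi_f = A_f - mean_f                       # forecast deviations
    S = M @ Psi_f                              # deviations in observation space
    W = (m - 1) * C_ee + S @ S.T               # (q, q), symmetric positive definite

    # Mean update: Kalman gain written with the sample covariance.
    innovation = y.reshape(-1, 1) - M @ mean_f
    mean_a = mean_f + Psi_f @ S.T @ np.linalg.solve(W, innovation)

    # Deviation update: Psi_a = Psi_f V (I - Sigma)^{1/2} V^T.
    G = S.T @ np.linalg.solve(W, S)            # (m, m), eigenvalues in [0, 1)
    G = 0.5 * (G + G.T)                        # remove round-off asymmetry
    sigma, V = np.linalg.eigh(G)
    T = (V * np.sqrt(np.clip(1.0 - sigma, 0.0, None))) @ V.T
    Psi_a = Psi_f @ T

    return mean_a + Psi_a                      # analysis ensemble

# Hypothetical test problem: N = 4 states, m = 20 members, q = 2 observations.
rng = np.random.default_rng(seed=0)
A_f = rng.normal(size=(4, 20))
M = np.zeros((2, 4))
M[0, 0] = 1.0
M[1, 2] = 1.0
C_ee = 0.1 * np.eye(2)
y = np.array([0.5, -0.3])
A_a = ensrkf_analysis(A_f, y, M, C_ee)
```

Only matrices of size $N \times m$, $q \times q$ and $m \times m$ appear; the $N \times N$ covariance is never formed. For a linear measurement operator, the mean and sample covariance of `A_a` coincide with the Kalman filter update applied to the sample statistics of `A_f`.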

2.4. Discussion

An ensemble method enables us to: (i) work with high-dimensional systems because we do not need to propagate the covariance matrix, which has ${O}\,(N^2)$ components; (ii) work with nonlinear systems, such as the thermoacoustic system under investigation; (iii) work with time-dependent problems; (iv) not store the data because we sequentially assimilate (real-time, i.e. on-the-fly, assimilation); and (v) avoid implementing tangent or adjoint solvers, which are required, for example, in variational data assimilation methods (Traverso & Magri 2019). On the one hand, if the system were linear, then a Gaussian prior would remain Gaussian under time integration. This makes the ensemble filter the exact Bayesian update in the limit of an infinite number of samples. On the other hand, if the system were nonlinear (e.g. in the present study), then a Gaussian prior would not necessarily remain Gaussian under time integration. This makes the ensemble filter an approximate Bayesian update. The update of the first and second statistical moments, however, remains exact. In other words, we cannot capture the skewness, kurtosis, and other higher moments. (Particle filter methods overcome this limitation, but they may be expensive computationally; Pham 2001.)

3. State and parameter estimation

This work considers both state estimation, in which the state is the uncertain quantity (§ 3.1), and combined state and parameter estimation, in which both the state and model parameters are uncertain (§ 3.2).

3.1. State estimation

State estimation is the process of converting a series of noisy measurements into an estimate of the state of the dynamical system, $\boldsymbol {\psi }$. This paper considers two different scenarios in assimilating acoustic data in thermoacoustics: (i) assimilation of the acoustic modes; and (ii) assimilation of pressure measurements from $N_{mic}$ microphones, which are located equidistantly from the flame location up to the end of the Rijke tube (figure 1). The assimilation of acoustic modes assumes that observation data are available for the pressure and velocity acoustic modes, $\{\boldsymbol {{\eta }},\dot{\boldsymbol {{\eta }}}\}$. Hence the state equations are

\begin{equation} \left.\begin{gathered} \frac{{\rm d}\boldsymbol{{\psi}}}{{\rm d}t} =\boldsymbol{F}(\boldsymbol{{\alpha}}; \boldsymbol{{\psi}}) , \quad \boldsymbol{{\psi}}(t=0) = \boldsymbol{{\psi}}_0 = (\boldsymbol{{\eta}}_0, \dot{\boldsymbol{{\eta}}}_0, \boldsymbol{{v}}_0)^{\rm{T}},\\ \boldsymbol{y}= \boldsymbol{\mathsf{M}}(\boldsymbol{{x}})\,\boldsymbol{\psi}= \left(\boldsymbol{{\eta}}, \dot{\boldsymbol{{\eta}}}\right)^{\rm{T}}. \end{gathered}\right\} \end{equation}

Alternatively, in scenario (ii), from (2.5b), the reference pressure measurements are

\begin{gather} \boldsymbol{{p}}'_{mic} = \begin{pmatrix} p'_1(t) \\ p'_2(t) \\ \vdots \\ p'_{N_{{mic}}} (t) \end{pmatrix} = - \begin{pmatrix} \sin{\left({\rm \pi} x_1\right)} & \sin{\left(2{\rm \pi} x_1\right)} & \dots & \sin{\left(N_m{\rm \pi} x_1\right)} \\ \sin{\left({\rm \pi} x_2\right)} & \sin{\left(2{\rm \pi} x_2\right)} & \dots & \sin{\left(N_m{\rm \pi} x_2\right)} \\ \vdots & \vdots & & \vdots \\ \sin{({\rm \pi} x_{N_{mic}})} & \sin{(2{\rm \pi} x_{N_{mic}})} & \dots & \sin{(N_m{\rm \pi} x_{N_{mic}})} \end{pmatrix} \begin{pmatrix} \dfrac{\dot{\eta}_1(t)}{\rm \pi} \\[6pt] \dfrac{\dot{\eta}_2(t)}{2{\rm \pi}} \\ \vdots \\ \dfrac{\dot{\eta}_{N_m}(t)}{N_m{\rm \pi}} \end{pmatrix}. \end{gather}

The statistical errors of the microphones are assumed to be independent and Gaussian. In the twin experiment, the pressure observations are created from the true state, with a standard deviation $\sigma _{mic}$ that mimics the measurement error. Pressure data cannot be assimilated directly with the EnSRKF because the state vector contains the acoustic modes, i.e. it does not contain the acoustic pressure. To circumvent this, we augment the state vector with the acoustic pressure at the microphones’ locations according to (3.2). Therefore, the new state vector includes the Galerkin acoustic modes, the dummy velocity variables and the pressure at the different microphone locations, i.e. $\boldsymbol{\psi}'\equiv(\boldsymbol{\eta}, \boldsymbol{\dot{\eta}}, \boldsymbol{v}, \boldsymbol{p'_{mic}})^\mathrm{T}$, with dimension $N' = 2N_m + N_c + N_{mic}$. The augmented state equations are

\begin{equation} \left.\begin{gathered} \frac{{\rm d}\boldsymbol{{\psi}}'}{{\rm d}t}= \boldsymbol{F}(\boldsymbol{{\alpha}}; \boldsymbol{{\psi}}), \quad \boldsymbol{{\psi}}'(t=0) = \boldsymbol{{\psi}}'_0 = (\boldsymbol{{\eta}}_0, \dot{\boldsymbol{{\eta}}}_0, \boldsymbol{{v}}_0, \boldsymbol{{p'_{{mic}}}}_0)^{\rm{T}},\\ \boldsymbol{y}= \boldsymbol{\mathsf{M}}(\boldsymbol{{x}})\,\boldsymbol{\psi'}=\boldsymbol{{p'_{{mic}}}}(x). \end{gathered}\right\} \end{equation}

With this, the modes will be updated indirectly during the assimilation step using the microphone data and their experimental error.
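The construction of the microphone pressures from the velocity modes, and the augmentation of the state with them, can be sketched as follows. The flame location `x_f = 0.2`, the number of microphones, the inclusion of both end points in the microphone grid, and all function names are assumptions for illustration; the tube length is taken as unity, consistent with the $\sin(k{\rm \pi} x)$ mode shapes.

```python
import numpy as np

def pressure_matrix(x_mic, N_m):
    """Matrix mapping eta_dot to p'_mic: entry (j, k) is -sin(k*pi*x_j) / (k*pi)."""
    k = np.arange(1, N_m + 1)
    return -np.sin(np.pi * np.outer(x_mic, k)) / (k * np.pi)

def augment_state(psi, x_mic, N_m):
    """Append the microphone pressures to the state psi = (eta, eta_dot, v)."""
    eta_dot = psi[N_m:2 * N_m]
    p_mic = pressure_matrix(x_mic, N_m) @ eta_dot
    return np.concatenate([psi, p_mic])

def observation_operator(N, N_mic):
    """M = [0 | I]: selects the last N_mic entries of the augmented state."""
    return np.hstack([np.zeros((N_mic, N)), np.eye(N_mic)])

N_m, N_c = 10, 10            # numbers of acoustic modes and advection points
N_mic = 6                    # hypothetical number of microphones
x_f = 0.2                    # hypothetical flame location
x_mic = np.linspace(x_f, 1.0, N_mic)   # equidistant from the flame to the tube end

psi = np.random.default_rng(seed=0).normal(size=2 * N_m + N_c)
psi_aug = augment_state(psi, x_mic, N_m)
M = observation_operator(psi.size, N_mic)
y = M @ psi_aug              # model prediction of the microphone pressures
```

Because the measurement operator only selects entries of the augmented state, it stays linear; the modes are corrected through their sample correlation with the pressure entries.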

3.2. Combined state and parameter estimation

Combined state and parameter estimation is the process of converting a series of noisy measurements into an estimate of the state of the dynamical system $\boldsymbol {\psi }$ and the parameters $\boldsymbol {\alpha }$. The parameters are regarded as variables of the dynamical system so that they are updated in every analysis step. This is achieved by combining the governing equations of the thermoacoustic model with the equations that describe the evolution of parameters, which are constant in time, but can change when observations are assimilated. The equations for the augmented state of combined state and parameter estimation are

\begin{equation} \left.\begin{gathered} \frac{{\rm d}}{{\rm d}t} \begin{bmatrix} \boldsymbol{{\psi}}\\ \boldsymbol{{\alpha}} \end{bmatrix} = \begin{bmatrix} \boldsymbol{F}(\boldsymbol{{\alpha}}; \boldsymbol{{\psi}})\\ 0 \end{bmatrix} , \quad \begin{matrix} \boldsymbol{{\psi}}(t=0) = \boldsymbol{{\psi}}_0,\\ \boldsymbol{{\alpha}}(t=0) = \boldsymbol{{\alpha}}_0, \end{matrix} \\ \boldsymbol{y}= \boldsymbol{\mathsf{M}}(\boldsymbol{{x}})\,\boldsymbol{\psi}. \end{gathered}\right\} \end{equation}

With a slight abuse of notation, the state vector $\boldsymbol {{\psi }}$ in (3.4) is equal to $\boldsymbol {{\psi }}\equiv (\boldsymbol {\eta },\dot{\boldsymbol {\eta }},\boldsymbol {{v}})^\textrm {{T}}$ in (3.1) for the assimilation of acoustic modes, and equal to $\boldsymbol {{\psi }}'\equiv (\boldsymbol {\eta },\dot{\boldsymbol {\eta }},\boldsymbol {{v}},\boldsymbol {{p}}'_{mic})^\textrm {{T}}$ in (3.3) for the assimilation of pressure measurements.

The data assimilation algorithm is applied to the augmented system for both the forecast state and the parameters to be updated at every analysis step. The parameters need to be initialised for each ensemble member from a uniform distribution with width 25 % of the mean parameter value. In other words, we assume that the parameters are uncertain by ${\pm }25\,\%$.
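The augmentation with parameters can be sketched as below. The sketch reads the ${\pm }25\,\%$ statement as a uniform distribution on $[0.75, 1.25]$ times the mean, which is an assumption, as are the function names, the two positive parameter values and the toy right-hand side `toy_F`.

```python
import numpy as np

def augmented_ensemble(psi_ensemble, alpha_mean, rel_half_width=0.25, seed=0):
    """Stack uniformly sampled parameters below each state ensemble member.

    Assumes positive mean parameter values, so that the lower bound is below the upper bound.
    """
    rng = np.random.default_rng(seed)
    m = psi_ensemble.shape[1]
    alpha_mean = np.asarray(alpha_mean, dtype=float)
    low = alpha_mean * (1.0 - rel_half_width)
    high = alpha_mean * (1.0 + rel_half_width)
    alpha = rng.uniform(low[:, None], high[:, None], size=(alpha_mean.size, m))
    return np.vstack([psi_ensemble, alpha])

def augmented_rhs(t, z, F, N):
    """Augmented dynamics: d(psi)/dt = F(alpha; psi) and d(alpha)/dt = 0."""
    psi, alpha = z[:N], z[N:]
    return np.concatenate([F(alpha, psi), np.zeros_like(alpha)])

def toy_F(alpha, psi):
    """Hypothetical linear damping, standing in for the thermoacoustic model."""
    return -alpha[0] * psi

N, m = 30, 10
alpha_mean = [0.2, 0.5]                      # hypothetical mean parameter values
ensemble = augmented_ensemble(np.zeros((N, m)), alpha_mean)
rhs = augmented_rhs(0.0, ensemble[:, 0], toy_F, N)
```

The parameters do not evolve during the forecast; they change only at the analysis step, through their sample correlation with the observed quantities.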

3.3. Performance metrics

The performance of the state estimation and of the combined state and parameter estimation is evaluated with three metrics: (i) the trace of the forecast covariance, $\boldsymbol{\mathsf{C}}_{\psi \psi }^{{f}}$, which measures globally the spread of the ensemble; (ii) the relative difference between the true pressure oscillations at the flame location and the filtered solution, which measures the instantaneous error; and (iii) for the combined state and parameter assimilation, the convergence of the filtered parameters normalised to their true values, as well as the root-mean-square error with respect to the true solution.
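A minimal sketch of how these metrics might be computed from an ensemble stored as an array is given below. The function names are hypothetical, and the normalisation of the relative error by the peak true amplitude is an assumption, because the text does not specify the normalisation.

```python
import numpy as np

def covariance_trace(ensemble):
    """Trace of the sample covariance of an ensemble of shape (m, N)."""
    anomalies = ensemble - ensemble.mean(axis=0)
    # The sum of the per-component sample variances equals tr(C)
    return float(np.sum(anomalies**2) / (ensemble.shape[0] - 1))

def relative_error(p_true, p_filtered):
    """Instantaneous error, normalised by the peak true amplitude (assumed)."""
    return np.abs(p_true - p_filtered) / np.max(np.abs(p_true))

def rms_error(x_true, x_filtered):
    """Root-mean-square error with respect to the true solution."""
    return float(np.sqrt(np.mean((x_true - x_filtered) ** 2)))

def normalised_parameters(alpha_filtered, alpha_true):
    """Filtered parameters divided by their true values (converges to 1)."""
    return np.asarray(alpha_filtered) / np.asarray(alpha_true)
```

Computing the trace from the anomalies avoids forming the full covariance matrix, which matters when the state dimension is much larger than the ensemble size.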

4. Data assimilation with bias estimation

In this section, we analyse the case of state, parameter and model bias estimation. Sources of model bias in the model of § 2.1 include (i) idealised boundary conditions, (ii) a simple heat-release law with no simulation of the flame, and (iii) zero mean flow effects. In this paper, a higher-fidelity model produces data that account for these three sources of model bias. We infer the model bias to correct the biased forecast state prior to the analysis step. By performing state and parameter estimation on the unbiased forecast, we increase the quantitative accuracy of the model prediction. First, we define the time-dependent model bias $\boldsymbol{U}(t)$ as the difference between the true pressure state (from the higher-fidelity model) at the microphone locations, $\boldsymbol{p}'^{~t}_{mic}$, and the expected biased pressure, $\langle \boldsymbol{p}'_{mic}\rangle$, i.e. the mean of the ensemble of pressures,

\begin{equation} \boldsymbol{U}(t) = \boldsymbol{p}'^{~t}_{mic}(t) - \langle \boldsymbol{p}'_{mic}(t)\rangle. \end{equation}

We propose an echo state network to predict the evolution of the model bias.

4.1. Echo state networks

An echo state network (ESN) is a type of recurrent neural network based on reservoir computing (Lukoševičius Reference Lukoševičius2012). ESNs learn temporal correlations in data by nonlinearly expanding the data into a high-dimensional reservoir, which acts as the memory of the system. They provide a framework that is more versatile than auto-regressive models (Aggarwal Reference Aggarwal2018).

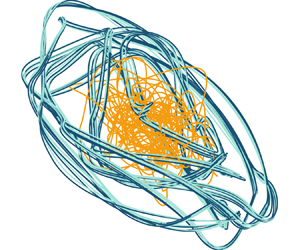

Figure 3 shows a pictorial representation of an ESN. The reservoir is defined by a high-dimensional vector ${\boldsymbol{r}}(t_i)\in \mathbb{R}^{N_{r}}$ and a state matrix $\boldsymbol{\mathsf{W}}\in \mathbb{R}^{N_{r}\times N_{r}}$, where $N_{r}$ is the number of neurons in the reservoir. We use $N_{r} = 100$ neurons, which are sufficient to represent the dynamics of the thermoacoustic system (Huhn & Magri Reference Huhn and Magri2022). The inputs to and outputs from the reservoir are vectors in $\mathbb{R}^{N_{mic}}$ because we define the bias as the pressure error at each microphone (4.1). The input and output matrices are $\boldsymbol{\mathsf{W}}_{in}\in \mathbb{R}^{N_{r}\times (N_{mic}+1)}$ and $\boldsymbol{\mathsf{W}}_{out}\in \mathbb{R}^{N_{mic}\times (N_{r}+1)}$, respectively. At every time $t_i$, the input bias $\boldsymbol{U}(t_i)$ and the state of the reservoir at the previous time step $\boldsymbol{r}(t_{i-1})$ are combined to predict the reservoir state at the current time as well as the bias at the next time step $\boldsymbol{U}(t_{i+1})$ such that

\begin{equation} \boldsymbol{r}(t_i) = \tanh\left(\boldsymbol{\mathsf{W}}_{in}[\tilde{\boldsymbol{U}}(t_i);0.1] + \boldsymbol{\mathsf{W}}\boldsymbol{r}(t_{i-1})\right), \quad \boldsymbol{U}(t_{i+1}) = \boldsymbol{\mathsf{W}}_{out}[\boldsymbol{r}(t_i);1], \end{equation}

where $\tilde{\boldsymbol{U}}$ is the input bias normalised by the range, component-wise, and the constants 0.1 and 1 are used to break the symmetry of the ESN (Huhn & Magri Reference Huhn and Magri2020a). The operator $[\,;\,]$ indicates vertical concatenation. The matrices $\boldsymbol{\mathsf{W}}_{in}$ and $\boldsymbol{\mathsf{W}}$ are predefined as fixed, sparse and randomly generated. Specifically, $\boldsymbol{\mathsf{W}}_{in}$ has only one non-zero element per row, which is sampled from a uniform distribution in $[-\sigma_{in},\sigma_{in}]$, where $\sigma_{in}$ is the input scaling. Matrix $\boldsymbol{\mathsf{W}}$ is an Erdős–Rényi matrix with average connectivity $d=5$, in which each neuron (each row of $\boldsymbol{\mathsf{W}}$) has on average $d$ connections (non-zero elements), which are obtained by sampling from a uniform distribution in $[-1,1]$; the entire matrix is then rescaled by a multiplication factor to set the spectral radius $\rho$. The weights of $\boldsymbol{\mathsf{W}}_{out}$ are determined through training, which consists of solving a linear system for a training set of length $N_{tr}$:

\begin{equation} \left(\boldsymbol{\mathsf{R}}\boldsymbol{\mathsf{R}}^{\rm T} + \gamma_t\mathbb{I}\right)\boldsymbol{\mathsf{W}}_{out}^{\rm T} = \boldsymbol{\mathsf{R}}\,\boldsymbol{\mathsf{U}}_{train}^{\rm T}, \end{equation}

where $\boldsymbol{\mathsf{R}}\in \mathbb{R}^{(N_{r}+1)\times N_{tr}}$ is the horizontal concatenation of the augmented reservoir state for each time in the training set, $[\boldsymbol{r}(t_i);1]$ with $i=1,\ldots,N_{tr}$, $\boldsymbol{\mathsf{U}}_{train}\in \mathbb{R}^{N_{mic}\times N_{tr}}$ is the time concatenation of the output data, $\mathbb{I}$ is the identity matrix, and $\gamma_t$ is the Tikhonov regularisation parameter (Lukoševičius Reference Lukoševičius2012). We employ recycle validation (Racca & Magri Reference Racca and Magri2021) to select the input scaling $\sigma_{in}=0.0126$, the spectral radius $\rho=0.9667$, and the Tikhonov parameter $\gamma_t=1\times 10^{-16}$.
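The construction and training described in this subsection can be sketched in a few lines of NumPy. This is an illustration under stated assumptions rather than the implementation used in the paper: the reservoir update is assumed to use a $\tanh$ activation, the function names are hypothetical, the training signal is synthetic, and the demonstration uses a larger Tikhonov parameter than the quoted $10^{-16}$ to keep the toy linear system well conditioned.

```python
import numpy as np

def build_reservoir(n_mic, n_r=100, sigma_in=0.0126, rho=0.9667, d=5, seed=0):
    """Fixed, sparse, random W_in (N_r x (N_mic+1)) and W (N_r x N_r)."""
    rng = np.random.default_rng(seed)
    # W_in: one non-zero element per row, uniform in [-sigma_in, sigma_in]
    W_in = np.zeros((n_r, n_mic + 1))
    cols = rng.integers(0, n_mic + 1, size=n_r)
    W_in[np.arange(n_r), cols] = rng.uniform(-sigma_in, sigma_in, size=n_r)
    # W: Erdos-Renyi, each entry is non-zero with probability d / N_r
    mask = rng.random((n_r, n_r)) < d / n_r
    W = np.where(mask, rng.uniform(-1.0, 1.0, size=(n_r, n_r)), 0.0)
    # Rescale the whole matrix to set the spectral radius
    W *= rho / np.max(np.abs(np.linalg.eigvals(W)))
    return W_in, W

def train_output_weights(W_in, W, U_train, gamma_t=1e-16):
    """Ridge regression for W_out from a bias time series of shape (N_mic, N_tr + 1)."""
    U_range = U_train.max(axis=1) - U_train.min(axis=1)
    inputs, targets = U_train[:, :-1], U_train[:, 1:]  # one-step-ahead pairs
    n_r, n_tr = W.shape[0], inputs.shape[1]
    R = np.empty((n_r + 1, n_tr))
    r = np.zeros(n_r)
    for i in range(n_tr):
        # Assumed tanh update with the normalised input augmented by 0.1
        r = np.tanh(W_in @ np.append(inputs[:, i] / U_range, 0.1) + W @ r)
        R[:, i] = np.append(r, 1.0)  # augmented reservoir state [r; 1]
    # Solve (R R^T + gamma_t I) W_out^T = R U_train^T
    lhs = R @ R.T + gamma_t * np.eye(n_r + 1)
    W_out = np.linalg.solve(lhs, R @ targets.T).T
    return W_out, U_range

# Synthetic two-microphone signal standing in for the bias data
t = np.linspace(0.0, 20.0, 401)
U_demo = np.vstack([np.sin(t), 0.5 * np.cos(2.0 * t)])
W_in, W = build_reservoir(n_mic=2)
W_out, U_range = train_output_weights(W_in, W, U_demo, gamma_t=1e-6)
```

Only $\boldsymbol{\mathsf{W}}_{out}$ is trained, so the cost of training is that of one linear solve of size $N_r+1$, which is what makes the ESN inexpensive compared with recurrent networks trained by back-propagation.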

Figure 3. Schematic representation of an echo state network.

4.2. Thermoacoustic echo state network

On the one hand, in open loop (figure 4a), the true bias data (4.1) are fed into every forecast step to compute (4.2a,b). The output bias from the ESN is disregarded in open loop, which is used to initialise the network (the washout), such that $\boldsymbol{U}_{w}\in \mathbb{R}^{N_{mic}\times N_{w}}$. State and parameters are not updated during the washout. On the other hand, in closed loop (figure 4b), the true bias data (4.1) are fed in the first step, then the output bias from a forecast step (4.2b) is used as the initial condition of the next step. The ESN forecast frequency is set to be five times lower than that of the thermoacoustic model, i.e. the ESN is advanced once every five model time steps, to reduce the additional computational cost associated with the bias estimation.
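The difference between the two forecast modes can be illustrated with the sketch below. It assumes a $\tanh$ reservoir update and takes the matrices as given; the function names are hypothetical, and the weights in the demonstration are random placeholders rather than trained values.

```python
import numpy as np

def reservoir_update(W_in, W, r_prev, U, U_range):
    """Reservoir state r(t_i) from the normalised input bias (tanh assumed)."""
    return np.tanh(W_in @ np.append(U / U_range, 0.1) + W @ r_prev)

def open_loop(W_in, W, U_washout, U_range):
    """Washout: feed the true bias, shape (N_mic, N_w); the output is disregarded."""
    r = np.zeros(W.shape[0])
    for i in range(U_washout.shape[1]):
        r = reservoir_update(W_in, W, r, U_washout[:, i], U_range)
    return r

def closed_loop(W_in, W, W_out, r, U_first, U_range, n_steps):
    """Autonomous forecast: the true bias enters once, then outputs are fed back."""
    U = U_first
    history = np.empty((U_first.size, n_steps))
    for i in range(n_steps):
        r = reservoir_update(W_in, W, r, U, U_range)
        U = W_out @ np.append(r, 1.0)  # predicted bias, reused as the next input
        history[:, i] = U
    return r, history

# Placeholder weights and data, for shape checking only
rng = np.random.default_rng(1)
W_in = rng.uniform(-0.1, 0.1, size=(4, 3))
W = rng.uniform(-0.1, 0.1, size=(4, 4))
W_out = rng.uniform(-0.1, 0.1, size=(2, 5))
U_true = rng.normal(size=(2, 11))
r0 = open_loop(W_in, W, U_true[:, :10], np.ones(2))
r1, U_forecast = closed_loop(W_in, W, W_out, r0, U_true[:, 10], np.ones(2), n_steps=5)
```

In a coupled simulation, `closed_loop` would be called with one ESN step for every five steps of the thermoacoustic model, following the forecast frequency stated above.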

Figure 4. Schematic representation of the ESN forecast methods: (a) open-loop forecast, and (b) closed-loop estimation of the bias.

In detail, algorithm 1 summarises the procedure that we propose for bias-aware data assimilation with an ESN: (1) the ensemble of acoustic modes is initialised and forecast for the washout time; (2) we run the ESN in open loop to initialise the reservoir; and (3) we perform data assimilation. When measurements become available, we compute the ensemble of pressures by adding the estimated bias from the ESN, $\boldsymbol{U}$, to the expectation of the forecast pressure at the microphones, such that the unbiased ensemble is centred around the unbiased pressure state $\hat{\boldsymbol{p}}'_{{mic\,}_j} = \boldsymbol{U} + \boldsymbol{p}'_{{mic\,}_j}$ for $j = 1,\ldots,m$, where $(\,\hat{\,}\,)$ indicates a statistically unbiased quantity. Subsequently, we perform the analysis step. The EnSRKF obtains the optimal combination between the unbiased pressures and the observations, which updates indirectly the biased ensemble of acoustic modes and parameters. The resulting analysis ensemble is used to re-initialise the ESN for the next forecast in closed loop, such that the bias is the difference between the observations and the expectation of the analysis pressures, i.e. $\boldsymbol{U} = \boldsymbol{p}'^{~t}_{mic} - \langle \boldsymbol{p}'^{~a}_{mic}\rangle$. Finally, the Rijke model and the ESN are time-marched until the next measurement becomes available for assimilation.
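The bookkeeping around one analysis step can be sketched as follows. The sketch covers only the bias shift before the analysis and the bias re-initialisation after it; the filter itself is passed in as a callable. The function `nudging_analysis` is a hypothetical stand-in used to make the example runnable, and it is not the EnSRKF.

```python
import numpy as np

def bias_aware_analysis(p_mic_forecast, U_esn, p_obs, analysis_step):
    """One bias-aware analysis at the microphones.

    p_mic_forecast: (m, N_mic) biased forecast ensemble of pressures
    U_esn:          (N_mic,) bias estimated by the ESN
    p_obs:          (N_mic,) observed pressures
    analysis_step:  callable standing in for the ensemble filter
    """
    # Shift every member by the estimated bias to obtain the unbiased ensemble
    p_hat = p_mic_forecast + U_esn
    # The filter compares the unbiased pressures with the observations and
    # returns the updated (still biased) ensemble of pressures
    p_mic_analysis = analysis_step(p_mic_forecast, p_hat, p_obs)
    # Bias that re-initialises the ESN: observations minus analysis mean
    U_new = p_obs - p_mic_analysis.mean(axis=0)
    return p_mic_analysis, U_new

def nudging_analysis(p_biased, p_hat, p_obs, gain=0.5):
    """Hypothetical stand-in filter: move members towards the observations."""
    return p_biased + gain * (p_obs - p_hat.mean(axis=0))

p_f = np.array([[1.0, 2.0], [3.0, 4.0]])  # m = 2 members, N_mic = 2
U = np.array([0.5, -0.5])
y = np.array([3.0, 2.0])
p_a, U_next = bias_aware_analysis(p_f, U, y, nudging_analysis)
```

Keeping the filter behind a callable separates the bias handling from the choice of filter, so the same wrapper could be reused with a different ensemble filter.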

Algorithm 1 Data assimilation with ESN bias estimation

4.3. Test case

The higher-fidelity model that we use is based on the travelling-wave approach of Dowling (Reference Dowling1999), which is described in detail by Aguilar Pérez (Reference Aguilar Pérez2019). The acoustic pressure and velocity are written as functions of two acoustic waves that propagate downstream and upstream of the tube (see (3.5) and (3.7) in Dowling Reference Dowling1999). As shown in figure 5, the waves are defined as $f$ and $g$, with convective velocities