1. Introduction

Approximately two thirds of the Earth's surface is covered by the ocean. The air–sea exchanges of mass, momentum and energy that occur over such a vast expanse play an integral role in determining the sea state, weather patterns and climate, thus significantly impacting many aspects of human life. Although we know that surface waves need to be fully integrated into weather and global climate forecast models (e.g. Melville 1996; Sullivan & McWilliams 2010), we do not yet fully understand the fundamental processes that couple the surface waves with the turbulent boundary layers above and below the ocean surface (e.g. Cavaleri et al. 2019; Buckley, Veron & Yousefi 2020; Geva & Shemer 2022). Indeed, current parameterizations of air–sea exchange processes are limited, and most models still fall short of accurately predicting wave growth and weather events, particularly in strongly forced conditions.

Over the past few decades, many studies have investigated the influence of ocean surface waves on air–sea exchanges and, specifically, on wind stress, defined as the sum of skin friction and form drag (e.g. Banner 1990; Belcher & Hunt 1993; Melville, Shear & Veron 1998; Sullivan, McWilliams & Moeng 2000; Melville, Veron & White 2002; Yang & Shen 2010; Veron, Melville & Lenain 2011; Grare et al. 2013; Grare, Lenain & Melville 2018; Hao & Shen 2019; Husain et al. 2019; Yousefi, Veron & Buckley 2020, 2021; Aiyer, Deike & Mueller 2023, among others). These studies have shown that the drag at the ocean surface depends strongly on environmental conditions. It is a function not only of wind speed but also of wave height, wave slope, wind–wave alignment and wave age, which is defined as the ratio of the wave phase speed to the reference wind speed measured 10 m above the air–water interface. Some parameterizations exist to account for the impact of these parameters on surface stress (e.g. Fairall et al. 2003; Edson et al. 2013; Teixeira 2018; Porchetta et al. 2019; Husain, Hara & Sullivan 2022a,b). However, the dependence of sea-surface drag on wave parameters is only partially understood. Therefore, determining these dependencies and developing sea-state-aware parameterizations of wind stress remain central goals of the wind-wave research community. Furthermore, the effects on surface drag of surface waves and the associated generation of turbulence, airflow separation, spray and bubbles are not well understood. Despite these findings, most operational models still rely on simple bulk parameterizations based on Monin–Obukhov similarity theory (e.g. Hara & Belcher 2004; Hara & Sullivan 2015). This theory is valid only under equilibrium conditions and accounts for neither the multiscale nature of sea-surface roughness nor the sea-state dynamics. As such, these models often struggle to accurately predict the evolution of wave fields (e.g. Moon et al. 2004b; Moon, Ginis & Hara 2004a; Donelan et al. 2012; Cronin et al. 2019; Husain et al. 2022a,b).

Accurately simulating wind-wave fields over a realistic range of the parameter space hinges upon our ability to resolve sea-surface drag. However, resolving the drag directly at the ocean surface through, for example, direct numerical simulations (DNSs) or wall-resolved large-eddy simulations (LESs) is only possible at modest Reynolds numbers. This is because the interfacial mechanisms span a broad range of time and length scales (e.g. Sullivan & McWilliams 2010; Ayet & Chapron 2022). Wall-modelled LES offers a pathway to address this challenge: the near-surface region is bypassed, and a wall-layer parameterization is used instead to account for subgrid-scale surface forces (Piomelli 2008; Bose & Park 2018). However, the development of such parameterizations (see, e.g. Yang, Meneveau & Shen 2013; Aiyer et al. 2023) has been limited by the difficulty of acquiring high-resolution field (or even laboratory) measurements close to the air–sea interface (e.g. Turney & Banerjee 2008, 2013; Sullivan et al. 2018). Factors limiting direct field measurements include:

- platform motion (e.g. Donelan, Drennan & Katsaros 1997);
- the limitations of existing sensor technology (e.g. Graber et al. 2000);
- the broadband variability of ocean wave fields (e.g. Sullivan & McWilliams 2010);
- cost.

In addition, in situ observations often rely on point measurement techniques that provide only time-averaged statistics. As a result, both existing computational models and available measurement techniques carry considerable uncertainty. Their findings are often difficult to reconcile in terms of, for example, total wind stress and wave growth (e.g. Belcher & Hunt 1998; Peirson & Garcia 2008; Hara & Sullivan 2015).

The action of wind imparts both momentum and energy fluxes to the wave field. At the air–water interface, the total momentum flux, or wind stress, is the sum of the tangential viscous stress and the form drag (e.g. Grare et al. 2013; Buckley et al. 2020), i.e.

\begin{equation} \tau = \overline{\tau_{\nu}} + \overline{\tau_{f}} = \overline{\tau_{\nu}} + \overline{p\,\frac{\partial \eta}{\partial x}}, \end{equation}

where $\tau _{\nu }$ is the interfacial tangential viscous stress (see § 2.1), $\tau _{f}$ is the form drag, $p$ is the pressure at the interface, $\eta$ is the surface elevation and the overbar denotes temporal or spatial averaging. Using a linear spectral decomposition of the wave field, it can further be shown that the total energy input to both waves and surface currents is directly related to the wind stress

where $u_s$ is the surface velocity, $c$ is the phase speed and ${\tau }_{wc}$ is the wave-coherent tangential stress. Consequently, the momentum flux leading to wave growth has two components: the form drag and the wave-coherent tangential stress. Knowledge of the energy input rate (or, equivalently, the wind stress) and its dependence on wave-field characteristics is therefore of fundamental importance in modelling the air–sea momentum flux. Two widely employed models for the energy input rate were developed from theoretical considerations by Jeffreys (1925) and Miles (1957). However, the predictions of neither theory have been reconciled with available field measurements.
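The averaging in the wind-stress decomposition can be illustrated with a minimal sketch. It evaluates the averaged tangential stress plus the averaged form drag, $\overline{\tau_\nu} + \overline{p\,\partial\eta/\partial x}$, from uniformly sampled surface profiles. The function names, the single linear test wave and the pressure amplitude are hypothetical illustrations, not quantities from the present experiments.

```python
import numpy as np


def form_drag(p, eta, dx):
    """Spatially averaged form drag, mean(p * d(eta)/dx), over a uniformly sampled profile."""
    slope = np.gradient(eta, dx)  # local surface slope, epsilon = d(eta)/dx
    return float(np.mean(p * slope))


def total_wind_stress(tau_nu, p, eta, dx):
    """Mean tangential viscous stress plus mean form drag."""
    return float(np.mean(tau_nu)) + form_drag(p, eta, dx)


# Illustrative single linear wave (all values hypothetical).
wavelength, a, p0 = 0.4, 0.01, 2.0   # [m], [m], [Pa]
k = 2.0 * np.pi / wavelength
n = 4096
dx = wavelength / n
x = np.arange(n) * dx                 # one full wavelength, endpoint excluded
eta = a * np.cos(k * x)

p_slope = -p0 * np.sin(k * x)         # surface pressure in phase with the slope
p_elev = p0 * np.cos(k * x)           # surface pressure in phase with the elevation

print(form_drag(p_slope, eta, dx))    # close to p0 * a * k / 2
print(form_drag(p_elev, eta, dx))     # close to zero
```

The two test pressures show that only the part of the surface pressure that is in phase with the slope contributes to the averaged form drag. Pressure in phase with the elevation averages out.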

Reconstructing near-surface flow fields and evaluating the surface wind stress from observable, readily available free-surface characteristics are of significant interest for various geophysical and engineering applications. Such characteristics include the wave profile, wave speed and reference wind speed. There is growing appreciation of the need to integrate wave effects into simple one-dimensional column models of the marine boundary layers for use in climate prediction and regional ocean mesoscale codes (e.g. Donelan 1998; Hanley & Belcher 2008; Makin 2008). Hence, the ability to infer surface drag from easily acquired wave features could enable reliable, real-time wave models. These models could be driven by, for example, wave heights, surface currents and sea-surface temperature measured with remote sensing and aerial imaging techniques (e.g. Huang et al. 2016; Metoyer et al. 2021). To that end, data-driven methods have the potential to complement field and experimental measurements and to serve as a promising tool for developing reliable surface-drag parameterizations.

Over the past few years, fluid dynamics and turbulence research has been transformed by the advent of machine learning (ML) techniques (e.g. Kutz 2017; Brenner, Eldredge & Freund 2019; Brunton, Noack & Koumoutsakos 2020; Fukami, Fukagata & Taira 2020a). ML approaches have been used successfully to:

- accelerate computational fluid dynamics (e.g. Bar-Sinai et al. 2019; Kochkov et al. 2021; Jeon, Lee & Kim 2022);
- develop improved turbulence closures (e.g. Ling, Kurzawski & Templeton 2016; Wang, Wu & Xiao 2017; Beck, Flad & Munz 2019; Duraisamy, Iaccarino & Xiao 2019);
- reconstruct turbulent flow fields from spatially limited data (e.g. Liu et al. 2020; Fukami, Nakamura & Fukagata 2020b; Kim et al. 2021);
- predict the spatio-temporal behaviour of turbulent flows (e.g. Wang et al. 2020; Fukami, Fukagata & Taira 2021; Zhang & Zhao 2021);
- advance surrogate modelling of turbulent boundary layer flows (e.g. Hora & Giometto 2023).

However, in contrast to flows over solid surfaces, applications of ML to air–sea interactions have been quite limited. They have mainly focused on predicting wave characteristics (e.g. James, Zhang & O'Donncha 2018; O'Donncha et al. 2018, 2019; Rasp & Lerch 2018; Sun et al. 2022; Zhang et al. 2022; Dakar et al. 2023; Lou et al. 2023; Xu, Zhang & Shi 2023). Existing works have employed different ML frameworks to reduce the computational cost of estimating statistical wave conditions, such as significant wave height and peak wave period. Others have used ML for low-dimensional learning of wave propagation (e.g. Sorteberg et al. 2020; Alguacil et al. 2021; Deo & Jaiman 2022). For instance, O'Donncha et al. (2018) integrated the SWAN (Simulating WAves Nearshore) model into an ML algorithm to resolve wave conditions (see also James et al. 2018; O'Donncha et al. 2019). Further, Sun et al. (2022) developed a deep-learning-based bias correction method to improve the significant wave height predictions of the WAVEWATCH III (WW3) model over the Northwest Pacific Ocean (see also Campos et al. 2020; Ellenson et al. 2020). These studies have demonstrated the potential of ML for predicting the propagation of surface waves and improving the predictive performance of current wave models.

The reconstruction of flow-field features from surface wave characteristics is, however, more challenging, with unique complications associated with multiscale wave profiles and motions. Only limited efforts have been made to reconstruct the turbulent flow above or below surface waves based solely on wave observations (e.g. Smeltzer et al. 2019; Gakhar, Koseff & Ouellette 2020; Xuan & Shen 2023; Zhang et al. 2023). Using convolutional neural network (CNN) classifiers, Gakhar et al. (2020) demonstrated that surface elevation information alone can be used to determine the physical features of the bottom boundary (see also Mandel et al. 2017; Gakhar, Koseff & Ouellette 2022). More recently, Xuan & Shen (2023) explored the feasibility of using a CNN to reconstruct the turbulent flow beneath a free surface from the surface elevation and velocity (see also Li, Xuan & Shen 2020). Their CNN-based model was trained on a dataset obtained from DNSs of turbulent open-channel flows with a deformable surface and could accurately reconstruct the near-surface flow field.

In the absence of accurate parameterizations of sea-surface drag (see, e.g. Sullivan & McWilliams 2010; Zachry et al. 2013; Gao et al. 2020), ML-based approaches may thus offer a practical alternative. To date, however, there has been no attempt to apply such data-driven algorithms to model sea-surface drag. In the present study, we use a supervised ML framework to estimate the surface viscous stress (or skin-friction drag) of wind-generated surface waves solely from wave profiles and wave age. To that end, we develop a CNN model that takes surface elevation profiles and the corresponding wave age as inputs and predicts the spatial distribution of skin-friction drag over wind waves. The CNN is trained on high-resolution laboratory measurements of velocity fields obtained over a range of wind-wave conditions. The experimental dataset used in the current study is based on a re-analysis of the existing raw data of Yousefi et al. (2020). We first consider unseen samples drawn from the wind-wave conditions represented in the training data. For these, the CNN model can accurately predict the overall distribution of instantaneous and area-aggregate viscous stresses using only the surface signatures, the wave phase speed and the mean wind speed magnitude aloft. We also assess the performance of the model for wind-wave conditions excluded from training (i.e. out-of-training-distribution cases). To the best of the authors’ knowledge, this is the first successful attempt in the literature to model sea-surface drag using surface signatures and wave age. The proposed CNN model can be readily adapted to serve as a wall-layer model for skin-friction contributions in wall-modelled LESs of airflow over wave fields.
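The input–output mapping described above takes a wave profile $\eta(x)$ and a scalar wave age and returns a skin-friction profile $\tau_\nu(x)$ of the same length. A toy, untrained forward pass written in NumPy can illustrate that mapping. The layer count, channel widths, kernel size, ReLU activations and edge padding are illustrative assumptions, not the architecture of the present model, which is described in § 2.3. Feeding the wave age as a constant second input channel is likewise an assumption.

```python
import numpy as np


def conv1d_same(x, w, b):
    """Same-length 1-D cross-correlation, as in most deep-learning libraries.

    x: (C_in, N) input, w: (C_out, C_in, K) kernels with odd K, b: (C_out,) biases.
    """
    _, _, ksize = w.shape
    pad = ksize // 2
    n = x.shape[1]
    xp = np.pad(x, ((0, 0), (pad, pad)), mode="edge")                    # replicate edges
    windows = np.stack([xp[:, i:i + n] for i in range(ksize)], axis=-1)  # (C_in, N, K)
    return np.einsum("oik,ink->on", w, windows) + b[:, None]


def init_params(channels=(2, 16, 16, 1), ksize=5, seed=0):
    """Random (untrained) weights for a hypothetical three-layer network."""
    rng = np.random.default_rng(seed)
    return [
        (rng.normal(0.0, 1.0 / np.sqrt(c_in * ksize), (c_out, c_in, ksize)), np.zeros(c_out))
        for c_in, c_out in zip(channels[:-1], channels[1:])
    ]


def predict_skin_friction(eta, wave_age, params):
    """Map a profile eta(x) and a scalar C_p/U_10 to a profile tau_nu(x) of equal length."""
    eta = np.asarray(eta, dtype=float)
    h = np.stack([eta, np.full_like(eta, wave_age)])  # wave age as a constant 2nd channel
    for w, b in params[:-1]:
        h = np.maximum(conv1d_same(h, w, b), 0.0)     # convolution + ReLU
    w, b = params[-1]
    return conv1d_same(h, w, b)[0]                    # linear output layer, one channel


x = np.linspace(0.0, 2.0 * np.pi, 128)
tau = predict_skin_friction(0.01 * np.cos(x), 0.15, init_params())
print(tau.shape)  # (128,)
```

Because every layer preserves the along-wave length, the output has one stress value per surface point, which is the form needed for a spatially resolved drag prediction.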

The rest of the paper is organized as follows. The relevant theory is outlined in § 2.1, and the experimental dataset is briefly described in § 2.2. Details of the ML model are provided in § 2.3. The results are presented in § 3, where we assess the performance of the model in reconstructing skin-friction drag for both the interpolation and extrapolation tasks. The applicability and limitations of the CNN model are discussed further in § 4. Finally, conclusions are presented in § 5.

2. Methodology

2.1. Theory

At the air–water interface, the total wind stress is the sum of the tangential viscous stress (i.e. skin-friction drag) and the pressure stress (i.e. form drag), $\tau ={\tau }_{\nu }+{\tau }_f$. The skin-friction drag can be estimated by

\begin{equation} \tau_{\nu} = \boldsymbol{n} \cdot \boldsymbol{\tau} \cdot \boldsymbol{t}, \end{equation}

where $\boldsymbol {\tau } = \mu (\boldsymbol {\nabla }\boldsymbol {u} + \boldsymbol {\nabla }{\boldsymbol {u}}^\mathrm {T})$ is the airside viscous stress tensor evaluated at the air–water interface and $\mu$ is the dynamic viscosity of air. The terms $\boldsymbol {\nabla }{\boldsymbol {u}}$ and $\boldsymbol {\nabla }{\boldsymbol {u}}^\mathrm {T}$ denote the velocity gradient tensor and its transpose, and $\boldsymbol {u} = (u,w)$ is the velocity in the vertical plane aligned with the direction of wave propagation. Finally, $\boldsymbol {n}$ and $\boldsymbol {t}$ are unit vectors normal and tangent, respectively, to the local water surface, as shown in figure 1. With the velocity field taken in this vertical plane, the skin-friction drag can then be estimated by

\begin{equation} \tau_{\nu} = \mu \left[ \left( \frac{\partial u}{\partial z} + \frac{\partial w}{\partial x} \right) + 2\epsilon \left( \frac{\partial w}{\partial z} - \frac{\partial u}{\partial x} \right) \right], \end{equation}

where $x$ and $z$ are the streamwise and vertical coordinate directions, respectively, and $\epsilon = {\partial \eta }/{\partial x}$ is the local surface slope. Note that, in (2.2), the skin-friction drag is obtained to first order in $\epsilon$ (see Longuet-Higgins 1969; Buckley et al. 2020; Yousefi & Veron 2020). The form drag can similarly be expressed as $\tau _f = p \epsilon$. In the current study, however, we focus on the skin-friction drag only.
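The surface-frame projection can be written out explicitly as $\tau_\nu = \boldsymbol n\cdot\boldsymbol\tau\cdot\boldsymbol t$, with $\boldsymbol n = (-\epsilon, 1)/\sqrt{1+\epsilon^2}$ and $\boldsymbol t = (1, \epsilon)/\sqrt{1+\epsilon^2}$. Expanding it gives the exact result $\mu[(1-\epsilon^2)(\partial_z u + \partial_x w) + 2\epsilon(\partial_z w - \partial_x u)]/(1+\epsilon^2)$. Dropping terms of order $\epsilon^2$ and higher yields the first-order form $\mu[(\partial_z u + \partial_x w) + 2\epsilon(\partial_z w - \partial_x u)]$.

The following is a minimal sketch of both forms, assuming the velocity gradients are already available at the surface points. The function names, the air viscosity value and the test numbers are illustrative assumptions.

```python
import numpy as np


def skin_friction_exact(dudx, dudz, dwdx, dwdz, eps, mu):
    """tau_nu = n . tau . t for tau = mu (grad u + grad u^T), with no slope approximation."""
    eps = np.asarray(eps, dtype=float)
    norm = np.sqrt(1.0 + eps**2)
    nx, nz = -eps / norm, 1.0 / norm       # unit normal to the surface, pointing into the air
    tx, tz = 1.0 / norm, eps / norm        # unit tangent to the surface, pointing in +x
    txx = 2.0 * mu * np.asarray(dudx)      # stress tensor components
    tzz = 2.0 * mu * np.asarray(dwdz)
    txz = mu * (np.asarray(dudz) + np.asarray(dwdx))
    return nx * tx * txx + (nx * tz + nz * tx) * txz + nz * tz * tzz


def skin_friction_first_order(dudx, dudz, dwdx, dwdz, eps, mu):
    """The same projection with terms of order eps**2 and higher dropped."""
    return mu * ((dudz + dwdx) + 2.0 * eps * (dwdz - dudx))


# Hypothetical values: uniform shear du/dz = 500 s^-1 over a surface with slope 0.1.
mu_air = 1.8e-5  # assumed dynamic viscosity of air [Pa s]
exact = skin_friction_exact(0.0, 500.0, 0.0, 0.0, 0.1, mu_air)
approx = skin_friction_first_order(0.0, 500.0, 0.0, 0.0, 0.1, mu_air)
print(exact, approx)  # relative difference of roughly 2 * eps**2
```

In practice, the gradients would come from finite differences of the PIV field evaluated along the detected surface, and $\epsilon$ from differentiating $\eta(x)$. Both steps are omitted here. For the slopes typical of wind waves, the two forms differ only at second order in $\epsilon$.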

Figure 1. Schematic representation of two-dimensional airflow velocity measurements obtained using the PIV technique above wind-driven surface waves. The mean flow profiles, kinematic processes and drag partitioning (into the skin-friction drag and form drag) at the air–water interface are also shown. The global coordinate system in the PIV plane is $(x, z)$ in the streamwise and vertical directions, respectively. The surface-following coordinate system $(t, n)$ is also represented, where $\boldsymbol {t}$ and $\boldsymbol {n}$ are unit vectors that are tangent and normal to the local instantaneous water surface. At the wavy water surface, $\epsilon = {\partial \eta }/{\partial x}$ and $\theta = \arctan {(\epsilon )}$.

2.2. Experimental dataset

To train and evaluate the ML algorithm, we used an existing dataset of high-resolution airflow velocity measurements above wind-generated surface waves. The measurements were acquired using a combination of particle image velocimetry (PIV) and laser-induced fluorescence (LIF) in the wind-wave tunnel facility of the Air–Sea Interaction Laboratory at the University of Delaware. The facility is designed for studying atmosphere–ocean interactions; its wave tank is approximately 42 m long, 1 m wide and 1.25 m high. The mean water level was kept at 0.7 m to allow sufficient space above the air–water surface. The tank is also equipped with a permeable wave-absorbing beach that dissipates wave energy and prevents wave reflections. The wind tunnel of the facility can generate 10 m equivalent wind speeds of up to $30\ {\rm m}\ {\rm s}^{-1}$. It is equipped with a honeycomb straightener that provides uniform airflow across the tank and a smooth transition from the wind tunnel inlet to the water surface. The facility, experimental set-up and image acquisition and processing procedures are described in detail in Yousefi (2020). Here, we briefly summarize the experimental set-up to put the available measurements into perspective.

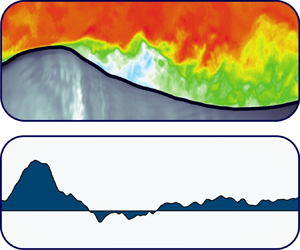

High-resolution two-dimensional velocity fields above wind waves were obtained using PIV. In combination with the PIV measurements, LIF was employed to precisely detect the surface profiles. This allowed airside velocity measurements to be acquired within the airside viscous sublayer, on average as close as $100\ \mathrm {\mu }{\rm m}$ to the air–water interface. Examples of streamwise velocity measurements along the wave field are plotted in figure 1. Figure 2 also shows an example of the PIV velocity field over a separating wind wave, together with the corresponding skin-friction drag at the air–water interface calculated using (2.2). These measurements were acquired at a fetch of 22.7 m for various wind-wave conditions, with 10 m equivalent wind speeds ranging from 2.25 to $16.59\ {\rm m}\ {\rm s}^{-1}$. The resulting waves were purely one-dimensional and propagated only in the streamwise direction, so wave groups and the individual waves within them were generally aligned. The wave age of the generated waves lies in the range 0.06–0.21, which corresponds to moderately to strongly forced wind waves (Drennan et al. 2003). For reference, the complete experimental conditions are listed in table 1.

Figure 2. An example of the instantaneous streamwise PIV velocity field over a separating wind wave (a), with the corresponding wave age, wave profile and skin-friction drag at the air–water interface (b). The objective of the CNN-based ML model is to estimate the skin-friction drag, $\tau _{\nu }$, over wind waves from the wave profile, $\eta (x)$, and the wave age, $C_p/U_{10}$. In the experimental measurements, the surface tangential viscous stress (or, equivalently, the skin-friction drag) is taken as the first value of the viscous stress measurements, located $94.8\ \mathrm {\mu }{\rm m}$ above the air–water interface.

Table 1. Summary of the experimental data and wind-wave conditions. The friction velocities and 10 m extrapolated velocities were calculated from the experimental data by fitting the logarithmic part of the mean wind velocity profile. Parameters with subscript $p$ denote peak wave values obtained from the peak wave frequencies using linear wave theory. For additional details on the experimental data and procedures, the reader is referred to Yousefi (2020) and Yousefi et al. (2020).
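The log-layer fit mentioned in the caption of table 1 can be sketched as a linear least-squares fit of $u$ against $\ln z$. The sketch assumes the profile $u(z) = (u_*/\kappa)\ln(z/z_0)$ with $\kappa = 0.4$ and heights measured above the mean water level. The choice of fitting range, the value of $\kappa$ and the function names are our assumptions, not details taken from Yousefi (2020).

```python
import numpy as np

KAPPA = 0.4  # von Karman constant (assumed value; 0.41 is also common)


def fit_log_layer(z, u, kappa=KAPPA):
    """Fit u(z) = (u_star / kappa) * ln(z / z0) by least squares in ln z.

    z and u should contain only points inside the logarithmic layer.
    Returns (u_star, z0).
    """
    slope, intercept = np.polyfit(np.log(np.asarray(z, float)), np.asarray(u, float), 1)
    u_star = kappa * slope            # slope of u versus ln z is u_star / kappa
    z0 = np.exp(-intercept / slope)   # u = 0 at z = z0
    return u_star, z0


def u10_from_log_fit(u_star, z0, kappa=KAPPA, z_ref=10.0):
    """Extrapolate the fitted log profile to the 10 m reference height."""
    return u_star / kappa * np.log(z_ref / z0)


# Synthetic log profile with u_star = 0.3 m/s and z0 = 1e-4 m (hypothetical values).
z = np.linspace(0.02, 0.2, 20)
u = 0.3 / KAPPA * np.log(z / 1e-4)
u_star, z0 = fit_log_layer(z, u)
print(u_star, z0, u10_from_log_fit(u_star, z0))
```

Real profiles contain noise and wave-induced distortions near the surface, so the result depends on which heights are treated as belonging to the logarithmic layer.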

The spectra of the surface wave elevations were relatively narrow-banded for all experimental conditions, with peaks at the dominant wave frequency (see Yousefi et al. 2020). As reported in table 1, the dominant wave frequency decreases with increasing wind speed because the fetch-limited waves grow longer as the wind speed increases. At the same time, the spectral densities increase with wind speed, indicating that the wave amplitude also increases. Under these experimental conditions, the wavelength of the wind-generated surface waves ranges from approximately 15 to 50 cm. This is well above the sub-centimetre wavelengths of capillary waves (e.g. Slavchov, Peychev & Ismail 2021). The complications of bubble and spray generation were also largely avoided under these wind-wave conditions. Therefore, surface tension has a negligible effect on the wave profiles and does not directly contribute to the viscous stress at the air–water interface.
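The peak phase speed and wave age follow from the peak frequency via the linear dispersion relation. The sketch below assumes the finite-depth gravity–capillary form $\omega^2 = (gk + \sigma k^3/\rho)\tanh(kh)$, with $h = 0.7$ m and an assumed $\sigma/\rho = 7.3\times10^{-5}\ {\rm m^3\,s^{-2}}$. It solves for $k_p$ by bisection and returns $C_p = \omega_p/k_p$ and $C_p/U_{10}$. The text does not state whether the original analysis retained the depth and surface-tension terms, and the function names are hypothetical.

```python
import math

G = 9.81            # gravitational acceleration [m s^-2]
SIGMA_RHO = 7.3e-5  # assumed surface tension / water density [m^3 s^-2], clean water near 20 C


def wavenumber(f, depth=0.7, g=G, sigma_rho=SIGMA_RHO):
    """Solve omega^2 = (g*k + sigma_rho*k^3) * tanh(k*depth) for k by bisection."""
    omega2 = (2.0 * math.pi * f) ** 2

    def residual(k):
        return (g * k + sigma_rho * k**3) * math.tanh(k * depth) - omega2

    lo, hi = 1e-9, 1.0
    while residual(hi) < 0.0:      # grow the bracket until the root is enclosed
        hi *= 2.0
    for _ in range(100):           # residual increases monotonically with k
        mid = 0.5 * (lo + hi)
        if residual(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def peak_wave_parameters(f_p, u10, **kwargs):
    """Peak wavenumber, wavelength, phase speed and wave age C_p/U_10 from f_p."""
    k_p = wavenumber(f_p, **kwargs)
    c_p = 2.0 * math.pi * f_p / k_p
    return {"k_p": k_p, "lambda_p": 2.0 * math.pi / k_p, "c_p": c_p, "wave_age": c_p / u10}


params = peak_wave_parameters(2.0, 5.08)                     # illustrative inputs
gravity_only = peak_wave_parameters(2.0, 5.08, sigma_rho=0.0)
print(params, gravity_only["c_p"])
```

The 2 Hz peak frequency used here is an illustrative input, not a value from table 1. It gives a wavelength of about 0.39 m. The resulting phase speed is within about 0.2 % of the value obtained without surface tension, consistent with the statement above that surface tension is negligible at these scales.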

2.2.1. Data pre-processing

The experimental dataset used to train and evaluate the ML model comprises various wind speed conditions, with each experiment sampling approximately the same number of waves. This resulted in the acquisition of more instantaneous PIV fields (which have a fixed footprint) when the wind waves were longer. Specifically, the dataset consists of approximately 2000, 3800, 4500, 4900 and 5000 instantaneous PIV images corresponding to wind speeds of ![]() $U_{10} = 2.25$, 5.08, 9.57, 14.82 and

$U_{10} = 2.25$, 5.08, 9.57, 14.82 and ![]() $16.59\ {\rm m}\ {\rm s}^{-1}$, respectively. For training and validation of the ML model, we considered experiments with a wind speed of 5.08, 9.57 and

$16.59\ {\rm m}\ {\rm s}^{-1}$, respectively. For training and validation of the ML model, we considered experiments with wind speeds of 5.08, 9.57 and $16.59\ {\rm m}\ {\rm s}^{-1}$ (corresponding to the W1-05, W1-09 and W1-16 cases in table 1), resulting in a total of roughly 13 300 input–output pairs. These pairs were randomly divided into training, validation and testing subsets containing 80 %, 10 % and 10 % of the total samples, respectively. The training subset is used to train the ML model, allowing it to learn and adjust its internal parameters to minimize prediction errors, while the validation subset is used to fine tune the model's hyperparameters during training (e.g. Goodfellow, Bengio & Courville 2016). Finally, to assess the generalization capability of the model and validate its performance in a more realistic scenario, we examined the model on out-of-training-distribution datasets. To this end, we used instantaneous PIV images of the cases with wind speeds of $U_{10} = 2.25$ and $14.82\ {\rm m}\ {\rm s}^{-1}$ as a separate dataset to examine the model performance on completely unseen data. Notably, the $U_{10} = 2.25\ {\rm m}\ {\rm s}^{-1}$ case is outside the wind speed training range.
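The random 80 %/10 %/10 % partition described above can be sketched in plain Python as follows. This is a minimal illustration. It assumes a sample-level shuffle with a fixed seed, and a rounding rule in which any remainder goes to the test subset. The function name `split_indices` and the seed are hypothetical, and the 13 300 sample count is only the approximate total quoted in the text.

```python
import random


def split_indices(n_samples, fractions=(0.8, 0.1, 0.1), seed=0):
    """Randomly partition sample indices into train/validation/test subsets.

    The 80/10/10 fractions follow the text; the seed and the rounding rule
    (the test subset absorbs any remainder) are illustrative assumptions.
    """
    if abs(sum(fractions) - 1.0) > 1e-9:
        raise ValueError("fractions must sum to 1")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # reproducible random permutation
    n_train = int(round(fractions[0] * n_samples))
    n_val = int(round(fractions[1] * n_samples))
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]  # remainder goes to the test subset
    return train, val, test


train, val, test = split_indices(13300)
print(len(train), len(val), len(test))  # 10640 1330 1330
```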

The signal-to-noise ratio of PIV velocity measurements naturally degrades in near-surface regions owing to various factors, including the reflection of high-intensity laser light off the surface and other sources of background noise. Although this does not affect the velocity measurements of the current experimental dataset, the interfacial skin-friction drag exhibits an increased level of noise. This is because computing it requires a velocity gradient, and the gradient operator amplifies measurement noise. We observed that the performance of the ML algorithm is sensitive to this near-surface noise in the experimental data. To ensure optimal performance of the ML network, a zero-phase (smoothing) infinite-impulse-response (IIR) filter (e.g. Gustafsson 1996) was applied to the along-wave surface tangential stress profiles,

\begin{equation} \left.\begin{gathered} y_0[n] = \frac{1}{a_0} \left(\sum_{i=0}^{m} b_i x[n-i] - \sum_{j=1}^{m} a_j y_0[n-j]\right) \\ \mathrm{and} \\ y_1[n] = \frac{1}{a_0} \left(\sum_{i=0}^{m} b_i y_0[n+i] - \sum_{j=1}^{m} a_j y_1[n+j]\right) , \end{gathered}\right\} \end{equation}

where $y_0[n]$ and $y_1[n]$ are, respectively, the outputs of the forward and reverse filtering operations at location index $n$, $x[n]$ is the input signal, $b_i$ are the feedforward filter coefficients, $a_j$ are the feedback filter coefficients and $m$ is the filter order. Because the reverse pass cancels the phase shift introduced by the forward pass, the filtered profile is not displaced along the wave. Examples of the filtered skin-friction drag (along with the other ML input data) and the original experimental data are presented in figures 3(d) and 3(h) for a non-separating and a separating wind wave, respectively. Further, to expedite the training process, we scaled the data to have zero mean and unit standard deviation. This standardization places the features on a comparable scale, thereby facilitating more effective training of the ML model (e.g. Goodfellow et al. 2016).
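The sketch below implements the forward–backward (zero-phase) filtering defined by the two difference equations above, followed by the zero-mean, unit-variance standardization. It assumes zero initial conditions at both ends of the profile and uses illustrative first-order low-pass coefficients. The study's actual coefficients and filter order $m$ are not given here, and the helper names are hypothetical. In practice a library routine such as SciPy's `scipy.signal.filtfilt` would typically be used. Its `method='gust'` option implements Gustafsson's (1996) choice of initial conditions, which reduces edge transients that this sketch does not treat.

```python
import math


def iir_filter(b, a, x):
    """Causal IIR filter: the forward difference equation for y_0[n].

    Samples before the start of the signal are taken as zero (zero initial
    conditions), an assumption of this sketch.
    """
    m = max(len(a), len(b)) - 1  # filter order
    b = list(b) + [0.0] * (m + 1 - len(b))
    a = list(a) + [0.0] * (m + 1 - len(a))
    y = [0.0] * len(x)
    for n in range(len(x)):
        acc = sum(b[i] * x[n - i] for i in range(m + 1) if n - i >= 0)
        acc -= sum(a[j] * y[n - j] for j in range(1, m + 1) if n - j >= 0)
        y[n] = acc / a[0]
    return y


def zero_phase_filter(b, a, x):
    """Forward pass (y_0), then the same filter applied in reverse (y_1)."""
    y0 = iir_filter(b, a, x)
    # Filtering the time-reversed y_0 and reversing the result gives y_1[n],
    # which depends on y_0[n + i] and y_1[n + j] as in the reverse equation.
    return iir_filter(b, a, y0[::-1])[::-1]


def standardize(values):
    """Scale to zero mean and unit (population) standard deviation."""
    mean = sum(values) / len(values)
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))
    if std == 0.0:
        raise ValueError("cannot standardize a constant signal")
    return [(v - mean) / std for v in values]


# Hypothetical first-order low-pass coefficients (not the study's filter).
b, a = [0.5], [1.0, -0.5]
impulse = [0.0] * 201
impulse[100] = 1.0
smoothed = zero_phase_filter(b, a, impulse)  # symmetric about index 100
z = standardize([1.0, 2.0, 3.0, 4.0])
```

Because the second pass runs in reverse, the combined response to the impulse is symmetric about index 100. In other words, the filter introduces no phase shift.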

Figure 3. An example of ML input data over non-separating (a–d) and separating (e–h) wind waves for the wind-wave experimental condition of $U_{10} = 5.08\ \textrm {m}\ \textrm {s}^{-1}$. The instantaneous fields of normalized streamwise velocity, $u/U_{10}$, are shown in panels (a,e), in which solid lines denote the wave profiles. The panels below each wave show (b,f) the local wave slope, $\epsilon = {\partial \eta } / {\partial x}$, (c,g) the local wave phase, $\varphi$, and (d,h) the skin-friction drag scaled with the total stress, $\tau _{\nu } / \tau$, where the experimental data are indicated with cross symbols and the solid lines are the filtered experimental tangential stress profiles.

2.3. Machine learning model

The main objective of this work is to develop a model that accurately estimates the instantaneous skin-friction drag, $\tau _{\nu }$, of surface wind waves from the following inputs:

- the wave profile, $\eta (x)$;
- the local interface slope, $\partial \eta /\partial {x}$;
- the local wave phase, $\varphi$;
- the wave age of the corresponding wave field, $C_p/U_{10}$.

In particular, we aim to develop a mapping $\mathcal {M}$ such that

\begin{equation} \tau_{\nu} = \mathcal{M} \left( C_p/U_{10}, \eta(x), \partial \eta / \partial x, \varphi \right). \end{equation}
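To make the input format concrete, the sketch below assembles one hypothetical 4 × n input stack from a sampled wave profile, consistent with the (4, 8, 985) tuple in figure 4. Several choices here are assumptions made for illustration:

- the slope is estimated with central differences on a uniform grid with spacing `dx`;
- the wave phase is supplied as a precomputed array;
- the scalar wave age is broadcast to a constant row.

The study's own preprocessing may differ.

```python
def build_input_stack(eta, dx, phase, wave_age):
    """Assemble a hypothetical 4 x n input array [eta, d(eta)/dx, phase, C_p/U_10].

    Assumptions (not taken from the study): the slope uses central differences
    in the interior and first-order one-sided differences at the two ends on a
    uniform grid of spacing dx, and the scalar wave age is broadcast to a
    constant row so all four rows share length n.
    """
    n = len(eta)
    if len(phase) != n or n < 2:
        raise ValueError("eta and phase must have the same length (>= 2)")
    slope = [0.0] * n
    slope[0] = (eta[1] - eta[0]) / dx  # forward difference at the left end
    slope[-1] = (eta[-1] - eta[-2]) / dx  # backward difference at the right end
    for i in range(1, n - 1):
        slope[i] = (eta[i + 1] - eta[i - 1]) / (2.0 * dx)
    return [list(eta), slope, list(phase), [wave_age] * n]


# Hypothetical usage: a 985-point linear ramp stands in for a measured profile.
eta = [0.01 * i for i in range(985)]
stack = build_input_stack(eta, dx=0.001, phase=[0.0] * 985, wave_age=0.5)
```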

The CNN-based ML model is employed to approximate $\mathcal {M}$; a detailed description of the network architecture is given in the following section. Here, we used the wave age as the dimensionless parameter incorporating wind speed, rather than the wave Reynolds number (${Re_w} = U_{10}/\nu k$, with $k$ being the wavenumber). The wave age provides a better metric for characterizing different ocean regimes (see, e.g., Csanady 2001; Alves, Banner & Young 2003; Sullivan & McWilliams 2010). In addition, ${Re_w}$ is indirectly incorporated into the ML model, as all the parameters that define ${Re_w}$ are already included in the mapping. Although we used the wave age as a non-dimensional input for the ML model, it covers only a small range of values in these constant-fetch laboratory experiments (see table 1). Therefore, in the remainder of the paper, we frame our analysis in terms of wind speed, except where wave age is a convenient parameter for comparing our results with available data. It should also be noted that the Bond number (${BO} = {\Delta \rho g}/{\sigma k^2}$, where $\Delta \rho$ is the air–water density difference, $g$ is the gravitational acceleration and $\sigma$ is the surface tension) is relatively large for all surface wave cases considered in this study. It ranges from $BO=65$ for the lowest wind speed of $2.25\ \textrm {m}\ \textrm {s}^{-1}$ to $BO=975$ for the highest wind speed of $16.59\ \textrm {m}\ \textrm {s}^{-1}$, indicating that surface tension effects are negligible (e.g. Van Hooft et al. 2018). Hence, owing to the high Bond number of these wind waves, we did not include the Bond number as a mapping parameter.
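The dimensionless groups above can be evaluated as follows. This is an illustrative sketch. The fluid properties are assumed textbook values rather than values reported in the study: the water and air densities, the surface tension, and the kinematic viscosity in $Re_w$, taken here as that of air. The 0.2 m wavelength in the usage line is hypothetical. Note that $\sigma$ here is the surface tension, not the activation function used later.

```python
import math

# Assumed property values (SI units); the study's exact values are not stated here.
RHO_WATER = 998.0  # kg m^-3
RHO_AIR = 1.2  # kg m^-3
G = 9.81  # m s^-2
SIGMA = 0.0728  # N m^-1, air-water surface tension near 20 C
NU_AIR = 1.5e-5  # m^2 s^-1, assumed kinematic viscosity in Re_w


def bond_number(k, delta_rho=RHO_WATER - RHO_AIR, g=G, sigma=SIGMA):
    """Bo = delta_rho * g / (sigma * k^2) for wavenumber k (rad m^-1)."""
    return delta_rho * g / (sigma * k ** 2)


def wavenumber_from_bond(bo, delta_rho=RHO_WATER - RHO_AIR, g=G, sigma=SIGMA):
    """Invert Bo for k: the wavenumber at which a given Bond number is reached."""
    return math.sqrt(delta_rho * g / (sigma * bo))


def wave_reynolds(u10, k, nu=NU_AIR):
    """Re_w = U_10 / (nu * k)."""
    return u10 / (nu * k)


k = 2 * math.pi / 0.2  # hypothetical 0.2 m wavelength, for illustration only
print(bond_number(k), wave_reynolds(5.08, k))
```

Since $Bo \propto k^{-2}$, longer (lower-$k$) waves have larger Bond numbers. This is consistent with the text's observation that $BO$ grows with wind speed as the waves lengthen.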

2.3.1. Convolutional neural network

Neural-network-based ML models, notably the multi-layer perceptron (MLP) and the CNN, are widely adopted for tackling complex nonlinear regression tasks across diverse scientific and engineering domains (e.g. Rumelhart, Hinton & Williams 1986; LeCun et al. 1998; Goodfellow et al. 2016). Both models are commonly used in multi-output regression tasks. MLP-based models are preferred for pointwise mapping (e.g. Raissi, Yazdani & Karniadakis 2020; Maulik et al. 2021; Hora & Giometto 2023), whereas CNNs excel in handling structured grid data, such as velocity fields and images (e.g. Fukami et al. 2021; Kim et al. 2021; Xuan & Shen 2023). This preference arises from the ability of CNNs to capture spatially local features, their translation equivariance and their scalability (see, e.g., Goodfellow et al. 2016; Gao, Sun & Wang 2021). Overall, the success of CNN-based networks has established them as promising approaches for approximating complex functions.

Specifically, in the current study, we employ a CNN-based ML model (denoted by $\mathcal {F}$) to approximate the mapping function, $\mathcal {M}$, such that

\begin{equation} \tau_{\nu} \approx \hat{\tau}_{\nu} = \mathcal{F} \left( C_p/U_{10}, \eta(x), \partial \eta / \partial x, \varphi ; \boldsymbol{\mathsf{w}} \right), \end{equation}

where $\boldsymbol{\mathsf{w}}$ encompasses the trainable parameters of the CNN, including weights and biases. The CNN model used in this work consists primarily of convolutional, dense (or fully connected) and nonlinear layers. Among these, the convolutional layer plays a crucial role in capturing hierarchical representations and extracting meaningful features from the high-dimensional spatial input. This is achieved by applying the convolution operation to the input, followed by a nonlinear activation function. In a two-dimensional convolutional layer, the output ${\mathsf{h}}^{{out}}$ can be expressed in terms of the input from the previous layer, ${\mathsf{h}}^{{in}}$, as

\begin{equation} h^{{out}}_{ijd} = \sigma \left(\sum_{k=1}^{K} \sum_{p=1}^{F} \sum_{q=1}^{F} h^{{in}}_{i+p, j+q, k} {\mathsf{w}}_{pqkd} + {\mathsf{b}}_{ijd} \right), \end{equation}

where $\boldsymbol{\mathsf{w}}$ and $\boldsymbol{\mathsf{b}}$ denote the weights and biases of the output layer, $\sigma$ is the element-wise nonlinearity, $K$ is the number of kernels and $F \times F$ is the filter/kernel dimension (LeCun et al. 1998; Goodfellow et al. 2016). While convolutional layers excel at extracting local features, they have a limited receptive field and lack global awareness (e.g. Long, Shelhamer & Darrell 2015). Conversely, dense layers connect every neuron (also known as a perceptron) in the previous layer to every neuron in the current layer. This enables them to capture spatially global patterns and to make predictions based on the combined information from all input features (e.g. Goodfellow et al. 2016). By incorporating dense layers towards the end of the CNN architecture, the network gains the ability to learn and encode global features. Mathematically, given an input vector ${\mathsf{h}}^{in} \in {\mathsf{R}}^n$ and a weight matrix $\boldsymbol{\mathsf{w}} \in {\mathsf{R}}^{m \times n}$, the output ${\mathsf{h}}^{out} \in {\mathsf{R}}^{m}$ of a dense layer can be computed as

\begin{equation} {\mathsf{h}}^{out} = \sigma \left( \boldsymbol{\mathsf{w}} \, {\mathsf{h}}^{in} + \boldsymbol{\mathsf{b}} \right), \end{equation}

where $\boldsymbol{\mathsf{b}} \in {\mathsf{R}}^{m}$ is the bias vector.
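The loop-based sketch below spells out the two layer operations described above. The first is a two-dimensional convolution that follows the index pattern of the convolution equation, with 0-based indices and no padding. The second is a dense layer computing $\sigma(\boldsymbol{\mathsf{w}}\,{\mathsf{h}}^{in} + \boldsymbol{\mathsf{b}})$. The sketch is meant only to make the summation indices explicit, and the function names are hypothetical. The bias is assumed to be one value per output channel, as in common libraries, rather than the position-dependent ${\mathsf{b}}_{ijd}$. In practice, library layers such as PyTorch's `torch.nn.Conv2d` and `torch.nn.Linear` would be used.

```python
def conv2d_layer(h_in, w, b, act):
    """Valid 2-D convolution with 0-based indices (cross-correlation, no kernel flip).

    h_in[i][j][k]: input of shape (H, W, K); w[p][q][k][d]: kernels of shape
    (F, F, K, D); b[d]: one bias per output channel (an assumption); act: the
    element-wise nonlinearity. Returns shape (H - F + 1, W - F + 1, D).
    """
    H, W, K = len(h_in), len(h_in[0]), len(h_in[0][0])
    F, D = len(w), len(w[0][0][0])
    out = []
    for i in range(H - F + 1):
        row = []
        for j in range(W - F + 1):
            cell = []
            for d in range(D):
                s = b[d]
                for p in range(F):
                    for q in range(F):
                        for k in range(K):
                            s += h_in[i + p][j + q][k] * w[p][q][k][d]
                cell.append(act(s))
            row.append(cell)
        out.append(row)
    return out


def dense_layer(h_in, w, b, act):
    """h_out = act(w @ h_in + b) for w of shape (m, n), h_in of length n, b of length m."""
    return [act(sum(w_r[c] * h_in[c] for c in range(len(h_in))) + b_r)
            for w_r, b_r in zip(w, b)]


def identity(v):
    """Linear activation, as used for the output layer."""
    return v


h = [[[1.0] for _ in range(3)] for _ in range(3)]  # 3x3 input, one channel of ones
w = [[[[1.0]] for _ in range(2)] for _ in range(2)]  # 2x2 kernel of ones, 1 in, 1 out
out = conv2d_layer(h, w, [0.0], identity)  # each output sums four ones
flat = [c[0] for r in out for c in r]  # flatten (2, 2, 1) to four values
y = dense_layer(flat, [[0.25] * 4], [1.0], identity)  # mean of the four values + 1
```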

The choice of an appropriate nonlinearity is crucial, as it significantly affects the training process and the performance of the network for a given task (e.g. Hao et al. 2020). Nonlinear activation functions offer diverse options for enabling networks to learn complex relationships. They include the sigmoid, the hyperbolic tangent (tanh) and the rectified linear unit (ReLU) and its variants (e.g. Nair & Hinton 2010; Maas et al. 2013; He et al. 2015). However, vanishing gradients in sigmoid and tanh, or the so-called ‘dying ReLU’ phenomenon for ReLU, can adversely affect model performance (e.g. Hochreiter 1998; Glorot & Bengio 2010; Xu et al. 2015). Recently, the Swish and Mish nonlinearities emerged as promising alternatives (see Ramachandran, Zoph & Le 2017; Misra 2019). They aim to provide smoother nonlinear behaviour, enhance gradient flow and mitigate dead neurons. Given these properties, we employed the Mish activation, $\textrm {Mish}(x) = x \tanh [\log (1 + e^x)]$ (Misra 2019), in the nonlinear layers of our network architecture.
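The Mish activation can be evaluated as below. The only change from the formula in the text is that $\log(1 + e^x)$ (the softplus function) is computed in the numerically stable form $\max(x, 0) + \log(1 + e^{-|x|})$. This avoids overflow of $e^x$ for large inputs; the rewrite is our choice for the sketch. PyTorch also provides the activation directly as `torch.nn.Mish`.

```python
import math


def softplus(x):
    """Numerically stable log(1 + e^x): avoids overflow of exp for large x."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def mish(x):
    """Mish(x) = x * tanh(softplus(x)) (Misra 2019)."""
    return x * math.tanh(softplus(x))
```

Unlike ReLU, Mish is smooth and slightly negative for negative inputs, with a minimum of about $-0.309$ near $x \approx -1.19$. This is part of why it is expected to keep gradients flowing through otherwise "dead" units.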

Here, to obtain the optimal parameters $\boldsymbol{\mathsf{w}}$ of the CNN model, we minimize the mean squared error (MSE), i.e. the squared ${L}_2$ norm of the error averaged over data points. The error is taken between the ground-truth skin-friction drag obtained from experimental measurements (denoted by $\tau _{\nu }$) and the output of the ML model, $\hat {\tau }_{\nu }= \mathcal {F} (C_p/U_{10}, \eta (x), \partial \eta /\partial x, \varphi ; \boldsymbol{\mathsf{w}})$. Additionally, we incorporate ridge regularization to mitigate overfitting. This technique penalizes large values of the trainable parameters, $w_i$, by augmenting the MSE loss function with the squared magnitudes of the weights. The aim is to prevent the model from assigning disproportionately large weights to specific features, a behaviour known to contribute to overfitting (e.g. Goodfellow et al. 2016). In other words, our objective is to find the $\boldsymbol{\mathsf{w}}$ that minimizes

\begin{equation} \frac{1}{N} \sum_{i=1}^{N} \left( \tau_{\nu,i} - \hat{\tau}_{\nu,i} \right)^2 + \lambda \|\boldsymbol{\mathsf{w}}\|_2^2 , \end{equation}

where $N$ is the number of data points over which the error is averaged, $\lambda$ is the regularization parameter and $\lambda \|\boldsymbol{\mathsf{w}}\|_2^2$ is the ridge penalty. This optimization process enables the CNN model to learn optimal parameters, enhance performance and better approximate the underlying mapping function.
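The regularized objective described above (mean squared error plus a ridge penalty) can be written as a short function. This is a minimal sketch, assuming the weights are supplied as one flat list and the MSE is averaged over all points passed in. `ridge_mse_loss` is a hypothetical name, and the default $\lambda = 10^{-4}$ is the value quoted in the text.

```python
def ridge_mse_loss(tau_true, tau_pred, weights, lam=1e-4):
    """MSE between ground truth and prediction plus lam * ||w||_2^2 (ridge penalty).

    `weights` is a flat list of all trainable parameters (an illustrative
    simplification of a network's parameter tensors).
    """
    if len(tau_true) != len(tau_pred):
        raise ValueError("prediction and target must have the same length")
    mse = sum((t - p) ** 2 for t, p in zip(tau_true, tau_pred)) / len(tau_true)
    penalty = sum(w * w for w in weights)  # squared L2 norm of the weights
    return mse + lam * penalty


loss = ridge_mse_loss([1.0, 2.0], [1.0, 4.0], weights=[1.0, 2.0])
```

In PyTorch, a penalty of this kind is often applied through the optimizer's `weight_decay` argument instead. That argument adds `weight_decay * w` to each gradient, so it corresponds to a penalty of one half of `weight_decay` times the squared norm. The text does not state which route was used.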

The CNN model is implemented using the PyTorch ML library (Paszke et al. 2019). For $\mathcal {F}$, based on the hyperparameter optimization analysis (see the Appendix), we adopt a CNN with five convolutional layers, one flattening layer, one dense layer and the Mish activation function for the nonlinearity, as shown in figure 4. For the output, we employ one dense layer with a linear activation function. For all convolutional layers, the filter size is set to $F = 3$ and the number of kernels to $K = 8$ (see (2.6)), and the dense layer has 2000 perceptrons. The resulting CNN-based ML model, displayed in figure 4, has 65 015 401 trainable parameters and no non-trainable parameters. The network is trained end-to-end using backpropagation with the Adam optimizer (Kingma & Ba 2014). Adam is a variant of stochastic gradient descent that iteratively updates the trainable parameters based on the gradients of the loss function with respect to those parameters. It incorporates adaptive learning rates and momentum, which dynamically adjust the step sizes during optimization and make the training process more efficient (Kingma & Ba 2014). All trainable parameters are initialized randomly with values sampled from a uniform distribution, following the approach of Glorot & Bengio (2010), and the regularization parameter $\lambda$ is set to $10^{-4}$. A reduce-learning-rate-on-plateau schedule is used: the learning rate is initialized to $5 \times 10^{-4}$ and multiplied by a factor of 0.9 whenever the validation loss stagnates during training. The effective mini-batch size is set to 64.
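As a consistency check on the architecture description, the trainable-parameter count can be tallied layer by layer. This sketch makes three assumptions:

- the five convolutional layers are two-dimensional with 'same' padding and act on the 4 × 985 input stack treated as a single-channel image;
- each layer carries one bias per output channel;
- the output dense layer returns one value per data point (985).

Under these assumptions the tally reproduces the 65 015 401 parameters stated above. This is consistent with, though not proof of, this layout.

```python
def conv2d_params(in_ch, out_ch, f):
    """Weights (f * f * in_ch * out_ch) plus one bias per output channel."""
    return f * f * in_ch * out_ch + out_ch


def dense_params(n_in, n_out):
    """Weight matrix (n_out x n_in) plus one bias per output neuron."""
    return n_in * n_out + n_out


n_rows, n_points = 4, 985  # stacked inputs x data points (figure 4 tuple)
F, K, n_conv, n_dense, n_out = 3, 8, 5, 2000, 985

total = conv2d_params(1, K, F)  # first layer: the 4 x 985 stack as one channel
total += (n_conv - 1) * conv2d_params(K, K, F)  # four further 8 -> 8 layers
flat = K * n_rows * n_points  # 'same' padding keeps the 4 x 985 extent
total += dense_params(flat, n_dense)  # hidden dense layer
total += dense_params(n_dense, n_out)  # linear output layer, one value per point
print(total)  # 65015401
```

For a PyTorch model, the same figure is typically obtained with `sum(p.numel() for p in model.parameters() if p.requires_grad)`. The tally also shows that, under these assumptions, the hidden dense layer (31 520 × 2000 weights) accounts for about 97 % of all parameters.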

Figure 4. Diagram illustrating the CNN architecture used to estimate the skin-friction drag, $\tau _{\nu }$, from $\eta (x)$, $\partial \eta /\partial {x}$, $\varphi$ and $C_p/U_{10}$. The network comprises five convolutional layers (labelled ‘Conv Layers’), a flattening layer, a dense layer and the Mish activation function as the nonlinear layer. The network takes as input a stack of $\eta (x)$, $\partial \eta /\partial {x}$, $\varphi$ and $C_p/U_{10}$ (shown on the left) and returns the output $\hat {\tau }_{\nu }$ (shown on the right). Each convolutional layer employs a filter size of $F=3$ and $K=8$ filters, and the dense layer contains 2000 perceptrons/neurons. The tuple (4, 8, 985) represents the number of inputs, number of kernels and number of data points, respectively.

3. Results

In this section, we evaluate the performance of the CNN model by comparing the predicted skin-friction drag against the corresponding reference high-resolution PIV measurements from the test dataset described in § 2.2.1. To this end, we first analyse the convergence history of the model and present a quantitative analysis of ML-specific error metrics. We then examine the model performance on instantaneous and averaged skin-friction fields and assess its ability to predict the viscous drag for datasets within and outside the training range.

3.1. Convergence history

In this section, we provide an overview of the ML model training process. To ensure optimal performance, careful consideration must be given to the training process, including the number of epochs (complete passes through the training dataset). Training for too few epochs may lead to underfitting, where the model fails to capture the underlying patterns in the data. Conversely, excessive training may lead to overfitting, a phenomenon in which the model memorizes the training data, including its noise, and loses its ability to generalize to unseen data. Balancing underfitting against overfitting is critical for good performance on new, unseen data. Such a balance can generally be achieved through methods such as cross-validation, early stopping and regularization. Incorporating these techniques, combined with careful selection of hyperparameters and an appropriate model architecture, can significantly improve the generalization performance of the regression model (e.g. Hastie, Tibshirani & Friedman 2009; Goodfellow et al. 2016).

Figure 5 shows the MSE convergence history of the ML model against the number of epochs for both the training and validation datasets. The vertical axis is logarithmic to better visualize the slight differences between the training and validation losses. The figure illustrates a progressive decrease in MSE for both datasets as training progresses, indicating that the model continues to learn and improve its accuracy. Here, to address overfitting and enhance generalization, we employed the early stopping technique proposed by Amari et al. (1997) and used in existing ML-based studies (e.g. Fukami, Fukagata & Taira 2019; Srinivasan et al. 2019; Fukami et al. 2021). Early stopping continuously monitors the model's performance on a separate validation dataset. It terminates the training process if the validation loss fails to improve over a predefined number of consecutive epochs (five in our case). In the current work, the training set was used to update the model parameters. The validation set, which is not used for these parameter updates, was used to monitor the model performance and thereby prevent overfitting to the training data. After approximately 150 epochs, the validation loss stopped improving and the early stopping mechanism terminated the training. This approach allowed us to identify an appropriate stopping point and obtain a model that balances fitting the training data against generalizing to unseen data. At the end of training, the MSE values for the training and validation datasets were 0.0146 and 0.0148, respectively. The close agreement between the training and validation MSE values indicates that the model learns the underlying mapping accurately while avoiding both overfitting and underfitting.
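The early-stopping rule and the reduce-on-plateau schedule can be sketched as two small bookkeeping classes. The rule stops training after five consecutive epochs without improvement in validation loss. The schedule starts the rate at $5 \times 10^{-4}$ and multiplies it by 0.9 on a plateau. The following choices are hypothetical, since the text does not specify them:

- the class names;
- the plateau patience of two epochs;
- the strict "any decrease counts as improvement" criterion.

The validation losses in the usage lines are invented for illustration. In PyTorch the schedule would normally be `torch.optim.lr_scheduler.ReduceLROnPlateau`, whose default improvement threshold differs slightly from this sketch.

```python
class EarlyStopping:
    """Stop once the validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=5, min_delta=0.0):
        self.patience, self.min_delta = patience, min_delta
        self.best, self.best_epoch, self.bad_epochs = float("inf"), -1, 0

    def step(self, epoch, val_loss):
        """Record one epoch; return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best, self.best_epoch, self.bad_epochs = val_loss, epoch, 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


class PlateauLR:
    """Multiply the learning rate by `factor` once stagnation exceeds `patience` epochs."""

    def __init__(self, lr=5e-4, factor=0.9, patience=2):  # patience is hypothetical
        self.lr, self.factor, self.patience = lr, factor, patience
        self.best, self.bad_epochs = float("inf"), 0

    def step(self, val_loss):
        """Update with one validation loss and return the current learning rate."""
        if val_loss < self.best:
            self.best, self.bad_epochs = val_loss, 0
        else:
            self.bad_epochs += 1
            if self.bad_epochs > self.patience:
                self.lr *= self.factor
                self.bad_epochs = 0
        return self.lr


val_losses = [1.0, 0.9, 0.8, 0.85, 0.86, 0.87, 0.88, 0.89, 0.7]  # hypothetical
stopper, scheduler = EarlyStopping(patience=5), PlateauLR()
for epoch, loss in enumerate(val_losses):
    lr = scheduler.step(loss)
    if stopper.step(epoch, loss):
        break
```

A practical training loop would also checkpoint the model weights at `best_epoch`, so that the best-performing parameters rather than the last ones are retained.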

Figure 5. Convergence of the MSE loss of the CNN-based ML model during the training phase. Training and validation losses represent the MSE on the training and validation datasets, respectively. Epochs indicate the number of complete passes through the training dataset. The vertical axis is logarithmic to better show the slight differences between the loss curves.

3.2. Model performance on the test dataset

3.2.1. Error analysis

To quantify the performance of the CNN-based ML method, we calculate the relative mean absolute error (RMAE) and the relative mean square error (RMSE), defined based on the ${L^1}$-norm and ${L^2}$-norm, respectively. The relative mean error can be defined by

\begin{equation} \delta_{e,p} = \frac{\|{\hat{\tau}_{\nu} - \tau_{\nu}}\|_{p}}{\|{\tau_{\nu}}\|_{p}} , \end{equation}

where $\hat {\tau }_{\nu }$ is the ML-predicted viscous stress, $\tau _{\nu }$ is the corresponding ground-truth experimental measurement and $\|{\cdots }\|_{p}$ is the ${L_p}$-norm, defined for a vector $\boldsymbol {v}$ of size $n$ by

\begin{equation} \|{\boldsymbol{v}}\|_{p} = {\left( \sum_{i=1}^{n} {\left| v_i \right|}^p \right)}^{{1}/{p}} . \end{equation}

Here, $|\cdots |$ denotes the absolute value, $p=1$ gives the RMAE and $p=2$ gives the RMSE. Both metrics thus measure the mean relative error between the ML prediction and the experimental skin-friction drag for each instantaneous wave. Because different metrics emphasize different aspects of the error, for completeness we also calculated the coefficient of determination, $R^2$,

\begin{equation} R^2 = 1 - \frac{\sum\left(\tau_{\nu} - \hat{\tau}_{\nu} \right)^2}{\sum\left(\tau_\nu - \bar{\tau}_\nu \right)^2} , \end{equation}

where $\bar {\tau }_\nu$ is the ensemble average of the ground-truth skin-friction drag. Note that the performance evaluation is based on the test dataset of the corresponding wind-wave case, i.e. the ensemble averaging in (3.3) is conducted over the snapshots in the test dataset only.
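The error metrics can be computed as follows. This sketch assumes that $\delta_{e,p}$ is the ratio $\|\hat{\tau}_{\nu} - \tau_{\nu}\|_p / \|\tau_{\nu}\|_p$ evaluated for one instantaneous profile, with $p = 1$ for the RMAE and $p = 2$ for the RMSE. It also assumes that $R^2$ is evaluated on the concatenated test-set values, so that the mean is the ensemble average over test snapshots. The function names and the four-point example are hypothetical.

```python
def lp_norm(v, p):
    """||v||_p = (sum |v_i|^p)^(1/p)."""
    return sum(abs(x) ** p for x in v) ** (1.0 / p)


def relative_error(tau_pred, tau_true, p):
    """delta_{e,p} = ||tau_pred - tau_true||_p / ||tau_true||_p (p=1: RMAE, p=2: RMSE)."""
    diff = [a - b for a, b in zip(tau_pred, tau_true)]
    return lp_norm(diff, p) / lp_norm(tau_true, p)


def r_squared(tau_pred, tau_true):
    """Coefficient of determination, 1 - SS_res / SS_tot, about the mean of tau_true."""
    mean = sum(tau_true) / len(tau_true)
    ss_res = sum((t - q) ** 2 for t, q in zip(tau_true, tau_pred))
    ss_tot = sum((t - mean) ** 2 for t in tau_true)
    return 1.0 - ss_res / ss_tot


tau_true = [1.0, 2.0, 3.0, 4.0]  # hypothetical ground truth
tau_pred = [1.1, 1.9, 3.2, 3.8]  # hypothetical prediction
scores = (relative_error(tau_pred, tau_true, 1),
          relative_error(tau_pred, tau_true, 2),
          r_squared(tau_pred, tau_true))
```

The relative errors are normalized by the magnitude of the ground truth, whereas $R^2$ is normalized by its variance about the mean. This is why the text reports both kinds of metric.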

The values of the $\delta_{e,1}$, $\delta_{e,2}$ and $R^2$ metrics are reported in table 2 for all wind-wave cases. These ensemble-averaged values summarize how well the ML model predictions agree with the reference experimental data. For the cases on which the model was trained, the relative errors are slightly lower, except for the highest wind speed case; the RMAE values for the W1-05, W1-09 and W1-16 cases, corresponding to wind waves with $U_{10} = 5.08$, 9.57 and $16.59\ \textrm{m}\ \textrm{s}^{-1}$, are 24.0 %, 21.7 % and 34.4 %, respectively. The increase in the error metrics for the highest wind speed case compared with the moderate cases ($U_{10} = 5.08$ and $9.57\ \textrm{m}\ \textrm{s}^{-1}$) is, in part, due to capturing only a portion of the wave profiles and the corresponding surface stresses at high wind speeds. Because the waves are longer in the two high wind speed cases of 14.82 and $16.59\ \textrm{m}\ \textrm{s}^{-1}$, the entire wavelength of some waves cannot be captured in the PIV field of view. We observed that the ML model performs best for the cases in which at least one wavelength has been captured. Another contributor to this increased error might be the decrease in the signal-to-noise ratio of the PIV measurements with increasing wind speed. The unseen cases of W1-02 and W1-14 are discussed in § 3.4.

Table 2. Summary of the ML model performance in predicting skin-friction drag compared with the experimental data. The RMAE, RMSE and coefficient of determination ($R^2$) metrics are presented for all wind-wave conditions. The W1-02 and W1-14 cases are separate datasets to which the model has been applied to examine its interpolation and extrapolation performance on unseen data.

The relative errors reported in table 2 provide an average measure over the entire dataset. To further evaluate the error distribution across individual waves and gain insight into the model accuracy on a wave-by-wave basis, we estimate the error metrics for each wave. The probability density functions (p.d.f.s) of the RMAE and of $R^2$ are shown in figures 6(a) and 6(b), respectively, for the W1-05, W1-09 and W1-16 cases. The p.d.f.s indicate that the ML model performs similarly for the W1-05 and W1-09 cases with moderate wind speeds of 5.08 and $9.57\ \textrm{m}\ \textrm{s}^{-1}$. In these cases, the most probable relative error is roughly 20 % and the most probable coefficient of determination is approximately 0.96, with a maximum RMAE of $\max{(\delta_{e,1})} \approx 55\,\%$ and a minimum $R^2$ of $\min{(R^2)} \approx 0.7$. The p.d.f.s of these cases also exhibit overlapping tails with small $p(\delta_{e,1})$ values, suggesting that the probability of extreme errors ($\mathrm{RMAE} \geqslant 40\,\%$ and $R^2 \leqslant 0.75$) is negligible. For the strongly forced wind waves (i.e. the W1-16 case), however, the performance of the ML model degrades slightly, yielding a most probable relative error of $\delta_{e,1} \approx 30\,\%$ and a most probable coefficient of determination of $R^2 \approx 0.9$. In this case, although the maximum relative error increases to 90 %, the minimum $R^2$ remains acceptable at around 0.55.
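The wave-by-wave analysis can be sketched in Python under a few stated assumptions: each wave is represented by a 1-D array of stress samples, the p.d.f. is estimated with a normalized histogram (`numpy.histogram` with `density=True`), and the most probable value is taken as the centre of the fullest bin. The helper names and the synthetic waves are hypothetical illustrations, not the experimental data or the authors' processing code. Only $R^2$ is computed per wave, because the RMAE definition appears earlier and is not reproduced here.

```python
import numpy as np


def per_wave_r2(true_waves, pred_waves):
    """Coefficient of determination evaluated separately for each wave."""
    scores = []
    for t, p in zip(true_waves, pred_waves):
        t = np.asarray(t, dtype=float)
        p = np.asarray(p, dtype=float)
        scores.append(1.0 - np.sum((t - p) ** 2) / np.sum((t - t.mean()) ** 2))
    return np.array(scores)


def metric_pdf(values, bins=20, value_range=None):
    """Histogram estimate of the p.d.f. of a per-wave metric.

    Returns bin centres, the density (integrates to one over the bins;
    values outside value_range are ignored) and the centre of the fullest
    bin, used here as the most probable value.
    """
    density, edges = np.histogram(np.asarray(values, dtype=float),
                                  bins=bins, range=value_range, density=True)
    centres = 0.5 * (edges[:-1] + edges[1:])
    return centres, density, centres[np.argmax(density)]


# Hypothetical test set: 200 waves, 64 stress samples per wave, with
# Gaussian noise standing in for model error.
rng = np.random.default_rng(0)
phase = np.linspace(0.0, 2.0 * np.pi, 64, endpoint=False)
true_waves = [1.0 + 0.5 * np.cos(phase + rng.uniform(0.0, 0.2)) for _ in range(200)]
pred_waves = [t + rng.normal(0.0, 0.1, t.size) for t in true_waves]

r2_waves = per_wave_r2(true_waves, pred_waves)
centres, density, most_probable_r2 = metric_pdf(r2_waves, bins=20, value_range=(0.0, 1.0))
worst_r2 = r2_waves.min()  # analogue of min(R^2) read from the p.d.f. tail
```

The same `metric_pdf` helper could be applied to per-wave relative errors once they are computed with the RMAE definition used in this study.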

Figure 6. The p.d.f.s of (a) the RMAE, $\delta_{e,1}$, and (b) the coefficient of determination, $R^2$, for the W1-05, W1-09 and W1-16 cases with wind speeds of $U_{10} = 5.08$, 9.57 and $16.59\ \textrm{m}\ \textrm{s}^{-1}$, respectively. The RMAE and $R^2$ metrics were calculated for each instantaneous wave in the test dataset, and their probability distributions are shown here.

The decrease in the model accuracy with increasing wind speed can be attributed to more frequent airflow separation events over wind waves and to the reduced signal-to-noise ratio of the experimental measurements. We discuss this in more detail in § 4. It should also be noted that, although the relative error may be higher in high wind speed cases, the $R^2$ metric still demonstrates an acceptable correlation between the ML-predicted and experimental values of the skin-friction drag for all wind-wave conditions. The RMAE is an average measure relative to the ground-truth experimental values, so a large RMAE indicates that the ML predictions deviate, on average, by a large fraction of the typical magnitude of the experimental values. In contrast, $R^2$ represents how well the ML predictions of surface stress capture the variability of the corresponding experimental measurements about their mean. Because the $R^2$ values are acceptable, our ML model can explain a large fraction of the variance of the measurements even in cases with a high RMAE. Although RMAE and $R^2$ are widely accepted metrics for evaluating ML model performance, their lack of physical significance may lead to misplaced confidence in the model's performance from a physical standpoint (e.g. Wang et al. Reference Wang, Kashinath, Mustafa, Albert and Yu2020; Hora & Giometto Reference Hora and Giometto2023).
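The different normalizations of the two metrics can be illustrated with a toy example. The sketch applies identical residuals of $\pm 0.3$ to two hypothetical stress signals with the same mean but different variability. The relative error uses an assumed normalization, $\sum|\tau_\nu-\hat{\tau}_\nu|/\sum|\tau_\nu|$, which may differ from the definition of $\delta_{e,1}$ used in this study; the signals are invented for illustration only.

```python
import numpy as np


def relative_mean_absolute_error(tau_true, tau_pred):
    # ASSUMED normalization: sum|tau - tau_hat| / sum|tau|.
    # The study's own definition of delta_{e,1} may differ.
    tau_true = np.asarray(tau_true, dtype=float)
    tau_pred = np.asarray(tau_pred, dtype=float)
    return np.sum(np.abs(tau_true - tau_pred)) / np.sum(np.abs(tau_true))


def r_squared(tau_true, tau_pred):
    # Residuals normalized by the variance of tau_true about its mean.
    tau_true = np.asarray(tau_true, dtype=float)
    tau_pred = np.asarray(tau_pred, dtype=float)
    ss_res = np.sum((tau_true - tau_pred) ** 2)
    ss_tot = np.sum((tau_true - tau_true.mean()) ** 2)
    return 1.0 - ss_res / ss_tot


# Same residuals applied to two hypothetical signals with mean 1.0:
# one strongly varying, one nearly constant.
residual = np.array([0.3, -0.3, 0.3, -0.3])
signals = {
    "strong": np.array([0.2, 1.8, 0.2, 1.8]),
    "weak": np.array([0.9, 1.1, 0.9, 1.1]),
}

results = {}
for name, tau in signals.items():
    pred = tau + residual
    results[name] = (relative_mean_absolute_error(tau, pred), r_squared(tau, pred))
```

Both signals give a relative error of 30 %, but $R^2 \approx 0.86$ for the strongly varying signal and $R^2 = -8$ for the nearly constant one, because $R^2$ compares the residuals with the variance about the mean rather than with the magnitude of the stress.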

3.2.2. Wave-phase-dependent errors

Here, we turn our attention to the accuracy of the ML model in predicting the wave-phase-dependent distribution of skin-friction drag over surface waves. The stress distributions over waves depend strongly on wave phase, and accurate predictions of these distributions are important for numerical simulations such as wall-modelled LESs and for developing sea-state-dependent modelling frameworks. To analyse the behaviour of organized wave motions in a turbulent boundary layer flow, an instantaneous flow field variable may be separated into a phase-averaged (or wave-phase-dependent) component, $\langle\, f(\boldsymbol{x}, t) \rangle$, and a turbulent fluctuation component, $f^\prime(\boldsymbol{x}, t)$, such that (Hussain & Reynolds Reference Hussain and Reynolds1970)

\begin{equation} f(\boldsymbol{x}, t) = \langle\, f(\boldsymbol{x}, t) \rangle + f^\prime(\boldsymbol{x}, t) , \end{equation}

where $\boldsymbol{x}$ is the position vector at time $t$. The phase-averaged quantity can be further decomposed into the sum of an ensemble mean component, $\bar{f}(\boldsymbol{x})$, and a wave-coherent component, $\tilde{f}(\boldsymbol{x}, t)$, i.e. $\langle\, f(\boldsymbol{x}, t) \rangle = \bar{f}(\boldsymbol{x}) + \tilde{f}(\boldsymbol{x}, t)$, where the phase average is defined as

\begin{equation} \langle\, f(\boldsymbol{x}, t) \rangle = \mathop{\mathrm{lim}}\limits_{N\to \infty } \frac{1}{N}\sum^N_{n=1}{f\left(\boldsymbol{x} + n \lambda, t \right)} , \end{equation}

where $N$ is the number of instantaneous realizations and $\lambda$ is the local wavelength. In other words, a phase-averaged quantity is the average of that quantity at a particular wave phase, whereas the ensemble average (or, equivalently, the mean) is the average of the quantity over all phases. The properties of the ensemble- and phase-averaging operators are discussed by Hussain & Reynolds (Reference Hussain and Reynolds1970, Reference Hussain and Reynolds1972) and Reynolds & Hussain (Reference Reynolds and Hussain1972).
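The decomposition and its estimation by phase binning can be sketched in Python/NumPy. This is a minimal illustration under stated assumptions, not the processing used for the experiments: the wave phase is taken as the angle of the analytic signal of a 1-D surface elevation record (built with the same FFT construction that `scipy.signal.hilbert` uses), phase averages are formed over 108 equal phase bins spanning $2\pi$ (each $2\pi/108 \approx 5.82 \times 10^{-2}$ rad wide, the bin count used in this study), and the ensemble mean is the average over all samples, which coincides with the average over phases when the phases are sampled uniformly. Function names and the synthetic signals are hypothetical.

```python
import numpy as np


def analytic_signal(x):
    """Analytic signal of a real 1-D array via the FFT (one-sided spectrum)."""
    x = np.asarray(x, dtype=float)
    n = x.size
    h = np.zeros(n)
    h[0] = 1.0
    if n % 2 == 0:
        h[n // 2] = 1.0
        h[1:n // 2] = 2.0
    else:
        h[1:(n + 1) // 2] = 2.0
    return np.fft.ifft(np.fft.fft(x) * h)


def wave_phase(eta):
    """Local wave phase in (-pi, pi] from a surface elevation record eta."""
    eta = np.asarray(eta, dtype=float)
    return np.angle(analytic_signal(eta - eta.mean()))


def triple_decomposition(f, phase, n_bins=108):
    """Return (f_bar, f_tilde, f_prime, bin_averages) for samples f at given phases."""
    f = np.asarray(f, dtype=float)
    edges = np.linspace(-np.pi, np.pi, n_bins + 1)
    # Bin index of each sample; phase = pi is folded into the last bin.
    idx = np.clip(np.digitize(phase, edges) - 1, 0, n_bins - 1)
    counts = np.bincount(idx, minlength=n_bins)
    sums = np.bincount(idx, weights=f, minlength=n_bins)
    bin_averages = np.full(n_bins, np.nan)  # empty bins stay NaN
    filled = counts > 0
    bin_averages[filled] = sums[filled] / counts[filled]
    phase_avg = bin_averages[idx]           # <f> evaluated at each sample
    f_bar = f.mean()                        # ensemble mean
    f_tilde = phase_avg - f_bar             # wave-coherent component
    f_prime = f - phase_avg                 # turbulent fluctuation
    return f_bar, f_tilde, f_prime, bin_averages


# Synthetic example: 20 wavelengths of a monochromatic wave and a noisy,
# phase-shifted stress signal (hypothetical values).
x = np.linspace(0.0, 20 * 2 * np.pi, 4000, endpoint=False)
eta = 0.01 * np.cos(x)
rng = np.random.default_rng(1)
tau = 0.1 + 0.05 * np.cos(x + 0.5) + rng.normal(0.0, 0.01, x.size)

phase = wave_phase(eta)
f_bar, f_tilde, f_prime, bin_averages = triple_decomposition(tau, phase)
```

By construction, `f_bar + f_tilde + f_prime` reproduces the input sample by sample, mirroring $f = \bar{f} + \tilde{f} + f^\prime$.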

The phase-averaged relative errors and $R^2$ distributions across wave phases are presented for the W1-05, W1-09 and W1-16 experimental conditions in figures 7(a) and 7(b), respectively. Note that, in the current work, the local instantaneous wave phases were estimated by applying a Hilbert transform technique (for details, see Melville Reference Melville1983; Hristov, Friehe & Miller Reference Hristov, Friehe and Miller1998) to the wave profiles obtained from laboratory measurements (see Yousefi et al. Reference Yousefi, Veron and Buckley2020). To estimate the phase-averaged quantities, the wave phases were segregated into 108 independent bins, each covering a phase interval of $5.82 \times 10^{-2}$ rad. From figure 7, it is evident that the relative errors for the moderate wind speed cases of W1-05 and W1-09 exhibit similar patterns in that both RMAE and