1. Introduction

In this study, we propose an optimizer for active flow control focusing on multi-actuator bluff-body drag reduction. This optimizer combines the convergence rate of a gradient search method with an explorative method for identifying the global minimum. Actuators and sensors are becoming cheaper, more powerful and more reliable. This trend makes active flow control increasingly interesting for industry. In addition, distributed actuation can yield performance benefits over single-actuator solutions. Here, we focus on the simple case of open-loop control with steady or periodic operation of multiple actuators.

Even for this simple case, the optimization of actuation constitutes an algorithmic challenge. Often the budget for optimization is limited to $O(100)$ high-fidelity simulations, like direct numerical simulations (DNS) or large-eddy simulations (LES), or $O(100)$ water tunnel experiments, or $O(1000)$ Reynolds-averaged Navier–Stokes (RANS) simulations, or a similar number of wind-tunnel experiments. Moreover, the optimization may need to be performed for multiple operating conditions.

Evidently, efficient optimizers are of large practical importance. Gradient-based optimizers, like the downhill simplex method (DSM), have the advantage of rapid convergence towards a cost minimum, but this minimum may easily be a suboptimal local one, particularly for high-dimensional search spaces. Random restart variants have a larger probability of finding the global minimum but come with a dramatic increase in the number of evaluations. In contrast to gradient-based approaches, Latin hypercube sampling (LHS) performs an ideal exploration by guaranteeing a close geometric coverage of the search space, obviously at the price of a poor convergence rate and extensive evaluations of unpromising territories. Monte Carlo sampling (MCS) has similar advantages and disadvantages. Genetic algorithms (GA) elegantly combine exploration by mutation with exploitation by crossover operations. These routinely used optimizers are the focus of our study; they are superbly described in Press et al. (2007). A myriad of other optimizers have been invented for different niche applications. Deterministic gradient-based optimizers may be augmented by estimators for the gradient. These estimators become particularly challenging for sparse data. This challenge is addressed by stochastic gradient methods, which aim at navigating through a high-dimensional search space with insufficient derivative information. Many biologically inspired optimization methods, like ant colony and particle swarm optimization, also aim at balancing exploitation and exploration, like GA. A new avenue is opened by learning the response model from actuation to cost function during the optimization process and using this model for identifying promising actuation parameters. Another new path is ridgeline inter- or extrapolation (Fernex et al. 2020), exploiting the topology of the control landscape. In this study, these extensions are not included in the comparative analysis, as their additional complexity, with many additional tuning parameters, would hardly allow an objective comparison.

Our first flow control benchmark is the fluidic pinball (Ishar et al. 2019; Deng et al. 2020). This two-dimensional flow around three equal, parallel, equidistantly placed cylinders can be controlled by the three rotation velocities of the cylinders. The dynamics is rich in nonlinear behaviour, yet geometrically simple and physically interpretable. With suitable rotation of the cylinders, many known wake-stabilizing and drag-reducing mechanisms can be realized: (i) Coanda actuation (Geropp 1995; Geropp & Odenthal 2000), (ii) circulation control (Magnus effect), (iii) base bleed (Wood 1964), (iv) high-frequency forcing (Thiria, Goujon-Durand & Wesfreid 2006), (v) low-frequency forcing (Glezer, Amitay & Honohan 2005) and (vi) phasor control (Protas 2004). In this study, constant rotations are optimized for net drag power reduction, accounting for the actuation energy. This search space includes the first three mechanisms. The fluidic pinball study foreshadows key results for the Ahmed body, including the drag-reducing actuation mechanism and the visualization tools for high-dimensional search spaces.

The main application focus of this study is active drag reduction behind a generic car model using RANS simulations. Aerodynamic drag is a major contributor to traffic-related costs, from airborne to ground and marine traffic. Even a small drag reduction would have a dramatic economic effect, considering that transportation accounts for approximately $20\,\%$ of global energy consumption (Gad-el-Hak 2007; Kim 2011; Choi, Lee & Park 2014). While the drag of airplanes and ships is largely caused by skin friction, the resistance of cars and trucks is mainly caused by pressure or bluff-body drag (Seifert et al. 2009). Hucho (2011) defines bodies with a pressure drag exceeding the skin-friction contribution as bluff and as streamlined otherwise.

The pressure drag of cars and trucks originates from the excess pressure at the front, scaling with the dynamic pressure, and from a low-pressure region at the rear. Reducing the pressure contribution from the front often requires significant changes of the aerodynamic design, and few active control solutions for front drag reduction have been suggested (Minelli et al. 2020). In contrast, the contribution at the rearward side can be significantly changed with passive or active means. Drag reductions of 10 %–20 % are common; Pfeiffer & King (2014) have even achieved 25 % drag reduction with active blowing in the wind tunnel. For a car at a speed of $120\, \textrm {km}\,\textrm {h}^{-1}$, this would reduce consumption by approximately 1.8 l per 100 km. The economic impact of drag reduction is significant for trucking fleets with a profit margin of only 2 %–3 %, as two thirds of the operating costs are from fuel consumption (Brunton & Noack 2015). Hence, a 5 % reduction of fuel costs from aerodynamic drag corresponds to an increase of the profit margin by over 100 %.
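The profit-margin estimate can be checked with a few lines of arithmetic. The sketch below uses a deliberately simplified model, which is an assumption and not taken from the cited source: revenue is normalized to one, operating costs are everything that is not profit, two thirds of the operating costs are fuel, and only the fuel bill changes. The helper name `profit_increase` is hypothetical.

```python
def profit_increase(margin, fuel_share=2 / 3, fuel_saving=0.05):
    """Relative increase of profit when fuel costs drop by `fuel_saving`.

    Simplified model (assumption): revenue = 1, operating costs = 1 - margin,
    fuel = fuel_share * operating costs, revenue and other costs unchanged.
    """
    operating_costs = 1.0 - margin
    fuel_costs = fuel_share * operating_costs
    savings = fuel_saving * fuel_costs  # saved money adds directly to the profit
    return savings / margin             # relative change of the profit


for margin in (0.02, 0.03):
    print(f"margin {margin:.0%}: profit increase {profit_increase(margin):.0%}")
```

This prints an increase of 163 % for a 2 % margin and 108 % for a 3 % margin, i.e. above 100 % across the quoted range.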

Car and truck design is largely determined by practical and aesthetic considerations. In this study, we focus on drag reduction by active means at the rearward side. Intriguingly, most drag reductions of a bluff body fall into two categories: Kirchhoff solution and aerodynamic boat tailing. The first strategy may be idealized by the Kirchhoff solution (Pastoor et al. 2008), i.e. potential flow around the car with infinitely thin shear layers emanating from the rearward separation lines and separating the oncoming flow from the dead-fluid region. The low-pressure region due to curved shear layers is replaced by an elongated, ideally infinitely long wake with small, ideally vanishing curvature of the shear layer. Thus, the pressure of the dead-water region is elevated to the outer pressure, i.e. the wake does not contribute to the drag. This wake elongation is achieved by reducing entrainment through the shear layer, e.g. by phasor control mitigating vortex shedding (Pastoor et al. 2008) or by energization of the shear layer with high-frequency actuation (Barros et al. 2016). Wake disrupters also decrease drag by energizing the shear layer (Park et al. 2006) or delaying separation (Aider, Beaudoin & Wesfreid 2010). Arguably, the drag of the Kirchhoff solution can be considered an achievable limit with small actuation energy.

The second strategy targets drag reduction by aerodynamic boat tailing. Geropp (1995) and Geropp & Odenthal (2000) have pioneered this approach with Coanda blowing. Here, the shear layer originating at the bluff body is vectored inward and thus gives rise to a more streamlined wake shape. Barros et al. (2016) have achieved 20 % drag reduction of a square-back Ahmed body with high-frequency Coanda blowing in a high-Reynolds-number experiment. A similar drag reduction was achieved with steady blowing, but at higher flow coefficients.

This study focuses on drag reduction of the low-drag Ahmed body with a rear slant angle of $35^{\circ }$. This Ahmed body represents a simplified shape of many cars. Bideaux et al. (2011) and Gilliéron & Kourta (2013) have achieved 20 % drag reduction for this configuration in an experiment. High-frequency blowing was applied orthogonal to the upper corner of the slanted rear surface. Intriguingly, the maximum drag reduction was achieved in a narrow range of frequencies and actuation velocities, and its effect rapidly deteriorated for slightly changed parameters. In addition, as the authors noted, the actuation is neither Coanda blowing nor an ideal candidate for shear-layer energization.

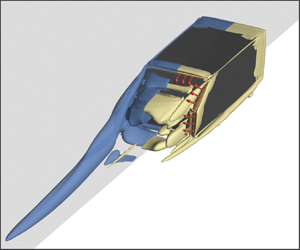

The literature on active drag reduction of the Ahmed body indicates that small changes of actuation can significantly change its effectiveness. Actuators have been applied with beneficial effects at all rearward edges (Barros et al. 2016), further complicating the optimization task. A systematic optimization of the actuation at all edges, including amplitudes and angles of blowing, is beyond the reach of current experiments. In this study, a systematic RANS optimization is performed in a rich parametric space comprising the angles and amplitudes of steady blowing of five actuator groups: one each on the top, middle and bottom edges and two symmetric actuators at the corners of the slanted and vertical surfaces. High-frequency forcing is not considered, as RANS simulations tend to be overly dissipative with respect to the actuation response.

The manuscript is organized as follows. The employed optimization algorithms are introduced in § 2 and compared in § 3. In § 4, the net drag power is optimized for the fluidic pinball, a two-dimensional flow controlled in a three-dimensional actuation space, using DNS. A simulation-based optimization of actuation for the three-dimensional low-drag Ahmed body is presented in § 5. Here, up to 10 actuation commands controlling the velocity and direction of five rearward slot actuator groups are optimized. Our results are summarized in § 6, and future directions are indicated in § 7.

2. Optimization algorithms

In this section, the employed optimization algorithms for the actuation parameters are described. Let $J ( \boldsymbol {b})$ be the cost function, here the drag coefficient, depending on $N$ actuation parameters $\boldsymbol {b}=[b_1, \ldots, b_N]^\textrm {T}$ in the domain $\varOmega$, where the superscript ‘${\rm T}$’ denotes the transpose. Permissible values of each parameter define an interval, $b_i \in [ b_{i, min}, b_{i, max} ]$, $i=1,\ldots,N$. In other words, optimization is performed in the rectangular search space

\begin{equation} \varOmega = [ b_{1, min}, b_{1, max} ] \times [ b_{2, min}, b_{2, max} ] \times \cdots \times [ b_{N, min}, b_{N, max} ]. \end{equation}

The optimization goal is to find the global minimum of $J$ in $\varOmega$,

\begin{equation} \min_{\boldsymbol{b} \in \varOmega} J(\boldsymbol{b}). \end{equation}

Several common optimization methods are investigated. The benchmark is DSM (see, e.g. Press et al. 2007), a robust data-driven representative of gradient search methods (§ 2.4). This algorithm exploits gradient information from neighbouring points to descend to a local minimum. Note that DSM is referred to as a gradient-free method in numerical textbooks because it requires neither the gradient vector nor an estimate thereof as input.

Depending on the initial condition, this search may yield any local minimum. In random restart simplex (RRS), the chance of finding a global minimum is increased by multiple runs with random initial conditions. The geometric coverage of the search space is the focus of LHS (see, again, Press et al. 2007), which optimally explores the whole domain $\varOmega$ independently of the cost values, i.e. ignores any gradient information. Evidently, LHS has a larger chance of getting close to the global minimum, while DSM is more efficient in descending to a minimum, potentially a suboptimal one. MCS (see, again, Press et al. 2007) is a simpler and more common exploration strategy, which takes random values for each argument, again ignoring any cost information. GA starts with an MCS in the first generation but then employs genetic operations to combine explorative and exploitive features in the following generations (see, e.g. Wahde 2008).

Sections 2.1–2.3 outline the non-gradient-based explorative methods, from the most explorative LHS to MCS and the partially exploitive GA. Sections 2.4 and 2.5 recapitulate DSM and its random restart variant. These are commonly used methods for data-driven optimization when the analytical form of the cost function is unknown.

In § 2.6, we combine the advantages of DSM in exploiting a local minimum and of LHS in exploring the global one in a new method, the explorative gradient method (EGM), which alternates between both (see figure 1). § 2.7 discusses auxiliary accelerators which are specific to the performed computational fluid dynamics optimization.

Figure 1. Sketch of the explorative gradient method. For details, see text.

2.1. LHS – deterministic exploration

While our DSM benchmark exploits neighbourhood information to slide down to a local minimum, LHS (McKay, Beckman & Conover 1979) aims to explore the parameter space irrespective of the cost values. We employ a space-filling variant which effectively covers the whole permissible domain of parameters. This explorative strategy (‘maximin’ criterion in Mathematica) maximizes the minimal distance between the points,

\begin{equation} \max_{\boldsymbol{b}_1, \ldots, \boldsymbol{b}_M \in \varOmega} \ \min_{m \neq n} \left\| \boldsymbol{b}_m - \boldsymbol{b}_n \right\|. \end{equation}

In other words, there is no other sampling of $M$ parameters with a larger minimum distance; $M$ can be any positive integer.

For better comparison with DSM, we employ an iterative variant. Note that, in the standard formulation, once $M$ sample points are created, they cannot be augmented anymore, for instance when learning by LHS was not satisfactory. We therefore create, at the beginning, a large number of LHS candidates $\boldsymbol {b}^\star _j$, $j=1,\ldots, M^\star$, for a dense coverage of the parameter space $\varOmega$, typically $M^\star = 10^6$. As first sample $\boldsymbol {b}_1$, the centre of the initial simplex is taken. The second parameter is taken from the candidates $\boldsymbol {b}^\star _j$, $j=1,\ldots,M^\star$, maximizing the distance to $\boldsymbol {b}_1$,

\begin{equation} \boldsymbol{b}_2 = \mathop{\arg\max}_{\boldsymbol{b}^\star_j,\ j=1,\ldots,M^\star} \left\| \boldsymbol{b}^\star_j - \boldsymbol{b}_1 \right\|. \end{equation}

The third parameter $\boldsymbol {b}_3$ is taken from the same set so that the minimal distance to $\boldsymbol {b}_1$ and $\boldsymbol {b}_2$ is maximized, and so on. This procedure allows us to recursively refine sample points and to start with an initial set of parameters.
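The iterative maximin selection can be sketched as follows. This is a minimal illustration, assuming Euclidean distances and a candidate set drawn from one plain Latin hypercube design; the helper names `latin_hypercube` and `greedy_maximin` are hypothetical, and the number of candidates is reduced from $M^\star = 10^6$ to keep the example fast.

```python
from itertools import islice

import numpy as np


def latin_hypercube(n_samples, lower, upper, rng):
    """Plain Latin hypercube design: one point per stratum in every dimension."""
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    # shuffle the stratum indices independently in each dimension
    strata = np.array([rng.permutation(n_samples) for _ in range(lower.size)]).T
    unit = (strata + rng.random(strata.shape)) / n_samples  # jitter inside each stratum
    return lower + unit * (upper - lower)


def greedy_maximin(candidates, first_point):
    """Yield b_1, b_2, ... where each new point is the candidate that maximizes
    the minimal distance to all previously yielded points."""
    point = np.asarray(first_point, dtype=float)
    min_dist = np.full(len(candidates), np.inf)  # distance to the nearest chosen point
    while True:
        yield point
        min_dist = np.minimum(min_dist, np.linalg.norm(candidates - point, axis=1))
        point = candidates[int(np.argmax(min_dist))]


rng = np.random.default_rng(0)
candidates = latin_hypercube(20_000, [0.0, 0.0], [1.0, 1.0], rng)  # text: M* = 10**6
samples = np.array(list(islice(greedy_maximin(candidates, [0.5, 0.5]), 6)))
print(samples)
```

Because the selection is written as a generator, further samples can be requested at any time without recomputing the earlier ones, which is the property the iterative variant is designed for.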

2.2. MCS – stochastic exploration

The employed space-filling variant of LHS requires the solution of an optimization problem guaranteeing a uniform geometric coverage of the domain. In high-dimensional domains, this coverage may not be achievable. A much easier and far more commonly used exploration strategy is MCS. Here, the $m$th sample $\boldsymbol {b}_m = [ b_{1,m}, \ldots, b_{N,m} ]^\textrm {T}$ is given by

\begin{equation} b_{i,m} = b_{i, min} + \zeta_{i,m} \left( b_{i, max} - b_{i, min} \right), \quad i = 1, \ldots, N, \end{equation}

where $\zeta _{i,m} \in [0,1]$ are random numbers with uniform probability distribution in the unit domain. The relative performance of LHS and MCS is a debated topic; we defer this comparison to the analytical test problem in § 3.
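The MCS rule maps uniform random numbers linearly into the box $\varOmega$. A minimal NumPy sketch with a hypothetical helper `monte_carlo_samples` follows; note that `Generator.random` draws from the half-open interval [0, 1), which is immaterial here.

```python
import numpy as np


def monte_carlo_samples(n_samples, lower, upper, rng):
    """Return an (n_samples, N) array with b_{i,m} = b_{i,min} + zeta_{i,m} (b_{i,max} - b_{i,min})."""
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    zeta = rng.random((n_samples, lower.size))  # independent uniform numbers in [0, 1)
    return lower + zeta * (upper - lower)


rng = np.random.default_rng(42)
b = monte_carlo_samples(5, lower=[-1.0, 0.0, 10.0], upper=[1.0, 2.0, 20.0], rng=rng)
print(b)
```

Unlike the maximin selection, every sample is independent of all others, so MCS is trivially extended, but nothing prevents two samples from landing close together.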

2.3. GA – biologically inspired exploration and exploitation

GA mimics the natural selection process. We refer to Wahde (2008) as an excellent reference. In the following, the method is briefly outlined to highlight the specific version we use and the chosen parameters.

Any parameter vector $\boldsymbol {b} = [b_1, b_2, \ldots, b_N]^\textrm {T} \in \varOmega \subset {\mathcal {R}}^N$ comprises the real values $b_i$, also called alleles. Each real value is encoded as a binary number, called the gene. The chromosome comprises all genes and represents the parameter vector (Wright 1991).

GA evolves one generation of $I$ parameters, also called individuals, into a new generation with the same number of parameters using biologically inspired genetic operations. The first generation is based on MCS, i.e. represents completely random genomes. The individuals $\boldsymbol {b}_i^1$, $i=1,\ldots,I$, are evaluated and sorted by their costs $J_i^1$,

\begin{equation} J_1^1 \le J_2^1 \le \ldots \le J_I^1. \end{equation}

The next generation is computed with elitism and two genetic operations. Elitism copies the $N_e$ best performing individuals into the new generation; $P_e = N_e/I$ denotes the relative quota. The two genetic operations are mutation, which randomly changes parts of the genome, and crossover, which randomly exchanges parts of the genomes of two individuals. Mutation serves explorative purposes, and crossover tends to breed better individuals. In an outer loop, the genetic operations are randomly chosen with probabilities $P_m$ and $P_c$ for mutation and crossover, respectively. Note that $P_e+P_m+P_c=1$ by design.

In the inner loop, i.e. after the genetic operation is determined, individuals from the current generation are chosen. Higher performing individuals have a higher probability of being chosen. Following the Matlab routine, this probability is proportional to the inverse square root of the individual's rank $i$, $p \propto 1/\sqrt {i}$.

GA terminates according to a predetermined stop criterion, a maximum number of generations $L$ or the corresponding number of evaluations $M=IL$. For reasons of comparison, we renumber the individuals in the order of their evaluation, i.e. $m \in \{1,\ldots, I\}$ belongs to the first generation, $m \in \{I+1, \ldots, 2I \}$ to the second generation, etc.

The chosen parameters are the default values of Matlab, e.g. $P_e=0.05$, $P_c=0.8$, $P_m=0.15$ and $N_e = 3$. Further details are provided in Appendix A.
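A compact sketch of one GA generation with binary encoding, elitism, rank-based selection $p \propto 1/\sqrt{i}$, single-point crossover and bit-flip mutation is given below. It is not the Matlab implementation: the gene resolution `N_BITS`, the bit-flip rate `p_flip`, roulette-wheel selection with the rank weights, and the way crossover produces two children are assumptions chosen for illustration, and the test function `sphere` is hypothetical. The non-elite slots are split between crossover and mutation in the ratio $P_c : P_m$.

```python
import numpy as np

N_BITS = 16  # bits per gene (assumption: the resolution is not stated in the text)


def decode(chromosome, lower, upper):
    """Map a binary chromosome of N * N_BITS bits to the parameter vector b."""
    bits = chromosome.reshape(-1, N_BITS)
    integers = bits @ (2 ** np.arange(N_BITS - 1, -1, -1))
    return lower + integers / (2 ** N_BITS - 1) * (upper - lower)


def rank_weights(n_individuals):
    """Selection probabilities p proportional to 1/sqrt(i) for ranks i = 1 (best), ..., I."""
    w = 1.0 / np.sqrt(np.arange(1, n_individuals + 1))
    return w / w.sum()


def next_generation(population, costs, rng, n_elite=3, p_c=0.8, p_m=0.15, p_flip=0.01):
    """One generation: elitism, then crossover or mutation of rank-selected parents."""
    ranked = population[np.argsort(costs)]  # best individual first
    probs = rank_weights(len(ranked))
    new = [ind.copy() for ind in ranked[:n_elite]]  # elitism: copy the N_e best
    while len(new) < len(population):
        if rng.random() < p_c / (p_c + p_m):  # outer loop: choose crossover ...
            parent_a, parent_b = ranked[rng.choice(len(ranked), size=2, p=probs)]
            cut = rng.integers(1, parent_a.size)  # single-point crossover
            new.append(np.concatenate([parent_a[:cut], parent_b[cut:]]))
            if len(new) < len(population):
                new.append(np.concatenate([parent_b[:cut], parent_a[cut:]]))
        else:  # ... or mutation
            child = ranked[rng.choice(len(ranked), p=probs)].copy()
            flips = rng.random(child.size) < p_flip
            flips[rng.integers(child.size)] = True  # flip at least one bit
            child[flips] ^= 1
            new.append(child)
    return np.array(new)


def sphere(b):
    return float(np.sum(b ** 2))


rng = np.random.default_rng(1)
lower, upper = -np.ones(2), np.ones(2)
population = rng.integers(0, 2, size=(50, 2 * N_BITS))  # first generation: random genomes
best = []
for generation in range(20):
    costs = np.array([sphere(decode(c, lower, upper)) for c in population])
    best.append(costs.min())
    population = next_generation(population, costs, rng)
print(best[0], best[-1])
```

Thanks to elitism, the best cost per generation can never increase, which the recorded list `best` makes easy to check.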

2.4. DSM – a robust gradient method

DSM, proposed by Nelder & Mead (1965), is a very simple, robust and widely used gradient method. This method does not require any gradient information and is well suited for expensive function evaluations, like the considered RANS simulations for the drag coefficients, and for experimental optimizations with inevitable noise. The price is slow convergence for the minimization of smooth functions as compared with algorithms which can exploit gradient and curvature information.

We briefly outline the employed downhill simplex algorithm, as there are many variants. First, $N+1$ vertices $\boldsymbol {b}_m$, $m=1,\ldots,N+1$, in $\varOmega$ are initialized as detailed in the respective sections. Commonly, $\boldsymbol {b}_{N+1}$ is placed somewhere in the middle of the domain and the other vertices explore steps in all directions, $\boldsymbol {b}_m = \boldsymbol {b}_{N+1} + h \, \boldsymbol {e}_{m}$, $m=1,\ldots,N$. Here, $\boldsymbol {e}_i = [\delta _{i1}, \ldots, \delta _{iN}]^\textrm {T}$ is the unit vector in the $i$th direction and $h$ is a step size which is small compared with the domain. Evidently, all vertices must remain in the domain, $\boldsymbol {b}_m \in \varOmega$.

The goal of the simplex transformation iteration is to replace the worst vertex $\boldsymbol {b}_h$ of the considered simplex by a new, better one, $\boldsymbol {b}_{N+2}$. This is achieved in the following steps:

(i) Ordering: without loss of generality, we assume that the vertices are sorted in terms of the cost values $J_m = J(\boldsymbol {b}_m)$: $J_1 \le J_2 \le \ldots \le J_{N+1}$.

(ii) Centroid: in the second step, the centroid of the best side, opposite to the worst vertex $\boldsymbol {b}_{N+1}$, is computed:

\begin{equation} \boldsymbol{c} =\frac{1}{N} \sum_{m=1}^{N} \boldsymbol{b}_m. \tag{2.8} \end{equation}

(iii) Reflection: reflect the worst vertex $\boldsymbol {b}_{N+1}$ at the best side,

\begin{equation} \boldsymbol{b}_r = \boldsymbol{c} + \left( \boldsymbol{c} - \boldsymbol{b}_{N+1} \right), \tag{2.9} \end{equation}

and compute the new cost $J_r=J(\boldsymbol {b}_{r})$. Take $\boldsymbol {b}_{r}$ as the new vertex if $J_1 \le J_r \le J_{N}$. Then $\boldsymbol {b}_m$, $m=1,\ldots,N$, and $\boldsymbol {b}_r$ define the new simplex for the next iteration. Renumber the indices to the range $1, \ldots, N+1$. Now, the cost is not worse than the second worst value $J_N$, but not as good as the best one, $J_1$. Start a new iteration with step (i).

(iv) Expansion: if $J_{r} < J_1$, expand further in this direction by a factor $2$,

\begin{equation} \boldsymbol{b}_{e} = \boldsymbol{c} + 2 \left(\boldsymbol{c} - \boldsymbol{b}_{N+1} \right). \tag{2.10} \end{equation}

Take the better vertex of $\boldsymbol {b}_r$ and $\boldsymbol {b}_e$ as the $\boldsymbol {b}_{N+1}$ replacement and start a new iteration.

(v) Single contraction: at this stage, $J_{r} \ge J_{N}$. Contract the worst vertex half-way towards the centroid,

\begin{equation} \boldsymbol{b}_c = \boldsymbol{c} + \tfrac{1}{2} \left( \boldsymbol{b}_{N+1} - \boldsymbol{c} \right). \tag{2.11} \end{equation}

Take $\boldsymbol {b}_c$ as the new vertex ($\boldsymbol {b}_{N+1}$ replacement) if it is better than the worst one, i.e. $J_c \le J_{N+1}$. In this case, start the next iteration.

(vi) Shrink/multiple contraction: at this stage, none of the above operations was successful. Shrink the whole simplex by a factor $1/2$ towards the best vertex, i.e. replace all vertices by

\begin{equation} \boldsymbol{b}_m \mapsto \boldsymbol{b}_1 + \tfrac{1}{2} \left( \boldsymbol{b}_m-\boldsymbol{b}_1\right), \quad m=2,\ldots,N+1. \tag{2.12} \end{equation}

This shrunken simplex is used in the next iteration. It should be noted that this shrinking operation is the last resort, as it is very expensive, with $N$ function evaluations. The rationale behind this shrinking is that a smaller simplex may better follow local gradients.
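Steps (i) to (vi) translate almost line by line into code. The following is a minimal sketch, assuming a cost function of a NumPy vector; `initial_simplex`, `dsm_iteration` and the quadratic test function are hypothetical names, and, as in the description above, the iteration itself does not project vertices back into $\varOmega$.

```python
import numpy as np


def initial_simplex(center, h):
    """Vertices b_m = b_{N+1} + h e_m, m = 1..N, followed by b_{N+1} = center."""
    center = np.asarray(center, dtype=float)
    return np.vstack([center + h * np.eye(center.size), center])


def dsm_iteration(cost, simplex, costs):
    """One downhill simplex iteration, steps (i)-(vi).

    Returns the new simplex, its costs and the number of cost evaluations used.
    """
    order = np.argsort(costs)  # (i) ordering: J_1 <= ... <= J_{N+1}
    simplex, costs = simplex[order], costs[order]
    n = simplex.shape[1]
    worst = simplex[n].copy()
    c = simplex[:n].mean(axis=0)  # (ii) centroid of the best side, (2.8)
    b_r = c + (c - worst)  # (iii) reflection, (2.9)
    j_r = cost(b_r)
    if costs[0] <= j_r <= costs[n - 1]:
        simplex[n], costs[n] = b_r, j_r
        return simplex, costs, 1
    if j_r < costs[0]:  # (iv) expansion, (2.10)
        b_e = c + 2.0 * (c - worst)
        j_e = cost(b_e)
        simplex[n], costs[n] = (b_e, j_e) if j_e < j_r else (b_r, j_r)
        return simplex, costs, 2
    b_c = c + 0.5 * (worst - c)  # (v) single contraction, (2.11)
    j_c = cost(b_c)
    if j_c <= costs[n]:
        simplex[n], costs[n] = b_c, j_c
        return simplex, costs, 2
    simplex[1:] = simplex[0] + 0.5 * (simplex[1:] - simplex[0])  # (vi) shrink, (2.12)
    costs[1:] = [cost(b) for b in simplex[1:]]
    return simplex, costs, 2 + n


def quadratic(b):
    return float((b[0] - 0.3) ** 2 + 2.0 * (b[1] + 0.2) ** 2)


simplex = initial_simplex([0.0, 0.0], h=0.1)
costs = np.array([quadratic(b) for b in simplex])
evaluations = len(costs)
while evaluations < 50:  # fixed evaluation budget
    simplex, costs, used = dsm_iteration(quadratic, simplex, costs)
    evaluations += used
print(simplex[np.argmin(costs)], costs.min())
```

The returned evaluation count (1, 2 or $N+2$) makes it straightforward to stop after a fixed budget of cost evaluations rather than a fixed number of iterations.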

2.5. RRS – preparing for multiple minima

DSM of the previous section may be equipped with a random restart initialization (Humphrey & Wilson 2000). Maehara & Shimoda (2013) proposed a hybrid method of GA and DSM, which shares a similar idea, for the optimization of a chiller configuration. This method selects the elite individual with the lowest cost found by GA as the starting point for the downhill simplex iteration.

As the random initial condition, we choose the main vertex of the simplex by MCS and explore all coordinate directions by a positive shift of 10 % of the domain size. It is ensured that all vertices lie inside the domain $\varOmega$. These initial simplices attribute the same probability to the whole search space. In the absence of any other information, the chosen small edge length makes a locally smooth behaviour probable. The downhill search is stopped after a fixed number of evaluations; we choose 50 evaluations as a safe upper bound for convergence. Note that the number of simplex iterations is noticeably smaller, as one iteration implies one to $N+2$ evaluations.
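The random initial simplex described above can be sketched as follows. Two details are assumptions not fixed by the text: the main vertex is drawn uniformly from the box reduced by the shift, so that the positive shifts keep every vertex inside $\varOmega$, and the 10 % shift is applied per coordinate to the side length of a box-shaped domain. The function name is hypothetical.

```python
import numpy as np


def random_initial_simplex(lower, upper, rng, frac=0.1):
    """Random-restart initial simplex: MCS main vertex plus positive coordinate shifts.

    lower, upper: bounds of the box-shaped domain Omega (one entry per parameter).
    frac: shift as a fraction of the domain size in each direction (10 % here).
    """
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    h = frac * (upper - lower)               # edge length per coordinate direction
    # Assumption: draw the main vertex from [lower, upper - h] so that all
    # shifted vertices b_0 + h_i e_i remain inside Omega.
    b0 = rng.uniform(lower, upper - h)
    simplex = np.tile(b0, (lower.size + 1, 1))
    simplex[1:] += np.diag(h)                # vertex i+1 is shifted along axis i
    return simplex
```

For the domain $[-3,3]\times[-3,3]$, `random_initial_simplex([-3, -3], [3, 3], np.random.default_rng(0))` returns three vertices; the downhill search would then be run from this simplex until its evaluation limit is reached.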

Evidently, the random restart algorithm may be improved by following the many recommendations in the literature, e.g. avoiding restarts close to already explored parameters. For all optimization strategies, we trade such improvements for algorithmic simplicity.

2.6. EGM – combining exploration and gradient method

In this section, we combine the advantages of the exploitive DSM and the explorative LHS in a single algorithm. The source code is freely available at https://github.com/YiqingLiAnne/egm.

Step 0 – Initialize. First, $\boldsymbol{b}_m$, $m=1,\ldots,M+1$, are initialized for the DSM.

Step 1 – DSM. Perform one simplex iteration (§ 2.4) with the best $M+1$ parameters discovered so far.

Step 2 – LHS. Compute the cost $J$ of a new LHS parameter $\boldsymbol{b}$. As described above, we take the parameter from a precomputed list that is furthest away from all hitherto employed parameters.

Step 3 – Loop. Continue with step 1 until a convergence criterion is met.

Sometimes, the simplex may degenerate into one with small volume, for instance, when it crawls through a narrow valley. In this case, the vertices lie in a subspace and valuable gradient information is lost. This degeneration is diagnosed and cured after step 1 as follows. Let $\boldsymbol{b}_c$ be the geometric centre of the simplex, and compute the distance $D$ between each vertex and this centre. If the minimum distance $D_{min}$ is smaller than half the maximum distance, $D_{max}/2$, the simplex is deemed degenerate. The degeneration is removed as follows. Draw a sphere of radius $D_{max}$ around the simplex centre; by construction, this sphere contains all vertices. Generate $1000$ random points in this sphere and replace the vertex with the highest cost, $J_{max}$, by the one of these points that yields the simplex with the largest volume. The cost of this new vertex then needs to be evaluated.
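The degeneration test and repair can be sketched in Python as below. The sampling scheme is an assumption: the "random points in this sphere" are taken to be uniformly distributed inside the ball of radius $D_{max}$, and candidate simplices are compared via the absolute determinant of their edge vectors, which is proportional to the volume. The function names are hypothetical.

```python
import numpy as np


def is_degenerate(simplex):
    """True if D_min < D_max / 2 for the vertex distances D to the geometric centre."""
    centre = simplex.mean(axis=0)
    D = np.linalg.norm(simplex - centre, axis=1)
    return D.min() < 0.5 * D.max()


def repair_degenerate(simplex, costs, J, rng, n_samples=1000):
    """Replace the worst vertex by the random point giving the largest simplex volume."""
    n = simplex.shape[1]
    centre = simplex.mean(axis=0)
    D_max = np.linalg.norm(simplex - centre, axis=1).max()
    # Uniform samples in the n-ball of radius D_max (assumed sampling scheme).
    directions = rng.normal(size=(n_samples, n))
    directions /= np.linalg.norm(directions, axis=1, keepdims=True)
    radii = D_max * rng.uniform(size=(n_samples, 1)) ** (1.0 / n)
    candidates = centre + radii * directions
    worst = int(np.argmax(costs))
    keep = np.delete(simplex, worst, axis=0)          # the n retained vertices
    # Edge vectors from keep[0]; |det| is proportional to the simplex volume.
    fixed = np.broadcast_to(keep[1:] - keep[0], (n_samples, n - 1, n))
    free = (candidates - keep[0])[:, None, :]
    volumes = np.abs(np.linalg.det(np.concatenate([fixed, free], axis=1)))
    simplex = simplex.copy()
    costs = np.asarray(costs, dtype=float).copy()
    simplex[worst] = candidates[np.argmax(volumes)]
    costs[worst] = J(simplex[worst])                  # one new cost evaluation
    return simplex, costs
```

A collinear simplex such as `[[0, 0], [1, 0], [2, 0]]` is flagged by `is_degenerate`, whereas an equilateral triangle, whose vertices are all equidistant from the centre, is not.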

The algorithm is intuitively appealing. If the LHS discovers a parameter whose cost $J$ is among the best $M+1$ values, this parameter is included in the new simplex, and the subsequent iterations may slide down into another, better minimum. Note that LHS exploration does not come with the toll of having to evaluate the cost at $N+1$ vertices and subsequent iterations, as a random restart would. The downside of a single evaluation is that we miss potentially important gradient information pointing to an unexplored, much better minimum. Compared with random restart gradient searches, which require many evaluations for a converging iteration, LHS exploration becomes increasingly advantageous in rougher landscapes, i.e. for more complex multi-modal behaviour.
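A compact, self-contained sketch of the EGM loop (steps 0 to 3) is given below. It is not the reference implementation from the repository linked above, and it rests on several simplifying assumptions: the DSM iteration is a generic Nelder–Mead-type step with the rates of § 3.2, the precomputed LHS list is replaced by 1000 uniformly random candidates from which the point furthest from all tested parameters is chosen, points leaving $\varOmega$ are clipped back into it, the initial simplex is a random point with 10 % coordinate shifts, and the degeneration repair is omitted. For an $N$-dimensional parameter, the simplex has $N+1$ vertices.

```python
import numpy as np


def dsm_iteration(f, simplex, costs):
    """One downhill simplex iteration with expansion rate 2, contraction 1/2, shrink 1/2.

    simplex: (N+1, N) array sorted by ascending costs. Only the points to be evaluated
    are generated here; f records them in the archive, from which EGM rebuilds the
    simplex, so accepting a vertex needs no explicit bookkeeping.
    """
    c = simplex[:-1].mean(axis=0)                  # centroid of the N best vertices
    worst = simplex[-1]
    J_r = f(2.0 * c - worst)                       # reflection
    if J_r < costs[0]:
        f(c + 2.0 * (c - worst))                   # expansion
    elif J_r >= costs[-2] and f(c + 0.5 * (worst - c)) > costs[-1]:
        for b in simplex[1:]:                      # contraction failed: shrink towards b_1
            f(simplex[0] + 0.5 * (b - simplex[0]))


def egm(f, lower, upper, n_evals=200, n_candidates=1000, seed=0):
    """Alternate one DSM iteration (step 1) with one explorative evaluation (step 2)."""
    rng = np.random.default_rng(seed)
    lower, upper = np.asarray(lower, dtype=float), np.asarray(upper, dtype=float)
    n = lower.size
    X, Y = [], []                                  # archive of tested parameters and costs

    def evaluate(b):
        b = np.clip(b, lower, upper)               # assumption: keep points inside Omega
        X.append(b)
        Y.append(float(f(b)))
        return Y[-1]

    # Stand-in for the precomputed LHS list (assumption: uniform random candidates).
    candidates = rng.uniform(lower, upper, size=(n_candidates, n))
    # Step 0: random main vertex plus positive shifts of 10 % of the domain size.
    b0 = rng.uniform(lower, upper)
    for b in np.vstack([b0, b0 + np.diag(0.1 * (upper - lower))]):
        evaluate(b)
    while len(Y) < n_evals:                        # step 3: fixed evaluation budget
        best = np.argsort(Y, kind="stable")[: n + 1]
        dsm_iteration(evaluate, np.array(X)[best], np.array(Y)[best])   # step 1
        tested = np.array(X)
        gaps = np.linalg.norm(candidates[:, None, :] - tested[None, :, :], axis=2).min(axis=1)
        evaluate(candidates[np.argmax(gaps)])      # step 2: furthest-away candidate
    return np.array(X), np.array(Y)
```

The function returns the whole archive, so learning curves can be read off from it; the best cost found is `Y.min()`. Rebuilding the simplex from the best archived points is what lets a good LHS sample enter the descent, as described above.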

2.7. Computational accelerators

The RANS-based optimization may be accelerated by enablers that are specific to the chosen flow control problem. The computation time of each RANS simulation depends on the choice of the initial condition, as it affects the convergence time to the steady solution. The first simulation of an optimization starts with the unforced flow as initial condition. Subsequent simulations exploit the fact that the averaged velocity field $\bar{\boldsymbol{u}}(\boldsymbol{x})$ is a function of the actuation parameter $\boldsymbol{b}$. The initial condition of the $m$th simulation is obtained with a 1-nearest-neighbour approach: the velocity field associated with the closest hitherto computed actuation vector is taken as initial condition for the RANS simulation. This simple choice of initial condition saves approximately 60 % of the CPU time through faster convergence.
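The nearest-neighbour initialization can be sketched as follows. The velocity fields are placeholders for whatever the flow solver returns, the function and argument names are hypothetical, and the Euclidean distance in actuation space is an assumption, since the text does not specify the metric.

```python
import numpy as np


def initial_condition(b_new, tested_b, tested_fields, unforced_field):
    """1-nearest-neighbour choice of the RANS initial condition.

    tested_b: actuation vectors already simulated; tested_fields: their converged
    averaged velocity fields, in the same order.
    """
    if len(tested_b) == 0:                             # first simulation: unforced flow
        return unforced_field
    d = np.linalg.norm(np.asarray(tested_b, float) - np.asarray(b_new, float), axis=1)
    return tested_fields[int(np.argmin(d))]            # field of the closest actuation
```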

Another 30 % reduction of the CPU time is achieved by avoiding RANS computations with very similar actuations. This is achieved by quantizing the $\boldsymbol{b}$ vector: the actuation velocities are quantized to integer values in $\textrm{m}\,\textrm{s}^{-1}$. This corresponds to increments of $U_{\infty}/30$ with $U_{\infty}=30\,\textrm{m}\,\textrm{s}^{-1}$. All actuation vectors are rounded according to this quantization. If the optimization algorithm yields a rounded actuation vector that has already been investigated, the drag is taken from the corresponding simulation and no new RANS simulation is performed. Similarly, the angles are discretized into integer degrees.
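Quantizing the actuation vector and reusing earlier results amounts to memoizing the cost on a grid. A minimal sketch, assuming steps of $1\,\textrm{m}\,\textrm{s}^{-1}$ for velocity components and $1^\circ$ for angles; `solver` is a hypothetical stand-in for one RANS run returning the drag.

```python
import numpy as np


class QuantizedCost:
    """Round actuation vectors to a grid and reuse costs of already simulated vectors."""

    def __init__(self, solver, steps):
        self.solver = solver                       # hypothetical: one RANS run -> drag
        self.steps = np.asarray(steps, dtype=float)
        self.cache = {}
        self.n_solves = 0

    def __call__(self, b):
        q = np.rint(np.asarray(b, dtype=float) / self.steps).astype(int)
        key = tuple(q.tolist())                    # hashable grid index
        if key not in self.cache:                  # new grid point: run the solver
            self.cache[key] = self.solver(q * self.steps)
            self.n_solves += 1
        return self.cache[key]
```

Two actuation vectors that round to the same grid point, e.g. $[10.2, 5.4]$ and $[9.8, 5.1]$ with unit steps, trigger only one solver call.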

3. Comparative optimization study

In this section, the six optimization methods of § 2 are compared for an analytical function with four local minima.

§ 3.1 describes this function. In § 3.2, the optimization methods and their parameters are discussed. § 3.3 shows the tested individuals. The learning curves are detailed in § 3.4. Finally, the results are summarized in § 3.5.

3.1. Analytical function

The considered analytical cost function

$$\begin{align} J(b_1, b_2) &= 1 - e^{ {-}2 (b_1-1)^2 - 2 (b_2-1) ^2 }- \tfrac{1}{2} e^{ {-}2 (b_1+1)^2 - 2 (b_2-1) ^2 }\nonumber\\ &\quad - \tfrac{1}{3} e^{ {-}2 (b_1-1)^2 - 2 (b_2+1) ^2 } - \tfrac{1}{4} e^{ {-}2 (b_1+1)^2 - 2 (b_2+1) ^2 } \end{align}$$

is characterized by a global minimum near $[1,1]^\textrm{T}$ and three local minima near $[1,-1]^\textrm{T}$, $[-1,1]^\textrm{T}$ and $[-1,-1]^\textrm{T}$. The cost reaches a plateau $J=1$ far away from the origin. The investigated parameter domain is $\varOmega = [-3,3] \times [-3,3]$.
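The cost function (3.1) and the location of its global minimum can be checked numerically. The sketch below evaluates $J$ on a uniform grid with spacing $0.01$ over $\varOmega$; the grid resolution is an arbitrary choice. The minimum lies at $[1,1]^\textrm{T}$ up to the grid spacing, with $J \approx -2.8\times 10^{-4}$, slightly below zero because the tails of the other three Gaussians overlap there.

```python
import numpy as np


def J(b1, b2):
    """Analytical cost function (3.1) with four Gaussian minima."""
    g = lambda x0, y0: np.exp(-2.0 * (b1 - x0) ** 2 - 2.0 * (b2 - y0) ** 2)
    return 1.0 - g(1, 1) - g(-1, 1) / 2 - g(1, -1) / 3 - g(-1, -1) / 4


# Brute-force search on a fine grid over Omega = [-3, 3] x [-3, 3].
b = np.linspace(-3.0, 3.0, 601)
B1, B2 = np.meshgrid(b, b, indexing="ij")
values = J(B1, B2)
i, k = np.unravel_index(np.argmin(values), values.shape)
print(B1[i, k], B2[i, k], values[i, k])
```

The four minima are ordered $J(1,1) < J(-1,1) < J(1,-1) < J(-1,-1)$, with values close to $0$, $1/2$, $2/3$ and $3/4$.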

3.2. Optimization methods and their parameters

LHS is performed as described in § 2.1. We take $M^\star = 10^3$ random points for the optimization of the coverage. MCS samples are uniformly distributed over the parameter domain $\varOmega$.

The most important parameters of GA are summarized from Appendix A: the generation size is $I = 50$ and the iterations are terminated after generation $L = 20$. The crossover and mutation probabilities are $P_c = 0.80$ and $P_m = 0.15$, respectively. The number of elite individuals, $N_e=3$, corresponds to the complementary probability $P_e = 1 - P_c - P_m = 5\,\%$.

DSM follows exactly the description of § 2.4 with an expansion rate of $2$, a single contraction rate of $1/2$ and a shrink rate of $1/2$. RRS (§ 2.5) uses 20 random restarts, each limited to 50 evaluations. The step size for each initial simplex is $h=0.35$. EGM builds on the LHS and downhill simplex iterations discussed above.

3.3. Tested individuals in the parameter space

Figure 2 illustrates the iterations of all six algorithms in the parameter space. LHS shows a uniform coverage of the domain. In contrast, MCS leads to local ‘lumping’ of close individuals, indicating redundant testing, and to local untested regions, both undesirable features. Thus, LHS clearly performs better than MCS. GA sparsely tests the plateau while densely populating the best minima, a clear advantage over LHS and MCS.

Figure 2. Comparison of all optimizers for the analytical function (3.1). Tested individuals of (a) LHS, (b) DSM, (c) MCS, (d) RRS, (e) GA and (f) EGM from a typical optimization with 1000 individuals. The red solid circles mark new local minima during the iteration while the blue open circles represent suboptimal tested parameters.

The standard DSM converges to a local minimum in this realization, while RRS finds all minima, including the global one. Clearly, the random restart initialization is a security policy against sliding into a suboptimal minimum. The proposed EGM finds all four minima and converges to the global one, although its exploration is less dense than that of LHS. The 1000 evaluations comprise approximately 250 LHS steps and approximately 250 downhill simplex iterations with an average of three evaluations each.

Arguably, EGM is superior to all downhill simplex variants, owing to its denser exploration and its convergence to the global optimum. EGM also performs better than LHS, MCS and GA, as it invests only about $25\,\%$ of the evaluations in covering the parameter domain, while approximately $75\,\%$ of the evaluations serve the convergence.
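The budget split quoted above follows from the evaluation counts of this realization, with one LHS evaluation per downhill simplex iteration and about three evaluations per iteration:

\begin{equation} \underbrace{250}_{\textrm{LHS}} + \underbrace{250 \times 3}_{\textrm{DSM}} = 1000, \qquad \frac{250 \times 3}{1000} = 75\,\%. \end{equation}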

The conclusions are practically independent of the chosen realization of the optimization algorithm, except that the DSM slides into the global minimum in approximately $27\,\%$ of the cases.

We note that some of our conclusions are tied to the low dimension of the parameter space. In a cubical domain of 10 dimensions, the first $2^{10}=1024$ LHS individuals would populate the corners before the interior is explored. A geometric coverage of higher-dimensional spaces is incompatible with a budget of 1000 evaluations.

3.4. The learning curve

In figure 3, we investigate the learning curve of each algorithm for 100 realizations with randomly chosen initial conditions. The learning curve shows the best cost value found after $m$ evaluations. In this statistical analysis, the $10$th, $50$th and $90$th percentiles of the learning curves are displayed. The $10$th percentile at $m$ evaluations implies that $10\,\%$ of the realizations yield better and $90\,\%$ yield worse cost values. The $50$th and $90$th percentiles are defined analogously.

Figure 3. Comparison of all optimizers for the analytical function (3.1). Learning curve of (a) LHS, (b) DSM, (c) MCS, (d) RRS, (e) GA and (f) EGM in 100 runs. The 10th, 50th and 90th percentiles indicate the $J$ value below which 10, 50 and 90 per cent of the runs fall at the current evaluation.
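The percentile curves can be computed from the raw evaluation histories as sketched below. This is a minimal sketch: NumPy's default linear interpolation between runs is an assumption, as the text does not state how the percentiles are interpolated, and the function name is hypothetical.

```python
import numpy as np


def learning_curve_percentiles(costs, q=(10, 50, 90)):
    """Percentiles over runs of the best cost found after m = 1, 2, ... evaluations.

    costs: (runs, evaluations) array with the cost of each evaluation in each run.
    Returns an array of shape (len(q), evaluations).
    """
    best_so_far = np.minimum.accumulate(costs, axis=1)   # learning curve of each run
    return np.percentile(best_so_far, q, axis=0)
```

The running minimum makes every learning curve non-increasing, so the percentile curves are non-increasing as well.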

The gradient-free algorithms (LHS, MCS, GA) in the left column show smooth learning curves. All runs eventually converge to the global optimum, as seen from the upper envelope. The $10$th and $50$th percentile curves are comparable. Focusing on the bad case ($90$th percentile) and the worst-case performance (upper envelope), LHS is seen to beat both MCS and GA. MCS has the worst outliers because it neither exploits the cost function, like GA, nor comes with the advantage of a guaranteed good geometric coverage, like LHS.

The gradient-based algorithms reveal other features. The DSM can arrive at the global optimum much faster than any of the gradient-free algorithms. However, it also has a $73\,\%$ probability of terminating in one of the suboptimal local minima. The random restart version reduces this risk to practically zero. In RRS, $50\,\%$ of the runs reach the global minimum within 300 evaluations.

The learning curves of all gradient-based algorithms show jumps. Once the initial condition is in the basin of attraction of one minimum, the convergence to that minimum is very fast, leading to a steep decline of the learning curve. We notice that the worst-case curve does not exactly converge to zero. The reason is the degeneration of the simplex to points on a line that does not pass through the global minimum. Only EGM takes care of this degeneration, with a geometric correction after step 1 as described in § 2.6. As expected, EGM also outperforms all other optimizers with respect to the $50$th percentile, the $90$th percentile and the worst case. The global minimum is consistently found within fewer than 200 evaluations. It is much more efficient to invest 50 points in LHS exploration than to iterate into a suboptimal minimum. The gradient descent is slowed down by diverting $25\,\%$ of the evaluations to LHS, which serves as an insurance policy.

3.5. Discussion

The relative strengths and weaknesses of the different optimizers are summarized in table 1 for the average performance after $m=20$, $100$, $500$ and $1000$ evaluations. The averaging is performed over the costs of all 100 realizations after $m$ evaluations. A run is considered as failed if its cost remains more than $1\,\%$, i.e. $0.01$, above the global minimum.

Table 1. Comparison of all optimizers for the analytical function (3.1). Average cost of different algorithms after $m=20$, $100$, $500$ and $1000$ evaluations in 100 runs.

First, we observe that DSM has the worst failure rate with $73\,\%$, followed by MCS with $61\,\%$ and LHS with $55\,\%$. The failures of the DSM are more severe, as the converged parameters significantly depart from the global minimum in $73\,\%$ of the runs. In the case of LHS and MCS, the failure only results from poor convergence towards the right global minimum.
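Average costs and failure rates of this kind can be computed from per-run learning curves as sketched below. The sketch assumes that the failure criterion is applied to the best cost at the end of the evaluation budget; `best_so_far[r, m-1]` denotes the best cost of run `r` after `m` evaluations, and the function name is hypothetical.

```python
import numpy as np


def summarize_runs(best_so_far, J_min, m_values=(20, 100, 500, 1000), tol=0.01):
    """Average best cost after m evaluations and the fraction of failed runs.

    best_so_far: (runs, evaluations) learning curves; a run counts as failed if its
    final best cost exceeds the global minimum J_min by more than tol.
    """
    averages = {m: float(best_so_far[:, m - 1].mean()) for m in m_values}
    failure_rate = float(np.mean(best_so_far[:, -1] - J_min > tol))
    return averages, failure_rate
```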

Second, after 20 evaluations, the average cost of all algorithms is close to $0.50$, i.e. very similar.

After 100 evaluations, algorithms with explorative steps, i.e. LHS, MCS, GA and EGM, have a distinct advantage over the DSM and even over the random restart version. Approximately four restarts are necessary to avoid the convergence to a suboptimal minimum in $99\,\%$ of the cases. EGM is already better than the other algorithms by a large factor.

After 500 evaluations, EGM confirms its distinct superiority over the other algorithms, followed by RRS and GA. Intriguingly, GA with its exploitive crossover operation performs better than all other optimizers after 500 evaluations, except for EGM. LHS and MCS retain a significant error, as they lack a gradient-based descent.

In summary, algorithms combining exploration and exploitation, i.e. EGM, GA and RRS, perform better than purely explorative or purely exploitive algorithms (LHS, MCS and DSM). Among the ‘pure’ algorithms, LHS has the fastest decrease of the cost function, while DSM has the fastest convergence. EGM turns out to be the best combined algorithm, balancing exploration from LHS and exploitation from DSM. This superiority is already apparent after 100 evaluations.

We note that the conclusions have been drawn for a single analytical example for an optimization in a low-dimensional parameter space with few minima. From many randomly created analytical functions, we observe that EGM tends to outperform other optimizers in the case of few smooth minima and for low-dimensional search spaces. Yet, in higher-dimensional search spaces, LHS becomes increasingly inefficient and MCS may turn out to perform better. The number of minima also has an impact on the performance. For a single minimum with parabolic growth, the DSM can be expected to outperform the other algorithms. In the case of many local shallow minima, the advantage of gradient-based approaches will become smaller and exploration will correspondingly increase in importance.

The control landscape may range from a single minimum to many minima. Exploration is often inefficient in the former scenario (figure 4a), while exploitation is likely to invest a large number of tests in descending into suboptimal minima, or even to be trapped in one (figure 4b). An unknown problem is likely to have a landscape with characteristics between these two worst-case scenarios for explorative and exploitive methods. The strict alternation proposed by EGM aims to limit unnecessary evaluations in the worst case to 50 % of the iterations and to guarantee a gradient-based convergence rate once a minimum has been identified.

Figure 4. Sketch of the extreme landscapes with (a) one minimum and (b) many minima. The black dots denote the tested locations. The red dots show the global minimum.

The following rules of thumb can be formulated for the choice and design of the optimizers. First, the larger the number of local minima, the more effective exploration becomes over exploitation. Second, gradient search becomes more effective when the global minimum has a large domain of attraction for gradient-based descent in comparison with the search space. The volume ratio between the domain of attraction and the search space determines the likelihood that EGM finds the global minimum with a given evaluation budget. Third, large plateaus, e.g. from asymptotic behaviour, make gradient search inefficient. Fourth, long ‘valleys’ make gradient search inefficient, too. Fifth, space-filling exploration, like LHS or MCS, becomes inefficient with increasing dimension. Ten dimensions might be considered as an upper threshold, as just exploring the corners of a ten-dimensional cube requires $2^{10}=1024$ individuals. A good discretization, e.g. with 10 points per dimension, i.e. $10^{10}$ evaluations, is evidently impossible. Numerous other rules may be added for different control landscapes. Given the richness of possible landscapes, explorative methods come without practical and mathematically provable performance estimates.

4. Drag optimization of fluidic pinball with three actuators

As the first flow control example, EGM is applied to the two-dimensional fluidic pinball (Cornejo Maceda et al. 2019; Deng et al. 2020), the wake behind a cluster of three rotating cylinders. In § 4.1, the benchmark problem is described: minimize the net drag power with the cylinder rotations as input parameters. In § 4.2, EGM yields a surprising non-symmetric result, consistent with other fluidic pinball simulations (Cornejo Maceda et al. 2019) and experiments (Raibaudo et al. 2020). The learning processes of DSM and LHS are investigated in §§ 4.3 and 4.4.

4.1. Configuration

The fluidic pinball is a benchmark configuration for wake control that is geometrically simple yet rich in nonlinear dynamic behaviour. This two-dimensional configuration consists of three equal, parallel, equidistantly spaced cylinders arranged in a triangle that points upstream, against the uniform oncoming flow. The wake can be controlled by rotating the cylinders. The fluidic pinball comprises most known wake stabilization mechanisms, like phasor control, circulation control, Coanda forcing, base bleed as well as high- and low-frequency forcing. In this study, we focus on steady open-loop forcing that minimizes the drag power corrected by the actuation energy.

The viscous incompressible two-dimensional flow has a uniform oncoming velocity $U_\infty$, and the fluid has constant density $\rho$ and kinematic viscosity $\nu$. The three equal circular cylinders have radius $R$, and their centres form an equilateral triangle with side length $3R$ pointing upstream. Thus, the transverse dimension of the cluster reads $L = 5R$.

In figure 5(a), the flow is described in a Cartesian coordinate system where the $x$-axis points in the direction of the flow, the $z$-axis is aligned with the cylinder axes and the $y$-axis is orthogonal to both. The origin $\boldsymbol{0}$ is placed midway between the centres of the two rightmost (top and bottom) cylinders. Thus, the centres of the cylinders are described by

\begin{equation} \left. \begin{array}{ll} x_1=x_F ={-}3 R \cos 30^\circ, & y_1 =y_F = 0,\\ x_2=x_B = 0, & y_2 = y_B ={-}3R/2,\\ x_3= x_T = 0, & y_3 = y_T ={+}3R/2. \end{array} \right\} \end{equation}

Here, the subscripts ‘$F$’, ‘$B$’ and ‘$T$’ refer to the front, bottom and top cylinders, respectively. Alternatively, the subscripts ‘1’, ‘2’ and ‘3’ are used for these cylinders, starting with the front cylinder and continuing in mathematically positive (anticlockwise) orientation.
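The centre coordinates given above can be checked against the stated geometry with a few lines of Python, with lengths in units of $R$: all three centre-to-centre distances equal $3R$, and the cylinders span $L=5R$ in the $y$-direction.

```python
import numpy as np

R = 1.0  # cylinder radius (lengths in units of R)
centres = {
    "F": np.array([-3.0 * R * np.cos(np.radians(30.0)), 0.0]),
    "B": np.array([0.0, -1.5 * R]),
    "T": np.array([0.0, 1.5 * R]),
}
# Side lengths of the triangle formed by the cylinder centres.
sides = {
    pair: float(np.linalg.norm(centres[pair[0]] - centres[pair[1]]))
    for pair in ("FB", "BT", "TF")
}
# Transverse extent: top edge of the top cylinder minus bottom edge of the bottom one.
L = (centres["T"][1] + R) - (centres["B"][1] - R)
print(sides, L)
```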

Figure 5. Fluidic pinball: (a) configuration and (b) grid.

The location is denoted by $\boldsymbol{x} = (x, y) = x\, \boldsymbol{e}_x + y \, \boldsymbol{e}_y$, where $\boldsymbol{e}_x$ and $\boldsymbol{e}_y$ are the unit vectors in the $x$- and $y$-directions. The flow velocity is represented by $\boldsymbol{u} = (u, v) = u \, \boldsymbol{e}_x + v \, \boldsymbol{e}_y$. The pressure and time symbols are $p$ and $t$, respectively. In the following, all quantities are non-dimensionalized with the cylinder diameter $D = 2R$, the velocity $U_\infty$ and the fluid density $\rho$.

The corresponding Reynolds number reads $Re_D = U_\infty D/\nu = 100$. This Reynolds number corresponds to asymmetric periodic vortex shedding. Deng et al. (2020) have investigated the transition scenario for increasing Reynolds number. At $Re_1 \approx 18$, the steady flow becomes unstable in a Hopf bifurcation leading to periodic vortex shedding. At $Re_2 \approx 68$, both the steady Navier–Stokes solutions and the limit cycles bifurcate into two mirror-symmetric states. Chen et al. (2020) performed a careful parametric analysis of the gap width between the cylinders and associated this behaviour with the ‘deflected regime’, where the base-bleed jet through the gap between the two rightmost cylinders is deflected upward or downward. At $Re_3 \approx 104$, another Hopf bifurcation leads to quasi-periodic flow. Beyond $Re_4 \approx 115$, a chaotic state emerges.

The flow properties can be changed by the rotation of cylinders. The corresponding actuation commands are denoted by

Here, a positive value denotes anticlockwise rotation.

Following Cornejo Maceda et al. (2019), we aim to minimize the averaged parasitic drag power $\bar{J}_a$ while penalizing the averaged actuation power $\bar{J}_b$. The resulting cost function reads

The first contribution, $\bar{J}_a = c_D$, corresponds to the drag coefficient

for the chosen non-dimensionalization. Here, $\bar{F}_D$ denotes the total averaged drag force on all cylinders per unit spanwise length. The second contribution arises from the actuation torque necessary to overcome the skin-friction resistance.

Following Deng et al. (2020), the flow is computed by direct numerical simulation in the computational domain.

We use an in-house implicit finite-element solver, ‘UNS3’, which is third-order accurate in space and time. The unstructured grid in figure 5(b) contains 4225 triangles and 8633 vertices. An earlier grid-convergence study found this resolution sufficient to keep the errors in drag, lift and Strouhal number within 2 per cent.

4.2. Optimized actuation

In the subsequent study, the actuation commands $b_1 = U_F$, $b_2 = U_B$ and $b_3 = U_T$ are bounded by 5 in magnitude, i.e. the search space reads

$$-5 \le b_i \le 5, \qquad i = 1, 2, 3.$$
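For illustration, a Latin hypercube sample of this box (LHS is one of the optimizers applied below) can be drawn as follows. This is the generic stratified construction written in NumPy, not necessarily the variant used in the study; the sample size and the seed are arbitrary choices.

```python
import numpy as np


def latin_hypercube(n: int, bounds: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Draw n Latin hypercube samples inside the box given by bounds (dim x 2).

    Each axis is split into n equal strata; every stratum is hit exactly
    once per axis, and strata are paired across axes by random permutations.
    """
    dim = bounds.shape[0]
    # One random point inside each stratum, in unit coordinates [0, 1).
    u = (np.arange(n)[:, None] + rng.random((n, dim))) / n
    # Shuffle the strata independently along every axis.
    for k in range(dim):
        u[:, k] = u[rng.permutation(n), k]
    lo, hi = bounds[:, 0], bounds[:, 1]
    return lo + u * (hi - lo)


bounds = np.array([[-5.0, 5.0]] * 3)  # |b_i| <= 5 for i = 1, 2, 3
samples = latin_hypercube(10, bounds, np.random.default_rng(0))
```

Each of the ten unit-width strata of every actuation command is visited exactly once, which is the geometric coverage property that makes LHS explorative.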

Previous parametric studies of symmetric actuation have identified Coanda forcing with $b_1 = 0$ and $b_2 = -b_3 \approx 2$ as optimal for net drag reduction, both in low-Reynolds-number direct numerical simulations (Cornejo Maceda et al. 2019) and in high-Reynolds-number unsteady Reynolds-averaged Navier–Stokes simulations (Raibaudo et al. 2020). The chosen bound of $5$ adds a large safety factor to these values, so the optimum can be expected to lie within the chosen range. Steady bleed into the wake region has been reported as another means of wake stabilization, as it suppresses the communication between the upper and lower shear layers. This study starts from base-bleeding control, i.e. from an actuation different from boat tailing.

LHS, DSM and EGM are applied to minimize the net drag power (4.3) with steady actuation in the three-dimensional search space (4.6). Following §§ 2.4 and 2.6, the initial simplex comprises four vertices: the individual with base-bleeding actuation ($b_1 = 0$, $b_2 = -4.5$, $b_3 = 4.5$) and three further individuals, each shifted by $+0.1$ in one of the actuation commands. The individuals and their corresponding costs are listed in table 2. All of these individuals have a larger cost than the unforced benchmark $J = 1.8235$, and increasing the actuation amplitude of the strong base-bleeding forcing leads to a higher cost. Thus, the initial condition seems to pose a challenge for the optimization.
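The initial simplex can be sketched as below, assuming the usual construction in which each additional vertex shifts a single actuation command by $+0.1$ from the base-bleeding vertex. The helper `initial_simplex` is hypothetical.

```python
import numpy as np


def initial_simplex(base: np.ndarray, step: float = 0.1) -> np.ndarray:
    """Return the (dim + 1) x dim vertices of an axis-aligned initial simplex.

    Vertex 0 is the base point; vertex k (k = 1..dim) shifts only the
    k-th actuation command by +step.
    """
    dim = base.size
    return np.vstack([base, base + step * np.eye(dim)])


base_bleed = np.array([0.0, -4.5, 4.5])  # (b_1, b_2, b_3) = (U_F, U_B, U_T)
simplex = initial_simplex(base_bleed)
```

The rows of `simplex` are $(0, -4.5, 4.5)$, $(0.1, -4.5, 4.5)$, $(0, -4.4, 4.5)$ and $(0, -4.5, 4.6)$, i.e. $m = 1, \dots, 4$ under this assumption.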

Table 2. Fluidic pinball: initial simplex ($m = 1, 2, 3, 4$) for the three-dimensional DSM optimization; $b_i$ denotes the circumferential velocity of the cylinders and $J$ the net drag power (4.3).

In this section, the optimization process of EGM is investigated. Figure 6(a) shows the best cost found after $m$ simulations. EGM converges quickly: it has practically converged after $m = 22$ evaluations and yields the near-optimal actuation at the $78$th test.
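The learning curve of figure 6(a) is the running minimum of the evaluated costs. A minimal sketch with a hypothetical function name and made-up example costs, which also flags the evaluations that improve on the iteration history (the runs drawn as solid red circles in figure 6b):

```python
from typing import List, Sequence, Tuple


def learning_curve(costs: Sequence[float]) -> Tuple[List[float], List[bool]]:
    """Best cost found after m evaluations, plus a flag marking new minima.

    costs[m - 1] is the cost of the m-th evaluation (any optimizer).
    A flag is True when that evaluation improves on all previous ones.
    """
    best: List[float] = []
    improved: List[bool] = []
    current = float("inf")
    for j in costs:
        is_better = j < current
        if is_better:
            current = j
        best.append(current)
        improved.append(is_better)
    return best, improved


best, improved = learning_curve([2.0, 2.1, 1.9, 1.95, 1.3])  # illustrative costs
# best     -> [2.0, 2.0, 1.9, 1.9, 1.3]
# improved -> [True, False, True, False, True]
```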

The corresponding cost $J^\star = 1.3$ amounts to a net drag power saving of $29\,\%$ with respect to the unforced value $J_u = 1.8235$. The large number of suboptimal tests indicates a complex control landscape. The drag coefficient falls from $2.8824$ for the unforced flow to $1.3902$ for the actuation (4.7a–d) within a few convective time units, i.e. the near-optimal actuation achieves a 52 % drag reduction. This 52 % reduction of drag power requires an investment of 23 % in actuation energy.
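The percentages above can be checked with a few lines of arithmetic. The sketch assumes that $\bar{J}_a$ scales in proportion to the drag coefficient, so that the relative reduction of drag power equals the relative drag reduction, and that $\bar{J}_b = 0$ for the unforced flow; the actuation share then follows as the difference of the two ratios.

```python
j_unforced = 1.8235    # J_u: unforced net drag power (no actuation, J_b = 0)
j_best = 1.3           # J*: near-optimal EGM cost
cd_unforced = 2.8824   # drag coefficient of the unforced flow
cd_best = 1.3902       # drag coefficient with the near-optimal actuation

net_saving = 1 - j_best / j_unforced        # about 0.287 -> 29 %
drag_reduction = 1 - cd_best / cd_unforced  # about 0.518 -> 52 %
# With J = J_a + J_b and J_u = J_a(unforced), the actuation power relative to
# the unforced drag power is the difference of the two relative reductions.
actuation_share = drag_reduction - net_saving  # about 0.231 -> 23 %
```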

Figure 6. Optimization of the fluidic pinball actuation with EGM. The actuation parameters and cost are visualized as in figures 2 and 3. For enhanced interpretability, selected new minima are displayed as solid yellow circles in the learning curve (a) and, labelled with the corresponding index $m$, in the control landscape (b). Here, $m$ counts the DNS for the net drag power computation. The marked flows $A$–$J$ are explained in the text.

The best actuation resembles nearly symmetric Coanda forcing with a circumferential velocity of 1. This actuation deflects the flow towards the positive $x$-axis and effectively removes the dead-water region with reversed flow. The slight asymmetry of the actuation is not an artefact but a feature of the optimal actuation beyond the pitchfork bifurcation at $Re_2 \approx 68$. The achieved performance and actuation are similar to those of the optimized feedback control obtained by machine learning control (Cornejo Maceda et al. 2019), which comprises a slightly asymmetric Coanda actuation with small phasor control from the front cylinder. Likewise, the optimized experimental stabilization in the high-Reynolds-number regime leads to asymmetric steady actuation (Raibaudo et al. 2020). The asymmetric forcing may be linked to the fact that the unstable asymmetric steady Navier–Stokes solutions have a lower drag than the unstable symmetric solution.

Figure 6(b) shows the control landscape, i.e. a two-dimensional proximity map of the three-dimensional actuation parameters. Neighbouring points in the proximity map correspond to similar actuation vectors. The proximity map is computed with classical multi-dimensional scaling (Cox & Cox 2000). The map shows all simulations performed for figure 6(a): as solid red circles when the evaluation improves the cost with respect to the iteration history, and as open blue circles otherwise. Selected new minima are highlighted with yellow circles: the first run $m = 1$ on the right side, the converged run $m = 78$ on the left and the intermediate run $m = 9$, where the explorative step jumps into a new, better region. The colour bar represents interpolated values of the cost (4.3).
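Classical multi-dimensional scaling can be sketched in a few lines of NumPy: square the pairwise Euclidean distances between the actuation vectors, double-centre them to obtain a Gram matrix, and project onto its two leading eigenvectors. This is the textbook (Torgerson) construction offered as an illustration; the study's implementation, e.g. any re-orientation of $\gamma_1$ and $\gamma_2$, may differ, and eigenvector signs, hence the orientation of the map, are arbitrary.

```python
import numpy as np


def classical_mds(x: np.ndarray, n_components: int = 2) -> np.ndarray:
    """Classical (Torgerson) multi-dimensional scaling of the rows of x.

    x holds one actuation vector b per row. Returns an (n, n_components)
    array of feature coordinates whose Euclidean distances approximate
    those between the rows of x.
    """
    n = x.shape[0]
    # Squared Euclidean distance matrix between actuation vectors.
    diff = x[:, None, :] - x[None, :, :]
    d2 = np.sum(diff**2, axis=-1)
    # Double centering: G = -1/2 C D^2 C with C = I - 11^T / n.
    centering = np.eye(n) - np.ones((n, n)) / n
    gram = -0.5 * centering @ d2 @ centering
    # Leading eigenpairs of the symmetric Gram matrix.
    eigval, eigvec = np.linalg.eigh(gram)
    order = np.argsort(eigval)[::-1][:n_components]
    lam = np.clip(eigval[order], 0.0, None)  # guard against round-off negatives
    return eigvec[:, order] * np.sqrt(lam)
```

For actuation vectors that happen to lie in a plane, the map reproduces their pairwise distances exactly; for general three-dimensional data it yields the classical-scaling (least-squares strain) approximation in two dimensions.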

The meaning of the feature coordinates $\gamma_1$ and $\gamma_2$ is revealed by the following analysis. Ten individuals of the control landscape are selected along the coordinates and marked with the letters $A$ to $J$:

(A) unforced flow in the centre;

(B) base-bleeding flow $m = 1$ as the initial individual;

(C) optimal actuation after $m = 78$ evaluations;

(D, E, J) strong asymmetric actuations at the boundary of the control landscape ($m = 7$, $m = 24$, $m = 11$), showing a strong overall actuation;

(F–I) random actuations along the coordinate $\gamma_2$ in the centre of the control landscape, corresponding to $m = 10$, $m = 19$, $m = 60$ and $m = 51$, respectively.

The flows corresponding to actuations $A$–$J$ in figure 6(b) are depicted in figure 7. The optimized actuation ($C$) yields a partially stabilized flow, like the machine learning feedback control of Cornejo Maceda et al. (2019). Actuation $C$ corresponds to complete stabilization with strong Coanda forcing, located near $\gamma_2 \approx 0$ for small $\gamma_1$. In contrast, flow $B$ on the opposite side of the control landscape represents strong base bleeding. Actuations $J$, $G$ and $H$, located at the top and bottom of the control landscape, are dominated by the Magnus effect. Large positive (negative) feature coordinates $\gamma_2$ are associated with large positive (negative) total circulation and the associated lift force. In summary, the analysis of these points reveals that the feature coordinate $\gamma_1$ corresponds to the strength of the Coanda forcing and is hence related to the drag, whereas $\gamma_2$ is correlated with the total circulation of the cylinder rotations and thus with the lift. Ishar et al. (2019) arrive at a similar interpretation of the proximity map for differently actuated fluidic pinball simulations.
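This interpretation of $\gamma_1$ and $\gamma_2$ can be cross-checked against two simple actuation features. The sketch is hypothetical and neither feature is taken from the study: it uses the fact that the circulation along the surface of a no-slip cylinder of radius $R$ rotating with circumferential velocity $b_i$ is $2\pi R b_i$, and it defines a Coanda strength $(b_2 - b_3)/2$, which is negative for the base-bleeding vertex ($b_2 = -4.5$, $b_3 = 4.5$) and positive for the opposite, boat-tailing sign pattern.

```python
import math
from typing import Sequence, Tuple


def actuation_features(b: Sequence[float], radius: float = 0.5) -> Tuple[float, float]:
    """Return (total circulation, Coanda strength) for b = (b_1, b_2, b_3).

    b_1 = U_F, b_2 = U_B, b_3 = U_T, positive = anti-clockwise.
    Total circulation: sum over cylinders of 2*pi*R*b_i (no-slip surfaces).
    Coanda strength: hypothetical measure (b_2 - b_3) / 2, negative for
    base bleeding and positive for the boat-tailing sign pattern.
    """
    b1, b2, b3 = b
    total_circulation = 2.0 * math.pi * radius * (b1 + b2 + b3)
    coanda_strength = 0.5 * (b2 - b3)
    return total_circulation, coanda_strength
```

Base bleeding $(0, -4.5, 4.5)$ gives zero total circulation and a Coanda strength of $-4.5$, whereas equal rotation of all three cylinders gives a pure Magnus-type circulation with zero Coanda strength.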

Figure 7. Fluidic pinball flows for different actuations of the control landscape (figure 6b). Panels correspond to actuations $A$–$J$, respectively, and display the vorticity of the post-transient snapshot. Positive (negative) vorticity is colour coded in red (blue). The dashed lines are iso-contours of vorticity. The arrows indicate the direction of the cylinder rotations, and the rotation speed is proportional to the angle of the black sector inside each cylinder.

4.3. Downhill simplex method