1. Introduction

The dynamical systems view of turbulence (Hopf Reference Hopf1948; Eckhardt et al. Reference Eckhardt, Faisst, Schmiegel and Schumacher2002; Kerswell Reference Kerswell2005; Eckhardt et al. Reference Eckhardt, Schneider, Hof and Westerweel2007; Gibson, Halcrow & Cvitanovic Reference Gibson, Halcrow and Cvitanovic2008; Cvitanovic & Gibson Reference Cvitanovic and Gibson2010; Kawahara, Uhlmann & van Veen Reference Kawahara, Uhlmann and van Veen2012; Suri et al. Reference Suri, Kageorge, Grigoriev and Schatz2020; Graham & Floryan Reference Graham and Floryan2021; Crowley et al. Reference Crowley, Pughe-Sanford, Toler, Krygier, Grigoriev and Schatz2022) has revolutionised our understanding of transitional and weakly turbulent shear flows. In this perspective, a realisation of a turbulent flow is considered as a trajectory in a very high-dimensional dynamical system, in which unstable periodic orbits (UPOs) and their stable and unstable manifolds serve as a skeleton for the chaotic dynamics (Hopf Reference Hopf1948; Cvitanović et al. Reference Cvitanović, Artuso, Mainieri, Tanner and Vattay2016). However, progress with these ideas in multiscale turbulence at high Reynolds numbers (![]() $Re$) has been slower, which can be largely attributed to the challenge of finding suitable starting guesses for UPOs to input in a Newton–Raphson solver (Kawahara & Kida Reference Kawahara and Kida2001; Viswanath Reference Viswanath2007; Cvitanovic & Gibson Reference Cvitanovic and Gibson2010; Chandler & Kerswell Reference Chandler and Kerswell2013). As such, it is unknown whether a reduced representation of a high-

$Re$) has been slower, which can be largely attributed to the challenge of finding suitable starting guesses for UPOs to input in a Newton–Raphson solver (Kawahara & Kida Reference Kawahara and Kida2001; Viswanath Reference Viswanath2007; Cvitanovic & Gibson Reference Cvitanovic and Gibson2010; Chandler & Kerswell Reference Chandler and Kerswell2013). As such, it is unknown whether a reduced representation of a high-![]() $Re$ flow in terms of UPOs is possible, and how rapidly the number of such solutions grows as

$Re$ flow in terms of UPOs is possible, and how rapidly the number of such solutions grows as ![]() $Re$ increases. In this work, we outline a methodology based on learned embeddings in deep convolutional autoencoders that can both (i) map out the structure/population of solutions in state space at a given

$Re$ increases. In this work, we outline a methodology based on learned embeddings in deep convolutional autoencoders that can both (i) map out the structure/population of solutions in state space at a given ![]() $Re$ and (ii) generate effective guesses for UPOs that describe the high dissipation bursting dynamics.

$Re$ and (ii) generate effective guesses for UPOs that describe the high dissipation bursting dynamics.

Since the first discovery of a UPO in a (transiently) turbulent Couette flow by Kawahara & Kida (Reference Kawahara and Kida2001) there has been a flurry of interest and the convergence of many more UPOs in the same configuration (Viswanath Reference Viswanath2007; Cvitanovic & Gibson Reference Cvitanovic and Gibson2010; Page & Kerswell Reference Page and Kerswell2020) and other simple geometries including two- and three-dimensional Kolmogorov flow (Chandler & Kerswell Reference Chandler and Kerswell2013; Yalnız, Hof & Budanur Reference Yalnız, Hof and Budanur2021), pipe flow (Willis, Cvitanovic & Avila Reference Willis, Cvitanovic and Avila2013; Budanur et al. Reference Budanur, Short, Farazmand, Willis and Cvitanović2017), planar channels (Zammert & Eckhardt Reference Zammert and Eckhardt2014; Hwang, Willis & Cossu Reference Hwang, Willis and Cossu2016) and Taylor–Couette flow (Krygier, Pughe-Sanford & Grigoriev Reference Krygier, Pughe-Sanford and Grigoriev2021; Crowley et al. Reference Crowley, Pughe-Sanford, Toler, Krygier, Grigoriev and Schatz2022). Individual UPOs isolate a closed cycle of dynamical events which are also observed transiently in the full turbulence (Wang, Gibson & Waleffe Reference Wang, Gibson and Waleffe2007; Hall & Sherwin Reference Hall and Sherwin2010), although all solutions found to date have a much narrower range of scales than the full turbulence itself, e.g. domain-filling vortices and streaks (Kawahara & Kida Reference Kawahara and Kida2001), spatially isolated structures (Gibson & Brand Reference Gibson and Brand2014) or attached eddies in unbounded shear (Doohan, Willis & Hwang Reference Doohan, Willis and Hwang2019). Recent work has identified families of equilibria and travelling waves which become increasingly localised as ![]() $Re$ is increased, some of which are reminiscent of structures observed instantaneously in fully turbulent flows (Deguchi Reference Deguchi2015; Eckhardt & Zammert Reference Eckhardt and Zammert2018; Yang, Willis & Hwang Reference Yang, Willis and Hwang2019; Azimi & Schneider Reference Azimi and Schneider2020).

$Re$ is increased, some of which are reminiscent of structures observed instantaneously in fully turbulent flows (Deguchi Reference Deguchi2015; Eckhardt & Zammert Reference Eckhardt and Zammert2018; Yang, Willis & Hwang Reference Yang, Willis and Hwang2019; Azimi & Schneider Reference Azimi and Schneider2020).

The most popular method for computing periodic orbits, termed ‘recurrent flow analysis’, relies on a turbulent orbit shadowing a UPO for at least a full cycle, with the near-recurrence, measured with an ![]() $L_2$ norm, identifying a guess for both the velocity field and period of the solution (Viswanath Reference Viswanath2007; Cvitanovic & Gibson Reference Cvitanovic and Gibson2010; Chandler & Kerswell Reference Chandler and Kerswell2013). This inherently restricts the approach to lower

$L_2$ norm, identifying a guess for both the velocity field and period of the solution (Viswanath Reference Viswanath2007; Cvitanovic & Gibson Reference Cvitanovic and Gibson2010; Chandler & Kerswell Reference Chandler and Kerswell2013). This inherently restricts the approach to lower ![]() $Re$, as the shadowing becomes increasingly unlikely as the Reynolds number is increased due to the increased instability of the UPOs, although in two dimensions the effect may not be uniform as

$Re$, as the shadowing becomes increasingly unlikely as the Reynolds number is increased due to the increased instability of the UPOs, although in two dimensions the effect may not be uniform as ![]() $Re$ is increased due to connection to weakly unstable solutions of the Euler equation in the limit

$Re$ is increased due to connection to weakly unstable solutions of the Euler equation in the limit ![]() $Re\to \infty$ (Zhigunov & Grigoriev Reference Zhigunov and Grigoriev2023). Furthermore, measuring near-recurrence with an Euclidean norm is unlikely to be a suitable choice for a distance metric on the solution manifold, unless the near-recurrence is very close (Page, Brenner & Kerswell Reference Page, Brenner and Kerswell2021). More recent methods have sought to remove the reliance on near-recurrence, for example by using dynamic mode decomposition (Schmid Reference Schmid2010) to identify the signature of nearby periodic solutions (Page & Kerswell Reference Page and Kerswell2020; Marensi et al. Reference Marensi, Yalnız, Hof and Budanur2023), or by using variational methods that start with a closed loop as an initial guess (Lan & Cvitanovic Reference Lan and Cvitanovic2004; Parker & Schneider Reference Parker and Schneider2022).

$Re\to \infty$ (Zhigunov & Grigoriev Reference Zhigunov and Grigoriev2023). Furthermore, measuring near-recurrence with an Euclidean norm is unlikely to be a suitable choice for a distance metric on the solution manifold, unless the near-recurrence is very close (Page, Brenner & Kerswell Reference Page, Brenner and Kerswell2021). More recent methods have sought to remove the reliance on near-recurrence, for example by using dynamic mode decomposition (Schmid Reference Schmid2010) to identify the signature of nearby periodic solutions (Page & Kerswell Reference Page and Kerswell2020; Marensi et al. Reference Marensi, Yalnız, Hof and Budanur2023), or by using variational methods that start with a closed loop as an initial guess (Lan & Cvitanovic Reference Lan and Cvitanovic2004; Parker & Schneider Reference Parker and Schneider2022).

Periodic orbit theory has rigorously established how the statistics of UPOs can be combined to make statistical predictions for chaotic attractors in uniformly hyperbolic systems (Artuso, Aurell & Cvitanovic Reference Artuso, Aurell and Cvitanovic1990a,Reference Artuso, Aurell and Cvitanovicb). The hope in turbulent flows is that a similar approach may be effective even with an incomplete set of UPOs. For instance, see the statistical reconstructions in Page et al. (Reference Page, Norgaard, Brenner and Kerswell2024) or the Markovian models of weak turbulence in Yalnız et al. (Reference Yalnız, Hof and Budanur2021); recent work in the Lorenz equations indicates that it is possible to learn ‘better’ weights than periodic orbit theory using data-driven techniques when only a small number of solutions are available (Pughe-Sanford et al. Reference Pughe-Sanford, Quinn, Balabanski and Grigoriev2023). These types of approach are attractive because they allow one to unambiguously weigh individual dynamical processes in their contribution to the long-time statistics of the flow. However, the ability to apply these ideas at high-![]() $Re$ is currently limited by the search methods described previously, while extrapolating results at low

$Re$ is currently limited by the search methods described previously, while extrapolating results at low ![]() $Re$ upwards is challenging because of the emergence of new solutions in saddle-node bifurcations, the turning-back of solution branches and the fact that solutions that can be continued upwards in

$Re$ upwards is challenging because of the emergence of new solutions in saddle-node bifurcations, the turning-back of solution branches and the fact that solutions that can be continued upwards in ![]() $Re$ may leave the attractor (e.g. Gibson et al. Reference Gibson, Halcrow and Cvitanovic2008; Chandler & Kerswell Reference Chandler and Kerswell2013).

$Re$ may leave the attractor (e.g. Gibson et al. Reference Gibson, Halcrow and Cvitanovic2008; Chandler & Kerswell Reference Chandler and Kerswell2013).

The challenge of extending the dynamical systems approach to high ![]() $Re$, at least in classical time-stepping-based approaches, also involves a computational element associated with both the increased spatial/temporal resolution requirements and the slowdown of the ‘GMRES’ aspect of the Newton algorithm used to perform an approximate inverse of the Jacobian (van Veen et al. Reference van Veen, Vela-Martın, Kawahara and Yasuda2019); note, however, that Zhigunov & Grigoriev (Reference Zhigunov and Grigoriev2023) were able to successfully converge a number of exact solutions of the Euler equation with a regularising hyperviscosity. Modern data-driven and machine-learning techniques can play a role here, for instance by reducing underlying resolution requirements through learned derivative stencils (Kochkov et al. Reference Kochkov, Smith, Alieva, Wang, Brenner and Hoyer2021), or by building effective low-order models of the flow (see, e.g. the overview in Brunton, Noack & Koumoutsakos Reference Brunton, Noack and Koumoutsakos2020). In dynamical systems, neural networks have been highly effective in estimating attractor dimensions (Linot & Graham Reference Linot and Graham2020) and more recently in building low-order models of weakly turbulent flows (De Jesús Reference De Jesús and Graham2023; Linot & Graham Reference Linot and Graham2023). More recent network architectures have shown great promise as simulation tools with the surprising ability to work well when deployed in parts of the state space which were unseen in training (Kim et al. Reference Kim, Lu, Nozari, Pappas and Bassett2021; Röhm, Gauthier & Fischer Reference Röhm, Gauthier and Fischer2021), presumably because they have learnt a latent representation of the governing equations. One aspect of the model reduction considered in Linot & Graham (Reference Linot and Graham2023) that is particularly promising is that the low-order model (a ‘neural’ system of differential equations) was used to find periodic orbits that corresponded to true UPOs of the Navier–Stokes equation. However, all of these studies have only been performed at modest

$Re$, at least in classical time-stepping-based approaches, also involves a computational element associated with both the increased spatial/temporal resolution requirements and the slowdown of the ‘GMRES’ aspect of the Newton algorithm used to perform an approximate inverse of the Jacobian (van Veen et al. Reference van Veen, Vela-Martın, Kawahara and Yasuda2019); note, however, that Zhigunov & Grigoriev (Reference Zhigunov and Grigoriev2023) were able to successfully converge a number of exact solutions of the Euler equation with a regularising hyperviscosity. Modern data-driven and machine-learning techniques can play a role here, for instance by reducing underlying resolution requirements through learned derivative stencils (Kochkov et al. Reference Kochkov, Smith, Alieva, Wang, Brenner and Hoyer2021), or by building effective low-order models of the flow (see, e.g. the overview in Brunton, Noack & Koumoutsakos Reference Brunton, Noack and Koumoutsakos2020). In dynamical systems, neural networks have been highly effective in estimating attractor dimensions (Linot & Graham Reference Linot and Graham2020) and more recently in building low-order models of weakly turbulent flows (De Jesús Reference De Jesús and Graham2023; Linot & Graham Reference Linot and Graham2023). More recent network architectures have shown great promise as simulation tools with the surprising ability to work well when deployed in parts of the state space which were unseen in training (Kim et al. Reference Kim, Lu, Nozari, Pappas and Bassett2021; Röhm, Gauthier & Fischer Reference Röhm, Gauthier and Fischer2021), presumably because they have learnt a latent representation of the governing equations. One aspect of the model reduction considered in Linot & Graham (Reference Linot and Graham2023) that is particularly promising is that the low-order model (a ‘neural’ system of differential equations) was used to find periodic orbits that corresponded to true UPOs of the Navier–Stokes equation. However, all of these studies have only been performed at modest ![]() $Re$ or in other simpler partial differential equations (PDEs). Our focus here is on using learned low-dimensional representations to examine the impact of increasing

$Re$ or in other simpler partial differential equations (PDEs). Our focus here is on using learned low-dimensional representations to examine the impact of increasing ![]() $Re$ on the flow dynamics.

$Re$ on the flow dynamics.

In previous work (Page et al. Reference Page, Brenner and Kerswell2021, hereafter PBK21), we used a deep convolutional neural network architecture to examine the state space of a two-dimensional turbulent Kolmogorov flow (monochromatically forced on the 2-torus) in a weakly turbulent regime at ![]() $Re=40$. By exploiting a continuous symmetry in the flow we performed a ‘latent Fourier analysis’ of the embeddings of vorticity fields, demonstrating that the network built a representation around a set of spatially periodic patterns which strongly resembled known (and unknown) unstable equilibria and travelling wave solutions. In this paper, we consider the same flow but use a more advanced architecture to construct low-order models over a wide range of

$Re=40$. By exploiting a continuous symmetry in the flow we performed a ‘latent Fourier analysis’ of the embeddings of vorticity fields, demonstrating that the network built a representation around a set of spatially periodic patterns which strongly resembled known (and unknown) unstable equilibria and travelling wave solutions. In this paper, we consider the same flow but use a more advanced architecture to construct low-order models over a wide range of ![]() $40 \leq Re \leq 400$. Latent Fourier analysis provides great insight into the changing structure of the state space as

$40 \leq Re \leq 400$. Latent Fourier analysis provides great insight into the changing structure of the state space as ![]() $Re$ increases, by, for example, showing how high-dissipation bursts merge with the low-dissipation dynamics beyond the weakly chaotic flow at

$Re$ increases, by, for example, showing how high-dissipation bursts merge with the low-dissipation dynamics beyond the weakly chaotic flow at ![]() $Re=40$. It also allows us to isolate

$Re=40$. It also allows us to isolate ![]() $O(150)$ new UPOs associated with high-dissipation events that have not been found by any previous approach.

$O(150)$ new UPOs associated with high-dissipation events that have not been found by any previous approach.

Two-dimensional turbulence is a computationally attractive testing ground for these ideas because of the reduced computational requirements and the ability to apply state-of-the-art neural network architectures which have been built with image classification in mind (LeCun, Bengio & Hinton Reference LeCun, Bengio and Hinton2015; Huang et al. Reference Huang, Liu, van der Maaten and Weinberger2017). Kolmogorov flow itself has been widely studied with very large numbers of simple invariant solutions documented in the literature (Chandler & Kerswell Reference Chandler and Kerswell2013; Lucas & Kerswell Reference Lucas and Kerswell2014, Reference Lucas and Kerswell2015; Farazmand Reference Farazmand2016; Parker & Schneider Reference Parker and Schneider2022), although the phenomenology in two dimensions is distinct from three-dimensional wall-bounded flows discussed previously due to the absence of a dissipative anomaly and the inverse cascade of energy to large scales (Onsager Reference Onsager1949; Kraichnan Reference Kraichnan1967; Leith Reference Leith1968; Batchelor Reference Batchelor1969; Kraichnan & Montgomery Reference Kraichnan and Montgomery1980; Boffetta & Ecke Reference Boffetta and Ecke2012). There is the intriguing possibility that UPOs at high-![]() $Re$ in this flow actually connect to (unforced) solutions of the Euler equation (Zhigunov & Grigoriev Reference Zhigunov and Grigoriev2023), and we are able to use our embeddings to explore this effect here by designing a UPO-search strategy for structures with particular streamwise scales. Our analysis highlights the role of small-scale dynamical events in high-dissipation dynamics, and also suggests that the UPOs needed to describe turbulence at high

$Re$ in this flow actually connect to (unforced) solutions of the Euler equation (Zhigunov & Grigoriev Reference Zhigunov and Grigoriev2023), and we are able to use our embeddings to explore this effect here by designing a UPO-search strategy for structures with particular streamwise scales. Our analysis highlights the role of small-scale dynamical events in high-dissipation dynamics, and also suggests that the UPOs needed to describe turbulence at high ![]() $Re$ may need to be combined in space as well as time, a viewpoint which is consistent with idea of a ‘spatiotemporal’ tiling advocated by Gudorf & Cvitanovic (Reference Gudorf and Cvitanovic2019) and Gudorf (Reference Gudorf2020).

$Re$ may need to be combined in space as well as time, a viewpoint which is consistent with idea of a ‘spatiotemporal’ tiling advocated by Gudorf & Cvitanovic (Reference Gudorf and Cvitanovic2019) and Gudorf (Reference Gudorf2020).

The remainder of the manuscript is structured as follows: In § 2 we describe the flow configuration and datasets at the various ![]() $Re$, along with a summary of vortex statistics under increasing

$Re$, along with a summary of vortex statistics under increasing ![]() $Re$. We also outline a new architecture and training procedure that can accurately represent high dissipation events in all the flows considered. In § 3 we perform a latent Fourier analysis for three values of

$Re$. We also outline a new architecture and training procedure that can accurately represent high dissipation events in all the flows considered. In § 3 we perform a latent Fourier analysis for three values of ![]() $Re$, generating low-dimensional visualisations of the state space to examine the changing role of large-scale patterns and the emergence of the condensate. Section 4 summarises our UPO search, where we perform a modified recurrent flow analysis at

$Re$, generating low-dimensional visualisations of the state space to examine the changing role of large-scale patterns and the emergence of the condensate. Section 4 summarises our UPO search, where we perform a modified recurrent flow analysis at ![]() $Re=40$ before using the latent Fourier modes themselves to find large numbers of new high-dissipation UPOs. Finally, conclusions are provided in § 5.

$Re=40$ before using the latent Fourier modes themselves to find large numbers of new high-dissipation UPOs. Finally, conclusions are provided in § 5.

2. Flow configuration and neural networks

2.1. Kolmogorov flow

We consider two-dimensional flow on the surface of a 2-torus, driven by a monochromatic body force in the streamwise direction (‘Kolmogorov’ flow). The out-of-plane vorticity satisfies

where ![]() $\omega = \partial _x v - \partial _y u$, with

$\omega = \partial _x v - \partial _y u$, with ![]() $\boldsymbol u = (u, v)$. In (2.1) we have used the amplitude of the forcing from the momentum equation,

$\boldsymbol u = (u, v)$. In (2.1) we have used the amplitude of the forcing from the momentum equation, ![]() $\chi$, and the fundamental vertical wavenumber of the box,

$\chi$, and the fundamental vertical wavenumber of the box, ![]() $k= 2{\rm \pi} / L_y$, to define a length scale,

$k= 2{\rm \pi} / L_y$, to define a length scale, ![]() $k^{-1}$, and timescale,

$k^{-1}$, and timescale, ![]() $\sqrt {1/(k \chi )}$, so that

$\sqrt {1/(k \chi )}$, so that ![]() $Re:=\sqrt {\chi }k^{-3/2}/\nu$. Throughout we set

$Re:=\sqrt {\chi }k^{-3/2}/\nu$. Throughout we set ![]() $L_x = L_y$ and the forcing wavenumber

$L_x = L_y$ and the forcing wavenumber ![]() $n=4$ as in previous work (Chandler & Kerswell Reference Chandler and Kerswell2013, PBK21).

$n=4$ as in previous work (Chandler & Kerswell Reference Chandler and Kerswell2013, PBK21).

Equation (2.1) is equivariant under continuous shifts in the streamwise direction, ![]() $\mathscr T_s: \omega (x,y) \to \omega (x+s,y)$, under shift-reflects by a half-wavelength in

$\mathscr T_s: \omega (x,y) \to \omega (x+s,y)$, under shift-reflects by a half-wavelength in ![]() $y$,

$y$, ![]() $\mathscr S: \omega (x, y) \to -\omega (-x, y+{\rm \pi} /4)$ and under a rotation by

$\mathscr S: \omega (x, y) \to -\omega (-x, y+{\rm \pi} /4)$ and under a rotation by ![]() ${\rm \pi}$,

${\rm \pi}$, ![]() $\mathscr R: \omega (x,y) \to \omega (-x,-y)$. In contrast with other recent studies (Linot & Graham Reference Linot and Graham2020; De Jesús Reference De Jesús and Graham2023), which pre-process data by pulling back continuous symmetries using the method of slices (Budanur et al. Reference Budanur, Cvitanović, Davidchack and Siminos2015, Reference Budanur, Short, Farazmand, Willis and Cvitanović2017), we explicitly do not perform symmetry reduction. Our neural networks, described fully later in this section and in Appendix A, are all fully convolutional without fully connected layers: they are invariant under translations (albeit discrete translations set by the spatial resolution) hence the training will be unaffected by the application of pullback to the data.

$\mathscr R: \omega (x,y) \to \omega (-x,-y)$. In contrast with other recent studies (Linot & Graham Reference Linot and Graham2020; De Jesús Reference De Jesús and Graham2023), which pre-process data by pulling back continuous symmetries using the method of slices (Budanur et al. Reference Budanur, Cvitanović, Davidchack and Siminos2015, Reference Budanur, Short, Farazmand, Willis and Cvitanović2017), we explicitly do not perform symmetry reduction. Our neural networks, described fully later in this section and in Appendix A, are all fully convolutional without fully connected layers: they are invariant under translations (albeit discrete translations set by the spatial resolution) hence the training will be unaffected by the application of pullback to the data.

We consider a range of Reynolds numbers, ![]() $Re\in \{40, 80, 100, 400\}$. For the majority of this paper we will focus only on

$Re\in \{40, 80, 100, 400\}$. For the majority of this paper we will focus only on ![]() $Re\in \{40, 100, 400\}$, with the

$Re\in \{40, 100, 400\}$, with the ![]() $Re=80$ results included only to verify trends in the autoencoder performance. Our training and test datasets are generated with the open-source, fully differentiable flow solver JAX-CFD (Kochkov et al. Reference Kochkov, Smith, Alieva, Wang, Brenner and Hoyer2021). The workflow for converging UPOs was refined during the course of the project: we primarily converge periodic orbits in the spectral version of JAX-CFD (Dresdner et al. Reference Dresdner, Kochkov, Norgaard, Zepeda-Núñez, Smith, Brenner and Hoyer2023), though some results at

$Re=80$ results included only to verify trends in the autoencoder performance. Our training and test datasets are generated with the open-source, fully differentiable flow solver JAX-CFD (Kochkov et al. Reference Kochkov, Smith, Alieva, Wang, Brenner and Hoyer2021). The workflow for converging UPOs was refined during the course of the project: we primarily converge periodic orbits in the spectral version of JAX-CFD (Dresdner et al. Reference Dresdner, Kochkov, Norgaard, Zepeda-Núñez, Smith, Brenner and Hoyer2023), though some results at ![]() $Re=40$ were obtained with an in-house spectral code (Chandler & Kerswell Reference Chandler and Kerswell2013; Lucas & Kerswell Reference Lucas and Kerswell2014). Resolution requirements were adjusted based on the specific

$Re=40$ were obtained with an in-house spectral code (Chandler & Kerswell Reference Chandler and Kerswell2013; Lucas & Kerswell Reference Lucas and Kerswell2014). Resolution requirements were adjusted based on the specific ![]() $Re$ considered, ranging from

$Re$ considered, ranging from ![]() $256^2$ at

$256^2$ at ![]() $Re=40$ (in the finite-difference version of JAX-CFD) to

$Re=40$ (in the finite-difference version of JAX-CFD) to ![]() $1024^2$ at

$1024^2$ at ![]() $Re=400$. Lower resolutions (by a factor of two) were used in the spectral solvers when converging UPOs. We downsampled our higher

$Re=400$. Lower resolutions (by a factor of two) were used in the spectral solvers when converging UPOs. We downsampled our higher ![]() $Re$ training and test data to a resolution of

$Re$ training and test data to a resolution of ![]() $128^2$ for consistent input to the neural networks. Note that previous work has demonstrated the adequacy of a

$128^2$ for consistent input to the neural networks. Note that previous work has demonstrated the adequacy of a ![]() $128^2$ resolution in a spectral solver as high as

$128^2$ resolution in a spectral solver as high as ![]() $Re=100$ (see Chandler & Kerswell Reference Chandler and Kerswell2013; Lucas & Kerswell Reference Lucas and Kerswell2015), hence downsampling will not be an issue for most

$Re=100$ (see Chandler & Kerswell Reference Chandler and Kerswell2013; Lucas & Kerswell Reference Lucas and Kerswell2015), hence downsampling will not be an issue for most ![]() $Re$ considered. The truncation at

$Re$ considered. The truncation at ![]() $Re=400$ retains around nine decades in the time-averaged enstrophy spectrum (not shown) hence results in a loss of resolution of small-scale vortical structures of amplitude

$Re=400$ retains around nine decades in the time-averaged enstrophy spectrum (not shown) hence results in a loss of resolution of small-scale vortical structures of amplitude ![]() ${\lesssim } 10^{-4}$. This does not affect the performance of the autoencoder, which we will show to be equally effective at high dissipation when more small-scale structure is present.

${\lesssim } 10^{-4}$. This does not affect the performance of the autoencoder, which we will show to be equally effective at high dissipation when more small-scale structure is present.

At each ![]() $Re \in \{40, 80, 100\}$ we generated a training dataset by initialising

$Re \in \{40, 80, 100\}$ we generated a training dataset by initialising ![]() $1000$ trajectories from random initial conditions, discarding an initial transient before saving

$1000$ trajectories from random initial conditions, discarding an initial transient before saving ![]() $100$ snapshots from each, with snapshots separated by an advective time unit (in dimensional variables this corresponds to

$100$ snapshots from each, with snapshots separated by an advective time unit (in dimensional variables this corresponds to ![]() $\Delta t^* = 1/\sqrt {k \chi }$). When training the neural networks we applied a random symmetry transform to each of the

$\Delta t^* = 1/\sqrt {k \chi }$). When training the neural networks we applied a random symmetry transform to each of the ![]() $N = 10^5$ snapshots

$N = 10^5$ snapshots

each time we looped through the dataset. This data augmentation was included for historical reasons from previous architectural iterations which featured fully connected layers. As a result of the purely convolutional nature of the network, some of the symmetry operations are likely redundant: the network is essentially equivariant under translations in ![]() $x$ and

$x$ and ![]() $y$ by design (approximately if the shifts are not integer numbers of grid points). However, convolutions are not invariant under the reflect or rotation operations, hence data augmentation is beneficial there. Note that the data augmentation is applied randomly as batches of snapshots are fed into the optimiser and we do not generate copies of the original dataset. Test datasets of the same size were also generated in the same manner. For the highest

$y$ by design (approximately if the shifts are not integer numbers of grid points). However, convolutions are not invariant under the reflect or rotation operations, hence data augmentation is beneficial there. Note that the data augmentation is applied randomly as batches of snapshots are fed into the optimiser and we do not generate copies of the original dataset. Test datasets of the same size were also generated in the same manner. For the highest ![]() $Re=400$, our training dataset was smaller and formed of

$Re=400$, our training dataset was smaller and formed of ![]() $700$ trajectories and our test dataset at

$700$ trajectories and our test dataset at ![]() $Re=400$ consisted of

$Re=400$ consisted of ![]() $200$ trajectories. In training (network architecture discussed in detail below) we reserved

$200$ trajectories. In training (network architecture discussed in detail below) we reserved ![]() $10\,\%$ of the training data as a ‘validation’ set which is not used to update the model parameters. The performance of the model is recorded on the validation set as the model is trained as a method of determining whether overfitting occurs. This can be detected should the loss evaluated on the validation set depart significantly from that reported on the training data (Bishop Reference Bishop2006).

$10\,\%$ of the training data as a ‘validation’ set which is not used to update the model parameters. The performance of the model is recorded on the validation set as the model is trained as a method of determining whether overfitting occurs. This can be detected should the loss evaluated on the validation set depart significantly from that reported on the training data (Bishop Reference Bishop2006).

The dynamical regimes considered in this paper range from a weakly chaotic flow at ![]() $Re=40$ to the formation of a pair of large-scale vortices that dominate the flow, the ‘condensate’ (Onsager Reference Onsager1949; Smith & Yakhot Reference Smith and Yakhot1993), at

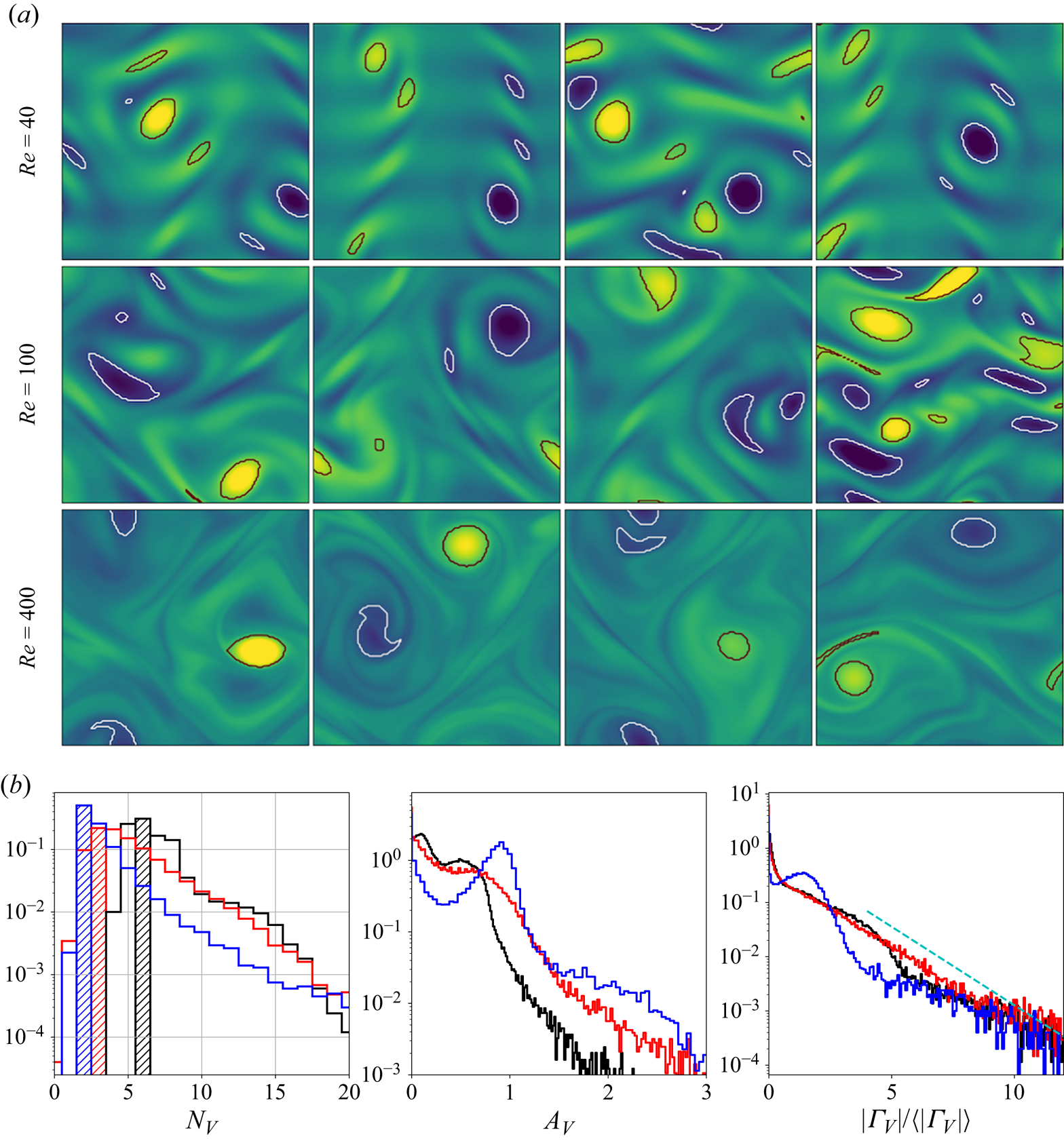

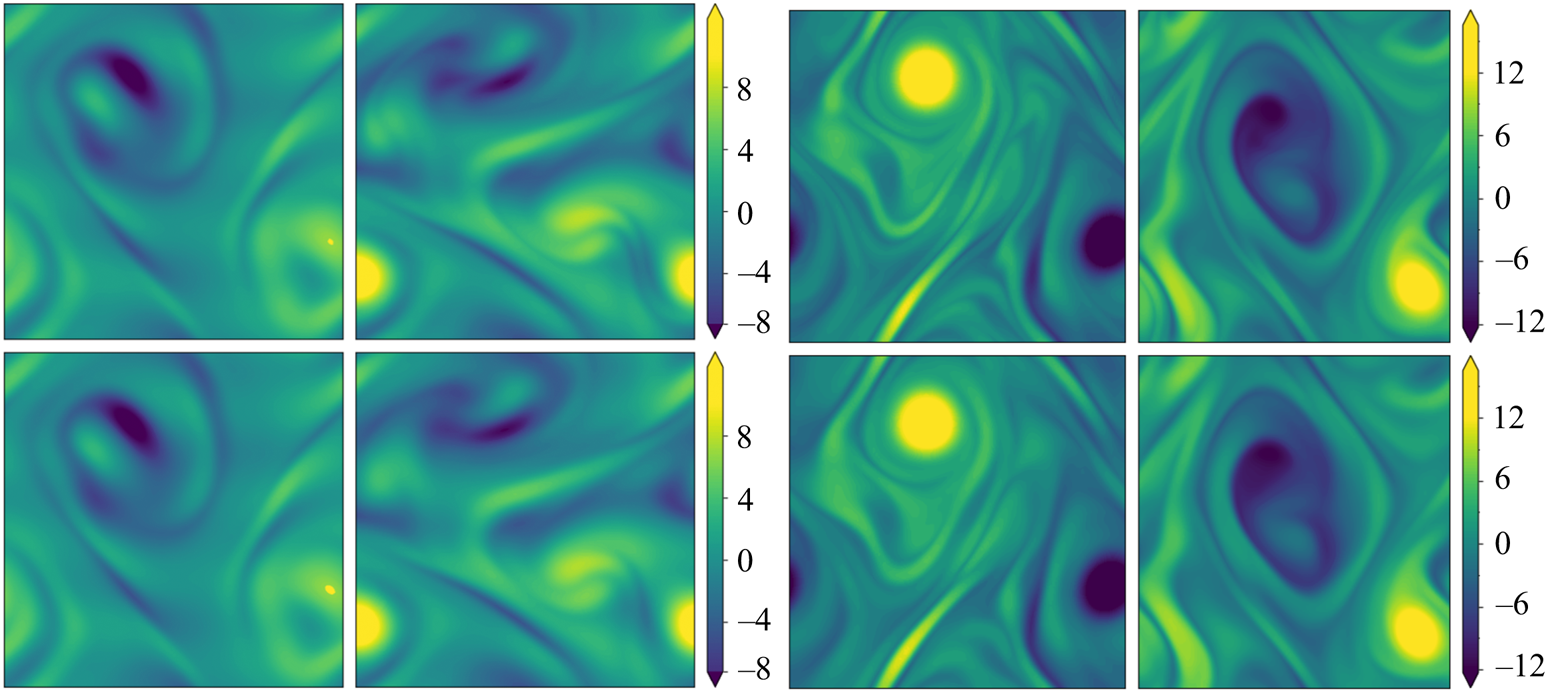

$Re=40$ to the formation of a pair of large-scale vortices that dominate the flow, the ‘condensate’ (Onsager Reference Onsager1949; Smith & Yakhot Reference Smith and Yakhot1993), at ![]() $Re=400$. These qualitative differences in the flow are explored in figure 1 where we report snapshots at

$Re=400$. These qualitative differences in the flow are explored in figure 1 where we report snapshots at ![]() $Re\in \{40, 100, 400\}$ (

$Re\in \{40, 100, 400\}$ (![]() $Re=80$ is largely indistinguishable from

$Re=80$ is largely indistinguishable from ![]() $Re=100$) along with some statistical analysis of the vortical structures present in the flow. The vortex statistics shown here are computed by first computing the root-mean-square vorticity fluctuations

$Re=100$) along with some statistical analysis of the vortical structures present in the flow. The vortex statistics shown here are computed by first computing the root-mean-square vorticity fluctuations ![]() $\omega _{{RMS}} := \sqrt {\langle (\omega (\boldsymbol x, t) - \bar {\omega } (y))^2\rangle }$, where

$\omega _{{RMS}} := \sqrt {\langle (\omega (\boldsymbol x, t) - \bar {\omega } (y))^2\rangle }$, where ![]() $\overline {\bullet }$ is a time, (‘horizontal’)

$\overline {\bullet }$ is a time, (‘horizontal’) ![]() $x$ and ensemble average and

$x$ and ensemble average and ![]() $\langle \bullet \rangle :=\int ^{2{\rm \pi} }_0 \overline {\bullet } \,{{\rm d}\kern 0.05em y}/2{\rm \pi}$ is fully spatially averaged. We then extract spatially localised ‘vortices’ as connected regions where

$\langle \bullet \rangle :=\int ^{2{\rm \pi} }_0 \overline {\bullet } \,{{\rm d}\kern 0.05em y}/2{\rm \pi}$ is fully spatially averaged. We then extract spatially localised ‘vortices’ as connected regions where ![]() $|\omega (\boldsymbol x, t) - \langle \omega \rangle | \geq 2\omega _{{RMS}}$.

$|\omega (\boldsymbol x, t) - \langle \omega \rangle | \geq 2\omega _{{RMS}}$.

Figure 1. Vorticity snapshots and statistics for ![]() $Re=40$,

$Re=40$, ![]() $100$ and

$100$ and ![]() $400$. Results at

$400$. Results at ![]() $Re=80$ are qualitatively very similar to those at

$Re=80$ are qualitatively very similar to those at ![]() $Re=100$ and are not shown. Top three rows show snapshots from within the test dataset at

$Re=100$ and are not shown. Top three rows show snapshots from within the test dataset at ![]() $Re=40$ (top; maximum contour levels

$Re=40$ (top; maximum contour levels ![]() $\pm 8$),

$\pm 8$), ![]() $Re=100$ (centre; maximum contour levels

$Re=100$ (centre; maximum contour levels ![]() $\pm 10$) and

$\pm 10$) and ![]() $Re=400$ (bottom; maximum contour levels

$Re=400$ (bottom; maximum contour levels ![]() $\pm 20$). Black/white lines indicate connected regions (‘vortices’ discussed in the text) where

$\pm 20$). Black/white lines indicate connected regions (‘vortices’ discussed in the text) where ![]() $|\omega (\boldsymbol x, t) - \langle \omega \rangle | \geq 2\omega _{{RMS}}$. Bottom row summarises the vortex statistics in the test datasets at

$|\omega (\boldsymbol x, t) - \langle \omega \rangle | \geq 2\omega _{{RMS}}$. Bottom row summarises the vortex statistics in the test datasets at ![]() $Re=40$ (black),

$Re=40$ (black), ![]() $100$ (red) and

$100$ (red) and ![]() $400$ (blue), from left to right showing PDFs of numbers of vortices

$400$ (blue), from left to right showing PDFs of numbers of vortices ![]() $N_V$ (modal contribution highlighted with the shaded vertical bars), vortex area

$N_V$ (modal contribution highlighted with the shaded vertical bars), vortex area ![]() $A_V$ and normalised vortex circulation

$A_V$ and normalised vortex circulation ![]() $|\varGamma _V|/\langle |\varGamma _V|\rangle$ (dashed line is

$|\varGamma _V|/\langle |\varGamma _V|\rangle$ (dashed line is ![]() $\exp (-(2/3) |\varGamma _V| / \langle |\varGamma _V|\rangle )$).

$\exp (-(2/3) |\varGamma _V| / \langle |\varGamma _V|\rangle )$).

The vortex statistics shown in figure 1 indicate the persistence of a large-scale flow structure at ![]() $Re=400$: at this point the flow spends 50 % of its time in a state where there are a pair of vortices, and often higher numbers of vortices,

$Re=400$: at this point the flow spends 50 % of its time in a state where there are a pair of vortices, and often higher numbers of vortices, ![]() $N_V > 2$, actually indicates a state like that shown in the third and fourth snapshots at

$N_V > 2$, actually indicates a state like that shown in the third and fourth snapshots at ![]() $Re=400$ where a small-scale region of high shear qualifies as a ‘vortex’ under our selection criteria. These observations are clearly supported by a peak in both the vortex area and circulation probability density functions (PDFs) at

$Re=400$ where a small-scale region of high shear qualifies as a ‘vortex’ under our selection criteria. These observations are clearly supported by a peak in both the vortex area and circulation probability density functions (PDFs) at ![]() $Re=400$. In contrast, the statistics at

$Re=400$. In contrast, the statistics at ![]() $Re=40$ and

$Re=40$ and ![]() $Re=100$ do not indicate the dominance of a single large-scale coherent state, but instead the vortex statistics are qualitatively similar to those reported in the early stages of decaying two-dimensional turbulence reported by Jiménez (Reference Jiménez2020); see the dashed line in the circulation statistics shown in figure 1.

$Re=100$ do not indicate the dominance of a single large-scale coherent state, but instead the vortex statistics are qualitatively similar to those reported in the early stages of decaying two-dimensional turbulence reported by Jiménez (Reference Jiménez2020); see the dashed line in the circulation statistics shown in figure 1.

As observed in earlier studies (Chandler & Kerswell Reference Chandler and Kerswell2013; Farazmand & Sapsis Reference Farazmand and Sapsis2017, PBK21), the ‘turbulence’ at ![]() $Re=40$ is only weakly chaotic and spends much of its time in a state which is qualitatively similar to the first non-trivial structure to bifurcate off the laminar solution at

$Re=40$ is only weakly chaotic and spends much of its time in a state which is qualitatively similar to the first non-trivial structure to bifurcate off the laminar solution at ![]() $Re \approx 10$, but with intermittent occurrences of more complex high-dissipation structures. In contrast, the dynamics at

$Re \approx 10$, but with intermittent occurrences of more complex high-dissipation structures. In contrast, the dynamics at ![]() $Re=100$ are much richer and display an interplay between larger-scale structures and small-scale dynamics. The increasing dominance of large-scale structure as

$Re=100$ are much richer and display an interplay between larger-scale structures and small-scale dynamics. The increasing dominance of large-scale structure as ![]() $Re$ increases can be tied to the emergence of new simple invariant solutions, which can presumably be connected to solutions of the Euler equations as

$Re$ increases can be tied to the emergence of new simple invariant solutions, which can presumably be connected to solutions of the Euler equations as ![]() $Re\to \infty$ (Zhigunov & Grigoriev Reference Zhigunov and Grigoriev2023). Similarly, the decreasing role of smaller scale vortical events in this limit can be associated with the movement of a set of small-scale UPOs away from the attractor. Our aim is to use learned embeddings within deep autoencoders to explore this process, mapping out the structure of the state space under increasing

$Re\to \infty$ (Zhigunov & Grigoriev Reference Zhigunov and Grigoriev2023). Similarly, the decreasing role of smaller scale vortical events in this limit can be associated with the movement of a set of small-scale UPOs away from the attractor. Our aim is to use learned embeddings within deep autoencoders to explore this process, mapping out the structure of the state space under increasing ![]() $Re$ and finding the associated UPOs.

$Re$ and finding the associated UPOs.

2.2. DenseNet autoencoders

We construct low-dimensional representations of Kolmogorov flow by training a family of deep convolutional autoencoders, ![]() $\{\mathscr A_m\}$, which seek to reconstruct their inputs

$\{\mathscr A_m\}$, which seek to reconstruct their inputs

The autoencoder takes an input vorticity field and constructs a low-dimensional embedding via an encoder function, ![]() $\mathscr E_m: \mathbb {R}^{N_x\times N_y} \to \mathbb {R}^m$, before a decoder converts the embedding back into a vorticity snapshot,

$\mathscr E_m: \mathbb {R}^{N_x\times N_y} \to \mathbb {R}^m$, before a decoder converts the embedding back into a vorticity snapshot, ![]() $\mathscr D_m: \mathbb {R}^m \to \mathbb {R}^{N_x\times N_y}$. The scalar vorticity is input to the autoencoder as an

$\mathscr D_m: \mathbb {R}^m \to \mathbb {R}^{N_x\times N_y}$. The scalar vorticity is input to the autoencoder as an ![]() $N_x\times N_y$ ‘image’ with a single channel representing the value of

$N_x\times N_y$ ‘image’ with a single channel representing the value of ![]() $\omega (x,y)$ at the grid points.

$\omega (x,y)$ at the grid points.

The architecture trained in PBK21 performed well at ![]() $Re=40$, but we were unable to obtain satisfactory performance at higher

$Re=40$, but we were unable to obtain satisfactory performance at higher ![]() $Re$ with the same network. Even the relatively strong performance at

$Re$ with the same network. Even the relatively strong performance at ![]() $Re=40$ in the PBK21 model was skewed towards low-dissipation snapshots, with the performance on the high-dissipation events being substantially weaker. To address this performance issue, we: (i) designed a new architecture with a more complex graph structure and feature map shapes motivated by discrete symmetries in the system; and (ii) trained the network with a modified loss function to encourage a good representation of the rarer, high-dissipation events.

$Re=40$ in the PBK21 model was skewed towards low-dissipation snapshots, with the performance on the high-dissipation events being substantially weaker. To address this performance issue, we: (i) designed a new architecture with a more complex graph structure and feature map shapes motivated by discrete symmetries in the system; and (ii) trained the network with a modified loss function to encourage a good representation of the rarer, high-dissipation events.

The structure of the new autoencoder is a purely convolutional network, with dimensionality reduction performed via max pooling as in PBK21. However, we use so-called ‘dense blocks’ (Huang et al. Reference Huang, Liu, van der Maaten and Weinberger2017, Reference Huang, Liu, Pleiss, Van Der Maaten and Weinberger2019) in place of single convolutional layers, allowing for increasingly abstract features as the outputs of multiple previous convolutions are concatenated prior to the next convolution operation. Our dense blocks (described fully in Appendix A) are each made up of three individual convolutions, with the output feature maps of each convolution then concatenated with the input. This means that the feature maps at a given scale (before a pooling operation is applied) can be much richer than a single convolution operation. For instance, if the input to a particular dense block is an image with ![]() $K$ channels, and each convolution adds 32 features, then the output of the block is an image with

$K$ channels, and each convolution adds 32 features, then the output of the block is an image with ![]() $K + 3 \times 32$ channels. For comparison, a standard convolution with 32 filters of size

$K + 3 \times 32$ channels. For comparison, a standard convolution with 32 filters of size ![]() $N_x' \times N_y'$ on the same image would be specified by

$N_x' \times N_y'$ on the same image would be specified by ![]() $O(32 \times N_x' \times N_y' \times K)$ parameters, whereas the dense block described here requires

$O(32 \times N_x' \times N_y' \times K)$ parameters, whereas the dense block described here requires ![]() $O(32 \times N_x' \times N_y' \times (96 + 3K))$ parameters due to the repeated concatenation with the upstream input feature maps. We also made other minor modifications to the network that are detailed in Appendix A. At the innermost level, the network represents the input snapshot with a set of

$O(32 \times N_x' \times N_y' \times (96 + 3K))$ parameters due to the repeated concatenation with the upstream input feature maps. We also made other minor modifications to the network that are detailed in Appendix A. At the innermost level, the network represents the input snapshot with a set of ![]() $M$ feature maps of shape

$M$ feature maps of shape ![]() $4 \times 8$, where the ‘8’ (corresponding to the physical

$4 \times 8$, where the ‘8’ (corresponding to the physical ![]() $y$ direction) is fixed by the 8-fold shift-reflect symmetry,

$y$ direction) is fixed by the 8-fold shift-reflect symmetry, ![]() $\mathscr S^8 \omega \equiv \omega$, in the system. The restriction to purely convolutional layers and the smallest feature map size constrains the dimension of the latent space to be a multiple of 32. Overall our new model is roughly twice as complex as that outlined in PBK21, with

$\mathscr S^8 \omega \equiv \omega$, in the system. The restriction to purely convolutional layers and the smallest feature map size constrains the dimension of the latent space to be a multiple of 32. Overall our new model is roughly twice as complex as that outlined in PBK21, with ![]() ${\sim }2.15 \times 10^6$ trainable parameters for the largest models (

${\sim }2.15 \times 10^6$ trainable parameters for the largest models (![]() $m=1024$): the increased cost of the dense blocks being offset somewhat by the absence of any fully connected layers. For context, training for 500 epochs on

$m=1024$): the increased cost of the dense blocks being offset somewhat by the absence of any fully connected layers. For context, training for 500 epochs on ![]() $10^5$ vorticity snapshots (see Appendix A) takes roughly 48 h on a single NVIDIA A100 GPU (80 GB memory).

$10^5$ vorticity snapshots (see Appendix A) takes roughly 48 h on a single NVIDIA A100 GPU (80 GB memory).

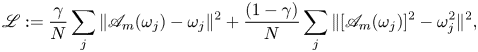

We train the networks to minimise the following loss:

\begin{equation} \mathscr L := \frac{\gamma}{N}\sum_j \| \mathscr A_m (\omega_j) - \omega_j \|^2 + \frac{(1-\gamma)}{N}\sum_j \| [\mathscr A_m (\omega_j)]^2 - \omega_j^2 \|^2, \end{equation}

\begin{equation} \mathscr L := \frac{\gamma}{N}\sum_j \| \mathscr A_m (\omega_j) - \omega_j \|^2 + \frac{(1-\gamma)}{N}\sum_j \| [\mathscr A_m (\omega_j)]^2 - \omega_j^2 \|^2, \end{equation}

over ![]() $500$ epochs (batch size of

$500$ epochs (batch size of ![]() $64$, constant learning rate in an Adam optimiser

$64$, constant learning rate in an Adam optimiser ![]() $\eta =5 \times 10^{-4}$, see Kingma & Ba Reference Kingma and Ba2015). An additional term has been added to the standard ‘mean squared error’ in the loss function (2.4). The new term is essentially a mean squared error on the square of the vorticity field: high-dissipation events are associated with large values of the enstrophy

$\eta =5 \times 10^{-4}$, see Kingma & Ba Reference Kingma and Ba2015). An additional term has been added to the standard ‘mean squared error’ in the loss function (2.4). The new term is essentially a mean squared error on the square of the vorticity field: high-dissipation events are associated with large values of the enstrophy ![]() $\iint \omega ^2 \,{\rm d}^2 \boldsymbol x$, and the new term in (2.4) makes the strongest contribution to the overall loss in these cases, whereas quiescent, low-dissipation snapshots are dominated by the standard mean-squared-error term. The rationale here is to encourage the network to learn a reasonable representation of high-dissipation events, particularly as we increase

$\iint \omega ^2 \,{\rm d}^2 \boldsymbol x$, and the new term in (2.4) makes the strongest contribution to the overall loss in these cases, whereas quiescent, low-dissipation snapshots are dominated by the standard mean-squared-error term. The rationale here is to encourage the network to learn a reasonable representation of high-dissipation events, particularly as we increase ![]() $Re$. Treatment via a modified loss is required as these events make up only a small fraction of the training dataset: a similar effect could perhaps be anticipated if the training data were drawn from a modified distribution skewed to high-dissipation events rather than sampled from the invariant measure. Intriguingly, we found that the addition of this term lead to better overall mean squared error than a network trained to minimise the mean squared error alone. We trained independent networks at

$Re$. Treatment via a modified loss is required as these events make up only a small fraction of the training dataset: a similar effect could perhaps be anticipated if the training data were drawn from a modified distribution skewed to high-dissipation events rather than sampled from the invariant measure. Intriguingly, we found that the addition of this term lead to better overall mean squared error than a network trained to minimise the mean squared error alone. We trained independent networks at ![]() $Re=40$ with various

$Re=40$ with various ![]() $\gamma$ and found

$\gamma$ and found ![]() $\gamma =1/2$ to be most effective. We fix

$\gamma =1/2$ to be most effective. We fix ![]() $\gamma$ to this value for all networks and

$\gamma$ to this value for all networks and ![]() $Re$.

$Re$.

The networks were trained by normalising the inputs ![]() $\omega \to \omega / \omega _{{norm}}$, where

$\omega \to \omega / \omega _{{norm}}$, where ![]() $\omega _{{norm}} = 25$ for

$\omega _{{norm}} = 25$ for ![]() $Re \in \{40, 80, 100\}$ and

$Re \in \{40, 80, 100\}$ and ![]() $\omega _{{norm}} = 60$ for

$\omega _{{norm}} = 60$ for ![]() $Re=400$. For a consistent analysis of the performance across the architectures and

$Re=400$. For a consistent analysis of the performance across the architectures and ![]() $Re$, we do not report the loss (2.4) directly, but instead compute the relative error for each snapshot

$Re$, we do not report the loss (2.4) directly, but instead compute the relative error for each snapshot

where the norm ![]() $\| \omega \| := \sqrt {(1/4{\rm \pi} ^2) \iint \omega ^2 \,{\rm d}^2 \boldsymbol x }$. The average error over the test set for our new architecture is reported for a range of embedding dimensions

$\| \omega \| := \sqrt {(1/4{\rm \pi} ^2) \iint \omega ^2 \,{\rm d}^2 \boldsymbol x }$. The average error over the test set for our new architecture is reported for a range of embedding dimensions ![]() $m \in \{32, 64,\ldots, 1024\}$ and all

$m \in \{32, 64,\ldots, 1024\}$ and all ![]() $Re$ in figure 2. Unsurprisingly, there is monotonic reduction in the error with increasing network dimensionality

$Re$ in figure 2. Unsurprisingly, there is monotonic reduction in the error with increasing network dimensionality ![]() $m$ at fixed

$m$ at fixed ![]() $Re$, and monotonic increase in the error with increasing

$Re$, and monotonic increase in the error with increasing ![]() $Re$ at fixed

$Re$ at fixed ![]() $m$. The best performing network at

$m$. The best performing network at ![]() $Re=40$ shows an average relative error of

$Re=40$ shows an average relative error of ![]() $\sim$1 % (exact test-set mean error

$\sim$1 % (exact test-set mean error ![]() $0.016$, and standard deviation

$0.016$, and standard deviation ![]() $0.0076$), whereas the performance at

$0.0076$), whereas the performance at ![]() $Re=400$ has dropped to

$Re=400$ has dropped to ![]() $\sim$8 % (mean test-set error of

$\sim$8 % (mean test-set error of ![]() $0.077$ and standard deviation

$0.077$ and standard deviation ![]() $0.017$). Visually, snapshots from all networks are very hard to distinguish from the ground truth once

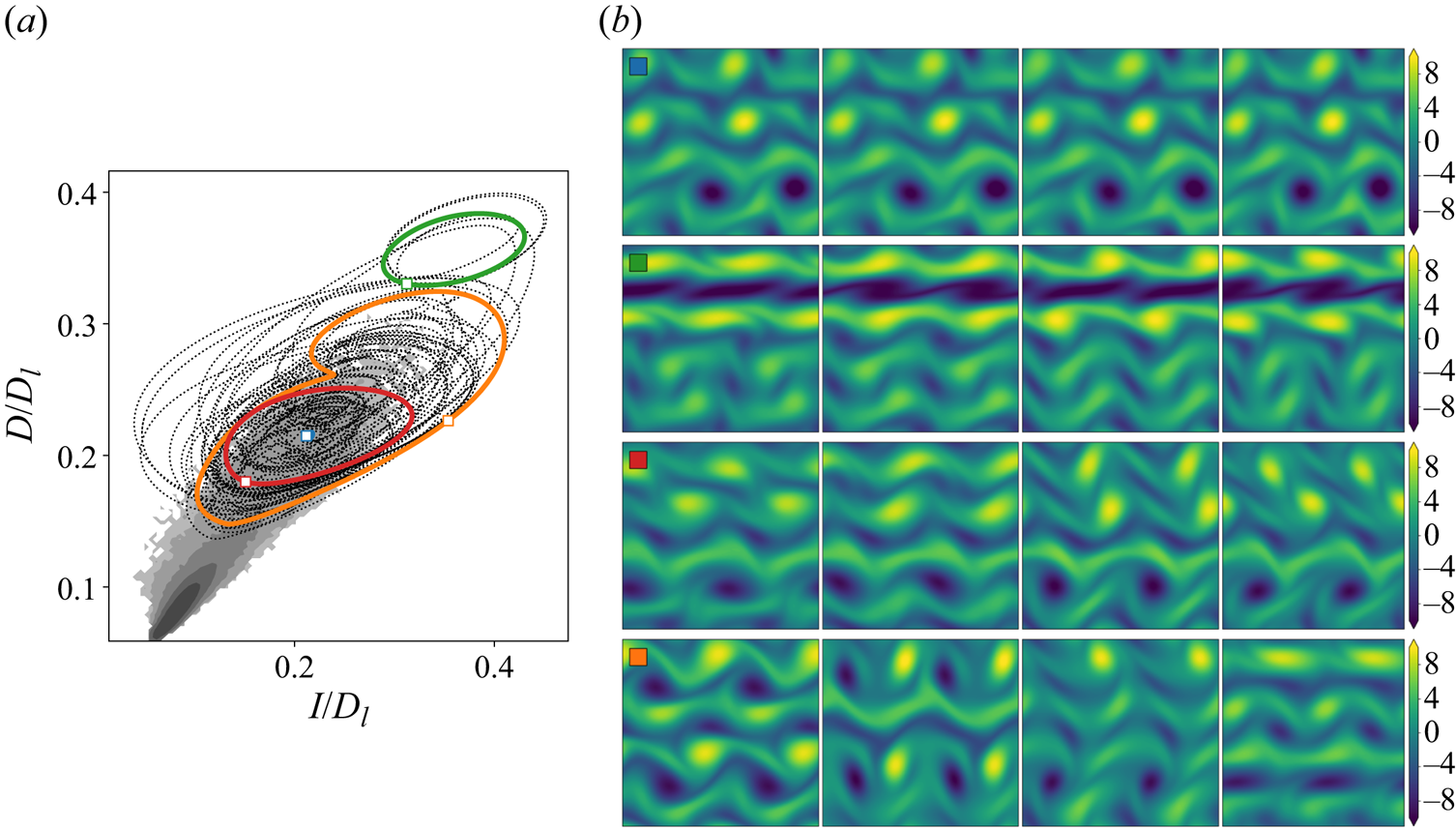

$0.017$). Visually, snapshots from all networks are very hard to distinguish from the ground truth once ![]() $\varepsilon _j \lesssim 0.1$: some examples for two specific networks and

$\varepsilon _j \lesssim 0.1$: some examples for two specific networks and ![]() $Re$ pairs are reported in figure 3 with a ‘good’ and ‘bad’ snapshot included (in terms of relative error (2.5)). For comparison, the same error measure evaluated on the induced velocity fields computed from

$Re$ pairs are reported in figure 3 with a ‘good’ and ‘bad’ snapshot included (in terms of relative error (2.5)). For comparison, the same error measure evaluated on the induced velocity fields computed from ![]() $\omega$ and

$\omega$ and ![]() $\mathscr A_m(\omega )$ is

$\mathscr A_m(\omega )$ is ![]() $\sim$2 % for snapshots where the vorticity error is

$\sim$2 % for snapshots where the vorticity error is ![]() $\varepsilon _j \approx 10\,\%$ at

$\varepsilon _j \approx 10\,\%$ at ![]() $Re=400$, and can be as much as a full order of magnitude better.

$Re=400$, and can be as much as a full order of magnitude better.

Figure 2. Average relative error (2.5) over the test set(s) as a function of embedding dimension for the best examples of all models trained in this work (recall the latent space dimension has a multiple of ![]() $32$ by design).

$32$ by design).

Figure 3. Snapshots of vorticity (top) at ![]() $Re=100$ (left) and

$Re=100$ (left) and ![]() $Re=400$ (right). Below is the output of the autoencoder,

$Re=400$ (right). Below is the output of the autoencoder, ![]() $\omega _{{norm}}\mathscr A_m(\omega )$. The network at

$\omega _{{norm}}\mathscr A_m(\omega )$. The network at ![]() $Re=100$ has minimum dimension

$Re=100$ has minimum dimension ![]() $m=512$, whereas at

$m=512$, whereas at ![]() $Re=400$ we use the

$Re=400$ we use the ![]() $m=1024$ network. Relative errors (2.5) from left to right are

$m=1024$ network. Relative errors (2.5) from left to right are ![]() $\varepsilon = 0.036$,

$\varepsilon = 0.036$, ![]() $\varepsilon = 0.065$

$\varepsilon = 0.065$ ![]() $\varepsilon = 0.102$ and

$\varepsilon = 0.102$ and ![]() $\varepsilon = 0.085$.

$\varepsilon = 0.085$.

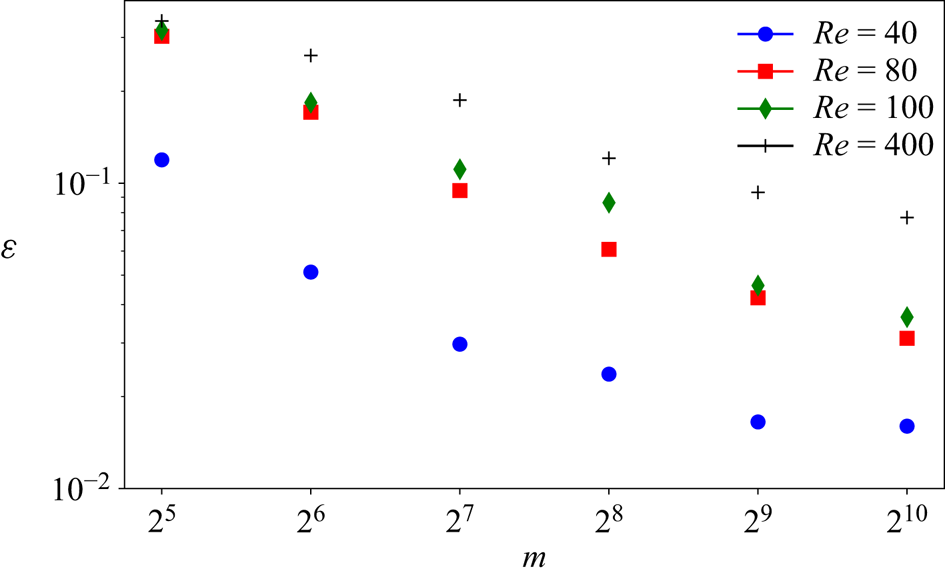

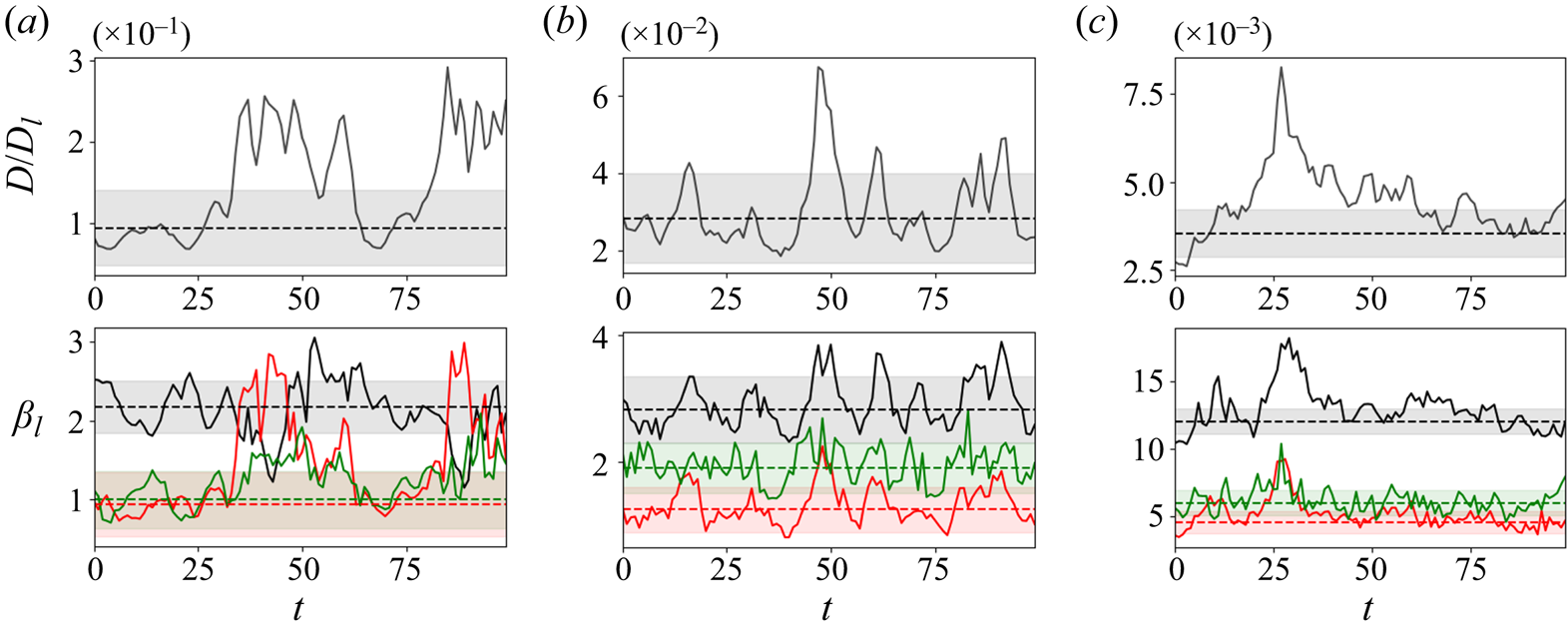

The distribution of errors over the test dataset is explored in more detail for the ![]() $m=256$ networks at all four Reynolds numbers in figure 4, where we examine the dependence of reconstruction error on the dissipation of the snapshot. Notably, there is no significant loss of performance on the rarer, high-dissipation events. This is to be contrasted with the sequential autoencoders considered in PBK21 and was achieved using the dual loss function (2.4) which encourages a robust embedding of snapshots with stronger enstrophy. We exploit the quality of the high-dissipation embeddings here to explore the nature of high-dissipation events as

$m=256$ networks at all four Reynolds numbers in figure 4, where we examine the dependence of reconstruction error on the dissipation of the snapshot. Notably, there is no significant loss of performance on the rarer, high-dissipation events. This is to be contrasted with the sequential autoencoders considered in PBK21 and was achieved using the dual loss function (2.4) which encourages a robust embedding of snapshots with stronger enstrophy. We exploit the quality of the high-dissipation embeddings here to explore the nature of high-dissipation events as ![]() $Re$ increases. We note that the errors reported in figures 2 and 4 are unaffected by the application of discrete symmetry transforms to the vorticity fields due to the data augmentation which was applied during training, and which was described earlier in § 2. For example, using a relative error which includes discrete symmetries (e.g.

$Re$ increases. We note that the errors reported in figures 2 and 4 are unaffected by the application of discrete symmetry transforms to the vorticity fields due to the data augmentation which was applied during training, and which was described earlier in § 2. For example, using a relative error which includes discrete symmetries (e.g. ![]() $\|\mathscr S^k \mathscr R \omega _j - \mathscr A_m(\mathscr S^k \mathscr R \omega _j) \| / \| \omega _j \|$, with

$\|\mathscr S^k \mathscr R \omega _j - \mathscr A_m(\mathscr S^k \mathscr R \omega _j) \| / \| \omega _j \|$, with ![]() $k\in \{1, 3, 5,7\}$) yields results which are essentially indistinguishable from those discussed previously (not shown).

$k\in \{1, 3, 5,7\}$) yields results which are essentially indistinguishable from those discussed previously (not shown).

Figure 4. Histograms of the test datasets for all ![]() $Re \in \{40, 80, 100, 400\}$ (

$Re \in \{40, 80, 100, 400\}$ (![]() $Re$ increasing left to right in the figure), visualised in terms of the relative error (2.5) computed using the

$Re$ increasing left to right in the figure), visualised in terms of the relative error (2.5) computed using the ![]() $m=256$ networks and the snapshot dissipation value normalised by the laminar value,

$m=256$ networks and the snapshot dissipation value normalised by the laminar value, ![]() $D_l = Re/ (2 n^2)$. The form of the loss function used in training (2.4) ensures that the rarer high-dissipation events are embedded to a similar standard to the low-dissipation data which make up a much larger proportion of the observations. Colours represent the number of snapshots in each bin, with a logarithmic colourmap.

$D_l = Re/ (2 n^2)$. The form of the loss function used in training (2.4) ensures that the rarer high-dissipation events are embedded to a similar standard to the low-dissipation data which make up a much larger proportion of the observations. Colours represent the number of snapshots in each bin, with a logarithmic colourmap.

For the remainder of this paper we consider three networks, ![]() $(Re, m) = (40, 128)$,

$(Re, m) = (40, 128)$, ![]() $(100, 512)$ and

$(100, 512)$ and ![]() $(400, 1024)$. This covers the full range of

$(400, 1024)$. This covers the full range of ![]() $Re$ for which we have trained models (excluding

$Re$ for which we have trained models (excluding ![]() $Re=80$ which is qualitatively very similar to

$Re=80$ which is qualitatively very similar to ![]() $Re=100$), with embedding dimensions

$Re=100$), with embedding dimensions ![]() $\{m\}$ selected to balance model performance against interpretability. Each of the three networks yields average relative errors

$\{m\}$ selected to balance model performance against interpretability. Each of the three networks yields average relative errors ![]() $\varepsilon$ of between roughly 2 % and 8 %.

$\varepsilon$ of between roughly 2 % and 8 %.

3. Latent Fourier analysis

3.1. Methodology

PBK21 introduced ‘latent Fourier analysis’ as a method to interpret the latent representations within neural networks for systems exhibiting a continuous symmetry. We will use the same approach here to understand how the state space of Kolmogorov flow complexifies under increasing ![]() $Re$, with a particular focus on the high-dissipation ‘bursting’ events. We briefly outline the numerical procedure for performing a latent Fourier decomposition of an encoded vorticity field,

$Re$, with a particular focus on the high-dissipation ‘bursting’ events. We briefly outline the numerical procedure for performing a latent Fourier decomposition of an encoded vorticity field, ![]() $\mathscr E_m(\omega )$.

$\mathscr E_m(\omega )$.

To perform a latent Fourier analysis we seek a linear operator that can perform a continuous streamwise shift in the latent space

where ![]() $\alpha$ is a chosen shift in the

$\alpha$ is a chosen shift in the ![]() $x$-direction. An approximate latent-shift operator

$x$-direction. An approximate latent-shift operator ![]() $\hat {\boldsymbol T}_{\alpha }$ is found via a least-squares minimisation (i.e. dynamic mode decomposition; Rowley et al. Reference Rowley, Mezić, Bagheri, Schlatter and Henningson2009; Schmid Reference Schmid2010) over the test set of embedding vectors and their

$\hat {\boldsymbol T}_{\alpha }$ is found via a least-squares minimisation (i.e. dynamic mode decomposition; Rowley et al. Reference Rowley, Mezić, Bagheri, Schlatter and Henningson2009; Schmid Reference Schmid2010) over the test set of embedding vectors and their ![]() $x$-shifted counterparts:

$x$-shifted counterparts:

where the ![]() $+$ indicates a Moore–Penrose pseudo-inverse and

$+$ indicates a Moore–Penrose pseudo-inverse and

with ![]() $N \gg m$ (

$N \gg m$ (![]() $N = O(10^5)$). As in PBK21, we consider a shift

$N = O(10^5)$). As in PBK21, we consider a shift ![]() $\alpha = 2{\rm \pi} / p$, with

$\alpha = 2{\rm \pi} / p$, with ![]() $p\in \mathbb {N}$, and given that

$p\in \mathbb {N}$, and given that ![]() $\boldsymbol T^p_{\alpha }\mathscr E_m(\omega ) = \mathscr E_m(\omega )$ due to streamwise periodicity, the eigenvalues of the discrete latent-shift operator are

$\boldsymbol T^p_{\alpha }\mathscr E_m(\omega ) = \mathscr E_m(\omega )$ due to streamwise periodicity, the eigenvalues of the discrete latent-shift operator are ![]() $\varLambda = \exp (2{\rm \pi} {\rm i} l/p)$, where

$\varLambda = \exp (2{\rm \pi} {\rm i} l/p)$, where ![]() $l \in \mathbb {Z}$ is a latent wavenumber.

$l \in \mathbb {Z}$ is a latent wavenumber.

The parameter ![]() $p$ is incrementally increased (i.e. reducing

$p$ is incrementally increased (i.e. reducing ![]() $\alpha$) until we stop recovering new latent wavenumbers. Across our networks, we find that only a handful of latent wavenumbers are required: substantially fewer than the number of wavenumbers required to accurately resolve the flow. For example,

$\alpha$) until we stop recovering new latent wavenumbers. Across our networks, we find that only a handful of latent wavenumbers are required: substantially fewer than the number of wavenumbers required to accurately resolve the flow. For example, ![]() $l_{{max}}=3$ for the

$l_{{max}}=3$ for the ![]() $m=128$ network at

$m=128$ network at ![]() $Re=40$, whereas the much higher-dimensional network

$Re=40$, whereas the much higher-dimensional network ![]() $m=1024$ at

$m=1024$ at ![]() $Re=400$ uses wavenumbers as high as

$Re=400$ uses wavenumbers as high as ![]() $l_{{max}}=8$. This compression comes about because each latent wavenumber

$l_{{max}}=8$. This compression comes about because each latent wavenumber ![]() $l$ is associated with vorticity fields which are periodic over

$l$ is associated with vorticity fields which are periodic over ![]() $2{\rm \pi} /l$. This means Fourier wavenumbers

$2{\rm \pi} /l$. This means Fourier wavenumbers ![]() $k^f=ml$ for integer

$k^f=ml$ for integer ![]() $m \in {\mathbb {Z}}$ can all be mapped onto

$m \in {\mathbb {Z}}$ can all be mapped onto ![]() $l$ resulting in a dramatically reduced maximum wavenumber

$l$ resulting in a dramatically reduced maximum wavenumber ![]() $l_{{max}}$.

$l_{{max}}$.

In practice streamwise-translation invariance is not embedded perfectly in any of our networks, and we use only the subset of the latent space which does exhibit equivariance under streamwise shifts by only retaining modes for which ![]() $|\varLambda _j|>0.9$. With the non-zero latent wavenumbers determined we can now write down an expression for an embedding of a snapshot subject to an arbitrary shift,

$|\varLambda _j|>0.9$. With the non-zero latent wavenumbers determined we can now write down an expression for an embedding of a snapshot subject to an arbitrary shift, ![]() $s\in \mathbb {R}$, in the streamwise direction:

$s\in \mathbb {R}$, in the streamwise direction:

\begin{equation} \mathscr E_m(\mathscr T_s \omega) = \sum_{l ={-}l_{{max}}}^{l_{{max}}} \left(\sum_{k=1}^{d(l)} \mathscr P^l_k(\mathscr E_m(\omega))\right){\rm e}^{{\rm i}ls}, \end{equation}

\begin{equation} \mathscr E_m(\mathscr T_s \omega) = \sum_{l ={-}l_{{max}}}^{l_{{max}}} \left(\sum_{k=1}^{d(l)} \mathscr P^l_k(\mathscr E_m(\omega))\right){\rm e}^{{\rm i}ls}, \end{equation}where

with ![]() $\boldsymbol \xi ^{(l)}_k$ the

$\boldsymbol \xi ^{(l)}_k$ the ![]() $k$th eigenvector of the shift operator

$k$th eigenvector of the shift operator ![]() $\hat {\boldsymbol T}_{\alpha }$,

$\hat {\boldsymbol T}_{\alpha }$,

in the subspace ![]() $l$ and

$l$ and ![]() $\boldsymbol \xi ^{(l){{\dagger}} }_k$ is the corresponding adjoint eigenfunction so

$\boldsymbol \xi ^{(l){{\dagger}} }_k$ is the corresponding adjoint eigenfunction so ![]() $(\boldsymbol \xi _i^{(l){{\dagger}} })^H\boldsymbol \xi ^{(l)}_j = \delta _{ij}$ with superscript

$(\boldsymbol \xi _i^{(l){{\dagger}} })^H\boldsymbol \xi ^{(l)}_j = \delta _{ij}$ with superscript ![]() $H$ indicating the conjugate-transpose. Equation (3.4) makes the connection with a Fourier transform clear. The small number of non-zero latent wavenumbers means that each must encode a wide variety of different patterns in the flow: each latent wavenumber is (potentially highly) degenerate with geometric multiplicity

$H$ indicating the conjugate-transpose. Equation (3.4) makes the connection with a Fourier transform clear. The small number of non-zero latent wavenumbers means that each must encode a wide variety of different patterns in the flow: each latent wavenumber is (potentially highly) degenerate with geometric multiplicity ![]() $d(l)$ whereas a given Fourier mode is not (ignoring the same degeneracy both possess in

$d(l)$ whereas a given Fourier mode is not (ignoring the same degeneracy both possess in ![]() $y$). In (3.4) the quantity

$y$). In (3.4) the quantity ![]() $\mathscr P^l_k(\mathscr E_m(\omega ))$ is the projection of the embedding vector onto direction

$\mathscr P^l_k(\mathscr E_m(\omega ))$ is the projection of the embedding vector onto direction ![]() $k$ within the eigenspace of latent wavenumber

$k$ within the eigenspace of latent wavenumber ![]() $l$. We obtain these projectors via a singular value decomposition (SVD) within a given eigenspace, which is described in § 3.2. We refer to the decode of a projection onto individual latent wavenumbers as a ‘recurrent pattern’.

$l$. We obtain these projectors via a singular value decomposition (SVD) within a given eigenspace, which is described in § 3.2. We refer to the decode of a projection onto individual latent wavenumbers as a ‘recurrent pattern’.

At the innermost representation in the autoencoders the turbulent flow is represented with a set of feature maps of shape ![]() $4 \times 8$, where the convolutional operations mean that the four horizontal cells each correspond to a quarter of the original domain, i.e. the network has constructed some highly abstract feature that was originally located in one-quarter of the physical domain in

$4 \times 8$, where the convolutional operations mean that the four horizontal cells each correspond to a quarter of the original domain, i.e. the network has constructed some highly abstract feature that was originally located in one-quarter of the physical domain in ![]() $x$. The correspondence of components of the innermost feature maps to specific regions of physical space is ensured by: (i) restricting the architecture to purely convolutional layers; (ii) periodic padding of the image at each layer of the network so that the output of the discrete convolution operation retains the same shape as the input; and (iii) shrinking of the convolutional filters as pooling operations are applied to retain a consistent size relative to the physical domain. Full architectural details are provided in Appendix A. This physical-space correspondence can be verified by shifting the input vorticity field by an exact quarter of the domain in

$x$. The correspondence of components of the innermost feature maps to specific regions of physical space is ensured by: (i) restricting the architecture to purely convolutional layers; (ii) periodic padding of the image at each layer of the network so that the output of the discrete convolution operation retains the same shape as the input; and (iii) shrinking of the convolutional filters as pooling operations are applied to retain a consistent size relative to the physical domain. Full architectural details are provided in Appendix A. This physical-space correspondence can be verified by shifting the input vorticity field by an exact quarter of the domain in ![]() $x$, after which the innermost feature maps are unchanged apart from a permutation of rows to match the shifting operation. Similarly, the eight vertical cells isolate some feature located in one of eight vertical bands in the original image: the Kolmogorov forcing fits eight half-wavelengths in the domain, and the ‘shift’ in the shift-reflect symmetry is one half-wavelength. The convolutions are invariant to arbitrary translations in

$x$, after which the innermost feature maps are unchanged apart from a permutation of rows to match the shifting operation. Similarly, the eight vertical cells isolate some feature located in one of eight vertical bands in the original image: the Kolmogorov forcing fits eight half-wavelengths in the domain, and the ‘shift’ in the shift-reflect symmetry is one half-wavelength. The convolutions are invariant to arbitrary translations in ![]() $y$, but the shift-reflect by a half-wavelength must be ‘learned’, which we accomplish via the data augmentation discussed in § 2. This is also the case for the rotational symmetry, which we account for in the augmentation during network training.

$y$, but the shift-reflect by a half-wavelength must be ‘learned’, which we accomplish via the data augmentation discussed in § 2. This is also the case for the rotational symmetry, which we account for in the augmentation during network training.

The choice of a particular feature map size for the encoder can be used to encourage the network to learn recurrent patterns at a particular scale. This architectural choice is at the root of the relatively low values of ![]() $l_{{max}}$ observed for the networks. For example, consider the case of a single feature map for the encoder: this would correspond to the

$l_{{max}}$ observed for the networks. For example, consider the case of a single feature map for the encoder: this would correspond to the ![]() $m=32$ networks trained here. In this case, we are effectively coarse-graining the original vorticity snapshot to a single

$m=32$ networks trained here. In this case, we are effectively coarse-graining the original vorticity snapshot to a single ![]() $4\times 8$ image, where, as discussed previously, each of the four rows corresponds to one-quarter domain in

$4\times 8$ image, where, as discussed previously, each of the four rows corresponds to one-quarter domain in ![]() $x$. Therefore, by the Nyquist sampling criterion that the minimum length scale that can be resolved is twice the grid spacing (i.e.

$x$. Therefore, by the Nyquist sampling criterion that the minimum length scale that can be resolved is twice the grid spacing (i.e. ![]() $L_x/2$), the maximum wavenumber obtained in the horizontal direction is

$L_x/2$), the maximum wavenumber obtained in the horizontal direction is ![]() $l_{{max}} = 2$: the network has to learn to couple smaller-scale features to large scales in the most efficient way. By expanding the number of feature maps at the innermost level, as is done for the

$l_{{max}} = 2$: the network has to learn to couple smaller-scale features to large scales in the most efficient way. By expanding the number of feature maps at the innermost level, as is done for the ![]() $m > 32$ networks (note that the

$m > 32$ networks (note that the ![]() $m=1024$ network has 32 feature maps), in principle we allow for higher latent wavenumbers, but it is seemingly inefficient for the network to embed features in this way, and instead most of the energy is contained in relatively low

$m=1024$ network has 32 feature maps), in principle we allow for higher latent wavenumbers, but it is seemingly inefficient for the network to embed features in this way, and instead most of the energy is contained in relatively low ![]() $l$. This is a benefit from an interpretability point of view, since individual recurrent patterns have a physical significance; each features a large number of physical wavenumbers with a base periodicity set by the value of

$l$. This is a benefit from an interpretability point of view, since individual recurrent patterns have a physical significance; each features a large number of physical wavenumbers with a base periodicity set by the value of ![]() $l$.

$l$.

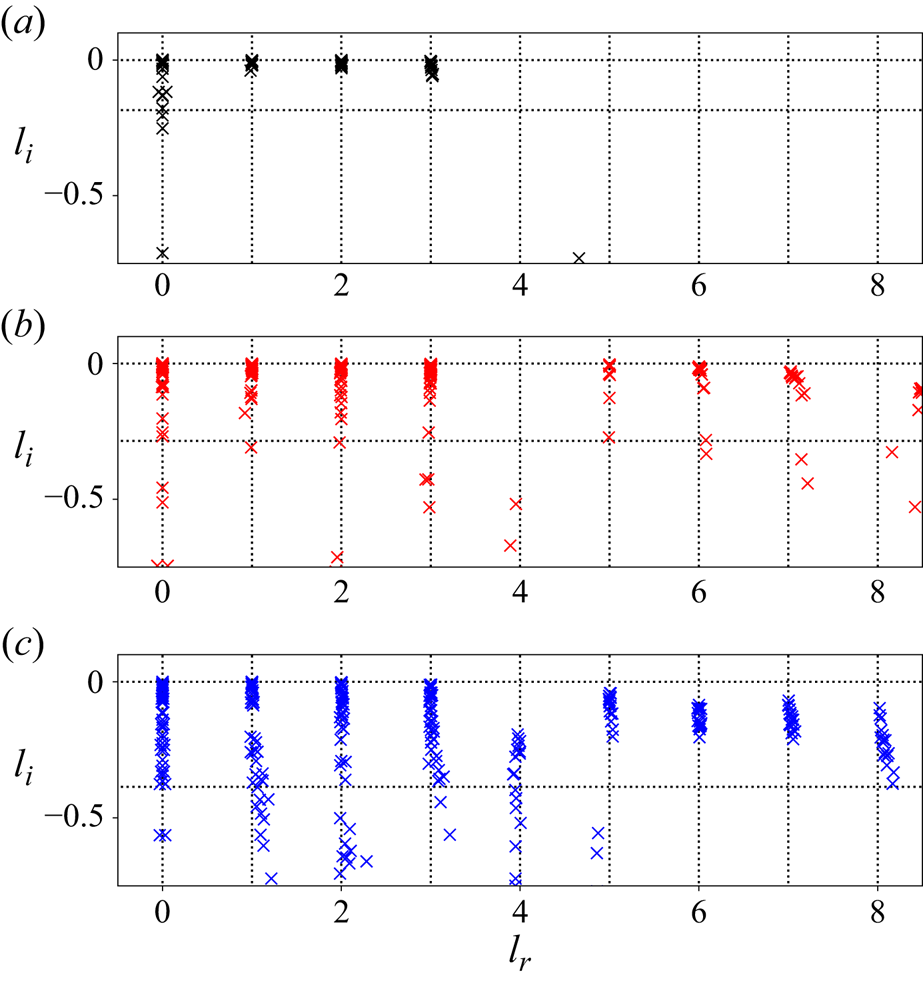

We report eigenvalue spectra for the latent wavenumbers in figure 5 for each of the three networks considered here. These figures were generated by first selecting a shift ![]() $\alpha = 2{\rm \pi} /p$, computing the spectra of

$\alpha = 2{\rm \pi} /p$, computing the spectra of ![]() $\hat {\boldsymbol T}_{\alpha }$ and inverting

$\hat {\boldsymbol T}_{\alpha }$ and inverting ![]() $\varLambda = \exp (2 {\rm \pi}{\rm i} l/p)$. As described previously, we incrementally decreased the shift until we stopped recovering new

$\varLambda = \exp (2 {\rm \pi}{\rm i} l/p)$. As described previously, we incrementally decreased the shift until we stopped recovering new ![]() $l$, i.e. the spectra reported in figure 5 are independent of

$l$, i.e. the spectra reported in figure 5 are independent of ![]() $\alpha$. This independence is also true of decodes of projections onto eigenvectors of

$\alpha$. This independence is also true of decodes of projections onto eigenvectors of ![]() $\hat {\boldsymbol T}_{\alpha }$ which we discuss in subsequent sections.

$\hat {\boldsymbol T}_{\alpha }$ which we discuss in subsequent sections.

Figure 5. Eigenvalues of the latent shift operator, ![]() $\hat {\boldsymbol T}_{\alpha }$, visualised in terms of latent wavenumbers

$\hat {\boldsymbol T}_{\alpha }$, visualised in terms of latent wavenumbers ![]() $l = l_r + {\rm i} l_i$ (in a perfect approximation

$l = l_r + {\rm i} l_i$ (in a perfect approximation ![]() $l_i=0$). (a) Network

$l_i=0$). (a) Network ![]() $(Re,m) = (40, 128)$, with shift

$(Re,m) = (40, 128)$, with shift ![]() $\alpha = 2{\rm \pi} / 11$. (b) Network

$\alpha = 2{\rm \pi} / 11$. (b) Network ![]() $(Re,m) = (100, 512)$, with shift

$(Re,m) = (100, 512)$, with shift ![]() $\alpha = 2{\rm \pi} / 17$. (c) Network

$\alpha = 2{\rm \pi} / 17$. (c) Network ![]() $(Re, m) = (400, 1024)$, with shift

$(Re, m) = (400, 1024)$, with shift ![]() $\alpha = 2{\rm \pi} / 23$. Latent wavenumbers are determined by writing the eigenvalues of the shift operator as

$\alpha = 2{\rm \pi} / 23$. Latent wavenumbers are determined by writing the eigenvalues of the shift operator as ![]() $\varLambda = \exp (2{\rm \pi} {\rm i} l/p)$, where

$\varLambda = \exp (2{\rm \pi} {\rm i} l/p)$, where ![]() $\alpha \equiv 2{\rm \pi} / p$. Horizontal dotted lines indicate the threshold

$\alpha \equiv 2{\rm \pi} / p$. Horizontal dotted lines indicate the threshold ![]() $|\varLambda | = 0.9$ or, equivalently,

$|\varLambda | = 0.9$ or, equivalently, ![]() $l_i = -p\log (0.9)/2{\rm \pi}$, below which eigenvalues are discarded when doing latent projections. Dotted vertical lines indicate integer values of

$l_i = -p\log (0.9)/2{\rm \pi}$, below which eigenvalues are discarded when doing latent projections. Dotted vertical lines indicate integer values of ![]() $l_r$.

$l_r$.

The clustering of points in figure 5 around the integer values of ![]() $l$ shows that the eigenvalues of the shift operator are highly degenerate. The maximum latent wavenumber found (and the size of each eigenspaces) expands with increasing

$l$ shows that the eigenvalues of the shift operator are highly degenerate. The maximum latent wavenumber found (and the size of each eigenspaces) expands with increasing ![]() $Re$, and consequently a smaller value of

$Re$, and consequently a smaller value of ![]() $\alpha$ is needed to construct the shift operator (3.1). There are large numbers of points clustered on integer values of

$\alpha$ is needed to construct the shift operator (3.1). There are large numbers of points clustered on integer values of ![]() $l$ in figure 5, though we also find that some structure in the network can not be accurately shifted with a linear operator, and we also observe ‘decaying’ eigenvalues with negative imaginary part. This behaviour is more apparent as

$l$ in figure 5, though we also find that some structure in the network can not be accurately shifted with a linear operator, and we also observe ‘decaying’ eigenvalues with negative imaginary part. This behaviour is more apparent as ![]() $Re$ increases and represents the imperfect embedding of continuous translations in the network (recall that the innermost representation coarse-grains the input image to a spatial ‘resolution’ of

$Re$ increases and represents the imperfect embedding of continuous translations in the network (recall that the innermost representation coarse-grains the input image to a spatial ‘resolution’ of ![]() $4\times 8$); we consider only a subset of the latent space where translation is robust by thresholding

$4\times 8$); we consider only a subset of the latent space where translation is robust by thresholding ![]() $|\varLambda | > 0.9$.

$|\varLambda | > 0.9$.

Despite the expanding ![]() $l_{{max}}$ with increasing

$l_{{max}}$ with increasing ![]() $Re$ and the increasing inaccuracy of the shift operator, all networks can produce a fairly robust representation of the flow with just three non-zero