1. Introduction

In a wide variety of practical situations, we wish to infer the state of a fluid flow from a limited number of flow sensors with generally noisy output signals. In particular, such knowledge of the flow state may assist within the larger scope of a flow control task, either in the training or application of a control strategy. For example, in reinforcement learning (RL) applications for guiding a vehicle to some target through a highly disturbed fluid environment (Verma, Novati & Koumoutsakos 2018), the system is partially observable if the RL framework only has knowledge of the state of the vehicle itself. It is unable to distinguish between quiescent and disturbed areas of the environment and to take actions that are distinctly advantageous in either, thus limiting the effectiveness of the control strategy. Augmenting this state with some knowledge of the flow may be helpful to improve this effectiveness.

The problem of flow estimation is very broad and can be pursued with different types of sensors in the presence of various flow physics. We focus in this paper on the inference of incompressible flows of moderate and large Reynolds numbers from pressure sensors, a problem that has been of interest in the fluid dynamics community for many years (Naguib, Wark & Juckenhöfel 2001; Murray & Ukeiley 2003; Gomez et al. 2019; Sashittal & Bodony 2021; Iacobello, Kaiser & Rival 2022; Zhong et al. 2023). Flow estimation from other types of noisy measurements has also been pursued in closely related contexts in recent years, with tools very similar to those used in the present work (Juniper & Yoko 2022; Kontogiannis et al. 2022). Estimation generally seeks to infer a finite-dimensional state vector ${\textit {x}}$ from a finite-dimensional measurement vector ${\textit {y}}$. Since the state of a fluid flow is inherently infinite-dimensional, a central task of flow estimation is to approximately represent the flow so that it can be parameterized by a finite-dimensional state vector. For example, this could be done by a linear decomposition into data-driven modes (e.g. with proper orthogonal decomposition (POD) or dynamic mode decomposition), in which the flow state comprises the coefficients of these modes, or by a generalized (nonlinear) form of this decomposition via a neural network (Morimoto et al. 2022; Fukami & Taira 2023). Although these are very effective in representing flows close to their training set, they are generally less effective in representing newly encountered flows. For example, a vehicle's interaction with a gust (an incident disturbance) may take very different forms, but the basis modes or neural network can only be trained on a subset of these interactions. Furthermore, even when these approaches are effective, it is difficult to probe them for intuition about some basic questions that underlie the estimation task. How does the effectiveness of the estimation depend on the physical distance between the sensors and vortical flow structures, or on the size of the structures?

We take a different approach to flow representation in this paper, writing the vorticity field as a sum of $N$ (nearly) singular vortex elements. (The adjective ‘nearly’ conveys that we will regularize each element with a smoothing kernel of small radius.) A distinct feature of a flow singularity (in contrast to, say, a POD mode) is that both its strength and its position are degrees of freedom in the state vector, so that it can efficiently and adaptively approximate a compact evolving vortex structure even with a small number of vortex elements. The compromise for this additional flexibility is that it introduces a nonlinear relationship between the velocity field and the state vector. However, since pressure is already inherently quadratically dependent on the velocity, one must contend with nonlinearity in the inference problem regardless of the choice of flow representation. Another advantage of using singularities as a representation of the flow is that their velocity and pressure fields are known exactly, providing useful insight into the estimation problem.

To restrict the dimensionality of the problem and make the estimation tractable, we truncate the set of vortex elements to a small number $N$. In this manner, the point vortices can be thought of as an adaptive low-rank representation of the flow, each capturing a vortex structure but omitting most details of the structure's internal dynamics. To keep the scope of this paper somewhat simpler, we will narrow our focus to unbounded two-dimensional vortical flows, so that the estimated vorticity field is given by

\begin{equation} \omega(\boldsymbol{r}) = \sum_{\textit{J}=1}^{N} \varGamma_{\textit{J}} \tilde{\delta}_{\epsilon}(\boldsymbol{r} - \boldsymbol{r}_{\textit{J}}), \end{equation}

where $\tilde {\delta }_{\epsilon }$ is a regularized form of the two-dimensional Dirac delta function with small radius $\epsilon$, and any vortex ${\textit {J}}$ has strength $\varGamma _{{\textit {J}}}$ and position described by two Cartesian coordinates $\boldsymbol {r}_{{\textit {J}}} = (x_{{\textit {J}}},y_{{\textit {J}}})$. As we show in Appendix A, the pressure field due to a set of $N$ vortex elements is given by

\begin{equation} p(\boldsymbol{r}) - p_{\infty} ={-}\frac{1}{2}\rho \sum_{\textit{J}=1}^{N} \varGamma^{2}_{\textit{J}} P_{\epsilon}(\boldsymbol{r}-\boldsymbol{r}_{\textit{J}}) - \rho \sum_{\textit{J}=1}^{N} \sum_{\textit{K} = 1}^{\textit{J}-1} \varGamma_{\textit{J}}\varGamma_{\textit{K}} \varPi_{\epsilon}(\boldsymbol{r}-\boldsymbol{r}_{\textit{J}},\boldsymbol{r}-\boldsymbol{r}_{\textit{K}}), \end{equation}

where $P_{\epsilon }$ is a regularized direct vortex kernel, representing the individual effect of a vortex on the pressure field, and $\varPi _{\epsilon }$ is a regularized vortex interaction kernel, encapsulating the coupled effect of a pair of vortices on pressure; their details are provided in Appendix A. This focus on unbounded two-dimensional flows preserves the essential purpose of the study, to reveal the important aspects of vortex estimation from pressure, and postpones to a future paper the effect of a body's presence or other flow contributors. Thus, the state dimensionality of the problems in this paper will be $n = 3N$, composed of the positions and strengths of the $N$ vortex elements.
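As a hedged illustration of (1.1), the following NumPy sketch evaluates the vorticity of $N$ regularized elements on a grid and checks that it carries total circulation $\sum_{J}\varGamma_{J}$. It assumes an algebraic blob, $\tilde{\delta}_{\epsilon}(\boldsymbol{r}) = \epsilon^{2}/[{\rm \pi}(|\boldsymbol{r}|^{2}+\epsilon^{2})^{2}]$, which need not coincide with the regularization of Appendix A.4. For this blob an isolated element has azimuthal velocity $u_{\theta} = \varGamma r/[2{\rm \pi}(r^{2}+\epsilon^{2})]$, and integrating the radial balance ${\rm d}p/{\rm d}r = \rho u_{\theta}^{2}/r$ from $r$ to infinity gives $p - p_{\infty} = -\rho\varGamma^{2}/[8{\rm \pi}^{2}(r^{2}+\epsilon^{2})]$, i.e. a direct kernel $P_{\epsilon}(\boldsymbol{r}) = 1/[4{\rm \pi}^{2}(|\boldsymbol{r}|^{2}+\epsilon^{2})]$ under this assumption; the sketch compares that closed form with quadrature. The function names are hypothetical, and $\epsilon = 0.2$ is used instead of $0.01$ only so that a coarse grid resolves the blobs.

```python
import numpy as np

def blob_delta(dx, dy, eps):
    """Assumed algebraic regularization of the 2-D Dirac delta; it integrates to 1."""
    return eps**2 / (np.pi * (dx**2 + dy**2 + eps**2) ** 2)

def vorticity(X, Y, xv, yv, gam, eps):
    """omega(r) = sum_J Gamma_J * delta_eps(r - r_J), evaluated on grid arrays X, Y."""
    omega = np.zeros_like(X, dtype=float)
    for xj, yj, gj in zip(xv, yv, gam):
        omega += gj * blob_delta(X - xj, Y - yj, eps)
    return omega

def direct_kernel(r, eps):
    """P_eps(r) for the assumed blob, so that p - p_inf = -0.5 * rho * Gamma**2 * P_eps."""
    return 1.0 / (4.0 * np.pi**2 * (r**2 + eps**2))

eps = 0.2  # larger than 0.01 so that a coarse grid resolves the blobs

# Total circulation of two blobs on a large grid should approach Gamma_1 + Gamma_2 = 0.6.
x = np.linspace(-20.0, 20.0, 801)
X, Y = np.meshgrid(x, x)
gam = np.array([1.0, -0.4])
omega = vorticity(X, Y, [-0.5, 0.5], [1.0, 1.0], gam, eps)
circulation = omega.sum() * (x[1] - x[0]) ** 2

# Closed-form P_eps versus trapezoidal quadrature of dp/dr = rho * u_theta**2 / r.
rho, G, r0 = 1.0, 1.0, 0.3
s = np.geomspace(r0, 1.0e4, 200_001)
u_theta = G * s / (2.0 * np.pi * (s**2 + eps**2))
f = rho * u_theta**2 / s
dp = np.sum(0.5 * (f[1:] + f[:-1]) * np.diff(s))   # approximately p_inf - p(r0)
p_closed = -0.5 * rho * G**2 * direct_kernel(r0, eps)
```

The grid sum recovers the net circulation up to the small amount of vorticity lying outside the truncated domain, and `-dp` agrees with `p_closed` to quadrature accuracy.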

In this limited context, we address the following question: Given a set of noisy pressure measurements at $d$ observation points (sensors) in or adjacent to an incompressible two-dimensional flow, to what extent can we infer a distribution of vortices? It is important to make a few points before we embark on our answer. First, because of the noise in measurements, we will address the inference problem in a probabilistic (i.e. Bayesian) manner: find the distribution of probable states based on the likelihood of the true observations. As we have noted, we have no hope of approximating a smoothly distributed vorticity field with a small number of singular vortex elements. However, as a result of our probabilistic approach, the expectation of the vorticity field over the whole distribution of estimated states will be smooth, even though the vorticity of each realization of the estimated flow is singular in space. This fact is shown in Appendix B.
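Appendix B establishes this smoothness in general; the sketch below is only a numerical illustration under a simplifying assumption. Suppose the estimated distribution describes a single vortex of fixed strength $\varGamma$ whose position is Gaussian with hypothetical mean $(0,1)$ and standard deviation $0.5$. Each sampled state has a singular vorticity field, but averaging these fields over samples amounts to binning the sampled positions, and the result approaches $\varGamma$ times the Gaussian density of the vortex position, a smooth field.

```python
import numpy as np

rng = np.random.default_rng(0)
Gamma = 1.0
mu = np.array([0.0, 1.0])   # hypothetical mean of the vortex position
s = 0.5                      # hypothetical standard deviation (isotropic)
M = 1_000_000                # number of sampled states

# Each sampled state is a single point vortex: omega_m(r) = Gamma * delta(r - r_m).
samples = rng.normal(mu, s, size=(M, 2))

# Averaging the singular fields = binning the positions and dividing by (M * bin area).
edges_x = np.linspace(-2.05, 2.05, 42)      # 41 bins of width 0.1, one centred on mu
edges_y = edges_x + mu[1]
counts, _, _ = np.histogram2d(samples[:, 0], samples[:, 1], bins=[edges_x, edges_y])
area = (edges_x[1] - edges_x[0]) * (edges_y[1] - edges_y[0])
omega_mean = Gamma * counts / (M * area)

# Analytical expectation: Gamma times the Gaussian density, evaluated at bin centres.
xc = 0.5 * (edges_x[1:] + edges_x[:-1])
yc = 0.5 * (edges_y[1:] + edges_y[:-1])
XC, YC = np.meshgrid(xc, yc, indexing="ij")  # matches histogram2d's [x, y] layout
omega_exact = Gamma * np.exp(-((XC - mu[0]) ** 2 + (YC - mu[1]) ** 2) / (2 * s**2)) / (2 * np.pi * s**2)
max_rel_err = np.abs(omega_mean - omega_exact).max() / omega_exact.max()
```

The residual mismatch comes from sampling noise and finite bin size, both of which shrink as `M` grows and the bins are refined.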

Second, vortex flows are generally unsteady, so we ultimately wish to address this inference question over some time interval. Indeed, that has been the subject of several previous works, e.g. Darakananda et al. (2018), Le Provost & Eldredge (2021) and Le Provost et al. (2022), in which pressure measurements were assimilated into the estimate of the state via an ensemble Kalman filter (Evensen 1994). Each step of such sequential data assimilation consists of the same Bayesian inference (or analysis) procedure: we start with an initial guess for the probability distribution (the prior) and seek an improved guess (the posterior). At steps beyond the first one, we generate this prior by simply advancing an ensemble of vortex element systems forward by one time step (the forecast), from states drawn from the posterior at the end of the previous step. The quality of the estimation generally improves over time as the sequential estimator proceeds. However, when we start the problem we have no such posterior from earlier steps. Furthermore, even at later times, as new vortices are created or enter the region of interest, the prior will lack a description of these new features.

This challenge forms a central task of the present paper: we seek to infer the flow state at some instant from a prior that expresses little to no knowledge of the flow. Aside from some loose bounds, we do not have any guess for where the vortex elements lie, how strong they are or even how many there should be. All we know of the true system's behaviour comes from the sensor measurements, and we therefore estimate the vortex state by maximizing the likelihood that these sensor measurements will arise. It should also be noted that viscosity has no effect on the instantaneous relationships between vorticity, velocity and pressure in unbounded flow, so it is irrelevant if the true system is viscous or not. To assess the success of our inference approach, we will compute the expectation of the vorticity field under our estimated probability distribution and compare it with the true field, as we will presume to know this latter field for testing purposes.

The last point to make is that the inference of a flow from pressure is often an ill-posed problem with multiple possible solutions, a common issue with inverse problems of partial differential equations. For example, we will find that there may be many candidate vortex systems that reasonably match the pressure sensor data, forming ridges or local maxima of likelihood, even if they are not the global maximum solution. As we will show, these situations arise most frequently when the number of sensors is less than or equal to the number of states, i.e. when the inverse problem is underdetermined to some degree. In these cases, we will find that adding even one additional sensor can address the underlying ill-posedness. There will also be various symmetries that arise due to the vortex–sensor arrangement. In this paper, we will use techniques to mitigate the effect of these symmetries on the estimation task. However, multiple solutions may still arise even with such techniques, and we seek to explore this multiplicity thoroughly. Therefore, we adopt a solution strategy that explores all features of the likelihood function, including multiple maxima. We describe the probability-based formulation of the vortex estimation problem and our methodology for exploring it in § 2. Then, we present results of various estimation exercises with this methodology in § 3, and discuss the results and their generality in § 4.

2. Problem statement and methodology

2.1. The inference problem

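Our goal is to estimate the state of an unbounded two-dimensional vortical flow with vortex system (1.1), which we will call a vortex estimator, specified completely by the $n$-dimensional vector ($n=3N$)

\begin{equation} {\textit{x}} = \begin{pmatrix} {\textit{x}}_{1}^{\rm T} & {\textit{x}}_{2}^{\rm T} & \cdots & {\textit{x}}_{N}^{\rm T} \end{pmatrix}^{\rm T} \in \mathbb{R}^{n}, \end{equation}

where

\begin{equation} {\textit{x}}_{\textit{J}} = \begin{pmatrix} x_{\textit{J}} & y_{\textit{J}} & \varGamma_{\textit{J}} \end{pmatrix}^{\rm T} \in \mathbb{R}^{3} \end{equation}

is the 3-component state of vortex ${\textit {J}}$. The associated state covariance matrix is written as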

\begin{equation} \varSigma_{\textit{x}} = \begin{pmatrix} \varSigma_{11} & \varSigma_{12} & \cdots & \varSigma_{1N} \\ \varSigma_{21} & \varSigma_{22} & \cdots & \varSigma_{2N} \\ \vdots & \vdots & \ddots & \vdots \\ \varSigma_{N 1} & \varSigma_{N 2} & \cdots & \varSigma_{NN} \end{pmatrix} \in \mathbb{R}^{3N \times 3N}, \end{equation}

where each $3 \times 3$ block represents the covariance between vortex elements ${\textit {J}}$ and ${\textit {K}}$,

\begin{equation} \varSigma_{\textit{J}\textit{K}} = \mathbb{E}\big[({\textit{x}}_{\textit{J}} - \mathbb{E}[{\textit{x}}_{\textit{J}}])({\textit{x}}_{\textit{K}} - \mathbb{E}[{\textit{x}}_{\textit{K}}])^{\rm T}\big] \in \mathbb{R}^{3 \times 3}. \end{equation}

Note that $\varSigma _{{\textit {K}}{\textit {J}}} = \varSigma ^{{\rm T}}_{{\textit {J}}{\textit {K}}}$.
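In an ensemble-based setting, the blocks of the covariance matrix can be read directly off a sample covariance. The sketch below assumes the state ordering $(x_{1}, y_{1}, \varGamma_{1}, \ldots, x_{N}, y_{N}, \varGamma_{N})$ and uses a random toy ensemble in place of actual posterior samples; `covariance_blocks` is a hypothetical helper, and vortex indices are 0-based in the code. The reshape groups rows and columns by vortex, so that `blocks[J, K]` holds $\varSigma_{JK}$, and the transpose relation between $\varSigma_{KJ}$ and $\varSigma_{JK}$ follows from the symmetry of the full matrix.

```python
import numpy as np

def covariance_blocks(ensemble):
    """Hypothetical helper: split the sample covariance of an ensemble into 3x3 blocks.

    ensemble: shape (M, 3N); each row uses the assumed state ordering
              (x_1, y_1, G_1, ..., x_N, y_N, G_N).
    Returns (blocks, sigma): sigma has shape (3N, 3N) and blocks has shape
    (N, N, 3, 3), with blocks[J, K] equal to Sigma_JK (0-based vortex indices).
    """
    _, n = ensemble.shape
    if n % 3 != 0:
        raise ValueError("state length must be a multiple of 3")
    N = n // 3
    sigma = np.cov(ensemble, rowvar=False)          # unbiased sample covariance
    # sigma[3J + a, 3K + b] -> blocks[J, K, a, b]
    blocks = sigma.reshape(N, 3, N, 3).transpose(0, 2, 1, 3)
    return blocks, sigma

# Toy correlated ensemble of 500 states for N = 2 vortices (not posterior samples).
rng = np.random.default_rng(1)
ensemble = rng.normal(size=(500, 6)) @ rng.normal(size=(6, 6))
blocks, sigma = covariance_blocks(ensemble)
```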

Equation (1.2) expresses the pressure (relative to ambient), ${\rm \Delta} p(\boldsymbol {r}) \equiv p(\boldsymbol {r}) - p_{\infty }$, as a continuous function of space, $\boldsymbol {r}$. Here, we also explicitly acknowledge its dependence on the state, ${\rm \Delta} p(\boldsymbol {r},{\textit {x}})$. Furthermore, we will limit our observations to a finite number of sensor locations, $\boldsymbol {r} = \boldsymbol {s}_{\alpha }$, for $\alpha = 1,\ldots,d$, and define from this an observation operator, $h: \mathbb {R}^{n} \rightarrow \mathbb {R}^{d}$, mapping a given state vector ${\textit {x}}$ to the pressure at these sensor locations

\begin{equation} h({\textit{x}}) = \begin{pmatrix} {\rm \Delta} p(\boldsymbol{s}_{1},{\textit{x}}) & {\rm \Delta} p(\boldsymbol{s}_{2},{\textit{x}}) & \cdots & {\rm \Delta} p(\boldsymbol{s}_{d},{\textit{x}}) \end{pmatrix}^{\rm T}. \end{equation}

The objective of this paper is essentially to explore the extent to which we can invert function (2.5): from a given set of pressure observations ${\textit {y}}^{\ast } \in \mathbb {R}^{d}$ of the true system at $\boldsymbol {s}_{\alpha }$, $\alpha = 1,\ldots,d$, determine the state ${\textit {x}}$. In this work, the true sensor measurements ${\textit {y}}^{\ast }$ (the truth data) will be synthetically generated from the pressure field of a set of vortex elements in unbounded quiescent fluid, obtained from the same expression for pressure (1.2) that we use for $h({\textit {x}})$ in the estimator. Throughout, we will refer to the set of vortices that generated the measurements as the true vortices. However, there is inherent uncertainty in ${\textit {y}}^{\ast }$ due to random measurement noise $\varepsilon \in \mathbb {R}^{d}$, so we model the predicted observations ${\textit {y}}$ as

\begin{equation} {\textit{y}} = h({\textit{x}}) + \varepsilon, \end{equation}

where $\varepsilon$ is normally distributed about zero mean, $\mathcal{N}(\varepsilon | 0,\varSigma _{\mathcal{E}})$, and the sensor noise is assumed independent and identically distributed, so that its covariance is $\varSigma _{\mathcal{E}} = \sigma _{\mathcal {E}}^{2} I$ with $I \in \mathbb {R}^{d \times d}$ the identity. We seek a probabilistic form of the inversion of (2.6) when set equal to ${\textit {y}}^{\ast }$. That is, we seek the conditional probability distribution of states based on the observed data, ${\rm \pi} ({\textit {x}}\,|\,{\textit {y}}^{\ast })$: the peaks of this distribution would represent the most probable state(s) based on the measurements, and the breadth of the peaks would represent our uncertainty about the answer.
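The observation operator and the synthetic truth data can be sketched for unregularized point vortices, whose pressure away from the cores follows from the unsteady Bernoulli equation, $p - p_{\infty} = -\rho(\partial\phi/\partial t + |\boldsymbol{u}|^{2}/2)$, where $\partial\phi/\partial t$ arises because each vortex is advected by the velocity induced by the others. This reproduces the quadratic dependence on the strengths seen in (1.2), but it is not the regularized kernels $P_{\epsilon}$ and $\varPi_{\epsilon}$ of Appendix A, so it should only be evaluated at sensors well outside the cores. The function name `observation_operator`, the state ordering and the sensor layout are assumptions made for illustration.

```python
import numpy as np

def observation_operator(state, sensors, rho=1.0):
    """Hypothetical h(x): p - p_inf at the sensors for N unregularized point vortices.

    state:   flat array (x_1, y_1, G_1, ..., x_N, y_N, G_N), an assumed ordering.
    sensors: complex sensor positions x + i*y, assumed well outside the vortex cores.
    Uses unsteady Bernoulli: p - p_inf = -rho * (dphi/dt + |u|**2 / 2).
    """
    xv, yv, gam = np.asarray(state, dtype=float).reshape(-1, 3).T
    zv = xv + 1j * yv
    dz = sensors[:, None] - zv[None, :]                 # shape (d, N)
    w = np.sum(gam / (2j * np.pi * dz), axis=1)         # conjugate velocity u - i*v
    # Velocity u_J + i*v_J of each vortex, induced by the other vortices only.
    dv = zv[:, None] - zv[None, :]
    np.fill_diagonal(dv, 1.0)                           # placeholder to avoid 1/0
    inv = 1.0 / dv
    np.fill_diagonal(inv, 0.0)                          # drop self-induction
    zdot = np.conj(inv @ gam / (2j * np.pi))
    # Rate of change of the complex potential at a fixed point, due to vortex motion.
    dWdt = np.sum(-gam * zdot / (2j * np.pi * dz), axis=1)
    return -rho * (dWdt.real + 0.5 * np.abs(w) ** 2)

# Synthetic truth data y* = h(x_true) + noise, with d = 8 sensors on the x axis.
sensors = np.linspace(-2.0, 2.0, 8) + 0j
x_true = np.array([-0.5, 1.0, 1.0, 0.5, 1.0, -1.0])    # the pair of figure 2(c,d)
sigma_E = 5e-4
rng = np.random.default_rng(2)
y_star = observation_operator(x_true, sensors) + sigma_E * rng.standard_normal(sensors.size)
```

Because the pressure depends on the strengths only through products $\varGamma_{J}\varGamma_{K}$, and a line of sensors is its own mirror image, negating all strengths, reflecting all vortices across the sensor line or swapping vortex labels leaves `observation_operator` unchanged; these are the symmetries discussed in § 2.2.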

From Bayes’ theorem, the conditional probability of the state given an observation, ${\rm \pi} ({\textit {x}}\,|\,{\textit {y}})$, can be regarded as a posterior distribution over ${\textit {x}}$,

\begin{equation} {\rm \pi}({\textit{x}}\,|\,{\textit{y}}) = \frac{L({\textit{y}}\,|\,{\textit{x}})\,{\rm \pi}_{0}({\textit{x}})}{{\rm \pi}({\textit{y}})}, \end{equation}

where ${\rm \pi} _{0}({\textit {x}})$ is the prior distribution, describing our original beliefs about the state ${\textit {x}}$, and $L({\textit {y}}\,|\,{\textit {x}})$ is called the likelihood function, representing the probability of observing certain data ${\textit {y}}$ at a given state, ${\textit {x}}$. The likelihood function encapsulates our physics-based prediction of the sensor measurements, based on the observation operator $h({\textit {x}})$. Collectively, $L({\textit {y}}\,|\,{\textit {x}}) {\rm \pi}_{0}({\textit {x}})$ represents the joint distribution of states and their associated observations. The distribution of observations, ${\rm \pi} ({\textit {y}})$, is a normalizing factor that does not depend on ${\textit {x}}$. Its value is unnecessary for characterizing the posterior distribution over ${\textit {x}}$, since only comparisons (ratios) of the posterior are needed during sampling, as we discuss below in § 2.4. Thus, we can omit the denominator in (2.7). We evaluate this unnormalized posterior at the true observations, ${\textit {y}}^{\ast }$, and denote it by $\tilde {{\rm \pi} }({\textit {x}}\,|\,{\textit {y}}^{\ast }) = L({\textit {y}}^{\ast }\,|\,{\textit {x}}) {\rm \pi}_{0}({\textit {x}})$.

Our goal is to explore and characterize this unnormalized posterior for the vortex system. Expressing our lack of prior knowledge, we write ${\rm \pi} _{0}({\textit {x}})$ as a uniform distribution within a certain acceptable bounding region $B$ on the state components (discussed in more specific detail below):

\begin{equation} {\rm \pi}_{0}({\textit{x}}) = \mathcal{U}_{n}({\textit{x}}\,|\,B). \end{equation}

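Following from our observation model (2.6) with Gaussian noise, the likelihood is a Gaussian distribution about the predicted observations, $h({\textit {x}})$,

\begin{equation} L({\textit{y}}\,|\,{\textit{x}}) = \mathcal{N}({\textit{y}}\,|\,h({\textit{x}}),\varSigma_{\mathcal{E}}) = \frac{1}{\sqrt{(2{\rm \pi})^{d}\det \varSigma_{\mathcal{E}}}} \exp\left(-\frac{1}{2}\|{\textit{y}} - h({\textit{x}})\|^{2}_{\varSigma_{\mathcal{E}}}\right), \end{equation}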

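where we have defined the covariance-weighted norm

\begin{equation} \|{\textit{v}}\|^{2}_{\varSigma_{\mathcal{E}}} \equiv {\textit{v}}^{\rm T}\varSigma_{\mathcal{E}}^{-1}{\textit{v}}, \quad {\textit{v}} \in \mathbb{R}^{d}, \end{equation}

which, for our independent and identically distributed noise, reduces to $|{\textit{v}}|^{2}/\sigma_{\mathcal{E}}^{2}$.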

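Thus, our unnormalized posterior for the vortex estimator is given by

\begin{equation} \tilde{{\rm \pi}}({\textit{x}}\,|\,{\textit{y}}^{\ast}) = \frac{1}{\sqrt{(2{\rm \pi})^{d}\det \varSigma_{\mathcal{E}}}} \exp\left(-\frac{1}{2}\|{\textit{y}}^{\ast} - h({\textit{x}})\|^{2}_{\varSigma_{\mathcal{E}}}\right)\mathcal{U}_{n}({\textit{x}}\,|\,B). \end{equation}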

For practical purposes it is helpful to take the log of this probability, so that ratios of probabilities – some of them near machine zero – are assessed instead via differences in their logs. Because only differences are relevant, we can dispense with constants that arise from taking the log, such as the inverse square root factor. We note that the uniform probability distribution $\mathcal{U}_{n}({\textit {x}}\,|\,B)$ is constant and positive inside $B$ and zero outside. Thus, to within an additive constant, this log posterior is

\begin{equation} \log \tilde{{\rm \pi}}({\textit{x}}\,|\,{\textit{y}}^{\ast}) = c_{B}({\textit{x}}) - \frac{1}{2}\|{\textit{y}}^{\ast} - h({\textit{x}})\|^{2}_{\varSigma_{\mathcal{E}}}, \end{equation}

where $c_{B}({\textit {x}})$ is a barrier function arising from the log of the uniform distribution, equal to zero for any ${\textit {x}}$ inside the restricted region $B$ of our uniform distribution, and $c_{B}({\textit {x}})= -\infty$ outside of $B$.
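The log posterior (2.12) is straightforward to evaluate once $h$ is available. The sketch below assumes a box-shaped region $B$ with hypothetical bounds `lower` and `upper`, independent and identically distributed noise so that the weighted norm is $|{\textit{v}}|^{2}/\sigma_{\mathcal{E}}^{2}$, and a toy single-vortex observation operator `h_single` (hypothetical) based on the unregularized kernel $P(\boldsymbol{r}) = 1/(4{\rm \pi}^{2}|\boldsymbol{r}|^{2})$. The barrier $c_{B}$ is implemented by returning $-\infty$ outside the box, and the additive constant is dropped, so the value is zero whenever the prediction matches noise-free data exactly.

```python
import numpy as np

def log_posterior(state, y_star, h, sigma_E, lower, upper):
    """Unnormalized log posterior: c_B(x) - 0.5 * |y* - h(x)|**2 / sigma_E**2 (iid noise).

    lower, upper: hypothetical box bounds that define the uniform prior region B.
    """
    state = np.asarray(state, dtype=float)
    if np.any(state < lower) or np.any(state > upper):
        return -np.inf                                  # c_B(x) = -inf outside B
    r = y_star - h(state)
    return -0.5 * np.dot(r, r) / sigma_E**2             # c_B(x) = 0 inside B

# Toy observation operator: one point vortex observed by sensors on the x axis.
sensors_x = np.linspace(-2.0, 2.0, 5)

def h_single(state, rho=1.0):
    xv, yv, gam = state
    return -rho * gam**2 / (8 * np.pi**2 * ((sensors_x - xv) ** 2 + yv**2))

lower = np.array([-2.0, 0.05, -2.0])    # bounds on (x, y, Gamma)
upper = np.array([2.0, 2.0, 2.0])
x_true = np.array([0.2, 0.8, 1.0])
y_star = h_single(x_true)               # noise-free data for this check
sigma_E = 5e-4
```

A state with $\varGamma$ replaced by $-\varGamma$ attains the same value whenever it lies in $B$, so a uniform prior on a box that admits both signs cannot by itself break the sign symmetry discussed in § 2.2.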

In the examples that we present in this paper, the pressure sensors will be uniformly distributed along a straight line on the $x$ axis, unless otherwise specified. As above, we refer to the set of vortices that generated the measurements as the true vortices and to the set of vortices used for estimation purposes as the vortex estimator. To ensure finite pressures throughout the domain, both the true vortices and the vortex estimator are regularized as discussed in Appendix A.4, with a small blob radius $\epsilon = 0.01$ unless otherwise stated. To improve the scaling and conditioning of the problem, the pressure (relative to ambient) is implicitly normalized by $\rho \varGamma _{0}^{2}/L^{2}$, where $\varGamma _{0}$ is the strength of the largest-magnitude vortex in the true set and $L$ represents a characteristic distance of the vortex set from the sensors; all positions are implicitly normalized by $L$ and vortex strengths by $\varGamma _{0}$. Unless otherwise specified, the measurement noise is $\sigma _{\mathcal{E}} = 5 \times 10^{-4}$.
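The normalization can be checked with any pressure model that scales as $\rho\varGamma^{2}/L^{2}$. The sketch below assumes the pressure of a single algebraic-blob vortex, $p - p_{\infty} = -\rho\varGamma^{2}/[8{\rm \pi}^{2}(|\boldsymbol{s}-\boldsymbol{r}_{J}|^{2}+\epsilon^{2})]$ (an assumption, not necessarily the regularization of Appendix A.4), together with hypothetical dimensional values of $\rho$, $L$ and $\varGamma_{0}$. With positions and $\epsilon$ scaled by $L$ and strengths by $\varGamma_{0}$, the dimensional pressure divided by $\rho\varGamma_{0}^{2}/L^{2}$ matches the nondimensional computation.

```python
import numpy as np

def blob_pressure(sensors_x, xv, yv, gam, eps, rho):
    """p - p_inf of one regularized vortex at sensors on the x axis (assumed algebraic blob)."""
    return -rho * gam**2 / (8 * np.pi**2 * ((sensors_x - xv) ** 2 + yv**2 + eps**2))

# Dimensional problem with hypothetical values: rho in kg/m^3, L in m, Gamma_0 in m^2/s.
rho, L, Gamma0 = 1.2, 0.05, 0.3
sensors_dim = L * np.linspace(-1.0, 1.0, 6)             # sensors uniformly spaced on the x axis
p_dim = blob_pressure(sensors_dim, 0.3 * L, 1.0 * L, Gamma0, 0.01 * L, rho)

# Nondimensional problem: lengths / L, strengths / Gamma_0, pressure / (rho * Gamma_0**2 / L**2).
p_nd = blob_pressure(sensors_dim / L, 0.3, 1.0, 1.0, 0.01, 1.0)
```

In this example the largest normalized sensor pressure has a magnitude of roughly $1.25 \times 10^{-2}$, so the default noise level $\sigma_{\mathcal{E}} = 5 \times 10^{-4}$ corresponds to about 4 % of the peak signal.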

2.2. Symmetries and nonlinearity in the vortex-pressure system

As mentioned in the introduction, there are many situations in which multiple solutions arise due to symmetries. This is easy to see from a simple thought experiment, depicted in figure 1(a). Suppose that we wish to estimate a single vortex from pressure sensors arranged in a straight line. A vortex on either side of this line of sensors will induce the same pressure on the sensors, and a vortex of either sign of strength will as well. Thus, in this simple problem, there are four possible states that are indistinguishable from each other, and we would need more information about the circumstances of the problem to rule out three of them. Such symmetries arise commonly in the problems we will study in this paper.
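The four-fold ambiguity of figure 1(a) can be reproduced in a few lines. The sketch assumes a single algebraic-blob vortex (a regularization chosen for illustration) observed by sensors on the $x$ axis; `single_vortex_pressure` is a hypothetical helper. Because this pressure depends on the strength only through $\varGamma^{2}$ and on the vortex height only through $y_{J}^{2}$, all four configurations give identical readings, whereas moving the vortex changes them.

```python
import numpy as np

def single_vortex_pressure(sensors_x, xv, yv, gam, eps=0.01, rho=1.0):
    """p - p_inf at sensors on the x axis due to one regularized vortex (assumed blob)."""
    return -rho * gam**2 / (8 * np.pi**2 * ((sensors_x - xv) ** 2 + yv**2 + eps**2))

sensors_x = np.linspace(-2.0, 2.0, 9)
xv, yv, gam = 0.4, 0.9, 1.0

# The configurations of figure 1(a): reflect across the sensor line and/or flip the sign.
configs = [(xv, yv, gam), (xv, -yv, gam), (xv, yv, -gam), (xv, -yv, -gam)]
readings = [single_vortex_pressure(sensors_x, *c) for c in configs]
```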

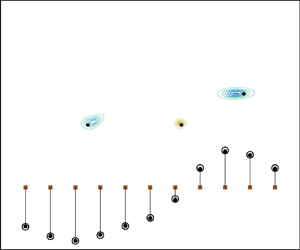

Figure 1. (a) Four configurations of vortices that would generate identical measurements for the set of pressure sensors (brown squares). (b) Two distinct vortex states that differ only in the vortex labelling but generate identical flow fields.

The symmetry with respect to the sign of vortex strength is due to the nonlinear (quadratic) relationship between pressure and vorticity. However, this same nonlinearity partly alleviates the symmetry issue, because of the coupling that it introduces between vortices. Figure 2 depicts the pressure fields for two examples of a pair of vortices: one in which the vortices in the pair have equal strengths and another in which the vortices have equal but opposite strengths. Although the pressure in the vortex cores of both pairs is similar and sharply negative, the pressures outside the cores are distinctly different because the product $\varGamma_{1}\varGamma_{2}$ that multiplies the interaction kernel in (1.2) changes sign. At the sensor positions, the pair of opposite sign produces a small region of positive pressure. These differences are essential for inferring the relative signs of vortex strengths in sets of vortices. However, it is important to stress that the pressure is invariant to a change of sign of all vortices in the set, so we would still need more prior information to discriminate one overall sign from the other.

Figure 2. Pressure fields and associated sensor measurements for two examples of a pair of vortex elements at $\boldsymbol {r}_{1} = (-1/2,1)$ and $\boldsymbol {r}_{2} = (1/2,1)$, respectively. Sensors are depicted in the pressure field plots as brown squares. Panels show (a,b) $\varGamma _{1} = 1$ and $\varGamma _{2} = 1$ and (c,d) $\varGamma _{1} = 1$ and $\varGamma _{2} = -1$. In both cases, pressure contours are between $-0.5$ (red) and $0.01$ (blue).

Another symmetry arises when there is more than one vortex to estimate, as in figure 1(b), because in such a case there is no unique ordering of the vortices in the state vector. With each of the vortices assigned a fixed set of parameters, any of the $N!$ permutations of the ordering leads to the same pressure measurements. This vortex relabelling symmetry is a discrete analogue of the particle relabelling symmetry in continuum mechanics (Marsden & Ratiu 2013); it is also closely analogous to the non-identifiability issue of the mixture models that will be used for the probabilistic modelling in this paper. All of the $N!$ solutions are obviously equivalent from a flow field perspective, so this symmetry is not a problem if we assess estimator performance based on flow field metrics. However, the $N!$ solutions form distinct points in the state space and we must anticipate this multiplicity when we search for high-probability regions.
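These symmetries can be checked numerically. The Python sketch below is a simplified, hypothetical stand-in rather than the sensor model used in this paper. It computes the velocity of singular point vortices by the Biot–Savart law and keeps only the quasi-steady Bernoulli term $p = -|\boldsymbol{u}|^{2}/2$ (unit density, unsteady term omitted). The flat state layout `[x1, y1, G1, x2, y2, G2, ...]` and the function names are illustrative assumptions. Because this pressure is quadratic in the velocity, it is unchanged by a global sign flip, by mirroring every vortex across the sensor line, and by relabelling. It still distinguishes the co-rotating pair of figure 2 from the counter-rotating one.

```python
import numpy as np

def induced_velocity(points, state):
    """Velocity at 2-D points induced by singular point vortices.

    state uses a hypothetical flat layout [x1, y1, G1, x2, y2, G2, ...].
    """
    u = np.zeros((len(points), 2))
    for xv, yv, gam in np.reshape(state, (-1, 3)):
        dx, dy = points[:, 0] - xv, points[:, 1] - yv
        r2 = dx**2 + dy**2
        u[:, 0] -= gam * dy / (2.0 * np.pi * r2)  # u = G/(2 pi r^2) * (-dy, dx)
        u[:, 1] += gam * dx / (2.0 * np.pi * r2)
    return u

def quasi_steady_pressure(points, state):
    """Simplified stand-in sensor model: p = -|u|^2 / 2 (unsteady term omitted)."""
    return -0.5 * np.sum(induced_velocity(points, state) ** 2, axis=1)
```

For the pair of figure 2, flipping both strengths, reflecting both vortices to $y = -1$, or swapping their order leaves the sensor readings unchanged. Setting only $\varGamma_{2} = -1$ changes them.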

The barrier function $c_{B}({\textit {x}})$ in (2.12) allows us to anticipate and eliminate some modes that arise from problem symmetries, because we can use the bounding region $B$ to reject samples that fail to meet certain criteria. To eliminate some of the aforementioned symmetries, we will, without loss of generality, restrict our vortex estimator to search for vortices that lie above the line of sensors on the $x$ axis. For cases of multiple vortices in the estimator, we re-order the vortex entries in the state vector by their $x$ position at each Markov chain Monte Carlo (MCMC) step to eliminate the relabelling symmetry. We also assume that the leftmost vortex has positive strength, which reduces the number of probable states by half; the signs of all estimated vortices can easily be switched a posteriori if new knowledge shows that this assumption is wrong.
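The following sketch shows one way to impose these constraints inside a sampler. It is an illustration under stated assumptions, not the implementation of $c_{B}$. The state is taken as a flat array `[x1, y1, G1, ...]`, and the helper names `canonicalize`, `satisfies_symmetry_constraints` and `log_prior` are hypothetical. The barrier returns $-\infty$, so that a Metropolis–Hastings proposal is always rejected, whenever a vortex lies on or below the sensor line or the leftmost vortex has non-positive strength.

```python
import numpy as np

def canonicalize(state):
    """Sort the vortex entries of a flat state [x1, y1, G1, x2, ...] by x
    position, which removes the N! relabelling symmetry."""
    v = np.reshape(np.asarray(state, dtype=float), (-1, 3))
    return v[np.argsort(v[:, 0], kind="stable")].ravel()

def satisfies_symmetry_constraints(state):
    """True if every vortex lies above the sensor line (y > 0) and the leftmost
    vortex has positive strength; assumes the state is already canonicalized."""
    v = np.reshape(np.asarray(state, dtype=float), (-1, 3))
    return bool(np.all(v[:, 1] > 0) and v[0, 2] > 0)

def log_prior(state):
    """Hypothetical barrier-style log prior: 0 inside the admissible region,
    -inf outside it."""
    return 0.0 if satisfies_symmetry_constraints(canonicalize(state)) else -np.inf
```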

2.3. The true covariance and rank deficiency

The main challenge of the vortex inference problem is that the observation operator is nonlinear, so the posterior (2.11) is not Gaussian. Thus, we will instead sample this posterior and develop an approximate model for the samples, composed of a mixture of Gaussians. However, we can obtain some important insight by supposing that we already know the true state and then linearizing the observation operator about it,

\begin{equation} h(\textit{x}) \approx h(\textit{x}^{\ast}) + H(\textit{x}-\textit{x}^{\ast}), \end{equation}

where $H \equiv \boldsymbol {\nabla }h({\textit {x}}^{\ast }) \in \mathbb {R}^{d \times n}$ is the Jacobian of the observation operator at the true state. Then we can derive an approximating $n$-dimensional Gaussian model about mean ${\textit {x}}^{\ast }$ (plus a bias due to noise in the realization of the true measurements) with covariance

\begin{equation} \varSigma^{\ast}_{\textit{X}} = (H^{\rm T}\varSigma_{\mathcal{E}}^{-1}H)^{-1}. \end{equation}

A brief derivation of this result is included in Appendix C.

We will refer to $\varSigma ^{\ast }_{{\textit {X}}}$ as the ‘true’ state covariance. It is useful to note that the matrix $H^{{\rm T}}\varSigma _{\mathcal{E}}^{-1}H$ is the so-called Fisher information matrix for our Gaussian likelihood (Cui & Zahm 2021), evaluated at the true state. In other words, it quantifies the information about the state of the system that is available in the measurements. Because all sensors have the same noise variance, $\sigma _{\mathcal{E}}^{2}$, the noise covariance is $\varSigma_{\mathcal{E}} = \sigma_{\mathcal{E}}^{2} I$, and so $\varSigma ^{\ast }_{{\textit {X}}} = \sigma _{\mathcal{E}}^{2}(H^{{\rm T}}H)^{-1}$. We can then use the singular value decomposition of the Jacobian, $H = U S V^{{\rm T}}$, to write a diagonalized form of the covariance,

\begin{equation} \varSigma^{\ast}_{\textit{X}} = V \varLambda V^{\rm T}. \end{equation}

Here, the eigenvalue matrix is $\varLambda = \sigma _{\mathcal{E}}^{2} D^{-1}$, where $D = S^{{\rm T}}S \in \mathbb {R}^{n \times n}$ is a diagonal matrix containing the squares $s^{2}_{j}$ of the singular values of $H$, in decreasing magnitude up to the rank $r \leq \min (d,n)$ of $H$, padded with $n - r$ zeros. The uncertainty ellipsoid thus has semi-axis lengths

\begin{equation} \lambda_{j}^{1/2} = \frac{\sigma_{\mathcal{E}}}{s_{j}}, \quad j = 1,\ldots,n, \end{equation}

along the directions $v_{j}$ given by the corresponding columns of $V$. Thus, the greatest uncertainty $\lambda ^{1/2}_{n}$ is associated with the smallest singular value $s_{n}$ of $H$. The corresponding eigenvector, $v_{n}$, indicates the combination of state components about which we are most uncertain.
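The relation between the singular values of $H$ and the semi-axes of the uncertainty ellipsoid, $\lambda_{j}^{1/2} = \sigma_{\mathcal{E}}/s_{j}$ (from $\varLambda = \sigma_{\mathcal{E}}^{2} D^{-1}$), can be sketched in a few lines of NumPy. The function name is hypothetical, and any matrix can be passed for illustration. In practice $H$ would be the Jacobian of the observation operator at the true state.

```python
import numpy as np

def uncertainty_axes(H, sigma):
    """Semi-axis lengths lambda_j^{1/2} = sigma / s_j and directions v_j of the
    true-covariance ellipsoid, from the SVD H = U S V^T (equal noise sigma)."""
    _, s, Vt = np.linalg.svd(H)            # singular values in decreasing order
    s_full = np.zeros(H.shape[1])
    s_full[: s.size] = s                   # pad with n - min(d, n) zeros
    with np.errstate(divide="ignore"):
        semi_axes = sigma / s_full         # infinite where s_j = 0
    return semi_axes, Vt.T                 # columns of V are the directions v_j
```

A zero singular value yields an infinite semi-axis, and the corresponding column of $V$ then lies in the null space of $H$.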

In fact, the smallest singular values of $H$ are necessarily zero if $n > d$, i.e. when there are fewer sensors than states and the problem is therefore underdetermined. In such a case, $H$ has a null space spanned by the last $n-r$ columns of $V$, and ${\textit {x}}^{\ast }$ is not a unique solution; rather, it is simply one element of a manifold of vortex states that produce equivalent sensor readings (to within the noise). The covariance $\varSigma ^{\ast }_{{\textit {X}}}$ evaluated at any ${\textit {x}}^{\ast }$ on the manifold reveals the local tangent to this manifold, i.e. the directions near ${\textit {x}}^{\ast }$ along which the sensor values are unchanged to first order. The true covariance $\varSigma ^{\ast }_{{\textit {X}}}$ will also be very useful for illuminating cases in which the problem is ostensibly fully determined ($n \leq d$), but for which the arrangement of sensors and true vortices nonetheless creates significant uncertainty in the estimate. In some of these cases, the smallest singular values may still be zero or quite small, indicating that the effective rank of $H$ is smaller than $n$.
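In floating-point arithmetic, a ‘zero’ singular value is rarely exactly zero, so the effective rank has to be judged against a threshold. The sketch below uses a relative tolerance `rel_tol` on $s_{j}/s_{1}$. Both the function and its default threshold are hypothetical choices, not values taken from this study.

```python
import numpy as np

def effective_rank(H, rel_tol=1e-6):
    """Count singular values of H above rel_tol * s_max (a hypothetical
    threshold); the remaining right-singular vectors span the near-null space."""
    _, s, Vt = np.linalg.svd(H)
    r = int(np.sum(s > rel_tol * s[0]))
    return r, Vt[r:].T                     # columns: near-null directions of H
```

Consider a Jacobian whose third column nearly duplicates its first. `np.linalg.matrix_rank`, with its machine-precision default tolerance, reports it as full rank, but at this tolerance its effective rank is 2.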

2.4. Sampling and modelling of the posterior

The true covariance matrix (2.14) and its eigendecomposition will be an important tool in the study that follows, but we will generally use it only when we presume to know the true state ${\textit {x}}^{\ast }$ and seek insight into the estimation in the vicinity of the solution. To characterize the problem more fully and explore the potential multiplicity of solutions, we will generate samples of the posterior and then fit the samples with an approximate distribution $\hat {{\rm \pi} }_{{\textit {y}}^{\ast }}({\textit {x}}) \approx {\rm \pi}({\textit {x}}\,|\,{\textit {y}}^{\ast })$ over ${\textit {x}}$. The overall algorithm is shown in the flowchart in figure 3 in the context of an example of estimating one vortex with three sensors. For the sampling task, we use the Metropolis–Hastings method (see, e.g. Chib & Greenberg 1995), a simple but powerful form of MCMC. This method relies only on the difference between the log probabilities of a proposed chain entry and the current chain entry. With the symmetric proposals used here, a proposal that is more probable than the current entry is always added to the chain, and a less probable one is added with probability equal to the ratio of the two probabilities. To ensure that the MCMC sampling does not get stuck in one of possibly multiple high-probability regions, we use the method of parallel tempering (Sambridge 2014).

Figure 3. One true vortex and one-vortex estimator using three sensors, with a flowchart showing the overall algorithm and references to figure panels. (a) True covariance ellipsoid. The ellipse on each coordinate plane represents the marginal covariance between the state components in that plane. The true vortex state is shown as a black dot. (b) True sensor data (filled circles) with noise levels (vertical lines), compared with sensor values from estimate (open circles), obtained from expected state of the sample set. (c) The MCMC samples (blue) and the resulting vortex position covariance ellipses (coloured unfilled ellipses) from the GMM. Thicker lines of the ellipses indicate higher weights in the mixture. The true vortex position is shown as a filled black circle, and the true covariance ellipse for vortex position (corresponding to the grey ellipse in (a)) is shown filled in grey. Sensor positions are shown as brown squares. (d) Contours of the expected vorticity field, based on the mixture model.

In practice, we have generally found good results in parallel tempering by using five parallel Markov chains exploring the target distribution raised to respective powers $3.5^{p}$, where $p$ takes integer values between $-4$ and $0$. We initially carry out $10^{4}$ steps of the algorithm with the MCMC proposal variance set to a diagonal matrix with entries $4\times 10^{-4}$ for every state component. Then, we perform $10^{6}$ steps with proposal variances set more tightly, uniformly equal to $2.5\times 10^{-5}$. The sample data set is then obtained from the $p = 0$ chain after discarding the first half of the chain (for burn-in) and retaining only every $100$th chain entry of the remainder to reduce autocorrelation in the samples. On a MacBook Pro with an M1 processor, the overall process takes around 20 seconds in the most challenging cases (i.e. the highest-dimensional state spaces). Other methods move more efficiently toward local maxima, such as hybrid MCMC, which uses the gradient of the log posterior to guide the ascent. However, the approach we have taken here is quite versatile for more general cases, particularly those in which the Jacobian is impractical to compute repeatedly in a long sequential process.
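Below is a compact sketch of random-walk Metropolis–Hastings with parallel tempering, following the settings described above (five chains with powers $3.5^{p}$, $p = -4,\ldots,0$). It is an illustration, not the code used for this paper. The exchange move between neighbouring chains uses the standard replica-exchange acceptance rule. The function name and signature are hypothetical, and `log_post` is any user-supplied log posterior that returns $-\infty$ outside the bounding region.

```python
import numpy as np

def parallel_tempering(log_post, x0, n_steps, prop_var, rng, betas=None):
    """Random-walk Metropolis-Hastings on tempered targets pi(x)**beta, with a
    proposed exchange between one random pair of neighbouring chains per step.

    Returns the states visited by the last chain (beta = 1 by default).
    x0 must lie inside the bounding region, so that log_post(x0) is finite.
    """
    if betas is None:
        betas = 3.5 ** np.arange(-4, 1)        # 3.5**p for p = -4, ..., 0
    betas = np.asarray(betas, dtype=float)
    n_chains, dim = len(betas), len(x0)
    x = np.tile(np.asarray(x0, dtype=float), (n_chains, 1))
    lp = np.array([log_post(xi) for xi in x])
    chain = np.empty((n_steps, dim))
    for t in range(n_steps):
        for c in range(n_chains):
            # symmetric Gaussian proposal with variance prop_var per component
            prop = x[c] + np.sqrt(prop_var) * rng.standard_normal(dim)
            lp_prop = log_post(prop)
            # accept with probability min(1, [pi(prop) / pi(x_c)]**beta_c)
            if np.log(rng.random()) < betas[c] * (lp_prop - lp[c]):
                x[c], lp[c] = prop, lp_prop
        # replica exchange between chains c and c + 1
        c = rng.integers(n_chains - 1)
        if np.log(rng.random()) < (betas[c] - betas[c + 1]) * (lp[c + 1] - lp[c]):
            x[[c, c + 1]] = x[[c + 1, c]]
            lp[[c, c + 1]] = lp[[c + 1, c]]
        chain[t] = x[-1]
    return chain
```

Following the procedure above, the retained sample set would be `chain[n_steps // 2 :: 100]`, i.e. the second half of the untempered chain thinned to every 100th entry. The two-stage proposal variance could be mimicked by calling the function twice, starting the second call from the final state of the first.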

To approximate the posterior distribution over ${\textit {x}}$ from the samples, we employ a Gaussian mixture model (GMM), which assumes the form of a weighted sum of $K$ normal distributions (the mixture components),

\begin{equation} \hat{\rm \pi}_{\textit{y}^{{\ast}}}(\textit{x}) = \sum_{k=1}^{K}\alpha_{k} \mathcal{N}(\textit{x}\,|\,\bar{\textit{x}}^{(k)},\varSigma^{(k)}), \end{equation}

where $\bar {{\textit {x}}}^{(k)}$ and $\varSigma ^{(k)}$ are the mean and covariance of component $k$ of the mixture. Each weight $\alpha _{k}$ lies between 0 and 1 and represents the probability of a sample point ‘belonging to’ component $k$, so it quantifies the importance of that component in the overall mixture; the weights sum to 1. For a given set of samples and a pre-selected number of components $K$, the parameters of the GMM ($\alpha _{k}$, $\bar {{\textit {x}}}^{(k)}$ and $\varSigma ^{(k)}$) are found via the expectation maximization algorithm (Bishop 2006). It is generally advantageous to choose $K$ to be large (${\sim }5$–$9$) because extraneous components are assigned little weight, resulting in a smaller number of effective components. It should also be noted that, for the special case of a single mode of small variance, a GMM with $K=1$ and a sufficient number of samples approaches the Gaussian approximation about the true state ${\textit {x}}^{\ast }$ described in § 2.3, with covariance $\varSigma ^{\ast }_{{\textit {X}}}$.
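The expectation maximization fit can be sketched with NumPy alone. This is a generic textbook EM for a full-covariance GMM, not the particular implementation used in this study. The names `fit_gmm` and `gmm_density`, the fixed iteration count, and the small diagonal regularization `reg` (added to keep each covariance positive definite) are assumptions.

```python
import numpy as np

def log_gaussian(X, mean, cov):
    """log N(x | mean, cov) for each row x of X, via a Cholesky factor of cov."""
    L = np.linalg.cholesky(cov)
    z = np.linalg.solve(L, (X - mean).T)  # L^{-1} (x - mean)
    return (-0.5 * np.sum(z**2, axis=0) - np.sum(np.log(np.diag(L)))
            - 0.5 * X.shape[1] * np.log(2.0 * np.pi))

def fit_gmm(X, K, rng, n_iter=200, reg=1e-6):
    """Fit a K-component, full-covariance GMM to the samples X by EM."""
    n, d = X.shape
    means = X[rng.choice(n, size=K, replace=False)]  # start at random samples
    covs = np.array([np.cov(X, rowvar=False) + reg * np.eye(d)] * K)
    alphas = np.full(K, 1.0 / K)
    for _ in range(n_iter):
        # E step: responsibilities, normalized after a max shift for stability
        logp = np.column_stack([np.log(a) + log_gaussian(X, m, c)
                                for a, m, c in zip(alphas, means, covs)])
        resp = np.exp(logp - logp.max(axis=1, keepdims=True))
        resp /= resp.sum(axis=1, keepdims=True)
        # M step: re-estimate weights, means and covariances
        Nk = resp.sum(axis=0) + 1e-12
        alphas = Nk / Nk.sum()
        means = resp.T @ X / Nk[:, None]
        for k in range(K):
            diff = X - means[k]
            covs[k] = (resp[:, k, None] * diff).T @ diff / Nk[k] + reg * np.eye(d)
    return alphas, means, covs

def gmm_density(X, alphas, means, covs):
    """Mixture density sum_k alpha_k N(x | mean_k, cov_k) at each row of X."""
    return sum(a * np.exp(log_gaussian(X, m, c)) for a, m, c in zip(alphas, means, covs))
```

After each M step, the weighted mean of the component means, $\sum_{k}\alpha_{k}\bar{{\textit {x}}}^{(k)}$, equals the sample mean, which is a convenient sanity check.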

As we show in Appendix B, the GMM has a very attractive feature when used in the context of singular vortex elements because we can exactly evaluate the expectation of the vorticity field under the GMM probability,

\begin{equation} E[\boldsymbol{\omega}](\boldsymbol{r}) = \sum_{k=1}^{K} \alpha_{k}\sum_{\textit{J}=1}^{N} [\bar{\varGamma}^{(k)}_{\textit{J}} + \varSigma^{(k)}_{\varGamma_{\textit{J}} \boldsymbol{r}_{\textit{J}}} {\varSigma^{(k)}_{\boldsymbol{r}_{\textit{J}} \boldsymbol{r}_{\textit{J}}}}^{{-}1} (\boldsymbol{r} - \bar{\boldsymbol{r}}^{(k)}_{\textit{J}} )] \mathcal{N}(\boldsymbol{r}\,|\,\bar{\boldsymbol{r}}_{\textit{J}}^{(k)}, \varSigma^{(k)}_{\boldsymbol{r}_{\textit{J}} \boldsymbol{r}_{\textit{J}}}), \end{equation}

where $\bar {\boldsymbol {r}}^{(k)}_{{\textit {J}}}$ and $\bar {\varGamma }^{(k)}_{{\textit {J}}}$ are the position and strength of the ${\textit {J}}$th vortex of mean state $\bar {{\textit {x}}}^{(k)}$ in (2.1), and $\varSigma ^{(k)}_{\boldsymbol {r}_{{\textit {J}}}\boldsymbol {r}_{{\textit {J}}}}$ and $\varSigma ^{(k)}_{\varGamma _{{\textit {J}}} \boldsymbol {r}_{{\textit {J}}}}$ are elements in the ${\textit {J}}{\textit {J}}$-block of covariance $\varSigma ^{(k)}$, defined in (2.4). Thus, under a GMM of the state, the expected vorticity field is itself composed of a sum of Gaussian-distributed vortices in Euclidean space (due to the first term in the square brackets), plus a sum of Gaussian-regularized dipole fields arising from covariance between the strengths and positions of the inferred vortex elements (the second term in square brackets).
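The expected vorticity formula above can be evaluated directly from the GMM parameters. This hypothetical sketch assumes that each mixture mean is laid out as `[x1, y1, G1, x2, y2, G2, ...]`, so that the $\boldsymbol{r}_{J}\boldsymbol{r}_{J}$ block and the $\varGamma_{J}\boldsymbol{r}_{J}$ entries of each covariance can be extracted by index. The function name is illustrative.

```python
import numpy as np

def expected_vorticity(points, alphas, means, covs):
    """Expected vorticity at 2-D points (shape (npts, 2)) under a GMM over
    vortex states with the hypothetical layout [x1, y1, G1, x2, y2, G2, ...]."""
    w = np.zeros(len(points))
    for a, m, C in zip(alphas, means, covs):
        for J in range(len(m) // 3):
            ir, ig = [3 * J, 3 * J + 1], 3 * J + 2
            S_rr = C[np.ix_(ir, ir)]               # position covariance (2x2)
            S_Gr = C[ig, ir]                       # strength-position covariance (2,)
            diff = points - m[ir]                  # r - r_bar
            sol = np.linalg.solve(S_rr, diff.T)    # S_rr^{-1} (r - r_bar)
            gauss = np.exp(-0.5 * np.sum(diff.T * sol, axis=0)) / (
                2.0 * np.pi * np.sqrt(np.linalg.det(S_rr)))
            # Gaussian vortex of strength G_bar plus the regularized dipole term
            w += a * (m[ig] + S_Gr @ sol) * gauss
    return w
```

Because the dipole term integrates to zero, integrating the returned field over the plane recovers $\sum_{k}\alpha_{k}\sum_{J}\bar{\varGamma}^{(k)}_{J}$. Its first moment, in contrast, picks up the strength–position covariance.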

3. Inference examples

Although we have reduced the problem by non-dimensionalizing it and restricting the possible states, there remain several parameters to explore in the vortex estimation problem: the number and relative configuration of the true vortices; the number of vortices used by the estimator; the number of sensors $d$ and their configuration; and the measurement noise level $\sigma _{\mathcal{E}}$. We will also explore the inference of vortex radius $\epsilon$ when this parameter is included as part of the state. In the next section, we will explore the inference of a single vortex, using this example to investigate many of the parameters listed above. Then, in the following sections, we will examine the inference of multiple true vortices, to determine the unique aspects that emerge in this context.

3.1. Inference of a single vortex

In this section, we will explore cases in which both the true vortex system and our estimator consist of a single vortex. We will use this case to draw insight into many of the parameters of the inference problem. For most of the cases, a single line of sensors along the $x$ axis will be used. The true vortex will remain on the $y = 1$ line and have unit strength. Many of the examples that follow will focus on the true configuration $(x_{1},y_{1},\varGamma _{1}) = (0.5,1,1)$. (The subscript 1 is unnecessary with only one vortex, but allows us to align the notation with that of § 2.1.) Note, however, that we will not presume knowledge of any of these states: the bounding region of our prior will be $x \in (-2,2)$, $y \in (0.01,4)$, $\varGamma \in (0,2)$.

All of the basic tools used in the present investigation are depicted in figure 3, in which the true configuration is estimated with three sensors arranged uniformly along the $x$ axis over $[-1,1]$ with $\sigma _{\mathcal{E}} = 5\times 10^{-4}$. Figure 3(a) shows the ellipsoid for covariance $\varSigma ^{\ast }_{{\textit {X}}}$, computed at the true vortex state. In particular, this figure indicates that much of the uncertainty lies along a direction that mixes $y_{1}$ and $\varGamma _{1}$; indeed, the eigenvector corresponding to the direction of greatest uncertainty is $(0.08,0.79,0.61)$. This uncertainty is intuitive: as a vortex moves further away from the sensors, it generates very similar sensor measurements if its strength simultaneously increases in the proportion indicated by this direction. In figure 3(c), the samples obtained from the MCMC method are shown. Here, and in later figures in the paper, we show only the vortex positions of these samples in Euclidean space and colour their symbols to denote the sign of their strength (blue for positive, red for negative). The set of samples clearly encloses the true state, shown as a black dot; the expected value from the samples is $(0.51,1.07,1.05)$, which agrees well with the true state.

The samples also demonstrate the uncertainty of the estimated state. The filled ellipse in this figure corresponds to the exact covariance of figure 3(a) and is shown for reference. As expected, the samples are spread predominantly along the direction of maximum uncertainty. This figure also depicts an elliptical region for each Gaussian component of the mixture computed from the samples. These ellipses correspond only to the marginal covariances of the vortex positions and do not depict the uncertainty of the vortex strength. The weight of each component in the mixture is denoted by the thickness of the line. One can see from this plot that the GMM covers the samples with several components, concentrating most of the weight near the centre of the cluster in two dominant components. The composite of these components is best seen in figure 3(d), in which the expected vorticity field is shown. In the remainder of this paper, this expected vorticity field will be used extensively to illuminate the uncertainty of the vortex estimation. Finally, figure 3(b) compares the true sensor pressures with those corresponding to the expected state from the MCMC samples. These agree to within the measurement noise.

3.1.1. Effect of the number of sensors

In the previous section, we found that three sensors were sufficient to estimate a single vortex's position and strength. In this section we investigate how this estimate of a single vortex depends on the number of sensors. In most cases, these sensors will again lie uniformly along the $x$ axis in the range $[-1,1]$. Intuitively, we expect that if we have fewer sensors than there are states to estimate, we will have insufficient information to uniquely identify the vortex. Figure 4 shows that this is indeed the case. In this example, only two sensors are used to estimate the same vortex as in the previous example. The MCMC samples are distributed along a circular arc, but are truncated outside of the aforementioned bounding region. In fact, this arc is a projection of a helical curve of equally probable states in the three-dimensional state space. The samples spread more widely about the arc with increasing distance from the sensors, owing to the growth of uncertainty with distance. The true covariance, $\varSigma ^{\ast }_{{\textit {X}}}$, cannot reveal the full shape of this helical manifold, which is inherently dependent on the nonlinear relationship between the sensors and the vortex. However, the rank of the Fisher information matrix $H^{{\rm T}}\varSigma _{\mathcal{E}}^{-1}H$ decreases to 2, so that the uncertainty along one of the principal axes of $\varSigma ^{\ast }_{{\textit {X}}}$ must be infinite. This principal axis is tangent to the manifold of possible states, as shown by a line in the plot.
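The loss of rank with two sensors can be illustrated with a simplified model. This sketch assumes, hypothetically, that each sensor on the $x$ axis reads the steady Bernoulli pressure of a single singular point vortex, $p = -|\boldsymbol{u}|^{2}/2 = -\varGamma^{2}/(8{\rm \pi}^{2}r^{2})$. It approximates $H$ by central finite differences. This is not the pressure model of this paper, so its numbers will not reproduce figure 4 or the eigenvector quoted in § 3.1.

```python
import numpy as np

def pressure(state, sensor_x):
    """Hypothetical stand-in: steady Bernoulli pressure p = -|u|^2/2 of one
    singular point vortex state = (x, y, G) at sensors on the x axis."""
    xv, yv, gam = state
    r2 = (sensor_x - xv) ** 2 + yv**2
    return -gam**2 / (8.0 * np.pi**2 * r2)

def fd_jacobian(state, sensor_x, h=1e-6):
    """Central finite-difference approximation of H = dh/dx at `state`."""
    state = np.asarray(state, dtype=float)
    cols = []
    for j in range(state.size):
        step = np.zeros_like(state)
        step[j] = h
        cols.append((pressure(state + step, sensor_x)
                     - pressure(state - step, sensor_x)) / (2.0 * h))
    return np.column_stack(cols)

x_true = np.array([0.5, 1.0, 1.0])
H3 = fd_jacobian(x_true, np.array([-1.0, 0.0, 1.0]))  # three sensors
H2 = fd_jacobian(x_true, np.array([-1.0, 1.0]))       # two sensors
print(np.linalg.matrix_rank(H3), np.linalg.matrix_rank(H2))  # prints: 3 2
```

In this simplified model, fixing the two readings fixes the ratio of the distances to the two sensors, so the level set in the $(x,y)$ plane is a circle. Perturbing the state along the last right-singular vector of `H2` changes the readings only at second order.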

Figure 4. One true vortex and one-vortex estimator, using two sensors, showing MCMC samples (blue dots) and the resulting vortex position ellipses from the GMM. The estimator truncates the samples outside the bounding region. The true vortex position is shown as filled black circle. The circular curve represents the manifold of possible states that produce the same sensor pressures; the line tangent to the circle is the direction of maximum uncertainty at the true state.

What if there are more sensors than states? Figure 5(a–c) depicts expected vorticity fields for several cases in which increasing numbers of sensors are arranged along a line, and figure 5(d) shows the expected vorticity when 5 sensors are instead arranged in a circle of radius 2.1 enclosing the true vortex. (The radius is chosen so that the smallest distance between the true vortex and a sensor is approximately 1 in all cases.) The uncertainty clearly shrinks when the number of sensors increases from 3 to 4, but less notably when it increases from 4 to 5. In figure 5(e), the maximum uncertainty drops by nearly half when one sensor is added to the basic set of 3 along a line, but decreases much more gradually when more than 4 sensors are used. For sensors arranged in a circle, the drop in uncertainty between 3 and 5 sensors is more dramatic, but it likewise becomes more gradual beyond 5 sensors.

Figure 5. One true vortex and one-vortex estimator, using various numbers and configurations of sensors (shown as brown squares) arranged in a line between $-1$ and $1$ (a–c) or in a circle of radius 2.1 (d). Each panel depicts contours of the expected vorticity field. True vortex position shown as filled black circle in each. (e) Maximum length of the covariance ellipsoid with increasing number of sensors on line segment $x = [-1,1]$ (blue) or circle of radius $2.1$ (gold).
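The trend in figure 5(e) can be explored qualitatively with the sketch below. It assumes a hypothetical free-space pressure model, $p_i = -\varGamma_1^2 / \{8\pi^2[(x_1 - a_i)^2 + (y_1 - b_i)^2 + \epsilon^2]\}$ for a sensor at $(a_i, b_i)$ with unit density, and an assumed linearized covariance $\varSigma = \sigma_{\mathcal{E}}^2 (J^{\mathrm{T}} J)^{-1}$ for independent sensor noise. Under that form, the largest eigenvalue is $\lambda_n = \sigma_{\mathcal{E}}^2 / s_{\min}^2$, where $s_{\min}$ is the smallest singular value of $J$, so $\lambda_n^{1/2} = \sigma_{\mathcal{E}} / s_{\min}$. The noise level, the centring of the circle at the origin and the helper names are our assumptions, and the numbers are not expected to match the figure.

```python
import numpy as np

EPS, SIGMA = 0.01, 5e-4          # blob radius; noise std (assumed for this sketch)
C = 1.0 / (8.0 * np.pi**2)       # prefactor of the assumed free-space pressure model


def jacobian(state, sensors, eps=EPS):
    """d p_i / d(x1, y1, Gamma1) under the hypothetical model p_i = -C Gamma^2 / q_i."""
    x, y, gam = state
    dx, dy = x - sensors[:, 0], y - sensors[:, 1]
    q = dx**2 + dy**2 + eps**2
    return np.column_stack([2*C*gam**2*dx/q**2, 2*C*gam**2*dy/q**2, -2*C*gam/q])


def max_ellipsoid_length(state, sensors, sigma=SIGMA):
    """lambda_n^{1/2} = sigma / s_min(J) for the assumed covariance sigma^2 (J^T J)^{-1}."""
    s = np.linalg.svd(jacobian(state, sensors), compute_uv=False)
    return sigma / s.min()


true_state = np.array([0.5, 1.0, 1.0])
results = {}
for d in range(3, 9):
    line = np.column_stack([np.linspace(-1.0, 1.0, d), np.zeros(d)])
    theta = 2.0 * np.pi * np.arange(d) / d
    circle = 2.1 * np.column_stack([np.cos(theta), np.sin(theta)])  # centred at origin (assumed)
    results[d] = (max_ellipsoid_length(true_state, line),
                  max_ellipsoid_length(true_state, circle))
    print(f"d = {d}: line {results[d][0]:.3e}, circle {results[d][1]:.3e}")
```

Working through the singular values rather than inverting $J^{\mathrm{T}} J$ avoids squaring the condition number of $J$.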

3.1.2. Effect of the true vortex position

It is particularly important to explore how the uncertainty is affected by the position of the true vortex relative to the sensors. We address this question here by varying this position relative to a fixed set of sensors with a fixed level of noise, $\sigma _{\mathcal{E}} = 5\times 10^{-4}$. Figure 6(a) depicts contours (on a log scale) of the resulting maximum length of the covariance ellipsoid, $\lambda _{n}^{1/2}$, based on four sensors placed on the $x$ axis between $-1$ and $1$. The contours reveal that there is little uncertainty when the true vortex is in the vicinity of the sensors, but that the uncertainty increases sharply with distance when the vortex lies outside the extent of the sensors. Indeed, one finds empirically that, away from the sensors, the uncertainty grows approximately as $\lambda _{n}^{1/2} \sim |y_{1}|^{5}$ and $\lambda _{n}^{1/2} \sim |x_{1}|^{6}$. This behaviour does not change markedly if we vary the number of sensors, as illustrated in figure 6(b). As the true vortex's $x$ position varies (with $y_{1}$ held constant at 1), there is a similarly sharp rate of increase outside of the region near the sensors for 3, 4 or 5 sensors. Although there is a small range of positions near $x_{1} = 0$ in which 3 sensors give less uncertainty than 4, the uncertainty generally decreases at all vortex positions as the number of sensors increases. Furthermore, the uncertainty is less variable in this near region when 4 or 5 sensors are used.

Figure 6. One true vortex and one-vortex estimator, with noise $\sigma _{\mathcal{E}} = 5\times 10^{-4}$. (a) Contours (on a log scale) of maximum length of covariance ellipsoid, $\lambda _{n}^{1/2}$, as a function of true vortex position $(x_{1},y_{1})$ using four sensors, shown as brown squares. Contours are $10^{-2}$ through $10^{2}$, with $10^{-1}$ depicted with a thick black line. (b) Maximum length of covariance ellipsoid vs horizontal position of true vortex (with vertical position held fixed at $y_{1} = 1$), and varying number of sensors along line segment $x = [-1,1]$ ($d=3$, blue; $d = 4$, gold; $d = 5$, green).
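An empirical exponent such as the $|y_1|^5$ scaling can be extracted with a least-squares fit of $\log \lambda_n^{1/2}$ against $\log y_1$. The sketch below does this for four sensors on $[-1,1]$ with $x_1 = 0.5$. It assumes a hypothetical free-space pressure model, $p_i = -\varGamma_1^2 / \{8\pi^2[(x_1 - a_i)^2 + (y_1 - b_i)^2 + \epsilon^2]\}$ (unit density), and an assumed linearized covariance $\sigma_{\mathcal{E}}^2 (J^{\mathrm{T}} J)^{-1}$, for which $\lambda_n^{1/2} = \sigma_{\mathcal{E}} / s_{\min}(J)$. The range of $y_1$ and the helper names are our choices. The fitted slope illustrates the method only and should be compared with figure 6 qualitatively.

```python
import numpy as np

EPS, SIGMA = 0.01, 5e-4
C = 1.0 / (8.0 * np.pi**2)   # prefactor of the assumed free-space pressure model


def jacobian(state, sensors, eps=EPS):
    """d p_i / d(x1, y1, Gamma1) under the hypothetical model p_i = -C Gamma^2 / q_i."""
    x, y, gam = state
    dx, dy = x - sensors[:, 0], y - sensors[:, 1]
    q = dx**2 + dy**2 + eps**2
    return np.column_stack([2*C*gam**2*dx/q**2, 2*C*gam**2*dy/q**2, -2*C*gam/q])


def max_ellipsoid_length(state, sensors, sigma=SIGMA):
    """lambda_n^{1/2} for the assumed covariance sigma^2 (J^T J)^{-1}."""
    s = np.linalg.svd(jacobian(state, sensors), compute_uv=False)
    return sigma / s.min()


sensors = np.column_stack([np.linspace(-1.0, 1.0, 4), np.zeros(4)])
y_vals = np.geomspace(3.0, 20.0, 25)            # vortex well above the sensor array
lengths = np.array([max_ellipsoid_length(np.array([0.5, y, 1.0]), sensors)
                    for y in y_vals])
# Slope of the log-log fit = empirical exponent p in lambda_n^{1/2} ~ y1^p.
slope, intercept = np.polyfit(np.log(y_vals), np.log(lengths), 1)
print(f"empirical exponent: {slope:.2f}")
```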

3.1.3. Effect of sensor noise

From the derivation in § 2.3, we already know that the true covariance should depend linearly on the noise variance (2.16). In this section, we explore the effect of sensor noise on the estimation of a single vortex using MCMC and the subsequent fitting with a Gaussian mixture model. We keep the number of sensors fixed at 3, arranged along a line between $x = -1$ and $1$, and the true vortex in the original configuration, $(x_{1},y_{1},\varGamma _{1}) = (0.5,1,1)$. Figure 7 depicts the expected vorticity field as the noise standard deviation $\sigma _{\mathcal{E}}$ increases. Unsurprisingly, the expected vorticity distribution broadens as the noise level increases. Notably, however, this broadening is increasingly directed away from the sensors. Furthermore, the centre of the distribution lies somewhat further from the sensors than the true state, indicating a bias error. This trend toward increased bias error with increasing sensor noise is also apparent for other sensor numbers and arrangements.

Figure 7. One true vortex and one-vortex estimator, using three sensors (shown as brown squares), with noise levels (a) $\sigma _{\mathcal{E}} = 2.5 \times 10^{-4}$, (b) $5\times 10^{-4}$ and (c) $1\times 10^{-3}$. Each panel depicts contours of expected vorticity field. True vortex position shown as filled black circle in each.
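The linear dependence of the covariance on the noise variance is immediate for an assumed linearized form $\varSigma = \sigma_{\mathcal{E}}^2 (J^{\mathrm{T}} J)^{-1}$: doubling $\sigma_{\mathcal{E}}$ quadruples every entry of $\varSigma$ and doubles $\lambda_n^{1/2}$. The sketch below checks this for the three noise levels of figure 7 with three sensors. It assumes a hypothetical free-space pressure model, $p_i = -\varGamma_1^2 / \{8\pi^2[(x_1 - a_i)^2 + (y_1 - b_i)^2 + \epsilon^2]\}$ with unit density. A linearized covariance evaluated at the true state is centred there by construction, so it cannot capture the noise-dependent bias seen in figure 7. Capturing that bias requires the full posterior, as sampled by MCMC.

```python
import numpy as np

EPS = 0.01
C = 1.0 / (8.0 * np.pi**2)   # prefactor of the assumed free-space pressure model


def jacobian(state, sensors, eps=EPS):
    """d p_i / d(x1, y1, Gamma1) under the hypothetical model p_i = -C Gamma^2 / q_i."""
    x, y, gam = state
    dx, dy = x - sensors[:, 0], y - sensors[:, 1]
    q = dx**2 + dy**2 + eps**2
    return np.column_stack([2*C*gam**2*dx/q**2, 2*C*gam**2*dy/q**2, -2*C*gam/q])


true_state = np.array([0.5, 1.0, 1.0])
sensors = np.column_stack([np.linspace(-1.0, 1.0, 3), np.zeros(3)])
J = jacobian(true_state, sensors)
info = J.T @ J          # J^T J / sigma^2 is the Fisher information for i.i.d. Gaussian noise

covs = {}
for sigma in (2.5e-4, 5e-4, 1e-3):                  # noise levels of figure 7
    covs[sigma] = sigma**2 * np.linalg.inv(info)     # assumed linearized covariance
    print(sigma, np.sqrt(np.linalg.eigvalsh(covs[sigma]).max()))
```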

3.1.4. Effect of true vortex radius

Throughout most of this paper, the radius of the true vortices is fixed at $\epsilon = 0.01$, and the estimator vortices share this same radius. However, for practical applications, it is important to explore the extent to which the estimation is affected by a mismatch between these radii. If the true vortex is more widely distributed, can an estimator consisting of a small-radius vortex reliably determine its position and strength? Furthermore, can the radius itself be inferred? These two questions are closely related, as we will show. First, it is useful to illustrate the effect of the vortex radius on the pressure field associated with a vortex, as in figure 8(a), which shows the vortex-pressure kernel $P_{\epsilon }$ for two different vortex radii, $\epsilon = 0.01$ and $\epsilon = 0.2$. As this plot shows, the effect of vortex radius is nearly negligible beyond a distance of 5 times the larger of these two radii.

Figure 8. (a) Regularized vortex-pressure kernel $P_{\epsilon }$, with two different choices of blob radius. (b) The maximum length of uncertainty ellipsoid vs blob radius $\epsilon$, for one true vortex and four sensors, when blob radius is included as part of the state vector, for a true vortex at $(x_{1},y_{1},\varGamma _{1}) = (0.5,1,1)$. (c) Vorticity contours for a true vortex with radius $\epsilon =0.2$ (in grey) and expected vorticity from a vortex estimator with radius $\epsilon = 0.01$ (in blue), using four sensors (shown as brown squares).
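The fading influence of the radius with distance can be illustrated with a stand-in for $P_{\epsilon}$. As a hypothetical simplification, the sketch takes the single-vortex pressure to be $-\varGamma^2 / [8\pi^2 (r^2 + \epsilon^2)]$ (unit density). The paper's regularized kernel differs in the core, so only the far-field trend should be compared. For this form, the relative difference between radii $\epsilon_a < \epsilon_b$ is $(\epsilon_b^2 - \epsilon_a^2)/(r^2 + \epsilon_b^2)$, which decays like $r^{-2}$.

```python
import numpy as np


def pressure(r, eps, gamma=1.0):
    """Hypothetical regularized single-vortex pressure (unit density, free space)."""
    return -gamma**2 / (8.0 * np.pi**2 * (r**2 + eps**2))


eps_small, eps_large = 0.01, 0.2
r = np.array([0.2, 0.5, 1.0, 2.0, 4.0])      # 1, 2.5, 5, 10, 20 times eps_large
rel_diff = (np.abs(pressure(r, eps_large) - pressure(r, eps_small))
            / np.abs(pressure(r, eps_small)))
for ri, di in zip(r, rel_diff):
    print(f"r = {ri:.1f} ({ri / eps_large:.1f} eps): relative difference {di:.2%}")
```

Under this assumed form, the two pressures differ by just under 4% at five times the larger radius ($r = 1$), and by about 1% at $r = 2$.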

As a result of this diminishing effect of vortex radius, one expects that it is very challenging to estimate this radius from pressure sensor measurements outside of the vortex core. Indeed, that is the case, as figure 8(b) shows. This figure depicts the maximum length of the covariance ellipsoid as a function of true vortex radius, when four sensors along the $x$ axis are used to estimate this radius (in addition to vortex position and strength), for a true vortex at $(0.5,1)$ with strength 1. The uncertainty is far too large for the radius to be observable until this radius approaches $\epsilon = 1$. Even when the sensors are within the core of the vortex, they cannot clearly distinguish the blob radius from other vortex states. In fact, one can show that the maximum uncertainty scales as $\epsilon ^{-3}$ because the pressure sensors have nearly identical sensitivity to changes of blob radius and to changes in other states, e.g. vertical position in this case: the leading-order term of the difference between these sensitivities is proportional to $\epsilon ^{3}$. Of course, if we presume precise knowledge of the other states, then vortex radius becomes more observable.
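A simple diagnostic for this kind of confusion is the cosine of the angle between two columns of the sensitivity (Jacobian) matrix. A value near 1 means that the sensors respond almost identically to the two states. The sketch below evaluates this cosine for $\epsilon$ and $y_1$ under a hypothetical free-space model, $p_i = -\varGamma_1^2 / \{8\pi^2[(x_1 - a_i)^2 + y_1^2 + \epsilon^2]\}$ for sensors at $(a_i, 0)$, which is our assumption and not the paper's kernel. In this toy model, the degeneracy is exact, because $\epsilon$ enters only through $y_1^2 + \epsilon^2$. According to the text, the two sensitivities in the paper's kernel differ at order $\epsilon^{3}$. The sketch therefore shows the mechanism, not the $\epsilon^{-3}$ scaling. The helper name `sensitivities` is hypothetical.

```python
import numpy as np

C = 1.0 / (8.0 * np.pi**2)   # prefactor of the assumed free-space pressure model


def sensitivities(x, y, gam, eps, sensors):
    """Columns d p_i / d y1 and d p_i / d eps under p_i = -C gam^2 / q_i (hypothetical)."""
    dx, dy = x - sensors[:, 0], y - sensors[:, 1]
    q = dx**2 + dy**2 + eps**2
    dp_dy = 2.0 * C * gam**2 * dy / q**2
    dp_deps = 2.0 * C * gam**2 * eps / q**2
    return dp_dy, dp_deps


sensors = np.column_stack([np.linspace(-1.0, 1.0, 4), np.zeros(4)])
cosines = []
for eps in (0.01, 0.1, 0.5, 1.0):
    a, b = sensitivities(0.5, 1.0, 1.0, eps, sensors)
    cosines.append(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))
    print(f"eps = {eps}: cos(angle between dp/dy1 and dp/deps) = {cosines[-1]:.12f}")
```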

The insensitivity of pressure to vortex radius has a very important benefit: it ensures that, even when the true vortex is relatively broad, a vortex estimator with small radius can still reliably infer the vortex's position and strength. This is illustrated in figure 8(c), which depicts the contours of a true vortex of radius $\epsilon = 0.2$ (with the same position and strength as in (b)), and the expected vorticity contours from an estimate carried out with a vortex element of radius $\epsilon = 0.01$. It is apparent from this figure that the centre of the vortex is estimated very well. In fact, the mean of the MCMC samples is $(\bar {x}_{1},\bar {y}_{1},\bar {\varGamma }_{1}) = (0.51, 1.08, 1.04)$, quite close to the true values.
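The recovery of position and strength despite the radius mismatch can be mimicked without sampling. The sketch below uses a noise-free Gauss–Newton least-squares fit, a different technique from the paper's MCMC, as a simple stand-in. Data are generated with $\epsilon = 0.2$ and fitted with $\epsilon = 0.01$, using four sensors on $[-1,1]$ and a hypothetical free-space model, $p_i = -\varGamma_1^2 / \{8\pi^2[(x_1 - a_i)^2 + (y_1 - b_i)^2 + \epsilon^2]\}$ (unit density). In this assumed model, the mismatch is absorbed exactly by a vertical shift, $1^2 + 0.2^2 = \hat{y}_1^2 + 0.01^2$. The fit should therefore return $\hat{x}_1 = 0.5$, $\hat{\varGamma}_1 = 1$ and $\hat{y}_1 = \sqrt{1.0399} \approx 1.020$. This is an upward bias in the same direction as, but smaller than, that of the MCMC mean quoted above.

```python
import numpy as np

C = 1.0 / (8.0 * np.pi**2)   # prefactor of the assumed free-space pressure model


def pressures(state, sensors, eps):
    """Hypothetical model p_i = -C Gamma^2 / ((x1 - a_i)^2 + (y1 - b_i)^2 + eps^2)."""
    x, y, gam = state
    q = (x - sensors[:, 0])**2 + (y - sensors[:, 1])**2 + eps**2
    return -C * gam**2 / q


def jacobian(state, sensors, eps):
    """d p_i / d(x1, y1, Gamma1) for the same hypothetical model."""
    x, y, gam = state
    dx, dy = x - sensors[:, 0], y - sensors[:, 1]
    q = dx**2 + dy**2 + eps**2
    return np.column_stack([2*C*gam**2*dx/q**2, 2*C*gam**2*dy/q**2, -2*C*gam/q])


sensors = np.column_stack([np.linspace(-1.0, 1.0, 4), np.zeros(4)])
true_state = np.array([0.5, 1.0, 1.0])
data = pressures(true_state, sensors, eps=0.2)     # broad true vortex, no noise

est = true_state.copy()                            # initial guess (true state, for simplicity)
for _ in range(30):
    # Gauss-Newton step for the small-radius (eps = 0.01) estimator
    resid = pressures(est, sensors, eps=0.01) - data
    step = np.linalg.lstsq(jacobian(est, sensors, eps=0.01), -resid, rcond=None)[0]
    est = est + step
print("fitted (x1, y1, Gamma1):", est)
```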